Abstract

This paper proposes the Mesh Neural Network (MNN), a novel architecture which allows neurons to be connected in any topology, to efficiently route information. In MNNs, information is propagated between neurons throughout a state transition function. State and error gradients are then directly computed from state updates without backward computation. The MNN architecture and the error propagation schema is formalized and derived in tensor algebra. The proposed computational model can fully supply a gradient descent process, and is potentially suitable for very large scale sparse NNs, due to its expressivity and training efficiency, with respect to NNs based on back-propagation and computational graphs.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction and Background

A huge amount of research has been made during the last years on a variety of applications of Artificial Neural Networks (ANNs). As a consequence, many ANNs architectures have been developed, generating surrogate models from different types of big data, such as image, audio, video, text, time series, and so on. With ANNs, the underlying relationships among data can be approximated with little knowledge of the system to be modelled. In spite of this success, ANNs are computational models vaguely inspired to biological brains, and require relevant computation and management with respect to the biological counterpart.

Specifically, Deep Learning is achieving good levels of performance, via architectures composed of several layers. The Deep Learning research is mostly based on gradient-based optimization methods and on the well-known backpropagation (BP) algorithm. In essence, BP includes a forward and backward layer-wise computation of the loss function with respect to the neurons weights. Actually, BP is not biologically plausible. Moreover, convergence problems, such as vanishing and exploding gradients, occur when using many layers. Finally, BP can be very unstable when dealing with recurrent networks and can be ineffective to exploit long-lasting relationships [1]. In the last decade, an increasing number of alternative strategies have proposed to simplify the ANN training. A first strategy consists in removing the backward computation by deriving a forward only computation. A reference work for this approach is [2]. Specifically, the proposed method improves the efficiency of Jacobian matrix computation, for fully or partially connected ANNs. An interesting advantage of this approach is that it can train arbitrarily connected ANNs, and not just Multi-Layer Perceptron (MLP)-based architectures. Indeed, ANNs with connections across layers are much more powerful than MLPs. A more recent research in which the Jacobian matrix is calculated only in the forward computation was made by Guo et al. [3]. In general, to remove the backward computation is not costless: an additional calculation in the forward computation must be considered. However, the forward-only computation is more parallelizable than traditional forward and backward computations, as the dataset is large and the number of hidden neurons increases. A different strategy is presented in [4], in which the training method is based on a different principle called information bottleneck, which does not require backpropagation. In general, a performance comparison with BP is difficult, since performance can heavily depend on the minibatch size. The minibatch size is usually a constant that is based on available GPU memory. On the other side, a quantity of interest is the learning convergence, which is unknown for either BP or other methods. Since the backward computation is removed for such approaches, they are more suitable for parallel computation. Another type of strategy is proposed by Jaderberg [5]: a model for predicting gradient, called synthetic gradient, is calculated in place of true backpropagation error gradients. With such synthetic gradients, layers can be independently updated, removing forward and update locking.

According to this research trend, this paper formally introduces recent advances leading to a novel, arbitrarily connected, ANNs architecture, in which error gradients are computed throughout a state transition function without backward computation. The paper is organized as follows. In Sect. 2, the fundamentals of the problem are defined. A formal derivation of the proposed architecture is presented in Sect. 3. Section 4 covers the implementation and experimental aspects. Section 5 is devoted to conclusions and future work.

2 Problem Statement

An Artificial Neurons Layer (ANL) with \({n_i}\) inputs and \({n_o}\) outputs can be described by its layer weights matrix \(\mathbf {W} \in \mathbb {R}^{{n_i}\times {n_o}}\) and activation function \({\hat{\varphi }}(\mathbf {x}): \mathbb {R}^{n_o}\rightarrow \mathbb {R}^{n_o}\). Let us consider activation functions for which it holds that \({\hat{\varphi }}(\mathbf {x})_i = \varphi (x_i)\) (where \(\varphi (x) : \mathbb {R} \rightarrow \mathbb {R}\)). Each column \(\mathbf {W}_{*,i}\) of \(\mathbf {W}\) represents the weights vector from the inputs to the i-th perceptron, in which biases are represented as weights of fictitious inputs that always produce the constant value 1. Given the input vector \(\mathbf {x} \in \mathbb {R}^{n_i}\), the output vector \(\mathbf {y} \in \mathbb {R}^{n_o}\) of the ANL is \(\mathbf {y} = \varphi (\mathbf {x}\mathbf {W})\). In multilayer neural networks, or MLPs, ANLs are stacked, i.e., the ANL\(_i\) is fed by the output of the ANL\(_{i-1}\): each set of weights connecting the i-th layer is represented by a different matrix \(\mathbf {W}_i\), and the input/output layers are considered as special topological elements with respect to the hidden layers.

In the popular BP training algorithm, the gradients of the weights are iteratively computed exploiting a propagation rule between layers [6, 7]. Let us consider a generic error function \(E(\mathbf {y}, \overline{\mathbf {y}}): \mathbb {R}^{n\times {2}} \rightarrow \mathbb {R}\) that computes the error between a network output \(\mathbf {y}\) and a desired one \(\overline{\mathbf {y}}\), and a generic error function with respect to the o-th output \(y_o\) \(E_o(y_o, \overline{y_o}): \mathbb {R}^2 \rightarrow \mathbb {R}\). Let us assume that \(E(\mathbf {y},\overline{\mathbf {y}})\) is a composition of \(E_o(y_o, \overline{y_o})\) for every output unit. Considering an MLP with \({n_l}\) layers, the objective of the BP algorithm is to compute the gradients of every output error \(\frac{\partial E(y_o,\overline{y_o})}{\partial p_i}\) with respect to every parameter \(p_i\). Such gradients can be used by a Stochastic Gradient Descent (SGD) algorithm to train the MLP [8]. Let \(net_{i,o}\) be the o-th output of the i-th hidden layer. Applying the chain rule for differentiating composite functions to \(\frac{\partial E(y_o,\overline{y_o})}{\partial p_i}\), the corresponding error gradient is:

The derivative \(\frac{\partial E(net_{{n_l}-1,o},\overline{y_o})}{\partial net_{{n_l}-1,o}}\) depends on the error function and is known. In the derivative \(\frac{\partial net_{{n_l}-1,o}}{\partial p_i}\), each parameter of a layer influences the output values of all the subsequent layers. Hence, in order to compute \(\frac{\partial net_{{n_l}-1,o}}{\partial p_i}\), the chain rule is applied up to the term \(\frac{\partial net_{i,o}}{\partial p_i}\). For this purpose, the BP algorithm iteratively applies the chain rule on each layer in reverse order for efficiently computing the partial derivatives with respect to all parameters. More formally, given the output of the l-th layer, \(\mathbf {net}_{\mathbf {l}} = \varphi (\mathbf {net}_{\mathbf {l-1}}\mathbf {W}_l)\), let us say its o-th element \(t_{l,o} = (\mathbf {net}_{\mathbf {l-1}}\mathbf {W}_l)_o\). The chain rule is applied to \(\varphi (t_{l,o})\), and in order to compute the term \(\frac{\partial \varphi (t_{l,o})}{\partial t_{l,o}}\), \(\mathbf {t}_{\mathbf {l}}\) needs to be saved for each layer.

To train ANNs without a layered topology, the approach commonly used is the automatic differentiation on computational graphs (CGs) [9], in which computations are represented in a graph. In essence, for each operation (e.g., matrix multiplication, element-wise sum, etc.) the inputs \(x_0, x_1, \ldots , x_{n-1}\) and the output \(\mathbf {y}\) are represented as incoming and outgoing edges of a graph, respectively. For each edge \(\frac{\partial y}{\partial x_i}\) is computed. For a given ANN, the operations to compute its output \(y_o\) and the error \(E(y_o,\overline{y_o})\) are then represented as a CG. Let us consider, a “factoring path”, i.e., a path between two nodes in which the derivatives \(\frac{\partial y}{\partial x_i}\) encountered on the traversed edges are all multiplied together. Then, the partial derivative of the error function with respect to a parameter, i.e., \(\frac{\partial E(y_o,\overline{y_o})}{\partial p_i}\), is the sum of all the reverse factoring paths from \(E(y_o,\overline{y_o})\) to \(p_i\), i.e., the paths belonging to the set \({\mathcal {P}}_i\):

A CG representation is a general formalism to represent all network topologies, such as feedforward, recurrent, convolutional, residual, and so on. To train arbitrarily connected ANNs topologies is very important, because ANNs with connections across layers are much more powerful than classical MLP architectures. However, a CG increases the space complexity with respect to a corresponding MLP-based representation (where an MLP representation is possible). Indeed, the underlying data structure needs to store both the graph topology and the partial derivatives \(\frac{\partial y}{\partial x_i}\) of each edge. Moreover, it results in a higher time complexity, because all the reverse factoring paths have to be found.

In the next section, a novel ANNs representation is introduced, which is capable of training arbitrarily connected neural networks and, as a consequence, ANNs with reduced number of neurons and good generalization capabilities. The interesting properties of the training algorithm is the lack of a backpropagated computation, and an iteration without need of memory relationships than the one with the previous step. Hence, the proposed method is much simpler than traditional forward and backward procedure. Indeed, the training iteration can be described by three matrix operations. Due to the possibility of training unstructured ANNs, the proposed architectural model is called Mesh Neural Network (MNN).

3 Formal Derivation of a Mesh Neural Network

3.1 Structure, Activation and State of an MNN

The proposed MNN is based on a matrix representation that is not a transfer matrix, but it is an adjacency matrix (AM), i.e., a square matrix representing the ANN as a finite graph. The elements of the AM indicate whether pairs of vertices are adjacent or not in the graph, by means of a non-zero or zero weight, respectively.

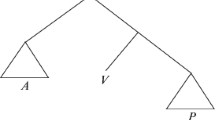

More formally, an AM \(\mathbf {A}\) is a matrix in which each element \(A_{i,j}\) represents the weight from the node i to the node j. For example MLPs are a subset of the representable topologies with AMs: since in MLPs only connections between layers are possible, their AMs are block matrices. Figure 1 shows an MLP topology with the corresponding AM. Here, each \(\mathbf {W}_i\) is the weights matrix of the i-th layer and occupies a corresponding block in the AM.

An example of unstructured topology and its corresponding AM is shown in Fig. 2.

A generic MNN topology with \(n\) neurons is represented by a matrix \(\mathbf {A} \in \mathbb {R}^{n\times n}\). It is worth noting that this representation does not include the topological distinction between input, hidden and output neurons. Let \({n_i}\),\({n_o}\), and \({n_o}\) be the number of input, hidden and output neurons. Since all neurons are identified by a position in the matrix, a good convention (hereinafter called “iho positioning convention”) to distinguish the three sets without loss of generality is to assign them a positioning: to consider the first \({n_i}\) elements as input neurons, the subsequent \({n_h}\) elements as hidden neurons, and the last \({n_o}\) elements as output neurons.

Let the state be \(\mathbf {s}_{\mathbf {i}} \in \mathbb {R}^n\) the output value of each neuron in the MNN at the i-th instant of time. The output of an MNN is provided along a temporal sequence, whose length depends on the distances between input and output neurons. This allows an MNN to exhibit temporal dynamic behavior. Let us recall that: (i) \(A_{i,j}\) represents the weight from neuron i to neuron j; (ii) the h-th neuron output is computed as \(\varphi (\sum _{k=0}^N w_{k,h} x_k)\); (iii) biases are represented as weights of fictitious inputs that always produce the constant value 1. Hence, given an initial state \(\mathbf {s}_{\mathbf {0}}\), which is set to the input value for input neurons and to zero for the other neurons, the next state is calculated as:

At each time tick, the state transition of each neuron can influence the outputs values of all adjacent neurons. For subsequent ticks, the initial piece of information contained in \(\mathbf {s}_{\mathbf {0}}\) can traverse subsequent neurons and can influence their states, up to the output neurons.

3.2 Derivation of State and Error Gradients

In this section, the error derivative \(\frac{\partial E(y,{\overline{y}})}{\partial p_i}\) for every parameter \(p_i\) of an MNN are formally determined. It can be observed from Eq. (3) that the unique parameter is \(\mathbf {A}\). Let us assume an MNN with \(n\) neurons, of which \({n_i}\) input neurons and \({n_o}\) output neurons positioned in the matrix according to the iho ordering convention. Let be the MNN processed for t states. The o-th output value is then \(y_o = s_{t-1,o} = {\hat{\varphi }}(\mathbf {s}_{t-2}\mathbf {A})_o\) where \(o \in \{n-{n_o},\ldots ,n-1\}\). Recalling the chain rule:

Let us consider a generic state \({ \mathbf {s}_{\mathbf {n}} }= {\hat{\varphi }}({ \mathbf {t}_{\mathbf {n}}})\) where \({ \mathbf {t}_{\mathbf {n}}}= { \mathbf {s}_{\mathbf {n-1}} }\mathbf {A}\). According to the chain rule, the derivative for a generic output o is:

where \(({ \mathbf {s}_{\mathbf {n-1}} }\mathbf {A})_o\) is:

Let us distinguish two cases in Eq. (6): (i) if \(o=j\), one of the \(A_{k,o}\) is \(A_{i,j}\); (ii) if \(o\ne j\), all the \(A_{k,o}\) are constant with respect to \(A_{i,j}\). Let us consider the case \(o = j\). For linearity of differentiation:

In the partial derivatives \(\frac{\partial ({ s_{n-1,k} }A_{k,j})}{\partial A_{i,j}}\), all the \({ s_{n-1,k} }\) elements depend on \(A_{i,j}\). Moreover, in the case \(k \ne i\), the matrix elements \(A_{k,j}\) are constants with respect to \(A_{i,j}\). Let us distinguish in Eq. (7) the term with \(k = i\):

Since \(A_{k,j}\) is a constant, the first term of Eq. (8) is:

By applying the product rule to the second term of Eq. (8):

The term \(\frac{\partial s_{n-1,i}}{\partial A_{i,j}}A_{i,j}\) can be integrated in the summation of Formula (9):

Similarly, considering the case \(o \ne j\) in Eq. (6), the \(A_{k,o}\) elements are constant with respect to \(A_{i,j}\), leading to:

Hence, Eq. (5) can be formulated as follows:

As a result, Eq. (13) determines a very efficient algorithm for computing the partial derivative of the MNN state, which is, in turn, essential for applying an SGD-based training. In three terms: (i) the partial derivatives of the activation function \(\frac{\partial \varphi ({ t_{n,o} })}{\partial { t_{n,o} }}\), (ii) the previous states \({ s_{n-1,k} }\), and (iii) the partial derivatives previous state \(\frac{\partial { s_{n-1,k} }}{\partial A_{i,j}}\). Consequently, it is possible to compute both the next states \(s_{n,o}\) and the next state partial derivatives \(\frac{\partial s_{n,o}}{\partial A_{i,j}}\), concurrently and in the same iteration step. Moreover, an iteration does not need to store any intermediate values except for those of the current state, which can then be overwritten in the next iteration. Since the error gradient can be directly calculated from state gradient, Eq. (4) results in a simplified iterative method without any memory dependency than the one with the previous step.

Operations in Eq. (13) can be performed with scalars, vectors, and matrices, and then can be reformulated so as to be efficiently performed with tensors. In the next section, Eq. (13) and the error gradient propagation schema are formalized and derived by tensor algebra.

3.2.1 Tensor Algebra Formulation of the Error Gradient

Let us denote by \(\nabla _\mathbf {A}\mathbf {s}_{\mathbf {n}} \in R^{N\times N\times N}\) the tensor of the partial derivatives \(\frac{\partial { s_{n,o} }}{\partial A_{i,j}}\)

and by \(\nabla _\mathbf {x} \varphi (\mathbf {x})\) the tensor of partial derivatives \(\frac{\partial \varphi (x_i)}{\partial x_i}\)

and by \({\tilde{\mathsf {S}}}_n \in R^{N\times N\times N}\) a tensor such that:

Hence, it is possible to formulate Eq. 13 as:

where the symbol \(\odot \) denotes the Hadamard product.

As a result, the error gradient Forward-Only Propagation (FOP) algorithm of an MNN can be formulated in terms of the following steps, i.e., initialization, state derivatives forward propagation, and error derivative computation:

where \({n_t}\) is the number of timesteps needed to the input to traverse the network and provide a sufficiently accurate output. In Recurrent Neural Networks (RNNs) a careful consideration is required to determine the value of \({n_t}\), because any recurrent connection results in a potentially undefined number of loops. However, a relevant advantage of MNN with respect to RNN based on back-propagation is that an MNN does not need to save the prior steps determined by a loop. In RNNs a bounded-history approximation strategy is used to simplify the computation and provide an adequate approximation to the true gradient: relevant information is saved in the fixed number of timesteps \({n_t}\) and any information older than that is forgotten. According to this strategy, in Backpropagation Through Time [10], a backward pass through the most recent \({n_t}\) time steps is performed at each time the network is run through an additional time step. In contrast, in MNN the lack of an error backpropagation sensibly reduces the impact of \({n_t}\): it should be large enough to capture the temporal structure of the problem to model. Thus, after \({n_t}\) timesteps the computation is simply truncated to take the output value. It is worth noting that already in the training phase weights are adjusted according to the specified \({n_t}\). Consequently, recurrent connections are adequately weakened when producing noise on the error, reducing the impact of the recurrent computation.In conclusion, a sufficiently large \({n_t}\) results in an adequate approximation to the true gradient, and it is not a sensitive parameter of the network.

The next section is devoted to the Python implementation and the evaluation of the proposed MNN.

4 Implementation and Experimental Studies

The MNN model has been developed, tested and publicly released on the Github platform, to make possible the initial roll-out of the approach, and to foster its application on various research environments. The implementation is based on numpy [11], a widespread package for tensor algebra in Python. The interested reader is referred to [12] for further implementation details.

The correctness of the symbolic derivatives is a critical aspect of the proposed network. To ensure it, in addition to the symbolic differentiation (SD), another implementation has been generated, in which gradients are calculated via automatic differentiation (AD) [13]. AD transforms a target function into a large graph of symbolic differentiation at elementary operation level, which are highly parallelizable [14]. This computational graph can efficiently manage orders of magnitude of gradients, providing highly accurate numerical values. Nevertheless, the AD-based system can be used for testing purposes only, since it is based on a back-propagated gradient error that has been criticized in the premise of this research work. To develop an efficient and coherent implementation of the proposed approach, the symbolic derivatives are then fundamental. To empirically evaluate the functional equivalence of the SD-based and the AD-based networks, the absolute differences between their corresponding output values have been computed over 100 tests. The two networks have been equipped with 5 input, 10 hidden and 3 output nodes. In each test, the two comparative networks have been set with (the same) random weights, and fed with a batch of (the same) 10 random inputs. As a result, the 95% confidence intervals of both the state and the gradient absolute differences are very low: \(0.00024 \pm 0.000047\) and \(0.000011 \pm 0.0000053\), respectively. The source code of the numerical test code has been publicly released [12].

4.1 Synthetic Problems

In order to investigate the capabilities of the MNN model the dataset generator of scikit-learn [15] has been used to produce five types of two-dimensional dataset well-known in the literature (Figs. 4, 5, 6): (a) Moons: a two-classes dataset made by two interleaving circles; (b) Circles: a two-class dataset made by concentric circles; (c) Spirals, which is considered as a good evaluation of training algorithms [2]; (d) Single Blobs: a three-class dataset made by isotropic Gaussian blobs with standard deviation 1.0, 2.5, 0.5; (e) Double Blobs: a three-class dataset made by two groups of isotropic Gaussian blobs with standard deviation 1.0.

Each dataset is made by 1,000 objects, balanced classes, and contains 10% of noise. Finally, a dataset from UCI Machine Learning Repository has been used, known as Iris [16]. Iris contains three classes of Iris plants. Each class consists of 50 objects characterised by 4 numeric features which describe, respectively, sepal length, sepal width, petal length and petal width. Class Iris Setosa is linearly separable from the other two. However, class Iris Versicolor and Iris Viginica are not separable from each other.

The MNN topology represented in Fig. 3 has been used. Specifically, two output units have been assigned for the two classes datasets, and three output units for the three classes datasets. On the other side, three inputs units have been used: two inputs for the (x, y) features of the dataset, and one input for the bias input (constantly set to 1). 5 hidden units have been used. The Network has been evaluated for 3 time ticks. The ReLU activation function has been used for all units. Finally, the cross-entropy loss has been used as error function. For the experiments using the Iris dataset, it has been used an MNN with 5 input units (4 features and 1 bias), 10 hidden units and 3 output units (one for each class).

The Adaptive Moment Estimation (Adam) [17] has been used to compute adaptive learning rates for each parameter of the gradient descent optimization algorithms, carried out with batch method. A learning rate of 0.001 has been set. The training has been carried on for 1000 epochs.

The dataset has been partitioned into 70% and 30% for training and testing sets, respectively. Figures 4, 5, and 6 show with different gray levels the resulting partitioning of the input domain made by the MNN. Here, the generalization capabilities of the network are apparent. As a result, the MNN achieved the 100% accuracy for all datasets. In terms of complexity, the number of nodes of the MNN are \(3+5+2=10\) and \(3+5+3=11\) for 2 and 3 class datasets, respectively. The corresponding number of parameters (weights) is \(10 \cdot 10=100\) and \(11 \cdot 11=121\), respectively. The interested reader is referred to [12] for a color animation of the MNN partitioning for each iteration. Table 1 shows the accuracy of the Spiral model generated by an MNN for increasing hidden neurons. It is interesting that, with 15 hidden neurons the problem is successfully modeled. Moreover, for a lower number of neurons, up to 7, the accuracy decreases gradually, in contrast to MLP and other approaches proposed in [2].

Figure 6a, b show the training loss and the training accuracy over time for the Iris dataset. It is worth to note the convergence capabilities of the network. As a result, the MNN achieved \(97.00\% \pm 1.62\%\) accuracy over 10 runs with a \(3\sigma \) confidence interval. In terms of complexity, the number of nodes of the MNN is \(5+10+3=18\). The corresponding number of parameters (weights) is \(18 \cdot 18=324\).

4.2 Real-World Problems

To investigate the effectiveness of the MNN architecture, some experiments have been carried out on two real-world problems used for benchmarking machine learning algorithms: MNIST [18] and Fashion-MNIST [19]. MNIST is a database of handwritten digits images, whereas Fashion-MNIST is a dataset of fashion article images. Both datasets are made by a training set of 60,000 examples, and a test set of 10,000 examples. Each example is a \(28 \times 28\) image, with pixels in 0–255 grayscale values, associated with a class label of 10 possible classes. The task is to classify a given image into one of such 10 classes. Figure 7 shows some representative samples of the datasets. Both datasets contain samples ambiguous even for humans: MNIST and Fashion-MNIST have an average human performance of 98.29% [20] and 83.5% [19], respectively. Such datasets are widely used and deeply investigated: top-performing models, based on convolutional neural networks, achieve a classification accuracy higher than 99%, and have a layered structure made by feature extraction and classification. Feature extraction can be performed by alternating convolution and subsampling layers, whereas classification can be performed via dense layers, such as a fully connected feed forward (i.e, MLP-based) neural network. The purpose of this section is to use an MNN as a classification layer, to carry out a comparative analysis between MNN and MLP. Rather than providing the top performance, this solution simplifies the design of the classification layer for the sake of simplicity. Indeed, for a fair comparison it is essential to avoid complex architectures with many hyper-parameters, whose particular choices should be subject to in-depth discussion. Similarly, there are many choices for convolutional architectures, but using a general-purpose architecture with a high degree of automation reduces such choices.

With this premise, a Convolutional Auto-Encoder (CAE) is used for feature extraction, followed by an MNN or MLP based network for classification. The CAE is commonly used for unsupervised data encoding and noise reduction [21]. The following architecture is used in experiments. Encoding: a convolutional layer with a \(3 \times 3\) kernel size and 16 channels, stride 3 and padding 1; a rectified linear unit (ReLU); a max pooling layer with \(2 \times 2\) kernel size, stride 2; a convolutional layer with a \(3 \times 3\) kernel size and 8 channels, stride 2 and padding 1; a ReLU; a max pooling layer with \(2 \times 2\) kernel size, stride 2. Decoding: a transpose convolutional layer with \(2 \times 2\) kernel size and 16 channels, stride 2; a ReLU; a transpose convolutional layer with \(5 \times 5\) kernel size and 8 channels, stride 3, padding 1; a ReLU; a transpose convolutional layer with \(2 \times 2\) kernel size and 1 channel, stride 2, padding 1; a hyperbolic tangent activation function. Overall, the CAE provides 32 features to the classification layer. Both MLP and MNN classification layers have been equipped with \({n_i}=32\) input and \({n_o}=10\) output neurons. The number \({n_h}\) of hidden neurons has been set accordingly, to have the same number of overall connections for the two comparative networks. Since both datasets are spatial, in the MNN network two recursion steps, i.e., \({n_t}= 3\) , are sufficient. The MNN network is statically pruned for better efficiency. Since the MNN model generalizes the other perceptron-based topologies, there are custom pruning that makes the MNN fully equivalent, for instance, to an RNN or to an MLP. However, for a significant comparison, such custom pruning is avoided, in favor of a randomly determined pruning.

Figure 8 represents the adjacency matrix of the MNN based classification layer. Here, \(\mathbb {I}\), \(\mathbb {H}\), and \(\mathbb {O}\), represent the sets of indexes corresponding to the input, hidden and output neurons. Each block can then be characterized by a pair of related sets. In particular, white blocks have zero connections, whereas dotted blocks have a given percentage of randomly selected connections.

Specifically, the white blocks represent the following connection types: all-to-input (\(\mathbb {I} \rightarrow \mathbb {I}\), \(\mathbb {H} \rightarrow \mathbb {I}\), \(\mathbb {O} \rightarrow \mathbb {I}\)), output-to-all (\(\mathbb {O} \rightarrow \mathbb {I}\), \(\mathbb {O} \rightarrow \mathbb {H}\), \(\mathbb {O} \rightarrow \mathbb {O}\)). The dotted blocks represent the following connection types: input-to-hidden (\(\mathbb {I} \rightarrow \mathbb {H}\)), input-to-output (\(\mathbb {I} \rightarrow \mathbb {O}\)), hidden-to-hidden (\(\mathbb {H} \rightarrow \mathbb {H}\)), and hidden-to-output (\(\mathbb {H} \rightarrow \mathbb {O}\)). As a consequence, assuming \({n_h}= 50\), the following connections and biases are available in the dotted area: \((1 - p) \cdot ({n_i}+{n_h}) \cdot ({n_h}+ {n_o}) + ({n_h}+ {n_o})\), where p is the pruning percentage, and the last term \(({n_h}+ {n_o})\) is the number of biases of hidden and output nodes. In order to have a similar number of connections, the MLP hidden neurons are made by two layers of \(h_1\) and \(h_2\) neurons. Hence, the total number of connections, considering also biases, is \(({n_i}\cdot h_1 + h_1) + (h_1 \cdot h_2 + h_2) + (h_2 \cdot {n_o}+ {n_o}) \). The 95% confidence intervals achieved via the MNN and MLP based classifiers, calculated over 10 trials, are summarized in Table 2. It important to note that, for each trial, the percentage of randomly selected connections in MNN is completely renewed. As previously discussed, such classification rates are related to the features generated by the CAE layer. Consequently, the rates are not comparable with the top absolute performance of the literature. The significant result is that the MNN and the MLP based classifiers achieve very similar performance for increasing connections. For the sake of comparability, Table 2 shows only the settings with a very similar number of connections for the two networks. The best performance, of about 0.80 classification rate, is achieved via 1044-1047 connections. To evaluate the complexity of the classification task, it has been experimentally verified that for increasing number of connections (up to more than 3 thousand connections), both classifiers are not able to overcome the 0.8 classification rate.

5 Conclusions and Future Work

The purpose of this paper is to formally introduce recent advances leading to the MNNs, providing the key points to the reader.

Overall, the main advantages of the MNN model with the related FOP algorithm are: (i) the state partial derivatives can be computed along the forward propagation; (ii) the error gradient can be directly computed from state gradient; (iii) the state partial derivative update makes use only of short-lived variables, which can be overwritten at each state iteration; (iv) the state partial derivatives concern only one multidimensional parameter; (v) the overall gradient computation relies only on tensor multiplications, which can be easily distributed on parallel computing, thus potentially enabling large-scale sparse ANNs training [22].

In contrast, the BP-based family of algorithms is limited to layer-wise architectures, and needs to store all intermediate layer outputs, by comprising a forward and backward propagation through the network. On the other side, the CG-based gradient computation is not constrained in terms of network architecture, but it needs to store a large graph topology and the partial derivatives of each computation node, and it needs to compute all factoring paths for each parameter.

Due to its unconstrained structure, an interesting research perspective of MNNs is to adopt structural regularization techniques to dynamically drive the network topology. For small datasets the network topology is highly dense to exploit the available neurons, and then the adjacency matrix is highly dense. For large datasets, in general there are two strategies that can make the adjacency matrix sparse: (a) offline pruning, i.e., to remove some types of connections according to some heuristics; (b) online pruning, i.e., to remove iteratively some connections that do not contribute to model, in the training phase. The two strategies can be combined. In general, the possibility to have a sparse matrix depends on the problem complexity. Since the MNN needs large matrix operations, such strategies should also be supported by a framework implementation that efficiently exploits the hardware resources, e.g. via memory caching and highly parallel computation. However, the commercially available machine learning framework provide optimized libraries for back-propagated models. As a consequence, to test the MNN architecture on very large datasets, an optimized framework should be implemented on specific hardware. Such development is a long-term task and it is out of the scope of this paper, which focuses on the formal derivation of the technique and on pilot experimentation showing its potential application.

As a future work, in order to compare BP, CG and FOP according to a performance perspective, the scalability of each algorithm should be evaluated in terms of computational complexity. Moreover, a statistical performance evaluation should be carried out on benchmark problems, considering large-scale applications.

References

Galatolo F, Cimino M, Vaglini G (2019) Using stigmergy as a computational memory in the design of recurrent neural networks. In: Proceedings of the 8th international conference on pattern recognition applications and methods

Wilamowski BM, Yu H (2010) Neural network learning without backpropagation. IEEE Trans Neural Netw 21:1793–1803

Guo W, Huang H, Huang T (2017) Complex-valued feedforward neural networks learning without backpropagation. In: Liu D, Xie S, Li Y, Zhao D, El-Alfy E-SM (eds) Neural information processing. Springer, Cham, pp 100–107

Ma KW-D, Lewis JP, Kleijn WB (2019) The hsic bottleneck: deep learning without back-propagation, ArXiv:1908.01580v3

Jaderberg M, Czarnecki WM, Osindero S, Vinyals O, Graves A, Silver D, Kavukcuoglu K (2017) Decoupled neural interfaces using synthetic gradients. In: Proceedings of the 34th international conference on machine learning, Volume 70, pp. 1627–1635, JMLR.org

Keller JM, Liu D, Fogel DB (2016) Multilayer neural networks and backpropagation, fundamentals of computational intelligence: neural networks, fuzzy systems and evolutionary computation. Wiley, New York, p 378

Werbos PJ (1994) The roots of backpropagation: from ordered derivatives to neural networks and political forecasting, vol 1. Wiley, New York

Theodoridis S (2015) Chapter 5—stochastic gradient descent: the lms algorithm and its family. In: Theodoridis S (ed) Mach Learn. Academic Press, Oxford, pp 161–231

Goodfellow I, Bengio Y, Courville A (2016) Deep learning. MIT Press, Cambridge

Tang H, Glass J (2018) On training recurrent networks with truncated backpropagation through time in speech recognition. In: 2018 IEEE spoken language technology workshop (SLT). IEEE, pp 48–55

Oliphant T (2006) NumPy: a guide to NumPy. Trelgol Publishing, Austin

Galatolo F (2019) https://github.com/galatolofederico/mesh-neural-networks. GitHub repository

Paszke A, Gross S, Chintala S, Chanan G, Yang E, DeVito Z, Lin Z, Desmaison A, Antiga L, Lerer A (2017) Automatic differentiation in pytorch

Baydin AG, Pearlmutter BA, Radul AA, Siskind JM (2017) Automatic differentiation in machine learning: a survey. J Mach Learn Res 18(1):5595–5637

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, Duchesnay E (2011) Scikit-learn: machine learning in Python. J Mach Learn Res 12:2825–2830

Anderson E (1936) The species problem in Iris. Ann Missouri Bot Garden 23:457–509

Kingma DP, Ba J (2014) Adam: a method for stochastic optimization. In: International conference on learning representations

LeCun Y, Bottou L, Bengio Y, Haffner P (1998) Gradient-based learning applied to document recognition. Proc IEEE 86(11):2278–2324

Xiao H, Rasul K, Vollgraf R (2017) Fashion-mnist: a novel image dataset for benchmarking machine learning algorithms, arXiv:1708.07747

Simard P, LeCun Y, Denker J (1993) Efficient pattern recognition using a new transformation distance. In: Hanson S, Cowan J, Giles C (eds) Advances in neural information processing systems, vol 5. Morgan-Kaufmann, Burlington, pp 50–58

Chen M, Shi X, Zhang Y, Wu D, Guizani M (2017) Deep features learning for medical image analysis with convolutional autoencoder neural network. IEEE Trans Big Data. https://doi.org/10.1109/TBDATA.2017.2717439

Irony D, Toledo S, Tiskin A (2004) Communication lower bounds for distributed-memory matrix multiplication. J Parallel Distrib Comput 64(9):1017–1026

Acknowledgements

This research was partially carried out in the framework of the following projects: (i) PRA 2018_81 project entitled “Wearable sensor systems: personalized analysis and data security in healthcare” funded by the University of Pisa; (ii) CrossLab project (Departments of Excellence), funded by the Italian Ministry of Education and Research (MIUR); (iii) “KiFoot: Sensorized footwear for gait analysis” project, co-funded by the Tuscany Region (Italy) under the PAR FAS 2007-2013 fund and the FAR fund of the Ministry of Education, University and Research (MIUR).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Galatolo, F.A., Cimino, M.G.C.A. & Vaglini, G. Formal Derivation of Mesh Neural Networks with Their Forward-Only Gradient Propagation. Neural Process Lett 53, 1963–1978 (2021). https://doi.org/10.1007/s11063-021-10490-1

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11063-021-10490-1