Abstract

A hyperspectral image contains abundant spectral information in hundreds of contiguous spectral bands, which allows different classes to be distinguished in greater detail. However, the number of available training samples is usually limited, and the high dimensionality of hyperspectral data increases the computational complexity and may even degrade the classification accuracy. In addition, the original spectra alone can hardly represent or reveal the intrinsic geometric structure of a hyperspectral image. Feature extraction is therefore an important step before the classification of high-dimensional data. In this paper, we propose a novel supervised feature extraction method that uses a new geometric mean vector, instead of the traditional mean vector of state-of-the-art methods, to construct the geometric between-class scatter matrix (\(S_b^G\)) and the geometric within-class scatter matrix (\(S_w^G\)). The geometric mean vector not only reveals the intrinsic geometric structure of the hyperspectral image but also improves the ability to learn nonlinear correlation features for maximum likelihood classification (MLC). The proposed method, called geometric mean feature space discriminant analysis (GmFSDA), uses three measures to produce the extracted features. In its first step, GmFSDA maximizes the geometric between-spectral scatter matrix to increase the difference between the extracted features. In its second step, GmFSDA maximizes the between-class scatter and minimizes the within-class scatter simultaneously. Experimental results on three real-world hyperspectral datasets show that, with MLC, GmFSDA performs better than other feature extraction methods in the small sample size situation.

1 Introduction

A hyperspectral image is a data cube containing abundant spectral information in hundreds of contiguous spectral bands. The spectral response curve of each pixel, i.e., the plot of band intensity values versus band number, allows many subtle objects and materials to be distinguished. Hyperspectral imaging has therefore been widely applied to land-cover classification. However, hyperspectral image analysis faces many challenges: the number of available training samples is limited, and the high dimensionality of hyperspectral data increases the computational complexity and may also degrade the classification accuracy. Hyperspectral imaging can discriminate land-cover types better than multispectral imaging, but as the number of spectral bands increases, the classification accuracy changes greatly [1]: it first increases slowly until it reaches a maximum, and then decreases dramatically when only a small number of training samples are available. This peaking behavior is known as the Hughes phenomenon. Generally speaking, feature reduction can relieve the Hughes phenomenon and achieve higher classification accuracy. Feature reduction seeks a transformation that maps the original data to a lower-dimensional space in which the essential discriminative information is mainly preserved. Feature reduction methods can be broadly divided into two groups: feature selection and feature extraction [2,3,4,5,6,7,8,9,10,11]. Feature selection methods generate no new features; they only select a subset of the original features. Inspired by the clonal selection theory of artificial immune systems, Zhang et al. [11] proposed a stochastic search strategy.
In this strategy, dimensionality reduction is formulated as an optimization problem that searches the feature space for an optimum with fewer features. Feature extraction mainly aims to reduce the dimensionality of the original data while keeping as much intrinsic information as possible, exploring the inherent low-dimensional structure, reducing the computational complexity, and improving the performance of data analysis. Generally speaking, features selected by feature selection techniques maintain the physical meaning of the original data, whereas features obtained by feature extraction methods are more discriminative. The authors of [7] pointed out that feature extraction techniques can provide more effective features than feature selection techniques. Therefore, feature extraction is a very important preprocessing step for hyperspectral image classification.

Feature extraction techniques in the hyperspectral image processing field fall into three categories: unsupervised, supervised and semi-supervised. Unsupervised feature extraction methods mainly seek another representation of the original data in a lower-dimensional subspace that satisfies some criterion; they usually do not consider class discrimination and do not need labeled samples. Principal component analysis (PCA) [12] is one of the best known unsupervised feature extraction methods and is widely used for hyperspectral images [1, 13, 14]. The main idea of PCA is to project the original data onto a new subspace that minimizes the reconstruction error in the mean squared sense. The nonlinear version of PCA has been shown to be more effective than PCA in hyperspectral image analysis, at the cost of a heavier computational burden [15]. Villa et al. [16] proposed an unsupervised classification method for hyperspectral images with low spatial resolution. Because the data are complicated and high-dimensional, unsupervised classification of hyperspectral remote sensing images still poses huge challenges; Zhang et al. [42] proposed an unsupervised hyperspectral image classification framework based on robust manifold matrix factorization. To cope with the high dimensionality of hyperspectral images, Zhang et al. used a unified low-rank matrix factorization to perform dimensionality reduction and data clustering jointly.

Supervised feature extraction methods mainly rely on labeled samples to infer class separability. Linear discriminant analysis (LDA) [17] and nonparametric weighted feature extraction (NWFE) [18] are the most popular supervised feature extraction methods, and both have been widely used in hyperspectral image classification. In LDA, the between-class scatter matrix (\(S_b\)) is maximized and the within-class scatter matrix (\(S_w\)) is minimized simultaneously. However, LDA has three inherent drawbacks. (i) LDA performs very poorly when \(S_w\) is singular, which is common for high-dimensional data. (ii) LDA can extract at most \(\mathrm{{c}}-1\) features (where c is the number of classes), which may not be sufficient to represent the essential information of the original data in hyperspectral image classification. (iii) LDA works poorly when the classes have non-normal or multimodal mixture distributions. NWFE uses weighted means to compute nonparametric scatter matrices, which allows it to extract more than \(\mathrm{{c}}-1\) features.

Kernel principal component analysis (KPCA) [19] and generalized discriminant analysis (GDA) [20] are the nonlinear forms of PCA and LDA obtained with the kernel trick, which maps the input data from the original space to a feature space in which inner products can be computed by a kernel function. However, the computational burden of these nonlinear representations (KPCA and GDA) is very heavy.

Semi-supervised feature extraction methods use both labeled and unlabeled samples [21] to find a good projection, and they can preserve certain potential properties of the original data by adding a regularization term. In addition, several local feature extraction methods have been proposed to preserve the properties of local neighborhoods, such as locality preserving projection (LPP) [22], linear local tangent space alignment (LLTSA) [23], maximum margin projection (MMP) [24], and monogenic binary coding (MBC) [25] for face representation and recognition. Zhou et al. [26] extracted discriminant information from the filtered image by using a spectral-domain regularized local discriminant embedding and a spatial-domain local pixel neighborhood preserving embedding; the spatial-spectral discriminant information is then combined to produce multi-scale spatial-spectral features. In real-world applications, however, precisely assessing the global or local structure of the original data manifold is very difficult. Hence, various hybrid criteria have been used to process hyperspectral images [27,28,29,30,31,32]. There are also studies based on multiple features [43,44,45,46,47], in which the authors learned a unified low-dimensional representation of the multiple features for subsequent classification.

Recently, from a curve-fitting point of view, Hosseini et al. [2, 3, 33, 34] and Li et al. [35] exploited the geometric aspects of the spectral response curve and the rich feature information of the original data for hyperspectral image classification. In these methods, the reflectance coefficient vector of each pixel is used in the classification process. Hosseini et al. treated the elements of the feature vector as points on a curve and used rational function curve fitting (RFCF). Li et al. extracted hyperspectral features by using Maclaurin series function curve fitting (MFCF), and pointed out three main shortcomings of RFCF feature extraction [35]. Neither RFCF nor MFCF needs to transform the data into a projection subspace. However, neither method fully reveals the inherent geometric structure of the hyperspectral image, because their \(S_b\) and \(S_w\) are formed from sample averages; this is especially true when the hyperspectral image contains outliers. Imani et al. [36] proposed feature space discriminant analysis (FSDA) to extract features from hyperspectral images. The main idea of FSDA is to reduce the redundant spectral information in the extracted features by introducing a between-spectral scatter based on the class sample average vectors. With only a few training samples under non-ideal conditions, however, the class sample average vector gives a poor estimate and reveals little of the inherent geometric structure, so the classification performance of FSDA with MLC declines significantly.
To further reveal the inherent geometric structure and obtain more robust features from hyperspectral images, we propose in this paper a supervised feature extraction method that performs well with small training sets. The proposed method uses a geometric mean vector, instead of the traditional mean vector of state-of-the-art methods, to construct the geometric between-class scatter matrix (\(S_b^G\)) and the geometric within-class scatter matrix (\(S_w^G\)); hence it is called geometric mean feature space discriminant analysis (GmFSDA).

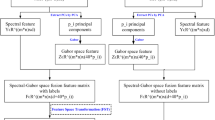

Let \({\mathcal {X}}=\{x_i: x_i\in {\mathbb {R}}^d\}_{i=1}^N\) be the training dataset, with geometric mean \(\mathbf{m }\), belonging to c classes. Class i has geometric mean \(m_i\) and contains \(n_i\) data points, where \(N=\sum _{i=1}^cn_i\). To extract p features from the \(d\times 1\) original feature vector \(\mathbf{x }\), a transformation matrix \(\mathbf{A }\) is used, and the extracted feature vector is \(\mathbf{y }_{p\times 1}=\mathbf{A }_{p\times d}\mathbf{x }_{d\times 1}\). GmFSDA introduces the geometric between-spectral scatter and maximizes it to reduce the redundant spectral information in the extracted features. Like FSDA, GmFSDA is a two-step method: it first uses a primary projection to make the features as different from each other as possible, and then uses another projection matrix to maximize the between-class scatter and minimize the within-class scatter simultaneously.

The rest of this paper is organized as follows. Section 2 introduces the proposed feature extraction method, geometric mean feature space discriminant analysis (GmFSDA). Experimental results and discussion are presented in Sect. 3. Finally, Sect. 4 concludes the paper.

2 Geometric Mean Feature Space Discriminant Analysis (GmFSDA)

2.1 The Basic Idea of GmFSDA

With only a few training samples, it is very important to extract discriminative spectral features and to build a powerful representation framework for hyperspectral images. Some popular feature extraction methods, such as PCA, KPCA, MMP, NWFE, RFCF and MFCF, do not explicitly consider the difference between spectral bands in the transformed feature space. In GmFSDA, we consider this difference in addition to the separability between classes. GmFSDA thus has three main purposes. (i) The intrinsic geometric structure of the hyperspectral image is revealed more exactly, so that GmFSDA can learn nonlinear correlation features with good discriminating power for MLC. (ii) The produced features are as different from each other as possible. (iii) The separability between classes is increased. The geometric mean of n positive numbers \(x_1, x_2, \ldots , x_n\) is defined as the positive n-th root of their product [37]:

\[x_G=\Big (\prod _{i=1}^{n}x_i\Big )^{1/n}=\sqrt[n]{x_1x_2\cdots x_n}. \qquad (1)\]

Note that the geometric mean is undefined or meaningless if a dataset contains negative numbers. For example, the geometric mean of 4 and 9 is the square root of their product, i.e., \(x_G=\sqrt{4\cdot 9}=6\).
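A minimal sketch of Eq. (1), assuming Python with NumPy (the paper's own experiments used MATLAB). The helper name `geometric_mean` is hypothetical. It averages logarithms, which is mathematically equivalent to the n-th root of the product but avoids overflow or underflow when hundreds of values are multiplied, and it rejects non-positive inputs. Zero is rejected too, because its logarithm is undefined, even though the geometric mean of a set containing zero is mathematically 0.

```python
import numpy as np


def geometric_mean(values):
    """Geometric mean of strictly positive numbers, as in Eq. (1).

    Computed as exp(mean(log(x))), which equals the n-th root of the product
    but does not overflow or underflow for long products.
    """
    x = np.asarray(values, dtype=float)
    if x.size == 0 or np.any(x <= 0):
        # log is undefined for non-positive values
        raise ValueError("geometric mean needs a non-empty set of positive numbers")
    return float(np.exp(np.mean(np.log(x))))


print(round(geometric_mean([4, 9]), 10))  # 6.0
```

SciPy users can obtain the same quantity from `scipy.stats.gmean`.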

Let \(m_{ik}^G\) be the geometric mean of class k in spectral feature (band) i, and let c and d be the number of classes and the number of features (spectral bands), respectively. The geometric means of the classes in d dimensions are collected in the following matrix:

where \(n_k\) is the number of samples in class k, \(x_{ikj}\) is the j-th training sample of class k in the i-th band, and the geometric mean \(m_{ik}^G\) is given by Eq. (3):

\[m_{ik}^G=\Big (\prod _{j=1}^{n_k}x_{ikj}\Big )^{1/n_k}. \qquad (3)\]

We can observe the difference between classes by reading the above matrix [formula (2)] vertically, and the difference between spectral features by reading it horizontally. In the first step of GmFSDA, we maximize the difference between spectral features; in the second step, we maximize the difference between classes. Accordingly, two types of vectors can be obtained from the above matrix.

Hence, \(\mathbf{h }_i\) is the geometric mean of the c classes in the i-th dimension and \(\mathbf{M }_k\) is the geometric mean of class k in d dimensions; that is, \(\mathbf{h }_i\) is the representative of feature (band) i and \(\mathbf{M }_k\) is the representative of class k. To produce features that are as different from each other as possible and to increase class discrimination, GmFSDA maximizes the geometric between-spectral scatter and the between-class scatter, from which the projection matrices are obtained. The geometric between-spectral scatter matrix (\(S_f^G\)) is calculated by Eq. (6).

where

\((\mathbf{h }_i)_{c\times 1}\) is a column vector and \(S_f^G\) is a \(c\times c\) matrix. In GmFSDA, the original feature space is transformed into a new feature space by a primary projection matrix \(W\in {\mathbb {R}}^{c\times c}\) such that the features differ more from each other in the transformed space. We maximize \(tr(S_f^G)\): the eigenvalues of \(S_f^G\) are sorted in descending order, and W is formed from the associated eigenvectors. In the transformed space, we then obtain the transformed vectors \(\mathbf{g }_i\in {\mathbb {R}}^{c\times 1}\) (\(i=1,2,\ldots ,d\)) as follows:

The class geometric mean matrix in formula (2) is transformed to formula (9) with the above transformations.

The vectors \(\mathbf{h }_i\) and \(\mathbf{M }_k\) are transformed into vectors \(\mathbf{g }_i\) and \(\mathbf{R }_k\) in terms of formula (9).
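The first step of the basic GmFSDA can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the authors' implementation: the function names `class_geometric_means` and `primary_projection` are hypothetical, the data are synthetic, and two forms are assumed by analogy with FSDA, namely \(S_f^G=\sum _{i=1}^d(\mathbf{h }_i-\overline{\mathbf{h }})(\mathbf{h }_i-\overline{\mathbf{h }})^T\) with \(\overline{\mathbf{h }}\) the average of the \(\mathbf{h }_i\) over the d bands, and \(\mathbf{g }_i=W^T\mathbf{h }_i\). The class geometric mean matrix is stored as \(c\times d\), so that its columns are the \(c\times 1\) vectors \(\mathbf{h }_i\) and its rows are the \(\mathbf{M }_k\).

```python
import numpy as np


def class_geometric_means(X, labels):
    """c x d matrix whose (k, i) entry is m_ik^G, Eq. (3).

    X: (N, d) array of strictly positive spectra; labels: (N,) class ids.
    Row k is M_k (class k over the d bands); column i is h_i (c classes in band i).
    """
    classes = np.unique(labels)
    return np.vstack([np.exp(np.log(X[labels == k]).mean(axis=0)) for k in classes])


def primary_projection(G):
    """Step 1 sketch: S_f^G from the band vectors h_i, and its eigenvectors W.

    Assumes S_f^G = sum_i (h_i - h_bar)(h_i - h_bar)^T, h_bar = mean of h_i over bands.
    """
    D = G - G.mean(axis=1, keepdims=True)   # column i is h_i - h_bar
    S_f = D @ D.T                           # c x c
    evals, evecs = np.linalg.eigh(S_f)      # eigh returns ascending eigenvalues
    order = np.argsort(evals)[::-1]         # sort in descending order
    return S_f, evals[order], evecs[:, order]


rng = np.random.default_rng(0)
X = rng.uniform(0.1, 1.0, size=(40, 12))    # 40 synthetic positive pixels, 12 bands
labels = np.repeat(np.arange(4), 10)        # 4 classes, 10 samples each
G = class_geometric_means(X, labels)
S_f, evals, W = primary_projection(G)
g = W.T @ G                                 # column i is g_i = W^T h_i (assumed form)
print(G.shape, S_f.shape, g.shape)          # (4, 12) (4, 4) (4, 12)
```

The rows of `g` then play the role of the transformed class representatives \(\mathbf{R }_k\).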

Formula (9) shows that the difference between spectral bands is increased in the obtained feature space. Therefore, in the second step of GmFSDA, we maximize the between-class scatter matrix (\(S_b\)) to increase class discrimination in this feature space. \(S_b\) is calculated as follows:

where

As in the first step, we maximize \(tr(S_b)\). To extract p features from the original \(d\times 1\) feature vector (\(\mathbf{x }\)), the projection matrix A is formed from the p eigenvectors of \(S_b\) associated with its p largest eigenvalues.
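An end-to-end sketch of the two-step basic GmFSDA, assuming NumPy and synthetic positive data. The function name `gmfsda_basic` is hypothetical, and the forms of the scatter matrices are assumptions modeled on FSDA: \(S_f^G=\sum _i(\mathbf{h }_i-\overline{\mathbf{h }})(\mathbf{h }_i-\overline{\mathbf{h }})^T\), \(\mathbf{g }_i=W^T\mathbf{h }_i\), and \(S_b=\sum _k(\mathbf{R }_k-\overline{\mathbf{R }})(\mathbf{R }_k-\overline{\mathbf{R }})^T\), where \(\mathbf{R }_k\) is row k of the transformed matrix and \(\overline{\mathbf{R }}\) is their average over classes.

```python
import numpy as np


def gmfsda_basic(X, labels, p):
    """Sketch of the two-step basic GmFSDA of Sect. 2.1 (assumed equation forms)."""
    classes = np.unique(labels)
    # Eq. (3): class geometric means, rows = classes (M_k), columns = bands (h_i)
    G = np.vstack([np.exp(np.log(X[labels == k]).mean(axis=0)) for k in classes])
    # Step 1: geometric between-spectral scatter over the band vectors h_i
    Dh = G - G.mean(axis=1, keepdims=True)
    evals, evecs = np.linalg.eigh(Dh @ Dh.T)
    W = evecs[:, np.argsort(evals)[::-1]]          # c x c, descending eigenvalues
    # Transformed band vectors g_i = W^T h_i; row k of T is R_k (length d)
    T = W.T @ G
    # Step 2: between-class scatter of the R_k around their average
    Dr = T - T.mean(axis=0, keepdims=True)
    S_b = Dr.T @ Dr                                # d x d
    evals_b, evecs_b = np.linalg.eigh(S_b)
    A = evecs_b[:, np.argsort(evals_b)[::-1][:p]].T  # p x d, top-p eigenvectors
    return A


rng = np.random.default_rng(0)
X = rng.uniform(0.1, 1.0, size=(60, 20))           # synthetic positive spectra
labels = np.repeat(np.arange(4), 15)
A = gmfsda_basic(X, labels, p=3)
Y = X @ A.T                                        # each row is y = A x for one sample
print(A.shape, Y.shape)                            # (3, 20) (60, 3)
```

Because the c vectors \(\mathbf{R }_k\) are centered before forming \(S_b\), its rank is at most \(c-1\), so this basic form yields at most \(c-1\) informative features, which is the limitation Sect. 2.3 addresses.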

2.2 The Effectiveness of the Geometric Mean

In probability theory and statistics, the geometric mean of a set of n positive numbers is defined as in Eq. (1). Like the sample average, the geometric mean [37] can be used to estimate the central tendency of data, and it is generally more resistant to large outliers (or right-skewed data). The following example illustrates this. Suppose \(\hbox {Data}=[4.3, 4.0, 20, 4.1, 1, 4.2, 4.4]\), with the outliers “1” and “20”. The average value is \(x_m=6\) and the geometric mean is \(x_G\approx 4.274\). Thus 4.274 is much closer than 6 to the central tendency of the remaining values, \(\frac{4+4.1+4.2+4.3+4.4}{5}=4.2\). In this example, therefore, the geometric mean is a more effective estimate of the central tendency than the average value.
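The numbers in this example can be reproduced with a few lines of NumPy (a sketch; the variable names are illustrative only):

```python
import numpy as np

data = np.array([4.3, 4.0, 20, 4.1, 1, 4.2, 4.4])
arith = data.mean()                                  # arithmetic mean x_m
geo = np.exp(np.log(data).mean())                    # geometric mean x_G, Eq. (1)
center = np.array([4.0, 4.1, 4.2, 4.3, 4.4]).mean()  # values without the outliers
print(f"x_m={arith:.3f}  x_G={geo:.3f}  center={center:.3f}")
# x_m=6.000  x_G=4.274  center=4.200
```

Because the geometric mean averages logarithms, it damps large values such as 20 but is pulled down more strongly by values close to zero, such as the outlier 1 here; the robustness argument therefore applies mainly to large outliers and right-skewed data.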

2.3 Development and Improvement of GmFSDA

To improve the classification accuracy, in the second step of GmFSDA we propose to minimize the within-class scatter matrix while maximizing the between-class scatter matrix for class discrimination. However, at most \(c-1\) features can then be extracted, because the rank of \(S_b\) in Eq. (12) is limited. We therefore also introduce a nonparametric geometric mean between-class scatter matrix that allows more than \(c-1\) features to be extracted. In this improved GmFSDA for hyperspectral images, we use the training samples of the classes instead of their geometric means, so the training sample matrix is as follows:

where \(x_{ikj}\) (\(i=1,2,\ldots ,d; k=1,2,\ldots ,c; j=1,2,\ldots ,n_k\)) is the j-th training sample of class k in the i-th band and \(n_k\) is the number of training samples of class k. In this case, the vector \(\mathbf{h }_{ij}\) is defined as follows:

As described in Sect. 2.1, \(\mathbf{h }_{ij}\) must contain one representative from each class. In Sect. 2.1 the representative of each class is its geometric mean; when the training samples are used instead, the representative of each class is one training sample from that class. In other words, all classes must be involved in composing \(\mathbf{h }_{ij}\), so the same number of training samples must be used in all classes. This common number is the minimum number of training samples per class, \(n_t=\min \{n_k\}_{k=1}^c\). Following Sect. 2.1, the first step of GmFSDA calculates the geometric between-spectral scatter matrix (\(S_f^G\)) as follows:

where \(\overline{\mathbf{h }}_j=\frac{1}{d}\sum _{i=1}^d\mathbf{h }_{ij}\). The projection matrix \(W\in {\mathbb {R}}^{c\times c}\) is obtained by maximizing \(tr(S_f^G)\). The original feature space of the hyperspectral image is then transformed by W into a new feature space in which the spectral bands differ more from each other; that is, the spectral scatter is increased in the transformed feature space by the following transformation:

The sample matrix \(X_j\) (\(j=1,2,\ldots ,n_t\)) in (15) is transformed to (19) as follows by using the above transformations.

The vectors \(\mathbf{g }_{ij}\) and \(\mathbf{R }_{kj}\) are defined as follows:

Therefore, in the second step of GmFSDA, we maximize the class discrimination and the scatter matrices \(S_b\) and \(S_w\) can be obtained in the transformed feature space as follows:

where

The matrix \(S_w\) may be singular for high-dimensional data with small sample sizes, in which case the solution cannot be obtained. We therefore regularize \(S_w\) as follows [36]:

Finding the projection matrix A is usually cast as the following eigenvalue problem.

Therefore, the projection matrix A is obtained by maximizing \(tr(S_w^{-1}S_b)\) using Eq. (26) and the Fisher criterion [38, 39]. To extract p features from the \(d\times 1\) original feature vector (x), the p eigenvectors of \(S_w^{-1}S_b\) associated with its p largest eigenvalues compose the projection matrix A. Thus, we have \(\mathbf{y }_{p\times 1}=A_{p\times d}\mathbf{x }_{d\times 1}\).
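The improved procedure of this subsection can be sketched end to end in NumPy. Several forms are assumptions rather than the paper's equations, and the names `sample_matrices`, `gmfsda_improved` and the shrinkage weight `gamma` are hypothetical. (a) \(S_f^G=\sum _j\sum _i(\mathbf{h }_{ij}-\overline{\mathbf{h }}_j)(\mathbf{h }_{ij}-\overline{\mathbf{h }}_j)^T\), consistent with the definition of \(\overline{\mathbf{h }}_j\). (b) \(\mathbf{R }_{kj}\) is row k of \(W^TX_j\). (c) The nonparametric \(S_b\) is accumulated over the \(n_t\) sample sets, \(S_b=\sum _j\sum _k(\mathbf{R }_{kj}-\overline{\mathbf{R }}_j)(\mathbf{R }_{kj}-\overline{\mathbf{R }}_j)^T\) with \(\overline{\mathbf{R }}_j\) the average over classes; this form was chosen because its rank can exceed \(c-1\). (d) \(S_w\) uses arithmetic class means, since transformed values can be negative. (e) \(S_w\) is shrunk toward a scaled identity, which need not match the regularization of [36]. The eigenproblem \(S_ba=\lambda S_wa\) is solved by Cholesky whitening, which gives the eigenvectors of \(S_w^{-1}S_b\).

```python
import numpy as np


def sample_matrices(X, labels):
    """X_j (c x d) holds the j-th training sample of every class, j < n_t = min_k n_k."""
    per_class = [X[labels == k] for k in np.unique(labels)]
    n_t = min(len(s) for s in per_class)
    return np.stack([np.vstack([s[j] for s in per_class]) for j in range(n_t)])


def gmfsda_improved(X, labels, p, gamma=0.1):
    """Sketch of the improved GmFSDA (Sect. 2.3); equation forms are assumptions."""
    Xs = sample_matrices(X, labels)                    # (n_t, c, d)
    n_t, c, d = Xs.shape
    # Step 1: S_f^G = sum_j sum_i (h_ij - h_bar_j)(h_ij - h_bar_j)^T
    Dh = Xs - Xs.mean(axis=2, keepdims=True)           # subtract h_bar_j (mean over bands)
    S_f = np.einsum('jad,jbd->ab', Dh, Dh)             # c x c
    evals, evecs = np.linalg.eigh(S_f)
    W = evecs[:, np.argsort(evals)[::-1]]              # descending eigenvalues
    # Transformed samples: R[j, k] = R_kj, row k of W^T X_j
    R = np.einsum('ab,jbd->jad', W.T, Xs)
    # Nonparametric S_b: scatter of each sample set j around its class average
    Db = (R - R.mean(axis=1, keepdims=True)).reshape(-1, d)
    S_b = Db.T @ Db                                    # d x d
    # S_w: scatter of each R_kj around its (arithmetic) class mean
    Dw = (R - R.mean(axis=0)).reshape(-1, d)
    S_w = Dw.T @ Dw                                    # d x d, may be singular
    # Regularize: shrink S_w toward a scaled identity so it is positive definite
    S_w = (1 - gamma) * S_w + gamma * (np.trace(S_w) / d) * np.eye(d)
    # Solve S_b a = lambda S_w a via S_w = L L^T and a = L^{-T} u
    L_inv = np.linalg.inv(np.linalg.cholesky(S_w))
    lam, U = np.linalg.eigh(L_inv @ S_b @ L_inv.T)
    order = np.argsort(lam)[::-1][:p]
    A = (L_inv.T @ U[:, order]).T                      # p x d
    return A, lam[order], S_b, S_w


rng = np.random.default_rng(0)
X = rng.uniform(0.1, 1.0, size=(57, 30))               # 3 classes x 19 samples, 30 bands
labels = np.repeat(np.arange(3), 19)
A, lam, S_b, S_w_reg = gmfsda_improved(X, labels, p=5)
Y = X @ A.T                                            # y = A x for every sample
print(A.shape, Y.shape)                                # (5, 30) (57, 5)
```

Here p = 5 exceeds \(c-1=2\), which is only meaningful because the assumed sample-set form of \(S_b\) has rank above \(c-1\).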

Even if \(S_w\) is singular, the Fisher criterion can still be used to obtain the projection matrix A, because of Theorems 1 and 2 from [39]. In [39], Fisher's criterion function is defined as \(F(a)=a^TS_ba/(a^TS_wa+a^TS_ba)\). Theorem 1 identifies the subspace in which the discriminant vectors can be derived by maximizing Fisher's criterion. However, at most \(r=rank(S_w)\) features can be extracted from the original data, i.e., \(A\in {\mathbb {R}}^{r\times d}\).

Theorem 1

Let \(V_0=span\{\alpha _i|S_w\alpha _i=0, \alpha _i\in {\mathbb {R}}^n, i=1,2,\ldots ,n-r\}\), where n is the dimensionality of the samples, \(S_w\) is the within-class scatter matrix of the samples, r is the rank of \(S_w\), and \(S_b\) denotes the between-class scatter matrix of the samples. Every \({\tilde{a}}\in V_0\) that satisfies \({\tilde{a}}^TS_b{\tilde{a}}\ne 0\) maximizes the function \(F(a)=a^TS_ba/(a^TS_wa+a^TS_ba)\).

Proof

(i) Since \(S_b\) and \(S_w\) are real symmetric positive semidefinite matrices, \(a^TS_ba\ge 0\) and \(a^TS_wa\ge 0\) for all \(a\in {\mathbb {R}}^n\); it follows that \(0\le F(a)\le 1\) whenever \(a^TS_wa+a^TS_ba>0\).

It is obvious that \(F(a)=1\) if and only if \(a^TS_ba\ne 0\) and \(a^TS_wa=0\).

(ii) Each \({\tilde{a}}\in V_0\) can be represented as a linear combination of the set \(\{\alpha _i\}\), i.e., \({\tilde{a}}=\sum _{i=1}^{n-r}b_i\alpha _i\), where \(b_i\) is the projection coefficient of \({\tilde{a}}\) with respect to \(\alpha _i\). Therefore, \(S_w{\tilde{a}}=S_w\sum _{i=1}^{n-r}b_i\alpha _i=\sum _{i=1}^{n-r}b_iS_w\alpha _i=0\Rightarrow {\tilde{a}}^TS_w{\tilde{a}}=0\). From (i) and (ii), we conclude that every \({\tilde{a}}\in V_0\) that satisfies \({\tilde{a}}^TS_b{\tilde{a}}\ne 0\) maximizes F(a).

An arbitrary vector \({\tilde{a}}\in V_0\) that maximizes F(a) is not necessarily the optimal discriminant vector when the small sample size problem occurs, mainly because \({\tilde{a}}^TS_b{\tilde{a}}\) is not guaranteed to reach its maximal value. Theorem 2 shows that the within-class scatter matrix of all the transformed samples in \(V_0\) is a zero matrix, so the discriminant vector set can instead be derived directly from the between-class scatter matrix. \(\square \)
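Theorem 1 can be checked numerically with a small NumPy sketch on synthetic data (9 samples in 10 dimensions, so \(S_w\) is singular). The helper names `scatter_matrices` and `fisher_F` are hypothetical, and arithmetic class means are used. Following the remark after the proof, the sketch does not take an arbitrary vector of \(V_0\); it picks the direction in \(V_0\) with the largest \(a^TS_ba\).

```python
import numpy as np


def scatter_matrices(X, labels):
    """Between-class (S_b) and within-class (S_w) scatter with arithmetic class means."""
    mu = X.mean(axis=0)
    d = X.shape[1]
    S_b, S_w = np.zeros((d, d)), np.zeros((d, d))
    for k in np.unique(labels):
        Xk = X[labels == k]
        mk = Xk.mean(axis=0)
        S_b += len(Xk) * np.outer(mk - mu, mk - mu)
        S_w += (Xk - mk).T @ (Xk - mk)
    return S_b, S_w


def fisher_F(a, S_b, S_w):
    """F(a) = a^T S_b a / (a^T S_w a + a^T S_b a), as in Theorem 1."""
    b, w = a @ S_b @ a, a @ S_w @ a
    return b / (w + b)


rng = np.random.default_rng(0)
X = rng.normal(size=(9, 10))                 # 9 samples in 10 dimensions
labels = np.repeat(np.arange(3), 3)          # c = 3, 3 samples per class
S_b, S_w = scatter_matrices(X, labels)

evals, evecs = np.linalg.eigh(S_w)
Q = evecs[:, evals < 1e-10 * evals.max()]    # orthonormal basis of V_0 = null(S_w)
# Direction in V_0 with the largest a^T S_b a (Q^T S_b Q is symmetric)
vals, vecs = np.linalg.eigh(Q.T @ S_b @ Q)
a = Q @ vecs[:, -1]
print(Q.shape[1], round(fisher_F(a, S_b, S_w), 6))  # 4 1.0
```

Here \(r=rank(S_w)=9-3=6\), so \(V_0\) has dimension \(n-r=4\), and F reaches its maximum value 1 on it.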

Theorem 2

Let \(QQ^T\) be a transformation which transforms the samples in V (original feature space) into a subspace \(V_0\), where \(Q=[\alpha _1,\ldots ,\alpha _{n-r}]\in {\mathbb {R}}^{n\times (n-r)}\) and each \(\alpha _i\) satisfies \(S_w\alpha _i=0\), for \(i=1,\ldots ,n-r\), where the subspace \(V_0\) is spanned by the orthonormal set of \(\alpha _i\)’s. If all the samples are transformed into the subspace \(V_0\) through \(QQ^T\), then the within-class scatter matrix \({\tilde{S}}_w\) of the transformed samples in \(V_0\) is a complete zero matrix.

Proof

Suppose \(x_m^k\) is the feature vector extracted from the m-th sample of the k-th class, and that the database comprises c classes, each containing \(n_t\) samples. Let \(y_m^k\) denote the transformed feature vector of \(x_m^k\) through the transformation \(QQ^T\), that is, \(y_m^k\,{=}\,QQ^Tx_m^k\), \({\bar{y}}^k\,{=}\,QQ^T{\bar{x}}^k\) and \({\bar{y}}\,{=}\,QQ^T{\bar{x}}\), where \({\bar{x}}^k\,{=}\,\frac{1}{n_t}\sum _{m=1}^{n_t}x_m^k\) and \({\bar{x}}=\frac{1}{c\times {n_t}}\sum _{k=1}^c\sum _{m=1}^{n_t}x_m^k\). Thus,

From the above two theorems, we know that maximizing the between-class scatter in \(V_0\) is equal to maximizing the total scatter in \(V_0\). Therefore, in order to extract more than r features, we use the Eq. (25) for hyperspectral image.
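A numerical check of Theorem 2 and of this consequence, as a NumPy sketch on synthetic data with \(c\cdot n_t<d\). The helper name `scatters` is hypothetical, and arithmetic means are used as in the proof. After projecting every sample with \(QQ^T\), the within-class scatter vanishes and the total scatter equals the between-class scatter.

```python
import numpy as np


def scatters(X, labels):
    """Between-class, within-class and total scatter with arithmetic means."""
    mu = X.mean(axis=0)
    d = X.shape[1]
    S_b, S_w = np.zeros((d, d)), np.zeros((d, d))
    for k in np.unique(labels):
        Xk = X[labels == k]
        mk = Xk.mean(axis=0)
        S_b += len(Xk) * np.outer(mk - mu, mk - mu)
        S_w += (Xk - mk).T @ (Xk - mk)
    S_t = (X - mu).T @ (X - mu)
    return S_b, S_w, S_t


rng = np.random.default_rng(1)
c, n_t, d = 3, 3, 10                          # small sample size: c * n_t < d
X = rng.normal(size=(c * n_t, d))
labels = np.repeat(np.arange(c), n_t)

_, S_w, _ = scatters(X, labels)
evals, evecs = np.linalg.eigh(S_w)
Q = evecs[:, evals < 1e-10 * evals.max()]     # Q = [alpha_1, ..., alpha_{n-r}]
Y = X @ (Q @ Q.T)                             # y = Q Q^T x for every row (QQ^T is symmetric)
S_b0, S_w0, S_t0 = scatters(Y, labels)
print(np.allclose(S_w0, 0), np.allclose(S_t0, S_b0))  # True True
```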

As discussed above, the proposed GmFSDA feature extraction method not only reveals the intrinsic geometric structure of the hyperspectral image but also learns nonlinear correlation features with good discriminating power for MLC. \(\square \)

3 Experiments and Analysis

In these experiments, the performance of all tested methods was compared quantitatively using the average classification accuracy (AA), average classification validity (AV), overall accuracy (OA) and kappa coefficient. Classification was carried out in MATLAB 7.11 on a computer with an Intel Core i7 processor at 3.40 GHz. To evaluate the capability of GmFSDA in hyperspectral image classification, the proposed method and common feature extraction methods, namely PCA, KPCA, MMP, NWFE, RFCF, MFCF and FSDA, were tested on three hyperspectral datasets. The AA, AV, OA and kappa criteria [2, 3, 35] used in this comparison are defined as follows:

\(AA=\frac{1}{C}\sum _{c=1}^{C}ACC(c)\), where C is the number of classes, \(ACC(c)=n_c/N_c\) denotes the classification accuracy of class c, \(n_c\) is the number of pixels of class c that are correctly classified, and \(N_c\) is the number of test pixels in that class.

\(AV=\frac{1}{C}\sum _{c=1}^{C}VAL(c)\), where \(VAL(c)=n_c/m_c\) denotes the classification validity of class c and \(m_c\) is the number of pixels labeled as class c in the output class map.

\(OA=n/N\). This measure is similar to AA, except that the classes are considered as a whole rather than individually; n is the number of pixels correctly classified and N is the number of pixels in the test set.

\(\kappa =(OA-P_e)/(1-P_e)\), where \(P_e=(\sum _{c=1}^Cm_c\cdot N_c)/N^2\).
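These four measures can be computed from a confusion matrix as sketched below, assuming NumPy and the convention that rows are reference (test) classes and columns are classifier outputs. The helper name `accuracy_measures` and the example matrix are hypothetical.

```python
import numpy as np


def accuracy_measures(conf):
    """AA, AV, OA and kappa from a C x C confusion matrix.

    Assumed convention: conf[r, q] counts test pixels of reference class r
    that the classifier assigned to class q.
    """
    conf = np.asarray(conf, dtype=float)
    n_c = np.diag(conf)              # correctly classified pixels of each class
    N_c = conf.sum(axis=1)           # test pixels of each reference class
    m_c = conf.sum(axis=0)           # pixels labeled as each class in the output map
    N = conf.sum()
    aa = np.mean(n_c / N_c)          # mean of ACC(c)
    av = np.mean(n_c / m_c)          # mean of VAL(c)
    oa = n_c.sum() / N
    p_e = np.sum(m_c * N_c) / N**2
    kappa = (oa - p_e) / (1 - p_e)
    return aa, av, oa, kappa


conf = [[50, 0, 0],
        [0, 40, 10],
        [0, 5, 45]]
aa, av, oa, kappa = accuracy_measures(conf)
print(f"AA={aa:.3f} AV={av:.3f} OA={oa:.3f} kappa={kappa:.3f}")
# AA=0.900 AV=0.902 OA=0.900 kappa=0.850
```

VAL(c) is undefined for a class that never appears in the output map (\(m_c=0\)); a real evaluation would need to handle that case explicitly.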

In addition to AA, AV, OA and the kappa coefficient, the producer's accuracy (PA) and the user's accuracy (UA) are used to evaluate the eight methods. PA and UA are defined in Eqs. (34) and (35), respectively:

where \(\mathrm{x}_{\mathrm{i,i}}\) is the diagonal element of the \(\mathrm{i}\)-th row of the confusion matrix, \(\mathrm x_{i+}\) is the total of the \(\mathrm{i}\)-th row, and \(\mathrm{x}_{\mathrm{+i}}\) is the total of the \(\mathrm{i}\)-th column. \({\overline{\mathrm{PA}}}\) and \({\overline{\mathrm{UA}}}\), the averages of \({\mathrm{PA}}_{\mathrm{i}}\) and \({\mathrm{UA}}_{\mathrm{i}}\) (\(\mathrm{i}=1,2,\ldots ,\mathrm{c}\)), are defined in Eqs. (36) and (37), respectively.

where \(\mathrm{c}\) is the number of classes in the dataset.
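A NumPy sketch of per-class PA and UA and their averages. It assumes that rows of the confusion matrix are reference classes and columns are classifier outputs, so \(\mathrm x_{i+}\) is a row total and \(\mathrm x_{+i}\) a column total; the helper name `producer_user_accuracy` is hypothetical.

```python
import numpy as np


def producer_user_accuracy(conf):
    """Per-class PA and UA and their averages from a confusion matrix.

    Assumed convention: rows are reference classes and columns are the
    classifier output, so x_{i+} is a row total and x_{+i} a column total.
    """
    conf = np.asarray(conf, dtype=float)
    diag = np.diag(conf)                 # x_{i,i}
    pa = diag / conf.sum(axis=1)         # PA_i = x_{i,i} / x_{i+}
    ua = diag / conf.sum(axis=0)         # UA_i = x_{i,i} / x_{+i}
    return pa, ua, pa.mean(), ua.mean()


conf = [[50, 0, 0],
        [0, 40, 10],
        [0, 5, 45]]
pa, ua, pa_mean, ua_mean = producer_user_accuracy(conf)
print(np.round(pa, 3), np.round(ua, 3), round(pa_mean, 3), round(ua_mean, 3))
```

Under this convention, the average PA equals AA and the average UA equals AV computed from the same matrix; if the matrix is stored with rows as classifier outputs, the roles of the row and column totals swap.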

3.1 Hyperspectral Data Description

We use three popular datasets in our experiments: Indian Pines Site (IPS), Pavia University and Kennedy Space Center (KSC). The well-known IPS dataset was acquired by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor over the Indian Pines test site in northwestern Indiana in 1992 [40, 41]. The image contains 220 spectral reflectance bands, is \(145\times 145\) pixels in size and has a spatial resolution of 20 m. The available ground truth is divided into 16 classes (see Table 1), which are not all mutually exclusive. Twenty spectral bands were removed because of noise and water absorption, leaving \(N=200\) bands. The three-channel false-color composite and the reference land cover of the AVIRIS IPS data are shown in Fig. 1.

The Pavia University dataset was gathered by the Reflective Optics System Imaging Spectrometer (ROSIS) sensor over the engineering school of the University of Pavia. It is \(610\times 340\) pixels in size, with a spatial resolution of 1.3 m per pixel. Twelve spectral bands were removed because of noise, and the remaining 103 spectral bands were processed. Nine classes of interest are considered (see Table 2): trees, asphalt, bitumen, gravel, metal sheets, shadows, bricks, meadows and soil. The three-band false-color composite and the reference land-cover image are shown in Fig. 1.

The third dataset was acquired over the Kennedy Space Center (KSC), Florida, in March 1996 by the AVIRIS sensor [41]. AVIRIS acquires data in 224 bands of 10 nm width with center wavelengths from 400 to 2500 nm. The KSC data, acquired from an altitude of approximately 20 km, have a spatial resolution of 18 m. After water absorption and low-SNR bands were removed, \(N=176\) bands were used for the analysis. The image is \(614\times 512\) pixels in size and contains 13 land-cover classes (see Table 3). The three-channel false-color composite and the reference land cover of the KSC data are also shown in Fig. 1.

3.2 Experiments and Results

In this subsection, the features extracted by PCA, KPCA, MMP, NWFE, RFCF, MFCF and the proposed GmFSDA are fed into a maximum likelihood classifier (MLC), and the classification results are compared on the IPS, Pavia University and KSC datasets. Many parameters of these feature extraction methods must be set manually (see Table 4). In Table 4, G denotes the Gaussian kernel, p denotes the regularization parameter, and D denotes the number of features for all feature extraction methods, with \(D=2,3,\ldots ,14\). In RFCF, the parameter L varies from 0 to \(D-1\), and M is chosen subject to the constraint \(M+L+1=D\). RFCF is known to involve many uncertain factors [35]; according to [2, 3], the best classification results are obtained with \(L=0,1,D-2,D-1\).

In our experiments, \(L=1\) was used for RFCF. Training samples are selected randomly from the entire scene, and the remaining samples are used as test samples. To demonstrate the capability of all the above methods, 19 samples per class are used to train the MLC. Because the training samples are selected randomly, each experiment is repeated 10 times and the average results are reported. In every run and for all methods, the number of features varies from 2 to 14. The classification accuracy measures for the IPS, Pavia University and KSC datasets for each approach versus the number of features are shown in Figs. 2, 3 and 4, respectively.
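The evaluation protocol (random selection of 19 training samples per class, a Gaussian maximum likelihood classifier, 10 repetitions averaged) can be sketched in NumPy as below. This is an illustration, not the paper's MATLAB code: the helper names `fit_mlc`, `predict_mlc` and `random_split` are hypothetical, equal class priors are assumed, and the well-separated synthetic features stand in for features produced by a feature extraction method.

```python
import numpy as np


def fit_mlc(Y, labels):
    """Gaussian ML classifier: one mean vector and covariance matrix per class."""
    model = []
    for k in np.unique(labels):
        Yk = Y[labels == k]
        cov = np.cov(Yk, rowvar=False)
        _, logdet = np.linalg.slogdet(cov)
        model.append((k, Yk.mean(axis=0), np.linalg.inv(cov), logdet))
    return model


def predict_mlc(model, Y):
    """Assign each row to the class with the largest Gaussian log-likelihood."""
    scores = []
    for _, mu, icov, logdet in model:
        D = Y - mu
        maha = np.einsum('ij,jk,ik->i', D, icov, D)   # squared Mahalanobis distance
        scores.append(-0.5 * (logdet + maha))         # equal priors assumed
    classes = np.array([m[0] for m in model])
    return classes[np.argmax(np.stack(scores, axis=1), axis=1)]


def random_split(labels, n_train, rng):
    """n_train random training indices per class; all other indices are test."""
    train = np.concatenate([rng.choice(np.flatnonzero(labels == k), n_train, replace=False)
                            for k in np.unique(labels)])
    test = np.setdiff1d(np.arange(labels.size), train)
    return train, test


rng = np.random.default_rng(0)
labels = np.repeat(np.arange(3), 100)
Y = rng.normal(size=(300, 4)) + 4.0 * labels[:, None]  # stand-in for extracted features
oas = []
for _ in range(10):                                    # 10 random repetitions
    train, test = random_split(labels, 19, rng)
    model = fit_mlc(Y[train], labels[train])
    pred = predict_mlc(model, Y[test])
    oas.append(np.mean(pred == labels[test]))
print(round(float(np.mean(oas)), 3))
```

With 19 training samples per class, each class covariance is estimated from 19 vectors, so it stays invertible only while the number of features is below 19; this is one reason small sample sizes call for a low-dimensional feature space before MLC.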

In the three experiments, we evaluate the performance of the proposed GmFSDA in comparison with PCA, KPCA, MMP, NWFE, RFCF, MFCF and FSDA in the small sample size situation on the IPS, Pavia University and KSC datasets. We obtain AA, AV, OA and the kappa coefficient versus the number of extracted features using 16 training samples (see Figs. 2, 3, 4), with MLC used to classify the reduced data. Figures 2, 3 and 4 show that the proposed GmFSDA method is clearly superior to the competing algorithms: all four measures (AA, AV, OA and the kappa coefficient) are substantially improved by GmFSDA in comparison with the other methods.

As shown in Fig. 2, as the number of features increases, AA, OA and the kappa coefficient first rise sharply and then decrease slowly. GmFSDA is also more robust than MFCF, particularly when 13 features are extracted. As reported in [35] and confirmed by Fig. 2, the spectral response curves of most IPS classes are highly similar. Hence, most feature extraction techniques cannot discriminate these classes well, and the classification results are less satisfactory. On this dataset, the AA of MFCF is higher than that of the proposed GmFSDA.

Figures 3 and 4 show that GmFSDA reaches the highest AA, AV, OA and kappa coefficient, whereas MFCF does not obtain the best results on the KSC dataset. For the Pavia University dataset, these four indicators fluctuate more than on the other two datasets. For the KSC dataset, by contrast, the spectral response curves of most classes are not very similar, so most feature extraction techniques discriminate its classes satisfactorily. Overall, across the three datasets, the proposed GmFSDA outperforms the other methods.

In addition, in the small sample size setting, GmFSDA outperforms PCA, KPCA, MMP, NWFE, RFCF, MFCF and FSDA in terms of producer's accuracy (PA) and user's accuracy (UA). The accuracies obtained with 19 training samples per class are listed in Tables 5, 6 and 7.

To further evaluate GmFSDA, the AA, AV, OA and kappa coefficient obtained with different numbers of training samples are shown in Figs. 5, 6 and 7 for the IPS, Pavia University and KSC datasets, respectively. The performance of all compared methods improves as the number of training samples increases. Furthermore, GmFSDA performs consistently well for different numbers of training and test samples on all datasets except IPS. As Fig. 5 shows, on IPS, MFCF achieves higher AA, AV, OA and kappa coefficient than GmFSDA. The main reason is that, for IPS, MFCF can extract class-discriminative information from the highly similar spectral response curves. However, as Fig. 2 shows, MFCF is not robust to changes in the number of extracted features.

Figures 8, 9 and 10 show the classification maps obtained with MLC by PCA, KPCA, MMP, NWFE, RFCF, MFCF, FSDA and the proposed GmFSDA for the IPS, Pavia University and KSC datasets, using 8, 5 and 7 extracted features, respectively, and 19 training samples per class. Visually, these maps also confirm the effectiveness of GmFSDA: its results are better than those of its nearest competitors, FSDA, MFCF and RFCF.

For each method, the number of features is chosen as the one that gives the best overall accuracy (see Tables 8, 9, 10). To further evaluate GmFSDA, the averages and standard deviations of the accuracy measures are shown in Tables 8, 9 and 10 for the IPS, Pavia University and KSC datasets, respectively. In these tables, std denotes the standard deviation of an accuracy measure, and # denotes the number of features that yields the best result for each method.
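The selection of # and the reported mean and std can be sketched as below. This assumes the per-run OA values are stored per feature count and that std is the sample standard deviation over runs (whether the tables use the sample or population form is an assumption); the function name is hypothetical.

```python
from statistics import mean, stdev


def best_feature_count(oa_runs):
    """oa_runs: {D: [OA of run 1, ..., OA of run R]} for candidate D values.

    Returns (#, mean OA, std OA) for the D with the highest mean OA.
    On ties, the first D in iteration order wins.
    """
    best_d = max(oa_runs, key=lambda d: mean(oa_runs[d]))
    runs = oa_runs[best_d]
    return best_d, mean(runs), stdev(runs)   # sample standard deviation (assumed)


print(best_feature_count({2: [0.60, 0.62], 3: [0.70, 0.74], 4: [0.69, 0.71]}))
```

Here D = 3 is selected, with a mean OA of about 0.72 and a std of about 0.028.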

Tables 8, 9 and 10 show that the average accuracy of GmFSDA is higher than that of the other seven methods. However, on the IPS and KSC datasets, its standard deviation over the different training sets is larger than that of FSDA (Tables 8 and 10). GmFSDA transforms the feature space twice. First, a primary projection maps the original feature space to a new space in which the features are more discriminative. Then, a secondary projection applied to this first feature space produces the final feature space and further improves class discrimination. GmFSDA differs from the other methods mainly in the primary projection, which significantly improves classification performance when the number of available training samples is limited. On the whole, GmFSDA shows good robustness; it not only reveals the intrinsic geometric structure of the hyperspectral image but also learns nonlinear correlation features with good discriminative power for MLC.
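The two-stage transformation described above can be written compactly. Assuming, for illustration only, that a pixel is a vector \(\mathbf{x}\in\mathbb{R}^{d}\), the primary projection is a matrix \(W_1\in\mathbb{R}^{d\times m}\) and the secondary projection is \(W_2\in\mathbb{R}^{m\times D}\) (the symbols \(W_1\), \(W_2\), d and m are introduced here, not taken from this section):

\[
\mathbf{z}=W_1^{\top}\mathbf{x}\in\mathbb{R}^{m},\qquad
\mathbf{y}=W_2^{\top}\mathbf{z}=W_2^{\top}W_1^{\top}\mathbf{x}=\left(W_1W_2\right)^{\top}\mathbf{x}\in\mathbb{R}^{D}.
\]

Since both stages are linear, a trained model can apply the single \(d\times D\) matrix \(W_1W_2\) to every pixel. The two stages matter when the projections are learned, because \(W_2\) is estimated from scatter matrices computed in the space produced by \(W_1\), not in the original space.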

4 Conclusion

In this paper, an efficient supervised feature extraction method, GmFSDA, is proposed for hyperspectral image classification. The proposed method mainly considers the geometric structure and the outlier values of hyperspectral image pixels. Inspired by the work of M. Imani and H. Ghassemian, whose feature space discriminant analysis (FSDA) uses average (arithmetic mean) vectors and extracts efficient features for hyperspectral images, we replace the average vectors with more effective geometric mean vectors. GmFSDA transforms the original data into a new space using two projection matrices. The primary projection matrix maximizes the geometric between-spectral scatter. The secondary projection matrix, which is applied to the feature space obtained in the first step, maximizes the separability between classes. The performance of GmFSDA is compared with other popular and state-of-the-art supervised and unsupervised feature extraction methods on the IPS, Pavia University and KSC datasets. GmFSDA captures the intrinsic geometric structure of hyperspectral image pixels. The experimental results show that, in small sample size situations, GmFSDA generally obtains better classification accuracy than the other seven methods.
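To illustrate why a geometric mean reacts less to outlier values than an average, the sketch below compares a band-wise arithmetic mean vector with a band-wise geometric mean vector, \(\exp\left(\frac{1}{n}\sum_i \log x_i\right)\) per band. This is an assumed, simplified definition of the geometric mean vector (the paper's exact construction is given in its method section); it requires strictly positive band values, and the spectra below are invented toy numbers.

```python
import math


def arithmetic_mean_vector(samples):
    """Band-wise arithmetic mean of equal-length spectra."""
    n = len(samples)
    return [sum(band) / n for band in zip(*samples)]


def geometric_mean_vector(samples):
    """Band-wise geometric mean exp(mean(log x)); assumes all values are > 0."""
    n = len(samples)
    return [math.exp(sum(math.log(v) for v in band) / n) for band in zip(*samples)]


# Four toy two-band spectra of one class; the last pixel has an outlier in band 1.
spectra = [[100, 200], [110, 210], [90, 190], [1000, 200]]
print(arithmetic_mean_vector(spectra))   # band 1 is pulled up to 325.0
print(geometric_mean_vector(spectra))    # band 1 stays near 177
```

By the AM-GM inequality, the geometric mean never exceeds the arithmetic mean, and a single large value inflates it far less, which is consistent with the outlier argument above.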

References

Landgrebe D (2002) Hyperspectral image data analysis as a high dimensional signal processing problem. IEEE Signal Process Mag 19(1):17–28

Hosseini SA, Ghassemian H (2016) Hyperspectral data feature extraction using rational function curve fitting. Int J Pattern Recognit Artif Intell 30(01):1650001. https://doi.org/10.1142/S0218001416500014

Hosseini SA, Ghassemian H (2016) Rational function approximation for feature reduction in hyperspectral data. Remote Sens Lett 7(2):101–110

Imani M, Ghassemian H (2017) High-dimensional image data feature extraction by double discriminant embedding. Pattern Anal Appl 20(2):473–484

Imani M, Ghassemian H (2016) Binary coding based feature extraction in remote sensing high dimensional data. Inf Sci 342:191–208

Jia X, Kuo BC, Crawford MM (2013) Feature mining for hyperspectral image classification. Proc IEEE 101(3):676–697

Maji P, Garai P (2013) Fuzzy-rough simultaneous attribute selection and feature extraction algorithm. IEEE Trans Cybern 43(4):1166–1177

Li S, Qiu J, Yang X et al (2014) A novel approach to hyperspectral band selection based on spectral shape similarity analysis and fast branch and bound search. Eng Appl Artif Intell 27:241–250

Esfandian N, Razzazi F, Behrad A (2012) A clustering based feature selection method in spectro-temporal domain for speech recognition. Eng Appl Artif Intell 25(6):1194–1202

Dernoncourt D, Hanczar B, Zucker JD (2014) Analysis of feature selection stability on high dimension and small sample data. Comput Stat Data Anal 71:681–693

Zhang L, Zhong Y, Huang B et al (2007) Dimensionality reduction based on clonal selection for hyperspectral imagery. IEEE Trans Geosci Remote Sens 45(12):4172–4186

Hotelling H (1933) Analysis of a complex of statistical variables into principal components. J Educ Psychol 24(6):417–441

Liao W, Pizurica A, Scheunders P et al (2013) Semisupervised local discriminant analysis for feature extraction in hyperspectral images. IEEE Trans Geosci Remote Sens 51(1):184–198

Plaza A, Martinez P, Plaza J et al (2005) Dimensionality reduction and classification of hyperspectral image data using sequences of extended morphological transformations. IEEE Trans Geosci Remote Sens 43(3):466–479

Fauvel M, Chanussot J, Benediktsson JA (2009) Kernel principal component analysis for the classification of hyperspectral remote sensing data over urban areas. EURASIP J Adv Signal Process. Article ID 783194. https://doi.org/10.1155/2009/783194

Villa A, Chanussot J, Benediktsson JA et al (2013) Unsupervised methods for the classification of hyperspectral images with low spatial resolution. Pattern Recognit 46(6):1556–1568

Chang CI, Ren H (2000) An experiment-based quantitative and comparative analysis of target detection and image classification algorithms for hyperspectral imagery. IEEE Trans Geosci Remote Sens 38(2):1044–1063

Kuo BC, Landgrebe DA (2004) Nonparametric weighted feature extraction for classification. IEEE Trans Geosci Remote Sens 42(5):1096–1105

Schölkopf B, Smola A, Müller KR (1998) Nonlinear component analysis as a kernel eigenvalue problem. Neural Comput 10:1299–1319

Baudat G, Anouar F (2000) Generalized discriminant analysis using a kernel approach. Neural Comput 12(10):2385–2404

Chen S, Zhang D (2011) Semisupervised dimensionality reduction with pairwise constraints for hyperspectral image classification. IEEE Geosci Remote Sens Lett 8(2):369–373

He X, Niyogi P (2004) Locality preserving projections. In: Advances in neural information processing systems, pp 153–160

Zhang T, Yang J, Zhao D et al (2007) Linear local tangent space alignment and application to face recognition. Neurocomputing 70(7):1547–1553

He X, Cai D, Han J (2008) Learning a maximum margin subspace for image retrieval. IEEE Trans Knowl Data Eng 20(2):189–201

Yang M, Zhang L, Shiu SCK, Zhang D (2012) Monogenic binary coding: an efficient local feature extraction approach to face recognition. IEEE Trans Inf Forensics Secur 7:1738–1751

Zhou Y, Peng J, Chen CLP (2015) Dimension reduction using spatial and spectral regularized local discriminant embedding for hyperspectral image classification. IEEE Trans Geosci Remote Sens 53:1082–1095

Li L, Ge H, Gao J (2017) A spectral-spatial kernel-based method for hyperspectral imagery classification. Adv Sp Res 59(4):954–967

Gao J, Xu L (2016) A novel spatial analysis method for remote sensing image classification. Neural Process Lett 43(3):805–821

Gao J, Xu L, Shen J et al (2015) A novel information transferring approach for the classification of remote sensing images. EURASIP J Adv Signal Process 2015(1):38

Gao J, Xu L, Huang F (2016) A spectral-textural kernel-based classification method of remotely sensed images. Neural Comput Appl 27(2):431–446

Gao J, Xu L (2015) An efficient method to solve the classification problem for remote sensing image. AEU-Int J Electron Commun 69(1):198–205

Gao J, Xu L, Shi A et al (2014) A kernel-based block matrix decomposition approach for the classification of remotely sensed images. Appl Math Comput 228:531–545

Hosseini A, Ghassemian H (2012) Classification of hyperspectral and multispectral images by using fractal dimension of spectral response curve. In: 2012 20th Iranian conference on electrical engineering (ICEE). IEEE, pp 1452–1457

Hosseini SA, Ghassemian H (2013) A new hyperspectral image classification approach using fractal dimension of spectral response curve. In: 2013 21st Iranian conference on electrical engineering (ICEE). IEEE, pp 1–6

Li L, Ge H, Gao J (2018) Hyperspectral image feature extraction using Maclaurin series function curve fitting. Neural Process Lett. https://doi.org/10.1007/s11063-018-9825-5

Imani M, Ghassemian H (2015) Feature space discriminant analysis for hyperspectral data feature reduction. ISPRS J Photogramm Remote Sens 102:1–13

Elton EJ, Gruber MJ, Brown SJ et al (2009) Modern portfolio theory and investment analysis. Wiley, Hoboken

Rietz HL (1916) The mathematical theory of probabilities. Science 43(1121):896–897

Chen LF, Liao HYM, Ko MT et al (2000) A new LDA-based face recognition system which can solve the small sample size problem. Pattern Recognit 33(10):1713–1726

Purdue Research Foundation, Hyperspectral images by MultiSpec (2015). https://engineering.purdue.edu/~biehl/MultiSpec/

Universidad-del-Pais-Vasco, Hyperspectral remote sensing scenes (2014). http://www.ehu.eus/ccwintco/index.php?title=Hyperspectral_Remote_Sensing_Scenes

Zhang L, Zhang L, Du B et al (2019) Hyperspectral image unsupervised classification by robust manifold matrix factorization. Inf Sci 485:154–169

Zhang L, Zhang L, Tao D et al (2011) On combining multiple features for hyperspectral remote sensing image classification. IEEE Trans Geosci Remote Sens 50(3):879–893

Zhang L, Zhang L, Tao D et al (2013) A modified stochastic neighbor embedding for multi-feature dimension reduction of remote sensing images. ISPRS J Photogramm Remote Sens 83:30–39

Zhang Q, Zhang L, Zhang L et al (2015) Ensemble manifold regularized sparse low-rank approximation for multiview feature embedding. Pattern Recognit 48(10):3102–3112

Zhang L, Zhang Q, Du B et al (2016) Simultaneous spectral-spatial feature selection and extraction for hyperspectral images. IEEE Trans Cybern 48(1):16–28

Zhu X, Zhang L, Zhang L et al (2016) Multidomain subspace classification for hyperspectral images. IEEE Trans Geosci Remote Sens 54(10):6138–6150

Acknowledgements

This work is partially supported by the Graduate Innovation Foundation of Jiangsu Province under Grant No. KYLX16_0781, the 111 Project under Grant No. B12018, and PAPD of Jiangsu Higher Education Institutions, China, and the Doctoral Research Foundation of Jining Medical University under Grant No. 2018JYQD03, and a Project of Shandong Province Higher Educational Science and Technology Program under Grant No. J18KA217.


Cite this article

Li, L., Ge, H., Gao, J. et al. A Novel Geometric Mean Feature Space Discriminant Analysis Method for Hyperspectral Image Feature Extraction. Neural Process Lett 51, 515–542 (2020). https://doi.org/10.1007/s11063-019-10101-0


DOI: https://doi.org/10.1007/s11063-019-10101-0

Keywords

- Hyperspectral image

- Feature extraction

- Geometric mean vector

- Feature space discriminant analysis

- Classification