Abstract

This paper investigates the robust exponential stabilization of uncertain discrete-time stochastic neural networks with time-varying delay via output feedback control. By choosing an augmented Lyapunov–Krasovskii functional, we establish sufficient conditions for delay-dependent asymptotic stabilization in the mean square for a class of discrete-time stochastic neural networks with time-varying delay. Furthermore, we obtain criteria for robust global exponential stabilization in the mean square for uncertain discrete-time stochastic neural networks with time-varying delay. Finally, we give numerical examples to illustrate the effectiveness of the proposed results.

1 Introduction

Over the past decades, neural networks have attracted considerable attention owing to their wide applications in fields such as signal and image processing, pattern recognition, associative memory and parallel computing [1,2,3,4,5,6]. Dynamical behaviors of neural networks such as stability, instability, periodic oscillation and chaos are known to be crucial in applications. Because stability of neural networks is a prerequisite for many engineering problems, it has received much research attention in recent years and many elegant results have been reported; for details see [5, 7, 8]. The stabilization of neural networks has also attracted considerable attention, and several feedback stabilizing control methods have been proposed; for details see [9,10,11].

Time delays are commonly encountered in engineering systems such as chemical processes, hydraulic systems and rolling mill systems. A time delay degrades the dynamic performance of a system and can even lead to instability. It is therefore essential to understand the impact of time delays on dynamic systems theoretically, so that their unfavorable consequences can be avoided in real-world problems. For this reason, the dynamics of delayed neural networks have been widely studied recently [5,6,7, 12,13,14]. Huang et al. [7] proposed a class of asymptotically almost periodic shunting inhibitory cellular neural networks with mixed delays and nonlinear decay functions. Tian et al. [8] gave an improved delay-partitioning method for the stability analysis of neural networks with discrete and distributed time-varying delays. Park et al. [12] gave a synchronization criterion for coupled discrete-time neural networks with interval time-varying delays. In [13], the dynamics of switched cellular neural networks with mixed delays were investigated. Dong et al. [15] investigated robust stability and H∞ control for nonlinear discrete-time switched systems with interval time-varying delay.

However, it should be noted that most of the neural networks studied so far are continuous-time systems. In applications, discrete-time neural networks have become more important than their continuous-time counterparts, because continuous-time networks are usually discretized when they are implemented for computer-based simulation, experimentation or computation. Besides, discrete-time networks are more suitable for modeling digitally transmitted signals in a dynamical way. These two features have inspired researchers to study the stability and stabilization problems for discrete-time neural networks, and some stability and stabilization criteria have been proposed in the literature; see [16,17,18,19,20] and the references therein. Yu et al. [16] investigated exponential stability criteria for discrete-time recurrent neural networks with time-varying delay. Luo et al. [18] studied the robust stability of discrete-time stochastic neural network systems with time-varying delays. In [20], the stability analysis of discrete-time stochastic neural networks with time-varying delays was given.

During the modeling of real nervous systems, stochastic disturbances and parameter uncertainties are identified as two main sources of performance degradation of the implemented neural networks. Therefore, it is important to solve the stability analysis problem for stochastic neural networks, and some results on this problem have recently been published; see, e.g., [21,22,23,24] and the references therein. In [21], the mean square exponential stability of uncertain stochastic delayed neural networks was considered. In [22], the stochastic stability of uncertain Hopfield neural networks with discrete and distributed delays was investigated. Zhou et al. [24] considered the robust exponential stability of uncertain stochastic neural networks with distributed delays and reaction–diffusions. However, these papers mainly concern continuous-time neural networks. To the best of our knowledge, the robust output feedback stabilization problem for uncertain stochastic discrete-time neural networks with time-varying delays has not been adequately investigated.

In this paper, the static output feedback stabilization problem for uncertain discrete-time stochastic neural networks with time-varying delay is studied. By constructing a new augmented Lyapunov–Krasovskii functional, we obtain a delay-dependent stabilization criterion that guarantees the asymptotic stability in the mean square of the closed-loop system for a class of discrete-time stochastic neural networks with time-varying delays. Then, using static output feedback control, sufficient conditions for robust global exponential stabilization in the mean square are presented for uncertain discrete-time stochastic neural networks with time-varying delay. Finally, some simulation examples are given to illustrate the effectiveness of our results.

The remainder of this paper is organized as follows. In Sect. 2, the problem formulation and preliminaries are presented. In Sect. 3, robust exponential stabilization criteria in the mean square are derived for uncertain discrete-time stochastic neural networks with time-varying delays. In Sect. 4, numerical examples are given to demonstrate our results. Finally, some conclusions are given in Sect. 5.

Notation

Throughout this paper, \( N^{ + } \) stands for the set of nonnegative integers. \( R^{n} \) and \( R^{n \times m} \) denote the \( n \)-dimensional Euclidean space and the set of all \( n \times m \) real matrices, respectively. The superscript “\( T \)” denotes the transpose, and the notation \( X \ge Y \) (respectively \( X > Y \)), where \( X \) and \( Y \) are symmetric matrices, means that \( X - Y \) is positive semi-definite (respectively positive definite). \( I \) is the identity matrix with compatible dimension. \( E\{ \cdot \} \) stands for the mathematical expectation operator with respect to the given probability measure \( P \), and \( \left\| {\, \cdot \,} \right\| \) stands for the Euclidean vector norm. The asterisk \( ( * ) \) in a matrix denotes a term that is induced by symmetry. We use \( \lambda_{\hbox{min} } ( \cdot ) \) and \( \lambda_{\hbox{max} } ( \cdot ) \) to denote the minimum and maximum eigenvalues of a real symmetric matrix.

2 Problem Formulation and Preliminaries

Consider the following uncertain discrete-time stochastic neural networks with time-varying delay:

where \( x(k) = [x_{1} (k),x_{ 2} (k), \cdots ,x_{n} (k)]^{T} \in R^{n} \) is the state vector, \( u(k) \in R^{m} \) is the control input. \( f(x(k)) = [f_{1} (x_{1} (k)), \ldots ,f_{n} (x_{n} (k))]^{T}{\kern-2pt,}\;g(x(k - \tau (k))) = [g_{1} (x_{1} (k - \tau (k))), \ldots ,g_{n} (x_{n} (k - \tau (k)))]^{T} \) denote the neuron activation functions. \( \varphi (\theta ) \) is an initial condition, the positive integer \( \tau (k) \) denotes the time-varying delay satisfying

\[ \tau_{m} \le \tau (k) \le \tau_{M} , \qquad (2) \]

where \( \tau_{m} \) and \( \tau_{M} \) are known positive integers. \( A = diag(a_{1} ,a_{2} , \ldots ,a_{n} ) \) is a real constant diagonal matrix, and \( C,B,D,N \) and \( K \) are constant matrices of suitable dimensions. \( B = (b_{ij} )_{n \times n} \) and \( D = (d_{ij} )_{n \times n} \) are the connection weight matrix and the delayed connection weight matrix, respectively. \( \Delta A(k),\Delta B(k),\Delta C(k),\Delta D(k) \) denote the parameter uncertainties, which are assumed to satisfy the following admissible condition

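\[ [\Delta A(k)\;\;\Delta B(k)\;\;\Delta C(k)\;\;\Delta D(k)] = GF(k)[E_{a} \;\;E_{b} \;\;E_{c} \;\;E_{d} ], \qquad (3) \]

where \( G,E_{a} ,E_{b} ,E_{c} ,E_{d} \) are known matrices of appropriate dimensions and \( F(k) \) is an unknown time-varying matrix function satisfying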

\[ F^{T} (k)F(k) \le I. \]

\( \omega (k) \) is a scalar Wiener process on a probability space \( (\Omega ,\Gamma ,P) \) with

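\[ E\{ \omega (k)\} = 0,\quad E\{ \omega^{2} (k)\} = 1,\quad E\{ \omega (i)\omega (j)\} = 0\;\;(i \ne j). \]

We make the following assumptions on the neuron activation functions in (1).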

Assumption 1

[25] For \( i \in \{ 1,2, \ldots ,n\} \), the neuron activation functions \( f_{i} ( \cdot ) \) and \( g_{i} ( \cdot ) \) are continuous and bounded, and for any \( s_{1} ,s_{2} \in R \) with \( s_{1} \ne s_{2} \) they satisfy the following conditions:

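\[ l_{i}^{ - } \le \frac{{f_{i} (s_{1} ) - f_{i} (s_{2} )}}{{s_{1} - s_{2} }} \le l_{i}^{ + } ,\qquad v_{i}^{ - } \le \frac{{g_{i} (s_{1} ) - g_{i} (s_{2} )}}{{s_{1} - s_{2} }} \le v_{i}^{ + } , \]

where \( l_{i}^{ - } ,l_{i}^{ + } ,v_{i}^{ - } ,v_{i}^{ + } \) are known constants.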

Remark 1

Assumption 1 on the activation functions has been made in many other papers dealing with the stability problem for neural networks; see, for example, [6, 13, 25, 26]. We note that this assumption is weaker than the Lipschitz condition used in [27,28,29]. In Assumption 1, the constants \( l_{i}^{ - } ,l_{i}^{ + } ,v_{i}^{ - } ,v_{i}^{ + } \) are allowed to be positive, negative or zero. Hence, the resulting activation functions may be nonmonotonic, and they are more general than the usual sigmoid functions and the commonly used Lipschitz-type functions.
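To make the sector bounds of Assumption 1 concrete, the following Python sketch estimates the smallest and largest difference quotient of a scalar activation on a grid. It is only an illustration: the helper name `sector_bounds`, the grid, and both activation functions are assumptions chosen for this example and are not taken from the paper.

```python
import numpy as np

def sector_bounds(f, lo=-5.0, hi=5.0, num=401):
    """Estimate (l_minus, l_plus): min and max of (f(s1) - f(s2)) / (s1 - s2) on a grid."""
    s = np.linspace(lo, hi, num)
    s1, s2 = np.meshgrid(s, s, indexing="ij")
    mask = s1 != s2                       # Assumption 1 only concerns s1 != s2
    q = (f(s1[mask]) - f(s2[mask])) / (s1[mask] - s2[mask])
    return float(q.min()), float(q.max())

# Hypothetical activations, chosen only for illustration (both bounded and continuous).
f_sigmoid = lambda s: 0.6 * np.tanh(s)        # monotone, slopes between 0 and 0.6
f_nonmono = lambda s: s * np.exp(-s ** 2)     # nonmonotonic, so the lower bound is negative

l_minus, l_plus = sector_bounds(f_sigmoid)
m_minus, m_plus = sector_bounds(f_nonmono)
print("0.6*tanh(s):   ", l_minus, l_plus)
print("s*exp(-s^2):   ", m_minus, m_plus)
```

The second activation shows why negative lower bounds matter: a nonmonotonic function has a negative minimum difference quotient, which a sigmoid-type or purely Lipschitz description would not capture as tightly.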

Assumption 2

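\( \delta :Z \times R^{n} \times R^{n} \to R^{n} \) is a continuous function and satisfies

\[ \delta^{T} (k,x(k),x(k - \tau (k)))\,\delta (k,x(k),x(k - \tau (k))) \le \rho_{1} x^{T} (k)x(k) + \rho_{2} x^{T} (k - \tau (k))x(k - \tau (k)), \]

where \( \rho_{ 1} > 0 \) and \( \rho_{2} > 0 \) are known constant scalars.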

The following lemma will be used in the proofs of the main results.

Lemma 1

[30] For any constant matrix \( M \in R^{n \times n} \), \( M = M^{T} \ge 0 \), integers \( r_{2} \ge r_{1} \), and vector function \( \omega :\{ r_{1} ,r_{1} + 1, \ldots ,r_{2} \} \to R^{n} \) such that the sums in the following are well defined, then
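In its usual form, which is assumed here, the summation inequality of Lemma 1 is the discrete Jensen inequality \( - (r_{2} - r_{1} + 1)\sum\nolimits_{i = r_{1} }^{r_{2} } \omega^{T} (i)M\omega (i) \le - (\sum\nolimits_{i = r_{1} }^{r_{2} } \omega (i))^{T} M(\sum\nolimits_{i = r_{1} }^{r_{2} } \omega (i)) \). The short Python sketch below checks this form on random data; the dimension, the summation range and the random matrices are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n, r1, r2 = 3, 2, 9                          # hypothetical dimension and summation range

L = rng.standard_normal((n, n))
M = L @ L.T                                  # symmetric positive semidefinite by construction
w = rng.standard_normal((r2 - r1 + 1, n))    # row i holds omega(r1 + i)

# Left side: -(r2 - r1 + 1) * sum_i omega(i)^T M omega(i)
lhs = -(r2 - r1 + 1) * np.einsum("ij,jk,ik->", w, M, w)
# Right side: -(sum_i omega(i))^T M (sum_i omega(i))
s = w.sum(axis=0)
rhs = -(s @ M @ s)

print("lhs <= rhs:", bool(lhs <= rhs))
```

The inequality is what allows a sum of quadratic terms in the Lyapunov–Krasovskii difference to be replaced by a single quadratic form in the summed vector.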

Definition 1

[31] The system (1) is said to be robustly exponentially stable in the mean square if there exist constants \( \alpha > 0 \) and \( \mu \in (0,1) \) such that every solution of the system (1) satisfies

for all parameter uncertainties satisfying the admissible condition.

For presentation convenience, in the following, we denote

3 Main Results

In this section, we aim to provide new delay-dependent sufficient conditions which ensure the exponential stabilization in the mean square of the neural networks (1).

First, we consider the following neural networks with time-varying delay

For system (7), we consider the following static output feedback controller

Under the controller (8), the corresponding closed-loop system for (7) is given by

where \( \bar{A} = A + NMK. \)
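A brief worked step shows where \( \bar{A} \) comes from. It assumes that the input enters (7) through \( Nu(k) \), that the measured output is \( y(k) = Kx(k) \), and that (8) has the static form \( u(k) = My(k) \); these forms are inferred from the expression for \( \bar{A} \) rather than quoted. Substituting the controller into the linear part of the state equation gives

\[ Ax(k) + Nu(k) = Ax(k) + NMKx(k) = (A + NMK)x(k) = \bar{A}x(k). \]

Under these assumptions the gain \( M \) enters the stability conditions only through the product \( NMK \).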

Theorem 1

Suppose that Assumptions 1 and 2 hold. For given scalars \( \tau_{m} \) and \( \tau_{M} \) satisfying \( 0 < \tau_{m} \le \tau_{M} \), the discrete-time system (9) is asymptotically stable in the mean square if there exist matrices \( \psi = diag(s_{1} ,s_{2} , \ldots ,s_{n} ) > 0 \), \( \Gamma = diag(h_{1} ,h_{2} , \ldots ,h_{n} ) > 0 \), positive definite matrices \( P,Z,Q_{1} ,Q_{2} ,R \), \( T = \left[ {\begin{array}{*{20}c} {T_{11} } & {T_{12} } \\ * & {T_{22} } \\ \end{array} } \right] \), and scalars \( \varepsilon > 0 \), \( \lambda^{*} > 0 \) such that the following matrix inequalities hold:

where

Proof

Choose the following Lyapunov–Krasovskii functional candidate:

where

Define \( \Delta V(k) = V(k + 1) - V(k) \), then along the solution of (9) we have

From Lemma 1, it is easy to obtain

So (17) can be replaced by

It is easy to see

So, for any matrices P, we have

From (14)–(19), it follows that

where

In addition, from Assumption 1, we have

So, for any matrices \( \psi = diag(s_{1} ,s_{2} , \ldots ,s_{n} ) > 0 \) and \( \Gamma = diag(h_{1} ,h_{2} , \ldots ,h_{n} ) > 0 \), it follows that

Combining (20) and (21), we can get

where

Applying the Schur complement to (10) yields

Therefore, there exists a sufficiently small scalar \( c > 0 \) such that

This means that the discrete-time system (9) is asymptotically stable in the mean square. This completes the proof of Theorem 1.
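The Schur complement step used in the proof can be sanity-checked numerically. The sketch below assumes the standard statement for a symmetric block matrix: \( \left[ {\begin{array}{*{20}c} {S_{11} } & {S_{12} } \\ * & {S_{22} } \\ \end{array} } \right] < 0 \) holds if and only if \( S_{22} < 0 \) and \( S_{11} - S_{12} S_{22}^{ - 1} S_{12}^{T} < 0 \). The block names and the random test matrices are hypothetical and unrelated to the entries of (10).

```python
import numpy as np

def is_neg_def(X, tol=1e-10):
    """True if the symmetric part of X has all eigenvalues below -tol."""
    return bool(np.linalg.eigvalsh((X + X.T) / 2).max() < -tol)

def schur_test(S11, S12, S22):
    """Negative definiteness of [[S11, S12], [S12^T, S22]] via the Schur complement."""
    if not is_neg_def(S22):
        return False
    return is_neg_def(S11 - S12 @ np.linalg.solve(S22, S12.T))

rng = np.random.default_rng(1)
agree = True
for _ in range(200):
    S11 = -np.eye(2) * rng.uniform(0.5, 3.0)
    S22 = -np.eye(2) * rng.uniform(0.5, 3.0)
    S12 = rng.standard_normal((2, 2))
    full = np.block([[S11, S12], [S12.T, S22]])
    # The direct eigenvalue test and the Schur complement test should agree.
    agree = agree and (is_neg_def(full) == schur_test(S11, S12, S22))
print("tests agree on all samples:", agree)
```

In LMI-based proofs this equivalence is what turns an inequality containing an inverse or a product of unknowns into a larger inequality that is linear in the decision variables.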

Now, we consider the uncertain discrete-time stochastic neural networks (1) under the controller (8). The closed-loop system for (1) with (8) can be rewritten as

Theorem 2

Suppose that Assumptions 1 and 2 hold. For given scalars \( \tau_{m} \) and \( \tau_{M} \) satisfying (2), the closed-loop system (25) is robustly globally exponentially stable in the mean square if there exist matrices \( \psi = diag(s_{1} ,s_{2} , \ldots ,s_{n} ) > 0 \), \( \Gamma = diag(h_{1} ,h_{2} , \ldots ,h_{n} ) > 0 \), positive definite matrices \( P,Z,Q_{1} ,Q_{2} ,R \), \( T = \left[ {\begin{array}{*{20}c} {T_{11} } & {T_{12} } \\ * & {T_{22} } \\ \end{array} } \right] \), and scalars \( \varepsilon > 0 \) and \( \lambda^{*} > 0 \) such that the following matrix inequalities hold:

where

Proof

From (25), we have

where \( \eta (k) = x(k + 1) - x(k). \)

Choose the Lyapunov–Krasovskii functional candidate (12). Calculating the difference of \( V(k) \) along the system (25), and taking the mathematical expectation, we have

where

From (3) and (25), it follows that

where

Using the Schur complement and (26), we have

So, there exists a sufficiently small scalar \( c > 0 \) such that

This means that system (25) is asymptotically stable in the mean square for any time-varying delay \( \tau (k) \) satisfying (2).

Now, we prove the global exponential stability of system (25). It is easy to get that

where

For any \( \mu > 1 \), it is seen that

Now, summing both sides of (33) from 0 to \( k - 1 \), we obtain

According to the method in [16], it is easy to obtain

Meanwhile, from (32), we can easily get

Thus from (32)–(35), it can be obtained that

where

Because \( \vartheta_{ 1} (1) = - c < 0 \) and \( \vartheta_{ 1} \) is continuous, there must be a scalar \( \mu_{0} > 1 \) such that \( \vartheta_{ 1} (\mu_{0} ) < 0 \), so

From the definition of \( V(k) \), we also get that

So,

Then the closed-loop system (25) is globally exponentially stable in the mean square. This completes the proof of Theorem 2.
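The existence of \( \mu_{0} > 1 \) with \( \vartheta_{1} (\mu_{0} ) < 0 \) rests only on \( \vartheta_{1} (1) < 0 \) and continuity, so a suitable value can be found by shrinking the step away from 1. The following Python sketch illustrates this; the function `find_mu0` and the stand-in `theta1` are hypothetical, and `theta1` is not the expression from the proof.

```python
def find_mu0(theta, step=1.0, max_halvings=60):
    """Return some mu0 > 1 with theta(mu0) < 0, assuming theta is continuous and theta(1) < 0."""
    if theta(1.0) >= 0:
        raise ValueError("theta(1) must be negative")
    for _ in range(max_halvings):
        mu0 = 1.0 + step
        if theta(mu0) < 0:
            return mu0
        step /= 2.0                      # move closer to 1, where theta is negative
    raise RuntimeError("no admissible mu0 found")

# Hypothetical stand-in for theta_1: continuous, increasing in mu, with theta1(1) = -c.
c, rho, tau_M = 0.05, 0.4, 3
theta1 = lambda mu: (mu - 1.0) * rho * mu ** tau_M - c

mu0 = find_mu0(theta1)
print("mu0 =", mu0, " theta1(mu0) =", theta1(mu0))
```

Any such \( \mu_{0} \) yields a decay factor \( 1/\mu_{0} < 1 \), so a larger admissible \( \mu_{0} \) corresponds to a faster guaranteed decay rate.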

Remark 2

According to the proof of Theorem 2 and Definition 1, we have

Remark 3

The steps of calculating the gain matrix M of the static output feedback controller are as follows:

Step 1 Let \( \varPi = (NMK)^{T} P \). Solving (26) and (27) with the MATLAB LMI Toolbox, we obtain the matrices \( \varPi \), \( \psi = diag(s_{1} ,s_{2} , \ldots ,s_{n} ) \), \( \Gamma = diag(h_{1} ,h_{2} , \ldots ,h_{n} ) \), positive definite matrices \( P,Z,Q_{1} ,Q_{2} ,R \), \( T = \left[ {\begin{array}{*{20}c} {T_{11} } & {T_{12} } \\ * & {T_{22} } \\ \end{array} } \right] \), and positive scalars \( \varepsilon \) and \( \lambda^{*} \).

Step 2 From \( P \) and \( \varPi \), we calculate \( M = N^{ - 1} P^{ - 1} \varPi^{T} K^{ - 1} \), which requires \( N \) and \( K \) to be square and nonsingular.
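Step 2 can be sketched in Python as follows. Since \( P \) is symmetric, \( \varPi = (NMK)^{T} P \) gives \( NMK = P^{ - 1} \varPi^{T} \), which is then solved for \( M \). The function name `recover_gain` and all numerical matrices are hypothetical, and the sketch assumes that \( N \) and \( K \) are square and nonsingular.

```python
import numpy as np

def recover_gain(P, Pi, N, K):
    """Solve N M K = P^{-1} Pi^T for M, assuming N and K are square and nonsingular."""
    NMK = np.linalg.solve(P, Pi.T)                    # P^{-1} Pi^T
    return np.linalg.solve(N, NMK) @ np.linalg.inv(K)

# Round-trip check on hypothetical data: build Pi from a known M, then recover M.
rng = np.random.default_rng(2)
n = 3
N = np.eye(n) + 0.1 * rng.standard_normal((n, n))     # well-conditioned, invertible
K = np.eye(n) + 0.1 * rng.standard_normal((n, n))
M_true = rng.standard_normal((n, n))
L = rng.standard_normal((n, n))
P = L @ L.T + n * np.eye(n)                           # symmetric positive definite
Pi = (N @ M_true @ K).T @ P                           # definition used in Step 1

M = recover_gain(P, Pi, N, K)
print("max recovery error:", np.abs(M - M_true).max())
```

When \( N \) or \( K \) is not square, the same relation can only be solved in a least-squares sense, for example with pseudo-inverses, and the resulting \( M \) should then be re-checked against (26) and (27).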

When \( \tau (k) = d, \) where \( d \) is a constant, the closed-loop system (25) can be written as:

The following corollary can be obtained.

Corollary 1

Suppose that Assumptions 1 and 2 hold. The discrete-time system (36) is globally exponentially stable in the mean square if there exist matrices \( Z > 0,Q > 0,R > 0,P > 0 \), \( \psi = diag(s_{1} ,s_{2} , \ldots ,s_{n} ) > 0 \), \( \Gamma = diag(h_{1} ,h_{2} , \ldots ,h_{n} ) > 0 \), and scalars \( \varepsilon > 0 \) and \( \lambda^{*} > 0 \) such that the following matrix inequalities hold:

where

Proof

Choose the following Lyapunov–Krasovskii functional candidate:

where

Calculating the difference of \( V(k) \) along the system (36), and taking the mathematical expectation, we have

where

From (36) and Schur complement, it follows that

The rest of the proof is omitted, as it is similar to the proof of Theorem 2. □

4 Numerical Examples

In this section, numerical examples are given to demonstrate the effectiveness of the proposed approach.

Example 1

Consider the uncertain discrete-time neural networks system (1) with the following parameters:

We have \( l_{1}^{ - } = 0,\;l_{1}^{ + } = 0.6,\;l_{2}^{ - } = 0,\;l_{2}^{ + } = 0.4,\;v_{1}^{ - } = 0,\;v_{1}^{ + } = 0.2,\;v_{2}^{ - } = 0,\;v_{2}^{ + } = 0.6. \)

Solving (26) and (27) gives feasible solutions as follows:

The output feedback controller gain matrix is

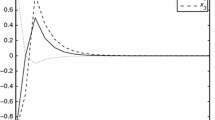

Therefore, it follows from Theorem 2 that the closed-loop system (25) is robustly globally exponentially stable in the mean square. The state trajectory of the closed-loop system is shown in Fig. 1.
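A state trajectory of this kind can be generated with a short Monte Carlo simulation. The Python sketch below is only illustrative: it assumes a closed loop of the form \( x(k + 1) = \bar{A}x(k) + Bf(x(k)) + Dg(x(k - \tau (k))) + \delta ( \cdot )\omega (k) \) with \( f = g = \tanh \), omits the parameter uncertainties, and uses hypothetical matrices that are not the parameters of Example 1.

```python
import numpy as np

def simulate(A_bar, B, D, sigma, tau_m, tau_M, x0, steps, rng):
    """One sample path of the ASSUMED closed loop
    x(k+1) = A_bar x(k) + B tanh(x(k)) + D tanh(x(k - tau(k))) + sigma(x, xd) w(k)."""
    hist = np.tile(np.asarray(x0, dtype=float), (tau_M + 1, 1))  # constant initial function
    path = [hist[-1].copy()]
    for _ in range(steps):
        tau = int(rng.integers(tau_m, tau_M + 1))   # time-varying delay in [tau_m, tau_M]
        x, xd = hist[-1], hist[-1 - tau]            # x(k) and x(k - tau(k))
        w = rng.standard_normal()                   # scalar noise: zero mean, unit variance
        x_next = A_bar @ x + B @ np.tanh(x) + D @ np.tanh(xd) + sigma(x, xd) * w
        hist = np.vstack([hist[1:], x_next])        # slide the delay window forward
        path.append(x_next)
    return np.array(path)

# Hypothetical closed-loop data (NOT the parameters of Example 1).
A_bar = np.array([[0.5, 0.1], [0.0, 0.4]])
B = 0.1 * np.array([[1.0, -1.0], [0.5, 1.0]])
D = 0.1 * np.array([[0.5, 1.0], [-1.0, 0.5]])
sigma = lambda x, xd: 0.1 * (x + xd)                # noise intensity with a linear growth bound

rng = np.random.default_rng(0)
runs = np.array([simulate(A_bar, B, D, sigma, 1, 3, [1.0, -1.0], 100, rng)
                 for _ in range(200)])
mean_square = (runs ** 2).sum(axis=2).mean(axis=0)  # sample estimate of E||x(k)||^2
print(mean_square[0], mean_square[-1])
```

Plotting `mean_square` on a logarithmic scale against `k` gives a quick visual check of exponential decay in the mean square: an approximately straight, downward-sloping line is consistent with it.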

Example 2

Consider the uncertain discrete-time system (1) with the following parameters:

We have

Solving (26) and (27) gives feasible solutions as follows:

The output feedback controller gain matrix is

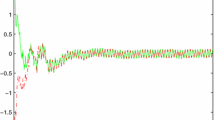

Therefore, it follows from Theorem 2 that the closed-loop system is robustly globally exponentially stable in the mean square. The state trajectory of the closed-loop system is shown in Fig. 2.

Example 3

Consider the uncertain discrete-time system (1) with the following parameters

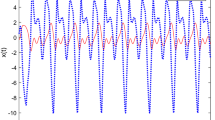

It can be verified that the above discrete-time system with \( u(k) = 0 \) is unstable.

Solving (26) and (27) gives feasible solutions as follows:

The output feedback controller gain matrix is

Therefore, it follows from Theorem 2 that the closed-loop system is robustly globally exponentially stable in the mean square. The state trajectory of the closed-loop system is shown in Fig. 3.

Example 4

Consider the uncertain discrete-time system (1) with the following parameters

For different values of \( \tau_{m} \), Table 1 gives the upper bounds \( \tau_{M} \) of the time-varying delay which guarantee the robust global exponential stability in the mean square of the system (25).
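Upper bounds of this kind are typically obtained by repeating an LMI feasibility test while increasing \( \tau_{M} \). The Python sketch below shows that search loop only. The callable `is_feasible` stands for a feasibility test of (26) and (27) and is hypothetical, as is the toy rule used to exercise it; the sketch also assumes that feasibility is lost monotonically as \( \tau_{M} \) grows.

```python
from typing import Callable

def max_feasible_tau_M(tau_m: int,
                       is_feasible: Callable[[int, int], bool],
                       limit: int = 200) -> int:
    """Largest tau_M >= tau_m for which is_feasible(tau_m, tau_M) holds, or -1 if none.

    Scans upward from tau_M = tau_m and stops at the first infeasible value.
    """
    best = -1
    for tau_M in range(tau_m, limit + 1):
        if not is_feasible(tau_m, tau_M):
            break
        best = tau_M
    return best

# Toy stand-in for the LMI solver: NOT derived from the paper's conditions.
def toy_feasible(tau_m: int, tau_M: int) -> bool:
    return tau_M - tau_m <= 7 and tau_M <= 15

for tau_m in (2, 10, 20):
    print(tau_m, max_feasible_tau_M(tau_m, toy_feasible))
```

In practice `is_feasible` would call an LMI or semidefinite programming solver with the example's matrices; because delays are integers, a linear scan is usually sufficient, although bisection could be substituted when the admissible range is large.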

5 Conclusion

In this paper, we investigate robust exponential stabilization for uncertain discrete-time stochastic neural networks with time-varying delay. Using the Lyapunov–Krasovskii functional approach, we propose delay-dependent stabilization criteria which guarantee that the closed-loop system of a class of discrete-time stochastic neural networks with time-varying delays is asymptotically stable in the mean square. Then, we give sufficient conditions for robust global exponential stabilization of a class of uncertain discrete-time stochastic neural networks with time-varying delays via output feedback control. Finally, some examples are given to show the effectiveness of the proposed stabilization conditions.

References

1. Feng Z, Zheng W (2015) On extended dissipativity of discrete-time neural networks with time-delay. IEEE Trans Neural Netw Learn Syst 26:3293–3300

2. Zou L, Wang Z, Han Q-L, Zhou D (2017) Ultimate boundedness control for networked systems with try-once-discard protocol and uniform quantization effects. IEEE Trans Autom Control 62:6582–6588

3. Huang C, Liu B (2019) New studies on dynamic analysis of inertial neural networks involving non-reduced order method. Neurocomputing 325:283–287

4. Huang C, Zhang H (2019) Periodicity of non-autonomous inertial neural networks involving proportional delays and non-reduced order method. Int J Biomath 12:1950016

5. Xia J, Park J, Zeng H (2015) Improved delay-dependent robust stability analysis for neutral-type uncertain neural networks with Markovian jumping parameters and time-varying delays. Neurocomputing 149:1198–1205

6. Yang X, Huang C, Yang Z (2012) Stochastic synchronization of reaction-diffusion neural networks under general impulsive controller with mixed delays. Abstr Appl Anal 2012:1–25

7. Huang C, Liu B, Tian X, Yang L, Zhang X (2019) Global convergence on asymptotically almost periodic SICNNs with nonlinear decay functions. Neural Process Lett 49(2):625–641

8. Tian J, Xiong W, Xu F (2014) Improved delay-partitioning method to stability analysis for neural networks with discrete and distributed time-varying delays. Appl Math Comput 233:152–164

9. Dong Y, Guo L, Hao J (2018) Robust exponential stabilization for uncertain neutral neural networks with time-varying delays by periodically intermittent control. Neural Comput Appl. https://doi.org/10.1007/s00521-018-3671-2

10. Dong Y, Guo L, Hao J, Li T (2018) Robust exponential stabilization for switched neutral neural networks with mixed time-varying delays. Neural Process Lett. https://doi.org/10.1007/s11063-018-9928-z

11. Wen S, Huang T, Zeng Z, Chen Y, Li P (2015) Circuit design and exponential stabilization of memristive neural networks. Neural Netw 63:48–56

12. Park MJ, Kwon OM, Park JuH, Lee SM, Cha EJ (2013) On synchronization criterion for coupled discrete-time neural networks with interval time-varying delays. Neurocomputing 99:188–196

13. Huang C, Kuang H, Chen X, Wen F (2013) An LMI approach for dynamics of switched cellular neural networks with mixed delays. Abstr Appl Anal 2013:1–8

14. Wang P, Hu H, Jun Z, Tan Y, Liu L (2013) Delay-dependent dynamics of switched Cohen-Grossberg neural networks with mixed delays. Abstr Appl Anal 2013:1–11

15. Dong Y, Liang S, Wang H (2019) Robust stability and H∞ control for nonlinear discrete-time switched systems with interval time-varying delay. Math Methods Appl Sci 42:1999–2015

16. Yu J, Zhang K, Fei S (2010) Exponential stability criteria for discrete-time recurrent neural networks with time-varying delay. Nonlinear Anal Real World Appl 11:207–216

17. Tan C, Yang L, Zhang F, Zhang Z, Wong WS (2019) Stabilization of discrete time stochastic system with input delay and control dependent noise. Syst Control Lett 123:62–68

18. Luo M, Zhong S, Wang R, Kang W (2009) Robust stability analysis for discrete-time stochastic neural networks systems with time-varying delays. Appl Math Comput 209:305–313

19. Zhou B, Yang X (2018) Global stabilization of discrete-time multiple integrators with bounded and delayed feedback. Automatica 97:306–315

20. Ou Y, Liu H, Si Y, Feng Z (2010) Stability analysis of discrete-time stochastic neural networks with time-varying delays. Neurocomputing 73:740–748

21. Chen W, Liu X (2008) Mean square exponential stability of uncertain stochastic delayed neural networks. Phys Lett A 372(7):1061–1069

22. Wang Z, Liu Y, Fraser K, Liu X (2006) Stochastic stability of uncertain Hopfield neural networks with discrete and distributed delays. Phys Lett A 354:288–297

23. Wu X, Tang Y, Zhang W (2014) Stability analysis of switched stochastic neural networks with time-varying delays. Neural Netw 51:39–49

24. Zhou J, Xu S, Zhang B, Zou Y, Shen H (2012) Robust exponential stability of uncertain stochastic neural networks with distributed delays and reaction–diffusions. IEEE Trans Neural Netw Learn Syst 23:1407–1416

25. Yu J, Zhang K, Fei S (2010) Exponential stability criteria for discrete-time recurrent neural networks with time-varying delay. Nonlinear Anal Real World Appl 11:207–216

26. Dong Y, Chen L, Mei S (2019) Observer design for neutral-type neural networks with discrete and distributed time-varying delays. Int J Adapt Control Signal Process 33:527–544

27. Hu J, Wang J (2015) Global exponential periodicity and stability of discrete-time complex-valued recurrent neural networks with time-delays. Neural Netw 66:119–130

28. Zhang H, Liao X (2005) LMI-based robust stability analysis of neural networks with time-varying delay. Neurocomputing 67:306–312

29. Xu S, Chu Y, Lu J (2006) New results on global exponential stability of recurrent neural networks with time-varying delays. Phys Lett A 352:371–379

30. He Y, Wang G, Wu M (2005) LMI-based stability criteria for neural networks with multiple time-varying delays. Physica D 212:126–136

31. Liu Y, Wang Z, Liu X (2008) Robust stability of discrete-time stochastic neural networks with time-varying delays. Neurocomputing 71:823–833

Acknowledgements

This work was supported by the Natural Science Foundation of Tianjin under Grant No. 18JCYBJC88000 and the National Natural Science Foundation of China under Grant Nos. 61873186, 61603272 and 61703307.

Cite this article

Dong, Y., Wang, H. Robust Output Feedback Stabilization for Uncertain Discrete-Time Stochastic Neural Networks with Time-Varying Delay. Neural Process Lett 51, 83–103 (2020). https://doi.org/10.1007/s11063-019-10077-x
