Abstract

The proportional delay is an unbounded time-varying delay, which differs from an unbounded distributed delay. Neural networks with proportional delays belong to the class of proportional delay differential equations, an important type of unbounded delay differential equation that is widely used in many fields, such as light absorption in stellar matter and nonlinear dynamic systems. This paper is concerned with the exponential synchronization problem of a class of chaotic neural networks with multiple proportional delays. A nonlinear transformation converts the drive-response system with multiple proportional delays into a drive-response system with multiple constant delays and time-varying coefficients. By constructing an appropriate Lyapunov functional, several new delay-dependent and decentralized control laws, which depend on the size of the proportional delay factors, are derived to achieve exponential synchronization. The idea and approach developed in this paper can provide a more practical framework for the synchronization of chaotic systems with proportional delays. Two examples and their simulations are given to illustrate the effectiveness of the proposed method.

1 Introduction

Synchronization control makes two or more systems share a common dynamical behavior through coupling or external forcing. Since Pecora and Carroll [1] originally proposed the drive-response concept for synchronizing coupled chaotic systems, many alternative schemes for the control and synchronization of such systems have been proposed [1–7], and their potential applications have been demonstrated in various fields such as chaos generator design, information science, secure communication and biological systems.

In practice, time delays arise naturally in the operation of such systems because of the finite information processing speed and the finite switching speed of amplifiers, and they can cause undesirable dynamic behaviors such as oscillation and instability. It has been revealed that some types of delayed neural networks (DNNs) can exhibit complicated dynamics and even chaotic behavior if the parameters and time delays are appropriately chosen. Therefore, dynamic behaviors, especially the synchronization problems of (chaotic) DNNs, have been extensively considered [8–24]. So far, most of the models studied in synchronization problems are (chaotic) neural networks (NNs) with constant delays [8, 22, 25], bounded time-varying delays [9, 11, 14, 15, 17–20], distributed delays [10, 12, 13, 16, 20], etc. Most results on the synchronization of (chaotic) DNNs are based on approaches such as Lyapunov functionals [11, 12, 14, 15, 22, 23, 25], and Lyapunov-Krasovskii functionals combined with linear matrix inequalities [13–15, 20]. In addition, delay-dependent stability criteria and synchronization control laws depend on the size of the delay, so they can be used to design better networks according to the time delays that the networks allow. Thus, establishing suitable delay-dependent synchronization control laws for systems with delays has both theoretical significance and practical value. Delay-dependent controllers and sufficient criteria under which the error system is stable, so that the response system synchronizes with the drive system, have been derived in [13, 15, 20, 22, 23].

The proportional delay is one of many delay types and arises in practice. For example, in Web quality of service (QoS) routing decisions, a proportional delay is usually required [26–31]. In recent years, many routing algorithms based on neural networks have been obtained [32, 33]; these algorithms are highly parallel and have been shown to yield exact solutions to the problem. If QoS routing algorithms based on neural networks with proportional delays are proposed, they will be the algorithms best suited to the actual situation. The primary task in solving this problem is then to study the dynamic behaviors of neural networks with proportional delays, such as stability and dissipativity. The proportional delay [34–38] is time-varying and unbounded, which distinguishes it from other types of delay, such as constant delay, bounded time-varying delay and distributed delay. Since a neural network usually has a spatial nature due to the presence of many parallel pathways with a variety of axon sizes and lengths, it is desirable to model it by introducing a continuously proportional delay over a certain duration of time. That is, it is reasonable to introduce proportional delays into neural networks according to their topology and parameters. The proportional delay function \(\tau (t)=(1-q)t\) (\(0<q<1\)) is monotonically increasing in the time \(t\), so it is convenient to control the network’s running time according to the time delays of the network. It is therefore important to design a synchronized drive-response system with proportional delays. To date, a few results on the dynamic behaviors of NNs with proportional delays have been reported in [34, 35, 39, 40]. Zhou [34, 39, 40] discussed the global exponential stability and asymptotic stability of cellular neural networks (CNNs) with proportional delays by employing matrix theory and constructing Lyapunov functionals. The dissipativity of a class of CNNs with proportional delays has been investigated by using inner product properties in [35]. To the best of the author’s knowledge, the synchronization problems of (chaotic) neural networks with proportional delays have not been investigated so far and remain open and challenging.

Motivated by the discussion above, this paper discusses the exponential synchronization problem of (chaotic) neural networks with multiple proportional delays. In Sect. 2, models and preliminaries are presented. A pair of neural networks with multiple proportional delays is introduced; then, by using the nonlinear transformation \(v_{i}(t)=x_{i}(\hbox {e}^{t}), w_{i}(t)=z_{i}(\hbox {e}^{t})\), the systems are equivalently transformed into a pair of neural networks with multiple constant delays and time-varying coefficients. In Sect. 3, by using the Lyapunov functional method and some inequality analysis techniques, several delay-dependent decentralized feedback control inputs are derived to achieve exponential synchronization. In Sect. 4, two numerical examples and their simulations are given to illustrate the effectiveness of the proposed method. Conclusions are presented in Sect. 5.

Notation \(\mathbb {R}^{n}\) denotes the \(n\)-dimensional Euclidean space. \(x^{T}\) denotes the transpose of a square matrix or a vector \(x\). For \(x\in \mathbb {R}^{n}\), let \(\Vert x\Vert =\sum \limits _{i=1}^{n}|x_{i}|\). \(\hbox {sgn}(y)\) is the sign function of \(y\): \(\hbox {sgn}(y)=1\) if \(y>0\); \(\hbox {sgn}(y)=0\) if \(y=0\); \(\hbox {sgn}(y)=-1\) if \(y<0\).

2 System Description and Preliminaries

A class of recurrent neural networks with proportional delays in a general form can be described by the following equations:

for \(i=1,2,\ldots ,n,\,t\ge 1\), where \(n\ge 2\) denotes the number of neurons in the network; \(x_{i}(t)\) is the state variable associated with the \(i\)th neuron; \(d_{i}(x_{i}(t))\) is an appropriately behaved function that keeps the solutions of the drive neural network (2.1) bounded; \(a_{ij}\) and \(b_{ij}\) are constants which denote the strengths of connectivity between the neurons \(j\) and \(i\) at time \(t\) and \(q_{ij}t\), respectively; \(q_{ij}, i,j=1,2,\ldots ,n\) are proportional delay factors satisfying \(0<q_{ij}\le 1\), with \(q=\min \limits _{1\le i,j\le n}\{q_{ij}\}\) and \(q_{ij}t=t-(1-q_{ij})t\), in which \((1-q_{ij})t\) corresponds to the time delay required in processing and transmitting a signal from the \(j\)th neuron to the \(i\)th neuron, and \( (1-q_{ij})t\rightarrow +\infty \) as \(t\rightarrow +\infty \) when \(q_{ij}\ne 1\); \(f_{i}(\cdot )\) denotes a nonlinear activation function; and \(I_{i}\) is an external constant input. Furthermore, the neural network (2.1) has the initial conditions \(x_{i}(t)=x_{i0}\) for \(t\in [q,1]\), where \(x_{i0}, i=1,2,\ldots ,n\) are constants. Before proceeding, the following assumptions are made on the functions \(d_{i}(x_{i}(t))\) and the activation functions \(f_{i}(x_{i}(t)), i=1,2,\ldots ,n\).

Assumption 1

Functions \(d_{i}(x_{i}(t))\) and \(d_{i}(x_{i}(t))^{-1}, i=1,2,\ldots ,n\), are globally Lipschitz continuous. Moreover, \(d_{i}^{'}(x_{i})=\frac{\hbox {d}d_{i}(x_{i})}{\hbox {d}x_{i}}\ge \gamma _{i}>0\) for \(x_{i}\in \mathbb {R}\).

Assumption 2

Each function \(f_{i}:\mathbb {R}\rightarrow \mathbb {R}\) satisfies \(|f_{i}(u)-f_{i}(v)|\le L_{i}|u-v|\) for \(u,v\in \mathbb {R}\), where \(L_{i}, i=1,2,\ldots ,n\) are positive constants.

Regarding model (2.1) as the drive system, the response system is given by

where \(u_{i}(t)\) is the unidirectionally coupled term, which is regarded as the control input and is designed so that the specific control objective is achieved. The initial conditions of (2.2) are \(z_{i}(t)=z_{i0}\) for \(t\in [q,1]\), where \(z_{i0}, i=1,2,\ldots ,n\) are constants.

Let \(v_{i}(t)=x_{i}(\hbox {e}^{t}), w_{i}(t)=z_{i}(\hbox {e}^{t})\) [34]; then the pair of neural networks (2.1) and (2.2) is equivalently transformed into the following pair of neural networks with multiple constant delays and time-varying coefficients

and

for \(t\ge 0,\,i=1,2,\ldots ,n\), where \(\tau _{ij}=-\log q_{ij}\ge 0\) and \(U_{i}(t)=u_{i}(\hbox {e}^{t})\).
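The displayed systems are not reproduced in this copy, so the following is only a sketch of why the substitution turns proportional delays into constant ones. It assumes that the drive system (2.1) has the additive form suggested by the symbol descriptions in Sect. 2 (decay term \(d_{i}\), instantaneous weights \(a_{ij}\), delayed weights \(b_{ij}\), input \(I_{i}\)); that form is an assumption of the sketch, not a quotation of the original equation.

```latex
% Assumed form of the drive system (2.1), for s >= 1:
%   x_i'(s) = -d_i(x_i(s)) + \sum_j a_{ij} f_j(x_j(s))
%             + \sum_j b_{ij} f_j(x_j(q_{ij} s)) + I_i
\begin{align*}
v_i(t) &= x_i(\mathrm{e}^{t})
  \;\Longrightarrow\;
  \dot v_i(t) = \mathrm{e}^{t}\,\dot x_i(\mathrm{e}^{t}), \\
x_j(q_{ij}\mathrm{e}^{t}) &= x_j\bigl(\mathrm{e}^{t+\log q_{ij}}\bigr)
  = v_j(t+\log q_{ij}) = v_j(t-\tau_{ij}),
  \qquad \tau_{ij} = -\log q_{ij}, \\
\dot v_i(t) &= \mathrm{e}^{t}\Bigl\{-d_i(v_i(t))
  + \sum_{j=1}^{n} a_{ij} f_j(v_j(t))
  + \sum_{j=1}^{n} b_{ij} f_j(v_j(t-\tau_{ij})) + I_i\Bigr\},
  \qquad t \ge 0 .
\end{align*}
```

Under this assumption the factor \(\hbox {e}^{t}\) is the time-varying coefficient, and the history interval \([q,1]\) of the original time variable is mapped onto \([\log q,0]=[-\tau ,0]\).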

The neural networks (2.3) and (2.4) have the initial conditions \(v_{i}(t)=\varphi _{i}(t)\in C([-\tau ,0],\mathbb {R})\) and \(w_{i}(t)=\psi _{i}(t)\in C([-\tau ,0],\mathbb {R})\), where \(\varphi _{i}(t)=x_{i0}\) and \(\psi _{i}(t)=z_{i0}\) for \(t\in [-\tau ,0]\), and \(\tau =\max \limits _{1\le i,j\le n}\{\tau _{ij}\}\).
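As a small numerical illustration of the relation \(\tau _{ij}=-\log q_{ij}\), the sketch below converts a matrix of proportional delay factors into constant delays and checks that \(\tau =\max \tau _{ij}\) equals \(-\log q\). The helper names `constant_delays` and `delayed_time_matches` are hypothetical, and the factors are the ones used later in Example 4.1.

```python
import math

def constant_delays(q):
    """Map proportional delay factors q_ij in (0, 1] to constant delays tau_ij = -log(q_ij)."""
    for row in q:
        for q_ij in row:
            if not 0.0 < q_ij <= 1.0:
                raise ValueError("each q_ij must satisfy 0 < q_ij <= 1")
    return [[-math.log(q_ij) for q_ij in row] for row in q]

# Delay factors of Example 4.1: q11 = q22 = 0.5, q12 = q21 = 0.8
Q = [[0.5, 0.8], [0.8, 0.5]]
TAU = constant_delays(Q)
tau_max = max(max(row) for row in TAU)   # tau = max over i, j of tau_ij
q_min = min(min(row) for row in Q)       # q = min over i, j of q_ij

def delayed_time_matches(q_ij, t):
    """Check that the delayed argument q_ij * e^t equals e^(t - tau_ij)."""
    tau_ij = -math.log(q_ij)
    return math.isclose(q_ij * math.exp(t), math.exp(t - tau_ij))
```

The smallest factor produces the largest constant delay, which is why the transformed history interval has length \(\tau =-\log q\).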

Definition 2.1

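[16] Systems (2.3) and (2.4) are said to be exponentially synchronized if there exist constants \(M\ge 1\) and \(\lambda >0\) such that

\[\sum \limits _{i=1}^{n}|v_{i}(t)-w_{i}(t)|\le M\sum \limits _{i=1}^{n}\sup \limits _{-\tau \le s\le 0}|v_{i}(s)-w_{i}(s)|\,\hbox {e}^{-\lambda t}\]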

for \(t\ge 0\). Moreover, the constant \(\lambda \) is defined as the exponential synchronization rate.

Thus, the goal of this paper is to design an appropriate controller \(u(t)=[u_{1}(t),u_{2}(t),\ldots , u_{n}(t)]^{T}\) such that the coupled neural networks (2.1) and (2.2) remain synchronized. By the equivalence above, it suffices to design an appropriate controller \(U(t)=[U_{1}(t),U_{2}(t),\ldots ,U_{n}(t)]^{T}\) such that the coupled neural networks (2.3) and (2.4) remain synchronized. From (2.3) and (2.4), the following dynamic equations of the synchronization error are obtained

for \(t\ge 0, i=1,2,\ldots ,n\), where \(\tilde{y}_{i}(t)=v_{i}(t)-w_{i}(t),\,c_{i}(\tilde{y}_{i}(t))=d_{i}(v_{i}(t))-d_{i}(w_{i}(t)),\,g_{j}(\tilde{y}_{j}(t))=f_{j}(v_{j}(t))-f_{j}(w_{j}(t)),\,g_{j}(\tilde{y}_{j}(t-\tau _{ij}))=f_{j}(v_{j}(t-\tau _{ij}))-f_{j}(w_{j}(t-\tau _{ij})), ~i,j=1,2,\ldots ,n\).

Therefore, the exponential synchronization problem for the delayed neural networks (2.3) and (2.4) is reduced to an exponential stabilization problem for the error dynamics (2.5).

3 Main Results

Theorem 3.1

Assume that Assumptions 1 and 2 hold. For the drive-response structure of neural networks given in (2.3) and (2.4), if the control input \(U_{i}(t)\) in (2.4) is suitably designed as

for \(t\ge 0\), where \(\sigma >1\) is a constant, then the exponential synchronization of systems (2.3) and (2.4) is obtained with a synchronization rate \(\alpha =\sigma -1>0\).

Proof

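By the definition of \(g_{j}(\tilde{y}_{j}(t))\) in (2.5), Assumption 2 yields \(|g_{j}(\tilde{y}_{j}(t))|\le L_{j}|\tilde{y}_{j}(t)|\), and likewise for the delayed argument \(t-\tau _{ij}\).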

Consider the following function

From (2.5) and (3.3), one can conclude that

Now construct the following positive Lyapunov functional as

for \(t\ge 0,\,\sigma >1\). Subsequently, the derivative of \(V(t)\) along the trajectories of (3.4) is

If the control input \(U_{i}(t)\) is suitably designed as

then

which implies \(V(t)\le V(0)\) for \(t\ge 0\). Then, by (3.3) and (3.5), one has

Moreover, it follows from (3.5) that

where \(M=\max \limits _{1\le i\le n}\{1+L_{i}\tau \hbox {e}^{\sigma \tau }\sum \limits _{j=1}^{n}|b_{ji}|\}\ge 1\).

Substituting (3.8) into (3.9) yields

where \(\alpha =\sigma -1>0\).

The proof is completed. \(\square \)

In terms of (3.10) and \(v_{i}(t)=x_{i}(\hbox {e}^{t}), w_{i}(t)=z_{i}(\hbox {e}^{t})\), one can conclude that

Let \(\hbox {e}^{t}=\eta \); then \(\eta \ge 1\) and \(t=\log \eta \ge 0\). Let \(\hbox {e}^{s}=\xi \); then \(s=\log \xi \in [-\tau ,0]\) and \(\xi \in [q,1]\). Thus, it follows from (3.11) that

Taking \(\eta =t\), the following inequality is obtained:

Thus, the drive-response systems (2.1) and (2.2) are synchronized, and since \(\log t<t\) for \(t\ge 1\), the exponential synchronization rate is less than \(\alpha \).
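As a short worked identity, and assuming that the estimate obtained after setting \(\eta =t\) carries the factor \(\hbox {e}^{-\alpha \log t}\) (the displayed inequality is not reproduced in this copy), the decay in the original time variable can be written as a power of \(t\):

```latex
\[
\mathrm{e}^{-\alpha \log t} = t^{-\alpha}, \qquad t \ge 1,
\qquad\text{so that}\qquad
t^{-\alpha} > \mathrm{e}^{-\alpha t}
\quad\text{for } t \ge 1,\ \alpha > 0 .
\]
```

Under that assumption, the error bound for (2.1) and (2.2) decays like \(t^{-\alpha }\), which is slower than \(\hbox {e}^{-\alpha t}\); this is the sense in which the rate in the original time variable is less than \(\alpha \).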

By \(U_{i}(t)=u_{i}(\hbox {e}^{t})\), (3.1) can be written as

namely,

in which \(\tilde{y}_{i}(\log t)=v_{i}(\log t)-w_{i}(\log t)=x_{i}(t)-z_{i}(t)\). Let \(y_{i}(t)=x_{i}(t)-z_{i}(t)\); then

Thus, the following theorem is derived.

Theorem 3.2

Assume that Assumptions 1 and 2 hold. For the drive-response structure of neural networks given in (2.1) and (2.2), if the control input \(u_{i}(t)\) in (2.2) is suitably designed as

for \(i=1,2,\ldots ,n,\,t\ge 1\), where \(\sigma >1\) is a constant, \(y_{i}(t)=x_{i}(t)-z_{i}(t)\) denotes the resulting synchronization error, then the exponential synchronization of systems (2.1) and (2.2) is obtained with an exponential convergence rate which is less than \(\alpha =\sigma -1\).

In addition, let \(q_{ij}=1, i,j=1,2,\ldots ,n\) in (2.1) and (2.2), then systems (2.1) and (2.2) become the following drive-response structure of neural networks without delays,

and

Corollary 3.3

Assume that Assumptions 1 and 2 hold. For the drive-response structure of neural networks given in (3.15) and (3.16), if the control input \(u_{i}(t)\) in (3.16) is suitably designed as

for \(i=1,2,\ldots ,n\), where \(\sigma >1\) is a constant, \(y_{i}(t)=x_{i}(t)-z_{i}(t)\) denotes the resulting synchronization error, then the exponential synchronization of systems (3.15) and (3.16) is obtained with an exponential convergence rate \(\alpha =\sigma -1\).

Remark 3.4

Systems (2.3) and (2.4) are asymptotically synchronized if the following conditions are satisfied: (i) the exponential synchronization rate is zero, i.e. \(\lambda =0\), and there exists \(M>1\) such that \(\sum \limits _{i=1}^{n}|v_{i}(t)-w_{i}(t)|\le M\sum \limits _{i=1}^{n}\sup \limits _{-\tau \le s\le 0}|v_{i}(s)-w_{i}(s)|\) for \(t\ge 0\); (ii) \(\lim \limits _{t\rightarrow \infty }\Vert \tilde{y}(t)\Vert =0\).

Remark 3.5

When \(\sigma =1\) in Theorems 3.1, 3.2 and Corollary 3.3, the exponential synchronization results reduce to the asymptotic synchronization results. Meanwhile, the control inputs (3.1), (3.14) and (3.17) are suitably designed as follows

and

4 Illustrative Examples

In this section, two illustrative examples are presented to demonstrate the effectiveness of the proposed synchronization scheme.

Example 4.1

Consider the following delayed Hopfield neural networks

where \(d_{i}(x_{i}(t))=x_{i}(t),\,A=\begin{pmatrix}2.0 & -0.1\\ -5.0 & 3.0 \end{pmatrix},\,B=\begin{pmatrix}-1.5 & -0.1\\ -0.2 & -2.5 \end{pmatrix}\) and \(f_{i}(x_{i})=\tanh (x_{i}),~i=1,2\). The system satisfies Assumptions 1 and 2 with \(\gamma _{i}=1, L_{i}=1, i=1,2\). The chaotic behavior of system (4.1) with the initial condition \([x_{1}(s),x_{2}(s)]^{T}=[0.4,0.6]^{T}\) for \(-1\le s\le 0\) has already been reported in the case of \(\tau _{ij}(t)=\tau _{j}(t)=1,i,j=1,2\) [11, 25] (see Fig. 1).

The present example specifies the unbounded time-varying delays as \(\tau _{ij}(t)=(1-q_{ij})t\), in which \(q_{11}=q_{22}=0.5, q_{12}=q_{21}=0.8\). The dynamic behavior of this system with \(\tau _{ij}(t)=(1-q_{ij})t\) can be seen in Fig. 2a. To achieve synchronization, the response system is designed as

From (3.14), the control inputs \(u_{i}(t),i=1,2\) are chosen as

and

where \(y_{i}(t)=x_{i}(t)-z_{i}(t), i=1,2\), and \(\sigma =2\) is taken. Figure 2a and c show the dynamic behavior of drive system (4.1) and response system (4.2) in phase space with initial conditions \(x(t)=[0.4,0.6]^{T}\) and \(z(t)=[-1.0,2.0]^{T},\,t\in [0.5,1.0]\), respectively. Figure 2b shows the dynamic behavior of response system (4.2) in phase space without control input with initial condition \(z(t)=[-1.0,2.0]^{T},\,t\in [0.5,1.0]\). Figure 3 depicts the synchronization error of the state variables between drive system (4.1) and response system (4.2) with the initial conditions \(x(t) = [0.4, 0.6]^T\) and \(z(t) = [-1.0, 2.0]^T\) for \(t \in [0.5, 1.0]\), respectively. The simulation results show that the proposed controller guarantees global exponential synchronization of the coupled networks.
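The uncontrolled drive trajectory of this example can be approximated with a few lines of code. The sketch below is not the author's simulation: it assumes that (4.1) has the form \(\dot{x}_{i}(t)=-x_{i}(t)+\sum _{j}a_{ij}\tanh (x_{j}(t))+\sum _{j}b_{ij}\tanh (x_{j}(q_{ij}t))\) with zero external input, and it uses forward Euler steps with linear interpolation of the stored history to evaluate \(x_{j}(q_{ij}t)\). The function name `simulate_drive`, the step size and the final time are hypothetical choices. The controller is not included because its formula is not reproduced in this copy.

```python
import numpy as np

# Parameters of Example 4.1; zero external input is an assumption of this sketch.
A = np.array([[2.0, -0.1], [-5.0, 3.0]])
B = np.array([[-1.5, -0.1], [-0.2, -2.5]])
Q = np.array([[0.5, 0.8], [0.8, 0.5]])   # proportional delay factors q_ij
X0 = np.array([0.4, 0.6])                # constant history on [q, 1]

def simulate_drive(A, B, Q, x0, t_end=10.0, h=0.01):
    """Forward-Euler sketch of
    x_i' = -x_i + sum_j a_ij tanh(x_j(t)) + sum_j b_ij tanh(x_j(q_ij t))."""
    n = x0.size
    q = Q.min()
    n_hist = int(round((1.0 - q) / h))        # grid intervals covering the history [q, 1]
    n_steps = int(round((t_end - 1.0) / h))   # Euler steps on [1, t_end]
    t = q + h * np.arange(n_hist + n_steps + 1)
    x = np.empty((n, t.size))
    x[:, : n_hist + 1] = x0[:, None]          # x_i(t) = x_i0 on [q, 1]
    for k in range(n_hist, t.size - 1):
        delayed = np.empty((n, n))            # delayed[i, j] approximates x_j(q_ij * t_k)
        for j in range(n):
            delayed[:, j] = np.interp(Q[:, j] * t[k], t[: k + 1], x[j, : k + 1])
        rhs = -x[:, k] + A @ np.tanh(x[:, k]) + np.sum(B * np.tanh(delayed), axis=1)
        x[:, k + 1] = x[:, k] + h * rhs
    return t, x

t, x = simulate_drive(A, B, Q, X0)
```

Because \(q_{ij}t<t\) for \(t\ge 1\), every delayed argument falls inside the part of the trajectory that has already been computed, so no extrapolation is needed. A dedicated delay-differential-equation solver would be preferable for quantitative work; the Euler scheme is used here only to keep the sketch short.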

Example 4.2

Consider the following third-order CNN with proportional delays

with

and \(f_{i}(x_{i})=\frac{1}{2}(|x_{i}+1|-|x_{i}-1|),\,q_{ij}=0.5, i,j=1,2,3\). Clearly, the system satisfies Assumptions 1 and 2, with \(\gamma _{i}=1,L_{i}=1, i=1,2,3\).
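The Lipschitz constants \(L_{i}=1\) quoted for the two activation functions used in the examples can be checked numerically. The sketch below samples random pairs and reports the largest observed ratio \(|f(u)-f(v)|/|u-v|\), which is only a lower estimate of the true constant; the function names, sampling interval and sample count are hypothetical choices.

```python
import numpy as np

def cnn_activation(x):
    """Piecewise-linear activation f(x) = (|x + 1| - |x - 1|) / 2 from Example 4.2."""
    return 0.5 * (np.abs(x + 1.0) - np.abs(x - 1.0))

def empirical_lipschitz(f, low=-5.0, high=5.0, samples=100_000, seed=0):
    """Largest sampled ratio |f(u) - f(v)| / |u - v| over random pairs (u, v)."""
    rng = np.random.default_rng(seed)
    u = rng.uniform(low, high, samples)
    v = rng.uniform(low, high, samples)
    gap = np.abs(u - v)
    keep = gap > 1e-6   # skip nearly equal pairs to avoid round-off blow-up
    return float(np.max(np.abs(f(u[keep]) - f(v[keep])) / gap[keep]))

L_cnn = empirical_lipschitz(cnn_activation)   # activation of Example 4.2
L_tanh = empirical_lipschitz(np.tanh)         # activation of Example 4.1
```

Both estimates stay at or below 1 up to round-off, in agreement with Assumption 2 holding with \(L_{i}=1\).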

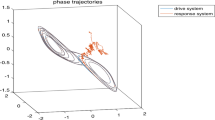

It should be noted that this CNN with proportional delays is in fact chaotic (see Fig. 4a) with initial values \(x(t)=[2.0,1.0,1.0]^{T}\) for \(t\in [0.5,1.0]\). To achieve synchronization, the response system is designed as

Furthermore, a nonlinear controller for systems (4.3) and (4.4) is designed by using Theorem 3.2. Let the control parameter be \(\alpha =2\). From (3.14), the control inputs \(u_{i}(t),i=1,2,3\) are chosen as

and

Figure 4a and c show the chaotic behavior of drive system (4.3) and response system (4.4) in phase space with initial conditions \(x(t)=[2.0,1.0,1.0]^{T}\) and \(z(t)=[1.0,2.0,3.0]^{T},\,t\in [0.5,1.0]\), respectively. Figure 4b shows the chaotic behavior of response system (4.4) in phase space without control input with initial condition \(z(t)=[1.0,2.0,3.0]^{T},\,t\in [0.5,1.0]\). Figure 5 depicts the synchronization error of the state variables between the drive system (4.3) and the response system (4.4) with the initial conditions \([x_{1},x_{2},x_{3}]^{T}=[2.0,1.0,1.0]^{T}\) and \([z_{1},z_{2},z_{3}]^{T}=[1.0,2.0,3.0]^{T}\), respectively. It can be seen that the state error between the drive system and the response system tends to zero, which implies that the response system synchronizes with the drive system.

5 Conclusions

In this paper, a drive-response synchronization control framework has been established. Unlike prior works, the delays here are multiple proportional delays, which are unbounded and time-varying. A model for the synchronization control of recurrent neural networks with multiple proportional delays has been proposed for the first time. The exponential synchronization problem has then been reduced to the stabilization of the associated error system. By constructing an appropriate Lyapunov functional, several delay-dependent and decentralized control laws have been derived, which ensure that the response system is exponentially synchronized with the drive system. Moreover, the degree of synchronization can be easily estimated. Finally, two illustrative examples have been given to verify the theoretical results. Further, recurrent neural networks with proportional delays can be applied to QoS routing in computer networks, to establish QoS routing algorithms based on neural networks with proportional delays.

References

1. Pecora LM, Carroll TL (1990) Synchronization in chaotic system. Phys Rev Lett 64(8):821–824

2. Feki M (2003) An adaptive chaos synchronization scheme applied to secure communication. Chaos Solitons Fract 18(1):141–148

3. Chen M, Zhou D, Shang Y (2005) A new observer-based synchronization scheme for private communication. Chaos Solitons Fract 24(4):1025–1030

4. Xu YH, Zhou WN, Fang JA, Sun W (2010) Adaptive lag synchronization and parameters adaptive lag identification of chaotic system. Phys Lett A 374(34):3441–3446

5. Ye ZY, Deng CB (2012) Adaptive synchronization to a general non-autonomous chaotic system and its applications. Nonlinear Anal 13(2):840–849

6. Cheng S, Ji JC, Zhou J (2013) Fast synchronization of directionally coupled chaotic systems. Appl Math Model 37(1–2):127–136

7. Chen LP, Chai Y, Wu RC, Ma TD (2012) Cluster synchronization in fractional-order complex dynamical networks. Phys Lett A 338(1):28–35

8. Zhou J, Chen T, Jiang L (2006) Robust synchronization of delayed neural networks based on adaptive control and parameters identification. Chaos Solitons Fract 27(4):905–913

9. Cheng CJ, Liao TL, Yan JJ, Hwang CC (2006) Exponential synchronization of a class of neural networks with time-varying delays. IEEE Trans Syst Man Cybern B 36(1):209–215

10. Liu YR, Wang ZD, Liang JL, Liu XH (2008) Synchronization and state estimation for discrete-time complex networks with distributed delays. IEEE Trans Syst Man Cybern B 38(5):1314–1325

11. Lin JS, Hung ML, Yan JJ, Liao TL (2007) Decentralized control for synchronization of delayed neural networks subject to dead-zone nonlinearity. Nonlinear Anal 67(6):1980–1987

12. Liu YR, Wang ZD, Liu XH (2008) On synchronization of coupled neural networks with discrete and unbounded distributed delays. Int J Comput Math 85(8):1299–1313

13. Li T, Fei SM, Zhang KJ (2008) Synchronization control of recurrent neural networks with distributed delays. Phys A 387(4):982–996

14. Cui B, Lou X (2009) Synchronization of chaotic recurrent neural networks with time-varying delays using nonlinear feedback control. Chaos Solitons Fract 39(1):288–294

15. Zhang HG, Wa TD, Huang GB, Wang ZL (2010) Robust global exponential synchronization of uncertain chaotic delayed neural networks via dual-stage impulsive control. IEEE Trans Syst Man Cybern B 40(3):831–844

16. Li XD, Bohner M (2010) Exponential synchronization of chaotic neural networks with mixed delays and impulsive effects via output coupling with delay feedback. Math Comput Model 52(5–6):643–653

17. Lu J, Ho DW, Cao J, Kurths J (2011) Exponential synchronization of linearly coupled neural networks with impulsive disturbances. IEEE Trans Neural Netw 22(2):329–36

18. Tu JJ, He HL (2012) Guaranteed cost synchronization of chaotic cellular neural networks with time-varying delay. Neural Comput 24(1):217–233

19. Gan Q (2013) Exponential synchronization of stochastic fuzzy cellular neural networks with reaction-diffusion terms via periodically intermittent control. Neural Process Lett 37(3):393–410

20. Song Q, Cao J (2011) Synchronization of nonidentical chaotic neural networks with leakage delay and mixed time-varying delays. Adv Differ Equ 16. doi:10.1186/1687-1847-2011-16

21. Yang XS, Wu ZY, Cao J (2013) Finite-time synchronization of complex networks with nonidentical discontinuous nodes. Nonlinear Dyn 73(4):2313–2327

22. Fang CC, Sun M, Li DD, Han D (2013) Asymptotically synchronization of a class of chaotic delayed neural networks with impulsive effects via intermittent control. Int J Nonlinear Sci 15(3):220–227

23. Wu HQ, Guo XQ, Ding SB, Wang LL, Zhang LY (2013) Exponential synchronization for switched coupled neural networks via intermittent control. J Comput Inform Syst 9(9):3503–3510

24. Xu YH, Zhou WN, Fang JA (2012) Adaptive synchronization of the complex dynamical network with double non-delayed and double delayed coupling. Int J Control Autom Syst 10(2):415–420

25. Cheng CJ, Liao TL, Yan JJ, Hwang CC (2005) Synchronization of neural networks by decentralized feedback control. Phys Lett A 338(1):28–35

26. Chen Y, Hamdi M, Tsang DHK (2001) Proportional QoS over OBS networks. In: Proceedings of Globecom 3:1510–1514

27. Chen Y, Qiao C (2003) Proportional differentiation: a scalable QoS approach. IEEE Commun Mag 41(6):52–58

28. Wei J, Xu C, Zhou X, Li Q (2006) A robust packet scheduling algorithm for proportional delay differentiation services. Comput Commun 29(18):3679–3690

29. Tan SK, Mohan G, Chua KC (2007) Feedback-based offset time selection for end-to-end proportion QoS provisioning in WDM optical burst switching networks. Comput Commun 304(4):904–921

30. Agarkhed J, Biradar GS, Mytri VD (2012) Energy efficient QoS routing in multi-sink wireless multimedia sensor networks. IJCSNS Int J Comput Sci Netw Sec 12(5):25–31

31. Kulkarm S, Sharma R, Mishra I (2012) New QoS routing algorithm for MPLS networks using delay and bandwidth constraints. Int J Inform Commun Technol Res 2(3):285–293

32. Liu J, Yuan DF, Ci S, Zhong YJ (2005) A new QoS routing optimal algorithm in mobile Ad Hoc network based on Hopfield neural network. Adv Neural Netw 3498:343–348

33. Gargi R, Chaba Y, Patel RB (2012) Improving the performance of dynamic source routing protocol by optimization of neural networks. IJCSI Int J Comput Sci Issue 9(4):471–479

34. Zhou L (2013) Delay-dependent exponential stability of cellular neural networks with multi-proportional delays. Neural Process Lett 38(3):347–359

35. Zhou L (2013) Dissipativity of a class of cellular neural networks with proportional delays. Nonlinear Dyn 73(3):1895–1903

36. Iserles A (1997) On neural functional-differential equation with proportional delays. J Math Anal Appl 207(1):73–95

37. Liu YK (1996) Asymptotic behavior of functional differential equations with proportional time delays. Eur J Appl Math 7(1):11–30

38. Van B, Marshall JC, Wake GC (2004) Holomorphic solutions to pantograph type equations with neural fixed points. J Math Anal Appl 295(2):557–569

39. Zhou L, Chen X, Yang Y (2014) Asymptotic stability of cellular neural networks with multi-proportional delays. Appl Math Comput 229:457–466

40. Zhou L (2014) Global asymptotic stability of cellular neural networks with proportional delays. Nonlinear Dyn 77(1):41–47

Acknowledgments

The author would like to thank the editor and the anonymous reviewers for their valuable comments and constructive suggestions. The Project is supported by the National Science Foundation of China (No. 61374009) and Tianjin Municipal Education commission (No. 20100813).

Cite this article

Zhou, L. Delay-Dependent Exponential Synchronization of Recurrent Neural Networks with Multiple Proportional Delays. Neural Process Lett 42, 619–632 (2015). https://doi.org/10.1007/s11063-014-9377-2

DOI: https://doi.org/10.1007/s11063-014-9377-2