Abstract

In this paper we investigate the operations of insertion and deletion performed at the ends of a string. We show that using these operations in a P systems framework (which corresponds to using specific variants of graph control), computational completeness can even be achieved with the operations of left and right insertion and deletion of only one symbol, both in the generating as well as in the accepting case.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The operations of left and right insertion and deletion that we consider in this article correspond to the operations of left and right concatenation and quotient with a finite language. While these operations are known for a long time, their joint investigation in a distributed framework originates from the area of natural computing, where they were used in the context of networks of evolutionary processors (NEP) (Castellanos et al. 2001). Such networks are a special type of networks of language processors (Csuhaj-Varjú and Salomaa 1997) that feature a set of (rewriting) nodes rewriting languages and after that redistributing some regular subsets between the nodes. In networks of evolutionary processors, the rewriting operations are replaced by three types of operations having a biological motivation (point mutations): insertion, deletion, and substitution. The corresponding systems are quite powerful and we refer to Dassow et al. (2011) for more details. The redistribution of the contents of a node is based on a regular condition, which is a very powerful operation. In accepting hybrid networks of evolutionary processors (AHNEP) as considered in Dassow and Manea (2010) and Margenstern et al. (2005a), this redistribution condition is replaced by random context conditions, and moreover, the set of operations is changed now including the operations of insertion and deletion at the extremities of the strings.

The operations of insertion and deletion at the extremities of a string can also be seen as a restricted case of the more general variant where insertion and deletion can be performed anywhere in the string. The insertion operation defined in such a way was first considered in Haussler (1982, 1983) and after that related insertion and deletion operations were investigated in Kari (1991) and Kari et al. (1997). Another generalization of the insertion and deletion operations that involves the checking of contexts for the insertion and deletion was considered with a linguistic motivation in Galiukschov (1981) and Marcus (1969) and with a biological motivation in Benne (1993), Biegler et al. (2007), Kari et al. (1997) and Păun et al. (1998). Generally, if the length of the contexts and/or of the inserted and deleted strings are big enough, then the insertion–deletion closure of a finite language leads to computational completeness. There are numerous results establishing the descriptional complexity parameters sufficient to achieve this goal, we refer to Verlan (2010a, b) for an overview of this area.

Some descriptional complexity parameters lead to variants that are not computationally complete. An investigation of insertion and deletion operations combined with regulating mechanisms was done for these cases, more precisely, with the graph-controlled, the matrix, and the random-context controls (Freund et al. 2010; Ivanov and Verlan 2011; Petre and Verlan 2010). As it was shown in these articles, in most of the cases the additional control leads to computational completeness. The graph-controlled regulation is of particular interest, as it can be related to the notion of P systems. Such systems formalize the functioning of a living cell that topologically delimits processing units by membranes, thus leading to a tree (or graph) structure of processing nodes. The elements processed in some node (membrane) then are distributed among the neighbors in the structure. We refer to Păun (2002) and Păun et al. (2010) and to the web page The P systems Web page (http://ppage.psystems.eu/) for more details on P systems. In the case of the operations of insertion and deletion acting on strings this directly corresponds to a graph control where the control nodes correspond to the membranes.

The research on context-free insertion and deletion (i.e., without contextual dependency) shows that if the lengths of the inserted and deleted strings are 2 and 3 (or 3 and 2), respectively, then the insertion–deletion closure of finite languages is computationally complete (Margenstern et al. 2005b). When one of these parameters is decreased, this result is not true anymore (Verlan 2007); moreover, even the graph-controlled variant cannot achieve computational completeness (Krassovitskiy et al. 2011). This changes when a graph control with appearance checking is used (Alhazov et al. 2011a) or in the case of a random context control (Ivanov and Verlan 2011). In both variants, minimal operations (involving only one symbol) were considered, leading to RE (the family of recursively enumerable languages) in the case of set-controlled random context conditions and to PsRE (the family of Parikh sets of RE) in the case of graph control with appearance checking.

We note that the operations of left and right insertion and deletion are incomparable with normal insertion and deletion: because of the positional information, the regular language a + b + can be obtained even with left and right insertions of only one symbol, yet not when insertions are possible at arbitrary positions in the string. On the other hand, the Dyck language cannot be obtained when insertion is only possible at the ends of the strings, while with normal insertion this can be done easily. In Alhazov et al. (2011a, 2012), left and right insertion and deletion operations (under the name of exo-insertion and -deletion) were considered in the P systems framework (i.e., with a graph control) and it was shown that systems with insertion of strings of length 2 (respectively 1) and deletion of strings of length 1 (respectively 2) lead to computational completeness. In the case of minimal insertion and deletion (i.e., of only one symbol), a priority of deletion over insertion (corresponding to an appearance check) was used to show computational completeness.

In this paper, which is an extended version of our paper (Freund et al. 2012) presented at the conference Unconventional Computation and Natural Computation 2012 in Orléans, we continue these investigations by considering P systems with minimal left and right insertion and deletion, and we prove that computational completeness can be achieved even in this case, this time showing computational completeness for both the generating case and the accepting case. We also directly show that matrix grammars using minimal left insertion and minimal right deletion rules are computationally complete (with matrices of length at most 3). Moreover, we prove that using additional minimal substitutions (substitutions of one symbol by another one) allows for reducing the height of the tree structure of the P system to the minimal possible size, i.e., to one. In addition, we show that we can even avoid the target here in the case of channel type P systems using minimal left and right insertion and deletion, where the applications of the rules can be interpreted as being carried out when objects (strings) are passing through a membrane, in the sense of molecules being modified when passing through a specific membrane channel from one membrane (region) to another one.

2 Preliminaries

After some preliminaries from formal language theory, we define the string rewriting rules to be used in this paper. As string rewriting systems, we will consider Post systems, matrix grammars, and sequential P systems. Moreover, we will give some examples and preliminary results to illustrate our definitions.

The set of non-negative integers is denoted by \({\mathbb{N}. }\) An alphabet V is a finite non-empty set of abstract symbols. Given V the free monoid generated by V under the operation of concatenation is denoted by \(V^{\ast }; \) the elements of \(V^{\ast }\) are called strings, and the empty string is denoted by \(\lambda ; V^{\ast }\setminus \left\{ \lambda \right\}\) is denoted by V +. Let \(\left\{ a_{1},\ldots,a_{n}\right\} \) be an arbitrary alphabet; the number of occurrences of a symbol a i in x is denoted by \(\left\vert x\right\vert _{a_{i}}\); the number of occurrences of all symbols from V in x is denoted by \(\left\vert x\right\vert\). The family of recursively enumerable string languages is denoted by RE. Two string languages are considered to be equal if they differ at most in the empty string. For more details of formal language theory the reader is referred to the monographs and handbooks in this area as Dassow and Păun (1989) and Rozenberg and Salomaa (1997).

We here consider string rewriting rules only working at the ends of a string (they can be seen as restricted variants of Post rewriting rules as already introduced by Emil Post in Post (1943)):

-

Simple Post rewriting rule \(P\left[ u{\$}x/y {\$}v\right] \) with \(u,x,y,v\in V^{\ast }\):

$$ P\left[ u {\$}x/y {\$}v\right] \left( uwx\right) =ywv \hbox{ for } w\in V^{\ast }. $$ -

Normal Post rewriting rule \(P\left[ x/y\right] \) with \(x,y\in V^{\ast }: P\left[ x/y\right] \left( wx\right) =yw\) for \(w\in V^{\ast }\).

-

Left substitution \(S_{L}\left[ u/y\right] \) with \(u,y\in V^{\ast }: S_{L}\left[ u/y\right] \left( uw\right) =yw\) for \(w\in V^{\ast }\).

-

Right substitution \(S_{R}\left[ x/v\right] \) with \(x,v\in V^{\ast }: S_{R}\left[ x/v\right] \left( wx\right) =wv\) for \(w\in V^{\ast }\).

For a simple Post rewriting rule \(P\left[ u {\$}x/y {\$}v\right] \) we also write \(u {\$}x\rightarrow y {\$}v\). A normal Post rewriting rule \(P\left[ x/y\right] \) is a special case of a Post rewriting rule \(P\left[ u {\$}x/y {\$}v\right] \) with u = v = λ (we here consider the mirror version \(P\left[ {\$}x/y {\$}\right] \) of the normal form rules \(P\left[ u {\$}/ {\$}v\right] \) as originally considered in Post (1943) for Post canonical systems; the variant we take in this paper has already been used several times for proving specific results in the area of P systems, e.g., see Freund et al. (2004). Left substitution \(S_{L}\left[ u/y\right] \) and right substitution \(S_{R}\left[ x/v\right] \) are special cases of simple Post rewriting rules as well, with x = v = λ and u = y = λ, respectively.

If in a (left or right) substitution \(S_{L}\left[ x/y\right] \) or \(S_{R}\left[ x/y\right]\,x\) is empty, then we also call it an insertion and write \(I_{L}\left[ y\right] \) and \(I_{R}\left[ y\right]\), respectively; if in a (left or right) substitution \(S_{L}\left[ x/y\right] \) or \(S_{R}\left[ x/y\right]\,y\) is empty, then we also call it a deletion and write \(D_{L}\left[ x\right] \) and \(D_{R}\left[ x\right]\), respectively. If we only insert one symbol a, then we will also write +a, a+, −a, and a− for \(I_{L}\left[ a\right], I_{R}\left[ a\right], D_{L}\left[ a\right]\), and \(D_{R}\left[ a\right]\), respectively. In general, we assume that any of these operations considered in this paper—(left or right) insertion, deletion, and substitution—at least involves one symbol.

A (string rewriting) grammar G of type X is a construct \(\left( V,T,A,P\right) \) where V is a (finite) set of symbols, \(T\subseteq V\) is a set of terminal symbols, \(A\in V^{*}\) is the axiom, and P is a finite set of rules of type X. Each rule \(p\in P\) induces a relation \(\Longrightarrow _{p}\subseteq V^{\ast }\times V^{\ast }; p\) is called applicable to a string \(x\in V^{\ast }\) if and only if there exists at least one string \(y\in V^{\ast }\) such that \(\left( x,y\right) \,\in \Longrightarrow _{p}\); we also write \(x\Longrightarrow _{p}y\). The derivation relation \(\Longrightarrow _{G} \) is the union of all \(\Longrightarrow _{p}\), i.e., \(\Longrightarrow _{G}= \cup _{p\in P}\Longrightarrow _{p}\). The reflexive and transitive closure of \(\Longrightarrow _{G}\) is denoted by \(\overset{\ast }{\Longrightarrow }_{G}\).

The language generated by G is the set of all terminal strings derivable from the axiom, i.e., \(L\left( G\right) =\left\{ v\in T^{\ast }\mid A\,{\Longrightarrow}^{\ast}_{G}\,v\right\}\). The language accepted by G is the set of all terminal strings deriving the axiom, i.e., \(L\left( G\right) =\left\{ v\in T^{\ast }\mid v{\Longrightarrow}^{\ast }_{G}\,A\right\}\). The family of languages generated (accepted) by grammars of type X is denoted by \(\mathcal{L}\left( X\right) (\mathcal{L}_{a}\left( X\right) )\).

Instead of a single axiom A we may also allow a finite set of axioms; in this case, we put an A in front of the type X for this variant of grammars thus obtaining the family of languages generated (accepted) by grammars of type X denoted by \(\mathcal{L}\left( A{\hbox{-}}X\right) (\mathcal{L}_{a}\left( A{\hbox{-}}X\right) )\).

In general, we write S k,m L for a type of grammars using only substitution rules \(S_{L}\left[ x/y\right] \) with \(\left\vert x\right\vert \leq k\) and \(\left\vert y\right\vert \leq m\). In the same way, we define the type S k,m R for a type of grammars using only substitution rules \(S_{R}\left[ x/y\right] \) with \(\left\vert x\right\vert \leq k\) and \(\left\vert y\right\vert \leq m, \) as well as the types I m L , I m R , D k L , and D k R , respectively. The type D k I m allows for the deletion of strings with length ≤ k and for the insertion of strings with length ≤ m on either side of a string. If, in addition, we also allow substitutions \(S_{L}\left[ x/y\right] \) and \(S_{R}\left[ x/y\right] \) with \(\left\vert x\right\vert \leq k^{\prime }\) and \(\left\vert y\right\vert \leq m^{\prime }\), we get the type \(D^{k}I^{m}S^{k^{\prime }m^{\prime }}; \) we observe that the type \(D^{k}I^{m}S^{k^{\prime }m^{\prime }}\) is subsumed by the type \(S^{k^{\prime }m^{\prime }}\) if \(k\leq k^{\prime }\) and \(m\leq m^{\prime }\). If we allow the parameters k and/or m to be arbitrarily large, we just omit them, e.g., DI is the type allowing to use deletions and insertions of strings of arbitrary lengths.

Example 1

Let \(G=\left( V,T,A,P\right) \) be a regular grammar, i.e., the rules in P are of the form \(A\rightarrow bC\) and \(A\rightarrow \lambda \) with \(A,C\in V\setminus T\) and \(b\in T. \) Then the grammar \(G^{\prime }=\left( V,T,A,\left\{ S_{R}\left[ A/y\right] \mid A\rightarrow y\in P\right\} \right) \) with substitution rules generates the same language as G, i.e., \(L\left( G^{\prime }\right) =L\left( G\right)\). Hence, with REG denoting the family of regular languages, we obviously have got \(REG\subseteq \mathcal{L}\left( S_{R}^{1,2}\right)\).□

It is not difficult to check that grammars of type D 1 I 1 S 1,1 have a rather limited computational power. Indeed, we can show the following representation of languages generated or accepted by grammars of type D 1 I 1 S 1,1:

Theorem 1

Every language \(L\subseteq T^{\ast }\) in \(\mathcal{L}\left( D^{1}I^{1}S^{1,1}\right) \) can be written in the form \(T_{l}^{\ast }ST_{r}^{\ast }\) where \(T_{l},T_{r}\subseteq T\) and S is a finite subset of \(T^{\ast }\).

Proof

Let \(G=\left( V,T,A,P\right) \) be a grammar of type D 1 I 1 S 1,1 and let \(N:=V\setminus T\). We first construct the start set S as follows: Consider all possible derivations in G from A with only using substitutions and deletions, but without loops, i.e., no string is allowed to appear more than once in such a derivation, which means that all these derivations are of bounded length (bounded by the number of strings over V of length at most \(\left\vert A\right\vert \)). Then S consists of all terminal strings obtained in this way (finding these strings is a finitely bounded process, as to each of the possible strings over V of length at most \(\left\vert A\right\vert\), at most \(\left\vert P\right\vert \) rules can be applied). A symbol from N remaining inside a string blocks that string from ever becoming terminal by applying rules from P, and deletion of a symbol can be avoided by just not introducing the symbol which by a sequence of minimal substitutions would lead to the symbol to be deleted. Hence, for constructing the sets T l (T r , respectively) we can restrict ourselves to the terminal symbols b either directly inserted by minimal insertion rules \(I_{l}\left[ b\right] \) (\(I_{r}\left[ b\right]\), respectively) or obtained by a sequence of one minimal insertion together with a bounded (by \(\left\vert V\right\vert \)) number of minimal substitutions \(S_{l}\left[ a/b\right] (S_{r}\left[ a/b\right]\), respectively).

Therefore, in sum \(L\left( G\right) \) can be written as the finite union of languages generated by grammars of type I 1, i.e., \(L\left( G\right) =\cup _{w\in S}L\left( G_{w}\right) \) where

which yields the desired form \(T_{l}^{\ast }ST_{r}^{\ast }\) for describing \(L\left( G\right)\).□

Corollary 1

\(\mathcal{L}\left( A{\hbox{-}}D^{1}I^{1}S^{1,1}\right) =\mathcal{L}\left( A{\hbox{-}}I^{1}\right) =\mathcal{L}_{a}\left( A{\hbox{-}}D^{1}I^{1}S^{1,1}\right) =\mathcal{L}_{a}\left( A{\hbox{-}}D^{1}\right).\)

Proof

The representation of languages in \(\mathcal{L}\left( D^{1}I^{1}S^{1,1}\right) \) elaborated in the preceding proof means that for the type D 1 I 1 S 1,1 we could forget minimal deletions and substitutions and instead consider finite subsets of axioms instead of a single axiom, i.e., we have proved that \(\mathcal{L}\left( A{\hbox{-}}D^{1}I^{1}S^{1,1}\right) =\mathcal{L}\left( A{\hbox{-}}I^{1}\right)\).

Similar arguments as outlined for the generating case immediately show that

as well, because in the accepting case insertions have the same effect as deletions in the generating case and vice versa, i.e., using \(D_{\alpha }[b], \alpha \in \left\{ l,r\right\}\), in the accepting grammar means using \(I_{\alpha }\left[ b\right] \) in the corresponding generating grammar.□

2.1 Post systems

A simple/normal Post system is a grammar using only simple/normal Post rewriting rules, i.e., is a grammar of type SPS / NPS. A Post system \(\left( V,T,A,P\right) \) is said to be in normal form (a grammar of type PSNF) if and only if the Post rewriting rules \(P\left[ x/y\right] \) in P are only of the forms \(P\left[ ab/c\right], P\left[ a/bc\right], P\left[ a/b\right], \) and \(P\left[ a/\lambda \right]\), with \(a,b,c\in V\). A Post system \(\left( V,T,A,P\right) \) is said to be in Z-normal form (a grammar of type PSZNF) if and only if it is in normal form, \(A\in V\setminus T\) and, moreover, there exists a special symbol \(Z\in V\setminus T\) such that

-

Z appears only once in the string x of a Post rewriting rule \(P\left[ x/y\right]\), and this rule is \(P\left[ Z/\lambda \right]\);

-

if the rule \(P\left[ Z/\lambda \right] \) is applied, the derivation in the Post system stops yielding a terminal string;

-

a terminal string can only be obtained by applying the rule \(P\left[ Z/\lambda \right]\).

Basic results concerning Post systems are folklore since many years, e.g., see Minsky (1967). For the accepting case we have the following characterizations of RE:

Theorem 2

For every recursively enumerable language \(L\subseteq T^{\ast }\) there exists a simple Post rewriting system \(G, G=\left( V,T,A,P\right)\), accepting L, i.e., \(\mathcal{L}_{a}\left( SPS\right) =RE\).

As having an idea how the simulations of the actions of a Turing machine by an accepting grammar and a Post system work may help for a better understanding of the proofs elaborated in the following sections, we sketch a special proof for the following well-known result:

Theorem 3

For every recursively enumerable language \(L\subseteq T^{\ast }\) there exists a Post rewriting system in normal form \(G^{\prime }, G^{\prime }=\left( V^{\prime },T,A^{\prime },P^{\prime }\right)\), such that \(G^{\prime }\) accepts \(L\left\{ Z\right\}\), where \(Z\in V^{\prime }\setminus T\).

Proof

Let \(M=\left( Q, V, T, Z_{0}, B, \delta, q_{0}, q_{f}\right) \) be a Turing machine accepting L with Q being the set of states, V the tape alphabet, \(T\subset V\) the terminal alphabet, \(Z_{0}\in V\) the left end marker, \(B\in V\) the blank symbol, δ the transition function, q 0 and q f the initial and final state, respectively. A configuration of M may be represented as a string from \(\left\{ Z_{0}\right\} V^{\ast }QV^{\ast }\left\{ Z_{1}\right\} \) where Z 1 is the right end marker (a new symbol not in V) and the symbol to the left of the state symbol from Q is the current symbol to be read by M. Without loss of generality, we may assume that the transitions of M are either (i) rewriting a symbol, (ii) going one step to the left or (iii) going one step to the right on the tape. Moreover, we assume that, given the input \(w\in T^{\ast }, M\) accepts w if and only if it halts with the final configuration represented by Z 0 q f Z 1. For M, we now construct a grammar \(G^{\prime \prime }=\left( V^{\prime \prime },T,A^{\prime \prime },P^{\prime \prime }\right) \) accepting \(\left\{ Z_{0}q_{0}\right\} L\left\{ Z_{1}\right\} \) as follows:

\(V^{\prime \prime }=Q\cup Q^{\prime }\cup \left\{ Z_{1}\right\} \cup V,\, A^{\prime \prime }=Z_{0}q_{f}^{\prime }\), and \(P^{\prime \prime }\) contains the following grammar rules obtained by translating the transition rules of M:

-

rewriting a symbol \(X\in V\setminus \left\{ Z_{0}\right\} \) to \(Y\in V\setminus \left\{ Z_{0}\right\} \) going from state q to p is simulated by the rule \(Xq\rightarrow Yp\);

-

going one step to the left is simulated by the rule \(Xq\rightarrow pX, X\in V\setminus \left\{ Z_{0}\right\}\);

-

going one step to the right is simulated by the rule \(qX\rightarrow Xp, X\in V\), in \(P^{\prime \prime};\) intending to go to the right of the right end marker Z 1 can only happen when trying to simulate a rule \(qB\rightarrow Bp, q\neq q_{f}\); the simulation then has to be carried out by inserting an additional blank symbol, i.e., by the rules \(qZ_{1}\rightarrow p^{\prime }Z_{1}\) and \(p^{\prime }\rightarrow Bp; \)

-

the final configuration Z 0 q f Z 1 of M may be represented in \(G^{\prime \prime }\) by any string Z 0 q f B n Z 1 for n ≥ 0; hence, we add the rules \(q_{f}B\rightarrow q_{f}\) and \(q_{f}Z_{1}\rightarrow q_{f}^{\prime }\).

Using the well-known technique of “simulate and rotate”, from the accepting grammar \(G^{\prime \prime }=\left( V^{\prime \prime },T,A^{\prime \prime },P^{\prime \prime }\right)\) we can easily obtain a Post system in normal form \(G^{\prime }=\left( V^{\prime },T,A^{\prime },P^{\prime }\right) \) accepting \(L\left\{ Z\right\} : V^{\prime }=V^{\prime \prime }\cup Q\times V\cup V\times Q\cup \left\{ Z,Z^{\prime }\right\}, \,A^{\prime \prime }=A^{\prime }=Z_{0}q_{f}^{\prime }\), and the rules in \(P^{\prime }\) are obtained as follows: as we can only act on the right-hand side of a string with the result of the application of a rule being put to the left-hand side, we add the rotation rules \( {\$}X \rightarrow X {\$}\) for all \(X\in V^{\prime \prime }\setminus \left\{ q_{f}^{\prime }\right\}\); the initial configuration is obtained by the rules \( {\$}Z\rightarrow Z_{1}Z^{\prime } {\$} \) and \( {\$}Z^{\prime }\rightarrow Z_{0}q_{0} {\$} \); a rewriting rule \(Xq\rightarrow Yp\) is simulated by the rules \( {\$}Xq\rightarrow \left( Y,p\right) {\$} \) and \( {\$}\left( Y,p\right) \rightarrow Yp {\$}; \) the rule \(Xq\rightarrow pX\) is simulated by the rules \( {\$}Xq\rightarrow \left( p,X\right) {\$} \) and \( {\$}\left( p,X\right) \rightarrow pX {\$} \); the rule \(qX\rightarrow Xp, X\in V\), is simulated by the rules \( {\$}qX\rightarrow \left( X,p\right) {\$} \) and \( {\$}\left( X,p\right) \rightarrow Xp {\$}; \) the rule \(qZ_{1}\rightarrow p^{\prime }Z_{1}\) is simulated by the rules \( {\$}qZ_{1}\rightarrow \left( p^{\prime },Z_{1}\right) {\$} \) and \( {\$}\left( p^{\prime },Z_{1}\right) \rightarrow p^{\prime }Z_{1} {\$} \) as well as \(p^{\prime }\rightarrow Bp\) by \( {\$}p^{\prime }\rightarrow Bp {\$} \); finally, the rule \(q_{f}B\rightarrow q_{f}\) is simulated by \( {\$}q_{f}B\rightarrow q_{f} {\$} \) and \(q_{f}Z_{1}\rightarrow q_{f}^{\prime }\) by \( {\$}q_{f}Z_{1}\rightarrow q_{f}^{\prime } {\$} \). As this construction shows, in the accepting case we do not need rules of the form \( {\$} X\rightarrow {\$} \).□

For the proof of our main theorem we need the special Z-normal form; the following result is an immediate consequence of the proof given for Lemma 1 in Freund et al. (2004):

Theorem 4

For every recursively enumerable language \(L\subseteq T^{\ast }\) there exists a Post rewriting system \(G, G=\left( V,T,A,P\right)\), in Z -normal form such that \(L\left( G\right) =L, \) i.e., \(\mathcal{L}\left( SPS\right) =\mathcal{L}\left( PSNF\right) =\mathcal{L}\left( PSZNF\right) =RE\).

2.2 Matrix grammars

A matrix grammar of type X is a construct \(G_{M}=\left( G,M\right) \) where \(G=\left( V,T,A,P\right) \) is a grammar of type X, M is a finite set of sequences of the form \(\left( p_{1},\dots, p_{n}\right) \), n ≥ 1, of rules in P. For \(w,z\in V^{\ast }\) we write \(w\Longrightarrow _{G_{M}}z\) if there are a matrix \(\left( p_{1},\dots, p_{n}\right) \) in M and strings \(w_{i}\in V^{\ast },\) 1 ≤ i ≤ n + 1, such that w = w 1, z = w n+1, and, for all \(1\leq i\leq n, w_{i}\Longrightarrow _{G}w_{i+1}\). The maximal length n of a matrix \(\left(p_{1},\dots, p_{n}\right) \in M\) is called the degree of G M .

\(L(G_{M})=\left\{ v\in T^{\ast }\mid A\Longrightarrow _{G_{M}}^{\ast }v\right\}\) is the language generated by G M . The family of languages generated by matrix grammars of type X (of degree at most n) is denoted by \(\mathcal{L}\left( X{\hbox{-}}MAT\right) \) \((\mathcal{L}\left( X{\hbox{-}}MAT_{n}\right) )\).

Theorem 5

\(\mathcal{L}\left( D^{2}I^{2}{\hbox{-}}MAT_{2}\right) =\mathcal{L}\left( D^{1}I^{1}{\hbox{-}}MAT_{3}\right) =\mathcal{L}\left( PSNF\right) =RE\).

Proof

From Theorem 4 we know that \(\mathcal{L}\left(PSNF\right) =RE\), hence, we will only show that for every Post system G = (V, T, A, P) in normal form we are able to construct equivalent matrix grammars G 1 = (G, M 1) and G 2 = (G, M 2) of type D 2 I 2 and of type D 1 I 1, respectively:

As each rule in G is directly simulated by a matrix in M 1 and in M 2, respectively, we immediately infer \(L\left( G\right) =L\left( G_{1}\right) =L\left( G_{2}\right)\).□

Whereas the matrices in M 1 are only of length 2, the degree of M 2 is 3; it remains as an open question whether also with rules of type D 1 I 1 we could decrease the degree to 2 or not; we conjecture that the answer is no. As we have shown in Theorem 1, with grammars using rules of type D 1 I 1 S 1,1 we are not able to obtain RE, we even remain below the regular language class; hence, we need such regulating mechanisms as matrices to reach computational completeness.

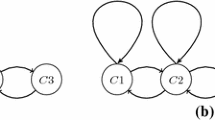

2.3 P systems

We now introduce another variant to guide the derivations in a grammar using rules of those types introduced above, especially minimal left and right substitution rules.

A (sequential) P system of type X with tree height n is a construct \(\Uppi =\left( G,\mu,R,i_{0}\right) \) where \(G=\left( V,T,A,P\right) \) is a grammar with rules of type X and

-

μ is the membrane (tree) structure of the system with the height of the tree being n (μ usually is represented by a string containing correctly nested marked parentheses); we assume the membranes to be the nodes of the tree representing μ and to be uniquely labeled by labels from a set Lab; the number of membranes in μ usually is called the degree of μ (and the degree of \(\Uppi \) itself, too);

-

R is a set of rules of the form \(\left( h,r,tar\right) \) where \(h\in Lab, r\in P\), and tar is called the target (indicator) and is taken from the set \(\left\{ here,in,out\right\} \cup \left\{ in_{j}\mid 1\leq j\leq n\right\}\); the rules assigned to membrane h form the set \( R_{h}=\left\{ \left( r,tar\right) \mid \left( h,r,tar\right) \in R\right\}, \)i.e., R can also be represented by the vector \(\left( R_{h}\right) _{h\in Lab}\);

-

i 0 is the initial membrane where the axiom A is put at the beginning of a computation.

As we only have to follow the trace of a single string during a computation of the P system, a configuration of \(\Uppi\) can be described by a pair \(\left( w,h\right) \) where w is the current string and h is the label of the membrane currently containing the string w. For two configurations \(\left( w_{1},h_{1}\right) \) and \(\left( w_{2},h_{2}\right) \) of \(\Uppi \) we write \(\left( w_{1},h_{1}\right) \Longrightarrow _{\Uppi }\left( w_{2},h_{2}\right) \) if we can pass from \(\left( w_{1},h_{1}\right) \) to \(\left( w_{2},h_{2}\right) \) by applying a rule \(\left( h_{1},r,tar\right) \in R, \) i.e., \(w_{1}\Longrightarrow _{r}w_{2}\) and w 2 is sent from membrane h 1 to membrane h 2 according to the target indicator tar. More specifically, if tar = here, then h 2 = h 1; if tar = out, then the string w 2 is sent to the membrane h 2 immediately outside membrane h 1; if \( tar=in_{h_{2}} \), then the string is moved from membrane h 1 to the membrane h 2 immediately inside membrane h 1; if tar = in, then the string w 2 is sent to one of the membranes immediately inside membrane h 1.

A sequence of transitions between configurations of \(\Uppi\), starting from the initial configuration \(\left( A,i_{0}\right)\), is called a computation of \(\Uppi\). A halting computation is a computation ending with a configuration \(\left( w,h\right)\) such that no rule from R h can be applied to w anymore; w then is called the result of this halting computation if \(w\in T^{\ast }\). \(L\left( \Uppi \right)\), the language generated by \(\Uppi\), consists of all strings over T which are results of a halting computation in \(\Uppi\).

By \(\mathcal{L}\left( X{\hbox{-}}LP\right) (\mathcal{L}\left( X{\hbox{-}}LP^{\left\langle n\right\rangle }\right) )\) we denote the family of languages generated by P systems (of tree height at most n) using rules of type X. If only the targets here, in, out are used, then the P system is called simple, and the families of languages are denoted by \(\mathcal{L}\left( X{\hbox{-}}LsP\right) \) (\(\mathcal{L}\left( X{\hbox{-}}LsP^{\left\langle n\right\rangle }\right) \)). If even only the targets in and out are used, then the P system is called a channel type P system, as any change taking place in such a P system can be interpreted as only happening when an object (a string) passes through a membrane; the corresponding families of languages are denoted by \(\mathcal{L}\left( X{\hbox{-}}LcP\right) (\mathcal{L}\left( X{\hbox{-}}LcP^{\left\langle n\right\rangle }\right) )\).

For the accepting case, i 0 is the initial membrane where the axiom A together with the input \(w\in T^{\ast }\) is put as wA at the beginning of a computation, and the input w is accepted if and only if there exists a halting computation from the initial configuration \(\left( wA,i_{0}\right) \). In contrast to the accepting variant of sequential grammars, in the case of P systems we do not care about the contents of the membranes at the end of a halting computation. By \(\mathcal{L}_{a}\left( X{\hbox{-}}LP\right), \mathcal{L}_{a}\left( X{\hbox{-}}LsP\right)\), and \(\mathcal{L}_{a}\left( X{\hbox{-}}LcP\right) (\mathcal{L}_{a}\left( X{\hbox{-}}LP^{\left\langle n\right\rangle }\right), \mathcal{L}_{a}\left( X{\hbox{-}}LsP^{\left\langle n\right\rangle }\right)\), and \(\mathcal{L}_{a}\left( X{\hbox{-}}LcP^{\left\langle n\right\rangle }\right) \)) we then denote the families of languages accepted by P systems, simple P systems, and channel type P systems (of tree height at most n) using rules of type X.

Example 2

Let \(\Uppi =\left( G,\left[ {_{1}}\left[ {_{2}}\right] {_{2}}\left[{_{3}}\right] {_{3}}\right] {_{1}},R,1\right) \) be a P system of type I 1 R I 1 L with

The computations in \(\Uppi \) start with a in membrane 1. In general, starting with a string a n+1 b n, n ≥ 0, in membrane 1, we may either add b on the right-hand side by the rule \(\left( 1,I_{R}\left[ b\right], in\right)\), getting a n+1 b n+1 as the terminal result in the elementary membrane 3 (a membrane is called elementary if and only if it contains no inner membrane) or else go into membrane 2, from there going out again into membrane 1 with adding another a thus obtaining a n+2 b n+1. These considerations show that the language generated by \(\Uppi \) is \(\left\{ a^{n+1}b^{n+1}\mid n\geq 0\right\}\).

The P system \(\Uppi ^{\prime }=\left( G,\left[ {_{1}}\left[ {_{2}}\right] {_{2}}\left[ {_{3}}\left[ {_{4}}\left[ {_{5}}\right] {_{5}}\right] {_{4}}\right] {_{3}}\right] {_{1}},R,2\right) \) of type D 1 R D 1 L I 1 R with

accepts the same language: traveling between membranes 2 and 1 allows for deleting a symbol a on the left-hand side and a symbol b on the right-hand side. The last symbol b is deleted by going into membrane 3. The rules with the trap symbol # guarantee that an infinite loop, finally looping between membranes 4 and 5, is entered whenever the input was not of the form a n+1 b n+1, n ≥ 0.

Hence, in sum we have shown

i.e., \(\mathcal{L}\left( I_{R}^{1}I_{L}^{1}{\hbox{-}}LsP^{\left\langle 1 \right\rangle }\right) \) as well as \(\mathcal{L}_{a}\left( D_{R}^{1}D_{L}^{1}I_{R}^{1}{\hbox{-}}LsP^{\left\langle 3\right\rangle }\right)\) contain a non-regular language.□

Example 3

Let \(\Uppi =\left( G,\left[ {_{1}}\left[ {_{2}}\right] {_{2}}\left[ {_{3}}\right] {_{3}}\left[ {_{4}}\right] {_{4}}\right] {_{1}},R,1\right) \) be a P system of type D 1 R I 2 L with

The computations in \(\Uppi \) start with aB in membrane 1. In general, starting with a string \(a^{{2}^{n}}B,\) n ≥ 0, in membrane 1, we may either delete B by the rule \(\left( 1,D_{R}\left[ B\right],in_{4}\right) \), getting \(a^{{2}^{n}}\) as the terminal result in the elementary membrane 4 or delete B by the rule \(\left( 1,D_{R}\left[ B\right],in_{3}\right). \) With the string \(a^{{2}^{n}}\) arriving in membrane 3, we get \(Ba^{{2}^{n}}\) in membrane 1 by the rule \(\left( 3,I_{L}\left[ B\right],out\right)\). Now we double the number of symbols a by applying the sequence of rules \(\left( 1,D_{R}\left[ a\right],in_{2}\right) \) and \(\left( 2,I_{L}\left[ aa\right],out\right)\, 2^{n}\) times, finally obtaining \(a^{{2}^{n+1}}B\). In sum we get \(L\left( \Uppi \right) =\left\{ a^{2^{n}}\mid n\geq 0\right\} \) for the language generated by the P system \(\Uppi\); hence, we have shown that

i.e., \(\mathcal{L}\left( D_{R}^{1}I_{L}^{2}{\hbox{-}}LP^{\left\langle 1\right\rangle }\right) \) contains a non-context-free language.□

3 Computational completeness of P systems with minimal substitution rules

In this section we consider several variants of P systems with substitution rules of minimal size, the main result showing computational completeness for simple P systems with rules of type D 1 I 1. Yet first we show that for any recursively enumerable language we can construct a P system, with the height of the tree structure being only 1 (which is the minimum possible according to Theorem 1, as the grammars considered there correspond to P systems with only one membrane, i.e., with tree height zero), of type D 1 R I 1 L S 1,1 R , i.e., using minimal left insertions and minimal right deletions and substitutions.

Theorem 6

\(RE=\mathcal{L}\left( D_{R}^{1}I_{L}^{1}S_{R}^{1,1}{\hbox{-}}LP^{\left\langle 1\right\rangle }\right) =\mathcal{L}_{a}\left( D_{R}^{1}I_{L}^{1}S_{R}^{1,1}{\hbox{-}}LP^{\left\langle 1\right\rangle }\right)\).

Proof

From Theorem 4 we know that \(\mathcal{L}\left( PSZNF\right) =RE\), hence, we first show that for every Post system \(G=\left( V,T,A,P\right)\) in Z-normal form we are able to construct an equivalent P system \(\Uppi \) of type D 1 R I 1 L S 1,1 R . We assume that the rules in P are labeled in a unique way by labels from a finite set Lab with \(1\notin Lab\) and \(z\in Lab\). We now construct a P system \(\Uppi\), \(\Uppi =\left( G^{\prime },\mu,R,1\right)\), with a flat tree structure μ of height 1, i.e., with the outermost membrane (the so-called skin membrane) being labeled by 1, and all the other membranes being elementary membranes inside the skin membrane being labeled by labels from

\(G^{\prime }=\left( V^{\prime },T,A,P^{\prime }\right), V^{\prime }=\left\{ x,\bar{x}^{l}\mid x\in V,l\in Lab\right\} \cup \left\{ \#\right\}\), and \(P^{\prime }\) contains the minimal left insertion, right deletion, and right substitution rules contained in the rules of R as listed in the following:

the additional membrane # is used to trap all computations not leading to a terminal string in an infinite loop by the rules \(\left( 1,I_{L}\left[ \#\right],in_{\#}\right) \) and \(\left( \#,I_{L}\left[ \#\right],out\right)\); for this purpose, the rule \(\left( h,I_{L}\left[ \#\right],out\right) \) is used in case of \(h:P\left[ a_{h}b_{h}/c_{h}\right]\), too. Due to the features of the underlying Post system in Z-normal form, all terminal strings from \(L\left( G\right) \) can be obtained as final results of a halting computation in the elementary membrane z, whereas all other possible computations in \(\Uppi \) never halt, finally being trapped in an infinite loop guaranteed by the rules leading into and out from membrane #. Hence, in sum we get \(L\left( \Uppi \right) =L\left( G\right)\).

For the accepting case, in a similar way we construct a P system \(\Uppi ^{\prime }\) of type D 1 R I 1 L S 1,1 R equivalent to a Post system \(G=\left( V,T,Z_{0}q_{f}^{\prime },P\right) \) in normal form constructed for a given recursively enumerable language according to the proof outlined for Theorem 3. \(\Uppi ^{\prime }=\left( G^{\prime \prime },\mu ^{\prime \prime },R^{\prime },z\right) \) practically has the same ingredients as the P system \(\Uppi \) described above, with two main exceptions: we now start a computation with the input word put into membrane z and instead of \(\left( D_{R}\left[ Z\right],in_{z}\right) \) take the rule \(\left( I_{R}\left[ Z\right],out\right)\); moreover, we take an additional membrane f to finish the simulation of an accepting derivation in G having lead to the final string \(Z_{0}q_{f}^{\prime }. \) According to the proof outlined for Theorem 3, we now may even omit the rules for simulating rules of the form \(P\left[ a_{h}/\lambda \right]. \) Hence, for the labels of the membranes inside the skin membrane we take

moreover, \(G^{\prime \prime }=\left( V^{\prime \prime },T,\lambda, P^{\prime \prime }\right), V^{\prime \prime }=\left\{ x,\bar{x}^{l}\mid x\in V,l\in Lab\right\} \cup \left\{ \#\right\}\), and \(P^{\prime \prime }\) contains the minimal left insertion, right deletion, and right substitution rules contained in the rules of \(R^{\prime }\) as listed in the following:

as well as \(\left( 1,I_{L}\left[ \#\right],in_{\#}\right), \left( \#,I_{L}\left[ \#\right],out\right),\) and \(\left( D_{R}\left[ q_{f}^{\prime }\right],in_{f}\right) \) as the additional rules.□

Summarizing the results of Theorems 1 and 6, we get:

Corollary 2

For all n ≥ 1,

If we want to restrict ourselves to the simple targets here, in, out and as well to use only minimal insertions and deletions, then we have to use a more difficult proof technique than in the proof of Theorem 6.

Theorem 7

\(\mathcal{L}\left( D^{1}I^{1}{\hbox{-}}LsP^{\left\langle 8\right\rangle }\right) =\mathcal{L}_{a}\left( D^{1}I^{1}{\hbox{-}}LsP^{\left\langle 8\right\rangle }\right) =RE\).

Proof

In order to show the inclusion \(RE\subseteq \mathcal{L}\left( D^{1}I^{1}{\hbox{-}}LsP^{\left\langle 8\right\rangle }\right)\), as in the proof of Theorem 6 we start from a Post system \(G=\left( V,T,A,P\right) \) in Z-normal form with assuming the rules in P to be labeled in a unique way by labels from a finite set Lab with \(1\notin Lab\) and \(z\in Lab\) and construct an equivalent simple P system \(\Uppi, \Uppi =\left( G^{\prime },\mu,R,1\right)\), of type D 1 I 1, with \(G^{\prime }=\left( V^{\prime },T,A,P^{\prime }\right) \) and

as follows:

The membrane structure μ consists of the skin membrane 1 as well as of linear inner structures needed for the simulation of the rules in G:

-

For every rule \(h:P\left[ a_{h}b_{h}/c_{h}\right] \) and every rule \(h:P\left[ a_{h}/b_{h}c_{h}\right] \) in P we need a linear structure of 8 membranes

$$ \left[ {_{\left( h,1\right) }}\left[ {_{\left( h,2\right) }}\ldots\left[ {_{\left( h,8\right) }} \right] {_{\left( h,8\right) }}\ldots\right] {_{\left( h,2\right) }}\right] {_{\left( h,1\right) }}; $$ -

for every rule \(h:P\left[ a_{h}/b_{h}\right] \) and every rule \(h:P\left[ a_{h}/\lambda \right] \) in P we need a linear structure of 6 membranes

$$ \left[ {_{\left( h,1\right) }}\left[ {_{\left( h,2\right) }}\ldots\left[ {_{\left( h,6\right) }} \right] {_{\left( h,6\right) }}\ldots\right] {_{\left( h,2\right) }}\right] {_{\left( h,1\right) }}; $$ -

for getting the terminal results by erasing the symbol Z, we need the linear structure of 3 membranes

$$ \left[ {_{\left( z,1\right) }}\left[ {_{\left( z,2\right) }}\left[ {_{\left( z,3\right) }}\right] {_{\left( z,3\right) }} \right] {_{\left( z,2\right) }}\right] {_{\left( z,1\right) }}. $$

The simulations of the rules from P are accomplished by the procedures as shown in Tables 1, 2, 3, 4 and 5, where the columns have to be interpreted as follows: in the first column, the membrane (label) h is listed, in the second one only the rule \(p\in P\) is given, which in total describes the rule \(\left( h,p,in\right) \in R\), whereas the rule p in the fifth column has to be interpreted as the rule \(\left( h,p,out\right) \in R\); the strings in the third and the fourth column list the strings obtained when going up in the linear membrane structure with the rules \(\left( h,p,in\right) \) from column 2 and going down with the rules \(\left( h,p,out\right) \) from column 5, respectively. The symbol F cannot be erased anymore, hence, whenever F has been introduced at some moment, the computation will land in an infinite loop with only introducing more and more symbols F.

The main idea of the proof is that we choose the membrane to go into by the rule \(\left( 1,K+,in\right) \) in a non-deterministic way. The goal is to reach the terminal membrane \(\left( z,3\right) \) by starting with a string \(wZ, w\in T^{\ast }\), from the skin membrane (Table 1).

Tables 2, 3, 4 and 5 are to be interpreted in the same way as above; yet mostly we only list the results of correct simulations in column 4 and omit the results of adding the trap symbol F. Moreover, the rule D− in the skin membrane is the only one in the whole system which uses the target here, i.e., it has to be interpreted as \(\left( 1,D-,here\right)\).

From the descriptions given in Tables 2, 3, 4 and 5, it is easy to see how a successful simulation of a rule \(h:P\left[ x_{h}/y_{h}\right] \in P\) works. If we enter a membrane \(\left( h,1\right) \) with a string v not being of the form ux h , then at some moment the only chance will be to use F+, introducing the trap symbol F which cannot be erased anymore and definitely leads to a non-halting computation. The additional symbols D, E, H, J, M intermediately introduced on the right-hand side of the string guarantee that loops inside the linear membrane structure for the simulation of a rule \(h:P\left[ x_{h}/y_{h}\right] \in P\) cannot lead to successful computations as well. In sum, we conclude \(L\left( \Uppi \right) =L\left( G\right)\).

As in the proof of Theorem 6, the construction for an accepting P system \(\Uppi ^{\prime }\) is only slightly different from what has already been discussed above:

The initial input string is put into membrane \(\left( z,3\right) \) in the linear structure of 3 membranes \(\left[ {_{\left( z,1\right) }}\left[{_{\left( z,2\right) }}\left[ {_{\left( z,3\right) }}\right]{_{\left( z,3\right) }}\right]{_{\left( z,2\right) }}\right] {_{\left( z,1\right) }}, \) which allows us to insert a symbol Z on the right-hand side of the input string (Table 6).

Only the last two columns describe the computations to happen at the beginning, whereas whenever a string v gets back into membrane \(\left( z,1\right) \) from membrane 1 by using the rule K+ there, we end up with introducing the trap symbol F.

Checking for the final string \(Z_{0}q_{f}^{\prime }\) is accomplished in the additional linear structure of 3 membranes \(\left[ {_{\left( f,1\right) }}\left[{_{\left( f,2\right) }}\left[{_{\left( f,3\right) }}\right]{_{\left( f,3\right) }}\right] {_{\left( f,2\right) }}\right] {_{\left( f,1\right) }}\) (Table 7).

For any \(v\neq Z_{0}q_{f}^{\prime }\) we return back to membrane 1 with vFF, thus finally ending up in an infinite loop. The remaining ingredients for \(\Uppi ^{\prime }\) are exactly the same as for \(\Uppi \) in the generating case, except that we need not take into account rules of the form \(h:P\left[ a/\lambda \right]\). Hence, we have shown \(RE\subseteq \mathcal{L}_{a}\left( D^{1}I^{1}{\hbox{-}}LsP^{\left\langle 8\right\rangle }\right)\), too.□

Due to the matrix-like membrane structure of the simple P systems constructed in the preceding proof, we could obtain the computational completeness of matrix grammars of type D 1 I 1 as an obvious consequence of Theorem 7, yet the direct transformation of the construction given in the proof of this theorem would yield a lot of matrices with lengths more than 3, whereas the direct proof given in Theorem 5 only needed matrices of length at most 3.

In the construction given in the preceding proof, there was only one rule using the target here, but this could not be avoided at all when simulating normal Post rules not keeping the parity of symbols. In order to avoid this, we now use an encoding for the strings which instead of one symbol a uses two symbols \(\hat{a}\bar{a}\).

Theorem 8

\(\mathcal{L}\left( D^{1}I^{1}{\hbox{-}}LcP^{\left\langle 8\right\rangle }\right) =\mathcal{L}_{a}\left( D^{1}I^{1}{\hbox{-}}LcP^{\left\langle 8\right\rangle }\right) =RE\).

Proof

For any arbitrary alphabet \(\Upsigma\), let \(g:\Upsigma \rightarrow \hat{\Upsigma}\bar{\Upsigma}\) be the morphism defined by \(g\left( a\right) =\hat{a}\bar{a}\) for \(a\in \Upsigma. \) We first consider the accepting case, i.e., we want to show the inclusion \(RE\subseteq \mathcal{L}_{a}\left( D^{1}I^{1}{\hbox{-}}LcP^{\left\langle 8\right\rangle }\right)\). The main idea for avoiding the target here now is to work on strings \(g\left( w\right) \) instead of w, as the length of a string \(g\left( w\right) \) is always even. As a first example, consider the Post rewriting rule \(h:P\left[ a/bc\right], \) which then extends to \(g\left( h\right) : {\$}\hat{a}\bar{a}\rightarrow \hat{b}\bar{b}\hat{c}\bar{c} {\$} \). Yet any rule of the form \(l: {\$}uv\rightarrow wxyz {\$} \) can be replaced by the rules \(l^{\prime }: {\$}uv\rightarrow z {\$}D_{l}^{\prime }, l^{\prime \prime }: {\$}D_{l}^{\prime }\rightarrow xy {\$}D_{l}\), and \(l^{\prime \prime \prime }: {\$} D_{l}\rightarrow w {\$} \). Whereas \(l^{\prime \prime \prime }\) already is a normal Post rewriting rule, it is quite easy to simulate these rules \(l^{\prime }\) and \(l^{\prime \prime }\) based on the tables explained in the proof of Theorem 7 (Tables 1, 2, 3, 4, 5, 6, 7). For example, simulating a rule of the form \( {\$}a\rightarrow bc {\$}D_{h}\) corresponds to take the simulation table of the rule \( {\$}a\rightarrow bc {\$} \) ending up with the symbol D h on the right-hand-side of the string, i.e., in the table for the simulation of \(h:P\left[ a/bc\right]\) we replace D by the specific D h and, of course, have no rule with target here working on D h in membrane 1 (Table 8).

In the same way, the simulation of any rule of the form \( {\$}ab\rightarrow c {\$}D_{h}\) corresponds to take the simulation table of the rule \( {\$}ab\rightarrow c {\$} \) ending up with the symbol D h on the right-hand-side of the string (Table 9).

The Post rewriting rule \(h:P\left[ ab/c\right] \) extends to \(g\left( h\right) : {\$}\hat{a}\bar{a}\hat{b}\bar{b}\rightarrow \hat{c}\bar{c} {\$} \). Yet any rule of the form \(l: {\$}uvwx\rightarrow yz {\$} \) can be replaced by the rules \(l^{\prime }: {\$}wx\rightarrow z {\$}D_{l}^{\prime }, l^{\prime \prime }: {\$}vD_{l}^{\prime }\rightarrow z {\$}D_{l}, \) and \(l^{\prime \prime \prime }: {\$}uD_{l}\rightarrow {\$} \). Whereas \(l^{\prime }\) and \(l^{\prime \prime }\) are rules of the form \( {\$}ab\rightarrow c {\$}D_{h}, l^{\prime \prime }\) is a new kind of rule, but easily to be simulated in the P system \(\Uppi \) to be constructed following the constructions elaborated in the proof of Theorem 7 (Table 10).

The rule \(h:P\left[ a/\lambda \right] \) extends to \(g\left( h\right) : {\$}\hat{a}\bar{a}\rightarrow {\$} \), which is of the form just described above. The rule \(h:P\left[ a/b\right] \) extends to \(g\left( h\right) : {\$}\hat{a}\bar{a}\rightarrow \hat{b}\bar{b} {\$} \), which can be replaced by the rules \(h^{\prime }: {\$}\hat{a}\bar{a}\rightarrow \bar{b} {\$}D_{h}\) and \(h^{\prime \prime }: {\$}D_{h}\rightarrow \hat{b} {\$} \); both are of forms already described above.

The main challenge to finish the proof for the accepting case is to encode a given input string w into \(g\left( w\right)\), as we need some other technical tricks to stay within the framework of linear membrane structures inside the skin membrane and to avoid the target here. A given recursively enumerable language L therefore is divided into two languages L 1 and L 2 containing exactly those strings from L which are of odd and even lengths, respectively. According to Theorem 3, for both L 1 and L 2 there exist Post systems in normal form G 1 and G 2 accepting \(\left\{ e\right\} L_{1}\left\{ Z\left( 1\right) \right\}\) and \(L_{2}\left\{ Z\left( 2\right) \right\}\), where e is an additional (terminal) symbol.

Given an input string w, in the P system \(\Uppi\) to be constructed for accepting L 1∪ L 2, we start with the initialization procedure, with the input string being put into the input membrane \(\left( I,4\right)\) (Table 11).

We then, from \(wU\tilde{U}, \) non-deterministically generate \(\hat{U}wU_{2}\) for strings w of even lengths and \(\hat{U}ewU_{1}\) for strings w of odd lengths (if this guess is wrong, no successful computation in \(\Uppi \) will be possible). In the case of input strings of odd lengths, we have to insert a single symbol e at the beginning of w (as then ew is of even length). In sum, we have to simulate the Post rewriting rules \( {\$}U\tilde{U}\rightarrow \hat{U} {\$}U_{2}\) and \( {\$}U\tilde{U}\rightarrow \hat{U}e {\$}U_{1}\); the latter rule can be split into the sequence of rules \( {\$}\tilde{U}\rightarrow \hat{U}e {\$}\tilde{U}_{1}\) and \( {\$}\tilde{U}_{1}\rightarrow {\$}U_{1}\), which itself can be simulated easily (Table 12).

The initial encoding now in principle works on strings of even lengths between \(\hat{U}\) and \(U_{i}, i\in \left\{ 1,2\right\}\), where for U 1 we have the terminal alphabet extended by the special symbol e. In order to avoid that the simulations in the P system \(\Uppi \) of computations in G 1 and G 2 interfere, we assume the alphabets of G 1 and G 2 to be disjoint, i.e., even for terminal symbols a we have different copies \(a\left( 1\right) \) and \(a\left( 2\right)\). Hence, we have to simulate Post rewriting rules of the form \( {\$}abU_{i}\rightarrow \hat{a}\left( i\right) \bar{a}\left( i\right) \hat{b}\left( i\right) \bar{b}\left( i\right) {\$}U_{i}\) and finally \( {\$}\hat{U}U_{i}\rightarrow {\$}\hat{Z}\left( i\right) \bar{Z}\left( i\right)\). In sum, we then have obtained an encoded string \(g_{1}\left( ewZ\right) \) or \(g_{2}\left( wZ\right) \) where \(g_{i}\left( a\right) =\hat{a}\left( i\right) \bar{a}\left( i\right)\). The rules \( {\$}abU_{i}\rightarrow \hat{a}\left( i\right) \bar{a}\left( i\right) \hat{b}\left( i\right) \bar{b}\left( i\right) {\$}U_{i}\) can be split into a sequence of rules \( {\$}bU_{i}\rightarrow \bar{b}\left( i\right) {\$}U_{i}\left( b\right), {\$}U_{i}\left( b\right) \rightarrow \bar{a}\left( i\right) \hat{b}\left( i\right) {\$}U_{i}^{\prime }\left( a\right) \) (here, we non-deterministically guess which will be the next symbol a), and \( {\$}aU_{i}^{\prime }\left( a\right) \rightarrow \hat{a}\left( i\right) {\$}U_{i}\).

According to the simulation tables, the P system \(\Uppi \) now can simulate the corresponding Post systems in normal form G 1 and G 2.

Checking for the final string corresponding to \(Z_{0}q_{f}^{\prime}\) means checking for the final string \(\hat{Z}_{0}\left( i\right) \bar{Z}_{0}\left( i\right) \hat{q}_{f}^{\prime }\left( i\right) \bar{q}_{f}^{\prime }\left( i\right)\) with \(i\in \left\{ 1,2\right\}\). As in the proof of Theorem 7 it is sufficient to check for the appearance of the last symbol (Table 13).

The last two columns show what happens if we enter membrane \(\left( f,1\right) \) with a wrong string v.

Hence, in sum we have shown \(RE\subseteq \mathcal{L}_{a}\left(D^{1}I^{1}{\hbox{-}}LcP^{\left\langle 8\right\rangle }\right)\).

In the generating case, again a given recursively enumerable language L is divided into two languages L 1 and L 2 containing exactly those strings from L which are of odd and even lengths, respectively. According to Theorem 4, for both L 1 and L 2 there exist Post systems in Z-normal form G 1 and G 2 generating \(\left\{ e\right\} L_{1}\left\{ Z\left( 1\right) \right\} \) and \(L_{2}\left\{ Z\left( 2\right) \right\}\), where e is an additional (terminal) symbol. Again we assume the alphabets of G 1 and G 2 to be disjoint, i.e., even for terminal symbols a we have different copies \(a\left( 1\right) \) and \(a\left( 2\right)\). Let \(G_{i}=\left( V_{i},T_{i},S_{i},P_{i}\right)\), \(i\in \left\{ 1,2\right\}\), with \(S_{i}\in V_{i}\setminus T_{i}\). The P system \(\Uppi \) we now describe simulates the computations in both Post systems G 1 and G 2. \(\Uppi \) in principle has the same structure as the one already described in Theorem 7, and it starts with the initial string \(\hat{S}\bar{S}\) in membrane 1, then simulating the Post rewriting rules \( {\$}\hat{S}\bar{S}\rightarrow \hat{S}_{i}\bar{S}_{i} {\$} \) (as already shown above, these rules can be simulated by the rules \( {\$}\hat{S}\bar{S}\rightarrow \bar{S}_{i} {\$}\hat{S}_{i}^{\prime }\) and \( {\$}\hat{S}_{i}^{\prime }\rightarrow \hat{S}_{i} {\$} \)). The simulations of the rules from G 1 and G 2 can be performed as already described above.

Finally, we end up with the strings \(g_{1}\left( ew_{1}Z_{1}\right) \) and \(g_{2}\left( w_{2}Z_{2}\right)\), respectively, with the strings ew 1 and w 2 being of even lengths; these strings \(g_{1}\left( ew_{1}Z_{1}\right)\) and \(g_{2}\left( w_{2}Z_{2}\right)\) now have to be decoded to the terminal results w 1 and w 2, respectively: As soon as the simulation of G 1 or G 2 has yielded \(g_{1}\left( ew_{1}Z_{1}\right) \) or \(g_{2}\left( w_{2}Z_{2}\right)\), respectively, we start the decoding procedure with simulating the Post rewriting rule \( {\$}\hat{Z}_{i}\bar{Z}_{i}\rightarrow \tilde{Z}_{i} {\$}Z_{i}\). Then, the decoding for two consecutive symbols is executed, i.e., we have to simulate the rule \( {\$}\hat{a}\left( i\right) \bar{a}\left( i\right) \hat{b}\left( i\right) \bar{b}\left( i\right) Z\rightarrow ab {\$}Z\), which can be decomposed into the sequence of rules \( {\$}\hat{b}\left( i\right) \bar{b}\left( i\right) Z_{i}\rightarrow {\$}Z_{i}\left( b\right), {\$}\bar{a}\left( i\right) Z_{i}\left( b\right) \rightarrow b {\$}Z_{i}^{\prime }\left( a\right),\) as well as \( {\$}\hat{a}\left( i\right) Z_{i}^{\prime }\left( a\right) \rightarrow a {\$}Z_{i}\) for \(\left( i,a\right) \neq \left( 1,e\right)\); the rule \( {\$}\hat{b}\left( i\right) \bar{b}\left( i\right)Z_{i}\rightarrow {\$}Z_{i}\left( b\right) \) can be simulated easily, too (Table 14).

At the end, we reach one of the situations \(w_{2}\tilde{Z}_{2}Z_{2}\) or \(w_{1}\tilde{Z}_{1}\hat{e}Z_{1}^{\prime }\left( e\right)\), which leads to the following final procedures in \(\Uppi\) (Tables 15, 16)

Terminal strings of even lengths appear in membrane \(\left( \left( f,2\right),4\right)\), terminal strings of odd lengths arrive in membrane \(\left( \left( f,1\right),5\right)\).

Hence, we have also shown \(RE\subseteq \mathcal{L}\left( D^{1}I^{1}{\hbox{-}}LcP^{\left\langle 8\right\rangle }\right)\).□

4 Conclusion

In this paper we have considered string rewriting systems using the operations of minimal left and right insertion and deletion. Using even only the operations of minimal left insertion and minimal right deletion, matrix grammars reach computational completeness with matrices of length at most 3; our conjecture is that this required length cannot be reduced to 2. As our main result, we have shown that sequential P systems using the operations of minimal left and right insertion and deletion are computationally complete, thus solving an open problem from Alhazov et al. (2011b). Computational completeness was not only proved for the generating case, but for the accepting case as well. In Theorem 6 we have shown that using minimal left insertion, minimal right deletion, and, in addition, minimal right substitution (substitution of one symbol by another one on the right-hand side of a string) we can obtain a P system with the height of the tree structure being the minimum 1, at the same time even avoiding the use of the target here. The P systems constructed in the other proofs showing computational completeness with only using the operations of minimal left and right insertion and deletion, had rather large tree height; it remains an open question to reduce this complexity parameter.

References

Alhazov A, Krassovitskiy A, Rogozhin Yu, Verlan S (2011a) P systems with minimal insertion and deletion. Theor Comput Sci 412(1–2):136–144

Alhazov A, Krassovitskiy A, Rogozhin Yu, Verlan S (2011b) P systems with insertion and deletion exo-operations. Fundam Inform 110(1–4):13–28

Alhazov A, Krassovitskiy A, Rogozhin Yu (2012) Circular post machines and P systems with exo-insertion and deletion. In: Gheorghe M et al (eds) Membrane computing—12th international conference, CMC 2011, Fontainebleau, France, August 23–26, 2011, revised selected papers. Lecture notes in computer science 7184. Springer, New York, pp 73–86

Benne R (1993) RNA editing: the alteration of protein coding sequences of RNA. Ellis Horwood, Chichester

Biegler F, Burrell MJ, Daley M (2007) Regulated RNA rewriting: modelling RNA editing with guided insertion. Theor Comput Sci 387(2):103–112

Castellanos J, Martín-Vide C, Mitrana V, Sempere JM (2001) Solving NP-complete problems with networks of evolutionary processors. In: Mira J, Prieto AG (eds) IWANN 2001. Lecture notes in computer science 2084. Springer, New York, pp 621–628

Csuhaj-Varjú E, Salomaa A (1997) Networks of parallel language processors. In: Păun Gh, Salomaa A (eds) New trends in formal languages. Lecture notes in computer science 1218. Springer, New York, pp 299–318

Dassow J, Manea F (2010) Accepting hybrid networks of evolutionary processors with special topologies and small communication. In: McQuillan I, Pighizzini G (eds) Proceedings of the 12th workshop on descriptional complexity of formal systems, EPTCS 31, pp 68–77

Dassow J, Păun Gh (1989) Regulated rewriting in formal language theory. Springer, New York

Dassow J, Manea F, Truthe B (2011) On normal forms for networks of evolutionary processors. In: Calude CS, Kari J, Petre I, Rozenberg G (eds) Proceedings of the unconventional computation 10th international conference, UC 2011, Turku, Finland, June 6–10, 2011. Lecture notes in computer science 6714, pp 89–100

Freund R, Oswald M, Păun A (2004) Gemmating P systems are computationally complete with four membranes. In: Ilie L, Wotschke D (eds) Pre-proceedings DCFS 2004. The University of Western Ontario, Rep. No. 619, pp 191–203

Freund R, Kogler M, Rogozhin Yu, Verlan S (2010) Graph-controlled insertion–deletion systems. In: McQuillan I, Pighizzini G (eds) Proceedings of the 12th workshop on descriptional complexity of formal systems, EPTCS 31, pp 88–98

Freund R, Rogozhin Yu, Verlan S (2012) P systems with minimal left and right insertion and deletion. In: Durand-Lose J, Jonoska N (eds) Unconventional computation and natural computation, 11th international conference, UCNC 2012, Orleans, France, September 3–7, 2012. Lecture notes in computer science 7445. Springer, New York, pp 82–93

Galiukschov B (1981) Semicontextual grammars. Logica i Matem. Lingvistika. Tallin University, pp 38–50 (in Russian)

Haussler D (1982) Insertion and iterated insertion as operations on formal languages. PhD thesis, University of Colorado at Boulder, Boulder

Haussler D (1983) Insertion languages. Inf Sci 31(1):77–89

Ivanov S, Verlan S (2011) Random context and semi-conditional insertion–deletion systems. arXiv, CoRR abs/1112.5947

Kari L (1991) On insertion and deletion in formal languages. PhD thesis, University of Turku, Turku

Kari L, Păun Gh, Thierrin G, Yu S (1997) At the crossroads of DNA computing and formal languages: characterizing RE using insertion–deletion systems. In: Rubin H, Wood DH (eds) DNA based computers III. Proceedings of the 3rd DIMACS workshop on DNA based computing, University of Pennsylvania, Philadelphia. DIMACS series in discrete mathematics and theoretical computer science 48, pp 318–333

Krassovitskiy A, Rogozhin Yu, Verlan S (2011) Computational power of insertion–deletion (P) systems with rules of size two. Nat Comput 10(2):835–852

Marcus S (1969) Contextual grammars. Rev Roum Math Pures Appl 14:1525–1534

Margenstern M, Mitrana V, Pérez-Jiménez M (2005a) Accepting hybrid networks of evolutionary systems. In: Ferretti C, Mauri G, Zandron C (eds) DNA computing: 10th international workshop on DNA computing, DNA10, Milan, Italy, June 7–10, 2004. Revised selected papers. Lecture notes in computer science 3384. Springer, New York, pp 235–246

Margenstern M, Păun Gh, Rogozhin Yu, Verlan S (2005b) Context-free insertion–deletion systems. Theor Comput Sci 330(2):339–348

Minsky ML (1967) Computation: finite and infinite machines. Prentice Hall, Englewood Cliffs

Păun Gh (2002) Membrane computing. An introduction. Springer, New York

Păun Gh, Rozenberg G, Salomaa A (eds) (1998) DNA computing: new computing paradigms. Springer, New York

Păun Gh, Rozenberg G, Salomaa A (2010) The Oxford handbook of membrane computing. Oxford University Press, Oxford

Petre I, Verlan S (2010) Matrix insertion–deletion systems. arXiv, CoRR abs/1012.5248

Post EL (1943) Formal reductions of the general combinatorial decision problem. Am J Math 65(2):197–215

Rozenberg G, Salomaa A (1997) Handbook of formal languages, 3 vols. Springer, New York

Verlan S (2007) On minimal context-free insertion–deletion systems. J Autom Lang Comb 12(1–2):317–328

Verlan S (2010a) Recent developments on insertion–deletion systems. Comput Sci J Moldova 18(2):210–245

Verlan S (2010b) Study of language-theoretic computational paradigms inspired by biology. Habilitation thesis, University of Paris Est, Paris

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Freund, R., Rogozhin, Y. & Verlan, S. Generating and accepting P systems with minimal left and right insertion and deletion. Nat Comput 13, 257–268 (2014). https://doi.org/10.1007/s11047-013-9396-3

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11047-013-9396-3