Abstract

A brain tumor is a severe condition caused by the abnormal growth of brain cells. Because tumors are rare and can take many different forms, it is challenging to estimate the survival rate of a tumor-affected patient. Examining Magnetic Resonance Imaging (MRI) images is the fundamental method for locating the tumor-affected part of the brain, but detecting tumors manually from these images is a labor-intensive and difficult process that may yield inaccurate findings. Computer-aided methods are therefore extremely important for overcoming these limitations. Building on advances in Artificial Intelligence (AI) and Deep Learning (DL), we used AlexNet, one of the best-known deep convolutional neural network (CNN) models, to identify tumors from MRI images. We propose an Enhanced AlexNet (EAN) that adds our proposed layers to categorize the images effectively. Suitable data augmentation methods are used to improve the accuracy of the EAN model. In our investigation, the EAN model performed better than other traditional models with respect to accuracy, F1 score, recall, and precision, with a minimal error rate. Our model achieved an accuracy of 99.32% in classifying brain tumors from MRI images.

1 Introduction

The human body is built from cells, which grow and die on a regular basis throughout the human life cycle. The brain is a vital organ at the center of the nervous system; it connects to the spinal cord, and together they form the central nervous system. The brain directs the movements of the human body. It gathers data from the various senses, makes decisions, and then communicates those decisions to the body. With the assistance of neurons, the brain is the focal point of the body's control system and is responsible for all bodily functions. It is enclosed within a rigid skull, and even a very small change in the position of the skull can pose a serious danger to human life. Some cells grow abnormally and severely affect the brain; this disorder is termed a brain tumor, as per Lee et al. [1] and Billah et al. [9]. A brain affected by abnormal cells poses a very serious danger to human life. According to statistics from the American Cancer Society, around 18,000 adults and 3000 children under the age of 15 are expected to die from this tumor in the coming years, as mentioned by Islami et al. [2]. The two major types of tumor affecting humans are benign and malignant. According to a study by the National Cancer Institute, around 0.7 million people are affected by one of these two types of brain tumor, as reported by Hung et al. [3]. The World Health Organization estimated that around 9 million people worldwide were affected by brain tumors in 2018, as per Ostrom et al. [4]. Figure 1 depicts sample MRI images of a healthy brain and a tumor-affected brain. A malignant tumor affects the entire brain within a short span of its formation in the cells, whereas a benign tumor is the least harmful. Besides these two types, some common tumors include meningioma, which develops gradually in the outer part of the brain and settles under the skull.
These tumors are not easy to identify, as it can take years to detect their presence. Another type is glioma, which emerges from the glial cells and develops in the supporting tissues of the brain. The National Brain Tumor Society also identifies the pituitary tumor, which develops underneath the brain and affects the pituitary gland, which is responsible for producing hormones that control the body. A pituitary tumor disrupts the operation of these glands, more specifically the thyroid glands, as mentioned by WHO [5] and Li et al. [6].

Recognizing the type of a tumor is a herculean task even for professional and highly experienced doctors. The tumor, and more specifically its type, has to be recognized at an early stage, since early detection paves the way for an easier and speedier recovery and saves the patient's life. The traditional method of detecting tumors from MRI images is complex, and the correctness of the decision depends on the expertise of the radiologists and doctors. The number of MRI images obtained for a diagnosis is typically large rather than small. Hence, even for an experienced physician, it is difficult and challenging to identify a tumor from MRI images with the naked eye, as per Lawal et al. [7]. Moreover, this method is time-consuming and expensive. Video endoscopy is another method of detecting tumors: gastrointestinal tumors are detected by passing a tiny camera into the human body, and the video images help to reveal the size, location, and shape of the tumor, as mentioned by Raut et al. [8]. Even though experts and leading doctors work on the early detection of this life-threatening disease using traditional methods such as MRI-based tumor detection, computed tomography, and video endoscopy, this fast-growing disease has to be curbed with the help of modern computing techniques. AI is one of the most powerful of these techniques, as it can process data faster than a human can, and often with higher accuracy. AI methods can access huge amounts of data in a minimal amount of time and help radiologists and physicians identify tumors from MRI images. Machine Learning (ML) algorithms, a subset of AI, provide powerful methods for classifying MRI images as tumor-affected or not affected.

Computer scientists are working to develop AI-based techniques that enable machines to perceive, learn, and solve problems when presented with large amounts of input data, in order to recognize and diagnose brain tumors. Because the procedures involved are intricate and complex, the adoption of AI is a great opportunity for classifying and detecting brain tumors, and it has increased recently. There have been numerous attempts to develop a classification method for brain tumors that is highly accurate and trustworthy. On the other hand, the increasing size of the data and the variation in contour and quality of tumor images call for more advanced methods of disease classification. Recent DL algorithms can predict class labels for much larger amounts of data than earlier AI methods could handle, and they handle unlabeled data objects by using training samples as a source of knowledge. Even though many models have been developed to help the health care community identify the disease, the existing models have several limitations. We conducted our experiments by taking the basic AlexNet DL model and enhancing it to diagnose and classify brain tumors from MRI images. The motivation behind our investigation is to develop an automated, effective model that detects brain tumors at an early stage and saves human lives. The contributions of the proposed work are:

- Our proposed layers are added to the state-of-the-art AlexNet model, which is then fine-tuned, replacing the traditional model for classifying images of insidious brain tumors.

- A three-tier method is incorporated to improve image quality by reducing the noise in the MRI images that are input to the proposed model.

- An effective data augmentation method is used to obtain better output even on smaller data sets, and the rate of overfitting is recorded.

- The results are measured through accuracy, recall, error rate, and F1 score and compared with some existing approaches. The analysis demonstrates that our proposed EAN model achieves higher accuracy than the other existing models.

The remainder of the article is organized as follows. Section 2 reviews the related literature, and Section 3 presents the methods and materials used in our investigation. Section 4 contains the results and a discussion based on comparative analysis. Finally, Section 5 concludes the work and outlines future goals.

2 Related work

DL algorithms based on optimization techniques are frequently employed in the domains of health monitoring and computing [10] and by Islam et al. [11], for analyzing virus prognosis [12], gauging game player satisfaction [13], and predicting shear strength. Similarly, several ML- and DL-oriented studies on medical imaging have been carried out to categorize brain cancers [14,15,16]. Recently, AI has transformed intracranial techniques, with capabilities that include image retrieval, image segmentation, attribute selection, and classification, as well as data preprocessing. According to the study of Tiwari et al. [17], with the support of ML and DL techniques, neurosurgeons can diagnose a patient's brain tumor more confidently than ever, even before leaving the treatment room. DL becomes significantly more important because algorithms such as GoogleNet, ResNet, VGG, and AlexNet, and neural networks in particular, produce promising outcomes. CNNs are among the preferred DL algorithms because of their ability to learn features, preprocess, and classify the input with high accuracy. DL applications also include automatic speech recognition, image recognition, predictive modeling, and other decision-making processes in smart environments.

Hollon et al. [18] used CNN-based architectures to extract features from MR images, running models such as ResNet, GoogleNet, and VGGNet for various repetitions. These models achieved accuracies of 89%, 93%, and 96%, with ResNet performing better than the other models. Sangeetha et al. [19] proposed a hybrid approach, named VGGSCNet, that combines the traditional VGGNet with a stacked classifier. The approach was tested with VGG-16 using MR images as input and detects brain tumors automatically. Data preprocessing first determined the region of interest by identifying the most noticeable patterns. The class imbalance in the dataset was then fixed using augmentation. Since the sixth layer offers fewer features, the features were extracted from it. The presence of tumors in an image was ascertained using a stacked classifier. An image of the anatomical makeup of the human body is created by image preprocessing.

To identify low and medium diseased images, Majib et al. [20] developed a model that uses machine learning algorithms to classify brain tumor images. The authors classified brain cancers into major, tertiary, and nervous system tumors with 90% accuracy using an intense multilayer perceptron model. Pashaei et al. [21] developed an Adaptive Fuzzy Deformable Fusion model that combines deformable techniques with C-means clustering. The experiments revealed that the ensemble technique achieved 95% classification accuracy on brain tumor images. Badza et al. [22] proposed a multitasking model that used a Convolutional Neural Network (CNN) to identify the tumor-affected region in the brain, the type of tumor, and the grade of the tumor from MR images, with 92% accuracy. Sankar et al. [23] proposed a cascaded DL technique to detect the tumor-affected region in the brain. It also classified tumor types such as meningioma and pituitary tumor. This method focused on overfitting and reduced the computational complexity compared with models based on other deep learning architectures. It achieved around 92% accuracy.

3 Proposed method

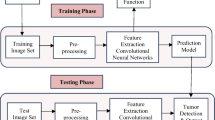

Advances in computing technologies have paved the way for positive growth in human health and welfare. As the number of diseases increases, human life is affected, and some people even lose their lives to unpredicted or unnoticed diseases. The motivation of this work is to make use of improvements in computer technology, more specifically in the fields of machine learning and deep learning, which offer a large number of pretrained models. We used one of the more popular models, the CNN, and its lightweight variant AlexNet, improving some of its features to recognize life-threatening brain tumors. As stated at the beginning of this section, our motivation is to predict the disease early and treat brain tumors in order to save human lives. Figure 2 depicts the architecture of the proposed investigation. We first preprocess the data, then perform data augmentation, train the model, and finally evaluate the results.

3.1 EAN: Enhanced AlexNet Model

AlexNet, a groundbreaking deep convolutional neural network, significantly advanced the field of computer vision. However, like any pioneering work, it had its limitations. These limitations included the use of a single convolutional kernel size throughout the network, which produced feature maps with limited diversity, potentially hindering the network's ability to capture intricate features. Additionally, the Fully Connected Layer (FCL) in AlexNet was burdened with an excessive number of parameters, making the network computationally intensive and prone to overfitting. Furthermore, during training, the network experienced a shift in data distribution from one layer to the next, impacting its overall performance. To overcome these limitations, Enhanced AlexNet was developed, introducing key innovations. It diversified convolutional layers by altering the depth and introducing multiple kernel sizes, enhancing the network's feature extraction capabilities. The diversity of the feature maps is shown in Fig. 3.

The FCL was replaced with Global Average Pooling (GAP), reducing the parameter count and mitigating overfitting. Moreover, Batch Normalization was introduced to stabilize training by normalizing data at each layer and reducing data distribution shifts. These enhancements collectively transformed Enhanced AlexNet into a more efficient and effective deep learning architecture for image classification and feature extraction tasks. The limitations are addressed and Enhanced AlexNet model is presented as follows:

AlexNet has about 60 million parameters and around 650,000 neurons. Because each convolution layer in AlexNet uses a single kernel size, the resulting feature maps are not heterogeneous, which limits feature extraction.

- Mono Convolution Kernel: The use of a single convolutional kernel size throughout the network led to the production of feature maps that lacked diversity, potentially limiting the network's ability to extract intricate features.

- Overwhelming Parameters in Fully Connected Layer (FCL): The FCL in AlexNet contained a vast number of parameters, making the network computationally expensive and prone to overfitting.

- Shift in Data Distribution: Training the network could cause a shift in data distribution from one layer to the next, impacting the network's overall performance.

Enhancements in Enhanced AlexNet:

To address these limitations and enhance the AlexNet architecture, several key modifications were introduced in what we refer to as Enhanced AlexNet.

Diverse Convolution Kernels:

In the Enhanced AlexNet, the mono convolution kernel problem was tackled by diversifying the convolutional layers. Specifically, the depth of the first convolutional layer was reduced from 96 to 64, allowing for more selective feature extraction. The third convolutional layer, which originally used a single (5 × 5) kernel, was replaced with four convolution layers with kernels of sizes (7 × 7), (5 × 5), (3 × 3), and (1 × 1), all with a depth of 64, which were combined into a multi-channel convolution. This diversity in kernel sizes increased the range of features that the network could extract.
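As a rough illustration of why four kernel sizes can be combined into one multi-channel layer, the following sketch computes the output size of each branch. It assumes stride 1, "same" padding of (k − 1) / 2 per branch, concatenation along the channel axis, and a hypothetical 13 × 13 input; none of these details are stated in the text.

```python
def conv_output_size(n, kernel, stride=1, padding=0):
    """Spatial output size of a convolution applied to an n x n input."""
    return (n + 2 * padding - kernel) // stride + 1

KERNEL_SIZES = (7, 5, 3, 1)  # branch kernels named in the text
BRANCH_DEPTH = 64            # depth of each branch, from the text
INPUT_SIZE = 13              # hypothetical spatial size, for illustration only

# "Same" padding keeps every branch at the input's spatial size,
# so the branch outputs can be stacked along the channel axis.
branch_sizes = [
    conv_output_size(INPUT_SIZE, k, stride=1, padding=(k - 1) // 2)
    for k in KERNEL_SIZES
]
combined_depth = BRANCH_DEPTH * len(KERNEL_SIZES)  # assumes concatenation

print(branch_sizes, combined_depth)
```

Under these assumptions every branch yields the same spatial size, which is what makes a channel-wise combination of the four branches possible.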

Global Average Pooling (GAP) to Reduce Parameters:

The excessive number of parameters in the Fully Connected Layer (FCL) was mitigated by replacing the FCL with Global Average Pooling (GAP). A new convolution layer with a (3 × 3) kernel, depth of 64, stride of 1, and padding of 1 was added, producing an output of (6 × 6 × 64). Subsequently, a max-pooling layer with a (3 × 3) filter and stride of 1 was introduced, followed by a Local Response Normalization (LRN) layer. This resulted in an output of (3 × 3 × 64). Another convolution layer with a (3 × 3) kernel, depth of 5, stride of 1, and padding of 1 was included, generating an output of (3 × 3 × 5). Finally, Global Average Pooling was applied to the entire feature map, yielding five distinct features. This architectural change effectively reduced the number of parameters in the network, addressing a major limitation of the original AlexNet.
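The parameter saving can be illustrated with a small calculation. This sketch compares the fully connected head of the standard AlexNet (9216 → 4096 → 4096 → 1000, an assumption about the baseline being replaced) with the final (3 × 3) convolution of depth 5 described above, and shows Global Average Pooling on a toy feature map. The helper names are hypothetical.

```python
def dense_params(n_in, n_out):
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out

def conv_params(kernel, c_in, c_out):
    """Weights plus biases of a kernel x kernel convolution layer."""
    return kernel * kernel * c_in * c_out + c_out

def global_average_pool(fmap):
    """Average an H x W x C feature map (nested lists) over H and W."""
    h, w, c = len(fmap), len(fmap[0]), len(fmap[0][0])
    return [
        sum(fmap[y][x][ch] for y in range(h) for x in range(w)) / (h * w)
        for ch in range(c)
    ]

# Fully connected head of the standard AlexNet (assumed baseline).
alexnet_fc_params = (
    dense_params(6 * 6 * 256, 4096)
    + dense_params(4096, 4096)
    + dense_params(4096, 1000)
)

# Final 3 x 3 convolution with input depth 64 and output depth 5, from the text.
final_conv_params = conv_params(3, 64, 5)

# GAP itself has no trainable parameters: a 3 x 3 x 5 map becomes 5 values.
toy_map = [[[float(ch) for ch in range(5)] for _ in range(3)] for _ in range(3)]
pooled = global_average_pool(toy_map)

print(alexnet_fc_params, final_conv_params, pooled)
```

The pooling step contributes no weights at all, so the head's parameter count is dominated by the small convolution that precedes it.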

Batch Normalization for Stable Training:

To combat the issue of shifting data distributions during training, Enhanced AlexNet incorporated Batch Normalization. Normalized preprocessing was performed at the beginning of each layer, ensuring that input data had a mean of 0 and a variance of 1. The normalized output of one layer was then passed on to the next layer, maintaining a consistent data distribution. Batch Normalization was applied to the normalized data, further reducing the shift in data distribution during training. In summary, Enhanced AlexNet addressed the limitations of the original AlexNet by introducing diverse convolution kernels, replacing the FCL with GAP to reduce parameters, and incorporating Batch Normalization to stabilize training. These enhancements collectively improved the network's ability to extract meaningful features and made it more efficient in both training and inference. Equation (1) contains the data normalization formula.

where M(i) represents the input neurons and A[M(i)] is the average value of the neurons during model training.

Equation (2) improves the expressive ability of the input by introducing τ and δ as learnable parameters. A mean and variance computed over the whole input suit small data sizes, but the calculation becomes more complex as the input grows. Hence the mean and variance are computed per batch to decrease the calculation cost. Equations (3) and (4) give the mean (μ) and variance (σ) for every batch, respectively.

Batch Normalization is then calculated using Eq. (5) from the mean and variance obtained above.

X(i) is the normalized value, calculated as in Eq. (6).
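For reference, the standard batch normalization formulation can be written with the symbols defined above. The assignment of these expressions to Eqs. (1) to (6), the batch size m, the intermediate symbols \hat{M}(i) and Y(i), the small constant ε, and the use of σ² for the batch variance are assumptions made for this sketch, not necessarily the exact notation of the original equations.

```latex
\begin{align}
\hat{M}(i) &= \frac{M(i) - A[M(i)]}{\sqrt{\operatorname{Var}[M(i)]}} \tag{1} \\
Y(i) &= \tau\,\hat{M}(i) + \delta \tag{2} \\
\mu &= \frac{1}{m}\sum_{i=1}^{m} M(i) \tag{3} \\
\sigma^{2} &= \frac{1}{m}\sum_{i=1}^{m}\bigl(M(i) - \mu\bigr)^{2} \tag{4} \\
\hat{M}(i) &= \frac{M(i) - \mu}{\sqrt{\sigma^{2} + \epsilon}} \tag{5} \\
X(i) &= \tau\,\hat{M}(i) + \delta \tag{6}
\end{align}
```

In this form, Eqs. (1) and (2) describe normalization with statistics over the training data, while Eqs. (3) to (6) replace those statistics with per-batch estimates.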

Finally, the limitations identified in the regular AlexNet for image classification are addressed in the proposed Enhanced AlexNet.

3.2 Experimental setup and implementation of proposed method

This section explains the implementation of the proposed work. The dataset used for the investigation, the data preprocessing, and the data augmentation are explained in the following subsections.

3.2.1 Data set

To conduct the investigation, we used the Brats2015 data set from Kaggle [24], an open-source data set containing around 3800 MR images of brain tumors in two classes, in which 1600 images are labeled 1 (tumor affected) and 1600 are labeled 0 (tumor not affected). The data were split into training and testing sets in an 80:20 ratio. Around 75 images are used for evaluating the model. The images were not collected at a fixed size; instead, all selected images were resized to 224 × 224 using Keras resizing scripts.
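A minimal sketch of an 80:20 split over the labeled images follows. It assumes the split is stratified by class and uses a fixed random seed for repeatability; both choices, and the helper name `stratified_split`, are assumptions for illustration and are not stated in the text.

```python
import random

def stratified_split(labels, train_fraction=0.8, seed=0):
    """Split sample indices into train and test sets, class by class."""
    rng = random.Random(seed)
    train_idx, test_idx = [], []
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        cut = int(round(len(idx) * train_fraction))
        train_idx += idx[:cut]
        test_idx += idx[cut:]
    return train_idx, test_idx

# 1600 tumor-affected (label 1) and 1600 unaffected (label 0) images, from the text.
labels = [1] * 1600 + [0] * 1600
train_idx, test_idx = stratified_split(labels)

print(len(train_idx), len(test_idx))
```

Splitting per class keeps the two labels equally represented in both sets, which matters when accuracy is the headline metric.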

3.2.2 Data preprocessing

The input MR images are normalized to convert the data into a standard format. Each image is first converted to a grayscale image with a fixed resolution of 224 × 224 pixels. Second, to reduce noise and improve output quality, the images are smoothed using a Gaussian filter. A high-pass filter is then applied, sharpening the images and enabling the extraction of more intricate details. Erosion and dilation are image processing methods that remove information in areas too small to hold the structuring element. Erosion is applied to remove pixels from the edges of regions in the image. When the tumor-affected area, which is normally white in the image, is eroded, its size is reduced and the gaps in the tumor-affected region grow. To counteract this, dilation is used; it works in reverse to erosion by adding pixels to the edges of regions and filling the gaps where the white tumor-affected area is present. Finally, the black portions are removed from every image. Figure 4 shows a few samples of preprocessed images.
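The erosion and dilation steps can be sketched on a small binary image. This example assumes a 3 × 3 square structuring element and treats pixels outside the image as background; the Gaussian and high-pass filtering steps are not shown, and the toy image is purely illustrative.

```python
# 3 x 3 square structuring element, expressed as pixel offsets (an assumption).
OFFSETS = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]

def _neighbours(img, y, x):
    """Values under the structuring element; outside the image counts as 0."""
    h, w = len(img), len(img[0])
    return [
        img[y + dy][x + dx] if 0 <= y + dy < h and 0 <= x + dx < w else 0
        for dy, dx in OFFSETS
    ]

def erode(img):
    """Keep a pixel only if every pixel under the element is set."""
    return [[int(all(_neighbours(img, y, x))) for x in range(len(img[0]))]
            for y in range(len(img))]

def dilate(img):
    """Set a pixel if any pixel under the element is set."""
    return [[int(any(_neighbours(img, y, x))) for x in range(len(img[0]))]
            for y in range(len(img))]

# Toy 7 x 7 image: a 3 x 3 white region plus one isolated noise pixel.
image = [[0] * 7 for _ in range(7)]
for y in range(2, 5):
    for x in range(2, 5):
        image[y][x] = 1
image[0][6] = 1

# Erosion followed by dilation removes the speck and restores the region.
opened = dilate(erode(image))
for row in opened:
    print(row)
```

Applying erosion and then dilation removes structures smaller than the structuring element while larger white regions regain their original extent.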

3.2.3 Data Augmentation

The quality, volume, and appropriateness of the training data influence the effectiveness of most ML and DL models. Insufficient data, however, is a common obstacle to applying ML algorithms in real-world applications, because in many cases acquiring relevant data is time-intensive and expensive. A number of techniques are used to artificially increase the amount of data by creating new data from the available data. Data augmentation is the process of making small alterations to existing data, or using deep neural networks, to create more training data. It is a rapid and efficient technique for increasing the volume of training data and exposing the model to new inputs, as mentioned by Ranjbarzadeh et al. [25]. To enlarge the set of samples, data augmentation applies various image transformations to the original input images, such as cropping, random rotations, fixed-angle rotations, colour cropping, and noise insertion. This improves the training efficiency of the model and decreases overfitting. The Image Data Generator function is used for data augmentation in the proposed investigation. It was selected because it preserves image quality pixel by pixel, which matters particularly for medical images. This function drastically increases the number of samples and thus the size of the data set. The Image Data Generator function rotates images by angles such as 30°, 60°, 90°, and 120°, and vertical and horizontal flipping are also applied. Figure 5 shows a sample of data augmentation.
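The effect of such transformations can be sketched without any library. This example is not the Image Data Generator itself: it only shows a 90° rotation and the two flips on a toy image stored as nested lists, and the helper names are hypothetical. Rotations by other angles such as 30° require interpolation and are left to an image library.

```python
def rotate90(img):
    """Rotate a 2-D image (list of rows) by 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]

def flip_horizontal(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]

def flip_vertical(img):
    """Mirror the image top to bottom."""
    return [row[:] for row in img[::-1]]

def augment(img):
    """Return the original image plus three transformed copies."""
    return [img, rotate90(img), flip_horizontal(img), flip_vertical(img)]

sample = [[1, 2],
          [3, 4]]
augmented = augment(sample)
print(len(augmented), augmented)
```

Each source image here yields four training samples, which illustrates how augmentation multiplies the size of a small data set.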

3.2.4 Experimental set up

Table 1 contains the hardware and software specifications used to conduct the investigation. The experiment was conducted in two phases. In the first phase, the regular AlexNet network was obtained from Keras and its initial layers were frozen. In the second phase, the complete network was retrained on our sample MR input images, with the end layers enhanced by the proposed Enhanced AlexNet network.

3.2.5 Model training

Ashfaq et al. [27] presented an innovative ML approach that uses a feature representation based on a reduced amino acid alphabet. The study evaluates the model using various activation functions, including ReLU and Tanh, with a learning rate of 0.1. To combat overfitting, L1 and L2 regularization are applied along with dropout. It also shows that increasing the number of training iterations significantly reduces the error loss, with consistent results after 350 iterations. Farman et al. [28] described a novel AFP-CMBPred predictor that incorporates four distinct feature representation techniques alongside SVM- and RF-based classification models. Shahid et al. [29] discussed the iHBP-DeepPSSM model, a computational tool that uses evolutionary features (Position-Specific Scoring Matrix and Pseudo PSSM) along with Series Correlation Pseudo Amino Acid Composition for sequence information. It employs Sequential Forward Selection with a Support Vector Machine to enhance feature selection efficiency, and it achieves an accuracy of 94.41%. Masooq et al. [30] highlight the potential of peptide-based therapies for their precision in targeting cancer cells. The classification rates of CACP-DeepGram were evaluated using a tenfold cross-validation test, in which the retrieved descriptors are partitioned proportionally into 10 folds, with 9 folds used for model training and one fold for model testing.

To analyze the input images from various aspects, and based on the above studies, we used tenfold cross-validation for model training and testing, in which 8 folds are used for training and 2 folds for testing the EAN model. Hyperparameter optimization is used to tune the EAN model's detection accuracy. The hyperparameters used are given in Table 2.
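One way to realize a tenfold scheme with 8 training folds and 2 testing folds is sketched here. The rotation used (five rounds, each holding out a different pair of folds so that every sample is tested once) and the helper name `eight_two_splits` are assumptions; the text does not specify how the folds are rotated.

```python
def eight_two_splits(n_samples, n_folds=10, test_folds=2):
    """Yield (train, test) index lists, holding out `test_folds` folds per round."""
    # Assign sample i to fold i % n_folds.
    folds = [list(range(f, n_samples, n_folds)) for f in range(n_folds)]
    splits = []
    for start in range(0, n_folds, test_folds):
        held_out = set(range(start, start + test_folds))
        test = [i for f in sorted(held_out) for i in folds[f]]
        train = [i for f in range(n_folds) if f not in held_out for i in folds[f]]
        splits.append((train, test))
    return splits

splits = eight_two_splits(n_samples=100)
for round_no, (train, test) in enumerate(splits, start=1):
    print(round_no, len(train), len(test))
```

In practice the sample indices would be shuffled before being assigned to folds; that step is omitted to keep the sketch deterministic.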

The training and testing are discussed in detail in the results section. Algorithm 1 outlines the execution process of the proposed EAN model.

4 Results and analysis

4.1 Performance evaluation

The results obtained from the investigation have to be checked for correctness and efficiency. Significant machine learning metrics such as accuracy, F1 score, precision, recall, and sensitivity are recorded and used to analyze the performance of the proposed EAN model. Equations (7) to (11) express the respective metrics.

True positive denotes positive samples predicted as positive (tumor affected). True negative denotes negative samples predicted as negative (no tumor). False negative denotes positive samples predicted as negative. False positive denotes samples predicted as positive that are not actually positive. Accuracy denotes the proportion of all samples that the model predicts correctly. Precision indicates the proportion of samples predicted as positive that are actually positive. Specificity denotes the proportion of actual negatives that are correctly predicted. To analyze and assess the model's performance, the values are recorded in a confusion matrix, a table that records the counts of correct and incorrect predictions made by the experimental model for each class. It is also used to visualize and analyze the labels predicted correctly and incorrectly by the model. This helps researchers identify errors and further improve the accuracy of the experiment.

Figure 6 shows the true and false values predicted by the proposed model. Around 402 samples were correctly predicted as tumor, and only 7 images were not correctly predicted by the model. Similarly, 264 images were correctly predicted as not affected by tumor, while 4 were falsely identified. Figure 7 presents the metric-wise results obtained by the proposed model.
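The metrics can be computed directly from the four confusion matrix counts using their standard definitions. This sketch assumes that the 7 misclassified tumor images are false negatives and the 4 misidentified non-tumor images are false positives; that mapping is an interpretation of the counts reported above, not something the text states explicitly.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard metrics derived from confusion matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)  # also called sensitivity
    return {
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
        "precision": precision,
        "recall": recall,
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),
    }

# Counts from Figure 6, with the FN/FP assignment assumed as described above.
metrics = classification_metrics(tp=402, fp=4, tn=264, fn=7)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Swapping the roles of the 7 and the 4 changes precision, recall, specificity, and F1 slightly but leaves accuracy unchanged, since accuracy depends only on the total number of errors.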

We obtained the dataset from Kaggle, which contains MR images of tumor-affected and unaffected brains. Data augmentation and preprocessing were applied to the data set to improve the quality and quantity of the samples, and the proposed Enhanced AlexNet model was trained and tested on the dataset for correctness and accuracy. Hyperparameter optimization was used to achieve higher accuracy. The model was trained with a batch size of 64 for 50 epochs, using the Adam optimizer with a learning rate of 0.001. Figure 8a shows the model accuracy: the curve rises sharply after the 10th epoch and saturates from the 20th epoch. The model achieved a maximum accuracy of 99.32%.
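The optimizer setting can be made concrete with a single-parameter sketch of the Adam update rule. The learning rate of 0.001 is taken from the text; the decay rates β1 = 0.9 and β2 = 0.999, the constant ε = 1e-8, and the toy objective f(x) = x² are assumptions chosen for illustration.

```python
import math

def adam_minimize(grad, x, steps, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
    """Run `steps` Adam updates on a scalar parameter x."""
    m = v = 0.0  # running estimates of the first and second moments
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero start
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x

# Toy objective f(x) = x^2 with gradient 2x, starting from x = 1.
x_after_one = adam_minimize(lambda x: 2 * x, x=1.0, steps=1)
x_after_many = adam_minimize(lambda x: 2 * x, x=1.0, steps=500)
print(x_after_one, x_after_many)
```

Because each update is scaled by the ratio of the two moment estimates, the step size stays close to the learning rate regardless of the raw gradient magnitude.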

As plotted in Fig. 8b, the loss decreases slowly as training time increases. Finally, the loss stays constant after the 10th epoch, which corresponds with the model accuracy.

We compared our proposed model with other existing models that used the same network for brain tumor detection. Table 3 contains an assessment of our proposed model against other existing approaches in terms of accuracy and other machine learning metrics. The results show that our Enhanced AlexNet model performed better than the other existing models. A few of those existing models extracted only the edge information of the feature maps and lacked preprocessing, which may be the reason for their reduced accuracy. We addressed this limitation by replacing the mono-sized convolution layer with multichannel layers of different sizes ((7 × 7), (5 × 5), (3 × 3), (1 × 1)). Compared with the existing approaches in Table 3, our proposed multichannel convolution increases the diversity of the convolution kernels and retrieves additional features compared with the mono-sized convolution kernel. Additionally, batch normalization and GAP are included to further enhance the network. Consequently, the Enhanced AlexNet network has considerably increased the accuracy of brain tumor detection from MR images. Due to variations in data preprocessing, retraining, validation methodology, and processing capacity, the existing methods are not directly comparable in this research. However, we found that the suggested model generated a superior accuracy of 98.3%.

5 Conclusion and future work

Brain tumors need to be detected at an early stage to save human lives. This experiment was motivated by the availability of medical data and the tremendous growth in computing technologies. The aim of this work is to reduce the time and human expertise required to detect tumors with the help of widely available medical data and modern computer technologies. An automatic mechanism is required for detecting tumors, and this study addresses that need by providing good accuracy in tumor detection. Our proposed approach replaced the mono-sized convolution layer of the regular AlexNet network with multichannel layers of different sizes, which, together with effective data preprocessing, resulted in richer feature map extraction. We used the Image Data Generator function for data augmentation to increase the size of the data set without compromising data quality. Batch Normalization was introduced to reduce the difference in data distribution, and GAP was added to the proposed layers to address the overwhelming number of parameters in the FCL. These enhancements produced an accuracy of 98.32% in detecting brain tumors. Although our investigation provided high accuracy, future research could apply more deep learning models to larger data sets. The research could also be extended to other medical images, such as X-rays and ultrasound images, for disease detection.

Data availability

The data that support the findings of this study are available on request from the corresponding author.

References

Lee DY (2015) Roles of mTOR signaling in brain development. Exp Neurobiology 24(3):177–185. https://doi.org/10.5607/en.2015.24.3.177

Islami F, Guerra CE, Minihan A, Yabroff KR, Fedewa SA, Sloan K, Wiedt TL, Thomson B, Siegel RL, Nargis N, Winn RA, Lacasse L, Makaroff L, Daniels EC, Patel AV, Cance WG, Jemal A (2022) American Cancer Society’s report on the status of cancer disparities in the United States, 2021. CA Cancer J Clin 72(2):112–143. https://doi.org/10.3322/caac.21703

Hung C-Y, Chen W-C, Lai P-T, Lin C-H, Lee C-C (2017) Comparing deep neural network and other machine learning algorithms for stroke prediction in a large scale population-based electronic medical claims database. In: 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), IEEE, pp 3110–3113

Ostrom QT, Cioffi G, Gittleman H, Patil N, Waite K, Kruchko C, Barnholtz-Sloan JS (2019) CBTRUS statistical report: primary brain and other central nervous system tumors diagnosed in the United States in 2012–2016. Neuro-Oncol 21(5):v1–v100

World Health Organisation (2021) Cancer. https://www.who.int. Accessed 23 Jan 2022

Li Q et al (2018) Glioma segmentation with a unified algorithm in multimodal MRI images. IEEE Access 6:9543–9553. https://doi.org/10.1109/ACCESS.2018.2807698

Kadry S, Nam Y, Rauf HT, Rajinikanth V, Lawal I (2021) Automated detection of brain abnormality using deep-learning-scheme: a study. In: Seventh International conference on Bio Signals, Images, and Instrumentation (ICBSII), Chennai, India, 2021, pp 1–5. https://doi.org/10.1109/ICBSII51839.2021.9445122

Raut G, Raut A, Bhagade J, Bhagade J, Gavhane S (2020) Deep learning approach for brain tumor detection and segmentation. In: 2020 International Conference on Convergence to Digital World - Quo Vadis (ICCDW), Mumbai, India, pp 1–5. https://doi.org/10.1109/ICCDW45521.2020.9318681

Billah M, Waheed S, Rahman MM (2017) An automatic gastrointestinal polyp detection system in video endoscopy using fusion of color wavelet and convolutional neural network features. Int J Biomed Imaging 2017:Article ID 9545920, 9 pages. https://doi.org/10.1155/2017/9545920

Brain tumor. https://www.healthline.com/health/brain-tumor. Accessed 11 Apr 2021

Islam MN, Mahmud T, Khan NI, Mustafina SN, Islam AKMN (2021) Exploring machine learning algorithms to find the best features for predicting modes of childbirth. IEEE Access 9:1680–1692

Aishwarja A, Jahan N, Mushtary S, Tasnim Z, Imtiaz Khan N, Islam MN (2021) Exploring the machine learning algorithms to find the best features for predicting the breast cancer and its recurrence. In: Vasant P, Zelinka I, Weber GW (eds) Intelligent Computing and Optimization. ICO 2020. Advances in Intelligent Systems and Computing, vol 1324. Springer, Cham. https://doi.org/10.1007/978-3-030-68154-8_48

Islam MN, Islam AN (2020) A systematic review of the digital interventions for fighting COVID-19: the Bangladesh perspective. IEEE Access 8:114078–114087

Zaki T, Khan NI, Islam MN (2021) Evaluation of user's emotional experience through neurological and physiological measures in playing serious games. In: Proc. Int. Conf. Intell. Syst. Des. Appl. (ISDA). Springer, New York, pp 1039–1050

Rahman J, Ahmed KS, Khan NI, Islam K, Mangalathu S (2021) Data-driven shear strength prediction of steel fiber reinforced concrete beams using machine learning approach. Eng Struct 233:111743

Kaur P, Singh G, Kaur P (2020) Classification and validation of MRI brain tumor using optimised machine learning approach. In: Kumar A, Paprzycki M, Gunjan V (eds) ICDSMLA 2019. Lecture Notes in Electrical Engineering, vol 601. Springer, Singapore. https://doi.org/10.1007/978-981-15-1420-3_19

Tiwari A, Srivastava S, Pant M (2020) Brain tumor segmentation and classification from magnetic resonance images: review of selected methods from 2014 to 2019. Pattern Recognit Lett 131:244–260

Hollon TC, Pandian B, Adapa AR, Urias E, Save AV, Khalsa SSS et al (2020) Near real-time intraoperative brain tumor diagnosis using stimulated Raman histology and deep neural networks. Nat Med 26(1):52–58. https://doi.org/10.1038/s41591-019-0715-9

Sangeetha R, Mohanarathinam A, Aravindh G, Jayachitra S, Bhuvaneswari M (2020) Automatic detection of brain tumor using deep learning algorithms. In: 2020 4th International Conference on Electronics, Communication and Aerospace Technology (ICECA), Coimbatore, India, pp 1–4. https://doi.org/10.1109/ICECA49313.2020.9297536

Majib MS, Rahman MM, Sazzad TMS, Khan NI, Dey SK (2021) VGG-SCNet: a VGG net-based deep learning framework for brain tumor detection on MRI images. IEEE Access 9:116942–116952

Pashaei A, Sajedi H, Jazayeri N (2018) Brain tumor classification via convolutional neural network and extreme learning machines. In: Proceedings of the 2018 8th International Conference on Computer and Knowledge Engineering (ICCKE), Mashhad, Iran, 25–26 October 2018, pp 314–319

Badža MM, Barjaktarović MČ (2020) Classification of brain tumors from MRI images using a convolutional neural network. Appl Sci 10(6):1999. https://doi.org/10.3390/app10061999

Khan AH, Abbas S, Khan MA, Farooq U, Khan WA, Siddiqui SY, Ahmad A (2022) Intelligent model for brain tumor identification using deep learning. Appl Comput Intell Soft Comput 2022:8104054, 10 pages. https://doi.org/10.1155/2022/8104054

Nickparvar M (2021) Brain tumor MRI dataset. Kaggle. https://doi.org/10.34740/KAGGLE/DSV/2645886

Kasgari AB, Ghoushchi SJ, Anari S, Naseri M, Bendechache M (2021) Brain tumor segmentation based on deep learning and an attention mechanism using MRI multi-modalities brain images. Sci Rep 11(1):1–17

Kurup RV, Sowmya V, Soman KP (2019) Effect of data pre-processing on brain tumor classification using capsulenet. In: International conference on intelligent computing and communication technologies. Springer Science and Business Media LLC, Berlin, pp 110–119

Ahmad A, Akbar S, Khan S, Hayat M, Ali F, Ahmed A, Tahir M (2021) Deep-AntiFP: prediction of antifungal peptides using distanct multi-informative features incorporating with deep neural networks. Chemometr Intell Lab Syst 208:104214. https://doi.org/10.1016/j.chemolab.2020.104214

Ali F, Akbar S, Ghulam A, Maher ZA, Unar A, Talpur DB (2021) AFP-CMBPred: computational identification of antifreeze proteins by extending consensus sequences into multi-blocks evolutionary information. Comput Biol Med 139:105006. https://doi.org/10.1016/j.compbiomed.2021.105006

Akbar S, Khan S, Ali F, Hayat M, Qasim M, Gul S (2020) iHBP-DeepPSSM: Identifying hormone binding proteins using PsePSSM based evolutionary features and deep learning approach. Chemometr Intell Lab Syst 204:104103. https://doi.org/10.1016/j.chemolab.2020.104103

Akbar S, Hayat M, Tahir M, Khan S, Alarfaj FK (2022) cACP-DeepGram: classification of anticancer peptides via deep neural network and skip-gram-based word embedding model. Artif Intell Med 131:102349. https://doi.org/10.1016/j.artmed.2022.102349

Ethics declarations

Competing interests

Authors declare no conflict of interest.

About this article

Cite this article

Azhagiri, M., Rajesh, P. EAN: enhanced AlexNet deep learning model to detect brain tumor using magnetic resonance images. Multimed Tools Appl 83, 66925–66941 (2024). https://doi.org/10.1007/s11042-024-18143-w

DOI: https://doi.org/10.1007/s11042-024-18143-w