Abstract

This study aimed to evaluate the split-attention effect in multimedia learning environments via objective measurements such as EEG and eye-tracking. Two multimedia learning environments were designed, one in a focused (integrated) format and one in a split-attention (separated) format. An experimental design was used. The participants were 44 students divided into two groups, one for focused attention and one for split-attention. There were significant differences between the two groups in fixation, brain waves, and retention performance. Fixations of the split-attention group were higher than those of the focused attention group. A significant difference was found in the focused attention group in the alpha brain wave in the frontal region for intra-group comparisons and in the split-attention group in the beta brain wave in the frontal area for the inter-group comparison. The retention performance of the focused attention group was higher than that of the split-attention group. Accordingly, more cognitive activity emerged in environments where the text was not integrated into the picture. Additionally, narrating the text instead of printing it is effective for focusing attention. To prevent the emergence of a split-attention effect, the text should be integrated into the picture in designs. Due to the split-attention effect, the eye-tracking and EEG data differed between the groups.

1 Introduction

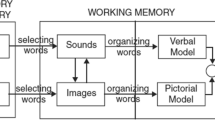

What is the contribution of integrating pictures with words and supporting them with audio in learning environments? Do students learn better in environments where pictures, words, and audio stimuli are presented alone than in environments where they are used together? Such questions facilitated the creation of the multimedia learning theory. A multimedia-based learning environment is defined as media that presents words (narration or text) and pictures (illustration, video, etc.) together [45, 61]. Applying multimedia design principles is recommended for transferring information to memory, rather than relying on unplanned presentations built from multiple sources [9, 15, 40]. These principles highlight the significance of using multiple sources, presenting them simultaneously, and integrating them [49, 56]. When multiple sources are not presented according to the principles of Multimedia Learning Theory, they can increase learners’ mental effort [61].

An extensive research base exists around human cognitive architectures, and instructional designers have tested various design methods to increase learning performance. It is important that they consider how learners process verbal and visual information when creating knowledge structures and use specific design principles to best facilitate learning. Some of these design methods aimed to reveal the principles regarding the spatial location of visual and verbal information on the screen. Diversifying the stimuli while presenting information to a learner is effective in attracting attention; however, multiple non-integrated stimuli might cause distraction [45, 61]. Various studies have described this as the split-attention effect [18, 22, 25]. More effective learning has been reported when picture and text are viewed in a physically integrated manner than when they are presented spatially separated [10, 46]. Although students obtain information from multi-source presentations, providing knowledge in an organized way leads to better comprehension. If the content is not presented following this theory, the attention of the learners is divided between the two tasks [67].

It is reported that it is sufficient to include text beneath a picture when they are presented together [42]. When the text is integrated into a picture, there is less mental effort [46] and higher learning performance [76]. Otherwise, when the split-attention effect occurs, the cognitive process becomes complicated since learners need to mentally integrate the various sources of information [10].

1.1 Split-attention effect

Studies show that learning from mutually referring text and pictures is more successful when the text is displayed near the corresponding area of the image than when the text and pictures are placed at spatially separate positions. This phenomenon is known as the split-attention effect [22] or the spatial contiguity effect [40]. When learners are taught to use a mental or physical integration strategy to learn from spatially separated text and images, their learning improves. Previous research looked at how the spatial gap between text and images affected the efficacy of mental and physical integration strategies.

In their research, Pouw et al. [58] investigated whether the split-attention effect is triggered by spatial separation per se. In Experiment 1, they found that having to integrate two pictographic stimuli at larger distances reduced efficiency on a secondary visual working memory task but did not result in slower integration. In Experiment 2, when participants had to integrate an image and written text, a greater gap resulted in slower integration of the stimuli but not in decreased performance on the secondary task. Experiment 3 revealed that displaying spatially separated (vs. integrated) textual and pictorial information resulted in fewer integrative eye movements. To summarize, they showed that greater distances between spatially separated information sources affect the process of learning; however, spatial separation on its own is probably neither the primary nor a sufficient mechanism for influencing learners’ performance.

Schroeder & Cenkci [62] investigated the impact of integrated designs through various moderator variables to better understand how integrated designs affect learning under different conditions. According to the studies they reviewed, incorporating the text into the picture affects learning efficiency and cognitive load.

Mutlu-Bayraktar & Bayram [50] used eye tracking to examine the effect of integrating texts into visuals and presenting them simultaneously on recall performance. Higher recall performance was reported in the multimedia learning environment where the texts were integrated into the pictures than in the environments where texts and pictures were provided separately.

As a common result of recent studies, it is recommended that written texts should be presented together with visuals and in an integrated manner. This study presents eye movements and brain wave analyses to report more precise data beyond those studies.

1.2 Eye-tracking measurements in multimedia learning

Eye movements provide details on the areas people attend to, the information they disregard, and what distracts them [59]. The fixation, duration, scanpath, and saccades on the screen are the basis of the measurements obtained from the eye-tracking technique. In multimedia learning environments, fixation is seen as an indicator of cognitive activity. A fixation is defined as looking at an object or area for a minimum of 100–200 ms. In other words, a fixation on an area is considered a sign that the learner is performing a cognitive activity on the information in that area [54, 55]. The use of eye movement measurements via this technology enables us to test assumptions about the areas where people commonly concentrate during text and picture integration [71].

There are mainly two approaches to analyze eye movements [6]. They include the analysis of data obtained from the previously determined areas of interest, and the analysis of gaze movements, respectively. In the analysis of areas of interest, the researcher deals with the fixation duration specific to these areas [57].

Eye-tracking makes it possible to obtain information about perceptual processing while learning and, combined with learning success indicators, provides information about cognitive processes [43]. Alemdag & Cagiltay [3] reviewed 54 papers on eye-tracking research in multimedia learning. Their results related eye movement measurements, together with multimedia content, multimedia learning principles, individual differences, emotions, and metacognition, to learning and cognitive processes. Lai et al. [36] performed a content analysis of 81 studies that utilized the eye-tracking technique. They categorized the studies on learning under seven themes: information processing patterns, examination of existing theories, effect of instructional strategies, individual differences, patterns of decision-making, impact of learning strategies, and conceptual development. As this categorization shows, eye-tracking is used in the literature for assessing individual differences and learning. Researchers have used eye-tracking as a tool to establish links between cognitive processes and learning outcomes.

The fixation duration during eye-tracking may be used to determine the cognitive activity and the processing depth. Some studies that investigated eye movements in the multimedia learning environment related fixation to difficulties in mental processing [16, 54, 55]. In addition, multimedia learning studies have utilized eye-tracking for investigating multimedia principles and effects [24, 31]. The most frequently investigated ones are spatial contiguity principles [40], multimedia principles [63], cueing-signaling effect [4], split-attention effect [22], redundancy effect [34], and seductive details effect [69].

Eye-tracking analysis provides important data for multimedia learning. It is used in testing many principles and in examining the split-attention effect [3]. This method, which yields important findings for the analysis of cognitive processes such as attention and perception, helps evaluate design principles in learning environments more accurately [65]. Participants’ own statements alone are insufficient to explain the cognitive processes. For this reason, the eye-tracking method is used in many studies that examine multimedia learning environments. In a study that tested split-attention with the eye-tracking method, it was reported that learners focused better on the content in environments where visuals and texts were integrated [11]. In this study, cognitive processes in multimedia environments were analyzed by the EEG method in addition to the eye-tracking method.

1.3 EEG measurements in multimedia learning

Electroencephalography (EEG) is a typical and cost-effective neuroimaging approach that uses electrodes and bio-amplifiers to measure electrical potentials over the scalp [23, 41]. The electrical potentials occur due to the activation of neurons in the brain during various physiological processes. The use of EEG offers a novel and promising opportunity for the field of educational neuroscience. EEG provides a means for continuous and real-time monitoring of cognitive activity during learning. Using EEG, it is possible to recognize subtle fluctuations in instantaneous load, which can help clarify the impact of educational interventions [8].

EEG has prospective value for estimating cognitive processes during multimedia learning because it has several benefits compared to subjective measures. Physiological signal measurement is sensitive to changes over time and can be obtained while learning occurs, rather than depending on a measurement of cognitive activity after learning is completed. The temporal resolution of EEG is high, which enables it to quantify changes in attention on the millisecond scale.

Furthermore, EEG signals consist of different frequency bands. The lowest frequency band is the delta band, which ranges from 0.5 Hz to 4 Hz. The other bands defined are the theta (4–7 Hz), alpha (7.5–13.5 Hz), beta (13.5–30 Hz), and gamma (over 30 Hz) bands [1, 13]. Each band reflects various important tasks in mental assessment [47].
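The band limits above can be written down as a small lookup table. The following is a minimal sketch, not part of the original study: the names `EEG_BANDS` and `band_of` are hypothetical, and the 70 Hz upper gamma edge is an assumption, since the text only states "over 30 Hz".

```python
# Band edges in Hz, copied from the text above.
# The 70 Hz gamma ceiling is an assumption (the text only says "over 30 Hz").
EEG_BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 7.0),
    "alpha": (7.5, 13.5),
    "beta": (13.5, 30.0),
    "gamma": (30.0, 70.0),
}


def band_of(frequency_hz):
    """Return the name of the band containing frequency_hz, or None."""
    for name, (low, high) in EEG_BANDS.items():
        if low <= frequency_hz < high:
            return name
    return None


print(band_of(10.0))
print(band_of(7.2))
```

With the limits as quoted, frequencies between 7 Hz and 7.5 Hz fall into no band, so the lookup returns `None` for them.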

There are numerous examples of how EEG is used to provide significant knowledge about the learning process, including in multimedia research. Antonenko & Niederhauser [7] used subjective measures and EEG signals to investigate learning in hypertext. They found that alpha, beta, and theta frequency bands were markedly lower when hypertext was used and that hypertext led to lower cognitive activity. In another study, Makransky et al. [40] used EEG to evaluate the cognitive process in multimedia learning with a three-dimensional (3D) version versus a desktop version of a virtual reality (VR) simulation. They indicated that the 3D VR version of the simulation induced lower learning performance but higher self-reported presence. Several specialists have suggested combining EEG-based metrics of cognitive load with metrics obtained from self-reported cognitive load, tests of retention and transfer [7, 37], and eye-tracking [48].

van Gog et al. [73] demonstrated that theta and alpha waves are of great significance in estimating cognitive processes. The properties of delta waves can be used to separate cognitive states. Another study investigated a diverse set of feature-extraction strategies for estimating cognitive activity and monitoring brainwaves during various cognitive tasks [70]. The power spectral densities of alpha waves are generally used to separate cognitive processes in various mental states. The feature-extraction strategies used in EEG include time, frequency, and time-frequency domain approaches [27]. The features are computed on the signals obtained from different parts of the scalp, and thus they also have a space dependency. The time-space features include approximate entropy and permutation entropy [26] in addition to non-linear features like the Hurst exponent [72], Hjorth parameters, Lyapunov exponent, and fractal dimension [74]. The frequency-space features depend on the signal power or energy of the total signal or the individual band. Power features consist of relative power, absolute power, and the ratio between absolute and relative power values [47].

EEG metrics of theta and alpha bands in parietal [20] and frontal [68] brain regions have been shown to correlate with cognitive processes. The results of many studies demonstrated that frontal theta increases and alpha decreases with higher cognitive activity [17, 21, 77]. Despite these findings, there are still unclear points concerning the use of EEG measurement for the assessment of split-attention.

The current study reports results that are important for multimedia design and strengthens the findings by combining eye movement and EEG data while considering cognitive features and design principles. As stated before, no reports are available that analyze the split-attention effect in multimedia learning via different learning principles and strategies using both EEG signals and eye-tracking. New interpretations of methods using EEG signals and other neuroimaging techniques are therefore needed. This study aims to propose an evaluation of split-attention in multimedia learning using objective measurements extracted from multichannel EEG signals and eye-tracking.

1.4 Research questions

This study aims to test the split-attention effect with objective measurements in terms of learning outcome and cognitive process. For this purpose, the following research questions (RQ) were determined.

1.4.1 RQ1. Retention

Some previous studies show that the split-attention effect impacts the learning outcome [22], but other studies report that it has no effect on the learning outcome [58]. Overall, the studies described above highlight the importance of examining integrated designs in order to understand the conditions in which they influence learning outcomes. Hence, retention was examined in this study.

RQ1. Do learners’ retention performances show differences depending on the use of multimedia in split or focused attention type?

1.4.2 RQ2. Fixation – RQ3. Scanpath

The eye-tracking method overcomes the limitations of self-report measurements and reveals cognitive activities in multimedia learning with objective measurements. Only a limited number of studies examining the split-attention effect have used the eye-tracking method [51, 75]. In this study, eye-tracking is used as an objective measurement to determine the effect of integrating text with images on cognitive activity, and the following research questions were formed.

RQ2. Do learners’ fixations show any significant differences depending on multimedia use in the split or focused attention type?

RQ2.1. Are there any significant differences between fixations on images and text where the text is integrated into the image and positioned separately?

RQ3. How is the participants’ scanpath on the scene?

1.4.3 RQ4. & RQ5. EEG Frequency Band Powers

In multimedia learning studies, neuroimaging measurements provide more detailed data to explain cognitive activity in the brain [37]. Although these measurements are used in many multimedia design studies, they have not been used before to test the split attention effect. In order to analyze the difference between the power bands measured in the resting state of the brain and the power bands measured after the learning process, RQ4 is determined. The RQ5 is tested to analyze the differences in brain signals to interpret the cognitive activities of learners while working in focused and split learning environments.

RQ4. Is there any statistical difference between the learners’ EEG frequency band powers in the resting-state and the last scene measurements?

RQ5. Do learners’ EEG frequency band powers show any significant differences depending on the use of multimedia in split or focused attention type?

RQ5.1. Do learners’ overall brain regions’ EEG frequency band powers show any significant differences depending on the use of multimedia in split or focused attention type?

RQ5.2. Do learners’ different brain regions’ EEG frequency band powers show any significant differences depending on the use of multimedia in split or focused attention type?

2 Method

In this research, an experimental design was used. In the experimental model, the participants were randomly assigned to experimental and control groups [14]. In this method, the effect of the independent variable on the dependent variable is investigated via the cause and effect relationship. In this study, retention scores, EEG frequency band (alpha, delta, beta, gamma) powers, and fixation were the dependent variables, and the type of multimedia environment was the independent variable. After the experiment, the results of the two groups were compared.

2.1 Study group

Overall, 44 undergraduate students from a public university volunteered to take part in the study. Their mean age was 20.8 (sd = 3.60), with a range of 19 to 34. There were 19 women and 25 men. The participants were divided into a focused attention multimedia (FAM) learning group and a split-attention multimedia (SAM) learning group. The mean age of the FAM group was 21.1 (sd = 3.80), and the mean age of the SAM group was 20.5 (sd = 3.50). In the FAM group, there were 10 women and 12 men; in the SAM group, there were 9 women and 13 men. Before the experiment, participants were informed about the content and procedure of the study. A consent form was given to the volunteer students. A pool of subjects was created from the participants who signed the consent form. Participants selected randomly from this pool were assigned to the groups.

2.2 Procedure

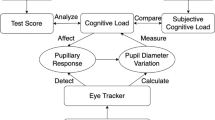

The experiments were held in a single session. First, a performance test with two open-ended questions was given to all participants to assess their prior knowledge about the parts of a car engine. These questions were “What are the parts of a car engine?” and “What are the functions of these parts?”. Three students with high scores in the pre-test were eliminated from the study without being included in the experimental process. The remaining students were randomly assigned to the focused attention multimedia (FAM) and split-attention multimedia (SAM) groups after it was determined that their prior knowledge was similar. Before the multimedia learning activities started, the wireless EEG cap with 16 electrodes and the eye-tracking device were fitted to and calibrated for each student. While students were studying in the FAM and the SAM learning environments, their eye movements and brain signals were recorded. Once the recording sessions were over, they completed the retention test with relevant tasks (see Fig. 1).

2.3 Instructional materials

Two multimedia learning environments, including image, video, text, and narration related to the car engine and its parts, were designed by the researchers. The FAM learning environment was designed considering the multimedia learning principles described in [44] and was configured with visual and audio resources that avoid any split-attention effects (Table 1). Videos and text were integrated in such a way that the text was presented as audio narration simultaneously with the related scene. Furthermore, signaling on the visual presentation was used to focus attention [18, 38, 53, 66] (see Fig. 2).

The Split-Attention Multimedia Learning Environment was designed to induce a split-attention effect: the car engine content was presented with video and text along with audio narration (see Fig. 3). In addition, the names of the concepts were not integrated into the image but added as text below it. Moreover, each concept was presented using two separate visuals, an image and a video, in the same scene. It becomes difficult for the learner to focus attention on the visuals, the text, and the spoken content at once; therefore, attention could be divided both visually and aurally [5, 12, 44, 64].

There were nine self-paced scenes in both learning environments. Because the scenes were self-paced, the time spent on each scene differed, though the average time per scene (135 s) was equal for the two groups. The scenes were in the same order in both multimedia environments.

2.4 Data collection materials

The data collection materials are described in this section. An EEG device was used to record brain signals, and an eye-tracking device was used to record eye movements. A retention test was used to determine the learning outcome.

2.4.1 EEG signal acquisition and processing

In this study, a wireless EEG amplifier (g.Nautilus, g.tec, Schiedlberg, Austria) with 16 dry electrodes (FP1, FP2, Fz, F3, F4, Cz, C3, C4, T7, T8, Pz, P4, P3, PO7, PO8, Oz), placed on the scalp according to the international 10–20 system using a flexible cap, was employed to obtain EEG signals during multimedia learning activities. The sampling frequency was set to 250 samples/s. In the bio-amplifier, the signals were bandpass filtered with cut-off frequencies of 0.5 and 70 Hz.

The frequency band power values of the EEG signals from the 16 electrodes were computed for the delta, alpha, beta, and gamma bands for each scene. For this purpose, the signals were bandpass-filtered using Butterworth filters with the cut-off frequencies of each band in turn, and the samples of each filtered signal were then squared and summed to obtain the band power value. Then, the electrodes corresponding to the frontal, parietal, temporal, central, and occipital scalp regions [2] were combined to obtain the region-based EEG band power features (see Fig. 4).
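The band power computation described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' implementation: the filter order is not given in the text, so each band-pass is approximated here by cascading a second-order Butterworth high-pass and low-pass section; the 70 Hz gamma ceiling is taken from the amplifier's pass band; the electrode-to-region grouping is inferred from the electrode names; and "combined" is assumed to mean averaging over the electrodes of a region. All function and variable names are hypothetical.

```python
import math

FS = 250.0  # sampling rate in samples/s, as stated in the text

# Band edges in Hz. The 70 Hz gamma ceiling is an assumption.
BANDS = {"delta": (0.5, 4.0), "alpha": (7.5, 13.5),
         "beta": (13.5, 30.0), "gamma": (30.0, 70.0)}

# Electrode-to-region grouping: an assumption based on the electrode names.
REGIONS = {
    "frontal": ["FP1", "FP2", "Fz", "F3", "F4"],
    "central": ["Cz", "C3", "C4"],
    "temporal": ["T7", "T8"],
    "parietal": ["Pz", "P3", "P4"],
    "occipital": ["PO7", "PO8", "Oz"],
}


def butterworth_biquad(kind, cutoff_hz, fs=FS):
    """Second-order Butterworth low-pass or high-pass coefficients (b, a)."""
    w0 = 2.0 * math.pi * cutoff_hz / fs
    cos_w0 = math.cos(w0)
    alpha = math.sin(w0) / math.sqrt(2.0)  # sin(w0) / (2Q) with Q = 1/sqrt(2)
    if kind == "low":
        b = [(1 - cos_w0) / 2, 1 - cos_w0, (1 - cos_w0) / 2]
    else:  # "high"
        b = [(1 + cos_w0) / 2, -(1 + cos_w0), (1 + cos_w0) / 2]
    a = [1 + alpha, -2 * cos_w0, 1 - alpha]
    return b, a


def apply_biquad(signal, b, a):
    """Filter a list of samples with one biquad section (direct form I)."""
    x1 = x2 = y1 = y2 = 0.0
    out = []
    for x0 in signal:
        y0 = (b[0] * x0 + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2) / a[0]
        out.append(y0)
        x2, x1 = x1, x0
        y2, y1 = y1, y0
    return out


def band_power(signal, low_hz, high_hz, fs=FS):
    """Band-pass the signal, then square and sum the filtered samples."""
    b, a = butterworth_biquad("high", low_hz, fs)
    filtered = apply_biquad(signal, b, a)
    b, a = butterworth_biquad("low", high_hz, fs)
    filtered = apply_biquad(filtered, b, a)
    return sum(v * v for v in filtered)


def region_band_powers(recording):
    """recording maps electrode name -> list of samples for one scene."""
    result = {}
    for region, electrodes in REGIONS.items():
        present = [e for e in electrodes if e in recording]
        if not present:
            continue
        result[region] = {
            band: sum(band_power(recording[e], lo, hi) for e in present) / len(present)
            for band, (lo, hi) in BANDS.items()
        }
    return result


# Synthetic check: a 2 s, 10 Hz sine should carry most of its power in alpha.
sine = [math.sin(2 * math.pi * 10.0 * n / FS) for n in range(500)]
powers = {band: band_power(sine, lo, hi) for band, (lo, hi) in BANDS.items()}
print(max(powers, key=powers.get))
```

In practice a higher-order filter from a signal-processing library would give sharper band edges; the cascade here only keeps the sketch free of external dependencies.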

Note that the EEG recordings of 4 participants were removed from the study due to signal acquisition problems and highly corrupted signals; thus, the recordings of 40 participants were analyzed. The problematic recordings belonged to 2 participants from each group.

2.4.2 Eye-tracking data analysis

Mobile eye-tracking glasses (SensoMotoric Instruments, Teltow, Germany) were used in this study to acquire eye movement data (Fig. 4). They were wirelessly connected to the observer computer or a mobile phone. The BeGaze software (version 2.4) was used to analyze the eye-tracking data. Fixation points, corresponding to the objects or areas on the screen at which a participant gazed with a dispersion of 2 degrees for a minimum of 100–200 ms, were tracked and recorded. From the fixation point analysis, heat maps were obtained that display colors from red to blue on the screen according to gaze duration and number [29]. Each heat map covered the eye movements between the starting time of a particular scene and the instant the participant moved to the next scene. The areas with the highest and the lowest focus were colored red and blue, respectively. Examples of heat maps from our study are shown in Figs. 5, 6, 7, 8, 9, 10. The fixations on the screen for each scene were obtained for the two multimedia learning environments and statistically compared. The other analysis concerned the scanpath followed by the learners’ eyes when studying the multimedia content. In this study, the scanpath data of the students were presented as video (see Appendix 1 and 2).
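To illustrate how a dispersion limit and a minimum duration together define a fixation, the sketch below implements a generic dispersion-threshold identification (I-DT) procedure. It is not a description of BeGaze's internal algorithm, which the text does not specify; the function names, the sample format `(time_ms, x_deg, y_deg)`, and the use of the lower 100 ms bound are assumptions made for the example.

```python
def dispersion(window):
    """Horizontal plus vertical extent of the gaze samples in the window."""
    xs = [s[1] for s in window]
    ys = [s[2] for s in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples, max_dispersion_deg=2.0, min_duration_ms=100):
    """Group gaze samples into fixations.

    samples: list of (time_ms, x_deg, y_deg) tuples sorted by time.
    Returns a list of (start_ms, duration_ms, mean_x, mean_y) tuples.
    """
    fixations = []
    i, n = 0, len(samples)
    while i < n:
        # Grow the window until it spans at least the minimum duration.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration_ms:
            j += 1
        if j >= n:
            break
        if dispersion(samples[i:j + 1]) <= max_dispersion_deg:
            # Extend the window while the gaze stays within the limit.
            while j + 1 < n and dispersion(samples[i:j + 2]) <= max_dispersion_deg:
                j += 1
            window = samples[i:j + 1]
            start = window[0][0]
            duration = window[-1][0] - start
            mean_x = sum(s[1] for s in window) / len(window)
            mean_y = sum(s[2] for s in window) / len(window)
            fixations.append((start, duration, mean_x, mean_y))
            i = j + 1
        else:
            i += 1
    return fixations


# Synthetic gaze: 50 samples at one point, then 50 samples at another.
gaze = [(4 * k, 0.0, 0.0) for k in range(50)]
gaze += [(200 + 4 * k, 10.0, 10.0) for k in range(50)]
for fixation in detect_fixations(gaze):
    print(fixation)
```

Counting the returned tuples per scene, or summing their durations inside an area of interest, gives the kind of fixation measures compared between the groups.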

2.4.3 Retention test

The retention performance test for the multimedia learning environment on car engine parts included the following 5 open-ended questions. It was administered to all participants as a paper-and-pencil test to evaluate their retention performance.

1. Write down the parts of the engine.

2. Which part controls the flow of the fuel-air mixture entering the cylinder?

3. What is the part that moves back and forth in the cylinder, is cylindrical or disc-shaped, and ensures air compression?

4. What is the part shown in the figure below that ignites the fuel-air mixture?

5. Write down the names of the parts indicated by the arrows in the following figure showing the engine parts.

The participants were also expected to remember the names of engine parts depicted on the picture included in the test. Each correct answer was worth 20 points (partial credit was possible), and the test score out of 100 for each participant was recorded.
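The scoring rule can be expressed in a few lines. This is a minimal sketch: the function name is hypothetical, and representing partial credit as a fraction between 0 and 1 per question is an assumption, since the text does not say how partial credit was graded.

```python
POINTS_PER_QUESTION = 20  # five questions, 100 points in total


def retention_score(credit_fractions):
    """Return the test score out of 100.

    credit_fractions: one value in [0, 1] per question (1 = fully correct).
    """
    if len(credit_fractions) != 5:
        raise ValueError("expected one credit value for each of the 5 questions")
    if any(not 0 <= c <= 1 for c in credit_fractions):
        raise ValueError("credit values must lie between 0 and 1")
    return sum(POINTS_PER_QUESTION * c for c in credit_fractions)


# Hypothetical participant: three full answers, one half answer, one miss.
print(retention_score([1, 1, 0.5, 0, 1]))
```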

2.5 Statistical data analysis

SPSS 21.0 was used to analyze the research questions. Fixation and heat map analyses were performed for each scene with the eye-tracking method. Similarly, EEG frequency band powers were obtained for different brain regions (frontal, parietal, temporal, central, occipital) for each scene. The Shapiro-Wilk normality test was performed on the data; the EEG frequency band powers did not exhibit a normal distribution, whereas retention performance and fixation did.

The Mann-Whitney-U Test, one of the non-parametric tests, was used to analyze whether there was a difference between the different brain regions of the groups. Retention performance and fixations of learners who studied in two different multimedia learning environments were compared with the Independent Sample t-Test. Also, in these two environments, the participants’ fixations on pictures and texts were analyzed with the Independent Sample t-Test. Effect sizes (Cohen’s d) were coded on the basis of Cohen [19] as follows: negligible <0.1, small ~0.2, medium ~0.5, large ~0.8 and larger. The Wilcoxon Signed Ranks test was used to compare the resting-state and last scene measurements changes in the EEG frequency band powers.
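For readers who want to see what the between-group statistics compute, the sketch below gives textbook formulas for the pooled-variance t statistic, Cohen's d, and the Mann-Whitney U statistic. The study itself used SPSS; this code is only an illustration, the function names are hypothetical, the example numbers are made up, and p-values are deliberately not computed.

```python
import math
from statistics import mean, variance


def pooled_sd(a, b):
    """Pooled standard deviation of two independent samples."""
    n1, n2 = len(a), len(b)
    return math.sqrt(((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2))


def cohens_d(a, b):
    """Standardized mean difference between two groups."""
    return (mean(a) - mean(b)) / pooled_sd(a, b)


def student_t(a, b):
    """Return (t, degrees of freedom) for an equal-variance t-test."""
    n1, n2 = len(a), len(b)
    t = (mean(a) - mean(b)) / (pooled_sd(a, b) * math.sqrt(1 / n1 + 1 / n2))
    return t, n1 + n2 - 2


def average_ranks(values):
    """Rank values from 1, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        for k in range(pos, end + 1):
            ranks[order[k]] = (pos + end) / 2 + 1
        pos = end + 1
    return ranks


def mann_whitney_u(a, b):
    """Return the smaller of the two U statistics."""
    ranks = average_ranks(list(a) + list(b))
    u1 = sum(ranks[:len(a)]) - len(a) * (len(a) + 1) / 2
    u2 = len(a) * len(b) - u1
    return min(u1, u2)


# Made-up scores for two small groups (not data from the study).
group_a = [2, 4, 6]
group_b = [1, 3, 5]
print(cohens_d(group_a, group_b))
print(student_t(group_a, group_b))
print(mann_whitney_u(group_a, group_b))
```

The t statistic and Cohen's d share the same pooled standard deviation, which is why a given t value and group sizes determine d for an independent-samples design.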

3 Results

RQ1. Retention Performance

The Independent Sample t-Test showed that the retention performance of learners differed significantly between the split and focused attention multimedia environments (p < 0.05, t(41) = 8.672, Table 2). The difference across experimental conditions was statistically significant (p = 0.00) with a medium effect size (d = 0.794, [19]). The retention performance of the FAM group (M = 74.45) was higher than that of the SAM group (M = 34.41, see Table 2).

RQ2. Fixation

In the second research question, the difference between fixations in the two environments was investigated. Fixations on the same scenes for both groups were analyzed with the Independent Sample t-Test. Fixation was higher in the SAM group than in the FAM group (p < 0.05, Table 2).

RQ2.1. Fixation on the Images and the Text

To focus students’ attention, it has been recommended that presented texts be integrated into the picture. In this study, texts were inserted into the pictures in the focused attention multimedia learning environment and presented separately in the split-attention multimedia learning environment. In these two environments, the participants’ fixations on pictures and texts were analyzed. The focused attention group focused more on pictures, while the split-attention group focused more on texts. There was a significant difference between the two groups’ fixations on the pictures, with a medium effect size (p < 0.05, d = 0.78, Table 3). The fixations of the FAM group on the images (M = 3420.4) were higher than those of the SAM group (M = 2717.8). There was also a significant difference between the groups’ fixations on the texts (p < 0.05), with a medium effect size (d = 0.53). In contrast to the images, the SAM group focused more on texts than the FAM group (see Table 3).

RQ3. Scanpath

In the third research question, the eye-tracking data of the students were analyzed, and their scanpaths were examined. Participants first looked at the visual content. The scanpaths of the SAM group covered much more of the scene than those of the FAM group. In the SAM group, the scanpath was scattered over both images and texts. In the FAM group, where the texts were integrated into the picture, the scanpath stayed within one area. Furthermore, the SAM group had more saccades (see Appendix 1 and 2).

RQ4. EEG Frequency Band Powers in the Resting-State and the Last Scene Measurements

Before analyzing the data of the participants, the difference between resting-state measurements was analyzed with the Mann-Whitney U test. There was no significant difference in resting-state measurements of the FAM and the SAM groups (p > 0.05, Table 4).

There were substantial differences when comparing EEG frequency band powers in the resting-state and the last scene measurements. For delta waves, there was a statistical difference in the temporal and parietal lobes in the SAM group (Table 7), whereas in the FAM group the difference appeared in the central, parietal, and occipital lobes (Table 5). In the SAM group, a high effect size (d = 0.72) was found in the parietal and occipital lobes. In the FAM group, the effect size was high in the central lobe (d = 0.81), parietal lobe (d = 0.73), and occipital lobe (d = 0.81). The alpha frequency band showed a statistical difference in all brain regions except the frontal lobe in the SAM group and in all brain regions in the FAM group. Lastly, beta brainwaves showed similar results in the SAM and the FAM groups, with statistically significant differences in the temporal, parietal, and occipital lobes.

RQ5. EEG Frequency Band Powers

Two sub-research questions were analyzed to answer this research question.

RQ5.1. Overall EEG frequency band powers

In the fifth research question, which evaluated the learners’ cognitive process with EEG signals, the difference between the EEG frequency band powers of the two groups was examined. There was no significant difference between the groups’ frequency band powers over the whole learning process (p > 0.05, see Table 6).

RQ5.2. Brain Regions’ EEG Frequency Band Powers

In this research question, the alpha, beta, and delta frequency band powers of the two groups were compared across brain regions: frontal (F), central (C), temporal (T), and parietal (P). The frequency bands obtained separately for each scene were analyzed with the Mann-Whitney U test. There were significant differences between the beta frequency bands of the FAM and the SAM groups in the third, fifth, and last scenes (p < 0.05, see Tables 7, 8, and 9). The frontal lobe signals of the SAM group were higher than those of the FAM group. Note that theta and gamma frequency band values were excluded from the tables, since there was no statistically significant difference between the groups for them.
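To make the notion of frequency band power concrete, the sketch below sums the periodogram power of a single channel inside each band using a plain discrete Fourier transform. It is an illustration only: the band edges in `BANDS` are conventional values assumed here, the sampling rate and the synthetic signal are hypothetical, and the preprocessing actually used in the study is not reproduced.

```python
import cmath
import math

# Conventional band edges in Hz; assumed for illustration, not taken from the study.
BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
}


def band_powers(signal, sampling_rate):
    """Sum the one-sided periodogram power that falls inside each band."""
    n = len(signal)
    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2):  # skip the DC bin and stay below Nyquist
        freq = k * sampling_rate / n
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal)
        )
        power = 2 * abs(coeff) ** 2 / n ** 2  # doubled to account for negative frequencies
        for name, (low, high) in BANDS.items():
            if low <= freq < high:
                powers[name] += power
    return powers


# Synthetic one-second "channel": a 10 Hz sine plus a weaker 20 Hz sine.
RATE = 128
synthetic = [
    math.sin(2 * math.pi * 10 * t / RATE) + 0.5 * math.sin(2 * math.pi * 20 * t / RATE)
    for t in range(RATE)
]
example_powers = band_powers(synthetic, RATE)
print({name: round(value, 3) for name, value in example_powers.items()})
```

In practice, band powers computed per participant, region, and scene in this way would be the values that a two-group test such as the Mann-Whitney U test compares.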

In both environments, the learners stayed on the scenes for the same duration on average. The heatmaps of the eye movements in these scenes showed that attention was focused on the integrated objects in the focused attention group, whereas in the split-attention group attention was divided between the separately presented pictures and texts (see Figs. 5, 6, 7, 8, 9, 10). The heatmaps, which show the fixations by area of the scene, give the average number of fixations. In the split-attention multimedia learning environment, the fixations were distributed over many items.

4 Conclusion

Numerous studies have investigated the cognitive process under the split-attention effect [18, 32] and its learning outcomes [5, 25]. These studies mainly report subjective measurement findings, in which the participants express themselves via self-assessment reports. In recent years, eye-tracking [60] has been used as an objective measurement tool in split-attention effect studies. In this study, the effects of split-attention on cognitive activity were evaluated via objective measurements using eye-tracking and EEG methods. Two separate learning environments were designed in accordance with multimedia learning principles: one that can reveal the split-attention effect (split) and one that avoids it (focused). During the experiment, the eye movements and EEG signals of the participants were recorded. In addition, the impact of the split-attention effect on learning outcomes was investigated.

There was a significant difference, with a medium effect size, between the retention scores and fixations of the FAM and the SAM groups. The FAM group had higher retention scores, while fixations were higher in the SAM group than in the FAM group. According to the eye movements, both reading the text and viewing the pictures while watching the video caused attention to be divided. The focused attention group fixated more on pictures, while the split-attention group fixated more on texts. The SAM group’s scanpaths covered far more of the scene than those of the FAM group, and the SAM group also made more saccades.

In the EEG analyses, there were substantial differences in frequency band powers between the resting-state and the last scene measurements. For delta waves, there was a difference in the temporal and parietal lobes in the SAM group, and in the central, parietal, and occipital lobes in the FAM group. Furthermore, in the SAM group the alpha frequency band showed a statistical difference in all brain regions except the frontal lobe, whereas in the FAM group it showed a statistical difference in all brain regions. Finally, the results for beta brainwaves in the SAM and FAM groups were similar to each other, with statistical significance in the temporal, parietal, and occipital lobes. The SAM group’s frontal lobe signals were stronger than those of the FAM group.

5 Discussion

Several researchers have examined the learning outcomes and the cognitive process associated with the split-attention effect [5, 11, 25]. The present study aimed to evaluate the split-attention effect in multimedia learning environments via objective measurements, namely EEG and eye-tracking. The subjective measurement findings in this study were in line with previous studies [11]. There was a significant difference between the retention test scores of the FAM and the SAM groups, with the FAM group scoring higher. When learners follow instruction presented through multiple sources, providing the information in an integrated manner enables them to comprehend it better. According to this principle, information that is not integrated causes the learner’s attention to be divided between two different tasks [67]. According to the eye movements, both reading the text and viewing the pictures while watching the video caused attention to be divided. In multimedia learning, the limitations of self-report-based assessment can be overcome by using eye-tracking technology to explore cognitive processes. Eye movement measurements obtained with this technology allow assumptions about the areas that people look at during text and image integration to be tested [71]. In this study, learners were able to focus more on the content in the MLE with the focused attention format than in the one with the split-attention format. Similarly, previous split-attention effect studies reported differences in fixation duration values and long fixation times [30, 52]. Looking at the fixation durations of the groups, the FAM group focused more on images, while the SAM group focused more on the text. Differences in the heat maps obtained from the eye-tracking data also supported this finding. In the scenes with visual and verbal representation, the SAM group’s attention was distributed across all sources, and it became difficult to focus. This resulted in both low retention values and high mental effort.

The EEG findings, one of the objective measures of cognitive activity, also partially supported this. In more detail, EEG frequency band powers in the resting-state and the last scene measurements were compared. In the intra-group comparison, considering Tables 7 and 8, the following points were noted. Alpha waves are known to be characteristic of the brain’s resting state. Hence, it is quite reasonable to find a large amount of alpha activity in the resting-state measurement. In both the SAM and the FAM groups, a difference in alpha waves was observed in every brain region, apart from the frontal lobe in the SAM group. This may reflect the FAM group’s use of the attention functions of the frontal lobe.

In the inter-group comparison, the frontal lobe frequency band power values in the beta band were higher in the SAM group than in the FAM group in the third, fifth, and ninth scenes, which were prepared in the split-attention format. The first question, then, is which multimedia features were placed in these scenes and how cognitive activity came to show a statistically significant difference in the SAM group. In all three scenes, the names of the car engine parts were signaled and integrated into the image or video in the FAM environment. In the SAM environment, the text was given separately from the image. According to the split-attention effect, learning is more effective when picture and text are physically integrated than when they are presented spatially separated [18, 25].

The beta band power of the frontal region channels is also considered an index of CL. Kumaar et al. [33] have stated that CL can be assessed by alpha and beta band oscillations in the temporal region [35], frontal region, and fronto-central region of the cerebral cortex. Many studies demonstrate that mental workload rises with an escalation in alpha band power [8, 28]. This study indicates that notable changes in beta band power occurred only in the frontal lobe when the split-attention effect was present in the watched slides.

In that case, why might the beta wave be dominant in the SAM group in the scenes mentioned above? Beta waves are associated with problem-solving, focused mental activity, attention, decision making, alertness, higher mental endurance, and judgment. At this point, the following conclusion may be drawn: beta activity reflected higher mental endurance during the SAM group’s third, fifth, and ninth scene measurements, when the frontal lobe had difficulty with attention. Therefore, it is reasonable for the beta brain wave to differ in the frontal brain region in those three scenes.

How, then, can this be evaluated in terms of the resting-state and last scene measurements? In the intra-group comparison of resting-state and last scene measurements, the difference in the frontal lobe appeared in the alpha brain signal in the FAM group. Like beta waves, alpha waves are also involved in problem-solving and creative learning [39], although the underlying rationale differs from that of beta waves.

Multimedia learning studies that use EEG signals have typically interpreted brain signals in terms of an increase or decrease in frequency bands. In our study, statistical tests were used to show whether the results obtained for the different brain signals were significant. Although differences in the theta frequency band have been reported in the literature, as stated in Section 1.4, we did not find such a difference. Despite these results, there are still open questions regarding the use of EEG analysis for cognitive activity assessment.

6 Suggestions

Some suggestions for future studies may be presented.

- It is suggested to interpret the relationship between learners and the design by measuring the learners’ cognitive properties.

- The use of current machine learning and advanced signal processing methods in the EEG field can also facilitate the interpretation of results.

- It is recommended to analyze the redundancy effect together with the split-attention effect, as the two principles are closely related, in order to strengthen the results of the study.

- As suggestions for instructional designers: in designs where text and pictures are used together, the texts should be positioned close to the pictures. Texts can be presented as narration instead of printed text. The names of the parts of a picture should be integrated directly into the corresponding part. It has been observed that there is less focus on visuals in environments where texts are presented as both written text and narration.

7 Limitations

The limitations of this study are important for informing future studies. EEG and eye-tracking experiments are laborious, and finding volunteers to participate is particularly challenging. The results of this study are limited to the accessible study group and the designed multimedia environments. In our study, eye-tracking glasses were used instead of desktop sensors. Eye-tracking glasses are less sensitive in terms of calibration than desktop devices. These limitations were taken into account in the analysis of the data. This study was conducted with the available number of participants; future studies would benefit from larger samples.

References

Abdul Kadir RSS, Rashid AHY, Abdul Rahman H, Taib MN, Murat ZH, Lias S (2009) Analysis of correlation between body mass index (BMI) and brain wave using EEG for Alpha and Beta frequency band. In: 2009 5th International Colloquium on Signal Processing Its Applications, pp 278–283

Adcock JE, Panayiotopoulos CP (2012) Occipital Lobe Seizures and Epilepsies. J Clin Neurophysiol 29(5):397–407

Alemdag E, Cagiltay K (2018) A systematic review of eye tracking research on multimedia learning. Comput Educ 125:413–428. https://doi.org/10.1016/j.compedu.2018.06.023

Alpizar D, Adesope OO, Wong RM (2020) A meta-analysis of signaling principle in multimedia learning environments. Educ Technol Res Dev 68(5):2095–2119. https://doi.org/10.1007/s11423-020-09748-7

Al-Shehri S, Gitsaki C (2010) Online reading: A preliminary study of the impact of integrated and split-attention formats on L2 students’ cognitive load. ReCALL 22(3):356–375. https://doi.org/10.1017/S0958344010000212

Andrienko G, Andrienko N, Burch M, Weiskopf D (2012) Visual Analytics Methodology for Eye Movement Studies. IEEE Journals & Magazine 18(12):2889–2898

Antonenko PD, Niederhauser DS (2010) The influence of leads on cognitive load and learning in a hypertext environment. Comput Hum Behav 26(2):140–150. https://doi.org/10.1016/j.chb.2009.10.014

Antonenko P, Paas F, Grabner R, van Gog T (2010) Using electroencephalography to measure cognitive load. Educ Psychol Rev 22(4):425–438. https://doi.org/10.1007/s10648-010-9130-y

Arslan-Ari I (2018) Learning from instructional animations: how does prior knowledge mediate the effect of visual cues? J Comput Assist Learn 34(2):140–149. https://doi.org/10.1111/jcal.12222

Ayres P, Sweller J (2005) The split-attention principle in multimedia learning. In: The Cambridge handbook of multimedia learning, pp 135–146

Bayram S, Bayraktar DM (2012) Using eye tracking to study on attention and recall in multimedia learning environments: the effects of design in learning. World Journal on Educational Technology 4(2):81–98

Bohec OL, Jamet E (2008) Levels of verbal redundancy, note-taking and multimedia learning. In: Rouet J-F, Lowe R, Schnotz W (eds) Understanding multimedia documents. Springer US, pp 79–101. https://doi.org/10.1007/978-0-387-73337-1_5

Calomeni MR, Furtado da Silva V, Velasques BB, Feijó OG, Bittencourt JM, Ribeiro de Souza e Silva AP (2017) Modulatory effect of Association of Brain Stimulation by light and binaural beats in specific brain waves. Clinical Practice and Epidemiology in Mental Health: CP & EMH 13:134–144. https://doi.org/10.2174/1745017901713010134

Campbell DT, Stanley JC (2015) Experimental and quasi-experimental designs for research. Ravenio Books

Chen C-Y, Yen P-R (2019) Learner control, segmenting, and modality effects in animated demonstrations used as the before-class instructions in the flipped classroom. Interact Learn Environ 29:1–15. https://doi.org/10.1080/10494820.2019.1572627

Chen S-C, Hsiao M-S, She H-C (2015) The effects of static versus dynamic 3D representations on 10th grade students’ atomic orbital mental model construction: evidence from eye movement behaviors. Comput Hum Behav 53:169–180. https://doi.org/10.1016/j.chb.2015.07.003

Chik A (2013) Becoming English teachers in China: identities at the crossroads. The European Journal of Applied Linguistics and TEFL 2(1):135–147

Cierniak G, Scheiter K, Gerjets P (2009) Explaining the split-attention effect: is the reduction of extraneous cognitive load accompanied by an increase in germane cognitive load? Comput Hum Behav 25(2):315–324. https://doi.org/10.1016/j.chb.2008.12.020

Cohen J (1988) Statistical power analysis for the behavioral sciences, Second edn. Lawrence Erlbaum Associates, Publishers, Hillsdale, NJ

Culham JC, Valyear KF (2006) Human parietal cortex in action. Curr Opin Neurobiol 16(2):205–212. https://doi.org/10.1016/j.conb.2006.03.005

Dan A, Reiner M (2017) Real time EEG based measurements of cognitive load indicates mental states during learning. 9:31–44

de Koning BB, Rop G, Paas F (2020) Learning from split-attention materials: effects of teaching physical and mental learning strategies. Contemp Educ Psychol 61:101873. https://doi.org/10.1016/j.cedpsych.2020.101873

Delorme A, Makeig S (2004) EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J Neurosci Methods 134(1):9–21. https://doi.org/10.1016/j.jneumeth.2003.10.009

Fiorella L, Mayer RE (2014) Role of expectations and explanations in learning by teaching. Contemp Educ Psychol 39(2):75–85. https://doi.org/10.1016/j.cedpsych.2014.01.001

Florax M, Ploetzner R (2010) What contributes to the split-attention effect? The role of text segmentation, picture labelling, and spatial proximity. Learn Instr 20(3):216–224. https://doi.org/10.1016/j.learninstruc.2009.02.021

Gandhi T, Panigrahi BK, Anand S (2011) A comparative study of wavelet families for EEG signal classification. Neurocomputing 74(17):3051–3057. https://doi.org/10.1016/j.neucom.2011.04.029

Guo L, Rivero D, Dorado J, Rabuñal JR, Pazos A (2010) Automatic epileptic seizure detection in EEGs based on line length feature and artificial neural networks. J Neurosci Methods 191(1):101–109. https://doi.org/10.1016/j.jneumeth.2010.05.020

Huang R-S, Jung T-P, Makeig S (2009) Tonic changes in EEG power spectra during simulated driving. In: Schmorrow DD, Estabrooke IV, Grootjen M (eds) Foundations of augmented cognition. Neuroergonomics and operational neuroscience. Springer, pp 394–403. https://doi.org/10.1007/978-3-642-02812-0_47

Jacob RJK, Karn KS (2003) Commentary on section 4 - eye tracking in human-computer interaction and usability research: ready to deliver the promises. In: Hyönä J, Radach R, Deubel H (eds) The mind’s eye. North-Holland, pp 573–605. https://doi.org/10.1016/B978-044451020-4/50031-1

Jarodzka H, Janssen N, Kirschner PA (2015) Avoiding split attention in computer-based testing. Is neglecting additional information facilitative? 46(4):803–817

Johnson CI, Mayer RE (2012) An eye movement analysis of the spatial contiguity effect in multimedia learning. J Exp Psychol Appl 18(2):178–191

Kalyuga S, Chander P, Sweller J (1999) Managing split-attention and redundancy in multimedia instruction. 13(4):351–371

Kumaar MA, Kumar GA, Shyni SM (2016) Advanced traffic light control system using barrier gate and GSM. In: 2016 international conference on computation of power, energy information and Commuincation (ICCPEIC), pp 291–294. https://doi.org/10.1109/ICCPEIC.2016.7557213

Kutbay E, Akpinar Y (2020) Investigating modality, redundancy and signaling principles with abstract and concrete representation. International Journal of Education in Mathematics, Science and Technology 8(2):131–145

Lah S, Smith ML (2014) Semantic and episodic memory in children with temporal lobe epilepsy: do they relate to literacy skills? Neuropsychology 28(1):113–122

Lai M-L, Tsai M-J, Yang F-Y, Hsu C-Y, Liu T-C, Lee SW-Y, Lee M-H, Chiou G-L, Liang J-C, Tsai C-C (2013) A review of using eye-tracking technology in exploring learning from 2000 to 2012. Educ Res Rev 10:90–115. https://doi.org/10.1016/j.edurev.2013.10.001

Lee H (2014) Measuring cognitive load with electroencephalography and self-report: focus on the effect of English-medium learning for Korean students. Educ Psychol 34(7):838–848. https://doi.org/10.1080/01443410.2013.860217

Liu T-C, Lin Y-C, Tsai M-J, Paas F (2012) Split-attention and redundancy effects on mobile learning in physical environments. Comput Educ 58(1):172–180. https://doi.org/10.1016/j.compedu.2011.08.007

Lustenberger C, Boyle MR, Foulser AA, Mellin JM, Fröhlich F (2015) Functional role of frontal alpha oscillations in creativity. Cortex 67:74–82. https://doi.org/10.1016/j.cortex.2015.03.012

Makransky G, Terkildsen TS, Mayer RE (2019) Role of subjective and objective measures of cognitive processing during learning in explaining the spatial contiguity effect. Learn Instr 61:23–34. https://doi.org/10.1016/j.learninstruc.2018.12.001

Marzbani H, Marateb HR, Mansourian M (2016) Neurofeedback: A comprehensive review on system design, methodology and clinical applications. Basic and Clinical Neuroscience 7(2):143–158. https://doi.org/10.15412/J.BCN.03070208

Mayer RE (1997) Multimedia learning: are we asking the right questions? Educ Psychol 32(1):1–19. https://doi.org/10.1207/s15326985ep3201_1

Mayer RE (2010) Unique contributions of eye-tracking research to the study of learning with graphics. Learn Instr 20(2):167–171. https://doi.org/10.1016/j.learninstruc.2009.02.012

Mayer RE (2014) Multimedia instruction. In: Spector JM, Merrill MD, Elen J, Bishop MJ (eds) Handbook of research on educational communications and technology. Springer, pp 385–399. https://doi.org/10.1007/978-1-4614-3185-5_31

Mayer RE, Fiorella L (2014) 12 principles for reducing extraneous processing in multimedia learning: coherence, signaling, redundancy, spatial contiguity, and temporal contiguity principles. In: The Cambridge handbook of multimedia learning (p. 279). Cambridge University Press

Mayer RE, Moreno R (1998) A split-attention effect in multimedia learning: evidence for dual processing systems in working memory. J Educ Psychol 90(2):312–320. https://doi.org/10.1037/0022-0663.90.2.312

Mazher M, Aziz AA, Malik AS, Amin HU (2017) An EEG-based cognitive load assessment in multimedia learning using feature extraction and partial directed coherence. 5:14819–14829

Mills C, Fridman I, Soussou W, Waghray D, Olney AM, D'Mello SK (2017) Put your thinking cap on: detecting cognitive load using EEG during learning. In: Proceedings of the seventh international learning analytics & knowledge conference, pp 80–89

Moreno R, Park B (2010) Cognitive load theory: historical development and relation to other theories. In: Cognitive load theory, Cambridge University Press, pp 9–28. https://doi.org/10.1017/CBO9780511844744.003

Mutlu-Bayraktar D, Bayram S (2018) Evaluation of situations causing Split of attention in multimedia learning environments via eye-tracking method. In: Digital multimedia: concepts, methodologies, tools, and applications. IGI Global. https://doi.org/10.4018/978-1-5225-3822-6.ch018

Mutlu-Bayraktar D, Cosgun V, Altan T (2019) Cognitive load in multimedia learning environments: A systematic review. Computers & Education 141:103618

Mutlu-Bayraktar D, Ozel P, Altindis F, Yilmaz B (2020) Relationship between objective and subjective cognitive load measurements in multimedia learning. Interact Learn Environ:1–13

Ozcelik E, Arslan-Ari I, Cagiltay K (2010) Why does signaling enhance multimedia learning? Evidence from eye movements. Comput Hum Behav 26(1):110–117. https://doi.org/10.1016/j.chb.2009.09.001

Park B, Knörzer L, Plass JL, Brünken R (2015a) Emotional design and positive emotions in multimedia learning: an eyetracking study on the use of anthropomorphisms. Comput Educ 86:30–42. https://doi.org/10.1016/j.compedu.2015.02.016

Park B, Korbach A, Brünken R (2015b) Do learner characteristics moderate the seductive-details-effect? A cognitive-load-study using eye-tracking. Journal of Educational Technology & Society 18(4):24–36

Plass JL, Heidig S, Hayward EO, Homer BD, Um E (2014) Emotional design in multimedia learning: effects of shape and color on affect and learning. Learn Instr 29:128–140. https://doi.org/10.1016/j.learninstruc.2013.02.006

Poole A, Linden B, Phillips P (2007) In search of salience: A response-time and eye-movement analysis of bookmark. In: People and computers XVIII - Design for Life: proceedings of HCI 2004. Springer Science & Business Media, pp 363–380

Pouw W, Rop G, de Koning B, Paas F (2019) The cognitive basis for the split-attention effect. J Exp Psychol Gen 148(11):2058–2075. https://doi.org/10.1037/xge0000578

Russell M (2005) Using eye-tracking data to understand first impressions of a website. 7(1):1–14

Schmidt-Weigand F, Kohnert A, Glowalla U (2010) A closer look at split visual attention in system- and self-paced instruction in multimedia learning. Learn Instr 20(2):100–110. https://doi.org/10.1016/j.learninstruc.2009.02.011

Schnotz W (2005) An integrated model of text and picture comprehension. In: The Cambridge handbook of multimedia learning, pp 49–69

Schroeder NL, Cenkci AT (2020) Do measures of cognitive load explain the spatial split-attention principle in multimedia learning environments? A systematic review. J Educ Psychol 112(2):254–270. https://doi.org/10.1037/edu0000372

Schwan S, Dutz S, Dreger F (2018) Multimedia in the wild: testing the validity of multimedia learning principles in an art exhibition. Learn Instr 55:148–157. https://doi.org/10.1016/j.learninstruc.2017.10.004

Širanović Ž (2007) Guidelines for designing multimedia learning materials. In: Proceedings of the 18th international conference on information and intelligent systems, pp 79–89

Slykhuis DA, Wiebe EN, Annetta LA (2005) Eye-tracking students’ attention to PowerPoint photographs in a science education setting. J Sci Educ Technol 14(5):509–520. https://doi.org/10.1007/s10956-005-0225-z

Song HS, Pusic M, Nick MW, Sarpel U, Plass JL, Kalet AL (2014) The cognitive impact of interactive design features for learning complex materials in medical education. Comput Educ 71:198–205. https://doi.org/10.1016/j.compedu.2013.09.017

Sorden SD (2012) The cognitive theory of multimedia learning. In: Handbook of educational theories. Information Age, pp 155–168

Stuss DT, Knight RT (2013) Principles of frontal lobe function. OUP USA

Sundararajan N, Adesope O (2020) Keep it coherent: A Meta-analysis of the seductive details effect. Educ Psychol Rev 32(3):707–734. https://doi.org/10.1007/s10648-020-09522-4

Sweller J (2010) Element interactivity and intrinsic, extraneous, and germane cognitive load. Educ Psychol Rev 22(2):123–138. https://doi.org/10.1007/s10648-010-9128-5

Tabbers HK, Paas F, Lankford C, Martens RL, van Merriënboer JJG (2008) Studying eye movements in multimedia learning. In: Rouet J-F, Lowe R, Schnotz W (eds) Understanding multimedia documents. Springer US, pp 169–184. https://doi.org/10.1007/978-0-387-73337-1_9

Taghizadeh-Sarabi M, Daliri MR, Niksirat KS (2015) Decoding objects of basic categories from electroencephalographic signals using wavelet transform and support vector machines. Brain Topogr 28(1):33–46. https://doi.org/10.1007/s10548-014-0371-9

van Gog T, Paas F, van Merriënboer JJG (2006) Effects of process-oriented worked examples on troubleshooting transfer performance. Learn Instr 16(2):154–164. https://doi.org/10.1016/j.learninstruc.2006.02.003

Vidaurre C, Krämer N, Blankertz B, Schlögl A (2009) Time domain parameters as a feature for EEG-based brain–computer interfaces. Neural Netw 22(9):1313–1319. https://doi.org/10.1016/j.neunet.2009.07.020

Wang J, Antonenko P, Dawson K (2020) Does visual attention to the instructor in online video affect learning and learner perceptions? An eye-tracking analysis. Comput Educ 146:103779

Yeung AS, Jin P, Sweller J (1998) Cognitive load and learner expertise: Split-attention and redundancy effects in Reading with explanatory notes. Contemp Educ Psychol 23(1):1–21. https://doi.org/10.1006/ceps.1997.0951

Zarjam P, Epps J, Lovell NH, Chen F (2012) Characterization of memory load in an arithmetic task using non-linear analysis of EEG signals. In: 2012 annual international conference of the IEEE engineering in medicine and biology society, pp 3519–3522. https://doi.org/10.1109/EMBC.2012.6346725

Cite this article

Mutlu-Bayraktar, D., Ozel, P., Altindis, F. et al. Split-attention effects in multimedia learning environments: eye-tracking and EEG analysis. Multimed Tools Appl 81, 8259–8282 (2022). https://doi.org/10.1007/s11042-022-12296-2

DOI: https://doi.org/10.1007/s11042-022-12296-2