Abstract

Automatic age estimation from face images is gaining attention due to applications such as age-based access control, customer profiling for targeted advertisements and video surveillance. However, estimating age from a face image is challenging because of the complex biological aging process, which varies between individuals, incomplete databases and the dependency of facial aging on extrinsic and intrinsic factors. The published literature on age estimation utilizes multiple existing feature descriptors and combines them into a hybrid feature vector. There is still no specially designed aging feature descriptor that encodes facial aging cues. To address this issue, we propose an aging feature descriptor, Local Direction and Moment Pattern (LDMP), which captures directional and textural variations due to aging. We encode the orientation information available in eight unique directions. The texture is embedded in the magnitudes of higher order moments, which we extract using local Tchebichef moments. Next, orientation and texture information are combined into a robust feature descriptor. To learn the age estimator, we apply warped Gaussian process regression to the proposed feature vector. Experimental analysis demonstrates the effectiveness of the proposed method on two large databases, FG-NET and MORPH-II.


1 Introduction

Among various biometric attributes, the human face is an important attribute which conveys information about identity, age, emotions and expressions. Hence facial image analysis has been an active research area for the past two decades. Applications of facial image analysis include face recognition, 3D face reconstruction, facial age progression and age estimation. Compared with the significant research on face recognition [8, 42, 63, 64], relatively few publications have been reported on age estimation [5, 19, 21, 33]. This is due to the fact that facial aging, a complex biological process, mostly affects the shape and texture of a person’s face [11]. Shape change is a prominent factor during the developmental or formative years of humans. The underlying skeletal changes due to aging further alter the shape and in turn the appearance of a face. Along with the structural development, skin texture related deformations are also observed due to aging. Skin related aging effects depend on both intrinsic and extrinsic factors [11]. Intrinsic factors include wrinkle formation due to reduced skin elasticity and muscle strength. Extrinsic factors which affect the skin texture include exposure to sunlight, pollution or nicotine, and lifestyle components such as diet, sleep quality or position and overall health. Along with these difficulties, intra-person variations (e.g. expression, illumination, pose etc.) and incompleteness of the facial aging databases make the age estimation problem even more challenging. Hence it is difficult to precisely predict a person’s age from a face image, even for humans. Age estimation is therefore gaining more research attention due to the intricacies involved and its wide range of applications, such as age-based access control, law enforcement, customer profiling for targeted advertisements, video surveillance, cosmetology etc.

In typical age estimation approaches, after preprocessing, the first step is to extract the facial features. Based on the type of facial features, age estimation approaches are broadly categorized into anthropometry based approaches and image based approaches. The anthropometry based approach relies on anthropometric measurements taken from the subject (human face). For these measurements, particular locations on the subject, called landmark points, are first identified either manually or automatically. Then, a series of measurements between these landmarks is taken and ratios of the distances between them are computed. These ratios provide information about the age of the subject. Kwon et al. [30] classified facial images into babies, young adults and senior adults using anthropometry based geometric features along with wrinkle features. Anthropometric measurements can be used to extract distance ratios of facial components, which are then used to classify the face images [30, 45, 51]. Although anthropometry based geometric features are able to distinguish babies from adults, they are not able to distinguish young adults from senior adults.

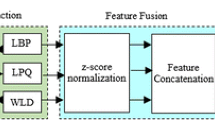

A class of image based approaches that utilize statistical shape and appearance models, such as the Active Appearance Model (AAM) [9], were initially used for age estimation [17, 31, 32]. These methods generate a statistical model to capture the shape and texture variations in the face due to aging. Lanitis et al. [31] proposed AAM for person-specific age estimation, extracting the craniofacial growth and aging patterns of childhood and adulthood. Aging patterns have been captured using AAM to differentiate among various age groups [5, 16, 17, 32]. The performance of both the anthropometric and the appearance based approaches is highly dependent on accurate localization of facial landmarks. Another class of image based approaches utilizes local feature descriptors such as Local Binary Pattern (LBP), Scale Invariant Feature Transform (SIFT), Histogram of Oriented Gradients (HOG), Biologically Inspired Features (BIF) and Gabor for appearance feature extraction. The extracted local features are passed to a classification or regression algorithm to predict the age group or the exact age. Guo et al. [21] proposed BIF for age estimation, where a bank of multiscale and multi-orientation Gabor filters is used to extract age related facial features from a facial image. Recently, BIF and its variants have been used in [16, 19, 20, 22] for age estimation. Histogram based local features extracted from local neighborhoods have also been widely used in age estimation [14, 25, 43, 58, 65]. Multiple combinations of LBP, BIF and Principal Component Analysis (PCA) are used as a multi-feature vector in [58]. Fusion of local and global features is proposed in [43], where AAM is used as the global feature and LBP, Gabor, and LPQ [1] are used as local features. Age estimation using HOG is proposed in [14, 25]. SIFT and MLBP are used as feature vectors in [65] for a global age estimation approach. Recently, in [41], a matched filter based wrinkle descriptor called Local Matched Filter Binary Pattern (LMFBP) has been proposed for age estimation.

Appearance-based approaches incorporate a local encoding scheme to describe the shape and texture information in the image. Many appearance-based methods used as aging features are directional edge-based approaches, such as LPQ, HOG, LDP, LDN, SIFT and BIF. However, these approaches may not encode the textural changes in the image efficiently. Also, the existing age estimation methods discussed earlier either use global and local features [43] or combine multiple local features [14, 25, 58] to capture both shape and texture. Such combination or concatenation leads to a high dimensional feature vector which achieves only marginal improvement in performance. This leaves scope for research in developing a single feature descriptor which can efficiently encode the facial aging cues.

In this paper, we propose an edge and moment based face descriptor, local direction and moment pattern, which encompasses both shape and texture variations effectively. The proposed method encodes apparent facial aging features using eight orientations and higher order moments at every position or facial point. The combination of edge orientation and moments in the coding scheme of the proposed feature outperforms the existing local features. The proposed method describes aging cues such as shape variations in the formative years and textural changes in adults and seniors. Existing appearance based methods use directional filters comprising only four directions to encode both shape and texture, whereas our method uses eight directions to encode shape and slowly varying texture. We have also integrated local Tchebichef moments to capture slow to large textural variations. Hence, our approach is able to preserve shape and textural changes efficiently. An overview of the proposed approach for automatic age estimation is illustrated in Fig. 1. Further, we use regression as the age estimation tool. We evaluate the proposed descriptor for the age estimation task on two facial aging databases, and the results show its superiority over existing methods.

Outline of the paper is as follows. Section 2 explains directional filters, Local Tchebichef moments and Warped Gaussian Process Regression. Further, the proposed novel feature extraction scheme is presented in Section 3. Experimental analysis and results are presented in Section 4. Section 5 summarizes and concludes the results.

2 Methods and materials

In this section, we present a brief introduction of directional filters, Local Tchebichef Moment and the Gaussian Process Regression (GPR).

2.1 Directional filters

The most common method for edge enhancement is to take a discrete approximation of the first order derivative in a given direction. Prewitt [44] suggested a two dimensional differencing scheme based on a set of gradient masks called compass masks. Prewitt masks can be used to enhance edges in the horizontal and vertical directions. Kirsch compass masks proposed in [28] detect the direction and magnitude of edges at eight different orientations, obtained by rotating the first mask in 45° increments. However, the above mentioned masks cannot be used for enhancement of edges along an arbitrary direction. Hence, to subdue or enhance edges in an image in a particular direction, a directional filtering method has been proposed in [23]. The directional derivative of f at a point (r, c) in the direction 𝜃 is defined by \( f_{\theta }^{\prime }\left (r,c \right ) \) in [23] as

where 𝜃 is the clockwise angle from the vertical axis. In directional filtering, (1) is used for designing the directional filters at different orientations. Note that any arbitrary angle 𝜃 can be selected in (1), so the filter generated from (1) is able to extract the edge information at any desired orientation. The directional filter (DF) at an angle 𝜃 is defined as

where 𝜃 is the angle taken clockwise from the column axis.
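As an illustration, a directional filter can be sketched by combining 3 × 3 estimates of the row and column derivatives. The sketch assumes that the directional derivative takes the form \( f_{\theta }^{\prime }(r,c) = \frac{\partial f}{\partial r}\sin \theta + \frac{\partial f}{\partial c}\cos \theta \) and uses Prewitt-style derivative masks; the mask choice and the helper names `directional_filter` and `filter_response` are assumptions made for this example and are not necessarily the exact construction of [23].

```python
import numpy as np

# Prewitt-style 3x3 estimates of the partial derivatives (an assumed choice).
# Rows index r (downwards), columns index c (rightwards).
D_ROW = np.array([[-1, -1, -1],
                  [ 0,  0,  0],
                  [ 1,  1,  1]], dtype=float)
D_COL = D_ROW.T


def directional_filter(theta_deg):
    """Return a 3x3 mask estimating the derivative in direction theta."""
    theta = np.deg2rad(theta_deg)
    return np.sin(theta) * D_ROW + np.cos(theta) * D_COL


def filter_response(image, mask):
    """Correlate a 2D image with a 3x3 mask (interior pixels only)."""
    h, w = image.shape
    out = np.zeros((h - 2, w - 2))
    for dr in range(3):
        for dc in range(3):
            out += mask[dr, dc] * image[dr:dr + h - 2, dc:dc + w - 2]
    return out
```

Under this convention, a filter at 0° responds to intensity changes along the column direction and a filter at 90° to changes along the row direction; intermediate angles blend the two.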

2.2 Local Tchebichef moments

Image moments have been widely used for pattern recognition tasks and texture analysis [15, 24]. They describe the shape of a probability density function. Mathematically, the Tchebichef moment \( T_{pq} \) represents the projection of the image \( f(x, y) \) onto a polynomial basis \( m_{pq}(x, y) \) and is computed as

where p and q are non-negative integers, p + q is called the order of the moment and the value \( T_{pq} \) denotes the correlation between the image and the moment polynomial function. Moments are grouped as non-orthogonal or orthogonal depending on their (polynomial) basis functions. The basis sets of the geometric and complex moments are both non-orthogonal. These moments have been used in image analysis for texture extraction in [2, 55]. However, non-orthogonal moments contain redundant information and are computationally expensive, and reconstructing an image from such moments is an ill-posed problem [34]. These problems are overcome by continuous orthogonal moments such as Legendre and Zernike moments [6, 27, 34, 52]. Continuous orthogonal moments do not have the problem of large dynamic range, but they are defined on a transformed space which is completely different from the image coordinate space. Such a transformation adds computational complexity.

Discrete orthogonal moments retain the useful properties of the continuous orthogonal moments. The basis set of discrete orthogonal moments is defined on the image space; hence the image transformation and discretization errors observed in continuous orthogonal moments are avoided. Discrete orthogonal moments are computed using discrete Tchebichef [40] and Krawtchouk [61] polynomials. Mukundan [40] suggested the Discrete Tchebichef Moment (DTM), which is computed using the Tchebichef polynomial \( t_{n}(x) \) of degree n. Higher order moments in the DTMs are generally computed using the recurrence relation (5). The Tchebichef polynomial \( t_{n}(x) \) of degree n is computed using (4).

where x = 0,1,2,⋯, N − 1 and n = 2,⋯, N − 1 and

The Discrete Tchebichef Moment (DTM) represents oscillating shapes; hence higher-order moments correspond to high frequency components [57, 60]. It provides the correlation between the Tchebichef kernels and the image. Therefore, moments of different orders correspond to oscillating patterns similar to the texture observed in the image. Various methods are available to encode the information present in the higher order moments. One of them is the Local Tchebichef Moment (LTM) [39], which extracts textural information by evaluating discrete Tchebichef moments on N × N local neighborhoods of pixels. The Lehmer code [29] is used for encoding the texture information extracted by the Tchebichef moments. As observed in [37], a low value of a higher order moment represents slow variations in the texture, whereas a high value implies rapid changes in the underlying texture. The relative strength of the moments of various orders is represented using the Lehmer code.
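The following sketch illustrates how Tchebichef kernels for a local neighborhood could be generated. It uses the classical three-term recurrence for the unscaled discrete Tchebichef polynomials and then rescales each polynomial to unit norm; this scaling, the `[x, y]` indexing of the patch and the function names are assumptions of the example and may differ from the normalization used in [39, 40].

```python
import numpy as np


def tchebichef_polynomials(N, max_degree):
    """Rows are t_0 ... t_max_degree sampled at x = 0..N-1, with unit norm."""
    x = np.arange(N, dtype=float)
    t = np.zeros((max_degree + 1, N))
    t[0] = 1.0
    if max_degree >= 1:
        t[1] = 2.0 * x + 1.0 - N
    for n in range(2, max_degree + 1):
        # n t_n = (2n - 1) t_1 t_{n-1} - (n - 1) (N^2 - (n - 1)^2) t_{n-2}
        t[n] = ((2 * n - 1) * t[1] * t[n - 1]
                - (n - 1) * (N ** 2 - (n - 1) ** 2) * t[n - 2]) / n
    # Rescale each polynomial so that the basis is orthonormal.
    return t / np.linalg.norm(t, axis=1, keepdims=True)


def tchebichef_kernels(N, max_degree):
    """2D kernels m_pq(x, y) = t_p(x) t_q(y), indexed as [p, q, x, y]."""
    t = tchebichef_polynomials(N, max_degree)
    return np.einsum('px,qy->pqxy', t, t)


def local_moments(patch, kernels):
    """Project an N x N patch onto every kernel: T_pq = sum_xy f * m_pq."""
    return np.einsum('pqxy,xy->pq', kernels, patch)
```

For a 5 × 5 neighborhood and polynomials up to degree 2, `tchebichef_kernels(5, 2)` returns nine 5 × 5 masks. A flat patch produces a non-zero value only for the zeroth-order moment, which matches the interpretation of higher order moments as measures of texture variation.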

2.3 Gaussian process regression

Consider a training set of N data points \( X=\left \{ x_{i} \right \}_{i=1}^{N} \) and their corresponding real valued targets \( Y=\left \{ y_{1},{\cdots } ,y_{N} \right \}^{T} \), where \( x_{i} \in \mathbb {R}^{D} \) is a training feature vector, D is the feature dimension and \( y_{i} \) is the corresponding training target. The goal is to predict the target value \( y_{*} \) of a test point \( x_{*} \). In Gaussian Process Regression, each target is assumed to be generated from a latent function g and additive Gaussian noise ε, i.e. \( y_{i} = g(x_{i}) + \varepsilon \), where ε is Gaussian noise with zero mean and variance \( \sigma ^{2} \), i.e. \( \varepsilon \sim N(0, \sigma ^{2}) \) [46]. The mean and covariance functions completely describe the Gaussian process. The prior distribution of the latent variable \( g_{i} \) is defined as

where N (μ,Σ) denotes the multivariate normal distribution with mean μ and covariance matrix Σ and 𝜃 represents the kernel hyper-parameters. The following squared exponential kernel has been considered;

where l is the length parameter and \( {\sigma _{g}^{2}} \) is the scaling parameter. Because of the Gaussian noise assumption on the regression model, the likelihood of the targets is \( p(y|g) = N(y|g, \sigma ^{2}I) \), where I is the N × N identity matrix. Model parameters are learnt by maximizing the marginal likelihood, which can be obtained as

where \( K_{T} \equiv K + \sigma ^{2}I \) represents the noisy covariance matrix. The marginal likelihood is maximized using a gradient-based method. In GPR, the predictive distribution is used to estimate the output \( y_{*} \) for the test data \( x_{*} \). The predictive distribution \( p(y_{*}|x_{*}, \theta ) \) is Gaussian with mean \( \mu _{*} \) and variance \( \sigma _{*}^{2} \), i.e. \( N\left (y_{*}|\mu _{*},\sigma _{*}^{2} \right ) \). The predictive mean \( \mu _{*} \) and variance \( \sigma _{*}^{2} \) are given by (7) and (8) respectively. The mean of the predictive distribution represents the predicted age and the variance denotes the confidence.

where

2.4 Warped Gaussian process

Gaussian Process (GP) Regression assumes that the target data follow a multivariate Gaussian distribution in the presence of Gaussian noise. This limits the usage of the GP model in practical applications, as the data generation mechanism and the noise are not always Gaussian. The Warped Gaussian Process (WGP) [50] addresses this limitation by using a non-linear function to transform the regression targets such that the transformed targets are well modeled by a GP. In WGP, the regression targets \( y_{i} \) are transformed using a monotonic transformation function f parameterized by ϕ to generate the transformed targets \( z_{i} = f(y_{i}) \). After this transformation the regression problem is similar to the one in GPR. Hence, we use the same approach for estimating the kernel hyper-parameters, noise variance and transformation function parameters. The predictive distribution of \( z_{*} \) is given by

Using the inverse warping function, the prediction for the test data is obtained as \( f^{-1}\left (\mu _{z_{*}} \right ) \), where \( f^{-1}(\cdot ) \) is the inverse of \( f(\cdot ) \).
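A minimal sketch of the warped regression idea is given below. It keeps the hyper-parameters fixed instead of learning them by maximizing the marginal likelihood, uses the standard zero-mean GP predictive equations with a squared exponential kernel, and replaces the parametric warping function of [50] with the fixed monotonic map f(y) = log(1 + y). All of these are simplifying assumptions made for illustration only, and the function names are hypothetical.

```python
import numpy as np


def se_kernel(A, B, length, sigma_g):
    """Squared exponential kernel between the rows of A and the rows of B."""
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(axis=2)
    return sigma_g ** 2 * np.exp(-0.5 * d2 / length ** 2)


def gpr_predict(X, z, X_star, length=1.0, sigma_g=1.0, sigma_n=0.1):
    """Predictive mean and variance of a zero-mean GP (fixed hyper-parameters)."""
    K_T = se_kernel(X, X, length, sigma_g) + sigma_n ** 2 * np.eye(len(X))
    K_s = se_kernel(X, X_star, length, sigma_g)           # shape (N, M)
    L = np.linalg.cholesky(K_T)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, z))   # K_T^{-1} z
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    # Variance of a noisy target: k(x*, x*) + sigma_n^2 - k*^T K_T^{-1} k*
    var = sigma_g ** 2 + sigma_n ** 2 - (v ** 2).sum(axis=0)
    return mean, var


def wgp_predict(X, y, X_star, **gp_kwargs):
    """Warp the targets, regress in the warped space, then invert the warp."""
    z = np.log1p(y)                    # assumed warping z = f(y) = log(1 + y)
    z_mean = z.mean()                  # centre so a zero-mean GP is reasonable
    mu_z, var_z = gpr_predict(X, z - z_mean, X_star, **gp_kwargs)
    return np.expm1(mu_z + z_mean), var_z   # f^{-1} applied to the mean
```

Note that the returned variance is expressed in the warped space; only the predictive mean is mapped back through the inverse warping function.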

3 Proposed work

In this section, we propose the local direction and LTM based feature descriptor. In the proposed method we first extract the local dominant direction to encode the aging cues. Next, we extract the LTM from the image to encode the textural variations. Using the orientation and moment information, we construct a novel feature descriptor. Finally, we train a warped Gaussian process regressor to estimate the exact age.

3.1 Difference with previous works

Current directional descriptors such as LDN [48] and LDP [26] use the Kirsch compass mask to extract directional information. The orientations extracted using the Kirsch mask are always multiples of 45° (e.g. 0°, 45°, 90°, 180°). However, the orientations that develop on the human face due to aging are not guaranteed to be exact multiples of 45°. Hence these descriptors are not able to capture other orientations. Also, LDN and LDP do not explicitly encode the textural variations on the face. The proposed method uses directional filters to encode 8 unique angles from 0° to 180° (not limited to multiples of 45°). Along with the shape or orientation information, the proposed descriptor explicitly extracts the texture information using higher order moments.

LDMP aims at capturing shape variations in the formative years as well as textural changes in the facial regions of adults and seniors. As mentioned in [35], directional information encodes shape and edge or wrinkle patterns; LDMP therefore encodes shape variations using eight unique directional filters. Textural changes observed in the higher age groups are also partly captured in the directional information. However, textural changes differ between the developmental and teenage years, and large textural variations are observed in adults and seniors. Directional information alone is therefore not sufficient to capture such large textural variations. We address this problem by encoding texture using local Tchebichef moments. Thus, LDMP encodes shape changes during craniofacial growth along with wrinkle patterns and large textural variations at higher ages.

In summary, the key features of the proposed method are: 1) the coding scheme extracts directional information using directional filters instead of the Kirsch compass mask, which avoids duplication of information and encodes more unique directions; 2) the explicit use of local moments captures a wide range of textural variations over a large age span; and 3) the use of local moments makes the technique scale invariant, and the use of gradient information makes it robust against illumination changes and noise.

3.2 Extraction of dominant direction

Edge-based feature descriptors published so far [12, 26, 48, 49] generally use Kirsch compass masks [28] to encode edge responses. However, these masks encode only directions that are multiples of 45°, whereas lines and wrinkles on a face can take any direction.

Figure 2 shows edges in various directions present on the facial image. Therefore, to encode the structural information present in the facial image, we use directional filters at eight different directions. The responses for the eight directional filters are computed using

where k is the direction constant, \( DF_{k \times i} \) is computed using (2) with \( \theta _{i} = k \times i \) and \( D_{i} \) denotes the directional filter at orientation \( \theta _{i} \). To get the dominant orientation we apply \( D_{i} \) and compute eight local orientation responses, considering a 3 × 3 neighborhood. The filter responses corresponding to the eight directions for each pixel are represented as

where \( f(x, y) \) is the input image, \( D_{i} \) is the ith directional filter among the eight orientations and \( DR_{i} \) is the corresponding edge response value. To analyze the effect of directional filters and their use for extracting edge information, we constructed a sample image containing multidirectional edges. Next, we applied directional filters at angles 0°, 30°, 60°, 90°, 120°, 150° and 180° to the sample image. The sample image and its responses to the various directional filters are shown in Fig. 3. It is clear from Fig. 3 that directional filters can be used to extract the various edges present in facial images. The extraction of the dominant edge direction is shown in Fig. 4. The dominant orientation in the local neighborhood is computed as

where \( D_{Dir} \) holds the maximum response among the eight orientation responses \( DR_{i} \) and Dir in (12) is the directional number of the maximum response. The numerical value of the directional number of the dominant direction is further used to construct the complete feature vector.
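The extraction of the dominant direction can be sketched as follows. The example assumes eight 3 × 3 masks at \( \theta _{i} = k \times i \) with k = 22.5°, built from Prewitt-style derivative masks, and takes the index of the largest signed response as Dir; the value of k, the mask construction and the function names are assumptions made for illustration rather than the exact filters of the paper.

```python
import numpy as np


def directional_bank(k=22.5, n_dirs=8):
    """Stack of 3x3 masks D_i at theta_i = k * i degrees, i = 0..n_dirs-1."""
    d_row = np.array([[-1, -1, -1], [0, 0, 0], [1, 1, 1]], dtype=float)
    d_col = d_row.T
    thetas = np.deg2rad(k * np.arange(n_dirs))
    return (np.sin(thetas)[:, None, None] * d_row
            + np.cos(thetas)[:, None, None] * d_col)


def dominant_direction(image, bank):
    """Return Dir, the index of the strongest response, per interior pixel."""
    # windows[r, c] is the 3x3 neighborhood centred on pixel (r + 1, c + 1).
    windows = np.lib.stride_tricks.sliding_window_view(image, (3, 3))
    responses = np.einsum('iab,rcab->irc', bank, windows)   # DR_i per pixel
    return responses.argmax(axis=0).astype(np.uint8)
```

With eight directions the result takes values 0 to 7 and therefore fits in 3 bits. In flat regions all responses are equal and `argmax` returns 0, so a practical implementation may want to treat such pixels separately.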

3.3 Extraction of local Tchebichef moments

Different texture variations are observed on facial images at different stages of life. During the formative years, negligible or slow textural changes are observed in a face image, whereas noticeable texture variations are observed in adults. We use the DTM to capture these textural changes in face images. A low value of a higher order moment represents slow variations in the texture, whereas an increased value implies rapid changes in the underlying texture. Therefore, the magnitudes of the various higher order moments can effectively capture the changes in face texture due to aging. In our approach, Tchebichef polynomials are used for calculating the discrete orthogonal moments. We use the Local Tchebichef Moment to encode the texture in the local neighborhood, considering a 5 × 5 local neighborhood for computing the LTM using (3).

The convolution mask required for computing local Tchebichef moment is computed from Tchebichef polynomials as given in (13).

We have considered Tchebichef polynomials up to degree 2 to calculate the moments. Since the dynamic range of the moment varies with its order, it is important to normalize using appropriate scaling. The normalization of the moments for LTM calculation is carried out using (14).

where \( w_{i} \) are positive real numbers used for normalization of \( L_{i} \). The details of this process can be found in [39].

The normalized moments represent the local distribution of intensities and the local texture variations. Because of the normalization, the Lehmer code effectively encodes the relative strengths of the moments rather than their individual magnitudes. Since we consider four moments, there are 4! = 24 possible orderings, so the texture information can be encoded in a 5-bit code. Figure 5 describes the steps for computing the LTM of an image.
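The Lehmer code of an ordering can be computed as in the short sketch below. The function name `lehmer_index` and the handling of ties (equal values are not counted as smaller) are assumptions of this example; which four moments are ranked is left to the caller.

```python
from math import factorial


def lehmer_index(values):
    """Rank of the ordering of `values` among all n! orderings (0 .. n! - 1)."""
    n = len(values)
    index = 0
    for i in range(n):
        # Lehmer digit: how many later entries are smaller than values[i].
        smaller = sum(values[j] < values[i] for j in range(i + 1, n))
        index += smaller * factorial(n - 1 - i)
    return index
```

For four moments the index ranges from 0 (values in increasing order) to 23 (values in decreasing order), so it always fits in 5 bits.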

After extraction of the dominant direction and the texture information, we encode both of them into a single feature vector. The orientation information is available in Dir as shown in (12), and the Lehmer code applied to (14) represents the texture. Let M denote the Lehmer code. The eight-bit code for the proposed LDMP is obtained by encoding Dir into 3 bits (since we have considered eight directions) and the Lehmer code M into 5 bits. The resulting 8-bit local feature vector is represented in (15).
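One possible way of packing the two fields into a single byte is sketched below. Placing Dir in the three most significant bits and M in the five least significant bits is an assumption of this example; the bit order is not specified in the text above, and the helper names are hypothetical.

```python
def ldmp_code(direction, lehmer):
    """Pack Dir (0..7) and M (0..31) into one byte, Dir in the top 3 bits."""
    if not 0 <= direction < 8:
        raise ValueError("direction must fit in 3 bits")
    if not 0 <= lehmer < 32:
        raise ValueError("Lehmer code must fit in 5 bits")
    return (direction << 5) | lehmer


def ldmp_unpack(code):
    """Recover (Dir, M) from an 8-bit LDMP code."""
    return code >> 5, code & 0b11111
```

For example, Dir = 4 and M = 23 give the code 4 × 32 + 23 = 151, and unpacking 151 returns the pair (4, 23).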

The proposed LDMP code computation is summarized in Algorithm 1.

3.4 Proposed age estimation framework

In the preprocessing step, an input face image is first aligned and then cropped to size 200 × 150. The cropped face image is converted to a gray-scale image and then histogram equalized. Next, we extract LDMP features from each patch using the method described in Sections 3.2 and 3.3. The final feature vector is formed by concatenating the histograms of all patches. For local feature extraction we use an overlapping factor of 0.5, a patch size of 16, 8 orientations and 3 different scales (0.75, 1, 1.5). The extracted feature vector has a very high dimension; therefore we apply the widely used principal component analysis (PCA) for dimensionality reduction. After feature extraction and dimensionality reduction, the next step in age estimation is learning the regressor and estimating the age. To handle the regression problem, we train the warped Gaussian process to learn the model parameters. The parameters learned during the training step are the kernel hyper-parameters, the noise variance and the warping function parameters. The learned parameters are then used to predict the exact age value. Algorithm 2 presents the complete age estimation process.
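The patch-wise histogram step can be sketched as follows, assuming the LDMP codes of an image are already available as an 8-bit array. The 256-bin histograms, the per-patch normalization and the function name are assumptions of this example; the multi-scale processing and the PCA step are not shown.

```python
import numpy as np


def patch_histograms(codes, patch=16, overlap=0.5, n_bins=256):
    """Concatenate per-patch histograms of an 8-bit code image."""
    step = int(patch * (1 - overlap))         # 8 pixels for 50 % overlap
    h, w = codes.shape
    feats = []
    for r in range(0, h - patch + 1, step):
        for c in range(0, w - patch + 1, step):
            block = codes[r:r + patch, c:c + patch]
            hist = np.bincount(block.ravel(), minlength=n_bins)
            feats.append(hist / block.size)   # normalise each histogram
    return np.concatenate(feats)
```

Under these assumed settings, a 200 × 150 code image yields 24 × 17 = 408 patches and a 104,448-dimensional vector at a single scale, which illustrates why dimensionality reduction is applied before regression.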

4 Experiments and results

4.1 Databases and preprocessing

We have performed age estimation experiments on two of the most popular facial aging databases: FG-NET [53] and MORPH II [47]. FG-NET contains 1002 images of 82 different subjects (6 to 18 images per subject), collected mostly by scanning photographs of the subjects, with ages ranging from newborn to 69 years. Even though the overall age range is large (0 to 69 years), almost 70% of the face images are of subjects younger than 20 years. MORPH II is a much larger database than FG-NET. It contains 55,314 face images in the age range 16 to 77 years. The images in this database represent a diverse population with respect to age, gender, and ethnicity, which are available as metadata.

There are different types of appearance variations in the facial images of both facial aging databases. The facial images are either gray-scale or color. In order to mitigate the influence of inconsistent colors, all color images are first converted into gray-scale images. Since the images in FG-NET come from the personal collections of the subjects, a large amount of pose variation is also observed. Sample images with pose, scale, and color variations from both databases are shown in Fig. 6. In the preprocessing step, images are rotated to align them vertically and then cropped to size 200 × 150 to remove background and hair regions. The cropped face image is converted to a gray-scale image and then histogram equalized. For local feature extraction we use an overlapping factor of 0.5, a patch size of 16, 8 orientations and 3 different scales (0.75, 1, 1.5). Original and preprocessed images are shown in Fig. 6.

4.2 Evaluation metrics and protocol

Performance of age estimation techniques is assessed using two evaluation metrics, Mean Absolute Error (MAE) and Cumulative Score (CS). The smaller the MAE, the better the age estimation performance. MAE shows the average performance of the age estimation technique and is an appropriate measure when the training data has many missing images. MAE indicates the mean absolute error between the predicted result and the ground truth for testing set, and is given by,

In addition to MAE, CS is another performance metric which computes the overall accuracy of the estimator and is defined as:

where \( N_{e \le k} \) is the number of test images for which the absolute error of the age estimation algorithm is not higher than k years. The higher the CS value, the better the age estimation performance. CS is a useful measure of performance in age estimation when the training dataset has samples at almost every age. However, because age estimation databases are imbalanced and skewed, both MAE and CS are used for evaluation.
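Both metrics can be computed directly from the predicted and ground-truth ages. The sketch below assumes the usual definitions, \( \text{MAE} = \frac{1}{N}\sum_{i=1}^{N}|\hat{y}_{i} - y_{i}| \) and \( \text{CS}(k) = \frac{N_{e \le k}}{N} \times 100\% \), where N is the number of test images; the function names are chosen for this example only.

```python
import numpy as np


def mean_absolute_error(y_true, y_pred):
    """Mean absolute difference between predicted and true ages (years)."""
    return float(np.mean(np.abs(np.asarray(y_pred) - np.asarray(y_true))))


def cumulative_score(y_true, y_pred, k):
    """Percentage of test images whose absolute error is at most k years."""
    err = np.abs(np.asarray(y_pred) - np.asarray(y_true))
    return 100.0 * float(np.mean(err <= k))
```

For true ages (20, 30, 40, 50) and predictions (22, 30, 47, 49), the absolute errors are 2, 0, 7 and 1 years, giving an MAE of 2.5 years, CS(5) = 75% and CS(0) = 25%.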

Performance of an algorithm depends on availability of sufficient training images corresponding to the complete age range. But available databases do not have equal and sufficient training images in each age span, which results in different absolute errors for different age spans. Therefore, along with the MAE and CS we have also computed the MAE for each age group as one of the evaluation criteria. For such evaluation we divided the test dataset into various age groups and computed the MAE for each age group separately. We have also computed the variance of the error of each group. We have shown the performance of the proposed methods in terms of error graphs for both the databases.
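The age-group analysis can be sketched as below. The example assumes groups of equal width starting at age 0 (0 to 9, 10 to 19, and so on) and reports the population variance of the absolute error; the exact group boundaries used in the experiments are not stated here, so both choices are assumptions.

```python
import numpy as np


def group_mae(y_true, y_pred, width=10):
    """Map each age group (low, high) to (MAE, variance of absolute error)."""
    y_true = np.asarray(y_true, dtype=float)
    err = np.abs(np.asarray(y_pred, dtype=float) - y_true)
    groups = (y_true // width).astype(int)    # group index from the true age
    result = {}
    for g in np.unique(groups):
        e = err[groups == g]
        low = int(g) * width
        result[(low, low + width - 1)] = (float(e.mean()), float(e.var()))
    return result
```

Grouping by the true age rather than the predicted age keeps each test image in a fixed group regardless of the estimator being evaluated.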

Evaluation protocol determines criteria for train and test data selection and system performance measure. A good evaluation strategy should be independent of training data and representative of the population from which it has been drawn [3]. Validation of age estimation method is carried out using unseen data to evaluate generalizability of the method and to avoid overfitting. Cross validation is a widely used statistical technique for the estimation of model generalization ability.

Since the FG-NET dataset has a limited number of images, the Leave-One-Person-Out (LOPO) protocol is used to evaluate the performance of the proposed approach. The LOPO protocol uses all samples of one subject to test the approach, while the samples of all other subjects are used for training. This scheme ensures that a person is never in the training and test sets simultaneously, so the regressor does not learn individual characteristics. To evaluate the accuracy and robustness of our algorithm on the MORPH II database, we have followed settings similar to those in [4, 43] and [56]. We randomly selected 10,000 images and used 80% of the data as a training set and the remaining 20% for testing.
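The two protocols can be sketched as index generators. The function names, the fixed random seed and the assumption that a subject identifier is available for every image are choices made for this example.

```python
import numpy as np


def lopo_splits(subject_ids):
    """Yield (train_idx, test_idx) pairs, holding out one subject at a time."""
    subject_ids = np.asarray(subject_ids)
    for subject in np.unique(subject_ids):
        test = np.flatnonzero(subject_ids == subject)
        train = np.flatnonzero(subject_ids != subject)
        yield train, test


def random_split(n_samples, train_fraction=0.8, seed=0):
    """Random train/test split of sample indices (80/20 by default)."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_samples)
    n_train = int(round(train_fraction * n_samples))
    return order[:n_train], order[n_train:]
```

For FG-NET, `lopo_splits` produces one fold per subject, i.e. 82 folds; for the 10,000 selected MORPH II images, `random_split(10000)` returns 8,000 training and 2,000 test indices.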

4.3 Comparison for computational efficiency

First, we compare the computational efficiency of our descriptor with that of different local feature descriptors used for age estimation. We report computational cost in terms of the time required to extract features from different numbers of images, and then present the average time. For a fair comparison we computed all the features on the same machine. The experiments were conducted on an Intel(R) Core(TM) i7-4770 CPU @ 3.4 GHz with 32 GB RAM. The comparative results are reported in Table 1. It is observed that our approach gives a significant improvement in computational efficiency. Although the proposed descriptor takes 22 milliseconds more than BIF for 10 images, it takes less time for larger numbers of images. Thus, the proposed descriptor is suitable for large databases and is computationally efficient compared to the reported local features.

4.4 Experiments on FG-NET

To validate the age estimation algorithm on the FG-NET database we follow the LOPO protocol and compare our results with the state-of-the-art methods. Table 2 summarizes the age estimation results of the state-of-the-art methods and the proposed approach on the FG-NET database in terms of MAE and CS. The best performing algorithm on FG-NET in terms of MAE is [36]. This method achieved slightly better MAE than our approach (MAE of 4.6 years). It shows the effectiveness of AAM in capturing facial growth during the formative years. In FG-NET, over 70% of the images are concentrated under the age of 20 years; hence, the use of AAM in [36] explains the result. However, [36] is not the best performing method on the MORPH-II database, which has a large number of images. In practical age estimation systems, it is not advisable to use different feature vectors for different databases; a single feature descriptor should perform well on all databases. It is interesting to see that the proposed approach provides good results on both databases and hence is more suitable for practical implementation. In Fig. 7 we compare the results of state-of-the-art methods with the proposed method in terms of CS at error levels from 0 to 10 years on the FG-NET database. The CS curve shows that nearly 70% of the age estimates have an error less than or equal to 5 years. Also, about 15% of the images are predicted with zero error.

As MAE reflects only the overall performance of the system, it is equally important to evaluate performance over different age groups. For this analysis, we divide the test dataset into six age groups (each spanning 10 years) and compute the MAE for each age group separately. We also compute the variance of the error for each group. Figure 8 shows the age group specific MAE on the FG-NET database. The MAE is less than 4 years for ages up to 20 years. Since 70% of the images in the FG-NET database correspond to ages below 20 years, the regressor gets a sufficient number of images to learn the aging pattern. However, the per-age-group MAE is higher for age groups above 20 years. The main reason for the higher per-age-group MAE in this range is the significantly skewed age distribution of FG-NET. The error bars in Fig. 8 show the error variance for each age group. Figure 9 shows examples of good and poor age estimations using our approach on FG-NET.

4.5 Experiments on MORPH II

To evaluate the accuracy and robustness of our algorithm on the MORPH II database, we follow settings similar to those in [4, 43] and [56]. We randomly select 10,000 images and use 80% of the data as the training set and 20% for testing. Note that in [4] and [56] all the images correspond to people of Caucasian descent only. We do not impose such a restriction in our experiments; hence the proposed descriptor is expected to be robust across ethnic variations. For benchmarking, we compare the results of the proposed method against several published state-of-the-art methods, as presented in Table 3. We make the following observations from these results. The best performing method on this database among the previously published age estimation methods is [21]; it shows the effectiveness of Bio-Inspired Features (BIF) in representing the aging information. Our approach performs better than BIF and the other existing age estimation methods in terms of both MAE and CS. Compared to the existing GPR based methods, our approach performs better than MTWGP [62] and provides an MAE similar to that of OGP [65]. Note, however, that [65] performed age estimation using two feature vectors, DSIFT and MLBP. Concatenation of feature vectors generally results in high dimensionality and computational complexity. Also, in [65], 9,000 subjects were used for training and 1,000 subjects for testing, which is computationally very expensive. In contrast, we use only 8,000 images for training and 2,000 for testing.
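The random selection and the 80%/20% partition described above can be sketched as follows. This is a minimal illustration, not the exact procedure used in the experiments: the function name, the fixed seed, and the placeholder list of image identifiers are all assumptions introduced here.

```python
import random


def subset_and_split(image_ids, subset_size=10000, train_fraction=0.8, seed=0):
    """Randomly pick `subset_size` images, then split them into train and test."""
    rng = random.Random(seed)  # fixed seed (an assumption) for repeatability
    # sample() draws without replacement and returns the items in random order,
    # so slicing the result yields a random train/test partition.
    subset = rng.sample(image_ids, subset_size)
    cut = int(round(train_fraction * subset_size))
    return subset[:cut], subset[cut:]


# Placeholder identifiers; the real list would index the database images.
all_ids = list(range(50000))
train_ids, test_ids = subset_and_split(all_ids)
print(len(train_ids), len(test_ids))
```

With the default arguments this gives 8,000 training and 2,000 test images, matching the counts stated above.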

The experimental results in terms of CS on MORPH II are shown in Fig. 10. The CS curve shows that about 73% of the images are estimated with an absolute error of less than 5 years. This implies that the proposed feature vector with GPR not only improves the overall MAE, but also predicts more than 70% of the images with a prediction error of less than 5 years. To compute the age group specific MAE, we divide the test set of the MORPH-II database into six age groups and compute the MAE and the error variance for each group. This analysis highlights the reason for an increase or decrease in the overall MAE. The age group specific MAE and the corresponding variance are shown in Fig. 11. It is clearly seen in Fig. 11 that the per age group MAE is less than 5 years in all age groups except the last two, which contain comparatively fewer images than the others. Figure 12 shows examples of good and poor age estimations by our approach on MORPH-II.

4.6 Significance test

Statistical significance tests are a widely used tool for the analysis of machine learning methods [10, 38]. Given two learning algorithms and a training set, a significance test is used to determine whether there is a significant difference between their performance. In our experiments we use the one-sample t-test to compare the performance of the proposed method with that of the reported state-of-the-art methods. The one-sample t-test compares the mean score found in an observed sample (through our experiments) to a hypothetically assumed value. Typically, the hypothetically assumed value can be the population mean, a pre-defined value, a derived expected value, or the result of a replicated experiment. In our experiments, we take the MAE of each state-of-the-art method as the hypothetically assumed value. We define the null hypothesis, calculate the statistical measures, and then decide whether to reject the null hypothesis. If the test suggests that there is sufficient evidence to reject the null hypothesis, we conclude that the improvement in the results is mainly due to the proposed method and not to chance. However, if the test suggests that there is insufficient evidence to reject the null hypothesis, we conclude that the achieved improvement in the MAE is mostly due to statistical chance. Table 4 presents the results of the one-sample t-test between the proposed method and the state-of-the-art age estimation methods. Columns 3 and 5 of Table 4 report the probability of rejecting the null hypothesis on the FG-NET and MORPH-II databases, respectively. As seen from Table 4, the test has a lower probability of rejecting the hypothesis when the two methods have similar MAE and a larger probability when they differ. In the case of FG-NET, the proposed method surpasses six state-of-the-art methods by a large margin, so the probability of rejecting the null hypothesis is higher in the corresponding six cases. In the case of the MORPH-II database, the probability of rejecting the null hypothesis is more than 0.99 for eight out of the ten reported methods. The main reason is the large improvement in MAE compared to the corresponding state-of-the-art methods. The significance test therefore indicates that the improvement in the MAE is mainly due to the proposed method and not to statistical chance.
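A one-sample t-test of this kind can be sketched as follows. Several assumptions are made here and should be read as such: the observed sample is taken to be the per-image absolute errors of one method, the reference value is a placeholder and not an entry of Table 4, and the two-sided p-value is obtained from the standard normal distribution, which is only a large-sample approximation to Student's t distribution. Under this reading, 1 - p would play the role of the probability of rejecting the null hypothesis reported in Table 4.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def one_sample_t_test(sample, reference_value):
    """Test H0: the mean of `sample` equals `reference_value`.

    Returns the t statistic and an approximate two-sided p-value.
    The p-value uses the normal approximation, reasonable only for large n.
    """
    n = len(sample)
    standard_error = stdev(sample) / sqrt(n)
    t_statistic = (mean(sample) - reference_value) / standard_error
    p_value = 2.0 * (1.0 - NormalDist().cdf(abs(t_statistic)))
    return t_statistic, p_value


# Synthetic absolute errors in years (hypothetical): 0..8, each repeated 100 times.
absolute_errors = [i % 9 for i in range(900)]

# Placeholder reference MAE of a competing method (illustrative value only).
t_diff, p_diff = one_sample_t_test(absolute_errors, reference_value=4.6)

# A reference equal to the sample mean gives t = 0 and no evidence against H0.
t_same, p_same = one_sample_t_test(absolute_errors, reference_value=4.0)

print(f"t = {t_diff:.3f}, p = {p_diff:.2e}")
print(f"t = {t_same:.3f}, p = {p_same:.2f}")
```

For small samples the normal approximation should be replaced by the exact t distribution with n - 1 degrees of freedom, for example through a statistics library.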

5 Conclusion

In this paper, we proposed a novel local feature descriptor, LDMP, that encodes the aging information of facial images. The proposed descriptor captures aging cues at various stages of life, such as shape changes during the formative years and skin textural variations in adults. LDMP preserves shape and edge or wrinkle patterns with the help of directional filters. It also captures large textural variations in adults and seniors using local Tchebichef moments. This is the first time that moment and direction information are combined to form a single-byte local feature descriptor. We have performed age estimation experiments on two widely used databases, FG-NET and MORPH II. The per age group MAEs on both databases demonstrate that, in general, LDMP efficiently captures both shape and textural changes. Experimental results on both databases show that LDMP outperforms state-of-the-art methods in terms of both MAE and CS.

References

Ahonen T, Rahtu E, Ojansivu V, Heikkila J (2008) Recognition of blurred faces using local phase quantization. In: 19th International conference on pattern recognition, 2008. ICPR 2008. IEEE, pp 1–4

Bigun J, du Buf JH (1994) N-folded symmetries by complex moments in Gabor space and their application to unsupervised texture segmentation. IEEE Trans Pattern Anal Mach Intell 16(1):80–87

Budka M, Gabrys B (2013) Density-preserving sampling: robust and efficient alternative to cross-validation for error estimation. IEEE Trans Neural Netw Learn Syst 24(1):22–34

Chang KY, Chen CS (2015) A learning framework for age rank estimation based on face images with scattering transform. IEEE Trans Image Process 24(3):785–798

Chang KY, Chen CS, Hung YP (2011) Ordinal hyperplanes ranker with cost sensitivities for age estimation. In: 2011 IEEE conference on computer vision and pattern recognition (CVPR). IEEE, pp 585–592

Chihara TS (2011) An introduction to orthogonal polynomials. Courier Corporation

Choi SE, Lee YJ, Lee SJ, Park KR, Kim J (2011) Age estimation using a hierarchical classifier based on global and local facial features. Pattern Recogn 44(6):1262–1281

Chu Y, Zhao L, Ahmad T (2018) Multiple feature subspaces analysis for single sample per person face recognition. Vis Comput:1–18

Cootes TF, Edwards GJ, Taylor CJ (2001) Active appearance models. IEEE Trans Pattern Anal Mach Intell 23(6):681–685

Dietterich T (1998) Approximate statistical tests for comparing supervised classification learning algorithms. Neur Comput 10(7):1895–1923

Farage M, Miller K, Elsner P, Maibach H (2008) Intrinsic and extrinsic factors in skin ageing: a review. Int J Cosmet Sci 30(2):87–95

Faraji MR, Qi X (2015) Face recognition under illumination variations based on eight local directional patterns. IET Biometrics 4(1):10–17

Feng S, Lang C, Feng J, Wang T, Luo J (2017) Human facial age estimation by cost-sensitive label ranking and trace norm regularization. IEEE Trans Multimed 19(1):136–148

Fernández C, Huerta I, Prati A (2015) A comparative evaluation of regression learning algorithms for facial age estimation. In: Face and facial expression recognition from real world videos. Springer, pp 133–144

Flusser J, Zitova B, Suk T (2009) Moments and moment invariants in pattern recognition. Wiley

Geng X, Yin C, Zhou ZH (2013) Facial age estimation by learning from label distributions. IEEE Trans Pattern Anal Mach Intell 35(10):2401–2412

Geng X, Zhou ZH, Smith-Miles K (2007) Automatic age estimation based on facial aging patterns. IEEE Trans Pattern Anal Mach Intell 29(12):2234–2240

Günay A, Nabiyev V (2018) A new facial age estimation method using centrally overlapped block based local texture features. Multimed Tools Appl 77(6):6555–6581

Guo G, Mu G (2011) Simultaneous dimensionality reduction and human age estimation via kernel partial least squares regression. In: 2011 IEEE conference on computer vision and pattern recognition (CVPR). IEEE, pp 657–664

Guo G, Mu G (2013) Joint estimation of age, gender and ethnicity: CCA vs. PLS. In: 2013 10th IEEE international conference and workshops on automatic face and gesture recognition (FG). IEEE, pp 1–6

Guo G, Mu G, Fu Y, Huang TS (2009) Human age estimation using bio-inspired features. In: IEEE Conference on computer vision and pattern recognition, 2009. CVPR 2009. IEEE, pp 112–119

Han H, Otto C, Liu X, Jain AK (2015) Demographic estimation from face images: human vs. machine performance. IEEE Trans Pattern Anal Mach Intell 37(6):1148–1161

Haralick RM (1987) Digital step edges from zero crossing of second directional derivatives. In: Readings in computer vision. Elsevier, pp 216–226

Hu MK (1962) Visual pattern recognition by moment invariants. IRE Trans Inform Theory 8(2):179–187

Huerta I, Fernández C, Prati A (2014) Facial age estimation through the fusion of texture and local appearance descriptors. In: European conference on computer vision. Springer, pp 667–681

Jabid T, Kabir MH, Chae O (2010) Local directional pattern (LDP) for face recognition. In: 2010 Digest of technical papers international conference on consumer electronics (ICCE). IEEE, pp 329–330

Khotanzad A, Hong YH (1990) Invariant image recognition by Zernike moments. IEEE Trans Pattern Anal Mach Intell 12(5):489–497

Kirsch RA (1971) Computer determination of the constituent structure of biological images. Comput Biomed Res 4(3):315–328

Knuth DE (2007) Seminumerical algorithms

Kwon YH, da Vitoria Lobo N (1999) Age classification from facial images. Comput Vis Image Understand 74(1):1–21

Lanitis A, Taylor CJ, Cootes TF (2002) Toward automatic simulation of aging effects on face images. IEEE Trans Pattern Anal Mach Intell 24(4):442–455

Lanitis A, Draganova C, Christodoulou C (2004) Comparing different classifiers for automatic age estimation. IEEE Trans Syst Man Cybern Part B (Cybern) 34(1):621–628

Li Y, Peng Z, Liang D, Chang H, Cai Z (2016) Facial age estimation by using stacked feature composition and selection. Vis Comput 32(12):1525–1536

Liao SX, Pawlak M (1996) On image analysis by moments. IEEE Trans Pattern Anal Mach Intell 18(3):254–266

Ling H, Soatto S, Ramanathan N, Jacobs DW (2007) A study of face recognition as people age. In: IEEE 11th International conference on computer vision, 2007. ICCV 2007. IEEE, pp 1–8

Lu J, Liong VE, Zhou J (2015) Cost-sensitive local binary feature learning for facial age estimation. IEEE Trans Image Process 24(12):5356–5368

Marcos JV, Cristóbal G (2013) Texture classification using discrete Tchebichef moments. JOSA A 30(8):1580–1591

Mitchell T, Buchanan B, DeJong G, Dietterich T, Rosenbloom P, Waibel A (1990) Machine learning. Ann Rev Comput Sci 4(1):417–433

Mukundan R (2014) Local tchebichef moments for texture analysis

Mukundan R, Ong S, Lee PA (2001) Image analysis by Tchebichef moments. IEEE Trans Image Process 10(9):1357–1364

Ouloul IM, Moutakki Z, Afdel K, Amghar A (2018) Improvement of age estimation using an efficient wrinkles descriptor. Multimed Tools Appl:1–35

Phillips PJ, Moon H, Rizvi SA, Rauss PJ (2000) The FERET evaluation methodology for face-recognition algorithms. IEEE Trans Pattern Anal Mach Intell 22(10):1090–1104

Pontes JK, Britto Jr AS, Fookes C, Koerich AL (2016) A flexible hierarchical approach for facial age estimation based on multiple features. Pattern Recogn 54:34–51

Prewitt JM (1970) Object enhancement and extraction. Picture Process Psychopictorics 10(1):15–19

Ramanathan N, Chellappa R (2006) Modeling age progression in young faces. In: 2006 IEEE Computer society conference on computer vision and pattern recognition, vol 1. IEEE, pp 387–394

Rasmussen CE, Williams CKI (2006) Gaussian processes for machine learning. MIT Press, Cambridge

Ricanek K, Tesafaye T (2006) Morph: a longitudinal image database of normal adult age-progression. In: 7th International conference on automatic face and gesture recognition, 2006. FGR 2006. IEEE, pp 341–345

Rivera AR, Castillo JR, Chae OO (2013) Local directional number pattern for face analysis: face and expression recognition. IEEE Trans Image Process 22(5):1740–1752

Rivera AR, Castillo JR, Chae O (2015) Local directional texture pattern image descriptor. Pattern Recogn Lett 51:94–100

Snelson E, Ghahramani Z, Rasmussen CE (2004) Warped Gaussian processes. In: Advances in neural information processing systems, pp 337–344

Suo J, Wu T, Zhu S, Shan S, Chen X, Gao W (2008) Design sparse features for age estimation using hierarchical face model. In: 8th IEEE International conference on automatic face & gesture recognition, 2008. FG’08. IEEE, pp 1–6

Teh CH, Chin RT (1988) On image analysis by the methods of moments. IEEE Trans Pattern Anal Mach Intell 10(4):496–513

The fg-net aging database. http://www.fgnet.rsunit.com/

Thukral P, Mitra K, Chellappa R (2012) A hierarchical approach for human age estimation. In: 2012 IEEE International conference on acoustics, speech and signal processing (ICASSP). IEEE, pp 1529–1532

Wang M, Knoesen A (2007) Rotation-and scale-invariant texture features based on spectral moment invariants. JOSA A 24(9):2550–2557

Wang S, Tao D, Yang J (2016) Relative attribute svm+ learning for age estimation. IEEE Trans Cybern 46(3):827–839

Wee CY, Paramesran R, Mukundan R, Jiang X (2010) Image quality assessment by discrete orthogonal moments. Pattern Recogn 43(12):4055–4068

Weng R, Lu J, Yang G, Tan YP (2013) Multi-feature ordinal ranking for facial age estimation. In: 2013 10th IEEE international conference and workshops on automatic face and gesture recognition (FG). IEEE, pp 1–6

Wu T, Turaga P, Chellappa R (2012) Age estimation and face verification across aging using landmarks. IEEE Trans Inf Forens Secur 7(6):1780–1788

Yap PT, Raveendran P (2004) Image focus measure based on chebyshev moments. IEE Proc-Vis Image Signal Process 151(2):128–136

Yap PT, Paramesran R, Ong SH (2003) Image analysis by Krawtchouk moments. IEEE Trans Image Process 12(11):1367–1377

Zhang Y, Yeung DY (2010) Multi-task warped Gaussian process for personalized age estimation. In: 2010 IEEE Conference on computer vision and pattern recognition (CVPR). IEEE, pp 2622–2629

Zhao W, Krishnaswamy A, Chellappa R, Swets DL, Weng J (1998) Discriminant analysis of principal components for face recognition. In: Face recognition. Springer, pp 73–85

Zhao W, Chellappa R, Phillips PJ, Rosenfeld A (2003) Face recognition: A literature survey. ACM Comput Surveys (CSUR) 35(4):399–458

Zhu K, Gong D, Li Z, Tang X (2014) Orthogonal Gaussian process for automatic age estimation. In: Proceedings of the 22nd ACM international conference on multimedia. ACM, pp 857–860

Cite this article

Sawant, M., Addepalli, S. & Bhurchandi, K. Age estimation using local direction and moment pattern (LDMP) features. Multimed Tools Appl 78, 30419–30441 (2019). https://doi.org/10.1007/s11042-019-7589-1
