Abstract

This paper proposes a novel histogram-based multi-level segmentation scheme of hyper-spectral images. In the proposed scheme an Improved Particle Swarm Optimization (IPSO) algorithm is implemented as a nature-inspired evolutionary algorithm to overcome the drawback of premature convergence and hence getting stuck in local optima problem of PSO. The high-dimension of PSO is decomposed into several one-dimensional problems and premature convergence is removed from each one-dimensional problem. This algorithm is further extended for replacing the worst particles by the fittest particles, determined by their fitness values. Multiple optimal threshold values have been evaluated based on fuzzy-entropy aided with the proposed algorithm. The performance of the IPSO is compared statistically with other global optimization algorithms namely Cuckoo Search (CS), Differential Evolution (DE), FireFly (FF), Genetic Algorithm (GA), and PSO. The produced segmented output of IPSO-fuzzy is then combined with the available ground truth values of image classes to train a Support Vector Machine (SVM) classifier via the composite kernel approach to improving the classification accuracy. This hybrid approach (IPSO-SVM) is then applied to popular hyper-spectral imageries acquired by AVRIS and ROSIS sensors. The final evaluated outcomes of the proposed scheme are also qualitatively compared to show its effectiveness over the other state-of-art global optimizers.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Image segmentation is a fundamental step for meaningful analyzing and interpretation of an image. It is considered as a mandatory preprocessing step for extracting objects from its backgrounds in many computer vision oriented applications. Remote sensing Hyper-Spectral Imageries (HSI) have got importance as it played an important role to provide solutions in many applications like soil erosion monitoring, land-cover study, flood monitoring, assessment of forest resources etc. Unlike other spectral imaging, HSI collects information across the electromagnetic spectrum to obtain the spectrum of each pixel with the purpose of finding objects. As sensors capture a wide range of wavelengths of contiguous spectral bands so, it may cause a curse of dimensionality problem for effective segmentation [24]. Effective HSI segmentation still demands a challenging issue for the researchers. Segmentation denotes to put all homogeneous pixels in clusters concerning to some common features like color, intensity, and texture so that it can be analyzed properly. Thresholding approach is broadly accepted to achieve this goal to discriminate the objects from their background pixels.

In bi-level thresholding, the total image region is subdivided into two homogeneous pixel regions based on the histogram, minimum-variance, edge-detection etc. The reconstructed final image is a binary image where all pixels carry higher gray values than a threshold level, are put into a class whereas pixels having fewer values than a threshold level are put into another class. Some useful histogram, edge detection, minimum variance, interactive pixel classification bi-level thresholding techniques are surveyed in the literature [3, 41, 47, 55, 62]. Some global entropy-based bi-level segmentation techniques available in the literature like [15, 25, 29, 42, 48, 63]. These techniques are effective enough for bi-level thresholding, but not preferred for complex segmentation problems where multiple threshold values are desired. Adaptive neuro-fuzzy, hierarchical, histogram-based approaches for multilevel effective color image segmentation schemes are noted in the literature [8, 13, 28]. Multilevel approaches may still struggle because of its high time and working complexities [4, 27, 45, 61]. Researchers tried to overcome those issues by incorporating various exhaustive search process techniques to enhance the computational speed like [14, 54, 65] etc. Multilevel thresholding techniques with entropy maximization or minimization along with any meta-heuristic technique holds recent research interest [21].

Section 2 presents a literature survey related to meta-heuristic approaches, used to find multiple threshold values and a brief survey regarding supervised classification. Section 3 formulates the problem using fuzzy entropy for multilevel segmentation. Section 4 solves the multilevel problem by generating optimal solutions using the proposed Improved Particle Swarm Optimization (IPSO) technique. Dataset, experimental parameter setup are covered up in Section 5. The simulated results of the unsupervised segmentation scheme with its performance evolution along with hybrid classification obtained by different meta-heuristics are presented in Section 6. Finally, Section 6 draws a conclusion of the paper.

2 Related works

A meta-heuristic procedure is used to generate a search algorithm to provide the best solution to an optimization problem with limited computation capacity. As these approaches provide a better and easy sample set of solutions for a wide variety of complex problems, so it has been flourishing since almost last decade. These techniques are quite easy to generate optimal solutions to NP-hard problems as users don’t need to have detailed knowledge regarding the selection of the initial population. Some well-known derivative-free optimizing techniques like Genetic Algorithm (GA) [11, 43], Firefly (FF) [38, 44], Artificial Bee Colony (ABC) [7, 23, 26], Cuckoo search algorithm [6, 56], Differential Evolution (DE) [50] based approaches to find optimal solutions of meta-heuristic problems are surveyed in the literature.

Particle Swarm Optimization (PSO) is another popular stochastic global optimization algorithm of recent category [12, 17, 40, 52]. The algorithm was inspired by the real-life swarm behaviors and has become an effective tool for multilevel problem optimization. But the main drawback of this basic PSO is that it may get stuck in sub-optimal solution regions and the problem may increase further in high-dimensional spaces as its success depends on the combination of global exploration and local exploitation features during the optimization process. Researchers identified this problem and tried to improve the algorithm. Cooperative and comprehensive learning- based PSO algorithm surveyed in the literature [5, 35, 60]. Further literature reveals that effective multi-swarm based PSO algorithm proposed by various researchers like [37, 68]. In the year 2004, Ratnaweera et al. [46] proposed a self-organizing hierarchical particle swarm optimizer with time-varying acceleration coefficients. They incorporated mutation concept into PSO first and later on social and cognitive parts of PSO had been updated to generate new velocity of each particle whenever stagnancy occurred. In the recent year, Nayak et al. [39] proposed a modified PSO aided with extreme learning machine mechanism for pathological brain detection. Modified PSO successfully optimized the hidden node parameters and combined MPSO-ELM overcame the drawback of single SVM or ELM based classifiers. So there is a scope to form an improved PSO algorithm so that premature convergence caused by the curse of dimensionality can be removed and as well as high complexity and slow rate of convergence problem can also be improved.

2.1 A brief survey of supervised classification

In supervised classification, remote sensing image data are analyzed quantitatively with the help of its known ground truth values. Challenge is to develop an approach to classify the spectral domain into regions so that it matches with ground cover classes and hence increase the accuracy of the technique. One lightweight sparsity-based algorithm, Basic Thresholding pixel-wise classifier is one of the approaches proposed by [58, 59] increased the accuracy of classification and reduced computational cost a lot. Some popular multiple learning kernel-based approaches for hyper-spectral data are surveyed in the literature [9, 10, 53] as well. A simple composite kernel-based approach that controlled the relative proportion of the spatial and segmented information found in the literature [31]. A multi-modal logistic regression and Markov random fields based hyper-spectral image segmentation produced effective results proposed by [30]. Some more approaches also exist in the literature which combined supervised and unsupervised classification to form hybrid approaches to obtain better accuracy and less computational cost [16, 57]. Mughees et al. [36] proposed boundary adjustment technique by merging spatial and spectral information of hyper-spectral data that enhanced the accuracy over the ground truth. Ghamsi et al. [18] proposed a Majority Voting approach to combine the segmented results of hyper-spectral images obtained by Fractional-Order Darwinian PSO as an optimization algorithm with the supervised classification map to generate high accuracy of classified objects. Sarkar et al. [51] proposed Differential Evolution (DE) based optimized segmentation of land cover images and then formed one composite kernel by SVM with the known training and test data to improve the performance of supervised classification. Some current CNN-based spatial-spectral classification approaches of hyper-spectral images have been surveyed as well in the literature. A novel deep pixel-pair method had been proposed to increase the accuracy over conventional CNN-based approaches [32]. In that approach, center and surrounding pixels had been combined and got trained by CNN and final results determined by the voting strategy. Li et al. [33] combined composite kernels (spatial+spectral) with Adaboost framework which later incorporated with weighted Extreme Learning Machine to increase accuracy in imbalanced large datasets. Zhang et al. [67] proposed a diverse region-based CNN classification framework to exhibit spatial-spectral context- sensitivity which resulted accurate pixel classification. To remove the curse of dimensionality and excessive computation time problem at the time of classifying spectral images, a collaborative classification framework had been proposed [66]. In that method, deep spectral features of HSI had been extracted by the trained CNN model and later effective statistical image features had been learned through contextual vectors which were formed by the combination of the rich spectral, spatial and statistical information of the images. In the year 2018, Xu et al. [64] proposed a two-branch CNN to extract spatial-spectral information from multi-band images. Extracted spatial-spectral information had been combined through a dual-tunnel branch of cascade network to achieve more accurate classification results. Li et al. [34] presented a survey paper of dimension reduction of HSI based on discriminant analysis. The approaches aimed to reduce the dimensionality of highly correlated spectral bands without losing important information. Experimental results were compared between four well-known approaches namely Kernel Discriminant Analysis, Kernel Local Fisher’s Discriminant Analysis, Kernel Sparse Graph-based Discriminant Analysis, and Kernel Composite Graph-based Discriminant Analysis (KCGDA). In KCGDA, spectral-spatial information were integrated to achieve the highest accuracy. Motivated by the spectral-spatial classification approaches, one innovative spectral-spatial approach has been presented here to achieve highest accuracy. Though CNN based approaches generated higher accuracies for HSI dataset, but the computational cost for small datasets like HSI classification may discourage researchers. So, motivated by the above stated spatial-spectral combination approaches, we have proposed a composite spectral-spatial kernel-based application got trained by the conventional SVM classifier. The detailed contribution has been discussed next.

2.2 Author’s contribution

The key contribution of our approach is to produce accurate, robust and effective unsupervised segmentation results which will later be given as input to the supervised SVM classifier for improving the classification accuracy. Fuzzy entropy has been generated for multi-level segmentation problem. As problem has been extended to convex optimization search space, so improving the stagnancy caused by PSO has been essentially required. To remove the stagnancy of the PSO problem, an Improved version of PSO has been proposed which communicates through the introduction of context parameter. Features have been extracted using Principal Component Analysis (PCA) and then Attribute Profile (APs) have been formed from each PC to finally form Extended Multi-Attribute Morphological Profiles (EMAPs) which has been used here as spatial information [31]. Segmentation Map obtained by multi-level fuzzy entropy and IPSO has been used here as spectral information. The combined spatial and spectral kernels have been trained by the SVM classifier to generate the final results. Step-wise contribution is determined like following:

First of all the required parameters along with the threshold levels are set for the IPSO algorithm.

Next, composite high-dimensional swarm (formed with the help of threshold level) is broken into several one-dimensional swarms in search space.

In the proposed approach each swarm communicates with each other by exchanging information to find composite fitness of an entire system with the introduction of Context Parameter (CP) discussed in detail later.

Next, each particle’s new velocity is updated based on the Pbest information of any particle within a swarm to avoid premature convergence. Hence the new position of the particle is also updated for each generation by the tournament selection procedure.

Membership function based fuzzy entropy is calculated from image histogram which helps to find best velocity and position of the particle stated in the above step.

The segmented output as spectral information and known spatial information (obtained by the PCA and EMAP [31]) are then combined to form one composite Kernel (CK), based on the Gaussian radial basis function kernel with SVM is known as (SVM-CK) [31] is then considered to achieve better classification accuracy shown in the Fig. 1.

Next section formulates multilevel thresholding problem by the fuzzy entropy based objective function and is solved by using the proposed IPSO technique to get desired optimal thresholds.

3 Problem formulation

Multilevel thresholding of color image determines two or more optimum thresholds for each of the three components (red, green, blue) of the image. L*a*b* color space is used for segmentation problem than non-uniform color space like RGB to represent colors clearly. First of all, the RGB images have been converted to CIE L*a*b* color space using MATLAB. L* indicates lightness, a* red-green and b* blue-yellow color where each component value lies between 0 to 255. Let I is an original color image with size M × N and ri be the histogram of the image which is the combination of three components to store the color information i.e. \(r_{i}=(L^{*}_{i};a^{*}_{i};b^{*}_{i})\) for i = (1,2,..L) where \(L^{*}_{i}\), \(a^{*}_{i}\), \(b^{*}_{i}\) denote ith intensity value of the channels. L denotes the number of levels (0-256).

3.1 Multi-level fuzzy entropy

Traditional classical set (S) states that a collection of elements will either belong to or not belong to set S. But a fuzzy set is known as the extension of a classical set where an element can belong to partially also. Consider a fuzzy set F and can be defined as

where 0 ≤ μF(y) ≤ 1 and μF(y) is called the membership function, which finds the closeness of x to F.

In this paper we have chosen trapezoidal membership function of a fuzzy set to calculate the membership of k segmented regions, μ1, μ2,....μk by using 2 × (k − 1) unknown fuzzy parameters. Parameters are chosen as a1, b1..., ak − 1, bk − 1, where 0 ≤ a1 ≤ b1 ≤ ... ≤ ak− 1 ≤ bk− 1 ≤ L − 1 displayed in the Fig. 2. Hence following membership function can be defined for k-level thresholding:

The maximum fuzzy entropy for each of k-level segmentation can be expressed as:

where, \(R_{1}={\sum }_{i=0}^{L-1}{r_{i}}*\mu _{1}(i),\enspace R_{2}={\sum }_{i=0}^{L-1}{r_{i}}*\mu _{2}(i),....,\enspace R_{k}={\sum }_{i=0}^{L-1}{r_{i}}*\mu _{k}(i)\) Next, the optimum value of parameters can be achieved by maximizing the total entropy described in the following equation:

For easy computation, two dummy thresholds are t0 = 0 and tk = L − 1 are introduced with t0 < t1 < ... < tk− 1 < tk. The computational complexity for this multilevel problem is very expensive and high O(Lk− 1). It is also noted that growth of complexity is proportional to the increase of levels [20]. So, IPSO as a global optimization technique is proposed to optimize the (6) by reducing the time complexity and hence produce best optimal solutions in exhaustive search space. Multiple (k − 1) number of thresholds using fuzzy parameters can be obtained by the following formula:

4 Overview of particle swarm optimization (PSO) algorithm

In PSO algorithm, particles (multiple solutions) are put into multidimensional spaces and the fitness of each particle is evaluated in each iteration. Here the new velocity, as well as new position of a particle, is calculated on the based on its present velocity and position at the start of a new iteration. The distance between particle’s present and the best position called as pb whereas the distance between the present position of a particle and the position of the best particle among the total swarms is called as the gb. Assume it is required to solve a D-dimensional optimization problem by minimizing the objective function f(x) given as

where D denotes the number of parameters to be optimized. Here in basic PSO algorithm, a swarm of P “particles” is flown in a D-dimensional search space in a random manner to find the optimum solution of a fitness function. Let \(S_{a}=({S_{a}^{1}},{S_{a}^{2}},....{S_{a}^{D}})\) represents the position, \(V_{a}=({V_{a}^{1}},{V_{a}^{2}},....{V_{a}^{D}})\) the velocity and \(pb_{a}=(p{b_{a}^{1}},p{b_{a}^{2}},....p{b_{a}^{D}})\) the best previous position obtained so far for every ith particle which consists of the lowest fitness values. Similarly, the best position noted for each iteration in the entire search space is denoted by gb = (gb1, gb2,....gbD). The velocity \({V_{a}^{d}}\) of each particle in dth dimension for each iteration is determined by the following equation:

The additive influence of \({V_{i}^{d}}\) found in the previous iteration known as “momentum” component, individual weighting distance of particles from \(P{b_{a}^{d}}\) known as a cognitive component and its distance from gbd known as social component, cx and cy are the acceleration coefficients, r1, r2 are the random numbers randomly generated from 0 to 1, w is the inertia weight contains a high value at initial then gradually decreases according to the following equation:

winitial is the inertia weight at the starting condition, it is the present iteration count, 𝜃IW is the slope of inertia weight variation. The formula to get the best position of a particle in PSO is:

However, PSO algorithm suffers from two basic problems like “curse of dimensionality” and a tendency of premature convergence which is quite common in several optimization algorithms. This type of problem leads particle’s motion to get stuck and hence algorithm get prone to local optima convergence. As a result of that, some particles will gain low fitness values although their dimension values lie very close to the global optimal solution. Cooperation operation can remove this problem by acquiring information from “best” dimensions and preventing useful information from being unnecessarily discarded.

4.1 Motivation for improved PSO

“Curse of dimensionality” problem can be resolved by dividing a composite high-dimensional swarm into several one-dimensional swarms, which will cooperate with each other dynamically by exchanging the information to form composite fitness of an entire system. High-dimensional space can be divided into several one-dimensional spaces, so cooperation between each one-dimension can help to save the most useful information to accelerate convergence. Further premature convergence, caused by traditional PSO algorithm may be overcome by modifying the new velocity of each particle based on its pb information of within the swarm. In the next subsection, the detailed Improved Particle Swarm Optimization (IPSO) is proposed to overcome both the problems like “curse of dimensionality” and the tendency of premature convergence at a time. Figure 3 describes the block-diagram of the algorithm where as Algorithm 1 illustrates the detail working of the algorithm with code snippet.

4.2 Proposed improved particle swarm optimization (IPSO)

In this proposed approach, a D-dimensional problem is decomposed into D-one dimensional swarms where each swarm consists of S particles. A final global solution is evaluated by aggregating all the gb solutions achieved from each individual swarm. The fitness evaluation of particles is done based on the introduction of a new parameter called Context parameter (denoted by CP) that will be used to exchange information among all the individual swarms. The size of Context Parameter (CP) for a D-dimensional problem is equal to D-dimension itself. Here, when a bth swarm is active, then the CP is formed by remaining (D − 1) swarm’s gb values. Then the bth row of the CP is filled by each particle of bth swarm one by one. Each such CP is calculated for finding its composite fitness. So the pb value (Pb.pba) for the ath particle and the gb solution (Pb.gb) for bth swarm are determined in that manner so that they not only depend on the performance of the bth swarm alone. Now the velocity and position of each particle in the bth swarm (denoted by Pb.Sa and Pb.Va) are updated based on these pb and gb solutions. Lastly the bth entry of the CP is filled by the newly calculated Pb.gb and this process is continued for each bth swarm, till all the relevant context parameters are filled one by one. So, in brief, it can be concluded that the total search space is divided into D subspaces for D individual swarms and these swarms communicate with each other through their corresponding CP to determine their individual Pb.pba and Pb.gb. The final context vector is calculated by concatenating all the evaluated Pb.gb determined across all the swarms.

However, this above mentioned technique may still be get trapped in suboptimal locations within search space, where all individual solutions may fail to produce a better solution every time. To overcome this problem, we propose some modifications to the above-mentioned approach to determine new velocities and positions of each particle in each individual swarm in subspaces. So, the created problem of stagnation because of premature convergence is seriously taken care off, by permitting each particle to adjust its velocity (so, the position also), based on the pb information of any particle within the swarm. This helps to discourage premature convergence within the swarm strongly.

Now to select the particle, whose pb can be used to find the new velocity of any given particle within a given one-dimensional swarm is shown in the Algorithm 2. Hence, the new velocity update relation for each particle in a given swarm is demonstrated as

where fa decides which particle’s pb should be followed by this ath particle. It is defined in the Steps 1-4 in the Algorithm 2.

4.3 “Best” particles cloning and “Worst” particle destruction

This module is employed to each one-dimensional local swarm for further improvement of this optimization strategy. As the local swarms may get be stagnated for the last few iterations because of almost insignificant improvements in the fitness values of the gb particle for the given swarm, then particles are needed to sort according to their pb values. Assume Preplace are the number of particles identified based on their lowest fitness values, corresponding to their pb positions (called “Worst” particles), to be replaced. Similarly, Preplace number of particles are also being identified in this swarm, based on their highest fitness values, corresponding to their pb positions (called as “Best” particles). These particles will be used to replace the worst particles identified so far. Now a superior swarm will be formed to obtain better optimization performance in the search space. At the end of this approach, it can be found that each swarm contains Preplace number of positions where two particles are present simultaneously. So these particles are “cloned” to each other and this process is repeated for each one-dimensional swarm. We have considered 50% of the particles (Preplace = P/2) are declared as “Worst” whereas remaining 50% are designated as “Best” particles.

Our goal is to maximize the optimum k-dimensional vector [t0, t1, t2,....tk] by the proposed IPSO algorithm. But PSO is usually designed to solve minimization problem, so we solve the formulated problem of (6) by constructing fitness function as the reciprocal of it and then maximize ψ(a1, b1,...ak− 1, bk− 1). Now the computational complexity of the fuzzy entropy based IPSO algorithm is calculated as O(Np ∗ 2(k − 1) ∗ G), where Np is the maximum populations, D denotes dimensionally which is equal to the number of threshold levels (in this case 2 ∗ (k − 1), 2 unknown fuzzy parameters), G is the maximum iteration. It is clearly seen the complexity of the multilevel problem has been reduced effectively in the exhaustive search space because of applying the meta-heuristic optimization algorithm.

5 Experimental setup

5.1 Datasets

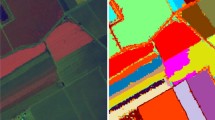

Three well-known publicly available datasets namely Indian Pines, Pavia University and Salinas are used for our experiments. Indian Pines image taken over the agricultural Indian Pine test site of North-western Indiana in June 1992, by the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor mounted from an aircraft flown at 65,000 ft altitude. The image has 220 bands of size 145 × 145 with a spatial resolution of 20 m per pixel and a spectral coverage ranging from 0.4 to 2.5 μ m. 20 water absorption bands no. 104–108, 150–163, and 220 were removed before experiments and therefore 200 out of 220 will be used for evaluation.

The University of Pavia image holding an urban area surrounding the University of Pavia was captured by the Reflective Optics System Imaging Spectrometer (ROSIS-03) optical sensor. The image contains 115 bands and spatial dimensions 610 ×340 with a spatial resolution of 1.3 m per pixel and a spectral coverage of 0.43 to 0.86 μ m. 12 most noisy channels were removed and 103 bands are considered in our experiments.

The Salinas image was also captured by the AVIRIS sensor over Salinas Valley, CA, USA, and with a spatial resolution of 3.7 m per pixel and a spectral coverage of 0.4 to 2.5 μ m. The image has 224 bands of size 512 × 217. Like other images 20 water absorption noise bands no. 108–112, 154–167, and 224 were discarded for better experiments. Indian Pines and Salinas images have 16 different classes whereas Pavia image carries 9 classes (Figs. 4, 5 and 6). The brief description of each dataset is given in the Table 1.

5.2 Parameters setup

Simulations of the proposed scheme are evaluated in MATLAB R2015a in a workstation with Intel coreTM i3 3.2 GHz processor. Parameters setup of the proposed algorithm along with different optimization approaches like CS [1], DE [49], FF [44], GA [20], PSO [2] listed in the Table 2. All the tested algorithms run for 100 independent times where each run was carried out for the D × 1000 (D denoted the dimension of search space) number of fitness evaluations. The dimension of the search space is calculated by the input segmentation level (k) which varies for multilevel problems. Segmentation levels are set from 10 to 14 to avoid under segmentation problem. Performance of the meta-heuristics in terms of quantitative measurements are tested by evaluating the best-mean fitness values (fmean), standard deviations (fstd), and computation time (T) whereas the quality of segmentation

are evaluated by the known metrics like Peak Signal to Noise Ratio (PSNR), Structure similarity Index Measurement (SSIM).

6 Results and discussion

6.1 Unsupervised segmentation results

Computation speed, best-mean fitness value, and stability of the different optimization algorithms for segmentation level 10,12, and 14 of three specified images are compared and values are noted in the Tables 3, 4, and 5. The best values are marked as bold in these tables and results infer that the proposed algorithm produces best values for all the datasets in terms of time, fitness values and standard deviations. It is also to be noted that the computational complexity of IPSO is lesser compared to others even increasing levels (k). As the multilevel problem is treated as a maximization problem, so best mean fitness values suggest better working of the algorithm along with less standard deviations indicate robustness and stability of the algorithm. Further, convergence plots are generated for different datasets for 10, 12 and 14 levels. IPSO algorithm for Indian Pines image has converged at 11000 iterations whereas CS, DE, FF, GA, and PSO have started to converge at 13000, 14100, 14500, 16000, and 18500 iterations for level 10 segmentation, IPSO converged at 14000 and 15500 for level 12 and 14 segmentations where others not only struggled to converge fast but also produce less fitness values, hence trapped in premature convergence problem shown in the Fig. 7. Similarly, the fast convergence rate can be noticed for the Pavia and Salinas datasets in the Figs. 8 and 9. These figures state that proposed IPSO along with CS can only overcome the challenge to be get trapped in local optima problem where remaining can’t. The segmented 10, 12, and 14 level images obtained by the various optimization algorithms along with false-color images of Indian Pines, Pavia, and Salinas are listed in the Figures 10, 11, and 12 respectively. Quality of the reconstructed images for different levels are judged by the PSNR and SSIM values mentioned at the right corner of the Tables 3, 4, and 5. PSNR and SSIM values for color images are calculated by the given following equation:

Peak Signal to noise ratio:

where I and I′ are the original and segmented images and R × C is the size of the image and RMSE stands for root mean square error. Structural Similarity Index:

where μI and \(\mu _{I^{\prime }}\) are the mean values of the original image I and segmented image I’, σI and \(\sigma _{I^{\prime }}\) are the standard deviation of the original image I and segmented image I’, \(\sigma _{II^{\prime }}\) is the cross correlation and C1 and C2 are constants, c indicates color.

6.2 Hybrid classification results

In our experiment, 10% data of Indian pines are chosen as training samples and remaining 90% from ground-truth pixels are used as test samples for supervised classification. 16 classes and its corresponding training and test samples of Indian Pines data are shown in the Fig. 4. For both Pavia and Salinas data, 5% ground-truth pixels are chosen as training data and remaining 95% work as a test data shown in the Figs. 5 and 6. Now, level-wise segmentation results are mapped with the EMAP data to form a multiple learning kernel which is used to train SVM classifier. The following four accuracy metrics are considered for checking the classification accuracy:

Overall accuracy (OA)

OA calculates out of all the classes how much proportion of classes are mapped properly. It is measured in percentage and provides easiest accuracy information to users. It is calculated like following:

Kappa index (KI)

KI is measured by a statistical test to evaluate the accuracy. It is performed based on assigning some random values and checked how classification works better than random. Outcomes lie between -1 to + 1 which indicate accurate classification. Matlab function can evaluate KI effectively.

Mean accuracy (MA)

This accuracy calculates the ratio of correctly classified pixels to the total number of pixels in each class. MA score is measured with the help of ground truth values as per the following equation:

where TP is the number of true positives and FN is the number of false negatives in the classes. MA is the average Accuracy of all classes in that particular image.

Intersection over union (IoU)

IoU also known as the Jaccard similarity coefficient and is often used in statistical accuracy measurement. Like MA, IoU is also computed with the ground truth images as per the below equation:

where TP, FP, and FN describes true positive, false positive, and false negative predictions of images respectively. Mean IoU is the average IoU score of all classes in the particular image.

Table 6 displays the comparative results of traditional SVM and different meta-heuristic algorithms based SVMCK results for levels 10,12 and 14 of Indian Pines data. It is observed that the results produced by SVMCK with optimization algorithms are better than traditional SVM. Similarly, composite kernel-based classification results of Pavia and Salina datasets are shown in the Tables 7 and 8 respectively. All these results conclude that the composition of spatial and spectral information based kernel has produced more accurate and better classification than conventional SVM. All the accuracy parameters which have produced the best values for the proposed algorithm for every level of segmentation have been marked as bold. Finally, the outcomes of there datasets along with their ground-truth and training data are displayed in the Figs. 13, 14, and 15. The results are further compared with some current proposed CNN-based approaches like Deep Pixel Pair (DPP) [32], Extreme Learning Machines (ELM) [33], Diverse-Region based CNN [67], Collaborative Classification [66], Two-branch CNN [64], Kernel Composite Grapg Discriminant Analysis (KCGDA) [34], region-based fast R-CNN [19], and Mask R-CNN [22]. The parameters of the R-CNN based approaches have been set by the guidelines given in the manuscript [19]. As CNN performs better with large data, so above-stated approaches are tested along with the proposed approach on Pavia and Salinas dataset. To compare the outcome of the proposed algorithm with CNN-based approaches, 50% data instead of 5% have been trained and tested for both the dataset. The outcomes of the proposed technique has been passed by the morphological filter for comparing with CNN-based approaches. The detailed outcome of the proposed approach along with the mentioned CNN-based approaches for Pavia and Salinas dataset have been shown in the Figs. 16 and 17 respectively. Results of the accuracy parameters like OA, KI, MA, IoU are shown along with the individual accuracy of various classes in the Tables 9 and 10. Best values produced by the proposed algorithm are marked as bold and it is observed that the outcomes of the proposed scheme are marginal better as compared to the CNN-based approaches.

7 Conclusion and future work

An improved PSO based multi-level image thresholding scheme has been proposed. Among different entropies, membership function-based fuzzy entropy has been chosen as an objective function because of its easiness and effectiveness over color images to calculate threshold values. IPSO has successfully overcome the challenges of premature convergence compared to other known popular optimization algorithms. The composite-kernel based approach has generated better classification outcomes with respect to the accuracy parameters. The approach has been further compared with some CNN-based approaches and results infer that the values are closely following to them. This proposed approach can be applied to different types of machine-learning based classification fields like Optical Character Recognition, object identification in multiple frames of video surveillance problems etc. Like CNN, this approach can be applied on some larger dataset like ImageNet, COCO (Common Objects in Context) to recognize objects. Further, some parameters can be modified of the proposed IPSO to form kernel so that accuracy can reach to the higher side.

References

Agrawal S, Panda R, Bhuyan S, Panigrahi BK (2013) Tsallis entropy based optimal multilevel thresholding using cuckoo search algorithm. Swarm Evol Comput 11:16–30

Akay B (2013) A study on particle swarm optimization and artificial bee colony algorithms for multilevel thresholding. Appl Soft Comput 13(6):3066–3091

Arifin AZ, Asano A (2006) Image segmentation by histogram thresholding using hierarchical cluster analysis. Pattern Recogn Lett 27(13):1515–1521

Arora S, Acharya J, Verma A, Panigrahi PK (2008) Multilevel thresholding for image segmentation through a fast statistical recursive algorithm. Pattern Recogn Lett 29(2):119–125

Baskar S, Alphones A, Suganthan P, Liang J (2005) Design of yagi–uda antennas using comprehensive learning particle swarm optimisation. IEE Proceedings-Microwaves, Antennas and Propagation 152(5):340–346

Bhandari AK, Singh VK, Kumar A, Singh GK (2014) Cuckoo search algorithm and wind driven optimization based study of satellite image segmentation for multilevel thresholding using Kapur’s entropy. Expert Syst Appl 41(7):3538–3560

Bhandari AK, Kumar A, Singh GK (2015) Modified artificial bee colony based computationally efficient multilevel thresholding for satellite image segmentation using Kapur’s, Otsu and Tsallis functions. Expert Syst Appl 42(3):1573–1601

Boskovitz V, Guterman H (2002) An adaptive neuro-fuzzy system for automatic image segmentation and edge detection. IEEE Trans Fuzzy Syst 10(2):247–262

Camps-Valls G, Bruzzone L (2005) Kernel-based methods for hyperspectral image classification. IEEE Trans Geosci Remote Sens 43(6):1351–1362

Camps-Valls G, Gomez-Chova L, Muñoz-Marí J, Vila-Francés J, Calpe-Maravilla J (2006) Composite kernels for hyperspectral image classification. IEEE Geosci Remote Sens Lett 3(1):93–97

Cao L, Bao P, Shi Z (2008) The strongest schema learning ga and its application to multilevel thresholding. Image Vis Comput 26(5):716–724

Chander A, Chatterjee A, Siarry P (2011) A new social and momentum component adaptive pso algorithm for image segmentation. Expert Syst Appl 38(5):4998–5004

Cheng HD, Sun Y (2000) A hierarchical approach to color image segmentation using homogeneity. IEEE Trans Image Process 9(12):2071–2082

Dong L, Yu G, Ogunbona P, Li W (2008) An efficient iterative algorithm for image thresholding. Pattern Recogn Lett 29(9):1311–1316

Du J (2008) Property of tsallis entropy and principle of entropy increase. arXiv:08023424

Fauvel M, Tarabalka Y, Benediktsson JA, Chanussot J, Tilton JC (2013) Advances in spectral-spatial classification of hyperspectral images. Proc IEEE 101(3):652–675

Gao H, Xu W, Sun J, Tang Y (2010) Multilevel thresholding for image segmentation through an improved quantum-behaved particle swarm algorithm. IEEE Trans Instrum Meas 59(4):934–946

Ghamisi P, Couceiro MS, Martins FM, Benediktsson JA (2014) Multilevel image segmentation based on fractional-order darwinian particle swarm optimization. IEEE Trans Geosci Remote Sens 52(5):2382–2394

Girshick R (2015) Fast r-cnn. In: Proceedings of the IEEE international conference on computer vision, pp 1440–1448

Hammouche K, Diaf M, Siarry P (2008) A multilevel automatic thresholding method based on a genetic algorithm for a fast image segmentation. Comput Vis Image Underst 109(2):163–175

Hammouche K, Diaf M, Siarry P (2010) A comparative study of various meta-heuristic techniques applied to the multilevel thresholding problem. Eng Appl Artif Intel 23(5):676–688

He K, Gkioxari G, Dollár P, Girshick R (2017) Mask r-cnn. In: Proceedings of the IEEE international conference on computer vision, pp 2961–2969

Horng MH (2011) Multilevel thresholding selection based on the artificial bee colony algorithm for image segmentation. Expert Syst Appl 38(11):13,785–13,791

Hughes G (1968) On the mean accuracy of statistical pattern recognizers. IEEE Trans Inf Theory 14(1):55–63

Kapur JN, Sahoo PK, Wong AK (1985) A new method for gray-level picture thresholding using the entropy of the histogram. Comput Vis Graph Image Process 29 (3):273–285

Karaboga D, Gorkemli B, Ozturk C, Karaboga N (2014) A comprehensive survey: artificial bee colony (abc) algorithm and applications. Artif Intell Rev 42(1):21–57

Kittler J, Illingworth J (1986) Minimum error thresholding. Pattern Recogn 19(1):41–47

Kurugollu F, Sankur B, Harmanci AE (2001) Color image segmentation using histogram multithresholding and fusion. Image Vis Comput 19(13):915–928

Li CH, Lee C (1993) Minimum cross entropy thresholding. Pattern Recogn 26(4):617–625

Li J, Bioucas-Dias JM, Plaza A (2012) Spectral–spatial hyperspectral image segmentation using subspace multinomial logistic regression and Markov random fields. IEEE Trans Geosci Remote Sens 50(3):809–823

Li J, Marpu PR, Plaza A, Bioucas-Dias JM, Benediktsson JA (2013) Generalized composite kernel framework for hyperspectral image classification. IEEE Trans Geosci Remote Sens 51(9):4816–4829

Li W, Wu G, Zhang F, Du Q (2017) Hyperspectral image classification using deep pixel-pair features. IEEE Trans Geosci Remote Sens 55(2):844–853

Li L, Wang C, Li W, Chen J (2018) Hyperspectral image classification by adaboost weighted composite kernel extreme learning machines. Neurocomputing 275:1725–1733

Li W, Feng F, Li H, Du Q (2018) Discriminant analysis-based dimension reduction for hyperspectral image classification: a survey of the most recent advances and an experimental comparison of different techniques. IEEE Geosci Remote Sens Mag 6 (1):15–34

Liang JJ, Qin AK, Suganthan PN, Baskar S (2006) Comprehensive learning particle swarm optimizer for global optimization of multimodal functions. IEEE Trans Evol Comput 10(3):281–295

Mughees A, Chen X, Tao L (2016) Unsupervised hyperspectral image segmentation: merging spectral and spatial information in boundary adjustment. In: 2016 55th Annual Conference of the Society of instrument and control engineers of Japan (SICE). IEEE, pp 1466–1471

Mukhopadhyay S, Banerjee S (2012) Global optimization of an optical chaotic system by chaotic multi swarm particle swarm optimization. Expert Syst Appl 39 (1):917–924

Naidu M, Kumar PR, Chiranjeevi K (2017) Shannon and fuzzy entropy based evolutionary image thresholding for image segmentation. Alexandria Engineering Journal

Nayak DR, Dash R, Majhi B (2018) Discrete ripplet-ii transform and modified pso based improved evolutionary extreme learning machine for pathological brain detection. Neurocomputing 282:232–247

Önüt S, Tuzkaya UR, Doǧaç B (2008) A particle swarm optimization algorithm for the multiple-level warehouse layout design problem. Comput Indu Engineering 54(4):783–799

Otsu N (1979) A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern 9(1):62–66

Pal NR (1996) On minimum cross-entropy thresholding. Pattern Recogn 29 (4):575–580

Pare S, Bhandari AK, Kumar A, Singh GK, Khare S (2015) Satellite image segmentation based on different objective functions using genetic algorithm: a comparative study. In: 2015 IEEE International conference on digital signal processing (DSP). IEEE, pp 730–734

Pare S, Bhandari A, Kumar A, Singh G (2017) A new technique for multilevel color image thresholding based on modified fuzzy entropy and Lévy flight firefly algorithm. Computers & Electrical Engineering

Perez A, Gonzalez RC (1987) An iterative thresholding algorithm for image segmentation. IEEE Trans Pattern Anal Mach Intell 6:742–751

Ratnaweera A, Halgamuge SK, Watson HC (2004) Self-organizing hierarchical particle swarm optimizer with time-varying acceleration coefficients. IEEE Trans Evol Comput 8(3):240–255

Revol C, Jourlin M (1997) A new minimum variance region growing algorithm for image segmentation. Pattern Recogn Lett 18(3):249–258

Sahoo PK, Arora G (2004) A thresholding method based on two-dimensional Renyi’s entropy. Pattern Recogn 37(6):1149–1161

Sarkar S, Paul S, Burman R, Das S, Chaudhuri SS (2014) A fuzzy entropy based multi-level image thresholding using differential evolution. In: International conference on swarm, evolutionary, and memetic computing. Springer, pp 386–395

Sarkar S, Das S, Chaudhuri SS (2015) A multilevel color image thresholding scheme based on minimum cross entropy and differential evolution. Pattern Recogn Lett 54:27–35

Sarkar S, Das S, Chaudhuri SS (2016) Hyper-spectral image segmentation using Rényi entropy based multi-level thresholding aided with differential evolution. Expert Syst Appl 50:120–129

Sathya P, Kayalvizhi R (2010) Pso-based tsallis thresholding selection procedure for image segmentation. Int J. Comput Appl 5(4):39–46

Schölkopf B, Smola AJ (2002) Learning with kernels: support vector machines, regularization, optimization, and beyond. MIT Press

Sezgin M, Taşaltın R (2000) A new dichotomization technique to multilevel thresholding devoted to inspection applications. Pattern Recogn Lett 21(2):151–161

Sezgin M, et al. (2004) Survey over image thresholding techniques and quantitative performance evaluation. J Electron Imag 13(1):146–168

Suresh S, Lal S (2016) An efficient cuckoo search algorithm based multilevel thresholding for segmentation of satellite images using different objective functions. Expert Syst Appl 58:184–209

Tarabalka Y, Chanussot J, Benediktsson JA (2010) Segmentation and classification of hyperspectral images using watershed transformation. Pattern Recogn 43(7):2367–2379

Toksöz MA, Ulusoy I (2016) Classification via ensembles of basic thresholding classifiers. IET Comput Vis 10(5):433–442

Toksöz MA, Ulusoy I (2016) Hyperspectral image classification via basic thresholding classifier. IEEE Trans Geosci Remote Sens 54(7):4039–4051

Van den Bergh F, Engelbrecht AP (2004) A cooperative approach to particle swarm optimization. IEEE Trans Evol Comput 8(3):225–239

Wang S, Chung Fl, Xiong F (2008) A novel image thresholding method based on parzen window estimate. Pattern Recogn 41(1):117–129

Weszka JS (1978) A survey of threshold selection techniques. Comput Graph Image Process 7(2):259–265

Wong AK, Sahoo PK (1989) A gray-level threshold selection method based on maximum entropy principle. IEEE Trans Syst Man Cybern 19(4):866–871

Xu X, Li W, Ran Q, Du Q, Gao L, Zhang B (2018) Multisource remote sensing data classification based on convolutional neural network. IEEE Trans Geosci Remote Sens 56(2):937–949

Zahara E, Fan SKS, Tsai DM (2005) Optimal multi-thresholding using a hybrid optimization approach. Pattern Recogn Lett 26(8):1082–1095

Zhang M, Li W, Du Q (2017) Collaborative classification of hyperspectral and visible images with convolutional neural network. J Appl Remote Sens 11(4):042,607

Zhang M, Li W, Du Q (2018) Diverse region-based cnn for hyperspectral image classification. IEEE Trans Image Process 27(6):2623–2634

Zheng H, Jie J, Hou B, Fei Z (2014) A multi-swarm particle swarm optimization algorithm for tracking multiple targets. In: 2014 IEEE 9th Conference on industrial electronics and applications (ICIEA). IEEE, pp 1662–1665

Acknowledgments

The authors would like to thank Mehmet Altan Toksöz, Student Member, IEEE, for helping by providing the color map information of ground-truth classes as source code and Prof. Jun Li, School of Geography and Planning, Sun Yat-Sen University, China for kindly providing the partial source codes of SVM-based the composite kernel used in this paper. The authors also would like to show their gratitude to Prof. P. Gamba and Prof. D. A. Landgrebe for kindly providing the data sets used in this paper.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Chakraborty, R., Sushil, R. & Garg, M.L. Hyper-spectral image segmentation using an improved PSO aided with multilevel fuzzy entropy. Multimed Tools Appl 78, 34027–34063 (2019). https://doi.org/10.1007/s11042-019-08114-x

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-019-08114-x