Abstract

No-reference image quality assessment (NR-IQA) aims to evaluate the perceived quality of distorted images without prior knowledge of pristine version of the images. The quality score is predicted based on the features extracted from the distorted image, which needs to correlate with the mean opinion score. The prediction of an image quality score becomes a trivial task, if the noise affecting the quality of an image can be modeled. In this paper, gradient magnitude and Wiener filtered discrete wavelet coefficients are utilized for image quality assessment. In order to reconstruct an estimated noise image, Wiener filter is applied to discrete wavelet coefficients. The estimated noise image and the gradient magnitude are modeled as conditional Gaussian random variables. Joint adaptive normalization is applied to the conditional random distribution of the estimated noise image and the gradient magnitude to form a feature vector. The feature vector is used as an input to a pre-trained support vector regression model to predict the image quality score. The proposed NR-IQA is tested on five commonly used image quality assessment databases and shows better performance as compared to the existing NR-IQA techniques. The experimental results show that the proposed technique is robust and has good generalization ability. Moreover, it also shows good performance when training is performed on images from one database and testing is performed on images from another database.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Recent advances in technology has resulted in a rapid increase in the use of multimedia content, which consists of audio, images and videos. Image acquisition systems and transmission mediums introduce distortions and artifacts in images that result in a degradation of their quality. Human beings who are the end-user of the multimedia content demands high-quality, while network providers strive for scalable and adaptable solutions. A trade-off between perceptual quality of multimedia content and the resources has to be reached. Therefore, a need for system that can perform image quality assessment (IQA) has gained importance. The IQA techniques can be broadly categorized into subjective and objective IQA. IQA performed by human beings is known as subjective IQA, which suffer from certain drawbacks, like tedious nature of the task, a large amount of time required for evaluation, and the results may vary depending upon the mood of the evaluator. This yields a need for designing new techniques that can perform IQA without human evaluators based on objective features extracted from distorted images. These IQA techniques are known as objective IQA techniques.

Objective IQA techniques are categorized into full reference IQA (FR-IQA), reduced reference IQA (RR-IQA), and no-reference IQA (NR-IQA). FR-IQA techniques [1, 9, 14, 37, 48] can only assess the image quality when the reference image is available. Structural similarity index (SSIM) and peak signal to noise ratio (PSNR) are commonly used FR-IQA techniques [8]. Quality score prediction performed by FR-IQA techniques may not correlate with the perceptual quality score of the distorted image because FR-IQA used pixel-wise approach. In real world scenarios, reference image is usually not available, therefore FR-IQA techniques have limited applications. In comparison to FR-IQA, RR-IQA techniques [4, 27, 28, 41, 54, 67] require some parameters extracted from the reference image to perform IQA. Similar to FR-IQA, RR-IQA techniques also require parameters extracted from the reference image, which are not be available in the majority of the real world scenarios. NR-IQA techniques [11, 18, 22, 29, 38,39,40, 46, 55, 65, 68] does not require any prior information regarding the reference image to perform IQA, due to which these techniques are widely acceptable for many applications.

Several NR-IQA techniques that extract features in spatial and transform domain have been proposed. In transform domain methods, image is decomposed into various subbands also called channels by using series of linear filters. The aim of this processing is to mimic the human visual system. Researchers have used different sets of linear filters and decompositions based on the requirement, computational complexity, and perceptual quality. These include discrete cosine transform (DCT) [47], Gabor decomposition [33], discrete wavelet transform (DWT) with steerable pyramids [36], curvelet [24], and shearlet [20] etc. In the spatial domain methods, NR-IQA techniques have utilized this ability of gradient magnitude to predict the image quality score [15, 19, 23, 61]. In [58], gradient magnitude (GM) and laplacian of Gaussian (LOG) have been used to predict the quality score. The technique follows a two-step approach i.e., identification of distortion type and quality score prediction. GM-LOG exploits the joint statistical relationship between the local contrast features of LOG and GM. Joint adaptive normalization based on gain control and divisive normalization models on the local neighborhood is used to remove the spatial redundancies of GM and LOG coefficients.

Many NR-IQA techniques have used DCT, DWT, GM-LOG and spectral domain features [26, 47, 58, 62] to de-correlate the images and assess the distortion in the image, but none of these methods try to estimate the noise within the image. The estimation of noise affecting an image makes IQA a trivial task and it can help in a better prediction of the image quality score. Wiener filter can be used with DWT to estimate the noise in the image. DWT coefficients are conditionally independent Gaussian variables and since noise is usually estimated by a stationary zero mean Gaussian variable therefore, noise can be estimated using a Wiener filter [44]. To the best of our knowledge no existing NR-IQA technique has used Wiener filter to estimate the noise affecting an image for the prediction of image quality score despite of its ability to model noise.

This paper proposes a new NR-IQA technique that takes advantage of the multi-resolution statistical properties of DWT coefficients for the estimation of noise in the images using Wiener filter. The proposed technique also offers the advantage of combining spatial and transform domain features. The task of IQA becomes trivial and straight forward if the noise present in the image can be estimated. Gradient magnitude and DWT is computed for the distorted image. Wiener filter is applied on the DWT coefficients of the image to estimate the noise. The estimated noise image is constructed by taking the inverse DWT. Joint adaptive normalization is applied on the gradient magnitude and the estimated noise image to extract features that are statistically independent of each other. These features are given as input to compute the image quality. Most of the success contributed to the newly proposed technique arises from the Wiener filtered features, which characterize various image semantic structures like lines, edges, and corners. These structures are closely related to the perceptual quality of images. Wiener filtered DWT coefficients are sensitive to intensity changes in the image. Moreover, the Wiener filtered DWT coefficients based features respond well to local contrast in a small spatial neighborhood. These characteristics allow the proposed technique to correlate well with perceptual quality of image. The major contributions of the proposed technique are:

-

1.

Wiener filtered DWT coefficients are used to estimate noise affecting the image to better predict the quality score.

-

2.

Three new universal IQA methods are proposed based on the estimated noise image and Gradient magnitude features, which improve the natural scene statistics (NSS) of extracted features for better image quality score prediction.

-

3.

To better represent noise affecting the image, decomposition level for DWT is determined using PSNR between the Wiener filtered and the distorted image.

-

4.

The proposed technique shows better performance in predicting image quality and provides a robust model that is independent of the database.

Rest of the paper is organized as follows. Some recent NR-IQA methods are reviewed in Section 2. Section 3 explains the proposed methodology. Section 4 presents the experimental results followed by conclusion in Section 5.

2 Related work

NR-IQA techniques are broadly divided into two categories i.e., distortion specific and general purpose techniques [36]. In distortion specific techniques, prior information regarding the type of distortion affecting the image is available [3, 6, 16, 49, 50, 53]. These techniques show better performance, but in practical scenarios the distortion type affecting the image is not known, which limits their application. In contrast to distortion specific techniques, general purpose NR-IQA techniques require no prior information regarding the distortion type affecting the image. General purpose NR-IQA techniques used either opinion unaware features [34, 59, 64] or opinion aware features [2, 24, 26, 58].

Recently some NR-IQA techniques based on opinion unaware features have been proposed, which do not require any training over difference mean opinion score (DMOS) for the prediction of image quality score. Natural image quality evaluator (NIQE) [34] is an opinion unaware NR-IQA technique that extracts features in spatial domain and does not require training on DMOS. NIQE extracts a collection of quality aware statistical features based on a corpus of natural images. The extracted features are then fitted on a multivariate Gaussian (MVG) model. A multivariate Gaussian fit extracted from the corpus of natural images is used to compute the quality of the test image by measuring the distance between the features extracted from the test and pristine images. Integrated local natural image quality evaluator (IL-NIQE) is also an opinion-unaware NR-IQA technique [64], which extracts natural scene statistics (NSS) based features that are used to learn a MVG distribution. The distance between the MVG distribution of natural and distorted images is used to assess the quality of images. IL-NIQE is based on NIQE, but it differs from NIQE in terms of quality aware features and global MVG model. In [59], a codebook representation using raw image patches and soft descriptors are used to predict the quality of images. The technique is termed as codebook representation for no-reference image assessment (CORNIA). Opinion unaware NR-IQA techniques are highly dependent on the quality and content of images in the corpus. Furthermore, the performance of these techniques is limited by the number of images in the corpus i.e., the performance decreases if the set of images in the corpus is small [57]. Although opinion unaware NR-IQA techniques offer an attractive proposition of requiring no training for IQA, but the ultimate users of images are usually human beings. Due to this reason subjective evaluation performed by human beings is considered as standard and DMOS is the best solution for image quality assessment.

Opinion aware NR-IQA techniques require DMOS values evaluated by human observers for training the prediction model. Majority of the opinion aware NR-IQA techniques proposed in literature have used NSS properties of images to predict the quality score. NSS based techniques work under the assumption that natural images follow a regular pattern, which is disrupted in the presence of distortion. NSS based NR-IQA techniques measure the deviation in statistics between the natural and distorted images to assess the quality of images. NSS based features can effectively represent the degradation in images therefore, they are the most commonly used.

Blind image integrity notator using DCT statistics (BLIINDS-II) utilizes DCT to predict the quality score of images [47]. Twenty four features are extracted using DCT, which are given as input to the Bayesian inference model for the prediction of quality score. Wavelet transform often substitutes typical Fourier transform based on the property of concentrating significant data into a fewer coefficients. In image quality assessment the use of wavelet transform is wide and varied. In [13], just noticeable blur (JNB) concept and the wavelet transform is used to develop blur objective quality metric. This model rely on human visual system (HVS) and used psychometric functions to approximate the perceived blur in the edge image. In [31], the ability of wavelet transforms to extract high frequencies has been exploited for edge analysis. Multi-scale wavelet based structure aids in blur image quality assessment, which represent the gradient information of the color images. In [60], the probability of blur detection based on granularity, sharpness, and L1-norm estimation of the given image in DWT is cumulated to form a metric for quality assessment.

In distortion identification-based image verity and integrity evaluation (DIIVINE) [36], DWT over three scales and six orientations is used to extract a feature set. These features are subjected to support vector classification (SVC) for the prediction of distortion type and subsequently given as an input to support vector regression (SVR) for the prediction of quality score. Complex distortion identification-based image verity and integrity evaluation (C-DIIVINE) has used complex DWT over two scales and six orientations [62]. A complex Gaussian mixture model, generalized Gaussian distribution and Cauchy distribution is used to model the complex DWT features. In C-DIIVINE, the distortion type affecting the image is determined using SVC and then quality score is predicted using SVR. In [63], quality aware features are extracted using joint generalized local binary pattern (GLBP). The images are decomposed into multi-scale subbands using Laplacian of Gaussian. These subbands are encoded with GLBP and the quality aware features are formed from the joint GLBP histograms, which are given as input to SVR to predict image quality score. In [5], free energy based brain theory is used to extract three group of features i.e., HVS features using free brain theory, structural information and gradient information. The extracted features are given as input to SVR to assess the quality of image. The major disadvantage of opinion aware NR-IQA techniques is the requirement of large number of DMOS for training a robust model that can predict the quality score of images. Execution time and computational expense is of vital importance when designing the NR-IQA techniques for real-time scenarios. It is established in literature that GM-LOG is less computationally complex and also captures local image features effectively in comparison to many NR-IQA techniques. These properties motivate us to use image gradient and estimated noise image using Wiener filtered DWT coefficients for NR-IQA.

3 Proposed methodology

Figure 1 presents the proposed methodology for NR-IQA using Wiener filtered wavelet coefficients and gradient magnitude. The DWT and GM is applied to the input distorted image. Wiener filter is applied on wavelet coefficients to estimate the noise affecting the image in wavelet domain. Inverse DWT is then applied on filtered DWT coefficients to reconstruct the estimated noise image N(i, j). Joint adaptive normalization is then applied on N(i, j) and GM(i, j) of the distorted image to extract feature vector Fv, which are given as input to the SVC for classification of distortion type and computation of threshold value TH. The threshold value indicates the confidence with which the distortion affecting the image is believed to belong to a certain distortion type. If the total number of distortion types are ℵ then, the extracted features are given to ℵ distortion specific regression models to compute ℵ quality scores. The ℵ quality scores are combined together using threshold value to compute a single universal quality score that contains contributions from all the distortion types. The details of each block are as follows.

3.1 Discrete wavelet transform (DWT)

DWT has been extensively used in many imaging problems including NR-IQA. Fourier transform only provides information about the frequency component of the image. However, DWT uses windows of variable size to provide spectral as well as spatial information of the image. DWT provides precise frequency and time information at low and high frequencies respectively. This property makes DWT suitable for analysis of irregular patterns such as noise. Two dimensional DWT is given as,

where I represents the input image, q0 is an arbitrary starting scale, \(W_{\phi (q_{0},m,n)}\) represents an approximation of I(i, j), \(W_{\psi ^{t}(q,m,n)}\) represents the horizontal. vertical and diagonal details of I(i, j). ϕq, m, n and \(\psi ^{t}_{q,m,n}\) are given as,

3.2 Gradient magnitude

Human visual system is highly sensitive to image local structures, which are effectively captured by the image gradient. IQA techniques proposed in [15, 19, 23, 61] have utilized this ability of gradient magnitude to predict the image quality score. The gradient magnitude of image is calculated as,

where hx and hy are gradient windows in horizontal and vertical direction respectively, and ⊗ denotes the convolution operator.

3.3 Wiener filter

Wiener filter works by minimizing the error between the observed signal and desired signal, which is generally considered as minimum mean square error filter. The wavelet coefficients are assumed to be conditionally independent random variables. The parameters for these wavelet coefficients are spatially varying and can be estimated by neighborhood in the adjacent scales and spatial locations. The noise in image is modeled as stationary independent zero-mean Gaussian variables. As both the wavelet coefficients and noise are modeled as Gaussian distribution, therefore noise can be estimated using Wiener filter [12]. The wavelet coefficients are computed over the distorted image, which can be written as,

where W(m, n) are the wavelet coefficients including approximation, horizontal, vertical and diagonal details, sm, n are the coefficients of the clean image, and ηm, n are the coefficients of noise component. Noise affecting the image in wavelet domain is estimated using Wiener filter as,

where ℜ(m, n) is the estimated noise in wavelet domain, \({\sigma ^{2}_{n}}\) is the variance of ηm, n and q(m, n) is calculated as,

where R is the size of subbands and Qm, n is calculated as

The estimated noise image is then computed by applying the inverse DWT on estimated noise in wavelet domain as,

where ℜϕ(q0, m, n) represents the approximation and \(\Re _{\psi }^{t}(q,m,n)\) represents the horizontal, vertical and diagonal details of the noise component. The noise image obtained using inverse DWT is then used to extract features for NR-IQA.

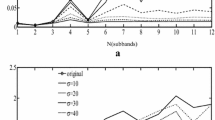

In order to show the significance of Wiener filtered DWT coefficients, the Euclidean distance between the marginal distribution of pristine and distorted images are calculated. The feature set, which gives larger Euclidean distance is considered to be better for IQA. Figure 2 shows the plots for distribution of LOG and Wiener filtered DWT coefficients for pristine and synthetic images. It can be observed that the marginal distributions for the Wiener filtered DWT coefficients show larger difference for synthetic and natural images as compared to LOG coefficients. Therefore, Wiener filtered DWT coefficients show better performance as compared to LOG features. This is also validated by the Euclidean distance of 0.1091 and 0.2355 between the top row pristine image and bottom row synthetic image for the LOG feature distribution and Wiener filtered DWT coefficient features respectively.

Figure 3 shows the change in marginal distribution of LOG features and Wiener filtered DWT coefficient features over varying levels of Gaussian blur distortion type. It can be observed that the difference between the marginal distributions of pristine and distorted images for the Wiener filtered DWT coefficients show larger difference than the distribution of LOG features. This is also validated by measuring the Euclidean distance between the distributions of LOG features and Wiener filtered DWT coefficients of pristine and distorted images with different levels. The Euclidean distance for the Wiener filtered DWT coefficients between the pristine and distorted images for level 1, level 2, level 3 and level 4 distortion is 0.2398, 0.2655, 0.2965, 0.4089 respectively. In comparison the Euclidean distance for the LOG features between the pristine and distorted images for level 1, level 2, level 3 and level 4 distortion is 0.0768, 0.1131, 0.1386, 0.1552 respectively. It can be seen that the Euclidean distance increases as the level of distortion increases for both the LOG features and Wiener filtered DWT coefficients, but Euclidean distance is higher for Wiener filtered DWT coefficients, which shows that Wiener filtered DWT coefficients will perform better than LOG features.

3.4 Joint adaptive normalization (JAN)

GM and Wiener filtered DWT coefficients remove significant amount of redundant information present in the image but correlation among neighboring pixels also known as local redundancy may still be present. This is also valid for other bandpass based feature extraction techniques such as discrete cosine transform, Laplacian of Gaussian, mean subtracted contrast normalized coefficients etc. The local redundancies are removed by using adaptive gain control [7] or divisive normalization models [30, 45]. These techniques divide the image into channels of different frequencies and then normalize each coefficient with the average energy of a local neighborhood, which is centered at the current coefficient. This kind of normalization whitens the image and causes loss in the contrast. In the proposed technique, the features are divided into two channels i.e., GM and N. Unlike [30, 45] where normalization is performed over each channel, joint normalization of GM and N is performed to remove the correlation between gradient image and the estimated noise image. JAN is performed on GM(i, j) and N(i, j) components of the image as,

where ε is a small positive constant to avoid numerical instability when GI is small, which is calculated as,

where Ωi, j is a local window centered at (i, j) and whose size is dependent on a spatially truncated Gaussian kernel ω of scale 1, ω consists of positive weights whose sum is equal to 1, and \(F_{I} = \sqrt {{G^{2}_{M}}(i,j) + N^{2}(i,j)}\).

3.5 Feature extraction

After JAN, the joint empirical distribution of gradient image \(\tilde {G}_{M}(i,j)\), and noise image \(\tilde {N}_{I}(i,j)\) is computed. Quantization is applied to get a bivariate histogram of \(\tilde {G}_{M}(i,j)\) and \(\tilde {N}_{I}(i,j)\). \(\tilde {G}_{M}(i,j)\) is quantized into O bins i.e., g1, g2, … , gO, and \(\tilde {N}_{I}(i,j)\) into R bins i.e., l1, l2, … , lR. In terms of probability function, the joint empirical distribution is given as,

where u = 1, … , O and v = 1, … , R. In order to reduce the high dimensionality of F(u, v), smaller set of quality determining features are extracted from F(u, v). The marginal probability functions PG and PN of \(\tilde {G}_{M}(i,j)\) and \(\tilde {N}_{I}(i,j)\) are then obtained as,

The dependency between gradient magnitude and the estimated noise image is not modeled by PG and PN. If gradient magnitude and estimated noise image features are independent then Fu, v = PG × PN for all u and v. An index can be defined, which measures the dependence between gradient magnitude and estimated noise image features. It is given as,

Usually gradient magnitude and estimated noise image features are not independent. Therefore, using Ku, v directly as features is not a good choice since, it has the same dimensions as Fu, v. As a substitute we can calculate the dependency of each specific value \(\tilde {G}_{M}(i,j)=g_{m}\) against all possible values of \(\tilde {N}_{I}(i,j)\) using marginal probabilities \(P(\tilde {G}_{M}(i,j)=g_{u})\) and the dependency of each specific value of \(\tilde {N}_{I}(i,j)=l_{v}\) against all possible values of \(\tilde {G}_{M}(i,j)\) using marginal probability \(P(\tilde {N}_{I}(i,j)=l_{v})\). Since, QG ≥ 0, QN ≥ 0 and \({\sum }_{m}P(\tilde {G}_{M}(i,j)=g_{u})={\sum }_{u}Q(\tilde {N}_{I}(i,j)=l_{v})= 1\). Hence, they can be viewed as probability distributions distributions and are given as,

where QG and QN describe the statistical interaction between normalized gradient magnitude features and estimated noise image features. It can be seen from Fig. 4 that the marginal distribution for natural images and synthetic images are dissimilar. Three different combinations of marginal distributions are explored to constitute three different models labeled as GM-WFW1, GM-WFW2 and GM-WFW3. PG and PN are employed in model GM-WFW1, QG and QN are used in model GM-WFW2 and all of the statistical features PG, PN, QG and QN are used in the model GM-WFW3.

3.6 Quality score prediction using support vector classification and regression

The extracted features are given as input to the a pre-trained SVC that aims to identify the distortion type affecting the input image. The input to the pre-trained SVM classifier model are extracted features and the output is the threshold value (TH) defining the confidence with which a distortion type affecting the input image belongs to a certain distortion type. The statistical features obtained in the previous step are given as input to the SVR, which aims to find a function to predict the quality score. A distortion specific regression model for each distortion type is trained i.e., if there are ℵ number of distortion types, ℵ number of regression models are trained and ℵ quality scores for each input image are obtained. Once ℵ number of quality scores over each distortion type for the input image is computed using distortion specific regression models, the overall quality score Qtotal is obtained as,

where \(q_{\hbar }\) is the estimated quality score for the \(\hbar ^{th}\) distortion type. The weight of the predicted quality score for each distortion type \(\tau _{\hbar }\) is obtained by utilizing TH as,

where \(P(\wp =\aleph ^{\hbar }|F_{v})\) is the posterior probability that random identity function ℘ belongs to the \(\hbar ^{th}\) distortion type i.e., \(\aleph ^{\hbar }\). \(T_{H}^{\hbar }\) is the threshold function value obtained from SVC for the \(\hbar ^{th}\) distortion type, Fv are the extracted features for NR-IQA and \(\hbar = 1\ldots \aleph \).

Typically, the fitting of DMOS values is performed by using a nonlinear regression logistic function, because the behavior of the subjective score given by the user is non-linear. This non-linear mapping is used while calculating the indices of correlation constant and error, which is described as,

where xi is the non-linear mapped score corresponding to the predicted DMOS score x. β1, β2, β3, β4, and β5 are the non-linear curve fitting function parameters.

4 Experimental results

4.1 Performance evaluation parameters

The proposed method is evaluated using Spearman rank ordered correlation constant (SROCC) and Pearson correlation constant (PCC). All the parameters measure the consistency between the predicted quality score using NR-IQA techniques and DMOS. A value close to 1 for SROCC and PCC, signifies superior performance. SROCC is given as,

where Xi = rank(Dp), \(\bar {X}=midrank(D_{p})\), Yi = rank(D) and \(\bar {Y}=midrank(D)\). PCC is given as,

where Dp is the predicted quality score and D is the DMOS.

4.2 IQA databases

Five commonly used IQA databases i.e., LIVE [51], CSIQ [17], TID2008 [43], TID2013 [42] and CID2014 [25] are used for evaluating the proposed NR-IQA models. The LIVE database is formed at the University of Austin, Texas. The database is composed of 29 high resolution (768 × 512) 24-bits/pixel RGB color reference images with 5 types of distortions, i.e., fast fading (FF), Gaussian blur (GB), JPEG compression (JPEG), JPEG2000 compression (JP2K), and white noise (WN). The LIVE database contains a total of 779 distorted images. The TID2008 contains 25 original reference images that are distorted using 17 type of distortions. Each reference image is distorted at 4 different levels of individual distortions. TID2008 contains a total of 1700 distorted images. The distortions present in TID2008 database are WN, additive noise in color components (ANC), spatially correlated noise (SCN), masked noise (MN), impulse noise (IN), quantization noise (QN), GB, image de-noising (ID), JPEG, JP2K, JPEG transmission errors (JPEGTE), JPEG2000 transmission errors (JP2KTE), non-eccentricity pattern noise (NEPN), local block-wise distortions (LBD), mean shift (MES) and contrast change (CC). CSIQ database consists of 30 reference images that are distorted by 6 different distortions that include GB, WN, global contrast decrements (GC), JPEG, JP2K and additive Gaussian pink noise (PN). A total of 900 images are present in the CSIQ database. The subjective quality score of 866 images is provided. The evaluators selected for the evaluation of images were from different countries and diverse social levels. The CID2014 database consists of 23 reference images of resolution 800 × 800. Six type of distortions are considered, which include Poisson noise (PNO), JPEG, JP2K, GB, SGCK gamut mapping distortion (GMD) and delta GMD. Each image is distorted at five different levels of distortion. The subjective quality scores are obtained from 17 subjects who viewed the images at two distances i.e., 100cm and 50cm. CID2014 database consists of a total of 690 images. The TID2013 consists of 25 pristine images that are affected by 24 types of distortions i.e., WN, ANC, SCN, MN, high frequency noise (HFN), IN, QN, GB, ID, JPEG, JP2K, JPEGTE, JP2KTE, NEPN, LBD, MES, CC, change of color saturation (CCS), multiplicative Gaussian noise (MGN), comfort noise (CN), lossy compression of noisy images (LCNI), image color quantization with dither (ICQD), chromatic aberrations (CA), sparse sampling and reconstruction (SSR). TID2013 contains DMOS of 3000 images for quality evaluation of images.

4.3 Parameter selection of gradient image and wiener filtered image

The range of variance σ for gradient image is tested between 0.5 to 0.8. It is observed that there is no considerable difference in the overall performance of the proposed NR-IQA technique, if σ is varied between 0.5 to 0.8 except for the case of fast fading distortion type. The variation of σ from 0.5 to 0.8 leads to an increase in performance of spearman correlation coefficient, from 0.90 to 0.92. Therefore, a value of 0.5 is selected for GB, JP2K, JPEG, and WN, whereas a value of 0.8 is used for FF.

It has been observed that the performance of the proposed methodology is affected by the level of wavelet decomposition. Therefore, for optimal performance different wavelet decomposition levels are used for each distortion type. In order to find the correct decomposition level for each distortion type, the decomposition level is raised from 1 to 4. PSNR value is calculated between the attenuated wavelet coefficients and the distorted wavelet coefficients. The level with the maximum PSNR value is chosen as the decomposition level. Similarly, the PSNR values are plotted against the base function of wavelet transform in Fig. 5. Haar function shows the highest ratio for WN, JP2K, GB and FF and coiflet function shows highest for JPEG. Figure 6 shows the ratio between distorted and Wiener filtered image values for five distortion types when noise variance \({\sigma _{n}^{2}}\) is varied. The value of \({\sigma _{n}^{2}}\) that produces the largest value of ratio for a certain distortion type is chosen as the \({\sigma _{n}^{2}}\) for that distortion type.

4.4 Performance comparison

The proposed NR-IQA technique is compared with three FR-IQA i.e., PSNR, SSIM [59] and FSIM [61] and ten NR-IQA techniques i.e., blind image quality index (BIQI) [35], BLIINDS-II [47], blind/referenceless image quality evaluator (BRISQUE) [33], codebook representation for no-Reference image assessment (CORNIA) [59], DIIVINE) [36], blind image quality assessment using joint statistics of gradient magnitude and Laplacian features (GM-LOG) [58], GLBP [63], NR free energy metric (NFERM) [5], NR structural and luminance features (NRSL) [21], PATCH-IQ [32], Hybrid [56] and improved NSS [66]. The performance is evaluated in terms of SROCC and PCC. The dataset is portioned into two non-overlapping sets i.e., training and testing set. The training and testing is performed 1000 times by randomly selecting 80% images for training and 20% for testing. It is ensured that there is no content overlap between the training and testing dataset. Median values of SROCC and PCC over 1000 runs are reported. The optimal parameters for SVR regression are computed using grid search.

Table 1 shows the overall performance of the proposed methodology in comparison to the state-of-the-art NR-IQA techniques. A universal quality score is predicted using all the distortion specific regression models based on threshold values TH. The SROCC and PCC scores of top two performing techniques are shown in bold. It can be observed that FSIM gives the best performance overall, which is a FR-IQA technique and is expected to give better performance than NR-IQA techniques. The proposed method GM-WFW3 shows the best performance and outperforms all the NR-IQA techniques in terms of higher SROCC and PCC scores. GM-WFW3 uses gradient magnitude and the estimated noise image using Wiener filter for the prediction of quality score. GM-WFW2 and GM-WFW3 also show promising results as only M3 and NRSL show better performance in comparison. Table 2 shows the performance comparison of proposed methodology with state-of-the-art NR-IQA techniques when all the images in a particular database are considered irrespective of distortion type and a single universal quality score is computed using threshold values TH. The bold face values in Table 2 show the top two performance values. It can be observed that the proposed NR-IQA GM-WFW3 and GM-WFW2 shows the best performance over TID2013 and CID2014 IQA databases. The third best performing technique is also the proposed technique i.e., GM-WFW1.

Comparison of the proposed method with NR-IQA techniques in terms of SROCC values, on individual distortion types over three subjective IQA databases is presented in Table 3. The NR-IQA techniques achieving the best performance in comparison to existing NR-IQA techniques are presented in boldface. The hit-count in Table 3 is the number of times each model is ranked best or better than existing NR-IQA techniques for a particular distortion type. The proposed method performs better than the state-of-the-art NR-IQA techniques. It can be observed that the proposed model GM-WFW3 ranks first, having hit count value of 48, followed by proposed model GM-WFW1, having hit-count value of 26. The third ranked model is GM-WFW2, which has a hit count of 21.

In Tables 1, 2 and 3 training and testing is performed on images from the same database. NR-IQA technique should be database independent i.e., the model trained on one database should be equally affective in predicting the quality score of images in other databases. Therefore, to establish the generality and robustness of the proposed technique, cross database validation is performed. Table 4 presents the performance comparison of proposed models with state-of-the-art NR-IQA techniques when training is preformed on images from one database and testing is performed on the images from another database in terms of SROCC score. Three databases are considered, which gives a combination of six training and test database pairs. The GM-WFW3 model outperforms the NR-IQA techniques by achieving best SROCC scores over all the six combination pairs.

The execution time of a technique is of importance in many real-time imaging applications. Hence, the runtime is important when evaluating an NR-IQA technique. The computational complexity of the proposed model is computed along with the existing NR-IQA models, on Core i3@2GHz MATLAB 8.1.0.604 programming environment. The performance comparison in terms of execution time is presented in Table 5. The proposed method is ranked sixth in terms of execution time. However it shows the best performance in terms of highest SROCC and PCC score. It can also be observed that the run-time of proposed methods i.e., GM-WFW1, GM-WFW2 and GM-WFW3 is very close to the run-time of other NR-IQA techniques.

5 Conclusion

No-reference image quality assessment has gained importance due to rapid increase in the use of multimedia content. In this paper, three new NR-IQA models based on NSS features, extracted using Wiener filtered wavelet coefficients and gradient magnitude is proposed. Estimating the noise affecting the quality of an image is important since, it can make the task of IQA trivial. In this work, Wiener filter is utilized to improve the NSS of the extracted features for better prediction of quality score by estimating the noise in the image. Selection of optimum parameters used to compute the NSS features include the decomposition level of DWT, basis function of DWT and standard deviation of Wiener filter. The proposed methodology is tested on five commonly used subjective IQA databases and compared to three FR and ten NR-IQA techniques. It is observed that the proposed method, which utilizes features from both the gradient magnitude and Wiener filtered estimated noise image i.e., GM-WFW3 outperforms the state-of-the art image quality assessment techniques, in terms of higher SROCC and PCC score. The experimental results show that proposed technique is robust and learns a generalized model that is database independent. The proposed technique is also competitive in terms of execution time when compared with established NR-IQA techniques.

References

Bosse S, Maniry D, Müller K-R, Wiegand T, Samek W (2016) Neural network-based full-reference image quality assessment. In: Picture coding symposium (PCS), 2016. IEEE, pp 1–5

Fang Y, Ma K, Wang Z, Lin W, Fang Z, Zhai G (2015) No-reference quality assessment of contrast-distorted images based on natural scene statistics. IEEE Signal Process Lett 22(7):838–842

Ferzli R, Karam LJ (2009) A no-reference objective image sharpness metric based on the notion of just noticeable blur (jnb). IEEE Trans Image Process 18(4):717–728

Golestaneh S, Karam LJ (2016) Reduced-reference quality assessment based on the entropy of dwt coefficients of locally weighted gradient magnitudes. IEEE Trans Image Process 25(11):5293–5303

Gu K, Zhai G, Yang X, Zhang W (2015) Using free energy principle for blind image quality assessment. IEEE Trans Multimed 17(1):50–63

Guan J, Zhang W, Gu J, Ren H (2015) No-reference blur assessment based on edge modeling. J Vis Commun Image Represent 29:1–7

Heeger DJ (1992) Normalization of cell responses in cat striate cortex. Vis Neurosci 9(2):181–197

Hore A, Ziou D (2010) Image quality metrics: Psnr vs. ssim. In: 2010 20th international conference on pattern recognition (icpr). IEEE, pp 2366–2369

Huang Y, Chen X, Ding X (2016) A harmonic means pooling strategy for structural similarity index measurement in image quality assessment. Multimed Tools Appl 75(5):2769–2780

Huynh-Thu Q, Ghanbari M (2008) Scope of validity of psnr in image/video quality assessment. Electron Lett 44(13):800–801

Jenadeleh M, Moghaddam ME (2017) Biqws: efficient wakeby modeling of natural scene statistics for blind image quality assessment. Multimed Tools Appl 76(12):13859–13880

Kazubek M (2003) Wavelet domain image denoising by thresholding and wiener filtering. IEEE Signal Process Lett 10(11):324–326

Kerouh F, Serir A (2014) A perceptual blind blur image quality metric. In: 2014 IEEE international conference on acoustics, speech and signal processing (ICASSP). IEEE, pp 2784–2788

Khosravi MH, Hassanpour H (2017) Model-based full reference image blurriness assessment. Multimed Tools Appl 76(2):2733–2747

Kim D-O, Han H-S, Park R-H (2010) Gradient information-based image quality metric, IEEE Trans Consum Electron 56(2):930–936

Kristan M, Perš J, Perše M, Kovačič S (2006) A bayes-spectral-entropy-based measure of camera focus using a discrete cosine transform. Pattern Recognit Lett 27(13):1431–1439

Larson EC, Chandler DM (2010) Most apparent distortion: full-reference image quality assessment and the role of strategy. J Electron Imaging 19(1):011006–011006

Lee D, Plataniotis KN (2016) Toward a no-reference image quality assessment using statistics of perceptual color descriptors. IEEE Trans Image Process 25(8):3875–3889

Li C, Bovik AC (2010) Content-partitioned structural similarity index for image quality assessment. Signal Process Image Commun 25(7):517–526

Li Y, Po L-M, Xu X, Feng L, Yuan F, Cheung C-H, Cheung K-W (2015) No-reference image quality assessment with shearlet transform and deep neural networks. Neurocomputing 154:94–109

Li Q, Lin W, Xu J, Fang Y (2016) Blind image quality assessment using statistical structural and luminance features. IEEE Trans Multimedia 18(12):2457–2469

Li J, Zou L, Yan J, Deng D, Qu T, Xie G (2016) No-reference image quality assessment using prewitt magnitude based on convolutional neural networks. Signal Image and Video Process 10(4): 609–616

Liu A, Lin W, Narwaria M (2012) Image quality assessment based on gradient similarity. IEEE Trans Image Process 21(4):1500–1512

Liu L, Dong H, Huang H, Bovik AC (2014) No-reference image quality assessment in curvelet domain. Signal Process Image Commun 29(4):494–505

Liu X, Pedersen M, Hardeberg JY (2014) Cid: Iq–a new image quality database. In: International conference on image and signal processing. Springer, pp 193–202

Liu L, Hua Y, Zhao Q, Huang H, Bovik AC (2016) Blind image quality assessment by relative gradient statistics and adaboosting neural network. Signal Process Image Commun 40:1–15

Liu M, Gu K, Zhai G, Le Callet P, Zhang W (2017) Perceptual reduced-reference visual quality assessment for contrast alteration. IEEE Trans Broadcast 63(1):71–81

Liu D, Li F, Song H (2017) Regularity of spectral residual for reduced reference image quality assessment. IET Image Process 11(12):1135–1141

Lu W, Xu T, Ren Y, He L (2016) Statistical modeling in the shearlet domain for blind image quality assessment. Multimed Tools Appl 75(22):14417–14431

Lyu S, Simoncelli EP (2008) Nonlinear image representation using divisive normalization. In: IEEE conference on computer vision and pattern recognition, CVPR 2008. IEEE, pp 1–8

Maalouf A , Larabi M-C (2010) A no reference objective color image sharpness metric. In: 2010 18th European signal processing conference. IEEE, pp 1019–1022

Manap RA, Shao L, Frangi AF (2017) Patch-iq: a patch based learning framework for blind image quality assessment. Inf Sci 420:329–344

Mittal A, Moorthy AK, Bovik AC (2012) No-reference image quality assessment in the spatial domain. IEEE Trans Image Process 21(12):4695–4708

Mittal A, Soundararajan R, Bovik AC (2013) Making a “completely blind” image quality analyzer. IEEE Signal Process Lett 20(3):209–212

Moorthy AK, Bovik AC (2010) A two-step framework for constructing blind image quality indices. IEEE Signal Process Lett 17(5):513–516

Moorthy AK, Bovik AC (2011) Blind image quality assessment: from natural scene statistics to perceptual quality. IEEE Trans Image Process 20(12):3350–3364

Ni Z, Ma L, Zeng H, Chen J, Cai C, Ma K-K (2017) Esim: Edge similarity for screen content image quality assessment. IEEE Trans Image Process 26(10):4818–4831

Nizami IF, Majid M, Afzal H, Khurshid K (2017) Impact of feature selection algorithms on blind image quality assessment. Arab J Sci Eng 43(8):4057–4070

Nizami IF, Majid M, Khurshid K (2017) Efficient feature selection for blind image quality assessment based on natural scene statistics. In: 2017 14th international bhurban conference on applied sciences and technology (IBCAST). IEEE, pp 318–322

Nizami IF, Majid M, Khurshid K (2018) New feature selection algorithms for no-reference image quality assessment. Appl Intell 48:3482–3501

Omari M, El Hassouni M, Abdelouahad AA, Cherifi H (2015) A statistical reduced-reference method for color image quality assessment. Multimed Tools Appl 74(19):8685–8701

Ponomarenko N, Jin L, Ieremeiev O, Lukin V, Egiazarian K, Astola J, Vozel B, Chehdi K, Carli M, Battisti F et al (2015) Image database tid2013: peculiarities, results and perspectives. Signal Process Image Commun 30:57–77

Ponomarenko N, Lukin V, Zelensky A, Egiazarian K, Carli M, Battisti F (2009) Tid2008-a database for evaluation of full-reference visual quality assessment metrics. Adv Mod Radioelectron 10(4): 30–45

Portilla J, Strela V, Wainwright MJ, Simoncelli EP (2001) Adaptive wiener denoising using a gaussian scale mixture model in the wavelet domain. In: Proceedings 2001 international conference on image processing, vol 2. IEEE, pp 37–40

Rao RP, Olshausen BA, Lewicki MS, Jordan MI, Dietterich TG (eds) (2002) Probabilistic models of the brain: perception and neural function. MIT Press, Cambridge

Rezaie F, Helfroush MS, Danyali H (2017) No-reference image quality assessment using local binary pattern in the wavelet domain. Multimed Tools Appl 77(2):2529–2541

Saad MA, Bovik AC, Charrier C (2012) Blind image quality assessment: a natural scene statistics approach in the dct domain. IEEE Trans Image Process 21(8):3339–3352

Saha A, Wu QJ (2016) Full-reference image quality assessment by combining global and local distortion measures. Signal Process 128:186–197

Sang Q, Qi H, Wu X, Li C, Bovik AC (2014) No-reference image blur index based on singular value curve. J Vis Commun Image Represent 25(7):1625–1630

Serir A, Beghdadi A, Kerouh F (2013) No-reference blur image quality measure based on multiplicative multiresolution decomposition. J Vis Commun Image Represent 24(7):911–925

Sheikh HR, Sabir MF, Bovik AC (2006) A statistical evaluation of recent full reference image quality assessment algorithms. IEEE Trans Image Process 15(11):3440–3451

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 13(4):600–612

Wang C, Shen M, Yao C (2015) No-reference quality assessment for dct-based compressed image. J Vis Commun Image Represent 28:53–59

Wu J, Lin W, Shi G, Li L, Fang Y (2016) Orientation selectivity based visual pattern for reduced-reference image quality assessment. Inf Sci 351:18–29

Wu J, Xia Z, Li H, Sun K, Gu K, Lu H (2017) No-reference image quality assessment with center-surround based natural scene statistics. Multimed Tools Appl 77:20731–20751

Wu M, Chen L, Tian J (2018) A hybrid learning-based framework for blind image quality assessment. Multidim Syst Sign Process 29(3):839–849

Xu S, Jiang S, Min W (2017) No-reference/blind image quality assessment: a survey. IETE Tech Rev 34(3):223–245

Xue W, Mou X, Zhang L, Bovik AC, Feng X (2014) Blind image quality assessment using joint statistics of gradient magnitude and laplacian features. IEEE Trans Image Process 23(11):4850–4862

Ye P, Kumar J, Kang L, Doermann D (2012) Unsupervised feature learning framework for no-reference image quality assessment. In: 2012 IEEE conference on computer vision and pattern recognition (CVPR). IEEE, pp 1098–1105

Yoneyama A, Minamoto T (2015) No-reference image blur assessment in the dwt domain and blurred image classification. In: 2015 12th international conference on information technology-new generations (ITNG). IEEE, pp 329–334

Zhang L, Zhang L, Mou X, Zhang D (2011) Fsim: a feature similarity index for image quality assessment. IEEE Trans Image Process 20(8):2378–2386

Zhang Y, Moorthy AK, Chandler DM, Bovik AC (2014) C-diivine: no-reference image quality assessment based on local magnitude and phase statistics of natural scenes. Signal Process Image Commun 29(7):725–747

Zhang M, Muramatsu C, Zhou X, Hara T, Fujita H (2015) Blind image quality assessment using the joint statistics of generalized local binary pattern. IEEE Signal Process Lett 22(2):207–210

Zhang L, Zhang L, Bovik AC (2015) A feature-enriched completely blind image quality evaluator. IEEE Trans Image Process 24(8):2579–2591

Zhang C, Pan J, Chen S, Wang T, Sun D (2016) No reference image quality assessment using sparse feature representation in two dimensions spatial correlation. Neurocomputing 173:462–470

Zhang Y, Wu J, Xie X, Li L, Shi G (2016) Blind image quality assessment with improved natural scene statistics model. Digital Signal Process 57:56–65

Zhang Y, Phan TD, Chandler DM (2017) Reduced-reference image quality assessment based on distortion families of local perceived sharpness. Signal Process Image Commun 55:130–145

Zhou W, Yu L, Qiu W, Zhou Y, Wu M (2017) Local gradient patterns (lgp): an effective local-statistical-feature extraction scheme for no-reference image quality assessment. Inf Sci 397:1–14

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Khan, M., Nizami, I.F. & Majid, M. No-reference image quality assessment using gradient magnitude and wiener filtered wavelet features. Multimed Tools Appl 78, 14485–14509 (2019). https://doi.org/10.1007/s11042-018-6797-4

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-018-6797-4