Abstract

Due to recent development in technology, the complexity of multimedia is significantly increased and the retrieval of similar multimedia content is an open research problem. In the service of multimedia service, the requirement of Multimedia Indexing Technology is increasing to retrieve and search for interesting data from huge Internet. Since the traditional retrieval method, which is using textual index, has limitation to handle the multimedia data in current Internet, alternatively, the more efficient representation method is needed. Content-Based Image Retrieval (CBIR) is a process that provides a framework for image search and low-level visual features are commonly used to retrieve the images from the image database. The basic requirement in any image retrieval process is to sort the images with a close similarity in term of visual appearance. The color, shape, and texture are the examples of low-level image features. The feature combination that is also known as feature fusion is applied in CBIR to increase the performance, a single feature is not robust to the transformations that are in the image datasets. This paper represents a new Content-Based Image Retrieval (CBIR) technique to fuse the color and texture features to extract local features as our feature vector. The features are created for each image and stored as a feature vector in the database. The proposed research is divided into three phases that feature extraction, similarities match, and performance evaluation. Color Moments (CM) are used for Color features and extract the Texture features, used Gabor Wavelet and Discrete Wavelet transform. To enhance the power of feature vector representation, Color and Edge Directivity Descriptor (CEDD) is also included in the feature vector. We selected this combination, as these features are reported intuitive, compact and robust for image representation. We evaluated the performance of our proposed research by using the Corel, Corel-1500, and Ground Truth (GT) images dataset. The average precision and recall measures are used to evaluate the performance of the proposed research. The proposed approach is efficient in term of feature extraction and the efficiency and effectiveness of the proposed research outperform the existing research in term of average precision and recall values.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Different forms of multimedia contents are growing and Content-Based Image Retrieval (CBIR) has become a challenging research area. With the development of technology and usage of internet, the volume of shared multimedia contents especially digital images has grown exponentially [14, 30, 31]. The retrieval of similar multimedia contents from a large repository is an open research problem. Images are widely used and are covering various application areas such as quality inspection, security for human identification, medical images, geography and so on. In the last few decades image is retrieved by Content instead of text retrieval. Two types of approaches are used to search an image collection; First one is based on a search by metadata known as Text Base Image Retrieval (TBIR) used to retrieve an image from a database. Google, yahoos, Bing are examples of TBIR system. In a text-based image retrieval system, retrieving an image is based on tag or text in a widely used database [21, 48]. This approach is not used to extract the visual information of an image from a database. Additionally, this procedure is normally inadequate because the user doesn’t sort this clarification ineffective way. Three main disadvantages of the text-based image retrieval for a huge database are manual annotation is not feasible because one word may have two or three meanings. Homonyms and Synonyms are a big factor in text-based image retrieval system. Emotions and unexpressed feelings are also causing a problem in a retrieval system. The second approach is based on Content information in the image, also known as Content-Base Image Retrieval (CBIR). The main idea of CBIR is to find the most similar images with the query image from a dataset using distance metrics [8, 36]. It is an exceptionally difficult task to simulates visual consideration in CBIR system. With the improvement of computerized digital image innovation, expansive accumulations of image information have moved toward becoming promptly accessible. Content-Based Image Retrieval method distinguishes images on the base of their visual features such as low level attributes corresponding to Color, Texture, shape and spatial layout, etc. But low-level features are failed to explain the high-level semantic known as a semantic gap. To reduce the semantic gap researcher used machine learning techniques [6, 17, 28]. In this paper color and texture feature, fusion technique is used to develop a CBIR system. The rest of the paper is organized as: Related work is briefly described in Section 2. Feature extraction techniques are described in Section 3. Similarity measure, the proposed method and performance evaluation and Result method are explained in Sections 4, 5 and 6. Finally, we have concluded our findings in Section 7.

2 Related work

Most of the researchers use only a Color [32, 37] feature for CBIR system because as compared to texture and shape feature, Color provides an efficient and robust result. Some of them combine two features for color and texture descriptor [41, 44, 47] and some of them combine the color, texture and shape feature [25, 27]. An effective method in CBIR system is to merge the two or more feature vector. The main purpose of the researcher is to improve the CBIR system. In the work of [4, 26] Present a survey on color, shape, and texture in content-based image retrieval system. In this paper, the author gives a brief overview of color, shape and texture features in CBIR system also describing the challenging face when features are extracted from a database. The central purpose of this paper is to present the fundamental appearances to represent an image in a very effective and compact way also the discriminative way that will permit their proficient ordering and recovery. In the work of [15] proposed a new method by using fuzzy support decision mechanisms for color, shape, and texture features extraction. Shape descriptor is extracted by Hue Moments and Fast Wavelet transform and the Texture feature is built to compute the energy at a different decomposition stage. Finally, their implementation based on the color feature extraction using the fuzzy record of Color bins. On the other hand, to improve the performance result, they drive FDSS (Fuzzy Decision Support System) to abstract the texture, shape, and color features. In the work of [26, 46] presented a CBIR system to combine a texture and color features. Color histogram and co-occurrence matrix are used to extract a feature. Global color histogram and block color histogram to compute the color feature. Wang et al. [40] used to capture the color content Zernike chromaticity distribution moments are used from the chromaticity space and texture features are extracted by the scale and rotation invariant. Chen et al. [22] proposed a three image feature for an image retrieval system. Color co-occurrence matrixes (CCM) and DBPSP are used for color and texture features. Color histogram for K-mean (CHKM) is used for color distribution as a third feature. In the work of [29] author introduced a new low-level feature known as CEDD (Color and Edge Directivity Descriptor), which relate to texture, edge and color statistics in the histogram. A comparison to the MPEG-7, this descriptor takes less computational time in large databases. To check the performance of the system, Averaged Normalized Modified Retrieval Rank (ANMRR) method is used. Color features extracted by applying a collection of fuzzy rule for a fuzzy linking histogram that was proposed in [11, 50]. For the extraction of Texture features information MPEG-7 is used which include five digital filters in EDH. The implementation of the system is that firstly query image is provided than in color unit (10 Bin Fuzzy linking then 24 Bin Fuzzy linking) is used to extract the color information. Texture Unit (digital filter then Texture classification) is used to extract the Texture information. Texture and color features are then fused together to contract the CEDD descriptor. After the CEDD implementation they also used relevance feedback information [13, 34, 36]. According to [45] the early fusion with a weighted average of clustering is robust for image retrieval as it maintains a balance in feature vector representation. The research models presented in [8, 9, 18] are based on image classification framework while the proposed research is different from the previous as it is based on the fusion of color,texture and shape features and images are retrieved by applying distance measures instead of a using a classification-based approach (training-testing model). According to [39], the high computational pre-trained Convolution Neural Networks (CNN) models can be used for image retrieval tasks. The use of CNN along with proper feature refining schemes outperforms hand-crafted features on all image datasets.

3 Feature extraction

Feature extraction is a basic and fundamental step in a CBIR system which is depend on the how the scholar define the visual content or visual signature (composition of multiple features). Feature extraction is the process of transforming the input data into a set of features which can very well represent the input data. Features are distinctive properties of input patterns that help in differentiating between the categories of input patterns. feature extraction starts from an initial set of measured data and builds derived values features intended to be informative and non-redundant, facilitating the subsequent learning and generalization steps, and in some cases leading to better human interpretations. Feature extraction is an important role in computer vision. If the feature is more powerful than image retrieval system perform efficient result. The main critical problems in CBIR system is the high dimensionality because of the big feature vector. To overcome this issue we propose the method to reduce the computational time by decreasing the magnitude of highlight extraction. Feature Extraction techniques are shown in the Fig. 1.

3.1 Color moments feature extraction

Color Moments [1, 5, 10] used to find the characteristic of an image and color distribution. It is proposed to overcome the Color histogram quantization problems. Quantization of an image using Color histogram is a time-consuming task and it will not show a better result as compared to Color Moments. The main purpose of Color Moments is indexing, to retrieve an image feature vector and then compare the query image feature vector with the database feature vector with a small distance. Color moments are scaling and rotation invariant. It is usually the case that only the first three color moments are used as features in image retrieval applications as most of the color distribution information is contained in the low-order moments. The basis of color moments lays in the assumption that the distribution of color in an image can be interpreted as a probability distribution. Color Moments divide into three central moments for color distribution. The basis of color moments lays in the assumption that the distribution of color in an image can be interpreted as a probability distribution. Color Moments are mean, standard deviation, skewness, and Kurtosis. Kurtosis is similar to skewness and it’s used for shape feature. But in literature almost every researcher used a first three Moments. First three Moments calculated.

First Color Moment(Mean)

$$ E_{i}={\sum}_{N}^{j = 1}\frac{1}{N}p_{ij} $$(1)It calculates the average color distribution of an image. Here N is the number of pixels in the image and pij is the j pixel at i channel.

Second Color Moment (Standard Deviation)

$$ \sigma_{i}=\sqrt{1/2{\sum}_{N}^{j = 1}(p_{ij}-E_{i})^{2}} $$(2)Standard Deviation calculated by the square root of the variance for Color distribution and Ei is the mean which is calculated in (1).

Third Color Moment (Skewness)

$$ S_{i}=\sqrt[3]{1/N{\sum}_{N}^{j = 1}(p_{ij}-E_{i})^{3}} $$(3)Skewness used to find how asymmetric the color distribution. The feature vector for the first there Color Moments with HSV color space is nine-dimensional vector is

$$ F_{colormoment}={E_{h}\sigma_{h} S_{h} E_{s}\sigma_{s} S_{s} E_{v}\sigma_{v} S_{v}} $$(4)Color indexing of an image can be calculated as:

$$ d_{mom}(X,Y)={\sum}_{n = 1}^{r}w_{n_{1}}\mid {E_{n}^{1}}- {E_{n}^{1}} \mid +w_{n_{2}} \mid \sigma^{1}- \sigma^{2} \mid + w_{n_{3}} \mid {S_{i}^{1}}- {S_{i}^{2}} \mid $$(5)Here X and Y are two images, n is a current channel for HSV channel H = 1, S = 2 and V = 3, E1, E2 is the first Moment of mean between the two images, σ1,σ2 is the second Moments of standard deviation between the two images, S1,S2 is the third Moments of skwness between the two images and w represent the weight assign. In our experimental result feature vector for Color Moments is

$$ F_{colormoment}={E_{h}\sigma_{h} E_{s}\sigma_{s}E_{v}\sigma_{v}} $$(6)Feature extraction of Color Moments is shown in Fig. 2.

3.2 Texture feature using Gabor Wavelet descriptor

To extract a texture feature in the image, Gabor Wavelet descriptor is deployed. In literature to find the texture classification, Gabor Wavelet is a very important technique because it will provide an efficient result as compared other texture features. Gabor Wavelet is the cluster of Wavelets, the mathematical definition of Gabor Wavelet is defined as [20, 43].

In (7) x and y represent the pixel position in image space where all pixel positions are in centre frequency and here x0 = x ∗ cos𝜃 + y ∗ sin𝜃 and y0 = −x ∗ sin𝜃 + ycos𝜃

The direction of Gabor Wavelet represented by 𝜃. For DC composition is used and at each two-axis, σ is a standard deviation of Gaussian function. When we implement the Discrete Gabor filter to transmute Gmn the image I(x, y) with the size R ∗ C, with a dissimilar angle at a different scale, we find the array of scales: [22]

where m = 0,1.....,M − 1 and n = 0,1,....N − 1 The principal reason for shape-based retrieval is to discover locales or images with comparable texture. These magnitudes represent its energy content at a different orientation and scale of an image. Following standard deviation and mean of the extent of the changed coefficients are utilized to symbolize to the comparative texture component of the local image.

To find the texture representation, we use standard deviation σmn and mean μmn for a feature vector. Gabor channels (6 orientation and 5 scales) are used to extract a texture feature, which record the sizes of the Gabor channel reactions. If we decrease the scales, then the image shows a bluer result, so the detail of the image is expanded. The feature vector for our Gabor Wavelet is:

3.3 Discrete wavelet transform (DWT)

Discrete Wavelet Transform [2, 33] is a function which is used to transform the pixel of an image into the frequency domain. Discrete wavelet Transforms (DWT) is used to modify images from the spatial domain to the domain of frequency [5, 33]. In discrete wavelet transforms represent the levels and decompose it into different levels, it is decomposed image on four frequencies subband LL(low-low), LH(low-high), HL(high-low) and HH(high-high) (where, L is a low frequency, H is a high frequency) as shown in Fig. 3.

First, we have to apply a high pass filter to preserve the high frequency and low pass filter to preserve the low frequency of an image. After Apply a low pass filter we get a horizontal approximation and applying a high pass filter we get a horizontal detail. The Same process is deployed to the column, then we get Approximation (LL) and (LH, HL, HH) magnitudes.

3.4 Color and edge directivity descriptor (CEDD)

CEDD [12] is a Descriptor which is used to extract the low-level feature from the images for retrieval and indexing purpose. It is deal with the color and texture features as well as the edges of an image. Color feature is extracted by fuzzy linking histogram and texture features are extracted by using five type of edges in MPEG-7 EHD (edge histogram descriptor) [42]. A block diagram of CEDD is.

Block diagram of CEDD is shown in Fig. 4; the first block for texture feature extraction known as texture unit block and the second one is a color unit block for color feature extraction. In the first stage 10 bin fuzzy histogram is calculated which represent a number of colors such as red, white, magenta and orange, and then each color one of the three hues to compute a 24 bin fuzzy histogram. Each Bin represents a number of color such as light yellow, dark yellow, dark green and dark magenta. When the CEDD is calculated then the image is quantized into 3 bins because to restrict the color and Edge Directivity Descriptor length.

4 Similarity measure

The similarities between the database images and query images are measured by Euclidean distance. To form a feature vector, we used texture and color features. Texture features are extracted by Discrete Wavelet transform and Gabor Wavelet Descriptor. Color feature is extracted by using Color Moments for HSV color space. Furthermore, we have also used color and edge directivity descriptor to extract features. The Euclidean distance calculated as

Here Q represents the query Image and Db represent the database images. Query image feature vector and total number of database feature vector shown in Table 1.

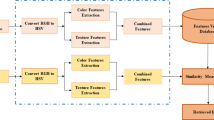

5 Proposed method

The color, shape, and texture are the examples of low-level image features. These features are the visual attributes of an image and characteristic of every image from datasets and kept in a separate dataset called feature repository. The step by step algorithm proposed in our paper for the texture and color based image retrieval using Color Moments, Gabor Wavelet, Discrete Wavelet Transform, and CEDD is explained below:

- 1.

Query image is uploaded

- 2.

For the query image, Image resize into 384 and 256-pixel value at x and y-direction. Then Color Moments for HSV color space is calculated for the color feature and Gabor wavelet (number of orientation = 6 and number of scale= 5) and Discrete Wavelet Transform is deployed to find the texture feature. After that CEDD is calculated for the texture and color features as well as the edges of an image.

- 3.

The Dataset is created for all the images to store as a feature vector.

- 4.

Find the Global and local feature extraction for the image.

- 5.

Dataset is loaded for the input image.

- 6.

Euclidean distance is used to find similarities between the query image and a database image.

- 7.

Determine the recall and precision of the query image.

- 8.

Repeat the step from 2 to 7 for a different image.

- 9.

Stop

The Complete process of the proposed method is represented in Fig. 5.

The performance of our proposed research is evaluated by precision and recall method. Precision is the ratio of the number of images retrieved to the total number of images retrieved. Recall method is the ratio of the number of relevant images retrieved to the total number of relevant images. Precision and recall can be calculated as:

Where Rq represent the relevant images matching against to the query image, Req represents the retrieved images against the query image, and Td the total number of relevant images available in the repository (database).

6 Performance evaluation

6.1 Experimental result

In this section, we describe our proposed method and shows relevant image retrieval results with precision and recall method. We combined the color and texture feature and then fused with the CEDD feature to enhance the result for our system. Query performance analysis is checked by a random selection of an image. Here we have displayed the retrieval results which represent the precision obtained by our method against these query images. The experimental result is discussed in next section.

6.2 Image retrieval result using corel 1k, 1500 and 5k data

The Corel dataset consists of a large number of images with the collection of real images and natural images. Corel 1-k Data Set which contains 1000 natural Images. In 1-K database each image are assembled into 10 classes, each group of images contains 100 images. It contains the images of Africans, buildings, beaches, dinosaur, elephant, buses, hills, food, mountains and flower. All the images are in JPEG format with the size of 384 × 256 and 256 × 384 pixels and all the images were characterized with RGB color-space.

The image on a left corner is the query image and other images are resultant images which calculated with the shortest distance between the query image and database images. A query image from ‘bus’ and ‘elephant’ category is provided to a system, then top 20 images are shown in Fig. 7 by using only Color Moments for RGB Color space is shown.

A query image from ‘elephant’ category is provided to a system, then top 20 images are shown in Fig. 6 by using all previous method (a, b, c, d, and e) and our proposed method (f) is shown. Figure 6 is shown the sample of dataset and Figs. 7, 8 and 9 are the output of our system.

6.3 Image retrieval result and performance evalution using corel 1-k dataset

One image for each category of Africa, beach, building, bus, dinosaur, elephant, flower, horse, mountain and food is provided as a query image then precision and recall rate is calculated. Figures 10 and 11 describe the performance comparison in terms of Precision and recall rates with the same systems (Tables 2, 3 and 4).

Tables 5, 6 and 7 present comparison of the proposed system with other comparative systems in terms of average precision and recall values (Figs. 12 and 13).

6.4 Image retrieval result and performance evaluation using Corel 1500 dataset

Corel 1500 is a subset of corel 5-k dataset. It contains 15 categories. Each category contains 100 images with the size of 384 × 256 and 256 × 384. In our experiments, we randomly selected images as a query image for each category. Then average precision and recall method checked with the [7, 47] methods. A total number of image retrieval result is 20. The Table shows the comparison result with the state of the art systems.

Average Precision and Recall comparison with state of the art system for the Corel-1500 dataset (Figs. 14, 15 and 16).

6.5 Image retrieval result and performance evaluation using corel 5k dataset

Corel 5k data contain 50 categories, and there are 50000 images. Each category contains 100 images with the size of 126 × 187 and 187 × 126 in the RGB color plane. Total Number of images is set to 12 (Table 8). Table shows the compassion, precision, and recall with the [3, 24, 49] methods (Figs. 17 and 18).

6.6 Image retrieval result and performance evaluation using GHIM-10k dataset

In this experiment, we evaluate our results with a GHIM-10 data set and compare our result with [23, 38]. This database consists of 10,000 images with different structures and diverse contents. It contains 20 categories and all the images are collected from web and camera’s which are Fireworks, Buildings, Walls, Cars, Flies, Mountains, Flowers, Trees, Beaches etc. Each category consists of 500 images with the size of 300 ∗ 400 or 400 ∗ 300 in JPEG format. The names of the images are sunset, building, car, ship, flower, mountains, insect, etc. The sample images of the GHM-10 dataset are shown in the Figure. We pick one image for each category (Tables 9 and 10).

Average Precision and Recall comparison with state of the art system for GHM-20k dataset (Figs. 19, 20, 21 and 22).

7 Conclusion

This paper is based on the fusion of multiple features for the efficient CBIR system. A Color based image retrieval and Texture framework have been explained in our work by local level features and Euclidean distance to find similarities of an image. In this manuscript, we proposed a mechanism for automatic image retrieval. Mostly, all CBIR systems used color, shape, texture and spatial information. We used a more appropriate Color and Textures features to form a feature vector. Then we compare our query image with all the database images using Euclidean distance. Our proposed method outperforms with existing CBIR systems. This application may use in internet image searching, Bio-medical image retrieval in high semantic meaning, decision support systems, digital libraries, accurate image retrieval from large image archives, and Manipulation of geographic images. The performance of the proposed method is better than all another existing system in terms of average precision and recall rates. In future work, we used different features to combine with the existing features as well as we used machine learning techniques such Artificial Neural network (ANN)and Support Vector Machine (SVM) to improve the performance of the system and will be continued for the more enhanced feature extraction method and meaningful retrieval. CBIR is found to be faster and more effective than keyword based search strategy. The system can be further enhanced and made a web based user interactive system. The idea can be further extended for other multimedia objects like audio and video files. The system can be further implemented as online media based search engine that can search images and well as media files, both audio and video based on the contents of the query file.

References

Afifi AJ, Ashour WM (2012) Content-based image retrieval using invariant color and texture features. In: 2012 International Conference on Digital Image Computing Techniques and Applications (DICTA). IEEE, pp 1–6

Agarwal S, Verma A, Dixit N (2014) Content based image retrieval using color edge detection and discrete wavelet transform. In: 2014 International Conference on Issues and Challenges in Intelligent Computing Techniques (ICICT). IEEE, pp 368–372

Ahmad J, Sajjad M, Mehmood I, Baik SW (2015) Ssh: Salient structures histogram for content based image retrieval. In: 2015 18th International Conference on Network-Based Information Systems (NBis). IEEE, pp 212–217

Ahmad J, Sajjad M, Mehmood I, Rho S, Baik SW (2015) Describing colors, textures and shapes for content based image retrieval-a survey. arXiv:150207041

Ashraf R, Ahmed M, Jabbar S, Khalid S, Ahmad A, Din S, Jeon G (2018) Content based image retrieval by using color descriptor and discrete wavelet transform. J Med Syst 42(3):44

Ashraf R, Bajwa KB, Mahmood T (2016) Content-based image retrieval by exploring bandletized regions through support vector machines. J Inf Sci Eng 32 (2):245–269

Ashraf R, Bajwa KB, Mahmood T (2016) Content-based image retrieval by exploring bandletized regions through support vector machines. J Inf Sci Eng 32 (2):245–269

Ashraf R, Bashir K, Irtaza A, Mahmood MT (2015) Content based image retrieval using embedded neural networks with bandletized regions. Entropy 17 (6):3552–3580

Ashraf R, Mahmood T, Irtaza A, Bajwa K (2014) A novel approach for the gender classification through trained neural networks. J Basic Appl Sci Res 4:136–144

Bu H-H, Kim N-c, Moon C-J, Kim J-H (2017) Content-based image retrieval using combined color and texture features extracted by multi-resolution multi-direction filtering. J Inf Process Syst 13(3):464–475

Chatzichristofis S, Boutalis Y (2007) A hybrid scheme for fast and accurate image retrieval based on color descriptors. In: IASTED international conference on artificial intelligence and soft computing (ASC 2007), Spain

Chatzichristofis SA, Boutalis YS (2008) Cedd: color and edge directivity descriptor: a compact descriptor for image indexing and retrieval. In: International Conference on Computer Vision Systems Springer, pp 312–322

Chatzichristofis SA, Zagoris K, Boutalis YS, Papamarkos N (2010) Accurate image retrieval based on compact composite descriptors and relevance feedback information. Int J Pattern Recognit Artif Intell 24(02):207–244

Datta R, Joshi D, Li J, Wang JZ (2008) Image retrieval: Ideas, influences, and trends of the new age. ACM Comput Surv 40(2):5

ElAdel A, Ejbali R, Zaied M, Amar CB (2016) A hybrid approach for content-based image retrieval based on fast beta wavelet network and fuzzy decision support system. Mach Vis Appl 27(6):781–799

ElAlami ME (2014) A new matching strategy for content based image retrieval system. ApplSoft Comput 14:407–418

Fakheri M, Sedghi T, Shayesteh MG, Amirani MC (2013) Framework for image retrieval using machine learning and statistical similarity matching techniques. IET Image Proc 7(1):1–11

Farhan M, Aslam M, Jabbar S, Khalid S, Kim M (2017) Real-time imaging-based assessment model for improving teaching performance and student experience in e-learning. Journal Real-Time Image Processing, pp 1–14

Irtaza A, Jaffar MA (2014) Categorical image retrieval through genetically optimized support vector machines (gosvm) and hybrid texture features Signal, Image and Video Process, pp 1–17

Kokare M, Chatterji BN, Biswas PK (2004) Cosine-modulated wavelet based texture features for content-based image retrieval. Pattern Recognit Lett 25(4):391–398

Lieberman H, Rosenzweig E, Singh P (2001) Aria: An agent for annotating and retrieving images. Computer 34(7):57–62

Lin C-H, Chen R-T, Chan Y-K (2009) A smart content-based image retrieval system based on color and texture feature. Image Vision Comput 27(6):658–665

Liu G-H (2015) Content-based image retrieval based on visual attention and the conditional probability. In: International Conference on Chemical, Material, and Food Engineering, Atlantis Press, pp 838–842

Liu G-H, Yang J-Y (2013) Content-based image retrieval using color difference histogram. Pattern Recognit 46(1):188–198

Pavithra L, Sharmila TS (2017) An efficient framework for image retrieval using color, texture and edge features Comput Electr Eng

Piras L, Giacinto G (2017) Information fusion in content based image retrieval: A comprehensive overview. Inf Fusion 37:50–60

Pujari J, Hiremath P (2007) Content based image retrieval based on color texture and shape features using image and its complement. Int J Comput Sci Secur 1(4):25–35

Sankar SP, Vishwanath N et al. (2017) An effective content based medical image retrieval by using abc based artificial neural network (ann). Current Med Imag Rev 13 (3):223–230

Shah DM, Desai U (2017) A survey on combine approach of low level features extraction in cbir. In: 2017 International Conference on Innovative Mechanisms for Industry Applications (ICIMIA). IEEE, pp 284–289

Shleymovich M, Medvedev M, Lyasheva SA (2017) Image analysis in unmanned aerial vehicle on-board system for objects detection and recognition with the help of energy characteristics based on wavelet transform. In: XIV International Scientific and Technical Conference on Optical Technologies in Telecommunications International Society for Optics and Photonics, pp 1034210–1034210

Singh H, Agrawal D (2016) A meta-analysis on content based image retrieval system. In: Emerging Technological Trends (ICETT), International Conference on IEEE, pp 1–6

Singh VP, Srivastava R (2017) Improved image retrieval using color-invariant moments. In: 2017 3rd International Conference on Computational Intelligence & Communication Technology (CICT). IEEE, pp 1–6

Srivastava P, Khare A (2017) Integration of wavelet transform, local binary patterns and moments for content-based image retrieval. J Visual Commun Image Represent 42:78–103

Stejić Z, Takama Y, Hirota K (2003) Genetic algorithm-based relevance feedback for image retrieval using local similarity patterns. Inf Process Manag 39(1):1–23

Tian X, Jiao L, Liu X, Zhang X (2014) Feature integration of eodh and color-sift: Application to image retrieval based on codebook. Signal Process Image Commun 29(4):530–545

Tzelepi M, Tefas A (2016) Relevance feedback in deep convolutional neural networks for content based image retrieval. In: Proceedings of the 9th Hellenic Conference on Artificial Intelligence ACM, p 27

Upadhyaya N, Dixit M (2016) A novel approach for cbir using color strings with multi-fusion feature method. Digital Image Proc 8(5):137–145

Varish N, Pradhan J, Pal AK (2017) Image retrieval based on non-uniform bins of color histogram and dual tree complex wavelet transform. Multimedia Tools Appl 76 (14):15885–15921

Wan J, Wang D, Hoi SCH, Wu P, Zhu J, Zhang Y, Li J (2014) Deep learning for content-based image retrieval: A comprehensive study. In: Proceedings of the 22nd ACM international conference on Multimedia ACM, pp 157–166

Wang X-Y, Yang H-Y, Li D-M (2013) A new content-based image retrieval technique using color and texture information. Comput Electr Eng 39(3):746–761

Wei G, Cao H, Ma H, Qi S, Qian W, Ma Z (2018) Content-based image retrieval for lung nodule classification using texture features and learned distance metric. J Med Syst 42(1):13

Won CS, Park DK, Park S-J (2002) Efficient use of mpeg-7 edge histogram descriptor. ETRI J 24(1):23–30

Yalavarthi A, Veeraswamy K, Sheela KA (2017) Content based image retrieval using enhanced gabor wavelet transform. In: 2017 International Conference on Computer, Communications and Electronics (Comptelix). IEEE, pp 339–343

Youssef SM (2012) Ictedct-cbir: Integrating curvelet transform with enhanced dominant colors extraction and texture analysis for efficient content-based image retrieval. Comput Electr Eng 38(5):1358–1376

Yu J, Qin Z, Wan T, Zhang X (2013) Feature integration analysis of bag-of-features model for image retrieval. Neurocomputing 120:355–364

Yue J, Li Z, Liu L, Fu Z (2011) Content-based image retrieval using color and texture fused features. Math Comput Modell 54(3):1121–1127

Zeng S, Huang R, Wang H, Kang Z (2016) Image retrieval using spatiograms of colors quantized by gaussian mixture models. Neurocomputing 171:673–684

Zhang D, Islam MM, Lu G (2012) A review on automatic image annotation techniques. Pattern Recognit 45(1):346–362

Zhao M, Zhang H, Meng L (2016) An angle structure descriptor for image retrieval. China Commun 13(8):222–230

Zheng L, Yang Y, Tian Q (2017) Sift meets cnn: A decade survey of instance retrieval. IEEE Transactions on Pattern Analysis and Machine Intelligence

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Ashraf, R., Ahmed, M., Ahmad, U. et al. MDCBIR-MF: multimedia data for content-based image retrieval by using multiple features. Multimed Tools Appl 79, 8553–8579 (2020). https://doi.org/10.1007/s11042-018-5961-1

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-018-5961-1