Abstract

In this paper, we present an efficient set of moment invariants, named Direct Krawtchouk Moment Invariants (DKMI), for 3D objects recognition. This new set of invariants can be directly derived from the Krawtchouk moments, based on algebraic properties of Krawtchouk polynomials. The proposed computation approach is effectively compared with the classical method, which rely on the indirect computation of moment invariants by using the corresponding geometric moment invariants. Several experiments are carried out so as to evaluate the performance of the newly introduced invariants. Invariability property and noise robustness are firstly investigated. Secondly, the numerical stability is discussed. Then, the performance of the proposed moment invariants as pattern features for 3D object classification is compared with the existing Geometric, Krawtchouk, Tchebichef and Hahn Moment Invariants. Finally, a comparative analysis of computational time of these moment invariants is illustrated. The obtained results demonstrate the efficiency and the superiority of the proposed method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Moment invariants are defined as a function of the image moments, which have the property to remain unchangeable or invariant under certain group of image transformations, like rotation, translation, scaling, convolution, etc [16]. Due to this property, one can represent object features independently of the aforementioned deformations. As a result, the moment invariants descriptors have been attracting the interest of the scientific community and have become an important tool for the recognition and the classification of deformed objects. Indeed, the notion of moment invariants has been effectively applied in various domains such as image analysis [4, 28, 37, 53, 60, 64,65,66], pattern recognition [5, 15, 19, 50], image retrieval [2, 3, 46, 47], medical image analysis [6, 13, 20] and image watermarking [14, 54, 62]. In this diversity of applications the moment invariants were proved to be a very powerful tool for feature representation and extraction.

Generally, the family of moments and moment invariants can be categorized into two main groups: (1) non-orthogonal moments that is based on non-orthogonal kernel function, such as the geometric or the complex basis. (2) the orthogonal moments, where the basis function is typically represented by a continuous or discrete orthogonal polynomials. As well-known, the orthogonality property insures that no information redundancy over the extraction features and provide high description capability, which is considered as a major advantage over non-orthogonal ones. Moreover, according to the definition domain of the used polynomials basis, orthogonal moments can be further divided into moments defined in polar coordinate or Cartesian coordinate. One advantage of the moments defined in the Cartesian coordinate is the facility of obtaining scale and translation invariants in comparison with those defined in polar coordinate. While rotation invariants can be easily achieved in polar coordinate. A complete classification of the existing families of image moment is illustrated in Fig. 1.

Recently, the rapid development of the 3D imaging technologies and scanning devices, such as Computer Tomography (CT), Magnetic Resonance Imaging (MRI) and Light Detection And Ranging (LiDAR). Has leads to a variety of applications, like 3D surface image reconstruction [55] and 3D image registration [30], to tackle the problem of 3D object recognition and representation. As one of the most important tools in 3D image analysis, three-dimensional moment invariants has gained an increasing interest in latest years, especially in pattern recognition applications [7, 34, 43, 51, 59]. The reason is due to their capability to extract shape features independently of 3D geometric deformations. Actually, there are three methods for deriving invariants: (1) The normalization method, which is based on image normalization and aims to transform a distorted image input into its corresponding normal form, such that it is invariant to certain deformations [17, 18, 21, 39, 61]. (2) The indirect method, it relies on the algebraic relation between the image moments and geometric ones, in order to express moment invariants as a linear combination of Geometric Moment Invariants. Due to the simplicity of the indirect method, it has been extensively discussed [4, 36, 37, 40, 60]. (3) The direct method, it seeks to directly derive invariants from the image moments [9, 10, 56, 57, 67]. In fact, the main advantage of this method, is that moment invariants can be algebraically derived from orthogonal moments without the requirement of calculating the normalization parameters of the deformed image, or using the indirect methods to achieve the invariance through the Geometric Moment Invariants. So far, only few research studies concerning the derivation of moments invariants utilizing the direct method has been presented [38, 56], and no such paper dealing with the derivation of three-dimensional Rotation, Scaling and Translation (RST) moment invariants, using the direct method, has been published.

Motivated by the excellent properties of the Krawtchouk moments, which are the discrete orthogonality, the simplicity of implementation, representation in matrix form, the capability to extract local features and the high tolerance to different kinds of noise [4, 7, 60]. Also, noticing that the orthogonal moments, especially discrete orthogonal moments, have shown more robustness against image noise and high discrimination power for object detection and recognition in comparison with the non-orthogonal moments [16]. In the top of that, the study of the Krawtchouk Moment Invariants, using direct derivation method, for 3D image analysis and representation has not been carried out in the literature.

In this paper, we introduce a new set of discrete orthogonal moment invariants, named Direct Krawtchouk Moment Invariants (DKMI). This new set is algebraically derived from Krawtchouk Moments based on the corresponding Krawtchouk polynomials. Moreover, the proposed invariants can be used for the extraction of 3D shape features independently of rotation, scaling and translation deformations. As already mentioned, this proposed set will eliminate the need for the image normalization process or the computation of geometric moments to achieve the desired invariants.

It is well-known that the description of deformed objects with respect to geometric transformations as translation, scale and rotation, has a high significance in many computer vision tasks and very useful in pattern recognition. Therefore, the potential applications of the proposed three-dimensional moment invariants, can be found in variety of fields: such as the recognition of complex activities [31, 32], human motion identification and computer interaction [12, 33]. In addition, their applicability can be extended to object matching and tracking, where the tracked object usually encounters some appearance variations in-plane rotation, translation and scale change [23,24,25,26,27].

In summary, we will initially provide a short overview of the indirect derivation method of some existing moment invariants, namely Tchebichef, Krawtchouk and Hahn Moment Invariants. Then, we will present the detailed process for the direct derivation of our new set of invariants from 3D Krawtchouk Moments. Subsequently, several numerical experiments are presented, so as to validate the effectiveness of the proposed DKMI. First, we investigate their RST invariance and noise robustness. Second, the effect of image size on their numerical stability is discussed. Then, the recognition performance of the new DKMI is compared with the traditional indirect invariants in pattern recognition application. Finally, a comparison of computational speed between the proposed descriptors and the traditional ones is presented.

The rest of this paper is structured as follows: In Section 2, we present the traditional indirect method for deriving discrete orthogonal moment invariants. In Section 3, we introduce the proposed Direct Krawtchouk Moment Invariants. Section 4, is devoted to provide the appropriate experiments to demonstrate the usefulness of our proposed invariants. Finally, concluding remarks are presented in Section 5.

2 Classical 3D moment invariants

The usual method for obtaining rotation, scaling and translation moment invariants is to express the image moments as a linear combination of geometric ones, and then make use of rotation, scaling and translation geometric invariants instead of geometric moments.

2.1 Geometric moment invariants

The (n + m + k)-th order geometric moments mnmk of an image f(x, y, z) with the size N × M × K is defined using the discrete sum approximation as:

And the corresponding central geometric moments μnmk, which are translation invariants, are given by:

where (x0, y0, z0) denotes the centroid coordinates of the 3D object, that are given by:

The 3D image rotation is usually performed as a series of three 2D rotations about each axis. In this section we consider the Euler Angle Sequence (x − y − z), which is commonly used in aerospace engineering and computer graphics. The 3D rotation matrix associated with the Euler Angle Sequence (x − y − z), is defined by the rotation along x axis by angle ϕ, along y axis by angle 𝜃 and along z axis by angle ψ:

In general way, the 3D rotation matrix can be expressed as a linear transformation of the 3D object coordinates, by:

Hence, in analogy with the geometric rotation invariants of the 2D case, we can obtain the (n + m + k)-th order of 3D Geometric Moment Invariants (GMI), which are independent of rotation, scaling and translation transforms, by the following formula:

where \(\gamma = \frac {n+m+k}{3} + 1\).

By using the trinomial theorem, which is given by:

the (7) can be further expressed in terms of central moments, as introduced in [7, 16], as follows:

2.2 Indirect derivation of orthogonal moment invariants

Before presenting the traditional indirect method for deriving 3D Tchebichef, Krawtchouk and Hahn Moment Invariants, using geometric moment invariants, let us introduce some necessary notations and useful relations. Firstly, we need to provide the definition of the generalized hypergeometric functions 2 F1(⋅) and 3 F2(⋅):

where (a)k is the Pochhammer symbol, also called raising factorial, given by:

and Γ(n) = (n − 1)! is the gamma function.

We can also introduce the falling factorial denoted by 〈x〉k defined as:

As mentioned in [11], 〈x〉k can be expanded as:

where S1(k, i) are the Stirling numbers of the first kind, obtained by the following recurrence relation:

with S1(k,0) = S1(0, i) = 0, and S1(0, 0) = 1.

2.2.1 Indirect Tchebichef moment invariants

The Tchebichef polynomials was introduced by Pafnuty Chebychev in [52], and firstly used in image analysis by Mukundan et al. [37] as a basis function for image moments. The (n + m + k)-th order 3D Tchebichef Moments, for an image function f(x, y, z) of the size N × M × K is defined as:

where \(\bar {t}_{n}(x;N)\) is the n-th order weighted Tchebichef polynomials defined by:

and tn(x; N) is n-th order discrete orthogonal Tchebichef polynomials, with respect to the weight function wt(x) and normalization function ρt(n), which are defined respectively by :

and

The classical Tchebichef orthogonal polynomials with x = 0,1,..., N − 1, are defined in terms of generalized hypergeometric function as:

and can be expanded as:

with

Substituting (21) to (16), the Tchebichef Moments can be written in terms of geometric moments as:

and by replacing mpqr in (23) by νpqr of (9) we can obtain Tchebichef Moment Invariants (TMI) of the order n + m + k, which are rotation, scaling and translation invariants of Tchebichef Moments.

2.2.2 Indirect Krawtchouk moment invariants

The Krawtchouk polynomials was introduced by Mikhail Kravchuk in [22], and applied in image analysis by Yap et al. [60] as a basis function of discrete Krawtchouk Moments. The (n + m + k)-th order 3D Krawtchouk Moments, for an image f(x, y, z) of the size N × M × K is defined as:

where \(\bar {k}_{n}(x;p,N)\) is the n-th order weighted Krawtchouk polynomials defined by:

and kn(x; p, N) are the Krawtchouk polynomials with x = 0,1,..., N − 1,0 < p < 1, which forms a discrete orthogonal basis, with respect to the weight function:

and the squared norm:

Generally, the Krawtchouk polynomials of the n-th order can be expressed in terms of the generalized hypergeometric function (10) as follows:

where x, n = 0,1,..., N − 1, N > 0, 0 < p < 1. And can be expanded as a linear combination of monomials xi as:

with

According to [60], the Krawtchouk Moments can be written in terms of geometric moments as:

by replacing mpqr in of (31) by νpqr (9) we can obtain Krawtchouk Moment Invariants (KMI) of the order n + m + k, which are rotation, scaling and translation invariants of Krawtchouk Moments.

As well-known the Krawtchouk moments are well suited for extracting local features according to any ROI (Region Of Interest) of the 3D image [7]. In fact, the 3D Krawtchouk Moments (24) involves three binomial distribution parameters px, py and pz of Krawtchouk polynomials, which correspond respectively to the x-axis, y-axis and z-axis.

These parameters can be used to shift the Region of Interest to the desired position. Where, px is used to shift the ROI horizontally along x-axis, if px < 0.5 the ROI is shifted to the left, while for px > 0.5 the ROI is shifted to the right. While, py is used to shift the ROI horizontally along y-axis, for py < 0.5 we shift the ROI to the left, while for py > 0.5 the ROI is shifted to the right. Finally, pz is used to shifting the ROI vertically along z-axis, when pz < 0.5 the ROI is shifted to bottom while pz > 0.5 the ROI is shifted to top.

For detailed discussion about the Krawtchouk Moments, we refer readers to [4, 7, 60].

2.2.3 Indirect Hahn moment invariants

The discrete orthogonal Hahn polynomials has been firstly introduced in the field of image analysis by Zhu et al. in [64]. Similarly to 3D Tchebichef and Krawtchouk Moments, we can define the (n + m + k)-th order 3D Hahn Moments, for an image f(x, y, z) of the size N × M × K, as follows:

where \(\bar {h}_{n}^{(a,b)}(x;N)\) is the normalized Hahn polynomials \(h_{n}^{(a,b)}(x;N)\), which can be obtained by utilizing the squared norm and weight function as:

ρh(n) denotes the squared norm, defined by:

and wh(x) is the weight function associated with the discrete Hahn polynomials:

The n-th order Hahn polynomials is defined by using hypergeometric function as:

with x, n = 0,1,..., N − 1, a > − 1 and b > − 1.

The \(h_{n}^{(a,b)}(x;N)\) can be expanded as:

with

In a similar way to the 3D Krawtchouk Moments, the 3D Hahn Moments can be written in terms of the geometric moments as:

To obtain 3D Hahn Moment Invariants (HMI), we can simply replace the geometric moments mpqr in (39) by the geometric moment invariants νpqr defined by (9).

3 Proposed approach: direct derivation of 3D moment invariants

In the previous section we have briefly discussed the indirect method for obtaining rotation, scale and translation invariance of some existing discrete orthogonal moments, which make use of the respective invariants of the geometric moments. In this current section, we introduce a direct derivation method of a new set of 3D object shape descriptors based on Krawtchouk polynomials, where the rotation, scaling and translation invariants are achieved directly from the Krawtchouk Moments. It worth noting that, the proposed method could be easily generalized in order to derive invariants from the other discrete orthogonal moments.

As previously mentioned in Section 2.2.2, the Krawtchouk polynomials can be defined in terms of monomials xi:

with

For notation simplicity, we introduce a matrix representation of (40) as:

where Km(x) = (k0(x; p, N), k1(x; p, N),..., kn(x; p, N))T , Xm(x) = (x0, x1,..., xn)T and Cm = (Cn, i) with 0 ≤ i ≤ n ≤ m.

From (41) we can deduce that Cm is a lower triangular matrix, and since all its diagonal elements \(C_{i,i}=\left (\frac {-1}{p}\right )^{i} \frac {(N-1)!}{N!} \) are different from zero, the matrix Cm is non singular (invertible). As a result of the above analysis, we can give the following Lemma.

Lemma 1

The corresponding inverse formula of (40), which can be used to represent xi in terms of Krawtchouk polynomials ks(x; p, N) with s ≤ i, is given by:

where Di, s is an element of the matrix Dm = (Di, s) with 0 ≤ s ≤ i ≤ m, and Dm is the inverse matrix of Cm of (42). The elements of the matrix Dm are given as follows:

and S2(i, m) is the Stirling numbers of the second kind, which can be computed by using the following recurrence relation:

with S2(i, 0) = S2(0, m) = 0, and S2(0, 0) = 1.

Similar proof of Lemma 1 can be found in [63], with slight modifications.

It is important to note, that the Stirling numbers of the first and second kinds can be considered inverses of one another, and satisfy the following property:

and

where δj, k is the Kronecker delta.

3.1 3D translation invariants

Let ft(x, y) be the translated version of the original image f(x, y), we have:

We can define the Krawtchouk Moments \(KM_{nm}^{t}\) of the translated image as follows:

To simplify the previous expression, we give the following proposition:

Proposition 1

The Krawtchouk Moments \(KM_{nmk}^{t}\) of a translated image ft(x, y, z) can be written in terms of KMuvw of the original image f(x, y, z) as:

The proof of Proposition 1 is given in Appendix A.

As can be concluded from the Proposition 1, the Krawtchouk Moments of any translated image by a translation vector (x0, y0, z0) can be expressed in terms of the Krawtchouk moments of the original image.

At this point, the translation moment invariants can be directly derived from the Krawtchouk moments, by replacing the vector (x0, y0, z0) by the image centroid coordinates \((\bar {x}, \bar {y}, \bar {z})\). Where the centroids of x-, y- and z-coordinate, denoted respectively by \(\bar {x}, \bar {y}, \bar {z}\), and can be derived as:

Hence, any effect of image translation on the \(KM_{nmk}^{t}\), can be canceled by this translation normalization. Which makes \(KM_{nmk}^{t}\) translation invariant. And along this paper, will be denoted by \(I_{nmk}^{t}\):

3.2 3D Rotation and scale invariants

In this subsection, we discuss the direct derivation of rotation and scaling invariants of a 3D object from the Krawtchouk Moments.

Suppose that fd(x, y, z) is a deformed version of the original object f(x, y, z), which has been transformed according to (6), we have

The Krawtchouk Moments of the deformed object fd(x, y, z) is defined as

To simplify the previous equation, we give the following proposition:

Proposition 2

The Krawtchouk Moments \(KM_{nmk}^{d}\) of any deformed image fd(x, y, z) can be written in terms of KMuvw of the original image f(x, y, z) as:

where δ = s + t + f, σ = u + v + w and 𝜖 = i − s − h + j − t + e − f − w.

The proof of Proposition 2 is given in Appendix B.

The above Proposition 2 shows that The Krawtchouk Moments of any 3D scaled and rotated object, can be expressed as a linear combination of The Krawtchouk Moments KMrld of the original object. Based on this relationship, we can construct a set of rotation and scaling invariants \(I_{nmk}^{rs}\)from the Krawtchouk Moments, as follows:

where \( \gamma = -\frac {i+j+e + 3}{3}\) and λ = KM000 are used for scale normalization. And aij are corresponding to the elements of the rotation matrix (5). Where it’s angles vales are given by \( \phi = \frac {1}{2} tan^{-1}((u KM_{011}- v KM_{000})/(KM_{020}-KM_{002})) \), \( \theta = \frac {1}{2} tan^{-1}((u KM_{101}- v KM_{000})/(KM_{200}-KM_{002})) \) and \( \psi = \frac {1}{2} tan^{-1}((u KM_{110}- v KM_{000})/(KM_{200}-KM_{020})) \) with u = 2C22 C00/(C11)2 and v = 2C22(C10)2/C00(C11)2.

Based on (56), we can directly derive the 3D rotation, scaling and translation invariants of Krawtchouk moments \(I_{nmk}^{rst}\), if we replace the Krawtchouk Moments KMrld on the right sides of (56) by the direct translation invariants \(I_{nmk}^{t}\) of (52). The 3D moment invariants \(I_{nmk}^{rst}\) developed in this section, will be denoted along the rest of this paper by the Direct Krawtchouk Moment Invariants (DKMI).

4 Experimental results and discussion

In this section, several numerical experiments are carried out to validate the effectiveness of the newly introduced moment invariants. This section is divided into four subsections. In the first one, we investigate the invariability property of the proposed Moment Invariants against different geometric deformations and noise degradation. In the second subsection, we will demonstrate the numerical stability of the proposed invariants, conjointly with the illustration of the image size effect on the traditional moment invariants. In the third subsection, the classification performance of the proposed moment invariants is effectively compared with the traditional Geometric, Tchebichef, Krawtchouk and Hahn Moment Invariants, using the McGill 3D Shape Benchmark [45]. Finally, we provide a comparative analysis concerning the computational time in the last subsection.

It is important to note that all algorithms are implemented in MATLAB 8.5, and all numerical experiments are performed under Microsoft Windows environment on a PC with Intel Core i3 CPU 2.4 GHz and 4 GB RAM.

4.1 Invariability

In this experiment, the property of invariability of the proposed invariants is examined under various sets of rotation, scaling and translation transformations. Moreover, the effect of different densities of noise on their numerical accuracy is investigated. As well, the influence of the Krawtchouk polynomials parameter p on the invariability of our introduced invariants have been also illustrated in this subsection.

The 3D Airplane and Vertebra Model, which shown in Fig. 2, are firstly affected by various deformations of rotation, scaling, translation and noise adding. Then, moment invariants of the original and the transformed objects are computed up to the sixth order (n, m, k ≤ 2), using three cases: (A) p1 = p2 = p3 = 0.4, (B) p1 = p2 = p3 = 0.5 and (C) p1 = p2 = p3 = 0.6. Subsequently, the relative error between moment invariants coefficients of the original and the transformed images is calculated as follow:

where ||⋅||, f and g denote respectively the Euclidean norm, the original and the transformed images. It worth noting that a low relative error leads to high numerical accuracy.

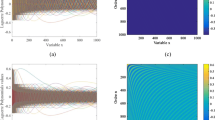

To verify translation invariance, the test objects are transformed according to a translation vector that takes values between (-16, -16, -16) and (16, 16, 16) with step (4, 4, 4). Consequently, the relative errors of the invariants are presented in Fig. 3. Similarly, scale invariance of the proposed invariants is examined by using the test objects, which are transformed by scaling factors starting from 0.75 to 1.25 with step 0.05. Then, the corresponding results are depicted in Fig. 4.

To demonstrate the rotation invariance, we are conducted in this experiment to verify the rotation invariability about each of the three axis (x-axis, y-axis and z-axis). In fact, the test images is rotated about each axis by a rotation angle varying between 0∘ and 90∘ with interval 10∘. Accordingly, the relative errors of the introduced invariants about the x-axis, y-axis z-axis are respectively presented in Figs. 5, 6 and 7.

Finally, in order to depict the noise robustness of the proposed moment invariants. In a similar way to the previous experiments, the test objects have been corrupted by different densities of Salt-and-Pepper noise varying from 0% to 5% with interval 0.5%. Then, the corresponding results are presented in Fig. 8.

Examining the Figs. 3–8, it is clear that the relative error rates is very low (in the order of 10− 5), which indicate that the proposed moment invariants exhibit good performance and express high numerical accuracy under different geometric transformations, as well as, in the presence of noisy effects. Moreover, one can observe the influence of the parameter p on the invariability property of DKMI, where the special case (B) p1 = p2 = p3 = 0.5 gives the best performance, which could help in choosing the best parameters for pattern recognition applications. As a conclusion, this new set of invariants could be highly useful to extract features for pattern recognition and 3D object classification.

4.2 Numerical stability

Generally, the computation of moments and moment invariants includes a significant number of factorial and power terms, which can undoubtedly leads to overflow and finite precision errors, especially for moment invariants of high orders. Consequently, the classification accuracy will be highly influenced by numerical instability, when higher order moment invariants are required to provide additional description of the image contents [8, 48].

Therefore, in this subsection we will examine the image size effects on the numerical stability of the proposed moment invariants. In fact, we will use the Airplane test image, shown in Fig. 2, with variety of sizes: 32 × 32 × 32,48 × 48 × 48,⋯ ,128 × 128 × 128. Indeed, we are particularly interested, in this experiment, to the maximum moment’s order, which can be correctly computed without overflow.

In Table 1, we depict respectively in the second, third and fourth columns the maximum computation order (n + m + k), concerning GMI, KMI and the proposed DKMI for different image sizes. It worth noting that, the computation of (n + m + k)-th order of RST moment invariants , using (9) or (56), depends on computing translation invariants up to the (3n + 3m + 3k)-th order, which means that the maximum order that could be theoretically computed is equal to \((\frac {N-1}{3} + \frac {M-1}{3} + \frac {K-1}{3})\).

The results presented in Table 1 clearly show that the computation of GMI is numerically unstable which is reflected in the limited number of moment invariants that can be correctly computed. Whereas, Geometric Moment Invariants up to the order \((\frac {N-1}{3} + \frac {M-1}{3} + \frac {K-1}{3})\) must be theoretically computed. This limitation is practically associated with the finite precision errors and overflow conditions. Moreover, the traditional KMI produce similar results, which is mainly inherited the use of Geometric Moment Invariants. And can be justified by the fact that the traditional KMI is expressed as a linear combination of Geometric Moment Invariants. In the contrary, the proposed invariants presents high numerical stability due to the reason that these invariants are explicitly derived from the Krawtchouk Moments, which have the orthogonality property. In fact, the main advantage of Direct Krawtchouk Moment Invariants, relies on the fact that the Krawtchouk polynomials kn(x; p, N) have a limited range of values and can be computed exactly for any value of x ∈ [0, N − 1], while the geometric basis xn can leads to overflow problem for large values of x. Finally, it is important to note that, although the computation of DKMI is very accurate, we can not achieve the theoretical maximum moment’s order.

4.3 3D object recognition

In the current subsection, the recognition accuracy of the proposed moment invariants is evaluated on the public 3D image database [45], where all images are of size 128 × 128 × 128 voxels. In fact, this experiments is conducted on a testing set composed of ten classes, each one contains three different objects. All images in this set are affected by different transformations (4 translations + 4 scaling + 4 rotations + 4 mixed transforms), in order to generate 480 objects per database, some samples are shown in Fig. 9. Moreover, to study the noise robustness of proposed invariants, five additional testing sets are created by adding different densities of Salt-and-Pepper noise {1%; 2%; 3%; 4%; 5%}.

The recognition performance of our proposed method is compared with the classical Geometric, Tchebichef, Krawtchouk and Hahn Moment Invariants. In addition, the k-Nearest Neighbors classifier with k = 1 is employed for the classification task with 5-folds cross validation, where moment invariants up to the 9-th order (n, m, k ≤ 3) are used to construct the features vector. For each testing set, the correct recognition rates are obtained and summarized in Table 2.

As can be clearly observed from Table 2, the average recognition accuracy of our proposed method are significantly higher than those obtained by classical methods. In addition, experimental results on the datasets corrupted by additive noise, demonstrate that the proposed descriptors are more effective than the existing ones. Eventually, our new invariants could be a highly useful in the field of 3D object recognition.

4.4 Computational time

To test the computational efficiency of the proposed method. In this current numerical experiment, we have used a set of five test images, shown in Fig. 10, selected from the public McGill 3D Shape Benchmark [45]. The average computational time of the proposed invariants and the existing methods, is measured for the five images using two different resolutions 64 × 64 × 64 and 128 × 128 × 128 voxels. Subsequently, the TRR (Time Reduction Rate) of our proposed invariants is calculated using the following formula:

where Time1 and Time2 are respectively the average times of the proposed and the traditional moment invariants. Moreover, this experiment is conducted for different maximum moment invariants orders 3, 6 and 9. The corresponding average times and TRR are summarized in Table 3.

It should be noted that in this experiment, we will compare the results of the proposed method with only the classical Krawtchouk Moment Invariants. Since the Tchebichef and Hahn Moment Invariants follow the same computational process for extracting invariants from the geometric moments.

Based on the results of Table 3, it can be clearly seen that the computation of the proposed invariants is much faster than traditional method. Moreover, the presented time reduction rates was very significant for both resolutions 64 × 64 × 64 and 128 × 128 × 128 voxels. In fact, this improvement in speed is achieved because the computation of the translation invariants are performed by transforming the original 3D image to the geometric center, and then the calculation of Krawtchouk moments is carried out. Instead of computing translation invariants by using (52), which is very time consuming. Eventually, this introduced new set of invariants could be very useful for object classification and recognition, especially for real time applications or when large databases are used.

5 Conclusion

The main contribution of this paper relies on the derivation of a new set of moment invariants, namely Direct Krawtchouk Moment Invariants. Where we have established a theoretical framework for the direct computation of this set of moment invariants from the Krawtchouk Moments, using some algebraic properties of Krawtchouk polynomials. This new set can be used for extracting shape features independently of geometric rotation, scaling and translation distortions, and can be employed as pattern features for 3D object classification applications.

Accordingly, several numerical experiments are performed, including invariability to geometric transforms and noise robustness, numerical stability, object classification accuracy and computational efficiency. As demonstrated in the numerical experiment section, the proposed 3D invariants DKMI are not only accurate, but also provide very fast computation even for high moment invariants orders. In addition, this new set shows sufficient stability and discrimination power to be used as pattern feature. To conclude, the proposed Direct Krawtchouk Moment Invariants are potentially useful as features descriptor for 3D object recognition, and the method described in this paper can be easily generalized for the construction of moment invariants from other discrete orthogonal moments.

In our future works, we plan to introduce new sets of 3D moment invariants based on the proposed direct method and other orthogonal polynomials, like Racah, and dual Hahn orthogonal polynomials. Also, we will concentrate on generalizing the proposed method for extracting 3D affine moment invariants. Finally, we will focus on some potential applications of the proposed 3D invariants in the fields of action recognition, 3D image retrieval and medical image analysis.

Abbreviations

- DKMI:

-

Direct Krawtchouk Moment Invariants

- TMI:

-

Tchebichef Moment Invariants

- KMI:

-

Krawtchouk Moment Invariants

- HMI:

-

Hahn Moment Invariants

- GMI:

-

Geometric Moment Invariantsx

- TRR:

-

Time Reduction Rate

- ROI:

-

Region Of Interest

- RST:

-

Rotation, Scaling and Translation

References

Abu-Mostafa YS, Psaltis D (1984) Recognitive aspects of moment invariants. IEEE Trans Pattern Anal Mach Intell:698–706

Ananth J., Bharathi V. S. (2012) Face image retrieval system using discrete orthogonal moments in

Anuar FM, Setchi R, Lai Y (2013) Trademark image retrieval using an integrated shape descriptor. Expert Syst Appl 40:105–121

Batioua I, Benouini R, Zenkouar K, El Fadili H (2017) Image analysis using new set of separable two-dimensional discrete orthogonal moments based on Racah polynomials. EURASIP J Image Video Process 2017:20

Belkasim SO, Shridhar M, Ahmadi M (1991) Pattern recognition with moment invariants: a comparative study and new results. Pattern Recognit 24:1117–1138

Bharathi VS, Ganesan L (2008) Orthogonal moments based texture analysis of CT liver images. Pattern Recognit Lett 29:1868–1872

Batioua I, Benouini R, Zenkouar K, Zahi A, El Fadili H (2017) 3D image analysis by separable discrete orthogonal moments based on Krawtchouk and Tchebichef polynomials, Pattern Recognit

Camacho-Bello C, Toxqui-Quitl C, Padilla-Vivanco A, Báez-Rojas JJ (2014) High-precision and fast computation of Jacobi-Fourier moments for image description. JOSA A 31:124–134

Chong C-W, Raveendran P, Mukundan R (2003) The scale invariants of pseudo-Zernike moments. Pattern Anal Appl 6:176–184

Chong C-W, Raveendran P, Mukundan R (2004) Translation and scale invariants of Legendre moments. Pattern Recognit 37:119–129

Comtet L (2012) Advanced combinatorics: the art of finite and infinite expansions. Springer, Berlin

Cui J, Liu Y, Xu Y, Zhao H, Zha H (2013) Tracking generic human motion via fusion of low-and high-dimensional approaches. IEEE Trans Syst Man Cybern Syst Hum: Syst 43:996–1002

Dai XB, Shu HZ, Luo LM, Han G-N, Coatrieux J-L (2010) Reconstruction of tomographic images from limited range projections using discrete Radon transform and Tchebichef moments. Pattern Recognit 43:1152–1164

Deng C, Gao X, Li X, Tao D (2009) A local Tchebichef moments-based robust image watermarking. Signal Process 89:1531–1539

Flusser J, Suk T (1993) Pattern recognition by affine moment invariants. Pattern Recognit 26:167–174

Flusser J, Suk T, Zitova B (2016) 2D and 3D image analysis by moments. Wiley, New York

Galvez J, Canton M (1993) Normalization and shape recognition of three-dimensional objects by 3D moments. Pattern Recognit 26:667–681

Goh H-A, Chong C-W, Besar R, Abas FS, Sim K-S (2009) Translation and scale invariants of Hahn moments. Int J Image Graph 9:271–285

Hu M-K (1962) Visual pattern recognition by moment invariants. IRE Trans Inf Theory 8:179–187

Iscan Z, Dokur Z, Ölmez T (2010) Tumor detection by using Zernike moments on segmented magnetic resonance brain images. Expert Syst Appl 37:2540–2549

Khotanzad A, Hong YH (1990) Invariant image recognition by Zernike moments. IEEE Trans Pattern Anal Mach Intell 12:489–497

Krawtchouk M (1929) On interpolation by means of orthogonal polynomials. Mem Agric Inst Kyiv 4:21–28

Lan X, Ma AJ, Yuen PC (2014) Multi-cue visual tracking using robust feature-level fusion based on joint sparse representation. In: 2014 IEEE Conference on Computer vision and pattern recognition (CVPR). IEEE, pp 1194–1201

Lan X, Ma AJ, Yuen PC, Chellappa R (2015) Joint sparse representation and robust feature-level fusion for multi-cue visual tracking. IEEE Trans Image Process 24:5826–5841

Lan X, Zhang S, Yuen PC (2016) Robust joint discriminative feature learning for visual tracking. In: Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence. AAAI Press, pp 3403–3410

Lan X, Yuen PC, Chellappa R (2017) Robust MIL-based feature template learning for object tracking. In: Thirty-first AAAI conference on artificial intelligence

Lan X, Zhang S, Yuen PC, Chellappa R (2018) Learning common and feature-specific patterns: a novel multiple-sparse-representation-based tracker. IEEE Trans Image Process 27:2022–2037

Liao SX, Pawlak M (1996) On image analysis by moments. IEEE Trans Pattern Anal Mach Intell 18:254–266

Liao S, Chiang A, Lu Q, Pawlak M (2002) Chinese character recognition via gegenbauer moments. In: 2002 Proceedings 16th International Conference on Pattern Recognition. IEEE, pp 485–488

Liang L, Wei M, Szymczak A, Petrella A, Xie H, Qin J, Wang J, Wang FL (2018) Nonrigid iterative closest points for registration of 3D biomedical surfaces. Opt Lasers Eng 100:141–154

Liu Y, Nie L, Han L, Zhang L, Rosenblum DS (2015) Action2activity: recognizing complex activities from sensor data. In: Twenty-fourth international joint conference on artificial intelligence

Liu Y, Nie L, Liu L, Rosenblum DS (2016) From action to activity: sensor-based activity recognition. Neurocomputing 181:108–115

Liu Y, Zhang L, Nie L, Yan Y, Rosenblum DS (2016) Fortune teller: predicting your career path. In: Thirtieth AAAI conference on artificial intelligence

Lo C-H, Don H-S (1989) 3-D moment forms: their construction and application to object identification and positioning. IEEE Trans Pattern Anal Mach Intell 11:1053–1064

Mukundan R (2005) Radial Tchebichef invariants for pattern recognition. In: TENCON 2005 2005 IEEE Reg. 10, IEEE, pp 1–6

Mukundan R, Ramakrishnan K (1998) Moment functions in image analysis—theory and applications. World Scientific

Mukundan R, Ong SH, Lee PA (2001) Image analysis by Tchebichef moments. IEEE Trans Image Process 10:1357–1364

Ong L-Y, Chong C-W, Besar R (2006) Scale invariants of three-dimensional legendre moments. In: IEEE, pp 1–4

Palaniappan R, Raveendran P, Omatu S (2000) New invariant moments for non-uniformly scaled images. Pattern Anal Appl 3:78–87

Pandey VK, Singh J, Parthasarathy H (2016) Translation and scale invariance of 2D and 3D Hahn moments. In: IEEE, pp 255–259

Ping Z, Wu R, Sheng Y (2002) Image description with Chebyshev-Fourier moments. JOSA A 19:1748–1754

Raj PA, Venkataramana A (2007) Radial krawtchouk moments for rotational invariant pattern recognition. In: 2007 6th international Conference on Information Communication Signal Processing. IEEE, pp 1–5

Sadjadi FA, Hall EL (1980) Three-dimensional moment invariants. IEEE Trans Pattern Anal Mach Intell:127–136

Sheng Y, Shen L (1994) Orthogonal Fourier-Mellin moments for invariant pattern recognition. JOSA A 11:1748–1757

Siddiqi K, Zhang J, Macrini D, Shokoufandeh A, Bouix S, Dickinson S (2008) Retrieving articulated 3-D models using medial surfaces. Mach Vis Appl 19:261–275

Singh C (2012) An effective image retrieval using the fusion of global and local transforms based features. Opt Laser Technol 44:2249–2259

Singh C (2012) Local and global features based image retrieval system using orthogonal radial moments. Opt Lasers Eng 50:655–667

Singh C, Upneja R (2014) Accurate calculation of high order pseudo-Zernike moments and their numerical stability. Digit Signal Process 27:95–106

Singh C, Walia E, Upneja R (2012) Analysis of algorithms for fast computation of pseudo Zernike moments and their numerical stability. Digit Signal Process 22:1031–1043

Suk T, Flusser J (2003) Combined blur and affine moment invariants and their use in pattern recognition. Pattern Recognit 36:2895–2907

Suk T, Flusser J, Boldyš J (2015) 3D rotation invariants by complex moments. Pattern Recognit 48:3516–3526

Tchebychev PL (1853) Théorie des mécanismes connus sous le nom de parallélogrammes Imprimerie de l’académie impériale des sciences

Teague MR (1980) Image analysis via the general theory of moments*. JOSA 70:920–930

Tsougenis ED, Papakostas GA, Koulouriotis DE (2015) Image watermarking via separable moments. Multimed Tools Appl 74:3985–4012

Wei M, Wang J, Guo X, Wu H, Xie H, Wang FL, Qin J (2018) Learning-based 3D surface optimization from medical image reconstruction. Opt Lasers Eng 103:110–118

Wu H, Coatrieux JL, Shu H (2013) New algorithm for constructing and computing scale invariants of 3D Tchebichef moments. Math Probl Eng:2013

Xiao B, Zhang Y, Li L, Li W, Wang G (2016) Explicit Krawtchouk moment invariants for invariant image recognition. J Electron Imag 25:023002–023002

Yang B, Dai M (2011) Image analysis by Gaussian-Hermite moments. Signal Process 91:2290–2303

Yang B, Flusser J, Suk T (2015) 3D rotation invariants of Gaussian-Hermite moments. Pattern Recognit Lett 54:18–26

Yap P-T, Paramesran R, Ong S-H (2003) Image analysis by Krawtchouk moments. IEEE Trans Image Process 12:1367–1377

Zhang Y, Wen C, Zhang Y, Soh YC (2002) Determination of blur and affine combined invariants by normalization. Pattern Recognit 35:211–221

Zhang L, Xiao W, Qian G, Ji Z (2007) Rotation, scaling, and translation invariant local watermarking technique with Krawtchouk moments. Chin Opt Lett 5:21–24

Zhang H, Shu HZ, Haigron P, Li B-S, Luo LM (2010) Construction of a complete set of orthogonal Fourier-Mellin moment invariants for pattern recognition applications. Image Vis Comput 28:38–44

Zhou J, Shu H, Zhu H, Toumoulin C, Luo L (2005) Image analysis by discrete orthogonal hahn moments. In: Image Analysis Recognition. Springer, pp 524–531

Zhu H, Shu H, Liang J, Luo L, Coatrieux J-L (2007) Image analysis by discrete orthogonal Racah moments. Signal Process 87:687–708

Zhu H, Shu H, Zhou J, Luo L, Coatrieux J-L (2007) Image analysis by discrete orthogonal dual Hahn moments. Pattern Recognit Lett 28:1688–1704

Zhu H, Shu H, Xia T, Luo L, Coatrieux JL (2007) Translation and scale invariants of Tchebichef moments. Pattern Recognit 40:2530–2542

Acknowledgments

The authors thankfully acknowledge the Laboratory of Intelligent Systems and Applications (LSIA) for his support to achieve this work.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interests

The authors declare no conflict of interest.

Appendices

Appendix A: Proof of proposition 1

With the help of (41), the translated version of Krawtchouk polynomials can be expressed by a linear combination of monomials as

According to the binomial theorem, it is possible to expand any power of x − x0 into a sum of the form:

and with the help of Proposition 1 we can express xs in terms of Krawtchouk polynomials. Hence, (59) can be written as:

Similarly we can deduce that,

and

As a consequence, by substituting (61), (62) and (63) into (49), we can write \(KM_{nmk}^{t}\) of a translated image in terms of KMuvw of the original image as:

Therefore, the proof is completed.

Appendix B: Proof of proposition 2

With the help of (40), the deformed version of Krawtchouk polynomials can be expressed as follows:

By using the trinomial theorem, it is possible to write the (65) as:

Similarly, we can deduce that,

and

Hence, by substituting (66), (67) and (68) into (54), we can write \(KM_{nmk}^{t}\) of a deformed image in terms of KMuvw of the original image as:

where δ = s + t + f, σ = u + v + w and 𝜖 = i − s − h + j − t + e − f − w.

The proof is completed.

Rights and permissions

About this article

Cite this article

Benouini, R., Batioua, I., Zenkouar, K. et al. Efficient 3D object classification by using direct Krawtchouk moment invariants. Multimed Tools Appl 77, 27517–27542 (2018). https://doi.org/10.1007/s11042-018-5937-1

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-018-5937-1