Abstract

This paper discusses improved algorithms for detecting and tracking multiple moving targets in fisheye video sequences, based on the moving blob model. The fisheye lens used in our system has a field of view of 183 degrees, which makes it well suited to surveillance systems without blind areas. However, the fisheye image is strongly distorted, which makes intelligent functions difficult to achieve. In this paper we establish a moving blob model to detect and track multiple moving targets in fisheye video sequences, in order to give a surveillance system without blind areas automatic and intelligent capabilities. The work is divided into the following steps. Firstly, the distortion model of the fisheye lens is established: we discuss the imaging principle of the fisheye lens and calculate the distortion coefficient that is used in the moving blob model. Secondly, the principle of the moving blob model, which is based on the fisheye distortion model, is analyzed in detail. It includes four main algorithms: the traditional background extraction algorithm; the background updating algorithm; the background subtraction algorithm that yields the moving blobs from the fisheye video sequence; and the algorithm for removing blob shadows in RGB space. Thirdly, we determine whether each extracted blob is a real moving target by comparing its pixel count with a threshold, which discards false moving targets. Lastly, we design an algorithm that tracks the moving targets by calculating the geometric center of the selected moving blobs. The experiments indicate that the algorithms process multiple moving targets in fisheye video sequences more efficiently. Compared with the traditional algorithm, the improved algorithm detects moving targets in a circular fisheye image effectively and stably.


1 Introduction

The vision sensor is a key component of a computer vision system. Vision sensors can be divided into pinhole cameras and fisheye cameras [25]. A pinhole camera captures a normal image with a limited field of view (FOV), whereas a fisheye camera sees the scene the way a fish looks from water into air, and its FOV can reach 180 degrees or more. Fisheye cameras can therefore be used in specific applications such as surround view systems [11, 14] or very wide range surveillance systems [16, 23]. The FOV of a fisheye camera is compared with that of pinhole cameras in Fig. 1, where red marks the fisheye camera's field of view and green marks the pinhole field of view. The surveillance area of one 180 degree fisheye camera is equivalent to that of four 45 degree pinhole cameras, so two fisheye cameras can provide 360 degree visual surveillance without blind areas.

However, because of the special imaging principle of the fisheye lens, the fisheye camera produces a circular image with heavy distortion. It is therefore very difficult to achieve ideal results when processing the fisheye image with normal digital image processing algorithms. The main approach to processing fisheye images effectively is to correct the fisheye image into a normal 2D image and then apply traditional image processing algorithms to it [4, 19]. Researchers have carried out much fruitful work on fisheye image correction. Ying et al. [22] used the spherical perspective projection (SPP) constraint to correct the fisheye image: because space lines are generally projected into curves on a fisheye image, the SPP constraint requires that a space line be projected into a great circle on a unit sphere under the SPP model. Wei et al. [19] presented a fisheye video correction method that minimizes time-varying distortion and preserves salient content in a coherent manner. It is controlled by user annotation and takes into account a wide set of measures addressing different aspects of natural scene appearance. Bellas et al. [1] designed a fisheye lens distortion correction system on a custom board that includes a Xilinx Virtex-4 FPGA and achieved real-time processing. Many other fisheye image calibration and correction algorithms have also been proposed [5,6,7,8]. These methods work well for calibrating the circular fisheye image and transforming it into a planar image.

However, the distorted part of the fisheye image carries much of its information, and the corrected image loses a lot of this information, especially at the edge of the fisheye image [9]. Running the correction algorithm also requires additional time. In a fisheye video surveillance system, first correcting the fisheye image and then processing the normal 2D image with traditional algorithms therefore cannot achieve “seamless” monitoring. It is necessary to study algorithms that process the fisheye image directly and to design a series of such algorithms.

In this paper, we propose a method for detecting multiple moving objects in fisheye video based on the moving blob model, where the objects to be detected are vehicles running on the road. This is a systematic discussion of the moving blob method [20]. Many algorithms have been researched and designed for traditional moving object detection [17, 24]. Mitiche et al. [13] and Wedel et al. [18] used the optical flow method to detect moving objects such as vehicles and pedestrians. The Mean Shift and CamShift methods are also widely used in moving object detection [2, 3, 15, 21]. These methods can detect moving targets in normal video sequences, but they have difficulty detecting moving targets in fisheye video sequences because of the distortion. It is especially important to enable accurate motion tracking for a large set of points in the video as close to real time as possible; to ensure accuracy, many methods track only a sparse set of points [10, 12]. In this paper, an improved moving target detection algorithm for fisheye sequences is proposed based on the moving blob model. Pixels in the foreground of the fisheye sequence that share the same motion attribute can be grouped into moving blobs. By detecting and tracking these moving blobs, we realize moving target detection and tracking in the fisheye images. The experiments indicate that the method detects the moving foreground well, especially at the edge of the circular fisheye image. Furthermore, the method can detect multiple targets in a fisheye image at the same time while preserving the integrity of the information. Because no correction step discards image content, the method processes fisheye video sequences without information loss and has good practical value.

2 The distortion model of the fisheye lens

The fisheye lens is a kind of imaging lens whose field of view is generally 180 to 230 degrees. Its ultra-wide field of view can image a hemispherical space with a single lens. However, the fisheye image is also strongly distorted because of the special image-forming principle of the fisheye lens. To discuss the distortion principle, a geometric model of the fisheye lens is established first, and the distortion model is then built on this geometric model.

2.1 The fisheye lens model

In this paper, we only discuss fisheye image formation under equidistant projection. A point P in space is imaged at the point P″ in the image plane through equidistant projection; the optical imaging model is shown in Fig. 2. The model includes three coordinate systems: (X,Y,Z) are the real world coordinates of the lens, which images every point in space onto the image plane; (x,y) are the image plane coordinates; and (u,v) are the pixel coordinates of the circular fisheye image. In this model, OO′ is the optical axis, h is the vertical distance from the point P to the lens surface, ω is the angle relative to the optical axis, θ is an azimuth, r is the radial distance from P″ on the CCD surface to the optical center O′, y is the azimuth of P″, L is the virtual imaging distance of the fisheye lens, f is the virtual focal length of the fisheye lens, and dx and dy are the physical dimensions of a CCD pixel.

For the point P in Fig. 2, the radial distance r from the imaging point to the optical center and the angle ω to the optical axis are related under equidistant projection by

r = kfω (1)

There is a one-to-one correspondence between r and ω. Letting K = kf, we get

r = Kω (2)

Formula (2) shows that every image point has a one-to-one correspondence between r and ω. If K can be calculated, the distortion of every pixel in the circular fisheye image can be calculated, so the parameter K is defined as the distortion coefficient.
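The relation between r, ω and K can be illustrated with a minimal sketch. It assumes the equidistant form r = Kω with ω in radians; the function names and the 288-pixel image radius are illustrative assumptions, not values from this paper.

```python
import math


def radial_distance(omega_rad: float, K: float) -> float:
    """Equidistant projection: the image radius grows linearly with the angle."""
    return K * omega_rad


def distortion_coefficient(r: float, omega_rad: float) -> float:
    """Invert r = K * omega to recover K from one (r, omega) correspondence."""
    if omega_rad == 0:
        raise ValueError("omega must be non-zero to solve for K")
    return r / omega_rad


# Hypothetical numbers: a 183 degree lens whose image circle has a
# radius of 288 pixels, so the half angle maps to the circle edge.
half_fov = math.radians(183 / 2)
K = distortion_coefficient(288.0, half_fov)
```

Once K is known from a single edge correspondence, `radial_distance` predicts where a ray at any other angle lands in the image, which is the one-to-one mapping the text relies on.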

2.2 The simplified model

In order to calculate the distortion coefficient K, we established a simplified model based on Fig. 2, shown in Fig. 3.

We can calculate the radial distance of r as

The angle of ω between the optical center and the optical axis can be calculated:

Substitute (3) and (4) into (2), then

For every fisheye image, (u,v) is the coordinate of a pixel in the image, and R, H and L are constants of the given fisheye lens design. Once the optical center (u0,v0) of the lens is known, the distortion coefficient K of every pixel in the fisheye image can be calculated. Every pixel position in the circular fisheye image has a different distortion coefficient: from (5), K grows as the pixel approaches the edge of the fisheye image and becomes zero at the optical center. We use this distortion coefficient in the subsequent processing of the fisheye image.

2.3 The optical center calibration

When rays parallel to the optical axis are imaged by an uncorrected fisheye lens, the extensions of the imaged lines converge at a single point, and this point is the optical center of the lens. In this paper, we designed a special cylindrical calibration target marked with several evenly spaced straight lines, and placed the camera with the fisheye lens inside the vertical cylindrical target; the resulting image is shown in Fig. 4(a). All of the lines are clear, so the Hough transform can be used to detect them, as shown in Fig. 4(b).

Every detected line can be fitted with a linear function as in formula (6), where n is the number of lines.

From Fig. 4, every pair of lines has an intersection point (xi,yi), so the lines yield N intersection points in total, and the average of these points is taken as the optical center.

The statistical error of (u0,v0) can be calculated as

In our experiment, the image resolution of the fisheye camera is 720*576. We measured the optical center of this camera 8 times; the (u0,v0) obtained each time is listed in Table 1. The average is (347.2, 289.4), and the average error is (12.8, −1.4), which matches the offset of this average from the geometric image center (360, 288).
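The calibration idea of this section, averaging the pairwise intersections of the detected lines, can be sketched as follows. The sketch assumes that each line is given in the Hough normal form (ρ, θ), with x·cos θ + y·sin θ = ρ, rather than in the slope form of formula (6); the function names are illustrative.

```python
import math
from itertools import combinations


def intersect(line_a, line_b, eps=1e-9):
    """Intersect two lines in Hough normal form (rho, theta).

    Each line satisfies x*cos(theta) + y*sin(theta) = rho.
    Returns None when the lines are (nearly) parallel.
    """
    rho1, th1 = line_a
    rho2, th2 = line_b
    det = math.cos(th1) * math.sin(th2) - math.sin(th1) * math.cos(th2)
    if abs(det) < eps:
        return None
    x = (rho1 * math.sin(th2) - rho2 * math.sin(th1)) / det
    y = (rho2 * math.cos(th1) - rho1 * math.cos(th2)) / det
    return x, y


def optical_center(lines):
    """Average all pairwise intersection points of the given lines."""
    points = []
    for a, b in combinations(lines, 2):
        p = intersect(a, b)
        if p is not None:
            points.append(p)
    if not points:
        raise ValueError("no intersecting line pairs")
    n = len(points)
    return sum(p[0] for p in points) / n, sum(p[1] for p in points) / n


# Hypothetical example: three lines that all pass through (100, 50).
example = [(100 * math.cos(t) + 50 * math.sin(t), t) for t in (0.2, 0.9, 1.7)]
u0, v0 = optical_center(example)
```

The normal form is chosen here only because it also represents vertical lines, which a slope and intercept cannot; with real detections the intersections scatter, and the average acts as the estimate of (u0,v0).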

3 The principle of the moving blob model based on the distortion model

In this paper we aim to detect moving objects in the fisheye image effectively by using the moving blob model together with the distortion coefficient of the fisheye lens. In fisheye image processing, objects at the edge of the image are very difficult to detect because of the distortion. The distortion coefficient obtained for every pixel from the distortion model helps to process that pixel. The improved algorithm is designed as follows.

3.1 The detection flow of the moving blob model

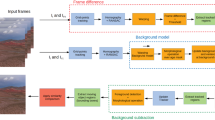

Moving target detection in fisheye video sequences, captured by a camera in a fixed position with a lens of fixed focal length, can be divided into three levels. The first is the pixel level in each image frame; the second is the moving blob level, in which pixels with the same motion attribute are grouped according to the moving blob model; and the third is the moving target level, which is detected from the moving blob level. A progressive relationship exists among the three levels: the moving blobs are obtained by analyzing the pixel level, and the moving targets are then detected and tracked according to the moving blobs. An explanatory diagram is shown in Fig. 5.

The three levels cover the whole of moving target detection in fisheye video sequences, and they can be subdivided further. The flow chart of the moving target detection algorithms based on the moving blob model is shown in Fig. 6. There are three key processing steps. The first step is acquiring the background image and updating it as time and the environment change; this requires studying how to calculate a stable background image from the fisheye video sequences, and it determines the accuracy of moving blob detection. The second step is extracting the moving blobs from the video sequences, which means separating them from the background. The third step is detecting the moving targets from the moving blobs. The three steps thus proceed from the background to the moving blobs and then to the moving targets in fisheye video sequences. We analyze these three steps in detail below.

3.2 Improved algorithm of mean background extraction and updating

In fisheye video sequences acquired with a fixed focal length, each pixel shows the background more often than a foreground target. We can therefore take samples from the video sequence over a period of time, for example 3 frames per second, and calculate the average of each pixel over the sampled frames. By this principle, the average value will be close to the background, as illustrated by the schematic diagram in Fig. 7. The more the background outweighs the foreground, the closer the average value is to the background. Averaging also suppresses noise.

From Fig. 7, we can design the algorithm as follows:

(1) N fisheye image frames Fi are obtained through sampling, where i = 1,2,...N.

(2) For every pixel (x,y), the background is calculated as the average over the sampled frames:

B(x,y) = (1/N) Σ Fi(x,y), summed over i = 1,2,...N.
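The averaging step above can be sketched in a few lines. The representation of a frame as nested lists of (R, G, B) tuples and the function name are assumptions made for illustration; a real implementation would normally use an array library.

```python
def mean_background(frames):
    """Average N sampled frames pixel by pixel.

    frames: list of N images, each a list of rows of (R, G, B) tuples.
    Returns an image of the same shape holding the per-channel mean.
    """
    if not frames:
        raise ValueError("at least one frame is required")
    n = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    return [
        [
            tuple(sum(f[y][x][c] for f in frames) / n for c in range(3))
            for x in range(width)
        ]
        for y in range(height)
    ]
```

A pixel that shows the road in most samples and a passing vehicle in a few still ends up with a value pulled slightly toward the vehicle color, which is the weakness the improved algorithm addresses.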

This simple method depends on the sampled frames, and it is not optimal when there are many moving targets in the video. If the pixels that do not belong to the background could be removed, the average value would be closer to the real background. However, it is difficult to detect such pixels effectively because the moving pixels are not known in advance. We can nevertheless analyze fisheye image frames in which the background appears more often than the foreground and the color of the foreground differs from that of the background. During the sampling period, the samples of a background pixel cluster around the center of the background points in RGB space, while foreground samples lie far from this center. We can therefore treat a sample as non-background when the distance of its color vector from the center exceeds the standard deviation. The standard deviation is calculated as

Here C denotes the three color components R, G and B, and X0 is the average value of the samples. The background extraction method above can therefore be improved by calculating the standard deviation after averaging the pixel values of all sampled frames, and then removing the samples whose distance from the average exceeds the standard deviation. The steps of the improved algorithm with the distortion coefficient K are:

(1) N fisheye image frames Fi are obtained through sampling, where i = 1,2,...N.

(2) For every pixel (x, y):

a) Calculate the center point, where K is the distortion coefficient.

b) Calculate the standard deviation.

c) Calculate the average value of all elements in the collection of samples that remain after the removal step.

This average value is the background B(x,y), which is close to the real background. However, the background changes with time and the environment, so it needs to be updated automatically. Two cases are distinguished:
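For a single pixel, the center, standard deviation and filtered average of the improved algorithm can be sketched as follows. How the distortion coefficient K enters the center calculation is not specified here, so this sketch leaves K out; that omission, the function name and the use of Euclidean distance in RGB space for the rejection test are assumptions.

```python
import math


def robust_background_pixel(samples):
    """Estimate the background color of one pixel from its N samples.

    samples: list of (R, G, B) tuples taken at the same pixel position.
    Samples farther from the mean than one standard deviation are
    treated as foreground and excluded from the final average.
    NOTE: the distortion coefficient K of the paper is not modeled.
    """
    n = len(samples)
    center = tuple(sum(s[c] for s in samples) / n for c in range(3))
    dists = [math.dist(s, center) for s in samples]
    sigma = math.sqrt(sum(d * d for d in dists) / n)
    # Fall back to all samples if rounding leaves nothing within sigma.
    kept = [s for s, d in zip(samples, dists) if d <= sigma] or samples
    return tuple(sum(s[c] for s in kept) / len(kept) for c in range(3))
```

With nine gray samples and one red vehicle sample, the plain mean is tinted red, while this estimate returns the gray value because the vehicle sample lies well outside one standard deviation.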

(1) The background changes gradually. For the current time t, the background is modified as:

(2) The background changes abruptly. We suppose that the illumination change of the background satisfies the following linear relation:

After some calculation, the background is renewed as:

where F(x,t) is the current image and σ(t) is the image discrimination criterion, defined as:

In formula (17), Max(Dg) is the predefined maximum gradient difference for the image. Once a background close to the true, real-time background has been extracted, the moving blobs in the fisheye video frames can be calculated.
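One common way to realize a gradual background update is an exponential running average, B_t = (1 − α)·B_(t−1) + α·F_t. It is offered here only as an assumption for illustration, not as the update formula used in this paper; the learning rate `alpha` and the function name are hypothetical.

```python
def update_background_pixel(background, current, alpha=0.05):
    """Blend the current pixel into the stored background pixel.

    background, current: (R, G, B) tuples at the same position.
    alpha: hypothetical learning rate in [0, 1]; larger values make
    the background follow scene changes faster.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return tuple((1 - alpha) * b + alpha * f for b, f in zip(background, current))
```

A small `alpha` keeps passing vehicles from leaking into the background, while a larger one adapts more quickly to slow illumination drift; the abrupt case described above needs separate handling.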

3.3 Moving blob extraction and shadow removal

Whether a pixel in a fisheye video frame belongs to the foreground or the background can be decided through the Euclidean distance. The steps of the algorithm for extracting the moving blobs are:

(1) Set the background image B(x,y), which has been calculated.

(2) Scan every pixel F(x,y) in the frame being processed; if

then output G(x,y) = 1, otherwise G(x,y) = 0.

(3) G(x,y) is the moving blob image of the current frame.
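The extraction steps can be sketched as a thresholded background subtraction. The sketch assumes that the test in step (2) compares the Euclidean distance between F(x,y) and B(x,y) in RGB space with a threshold T; the nested-list image representation and the function name are likewise assumptions.

```python
import math


def moving_blob_mask(frame, background, threshold):
    """Return G(x,y): 1 where the frame differs from the background.

    frame, background: images as lists of rows of (R, G, B) tuples.
    threshold: distance in RGB space above which a pixel is foreground.
    """
    return [
        [1 if math.dist(f, b) > threshold else 0 for f, b in zip(frow, brow)]
        for frow, brow in zip(frame, background)
    ]


# Hypothetical 1x3 example with a threshold of 45.
background = [[(100, 100, 100), (100, 100, 100), (100, 100, 100)]]
frame = [[(100, 100, 100), (130, 100, 100), (160, 100, 100)]]
mask = moving_blob_mask(frame, background, 45)
```

Only the third pixel, whose color lies 60 units from the background, is marked as foreground; lowering the threshold admits more pixels and tends to merge neighboring blobs.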

For every moving blob, composed of pixels with G(x,y) = 1, it is necessary to judge whether it is a real moving blob, because some blobs may be false. The distortion coefficient K can be used to judge every moving blob.

(1) Calculate the area S of every moving blob.

(2) Because of the distortion in the fisheye image, S is not the real size of the target and needs to be corrected with the K value. For every S, compute

Here Ta is the threshold for judging moving blobs, which can be determined through experiment. When R > 0 the moving blob S is a real blob, and a blob with R < 0 is discarded.

Because it uses only one threshold Ta, this method effectively reduces the complexity of judging true moving blobs. With the normal method, the judgment is difficult because of the distortion of the circular fisheye image, especially when the moving blob is at the edge of the image.

The moving targets extracted from the fisheye video sequences carry shadows, especially on sunny days. A shadow is not part of a moving target, but the moving blobs extracted by the above algorithm include it because the shadow moves with the target. Shadows link the foreground targets into a single connected region and seriously affect the accuracy of target detection and tracking. In this paper, a shadow removal method based on the RGB space is proposed.

The steps of the improved shadow removal algorithm in RGB space are:

(1) Calculate the background image B(x,y) and get the current frame F(x,y).

(2) For each foreground moving blob pixel F(x,y) extracted by the moving blob extraction algorithm, when

then this pixel is shadow and needs to be removed from the foreground.

4 Experiment results and analysis

A fisheye video was captured at a frame rate of 25 frames per second and a resolution of 720*576. We used this video to test all the algorithms based on the moving blob model. Some original fisheye images extracted from the video are shown in Fig. 8.

4.1 Background extraction

We used the improved mean value algorithm to extract the background. Fig. 9(a) is the extraction result using 30 frames sampled at 1 frame per second without the distortion coefficient, and Fig. 9(b) is the result using 30 frames sampled at 1 frame per second with correction by the distortion coefficient. Fig. 9 shows that the background retains some traces of moving objects when the distortion coefficient K is not used. The distortion coefficient K therefore makes the background clearer, which makes moving blob extraction more effective in the next steps.

4.2 The detection of moving blobs

We used the Euclidean distance method to detect the moving blobs in the fisheye video sequences. The experiment results for different thresholds are shown in Fig. 10. Fig. 10(a) is the background calculated from frames sampled at 5 frames per second, and Fig. 10(b) is a sample original frame from the video sequence. Fig. 10(c) is the detection result with a threshold of 40: many moving blobs adhere to each other, which makes it difficult to separate the individual moving targets, especially at the edge of the fisheye image. Fig. 10(e) is the result with a threshold of 50: the moving blobs are not fully extracted, so some moving targets are lost. Fig. 10(d) is the result with a threshold of 45, for which the moving blobs are detected completely. We therefore select this threshold to detect the moving blobs in the fisheye images.

4.3 Removing the shadow of moving blobs

Fig. 8 shows that shadows interfere with the moving targets and make some targets appear to be connected. We used the improved RGB space shadow removal algorithm with threshold values of Tβ = 0.1 and Td = 60. The experiment results after removing the shadows of the moving blobs are shown in Fig. 11: Fig. 11(a) includes the shadows, and in Fig. 11(b) the shadows of the moving blobs have been removed. The algorithm removes the shadows effectively.

When the shadow of the extracted moving blobs has been removed, we can mark the moving blobs with rectangles as shown in Fig. 12. Because the adhesion of moving blobs has been reduced, every blob can now be detected separately.

4.4 Tracking the moving target

The number of pixels in every detected blob was counted. Through repeated experiments, and taking the characteristics of fisheye videos into account, we found that a blob with fewer than 15*12 pixels can be discarded as an interfering object at 800*600 resolution. An object at the edge of the image may be small, but it becomes larger as it moves toward the center, so blobs with more than 15*12 pixels can be marked in the original fisheye video sequences. A fisheye video was processed with the moving blob model discussed above, and the tracking results for the moving targets are shown in Fig. 13. Column (a) shows the original video frames, column (b) shows the tracking result of the moving blob model, and column (c) shows the result of the traditional background subtraction algorithm. Column (b) shows that the moving targets are tracked stably as they move from far to near, and every moving object larger than 180 pixels is detected and tracked. When an object is far away and very small, it is sometimes not treated as a real object in the fisheye image and is not marked; once it moves closer to the center of the picture, it is marked. Column (c) detects some large moving objects near the center, but some objects are marked incorrectly, especially at the edge of the image. Compared with traditional algorithms, the improved algorithm based on the moving blob model is therefore better suited to processing fisheye video sequences.
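The pixel-count filter and the geometric center used for tracking can be sketched with a simple connected-component search over the binary blob image. The use of 4-connectivity, the function name and the plain pixel count (without the K-based area correction of Section 3.3) are assumptions of this sketch; the default of 180 pixels corresponds to the 15*12 limit mentioned above.

```python
from collections import deque


def find_blobs(mask, min_pixels=180):
    """Find 4-connected regions of 1s and keep the large ones.

    mask: binary image as a list of rows of 0/1 values.
    Returns a list of (area, (cx, cy)) with the geometric center of
    every region that has at least min_pixels pixels.
    """
    height, width = len(mask), len(mask[0])
    seen = [[False] * width for _ in range(height)]
    blobs = []
    for y0 in range(height):
        for x0 in range(width):
            if mask[y0][x0] != 1 or seen[y0][x0]:
                continue
            # Breadth-first search collects one connected region.
            queue = deque([(x0, y0)])
            seen[y0][x0] = True
            pixels = []
            while queue:
                x, y = queue.popleft()
                pixels.append((x, y))
                for nx, ny in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if (0 <= nx < width and 0 <= ny < height
                            and mask[ny][nx] == 1 and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((nx, ny))
            area = len(pixels)
            if area >= min_pixels:
                cx = sum(p[0] for p in pixels) / area
                cy = sum(p[1] for p in pixels) / area
                blobs.append((area, (cx, cy)))
    return blobs
```

Matching each center to the nearest center in the previous frame is one simple way to turn these per-frame detections into tracks, although the association rule itself is not described in this section.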

5 Conclusion

In this paper, we discussed an improved algorithm based on the moving blob model for moving object detection and tracking in fisheye video sequences. The algorithm is divided into two main steps: the first detects the moving blobs, based on the traditional background subtraction algorithm, and the second tracks the moving targets determined from the moving blobs. The experiment results indicate that the improved algorithm can stably track some of the moving targets in fisheye video sequences, and that it is effective when the targets move from far away toward the center of the image. The fisheye image has a large field of view but also a large distortion, which makes detection very difficult, especially when many targets are at the edge of the fisheye image. Further research is needed in this field.

References

1. Bellas N, Chai SM, Dwyer M et al (2009) Real-time fisheye lens distortion correction using automatically generated streaming accelerators. In: 17th IEEE Symposium on Field Programmable Custom Computing Machines (FCCM'09). IEEE, pp 149–156

2. Chahooki M, Charkari N (2016) Bridging the semantic gap for automatic image annotation by learning the manifold space. Comput Syst Sci Eng 31(1)

3. Comaniciu D, Ramesh V, Meer P (2000) Real-time tracking of non-rigid targets using Mean Shift. Comput Vis Patt Recog 02:142–149

4. Ding Y, Xu Z, Zhang Y, Sun K (2017) Fast lane detection based on bird's eye view and improved random sample consensus algorithm. Multimed Tools Appl 76(21):22979–22998

5. Du H, Liu Z, Song H, Mei L, Xu Z (2016) Improving RGBD Saliency Detection Using Progressive Region Classification and Saliency Fusion. IEEE Access 4:8987–8994

6. Gennery DB (2006) Generalized Camera Calibration Including Fish-Eye Lenses. Int J Comput Vis 68(3):239–266

7. Hughes C, Denny P, Glavin M et al (2010) Equidistant Fish-Eye Calibration and Rectification by Vanishing Point Extraction. IEEE Trans Pattern Anal Mach Intell 32(12):2289–2296

8. Kannala J, Brandt SS (2006) A Generic Camera Model and Calibration Method for Conventional, Wide-Angle, and Fish-Eye Lenses. IEEE Trans Pattern Anal Mach Intell 28(8):1335–1340

9. Kweon G, Choi YH, Laikin M (2008) Fisheye lens for image processing applications. J Opt Soc Korea 12(2):79–87

10. Li J, Wang JZ, Wei S (2010) Moving object detection in framework of compressive sampling. J Syst Eng Electron 05:740–745

11. Lin CC, Wang MS (2012) A vision based top-view transformation model for a vehicle parking assistant. Sensors 12(4):4431–4446

12. Liu B, Zhou XH, Zhou HQ (2004) Vehicle Detection and Recognition in Multi-traffic Scenes. J Univ Sci Technol China 34(5):599–606

13. Mitiche A, Mansouri AR (2004) On convergence of the Horn and Schunck optical-flow estimation method. IEEE Trans Image Process 13(6):848–852

14. Nielsen F (2005) Surround video: a multihead camera approach. Vis Comput 21(1–2):92–103

15. Qian YQ, Xie QL (2010) Camshift and Kalman Predicting Based on Moving Target Tracking. Comput Eng Sci 21(8):81–83

16. Song HZ, Fu Y, Zhang L et al (2013) Cursor caging: enhancing focus targeting in interactive fisheye views. SCIENCE CHINA Inf Sci 5:115–130

17. Tu LF, Zhong SD, Peng Q (2014) Moving object detection method based on complementary multi resolution background models. J Cent South Univ 6:2306–2314

18. Wedel A, Pock T, Zach C et al (2008) An improved algorithm for TV-L1 optical flow. Stat Geometric App Vis Motion Anal: Int Dagstuhl Seminar 7:23–45

19. Wei J, Li CF, Hu SM et al (2012) Fisheye video correction. IEEE Trans Vis Comput Graph 18(10):1771–1783

20. Wu J, Zhang G, Shuai Y et al (2014) Study the Moving Objects Extraction and Tracking Used the Moving Blobs Method in Fisheye Image. In: Pattern Recognition: 6th Chinese Conference, CCPR. Changsha, China 484:255–265

21. Yang SX (2011) Fast-moving target tracking based on mean shift and frame-difference methods. J Syst Eng Electron 04:587–592

22. Ying XH, Hu ZY (2003) Fisheye Lenses Distortion Correction Using Spherical Perspective Projection Constraint. Chin J Comput 26(12):1702–1708

23. Yuan X, Song YR, Wei XY (2011) Automatic surveillance system using fish-eye lens camera. Chin Opt Lett 2:37–41

24. Zhang ZK, Zhou JJ, Wang F et al (2011) Multiple-target tracking with adaptive sampling intervals for phased-array radar. J Syst Eng Electron 5:760–766

25. Zhang B, Appia V, Pekkucuksen I, Batur A, Shastry P, Liu S, Sivasankaran S, Chitnis K, Liu Y (2014) A surround view camera solution for embedded systems. In: 2014 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), pp 676–681

Acknowledgements

We would like to acknowledge that this work was supported in part by the Hunan Provincial Natural Science Foundation (2016JJ2064, 2017JJ3099) and the Open Fund of Education Department of Hunan Province (15K051). The experiments in this work were also supported in part by the Research Fund of Science and Technology Program of Hunan Province (2016TP1021) and the Fund of Education Department of Hunan Province (16C0723). The contents are solely the responsibility of the authors and do not necessarily represent the official views of the funding bodies. We also thank the Research Center for Heterogeneous Computing and Applications, Hunan Institute of Science and Technology, for providing extensive experimental support.


Wu, J., Huang, F., Hu, W. et al. Study of multiple moving targets’ detection in fisheye video based on the moving blob model. Multimed Tools Appl 78, 877–896 (2019). https://doi.org/10.1007/s11042-018-5763-5


DOI: https://doi.org/10.1007/s11042-018-5763-5