Abstract

In recent years many methods for image watermarking have been proposed to overcome the growing concern of copyright protection. The goal of all these methods is to satisfy the tradeoff between two important characteristics of robustness and imperceptibility. In this paper an adaptive blind watermarking method in the Contourlet transform domain is proposed. In this method we apply a two-level Contourlet transform on the original image. The first level approximate image is partitioned into blocks. Using a novel edge detection algorithm, important edges of each block of the approximate image are detected and the entropy of each block is also computed. Then by concatenating some portions of the second level subbands we form blocks. These formed blocks are transformed into DCT domain. Watermark is embedded by modification of the DCT coefficients. The severity of the embedding is controlled depending on the complexity of the corresponding block in the approximate image. For higher robustness against attacks, we embedded the watermark redundantly and used voting mechanism in extraction stage. Experimental results reveal that our method has high robustness and acceptable imperceptibility.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Nowadays due to the expansion of the internet and social networks, multimedia products, such as images, audio files, etc., could be easily shared online. In such environments, sometimes multimedia data are forged and replicated which results in violation of the copyright. Watermarking is a way of embedding a message in an image, audio clip, video clip, or in any other digital media, in order to protect the media’s copyright and intellectual property. The message is embedded by the owner of the media to indicate the ownership and to preserve the intellectual property. For this purpose in recent years many image watermarking methods have been proposed. Robustness against possible attacks and imperceptibility are the two main challenges for any image watermarking methods [3]. Two main stages in watermarking methods are embedding and extraction. In the embedding stage the watermark is somehow embedded into the image to better satisfy the tradeoff between robustness and imperceptibility. In the extraction stage the embedded watermark is extracted from the watermarked media.

In [17] a review of watermarking techniques is presented. Watermarking methods are classified into two groups based on whether they need to have the original image in the extraction stage or not. Blind detection techniques, which are advantageous, do not need the original image or the watermark. On the other hand non-blind methods require the original image and/or the watermark logo. This has made non-blind methods to be less appealing. Also watermarking methods based on their embedding environment are classified into spatial domain methods and frequency domain methods. Many spatial domain watermarking methods have been proposed. In [12] a copyright protection method for digital images in spatial domain is proposed. In this method in the embedding phase the watermark is fused with noise bits to enhance the security. Then a XOR operator is applied between the watermark and the feature value of the image by 1/T rate forward error correction. Also a voting mechanism is used in the extraction phase. In [23] a method for enhancing the feature-based image watermarking against geometric distortions and common image processing operations is proposed. It combines an affine invariant point detector, image normalization and the orientation alignment. In [24] a watermarking method, robust to geometric moments, is proposed. In this method a set of affine invariants are derived from Legendre moments. Then these affine Legendre moment invariants are used in the embedding process. In [25] a Gaussian low-pass filter is applied to the original image and using a secret key, a number of gray levels are randomly selected. Then the histogram of the smoothed image is constructed using the selected gray levels. By selecting pixel groups with the highest number of pixels a safe band between chosen and not chosen groups is built. A watermark bit is embedded into a group by moving some pixels to certain gray levels within the pixel group. This method can embed a small number of bits in an image. One of the main characteristics of spatial domain watermarking methods is that they are highly robust against geometric attacks.

Even though highly robust spatial domain watermark methods do exist but they usually have low embedding capacities [25]. Transform domain based watermarking methods can easily achieve high robustness by modifying the transform domain coefficients [17]. Frequency domain based methods use transform techniques, such as discrete Fourier transform (DFT) [19], discrete cosine transform (DCT) [7, 8, 18, 20, 21], discrete Wavelet transform (DWT) [11, 13–16, 22] and Contourlet transform (CT) [1, 4]. In [19] a watermark is embedded in a ring in the DFT domain. In this method the watermark has circular symmetry and it is added in middle frequencies of the DFT domain of the original image. In [20] the input image is first segmented in different parts based on Voronoi algorithm and then a sequence of real numbers is embedded into DCT domain of these segmented parts. In [21] by applying DCT to the blocks of the input image and using the DC coefficient (coefficient (0, 0)) of each block, a low-resolution approximation image is constructed. Then the watermark is embedded by adding it to high frequencies of the produced approximation image. In [18] a non-blind copyright protection scheme for e-government document images, using DCT domain, is proposed. In this method by applying DCT transform on the original image and using SFC, coefficients are mapped into four rectangular areas from low to high frequencies. Then SVD is applied to each area and the original image is modified by embedding the watermark using singular values of the DCT transformed image. Also in this method the optimal value of the scaling factor of each area is determined using a genetic algorithm. A blind version of [18] which only uses the maximum singular value of the DCT of an image is presented in [8]. One problem of [8, 18] is that these two methods use Genetic algorithm which does not always result in a global optimum value. In [7] at first a mask of the original image is built by luminance masking. The image is embedded by modifying the singular values of DCT of the original image with singular values of the generated mask. Embedding uses a control parameter which is found using genetic algorithm.

In [16] a review of the watermarking methods in DWT domain is proposed. In [22] a SS-based method is proposed, where a key dependent randomly generated wavelet filter bank is used for embedding the watermark. In [13] a method based on the significant difference of wavelet coefficient quantization is proposed. In this method every seven non overlap wavelet coefficient of the original image are considered as a group and the two largest coefficients are used in the embedding phase. A watermark bit is embedded by adjusting the magnitude of the difference of these two coefficients. Also in the extraction phase an adaptive threshold value is used to extract the watermark. In [11] despite of embedding the watermark in wavelet coefficient the watermark is embedded in the singular value of the wavelet transform of the original image which is obtained by applying SVD on the DWT sub bands. In [15] wavelet trees are classified into two clusters. One cluster is denoted as the watermark bit 1, and the other denotes bit 0. These trees are constructed using distance vector which is determined by the two smallest coefficients of a wavelet tree. These trees are quantized to increase the statistical difference between a watermark bit 1 and a watermark bit 0. This difference is used in the extraction phase for determination the watermark bit. In [14] wavelet coefficients of the original image are grouped into different blocks with different sizes. Then watermark is embedded in different sub-bands. Every block is embedded by adjusting the local maximum coefficient of that block by increasing or decreasing its energy to embed 1 or 0 respectively. In the extraction phase, energy is subtracted from the local maximum coefficient. If the coefficient still remains the maximum in the block, then watermark bit is 1, otherwise the watermark bit is 0. One of the drawbacks of this method is that it has low NC values against severe JPEG attacks.

In recent years CT transform features motivated researchers to use it in watermark field. In [4] the watermark is embedded into different bands in order to have more robustness against attacks. Due to low sensitivity of the human visual system to the intensity change in edge areas of the image, watermark data in [1] is embedded into directional sub bands with the highest energy. In [9] a non-blind image watermarking is proposed in which the watermark is embedded in the singular values of CT of the original image and for more robustness the watermark is embedded in three scales of the CT domain. In [2] for the watermark embedding, both CT and DCT are cascaded and the strength factor for the embedding severity is determined by block complexity in the CT domain. In [10] a block based method is proposed and the watermark is embedded into CT coefficients. In this method in order to increase the robustness, the watermark is embedded in the DCT coefficient of the CT blocks. This method is not very robust against some attacks such as Gaussian noise, salt & pepper noise and also JPEG attacks.

In this paper, we propose a new blind adaptive image watermarking method in the CT domain. In this method at first a two level CT transform is applied on the original image and the approximate image of the first level is decomposed into blocks. Then the second level detail image is partitioned into blocks in such a way that each detail block has a corresponding block in the approximate image. The watermark is embedded in detail blocks. In order to increase the robustness against attacks, the watermark is embedded in DCT coefficients of detail blocks. To satisfy the tradeoff between robustness and imperceptibility, stronger embedding is done in complex blocks and weaker embedding is performed in smooth blocks. The severity of embedding in every detail block is controlled by determining the complexity of corresponding block in the approximate image. The complexity of each approximate image block is determined using two criterions of edge concentration and entropy of that block. In this method, to find edges of each block, a new modified edge detection algorithm is proposed. Also in order to further increase the robustness of our method, we redundantly embedded the watermark and in extraction stage a voting mechanism is used. In brief, we define a novel method of finding complex regions where human visual system is not sensitive to changes in such regions. Embedding in such complex regions is done with higher strength which increases the robustness of our method. Also, our embedding method is novel. It is performed in the detail sub-bands of CT which causes minor distortions in the image. This is done by gathering sub-blocks from four different detail sub-bands. After embedding modifications are performed, the sub-blocks are distributed back in their corresponding sub-bands. This is actually an error-diffusion mechanism which enhances the transparency of our algorithm.

The rest of the paper is organized as follows. In the Section II details of the proposed method are discussed. Experimental results are presented in Section III. Finally, we conclude the paper in Section IV.

2 Method

Figure 1 shows the block diagram of the proposed watermarking method. In this diagram one bit of watermark, W(B i ), is embedded in a block (B i ). Different parts of the proposed method are discussed in this section.

In the proposed method at first a two-level CT transform is applied to the input image. As shown in Fig. 2, the original image is decomposed into two 4 directional subbands.

In our method the embedding process and the embedding severity is controlled with respect to the first level approximate image. For this purpose first level approximate image is decomposed into m × m blocks and for each block the complexity measure is determined. Hence, with our method in an w × w input image, a watermark with \( \frac{w^2}{4\times N\times {m}^2} \) bits and N-bit redundancy can be embedded. Later we will discuss how complexity measure is obtained for each approximate image block.

In order to decompose the second level detail image to blocks and embed the watermark in them, we propose a new blocking scheme which is shown in Fig. 3. In this scheme in order to embed a watermark bit in a m × m block, each of four detail images is decomposed to \( \frac{m}{2}\times \frac{m}{2} \) sub-blocks and a m × m block is constructed by four \( \frac{m}{2}\times \frac{m}{2} \) sub-blocks that are from four different detail images. After constructing each m × m block, by applying DCT transform on it and modifying DCT coefficients as follows, we could embed a watermark bit in a block. This gathering of sub-blocks from four different detail images and then distributing them back to their corresponding detail images help us diffuse the modification errors which are caused by the embedding procedure.

Figure 4 shows the pseudo code of the proposed embedding procedure. Let us denote DCT transform of any block B i by D i . In the “DCT Coefficients Modification” stage of the algorithm we modify two DCT coefficients of D i (x, y) and D i (w, z) in each block based on the watermark bit that is to be embedded in any B i block. Also α i and β i definition is explained later.

In above equations, the function Swap (D i (x, y), D i (w, z)) switches around the values of coefficients D i (x, y) and D i (w, z). Also the strength factor α i which determines the complexity measure of each block i is computed as bellow:

In (1), the term \( Complexit{y}_{B_i} \) is calculated as bellow:

In (2), Entropy(B i ) is the entropy of block B i and Edge_Density(B i ) represents the sum of edge pixels in block B i .

In order to find the edges one could use Canny [5]. We propose a new version of multi-scale multi-threshold Canny edge detector [6] algorithm which can find a wide range of edges. In this method at first, for each scale s, Canny edge detector algorithm is applied with different thresholds on each input block. Then the results of different thresholds in each scale are averaged as bellow:

In the above equation, E s is the output of averaging of Canny results using different thresholds at the scale s. B i is the input image block, Min_t and Max_t are the minimum and maximum thresholds and K is the number of thresholds. Now the maximum edges of the block among all scales are detected as bellow:

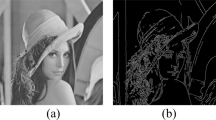

In (4), Edge is the set of block edges obtained from different scales, E j is the averaged out edges of the block in scale j, and S is the number of scales. Unlike [6], which uses min E j , we use max E j . We know that by using multi-thresholding we identify all existing edges in a scale. When we use max E j , it identifies the scale which contains largest range of edges, including stronger edges too. Hence, more edges are detected. We can see that stronger edges have higher intensity values and weak edges have lower intensity values. In our method we call the number of edge pixels in a block as the edge concentration of that block. We want to embed stronger in blocks which have high edge concentrations. Hence, we have to ignore weak edges. In order to maintain the stronger set of edges in each block and get rid of weak edges, we apply Otsu thresholding [5] on the results obtained from (4) for each block. Otsu’s method divides intensity values of an image into two classes by finding an intensity threshold. The threshold is chosen in a way to maximize the inter-class variance and to minimize the intra-classes variance. In Fig. 5 the original first level approximate image, the result of method [6] and the results of our modified multi-scale multi-threshold Canny edge detector method before and after applying Otsu thresholding are shown. By comparing Fig. 5b and c we notice that by using max E j instead of min E j in (4) more edges are detected.

Result of proposed edge detector algorithm, (a) original first level approximate image, (b) result of applying [6], (c) and (d) results of applying proposed edge detector before and after Otsu thresholding

Since (2) can be zero for a block a constant value C is added to prevent \( Complexit{y}_{B_i} \) from becoming zero.

Also β i is computed based on the intended watermark bit, W(B i ), as follows:

Hence, using (1), in blocks with high edge concentration and high entropy, due to large value of \( Complexit{y}_{B_i} \), watermark bits are strongly embedded by increasing the difference between D i (x, y) and D i (w, z). These are blocks that human visual system can hardly detect modifications. On the other hand, for blocks which have low edge concentration and low entropy, which cause the term \( Complexit{y}_{B_i} \) to become low, watermarking is performed with low strength factors.

This would cause minor alterations in the block and it is suitable for smooth regions of the image. Human visual system is sensitive to changes in smooth areas. In this manner the watermarked image is visually good with low distortion. In other words, a good tradeoff between robustness and imperceptibility is established.

After the DCT coefficient modifications, we apply inverse DCT on each block and retile them as before in the detail image. Finally by applying an inverse CT transform the watermarked image is obtained.

Because of the N-bit redundant embedding of the watermark in the input image, in order to extract the watermark from the watermarked image we employ a voting mechanism among the N neighboring blocks as follows:

In the above equation, d 1 to d N represent the difference between D i (x, y) and D i (w, z) in each of the N neighboring blocks. Since N-bit redundant embedding is performed, blocks 1 to N contain the same watermark bit. Also, Sum (d 1 : d N ) is the sum of the differences of the D i (x, y) and D i (w, z) in blocks 1 to N. In other words, by using (6), when the sum of neighboring blocks which vote to 1 is more than blocks which vote to 0, the extracted watermark bit from these N neighboring blocks is 1. Same voting procedure applies for extraction of 0. With this voting mechanism robustness against attacks is intensely enhanced. We embed one watermark bit repeatedly in N neighboring blocks. In the extraction process, we perform voting among the N neighboring blocks. In this method if the difference between Di (x, y) and Di (w, z) in a block is more than 0 it shows that a 0 has been embedded and otherwise a 1 is in that block. By summing the N difference values from the N neighboring blocks we can confidently tell what the value of embedded bit is. A positive sum indicates embedded 1 and a negative result means an embedded 0. If a block has high complexity then it has higher difference between its Di (x, y) and Di (w, z). It means it has stronger bit embedded in it and it can dominate the votes of the other N-1 blocks. When the image is under attack, even if only one of N neighboring blocks has high complexity, it can guarantee the survival of the watermark.

3 Experimental results

For simulation of the proposed method we used Matlab R2013a (8.1.0.604) and we applied it on gray scale 512 × 512 pixels images (w = 512). In this method the first level approximate image which is 256 × 256 pixels is decomposed into 8 × 8 blocks (m = 8). Also, 128 bits of message, with a redundancy of 8 (N = 8), is randomly generated and embedded in (3,6) and (6,3) DCT coefficients of second level detail image blocks. The minimum and the maximum thresholds values can arbitrarily be selected. Larger difference between the maximum and minimum thresholds can help finding more edges. Hence, we set these thresholds, in the edge detector stage, to 0.1 and 0.8. Also, a step size of 0.1 is considered and 8 thresholds are applied (i.e., K = 8). We used 5 scales of 1 to 5 for Canny edge detector (S = 5). In the following the visual quality, effect of using our proposed modified edge detection algorithm and robustness of our watermarking method against attacks is evaluated.

We have evaluated our method on five images (Barbara, Baboon, Map, couple, and Bridge). The Peak-Signal-to-Noise-Ratio (PSNR) results between the original and watermarked images are 42.89, 40.06, 40.62, 44.06, and 41.74. In Fig. 6 Barbara, Baboon, Map original images and their watermarked versions and their SSIM and PSNR values are shown. By comparing the visual quality of each image with its watermarked version, we notice that our method’s imperceptibility is high. We embed each watermark bit in an image block. Embedding in DCT coefficients of CT domain of original image diffuses the embedding effect throughout the block. Hence, blockiness artifacts are not produced. Also by combining the modified multi-scale multi-threshold Canny edge detector algorithm and by using entropy for measuring the complexity of each block, we properly alter the strength factor in each block. Hence, the visual quality of the watermarked image is not diminished.

To measure the robustness of the algorithm we find the normalized correlation (NC) between the original and the extracted watermarks. Higher correlations show higher robustness of the algorithm against attacks. In Table 1 NC results of the proposed method against cropping attack, with different percentages, are presented. For this purpose watermarked images were cropped from 5 to 20 %. In order to perform cropping attack 5 to 20 % of the pixels on the right side of the image are replaced by zeros. We see that for up to 10 % cropping almost no loss of information is caused and complete watermark is extracted. The worst situation in Table 1 is for 20 % cropping of the Couple image where a high correlation of 91.29 % is achieved.

In order to further evaluate the robustness of our method, we performed Gaussian filter attack. In this experiment, 3 different window sizes, and also with 3 different sigma values, are used. The NC results of our method against Gaussian filter attack are presented in Table 2. High NC values reveal high robustness of our method against this attack.

In Table 3 the results of comparing our method with the state of the art method of [1] for JPEG attack and scaling attack are shown. For a fair comparison, the PSNR value for each image, produced by our method and [1], is the same. By comparing the results, we see that our method in most cases outperforms [1] against JPEG attack. Also comparison of BER results of the proposed method with [1] reveals that our method is highly robust against scaling attack.

Furthermore, to evaluate our method against different attacks, such as median filter and histogram equalization attacks, we compared our results with [22]. In order to compare our method with [22] we increased the message size to 256 bits. The NC results against these two mentioned attacks are shown in Table 4. This comparison is under equal PSNR values. These results evidently reveal that our method is highly robust against the two mentioned attacks.

4 Conclusion

In this paper, we proposed a novel blind adaptive watermarking method in CT domain. In this method at first a two-level CT transform was applied on the original image and the first level approximate image was decomposed into blocks. Embedding was applied in the second level detail sub-bands. In order to regulate the strength factor for each block, we determined the complexity of each first level approximate image block. This complexity was determined by combining the entropy of a block with a proposed edge concentration measure. In this way, in complex blocks the watermark bits were embedded strongly and in simple and smooth blocks the watermark bits were embedded weakly. Because of low sensitivity of human visual system to changes in complex blocks, we could satisfy a good trade-off between robustness and imperceptibility. Also, in order to further improve the robustness of our method we redundantly embedded the watermark. The robustness of our method was tested against different attacks and experimental results demonstrated that the proposed method was able to achieve better performance in comparison with compared methods.

Most of the exiting watermarking algorithms are for gray scale single-still-images. The use of color images, audio, video, and stereo contents is on increase. These media contents require suitable copy protection schemes. Some of the existing image-embedding methods do not have the potential of being applied to other types of media. For future works we would like to extend our method to video sequences. This could be possible by computing complexity in video cubes rather than image blocks. This may also require 3D contourlet transform.

References

Akhaee MA, Sahraeian SME, Marvasti F (2010) Contourlet-based image watermarking using optimum detector in a noisy environment. IEEE Trans Image Process 19(4):967–980

Azizi S, Samavi S, Mohrekesh M, Shirani S (2013) Cascaded transform space watermarking based on analysis of local entropy variation. IEEE International Conference on Multimedia and Expo Workshops (ICMEW), 1–6

Cox, Ingemar J, Matthew LM, Jeffrey AB, Honsinger C (2002) Digital watermarking. 53

Ghannam S, Abou-Chadi FE (2009) Enhancing robustness of digital image watermarks using Contourlet transform. 16th IEEE International Conference on In Image Processing (ICIP), 3645–3648

Gonzalez RC, Woods RE (2002) Digital image processing

Hernández-Vela A, Gatta C, Escalera S, Igual L, Martin-Yuste V, Sabaté M, Radeva P (2012) Accurate coronary centerline extraction, caliber estimation, and catheter detection in angiographies. IEEE Trans Inf Technol Biomed 16(6):1332–1340

Horng SJ, Rosiyadi D, Fan P, Wang X, Khan MK (2014) An adaptive watermarking scheme for e-government document images. Multimedia Tool Appl 72(3):3085–3103

Horng SJ, Rosiyadi D, Tianrui L, Takao T, Guo M, Khan MK (2013) A blind image copyright protection scheme for e-government. J Vis Commun Image Represent 24(7):1099–1105

Kaviani HR, Karimi N, Samavi S (2011) Robust watermarking in singular values of contourlet coefficients. Machine Vision and Image Processing (MVIP), 1–5

Kaviani HR, Samavi S, Karimi N, Shirani S (2012) Elevating watermark robustness by data diffusion in Contourlet coefficients. IEEE International Conference on Communications (ICC), 6739–6743

Lai CC, Tsai CC (2010) Digital image watermarking using discrete wavelet transform and singular value decomposition. IEEE Trans Instrum Meas 59(11):3060–3063

Lin WH, Horng SJ, Kao TW, Chen RJ, Chen YH, Lee CL, Terano T (2009) Image copyright protection with forward error correction. Expert Syst Appl 36(9):11888–11894

Lin WH, Horng SJ, Kao TW, Fan P, Lee CL, Pan Y (2008) An efficient watermarking method based on significant difference of wavelet coefficient quantization. IEEE Trans Multimedia 10(5):746–757

Lin WH, Wang YR, Horng SJ, Kao TW, Pan Y (2009) A blind watermarking method using maximum wavelet coefficient quantization. Expert Syst Appl 36(9):11509–11516

Lin WH, Wang YR, Horng SJ (2009) A wavelet-tree-based watermarking method using distance vector of binary cluster. Expert Syst Appl 36(6):9869–9878

Meerwald P, Andreas U (2001) Survey of wavelet-domain watermarking algorithms. International Society for Optics and Photonics 505–516

Potdar, Vidyasagar M, Song H, Chang E (2005) A survey of digital image watermarking techniques. 3rd IEEE International Conference on In Industrial Informatics, 709–716

Rosiyadi D, Horng SJ, Fan P, Wang X, Khan MK, Pan Y (2012) Copyright protection for e-government document images. MultiMedia, IEEE 19(3):62–73

Solachidis V, Ioannis P (2001) Circularly symmetric watermark embedding in 2-D DFT domain. IEEE Trans Image Process 10(11):1741–1753

Suhail MA, Obaidat MS (2003) Digital watermarking-based DCT and JPEG model. IEEE Trans Instrum Meas 52(5):1640–1647

Taherinia AH, Jamzad M (2009) A robust spread spectrum watermarking method using two levels DCT. Int J Electron Secur Digit Forensics 2(3):280–305

Wang Y, Doherty JF, Van Dyck RE (2002) A wavelet-based watermarking algorithm for ownership verification of digital images. IEEE Trans Image Process 11(2):77–88

Xinbo G, Deng C, Li X, Tao D (2010) Geometric distortion insensitive image watermarking in affine covariant regions. IEEE Trans Syst Man Cybern 40(3):278–286

Zhang H, Shu H, Coatrieux G, Zhu J, Wu QJ, Zhang Y, Zhu H, Luo L (2011) Affine Legendre moment invariants for image watermarking robust to geometric distortions. IEEE Trans Image Process 20(8):2189–2199

Zong T, Xiang Y, Natgunanathan I, Guo S, Zhou W, Beliakov G (2014) Robust histogram shape based method for image watermarking. IEEE Trans Circut and Syst for Video Tech 25(5):717–729

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Fazlali, H.R., Samavi, S., Karimi, N. et al. Adaptive blind image watermarking using edge pixel concentration. Multimed Tools Appl 76, 3105–3120 (2017). https://doi.org/10.1007/s11042-015-3200-6

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-015-3200-6