Abstract

This paper proposes a cost-effective, edge-directed image super-resolution scheme. Image super-resolution (image magnification) is an active research area and is needed in a variety of applications. The proposed scheme is based on a multi-kernel approach. Various stencils are defined on the basis of geometrical regularities, and each stencil is associated with a kernel. The value of a re-sampling pixel is obtained as the weighted average of the pixels in the selected kernel. The time complexity of the proposed scheme is as low as that of classical linear interpolation techniques, but the visual quality is more appealing because of its edge-orientation property. The experimental results and analysis show that the proposed scheme provides a good combination of visual quality and time complexity.

1 Introduction

Image super-resolution increases the pixel density of low resolution (LR) images to obtain high resolution (HR) images while preserving the geometrical regularities of the original LR image. The terms magnification [24], super-resolution [4, 22, 40], image scaling [37], zooming [3] and up-sampling [23, 39] have been used interchangeably in the literature for image super-resolution. The resolution of digital devices (e.g. cell phones, surveillance cameras) is often limited by low-cost imaging sensors. LR images obtained from such devices are enlarged to make effective use of the growing capabilities of high resolution displays (e.g. high definition LCDs). HR images are used in many applications for a wide variety of purposes. In medical imaging, HR images are studied for analysis and diagnosis, which can be very difficult with LR images. High quality video conferencing and Blu-ray movies make effective use of resolution-enhancing technology. Consequently, there is a growing demand to obtain HR images from LR images taken by low-cost digital devices and to derive high definition video from existing standard footage. Furthermore, web videos need video sequence enhancement [29] because they are stored at low quality due to limited bandwidth and server storage. However, LR images cannot be converted to HR images arbitrarily: a super-resolution (SR) image must be recovered by taking the original detail of the LR image into account, so that the geometrical regularities of the LR image are preserved.

Various digital image magnification techniques have been used to construct SR images from LR images while preserving image detail. Traditional zooming schemes that generate an SR image normally require multiple LR images as input to compute the details of edge orientations. The reconstruction of the SR image is based either on prior information or on reasonable assumptions about the observation model that constructs the magnified image from the LR images. The reconstruction treats the task as an inverse problem, recovering the original details of the geometrical regularities of the SR image by fusing one or more LR images [4, 22, 32]. However, because few LR images are available to build the prior-knowledge model and because registration is ill-conditioned, the quality of the magnified image is generally quite poor. Several schemes have been proposed to fix these problems [34]. Some machine learning based zooming schemes construct HR images using prior knowledge learned from the co-occurrence between LR and HR image patches [12, 26, 27, 30]. A set of LR image patches and their corresponding high quality HR patches is stored in a database, and the zooming algorithm is trained on these stored patches. Such techniques have certain limitations because they depend on various parameters, e.g. the number and type of images in the training dataset and the size of the dictionary atoms trained from generic training images. The quality and running time of machine learning based schemes change when these parameters vary, and their time complexity is high. Therefore these schemes cannot be used for soft real-time applications (e.g. mobile, theater, radar and printer applications). There must be a tradeoff between time complexity and image quality. Interpolation based zooming schemes are frequently used when working with a single image [8, 14, 28].
The most prevalent interpolation techniques are nearest neighbor (NN), bilinear and bicubic [1, 2, 21]. These interpolation schemes are non-adaptive and computationally very simple, but they suffer from aliasing, blurring and edge halos. Nevertheless, they are still used in many applications. Various edge-directed and adaptive non-linear interpolation schemes have been developed to reduce the problems of non-adaptive interpolation [23–25, 28]. They determine the re-sampling information according to the geometrical regularities. Although these schemes have low time complexity, they produce unwanted artifacts because they ignore texture invariance and smoothness information.

The aim of this paper is to provide a cost-effective zooming scheme that combines high reconstruction quality with low time complexity. Our approach is motivated by recent work on interpolation [7, 23–25, 28] and on edge detection filters [13]. The basic idea of the proposed scheme is to define various stencils \( {\mathbb G}{\mathbb s}_j \) based on geometrical regularities. The weight of each pixel in the pattern \( {\mathbb G}{\mathbb s}_j \) associated with kernel \( \Psi_k \) is computed, and the weighted average of the sampling points in \( \Psi_k \) is assigned to the re-sampling point. In this way a high quality SR image is produced from an LR image. The experimental results demonstrate the superiority of the proposed technique, in both visual and quantitative terms, over recent well-known zooming schemes [24, 40] as well as classic interpolation techniques such as bicubic. The computational time is also low enough for applications that need a real-time response.

The rest of the paper is organized as follows: Section 2 describes the related work, Section 3 presents the proposed scheme, Section 4 describes in detail the experimental results and analysis, and Section 5 concludes the paper with brief remarks.

2 Related work

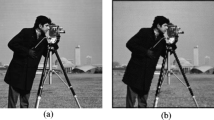

This section summarizes the major categories of techniques presented in the literature for image magnification. Various image zooming schemes are used in well-known commercial image processing tools, e.g. Adobe Photoshop, Windows Photo Viewer and IrfanView [1, 16, 17, 19]. Nearest neighbor (NN) is the most basic and simplest non-adaptive interpolation technique. It needs the least execution time of all interpolation schemes because it considers only the single pixel nearest to the interpolated point. The arrow in Fig. 1a shows the assignment of the closest pixel to the re-sampling (interpolated) point u(x, y). NN is also called pixel replication because it has the effect of simply enlarging each pixel by replication. Bilinear interpolation gives better visual results than NN because it considers the nearest 2 × 2 neighborhood of known pixels (sampling points) surrounding the unknown (interpolated) pixel u(x, y). In Fig. 1b, x and y are the coordinates of the interpolated pixel, and x and y with subscripts are the coordinates of the sampling pixels surrounding it. Bilinear interpolation takes a weighted average of these four sampling points to arrive at its final interpolated value as

\[ u(x, y) = w_1\, u(x_1, y_1) + w_2\, u(x_2, y_1) + w_3\, u(x_1, y_2) + w_4\, u(x_2, y_2) \]

where u(x, y) is the re-sampling pixel and \( u(x_1, y_1) \), \( u(x_2, y_1) \), \( u(x_1, y_2) \) and \( u(x_2, y_2) \) are the intensity values of the sampling pixels surrounding it. As Fig. 1(b) shows, each weight equals the normalized area of the rectangle spanned by the re-sampling pixel u(x, y) and the diagonally opposite sampling pixel; e.g. \( u(x_2, y_1) \) is diagonally opposite to \( u(x_1, y_2) \), and the weight of \( u(x_2, y_1) \) is \( w_2 \). Bilinear interpolation also suffers from unwanted artifacts, but it performs better than the nearest neighbor algorithm. The bicubic scheme goes one step beyond bilinear interpolation by considering the nearest 4 × 4 neighborhood of known pixels, i.e. sixteen pixels in total. Pixels closer to the re-sampling point get higher weights in the computation. The interpolated point u(x, y) can be calculated as:

\[ u(x, y) = \sum_{r=1}^{4}\sum_{c=1}^{4} a_{rc}\, x^{r-1} y^{c-1} \]

In bicubic interpolation, the gradients are calculated in both the x and y directions and across the four corners of the grid square. This produces 16 equations that determine the coefficients \( a_{rc} \), r, c = 1, 2, …, 4, as explained in [21, 31]. The bicubic algorithm achieves sharper and more attractive visual results than NN and bilinear interpolation. For this reason, bicubic interpolation is a standard in many image editing programs (e.g. Adobe Photoshop), printer drivers and in-camera interpolation. However, it suffers from blurring and blocking effects due to its non-adaptive nature. A number of adaptive and nonlinear schemes have been proposed [5, 21, 24, 28, 34] to overcome the problems of non-adaptive schemes. For instance, Lee and Yoon [23] presented an edge-directed up-sampling method based on radial basis function interpolation. It is a non-linear method that determines re-sampling information according to the geometrical regularities. Kim et al. [25] introduced a curvature interpolation method based on partial differential equations. Jurio et al. [24] proposed “Image magnification using interval information” (IInfo), which associates each pixel with an interval \( F(q_{ij}, \delta \cdot w) \) obtained by weighted aggregation of the pixels in its neighborhood.

Here w is the difference between the largest and the smallest intensities of the pixels in the kernel; \( F(q_{ij}, \delta \cdot w) \) is explained in detail in [24]. The magnified image is obtained from the interval using a linear \( K_\alpha \) operator; more detail about the \( K_\alpha \) operator can be found in [24]. Such techniques still ignore some important features, e.g. texture invariance and geometrical invariance information, which causes aliasing and blurring artifacts in the zoomed image. Reconstruction based zooming schemes were developed to reduce the aliasing effects of conventional magnification techniques [20, 38]. Mallat et al. [29] computed a set of inverse problem estimators by adaptively mixing a class of linear estimators corresponding to different priors; the sparse mixing weights were computed from the coefficients of blocks in a frame that provides a sparse signal representation. In [35], the image registration parameters are estimated with a Bayesian approach that marginalizes over the unknown SR image, which also allows the unknown point spread function to be estimated. Baker and Kanade [4] proposed an SR scheme that learns prior knowledge for specific classes of LR images and uses this prior knowledge to construct the HR image. Reconstruction based zooming schemes also produce some blurring and blocking effects in SR images, because few LR images are available for the prior-knowledge model and registration is ill-conditioned. Machine learning based algorithms have also been developed to reduce unwanted artifacts. Gajjar and Joshi [12] proposed a discrete wavelet transform based learning approach that uses a group of LR images and their corresponding HR images; the prior model of [12] is an inhomogeneous Gaussian Markov random field. Freeman et al. [10] suggested an example-based approach using a Markov Random Field (MRF) to convert an LR image to an HR image. This approach was enhanced by Sun et al. [33], who used Primal Sketch priors to refine sharp luminance variations. Yang et al. [40] proposed “Image Super-Resolution Via Sparse Representation” (SpR), which calculates the sparse representation coefficients α for each patch y of the LR input image and then uses α to construct the high resolution patch x of the HR image. More specifically, let \( D \in \mathbb{R}^{n \times k} \) be a dictionary of k basis functions (atoms) with k > n, and assume that a signal \( x \in \mathbb{R}^{n} \) can be represented as a sparse linear combination with respect to D, i.e. \( x = D\alpha \) with \( \alpha \in \mathbb{R}^{k} \). α can be optimized as

where β is a parameter that balances the sparsity of the solution against the fidelity of the approximation to y. The patch x is then used to construct the HR image, and gradient descent is used to find the zoomed image, closest to this initial HR estimate, that satisfies the reconstruction constraint [40]. SpR gives better results than previous zooming schemes, but because of its high time complexity and the limitations noted above [10, 12, 30, 33, 40], it cannot be incorporated in real-time applications. In the following section, we present a zooming technique that provides a good combination of high quality and low time complexity.
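For reference, the two simplest non-adaptive interpolators reviewed in this section, nearest neighbor and bilinear, can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the MATLAB routines used in Section 4: images are assumed to be 2-D lists of gray levels, the zoom factor is assumed to be an integer, output pixel (X, Y) is mapped back to the source position (X/λ, Y/λ), and the right and bottom borders are clamped. The function names are hypothetical. The bilinear weights follow the Fig. 1b convention, where each pixel's weight is the area of the sub-rectangle diagonally opposite it.

```python
import math


def nearest_neighbor_zoom(img, scale):
    """Pixel replication: every LR pixel becomes a scale x scale block."""
    h, w = len(img), len(img[0])
    return [[img[y // scale][x // scale] for x in range(w * scale)]
            for y in range(h * scale)]


def bilinear_at(img, x, y):
    """Bilinear value at the real-valued position (x = column, y = row)."""
    h, w = len(img), len(img[0])
    x1, y1 = int(math.floor(x)), int(math.floor(y))
    x2, y2 = min(x1 + 1, w - 1), min(y1 + 1, h - 1)  # clamp at the border
    dx, dy = x - x1, y - y1
    # Each weight is the area of the sub-rectangle opposite its pixel.
    w1 = (1 - dx) * (1 - dy)  # u(x1, y1)
    w2 = dx * (1 - dy)        # u(x2, y1)
    w3 = (1 - dx) * dy        # u(x1, y2)
    w4 = dx * dy              # u(x2, y2)
    return (w1 * img[y1][x1] + w2 * img[y1][x2]
            + w3 * img[y2][x1] + w4 * img[y2][x2])


def bilinear_zoom(img, scale):
    """Map each HR pixel (X, Y) back to (X / scale, Y / scale) and interpolate."""
    h, w = len(img), len(img[0])
    return [[bilinear_at(img, X / scale, Y / scale) for X in range(w * scale)]
            for Y in range(h * scale)]


lr = [[0, 10],
      [20, 30]]
print(nearest_neighbor_zoom(lr, 2))
print(bilinear_zoom(lr, 2))
```

The corner-aligned mapping X/λ keeps the sketch short; it differs from the pixel-centre alignment used by tools such as MATLAB's `imresize`, so values near the borders will not match those tools exactly.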

3 Proposed methodology

Suppose u is a low resolution (LR) image of size m × n and ũ is the corresponding λ-times zoomed image of size M × N, i.e. n = N/λ and m = M/λ. First, the LR image u is geometrically transformed by the factor λ, which can be written as

\[ u'(x', y') = u(x, y), \qquad x' = \lambda_x x, \quad y' = \lambda_y y \tag{6} \]

where x' and y' are the coordinates of the geometrically transformed image u', and \( \lambda_x \) and \( \lambda_y \) in Eq. (6) are the zooming scales in the horizontal and vertical directions, respectively. The transformed image u' contains undefined pixels, i.e. the re-sampling points. Assume ƒ is an ordinary linear zooming function that is applied to u' to compute the re-sampling points as

where ℰ is the error, i.e. the undesirable artifacts introduced during the zooming process, and ũ is the magnified image. ℰ must be reduced to suppress these annoying artifacts. To this end, the interpolator \( \Psi_k \) of the proposed technique is designed to maintain knowledge of the high resolution covariance, smoothness and texture of the source image. \( \Psi_k \) is an edge-oriented interpolator that minimizes the occurrence of the aliasing frequencies ℰ. If ƒ' is the zooming function of the proposed technique, it must fulfill the following property:

The edge-orientation property of the proposed interpolator \( \Psi_k \) adjusts the interpolation coefficients to match the local geometrical regularities of the LR image. The geometrical regularities are the high-frequency variations across edges and the smooth intensities along the edge orientation. The proposed interpolator preserves both the geometrical regularities and the texture inside high-frequency regions, which contain the original details of the source image. Conventional interpolation schemes, in particular, are non-adaptive and use only one interpolation kernel, which introduces noise during interpolation. The proposed interpolation scheme is adaptive and uses thirty-three interpolation kernels. It is implemented on a domain in \( \mathbb{R}^2 \) involving a range of pixels, and it uses different numbers of pixels depending on their pattern in the geometrical stencils. Figure 2 shows various geometrical stencils \( {\mathbb G}{\mathbb s}_j \subset u \) (interpolation regions), where j denotes the number of pixels in each stencil; these stencils play a significant role in the construction of the re-sampling points. The different gray shades (in the form of a grid) in Fig. 2 represent the orientation of the frequencies, and the dark gray shade indicates the shape of the stencil. Sampling points (known pixels) inside the stencil are used to compute the re-sampling point, while pixels in the white region are excluded from the computation. Hence, the interpolation kernel \( \Psi_k \) consists of geometrical stencils \( {\mathbb G}{\mathbb s}_j \) with varying numbers of sampling points as the high frequencies shift in different directions. Let ℙ be an interpolating point

where the index i in \( {\mathbb v}_i \) indicates the position of a sampling point in the stencil based on geometrical regularities, and n is the total number of these sampling vertices in \( {\mathbb G}{\mathbb s}_j \) associated with the interpolation kernel \( \Psi_k \). The kernel \( \Psi_k \) can therefore be pre-calculated and then applied to compute the re-sampling point. This allows the re-sampling pixels to be computed as fast as with the bicubic technique [1, 2, 14], while the orientation-adaptive property makes the result more accurate than bicubic.
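The geometrical transformation that produces u' can be sketched as follows. The sketch assumes an integer zoom factor with equal horizontal and vertical scales (\( \lambda_x = \lambda_y = \lambda \)) and the mapping x' = λx, y' = λy: known LR samples are copied into an otherwise empty grid, and the remaining entries, marked with `None`, are the re-sampling points that the kernels \( \Psi_k \) must fill. Both function names are hypothetical.

```python
def geometric_transform(u, lam):
    """Spread the m x n LR image u over a (lam*m) x (lam*n) grid u'.

    Known samples land at (lam*y, lam*x); every other entry is None and
    marks a re-sampling point that still has to be interpolated.
    """
    m, n = len(u), len(u[0])
    u_prime = [[None] * (lam * n) for _ in range(lam * m)]
    for y in range(m):
        for x in range(n):
            u_prime[lam * y][lam * x] = u[y][x]
    return u_prime


def resampling_points(u_prime):
    """List the (row, col) positions of u' that are still undefined."""
    return [(r, c) for r, row in enumerate(u_prime)
            for c, v in enumerate(row) if v is None]


u = [[1, 2],
     [3, 4]]
u_prime = geometric_transform(u, 2)
for row in u_prime:
    print(row)
print(len(resampling_points(u_prime)), "re-sampling points")
```

With this corner-aligned layout, the last λ − 1 rows and columns have no known sample below or to the right of them, so a practical implementation has to pad or replicate the border; how the paper handles borders is not stated here.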

The stencils \( {\mathbb G}{\mathbb s}_j \) give rise to thirty-three different interpolation kernels \( \Psi_k \), k = 1, 2, …, 33, of which only fifteen are shown in Fig. 2. The proposed technique divides the kernel into different interpolation regions as the high frequencies shift in different directions. Figure 2(a) shows the type of interpolation kernel that uses all interpolating pixels, i.e. a constant interpolation region. Figure 2(b) shows diagonal edges from the right, left, top and bottom directions that divide the interpolation area of \( \Psi_k \) into two different interpolation regions. Figure 2(c) shows various stencils \( {\mathbb G}{\mathbb s}_j \) in different directions based on high-frequency variations from the left side of the interpolation kernel only; similar variations can come from the right, top and bottom. Vertical and horizontal edges are shown in Fig. 2(d). In this way, the kernel \( \Psi_k \) chooses appropriate sampling pixels and excludes unsuitable ones. To ensure that all the sampling points inside a stencil of a particular interpolation kernel have a dispersion below a threshold θ, which avoids including a discontinuity inside the stencil and aliasing around the edges, the standard deviation \( \sigma_\Psi \) is computed as

where μ is the mean of the sampling points in the stencil \( {\mathbb G}{\mathbb s}_j \) associated with the selected kernel \( \Psi_k \), and \( {\mathbb v}_i \) represents the sampling points inside the stencil. If the standard deviation \( \sigma_\Psi \) of a particular stencil is less than the threshold θ, i.e. the dispersion among the sampling-point values is insignificant, then all the sampling points are appropriate to be included in the stencil \( {\mathbb G}{\mathbb s}_j \). In Fig. 2(a), the dispersion among all sampling pixels is less than θ, so all sixteen interpolating vertices are used to compute the re-sampling point. Figure 2(b) includes ten sampling points whose dispersion \( \sigma_\Psi \) is less than θ. The same procedure applies to all stencils \( {\mathbb G}{\mathbb s}_j \) associated with each type of kernel \( \Psi_k \). To preserve the texture of the interpolation region and the sharpness constraint across edge orientations, a weight is calculated for each sampling point. To do so, the distance of each sampling point \( {\mathbb v}_i \) from the re-sampling point ℙ is calculated as

\[ d_{{\mathbb v}_i} = \sqrt{d_x^2 + d_y^2} \tag{10} \]

where \( d_x^2 \) and \( d_y^2 \) are the squared differences between the sampling point (x, y) and the re-sampling point ℙ\( (x_p, y_p) \) along each axis, i.e. \( d_x^2 = (x - x_p)^2 \) and \( d_y^2 = (y - y_p)^2 \). The distances \( d_{{\mathbb v}_i} \) of all sampling points (computed with Eq. 10) inside the interpolation region of the kernel are summed as

\[ D_{Gv_i} = \sum_{i=1}^{n} d_{{\mathbb v}_i} \tag{11} \]

where \( D_{Gv_i} \) is the total distance of the sampling points in the interpolation region of the kernel. The weight of each individual pixel in \( {\mathbb G}{\mathbb s}_j \) associated with a kernel \( \Psi_k \) can be computed as

In order to compute the re-sampling point ℙ, the weighted average of the interpolation kernel is computed as

The experiments in Section 4 show that the re-sampled value ℙ is accurate: it preserves the geometrical regularities as well as the texture inside the high-frequency regions of the magnified image. The cross-correlation coefficient (CCC) statistics in Table 2 (Section 4.2.2) show that the zoomed image is highly correlated with the original details, and the qualitative results also confirm the high quality of the proposed technique. The proposed technique can be summarized as follows:

Algorithm:

1. Input: original LR image u of size m × n.
2. Geometrical transformation: obtain u' by transforming u λ times using Eq. (6).
3. Edge-directed interpolation:
   - 3.1 Pre-calculation steps: (i) define various stencils \( {\mathbb G}{\mathbb s}_j \) (interpolation regions) based on geometrical regularities; (ii) associate each stencil with a kernel \( \Psi_k \).
   - 3.2 Compute the weight of each sampling point in stencil \( {\mathbb G}{\mathbb s}_j \) associated with \( \Psi_k \) using Eqs. (10), (11) and (12).
   - 3.3 Compute the value of the re-sampling point ℙ using Eq. (13).
   - 3.4 Repeat steps 3.2 and 3.3 on u' until all re-sampling points are calculated.
4. Output: image ũ of size M × N, i.e. n = N/λ, m = M/λ.
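Steps 3.1 to 3.3 can be illustrated for a single re-sampling point with the sketch below. It is a simplified stand-in, not the authors' implementation. It works on the 4 × 4 neighbourhood of sixteen sampling points, uses only seven hypothetical stencil shapes instead of the thirty-three kernels, and measures \( \sigma_\Psi \) with the population standard deviation. Because the exact form of the weights in Eq. (12) is not given here, it substitutes plain inverse-distance weighting, \( w_i = (1/d_i) / \sum_j (1/d_j) \). The stencil list, the tie-breaking rule (prefer the stencil with the most samples, then the one lying closest to ℙ), the fallback when no stencil passes the threshold, and the value of θ are all assumptions.

```python
import math

# Hypothetical stencils over a 4x4 block of sampling points, as (row, col)
# offsets. The paper uses 33 kernels; these shapes only illustrate the idea.
FULL = [(r, c) for r in range(4) for c in range(4)]
STENCILS = [
    FULL,                                     # constant region (cf. Fig. 2a)
    [(r, c) for r, c in FULL if c <= 1],      # left of a vertical edge
    [(r, c) for r, c in FULL if c >= 2],      # right of a vertical edge
    [(r, c) for r, c in FULL if r <= 1],      # above a horizontal edge
    [(r, c) for r, c in FULL if r >= 2],      # below a horizontal edge
    [(r, c) for r, c in FULL if r + c <= 3],  # one side of a diagonal (10 points)
    [(r, c) for r, c in FULL if r + c >= 3],  # other side of that diagonal
]


def std_dev(values):
    """Population standard deviation, used as the sigma_Psi dispersion test."""
    mu = sum(values) / len(values)
    return math.sqrt(sum((v - mu) ** 2 for v in values) / len(values))


def resample(patch, p, theta):
    """Estimate the re-sampling point P from a 4x4 patch of known samples.

    patch: 4x4 list of intensities; p: (row, col) of P in patch coordinates,
    e.g. (1.5, 1.5) for the centre; theta: dispersion threshold.
    """
    def values(stencil):
        return [patch[r][c] for r, c in stencil]

    def mean_distance(stencil):
        return sum(math.hypot(r - p[0], c - p[1]) for r, c in stencil) / len(stencil)

    # Keep only stencils whose samples do not straddle a discontinuity.
    candidates = [s for s in STENCILS if std_dev(values(s)) < theta]
    if not candidates:  # assumption: fall back to the least dispersed stencil
        candidates = [min(STENCILS, key=lambda s: std_dev(values(s)))]
    # Assumption: prefer larger stencils, then the one lying closest to P.
    chosen = min(candidates, key=lambda s: (-len(s), mean_distance(s)))

    # Euclidean distance of every sampling point to P (cf. Eq. 10).
    dists = [math.hypot(r - p[0], c - p[1]) for r, c in chosen]
    if min(dists) == 0:  # P coincides with a known sample
        r, c = chosen[dists.index(0)]
        return patch[r][c]
    # Inverse-distance weights (stand-in for Eq. 12), normalised to sum to 1.
    inv = [1.0 / d for d in dists]
    total = sum(inv)
    # Weighted average of the chosen stencil's samples (cf. Eq. 13).
    return sum(w / total * v for w, v in zip(inv, values(chosen)))


flat = [[50] * 4 for _ in range(4)]
edge = [[0, 0, 100, 100] for _ in range(4)]
print(resample(flat, (1.5, 1.5), theta=10))
print(resample(edge, (1.5, 2.5), theta=10))
print(resample(edge, (1.5, 0.5), theta=10))
```

On the step-edge patch, points on either side of the edge are computed only from samples on their own side, so the edge stays sharp instead of being averaged into an intermediate gray level. Applying `resample` to every `None` entry of u', with the 4 × 4 block of known samples around each point, gives a slow but complete pure-Python analogue of step 3.4; border handling is left out.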

3.1 Applications

The proposed technique can be used to magnify a grayscale image by a factor λ; Fig. 3 shows a flow chart of the proposed scheme for grayscale images. For color images, the proposed technique uses the YCbCr color space. The benefit of YCbCr comes from the characteristics of human visual perception: the Y component denotes the luma, i.e. the brightness, Cb is the blue difference (B − Y) and Cr is the red difference (R − Y). The human eye is more sensitive to luma than to chrominance information. The proposed technique therefore gives less importance to the color information, whose signals are smoother than the luma signal; this effect is shown in Figs. 4 and 5. Thus the Cb and Cr components of the source image are magnified by bilinear interpolation [1, 2], which interpolates each re-sampling point from the four nearest mapped sample points by linear interpolation along each of the two dimensions. As assumed earlier, u is a two-dimensional source image; for an arbitrary re-sampling point (x, y) in u, the bilinear interpolation [1, 2] equations are:

Assume \( u_{YCbCr} \) is a color image and \( u_Y \), \( u_{Cb} \) and \( u_{Cr} \) denote its Y, Cb and Cr components, respectively. The proposed technique is applied only to the \( u_Y \) component of \( u_{YCbCr} \), while \( u_{Cb} \) and \( u_{Cr} \) are magnified with the bilinear interpolation equations above. Experimental results show that the zoomed image has appealing visual quality with this approach. The flow chart of the proposed technique for color images is shown in Fig. 4.
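The color pipeline can be sketched as below. The paper does not state which YCbCr variant it uses; the sketch assumes the full-range ITU-R BT.601 (JFIF) coefficients for 8-bit RGB. The two zoom routines are passed in as parameters (hypothetical names `luma_zoom` and `chroma_zoom`), so the proposed interpolator can be plugged in for Y and bilinear interpolation for Cb and Cr. The `replicate2` helper is only a placeholder so that the demo runs.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 (JFIF) conversion for 8-bit RGB values."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr (values are neither clipped nor rounded)."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return r, g, b


def zoom_color(rgb, luma_zoom, chroma_zoom):
    """Zoom Y with luma_zoom and Cb, Cr with chroma_zoom, then convert back.

    rgb is a 2-D list of (R, G, B) tuples; both zoom functions take and
    return 2-D lists of numbers and must produce the same output size.
    """
    ycc = [[rgb_to_ycbcr(*px) for px in row] for row in rgb]
    planes = [[[px[k] for px in row] for row in ycc] for k in range(3)]
    y, cb, cr = planes
    y_hr = luma_zoom(y)
    cb_hr, cr_hr = chroma_zoom(cb), chroma_zoom(cr)
    return [[ycbcr_to_rgb(y_hr[i][j], cb_hr[i][j], cr_hr[i][j])
             for j in range(len(y_hr[0]))]
            for i in range(len(y_hr))]


def replicate2(plane):
    """Placeholder zoom (pixel replication by 2) used only for the demo."""
    return [[v for v in row for _ in range(2)] for row in plane for _ in range(2)]


out = zoom_color([[(255, 0, 0), (0, 0, 255)]], replicate2, replicate2)
print(len(out), "x", len(out[0]))
print([round(c) for c in out[1][3]])
```

Keeping the zoom functions as parameters mirrors the split in Fig. 4: only the Y plane goes through the (more expensive) edge-directed interpolator.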

4 Experimental results and analysis

A range of standard image quality assessment methods was used to evaluate the zooming techniques. To assess the proposed technique properly, a number of experiments were conducted; the following sections describe the experimental results and analysis in detail.

4.1 Dataset

This sub-section describes the source of the images used for comparison. Images were taken from the websites of the Computer Vision Group (CVG), Department of Computer Science and Artificial Intelligence, University of Granada [15], and the Center for Image Processing Research (CIPR), Electrical, Computer, and Systems Engineering Department, Rensselaer Polytechnic Institute [18]. The images downloaded from CIPR are originally Kodak images in Raster file format with a resolution of 768 × 512. Most of the images downloaded from CVG are in PBM or PGM format with a resolution of 512 × 512. To make the size and format of the images consistent, we resized the Kodak images to 512 × 512 using the bicubic algorithm and converted all images to a single format, PGM. In this paper, fifty standard gray-scale images, each of size 512 × 512, were used for the quantitative analysis of the proposed technique.

4.2 Quantitative evaluation

This sub-section describes the procedure of the quantitative analysis. For comparison, six sub-images \( S_k = \{S_1, S_2, \ldots, S_6\} \), \( S_k \subset u \), each of resolution 128 × 128, are cropped at random from the original 512 × 512 source image u, as shown in Fig. 6. These sub-images are down-sampled by a factor λ = 0.5 to \( s_k = \{s_1, s_2, \ldots, s_6\} \). The reduced sub-images \( s_k \) are then zoomed by a factor λ = 2 to \( S'_k = \{S'_1, S'_2, \ldots, S'_6\} \) using the proposed technique as well as nearest neighbor (NN), bilinear, bicubic and two recent techniques, SpR [40] and IInfo [24]. The software for SpR and IInfo was provided by their authors; for the three classic interpolation methods, we used software implemented in MATLAB R2011b. This yields two sets of sub-images, \( S_k \) and \( S'_k \) (magnified by the mentioned zooming schemes to the size of \( S_k \)). Different evaluation metrics, discussed in detail in the next sub-section, are then applied to these two sets. The metric scores for the six sub-images are averaged to obtain a single value per image. Table 1 lists the image quality evaluation scores of each zooming technique for the fruit image, with the average score in bold. Figure 6 and Table 1 together summarize the computational procedure of the quantitative analysis, which was applied to fifty original source images, each of size 512 × 512. The algorithm provided by Jurio et al. [24] handles only gray-scale images: [24] gives no indication of how to magnify color images, and all of its experimental results are on gray-scale images. Yang et al. [40] use the YCbCr color space for color image magnification, but the SpR algorithm is applied only to the Y component; for the other two components, Cb and Cr, SpR uses the bicubic algorithm. Therefore, to compare the zooming schemes themselves, only gray-scale images were used for evaluation.
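The evaluation protocol above (random 128 × 128 crops, down-sampling by λ = 0.5, zooming by λ = 2, scoring against the original crop and averaging over the six crops) can be sketched as follows. The down-sampling filter is not specified in the text, so the sketch assumes plain decimation (keeping every second pixel). The helper names, the fixed random seed, the pixel-replication placeholder zoom and the synthetic demo image are illustrative assumptions.

```python
import random


def crop(img, top, left, size):
    """Cut a size x size sub-image out of img."""
    return [row[left:left + size] for row in img[top:top + size]]


def decimate(img, factor=2):
    """Assumed down-sampling: keep every `factor`-th row and column."""
    return [row[::factor] for row in img[::factor]]


def evaluate(img, zoom, metric, n_sub=6, size=128, seed=0):
    """Average metric(original, zoomed) over n_sub random sub-images."""
    rng = random.Random(seed)
    h, w = len(img), len(img[0])
    scores = []
    for _ in range(n_sub):
        top, left = rng.randrange(h - size + 1), rng.randrange(w - size + 1)
        s_k = crop(img, top, left, size)   # S_k, cropped from u
        s_small = decimate(s_k)            # s_k, lambda = 0.5
        s_zoomed = zoom(s_small)           # S'_k, lambda = 2
        scores.append(metric(s_k, s_zoomed))
    return sum(scores) / len(scores)


def mae(a, b):
    """Mean absolute error between two equal-sized 2-D lists."""
    n = len(a) * len(a[0])
    return sum(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb)) / n


def replicate2(plane):
    """Placeholder zoom (pixel replication) standing in for a real scheme."""
    return [[v for v in row for _ in range(2)] for row in plane for _ in range(2)]


image = [[(3 * r + 5 * c) % 256 for c in range(256)] for r in range(256)]
print(evaluate(image, replicate2, mae))
```

Passing the zoom scheme and the metric as functions lets the same loop produce every column of Tables 2 to 4; in the paper's setting the source images are 512 × 512.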

4.2.1 Image quality assessment metrics

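To compare the proposed technique with the other prominent zooming schemes mentioned above, three objective image quality assessment metrics were used: the cross-correlation coefficient (CCC) [5, 6], the mean absolute error (MAE) [2, 28, 36] and the peak signal to noise ratio (PSNR) [6, 24, 40]. CCC indicates the quality of a least squares fit to the original data: it measures how closely the zoomed sub-images \( S'_k \) computed by a particular zooming technique are related to the original sub-images \( S_k \) cropped from the source image u. Although a correlation coefficient can in principle range from −1 to 1, for zoomed images it lies in practice between 0 and 1; a value near zero indicates a poor magnification technique and a value near one a good one. CCC is computed between the source sub-images \( S_k \) and the corresponding zoomed sub-images \( S'_k \) as

\[ \sigma_{S_k S'_k} = \frac{1}{RC}\sum_{i=1}^{R}\sum_{j=1}^{C}\bigl(S_k(i,j) - \mu_{S_k}\bigr)\bigl(S'_k(i,j) - \mu_{S'_k}\bigr) \tag{15} \]
\[ \sigma^2_{S'_k} = \frac{1}{RC}\sum_{i=1}^{R}\sum_{j=1}^{C}\bigl(S'_k(i,j) - \mu_{S'_k}\bigr)^2 \tag{16} \]
\[ \sigma^2_{S_k} = \frac{1}{RC}\sum_{i=1}^{R}\sum_{j=1}^{C}\bigl(S_k(i,j) - \mu_{S_k}\bigr)^2 \tag{17} \]
\[ \mathrm{CCC}_k = \frac{\sigma_{S_k S'_k}}{\sqrt{\sigma^2_{S'_k}\, \sigma^2_{S_k}}} \tag{18} \]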

where R and C are the numbers of rows and columns, and \( \mu_{S_k} \) and \( \mu_{S'_k} \) are the means of the source sub-image \( S_k \) and the zoomed sub-image \( S'_k \), respectively. Equation (15) is a measure of association (covariance) between the two sub-images \( S_k \) and \( S'_k \), and Eqs. (16) and (17) are the variances of \( S'_k \) and \( S_k \), respectively. \( \mathrm{CCC}_k \) is the cross-correlation coefficient of the k-th sub-image, k = 1, 2, …, 6. The average of \( \mathrm{CCC}_k \) is calculated in Eq. (19) to obtain a single value for each image:

\[ \mu_{\mathrm{CCC}_k} = \frac{1}{n_s}\sum_{k=1}^{n_s} \mathrm{CCC}_k \tag{19} \]

where \( \mu_{\mathrm{CCC}_k} \) is the average of \( \mathrm{CCC}_k \) and \( n_s \) is the number of randomly cropped sub-images, i.e. \( n_s = 6 \) as shown in Fig. 6. \( \mu_{\mathrm{CCC}_k} \) is computed for fifty images downloaded from the open standard databases [15] and [18]. MAE is another image quality assessment metric and is computationally simple. It sums the magnitudes (absolute values) of the errors to obtain the ‘total error’ and then divides the total error by the size of the image. Equations (20) and (21) compute this error between the original luminance of \( S_k \) and the computed luminance of \( S'_k \); they were applied to the same fifty images. A larger MAE indicates a weaker magnification technique, and vice versa.

\[ \mathrm{MAE}_k = \frac{1}{R \cdot C}\sum_{i=1}^{R}\sum_{j=1}^{C}\bigl|S_k(i,j) - S'_k(i,j)\bigr| \tag{20} \]

where \( \mathrm{MAE}_k \) is the mean absolute error of the k-th cropped sub-image, k = 1, 2, …, 6, and R·C is the size of the sub-image. MAE follows the same approach as CCC: Eq. (21) averages \( \mathrm{MAE}_k \) to obtain a single value for each large image.

\[ \mu_{\mathrm{MAE}_k} = \frac{1}{n_s}\sum_{k=1}^{n_s} \mathrm{MAE}_k \tag{21} \]

where \( \mu_{\mathrm{MAE}_k} \) is the average value of \( \mathrm{MAE}_k \), \( n_s = 6 \) is the number of sub-images, and k runs from one to six. The peak signal to noise ratio (PSNR) assesses the quality of the magnified image. It is the ratio between the maximum achievable power of the signal and the power of the corrupting noise, expressed in decibels. The signal represents the original pixel information and the noise is the error introduced during magnification. PSNR approximates how a human observer would judge the quality of a magnification technique; a higher PSNR indicates a better magnification scheme, and vice versa. Equation (22) calculates the PSNR of each randomly cropped sub-image, where \( \mathrm{MSE}_k \) (Eq. 23) is the mean squared error between the sub-image and its zoomed version. \( \mathrm{MSE}_k \) is computed in two simple steps. First, the total square error is obtained by summing the individual squared errors, i.e. each error influences the total in proportion to its square rather than its magnitude. As a result, large errors have a comparatively larger impact on the total square error than small ones, so the total square error increases as the total error is concentrated in fewer but larger individual errors. Second, the total square error is divided by the size of the sub-image, which gives \( \mathrm{MSE}_k \). In Eq. (24), the PSNR values of the sub-images from Eq. (22) are averaged to obtain a single value for each source image.

\[ \mathrm{PSNR}_k = 10 \log_{10}\frac{f_m^2}{\mathrm{MSE}_k} \tag{22} \]
\[ \mathrm{MSE}_k = \frac{1}{R \cdot C}\sum_{i=1}^{R}\sum_{j=1}^{C}\bigl(S_k(i,j) - S'_k(i,j)\bigr)^2 \tag{23} \]
\[ \mu_{\mathrm{PSNR}_k} = \frac{1}{n_s}\sum_{k=1}^{n_s} \mathrm{PSNR}_k \tag{24} \]

where \( f_m \) in Eq. (22) is the maximum possible value of the input image data type (e.g. \( f_m = 255 \) for unsigned 8-bit integers and \( f_m = 1 \) for double-precision floating-point images scaled to [0, 1]). PSNR is computed with the same methodology as CCC and MAE.
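Equations (15) to (24) translate directly into code. The sketch below assumes the sub-images are equal-sized 2-D lists of gray levels and uses the 1/(R·C) normalisation in every sum; the function names are hypothetical. Averaging over the \( n_s = 6 \) sub-images (Eqs. 19, 21 and 24) is a plain arithmetic mean.

```python
import math


def _pixels(img):
    """Flatten a 2-D list into a 1-D list of pixel values."""
    return [v for row in img for v in row]


def ccc(s, s_zoomed):
    """Cross-correlation coefficient between S_k and S'_k (Eqs. 15-18)."""
    a, b = _pixels(s), _pixels(s_zoomed)
    n = len(a)
    mu_a, mu_b = sum(a) / n, sum(b) / n
    cov = sum((x - mu_a) * (y - mu_b) for x, y in zip(a, b)) / n
    var_a = sum((x - mu_a) ** 2 for x in a) / n
    var_b = sum((y - mu_b) ** 2 for y in b) / n
    return cov / math.sqrt(var_a * var_b)


def mae(s, s_zoomed):
    """Mean absolute error (Eq. 20)."""
    a, b = _pixels(s), _pixels(s_zoomed)
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def psnr(s, s_zoomed, f_m=255):
    """PSNR in dB (Eqs. 22-23); f_m = 255 for 8-bit data."""
    a, b = _pixels(s), _pixels(s_zoomed)
    mse = sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)
    return math.inf if mse == 0 else 10 * math.log10(f_m ** 2 / mse)


def average(scores):
    """Mean over the n_s sub-images (Eqs. 19, 21 and 24)."""
    return sum(scores) / len(scores)


original = [[10, 20], [30, 40]]
zoomed = [[12, 18], [30, 44]]
print(ccc(original, zoomed), mae(original, zoomed), psnr(original, zoomed))
```

Note that `ccc` divides by zero when either sub-image is constant, and `psnr` is infinite for a perfect reconstruction; a practical evaluation would need to handle both cases explicitly.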

The proposed technique was also evaluated with the Mean Opinion Score (MOS), a subjective criterion based on user studies. This method of assessment was used by Furini et al. [8] and others [9, 11]. The source image and the zoomed images (magnified by the proposed scheme and the other techniques) were presented to three users, who were asked to rate the quality of each magnification technique on a scale from 1 (bad quality) to 5 (good quality).

4.2.2 Quantitative results and discussion

In this sub-section, the proposed technique is compared with five zooming schemes: NN, bilinear, bicubic, SpR [40] and IInfo [24]. NN, bilinear, bicubic and IInfo are interpolation based algorithms, and SpR is a machine learning based algorithm. Table 2 shows the CCC scores (\( \mu_{\mathrm{CCC}_k} \)) for twenty-five images, and its bottom row gives the average score over all fifty images. The proposed zooming scheme consistently achieves the highest score, and the fifty-image average confirms its high quality. SpR and IInfo have relatively low values, close to those of NN, although their visual results are better than those of NN; IInfo has the lowest cross-correlation coefficient. The overall result of the proposed technique is better than that of the bicubic algorithm and higher than those of the other zooming techniques. This consistently higher CCC (\( \mu_{\mathrm{CCC}_k} \)) indicates that the proposed technique is more effective than the other zooming schemes and has a high correlation with the original details.

The MAE results (\( {\mu_{{MA{E_k}}}} \)) are listed in Table 3. The average over fifty images in the last row of Table 3 shows that the proposed technique produces the minimum error when computing the re-sampling points. Owing to its edge-orientation adaptive property, the signal error of the proposed technique is lower than that of bicubic as well as the other algorithms.
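
MAE and the fifty-image averages can be sketched as follows, assuming a plain arithmetic mean of absolute pixel differences per image and a plain mean of per-image scores for the bottom-row average. Both helper names, `mae` and `average_score`, are hypothetical, and the image pairs are toy data.

```python
def mae(original, magnified):
    """Mean absolute error between two same-size images given as lists of rows."""
    if len(original) != len(magnified) or any(
        len(ro) != len(rm) for ro, rm in zip(original, magnified)
    ):
        raise ValueError("images must have the same size")
    diffs = [abs(a - b) for ro, rm in zip(original, magnified) for a, b in zip(ro, rm)]
    if not diffs:
        raise ValueError("images must not be empty")
    return sum(diffs) / len(diffs)


def average_score(metric, pairs):
    """Arithmetic mean of metric(original, magnified) over a list of image pairs."""
    scores = [metric(o, m) for o, m in pairs]
    return sum(scores) / len(scores)


pairs = [
    ([[52, 55], [61, 59]], [[50, 55], [61, 63]]),  # MAE = (2 + 0 + 0 + 4) / 4 = 1.5
    ([[0, 0]], [[1, 3]]),                           # MAE = (1 + 3) / 2 = 2.0
]
print(average_score(mae, pairs))  # 1.75
```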

Table 4 shows the PSNR values (\( {\mu_{{PSN{R_k}}}} \)) for twenty-five images, with the average over fifty standard images in the last line. A careful assessment of the results in Table 4 demonstrates the superiority of the proposed technique. It maintains the strength of the magnified signal and is less prone to introducing noise than the other zooming schemes. This difference in PSNR (\( {\mu_{{PSN{R_k}}}} \)) indicates the higher accuracy of the proposed zooming scheme compared with the other magnification techniques.

Figure 7 illustrates the MOS of the evaluated zooming techniques for 20 standard images from the data set under consideration. The proposed technique consistently attains the highest MOS among these zooming schemes. This subjective assessment, based on the direct opinion of the users, shows that the proposed zooming technique is superior to the other zooming schemes under consideration. NN and bilinear were not included in the MOS evaluation because their visual results are poorer than those of bicubic, SpR, IInfo and the proposed technique. In Fig. 7, the numbers 1 to 20 on the x-axis represent the standard images obtained from the data sets discussed in sub-section 4.1.

4.3 Qualitative analysis

To evaluate the visual quality of the magnification techniques, we consider the seven parameters used by Wittman [37]:

(1) Geometric invariance: the relative size and shape of the objects in the picture should be preserved by the magnification technique.
(2) Contrast invariance: the overall contrast of the image and the luminance information of its objects must be maintained.
(3) Noise: the zooming technique must not introduce noise or bad artifacts, such as ringing artifacts near borders.
(4) Edge preservation: the edges and borders of the image must be preserved.
(5) Aliasing: jagged or staircase edges must not be introduced.
(6) Texture preservation: textured regions should not be smoothed or blurred by the zooming technique.
(7) Over-smoothing: unwanted piecewise-constant or blocking effects should not be introduced.

Figure 8 presents a few magnification results of the proposed technique. A cropped region (enclosed by a rectangle) of size 141 × 151 from the zebra image shown in Fig. 8 was magnified by a factor of four. Cropped regions of the Baboon and human eye images, of size 132 × 158 and 193 × 117 respectively, were also magnified by 4×. The visual quality shows that the proposed scheme satisfies the seven parameters [37] to a great extent and preserves the geometrical regularities of the source image in the magnified image. In Fig. 9, the proposed scheme is compared visually with Nearest Neighbor, Bilinear, Bicubic, SpR [40] and IInfo [24]. The visual results were evaluated carefully, and those of the proposed technique were found to be more appealing. The visual quality of SpR is also better than that of NN, bilinear, bicubic and IInfo, but the numerical results in Tables 2, 3 and 4 confirm the higher quality of the proposed work.

4.4 Execution time measurement

The execution time of the proposed scheme was also compared with that of NN, Bilinear, Bicubic, SpR and IInfo. All the algorithms were executed on an Intel Core(TM)2 Quad Q6600 processor @ 2.40 GHz with 6.00 GB RAM, using MATLAB R2011b on a 64-bit operating system. A cropped region of the baboon image of size 186 × 178 was zoomed by λ = 2 and λ = 4, and the corresponding execution times are given in Table 5. NN, bilinear and bicubic are the fastest algorithms. IInfo runs somewhat faster than the proposed technique, but the quantitative and qualitative results of the proposed scheme are better than those of IInfo. The running time of SpR is very high. Because of this high complexity, SpR is unsuitable for applications that need a real-time response, although it can be used for offline applications. The proposed technique offers a good combination of quality and execution time.
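
The measurement protocol can be mirrored in Python with `time.perf_counter`. This is a sketch only, and several of its choices are assumptions:

- The paper's timings were taken in MATLAB R2011b, so Python timings are not comparable with Table 5.
- The random array stands in for the baboon crop, taken here as 186 rows by 178 columns.
- Nearest-neighbour zooming is used only because it is short to write. Any zoom function with the same hypothetical `(img, lam)` signature can be passed in.

```python
import math
import random
import time


def nn_zoom(img, lam):
    """Nearest-neighbour magnification of a grayscale image by integer factor lam."""
    return [[row[c // lam] for c in range(len(row) * lam)]
            for row in img for _ in range(lam)]


def time_zoom(zoom, img, lam, repeats=3):
    """Best-of-`repeats` wall-clock time (seconds) of one call zoom(img, lam)."""
    best = math.inf
    for _ in range(repeats):
        start = time.perf_counter()
        zoom(img, lam)
        best = min(best, time.perf_counter() - start)
    return best


random.seed(0)
# Random stand-in for the 186 x 178 baboon crop (186 rows x 178 columns assumed).
crop = [[random.randrange(256) for _ in range(178)] for _ in range(186)]
for lam in (2, 4):
    print(f"NN, lambda = {lam}: {time_zoom(nn_zoom, crop, lam):.4f} s")
```

Taking the best of several runs reduces interference from other processes, which otherwise inflates single measurements.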

4.5 Advantages and limitations of the proposed scheme

Bicubic, bilinear and NN are non-adaptive interpolation algorithms. The qualitative and quantitative results of bicubic are better than those of NN and bilinear. However, because its interpolation is static, bicubic is still susceptible to unwanted artifacts such as blocking, blurring and aliasing. The experimental evaluation in Sections 4.2.2, 4.3 and 4.4 shows that IInfo is inferior to bicubic. The scores of the different image quality assessment metrics show the superiority of the proposed technique over bicubic and SpR, and its visual results also compete with those of SpR and bicubic. The proposed scheme improves on bicubic through its adaptive interpolation features. SpR does not match the results of the proposed scheme despite its much higher cost. Its high time complexity also rules it out for real-time applications, e.g. in theaters and cinemas. The proposed technique can easily be used for such real-time applications, since it delivers better results at lower cost. SpR also depends on several parameters, i.e. the size of the dictionary atoms trained from generic training images and the number of images in the training data set [40]. Its execution time and the quality of its visual results change when these parameters vary. The proposed scheme has no such dependency.

The proposed technique has one limitation: when computing re-sampling points, it cannot differentiate between valid image detail and noise. A noisy pixel is treated as genuine luminance information and is magnified along with the rest of the image. Because of this limitation, noisy pixels grow with a ripple effect when the source image is magnified by different magnification factors λ.
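
How a single noisy pixel spreads with λ can be illustrated with a simple linear interpolator. The sketch below uses plain bilinear interpolation as a stand-in; it is not the proposed multi-kernel scheme. It counts how many output pixels a single impulse at the centre of a flat 7 × 7 image contaminates. With the source coordinate mapped as i/λ, the impulse affects (2λ − 1)² output pixels, so the contaminated region grows quadratically with the magnification factor. The function name `bilinear_zoom` is hypothetical.

```python
def bilinear_zoom(img, lam):
    """Bilinear magnification by integer factor lam (source coordinate = i / lam)."""
    h, w = len(img), len(img[0])
    out = []
    for i in range(h * lam):
        y = min(i / lam, h - 1)
        y0 = int(y)
        y1 = min(y0 + 1, h - 1)
        fy = y - y0
        row = []
        for j in range(w * lam):
            x = min(j / lam, w - 1)
            x0 = int(x)
            x1 = min(x0 + 1, w - 1)
            fx = x - x0
            top = (1 - fx) * img[y0][x0] + fx * img[y0][x1]
            bottom = (1 - fx) * img[y1][x0] + fx * img[y1][x1]
            row.append((1 - fy) * top + fy * bottom)
        out.append(row)
    return out


noisy = [[0] * 7 for _ in range(7)]
noisy[3][3] = 255  # a single impulse-noise pixel in a flat black image

for lam in (2, 4, 8):
    zoomed = bilinear_zoom(noisy, lam)
    affected = sum(1 for row in zoomed for v in row if v > 0)
    print(f"lambda = {lam}: {affected} output pixels affected")  # 9, 49, 225
```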

5 Conclusions

The zooming scheme proposed in this paper produces high-quality super-resolution images while keeping the time complexity low, and it is easy to implement. The proposed edge-directed interpolation technique is based on a pre-calculated multi-kernel approach. Various stencils \( {\mathbb G} {\mathbb s}_j \) (interpolation regions) are defined on the basis of geometrical regularities, and each is associated with a kernel \( {\Psi_k} \). The weight of each sampling point in the stencil \( {\mathbb G} {\mathbb s}_j \) associated with \( {\Psi_k} \) is calculated, and each re-sampling point takes the weighted average of the pixels in the corresponding kernel. The resulting super-resolution images are of high quality and visually appealing. The experimental results also show that the proposed technique substantially reduces aliasing across sharp luminance variations while preserving the texture and smoothness of the image.
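
To make the stencil and kernel idea concrete, here is a toy Python sketch for the single re-sampling point at the centre of a 2 × 2 block of LR pixels during 2× magnification. Everything specific in it is hypothetical: the three stencils, the kernel weights, the threshold and the diagonal-difference test are illustrative stand-ins, not the paper's actual stencils \( {\mathbb G} {\mathbb s}_j \) or kernels \( {\Psi_k} \). The sketch shows only the structure: classify the local geometry, look up a pre-calculated kernel, and take the weighted average.

```python
# Hypothetical pre-calculated kernels: weights for the 2x2 LR neighbours
#   a b
#   c d
# of the re-sampling point at the centre of the block.
KERNELS = {
    "flat": (1, 1, 1, 1),       # no dominant direction: plain average
    "diag_main": (3, 1, 1, 3),  # edge runs along a-d: favour a and d
    "diag_anti": (1, 3, 3, 1),  # edge runs along b-c: favour b and c
}


def select_stencil(a, b, c, d, threshold=20):
    """Pick a stencil from the luminance variation along the two diagonals."""
    g_main = abs(a - d)  # small when an edge runs along the a-d diagonal
    g_anti = abs(b - c)  # small when an edge runs along the b-c diagonal
    if abs(g_main - g_anti) < threshold:
        return "flat"
    return "diag_main" if g_main < g_anti else "diag_anti"


def centre_value(a, b, c, d):
    """Weighted average of the 2x2 neighbours using the selected kernel."""
    weights = KERNELS[select_stencil(a, b, c, d)]
    pixels = (a, b, c, d)
    return sum(w * p for w, p in zip(weights, pixels)) / sum(weights)


print(centre_value(100, 102, 98, 100))  # 100.0 (smooth block, "flat" kernel)
print(centre_value(10, 200, 30, 10))    # 36.25 (dark a-d diagonal, "diag_main")
```

Because the kernels are fixed tables, each re-sampling point costs only one classification and one weighted sum. This is why a multi-kernel design can stay close to the cost of linear interpolation.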

In the future, we plan to develop a visual-attention-based interpolation scheme. To bring the time complexity close to that of the classic bicubic interpolation technique, the salient regions will be magnified by the proposed technique and the remaining regions by a low-cost linear interpolation scheme.
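
The planned attention-guided scheme amounts to a per-pixel dispatch between a costly interpolator and a cheap one. This minimal Python sketch assumes a precomputed boolean saliency mask on the LR grid and leaves open how saliency would be detected. Bilinear and nearest-neighbour sampling are placeholders chosen only to make the sketch runnable: the first stands in for the proposed technique, the second for the low-cost path. All function names are hypothetical.

```python
def nearest(img, y, x):
    """Cheap placeholder: nearest LR pixel to source coordinate (y, x)."""
    h, w = len(img), len(img[0])
    return img[min(int(y + 0.5), h - 1)][min(int(x + 0.5), w - 1)]


def bilinear(img, y, x):
    """Placeholder for the costly path: bilinear sample at (y, x)."""
    h, w = len(img), len(img[0])
    y0, x0 = int(y), int(x)
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    fy, fx = y - y0, x - x0
    top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def hybrid_zoom(img, lam, salient, fine, cheap):
    """Magnify img by integer factor lam, calling `fine` only where the
    underlying LR pixel is flagged salient and `cheap` everywhere else."""
    out = []
    for i in range(len(img) * lam):
        row = []
        for j in range(len(img[0]) * lam):
            y, x = i / lam, j / lam  # position on the LR grid
            interp = fine if salient[int(y)][int(x)] else cheap
            row.append(interp(img, y, x))
        out.append(row)
    return out


lr = [[0, 100], [100, 200]]
mask = [[True, False], [False, False]]  # only the top-left pixel is "salient"
for row in hybrid_zoom(lr, 2, mask, fine=bilinear, cheap=nearest):
    print(row)
```

The saving comes from invoking the costly interpolator only on the salient fraction of the output. The overall cost therefore approaches that of the cheap method as the salient area shrinks.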

References

Acharya T, Tsai P (2007) Computational foundations of image interpolation algorithms. ACM Ubiquity 8

Amanatiadis A, Andreadis I (2009) A survey on evaluation methods for image interpolation. Meas Sci Technol 20:104015–104023

Arcelli C, Brancati N, Frucci M, Ramella G, Baja GSD (2011) A fully automatic one-scan adaptive zooming algorithm for color images. Signal Process 91:61–71

Baker S, Kanade T (2002) Limits on super-resolution and how to break them. IEEE Trans Pattern Anal Mach Intell 24:1167–1183

Battiato S, Gallo G, Stanco F (2002) A locally adaptive zooming algorithm for digital images. Image Vis Comput 20:805–812

Chang CC, Chou YC, Yu YH, Shih KJ (2005) An image zooming technique based on vector quantization approximation. Image Vis Comput 23:1214–1225

Chen HY, Leou JJ (2010) Saliency-directed interpolation using particle swarm optimization. Signal Process 90:1676–1692

Ejaz N, Baik SW (2012) Video summarization using a network of radial basis functions. Multimedia Systems 18:483–497

Ejaz N, Mehmood I, Baik SW (2012) Efficient visual attention based framework for extracting key frames from videos. Signal Process-Image Commun. doi:10.1016/j.image.2012.10.002

Freeman WT, Pasztor EC, Carmichael OT (2000) Learning low level vision. Int J Comput Vis 40:25–47

Furini M, Geraci F, Montangero M, Pellegrini M (2010) STIMO: STIll and MOving video storyboard for the web scenario. Multimed Tools Appl 46:47–69

Gajjar PP, Joshi MV (2011) New learning based super-resolution: use of DWT and IGMRF prior. IEEE Trans Image Process 19:1201–1213

Gonzalez RC, Woods RE (2008) Digital image processing. Pearson Prentice Hall, New Jersey

Hou HS, Andrews HC (1978) Cubic splines for image interpolation and digital filtering. IEEE Trans Acoust Speech Signal Process 26:508–517

http://www.cambridgeincolour.com/tutorials/image-interpolation.htm

Hung KW, Siu WC (2009) New motion compensation model via frequency classification for fast video super-resolution, ICIP

Hwang JW, Lee HS (2004) Adaptive image interpolation based on local gradient features. IEEE Signal Process Lett 11:359–362

Irani M, Peleg S (1993) Motion analysis for image enhancement: resolution occlusion and transparency. J Vis Commun Image Represent 4:324–335

Ju Y, Lee YJ (2010) Nonlinear image upsampling method based on radial basis function interpolation. IEEE Trans Image Process 19:2682–2692

Jurio A, Pagola M, Mesiar R, Beliakov G, Bustince H (2011) Image magnification using interval information. IEEE Trans Image Process 20(11):3112–3122

Kim H, Cha Y, Kim S (2011) Curvature interpolation method for image zooming. IEEE Trans Image Process 20:1895–1903

Kim K, Kwon Y (2008) Example-based learning for single image super-resolution and JPEG artifact removal. Technical Report 173, Max Planck Institute

Kim C, Seong S, Lee J, Kim L (2010) Winscale: An image-scaling algorithm using an area pixel model. IEEE Trans Image Process 19:2682–2692

Li X, Orchard MT (2001) New edge-directed interpolation. IEEE Trans Image Process 10:1521–1527

Mallat S, Yu G (2010) Super-resolution with sparse mixing estimators. IEEE Trans Image Process 19:2889–2900

Ni KS, Nguyen TQ (2007) Image super-resolution using support vector regression. IEEE Trans Image Process 16:1596–1610

Press WH, Teukolsky SA, Vetterling WT, Flannery BP (2002) Numerical recipes in C++: The art of scientific computing. Cambridge University Press, New York

Shan Q, Li Z, Jia J, Tang CK (2008) Fast image/video upsampling. ACM Trans Graph (SIGGRAPH Asia)

Sun J, Zheng NN, Tao H, Shum H (2003) Image hallucination with primal sketch priors. IEEE Conf Comput Vis Pattern Recognit 2:729–736

Tam WS, Kok CW, Siu WC (2010) A modified edge directed interpolation for images. J Electron Imaging 19(013011):1–20

Tipping ME, Bishop CM (2003) Bayesian image super-resolution. In: Advances in Neural Information Processing Systems 15. MIT Press, pp 1303–1310

Willmott CJ, Matsuura K (2005) Advantages of the Mean Absolute Error (MAE) over the Root Mean Square Error (RMSE) in assessing Average Model Performance. Clim Res 30:79–82

Wittman T (2005) Mathematical techniques for image interpolation. Department of Mathematics, University of Minnesota

Xiong Z, Sun X, Wu F (2010) Robust web image/video super-resolution Interpolation. IEEE Trans Image Process 19:2017–2028

Lin YC, Liu YN, Chien SY (2010) Direction-adaptive image upsampling using double interpolation. Picture Coding Symposium (PCS), pp 254–257

Yang J, Wright J, Huang TS, Ma Y (2010) Image super-resolution via sparse representation. IEEE Trans Image Process 19:2861–2873

Acknowledgments

This research was supported by the Industrial Strategic Technology Development Program (10041772, The Development of an Adaptive Mixed-Reality Space based on Interactive Architecture) funded by the Ministry of Knowledge Economy (MKE, Korea).

Cite this article

Sajjad, M., Ejaz, N. & Baik, S.W. Multi-kernel based adaptive interpolation for image super-resolution. Multimed Tools Appl 72, 2063–2085 (2014). https://doi.org/10.1007/s11042-012-1325-4

DOI: https://doi.org/10.1007/s11042-012-1325-4