Abstract

The convergence of information and medical technologies has resulted in the emergence and active development of the ubiquitous healthcare (U-Healthcare) industry, which provides telepathology and anytime-anywhere wellness services. The main purpose of these wellness services is to provide health information that improves quality of life. Human skin is an organ that can be easily examined without expensive devices. In addition, interest in skin care products has recently been increasing rapidly, with a concomitant increase in their consumption. In this paper, we propose a new scheme for a self-diagnostic application that estimates the actual age of the skin from features in a skin image. Following dermatologists' suggestions, we examined the length, width, and depth of skin wrinkles and the features of the cells they enclose to evaluate skin age. Using our image processing method, we extracted detailed information from the skin surface. Our scheme uses the extracted information as features to train a support vector machine (SVM) and evaluates the age of a subject's skin. Evaluation showed that the proposed scheme was more than 90% accurate in analyzing the skin age of three different parts of the body: the face, neck, and hands. Therefore, we believe our model can be used as a standard or scale to measure the degree of damage or the aging process of the skin. This scheme has been implemented in our Self-Diagnostic Total Skin Care system, and the information obtained from this system can be used in various areas of medicine.

1 Introduction

The skin is the outermost part of the human body and is therefore easily visible to everyone. Thus, people aspire to have beautiful, smooth, and young-looking skin. Therefore, many cosmetic companies are trying to develop cosmetic products that can reduce the appearance of deep wrinkles on the skin. Although consumers use these products, there are no effective means of measuring the effect of these products on improving skin texture.

At present, the only reliable method is to get a dermatologist’s opinion, but the point of view of each dermatologist may be different. Dermatologists observe several visual features on a skin image to determine the condition of each patient’s skin. Among these visual features, the most significant ones are the length, width, and depth of wrinkles.

Currently, most dermatologists analyze a subject’s skin images and evaluate the condition of the skin based on their personal experience and expertise. This kind of subjective assessment can be inaccurate and inconsistent. Therefore, a clinically feasible, objective method (that is applicable to various fields) is needed to improve the validity of evaluating skin texture.

In this paper, we propose a wrinkle feature extraction and classification scheme for skin age evaluation. Our scheme can be used to evaluate the degree of improvement in skin condition. To arrive at an objective standard method for skin evaluation, we collected reliable skin-related clinical data from several dermatologists and accumulated ground-truth data to demonstrate the accuracy and objectivity of our study.

Skin age is defined as the age of human skin based on the state of several features associated with wrinkles. It can therefore differ considerably from a person's actual age. Four different images of skin are depicted in Fig. 1. Fig. 1a and b show the skin texture of a 10-year-old and a 50-year-old subject, respectively. In Fig. 1a, the skin texture has a finely split pattern, which is normal for teenagers. In Fig. 1b, the skin texture has a loosely split pattern, which is normal for people in their 50s. However, Fig. 1c and d show patterns opposite to each other. Therefore, to ascertain the age and condition of such skin, we need to evaluate the skin age.

2 Related work

Since the 1950s, various methods have been used to analyze skin surface topography [1, 6, 8, 10, 15–17, 19, 20]. According to the dimensionality of the measured parameters, these approaches fall into two categories: three-dimensional topography analysis (3DTA) and two-dimensional image analysis (2DIA). 3DTA requires negative or positive silicone replicas of the skin surface, which are then analyzed by profilometry (mechanical, optical, laser, transmission, or interference). It primarily involves the following roughness parameters: depth of roughness (Rt), maximal depth of roughness (Rm), mean depth of roughness (Rz), depth of smoothness (Rp), and mean roughness value (Ra) [1, 6, 10, 15, 16].

Rt is the peak-to-valley roughness of the whole profile, so a single feature can have a large impact on its value. For Rm and Rz, the profile length is divided into five equal segments and the peak-to-valley roughness is measured in each segment; Rm is the largest of these five values, and Rz is their mean. The depth of smoothness Rp is the mean distance of every point on the profile from the highest peak. Ra is the most widely used roughness parameter internationally. To compute Ra, a mean line is drawn through the center of the profile, and the area enclosed between the profile and this line, above and below it, is divided by the profile length; in other words, Ra is the arithmetic mean of the absolute deviations from the mean line. 3DTA is mainly used in clinical investigations of the local effects of cosmetic and medical substances, but it is limited by the relatively long time required for the replica to harden and for the measurement itself [8, 16] (Fig. 2).
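The roughness definitions above can be made concrete with a minimal Python sketch. It assumes the profile is a 1-D list of equally spaced height samples and that the five segments are formed by integer division of the sample count; the function name `roughness_parameters` is illustrative and not taken from any profilometry package.

```python
from statistics import mean


def roughness_parameters(z, n_segments=5):
    """Compute Rt, Rm, Rz, Rp and Ra for a 1-D height profile z.

    Assumption: z holds equally spaced height samples (illustrative sketch).
    """
    if len(z) < n_segments:
        raise ValueError("profile has fewer samples than segments")
    # Rt: peak-to-valley height over the whole profile
    rt = max(z) - min(z)
    # Split the profile into n_segments consecutive, non-empty segments
    bounds = [k * len(z) // n_segments for k in range(n_segments + 1)]
    ptv = [max(z[a:b]) - min(z[a:b]) for a, b in zip(bounds, bounds[1:])]
    rm = max(ptv)    # Rm: largest segment peak-to-valley value
    rz = mean(ptv)   # Rz: mean segment peak-to-valley value
    z_mean = mean(z)
    # Rp: mean distance of every point from the highest peak = max(z) - mean(z)
    rp = max(z) - z_mean
    # Ra: mean absolute deviation from the mean line (enclosed area / length)
    ra = mean(abs(v - z_mean) for v in z)
    return {"Rt": rt, "Rm": rm, "Rz": rz, "Rp": rp, "Ra": ra}
```

For a flat profile with a single 10-unit spike, Rt and Rm both equal 10 while Rz spreads the spike over five segments and gives 2, which illustrates why Rt and Rm are sensitive to a single feature.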

2DIA, on the other hand, does not require silicone replicas of the skin and can acquire a skin surface image quickly, which makes it suitable for routine measurement of skin surface topography. It primarily involves two parameters: tau and density. Tau represents the level of anisotropy, i.e., the percentage of furrows oriented in a different direction; the higher this level, the greater the anisotropy of the surface [6]. Density is the furrow density, which is quantified from the number of intersections between primary wrinkles [1, 6].

To detect skin wrinkles, Tanaka et al. employed a cross-binarization method to obtain a binary image from a digital skin image and then used a short straight-line matching method to detect wrinkles in the binary image and measure their length [9]. Their method works as follows: for each base line in the cross-binarized image, if more than 70% of its pixels are black, the line is considered part of a wrinkle. The system then creates a new base line starting from the end of the previous one and keeps extending the line in this way until it reaches the end of the wrinkle or the edge of the image.
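A simplified Python sketch of the base-line test is shown below. It is not Tanaka et al.'s implementation: it assumes horizontal base lines of a hypothetical fixed length `base_len` on a binary image stored as a list of rows (1 = black), and it only extends to the right, whereas a practical implementation would also need to handle other wrinkle orientations.

```python
def trace_horizontal_wrinkle(binary, row, col, base_len=5, ratio=0.7):
    """Follow a wrinkle rightwards from (row, col) with consecutive base lines.

    binary: list of rows, 1 = black (wrinkle) pixel, 0 = white.
    Assumption: base lines are horizontal and base_len pixels long.
    Returns the traced length in pixels (0 if the first base line fails).
    """
    width = len(binary[row])
    length = 0
    start = col
    while start < width:
        segment = binary[row][start:start + base_len]  # shorter at the image edge
        if sum(segment) / len(segment) <= ratio:
            break  # not "more than 70%" black: the wrinkle ends here
        length += len(segment)
        start += base_len  # the next base line begins where this one ended
    return length
```

For the row `[1]*9 + [0]*6`, the first base line is fully black, the second is 80% black, and the third is white, so the traced length is 10 pixels.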

Hayashi et al. implemented an age- and gender-estimation system based on facial images [13, 21]. They detected the wrinkles in a facial image by using a special Hough transform called the Digital Template Hough Transform (DTHT).

Hatzis proposed a method based on wrinkle replication [12]. In this method, wrinkles are replicated using a silicone-rubber dental impression material. The microtopography of the wrinkles in the negative replicas is then studied and photographed with a scanner or stereomicroscope under suitable lighting, so that the replica appears positive rather than negative. Hatzis applied the silicone material to his subjects' foreheads and left it for a few minutes to harden. This replication method provides only an imprint of the superficial picture of a wrinkle. Hatzis used a computer to analyze the pictures of the replicas.

In our previous work, we proposed a scheme to detect skin wrinkles by analyzing microscopic skin images and estimating their length and width [11]. In this scheme, the skin wrinkles were detected using the watershed algorithm, which was first introduced by Lantuejoul and Beucher for image segmentation purposes and has since been used with diverse images including X-ray images, road sign images, and aerial photographs [4]. The wrinkle length was estimated simply by counting the pixels on the wrinkles. To estimate the wrinkle width, we proposed a block thickening method. However, we observed that these methods had high error rates.

To improve the accuracy, we proposed a new method for calculating wrinkle length and width [2]. In the new method, we used a line sieving method for wrinkle length calculation and a morphological region growing method for wrinkle width calculation. To obtain more features, we proposed a wrinkle-depth estimation method based on the color difference between wrinkle and non-wrinkle regions, in contrast to Zou et al. [14], who measured the mean area of the superficial skin texture blocks formed where primary and secondary lines cross each other. Finally, we showed how to calculate skin age from these features using an SVM [22].

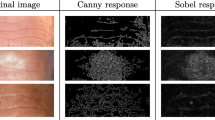

3 Pre-processing

In this section, we describe the pre-processing steps for detecting wrinkles in a digital microscopic image. Because of the limitations of the camera and interference from the light source, captured images may contain noise and vignetting. Vignetting causes captured images to have different color histogram distributions. Therefore, to prevent vignetting from affecting the result, we applied a gradation masking method and cropped the center area of the image. We also used contrast stretching to improve the contrast in the selected area. To eliminate noise, we first binarized the image using Otsu's method and then applied a de-noising technique.

3.1 Region of interest (ROI)

A region of interest (ROI) is a subset of samples within a dataset selected for a particular purpose. The illumination in a digital microscope image is concentrated around its center, so wrinkles outside the center area may be difficult to detect because of vignetting. We therefore cropped the center area of the image, where the illumination is best, set it as the ROI, and measured wrinkles only within that area. We empirically set the ROI size to 300 × 300 pixels because that area of the image showed the most homogeneous luminance.
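Cropping the centered ROI amounts to a slice. The minimal sketch below assumes the image is a list of pixel rows and centers the crop with integer division, so an odd margin leaves the extra pixel on the bottom or right; the 640 × 480 frame used in the usage note is only an example size, not the resolution of the microscope used in this study.

```python
def crop_center(image, size=300):
    """Return the size x size block at the centre of a 2-D image (list of rows)."""
    h, w = len(image), len(image[0])
    if h < size or w < size:
        raise ValueError("image is smaller than the requested ROI")
    # Integer division: any odd margin pixel ends up on the bottom/right side
    top, left = (h - size) // 2, (w - size) // 2
    return [row[left:left + size] for row in image[top:top + size]]
```

For a 640 × 480 frame, the ROI starts at row 90 and column 170.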

3.2 Image normalization

Since images can be captured under different lighting conditions, they may differ in brightness. Moreover, within a single image, the contrast between wrinkled and non-wrinkled areas is small. Therefore, to enhance the difference between these areas, we used the contrast stretching method, which increases image contrast by linearly rescaling the intensity range.

Contrast stretching is a process that expands the range of intensity levels in an image so that it spans the full intensity range of the recording medium or display device. The contrast stretching method is expressed by the following equation:

$$I'(x, y) = \frac{I(x, y) - \min(I)}{\max(I) - \min(I)} \times 255 \qquad (1)$$

where I(x, y) represents the original image, I'(x, y) the stretched image, and min(I) and max(I) the minimum and maximum intensities of the original image, respectively. The images used in this paper are 8-bit grayscale images, which gives a maximum intensity of 255.
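The linear mapping described here, which sends min(I) to 0 and max(I) to 255, can be sketched in Python as follows. The list-of-rows image representation, the rounding to integers, and returning an all-zero image when max(I) = min(I) (where the mapping is undefined) are assumptions of this sketch.

```python
def contrast_stretch(image, out_max=255):
    """Linearly rescale a 2-D grayscale image (list of rows) to [0, out_max]."""
    lo = min(min(row) for row in image)
    hi = max(max(row) for row in image)
    if hi == lo:
        # Assumption: a flat image maps to 0 (max(I) - min(I) would be zero)
        return [[0 for _ in row] for row in image]
    # Multiply before dividing so exact integer ratios stay exact
    return [[round((v - lo) * out_max / (hi - lo)) for v in row] for row in image]
```

An image whose intensities span only 50 to 200 is spread over the full 0 to 255 range, which widens the gap between wrinkle and non-wrinkle intensities.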

3.3 Binarization and de-noising

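To detect wrinkles, we first converted the normalized grayscale image to a binary image. Of the available methods, including the global threshold method and the partial threshold method, we chose Otsu's method, which performs histogram shape-based thresholding, i.e., the reduction of a gray-level image to a binary image with an automatically selected threshold. The method calculates the threshold using Eq. 2 and applies it to the image; it works best when the image histogram is bimodal. The equation is given as

$$\omega(t) = \sum_{i=0}^{t} P(i), \qquad \mu(t) = \sum_{i=0}^{t} i\,P(i), \qquad \mu = \sum_{i=0}^{255} i\,P(i),$$

$$\sigma^2(t) = \frac{\left[\mu\,\omega(t) - \mu(t)\right]^2}{\omega(t)\left[1 - \omega(t)\right]}, \qquad t^{*} = \arg\max_{0 \le t \le 255} \sigma^2(t) \qquad (2)$$

where i is a gray value ranging from 0 to 255; P(i), the ratio of the number of pixels with brightness i to the total number of pixels in the image; ω(t) and μ(t), the cumulative probability and cumulative mean of gray levels 0 to t; μ, the mean gray value of the whole image; σ²(t), the between-class variance for threshold t; and t*, the optimum threshold for the image. The desired threshold corresponds to the maximum σ²(t), which is equivalent to minimizing the within-class variance.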
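The threshold search of Eq. 2 can be sketched in plain Python. The sketch works with integer pixel counts to avoid accumulating floating-point error, assumes 8-bit integer pixels in a list of rows, and assumes, as Section 4.3 notes, that wrinkles are darker than the surrounding skin, so pixels at or below t* become wrinkle pixels (1).

```python
def otsu_threshold(image, levels=256):
    """Return t* maximising the between-class variance sigma^2(t) of Eq. 2."""
    hist = [0] * levels
    for row in image:
        for v in row:
            hist[v] += 1
    n = sum(hist)
    mu = sum(i * h for i, h in enumerate(hist)) / n   # global mean gray value
    best_t, best_var = 0, -1.0
    count_le_t, sum_le_t = 0, 0   # pixel count and gray-level sum for levels 0..t
    for t in range(levels):
        count_le_t += hist[t]
        sum_le_t += t * hist[t]
        if count_le_t == 0 or count_le_t == n:
            continue              # one class is empty: sigma^2(t) is undefined
        omega = count_le_t / n    # omega(t)
        mu_t = sum_le_t / n       # mu(t)
        var_between = (mu * omega - mu_t) ** 2 / (omega * (1.0 - omega))
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t


def binarize(image, t):
    """Assumption: wrinkles are dark, so pixels <= t are marked 1 (wrinkle)."""
    return [[1 if v <= t else 0 for v in row] for row in image]
```

For a perfectly bimodal image containing only the gray values 10 and 200, any threshold between them separates the classes, and binarization marks exactly the dark pixels.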

The binary image generated by Otsu's method may contain considerable salt-and-pepper noise, which may result in over-segmentation during wrinkle detection. Such noise usually consists of only a small number of adjacent pixels. Therefore, if a particle is small enough, it is considered noise and can be eliminated from the image. Since wrinkles are connected and occupy a significant portion of the image, noise can easily be distinguished from wrinkles.

To remove noise from the binary image, our scheme first scans the image for a pixel with the target color. It then searches for unexamined neighboring pixels of the same color, applying this search recursively to the four neighbors (up, down, left, right) of each newly found pixel. The search stops when all connected pixels of the same color have been found. If the number of connected pixels is lower than a cutoff value c, the scheme considers them to be noise and removes them from the image. In addition, the scheme fills in the gaps left by removed pixels that are located inside the wrinkle area.
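The text describes a recursive four-neighbor search; the sketch below uses an equivalent iterative breadth-first flood fill, which avoids Python's recursion limit on large wrinkle components. The function name and the convention of flipping small components of either color (removing specks when `target` = 1, filling small gaps when `target` = 0) are assumptions made for illustration.

```python
from collections import deque


def remove_small_components(binary, target, cutoff):
    """Flip every 4-connected component of colour `target` with fewer than
    `cutoff` pixels to the opposite colour. Returns a new image."""
    h, w = len(binary), len(binary[0])
    out = [row[:] for row in binary]
    seen = [[False] * w for _ in range(h)]
    for sy in range(h):
        for sx in range(w):
            if seen[sy][sx] or binary[sy][sx] != target:
                continue
            # Iterative flood fill over the four neighbours (up, down, left, right)
            component = []
            queue = deque([(sy, sx)])
            seen[sy][sx] = True
            while queue:
                y, x = queue.popleft()
                component.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < h and 0 <= nx < w and not seen[ny][nx]
                            and binary[ny][nx] == target):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(component) < cutoff:   # smaller than c: treat as noise
                for y, x in component:
                    out[y][x] = 1 - target
    return out
```

Calling it once with `target=1` removes isolated specks, and calling it again with `target=0` fills small holes inside wrinkle regions.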

4 Wrinkle feature extraction

In this section, we will briefly explain the process of extracting wrinkle features from the 2D image. For more accurate analysis of wrinkles, we extracted various features including length, width, depth, and wrinkle-bordered polygons. These features were used to train our classification model that was used to determine skin age.

4.1 Wrinkle length estimation

To detect wrinkle regions in the skin image, we used the watershed algorithm, which segments an image into regions according to its topology. In our case, we focused on the border lines rather than the regions themselves, since the border lines correspond to the skin wrinkles. The watershed transformation produces one-pixel-wide border lines. By counting the pixels on these lines, we could determine the approximate lengths of the wrinkles. However, this method was not very accurate, because merely counting pixels is prone to errors such as miscounting the length of diagonal or crossing lines.

Therefore, we used the line sieving method to calculate wrinkle length. Figure 3 depicts the workflow of the line sieving method. The method calculates wrinkle length quickly and precisely. It was originally designed to count cross-line components quickly and easily. As can be seen from Fig. 4a, if a wrinkle is a straight line, we can simply count its pixels to estimate its length. However, for cross lines, as in Fig. 4b, the actual length is not just four pixels. Hence, simply counting the pixels in the image would give an incorrect wrinkle length.

The line sieving method solves this problem by counting the pixels that form straight-line components along the x- and y-axes. As illustrated in Fig. 5, the scheme first executes the line sieving process for straight-line components along the y-axis, i.e., it counts and eliminates those pixels from the one-pixel line (Fig. 5b). Next, it carries out the same procedure for straight-line components along the x-axis (Fig. 5c). Finally, we count the pixels of the diagonal components left in the image (Fig. 5d) and multiply this count by a factor determined by the slope of the line to estimate their length. After the x- and y-axis sieving passes, some one-pixel islands may remain in the image. Since these islands are not connected to any wrinkle, we simply count them and add them to the straight-line component length.
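A minimal Python sketch of the three sieving passes follows. Several details are assumptions rather than the paper's specification: a "straight-line component" is taken to be a run of at least `min_run` = 2 pixels, each leftover pixel that has a diagonal neighbor contributes √2 (the step length of a 45° line), and any other leftover pixel is an island counted as 1.

```python
import math


def _sieve_runs(img, lines, min_run):
    """Count and erase straight runs of at least min_run pixels along each line."""
    total = 0
    for line in lines:
        run = []
        for pos in list(line) + [None]:   # None acts as an end-of-line sentinel
            if pos is not None and img[pos[0]][pos[1]]:
                run.append(pos)
                continue
            if len(run) >= min_run:       # straight component: count, then erase it
                total += len(run)
                for y, x in run:
                    img[y][x] = 0
            run = []
    return total


def line_sieve_length(skeleton, min_run=2, diagonal_step=math.sqrt(2)):
    """Estimate the total length of a one-pixel skeleton (list of rows, 1 = line)."""
    img = [row[:] for row in skeleton]    # work on a copy
    h, w = len(img), len(img[0])
    # Pass 1: y-axis (vertical) straight-line components
    length = _sieve_runs(img, [[(y, x) for y in range(h)] for x in range(w)], min_run)
    # Pass 2: x-axis (horizontal) straight-line components
    length += _sieve_runs(img, [[(y, x) for x in range(w)] for y in range(h)], min_run)
    # Pass 3: leftover pixels are diagonal components or one-pixel islands
    for y in range(h):
        for x in range(w):
            if not img[y][x]:
                continue
            diagonal = any(0 <= y + dy < h and 0 <= x + dx < w and img[y + dy][x + dx]
                           for dy in (-1, 1) for dx in (-1, 1))
            length += diagonal_step if diagonal else 1
    return length
```

A plus-shaped crossing of two 5-pixel lines yields 9 (the shared pixel is counted once), while a 4-pixel diagonal yields 4√2 rather than the 4 that plain pixel counting would report.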

Our scheme for wrinkle length estimation can be improved further. For example, line tracing is a more precise method. However, it requires too much computation, and hence, the feature extraction cost could be very high. Since we place more emphasis on cost-effectiveness and fast estimation, other methods requiring high computation cost were excluded.

4.2 Wrinkle width estimation

To measure wrinkle width, we need to recover the original wrinkle from its one-pixel skeleton image. For this purpose, we use a morphology-based region growing method, described in Algorithm 1. We first apply dilation to the wrinkle skeleton to expand the skeleton lines toward the actual wrinkle width. We then identify the wrinkle area within the dilated region and keep expanding the wrinkle region. During this step, we cross-compare the output image with the grayscale image to estimate the wrinkle regions. This process is repeated until the affected area reaches n pixels, where n is a parameter representing noise: regions smaller than n pixels are regarded as noise. Figure 2c shows an example of a wrinkle region recovered by our region growing method.
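The region growing step can be sketched as a dilation of the skeleton that is constrained by the grayscale image. This is a minimal sketch under assumptions not stated in the text: a pixel joins the wrinkle if its gray value is at most a hypothetical `dark_threshold` (for example the Otsu threshold), regions smaller than `min_area` (the noise parameter n) are discarded, and average width is taken as region area divided by skeleton length in pixels.

```python
def grow_wrinkle_region(skeleton, gray, dark_threshold, max_iter=50):
    """Grow the skeleton into neighbouring pixels that are dark enough."""
    h, w = len(gray), len(gray[0])
    region = {(y, x) for y in range(h) for x in range(w) if skeleton[y][x]}
    frontier = set(region)
    for _ in range(max_iter):
        # One 4-neighbour dilation step, kept only where the grayscale
        # image is dark (the cross-comparison with the gray image)
        new = set()
        for y, x in frontier:
            for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                if (0 <= ny < h and 0 <= nx < w and (ny, nx) not in region
                        and gray[ny][nx] <= dark_threshold):
                    new.add((ny, nx))
        if not new:
            break            # region stopped growing
        region |= new
        frontier = new
    return region


def average_width(skeleton, gray, dark_threshold, min_area):
    """Assumption: width = grown area / skeleton length (both in pixels)."""
    region = grow_wrinkle_region(skeleton, gray, dark_threshold)
    skeleton_len = sum(map(sum, skeleton))
    if skeleton_len == 0 or len(region) < min_area:
        return 0.0           # too small: treated as noise
    return len(region) / skeleton_len
```

A 5-pixel skeleton running down the middle of a 3-pixel-wide dark band grows to 15 pixels, giving an average width of 3 pixels.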

4.3 Wrinkle depth estimation

Careful analysis of skin images reveals that wrinkle regions can be identified by their darker color. Furthermore, deeper wrinkles show clearer color differences due to interference from the light source. On the basis of this color difference, we used the following equation to estimate the wrinkle depth:

$$D = \frac{1}{n} \sum_{i=1}^{n} \max_{p \in S_i} \left| I(p) - I(c_i) \right| \qquad (3)$$

where I(p) denotes the gray value of pixel p.

In Eq. 3, the skeleton is the center line of the wrinkle, from which we extract n random points. For each center point c_i ∈ C, where C = {c_1, …, c_n} is the set of sampled center points, we evaluate the maximum color difference within the group of points S_i, which contains all the points at distance r from c_i. We sum the maximum color differences of the n center points and average them, and we define this value as the depth of the wrinkle for the affected area. In a grayscale image, the color difference can be derived as shown in Fig. 6. Because the difference is color-based, it has an arbitrary unit. However, as we use these features only to train the classification model, we do not need an actual unit of depth.
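The depth estimate can be sketched directly from this description. The sketch assumes that S_i is the set of pixels whose Euclidean distance from c_i rounds to r, uses the absolute gray-level difference, and fixes the random seed so that the sampled points are reproducible; the parameter defaults n = 20 and r = 3 are hypothetical.

```python
import math
import random


def ring_offsets(r):
    """Assumption: S_i = pixels whose Euclidean distance from c_i rounds to r."""
    return [(dy, dx) for dy in range(-r, r + 1) for dx in range(-r, r + 1)
            if round(math.hypot(dy, dx)) == r]


def wrinkle_depth(gray, skeleton, n=20, r=3, seed=0):
    """Mean over n random skeleton points of the largest |I(p) - I(c_i)| on S_i."""
    h, w = len(gray), len(gray[0])
    centres = [(y, x) for y in range(h) for x in range(w) if skeleton[y][x]]
    if not centres:
        return 0.0
    rng = random.Random(seed)                # fixed seed: reproducible sampling
    sample = rng.sample(centres, min(n, len(centres)))
    offsets = ring_offsets(r)
    total = 0.0
    for cy, cx in sample:
        diffs = [abs(gray[cy + dy][cx + dx] - gray[cy][cx])
                 for dy, dx in offsets
                 if 0 <= cy + dy < h and 0 <= cx + dx < w]
        total += max(diffs, default=0)       # maximum colour difference on S_i
    return total / len(sample)               # average over the sampled points
```

The result is in gray levels, which matches the arbitrary depth unit described above.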

4.4 Wrinkle unit-cell analysis

Close scrutiny of skin images reveals that they are composed of closed regions separated by wrinkles. For example, Boyer et al. [5] showed that the skin of younger people has more cells in the skin wrinkle image than that of older people. On the basis of this observation, we propose a Polygon Mesh Detection Algorithm (PMDA) for detecting the number of cells and their average area. In the algorithm, each region bordered by the skeleton line is regarded as a cell; the algorithm counts the cells and computes the average cell area by dividing the total cell area by the number of cells detected in the image. From the analysis, we found that as the density decreased, the number of polygons also decreased. In other words, the number of polygons and the average cell area change as people age. While collecting the dataset, we verified that the number of cells and the average area depend on the subjects' ages. We also verified that as people age, their polygons become simpler and have wider borders. The PMDA can quantitatively calculate the average cell area and the number of cells per unit area.

The procedure used for cell detection is defined by Algorithm 2. As mentioned previously, the image is the skeleton graph obtained from the watershed algorithm. In Fig. 7a, each polygon enclosed by border lines represents one cell. To evaluate the number of cells and the size of each cell, we used a connected component detection scheme. Starting from a seed pixel, it recursively searches the four neighbors (up, down, left, right) and grows the current cell. The search stops at border lines and at the edge of the image; if it reaches the edge of the image, the current cell is discarded, because only cells lying wholly inside the image are counted. After all pixels have been examined, the scheme calculates the number of cells, their total area, and their average area, using only valid (fully included) cells.
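A minimal sketch of this connected component scheme is given below. As in the noise-removal step, it replaces the recursive search with an iterative breadth-first fill, and it assumes the skeleton is a list of rows with 1 on border lines; any cell that touches the image edge is dropped.

```python
from collections import deque


def detect_cells(border):
    """border: list of rows, 1 = skeleton (wrinkle) line, 0 = cell interior.
    Returns the areas (pixel counts) of cells lying wholly inside the image."""
    h, w = len(border), len(border[0])
    seen = [[False] * w for _ in range(h)]
    areas = []
    for sy in range(h):
        for sx in range(w):
            if border[sy][sx] or seen[sy][sx]:
                continue
            area, touches_edge = 0, False
            queue = deque([(sy, sx)])
            seen[sy][sx] = True
            while queue:
                y, x = queue.popleft()
                area += 1
                if y in (0, h - 1) or x in (0, w - 1):
                    touches_edge = True      # cell is cut off by the image boundary
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < h and 0 <= nx < w and not border[ny][nx]
                            and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if not touches_edge:             # keep only fully included cells
                areas.append(area)
    return areas
```

The number of cells is `len(areas)` and the average cell area is `sum(areas) / len(areas)`; for a 7 × 7 grid of border lines at rows and columns 0, 3, and 6, this gives four cells of 4 pixels each.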

5 Merging over-segmented cells

Cell information is crucial for accurate evaluation of skin age. Over-segmented cells can appear for many reasons; however, they should be eliminated before feature extraction to improve the accuracy of skin age estimation. In this section, we describe how over-segmented cells are detected in the cell image and propose a method that merges them with neighboring cells.

5.1 Detection of over-segmented cells

Correct wrinkle representation is crucial for the estimation of skin age.

To detect over-segmented cells, we first examine each cell in the image to see whether its border lines are closed. If they are, we treat the border lines as the cell's contour and record their coordinates. Figure 8 shows an example of detected cells and their contour lines. Only fully closed cells are considered in this step. In addition, we calculate the center of gravity of each detected cell, as shown in Fig. 9b. This point plays an important role in detecting over-segmented cells (Fig. 10).

To detect over-segmented cells in the skeleton image, we compute the convex hull of each cell, i.e., the smallest convex polygon that contains all of the cell's points; its vertices are a minimal subset of those points, connected by straight lines. After finding the convex hull of each cell, we check whether any cell has its center of gravity inside another cell's convex hull. If so, we consider the cell to be over-segmented. For instance, Fig. 9a shows the convex hulls constructed for the wrinkles in the sample image, and the detected over-segmented cells are indicated in blue in Fig. 9b.

Algorithm 3 details the steps for detecting over-segmented cells. For each closed cell, cel, in the image, we first identify its adjacent cells and compute their convex hulls. We then compare their areas. For each adjacent cell, adj, whose area is larger than that of cel, we check whether the center of gravity of cel lies inside the convex hull of adj. If it does, we add the pair <cel, adj> to list O.
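The detection test can be sketched in Python as follows. The paper does not say which convex hull algorithm it uses; this sketch substitutes Andrew's monotone chain algorithm. It also assumes that each cell is given as a list of (x, y) pixels, that cell area is the pixel count, and that the adjacency between cells is already known.

```python
def _cross(o, a, b):
    """z-component of (a - o) x (b - o); > 0 means b lies left of o->a."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices counter-clockwise."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def inside_convex(hull, q):
    """True if q is inside or on a counter-clockwise convex polygon."""
    n = len(hull)
    return n >= 3 and all(_cross(hull[i], hull[(i + 1) % n], q) >= 0 for i in range(n))


def centroid(pixels):
    """Centre of gravity of a cell given as a list of (x, y) pixels."""
    return (sum(x for x, _ in pixels) / len(pixels),
            sum(y for _, y in pixels) / len(pixels))


def detect_over_segmented(cells, adjacency):
    """cells: {id: [(x, y), ...]}; adjacency: {id: set of neighbouring ids}.
    Returns list O of (cel, adj) pairs; area is taken as the pixel count."""
    hulls = {cid: convex_hull(px) for cid, px in cells.items()}
    O = []
    for cel, pixels in cells.items():
        g = centroid(pixels)
        for adj in sorted(adjacency.get(cel, ())):
            # Only a larger neighbour whose hull contains cel's centroid counts
            if len(cells[adj]) > len(pixels) and inside_convex(hulls[adj], g):
                O.append((cel, adj))
    return O
```

For a U-shaped cell whose notch is filled by a smaller cell, the smaller cell's centroid falls inside the U's convex hull, so the pair (small, U) is reported.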

5.2 Merging over-segmented cells

After detecting all the over-segmented cells, we eliminate them by merging. Algorithm 4 details the steps involved in merging these over-segmented cells.

For each over-segmented cell pair <cel, adj> in list O, we first check whether the area of the first cell, cel, is less than the average cell area. If it is, we merge it with its neighbor, adj. Each merge is recorded in list M, which eventually contains all the merged cells. Finally, we update the cell list C based on list M to obtain the final set of cells. For instance, Fig. 11 shows the result of cell merging for the image in Fig. 4: in the uppermost box, one over-segmented cell is merged, while two over-segmented cells are merged in the lowermost box. After cell merging, we calculate the new contours and areas of the merged cells.
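The merging rule can be sketched with a union-find structure, which is a substitute data structure chosen here so that chains of merges resolve to a single group; it is not necessarily how Algorithm 4 stores list M. The sketch also assumes that the average cell area is computed over all cells before any merging.

```python
def merge_over_segmented(areas, O):
    """areas: {cell_id: area}; O: list of (cel, adj) pairs from detection.
    Returns (M, merged_areas), where M lists the merges actually performed."""
    avg = sum(areas.values()) / len(areas)   # assumption: average before merging
    parent = {c: c for c in areas}           # union-find forest

    def find(c):
        while parent[c] != c:
            parent[c] = parent[parent[c]]    # path halving keeps trees shallow
            c = parent[c]
        return c

    M = []
    for cel, adj in O:
        if areas[cel] < avg:                 # only small cells are merged
            a, b = find(cel), find(adj)
            if a != b:
                parent[a] = b                # cel joins adj's group
                M.append((cel, adj))
    merged = {}
    for c, area in areas.items():            # update list C: sum areas per group
        root = find(c)
        merged[root] = merged.get(root, 0) + area
    return M, merged
```

With areas A = 16, B = 9, C = 20, and D = 3 (average 12), the pairs (B, A) and (D, C) are merged, whereas a pair starting with C is skipped because C is larger than average.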

6 Classification

In this section, we explain the features that were used to train the classification model with a brief introduction to the classification model. We used a support vector machine (SVM) to test our scheme’s performance and accuracy.

6.1 Feature selection

To perform skin age estimation based on wrinkle features, we first took skin images of different parts of the body from 238 subjects in five age groups. We then collected 11 features to train the classification model. Six of these were observed features: total wrinkle length, average width, average depth, average cell area, total number of cells, and standard deviation of cell areas. The remaining five were personal questionnaire data: sex, age, skin care regimen, use of makeup, and smoking.

6.2 Support vector machine (SVM)

For feature classification, we used a nonlinear multi-class support vector machine (SVM) with a radial basis function (RBF) kernel. We defined five classes based on the subjects' age group: teens, 20s, 30s, 40s, and 50s. An SVM does not choose its kernel parameters automatically; the cost parameter C and the RBF parameter gamma must be set beforehand. We therefore used a grid search to determine their optimal values for classification.
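The grid search can be sketched in plain Python. The exponential grid C = 2^-5, 2^-3, …, 2^15 and γ = 2^-15, 2^-13, …, 2^3 is the range suggested in the LibSVM authors' practical guide, not a setting reported in this paper. `evaluate` is a hypothetical callback that would return the cross-validation accuracy for one (C, γ) pair, for example by running LibSVM's `svm-train` with `-t 2 -c C -g γ -v 5`.

```python
import math
from itertools import product


def rbf_kernel(x, z, gamma):
    """RBF kernel K(x, z) = exp(-gamma * ||x - z||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def libsvm_style_grid():
    """Exponentially spaced (C, gamma) candidates: 11 x 10 = 110 pairs."""
    cs = [2.0 ** k for k in range(-5, 16, 2)]
    gammas = [2.0 ** k for k in range(-15, 4, 2)]
    return list(product(cs, gammas))


def grid_search(evaluate, grid):
    """evaluate(C, gamma) -> accuracy (hypothetical callback).
    Returns (best accuracy, C, gamma); ties keep the first pair found."""
    best = None
    for c, g in grid:
        acc = evaluate(c, g)
        if best is None or acc > best[0]:
            best = (acc, c, g)
    return best
```

With real data, each call to `evaluate` trains and cross-validates one model, so the full grid costs 110 cross-validation runs; a coarse grid followed by a finer search around the best pair is a common way to reduce this cost.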

In addition, an SVM needs ground-truth data for training. To construct the ground truth for our SVM model, we had five dermatologists investigate magnified skin images and determine their skin age on the basis of wrinkle features alone. We used those data to train our SVM. Through a blind test by the dermatologists, we found that our classification model achieved accuracy greater than 90%.

7 Experiment

To evaluate the performance and accuracy of our scheme, we performed several experiments on our prototype system using the SVM. Our results are presented in this section.

7.1 System architecture

Figure 12 depicts the overall architecture of our prototype system. Our system uses three types of data: cell-related feature data, wrinkle-related feature data, and survey data. We first pre-processed the microscopic skin images, which included contrast stretching, noise reduction, and binarization. Next, we applied the watershed algorithm to obtain the skeleton image of the wrinkles and used this skeleton image to extract all wrinkle-related and cell-related features. For instance, to measure a wrinkle's length, width, and depth, we cross-compared the skeleton and grayscale skin images. For cell features, we used the skeleton image to detect all cells in a fixed region, and then detected and merged all over-segmented cells. Finally, we calculated the total number of cells and the average cell area. Our scheme used these features as input to an SVM to build a skin age classification model that outputs the estimated skin age.

7.2 Experimental setup

To collect skin images from subjects, we used the PSI Well-Being Aphrodite-I with a 60× lens. The dataset consisted of 834 face, neck, and hand skin images from 238 male and female subjects aged 10 to 50 years.

Our prototype system was implemented in C#. It used the EmguCV [7] library to process microscope images and LibSVM [18] as the classifier to estimate skin age. The system ran on an Intel® Core™ 2 Duo 2.8-GHz CPU with 4 GB of memory under the Microsoft Windows® 7 operating system.

To obtain the ground truth for the SVM, we solicited feedback from dermatologists on the wrinkle features and dataset. The dermatologists investigated the skin images without any prior information as to a subject’s age, gender or other details. Figure 13 shows the user interface of our system.

7.3 Wrinkle features

Extracted wrinkle features are useful only if they show a trend with age. Since we are not skin experts, we verified our feature results with dermatologists, who confirmed that the trends are consistent with age-related facts. Because we collected the skin images from randomly selected people, individual results may deviate from the trend. However, each result graph showed some trend with age.

The wrinkle features extracted were length, width, depth, the number of cells, and the average area of cells. Figure 14 shows the trend of wrinkle length versus subject age. The y-axis indicates the average wrinkle length in pixels, while the x-axis indicates the ages of the subjects. The region observed by the microscopic camera was fixed for all subjects; however, since the skin's elasticity decreases as people age, older subjects' skin was stretched out. Consequently, our graph indicates that wrinkle length decreases as people age.

Figure 15 shows how wrinkle width varies with age for various parts of the body. The width of hand wrinkles shows a clearly discernible trend: as people get older, the average width of their wrinkles increases. Wrinkles on the face and neck show different results because people get treatment or use cosmetic products to improve the skin in these areas. In Fig. 15, the x-axis indicates subjects' ages, while the y-axis indicates the average wrinkle width in pixels.

Figure 16 shows the results obtained using the PMDA after the merging, which counts the cells in the wrinkle image. As shown in the figure, the number of cells in the image decreases as the subject gets older. As we age, skin wrinkles get thicker and wider, and hence, the number of cells in the image decreases. In the graph, the y-axis indicates the number of cells, while the x-axis indicates subjects’ ages.

Figure 17 shows the average area of the merged cells for each subject. The graph shows a steady increase in the average area as people get older. In other words, with aging, the average area of the cells increases in the fixed region because the skin loses elasticity. In the graph, the y-axis indicates the average area of the cells, while the x-axis indicates the ages of the subjects.

7.4 Skin image classification

In this section, we discuss how we used the extracted features to train the SVM classifier to evaluate skin age. Table 1 shows the collected dataset. We divided the dataset based on sex and body part as aging of the skin is dependent on these two factors.

We then tested the relationship between the extracted features and classification accuracy using four different feature combinations: all features, all features without cell features, all features without the age feature, and all features without the age and wrinkle length features. Because previous results did not indicate a strong correlation between wrinkle length and aging, we omitted that feature in the last combination. We ran the SVM separately for each body part to determine which body part provides the strongest evidence of skin age. In Fig. 18, the y-axis indicates the accuracy of our scheme, while the x-axis indicates the feature combinations used in the experiment.

As can be seen from the graph, the age of a subject strongly influences the result of the classification. When a subject’s actual age was used as a part of the classification, the result was a biased skin age based on the subject’s actual age. In contrast, without the subject’s age as a parameter, skin age was estimated based solely on extracted features, and the accuracy of our results decreased. Thus, it is clear that the age feature is very important in skin age analysis even though it is essentially not a wrinkle-related feature. This indicates that in reality, wrinkle-related features show great diversity owing to various factors. Here, the age feature works as a filter and can narrow down the target.

In this paper, we proposed a skin analysis scheme that uses cell features, obtained with cell merging, for skin age classification. As the results show, using the cell features increased our accuracy by as much as 10%, which indicates that cell features are a good indicator of skin age.

The accuracy may have been higher for male subjects than for female subjects because female subjects receive more cosmetic treatment for their skin than male subjects do. In addition, when we collected the dataset, female subjects were wearing light makeup, which might have affected the analysis of their skin age.

8 Conclusion

In this paper, we proposed a skin feature extraction and processing model for statistical skin age estimation. To set up the model, we collected various wrinkle features from two-dimensional skin images and had dermatologists estimate the skin age of each image. These estimates were used as ground truth for training the SVM for age estimation. The wrinkle features we used include length, width, depth, average cell area, and number of cells, all of which can be calculated automatically from microscopic skin images. The trained SVM can serve as an objective standard model for estimating skin condition quantitatively. In extensive experiments, we observed more than 90% accuracy in skin age estimation for major body parts, which shows the effectiveness of our model. Even though dermatologists verified the ground truth for the SVM, we believe that it may still include some personal bias, which could be moderated by using more age-related skin features.

References

Akazaka S, Nakagawa H, Kazama H, Osanai O, Kawai M, Takema Y, Imokawa G (2002) Age-related changes in skin wrinkles assessed by a novel three-dimensional morphometric analysis. Br J Dermatol 147:689–695

Beucher S, Lantuejoul C (1979) Use of watersheds in contour detection. In Proceedings of Int'l Workshop on Image Processing: Real-time Edge and Motion Detection/Estimation

Boyer G, Laquièze L, Le Bot A, Laquièze S, Zahouani H (2009) Dynamic indentation on human skin in vivo: ageing effects. Skin Res Technol 15:55–67

Choi Y, Kim K, Hwang E (2008) WASUP: a wrinkle analysis using microscopic skin image. In Proceedings of Int'l Conference on Ubiquitous Information Technologies & Applications

Burges CJC (1998) A tutorial on support vector machines for pattern recognition. Data Min Knowl Discov 2:121–167

Edwards C, Heggie R, Marks R (2003) A study of difference in surface roughness between sun-exposed and unexposed skin with age. Photodermatol Photoimmunol Photomed 19:169–174

EmguCV: cross-platform .NET wrapper to OpenCV. http://www.emgu.com/wiki

Fischer TW, Wigger-Alberti W, Elsner P (1999) Direct and nondirect measurement techniques for analysis of skin surface topography. Skin Pharmacol Appl Skin Physiol 12:1–11

Fujimura T, Haketa K, Hotta M, Kitahara T (2007) Global and systematic demonstration for the practical usage of a direct in vivo measurement system to evaluate wrinkles. Int J Cosmet Sci 29:423–436

Ilive D, Hinnen U, Elsner P (1997) Skin roughness is negatively correlated to irritation with DMSO, but not with NaOH and SLS. Exp Dermatol 6:157–160

Hatzis J (2004) The wrinkle and its measurement: a skin surface profilometric method. Micron 35(3):201–219

Hayashi J, Koshimizu H, Hata S et al (2003) Age and gender estimation based on facial image analysis. In KES 2003, LNAI 2774:863–869

Hayashi J, Yasumoto M, Ito H, Koshimizu H et al (2002) Age and gender estimation based on wrinkle texture and color of facial images. In Proceedings of Int'l Conference on Pattern Recognition 1:405–408

Kim K, Choi Y, Hwang E (2009) Wrinkle feature-based skin age estimation scheme. In Proceedings of Int’l Conference on Multimedia and Expo

Lagarde JM, Rouvrais C, Black D (2005) Topography and anisotropy of the skin surface with ageing. Skin Res Technol 11:110–119

Lagarde JM, Rouvrais C, Black D, Diridollou S, Gall Y (2001) Skin topography measurement by interference fringe projection: a technical validation. Skin Res Technol 7:112–121

Leveque JL, Querleux B (2003) SkinChip, a new tool for investigating the skin surface in vivo. Skin Res Technol 9:343–347

LIBSVM – A Library for Support Vector Machines. http://www.csie.ntu.edu.tw/~cjlin/libsvm

Nita D, Mignot J, Chuard M, Sofa M (1998) 3-D profilometer using a CCD linear image sensor: application to skin surface topography measurement. Skin Res Technol 4:121–129

Purba MB, Kouruis-Blazos A, Wattanapenpaiboon N, Lukito W, Rothenberg E, Steen B, Wahlqvist ML (2001) Can skin wrinkling in a site that has received limited sun exposure be used as a marker of health status and biological age? Age Ageing 30:227–234

Tanaka H, Nakagami G, Sanada H, Sari Y, Kobayashi H, Kishi K, Konya C, Tadaka E (2008) Quantitative evaluation of elderly skin based on digital image analysis. Skin Res Technol 14:192–200

Zou Y, Song E, Jin R et al (2009) Age-dependent changes in skin surface assessed by a novel two-dimensional image analysis. Skin Res Technol 15(4):399–406

Acknowledgments

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education, Science and Technology (2011-0026448) and by the MKE (Ministry of Knowledge Economy), Korea, under the ITRC (Information Technology Research Center) support program supervised by the NIPA (National IT Industry Promotion Agency) (NIPA-2012-C1090-1201-0008).

About this article

Cite this article

Choi, YH., Tak, YS., Rho, S. et al. Skin feature extraction and processing model for statistical skin age estimation. Multimed Tools Appl 64, 227–247 (2013). https://doi.org/10.1007/s11042-011-0987-7

DOI: https://doi.org/10.1007/s11042-011-0987-7