Abstract

Automatic modulation recognition of communication signals is widely used in many fields. However, recognizing the modulation type at low SNR is very difficult. Based on information entropy features and Dempster-Shafer evidence theory, a novel automatic modulation recognition method is proposed in this paper. Firstly, Rényi entropy and singular entropy are used to obtain the modulation features. Secondly, based on normal test theory, a novel basic probability assignment function (BPAF) is presented. Finally, Dempster-Shafer evidence theory is used as a classifier. Experimental results indicate that the new approach achieves a higher recognition rate at low SNR.

1 Introduction

As modern electronic surveillance systems become increasingly complex, the automatic modulation recognition of communication and radar signals has become more and more difficult. In addition, signals with different frequencies and different modulation modes are usually scattered over a wide frequency band. Traditional automatic modulation recognition, however, depends on limited prior knowledge and is therefore inflexible. Modern automatic modulation recognition technology thus needs not only stable and effective signal characteristics, but also a smart and fast classifier [1].

The key technologies of non-cooperative modulation signal recognition are modulation feature extraction and classifier design. Typical modulation features include instantaneous parameters [1], higher order cumulants [2], the cyclic spectrum [3], and fractal dimension [4,5,6,7]. Because conditions such as the signal and noise environment vary, the requirements on modulation features have become more and more strict. In recent years, information features have become a hot research topic. Entropy measures the uncertainty of the signal distribution and represents the complexity of the signal; information entropy therefore provides a theoretical basis for signal characterization [8]. In reference [9], the approximate entropy (ApEn) is used to classify continuous phase modulation, with adjustable parameters for calculating the ApEn indices. In reference [10], to address recognition in low-SNR environments, a new feature extraction method based on entropy cloud characteristics is presented. It first extracts the entropy of different modulated signals and then combines the entropy with cloud model theory. Experiments show that entropy cloud characteristics have better anti-noise performance. However, the method needs prior knowledge of the signals. In addition, information entropy has also been used in other fields, such as mechanical fault diagnosis [11, 12] and radar emitter recognition [13, 14]. In this paper, singular entropy [14] and Rényi entropy [15, 16] are used as modulation features.

D-S evidence theory is a method for describing uncertain problems: it uses a probability interval to represent both the degree to which information is unsure and the uncertainty of a proposition. D-S evidence theory has been widely used in feature recognition and expert systems. In reference [17], a joint detection method based on D-S evidence theory was proposed for superposition modulation. At the receiver, the joint detection recovers all users’ information. The experimental results indicate that it outperforms conventional minimum mean square error (MMSE) and MMSE-SIC detection with arbitrarily chosen modulation parameters. In reference [18], to reduce the impact of shadowing, deep fading and other problems, a new cooperative recognition method based on D-S evidence theory was proposed to correctly identify different modulated signals, such as 2ASK, 2FSK, 16QAM, 64QAM and OFDM. The experimental results indicate that it improves the average recognition performance and increases the number of identifiable signal types. In addition, D-S evidence theory has been used in target detection, classification and identification [19,20,21,22,23].

In this paper, the Rényi entropy and singular entropy of the modulated signal are first extracted as modulation features. Secondly, a method for obtaining the basic probability assignment function is proposed based on the normality of the entropy. Finally, D-S evidence theory is used as a classifier. In the simulation, the influences of the signal parameters and noise are considered, which better reflects an actual signal environment: the signal symbols are generated randomly, and Gaussian noise is added to the signal. Simulations carried out for different modulated signals demonstrate the effectiveness of the new method.

2 Preliminaries

2.1 Dempster-Shafer Evidence Theory

D-S evidence theory is shown as follows [24]:

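(1) Frame of Discernment (FD)

Suppose θ is the frame of discernment, which consists of N elements:

\[ \theta = \left\{ \theta_1, \theta_2, \cdots, \theta_N \right\} \]

Let P(θ) be the power set of θ, which can be expressed as:

\[ P(\theta) = \left\{ \phi, \{\theta_1\}, \cdots, \{\theta_N\}, \{\theta_1, \theta_2\}, \cdots, \theta \right\} \]

where ϕ is the empty set.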

(2) Basic Probability Assignment Function (BPAF)

In the FD θ, the mass function m maps the power set P(θ) to the closed interval [0, 1]:

\[ m: P(\theta) \to [0, 1] \]

The mass function m is subject to:

\[ m(\phi) = 0, \qquad \sum_{A \subseteq \theta} m(A) = 1 \]

(3) Combination Rule of Evidence Theory (CR)

In the FD θ, E1 and E2 are two pieces of evidence with corresponding mass functions m1 and m2. The D-S combination rule is then:

\[ m(A) = \frac{1}{1-k} \sum_{B \cap C = A} m_1(B)\, m_2(C), \quad A \ne \phi, \qquad k = \sum_{B \cap C = \phi} m_1(B)\, m_2(C) \]

Here, k is the conflict coefficient, which reveals the degree of conflict among the different pieces of evidence. The larger the value of k, the more the pieces of evidence conflict, and the more strongly the fusion result is affected.
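The combination rule described above can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in the paper: the function name `combine`, the representation of focal elements as `frozenset` objects, and the two-class masses are all assumptions chosen for the example.

```python
from itertools import product


def combine(m1, m2):
    """Dempster's rule for two mass functions keyed by frozenset focal elements."""
    fused = {}
    k = 0.0  # conflict coefficient: mass that falls on empty intersections
    for (b, mass_b), (c, mass_c) in product(m1.items(), m2.items()):
        a = b & c
        if a:
            fused[a] = fused.get(a, 0.0) + mass_b * mass_c
        else:
            k += mass_b * mass_c
    if k >= 1.0:
        raise ValueError("total conflict: the evidences cannot be combined")
    # Renormalise by 1 - k so that the fused masses sum to 1.
    return {a: v / (1.0 - k) for a, v in fused.items()}, k


# Hypothetical two-class frame with made-up masses (illustration only).
A = frozenset({"BPSK"})
B = frozenset({"MSK"})
AB = A | B
m1 = {A: 0.6, B: 0.3, AB: 0.1}
m2 = {A: 0.5, B: 0.4, AB: 0.1}
fused, k = combine(m1, m2)
```

With these made-up masses the conflict coefficient is k = 0.39, and the remaining mass of 0.61 is redistributed over the non-empty intersections. A value of k close to 1 signals that the two sources largely contradict each other, which is the situation in which the fused result becomes unreliable.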

2.2 Entropy Theory

2.2.1 The Rényi Entropy Based on Wigner-Ville Distribution

The Wigner-Ville distribution (WVD) has many excellent properties, such as time-shift invariance and frequency-shift invariance. However, it also has shortcomings, such as cross-term interference when the signal has multiple frequency components. Therefore, the smoothed pseudo Wigner-Ville distribution (SPWVD) is used in this paper, which is shown as follows:

Here h(τ) is a rectangular window and g(u) is a smoothing function. They should satisfy h(0) = g(0) = 1.

Rényi entropy is widely used to evaluate complexity, which is defined as follows:

Here, the parameter α is the order of the Rényi entropy.

For a two-dimensional continuous probability density distribution, the α-order Rényi entropy is defined as follows:

The time-frequency distribution of the signal is similar to a two-dimensional probability density function f(x, y). Therefore, the Rényi entropy of the WVD time-frequency distribution can be defined as follows:

If a signal has low complexity, its entropy is small; if a signal has high complexity, which depends on its modulation type, its entropy is much larger.
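As a rough illustration of how such an entropy value can be computed from a sampled time-frequency distribution, the sketch below treats a 2-D array as a discrete distribution. It assumes the common form R_α = log2(Σ p^α) / (1 − α) with the cells normalised to sum to 1, takes absolute values because a WVD can be negative, and uses α = 3 as a default; these choices and the function name `renyi_entropy` are assumptions, not details taken from the paper.

```python
import numpy as np


def renyi_entropy(tfd, alpha=3.0):
    """Renyi entropy (in bits) of a 2-D array treated as a discrete distribution."""
    if alpha == 1.0:
        raise ValueError("alpha = 1 is the Shannon limit and is not handled here")
    p = np.abs(np.asarray(tfd, dtype=float))  # assumption: magnitude of the TFD
    p = p / p.sum()                           # normalise so the cells sum to 1
    return float(np.log2(np.sum(p ** alpha)) / (1.0 - alpha))


# Two toy 8 x 8 "distributions": all energy in one cell vs. evenly spread.
concentrated = np.zeros((8, 8))
concentrated[3, 4] = 1.0
spread = np.ones((8, 8))

h_concentrated = renyi_entropy(concentrated)
h_spread = renyi_entropy(spread)
```

The concentrated array gives an entropy of 0 bits and the uniform array gives log2(64) = 6 bits, which matches the qualitative statement that more complex (more spread-out) distributions have larger entropy.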

2.2.2 The Rényi Entropy Based on Continuous Wavelet Transform (CWT)

The wavelet transform can be used to analyze nonstationary signals. Because the wavelet function has both high-frequency and low-frequency components, it can effectively cover the time and frequency domains. Compared with the Fourier transform, it can therefore describe local subtle features of the signal more finely.

If the signal is x(t) ∈ L2(R), the mother wavelet is ψ(t) ∈ L2(R), the CWT is \( \tilde{x}\left(a,b\right);\left(a,b\right)\in {R}^2 \), which is shown as follows:

Similar to the Rényi entropy based on the Wigner-Ville distribution, the Rényi entropy of the continuous wavelet transform can be defined as follows:
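A CWT-based Rényi entropy can be sketched with NumPy alone. The example below is only illustrative: the complex Morlet mother wavelet, its centre frequency `w0`, the truncation at ±4a, the use of the squared magnitude (scalogram) as the distribution, and α = 3 are all assumptions, since the wavelet and parameters used in the paper are not stated here.

```python
import numpy as np


def morlet_cwt(x, scales, w0=6.0):
    """CWT by direct correlation with a sampled complex Morlet wavelet.

    Each sampled wavelet (2 * ceil(4a) + 1 points) must be shorter than x.
    """
    x = np.asarray(x, dtype=float)
    coefs = np.empty((len(scales), x.size), dtype=complex)
    for i, a in enumerate(scales):
        half = int(np.ceil(4 * a))  # truncate the Gaussian envelope at +/- 4a
        t = np.arange(-half, half + 1)
        psi = np.exp(1j * w0 * t / a) * np.exp(-0.5 * (t / a) ** 2) / np.sqrt(a)
        # Correlating x with psi equals convolving with the time-reversed conjugate.
        coefs[i] = np.convolve(x, np.conj(psi)[::-1], mode="same")
    return coefs


def cwt_renyi_entropy(x, scales, alpha=3.0):
    """Renyi entropy (bits) of the normalised scalogram |CWT|^2."""
    energy = np.abs(morlet_cwt(x, scales)) ** 2
    p = energy / energy.sum()
    return float(np.log2(np.sum(p ** alpha)) / (1.0 - alpha))


n = np.arange(512)
tone = np.cos(2 * np.pi * 0.05 * n)  # toy test signal
scales = np.arange(2, 33)
coefs = morlet_cwt(tone, scales)
h_tone = cwt_renyi_entropy(tone, scales)
```

The direct convolution keeps the example dependency-free; for long signals or many scales an FFT-based implementation would be the more practical choice.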

2.2.3 The Singular Spectrum Entropy

Singular spectrum entropy provides a measure of the segmentation of the primitive signal. It reflects the indeterminacy of the energy of the signal segments under singular value decomposition. To define singular spectrum entropy, let L be the number of communication channels and let the sampled signal be {xi, i = 1, 2, ⋯, N}, where N is the number of samples, and suppose \( {X}_t=\left\{{x}_t^1,{x}_t^2,\cdots, {x}_t^L\right\} \) represents the received sequence.

Firstly, the signal is divided into segments of length M. Each segment is obtained from the previous one with a delay of l, so that the signal xi is divided into N − M segments, which form the (N − M) × M signal segmentation matrix A shown as follows.

Singular value decomposition is then applied to matrix A, and the distribution of the signal on the N − M basis vectors is obtained as {δi, 1 ≤ i ≤ N − M}. To put the singular values into the form of a probability distribution, they are normalized, which gives the weight of each singular value. The singular spectrum entropy is defined as follows:

Where Pi represents the weight of the i-th singular value.
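The procedure above (segment the signal, decompose the segment matrix, normalise the singular values, take the entropy) can be sketched as follows. The natural logarithm, the default delay of one sample, and the function name `singular_spectrum_entropy` are assumptions made for this illustration.

```python
import numpy as np


def singular_spectrum_entropy(x, m, delay=1):
    """Entropy of the normalised singular values of the segment matrix of x."""
    x = np.asarray(x, dtype=float)
    rows = (x.size - m) // delay  # N - M segments when delay = 1
    a = np.stack([x[i * delay: i * delay + m] for i in range(rows)])
    s = np.linalg.svd(a, compute_uv=False)  # singular values only
    p = s / s.sum()                         # weight of each singular value
    p = p[p > 0]                            # skip exact zeros to avoid log(0)
    return float(-np.sum(p * np.log(p)))


rng = np.random.default_rng(0)
h_constant = singular_spectrum_entropy(np.ones(256), m=16)
h_noise = singular_spectrum_entropy(rng.normal(size=256), m=16)
```

A constant signal produces a rank-one segment matrix, so almost all of the weight falls on one singular value and the entropy is close to 0. White noise spreads the weight over all M singular values, so its entropy approaches the upper bound ln(M).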

2.3 Normal Test Theory

The normality test is used to determine whether the data obey a normal distribution, or how closely they follow one. Common normality tests are D’Agostino’s K-squared test [25], the Jarque-Bera test [26], the Lilliefors test [27] and the moment method [28]. In this paper, the moment test is used to check normality [29].

The moment method uses the third-order and fourth-order moments of the sample data X, which give the skewness and kurtosis coefficients of the data. Normality is checked through these two properties: 1) the skewness of the sample data and 2) its kurtosis.

The skewness is shown as follows:

The kurtosis is shown as follows:

The standard deviations of the skewness and kurtosis are σe1 and σe2.

In the above formula, the notation n represents the sample size.

If the sample data are normally distributed, the skewness and kurtosis are both equal to 0. In practice, however, the sample skewness g1 and kurtosis g2 are not necessarily equal to 0. Therefore, the degree to which the data approximate a normal distribution should be calculated. In this paper, the u test is used for hypothesis testing: u1 is the test statistic for skewness and u2 is the test statistic for kurtosis.

According to u1 and u2, the u boundary value is shown in Table 1.
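The moment test can be sketched as below. Because the exact expressions are not reproduced here, the sketch assumes the widely used bias-corrected sample skewness and excess kurtosis together with their usual standard errors, and forms u1 and u2 as the ratio of each coefficient to its standard error. The function name `moment_normality_test` is hypothetical.

```python
import numpy as np


def moment_normality_test(x):
    """Return (u1, u2): skewness and excess kurtosis divided by their standard errors."""
    x = np.asarray(x, dtype=float)
    n = x.size  # sample size, must be larger than 3
    d = x - x.mean()
    s = x.std(ddof=1)
    # Bias-corrected sample skewness g1 and excess kurtosis g2 (assumed forms).
    g1 = n * np.sum(d ** 3) / ((n - 1) * (n - 2) * s ** 3)
    g2 = (n * (n + 1) * np.sum(d ** 4) / ((n - 1) * (n - 2) * (n - 3) * s ** 4)
          - 3.0 * (n - 1) ** 2 / ((n - 2) * (n - 3)))
    # Standard errors of g1 and g2 under normality.
    se1 = np.sqrt(6.0 * n * (n - 1) / ((n - 2) * (n + 1) * (n + 3)))
    se2 = np.sqrt(24.0 * n * (n - 1) ** 2 / ((n - 3) * (n - 2) * (n + 3) * (n + 5)))
    return g1 / se1, g2 / se2


rng = np.random.default_rng(1)
u1_sym, u2_sym = moment_normality_test([-2.0, -1.0, 0.0, 1.0, 2.0])
u1_exp, u2_exp = moment_normality_test(rng.exponential(size=500))
```

A perfectly symmetric sample gives u1 = 0, while a strongly skewed sample such as the exponential one gives |u1| far above the two-sided 5% boundary of 1.96, so normality would be rejected for it.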

3 Experiments

Step 1: Signal entropy feature extraction

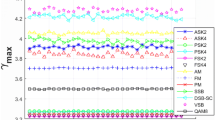

In this section, two different Rényi entropies and the singular spectrum entropy are used as signal features. The length of the signal x(t) is 2048 and the signal symbols are generated randomly. The symbol rate is fd = 1 kHz, and the sample rate is fs = 16 kHz. In the simulation, white Gaussian noise is added to the signal. The Rényi entropy is extracted through the SPWVD and CWT time-frequency transforms, and the singular spectrum entropy is extracted through signal decomposition. The results are shown in Fig. 1.

Fig. 1 shows that the three kinds of entropy can be used for classification under different SNRs.

The first picture is the Rényi entropy based on SPWVD. The entropy curves are clearly stable, with only small fluctuations across different SNRs. The QAM signals have a large feature distance from the other signals.

The second picture is the Rényi entropy based on CWT. It shows that 2FSK is well separated at low SNR, but its curve crosses those of the QAM signals. The entropy curves of BPSK and MSK are clearly separated when the SNR > 5 dB.

The third picture is the singular entropy. It separates the QAM signals well from 2FSK, BPSK and MSK, but the entropy curves of the 16QAM and 32QAM signals overlap completely.
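For reference, a test signal with the parameters given in Step 1 (2048 samples, symbol rate 1 kHz, sample rate 16 kHz, random symbols, additive white Gaussian noise) could be generated along the following lines. The sketch covers BPSK only, and the carrier frequency `fc`, the rectangular pulse shape and the function name `bpsk_with_awgn` are assumptions, since they are not specified in the text.

```python
import numpy as np

FS = 16_000     # sample rate in Hz
FD = 1_000      # symbol rate in Hz
N = 2048        # signal length in samples
SPS = FS // FD  # samples per symbol (16)


def bpsk_with_awgn(snr_db, fc=4_000.0, rng=None):
    """Return (noisy, clean) BPSK signals; fc is an assumed carrier frequency."""
    rng = np.random.default_rng() if rng is None else rng
    symbols = rng.integers(0, 2, N // SPS) * 2 - 1  # random +/-1 symbols
    baseband = np.repeat(symbols, SPS)              # rectangular pulses
    t = np.arange(N) / FS
    clean = baseband * np.cos(2.0 * np.pi * fc * t)
    # Scale the noise power so that the requested SNR is met on average.
    noise_power = np.mean(clean ** 2) / 10.0 ** (snr_db / 10.0)
    noise = rng.normal(0.0, np.sqrt(noise_power), N)
    return clean + noise, clean


noisy, clean = bpsk_with_awgn(5.0, rng=np.random.default_rng(0))
measured_snr_db = 10.0 * np.log10(np.mean(clean ** 2) / np.mean((noisy - clean) ** 2))
```

Returning the clean signal alongside the noisy one makes it easy to verify the realised SNR, which fluctuates slightly around the requested value because the noise is a finite random sample.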

Step 2: Normal test of the entropy features

The normal test step is shown as follows:

The normal test yields u1 and u2, which are shown in Table 2. The degree of normality can then be decided by checking them against the two-sided test values in Table 1.

Table 2 shows that ∣u∣ < 1.96, so the hypothesis that the entropy follows a normal distribution cannot be rejected at the 5% significance level (1 − P = 95%). The probability distribution curves of the entropy are shown in Fig. 3 and Fig. 4.

The stability of the entropy can be seen from its probability distribution curve: a “fat” curve means that the entropy has poor stability, and a narrow curve means better stability. Fig. 3 and Fig. 4 show that the SPWVD Rényi entropy and the CWT Rényi entropy have poor stability although they separate the signals well, whereas the singular spectrum entropy has better stability but poorer separation. As the SNR increases, the Rényi entropy based on CWT gives good recognition performance for BPSK and MSK; however, the 2FSK, 16QAM and 32QAM signals overlap.

Step 3: BPA acquisition

D-S evidence theory can be combined with other theories to solve related problems. Compared with the Bayesian classifier, D-S evidence theory is more flexible because it does not require prior knowledge, conditional probabilities or other such factors. To realize signal classification and recognition, the BPA function must be defined first.

The normal test shows that the entropy obeys a normal distribution. Therefore, the BPA can be calculated from the training feature set.

(1) Entropy values are calculated for a large number of signals at different SNRs. After the normality test, the mean μi and variance \( {\sigma}_i^2 \) are obtained.

(2) The entropy feature h is obtained, and the probability value pi is then calculated by formula (27).

(3) By normalizing m(p), the BPA m(s) is obtained.

For the three different types of entropy, three BPA values can be calculated as follows:

(4) Using the BPA functions, the combination rule of D-S evidence theory fuses the three different BPAs, giving the final probability assignment function ms(D) = {D1, D2,…,DK}. The fusion process is shown in Fig. 5.

(5) The maximum Di is found, and the i-th signal is taken as the recognized signal.
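The five steps above can be sketched end to end as follows. The sketch assumes that the probability value in step (2) is the normal density evaluated at the measured entropy h, and that every BPA assigns mass only to single classes, in which case Dempster's rule reduces to a normalised element-wise product. The class list, the training means and standard deviations, and the measured entropies are made-up numbers for illustration only, and the function names are hypothetical.

```python
import numpy as np


def gaussian_bpa(h, mu, sigma):
    """BPA over K classes from one entropy value h, using per-class normal models."""
    mu = np.asarray(mu, dtype=float)
    sigma = np.asarray(sigma, dtype=float)
    p = np.exp(-0.5 * ((h - mu) / sigma) ** 2) / (np.sqrt(2.0 * np.pi) * sigma)
    return p / p.sum()  # normalise so the masses sum to 1


def fuse(bpas):
    """Dempster's rule when every focal element is a single class."""
    fused = np.asarray(bpas[0], dtype=float)
    for m in bpas[1:]:
        joint = fused * m            # only identical classes intersect
        fused = joint / joint.sum()  # joint.sum() equals 1 - k
    return fused


classes = ["2FSK", "BPSK", "MSK"]
# Made-up training statistics (means, standard deviations) for three entropy features.
stats = [
    ([2.0, 3.0, 3.4], [0.3, 0.3, 0.3]),
    ([5.0, 6.5, 6.0], [0.5, 0.5, 0.5]),
    ([1.2, 1.8, 1.9], [0.1, 0.1, 0.1]),
]
measured = [3.0, 6.5, 1.8]  # made-up entropy values of one received signal

bpas = [gaussian_bpa(h, mu, sd) for h, (mu, sd) in zip(measured, stats)]
fused = fuse(bpas)
decision = classes[int(np.argmax(fused))]
```

If a measured entropy lies very far from every class mean, all densities can underflow to zero and the normalisation fails, so a practical implementation would need to guard against that case. Fusing three features sharpens the decision because a class must be plausible under every feature to keep a large fused mass.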

Step 4: Simulation results

In this paper, 2FSK, BPSK, MSK, 16QAM and 32QAM signals are used to evaluate the performance. The simulation results are given in Fig. 6 and Fig. 7.

As shown in Fig. 6 and Fig. 7, the recognition rate reaches 100% when the SNR is higher than 6 dB. The 2FSK and QAM signals are recognized well even at low SNR. For BPSK and MSK, the recognition rate follows the SNR: when the SNR decreases, the recognition rate also decreases. Both BPSK and MSK are digital phase-modulated signals, so they are highly similar. For this reason, the recognition rate of these two kinds of signals drops sharply at low SNR.

Table 3 and Table 4 are the judgment result matrices; each signal is input 100 times. The results show that misjudgments occur between the BPSK and MSK signals, and that the misjudgment rate decreases as the SNR increases.

4 Conclusion

In the modern complex environment, improving the recognition rate of modulated signals is a very hard task. In this paper, a novel algorithm based on entropy features and D-S evidence theory is proposed. A new BPA acquisition method based on the entropy and the normal test is proposed, and the D-S combination rule is then used to fuse the different pieces of evidence. Simulation results show that the method has good recognition performance. When the SNR is higher than 5 dB, the recognition rate is 90%. For the QAM signals and 2FSK, the recognition rate is higher than 90% even when the SNR is lower than -10 dB. However, for MSK and BPSK, the recognition rate is worse at low SNR. This means that the method is limited for pure phase-modulated signals, which is left to future research. Meanwhile, the stability of the entropy features and the accuracy of the normal test should be further improved. Finally, the conflict in the D-S combination rule should be considered in future work.

References

Nandi AK, Azzouz EE (1998) Algorithms for automatic modulation recognition of communication signals[J]. IEEE Trans Commun 46(4):431–436

Guo J-j, Hong-dong Y, Lu J, Heng-fang M (2014) Recognition of Digital Modulation Signals via Higher Order Cumulants[J]. Communications Technology 11:1255–1260

Zhu L, Cheng H-W, Wu L-n (2009) Identification of Digital Modulation Signals Based on Cyclic Spectral Density and Statistical Parameters[J]. J Appl Sci 2:137–143

Liu S, Fu W, He L et al (2017) Distribution of primary additional errors in fractal encoding method [J]. Multimedia Tools and Applications 76(4):5787–5802

Liu S, Pan Z, Fu W, Cheng X (2017) Fractal generation method based on asymptote family of generalized Mandelbrot set and its application [J]. Journal of Nonlinear Sciences and Applications 10(3):1148–1161

Li J (2015) A New Robust Signal Recognition Approach Based on Holder Cloud Features under Varying SNR Environment[J]. KSII Transactions on Internet and Information Systems 9(12):4934–4949

Liu S, Pan Z, Cheng X (2017) A Novel Fast Fractal Image Compression Method based on Distance Clustering in High Dimensional Sphere Surface [J]. Fractals, 25(4), 1740004: 1–11

Liu S, Lu M, Liu G et al (2017) A Novel Distance Metric: Generalized Relative Entropy [J]. Entropy 19(6):269. https://doi.org/10.3390/e19060269

Pawar SU, Doherty JF (2011) Modulation Recognition in Continuous Phase Modulation Using Approximate Entropy[J]. IEEE Transactions on Information Forensics & Security 6(3):843–852

Li J, Guo J (2015) A New Feature Extraction Algorithm Based on Entropy Cloud Characteristics of Communication Signals[J]. Math Probl Eng 2015:1–8

He Z-y, Yu-mei C, Qing-quan Q (2005) A Study of Wavelet Entropy Theory and its Application in Electric Power System Fault Detection[J]. Proceedings of the CSEE 5:40–45

Bo J, Dong X-z, Shen-xing S (2015) Application of approximate entropy to cross-country fault detection in distribution networks[J]. Power System Protection and Control 7:15–21

Lin-yi Z, Zhi-cheng L, He J-z (2009) Application of Hierarchy-Entropy Combination Assigning Method in Radar Emitter Recognition[J]. Command Control & Simulation 6:27–29

Jing-chao Li, Yu-long Ying (2014) Radar Signal Recognition Algorithm Based on Entropy Theory[C]. 2014 2nd International Conference on Systems and Informatics, 718–723. https://doi.org/10.1109/ICSAI.2014.7009379

Sucic V, Saulig N, Boashash B (2014) Analysis of local time-frequency entropy features for nonstationary signal components time supports detection[J]. Digital Signal Processing 34(1):56–66

Hang B, Yong-jun Z, Shen W, Xu Y-g (2013) Radar emitter recognition based on rényi entropy of time-frequency distribution[J]. Journal of Circuits and System 1:437–442

Rui Z, Si Z, He Z, et al (2015) A joint detection based on the DS evidence theory for multi-user superposition modulation[C]. IEEE International Conference on Network Infrastructure and Digital Content. IEEE, 390–393. https://doi.org/10.1109/ICNIDC.2014.7000331

Xin Y, Zhu Q (2013) Cooperative Modulation Recognition Method based on Multi-Type Feature Parameters and Improved DS Evidence Theory[J]. J Converg Inf Technol 8(11):258–266

Lei L, Wang X-d, Ya-qiong X, Kai B (2013) Multi-polarized HRRP classification by SVM and DS evidence theory[J]. Control and Decision 6:861–866

Lin Y, Wang C, Ma C et al (2016) A new combination method for multisensor conflict information[J]. J Supercomput 72(7):2874–2890

Luo X, Luo H, Jin-deng Z, Lei L (2012) Error-correcting Output Codes Based on Classifier’ Confidence for Multi-class Classification[J]. Science Technology and Engineering 22:5502–5508

Wen-sheng DENG, Xiao-mei SHAO, Hai LIU (2017) Discussion of Remote Sensing Image Classification Method Based on Evidence Theory[J]. Journal of Remote Sensing 4:568–573

Peng-fei NIU, Sheng-da WANG, Jian MA (2007) Radar target recognition based on Subordinate Function and D-S Theory[J]. Microcomputer Information 31:218–220

Jie X (2006) Fuzzy recognition of airborne radar based on D-S evidence theory[J]. Command Control & Simulation 4:33–36

D’Agostino R (1970) Transformation to normality of the null distribution of g1. Biometrika 57(3):679–681

Jarque C, Bera A (1980) Efficient tests for normality, homoscedasticity and serial independence of regression residuals. Econ Lett 6(3):255–259

Lilliefors H (1967) On the Kolmogorov-Smirnov test for normality with mean and variance unknown. J Am Stat Assoc 62:399–402

Deng X-m, Ying-sheng Z (1964) The introduction of a simple method for the normal test[J]. Chinese School Health 3:167–169

Xu P, Deng Y, Su X (2013) A new method to determine basic probability assignment from training data[J]. Knowl-Based Syst 46(1):69–80

Acknowledgments

This work is supported by the National Natural Science Foundation of China (61771154, 61301095), the Key Development Program of Basic Research of China (JCKY2013604B001), and the Fundamental Research Funds for the Central Universities (GK2080260148 and HEUCF1508).

We gratefully thank the reviewers for their very useful discussions.

Ethics declarations

Conflict of Interest

All the authors declare that there is no conflict of interest regarding the publication of this article.

Cite this article

Wang, H., Guo, L., Dou, Z. et al. A New Method of Cognitive Signal Recognition Based on Hybrid Information Entropy and D-S Evidence Theory. Mobile Netw Appl 23, 677–685 (2018). https://doi.org/10.1007/s11036-018-1000-8

DOI: https://doi.org/10.1007/s11036-018-1000-8