Abstract

Ranking systems such as The Times Higher Education’s World University Rankings and Shanghai Jiao Tong University’s Academic Rankings of World Universities simultaneously mark global status and stimulate global academic competition. As international ranking systems have become more prominent, researchers have begun to examine whether global rankings are creating increased inequality within and between universities. Using a panel Tobit regression analysis, this study assesses the extent to which markers of inter-institutional stratification and organizational segmentation predict global status among US research universities as measured by position in ARWU. Findings indicate some support for the proposition that both inter-institutional stratification and organizational segmentation predict global status.

The “global research university” (GRU) is the preeminent form of contemporary higher education organization (Marginson 2010).Footnote 1 GRUs represent a specific set of relations between higher education, states, and markets. These universities have cross-border connections and are integrated with the global political economy through ties with nation-states, corporations, non-governmental organizations, and individuals from around the world. Their researchers publish papers in international journals, and their faculty, staff, and students often move across national borders to affiliate with the university. Above all, GRUs generate a large quantity of research outputs that are recognized beyond national borders. As a result, despite the fact that GRUs remain situated in local and national contexts, these universities compete globally for status, labor, and resources. Some of the drive towards this form of organization can be attributed to the decisions of status-seeking students, faculty, and administrators. However, states also encourage global-scale competition through funding mechanisms and other incentives created to support policy that ties competitiveness in academic research with innovation and economic wellbeing (Slaughter and Cantwell 2012).

Ranking systems such as The Times Higher Education’s World University Rankings (THE) and Shanghai Jiao Tong University’s Academic Rankings of World Universities (ARWU)Footnote 2 simultaneously mark global status and stimulate global academic competition (Altbach 2007; Bowman & Bastedo 2011; Hazelkorn 2011; Salmi 2009). That is, on the one hand, inclusion in these rankings indicates a university’s global status as a GRU. On the other hand, rankings produce the metrics, or benchmarks, along which universities compete (Bruno 2009). These metrics, especially in the case of ARWU rankings, emphasize research output and prestigious research achievement awards. Insofar as universities attempt to attain status by stressing the organizational elements that are rewarded by these metrics, they emphasize research over and above other activities. Perhaps not surprisingly, then, ARWU can be used as an internationally comparable index for research output (Halffman and Leydesdorff 2010).

The emergence of GRUs and global ranking systems clearly demonstrates that university competition is global. Yet the existence of global competition does not imply the end of national and local competition (Marginson 2006). Competition in higher education is desired as an end in itself, sometimes obscuring embedded power relations (Naidoo 2011). Competition is a set of processes that distinguish the more ‘fit’ from the less ‘fit’ through discrimination in the allocation of status and resources. Competition in higher education tends to award resources and status on a zero-sum basis. Research funding is scarce, and when one researcher receives funding, other researchers cannot access those resources. Research and development (R&D) funds that are targeted for biotechnology departments are not available for physics or sociology. Likewise, when one university advances in a rankings system, it displaces another from that status position. The consequences of competition as an allocative device therefore include inter-institutional stratification and organizational segmentation (Slaughter and Cantwell 2012).

Inter-institutional stratification is hierarchical differentiation among universities both globally and nationally. One classic example of inter-institutional stratification is the California Master Plan, in which the University of California (UC) system is explicitly differentiated from the California State University system (CSU). UC campuses were designed to enroll only the top few percent of California’s secondary students and to conduct extensive research enterprises. CSU campuses, by contrast, were designed as a point of access for a wider range of students and to have more limited research endeavors (Kerr 2001). In unitary systems, like many in Western Europe, all universities are legally identical. Nonetheless, an informal system of inter-institutional stratification tends to emerge wherein the “best” students and research staff, and the most resources, flow into a handful of universities. For example, Münch and Baier (2012) document this phenomenon in Germany. We speak most directly about this informal, yet discernible, system of inter-institutional stratification by focusing on input resources as key lines of stratification.

We also identify two types of within-university segmentation. The first type of segmentation is the separation of sub-units, or the concentration of staff and programs, in particular areas of study. In effect, this first type of organizational segmentation refers to material asymmetries that separate some units from others within universities. Such segmentation might result in the advantaging of some fields, such as the applied sciences, relative to others. The second type of organizational segmentation includes the use of casual academic labor. This form of segmentation distinguishes sessional or adjunct lecturers and temporary or contingent research staff, including postdocs, from regular full-time academic staff and faculty. Universities effectively segregate one pool of workers from another by task definition, compensation, and status. Assuming global rankings like ARWU both indicate the most competitive universities in the world, and define the lines of global competition, and further assuming that separation of the material conditions of sub-units and increased division of labor is part of efficiency seeking within competition regimes (Slaughter and Cantwell 2012), we posit that indicators of inter-institutional stratification and organizational segmentation will predict position in the ARWU table.

Research Purpose

Despite substantial and growing interest in GRUs, there is little consensus on the organizational practices and processes that characterize these organizations.Footnote 3 We propose that one way of understanding GRUs is through the differentiation created by intense competition. The purpose of this study is to assess the extent to which markers of inter-institutional stratification and organizational segmentation predict global status among US research universities as measured by position in ARWU. Drawing both on a Foucauldian theory of government and on academic capitalism theory, we identify a set of markers that correspond with the concepts of inter-institutional stratification and organizational segmentation. We test whether these markers predict global status, as measured by ARWU position,Footnote 4 through a panel Tobit regression analysis. Our findings indicate strong but not complete support for the proposition that both inter-institutional stratification and organizational segmentation predict ARWU position. Before moving to the theory, data and methods, and findings of this study, a discussion of GRU within the context of current literature and policy is needed to fully situate our work.
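For readers unfamiliar with Tobit models, the following is a minimal sketch of the censored-normal log-likelihood that underlies this class of estimators. It is an illustration only and not the specification estimated in this study: it assumes a single regressor, a pooled sample with no university-specific panel effect, and left-censoring at a hypothetical floor value `lower` (for instance, if universities outside the published table were coded at a minimum score). The names `tobit_loglik`, `beta0`, `beta1`, and `sigma` are introduced here for illustration.

```python
import math


def norm_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def norm_logpdf(z):
    """Log of the standard normal density."""
    return -0.5 * z * z - 0.5 * math.log(2.0 * math.pi)


def tobit_loglik(y, x, beta0, beta1, sigma, lower=0.0):
    """Pooled Tobit log-likelihood with one regressor (illustrative only).

    The latent outcome is y* = beta0 + beta1 * x + e, with e ~ N(0, sigma^2).
    The observed outcome equals y* when y* > lower and equals `lower` otherwise.
    Assumption: no panel (university-level) effect is modeled here.
    """
    total = 0.0
    for yi, xi in zip(y, x):
        mu = beta0 + beta1 * xi
        if yi <= lower:
            # Censored observation: contributes P(y* <= lower).
            total += math.log(norm_cdf((lower - mu) / sigma))
        else:
            # Uncensored observation: contributes the normal density of y.
            total += norm_logpdf((yi - mu) / sigma) - math.log(sigma)
    return total
```

In practice such a function would be passed to a numerical optimizer to obtain maximum-likelihood estimates, and a panel version would add a university-level random effect that is integrated out of the likelihood.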

Global Research Universities

We begin this review with a brief discussion on terminology. We refer to Global Research Universities as opposed to other similar terms. Many influential organizations such as the World Bank (Salmi 2009) and prominent researchers (e.g. Altbach 2007) speak of world class universities. In many ways these terms are interchangeable; however, substantive differences underpin our decision to use GRU. We argue that “world class” is normatively laden,Footnote 5 implying that the most research productive universities, which are heavily concentrated in North America and Europe, are more virtuous than other institutions. Following Marginson (2010), we instead use the term GRU to denote universities that operate on a global basis, meaning that they draw students, staff, and resources transnationally, engage in cross-border research and educational activities, and generate research that is visible worldwide. With that said, we acknowledge that GRU status also has clear normative implications as indicated by the presence of such institutions at the top of ranking systems such as ARWU.

Much has been written about GRUs in recent years, and a complete review of this literature is beyond our scope. However, we have distilled four key features of GRUs as described in the literature. First, GRUs emphasize knowledge generation, and particularly prize-winning research in science, technology and other innovation-related fields. Research is often prioritized over teaching at GRUs (Altbach 2007; Cremonini et al. 2010; Deem, Mok and Lucas 2008; Geiger 2004; Salmi 2009).

Second, GRUs reflect the logics of Western, and especially American, higher education organization. For example, GRUs rely heavily on English in instruction and research (Altbach 2004; Marginson 2007). Third, this organizational script for GRUs also implies increased managerial capacity (Salmi 2009), which may include weaker academic governance, a more flexible and contingent academic workforce, and the right of managers to account for academic productivity (Deem and Brehony 2005; Rhoades and Sporn 2002; Slaughter and Cantwell 2012).

Fourth, GRU status is produced, at least partly, by global university ranking systems (Altbach 2007). These ranking systems insert new lines of competition into the relations between universities by facilitating competition within and across national borders. Because global rankings are central to this study we later discuss them more fully.

GRUs and Society

GRUs are understood as organizations central to a contemporary “knowledge society” in which the production, dissemination, and application of knowledge are assumed to be central to economic growth and expanded social welfare (Altbach & Balán 2007). Analyses of the knowledge society generally assume that GRUs contribute to economic growth. Research and its potential commercialization receive particular attention because activities in this area demonstrate intuitive connections to innovation and growth (Dill and van Vught 2010). Yet GRUs are not the only entities that maintain extensive research enterprises in knowledge societies. Corporate and government research and development has also expanded. As a result, it is assumed GRUs must collaborate and compete with an array of other knowledge producing organizations (Clark 1998; Etzkowitz and Leydesdorff 2000; Gibbons et al. 1994; Scott 2000; Scott 2011).

Despite these general trends toward contact with markets, states often have led the development of GRUs. Both global universities and the markets in which they compete generally result from governmental policy and state-sponsored competition regimes (Cremonini, Benneworth, and Westerheijden 2010; Horta 2009; Shin 2009). States have created new streams of resources, for example through centers of excellence initiatives and new or expanded research grant programs. States also have reformed regulation to create incentives that promote investment in research, internationalization, and university-industry partnerships, as well as established the competition regimes that drive universities to pursue ever more resources with which to generate knowledge (Slaughter and Cantwell 2012). Thus, while it is important to consider GRUs as exposed to market-like competition, public revenues and policies also shape GRUs’ structure and activities.

Universities themselves also contribute to the development and articulation of the GRU as an elite organizational form. Leading US research universities generally support initiatives to develop GRUs (Association of American Universities 2010). State policies aimed at creating GRUs tend to yield additional resources that flow disproportionately to already-advantaged universities. This pattern of resource allocation further separates “excellent” institutions from other universities within national systems. As Cremonini and colleagues (2010) put it, “Most policies to support excellence focus on vertical stratification, which in turn is based on research indicators” (p. 31). Vertical stratification is achieved by measuring the quantity and quality of research outputs as well as by differential institutional inputs such as student achievement and monetary resources. Global rankings systems, which have been designed for various purposes, constitute one mechanism by which the vertical stratification of GRUs is both created and articulated.

Global University Rankings

University rankings are not new. In the 1980s the magazine US News and World Report began publishing popular rankings for colleges and universities in the United States. In the domestic context, rankings influence both students’ enrollment choices (Monks and Ehrenberg 1999) and the resources that flow into institutions including tuition fees, alumni giving, and research grants (Bastedo and Bowman 2010). However, US-based rankings are not a strong predictor of research productivity (Porter and Toutkoushian 2006; Volkwein and Sweitzer 2006). Despite their limited ability to rate research capacity effectively, similar national ranking systems and league tables emerged around the world.

Beginning around the turn of the century, a host of global university rankings emerged. Where prior efforts such as the US News listings rated universities within a national context, global rankings drew dissimilar universities from diverse national systems into a single competitive field (Hazelkorn 2011; Marginson and van der Wende 2007; Tierney 2009). This permits not only cross-institutional comparison but also cross-national competition. Further, global rankings provide a set of metrics along which universities are able to compete. Put simply, universities could not compete across national borders until technologies were developed that permitted such competition (Bruno 2009).

There are now numerous world university rankings including Webometrics, QS Top Universities, the Leiden Rankings, and US News’ World’s Best Universities. Among these many systems, The Times Higher Education’s World University Rankings (THE) and Shanghai Jiao Tong University’s Academic Rankings of World Universities (ARWU) stand out for their influence (Hazelkorn 2011). These two rankings systems differ, however, in important ways. The World University Rankings is a commercial product of a British newspaper. ARWU, by contrast, was developed by higher education researchers at a Chinese university to assess the status of Chinese universities in the world. THE rankings include research measures, but also account for the internationalization of students and staff, industry income, and most notably several reputational components.Footnote 6 By contrast, ARWU primarily measures research paper output, especially in the sciences and in English language journals. ARWU rankings also include prestigious awards received by faculty and alumni, which can be considered a sort of reputational measure. Because awards recognize research achievement, however, these factors also may reflect research prowess.

To be sure, both rankings have been widely criticized for privileging English speaking universities over others, for valuing research over teaching, and for promoting a single type of university organization (Hazelkorn 2011; Marginson and van der Wende 2007; Tierney 2009; van der Wende 2008). We focus on ARWU ranking not because we believe it to provide a complete measure of a university’s status, then, but because of its broad influence over the decisions of policymakers and university officials. This ranking system is well recognized and has become a de facto authority on the positions of GRUs.

Do World Rankings Matter in the US?

As we have already stated, the purpose of this study is to assess the extent to which markers of inter-institutional stratification and organizational segmentation predict global status among US research universities as measured by position in ARWU. However, observers may question the salience of ARWU and other global university rankings to US higher education, which tends to be inward looking and concerned with domestic rankings. We acknowledge this critique, but argue that our assessment of ARWU with a sample of US universities is appropriate for at least two reasons.

Unlike some European and Asian countries, there have been no systemic policy efforts responding to global rankings in the US. Nevertheless, policy at both federal and institutional levels tacitly or explicitly acknowledges the importance of global academic competition. Hence, the first reason we argue the study of US universities is appropriate is that global competition is of growing importance in US policymaking. The Obama Administration placed higher education and academic R&D at the center of its national competitiveness agenda (Cantwell 2011a; Slaughter and Cantwell 2012). This includes making R&D investments in top universities so that they remain globally pre-eminent. Global engagement and competition, and position in global rankings, are increasingly important priorities in setting institutional policy at US research universities. The Association of American Universities (2010), a group of the top North American research universities, explicitly endorses efforts to enhance the global competitiveness of its member institutions. Michigan State University prominently describes itself as “a hub for international programs, centers, and events and worldwide partnerships” (MSU 2012, para 1). Arizona State University (ASU) boasts of its position in global rankings (ASU 2012) and, in a recent newspaper article, ASU president Michael Crow is cited as dismissing the domestic US News and World Report ranking as a “beauty contest” but praising ARWU for focusing on globally relevant research output (Ryman 2011). As these anecdotes demonstrate, rankings remain influential, and at least some policymakers and university leaders perceive a shift from domestic to global metrics.

Second, US research universities remain (more or less) the prototypical model that is normatively valorized around the world. Global university rankings use features of US universities to inform the metrics that result in league table ordering (Altbach 2007; Marginson and van der Wende 2007). Regardless of whether policy specifically shapes these universities to perform well in global rankings, there are potentially wide effects if markers of inter-institutional stratification and organizational segmentation predict ARWU status among US universities. Put more directly, because US universities are well represented at the top of global rankings, ARWU rankings may justify policymakers and organizational leaders who seek to implement reforms that also may increase stratification between universities and segmentation within universities in other academic systems. In this way, we argue that ARWU may normalize rather than directly cause stratification and segmentation. We develop this argument more fully in our theoretical discussion.

Theoretical Background

A firm theoretical base to explain GRUs has not yet developed. Nevertheless, existing scholarship identifies key aspects of these organizations. This research suggests that GRUs exist locally and nationally because states, markets, and educational organizations intersect in particular contexts. These national contexts explain GRUs as organizations developed by policymakers interested in utilizing universities as potential engines of economic growth. However, national contexts by definition do not offer spaces or metrics by which GRUs can compete globally for resources and status. We explain this dimension of GRUs by utilizing contemporary scholarship on governance technologies. In particular, we draw on the philosophy of Michel Foucault and its applications. These texts help us to develop a robust understanding of observation, measurement, and evaluation by independent bodies. Foucault’s (1990, 1984, 1979) work suggests that governance technologies both regulate and produce behavior, thereby implying that the technologies that assess GRU status – that is, ARWU and other rankings systems – simultaneously evaluate and shape the behavior of the organizations that they observe. Because of its generality, however, Foucault’s theory does not highlight the particular organizational characteristics that might correlate with high or low status for any particular GRU. We utilize the theory of academic capitalism to fill this explanatory gap. This framework, developed by Slaughter and Leslie (1997) to interpret the complex relationships between universities, states, and markets, suggests that competition-based allocative devices increase inter-institutional stratification by favoring the already advantaged. Simultaneously, academic capitalist regimes increase within-organization segmentation by advantaging some aspects of a GRU, such as science and engineering (S&E) fields, while ignoring or disadvantaging others. 
Through academic capitalism theory, we identify several areas of a university that may predict position in ARWU league tables.

GRUs and research policy

As noted above, previous research has identified at least four key features of GRUs. First, GRUs emphasize knowledge generation, particularly in science, technology and engineering. Second, GRUs reflect the logics of Western, and especially American, higher education organization. Third, the organizational script for GRUs also implies increased managerial capacity. Fourth, GRU status is produced, at least partly, by the very global university ranking systems that assess research universities.

Policymakers tend to cast research universities as vehicles for economic growth but acknowledge that GRUs compete with other organizations for resources and status (Dill and van Vught 2010; Gibbons et al. 1994). For example, research capacity among corporations, government agencies, not-for-profit organizations, and think tanks has also expanded. Within this context of increased academic competition and change in relations between the academy and society, numerous observers have described the rise of market-like systems of university resource allocation. These systems are heterogeneous, but generally designed to reward the ‘fittest’ or most ‘excellent’ university organizations.Footnote 7 In North America and Europe, new resource allocation systems involve both market competition and directed state support for selected areas of research (Slaughter and Cantwell 2012).

These patterns of resource allocation segregate ‘excellent’ institutions from other universities within national systems. This competition, we argue, increases inter-institutional stratification by advantaging some universities and disadvantaging others. Those universities deemed the ‘fittest’ or ‘most excellent’ generally possess extensive resources yet secure further wealth because they prevail in competitive settings. Other universities start with fewer funds and fewer credentialed laborers, and fall farther behind their advantaged peers by losing competitions for additional resources.

Targeted support for research also segments university organization internally. Research funding agencies and excellence initiatives tend to favor engineering, technology, and natural and physical science fields over other areas. In the US, for example, the National Institutes of Health (NIH) and National Science Foundation (NSF) together confer more than 78% of all federal funding for university-based research. In fact, the NSF reports that six federal agencies focused on science (including the NIH and NSF) fund 97% of all academic R&D from federal sources, leaving non-science based agencies, including the National Endowment for the Arts (NEA) and National Endowment for the Humanities (NEH), with about 3% of all federal funds for university-based research (National Science Board 2010, Chapter 5). Further, funds from industry to university-based research flow almost exclusively to the applied sciences in an effort to convert novel discoveries into products that can be produced and reproduced on a wide scale. For example, funding for biomedical research nearly doubled in inflation-adjusted dollars between 1994 and 2003 (Moses et al. 2005). This unequal flow of resources leads to within-organizational segmentation, which is especially prominent in the United States (Slaughter and Cantwell 2012).

It is not particularly important whether resources were allocated unequally with the intent to improve global university ranking positions – indeed, in the case of the US it is likely that they were not – so long as unequal allocations predict ARWU position. ARWU is accepted as an arbiter of global status (Saisana, d’Hombres, and Saltelli 2011). Consequently, organizational segmentation is legitimized (at least tacitly) if it is a positive predictor of ARWU position.

Technologies of Governance and Competition

A recent empirical assessment of the robustness of both the ARWU and THE global ranking systems found these systems reflect the founders’ goals rather than produce a sound statistical metric reflecting a broad consensus in university assessment. Nevertheless, these ranking systems are presented as if they had been “calculated under conditions of certainty” and, though controversial, are widely influential (Saisana, d’Hombres, and Saltelli 2011 p. 174). Foucault’s (1979) theory of government is useful in understanding the influence of such problematic metrics by highlighting the role of “disciplinary” technologies in both regulating and producing social behavior. This understanding of governance differs notably from traditional models of power. Rather than being embodied in a visible and charismatic ruler, disciplinary power works through “humble” mechanisms such as hierarchical observation by “specialized personnel” (Foucault 1979, p. 170, 174). Individuals such as physicians and teachers receive advanced training in the practice of supervision, allowing their gazes to define what forms of social conduct are considered normal. In other words, their observations codify and classify social activity. Through these ceaseless attentions, disciplinary power incites actors both to comply with certain regulations and to produce certain kinds of behavior (Foucault 1990).

Foucault’s understanding of disciplinary technologies casts ranking systems as mechanisms to evaluate and regulate universities. At the same time, however, rankings incite university officials to behave in the manner that seems likely to elicit favor from the rankings system (e.g., Bruno 2009; Pestre 2009). Rankings technologies therefore both establish the parameters under which competition occurs and generate behaviors that these parameters assess positively. The former of these dimensions proves crucial to an understanding of GRUs because most formal mechanisms of university governance remain local and national. In order for universities to compete globally, bodies must establish a set of standardized measurement technologies that compare and categorize university organizations in many different national contexts. Universities, in the Foucauldian understanding, can only compete if they are observed in the act of competition. Rankings systems provide just such a competitive space. In this way, universities do not compete directly against each other, but rather competition is mediated through assessment metrics that act as technologies that discipline actors’ behaviors.

A Foucauldian perspective also suggests that, beyond creating a space in which universities can compete for status, evaluative technologies such as rankings systems incite universities to behave in particular ways. For example, ARWU weighs research output heavily in its evaluation of universities’ performances. These rankings therefore confer status upon the most research productive institutions, simultaneously increasing the space between these institutions and their peers, on the one hand, and suggesting that research productivity offers the best mechanism through which to pursue status, on the other. Further, since ARWU (and other metrics) privileges research outputs in science and related fields – for example, publications in the journals Science and Nature – we assume that evaluative technologies orient actors’ focus towards S&E fields. Following Foucault, we suggest that resources and status are likely to flow through channels created by the evaluative technologies of ranking systems. The first of these potential flows heightens inter-institutional stratification by conferring status on the already-advantaged, while the latter suggests organizational segmentation by incentivizing universities to advantage the S&E fields as a component of the pursuit of status.

Despite these valuable insights, a focus on metrics as technologies of government does not help to identify specific institutional and organizational lines along which stratification and segmentation occur. What metrics will signal stratification between institutions? Which organizational units will be favored or disfavored by evaluative observation? In order to situate general insights about evaluative technologies within the specific context of GRUs, we turn to academic capitalism. This theory identifies markers of inter-institutional stratification and organizational segmentation.

Academic Capitalism

Academic capitalism identifies the mechanisms by which institutional and organizational structures link universities with the state, corporations, and interstitial organizations. Through complex negotiations and competition for resources, these entities create new circuits of knowledge in support of market-oriented research (Slaughter and Rhoades 2004). Academic capitalism provides a useful elaboration upon Foucauldian perspectives by positing particular policy and organizational channels through which observation technologies shape social behavior. As such, academic capitalism theory illustrates the mechanisms by which market-oriented competition regimes are established and reproduced. It also highlights the varied consequences of these regimes. We identify several of these outcomes of academic capitalism as ‘markers’ of inter-institutional stratification and organizational segmentation.

Direct state support for public colleges and universities in the US declined throughout the 1980s and 1990s (Rizzo 2006). Colleges and universities also receive indirect public support in the form of portable student financial aid awards that subsidize student tuition fees. Yet even this indirect state support for US higher education provides a smaller source of university support than do federal grants to support university-based research (College Board 2010; Yamaner 2009).Footnote 8 In other words, as public support for instruction declines as a share of total university expenditures, resource dependent universities become more reliant on competitive research grants and contracts from public sources and industry (Slaughter and Leslie 1997). The largest share of these resources flows to the upper strata of research universities in the national system. These already-advantaged universities may compound their financial advantages by using additional funds to hire additional faculty members or contingent laborers. These additional workers, in turn, may seek still more funds through application for further externally funded grants and contracts. As a result, stratification between universities, as measured both by financial and by human resources, may increase markedly around universities’ research functions. Moreover, the rhetoric of academic capitalism regimes typically valorizes competition and markets. As such, these policy environments imply a type of privatization, and so may mark an institutional preference for private rather than public universities (Slaughter and Rhoades 2004). Taken together, these elements of academic capitalism theory suggest that inter-institutional stratification among research universities is best measured through the consideration of externally-funded R&D expenditures, the number of full-time and contingent laborers, and a university’s public or private locus of control.

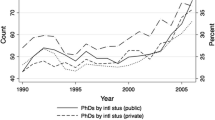

Academic capitalism also indicates new forms of organization that segment universities. Segmentation implies the increasing significance of boundaries between different units within the same university. Typically, though not always, segmentation results in the advantaging of some organizational sub-units and groups of employees relative to others (Slaughter and Cantwell 2012). We understand organizational segmentation in two ways. The first type of segmentation reflects the place of the different disciplines within a single university through special designation or enhanced resource allocation. In general, federal research funding for S&E fields far exceeds dollars available to university-based researchers in other areas. This tendency to favor the sciences proves particularly acute in the case of the life sciences (Britt 2010). For these reasons, we suggest that segmentation may prove evident in the number of S&E Ph.D. degrees awarded and in the maintenance of costly but prestigious units like medical schools. The second type of organizational segmentation reflects changes in academic labor practices in US higher education. Historically, US research universities relied on full-time, tenure-line faculty members to conduct a variety of tasks in the fields of instruction, research, and service. Increasingly, however, universities rely upon part-time academic workers who specialize in a particular task such as instruction or research (Schuster & Finkelstein 2006). Academic capitalism theory suggests that a growing group of contingent workers including part-time faculty and postdoctoral researchers may be positively associated with GRU status (Cantwell 2011b).

Summary

From prior studies of GRUs, we identified core characteristics of these institutions such as their emphasis on research, their situation in local and national contexts, and their reliance upon external bodies to generate spaces and metrics by which they can compete for global status. Foucault’s theory of government suggests that rankings systems function as evaluative technologies that provide these very spaces and metrics. In keeping with Foucault’s understanding of disciplinary power, ranking systems such as ARWU both assess the activities of GRUs and incite GRUs to behave in certain ways. Academic capitalism theory identifies particular channels and behaviors that ARWU likely favors, such as external research funding, private institutional control, and an emphasis on doctoral education in S&E fields.

These three strands suggest that ARWU creates both a space in which GRUs compete for status and an evaluative apparatus that assesses those competitors. ARWU then confers status on those who have competed successfully. This legitimation process is likely to increase inter-institutional stratification by conferring high status on the already advantaged. Simultaneously, ARWU may increase segmentation within organizations by rewarding universities that emphasize units such as S&E research and particular groups of workers such as contingent academic laborers. With this general conceptual model in mind, we now turn to a discussion of the data and methods that we utilize to develop an empirical portrait of the relationship between ARWU rankings and GRU status.

Methods

Sample, Data, and Variables

Comparable cross-national data are not widely available for all universities in the ARWU rankings; indeed, metrics like ARWU (2012) are intended in part to address this deficit of comparable cross-national data by providing figures that are “globally sound and transparent” (para. 3). For this reason we limit ourselves to universities based in a single country. Further, while GRUs compete for status globally, they remain situated in specific national contexts. We study GRUs based in the US because these institutions constitute the majority (around 60% each year) of the top 100 universities in ARWU. Publicly available comprehensive data sources that span several years report figures on US universities, allowing us to examine the relationship between these organizational characteristics and ARWU scores.

ARWU explicitly considers research as the key component of world-class status. Yet many US colleges and universities conduct little or no research. We therefore limit our sample to research universities. The Carnegie Foundation for the Advancement of Teaching recognizes 89 US universities that perform undergraduate instruction and engage in a “very high” level of research activity. Unfortunately, since 21 universities reported no values for one or more independent variables, 68 universities are included in our final data set. We observe the independent characteristics of these 68 universities annually over a 6-year time period (2003–2008), yielding a sample of 408 observations. After deleting one case with missing values on one independent variable, our analysis includes 407 university-year observations.

We use three sources of data for this analysis. The first source is the Institute of Higher Education of Shanghai Jiao Tong University ARWU. Raw aggregate scores for American universities from the ranking system’s inception in 2003 through 2009 constitute the dependent variable in our analysis. All US research universities receive an ARWU score and are placed in the rankings. However, the classification system only publicizes raw scores for universities ranked in the top 100. For non-top 100 institutions, we know only the institution’s position in the rankings. We return to the implications of this data censoring in the subsection that follows.

Two additional sources supply data on US research universities. These figures measure various institutional characteristics in the years of our sample. Because our theoretical model posits that ARWU score both measures and produces organizational behavior, we employ these data as independent variables that might both measure universities’ resources and correlate with their ARWU scores. First, we include figures on a university’s finances, enrollment, and institutional characteristics. For these figures, we use data provided by the Delta Project (2011), an independent nonprofit organization that standardizes figures collected by the National Center for Education Statistics for all US colleges and universities. We also employ data on numbers of postdoctoral researchers, R&D expenditures by funding source, and S&E doctorates from the National Science Foundation’s “WebCASPAR” portal.

ARWU score cannot be reproduced using the ranking system’s published methodology (Florian 2007). This “irreproducibility” adds methodological rationale to our theoretical justification; accordingly, we seek to predict ARWU score using university-level characteristics, and do not attempt to identify the factors that constitute ARWU score. We include independent variables that reflect our substantive concerns of inter-institutional stratification and organizational segmentation. For inter-institutional stratification, we measure university financial resources through the inclusion of net tuition revenues and R&D expenditures. We also consider human resources in two different ways. These alternate measurements reflect different strategies by which to measure a university’s total labor capacity. In a first analysis, we include the number of full-time faculty members employed at each university. We utilize the proportion of total faculty members who are employed full-time in a second, supplementary analysis. We also consider the possibility that evaluative technologies prefer public or private institutions by including a measure of institutional control.

We measure organizational segmentation by considering a university’s level of emphasis on science and engineering fields and reliance on contingent workers. Each of these markers suggests the extent to which a university’s position in the rankings may correlate with the advantaging or disadvantaging of certain portions of the organization. We include the proportion of doctorates awarded in science and engineering fields and the presence of a medical schoolFootnote 9 to measure an institution’s emphasis on science. To measure the extent to which the university relies on contingent labor, we include both the count of part-time faculty and the count of postdoctoral researchers. We also include a range of control variables, such as enrollment size, student selectivity, and geographic region, which might explain university status but are not of particular theoretical interest in this analysis.

Finally, because panel data report multiple observations of the same unit over a period of time, we also account for the relationship between time and ARWU score. We consider both within-university and historical time. Within-university time reflects the temporal nature of the various characteristics of a single unit. For example, universities receive an ARWU score based upon a review of activities from the previous year, not based upon the current year’s activities. For this reason, we “lead” our dependent variable forward by one year. Thus, university characteristics from 2005 predict status in 2006, and so on. We also consider historical time, or the possibility that all observations from a single year differ in a systematic way from observations made in other years. We employ three different techniques – a series of dummy variables, a linear trend, and a log-linear trend – to account for historical time. These three different measurements yield similar results for specified independent variables. For simplicity, we therefore report only a single analysis. This analysis defines historical time through a series of dummy variables for all years in the analysis minus one. Of note, the use of temporal dummy variables does not lend itself to theoretical interpretation (Beck 2010; Carter & Signorino 2010). For this reason, many analysts prefer the use of time trend variables that can be interpreted conceptually as indicators of change over time. While acknowledging the strengths of time trends in most analyses, we are reluctant to impart conceptual importance to time due to the short time span (seven years) considered in this analysis. The universities in the sample are large and complex organizations, and we assume that they change slowly. As such, our time frame proves too small to capture meaningful macro-organizational change. We therefore utilize the dummy variable approach precisely to avoid any suggestion that a meaningful temporal trend might occur in such a brief window of time. Because these dummy variables do not lend themselves to theoretical interpretation, we do not report estimated coefficients for years.
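The one-year lead and the year dummies described above can be sketched in a few lines. This is a minimal illustration in plain Python, not the authors' code; the record layout, the field names (`unit`, `year`, `score`), and the toy values are all hypothetical.

```python
# Hypothetical toy panel: one record per university-year.
# Field names and values are illustrative assumptions, not data from the study.
panel = [
    {"unit": "A", "year": 2004, "score": 40.0},
    {"unit": "A", "year": 2005, "score": 41.0},
    {"unit": "A", "year": 2006, "score": 43.0},
    {"unit": "B", "year": 2004, "score": 25.0},
    {"unit": "B", "year": 2005, "score": 24.0},
    {"unit": "B", "year": 2006, "score": 26.0},
]


def lead_outcome(rows, key="score"):
    """Pair each row's characteristics in year t with the outcome in year t + 1."""
    by_unit_year = {(r["unit"], r["year"]): r[key] for r in rows}
    led = []
    for r in rows:
        next_value = by_unit_year.get((r["unit"], r["year"] + 1))
        if next_value is None:
            continue  # no outcome observed for t + 1, so the row is dropped
        led.append({**r, "score_lead": next_value})
    return led


def add_year_dummies(rows):
    """Add one 0/1 indicator per year, omitting the earliest year as the referent."""
    years = sorted({r["year"] for r in rows})
    out = []
    for r in rows:
        dummies = {f"year_{y}": int(r["year"] == y) for y in years[1:]}
        out.append({**r, **dummies})
    return out


model_rows = add_year_dummies(lead_outcome(panel))
```

In this sketch the final year of each unit drops out because no later outcome exists to pair with it, which mirrors the way leading a dependent variable shortens a panel by one period.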

Variable Transformations

Although most of the data provided by the Delta Project and by WebCASPAR may be used readily, we employ a few minor transformations to tailor figures more precisely to our analysis. Because we observe universities for multiple years, we control all finance figures for inflation using the Higher Education Price Index (HEPI) calculated by Commonfund International (2011). We also perform a logarithmic transformation on finance and count variables. For count variables, this technique limits the possibility that outlying observations in over-dispersed variables will bias model estimates upward. For finance figures, this reflects the common assumption that university officials spend their nth dollar in a different manner than they expend their first dollar. Accordingly, we account for the possibility that finance figures net a non-linear return to the dependent variable by transforming a linear term (dollars) into a non-linear term (logged dollars).
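The two transformations in this paragraph, deflating finance figures with a price index and then taking natural logarithms, might look like the following sketch. The index values below are invented placeholders and are not actual HEPI figures; the function names, the choice of base year, and the handling of non-positive values are likewise assumptions made for illustration.

```python
import math

# Hypothetical price-index values (base year = 1.00); NOT actual HEPI figures.
price_index = {2004: 0.90, 2005: 0.95, 2006: 1.00}


def to_constant_dollars(nominal, year, index, base_year=2006):
    """Express a nominal dollar amount in base-year dollars."""
    return nominal * index[base_year] / index[year]


def log_transform(value):
    """Natural log of a strictly positive finance or count figure."""
    if value <= 0:
        # How zeros were treated in the study is not stated; raising is an assumption.
        raise ValueError("log is undefined for non-positive values")
    return math.log(value)


# A nominal 2004 figure restated in 2006 dollars, then logged.
real_rd = to_constant_dollars(90_000_000, 2004, price_index)
logged_rd = log_transform(real_rd)
```

Because the logarithm turns ratios into differences, doubling any figure adds the same amount (ln 2, about 0.693) to its logged value regardless of the starting level, which is one way to see the diminishing returns that the transformation encodes.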

Our use of natural logarithms also rests on conceptual ground. Revenues and faculty sizes may rise indefinitely in absolute terms. Universities can spend an infinite amount of money in pursuit of their espoused goals; indeed, institutions such as Yale University garner almost universal acclaim as high-status schools, yet appear “inefficient” when considered through a lens that evaluates dollars expended per output attained (Archibald & Feldman 2008). While resources can and, in some cases, do increase almost indefinitely, position in a global league table always will be relative to peer universities. Further, this position is upward bounded, because no GRU can rise higher than the top of the rankings index. As a result, an infinite increase in revenues or human resources can net only a finite increase in position. By adjusting the distribution of finance and count variables, the natural logarithmic transformation acknowledges these non-linear returns to scale.

One additional variable, the proportion of applicants granted admission, also merits some attention. Although the Delta Project provides data on all US colleges and universities from 1987–2008, admission rate does not become available until 2003, which is the same year as the initial ARWU rankings. Since we “lead” our dependent variable by one year, we lose the first year of analysis due to missing values on the variable admission rate in 2002. We therefore supplement our main analysis, which lacks this initial year of data, with three different sub-analyses. First, we utilize a different measure of admission selectivity, the 75th percentile of admitted student scores on the mathematics portion of the SAT standardized admission test. Second, we replace 2002 admission rate figures with the known values from 2003. Third, we replace 2002 admission rate figures with the per-institution mean of that variable from 2003-2008. Each of these analyses returned estimates that substantially parallel the model that omits 2002 institutional characteristics. This broad agreement suggests that the missing year of data does not bias our results significantly. For this reason, we present only results that omit 2002 independent characteristics (and ARWU score from 2003). We judge these analyses to be more reliable both because they utilize a more literal measure of admission selectivity than the proxy of standardized test scores and because they rest on observed rather than imputed values.
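The second and third sub-analyses amount to two simple fill rules for the unobserved 2002 admission rate. A minimal sketch follows, assuming a per-university mapping from year to rate; the values are hypothetical and the helper names are not taken from the study.

```python
from statistics import mean

# Hypothetical admission rates for one university; 2002 is unobserved (None).
admit_rate = {2002: None, 2003: 0.30, 2004: 0.28, 2005: 0.27,
              2006: 0.25, 2007: 0.24, 2008: 0.22}


def fill_with_next_year(series, missing_year=2002):
    """Replace the missing year with the known value from the following year."""
    filled = dict(series)
    filled[missing_year] = series[missing_year + 1]
    return filled


def fill_with_unit_mean(series, missing_year=2002):
    """Replace the missing year with the mean of the university's observed years."""
    observed = [v for y, v in series.items() if y != missing_year and v is not None]
    filled = dict(series)
    filled[missing_year] = mean(observed)
    return filled


carried_back = fill_with_next_year(admit_rate)
mean_filled = fill_with_unit_mean(admit_rate)
```

Both rules leave the observed years untouched, so any difference between the resulting estimates and the main analysis can be attributed to the filled 2002 values alone.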

Analytic Strategy

As mentioned previously, the values of our dependent variable (ARWU score) are known only for universities in the ARWU top 100. Universities ranked outside of the top 100 also receive scores, but these scores are not published. Such a variable is “censored,” meaning that it may attain a wide range of values but cannot be observed for all values in that range. We include a visual representation of a hypothetical censored variable in Fig. 1. In order to analyze a censored dependent variable fully, research methods must consider all values that the variable attains – that is, the full distribution of the variable on both sides of the dividing line depicted in Fig. 1 – rather than simply the values that are not censored, and so fall on the right-hand side of the vertical line. Yet an Ordinary Least Squares (OLS) analysis would consider only cases to the right of the vertical line, because only these cases have known values for the dependent variable. OLS regression of our data therefore would consider only the highest-status institutions, because universities outside of the top 100 would be dropped from the analysis due to missing data. Since status is relative, a more complete analysis would include both higher-status and lower-status observations (that is, universities ranked among the top 100 by ARWU and those not ranked among the top 100). It is likely that some characteristics – such as levels of financial resources – systematically predict both whether an institution appears in the top 100 and its overall position in the ranking table. Because inclusion in (or exclusion from) the top 100 may be predicted systematically, an analysis that omits cases from outside the top 100 would produce systematically biased results.

We employ Tobit regression to address this concern. Tobit regression includes a component that corresponds to classic regression analysis, allowing marginal effects to be interpreted like those in a linear model.Footnote 10 Alongside the linear regression, however, Tobit includes a second component that uses probit techniques to estimate the likelihood of an observation being censored, given the known data included in the model. Thus, “Tobin’s probit” yields results that reflect both cases with observed values of the dependent variable and estimated values for cases with a censored value for the dependent variable (Sigelman and Zeng 1999). Our analysis therefore considers all sampled US universities for which data on independent variables are available, and not just those ranked in ARWU’s top 100.
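The two components described above, a linear-regression part for observed scores and a probit-style part for censored ones, appear together in the Tobit log-likelihood. The sketch below is a deliberately simplified, pooled, one-regressor version with a single fixed censoring point; it does not reproduce the panel specification used in this study. The toy data, the cutoff value, and the grid search that stands in for a numerical optimizer are all assumptions.

```python
import math


def normal_logpdf(z):
    """Log density of the standard normal distribution."""
    return -0.5 * z * z - 0.5 * math.log(2.0 * math.pi)


def normal_cdf(z):
    """Standard normal CDF; erfc keeps precision in the lower tail."""
    return 0.5 * math.erfc(-z / math.sqrt(2.0))


def tobit_loglik(beta0, beta1, sigma, data, cutoff):
    """Log-likelihood of a one-regressor Tobit model, left-censored at `cutoff`.

    `data` holds (x, y) pairs; y is None when the outcome is censored.
    """
    total = 0.0
    for x, y in data:
        mu = beta0 + beta1 * x
        if y is None:
            # Censored case: only P(latent score <= cutoff) is known.
            prob = max(normal_cdf((cutoff - mu) / sigma), 1e-300)
            total += math.log(prob)
        else:
            # Observed case: normal density of the residual, scaled by sigma.
            total += normal_logpdf((y - mu) / sigma) - math.log(sigma)
    return total


# Hypothetical toy data: logged resources (x) and score (y); None = outside top 100.
toy = [(1.0, None), (2.0, None), (3.0, 31.0), (4.0, 39.0), (5.0, 52.0)]
CUTOFF = 25.0  # assumed censoring point, for illustration only

# Coarse grid search stands in for a proper numerical optimizer.
candidates = [
    (b0, b1, s)
    for b0 in range(-20, 21, 5)
    for b1 in range(0, 21, 2)
    for s in (2.0, 4.0, 8.0)
]
best = max(candidates, key=lambda p: tobit_loglik(p[0], p[1], p[2], toy, CUTOFF))
```

The censored rows still contribute information: parameter values that predict a high latent score for a university known to fall below the cutoff are penalized, which is what an analysis restricted to the observed rows would miss.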

While Tobit regression techniques address the problem of analyzing a censored dependent variable, they do not provide a fail-safe guarantee of the accuracy of estimated results. For this reason, we conducted multiple analyses in an effort to ensure that the results reported below indicate justifiable inferences based upon data. First, as discussed above, we conducted the three sub-analyses to address missing values in the variable admission rate. Our second supplemental analysis construed our sample more broadly by including all US research universities considered to attain either a “high” or “very high” level of research output. These results proved substantially similar to those that we report below. We omit them for this reason, although results may be obtained from the paper's authors by request. Finally, we measured some institutional characteristics in multiple ways as a means of addressing variation in institutional scale. Our substantive interest in human resources as a marker of inter-institutional stratification, for example, could be measured either as total resources, meaning the logged count of full-time faculty members, or as the proportion of high-value resources, meaning the share of total faculty members who hold full-time appointments. Just so, a measure of segmentation could be the logged count of S&E Ph.D.s, since we are controlling for university size, or the share of all Ph.D.s conferred in the sciences, which would measure concentration of doctoral education in S&E fields. As a check against the possibility that we account for size inadequately with one of these measures, we utilize both logged counts and proportions for these two variables. We therefore report results from separate models for counts and proportions of these independent variables in Table 2 below. These two sets of estimates broadly parallel one another, suggesting that our inferences are well supported by the data.

A Note on Causality

Our application of Tobit regression to a panel dataset that includes multiple observations of a set of sampled universities serves to strengthen inferences relative to a cross-sectional analysis. For example, in a panel context we may utilize clustered standard errors to adjust for repeated errors in estimation (Cameron & Trivedi 2010). Despite these benefits, however, our methods are not experimental and therefore are not well suited to establish causal relationships. We therefore make no claims that any particular university feature directly causes position within the ARWU table, nor do we assert that a desire to improve ARWU position directly causes policymakers to reform universities. Further, our theories do not assume that x directly causes y. Instead, we theorize that observation and governance technologies such as ARWU produce GRUs by defining and normalizing the characteristics of these universities. We further theorize that these characteristics reflect patterns of an academic capitalist regime and create incentives for universities outside of the top ARWU positions to emulate the top GRUs in order to improve their own position. We argue that the results we present next offer some empirical support to these claims.

Results

Description of Data

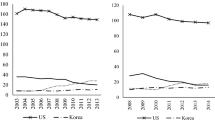

Table 1 reports descriptive statistics for sampled universities. These figures reveal that average top-ranked and unranked universities differ substantially from one another. Universities ranked in the top 100 generally command far greater financial resources than do their peers. Average net tuition revenues among rankedFootnote 11 universities exceed those at unranked universities by approximately $170 million. Perhaps more importantly, given that ARWU attempts to measure research output, ranked universities exceed unranked universities in R&D monies received both from the federal government and from private industry. Further, ranked universities spend a greater number of their own dollars on R&D expenditures. By all selected measures, then, universities ranked in the top 100 in a given year possess a higher average level of financial resources than do their peers that fall outside the top 100. On average, universities in the top 100 also boast far greater human resources than do their peers. The average ranked university employs approximately 150% as many full-time faculty members as does the average unranked university, even though they enroll on average only 10% more students. Ranked and unranked universities also tend to have different organizational characteristics. For example, private institutions comprise a far greater share of ranked (58%) than of unranked universities (39%).

The characteristics discussed above highlight inter-institutional stratification, meaning the separation of advantaged institutions from those that are less advantaged. On average, ranked universities are wealthier, larger, and more likely to be controlled privately than are their unranked peers. Notably, however, descriptive statistics also present evidence of organizational segmentation, or the increased significance of boundaries within a single university. For example, ranked universities seem to give greater emphasis to science and engineering fields than do their unranked peers. Universities ranked in the top 100 confer an average of 230 Ph.D.s in S&E fields each year. Almost four-fifths of these universities also confer a medical degree. By contrast, unranked universities award an average of 59 Ph.D.s in S&E fields per year, and only 37% of these institutions award a medical degree. On average, then, universities ranked in the top 100 appear to be more focused on science, engineering, and medicine than are their peers that do not rank in the top 100. Moreover, ranked and unranked institutions appear to employ different classes of laborers. On average, top 100 universities employ threefold more postdocs – the vast majority of whom work in science and engineering fields (NSF 2009) – than do their non-top 100 peers. Ranked universities also employ approximately 150% more part-time faculty members than do unranked schools. These differences suggest segmentation. The evaluative technologies that constitute the ARWU ranking system appear to favor universities that emphasize certain areas of study, such as S&E fields, and certain labor practices, such as the use of contingent laborers.

Tobit Analyses

Table 2 presents results from two Tobit panel regressions. The first column reports figures that employ logged counts of full-time faculty members and Ph.D.s in S&E fields. The second column utilizes proportions rather than logged counts. These two analyses, as discussed in our methods section, employ two alternative techniques – the natural logarithmic transformation and the use of proportions rather than counts – to account for the different scales on which universities operate. Although these two sets of estimates operationalize the concepts that undergird our analysis in slightly different ways, they yield substantially similar portraits of the relationship between institutional characteristics and ARWU position. As a result, we view these models as complementary findings that together present a robust view of our subject of interest.

Findings offer evidence that some markers of inter-institutional stratification predict ARWU scores. Federal government funding for research, for example, exhibits a positive association with ARWU score. Net of other factors, a 1% increase in federally funded R&D expenditures predicts an increase of 0.09 points of ARWU score.Footnote 12 This finding is confirmed in model two, in which a 1% increase in federal R&D expenditures predicts a 0.11 point increase in ARWU score. The importance of federal R&D expenditures is not surprising given that the federal government supports around 60% of all academic R&D in the US (National Science Board 2010). R&D expenditures derived from industry emerge as a significant predictor of ARWU scores only in our second model. A 1% increase in industry R&D funding predicts a net increase of 0.01 points in the ARWU ranking score. While this result may seem to differ between the two sets of estimates, we note that the marginal effect proves sufficiently small as to suggest that R&D expenses funded by industry may attain statistical significance without mattering substantially. Of note, institutionally sourced R&D expenditures – that is, intramural research expenditures – do not predict ARWU scores. Status, as measured by ARWU score, appears to correlate more closely with a university’s ability to secure extramural funding than with its strategies for allocating its own discretionary resources.
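The "1% increase" reading used in this paragraph follows from regressing a level outcome on a logged predictor. As a sketch, assume the outcome responds linearly to the logged regressor, with $\beta$ standing for the marginal effect of the logged variable; the symbols and the simplified functional form are illustrative assumptions rather than the authors' notation.

$$
y = \alpha + \beta \ln x
\quad\Longrightarrow\quad
\Delta y = \beta \ln\!\left(1 + \frac{p}{100}\right) \approx \frac{\beta\, p}{100}
$$

Here $p$ is the percentage increase in $x$. For $p = 1$, $\ln(1.01) \approx 0.00995$, so the predicted change in $y$ is roughly $\beta/100$ points.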

Institutional control also significantly predicts ARWU score. Ceteris paribus, private universities receive ARWU scores that are approximately 15 points higher than do public universities. In column two, this estimated relationship increases to a predicted 20-point increase. These results accord with the predictions of academic capitalism theory, which highlights the ways in which competitiveness regimes valorize the alleged nimbleness of the private sector while viewing public institutions as unwieldy and slow to adapt to market logics (Slaughter and Rhoades 2004, 1996). We therefore interpret the positive and significant relationship between private control and ARWU score as evidence of inter-institutional stratification.

Despite evidence from the analysis of R&D expenditures and private control, support for the narrative that ARWU evaluation exacerbates inter-institutional stratification is not uniform. For example, despite predictions from theory, full-time faculty members do not significantly predict ARWU score. This proves to be the case whether we consider human resources as a logged count, as in column one, or as a proportion of all faculty members, as in column two. We suggest that this result may reflect full-time faculty members’ conduct of both research and teaching, whereas ARWU focuses entirely on research output.

The findings reported in Table 2 also provide limited evidence of organizational segmentation. Results of our analysis suggest that emphasis on S&E programs predicts ARWU score. A one percent increase in the number of science and engineering Ph.D.s conferred by a university, a proxy measure of human resource inputs, yields a net increase of 0.11 points of ARWU score. As mentioned in the methods section, we also utilize the proportion of Ph.D.s conferred in S&E fields rather than the logged count of those degrees as an alternative means of adjusting for university scale. Results that utilize proportions appear in the second column of Table 2. These results support the findings presented in the first column. Net of other factors, an increase of one point in the proportion of total Ph.D.s conferred in S&E fields predicts an increase of 0.15 points in total ARWU score. These findings strongly suggest that concentrating graduate training and research efforts in science and engineering fields positively predicts status among the world class.

Notably, however, other measures of organizational segmentation do not appear to predict ARWU position. For example, the presence of a medical school does not emerge as a significant predictor of university status. The reliance on contingent academic workers such as part-time faculty members and postdoctoral associates also fails to predict ARWU scores. Taken together, then, our findings offer partial support for the proposition that evaluative technologies such as ARWU correlate with organizational segmentation. Segmentation appears to occur along disciplinary lines, with an emphasis on S&E fields positively and significantly predicting ARWU score. However, these fissures appear to be confined to non-medical science and engineering fields. Further, segmentation between contingent and traditional academic labor practices does not appear to predict ARWU ranking position.

Before proceeding to a broader discussion of the implications of our results, we briefly summarize findings pertaining to control variables. We do not provide extensive commentary on these results because they do not relate directly to our underlying theoretical concerns. First, admission selectivity constitutes an important component of national rankings systems such as U.S. News & World Report. However, this characteristic does not appear to predict position in the ARWU rankings. This result reinforces Florian's (2007) observation that the ARWU rankings emphasize research outputs relative to other university functions such as instruction. Second, variables measuring institutional size yield mixed results. Enrollment size does not appear to be a significant predictor of ARWU score in column one.Footnote 13 In column two, however, enrollment size positively predicts status but nets diminishing returns to scale. This suggests that larger universities generally fare better than do smaller ones, but that universities that become very large may find their status diminished. Finally, geographic location in the Southeastern US negatively predicts ARWU score. Southeastern universities scored 15 points lower on ARWU rankings, net of other factors, than did universities located in the referent region of New England.Footnote 14

Discussion

Summary of Findings

Taken together, the descriptive statistics and Tobit regression results presented above suggest that evaluative technologies may correlate with inter-institutional stratification among US research universities. Descriptive figures indicate that ranked universities are generally larger and wealthier than are their unranked peers. Tobit regression results confirm that input resources such as R&D income positively and significantly predict ARWU raw scores. Further, net of other factors, being a privately controlled university also predicts a higher ARWU score. These results suggest that markers of inter-institutional stratification predict position in league tables. Indeed, among the various measures of stratification included in our models, only figures indicating human resources in the form of full-time faculty members failed to demonstrate significant relationships with ARWU status.

Our results also unearth some support for the proposition that evaluative technologies increase organizational segmentation. Conferring greater numbers of S&E Ph.D.s or larger proportions of total Ph.D.s in science and engineering fields positively predicts ARWU scores. Thus, evaluation regimes such as the ARWU scoring system appear to privilege certain areas of the university (e.g., the science and engineering fields) relative to other areas of the same university. However, our results provide no support for segmentation along other lines, such as the use of traditional or contingent academic labor. Equipped with these insights, we now turn to a fuller discussion of the implications and limitations of our results.

Implications

At first glance, our findings seem to conflict with a recent study by Halffman and Leydesdorff (2010), which found that countries with universities ranked in the ARWU top 500 did not experience greater overall inter-institutional stratification – as measured by inequality of publication output among universities – since the ranking system was first published. However, we contend that there are substantial differences between our analysis and their study. These differences may partially explain the two analyses’ seemingly opposed findings. First, the Halffman and Leydesdorff study examined only changes in inequality of outputs, not inequality of inputs such as research funding and human resources. As discussed above, Archibald and Feldman (2008), among others, have noted that the relationship between inputs and outputs is rarely linear; indeed, some of the most productive universities, in terms of outputs, also appear to be the most “inefficient” when measured by the number of inputs consumed to produce these outputs. Second, the present study examines levels of within-organization segmentation, which is not a part of the Halffman and Leydesdorff (2010) study. Third, and perhaps most important, our study demonstrates that markers of inequality – variables measuring organizational segmentation and inter-institutional stratification – predict ARWU score. This focus distinguishes our analysis from the Halffman and Leydesdorff study, which sought to demonstrate the effect of ARWU on inequality in research outputs. The importance of this final difference becomes clear through our discussion of descriptive data. US universities that rank in the top 100 differ systematically, as measured by inputs, from those that rank outside the top 100. Had we analyzed only those ranked inside the top 100, we might have found limited evidence of an association between ARWU score and inputs because all sampled universities would have controlled large numbers of resources. Such a result would have proven almost wholly consistent with Halffman and Leydesdorff’s work. Instead, Tobit regression techniques allowed us to analyze both ranked and unranked universities on the basis of descriptive evidence indicating the qualitative difference between these two groups. Regression results confirm that, when including both higher-status and lower-status universities, the consideration of inputs provides a useful lens through which to view stratification between institutions.

The implications of this third difference merit further consideration. It is easy to dismiss as banal the finding that stratification and differentiation along one set of measures predict stratification and differentiation along another set of measures. But to do so would be to overlook the real power of evaluative technologies such as ARWU. Foucault’s (1990) insistence that observational technologies both codify and produce social behavior calls our attention to the relationship of ARWU to the universities that it evaluates. In keeping with Foucault’s theory, which emphasizes the power of observation to define standards and to mark deviants, ARWU appears to be a technology that does not directly generate inequality. Instead, ARWU normalizes, or makes appear natural, inequality both within and between universities. Input inequality might alarm policymakers and analysts, particularly those accustomed to unitary systems in which universities generally receive similar levels of resources. However, many observers take stratification for granted because evaluative technologies such as ARWU proclaim that advantaged universities are not only handsomely resourced; they are also excellent and, as the literary critic Bill Readings (1996) asked rhetorically, who could oppose excellence? Legitimating existing inequalities serves to produce additional inequalities, as ARWU incites universities to behave in the manner rewarded by the rankings technology. This interpretation accords with descriptive analysis of the ARWU rankings. The United States has 116 more universities in the top 500 of ARWU than do the next best-represented countries (Germany and the UK). The US, perhaps not coincidentally, also features the highest level of output inequality among universities (Halffman and Leydesdorff 2010).Footnote 15 In other words, ARWU rewards with status, and so legitimates with the label of “fitness” or “excellence,” academic systems that are already unequal.

ARWU and other evaluative technologies therefore mobilize bias towards the organizational forms that are deemed most fit. Such technologies encourage competition along the lines that are measured by them, and tacitly discourage activities not valorized by these metrics (Pusser 2011: 39–40). Policymakers, university administrators, and social stakeholders who accept these metrics at face value – that is, those who accept rankings as both “normal” and normalizing – simultaneously take for granted that those universities at the top of league tables are “best.” Such acceptance implies support for policies that promote competition to determine which universities are the most fit. Yet, as academic capitalism theory highlights (Slaughter and Rhoades 2004), competition allocates resources in a manner likely to increase both between-organization stratification and within-organization segmentation. Such policies effectively reproduce the moral order concretized by evaluation technologies; as Foucault (1990) predicts, the act of observation produces the social behaviors that the observers set out to measure and codify. In concrete terms, this amounts to a reproduction of the hegemonic status of US universities, which consistently perform best in global ranking systems (see Marginson 2007). Legitimation and normalization therefore become twofold. First, the high level of inter-institutional stratification characteristic of the US system is cast as normal rather than aberrant. Second, and crucially, a highly stratified system of higher education is cast as a normal state of affairs to be emulated by other countries should they, too, seek to develop an “excellent” higher education system.

Admittedly, our interpretation remains rooted in theory. Descriptive analysis and Tobit results imply support for Foucault’s understanding of the relationship between observation technologies and social behavior, but they do not demonstrate it conclusively. We also acknowledge that Foucault’s theory assumes complex causality, meaning that the act of observation both measures and incites certain behaviors. This assumption certainly cautions against excessively strong interpretations of our results; indeed, as Münch and Baier (2012) discovered in a study of German universities, an organization’s status may explain the resources that it receives at least as well as those resources predict its status. Our support for propositions drawn from academic capitalism theory proves less qualified. Because academic capitalism theory provides specific propositions that we can explore through the inclusion of particular independent variables in our regression analysis, support for this theory proves more robust than does evidence buttressing Foucault’s model of governance technologies.

Despite this important limitation, our analysis suggests that higher education policies designed to achieve “world class” status effectively channel resources to specific universities, thereby increasing stratification, and to specific areas of universities, thereby increasing segmentation. The favored organizations and sub-organizations tend to be those viewed as best able to compete in schemes such as ARWU (see Slaughter and Cantwell (2012) for a detailed discussion of these policies in the US and EU). To be sure, we agree with Halffman and Leydesdorff (2010) that world rankings may not immediately generate increased inequality. After all, we acknowledge that organizational and university system change occurs only slowly. It is for these reasons that we decline to employ a linear or log-linear time trend in our Tobit analyses. Nonetheless, our findings also support the Foucauldian idea that observational technologies such as rankings systems serve both to normalize and to justify existing social divisions – in this case, stratification and organizational segmentation. Further, we agree with academic capitalism theory that, in the medium term, these evaluative technologies promote continued competition. This endless competition requires ever more resources. The European Commission’s recommendation to increase the share of GDP devoted to higher education, or calls from the Obama Administration to invest in innovation to “win the future,” provide ample evidence of the relationship between competition and resource consumption. Yet the return on resources consumed remains, at best, uncertain. Competition for world-class status among US universities appears to have produced ARWU scores, but position in global league tables does not translate necessarily to employment, economic growth, or a participatory citizenry. The high status of a few universities does not necessarily indicate social or economic benefits. 
Given both the deep fiscal constraints in the US and much of Europe and the enormous challenges universities in less economically developed countries face in attaining “world class” status (Cantwell and Maldonado-Maldonado 2009), one questions the wisdom of investments in forms of competition that seem to produce only additional competition.

Notes

Universities with global presence are sometimes called “world-class” universities. We chose to use Marginson’s “global research university” instead because this terminology is explicit about the emphasis given to research output and denotes a departure from the “multiversity,” which was the largely nation-bound, preeminent form of higher education organization in the 20th century.

ARWU is sometimes described as the Shanghai ranking.

There is no essential definition of the GRU, but there is some consensus in the literature on the features of global or world-class status. We review these features below.

Our study deals exclusively with ARWU. We selected ARWU as the evaluative technology for our analysis for several interrelated reasons. First, global rankings like ARWU are seen as integral to the development of global university competition and GRUs. Second, ARWU is a prominent ranking system that is both well known and influential. Third, there is precedent for using ARWU in academic research on university competition, stratification, and research output. Finally, ARWU rankings are published annually, allowing time-series analysis, and are publicly available.

The normative implications embedded in the term “world class” are not absolute. For example, Altbach and Balán’s book (2007) counts among world-class research universities an array of institutions in middle- and low-income countries that are central to state building because they link their countries with global science. In this way, the authors do not limit the world class to the handful of North American and European universities that are most research productive.

Of note, THE changed its methodology in 2010 in response to widespread criticism.

Books from the mid-1990s into the mid-2000s constitute the bulk of this research. Higher education scholars doing research in English speaking countries, including Bok (2004), Clark (1998), Marginson and Considine (2000), Slaughter and Leslie (1997) and Slaughter and Rhoades (2004), broadly described increased competition and marketization among universities, albeit from varying perspectives. Studies into science and society, including Gibbons and colleagues (1994), and Etzkowitz and Leydesdorff (2000), also identified and theorized changing relations between the market, state, and knowledge producing organizations such as universities. Taken together, this work provides compelling empirical and theoretical evidence of a major shift towards market-like competition in higher education.

Notably, 2008 is a recent exception where student aid grants exceeded R&D funding. This was part of the Obama Administration’s economic stimulus and is a temporary variation rather than an indicator of a new trend.

Medical schools both train physicians and conduct an extensive amount of research in the life sciences. Indeed, the National Institutes of Health provide more funding for university-based research than does any other federal agency (Yamaner 2009). The majority of these funds go to medical schools. Thus, while we consider the presence of a medical school as an indicator of a university’s emphasis on the applied sciences, this variable could instead indicate the institution’s level of research focus. In such an interpretation, this variable would indicate inter-institutional stratification rather than within-organization segmentation.

This is because a Tobit analysis assumes that a latent variable, commonly denoted $Y_{it}^{*}$, underlies our observed dependent variable, $Y_{it}$. The latent dependent variable $Y_{it}^{*}$ – in our case, ARWU score for all US research universities – is continuous, even though the observed dependent variable is censored due to the limited availability of data about this variable. We therefore interpret model coefficients as marginal effects like those in a linear regression because our project studies the underlying phenomenon denoted by $Y_{it}^{*}$, meaning university status as measured by ARWU score. For a detailed discussion of the latent variable motivation of Tobit regression, see Sigelman and Zeng (1999).
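
As a sketch of this latent-variable setup, a generic censored regression can be written as follows. The covariate vector $x_{it}$, coefficient vector $\beta$, error term $\varepsilon_{it}$, scale $\sigma$, and censoring threshold $c$ are notation assumed here for illustration only. The sketch also omits any university-specific panel effect, so it should not be read as the exact specification estimated in the study.

```latex
\begin{align*}
Y_{it}^{*} &= x_{it}'\beta + \varepsilon_{it},
  \qquad \varepsilon_{it} \sim N(0, \sigma^{2}) \\
Y_{it} &=
  \begin{cases}
    Y_{it}^{*} & \text{if } Y_{it}^{*} > c \quad \text{(score observed)} \\
    \text{censored} & \text{if } Y_{it}^{*} \le c \quad \text{(score not reported)}
  \end{cases} \\
\frac{\partial\, E\!\left[ Y_{it}^{*} \mid x_{it} \right]}{\partial x_{it,k}} &= \beta_{k}
\end{align*}
```

The last line is why coefficients can be read like those of a linear regression when the quantity of interest is the latent score rather than the observed, censored one.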

All sampled universities are “ranked” in the general sense that all received an ARWU score. However, we use the word “ranked” specifically to describe the subset of sampled universities that rank in the top 100. Some of the “unranked” universities appear in the top 200 ARWU, but the raw ARWU scores for these schools are censored. In this sense, these universities are “unranked.”
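
To illustrate how a Tobit likelihood treats “ranked” and “unranked” observations differently, the following minimal sketch computes the log-likelihood of a left-censored normal model. It is a generic textbook form and not the estimation code used in the study; the function names, the use of `None` to mark a censored score, and the numbers in the usage example are all hypothetical.

```python
import math


def normal_pdf(z):
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z):
    """Standard normal cumulative distribution, via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def tobit_loglik(scores, predictions, sigma, cutoff):
    """Log-likelihood of a Tobit model left-censored at `cutoff`.

    scores      -- observed score, or None when the score is censored
    predictions -- linear predictor (x'beta) for each observation
    sigma       -- standard deviation of the latent error term
    cutoff      -- hypothetical threshold below which scores go unreported
    """
    total = 0.0
    for y, mu in zip(scores, predictions):
        if y is None:
            # Censored case: we only know the latent score is <= cutoff.
            total += math.log(normal_cdf((cutoff - mu) / sigma))
        else:
            # Observed case: ordinary normal density of the residual.
            total += math.log(normal_pdf((y - mu) / sigma) / sigma)
    return total


# Hypothetical data: one observed score and one censored score.
example = tobit_loglik(
    scores=[30.0, None], predictions=[30.0, 20.0], sigma=1.0, cutoff=20.0
)
```

Censored universities contribute the probability that their latent score falls below the cutoff. This is how such a model keeps them in the sample rather than discarding them.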

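We interpret marginal effects by dividing the estimated coefficient by 100, which gives the approximate change in the dependent variable associated with a one percent increase in the independent variable. This interpretation is common among “lin-log” models, in which the dependent variable appears in its raw, or linear, form and independent variables are logged (Gujarati 2003: 181–183).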
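
The division by 100 can be checked with a short calculation. The sketch below compares the exact change implied by a lin-log specification with the rule-of-thumb value. The coefficient of 2.5 is a hypothetical number chosen for illustration and is not an estimate from our models.

```python
import math


def linlog_exact_change(beta, pct_increase):
    """Exact change in Y for Y = a + beta * ln(X) when X rises by pct_increase percent."""
    return beta * math.log(1.0 + pct_increase / 100.0)


def linlog_rule_of_thumb(beta, pct_increase):
    """Approximate change in Y: (beta / 100) per one percent increase in X."""
    return beta / 100.0 * pct_increase


beta = 2.5  # hypothetical coefficient on a logged input
exact = linlog_exact_change(beta, 1.0)
approx = linlog_rule_of_thumb(beta, 1.0)
```

For small percentage changes the two values nearly coincide, because ln(1 + r) is approximately r when r is small. The approximation weakens for large changes in the independent variable.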