Significant discrepancies in estimates of the Hubble constant are discussed in terms of the measurement problem of calibrating the cosmological distance scale. It is shown that representing the Friedmann–Robertson–Walker model by a 3rd order Taylor expansion is not optimal in terms of accuracy with respect to the criterion of minimal inadequacy error. An anisotropic 2nd order model based on the Heckmann representation is more accurate.


Since E. Hubble discovered the red shift law z = (H0/c)D (where c is the speed of light and D is the distance) in 1929, estimates of the Hubble parameter H0, which plays a decisive role in cosmological models, have ranged from 530 to 50 km/s/Mpc [1]. In the 1970s, a joke attributed to A. Sandage, to the effect that “There is nothing more variable than the Hubble constant,” was popular among cosmologists [2]. But estimates of H0 derived from observations of various cosmic objects continued to diverge in later decades (Table 1), despite the greater accuracy of astrophysical measurements. Here and in the following, we use the abbreviations: HST KP, Hubble Space Telescope Key Project; WMAP, Wilkinson Microwave Anisotropy Probe; SH0ES, Supernovae H0 for the Equation of State of Dark Energy; BAO, baryon acoustic oscillations; CSP, Carnegie Supernova Project; and PC, Planck Collaboration.

In 1998, the High-Z SN Search Team, which discovered the “accelerated expansion of the universe” by the so-called Riess χ² method from a sample of 37 SN Ia supernovae, obtained estimates of H0 ranging from 63.8 ± 1.3 to 65.2 ± 1.3 km/s/Mpc [3] (the spread arises because various methods were used for the estimates), while in 2016 this group obtained another estimate, H0 = 73.24 ± 1.74 km/s/Mpc [4]. At the same time, the PC obtained an estimate of 67.2 ± 0.7 km/s/Mpc based on data from a space probe [5]. A spread in estimates from 61.4 to 69.8 km/s/Mpc was found by the Baryon Oscillation Spectroscopic Survey [6]. Yet another estimate of 91.8 ± 5.3 km/s/Mpc was obtained in that same year (2016) [7]. In 2017, staff from the Carnegie-Chicago Hubble project pointed out a statistically significant (more than three sigma) discrepancy between the estimates H0 = 74 ± 3 km/s/Mpc based on Cepheids in the “distance ladder” and H0 = 67.3 ± 1.2 km/s/Mpc based on microwave background measurement data [8].

W. Freedman, the leader of HST KP, referred to this situation in cosmology as an impasse [9]. Freedman believes that the way out of this is to increase the accuracy of the extragalactic distance scale to 1%. If this can be done, it will only be through a reexamination of the calibration technique.

A similar dynamic in the accuracy of determining the fundamental gravitational constant G, which plays an equally important role in cosmology, was noted at the end of the twentieth century. At that time the confidence intervals for three of the four best determinations of G did not overlap at all [10], and the problem of “incorrect confidence intervals” was examined in connection with an analysis of experiments searching for neutrino oscillations [11]. Thus, in 1998, the Committee on Data for Science and Technology (CODATA) recommended a new value of 6.673(10)·10⁻¹¹ m³/s²/kg for the gravitational constant. This was a “step backwards” relative to the earlier 6.67259(85)·10⁻¹¹ m³/s²/kg (1986). It was followed by the recommendations 6.67428(67)·10⁻¹¹ m³/s²/kg (2008), 6.67384(80)·10⁻¹¹ m³/s²/kg (2010), and 6.67408(31)·10⁻¹¹ m³/s²/kg (2014). In 2014, precision atomic interferometry yielded an unexpectedly accurate estimate of G = 6.67191(99)·10⁻¹¹ m³/s²/kg [12].

At a special session of the British Academy of Sciences, even before the publication of Ref. 9, T. Quinn (International Bureau of Weights and Measures) [13] referred to the discrepancy in the determinations of the fundamental gravitational constant as a “metrological and scientific impasse.”

An analysis of the situation. An estimate of H0 = 72 ± 7 km/s/Mpc that was independent of distance over a range of 56–467 Mpc was obtained in 2000 by the HST KP for 36 SN Ia supernovae with z ≤ 0.1 [14]. A statistical test of these data [14] with respect to the criterion of the minimum average modulus of the inadequacy error (AMEI), using the program MMK-stat 2.0 [15], confirmed within the class of continuous models that the estimates of H0 are independent of distance: \( {H}_0^{\left[1\right]} \) = 71.725 ± 4.014 km/s/Mpc for MMKMEDS, based on a median algorithm, and \( {H}_0^{\left[2\right]} \) = 72.186 ± 3.969 km/s/Mpc for MMKMNK, based on a least squares algorithm. In other words, conventional (parametric) averaging of the data using the MMK and MEDS algorithms yielded estimates of \( {H}_0^{\left[2\right]} \) = 72.186 km/s/Mpc and \( {H}_0^{\left[1\right]} \) = 71.725 km/s/Mpc, respectively. However, models with “disorder” seemed more plausible:

Here it turned out that there were only two SN Ia supernovae out of the 36 within an interval of 391.5–467 Mpc.

In 2012, the HST KP estimate was refined in the Carnegie-Chicago Hubble program by essentially the same group of researchers: 74.3 ± 2.1 km/s/Mpc [16].

A still larger difference in estimates of H0 was found at the Special Astrophysical Observatory of the Russian Academy of Sciences in 2004–2005 based on photometric data from a combined sample of N = 220 elliptical galaxies: from 71.5 ± 10 to 77.7 ± 10 km/s/Mpc and from 53.0 ± 10 to 65.4 ± 10 km/s/Mpc according to the GISSEL and PEGASE models, respectively (the spread is caused by the step sizes for grouping the data in z, 0.2 and 0.3) [17].

The situation with parametric identification of the Friedmann–Robertson–Walker model [3, 18] for the cosmological distance scale is equally complicated. First, the simplest version of the Friedmann–Robertson–Walker model, with a curvature parameter of Ωk = 0, is used in Ref. 3; in terms of the standard cosmology, this is determined by the position of the first peak in the angular spectrum of the fluctuations in the cosmic microwave background (CMB) near an angle of roughly 1° [19]. In Ref. 4, a Taylor series expansion of the Friedmann–Robertson–Walker model [20] was used:

where q0 is the deceleration parameter and j0 is the jerk parameter.
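The displayed expansion is not reproduced in this text. For orientation only, the third-order expansion of the photometric (luminosity) distance for a flat universe, in the form commonly quoted from Refs. 4 and 20, can be written as follows. The symbol \( D_L \) and the grouping of terms are assumptions made here, not the author's typeset Eq. (1):

\[
D_L(z) = \frac{cz}{H_0}\left[1 + \frac{1}{2}\left(1 - q_0\right)z - \frac{1}{6}\left(1 - q_0 - 3q_0^2 + j_0\right)z^2 + O\!\left(z^3\right)\right]
\]

Counting the prefactor z, the terms shown run up to the third power of the red shift, which matches the description of this model as a 3rd order expansion.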

The first order Taylor expansion (the Heckmann model) was already known in 1942 [21]:
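The displayed formula is likewise not reproduced in this text. A sketch of the form in which the Heckmann model is usually quoted is given below, under the assumption that it is the expansion of Refs. 4 and 20 with the bracket truncated after the term linear in z (the symbol \( D_L \) is an assumption, as is the identification of this form with the author's Eq. (2)):

\[
D_L(z) = \frac{cz}{H_0}\left[1 + \frac{1}{2}\left(1 - q_0\right)z\right]
\]

In this form the coefficient of z fixes H0 and the coefficient of z² then fixes q0, so two fitted parameters suffice.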

Second, the best results in terms of the “minimum” χ² = 1.04 of parametric fits for a specified structure of the model yielded different estimates for the parameters of dark matter, ΩM = 0.72+0.44/–0.56, and dark energy, ΩΛ = 1.48+0.56/–0.68, or ΩM = 0.80+0.40/–0.48 and ΩΛ = 1.56+0.52/–0.70 [3]. There was also a result for χ² = 1.03. But “...” instead of that in Ref. 3. It was assumed that ΩΛ = 0.76 and ΩM = 0.24, with Ωk = 1 – 0.76 – 0.24 = 0. This was a fit of the Friedmann–Robertson–Walker model to a “flat” universe.

Third, the statistical nonuniformity and anisotropy of the data [3, 17] had already been noticed during identification of uniform interpretive models [22–24]. The clustering of the data with respect to the transparency windows of the Milky Way justified a transition from equatorial to galactic (l, b) coordinates. Ultimately, a Taylor series expansion with anisotropy parameters θk(l, b) was assumed in the form [25]

The model of Eq. (3) turned out to be robust to the outlier SN 1997ck at z = 0.97. Doubts have been raised [17] about the justification for including this supernova in the main sample.

Fourth, and unexpectedly, when the samples of data from Refs. 3 and 17 were combined into a set of N = 79 SN Ia, the model of optimum complexity with respect to the minimum AMEI criterion [15] turned out to be the model of Eq. (3) with K = 3, but without the z³ term. This means that model (1) adopted in Ref. 4 is more complicated than Eq. (2), but less exact.

In other words, a purely statistical description of the accuracy of the models, without accounting for the structural component of the inadequacy error, leads to the illusion of greater accuracy without a justified increase in the complexity of the models or an increase in the size of the data samples. Moreover, the accuracy estimates used for the cosmological distance scale apply only to its position characteristic, as assumed in classical linear regression analysis.

The metrological interpretation is optimal in terms of the complexity of the mathematical model of the object of measurements in the theory of measurement problems. Separation of the inadequacy error into dimensional, parametric, and structural components [15] has shown that their sum, as a function of the number of model parameters, has a minimum when the parametric and structural components are balanced, and that the position of this minimum depends on the dimensional component. This minimum decreases and shifts toward more complicated Kolmogorov models (with a larger number of parameters) only when the accuracy of the measurements is increased, i.e., when the dimensional component is reduced. Conversely, for less accurate measurements the minimum increases and shifts toward simpler models (with fewer parameters).
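The existence of an optimum model complexity can be illustrated with a generic cross-validation experiment. The sketch below is not the MMK-stat algorithm of Ref. 15: it assumes ordinary least squares polynomials, a leave-one-out scheme, and the mean absolute prediction error as a stand-in for the AMEI. The data are synthetic and hypothetical, and the names `fit_poly` and `loo_mean_abs_error` are introduced only for this illustration.

```python
import random


def fit_poly(xs, ys, degree):
    """Least squares polynomial coefficients (lowest power first)."""
    n = degree + 1
    # Normal equations A c = b with A[i][j] = sum x^(i+j), b[i] = sum y x^i
    a = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, n):
            factor = a[row][col] / a[col][col]
            for k in range(col, n):
                a[row][k] -= factor * a[col][k]
            b[row] -= factor * b[col]
    # Back substitution
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(a[i][k] * coeffs[k] for k in range(i + 1, n))
        coeffs[i] = (b[i] - tail) / a[i][i]
    return coeffs


def loo_mean_abs_error(xs, ys, degree):
    """Mean absolute error of predicting each point from all the others."""
    errors = []
    for i in range(len(xs)):
        coeffs = fit_poly(xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:], degree)
        predicted = sum(c * xs[i] ** k for k, c in enumerate(coeffs))
        errors.append(abs(ys[i] - predicted))
    return sum(errors) / len(errors)


# Hypothetical synthetic data: a quadratic trend plus Gaussian noise.
rng = random.Random(0)
zs = [0.05 * i for i in range(1, 21)]
ds = [4000.0 * z + 2300.0 * z * z + rng.gauss(0.0, 150.0) for z in zs]

errors = {k: loo_mean_abs_error(zs, ds, k) for k in range(1, 5)}
best_degree = min(errors, key=errors.get)
for k, e in errors.items():
    print(f"degree {k}: leave-one-out mean |error| = {e:.1f}")
print("selected degree:", best_degree)
```

Changing the noise level in `rng.gauss` is a simple way to explore how the selected degree responds to measurement accuracy in this toy setting.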

Special cases with estimates of the fundamental cosmological constants have the well known general “statistical” causes:

- “confusion” between confidence and tolerance intervals;

- postulating a “normal” probability distribution law;

- breakdown of the condition for a stationary statistical measurement data series owing to a trend with respect to a controlled factor or a correlation in uncontrolled factors, including multicollinearity;

- breakdown of the condition of homoscedasticity (uniformity of dispersions) for regression analysis models when data from different sources are combined, “superaccuracy” in the dispersion weighted average, and an “increase” in accuracy with increasing sample size when the data are statistically nonuniform.

All of these difficulties in measurement calibration problems stem from the lack of a test of the conditions for applying probabilistic-statistical methods [22, 26].
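The “superaccuracy” of the dispersion weighted average is easy to reproduce numerically. The sketch below combines two of the H0 estimates quoted above (73.24 ± 1.74 km/s/Mpc [4] and 67.2 ± 0.7 km/s/Mpc [5]) with inverse-variance weights and then checks their mutual consistency with a chi-square statistic. Treating the quoted uncertainties as independent Gaussian standard deviations is an assumption of the illustration, and the function name `weighted_mean` is hypothetical.

```python
import math


def weighted_mean(values, sigmas):
    """Inverse-variance weighted mean, its formal sigma, and chi-square."""
    weights = [1.0 / s ** 2 for s in sigmas]
    total = sum(weights)
    mean = sum(w * v for w, v in zip(weights, values)) / total
    sigma_mean = math.sqrt(1.0 / total)
    chi2 = sum(w * (v - mean) ** 2 for w, v in zip(weights, values))
    return mean, sigma_mean, chi2


h0_values = [73.24, 67.2]   # km/s/Mpc, as quoted in the text
h0_sigmas = [1.74, 0.7]     # km/s/Mpc, treated here as Gaussian sigmas

mean, sigma_mean, chi2 = weighted_mean(h0_values, h0_sigmas)
CHI2_CRIT_95_DOF1 = 3.841   # tabulated 95% point, chi-square with 1 d.o.f.

print(f"weighted mean  = {mean:.2f} km/s/Mpc")
print(f"formal sigma   = {sigma_mean:.2f} km/s/Mpc")
print(f"chi-square     = {chi2:.2f} (critical value {CHI2_CRIT_95_DOF1})")
print("samples consistent:", chi2 <= CHI2_CRIT_95_DOF1)
```

The formal sigma of the combination is smaller than the smaller of the two input sigmas, although the chi-square statistic exceeds the critical value, i.e., the inputs are not statistically uniform and the apparent gain in accuracy is not justified.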

The cosmological distance scale and mathematical statistics. The problems of verifying the conditions for applicability of statistical methods, and not just in cosmology, have been well known since the time of the discussion between R. Fisher and A. Eddington [2, 22]. An unexpected acknowledgement of the existence of these problems during identification of the Friedmann–Robertson–Walker model appeared in B. Schmidt’s Nobel lecture: the High-Z SN Search Team and Supernova Cosmology Project “were both grappling with how to deal with these statistical issues – it wasn’t that they hadn’t been solved by science, it was just that we were in new territory for us ... in 1996, none of us had ever seen this technique used before in astronomy” [27]. Yet a method of this sort was proposed in 1934 by A. Aitken: a least squares method for correlated observational data with different dispersions. The first use of a least squares method in astronomy was by A. Legendre for determining the orbits of comets in 1805.

Increasing the accuracy of the cosmological distance scale is directly related to the problem of calibrating it with respect to standards employing “standard samples” of luminosity – type SN Ia supernovae at the brightness maximum with known red shifts.

We recall that for calibration of means of measurement for specified conditions, in the first stage a relationship is established between values of the quantities provided by the standards and the readings from the means of measurement with corresponding accuracy characteristics and in the second stage, a relationship between these readings and the results of the measurements is established [28].

For calibrating the cosmological distance scale, the “standard” values are provided by the luminosity maxima of SN Ia supernovae, while the specified conditions correspond to the physical mechanism of the outburst in the Chandrasekhar model and a photometric distance model. The specific feature of this calibration problem is that the relationships for the first stage are the empirical luminosity functions of the supernovae as functions of time for determining the brightness maximum, while the relationship for the second stage is the Friedmann–Robertson–Walker model as an isotropic function of red shift and the free parameters. Both relationships are ultimately the result of statistical parametric identification without testing of the nonparametric hypothesis regarding the form of the distribution of the deviations from the position characteristic of the assumed model. These deviations are usually assumed to follow a normal distribution.

Since the result of calculating the photometric distance from the measured red shift in the spectrum of an SN Ia supernova or “parent” galaxy is often referred to as the result of the measurement, this “insignificant” incorrectness in the terminology “hides” the inadequacy of the formulas used for these calculations. Indeed, an instrumental aspect of the problem shows up first in an analysis of the error budget of this kind of “measurements,” while the errors in the corrections are associated to a substantial extent with inadequacy errors and untested hypotheses which should have supported the results of the parametric identification.

Of course, far from all supernovae can serve as “standard candles.” They must be carefully selected spectrally and “certified” as standards by constructing the luminosity function. Supernova outbursts, however, are not always predictable and, despite the extensive catalogs of these objects, by no means all supernovae are “certified.” This requires extremely detailed work on refinement of photometric distances by extrapolating an empirical model of brightness attenuation based on the limited sample of photometric data at the time of the luminosity maximum [3].

The problem originated in the χ² method proposed by A. Riess [27] as “a weighted least squares method,” which involves taking the logarithm of a likelihood function in the form of a product of Gaussian distributions with different dispersions. The problem is not so much that other distributions of the deviations of the astrophysical estimates from the interpreted models are more plausible, but rather that their position characteristics differ and the distributions are truncated, as in a truncated Laplace distribution. Nor is it that the dispersion of the dispersion weighted mean in the least squares method is smaller than the smallest dispersion among the components, even though the heteroscedasticity of the components signifies statistical nonuniformity. Declared “percentage” estimates of the accuracy of the extragalactic distance scale, such as 2.4%, are actually estimates of the accuracy of a regression curve or position characteristic, rather than of the model as a whole with the distribution of its residuals, for which (P, γ) statistical tolerance intervals are used [29].

As an example, Ref. 30 gives the limits of 95% confidence intervals for the regression curve of a Hubble diagram with respect to the photometric distance; these characterize the error in a statistical estimate of the position characteristic of the random function DL(z) and clearly do not contain 95% of the sample of “pure” supernovae. For that, it is necessary to construct broader, tolerance boundaries for the same confidence coefficient. And if a “cross” is now drawn at some point on the regression curve, centered on that curve, the tolerance limits will indicate the spread intervals of the data for DL and z. Then it is immediately clear that the fraction of supernovae with the same red shifts will be considerably greater with a reduction in the slope of the diagram, i.e., as the red shift increases. But for z = 1.00+0.10/–0.08, based on the red shift the uncertainty interval of the distance modulus for “confidence intervals of 95%” will be 44.07+0.47/–0.60 (+1.05/–1.34%) and for z = 1.4, it will exceed ±4.5%. For the tolerance limits, the uncertainty interval will be “slightly” wider. This is a fundamental point; consequently, the cosmological distance scale based on red shift still does not have the status of a metric. Ultimately, the minimum AMEI criterion in the calibration problem has made it possible to proceed from parametric to structural-parametric identification.
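The difference between an interval for the position characteristic and an interval for the data themselves can be seen in a minimal one-dimensional sketch. It assumes a synthetic Gaussian sample (not supernova data) and uses the normal quantile for both intervals. A true (P, γ) tolerance interval in the sense of Ref. 29 would use a tolerance factor somewhat larger than this quantile, so the “spread” interval below is only a lower bound on its width.

```python
import math
import random
import statistics

# Hypothetical sample of residuals about a fitted curve (unit-variance Gaussian).
rng = random.Random(1)
sample = [rng.gauss(0.0, 1.0) for _ in range(200)]

n = len(sample)
mean = statistics.fmean(sample)
s = statistics.stdev(sample)
z95 = statistics.NormalDist().inv_cdf(0.975)  # two-sided 95% normal quantile

ci_half = z95 * s / math.sqrt(n)   # confidence interval for the mean
spread_half = z95 * s              # interval describing the data spread


def fraction_inside(data, center, half_width):
    """Fraction of the data lying within center +/- half_width."""
    return sum(abs(x - center) <= half_width for x in data) / len(data)


frac_ci = fraction_inside(sample, mean, ci_half)
frac_spread = fraction_inside(sample, mean, spread_half)

print(f"95% confidence half-width for the mean: {ci_half:.3f}")
print(f"95% spread half-width for the data:     {spread_half:.3f}")
print(f"fraction of data inside the confidence interval: {frac_ci:.2f}")
print(f"fraction of data inside the spread interval:     {frac_spread:.2f}")
```

The confidence interval narrows as the sample grows, while the spread interval does not, which is why a narrow confidence band around a regression curve says little about where individual objects fall.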

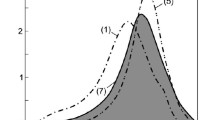

The accuracy of the model of the scale and the size of the sample of data on type SN Ia supernovae. An analysis [25] according to Ref. 15 of the combined data from Refs. 3 and 17 on SN Ia supernovae used to detect “the accelerated expansion of the universe” shows that representing the Friedmann–Robertson–Walker model by the 3rd order Taylor expansion (1) is not optimal in terms of accuracy according to the minimum AMEI criterion [15]. But a return to the isotropic model (2) is impossible for the same reasons. In this regard, the minimum AMEI criterion is extremely stringent. An anisotropic model of lower order in the galactic coordinates (l, b) for the photometric distance (see Notes), given by

was more accurate according to this criterion.

Models (4) correspond to an AMEI of \( {\overline{\upvarepsilon}}_2^{\left[2\right]} \) = 249.81485 Mpc, as well as the parameters H0 = 61.43289962 km/s/Mpc and q0 = –0.1676786792. Models (4) are the result of structural-parametric identification with respect to the criterion of minimal inadequacy error [15], while the other models associated with the data of Table 1 are results of parametric identification alone. We emphasize that the model with maximum complexity among all the models mentioned above is an expansion of the Friedmann–Robertson–Walker model in a multivariate Taylor series.

It is important that the more complicated version of model (2) adopted in Ref. 4 led to a higher inadequacy error owing to the inclusion of 3rd order Taylor series terms. In addition, the doubt expressed in Ref. 17 about the possibility of increasing the estimates H0 = 65.2 km/s/Mpc for ΩΛ = 0.76 [3] and H0 = 63.0 km/s/Mpc for ΩΛ = 0.72 [17] by 10% to H0 = 70.0 km/s/Mpc is confirmed in Ref. 25. The doubt arises because the “age of the universe” (\( {H}_0^{-1} \) > 13 billion years) is consistent with the “age” of old stars in globular clusters only for the Hubble constant H0 < 70.0 km/s/Mpc. In Ref. 5, the Hubble constant is included in the functional χ² for the estimate and in Ref. 17 the photometric distance is determined independently of H0.

We recall that the data on supernovae [3, 17] used to derive model (1) consist of sample sizes N = 27+10 [3] and N = 42 [17]. Each element of the samples required special handling: selection in terms of type, recovery of the luminosity curve, and an estimate of the brightness at the maximum and of the errors in the “luminosity standard,” as well as an estimate of the inadequacy errors for the theory of the assumed mechanism of the outburst and testing of the data for statistical uniformity (neither of which was done). In this case, we should consider not just statistical, but, strictly speaking, compositional uniformity [15]. Table 2 lists the results of this kind of testing with respect to the condition of “minimum AMEI of the model for combining samples less than the total AMEI of the components” by the MMKMNK algorithm [15] based on a maximum complexity model with K = 3 for different combinations of samples: 27 SN Ia with z = 0.00834–0.1245 and 10 SN Ia with z = 0.30–0.97 [3]; 42 SN Ia with z = 0.172–0.830 [17]; and 33 SN Ia with z = 0.010–1.390 [30]. The brackets {·} denote samples that have been combined or separated for hypothesis testing. The distinctive feature of the test is the indicated structural elements of model (4), which make it possible to determine the cosmological parameters q0 and H0: θ001 is the parameter for the term in an expansion containing only the variable z; θ002 is the parameter for the term in an expansion containing only the variable z².

We note a number of anisotropy parameters that are not related to the variable z and represent the zero-point; these serve more as an index of the statistical (compositional) nonuniformity of the data: θ000, the free term in the expansion; θ..0(l, b), anisotropy parameters unrelated to the variable z but related to the variables l and b at nonzero powers. Here θ..3 z³ is the parameter for the term in z³.
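How the two z-only coefficients determine the cosmological parameters can be sketched as follows. The sketch assumes that the z-dependent part of the fitted model has the Heckmann form D_L ≈ θ001·z + θ002·z² with θ001 = c/H0 and θ002 = (c/H0)(1 – q0)/2, and that distances are in Mpc. The function names are hypothetical, and the θ values are back-computed here from the H0 and q0 quoted for models (4) rather than taken from the source tables.

```python
C_KM_S = 299792.458  # speed of light, km/s


def cosmology_from_theta(theta_001, theta_002):
    """Recover (H0, q0) from the z and z^2 coefficients (Mpc units)."""
    h0 = C_KM_S / theta_001                  # km/s/Mpc
    q0 = 1.0 - 2.0 * theta_002 / theta_001   # dimensionless
    return h0, q0


def theta_from_cosmology(h0, q0):
    """Inverse mapping: coefficients implied by given H0 and q0."""
    theta_001 = C_KM_S / h0
    theta_002 = 0.5 * (1.0 - q0) * theta_001
    return theta_001, theta_002


# Round trip with the parameter values quoted in the text for models (4).
theta_001, theta_002 = theta_from_cosmology(61.43289962, -0.1676786792)
h0, q0 = cosmology_from_theta(theta_001, theta_002)

print(f"theta_001 = {theta_001:.3f} Mpc, theta_002 = {theta_002:.3f} Mpc")
print(f"H0 = {h0:.8f} km/s/Mpc, q0 = {q0:.10f}")
```

Because q0 depends on the ratio θ002/θ001, any zero-point or anisotropy term absorbed into these coefficients propagates directly into the inferred deceleration parameter.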

An analysis of the data of Table 2 showed the following: all the listed samples and their combinations do not form a compositionally uniform set, since any combination leads to an increase in the total AMEI. This is (primarily) related to the unbalanced and random character of the plan for forming the samples because of the unpredictability of the times at which the supernovae appear. The position characteristics of the DL(z) dependence for the boundary structure did not reach the maximum complexity model (4) with K = 3, except for the data of Refs. 3 and 17. In two cases the zero-point is compensated:

The full combination of the data is described by the model

There may be no “impasse in cosmology.” Indeed, large values of H0 are obtained from type SN Ia supernovae for red shifts of z ≈ 1 or less, and smaller values are obtained from the microwave background radiation corresponding to the boundaries of the observable part of the universe and very large red shifts.

An idea of an answer to the question of the discrepancy in the estimates of H0 was indicated in Ref. 31 and involved “direct measurement” of H(z) based on the derivatives (Δz/Δt). The corresponding diverging estimates of H0 are given in Ref. 17, and the competing hypothesis posits a “discrepancy” in the H(z) dependence. Thus, it is possible to avoid dramatizing the situation with the Hubble constant, although the discrepancies in estimates of the fundamental constant of gravitation and the Hubble constant are related to one another in the model for the cosmological distance scale.

Conclusion. Testing for compositional uniformity of the data of [3, 17, 30] with respect to a structured expansion in a three-dimensional Taylor series [15, 25] for the Friedmann–Robertson–Walker model is a more rigorous test than one with a structured expansion in a one-dimensional Taylor series [23]. The main conclusion that follows thus far from calibrating the distance scale with SN Ia supernovae is that this is yet another argument in favor of the statement that the cosmological distance scale is not metric. This scale is an analog of the “standards method” [28] based on indirect measurements [15], which only supplements the estimate of the leader of the HST KP. Nevertheless, these calibration approaches are also useful in other problems.

We recall that the basic metrological characteristic of any means of measurement besides a primary standard that establishes a measurement scale for a physical quantity is a function of its error [32–34]. In accordance with the classification of identification measurement problems, distinctions are made between statistical and dynamic, and individual and type models of the errors of a means of measurement as functions or operators [15]. In the statistical case, the error function of a means of measurement consists of a systematic component (position characteristic) and a multivariate probability distribution (spread characteristic), which represents a combination (convolution or composition) [35] of random and unexcluded systematic components. Indeed, a more successful name for these components is “observed” and “unobserved,” which corresponds to their sources in a measurement problem.

If verification, calibration, gradation, and testing of means of measurement can be regarded as varieties of control and defining tests, when they are conducted under laboratory (normal) conditions the error function can be represented as a function of a single argument – the measured quantity. However, during research tests, including in accelerated tests and tests for confirming the type of a means of measurement, the error function can also become a function of influencing quantities. And in this case, the method described here for analyzing the cosmic distance scale based on multivariate models in the form of structured Taylor series in a scheme with crossover observation of the inadequacy error [15] turns out to be useful. And the more so, since for many means of measurement within the range of the measurements, the systematic component of the error function makes at least one or two full oscillations and is described by a trigonometric function or a polynomial of no higher than 3rd order [36].

Notes

The protocol form (without rounding) is assumed for the estimates in this article. The accuracy characteristic for the models is taken to be the AMEI \( {\overline{\upvarepsilon}}_{\vartheta}^{\left[s\right]} \), where ϑ is the binary code for the model structure or the exponent on the highest power of the arguments in the Taylor series (MMS); [s] is the index for the structural-parametric identification algorithm in the scheme for overlapping observation of the inadequacy error with s = 1 corresponding to MMKMEDS and s = 2, to MMKMNK [12].

References

1. K. Lang, Astrophysical Formulas. Part 2 [Russian translation], Mir, Moscow (1978).

2. S. F. Levin, Optimal Interpolation Filtration of Statistical Characteristics of Random Functions in a Deterministic Version of the Monte-Carlo Method and the Red Shift Law, AN SSSR, NSK, Moscow (1980).

3. A. G. Riess et al., “Observational evidence from supernovae for an accelerating universe and a cosmological constant,” Astron. J., 116, 1009–1038 (1998).

4. A. G. Riess et al., “A 2.4% determination of the local value of the Hubble constant,” Preprint Astrophys. J., http://arXiv:1604.01424v3 [astro-ph.CO] 9 Jun 2016, acc. Feb. 24, 2017.

5. Planck Collaboration, “Planck intermediate results. XLVI. Reduction of large-scale systematic effects in HFI polarization maps and estimation of the reionization optical depth,” Astron. & Astrophys. Manuscr., http://arXiv:1605.02985v2 [astro-ph.CO] 26 May 2016, acc. Dec. 31, 2017.

6. S. Alam et al., “The clustering of galaxies in the completed SDSS-III Baryon Oscillation Spectroscopic Survey: cosmological analysis of the DR12 galaxy sample,” http://arXiv:1607.03155v1 [astro-ph.CO] 11 Jul 2016, acc. Feb. 18, 2017.

7. M. Moresco et al., “A 6% measurement of the Hubble parameter at z ~ 0.45: direct evidence of the epoch of cosmic re-acceleration,” http://arXiv:1601.01701v2 [astro-ph.CO] 2 May 2016, acc. March 24, 2018.

8. R. L. Beaton, W. L. Freedman, B. F. Madore, et al., “The Carnegie-Chicago Hubble program. I. An independent approach to the extragalactic distance scale using only population II distance indicators,” http://arXiv:1604.01788v3 [astro-ph.CO] 11 Nov 2016, acc. Aug. 10, 2017.

9. W. L. Freedman, “Cosmology at a crossroads: Tension with the Hubble constant,” http://arXiv.org:1706.02739 13 Jul 2017, acc. Dec. 31, 2017.

10. S. F. Levin, “Identification of interpreting models in general relativity and cosmology,” in: Physical Interpretation of Relativity Theory: Proc. Int. Sci. Meeting PIRT-2003, Moscow, June 30–July 3, 2003, Coda, Moscow, Liverpool, Sunderland (2003), pp. 72–81.

11. G. Feldman and R. Cousins, “Unified approach to the classical statistical analysis of small signals,” Phys. Rev. D, 57, No. 7, 3873–3889 (1998).

12. G. Rosi, F. Sorrentino, L. Cacciapuoti, et al., “Precision measurement of the Newtonian gravitational constant using cold atoms,” Nature, 510, 518–521 (2014).

13. T. Quinn, H. Parks, C. Speake, and R. Davis, “Improved determination of G using two methods,” Phys. Rev. Lett., 111, 101102 (2013).

14. W. L. Freedman, B. F. Madore, B. K. Gibson, et al., “Final results from the Hubble space telescope key project to measure the Hubble constant,” Astrophys. J., 553, 47–72 (2001).

15. R 50.2.004-2000, GSI. Determination of the Characteristics of Mathematical Models of Relationships between Physical Quantities during Solution of Measurement Problems. Basic Assumptions.

16. W. L. Freedman, B. F. Madore, V. Scowcroft, et al., “Carnegie Hubble program: a mid-infrared calibration of the Hubble constant,” Astrophys. J., 758:24, Oct. 10 (2012).

17. O. V. Verkhodanov, Yu. N. Pariiskii, and A. A. Starobinskii, “Determination of ΩΛ and H0 from photometric data on radio galaxies,” Byull. SAO RAN, 58, 5–16 (2005).

18. S. Perlmutter et al., “Measurements of Ω and Λ from 42 high-redshift supernovae,” Astrophys. J., 517, 565–586 (1999).

19. Planck Collaboration, “Planck 2015 results. XIII. Cosmological parameters,” Astron. & Astrophys., 594, A13 (2016).

20. M. Visser, “Jerk, snap, and the cosmological equation of state,” http://arXiv:gr-qc/0309109v4 31 Mar 2004, acc. April 8, 2017.

21. O. Heckmann, Theorien der Kosmologie, Springer, Berlin (1942).

22. S. F. Levin and A. P. Blinov, “Scientific-methodological support for guaranteed solution of metrological problems by probabilistic-statistical methods,” Izmer. Tekhn., No. 12, 5–8 (1988).

23. S. F. Levin, “The measurement problem of identifying red-shift anisotropy,” Metrologiya, No. 5, 3–21 (2010).

24. S. F. Levin, “Philosophical problems and statistical methods in fundamental metrology,” Metafizika, No. 3 (5), 89–118 (2012).

25. S. F. Levin, “Cosmological distance scale. Part 6. Statistical anisotropy of red shift,” Izmer. Tekhn., No. 5, 3–6 (2017).

26. S. F. Levin, “Statistical methods for the theory of measurement problems in cosmology,” Yad. Fiz. Inzhinir., 4, No. 9–10, 926–932 (2013).

27. B. P. Schmidt, “Accelerated expansion of the universe based on observations of distant supernovae,” Usp. Fiz. Nauk, 183, No. 10, 1078–1089 (2013).

28. RMG 29-2013, GSI. Metrology. Basic Terminology and Definitions.

29. GOST R ISO 16269-6-2005, Statistical Methods. Statistical Representation of Data. Definition of Statistical Tolerance Intervals.

30. M. V. Pruzhinskaya, Supernova Stars, Gamma-Bursts, and the Accelerated Expansion of the Universe: Auth. Abstr. Candid. Dissert. in Phys.-Math. Sci., Lomonosov Moscow State University, Moscow (2014).

31. R. Jimenez and A. Loeb, “Constraining cosmological parameters based on relative galaxy ages,” Astrophys. J., 573, 37–42 (2002).

32. S. F. Levin and B. S. Migachev, “The problem of selecting points for measurement monitoring during testing of means of measurement,” Izmer. Tekhn., No. 9, 69–72 (1998).

33. S. F. Levin, “The measurement problem of identifying the error function,” Zakonodat. Prikl. Metrol., No. 4, 27–33 (2016).

34. S. F. Levin, “The measurement problem of verifying the agreement between means of measurement and established specifications,” Kontr.-Izmer. Prib. Sist., No. 6, 27–33 (2016).

35. GOST 8.009–84, GSI. Normalized Metrological Characteristics of Means of Measurement.

36. MI 188–86, GSI. Means of Measurement. Establishing Values of the Parameters of Verification Methods.


Translated from Izmeritel’naya Tekhnika, No. 11, pp. 15–21, November, 2018.


Levin, S.F. Cosmological Distance Scale. Part 7. A New Special Case with the Hubble Constant and Anisotropic Models. Meas Tech 61, 1057–1065 (2019). https://doi.org/10.1007/s11018-019-01549-6
