Abstract

Abundant literature has been produced over the last two decades about multiple-point statistics simulation, or MPS. The idea behind MPS is very simple: reproduce patterns from a 2D, or most often a 3D, training image that displays the type of geological heterogeneity deemed to be relevant to the reservoir or field under study, while honoring local data. Replicating an image is a traditional computer science problem. Thus, it should come as no surprise that a growing number of publications on MPS borrow ideas and techniques directly from computer vision and machine learning to improve the reproduction of training patterns. However, quoting Andre Journel, “Geostatistics is not about generating pretty pictures.” Models have a purpose. For example, in oil and gas applications, reservoir models are used to estimate hydrocarbon volumes and book reserves, run flow simulations to forecast hydrocarbon production and ultimate recovery, and make decisions about field development or optimal well drilling locations. Specific key features such as the extent and connectivity of shale barriers may have a major impact on the reservoir performance forecasts and the field development decisions to be made. Those key features that need to be captured in the model, along with the available subsurface data and constraints of the project, should be the primary drivers in selecting the most appropriate modeling techniques and options to obtain reliable results and make sound decisions. In this paper, the practitioners’ point of view is used to evaluate alternative MPS implementations and highlight remaining gaps.


1 Introduction

The day I met Andre Journel for the first time at Stanford University in Spring 1997 changed the course of my life. I was passionate about statistics but had never heard about geostatistics. Andre gave me a quick lecture and told me that, if I was interested in joining his group, there was a very promising, but challenging, PhD topic I could work on: multiple-point statistics, or MPS. With the full support of my future spouse Audrey, who had already been instrumental in my application to Stanford, it did not take me long to accept Andre’s offer.

At that time, traditional two-point variogram-based geostatistics was well established. The theoretical foundations were solid, based on kriging equations (Goovaerts 1997), and an efficient public implementation was available in GSLIB (Deutsch and Journel 1998). However, variogram-based models were unable to reproduce realistic geological features, for example curvilinear features such as channels or lobes, which are quite common in geological environments. Figure 1 illustrates that limitation: the three binary facies models shown in that figure have very similar variograms, yet they display very different patterns, resulting in different linear connectivity profiles. Only the first model can be generated by the conventional variogram-based program SISIM (Deutsch and Journel 1998). The two other models, generated by the object-based programs FLUVSIM and ELLIPSIM (Deutsch and Tran 2002), display features whose descriptions require going beyond two-point correlation moments. Capturing those features calls for inferring and reproducing specific higher-order, multiple-point statistics. Andre Journel had a famous analogy: suppose that you are in the dark and need to guess what object stands in front of you. Palpating that object with only two fingers would make your guess much more uncertain than if you could use all ten fingers to explore the shape of that object.

Fig. 1 Binary facies models with similar variograms: a SISIM model; b ELLIPSIM model; c FLUVSIM model; d–f Indicator variograms along the EW and NS directions, and linear connectivity function for the SISIM (dashed line), ELLIPSIM (thin line), and FLUVSIM (thick line) models; from Strebelle (2000)

That inability of variogram-based geostatistics to model long-range curvilinear features, hence to capture actual geological connectivity, is critical in numerous applications, especially when the geostatistical models are processed through a dynamic flow simulator, for example in hydrocarbon and groundwater reservoir modeling studies.

Moving from two-point to multiple-point geostatistics was a major contribution from Andre prior to retirement. The next section provides a brief history of the emergence of MPS in the geostatistical toolbox. Today, however, a very large number of MPS implementations are available, creating confusion among practitioners. To help modelers choose which implementation to use, Andre’s motto has never been more relevant: “Geostatistics is not about generating pretty pictures.” A geostatistical model has a purpose: it can be estimating the ultimate recovery expected from a hydrocarbon reservoir, or the risk of contamination of an aquifer. The objectives of the study should always drive the choice of tools and workflows used to build the geostatistical models. In this paper, we review a wide range of MPS implementation options: while some address the constraints and priorities of most modeling applications, others, even if they produce prettier pictures, suffer from important limitations that considerably reduce their practical interest.

2 A Brief History of MPS

Once the need for multiple-point statistics to improve the reproduction of connected patterns was identified by Journel and Alabert (1989), the very first challenge was to infer those multiple-point statistics moments that characterize the complex object shapes to be modeled. Inferring a two-point variogram from sparse conditioning data was already arduous; going beyond the variogram was mission impossible. But then came a simple and elegant idea: why not extract multiple-point statistics from an analogous training image that would display those geological features the modeler wants to capture? When I started my PhD in September 1997, the pioneers of that idea had left the campus (Srivastava 2018). All that remained was an early attempt to impose a limited number of multiple-point statistics moments through simulated annealing (Deutsch 1992; Farmer 1992), and, most importantly, the foundational paper from Guardiano and Srivastava (1993) describing the actual first MPS implementation: ENESIM.

ENESIM is very close to SISIM: it is a direct sequential simulation algorithm whereby unsampled locations are simulated along a random path, using initial hard data and previously simulated locations as conditioning data. The difference from SISIM is the inference of the conditional probability distribution at each unsampled location. In SISIM, conditional probabilities are calculated from kriging equations using the variogram model, whereas in ENESIM, they are inferred from the training image by looking for replicates of the conditioning data event (same relative locations and same data values as the conditioning data) and computing the distribution of categorical values from the central locations of those training replicates. Then, in ENESIM just like in SISIM, a simulated value is drawn by Monte-Carlo from the resulting conditional cumulative distribution function and assigned to the location being simulated. However, inferring the local conditional probabilities in ENESIM required scanning the full training image again every time a new unsampled location was visited, which made the program extremely slow and nearly impossible to use in practice.
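The inference step described above can be illustrated with a minimal Python sketch. It is not the ENESIM code: the function names, the 2D list-of-lists training image, and the representation of a data event as a dictionary of offsets are all assumptions made for illustration.

```python
import random
from collections import Counter


def scan_replicates(ti, data_event):
    """Scan the whole training image for replicates of a data event.

    ti         : 2D list of facies codes (hypothetical training image)
    data_event : dict {(di, dj): facies} giving the value observed at each
                 offset from the node being simulated
    Returns a Counter of the central values found over all replicates.
    """
    n_rows, n_cols = len(ti), len(ti[0])
    counts = Counter()
    for i in range(n_rows):
        for j in range(n_cols):
            for (di, dj), value in data_event.items():
                ii, jj = i + di, j + dj
                inside = 0 <= ii < n_rows and 0 <= jj < n_cols
                if not inside or ti[ii][jj] != value:
                    break  # this location is not a replicate
            else:
                counts[ti[i][j]] += 1  # all conditioning values matched
    return counts


def draw_facies(counts, rng):
    """Monte-Carlo draw from the distribution defined by replicate counts."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no replicate found for this data event")
    u = rng.random() * total
    cumulative = 0
    for facies in sorted(counts):
        cumulative += counts[facies]
        if u < cumulative:
            return facies
    return max(counts)  # guard against floating-point edge cases


# Toy training image: one horizontal channel (facies 1) in a background (0).
training_image = [
    [0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
left_is_channel = {(0, -1): 1}  # the node to the left is channel
counts = scan_replicates(training_image, left_is_channel)
simulated = draw_facies(counts, random.Random(0))
```

Because `scan_replicates` visits every training node for every simulated node, the cost grows with the product of the simulation grid size and the training image size, which is the bottleneck mentioned above.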

Solving the computational limitation of ENESIM required several unforgettable discussions with Andre, including dinners at his place in Sunnyvale where the excellent wine Andre was serving, and the poetry he was sharing, surely helped us to be creative. In Fall 2000, I eventually defended my thesis, proposing an alternative, much less computationally intensive, MPS implementation: SNESIM. The idea, again simple, as Andre liked them, was to introduce search trees to store all multiple-point statistics from the training image for a data template corresponding to a given data search window (Strebelle 2000). Prior to the simulation, the training image is scanned, only once, with that data template, and all the training data events are stored in the search tree. During the simulation, at each unsampled location, the conditional probabilities can be quickly retrieved from that search tree, which made SNESIM much faster than ENESIM. Because the size of the data template is limited for memory and speed reasons, a multiple-grid approach, consisting of simulating nested, increasingly finer, grids, and rescaling the data template proportionally to the node spacing of the current nested grid, was introduced to capture long-range structures. A few years later, the SNESIM implementation was further improved by optimizing the data template definition and the multiple-grid simulation approach (Strebelle and Cavelius 2014).
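A simplified sketch of the search-tree idea follows, in Python. It is an illustration under stated assumptions rather than the SNESIM data structure: the nested-dictionary layout, the function names, and the handling of image borders (only the leading part of a data event that fits inside the image is stored) are choices made here for brevity.

```python
def new_node(n_facies):
    """Tree node: central-value counts plus one child per neighbor value."""
    return {"counts": [0] * n_facies, "children": {}}


def build_search_tree(ti, template, n_facies):
    """Scan the training image once and store all data events in a tree.

    template : ordered list of (di, dj) offsets; tree level k corresponds
               to the k-th template node.
    """
    root = new_node(n_facies)
    n_rows, n_cols = len(ti), len(ti[0])
    for i in range(n_rows):
        for j in range(n_cols):
            center = ti[i][j]
            node = root
            node["counts"][center] += 1
            for di, dj in template:
                ii, jj = i + di, j + dj
                if not (0 <= ii < n_rows and 0 <= jj < n_cols):
                    break  # stop at the first template node outside the image
                node = node["children"].setdefault(ti[ii][jj], new_node(n_facies))
                node["counts"][center] += 1
    return root


def retrieve_counts(tree, template, data_event, n_facies):
    """Retrieve central-value counts for a possibly partial data event.

    data_event : dict {(di, dj): facies} for the informed template nodes;
                 uninformed nodes are summed over all stored branches.
    """
    informed = [k for k, offset in enumerate(template) if offset in data_event]
    if not informed:
        return list(tree["counts"])  # marginal counts
    last = informed[-1]
    totals = [0] * n_facies

    def visit(node, level):
        if level > last:  # every informed node has been matched
            for f in range(n_facies):
                totals[f] += node["counts"][f]
            return
        offset = template[level]
        if offset in data_event:
            child = node["children"].get(data_event[offset])
            if child is not None:
                visit(child, level + 1)
        else:
            for child in node["children"].values():
                visit(child, level + 1)

    visit(tree, 0)
    return totals


training_image = [
    [0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
template = [(0, -1), (0, 1), (-1, 0), (1, 0)]  # hypothetical 4-node template
tree = build_search_tree(training_image, template, n_facies=2)
counts_left_channel = retrieve_counts(tree, template, {(0, -1): 1}, 2)
```

The training image is read only in `build_search_tree`; each call to `retrieve_counts` walks the tree instead of rescanning the image, which is the source of the speed-up over a brute-force scan.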

The use of multiple-grid simulation is critical in SNESIM to capture long-range connectivity patterns from the training image. However, nested grids are simulated independently, one after another. To maintain the full consistency between small and large-scale simulated patterns, the algorithm should remember what areas of the training image contain the multiple-point statistics moments that were used to simulate the large-scale patterns, and then use only those areas to infer multiple-point statistics moments needed to simulate small-scale patterns. Accounting for the relationship between small- and large-scale patterns could be a subject of future research.

With multiple-point statistics, Andre’s research group, SCRF, entered a new area, borrowing ideas, concepts and algorithms from computer vision and machine learning. Andre embraced that transformation (Journel 2004), encouraging his students to take computer science classes, at the risk of seeing some of them called by the sirens of Silicon Valley.

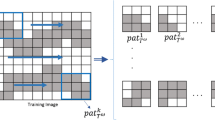

In the last 20 years, many alternative MPS implementations have been proposed. Some of them were initiated or advised by Andre, more particularly the first pattern-based MPS implementations SIMPAT (Arpat and Caers 2007) and FILTERSIM (Zhang et al. 2006; Wu et al. 2008). In pattern-based implementations, instead of simulating one grid cell at a time, a full set of cells is simulated, mimicking a jigsaw approach. Better training image reproduction can typically be achieved using pattern-based programs compared to the pixel-based programs ENESIM and SNESIM, but at the expense of the perfect hard data conditioning allowed by the pixel-based approach.

New MPS implementations focus more and more on training pattern reproduction. The resulting models look prettier and prettier, but they lose sight of the constraints they should honor to be able to answer the questions raised in the study. For example, recent MPS implementations based on machine-learning techniques are often limited to 2D applications or do not even allow any data conditioning. The next three sections review the typical expectations of practitioners regarding geostatistical models and discuss MPS implementation options that fulfil those expectations.

This paper focuses on the use of MPS to simulate categories, more particularly geological facies, which remains today the main MPS application. However, most observations made for categorical MPS implementations, and the conclusions drawn about preferred options, can be easily extended to MPS for continuous variables.

3 Training Images

The training image is the central piece of MPS simulation, and yet, almost 30 years after the advent of MPS, important questions persist about what makes a “good” training image: How large should a training image be? What level of geological detail should it contain? Can training images be non-stationary?

In the early days of MPS, photographs of outcrops, or simple sketches hand-drawn by a geologist, were proposed as potential training images; see Fig. 2a. However, not only do those images require a fair amount of tedious processing before they can be used by MPS simulation algorithms, but, more importantly, they are only two-dimensional, while most geostatistical applications are three-dimensional. Practice has shown over time that unconditional object-based modeling is the most straightforward method to generate training images; see Fig. 2b. Photographs of outcrops and hand-drawn sketches, but also good quality seismic data and geological databases, are particularly useful to set input parameters, such as object shapes, dimensions, and orientation, in object-based modeling programs. Freed from any conditioning, traditional object-based modeling algorithms can be easily extended to incorporate additional geological features, for example channel avulsion or stacking patterns. Finally, object-based training images are easy and fast to generate. Thus, alternative training images can be quickly built to represent the full range of geological uncertainty.

Fig. 2 Different types of training images: a 2D hand-drawn sketch; b Unconditional object-based model; c Process-based model from Willis and Sun (2019)

Object-based models are usually considered as simplistic representations of geological environments, but this turns out to be an advantage for MPS. Indeed, as demonstrated by Emery and Lantuéjoul (2014), to provide a statistically robust distribution of all possible multiple-point statistics patterns, the training image should theoretically have an extremely large size, orders of magnitude larger than typical multi-million cell training images. It is therefore critical to optimize the content of the training image by displaying only those geological features that will have the most important impact on the study objective, for example the continuity of sand channels or shale layers that have a first-order impact on flow performance predictions in hydrocarbon reservoir studies. Through their simplicity, object-based training images reduce the diversity of patterns, allowing MPS simulation to focus on the geological features that really need to be reproduced.

For that same reason, it is important to limit the number of categories to be modeled, as the greater the number of categories, the higher the number of possible multiple-point statistical moments. For example, in hydrocarbon reservoir modeling applications, even if the geologist can provide a very detailed well log interpretation consisting of many different facies, the modeler should try to combine facies with similar petrophysical properties into a small number of classes, 2 to 4 ideally, that capture the main subsurface heterogeneous features.

Compared to object-based modeling, process-based modeling allows generating much more geologically reasonable models; see Fig. 2c from Willis and Sun (2019). However, not only are process-based models computationally intensive, but, as explained above, because of their high level of geologic detail, reproducing patterns from those models by MPS algorithms is also much more challenging. Process-based models are still very useful in MPS because they mimic physical processes and generate physically plausible models. Thus, when mature reservoir analogs are difficult to identify, process-based modeling can generate “digital” analogs. Parameters such as object dimensions or sinuosity can be estimated from those digital analogs to build simpler training images using object-based modeling.

Determining the minimum level of geological detail needed in the training image to provide reliable answers to the questions under study is a complex task. A procycling approach is recommended, whereby the simplest possible training image with a small number of classes, two or three ideally, is used first, and then complexity is added progressively until additional detail does not impact the results or conclusions of the study (e.g. the ultimate recovery estimated from a hydrocarbon reservoir to assess the optimal number of wells needed to develop that reservoir). Another procycling approach can help to optimize the size of the training image by starting from a relatively small training image, consisting of, say, less than one million cells, and increasing the size of the training image until no further impact on the study can be observed.

An important, but unfortunately often discarded, step before running MPS simulation is to check the consistency of the training image with the conditioning data. Different consistency measures have been proposed (Boisvert et al. 2007). The most basic one consists of comparing the variogram computed from the training image with the experimental variogram estimated from the conditioning data.
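The basic variogram check mentioned above can be sketched as follows in Python. This is a minimal illustration: the dictionary representation of gridded values, the single lag direction, and the function names are assumptions, and the level of mismatch that should be considered acceptable is left to the modeler.

```python
def indicator_variogram(values, facies, max_lag):
    """Experimental indicator semivariogram along the j (column) direction.

    values : dict {(i, j): facies code}; works for a full training image or
             for sparse conditioning data located on grid nodes
    Returns one semivariogram value per lag (None when no pair exists).
    """
    gammas = []
    for h in range(1, max_lag + 1):
        squared_sum = 0
        n_pairs = 0
        for (i, j), head in values.items():
            tail = values.get((i, j + h))
            if tail is None:
                continue  # no datum at this lag distance
            squared_sum += ((head == facies) - (tail == facies)) ** 2
            n_pairs += 1
        gammas.append(squared_sum / (2 * n_pairs) if n_pairs else None)
    return gammas


def max_mismatch(gamma_ti, gamma_data):
    """Largest absolute difference over the lags informed in both curves."""
    diffs = [abs(a - b) for a, b in zip(gamma_ti, gamma_data)
             if a is not None and b is not None]
    return max(diffs) if diffs else None


# Hypothetical training image with one sharp contact between two facies.
ti_grid = [[0, 0, 1, 1], [0, 0, 1, 1]]
ti_values = {(i, j): v for i, row in enumerate(ti_grid) for j, v in enumerate(row)}
# Hypothetical conditioning data alternating between facies along one line.
data_values = {(0, 0): 0, (0, 1): 1, (0, 2): 0, (0, 3): 1}

gamma_ti = indicator_variogram(ti_values, facies=1, max_lag=3)
gamma_data = indicator_variogram(data_values, facies=1, max_lag=3)
mismatch = max_mismatch(gamma_ti, gamma_data)
```

In this toy case the data alternate at every node while the training image shows a single contact, so the two curves disagree strongly at short lags, which would flag the training image as inconsistent with the data.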

4 Data Conditioning

In many applications, honoring hard conditioning data is an absolute necessity. For example, in hydrocarbon or groundwater studies, not honoring the facies conditioning data from available well logs leads to facies mismatches at well locations that make flow performance predictions unreliable. Perfect data conditioning has been a strength of conventional variogram-based programs such as Sequential Indicator Simulation (SIS), whereas it has regularly been pointed out as a major limitation of object-based programs. The original MPS implementations ENESIM and SNESIM provide the same rigor as SIS in terms of data conditioning. If no replicate of the conditioning data event can be found in the training image, the furthest away conditioning data are dropped until at least one replicate of the reduced conditioning data event can be found. This could alter the quality of the reproduction of the training patterns, but it is generally the preference of practitioners to honor all conditioning data rather than reproduce patterns from an uncertain, size-limited, training image. In addition, postprocessing techniques have been proposed to identify and re-simulate patterns in the MPS model that are not consistent with the training image (Strebelle and Remy 2004).
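The data-dropping rule described above can be sketched in a few lines of Python. This is an illustrative sketch, not the ENESIM or SNESIM code: the helper names are hypothetical, and measuring "furthest away" with the Euclidean length of the offset is an assumption.

```python
import math
from collections import Counter


def count_replicates(ti, data_event):
    """Count central values over all training replicates of a data event."""
    n_rows, n_cols = len(ti), len(ti[0])
    counts = Counter()
    for i in range(n_rows):
        for j in range(n_cols):
            if all(0 <= i + di < n_rows and 0 <= j + dj < n_cols
                   and ti[i + di][j + dj] == value
                   for (di, dj), value in data_event.items()):
                counts[ti[i][j]] += 1
    return counts


def counts_with_data_dropping(ti, data_event):
    """Drop the furthest conditioning datum until a replicate is found.

    Returns the replicate counts and the reduced data event actually used.
    The loop terminates because the empty data event matches every node of
    a non-empty training image.
    """
    event = dict(data_event)
    while True:
        counts = count_replicates(ti, event)
        if sum(counts.values()) > 0:
            return counts, event
        furthest = max(event, key=lambda offset: math.hypot(*offset))
        del event[furthest]


training_image = [
    [0, 0, 0, 0, 0],
    [1, 1, 1, 1, 1],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
]
# Channel immediately to the left, background three nodes to the right:
# no such configuration exists in the training image.
data_event = {(0, -1): 1, (0, 3): 0}
counts, used_event = counts_with_data_dropping(training_image, data_event)
```

Only the inference of the local probabilities is relaxed here; the conditioning values themselves are never modified, which is why hard data remain exactly honored.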

Pattern-based implementations do not offer that same guarantee of perfect data conditioning. They indeed use a rigid data template, and none of the training patterns corresponding to that template may be consistent with the conditioning data, resulting in potential local mismatches like those observed in object-based modeling. A hybrid solution combining a pixel-based implementation in the vicinity of conditioning data, and a pattern-based implementation in the areas free of conditioning data is however possible (Tahmasebi 2017).

In the last few years, some new implementations based on advanced computer vision techniques have been proposed. They offer fast and efficient training pattern reproduction, for example the implementation based on spatial generative adversarial networks (SGAN) proposed by Laloy et al. (2018). Unfortunately, a lot of those techniques completely ignore any form of conditioning or only offer very limited conditioning capabilities, and one could question the interest that practitioners may find in those solutions.

5 Non-stationary Constraints

Most events in nature are not stationary. There are always spatial constraints influencing the distribution of the properties to be modeled. For example, when modeling depositional facies, spatial constraints may be the source and concentration of sediments, the local topography, and/or the sea level. In most studies, soft data are available to inform the modeler about the presence of such non-stationarity. For example, in subsurface modeling, seismic data can provide an idea about the distribution of sand and shale. In the presence of good quality soft data, a calibration can be performed using the available collocated hard data to estimate facies probabilities at each location (Strebelle 2000). Different methods, such as the tau-model proposed by Journel (2002), can be used to combine such a soft data-derived probability cube with the training image-derived probabilities in pixel-based MPS implementations. In pattern-based implementations, the integration of soft probabilities is less straightforward. As the quality of the soft data becomes poorer, qualitative trends can be interpreted and used as an uncertainty parameter in MPS.
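As an illustration of how a soft data-derived probability can be combined with a training image-derived probability, the sketch below implements the commonly quoted form of the tau-model, x/a = (b/a)^τ1 (c/a)^τ2, where a, b and c are the ratios (1 − p)/p computed from the prior proportion, the training image-derived probability and the soft probability, and the combined probability is 1/(1 + x). The function and parameter names, and the clipping constant `eps`, are assumptions made for this sketch.

```python
def tau_model(prior, p_ti, p_soft, tau_ti=1.0, tau_soft=1.0, eps=1e-6):
    """Combine two conditional probabilities of the same facies.

    prior  : global proportion of the facies
    p_ti   : probability inferred from the training image
    p_soft : probability derived from soft data (e.g. calibrated seismic)
    tau_*  : weights; both equal to 1 gives the permanence-of-ratios case
    """
    def distance(p):
        p = min(max(p, eps), 1.0 - eps)  # avoid division by zero
        return (1.0 - p) / p

    a, b, c = distance(prior), distance(p_ti), distance(p_soft)
    x = a * (b / a) ** tau_ti * (c / a) ** tau_soft
    return 1.0 / (1.0 + x)


# Soft data that are uninformative (equal to the prior) leave the
# training image-derived probability unchanged.
unchanged = tau_model(prior=0.3, p_ti=0.6, p_soft=0.3)
# Two sources that both favor the facies reinforce each other.
reinforced = tau_model(prior=0.5, p_ti=0.8, p_soft=0.8)
```

For more than two facies, one option is to apply the function to each facies in turn and rescale the results so that they sum to one; that rescaling step is an additional assumption, not something prescribed by the text above.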

Even in the absence of soft data, it is often important to generate soft constraints to model the potential spatial distribution of the properties to be simulated by MPS. Such trends can be computed from hard conditioning data using block kriging or derived from the interpretation of the depositional environment. Building a stationary model, without considering any uncertainty about potential trends, is a very strong decision that can lead to the wrong conclusions.

In hydrocarbon or groundwater reservoir modeling, the spatial distribution of petrophysical properties is likely to have a major influence on the flow behavior of the reservoir. Attention should be brought to vertical trends, and more specifically to the presence of extended layers with extreme property values, such as low porosity/low permeability shale layers. Continuous shale layers indeed form flow barriers, isolating reservoir compartments from each other. Identifying these layers can be challenging since the grid layering may not be perfectly consistent with the actual reservoir stratigraphy, and correlative shale data from different wells may not correspond to the same grid layer. Lateral trends away from conditioning data also need special attention due to risky extrapolation effects. Alternative interpretations away from conditioning data control need to be considered, based on experience and analogs.

Developed in the early days of MPS, but still a common practice, the use of one-dimensional vertical proportion curves, two-dimensional horizontal proportion maps, or three-dimensional probability cubes, allows imposing non-stationarity features in MPS models (Harding et al. 2004). Although this is the simplest practice, as it requires only one stationary training image, the problem is ill-posed: in a facies model, local proportions higher than the training image proportions can mean either a local density of facies geobodies higher than the training image, or the local presence of facies geobodies larger than in the training image. In a lot of cases, the modeler does not have the answer to that question, and will let the MPS simulation program provide the answer, most likely something in-between higher density of objects and larger objects.

Two solutions exist, however, for the modeler to control the outcome of the simulation. In one solution, several training images can be generated with different facies proportions, and regions can be defined in the simulation grid to let MPS use the most appropriate training image in each region. In the alternative, a non-stationary training image displaying regions with variable facies proportions can be provided, as well as an auxiliary property that will restrict the selection of training patterns to the specific regions of the training image with similar proportions (Chugunova and Hu 2008; Honarkhah and Caers 2012).

Geometrical constraints corresponding to variable geobody dimensions and/or directions can be easily processed in pixel-based MPS implementations through a simple affinity transform (rotation and/or stretching) of the training image (Strebelle and Zhang 2004). In this case, a two-dimensional or three-dimensional variable azimuth field, or variable object size field, is the only input data needed by the MPS algorithm. That approach can be extended to pattern-based MPS implementations. However, more recent MPS implementations, borrowed from computer vision where non-stationarity is not a concern, do not allow such constraints; this severely limits, once again, the range of applications of those implementations.
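One way to picture the affinity transform is to apply the rotation and stretching to the data template offsets rather than to the training image itself. The Python sketch below does that in 2D. Whether the cited implementation proceeds this way is an assumption, as are the function name, the counter-clockwise angle convention, and the rounding of transformed offsets to the nearest grid node.

```python
import math


def transform_template(template, azimuth_deg, scale_x=1.0, scale_y=1.0):
    """Stretch, then rotate, a list of (dx, dy) template offsets.

    azimuth_deg : local rotation angle, counter-clockwise in degrees
                  (hypothetical convention)
    scale_x/y   : local stretching factors along each axis
    Returns offsets rounded to the nearest grid node.
    """
    theta = math.radians(azimuth_deg)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    transformed = []
    for dx, dy in template:
        sx, sy = dx * scale_x, dy * scale_y
        rx = sx * cos_t - sy * sin_t
        ry = sx * sin_t + sy * cos_t
        transformed.append((round(rx), round(ry)))
    return transformed


base_template = [(1, 0), (-1, 0), (0, 1), (0, -1)]
# Local azimuth of 90 degrees read from a hypothetical azimuth field.
rotated = transform_template(base_template, azimuth_deg=90.0)
# Objects twice as long along x at this location.
stretched = transform_template(base_template, azimuth_deg=0.0, scale_x=2.0)
```

With this kind of approach, a single stationary training image can be reused everywhere, and only the local azimuth and size values change from one simulated node to the next.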

6 Practical Considerations

MPS is a fabulous, still largely unexplored, research territory. While the theory behind variogram-based geostatistics is well established with the kriging equations, MPS is still an experimental field where results largely depend on implementation details rather than theoretical rationale. Because of the inability of variogram-based geostatistics to reproduce realistic geological features and the limitations of object-based approaches regarding hard and soft data conditioning, MPS simulation has become a very attractive intermediary solution for practitioners, and it is now available in most commercial geomodeling programs. As in any other software program, one critical condition for the adoption of a specific MPS implementation by a large population of modelers is the user-friendliness of that implementation and the robustness of the results.

6.1 User-Friendliness Through Default Input Parameters

In most MPS implementations, a data template must be specified by the user, which is similar to the search neighborhood used in variogram-based simulation programs. In the GSLIB library, several input parameters need to be set to define the search neighborhood in sequential simulation programs (Deutsch and Journel 1998): the maximum number of original data, the maximum number of previously simulated nodes, the number of data per octant, and the dimensions and potential anisotropy of the search neighborhood. In most commercial packages, parameter values are automatically estimated (e.g. search neighborhood dimensions derived from variogram ranges) or set by default, and they can be modified by the user only in an expert or advanced mode. Therefore, the practitioners can focus on the inference of the variogram and possible non-stationarity constraints, instead of dealing with obscure parameters whose influence on the final geostatistical realizations is hard to predict and analyze.

The situation is similar for the data template in MPS. Expecting non-expert users to define the size and geometry of that data template only leads to misunderstanding and frustration. In the original pixel-based MPS implementations ENESIM and SNESIM, the conditioning data event is reduced by dropping the furthest conditioning data whenever no replicate of that conditioning data event can be found in the training image. Considering a relatively high template size in SNESIM, say 50 nodes, as a default value is a conservative, safe, choice; increasing that value would slow down the MPS simulation, as more conditioning data would need to be dropped, but it would not fundamentally modify the quality of the reproduction of the training patterns. Furthermore, Strebelle and Cavelius (2014) proposed a method to automatically minimize the size of the data template based on the MPS moments that could be inferred from the training image. The anisotropy of the template also can be automatically inferred from the training image.

Regarding the multiple-grid simulation approach, the number of nested, increasingly finer, grids can be set by the user in SNESIM. On the one hand, an insufficient number of nested grids may prevent MPS models from reproducing large-scale structures from the training image. On the other hand, an excessive number of nested grids requires additional simulation time, but it does not entail any deterioration of the MPS models. Thus, the number of nested grids should be set to a conservative value on the high side. For example, the default value can be simply computed such that the extent of the rescaled data template corresponding to the first, coarsest, grid covers the whole simulation grid. In summary, default values to define the data template and the number of nested grids can be automatically estimated, which allows the user to focus on the fundamental inputs of MPS simulation: the training image and the conditioning constraints. In contrast, pattern-based implementations and other computer-vision-derived implementations, such as the wavelet-decomposition based method proposed by Gloaguen and Dimitrakopoulos (2009), provide results that highly depend on various parameters, more particularly the choice of the data template. The extensive tuning of parameter values required to obtain reasonable results always makes practitioners quite uncomfortable.
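The default rule for the number of nested grids can be sketched as follows in Python. The sketch assumes that the node spacing doubles from one nested grid to the next coarser one (spacing 2^(g−1) on grid g, with g = 1 the finest grid), and that "covering the simulation grid" means the rescaled template spans at least as many nodes as the grid along every axis. Both points, and the function names, are assumptions.

```python
def rescale_template(template, grid_level):
    """Rescale template offsets to the node spacing of a nested grid.

    grid_level : 1 for the finest grid; spacing is 2 ** (grid_level - 1)
    """
    spacing = 2 ** (grid_level - 1)
    return [(di * spacing, dj * spacing) for di, dj in template]


def default_number_of_grids(template_size, grid_size):
    """Smallest number of nested grids whose coarsest template covers the grid.

    template_size : number of template nodes along each axis (finest grid)
    grid_size     : number of simulation grid nodes along each axis
    """
    for t, g in zip(template_size, grid_size):
        if t < 2 and g > 1:
            raise ValueError("a one-node-wide template can never cover the grid")
    n_grids = 1
    # A template with t nodes spans (t - 1) * spacing + 1 nodes once rescaled.
    while any((t - 1) * 2 ** (n_grids - 1) + 1 < g
              for t, g in zip(template_size, grid_size)):
        n_grids += 1
    return n_grids


n_grids = default_number_of_grids(template_size=(5, 5), grid_size=(100, 100))
coarse_template = rescale_template([(0, 1), (-1, 0)], grid_level=3)
```

Since an excessive number of nested grids only costs simulation time, rounding this default up is consistent with the conservative choice recommended above.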

6.2 Target Proportions

A debate exists among researchers working on MPS regarding the need to impose target categorical proportions on MPS models. In SIS, discrepancies between target proportions and simulated proportions are usually accepted, as they correspond to ergodic fluctuations due to the limited size of the simulation grid. In MPS, the simulated proportions highly depend on algorithm details. In applications where volumes are computed from MPS models, those arbitrary fluctuations in the simulated proportions are unacceptable. Target proportions must be honored by the MPS models, using for example the servosystem proposed in SNESIM (Strebelle 2000): the current simulated proportions are computed as the simulation progresses from one unsampled location to the next, and local conditional facies probabilities inferred from the training image are slightly increased to boost the simulation of those facies for which the current simulated proportions are significantly lower than the target proportions.
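A minimal Python sketch of such a servosystem is given below. It uses an additive correction of the form p + μ/(1 − μ) × (target − current), followed by clipping and rescaling; the exact correction factor, the default value of `mu`, and the function name should be read as assumptions rather than as the SNESIM implementation.

```python
def servosystem(probabilities, target, current, mu=0.5):
    """Nudge local facies probabilities toward the target proportions.

    probabilities : conditional probabilities inferred from the training image
    target        : target global proportions
    current       : proportions simulated so far
    mu            : correction strength in [0, 1); 0 means no correction
    """
    if not 0.0 <= mu < 1.0:
        raise ValueError("mu must be in [0, 1)")
    factor = mu / (1.0 - mu)
    corrected = [min(1.0, max(0.0, p + factor * (t - c)))
                 for p, t, c in zip(probabilities, target, current)]
    total = sum(corrected)
    if total == 0.0:
        return list(probabilities)  # fall back to the uncorrected values
    return [p / total for p in corrected]


# Facies 1 is under-represented so far (0.6 simulated vs. 0.7 targeted),
# so its local probability is boosted.
updated = servosystem(probabilities=[0.5, 0.5],
                      target=[0.3, 0.7],
                      current=[0.4, 0.6],
                      mu=0.5)
```

The correction vanishes as the simulated proportions approach the targets, so the training image-derived probabilities dominate whenever the realization is already on track.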

Evaluating the target proportions is a critical step, since it has a direct impact (e.g. hydrocarbon volume) or indirect impact (flow performance forecasts) on the conclusions of the study. That evaluation requires not only checking and correcting for any potential bias in the data locations, but also assessing the uncertainty about those proportions. Many different methods have been proposed for quantifying probabilistic distributions associated with target proportions uncertainty (Haas and Formery 2002; Caumon et al. 2004; Hadavand and Deutsch 2017). Whatever the method selected by the modeler, target facies proportions can be randomly drawn from the resulting probabilistic distribution using Monte-Carlo simulation, and then the servosystem will help match those target facies proportions. Repeating that experiment several times allows building a set of models that span the full facies proportions uncertainty range.
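The Monte-Carlo step can be sketched as below in Python, assuming for illustration that the uncertainty on the proportions is described by a Dirichlet distribution. The concentration parameters `alphas` are hypothetical values, and any other distribution produced by the methods cited above could be sampled instead.

```python
import random


def draw_target_proportions(alphas, rng):
    """Draw one set of facies proportions from a Dirichlet distribution.

    alphas : positive concentration parameters, one per facies; larger
             values mean less uncertainty around alpha / sum(alphas)
    Uses the standard construction from independent gamma variates.
    """
    gammas = [rng.gammavariate(alpha, 1.0) for alpha in alphas]
    total = sum(gammas)
    return [g / total for g in gammas]


rng = random.Random(42)
alphas = [30.0, 50.0, 20.0]  # hypothetical: mean proportions 0.3, 0.5, 0.2
# One set of target proportions per MPS realization to be generated.
targets = [draw_target_proportions(alphas, rng) for _ in range(5)]
```

Each drawn set of proportions would then be passed as the target of one MPS realization, so that the ensemble of realizations reflects the proportion uncertainty.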

Some MPS implementation options make it difficult, however, to impose target proportions. This is the case for MPS implementations using the raster path. The raster path consists of simulating all the unsampled locations along a path cycling through one axis at a time (e.g. x-axis, then y-axis, and finally z-axis) instead of using a traditional random path. By simulating consecutive nodes, the raster path significantly improves the reproduction of training patterns (Daly 2004). While the servosystem works very well with random paths as the current facies proportions are computed from a random set of locations, a bias may exist along a raster path as the current facies proportions are computed from the specific area where the raster path started, which could have locally lower or higher proportions than the global target proportions.
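The bias described above can be reproduced on a toy example. In the Python sketch below, a reference grid with a strong trend stands in for the values being simulated, and the "current proportion" is computed from the first nodes visited along each path. The grid, the function names, and the number of visited nodes are assumptions chosen for illustration.

```python
import random


def raster_path(n_rows, n_cols):
    """Visit nodes row by row, cycling through one axis at a time."""
    return [(i, j) for i in range(n_rows) for j in range(n_cols)]


def random_path(n_rows, n_cols, rng):
    """Visit all nodes once, in random order."""
    path = raster_path(n_rows, n_cols)
    rng.shuffle(path)
    return path


def running_proportion(grid, path, n_visited, facies):
    """Proportion of a facies among the first n_visited nodes of a path."""
    visited = path[:n_visited]
    return sum(1 for i, j in visited if grid[i][j] == facies) / len(visited)


# Toy grid with a trend: facies 1 fills the first five rows only,
# so its global proportion is 0.5.
trend_grid = [[1] * 10 if i < 5 else [0] * 10 for i in range(10)]

raster_estimate = running_proportion(trend_grid, raster_path(10, 10), 25, 1)
random_estimate = running_proportion(
    trend_grid, random_path(10, 10, random.Random(1)), 25, 1)
```

After 25 visited nodes the raster path has only seen the area where it started, so its running proportion is 1.0 against a global value of 0.5, whereas the random path samples the whole grid and gives an estimate that fluctuates around the global value.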

7 Conclusions

Multiple-point statistics simulation has opened a new area in geostatistics, an area where connections to computer vision were identified very early and continue to be investigated today. It is important, however, to understand the specificities of geostatistical applications compared to computer vision, and make sure that the techniques borrowed from computer vision are consistent with the expectations of users of geostatistical tools. Hard and soft conditioning, as well as non-stationarity features, are fundamental components of geostatistical modeling that should never be overlooked. MPS implementations that have trouble honoring conditioning data or non-stationary constraints are likely to be ignored by practitioners. User-friendliness, which primarily consists of minimizing the number of input parameters and providing robust results that do not require extensive fine tuning, is critical for practitioners. Close reproduction of training image features becomes important only when those features play a critical role in the results and conclusions of the study. Fit-for-purpose options may be worth developing for common applications of MPS simulation. For example, MPS has been quite extensively used for the simulation of channel reservoirs, where long-range channel connectivity is a critical factor impacting the reservoir flow behavior. Yet, none of the numerous MPS implementations developed to date guarantees the perfect connectivity of the simulated channels as displayed in the training images in the resulting MPS models, not even in two-dimensional models. The solution may be directly part of the simulation process, or it could consist of a customized post-processing. More generally, a vast avenue of research exists in identifying the multiple-point statistics moments that are important to filter from the training image, and to make sure that those moments are indeed reproduced in the MPS models. This would be a major step towards generating useful images to make the right project decisions instead of just pretty pictures.

References

Arpat B, Caers J (2007) Stochastic simulation with patterns. Math Geol 39:177–203

Boisvert J, Pyrcz M, Deutsch C (2007) Multiple-point statistics for training image selection. Nat Resour Res 16(4):313–321

Caumon G, Strebelle S, Caers J, Journel A (2004) Assessment of global uncertainty for early appraisal of hydrocarbon fields. SPE paper 89943

Chugunova TL, Hu LY (2008) Multiple-point simulations constrained by continuous auxiliary data. Math Geosci 40:133–146

Daly C (2004) Higher order models using entropy, Markov random fields and sequential simulation. In: Leuangthong O, Deutsch C (eds) Proceeding of the 2004 international geostatistics congress. Springer, Banff, pp 215–224

Deutsch C (1992) Annealing Techniques Applied to Reservoir Modeling and the Integration of Geological and Engineering (Well Test) Data. Dissertation, Stanford University

Deutsch C, Journel A (1998) GSLIB: geostatistical software library and user’s guide, 2nd edn. Oxford University Press, Oxford

Deutsch C, Tran T (2002) FLUVSIM: a program for object-based stochastic modeling of fluvial depositional systems. Comput Geosci 28(4):525–535

Emery X, Lantuéjoul C (2014) Can a training image be a substitute for a random field model? Math Geosci 46(2):133–147

Farmer C (1992) Numerical rocks. In: King P (ed) The mathematical generation of reservoir geology. Clarendon Press, Oxford

Gloaguen E, Dimitrakopoulos R (2009) Two-dimensional conditional simulations based on the wavelet decomposition of training images. Math Geosci 41:679–701

Goovaerts P (1997) Geostatistics for natural resources evaluation. Oxford University Press, Oxford

Guardiano F, Srivastava M (1993) Multivariate geostatistics: beyond bivariate moments. In: Soares (ed) Geostatistics-Troia. Kluwer Academic, Dordrecht, pp 133–144

Haas A, Formery P (2002) Uncertainties in facies proportion estimation I. Theoretical framework: the Dirichlet distribution. Math Geol 34:679–702

Hadavand M, Deutsch C (2017) Facies proportion uncertainty in presence of a trend. J Pet Sci Eng 153:59–69

Harding A, Strebelle S, Levy M, Thorne J, Xie D, Leigh S, Preece R, Scamman R (2004) Reservoir facies modeling: new advances in MPS. In: Leuangthong O, Deutsch C (eds) Proceeding of the 2004 international geostatistics congress. Springer, Banff, pp 559–568

Journel A (2002) Combining knowledge from diverse sources: an alternative to traditional data independence hypotheses. Math Geol 34:573–594

Journel A (2004) Beyond covariance: the advent of multiple-point geostatistics. In: Leuangthong O, Deutsch C (eds) Proceeding of the 2004 international geostatistics congress. Springer, Banff, pp 225–233

Journel A, Alabert F (1989) Non-Gaussian data expansion in the earth sciences. Terra Nova 1:123–134

Laloy E, Herault R, Jacques D, Linde N (2018) Training-image based geostatistical inversion using a spatial generative adversarial neural network. Water Resour Res 54(1):381–406

Srivastava M (2018) The origins of the multiple-point statistics (MPS) algorithm. In: Sagar, Cheng and Agterberg (eds) Handbook of mathematical geosciences. Springer, pp 652–672

Strebelle S (2000) Sequential simulation drawing structures from training images. Dissertation, Stanford University

Strebelle S, Cavelius C (2014) Solving speed and memory issues in multiple-point statistics simulation program SNESIM. Math Geosci 46:171–186

Strebelle S, Remy N (2004) Post-processing of multiple-point geostatistical models to improve reproduction of training patterns. In: Leuangthong O, Deutsch C (eds) Proceeding of the 2004 international geostatistics congress. Springer, Banff, pp 979–988

Strebelle S, Zhang T (2004) Non-stationary multiple-point geostatistical models. In: Leuangthong O, Deutsch C (eds) Proceeding of the 2004 international geostatistics congress. Springer, Banff, pp 235–244

Tahmasebi P (2017) Structural adjustment for accurate conditioning in large-scale subsurface systems. Adv Water Resour 101:60–74

Willis B, Sun T (2019) Relating depositional processes of river-dominated deltas to reservoir behavior using computational stratigraphy. J Sediment Res 89:1250–1276

Wu J, Zhang T, Journel A (2008) A fast FILTERSIM simulation with score-based distance. Math Geosci 40:773–788

Zhang T, Switzer P, Journel A (2006) Filter-based classification of training image patterns for spatial simulation. Math Geol 38:63–80

Cite this article

Strebelle, S. Multiple-Point Statistics Simulation Models: Pretty Pictures or Decision-Making Tools?. Math Geosci 53, 267–278 (2021). https://doi.org/10.1007/s11004-020-09908-8

DOI: https://doi.org/10.1007/s11004-020-09908-8