Abstract

This paper describes a proposed method for clustering attributes on the basis of their spatial variability and the uncertainty of cluster membership. The method is applied to geometallurgical domaining in mining applications. The main objective of geometallurgical clustering is to ensure consistent feed to a processing plant by minimising transitions between different types of feed coming from different domains (clusters). For this purpose, clusters should contain not only similar geometallurgical characteristics but also be located in as few contiguous and compact spatial locations as possible so as to maximise the homogeneity of ore delivered to the plant. Most existing clustering methods applied to geometallurgy have two problems. Firstly, they are unable to differentiate subsets of attributes at the cluster level and therefore cluster membership can only be assigned on the basis of exactly identical attributes, which may not be the case in practice. Secondly, as they do not take account of the spatial relationships they can produce clusters which may be spatially dispersed and/or overlapped. In the work described in this paper a new clustering method is introduced that integrates three distinct steps to ensure quality clustering. In the first step, fuzzy membership information is used to minimise compactness and maximise separation. In the second step, the best subsets of attributes are defined and applied for domaining purposes. These two steps are iterated to convergence. In the final step a graph-based labelling method, which takes spatial constraints into account, is used to produce the final clusters. Three examples are presented to illustrate the application of the proposed method. These examples demonstrate that the proposed method can reveal useful relationships among geometallurgical attributes within a clear and compact spatial structure. The resulting clusters can be used directly in mine planning to optimise the ore feed to be delivered to the processing plant.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Geometallurgy provides new opportunities for mine planning by integrating primary and response properties to enhance the value of information for decision-making processes (Coward et al. 2009, 2013; Coward and Dowd 2015). Even though significant progress has been made in sensing and collecting geometallurgical data, there is still a significant gap in achieving effective use of these data in practical applications. Discriminating among geometallurgical characteristics is a step towards an effective use of geometallurgy for mine planning, as similar geometallurgical characteristics will have similar responses in mineral processing. From this perspective, a proper clustering of in-situ resources based on geometallurgical characteristics is essential to optimising operations across the entire value chain from mining to mineral processing.

Traditional geological clustering, commonly known as rock type domaining, is important in understanding the nature of the deposit but it does not necessarily reflect the responses of the ore to the various processing stages. Geometallurgical clustering (or domaining) is similar to geological clustering but focusses on the geometallurgical characteristics of the orebody to provide a basis for integrated optimisation from mining to processing (Hoal et al. 2013). Clustering is an important problem in machine learning and, for unsupervised problems, it is one of the hardest to formulate and solve. Regression and classification are supervised because the response is known, whereas clustering partitions data on the basis of similar characteristics and, at the same time, maximises the separation of those partitions.

The classic cut-off grade approach to ore selection clearly does not consider the mineral complexity and its responses to processing, such as the energy consumption due to different hardness and grindability, concentration of deleterious elements, different recovery rates due to different geometallurgical attributes. Clustering based on geometallurgical attributes has been an active research topic over the past decade. Having more material classes (clusters) may improve the ability to select the best processing route for each parcel of mined ore so that the overall operation is optimised (Dunham and Vann 2007; Hunt et al. 2013). However, the risk of misclassification increases as more clusters are defined and this risk must be considered in any geometallurgical clustering.

Geological domains are not necessarily useful in defining processing domains that are required to reflect characteristics such as the Bond ball mill work index (BMWi), which relates to the energy used in a ball mill (Bond 1961), or the \(\hbox {A}{\times }\hbox {b}\) comminution index (Napier-Munn et al. 1996), which is a measure of the ore impact breakage resistance. To remediate this problem, Keeney and Walters (2011) used principal component analysis (PCA) (Wold et al. 1987) to project variables onto a two-dimensional space representing geometallurgical attributes such as mineralogy and grindability indices. Different classes were then manually defined by drawing polygons around spatially contiguous projected points on the basis of mineralogical association. These classes were used to build predictive models and propagate them into the block model using standard geostatistical indicator approaches. The same method was used in a similar case study to define four geometallurgical domains at drill-hole scale which were then scaled up to block scale by four different methods: sectional interpretation and wireframe modelling, nearest neighbour assignment, indicator kriging, and stochastic trend analysis (Newton and Graham 2011). The two comminution parameters, \(\hbox {A}\times \hbox {b}\) and BMWi, were then populated into the block model by applying specific regression models in each geometallurgical domain.

Leichliter and Larson (2013) developed a geometallurgical model to cluster a deposit into two classes for two different recovery circuits: flotation circuit for less oxidized ore and heap leaching for oxidized ore. They used the variables of assays, geological mapping, mineralogy, hardness, gravity and floatability attributes to define the classes. Hunt et al. (2014) manually clustered copper recovery domains on the basis of Al and Fe content (Low Al–High Fe and High Al–Low Fe). They pre-clustered 24 archetypes using chemical and mineralogical information. For each recovery domain, they built linear regression models using Al, Cu, Fe and grinding index from drill hole data and batch flotation tests. These models were scaled up for the block model using standard geostatistical methods. Nguyen and Keeney (2014) built a geometallurgical domaining system by hierarchical clustering at sample scale using assay values, geotechnical logging and petrophysical attributes to model and estimate grindability response indices. Goodfellow and Dimitrakopoulos (2017) performed clustering using grades and material types to define different ore destination policies, which were used to optimise scheduling. Garrido et al. (2017) used clay content as a measure to define the concept of geometallurgical dilution in a manner similar to mining dilution. They defined geometallurgical dilution as the ratio between the most common clay cluster and all other clusters. This dilution concept can be used in scheduling optimisation to avoid excessive changes in clay content in the ore to be sent to the processing plant.

The research discussed above demonstrates the use and ability of geometallurgical domaining in improving processing decisions and optimising scheduling to processing plants. However, most of this research uses standard clustering methods and the resulting clusters are then up-scaled to the block model using geostatistical approaches. There is no explicit imposition of spatial contiguity and compactness in the determination of clusters. In addition, the uncertainty of the clustering is not assessed. For the explicit use of the spatial component, Oliver and Webster (1989) incorporated into the dissimilarity measure a spatial variogram model, using an isotropic exponential structure with parameters of nugget effect, sill and range. Bourgault et al. (1992) generalised the Oliver and Webster (1989) method by using a multivariate (co)variogram to account for both spatial and attributes correlations in clustering. Allard and Guillot (2000) modelled the hard clustering problem as a mosaic of independent stationary normal random functions for the univariate case. Three different optimisation approaches were tested. One approach was based on minimising the ratio between the variance within a cluster and the variance between clusters. The second optimisation method used negative log-likelihood to estimate the parameters. Finally, in the third approach they used the expectation–maximisation (EM) algorithm. The spatial structure is accounted for by the kriged (estimated) mean of the random function and the associated kriging variance. Guillot et al. (2006) assumed that the spatial component is characterised by a second order stationary random field. The inference of the parameters that define the covariance function and the clusters are found by a Markov chain Monte Carlo algorithm. This method uses quantitative and categorical multivariate data. For hard clustering in the univariate case, Carlo et al. (2017) incorporated the spatial component as a non-stationary Markov random field conditioned to the k-nearest neighbourhood structure. The optimisation was performed by the EM algorithm. The method allows each cluster to have different spatial interaction modulated by a spatial covariate. Fouedjio (2016) incorporated the spatial component in the definition of the dissimilarity measure in the agglomerative hierarchical clustering method. The dissimilarity/similarity between two observations is not a simple Euclidean measure but rather a function of their spatial correlation. It is not clear what effect negative correlations in cross-variograms have on this dissimilarity measure and its performance when the spatial correlation is low, but the method is consistent with geostatistical approaches.

Based on Gaussian mixture models, Ambroise et al. (1996) proposed a method that adds a regularisation component, derived from the spatial structure, to the clustering optimisation formulation. This method takes into account the membership of all neighbours of any observation for clustering. Romary et al. (2015) incorporated the spatial component into the distance metric using a hierarchical clustering method. The distance function takes into account the spatial connectivity introduced by a moving neighbourhood. Weights for each attribute can be defined by the user and incorporated into the distance function. The coordinates are also included as attributes.

In addition to clustering methods that incorporate a spatial component, cleaning realisations of lithofacies in a regular grid, or image, helps to preserve spatial continuity. Schnetzler (1994) used two image processing pixel-base methods of dilatation and erosion, to produce cleaner images. The resulting grid does not necessarily reproduce the original statistics of the lithofacies. To overcome this issue, a post-process changes the categorical value of the pixels to match the original statistics. The probability of accepting changes is defined as the ratio of the kriging variance to the total variance. This method is only applicable to a regular grid as it was designed to correct ’noisy’ grids for visualisation purposes. Deutsch (1998) improved the method of Schnetzler (1994) by using the quantile transformation to correct proportions and produce less-noisy realisations. Locations in the borders between regions are candidates for relocation. The maximum a posteriori selection algorithm replaces each location by the most probable value according to the local neighbourhood structure, based on three aspects: closeness, conditioning data, and target proportions.

In this paper, a new adapted method is proposed to cluster diverse attributes to build geometallurgical domains. The method, spatial weighted fuzzy clustering (SWFC), is based on traditional fuzzy clustering (Dunn 1973) with a novel adaptation to support mixed attributes together with the capacity to include expert knowledge and spatial structures. The formulation of the clustering algorithm based on fuzzy clustering, flexible distance metrics and feature selection is given in the methodology section. The mathematics of the proposed SWFC method is then described in the following section. Three case studies are presented to illustrate the application of SWFC, starting with a very simple illustrative example, followed by a two-dimensional case, and finally, a comprehensive three-dimensional synthetic geometallurgical block model.

2 Methodology

A dataset is defined as a set of observations or samples. Each sample is a k-dimensional vector, where each dimension represents a feature or an attribute. Each attribute can be a continuous or a categorical variable (ordinal or nominal). The goal of clustering is to partition the dataset into P sets where samples within a partition are similar and partitions are well separated. The concept of similarity within a cluster (defined as compactness, see below) and separation distance between clusters are key aspects of clustering.

2.1 Definition of Symbols and Indices

Symbols:

- \(\mathcal {P}\) :

-

is a set of partitions or clusters.

- P :

-

is the number of partitions or clusters.

- K :

-

is the number of dimensions of a multivariate sample.

- N :

-

is the total number of samples.

- \(S_j\) :

-

is the number of samples in the \(j{\mathrm{th}}\) cluster.

- \(v_j\) :

-

is the centroid of the \(j{\mathrm{th}}\) cluster.

- m :

-

is the fuzzier used in the fuzzy clustering algorithm.

- u :

-

is the membership matrix with N rows and P columns.

- w :

-

are the weights of attributes.

Indices:

- i :

-

indicates the \(i{\mathrm{th}}\) sample, \(1<= i <= N\). For example \(x_i\).

- j :

-

indicates the \(j{\mathrm{th}}\) cluster, \(1<= j <= P\). For example \(v_j\).

- k :

-

indicates the \(k{\mathrm{th}}\) dimension, \(1<= k <= K\). For example \(w_k\).

2.2 Hard Clustering

Hard clustering, or crisp clustering in the machine learning literature, seeks a non-overlapped, hard partition of a dataset and therefore the partitions \(\mathcal {P}\) are disjoint sets and each sample belongs only to one partition. One option for clustering is to find the centroids of clusters that minimise the overall distance of each sample to the centroid of its cluster, that is,

where D is any distance metric, \(x_i\) is the \(i{\mathrm{th}}\) sample belonging to the \(j{\mathrm{th}}\) cluster.

There are many hard clustering methods and among the most used are K-Means, for continuous variables, K-Mode for categorical variables and several variants for mixed variables. K-Means is probably the most used clustering method due to its simplicity. K-Means is solved by a two-stage iterative procedure to minimise the variance of the distances within clusters. In the first stage the centroids of clusters are assumed to be fixed and each sample is assigned to the closest centroid. In the second stage, the centroids are updated as the average of all samples within a cluster. The two stages are iterated until the overall variance of clusters is minimised. It is common to select initial centroids at random. A very common variation is to perform an initial dimension reduction to compress the information into two or three dimensions by PCA (Ding and He 2004). After the dimensionality has been reduced, K-Means is applied to the compressed data.

For geometallurgical applications, it is important to quantify the uncertainty of belonging to a cluster but hard clustering cannot provide this assessment. The fuzzy clustering method assigns the grade of cluster membership to all samples. This grade can be used as a probability measure and, therefore, it can provide a simple way to quantify the uncertainty of clustering.

2.3 Fuzzy Clustering

Fuzzy clustering, as opposed to hard clustering, is a method that seeks to find the grade of membership of a sample with regard to each cluster (Ruspini 1969). The objective for optimisation, therefore, changes to

and

where m is the fuzzier, which controls the degree of fuzziness. When m is close to 1, the fuzzy partition becomes a hard partition, that is, \(u_{ij}\) will be 0 or 1, and when m is large, \(u_{ij}\) will tend to be uniformly distributed, but always subject to \(\sum _{j=1}^{P}u_{ij}=1, \forall i\).

There are several methods to find the optimal membership, for example, fuzzy c-means, fuzzy k-modes and fuzzy k-prototypes. These methods are not designed to use mixed attributes and they do not perform feature selection. To take these features into account, a distance-based approach must be used.

2.4 Distance Metrics

Clustering essentially relies on similarity among observations and therefore the most critical aspect is the definition of a distance metric between observations. In general, the Euclidian distance [Eq. (4)] is the default selection when all attributes are continuous, however when there is a mix of continuous and categorical attributes the Euclidian distance

is not the best choice.

For two multivariate attributes x and y, the distance function can be formulated as the contribution of each dimension to the total distance (Friedman and Meulman 2004), which is given by

This formulation gives a high degree of flexibility in the definition of specific distance functions for different kinds of attributes.

2.4.1 Continuous Attributes

For continuous attributes, such as grades, recovery rates and milling indices, the distance function is defined as

where \(s_k\) is any measure of dispersion, such as variance, standard deviation, interquartile range (Friedman and Meulman 2004). The importance of including dispersion is to avoid distortions with different scale values of the attributes. In this paper the standard deviation was used as dispersion measure.

2.4.2 Categorical Attributes

For categorical attributes, such as lithology, alteration types and mineralisation styles, the distance function is defined by a distance matrix, which is a symmetric square matrix of size \(M \times M\), where M is number of unique values of that attribute. For example, for a categorical attribute taking a set of possible values \({h_1,h_2,\ldots ,h_M}\), the distance matrix is

where each \(\theta _{ij}\) is a fixed value corresponding to the distance between the value \(h_i\) and \(h_j\) and \(\theta _{ij}=\theta _{ji}, \forall i,j\) as the distance is symmetric. If the categorical attribute has no preference among values, \(\theta _{ij}\) can be a constant positive value for all i and \(j \in {1,\dots ,M}\). For example, when \(\theta _{ij}\) is 1, the matrix becomes the traditional transformation to indicators, and is equivalent to

The flexibility of the matrix distance function allows for the definition of distance between categorical values, which is very useful for geometallurgical applications since there are, in general, categorical variables related to rock property attributes. For example, silication and silicification alterations are more similar compared to silication and argillic alteration. In this case, the distance between silication and silicification alterations should be smaller than that between silication and argillic alterations based on the definition above. The same can be considered in the case of metamorphic rocks, for example, phyllite and schist rocks are more similar compared to slate and gneiss. The distance for the categorical attribute can be defined as

where h(x) denotes the value of the categorical variable x used in the definition of its distance matrix.

2.4.3 Targeted Attributes

Another flexibility of the proposed distance function is the option of including a target value in any distance function. There are situations when similarity needs to be defined as closeness to a target value; for example, the focus of interest could be on low, medium and high recoveries. Setting specific low, medium and high values of recoveries will tend to yield clusters according to those targets. Friedman and Meulman (2004) defined a distance function for one and two targets, t and u, as

and

These two metrics are not strictly distance metrics because they violate the identity property, but they work in practice. The problem arises when they are used to compare two values very close to each other. For example, both distances, \(g_k (x,y,t)\) and \(g_k (x+\epsilon ,y,t)\) with \(x+\epsilon <y\), are the same, due to the maximisation criterion in Eq. (9). To correct this problem a new criterion for a single target t is defined as

and its extension to multiple targets T is given by

Including the target is applicable to both continuous and categorical distance functions.

2.5 Feature Selection

In geometallurgy there are, in general, many attributes that can be used for clustering. The contributions of attributes to clustering may vary from very important to little or no importance. It is desirable that the clustering procedure considers the degree of importance of different attributes, which can sometimes be defined by expert knowledge. In this context, feature selection is an important procedure to determine the involvement of attributes and their degree of involvement as part of the clustering process. The most basic method is to consider all permutations of attributes and to select a set which performs the best. This approach obviously is computationally intensive and, as the number of attributes increases, the number of possible permutations increases exponentially. Note that the number of permutations of n attributes without repetitions is \(2^{n}-1\), which is equal to 1,048,575 permutations for a reasonable case of 20 attributes. On the other hand, forward and backward methods are greedy methods for feature selection. The forward method starts with one attribute and iteratively adds the attribute that most improves the distance metric. The backward method starts with all variables and removes the least useful attribute one at a time.

Another strategy is based on weights. Each attribute has, as an indicator of its degree of importance, a positive number within the range of [0, 1] as its weight. This weight can then be included in the distance metric

where \(w_k\) is the weight of the k-feature, subject to \(\sum _{k=1}^K w_k=1\).

In the case of clustering, by default all attributes have the same weight in different clusters. In practice, it may be desired in some cases to impose different weights for attributes in different clusters, that is,

where \(w_{ck}\) is the weight of the attribute k in the cluster c, subject to

This weight-based feature selection mechanism can also be included in the optimisation process to determine the best weights for each attribute in each cluster.

As pointed out by Friedman and Meulman (2004), the best minimisation strategy is to assign all weight to the attribute with the lowest dispersion of observations in each cluster, which provides an incentive to spread the weights to more attributes, the distance is defined as

where

The parameter \(\lambda \) controls how the weights are spread to other attributes. For larger \(\lambda \), the weights will tend to be similar for all attributes whereas for smaller \(\lambda \) the weights will tend to be given one or a few attributes.

2.6 Spatial Correction

Another important characteristic is the spatial structure. Traditional clustering methods do not incorporate any spatial structure. In fact, if coordinates of samples are included as attributes, traditional methods are likely to produce erroneous results as samples in the same cluster are not necessarily spatially connected and these clustering procedures will tend to separate them into different clusters on the basis of their coordinates. Within the geometallurgical context, a cluster (ore parcels with similar geometallurgical characteristics) may, in many sectors, not be directly spatially connected across the deposit, and therefore a more advanced technique is required for taking the coordinates into account. As one of the goals of geometallurgical clustering is to generate clusters as spatially connected as possible, it is essential to apply a spatial correction to avoid compact zones that include a few observations that belong to a cluster different to that to which the majority belong.

Spatial correction is conceptually similar to image segmentation. In computer vision, image segmentation tries to simplify any image by assigning to each pixel a label (here equivalent to a cluster) from a small set of labels. Image segmentation has been successfully applied for applications such as cancer detection and automated driving (López and Malpica 2008; Tarabalka and Charpiat 2013; Tarabalka and Rana 2014; Wang et al. 2016). There are many techniques for image segmentation, but the graph cut method (Boykov and Veksler 2006) is of special interest in this work because it can be integrated easily with fuzzy clustering.

The image segmentation, or labelling, problem can be formulated as an energy minimisation problem in a graph, given by

where \(L_p\) represents the label of a pixel p of the image I, \(D_p\) is the data penalty function, \(V_{pq}\) is the interaction potential, or the spatial relationship, and \(\mathcal {N}\) is the neighbourhood (spatial connectivity).

Clearly, there are some similarities between the image segmentation problem and our proposed clustering method. An image corresponds to the entire deposit whereas a pixel corresponds to an observation and the pixel value corresponds to an attribute of a sample. The fuzzy membership information (Eq. (3)) of each observation can be interpreted as the data penalty function. This means that each observation has a probability of belonging to a cluster and using \(D_p (L_p )=-\log (u_{pL_p})\) assigns a lower data cost when the membership probability is higher and vice versa. The interaction potential \(V_{pq}\) corresponds to the spatial relationship among observations.

The neighbourhood can be determined by the k-nearest neighbour in the case of unstructured locations, or the surrounding cells in the case of a regular grid, which defines the connections of data in the form of a graph. Complex interaction potential functions can be formulated in the form of geostatistical (co)variograms or correlograms as defined in Bourgault et al. (1992), but a simpler one is the Potts model, which focuses on discontinuities. The Potts model is defined as

where \(K_{pq}\) may be a constant value or the cost of the spatial relationship between p and q, for example, the distance between p and q. The Potts model favours a clearer segmentation among clusters, opposite to smooth transitions, which is desired for domaining.

3 Proposed Method

The proposed method, SWFC, combines two components: (i) an adapted version of fuzzy clustering, termed weighted fuzzy clustering (WFC), and (ii) the spatial correction using the graph cut method. Both components are formulated as optimisation problems.

3.1 Optimisation Formulations

For the proposed fuzzy clustering, the concepts of compactness and separation are combined in a single objective formulation, and they are defined below.

3.1.1 Compactness

Compactness is defined as

where \(\lambda \) controls the weight values among attributes. The u matrix is given by

3.1.2 Separation

Separation is defined as

The maximum criterion in Eq. (22) is required because different clusters may not share the same weights, in which case the maximum weight is used for the \(k{\mathrm{th}}\) dimension.

3.1.3 Objective

The proposed clustering seeks to minimise compactness of clusters and, at the same time, to maximise separation between clusters. A single objective formulation that incorporates both aims is defined as

where C is a constant which scales the importance of separation as a criterion in the optimisation formulation. The lower the value of C, the less important is any increment in separation. Our experiments indicate that a value of \(C = 15\) is appropriate to give more relative importance to compactness over separation but a complete assessment of the impact of different values is recommended. Other expressions that combine compactness and separation in a single objective may also be explored.

There are two main obstacles to solving this optimisation problem. The first is the non-convexity of the problem, meaning that the global minimum is hard to find. The second is the difficulty of finding the cluster centroids and defining a proper set of weights that can be used. The first obstacle can be dealt with by the use of metaheuristics, which is a simple technique to solve optimisation problems with many local optima. The second obstacle is solved by a two-stage procedure in the proposed method. In the first stage, the optimal centroids and membership are found for a given set of fixed weights. In the second stage, the weights are optimised given the clusters and memberships found in the first stage. These two steps are iterated until convergence.

3.2 Implementations

Genetic algorithm (GA) metaheuristic is used not only because of its simplicity, flexibility and good performance, but also because GA has been successfully used as an optimisation method for clustering (Luchi et al. 2016; Maulik and Bandyopadhyay 2000; Nanda and Panda 2014).

3.2.1 Genetic Algorithm

A genetic algorithm is a metaheuristic optimisation method that emulates the process of evolution. There are three main concepts involved in GA: selection, crossover and mutation. The selection operation imitates the natural selection process in which better individuals have more chances to pass their genes to the next generation. A fitness value is assigned to each individual, which corresponds to the evaluation function to be optimised. Crossover produces new individuals combining the genes of the parents. Mutation produces a new individual by mutating a small part of the gene of an individual. These three operations are executed for many generations to ensure that the best final individual of the population is a good local optimum. A complete tutorial on GA can be found in Whitley (1994).

The hyper-parameters of GA are the number of individuals in the population, the number of generations, the operations of selection, crossover and mutation, and the probabilities of crossover and mutation. Given these hyper-parameters, which depend on the problem to be solved, the GA procedure for minimisation is given by Algorithm 1.

The most important design aspect of any GA is the solution codification (genome), which is problem dependent. For a given problem codification, its corresponding crossover and mutation operations must also be defined. In our implementations, the crossover function is the standard uniform crossover. Selection is performed by tournament selection.

3.2.2 GA for Optimising Centroids

For the first stage discussed above, the problem reduces to finding the cluster centroids that minimise the objective function given in Eq. (23). Thus, the genome in this case represents the centroids of each cluster.

The initial centroids are selected from samples at random. The mutation operation perturbs one dimension of one centroid: for continuous variables, the perturbation corresponds to a random value drawn from a normal distribution \(\mathcal {N}(\mu =0, \sigma =0.1)\), whereas for categorical variables, the perturbation simply selects a different value of their categories at random.

The evaluation function for optimising centroids is given by Algorithm 2 and the mutation operator is given by Algorithm 3. The function \(\text {dim}(A)\) returns the number of the rows and columns of a matrix A.

3.2.3 GA for Optimising Weights

In the second stage, the weights are optimised with fixed centroids, and the problem reduces to finding the weights that minimise the objective function given in Eq. (23). As the weights are within the range [0, 1], the mutation adds a small number drawn from the normal distribution, \(\mathcal {N}(\mu =0.0,\sigma =0.01)\). Two integer numbers are selected at random, one for a cluster and the other for an attribute to modify. Note that the weights must sum to one. In addition, expert knowledge can be used to set a specific weight to an attribute add the perturbation can preserve these values.

The optimisation of weights is given by Algorithm 4 and the mutation operator by Algorithm 5.

3.2.4 Proposed Clustering Method (SWFC)

The final proposed method is shown in Algorithm 6 and the spatial correction is given by Algorithm 7.

The function \(\text {BuildNeighbourhood}(locations)\) returns the edges of the spatial structure of the locations. The spatial structure can be defined using Delaunay tessellation, k-nearest neighbour, or the surrounding blocks in a structured block model.

3.2.5 Efficiency and Scalability

The efficiency of SWFC relies mainly on its two components, the optimisation of the centroids and weights and the spatial correction by the graph-cut method. When the centroids are optimised, GA calculates, for each individual, the membership matrix, separation and compactness. The complexity of the calculation of the membership matrix is \(\mathbf {O}(N*P^2)\), separation is \(\mathbf {O}(N*P*K)\), and compactness is \(\mathbf {O}(K*P^2)\). As usually \(N \gg K\) and assuming that \(K>P\), an upper bound for the complexity of the evaluation of each individual is \(\mathbf {O}(N*P*K)\).

The GA algorithm needs to evaluate npop individuals over ngen generations, therefore, the total complexity of the WFC algorithm is \(\mathbf {O}(N*P*K*npop*ngen)\). Our results indicate that WFC converges in less than 20 iterations (main loop in Algorithm 6). The complexity of the graph-cut algorithm used is \(\mathbf {O}(N*P^2)\) (Boykov and Veksler 2006). The complexity of the fuzzy clustering is comparable to the K-Means algorithm at each iteration, but the difference is in the optimisation procedure, where SWFC is more computational intensive. Nevertheless, it overcomes two aspects: the use of GA helps in escaping from local minima and both fuzzy clustering and feature selection are jointly optimised. The complexity of SWFC does not have a high impact on the number of samples N, since it scales linearly as a function of N. Also, P is usually small for practical reasons (no greater than 10) and K is, in general, less than 100.

GA is a stochastic optimisation method and the results may be affected by the initial random seed. Our experiments showed that different seeds produce very stable results. All results reported are based on a single, representative run.

3.2.6 Assessing the Number of Clusters

There is no common choice for the number of clusters and this selection largely depends on the data and the application of the clusters. However, there are several indices that can be used to assess the cluster quality. The silhouette index (SI) [Eq. (24)] comprises compactness and separation (Rousseeuw 1987). This index is a real number in the range [− 1,1]. An index close to − 1 means there is little or no cluster structure and close to 1 indicates perfect compactness within clusters and clear separation between clusters. This index uses only the distance among observations and it is defined as

where

N is the total number of points, \(a_i\) is the average distance between point i and all other points in its own cluster, and \(b_i\) is the minimum of the average distances between i and points in other clusters.

Another index for assessing the quality of clusters is the Davies-Bouldin index (DBI), which describes how well the clustering has been done as measured by the distance between observations and cluster centroids. Values of this index close to 0 suggest better cluster structures (Davies and Bouldin 1979). DBI is calculated using the following equations

with \(p=2\) for the Euclidean norm.

4 Application

Three examples are presented in this paper to demonstrate the application of the proposed method. The first example is a simple synthetic case that illustrates the difficulties of traditional methods when clustering using different attributes. The second example is a cross-section of a simulated copper porphyry deposit (Garrido et al. 2017). The third example is a full synthetic geometallurgical block model (Lishchuk 2016).

The results of K-Means and PCA clustering methods are compared. The spatial correction also is applied to K-Means and PCA, using the membership matrix as the inverse of the squared distance between each sample to the centroids, given by

K-Means and PCA with spatial correction are denoted by SK-Means and SPCA respectively. Table 1 shows the values of the parameters used in the algorithms for the three examples.

4.1 Illustrative Example

In this example, there are four attributes: grades of copper, gold and iron, and recovery of copper, denoted as Cu, Au, Fe and Rec respectively. Although these attributes do not usually follow normal distributions, for the sake of simplicity, they were taken from normal distributions, but their means and standard deviations are different so as to form four clusters, see Table 2. All attributes follow their default normal distributions but for specific clusters, they follow normal distributions with different means and lower variances. Cluster 1 includes only Cu, Fe and Rec; cluster 2: Cu, Fe and Au; cluster 3: Cu, Fe, Rec; and cluster 4 only Fe and Au.

A spatial component was assigned to each cluster: half of cluster 1 is uniformly located in the region of \([(10.0{-}35.0), (10.0{-}35.0)]\) and the other half in \([(65.0{-}85.0), (65.0{-}85.0)]\); all of cluster 2 is located uniformly in the region of \([(0.0{-}100.0), (30.0{-}70.0)]\); all of cluster 3 is located uniformly in the region of \([(5.0{-}45.0), (65.0{-}100.0)]\); and finally, cluster 4 is located uniformly in \([(55.0{-}100.0), (0.0{-}35.0)]\).

The illustrative example comprises 200 samples of cluster 1, 200 samples of cluster 2, 400 samples of cluster 3, and 100 samples of cluster 4. Of these 900 samples, the locations of 100 samples are randomised uniformly for the entire region of \([(0.0{-}100.0), (0.0{-}100.0)]\). Figure 1 shows the final locations of all samples. In this example, the number of clusters is known and therefore, the example can be used to assess the efficacy of different clustering methods. Both K-Means and PCA perform better with three clusters, although PCA has similar results with four clusters. The proposed method, with or without spatial correction, significantly outperforms K-Means and PCA in finding the correct number of clusters (Table 3).

Using four clusters, the performance of different clustering methods can be further assessed. The imposed spatial structure and added noise make it difficult for the K-means clustering method to reproduce four clusters (Fig. 2a). The poor performance of the K-means method is clearly shown in the figure in which green and blue clusters are correctly identified but the other two clusters are not. In addition, the importance of different attributes is not identified. For example, the Cu attribute is well clustered (good separation and low variance) across all clusters (Fig. 3a) despite the fact that no Cu dependency is imposed in cluster 4 (Table 2).

PCA overcomes some of the problems of K-Means. Two components were used. Table 4 depicts the contribution of each component to the total variance. When the data are projected, and therefore compressed to only two dimensions, the cluster structure can be clearly seen by visual inspection (Fig. 4). Despite the obvious cluster structure, PCA clustering performs better than K-Means but clusters 1 and 4 are still misclassified to some extent (Fig. 5).

There are two hyper-parameters that need to be defined to apply SWFC: the fuzzier m and the parameter \(\lambda \) for weights. Most cases reported in the literature suggest that a value of 2.0 for m is a reasonable choice to account for uncertainty (Pal and Bezdek 1995; Ren et al. 2016), and \(m = 2.0\) is used in all applications of SWFC discussed in this paper. For parameter \(\lambda \), there is no rule of thumb guidance in the literature. A small value close to 0 means that all weight will be assigned to one attribute, while a large value will tend to assign the same weights to all attributes. For this example, the influences of different \(\lambda \) values between 0.05 and 1.0 on the weights assigned to different attributes are shown in Fig. 6. This figure is useful for assessing the impact of \(\lambda \) on the number of attributes that are considered significant for finding the cluster structure so that an appropriate \(\lambda \) value can be selected. For this example, a value of 0.25 was selected for \(\lambda \) because it tends to give importance to two or three attributes for clustering, which matches the number used to create the clusters in the first place.

The spatial correction was also applied to the three clustering algorithms, K-Means, PCA and WFC, to compare the effect of this correction. The spatial correction applied to K-Means results in the loss of cluster 1 (Fig. 5b), due to its weak membership values, which are reassigned to cluster 4 (Fig. 2b). Although the correction improves PCA compared to K-Means, its performance remains the same as WFC (Fig. 2e). The spatial correction applied to PCA gives results that are similar to SWFC (Fig. 5d, f). WFC is impressively exact, even identifying the correct clusters for the randomly located observations. The performance of SWFC slightly decreases but it still significantly outperforms SK-Means (Fig. 5b, f). The spatial correction alters the final cluster membership of only a few observations in order to make the clusters more spatially compact (Fig. 5f). The boxplots for WFC and SWFC show no substantial difference in their performance statistics (Fig. 3e, f).

This simple illustrative example clearly shows that traditional methods struggle to find the cluster structure correctly when those clusters are defined by different attributes. The proposed method significantly outperforms the traditional methods and can perfectly reveal the cluster structure in this case as well as producing compact clusters in terms of spatial connectivity.

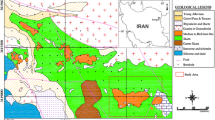

4.2 Simulated Copper Porphyry Deposit Example

This example is a simulated deposit based on actual data from a copper porphyry deposit (Garrido et al. 2017). The orebody is mainly dominated by disseminated chalcopyrite and with four categories of large, moderate, small and minimum presence of clay. A cross-section, comprising 6462 blocks is used to illustrate the results of SWFC in two dimensions. The first level clustering results in 4268 blocks of waste and 2194 blocks of ore. The ore cluster has two grade elements (copper and arsenic), two response attributes (copper recovery and bond index), and one categorical attribute (presence of clay in low, medium and high degree). SWFC is applied to the ore super-cluster to find four sub-clusters using these 5 attributes. For PCA clustering, three principal components were used. Table 5 depicts the explained variance of each component of the total variance.

Cluster 1 is characterised by high content of clay (category 2), low grade values of copper and arsenic (detection limit of 20 for arsenic), and low recovery due to the clay content and low hardness. Cluster 2 is characterised by medium content of clay (category 1), low grade values of copper and arsenic, and slightly higher recovery. Interestingly, SWFC has identified two additional clusters for low content of clay (cluster 3 and 4), which are characterised by high recovery and high bond index but are well separated by arsenic content (low and high), see Table 6.

Table 7 lists the weights assigned to different attributes in SWFC, which effectively shows the degree of importance of each attribute for each cluster. Clay content is the most important attribute for all clusters, which is consistent with the copper recovery performance and hardness as high clay content is related to low copper recovery and softer rocks. The second most relevant attribute differs for different clusters. Arsenic content is more relevant for clusters 1, 2 and 4, whereas recovery is for cluster 3. One interpretation is that SWFC was capable of separating clusters 3 and 4 in terms of arsenic attribute although both have low clay content.

Figure 7 shows the statistics of the four most relevant attributes for K-Means, PCA and WFC. Clay content is well separated, but K-Means separates clays in a different way. The clusters found by PCA and WFC look very similar, except for the size of cluster 3. For all methods, copper grade is split into two main groups: low and high. High content of arsenic is very relevant for cluster 3 in both PCA and WFC, whereas recovery is well separated among clusters. In general, PCA and WFC perform similarly and both are superior to K-Means.

The spatial connectivity of the clusters is another important aspect for SWFC. The results in terms of spatial connectivity for SK-Means, SPCA, and SWFC are shown in Fig. 8. SK-Means does not preserve cluster 2 (Fig. 8a, b) and SPCA does not preserve cluster 4 (Fig. 8c, d) due to the poor connectivity of the clusters. For WFC, there are several blocks in cluster 4 (blue) that are spatially unconnected (Fig. 8e); the spatial correction generates much more spatially compact clusters (Fig. 8f), which is a desirable property achieved by the proposed method.

This simple two-dimensional case study illustrates the power of SWFC to produce compact and well separated clusters while preserving their spatial connectivity. It does so by selecting the appropriate attributes relevant for the cluster structure using the optimisation technique discussed above. The resulting clusters can then be much more effectively used for scheduling. Taking into account the characteristics of each cluster, for example, the scheduler may avoid too many jumps between different clusters in order to derive sets of blocks with similar characteristics to be delivered to the plant for a particular time period.

4.3 Simulated Geometallurgical Block Model Example

This geometallurgical block model was built based on the Malmberget iron deposit in northern Sweden using simulation modules for geology, sampling, production and mining economics. The complete methodology used to build this geometallurgical block can be found in (Lishchuk 2016; Lund et al. 2015).

This geometallurgical model has \(50\times 50\times 50\) number of blocks of size \(5\times 5\times 5\)m, where 21,710 of them are ore blocks. The 23 attributes used for clustering are: lithology, 6 mineral grades, 14 chemical element grades, specific gravity, and iron recovery (Table 8). In this example, the focus is on building geometallurgical domains for iron recovery. Lithology should play an important role in clustering, but lithology alone in this case is not sufficient to discriminate iron recovery. Finding the other attributes that can contribute to a better identification of clusters is very important.

The flexibility of SWFC is illustrated by setting the objective as achieving a geometallurgical domaining for Fe recovery. To do so, the targeted distance is used for iron recovery with the values at 15, 50 and 85% percentiles of its distribution, corresponding to recovery values of 82.41, 88.98 and 91.22% respectively, and a weight of 15% was used for the recovery attribute. These conditions provide a guide for SWFC to find three clusters. The purpose of imposing the target and weight to Fe recovery is to find which secondary attributes would be useful for clustering the structure as it is expected that discovered clusters will tend to have Fe recovery values close to the defined targets.

The number of clusters is set to three according to both DBI and SI values (Table 9). For PCA clustering, three principal components were used. Table 10 shows the explained variance of each component of the total variance.

The results for apatite, magnetite, iron, iron recovery, and rock type are used to compare the performance of the clustering methods. Some interesting observations can be made about the centroids of the three clusters (Table 11). The targeted distance applied to iron recovery is very well represented by the SWFC method, but not so by SK-Means and SPCA because they do not use targeted distance functions. Lithology is also well discriminated and the positive correlation between apatite and iron recovery is maintained. Apatite is separated only as low content for cluster 3 in SWFC, whereas SK-Means and SPCA do not separate apatite to the same extent in cluster 3 but are similar for clusters 1 and 2. The centroids of the three clusters for iron grade show a similar separation for the three methods.

Figure 9 shows the statistics of the six clustering methods. SPCA separates each lithology into each cluster as does SK-Means. SWFC clusters lithology in a different way, for example, cluster 1 contains the three lithologies, but clusters 2 and 3 contain only lithology 1 and 3 respectively. This difference may be explained by the fact that WFC and SWFC seek the imposed targets for iron recovery. A summary of the differences of clustering among K-Means, PCA and WFC is given in Fig. 10a. K-Means shows some differences compared to PCA and WFC, while PCA and WFC are very similar in performance (Fig. 10b).

This case study of a complete three-dimensional geometallurgical block model demonstrates the flexibility of applying SWFC in practice. The objective was for the three clusters to be centred in specific values of iron recovery, which was fully achieved. The spatial correction step in the three clustering methods makes some changes in the final membership (Fig. 10b). Although these changes are small, they are worthwhile as they ensure that the derived clusters are as spatially compact as possible.

5 Conclusions and Future Work

Identifying geometallurgical clusters or domains in mining applications is very important not just to characterise geology and geochemistry, but also to assist in choosing optimal processing routes for parcels of ore with different properties. Geometallurgy is increasingly incorporating more information and more variables, which makes it more difficult to find useful cluster structures for mine planning purposes.

In this paper, the difficulty of traditional clustering methods is demonstrated when dealing with multivariate scenarios in which the cluster structures depend on different attributes, as is commonly the case in practice. A new clustering method is proposed which is based on fuzzy clustering but incorporates additional valuable characteristics such as feature selection, spatial correction and the flexibility of including expert knowledge. Expert knowledge in the proposed method can be incorporated through an appropriate distance definition (categorical or targeted distances) and forcing a specific weight to a particular attribute.

Three case studies were presented to illustrate the application of SWFC. The first case study was explicitly designed to construct clusters that depend on different subsets of attributes. While the traditional methods fail to discover the true clusters, WFC and SWFC can readily find the designed cluster structure and SWFC also constructs well-connected clusters by incorporating spatial information. The second case study was used to illustrate graphically the effectiveness of SWFC using a two-dimensional synthetic geometallurgical model. The clusters found by SWFC are spatially well-connected and the most compact. Finally, SWFC was applied to a complete synthetic geometallurgical block model to demonstrate its capability and flexibility in building clusters, which in this case are geometallurgical domains for iron recovery. By imposing a targeted distance for iron recovery and weight, SWFC can find the relevant secondary attributes that control the cluster structures. In summary, SWFC has been demonstrated to be capable of defining meaningful geometallurgical domains for different application scales, based either on samples or complete block models.

In future research, the geometallurgical uncertainty will also take into account for the clustering method. Uncertainty can be introduced by generating many realisations of the block model. The SWFC method can then include the distances between realisations to account for uncertainty. Also, the interaction potential could be reformulated to incorporate some form of multivariate spatial correlation, such as semivariogram or correlogram, instead of the Potts model. It is also necessary to investigate an optimisation formulation that can include the minimisation of compactness, maximisation of separation, feature selection and spatial correction all within a single integrated step.

References

Allard D, Guillot G (2000) Clustering geostatistical data. In: Proceedings 6th International Geostatistics Congress. Cape Town, SA, pp 49–62

Ambroise C, Dang M, Govaert G (1996) Clustering of spatial data by the EM algorithm. In: Geostatistics for Environmental Applications. Springer, Lisbon, pp 493–504

Bourgault G, Marcotte D, Legendre P (1992) The multivariate (co) variogram as a spatial weighting function in classification methods. Math Geol 24(5):463–478

Boykov Y, Veksler O (2006) Graph cuts in vision and graphics: theories and applications. In: Handbook of Mathematical Models in Computer Vision. Springer, Boston, pp 79–96

Carlo G, Paolo G, Roberto P (2017) Spatial clustering of curves with an application of satellite data. Spat Stat 20:110–124

Coward S, Dowd P (2015) Geometallurgical models for the quantification of uncertainty in mining project value chains. In: 37th APCOM Conference, Fairbanks Alaska, pp 360–369

Coward S, Dowd, PA, Vann J (2013) Value chain modelling to evaluate geometallurgical recovery factors. In: 36th APCOM Conference, Fundação Luiz Englert, Brazil; ISBN 978-85-61155-02-5, Porto Alegre, Brazil, pp 288–289

Coward S, Vann J, Dunham S, Stewart M (2009) The primary-response framework for geometallurgical variables. In: 7th International Mining Geology Conference. AusIMM, Perth, WA, pp 109–113

Davies DL, Bouldin DW (1979) A cluster separation measure. IEEE Trans Pattern Anal Mach Intell 1(2):224–227

Deutsch CV (1998) Cleaning categorical variable (lithofacies) realizations with maximum a-posteriori selection. Comput Geosci 24(6):551–562

Ding C, He X (2004) K-means clustering via principal component analysis. In: Twenty First International Conference on Machine Learning, ACM, Banff, Canada, p 29

Dunham S, Vann J (2007) Geometallurgy, geostatistics and project value–does your block model tell you what you need to know? In: Project Evaluation Conference. AusIMM, Melbourne, pp 189–196

Dunn JC (1973) A fuzzy relative of the isodata process and its use in detecting compact well-separated clusters. Cybernetics 3:32–57

Fouedjio F (2016) A clustering approach for discovering intrinsic clusters in multivariate geostatistical data. Mach Learn Data Min Pattern Recognit 9729:491–500

Friedman JH, Meulman JJ (2004) Clustering objects on subsets of attributes. J R Stat Soci Ser B Stat Methodol 66(4):815–839

Garrido M, Sepúlveda E, Navarro F (2017) Optimization of planning and scheduling of ore body with open pit extraction considering homogeneity in clays as geometallurgical variables. In: 5th International Seminar on Mine Planning, Santiago, pp 294–300

Goodfellow R, Dimitrakopoulos R (2017) Simultaneous stochastic optimization of mining complexes and mineral value chains. Math Geosci 49(3):341–360

Guillot G, Kan-King-Yu D, Michelin J, Huet P (2006) Inference of a hidden spatial tessellation from multivariate data: application to the delineation of homogeneous regions in an agricultural field. J R Stat Soc Ser C (Appl Stat) 55(3):407–430

Hoal KO, Woodhead J, Smith KS (2013) The importance of mineralogical input into geometallurgy programs. In: 2nd AusIMM International Geometallurgy Conference, October, Brisbane, QLD, pp 17–25

Hunt J, Berry R, Bradshaw D, Triffett B, Walters S (2014) Development of recovery domains: examples from the prominent hill IOCG deposit, Australia. Miner Eng 64:7–14

Hunt J, Kojovic T, Berry R (2013) Estimating comminution indices from ore mineralogy, chemistry and drill core logging. In: 2nd AusIMM International Geometallurgy Conference, October. Brisbane, QLD, pp 173–176

Keeney L, Walters SG (2011) A methodology for geometallurgical mapping and orebody modelling. In: 1st AusIMM International Geometallurgy Conference. September, Brisbane, QLD, pp 217–225

Leichliter S, Larson D (2013) Geometallurgy for two recovery process operations at Cripple Creek & Victor gold mine. Min Eng 65(1):29–33

Lishchuk V (2016) Geometallurgical programs—critical evaluation of applied methods and techniques, Licentiate thesis, Lulea University of Technology

López AA, Malpica JA (2008) High resolution satellite classification with graph cut algorithms. In: Lecture Notes in Computer Science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics) 5359 LNCS (PART 2), pp 105–112

Luchi D, Rodrigues A, Varejão FM, Santos W (2016) A genetic algorithm approach for clustering large data sets. In: 2016 IEEE 28th International Conference on Tools with Artificial Intelligence (ICTAI), IEEE, pp 570–576

Lund C, Lamberg P, Lindberg T (2015) Development of a geometallurgical framework to quantify mineral textures for process prediction. Miner Eng 82:61–77

Maulik U, Bandyopadhyay S (2000) Genetic algorithm-based clustering technique. Pattern Recognit 33:1455–1465

Nanda SJ, Panda G (2014) A survey on nature inspired metaheuristic algorithms for partitional clustering. Swarm Evolut Comput 16:1–18

Napier-Munn TJ, Morrell S, Morrison RD, Kojovic T (1996) Mineral comminution circuits: their operation and optimisation, vol 2. University of Queensland, Julius Kruttschnitt Mineral Research Centre

Newton M, Graham J (2011) Spatial modelling and optimisation of geometallurgical indices. In: First AusIMM International Geometallurgy Conference. Brisbane, QLD, pp 247–261

Nguyen K, Keeney L (2014) Spatial domaining of highly variable continuous geometallurgical data. In: XXVII International Mineral Processing Congress—IMPC 2014, Gecamin Digital Publications, pp 116–127

Oliver MA, Webster R (1989) A geostatistical basis for spatial weighting in multivariate classification. Math Geol 21(1):15–35

Pal NR, Bezdek JC (1995) On cluster validity for the fuzzy c-means model. IEEE Trans Fuzzy Syst 3(3):370–379

Ren M, Liu P, Wang Z, Yi J (2016) A self-adaptive fuzzy c-means algorithm for determining the optimal number of clusters. Comput Intell Neurosci. https://doi.org/10.1155/2016/2647389

Romary T, Ors F, Rivoirard J, Deraisme J (2015) Unsupervised classification of multivariate geostatistical data: two algorithms. Comput Geosci 85:96–103

Rousseeuw PJ (1987) Silhouettes: a graphical aid to the interpretation and validation of cluster analysis. J Comput Appl Math 20(C):53–65

Ruspini EH (1969) A new approach to clustering. Inf Control 15(1):22–32

Schnetzler E (1994) Visualization and cleaning of pixel-based images, Ph.D. thesis, Stanford University

Tarabalka Y, Charpiat G (2013) A graph-cut-based method for spatio-temporal segmentation of fire from satellite observations. Int Geosci Remote Sens Symp (IGARSS) 2:3494–3497

Tarabalka Y, Rana A (2014) Graph-cut-based model for spectral-spatial classification of hyperspectral images. In: International Geoscience and Remote Sensing Symposium, Quebec, Canada

Wang Y, Song H, Zhang Y (2016) Spectral-spatial classification of hyperspectral images using joint bilateral filter and graph cut based model. Remote Sens 8(9):748

Whitley D (1994) A genetic algorithm tutorial. Stat Comput 4(2):65–85

Wold S, Esbensen K, Geladi P (1987) Principal component analysis. Chemom Intell Lab Syst 2(1–3):37–52

Acknowledgements

This work was supported with the supercomputing resources provided by the Phoenix HPC service at the University of Adelaide. The first author acknowledges the support of CONICYT in the form of a Becas Chile scholarship.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Sepúlveda, E., Dowd, P.A. & Xu, C. Fuzzy Clustering with Spatial Correction and Its Application to Geometallurgical Domaining. Math Geosci 50, 895–928 (2018). https://doi.org/10.1007/s11004-018-9751-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11004-018-9751-0