Abstract

In this work we consider the learning setting where, in addition to the training set, the learner receives a collection of auxiliary hypotheses originating from other tasks. We focus on a broad class of ERM-based linear algorithms that can be instantiated with any non-negative smooth loss function and any strongly convex regularizer. We establish generalization and excess risk bounds, showing that, if the algorithm is fed with a good combination of source hypotheses, generalization happens at the fast rate \(\mathcal {O}(1/m)\) instead of the usual \(\mathcal {O}(1/\sqrt{m})\). On the other hand, if the combination of source hypotheses is a poor fit for the target task, we recover the usual learning rate. As a byproduct of our study, we also prove a new bound on the Rademacher complexity of the smooth loss class under weaker assumptions than previous works.

1 Introduction

In the standard supervised machine learning setting, the learner receives a set of labeled examples, known as the training set. However, very often we have additional information at hand that could benefit the learning process. One such example is the use of unlabeled data drawn from the marginal distribution, which gives rise to the semi-supervised learning setting (Chapelle et al. 2006). Another example is when the training data come from a related problem, as in multi-task learning (Caruana 1997), domain adaptation (Ben-David et al. 2010; Mansour et al. 2009), and transfer learning (Pan and Yang 2010; Taylor and Stone 2009). Other examples include the use of structural information, such as a taxonomy, different views of the same data (Blum and Mitchell 1998), or even privileged information (Vapnik and Vashist 2009; Sharmanska et al. 2013). In recent years all these directions have received considerable empirical and theoretical attention.

In this work we focus on a less theoretically studied way of using supplementary information: learning with auxiliary hypotheses, that is, classifiers or regressors originating from other tasks. In particular, in addition to the training set, we assume that the learner is supplied with a collection of hypotheses and their predictions on the training set itself. The goal of the learner is to figure out which hypotheses are helpful and to use them to improve the prediction performance of the trained classifier. We call these auxiliary hypotheses the source hypotheses, and we say that helpful ones accelerate the learning on the target task. We focus on the linear setting, that is, we train a linear classifier and the source hypotheses are used additively in the prediction process, weighted by arbitrary weights. This generalizes the setting in which the outputs of the source hypotheses are concatenated with the feature vector, a widely used heuristic (Bergamo and Torresani 2014; Li et al. 2010; Tommasi et al. 2014).

The scenario described above is related to Transfer Learning (TL) and Domain Adaptation (DA), which aim at learning effectively from a possibly small amount of data by reusing prior knowledge (Thrun and Pratt 1998; Pan and Yang 2010; Taylor and Stone 2009; Ben-David et al. 2010). However, transferring from hypotheses offers an advantage over the TL and DA frameworks, which require access to the data of the source domain. For example, in DA (Ben-David et al. 2010), one employs large unlabeled samples to estimate the relatedness of the source and target domains in order to perform the adaptation. Even if unlabeled data are abundant, estimating the adaptation parameters can be computationally prohibitive, for example when a large number of domains is involved or when new domains are acquired incrementally.

A recently proposed setting, closer to the one we consider, is Hypothesis Transfer Learning (HTL) (Kuzborskij and Orabona 2013; Ben-David and Urner 2013), where the practical limitations of TL and DA are alleviated through indirect access to the source domain by means of a source hypothesis. Also, in the HTL setting there are no restrictions on how the source hypotheses can be used to boost the performance on the target task.

Empirically, the setting considered in this paper has already been extensively exploited (Yang et al. 2007; Orabona et al. 2009; Tommasi et al. 2010; Luo et al. 2011; Kuzborskij et al. 2013). A first theoretical treatment of this setting was given by Kuzborskij and Orabona (2013), where we analyzed a linear HTL algorithm that solves a regularized least-squares problem with a single fixed, unweighted source hypothesis. We proved a polynomial generalization bound that depends on the performance of the fixed source hypothesis on the target task.

1.1 Our contributions

We extend the formulation in Kuzborskij and Orabona (2013) to a general regularized Empirical Risk Minimization (ERM) problem with respect to any non-negative smooth loss function, not necessarily convex, and any strongly convex regularizer. We prove high-probability generalization bounds that exhibit a fast rate of convergence, that is \(\mathcal {O}(1/m)\), whenever a weighted combination of multiple source hypotheses performs well on the target task. In addition, we show that, if the combination is perfect, the error on the training set becomes deterministically equal to the generalization error. Furthermore, we analyze the excess risk of our formulation and conclude that a good source hypothesis also speeds up the convergence to the performance of the best-in-the-class. As a byproduct of our study, we prove an upper bound on the Rademacher complexity of a smooth loss class that provides extra information compared to that of Lipschitz loss classes. Our analysis, which might be of independent interest, is an alternative to the analysis of Srebro et al. (2010) and holds under much weaker assumptions.

The rest of the paper is organized as follows. In the next section we briefly review previous work, and in Sect. 3 we introduce the definitions used throughout. Next, we formally state our formulation in Sect. 4 and present the main results right after, in Sect. 5. In Sect. 5.1 we discuss their implications and compare them to the body of literature on learning with fast rates and on transfer learning. Next, in Sect. 6, we present the proofs of our main results. Section 7 concludes the paper.

2 Related work

Kuzborskij and Orabona (2013) showed that the generalization ability of the regularized least-squares HTL algorithm improves if the supplied source hypothesis performs well on the target task. More specifically, we proposed a key criterion, the risk of the source hypothesis on the target domain, that captures the relatedness of the source and target domains. Later, Ben-David and Urner (2013) showed a similar bound, but with a different quantity capturing the relatedness between source and target. Instead of considering a general source hypothesis, they confined their analysis to the linear hypothesis class. This allowed them to show that the target hypothesis generalizes better when it is close to a good source hypothesis. From this perspective, it is easy to interpret the source hypothesis as an initialization point in the hypothesis class: naturally, given a starting position that is close to the best in the class, one generalizes well.

Prior to these works, there were few studies trying to understand learning with auxiliary hypotheses under different conditions. Li and Bilmes (2007) analyzed a Bayesian approach to HTL. Employing a PAC-Bayes analysis, they showed that, given a prior on the hypothesis class, the generalization ability of logistic regression improves if the prior is informative on the target task. Mansour et al. (2008) analyzed a setting of multiple source hypotheses combination. There, in addition to the source hypotheses, the learner receives unlabeled samples drawn from the source distributions, which are used to weight and combine these source hypotheses. They studied the possibility of learning in such a scenario; however, they did not address the generalization properties of any particular algorithm.

Unlike these works, we focus on the generalization ability of a large family of HTL algorithms that generate the target predictor given a set of multiple source hypotheses. In particular, we analyze Regularized Empirical Risk Minimization with any non-negative smooth loss and any strongly convex regularizer. Thus our analysis covers a wide range of algorithms and helps explain their empirical success. One category of those, prevalent in computer vision (Kienzle and Chellapilla 2006; Yang et al. 2007; Tommasi et al. 2010; Aytar and Zisserman 2011; Kuzborskij et al. 2013; Tommasi et al. 2014), employs the principle of biased regularization (Schölkopf et al. 2001). For example, instead of penalizing large weights by introducing the term \(\Vert \mathbf {w}\Vert ^2\) into the objective function, one encourages them to stay close to some “prior” model, that is, one penalizes \(\Vert \mathbf {w}- \mathbf {w}^{\text {prior}}\Vert ^2\). This principle has also found applications in other fields, such as NLP (Daumé III 2007; Daumé III et al. 2010) and electromyography classification (Orabona et al. 2009; Tommasi et al. 2013). Many empirical works have also investigated the use of the source hypotheses in a “black box” sense, sometimes without even posing the problem as transfer learning (Duan et al. 2009; Li et al. 2010; Luo et al. 2011; Bergamo and Torresani 2014), and recently in conjunction with deep neural networks (Oquab et al. 2014).

In the literature there are several other machine learning directions conceptually similar to the one we consider in this work. Arguably, the best known one is the Domain Adaptation (DA) problem. The standard machine learning assumption is that the training and testing sets are sampled from the same probability distribution. In such a case, we expect that a hypothesis generated by the learner from that training set will lead to sensible predictions on the testing set. The difficulty arises when the training and testing distributions differ, that is, when the training set is sampled from the source domain and the testing set from the target domain. Clearly, the hypothesis generated from the source domain can perform arbitrarily badly on the target one. The DA paradigm, which addresses this issue, has received a lot of attention in recent years (Ben-David et al. 2010; Mansour et al. 2009). Although this framework is different from the one we study in this work, we identify similarities and compare our findings with the theory of learning from different domains in Sect. 5.2.

3 Definitions

In this section we introduce the definitions used in the rest of the paper.

We denote random variables by capital letters. The expected value of a random variable distributed according to a probability distribution \(\mathcal {D}\) is denoted by \({{\mathrm{\mathbb {E}}}}_{X \sim \mathcal {D}}[X]\) and its variance by \(\mathrm {Var}_{X \sim \mathcal {D}}[X]\). Lowercase and uppercase bold letters stand for vectors and matrices respectively, e.g. \(\mathbf {x}= [x_1, \ldots , x_d]^{\top }\) and \(\mathbf {A}\in \mathbb {R}^{d_1 \times d_2 }\).

Denoting by \(\mathcal {X}\) and \(\mathcal {Y}\) respectively the input and output space of the learning problem, the training set is \(S=\{(\mathbf {x}_i,y_i)\}_{i=1}^m\), drawn i.i.d. from the probability distribution \(\mathcal {D}\) defined over \(\mathcal {X}\times \mathcal {Y}\). Without loss of generality we will have \(\mathcal {X}= \{\mathbf {x}: \Vert \mathbf {x}\Vert \le 1\}\), and we will focus on problems where \(\mathcal {Y}= [-C, C]\).

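To measure the accuracy of a learning algorithm, we introduce a non-negative loss function \(\ell (h(\mathbf {x}), y)\), which measures the cost incurred by predicting \(h(\mathbf {x})\) instead of y. The risk of a hypothesis h with respect to a probability distribution \(\mathcal {D}\), and the empirical risk measured on the sample S, are then defined as
\[
R(h) = {{\mathrm{\mathbb {E}}}}_{(X, Y) \sim \mathcal {D}}\left[ \ell (h(X), Y) \right] , \qquad \hat{R}_S(h) = \frac{1}{m} \sum _{i=1}^m \ell (h(\mathbf {x}_i), y_i).
\]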

In the following, the risk is measured with respect to the probability distribution of the target domain, unless stated otherwise. We capture the smoothness of the loss function via the following definition.

3.1 H-smooth loss function

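We say that a non-negative loss function \(\ell : \mathcal {Y}\times \mathcal {Y}\mapsto \mathbb {R}_+\) is H -smooth iff, for all \(\hat{y}_1, \hat{y}_2, y \in \mathcal {Y}\),
\[
\left| \ell '(\hat{y}_1, y) - \ell '(\hat{y}_2, y) \right| \le H \left| \hat{y}_1 - \hat{y}_2 \right| ,
\]
where \(\ell '\) denotes the derivative of \(\ell \) with respect to its first argument.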
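As a numerical illustration of H-smoothness (a sanity check, not a proof), the following Python sketch estimates the smoothness constant of two losses mentioned in this paper by sampling pairs of predictions and recording the largest observed ratio \(|\ell '(\hat{y}_1, y) - \ell '(\hat{y}_2, y)| / |\hat{y}_1 - \hat{y}_2|\). The helper names, the sampling range and the number of trials are our own illustrative choices. For the square loss \((z - y)^2\) the ratio is always 2, and for the squared hinge loss \(\max \{0, 1 - zy\}^2\) with labels in \(\{-1, 1\}\) it never exceeds 2, so both are consistent with H = 2.

```python
import random


def d_square(z, y):
    """Derivative w.r.t. the prediction z of the square loss (z - y)**2."""
    return 2.0 * (z - y)


def d_squared_hinge(z, y):
    """Derivative w.r.t. z of the squared hinge loss max(0, 1 - z*y)**2."""
    return -2.0 * y * max(0.0, 1.0 - z * y)


def smoothness_estimate(dloss, labels, trials=10000, span=5.0, seed=0):
    """Largest observed |l'(a, y) - l'(b, y)| / |a - b| over random pairs (a, b).

    This is only a lower estimate of the smallest valid H, not a certificate."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        y = rng.choice(labels)
        a, b = rng.uniform(-span, span), rng.uniform(-span, span)
        if a != b:  # skip the (measure-zero) degenerate pair
            worst = max(worst, abs(dloss(a, y) - dloss(b, y)) / abs(a - b))
    return worst


print("square loss:", smoothness_estimate(d_square, [-1.0, 0.5, 1.0]))
print("squared hinge:", smoothness_estimate(d_squared_hinge, [-1.0, 1.0]))
```

Sampling can only ever under-estimate H, which is why the sketch is framed as a consistency check against a known constant rather than a way to certify smoothness.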

In this work we will make use of strongly convex regularizers, functions that are defined as follows.

3.2 Strongly convex function

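A function \(\varOmega \) is \(\sigma \)-strongly convex w.r.t. a norm \(\Vert \cdot \Vert \) iff for all \(\mathbf {w}, \mathbf {v}\), and \(\alpha \in (0, 1)\) we have
\[
\varOmega (\alpha \mathbf {w}+ (1-\alpha ) \mathbf {v}) \le \alpha \varOmega (\mathbf {w}) + (1-\alpha ) \varOmega (\mathbf {v}) - \frac{\sigma }{2} \alpha (1-\alpha ) \Vert \mathbf {w}- \mathbf {v}\Vert ^2 .
\]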
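A concrete instance: the regularizer \(\Vert \cdot \Vert _2^2\), used later for least-squares with biased regularization, is 2-strongly convex w.r.t. the Euclidean norm, because \(\alpha \Vert \mathbf {w}\Vert _2^2 + (1-\alpha )\Vert \mathbf {v}\Vert _2^2 - \Vert \alpha \mathbf {w}+ (1-\alpha )\mathbf {v}\Vert _2^2 = \alpha (1-\alpha )\Vert \mathbf {w}- \mathbf {v}\Vert _2^2\). The Python sketch below (illustrative helper names, random Gaussian test points) checks the defining inequality numerically: its slack is zero up to rounding for \(\sigma = 2\) and turns negative for a larger \(\sigma \).

```python
import random


def sq_norm(w):
    """Omega(w) = ||w||_2^2."""
    return sum(x * x for x in w)


def strong_convexity_gap(omega, sigma, w, v, alpha):
    """Slack in the sigma-strong-convexity inequality (Euclidean norm) at (w, v, alpha).

    Omega is sigma-strongly convex iff this is >= 0 for all w, v and alpha in (0, 1)."""
    mix = [alpha * a + (1 - alpha) * b for a, b in zip(w, v)]
    diff = [a - b for a, b in zip(w, v)]
    return (alpha * omega(w) + (1 - alpha) * omega(v) - omega(mix)
            - 0.5 * sigma * alpha * (1 - alpha) * sq_norm(diff))


def min_gap(omega, sigma, dim=3, trials=2000, seed=0):
    """Smallest slack over random Gaussian pairs; negative means the inequality failed."""
    rng = random.Random(seed)
    worst = float("inf")
    for _ in range(trials):
        w = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
        alpha = rng.uniform(0.01, 0.99)
        worst = min(worst, strong_convexity_gap(omega, sigma, w, v, alpha))
    return worst


print(min_gap(sq_norm, 2.0))  # zero up to rounding: ||.||^2 is 2-strongly convex
print(min_gap(sq_norm, 2.5))  # negative: sigma = 2.5 is too large
```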

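We will quantify the complexity of a hypothesis class by means of the Rademacher complexity (Bartlett and Mendelson 2003). In particular, the empirical Rademacher complexity of the hypothesis class \(\mathcal {H}\) measured on the sample S and its expectation are defined as
\[
\hat{\mathfrak {R}}_S(\mathcal {H}) = {{\mathrm{\mathbb {E}}}}_{\varvec{\varepsilon }}\left[ \sup _{h \in \mathcal {H}} \frac{1}{m} \sum _{i=1}^m \varepsilon _i h(\mathbf {x}_i) \right] , \qquad \mathfrak {R}_m(\mathcal {H}) = {{\mathrm{\mathbb {E}}}}_{S \sim \mathcal {D}^m}\left[ \hat{\mathfrak {R}}_S(\mathcal {H}) \right] .
\]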

Here, the \(\varepsilon _i\) are independent random variables such that \(\mathbb {P}(\varepsilon _i=1) = \mathbb {P}(\varepsilon _i=-1) = \frac{1}{2}\). As in the case of the risk, the Rademacher complexity is measured with respect to the probability distribution of the target domain, unless stated otherwise.
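For intuition, consider the linear class \(\{\mathbf {x}\mapsto \left\langle \mathbf {w}, \mathbf {x} \right\rangle : \Vert \mathbf {w}\Vert _2 \le B\}\), a hypothetical example chosen by us. Assuming the normalization \(\hat{\mathfrak {R}}_S(\mathcal {H}) = {{\mathrm{\mathbb {E}}}}_{\varvec{\varepsilon }}[\sup _{h} \frac{1}{m} \sum _i \varepsilon _i h(\mathbf {x}_i)]\) (with no absolute value), the Cauchy–Schwarz inequality gives the inner supremum in closed form as \(\frac{B}{m}\Vert \sum _i \varepsilon _i \mathbf {x}_i\Vert _2\), and Jensen's inequality bounds its expectation by \(\frac{B}{m}\sqrt{\sum _i \Vert \mathbf {x}_i\Vert _2^2} \le B/\sqrt{m}\) on the unit ball. The Python sketch below computes the empirical Rademacher complexity of this class either exactly, by enumerating all \(2^m\) sign vectors (small m only), or by Monte Carlo.

```python
import itertools
import math
import random


def _sup_linear(signs, xs, B):
    """sup over ||w||_2 <= B of (1/m) sum_i eps_i <w, x_i>, via Cauchy-Schwarz."""
    d = len(xs[0])
    z = [sum(e * x[j] for e, x in zip(signs, xs)) for j in range(d)]
    return B * math.sqrt(sum(c * c for c in z)) / len(xs)


def rademacher_linear(xs, B, draws=None, seed=0):
    """Empirical Rademacher complexity of {x -> <w, x> : ||w||_2 <= B} on xs.

    draws=None enumerates all 2**m sign vectors (exact, feasible for small m);
    otherwise it averages over `draws` random sign vectors (Monte Carlo)."""
    m = len(xs)
    if draws is None:
        vals = [_sup_linear(s, xs, B) for s in itertools.product((-1, 1), repeat=m)]
    else:
        rng = random.Random(seed)
        vals = [_sup_linear([rng.choice((-1, 1)) for _ in range(m)], xs, B)
                for _ in range(draws)]
    return sum(vals) / len(vals)


rng = random.Random(1)
xs = [[rng.uniform(-0.7, 0.7), rng.uniform(-0.7, 0.7)] for _ in range(8)]  # ||x|| < 1
exact = rademacher_linear(xs, B=1.0)
jensen = math.sqrt(sum(a * a + b * b for a, b in xs)) / len(xs)
print(exact, jensen, 1.0 / math.sqrt(len(xs)))
```

The exact value should never exceed the Jensen bound, which in turn is at most \(1/\sqrt{8}\) for these eight points.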

4 Transferring from auxiliary hypotheses

In the following we will capture and generalize many transfer learning formulations that employ a collection of given source hypotheses \(\{h^{\text {src}}_i : \mathcal {X}\mapsto \mathcal {Y}\}_{i=1}^n\) within the framework of Regularized Empirical Risk Minimization (ERM). These problems typically involve a criterion for selecting and combining source hypotheses, with the goal of increasing performance on the target task (Yang et al. 2007; Tommasi et al. 2014; Kuzborskij et al. 2015). Indeed, some source hypotheses might come from tasks similar to the target task, and the goal of an algorithm is to select only the relevant ones. In this work we will consider the source combination
\[
h^{\text {src}}_{\varvec{\beta }}(\mathbf {x}) = \sum _{i=1}^n \beta _i h^{\text {src}}_i(\mathbf {x})
\]

and the target hypothesis
\[
h_{\mathbf {w}, \varvec{\beta }}(\mathbf {x}) = \left\langle \mathbf {w}, \mathbf {x} \right\rangle + h^{\text {src}}_{\varvec{\beta }}(\mathbf {x}),
\]

with the relevance of the sources characterized by the parameter \(\varvec{\beta }\in \mathbb {R}^n\). We will focus on Regularized ERM formulations with any non-negative smooth loss function and any strongly convex regularizer. This places our problem in a class of problems that can be solved efficiently, yet are endowed with interesting properties.
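To make the additive use of the sources concrete, here is a minimal Python sketch of the source combination \(h^{\text {src}}_{\varvec{\beta }}(\mathbf {x}) = \sum _i \beta _i h^{\text {src}}_i(\mathbf {x})\) and the target hypothesis \(\left\langle \mathbf {w}, \mathbf {x} \right\rangle + h^{\text {src}}_{\varvec{\beta }}(\mathbf {x})\). Source hypotheses are modeled as opaque callables that are only queried, never inspected, which is the “black box” view; the two toy sources are made up for illustration.

```python
import math
from typing import Callable, Sequence

Vector = Sequence[float]
Hypothesis = Callable[[Vector], float]


def source_combination(sources: Sequence[Hypothesis], beta: Vector) -> Hypothesis:
    """Return x -> sum_i beta_i * h_i^src(x); each source is only queried, never inspected."""
    if len(sources) != len(beta):
        raise ValueError("need one weight per source hypothesis")

    def h_src_beta(x: Vector) -> float:
        return sum(b * h(x) for b, h in zip(beta, sources))

    return h_src_beta


def target_hypothesis(w: Vector, sources: Sequence[Hypothesis], beta: Vector) -> Hypothesis:
    """Return x -> <w, x> + h^src_beta(x)."""
    h_src_beta = source_combination(sources, beta)

    def h(x: Vector) -> float:
        return sum(wi * xi for wi, xi in zip(w, x)) + h_src_beta(x)

    return h


# Two toy "black-box" sources with outputs in [-1, 1] (purely illustrative).
sources = [lambda x: math.tanh(x[0]), lambda x: 1.0 if x[1] >= 0 else -1.0]
h = target_hypothesis(w=[0.5, -0.25], sources=sources, beta=[1.0, 0.5])
print(h([0.2, 0.4]))
```

Setting all entries of beta to zero recovers a plain linear predictor, which is the "no auxiliary information" case.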

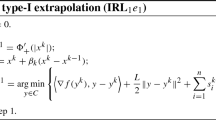

4.1 Regularized ERM for transferring from auxiliary hypotheses

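Let \(\ell : \mathcal {Y}\times \mathcal {Y}\mapsto \mathbb {R}_+\) be an H-smooth loss function and let \(\varOmega : \mathcal {H}\mapsto \mathbb {R}_+\) be a \(\sigma \)-strongly convex function w.r.t. a norm \(\Vert \cdot \Vert \). Given the target training set \(S = \{(\mathbf {x}_i, y_i)\}_{i=1}^m\), \(\lambda \in \mathbb {R}_+\), source hypotheses \(\{h^{\text {src}}_i\}_{i=1}^n\), and parameters \(\varvec{\beta }\) obeying \(\varOmega (\varvec{\beta }) \le \rho \), the algorithm generates the target hypothesis \(h_{\hat{\mathbf {w}}, \varvec{\beta }}\), such that
\[
h_{\hat{\mathbf {w}}, \varvec{\beta }}(\mathbf {x}) = \left\langle \hat{\mathbf {w}}, \mathbf {x} \right\rangle + h^{\text {src}}_{\varvec{\beta }}(\mathbf {x}), \tag{1}
\]
where
\[
\hat{\mathbf {w}} = \mathop {\mathrm {arg\,min}}_{\mathbf {w}\in \mathcal {H}} \left\{ \frac{1}{m} \sum _{i=1}^m \ell \left( \left\langle \mathbf {w}, \mathbf {x}_i \right\rangle + h^{\text {src}}_{\varvec{\beta }}(\mathbf {x}_i), y_i \right) + \lambda \varOmega (\mathbf {w}) \right\} . \tag{2}
\]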

Note that (2) is minimized only w.r.t. \(\mathbf {w}\), that is, we do not analyze any particular algorithm that searches for the optimal weights of the source hypotheses. However, we assume that \(\varOmega (\varvec{\beta }) \le \rho \), that is, we constrain \(\varvec{\beta }\) through a strongly convex function. Thus, we cover regularized algorithms generating \(\varvec{\beta }\), which include most of the empirical work in this field, as well as potential new algorithms.
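The formulation leaves the solver open. Purely as an illustration, here is a pure-Python gradient-descent sketch of (2) for one instance covered by the analysis: the square loss with \(\varOmega (\mathbf {w}) = \frac{1}{2}\Vert \mathbf {w}\Vert _2^2\) (1-strongly convex), that is, minimizing \(\frac{1}{m}\sum _i (\left\langle \mathbf {w}, \mathbf {x}_i \right\rangle + h^{\text {src}}_{\varvec{\beta }}(\mathbf {x}_i) - y_i)^2 + \frac{\lambda }{2}\Vert \mathbf {w}\Vert _2^2\) with \(\varvec{\beta }\) fixed and the source predictions precomputed. The function name, step-size rule and iteration count are our own assumptions, not part of the paper.

```python
def regularized_erm_square(xs, ys, src_preds, lam, iters=5000):
    """Gradient-descent sketch of (2) for l(z, y) = (z - y)**2 and Omega(w) = 0.5 * ||w||_2**2.

    src_preds[i] holds h^src_beta(x_i) for the fixed weights beta; only w is optimized.
    Assumes ||x_i||_2 <= 1, so the objective's gradient is (2 + lam)-Lipschitz and
    the step size 1 / (2 + lam) is safe."""
    m, d = len(xs), len(xs[0])
    w = [0.0] * d
    step = 1.0 / (2.0 + lam)
    for _ in range(iters):
        grad = [lam * wj for wj in w]  # gradient of (lam/2) * ||w||^2
        for x, y, s in zip(xs, ys, src_preds):
            r = sum(wj * xj for wj, xj in zip(w, x)) + s - y  # residual of h_{w,beta}(x_i)
            for j in range(d):
                grad[j] += 2.0 * r * x[j] / m
        w = [wj - step * gj for wj, gj in zip(w, grad)]
    return w


# Toy 1-D data: the source combination explains the offset, w must learn the slope.
xs = [[0.1 * i] for i in range(-5, 6)]
src_preds = [0.3] * len(xs)
ys = [0.8 * x[0] + 0.3 for x in xs]
print(regularized_erm_square(xs, ys, src_preds, lam=0.1))
```

If the source predictions already equal the labels, the gradient at \(\mathbf {w}= \mathbf {0}\) vanishes and the sketch returns the zero vector: the sources alone do all the work.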

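In the following we will pay special attention to a quantity that captures the performance of the source hypothesis combination \(h^{\text {src}}_{\varvec{\beta }}(\mathbf {x})\) on the target domain:
\[
R^{\text {src}}= R(h^{\text {src}}_{\varvec{\beta }}) = {{\mathrm{\mathbb {E}}}}_{(X, Y) \sim \mathcal {D}}\left[ \ell (h^{\text {src}}_{\varvec{\beta }}(X), Y) \right] .
\]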
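Since \(R^{\text {src}}\) is an expectation under the target distribution, in practice one can only estimate it. Assuming a held-out target sample of size m drawn independently of how \(\varvec{\beta }\) was chosen, and a loss with values in [0, M], Hoeffding's inequality gives, with probability at least \(1 - \delta \), the one-sided bound \(R^{\text {src}}\le \hat{R} + M\sqrt{\ln (1/\delta )/(2m)}\), where \(\hat{R}\) is the empirical risk of \(h^{\text {src}}_{\varvec{\beta }}\). The Python sketch below computes both quantities; the helper names are hypothetical, and this estimate is not part of the paper's analysis.

```python
import math


def empirical_risk(h, loss, sample):
    """Average loss of hypothesis h over a list of (x, y) pairs."""
    return sum(loss(h(x), y) for x, y in sample) / len(sample)


def source_risk_upper_bound(h_src_beta, loss, sample, M, delta):
    """Return (empirical risk, one-sided Hoeffding upper bound on R^src).

    Valid with probability >= 1 - delta only if h_src_beta was fixed before
    `sample` was drawn and the loss takes values in [0, M]."""
    r_hat = empirical_risk(h_src_beta, loss, sample)
    slack = M * math.sqrt(math.log(1.0 / delta) / (2.0 * len(sample)))
    return r_hat, r_hat + slack


def square_loss(z, y):
    return (z - y) ** 2


sample = [([0.0], 1.0), ([1.0], 1.0)]
print(source_risk_upper_bound(lambda x: 0.5, square_loss, sample, M=4.0, delta=0.05))
```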

Our analysis will focus on the generalization properties of \(h_{\hat{\mathbf {w}}, \varvec{\beta }}\). In particular, our main goal will be to understand the impact of the source hypothesis combination on the performance of the target hypothesis. In our analysis we will discuss various regimes of interest, for example considering perfect and arbitrarily bad source hypotheses. Our discussion will cover scenarios where the auxiliary hypotheses accelerate learning and the conditions under which we can provably expect perfect generalization. Finally, we will consider the consistency of algorithm (1) and pinpoint conditions under which we achieve faster convergence to the performance of the best-in-the-class.

One special example covered by our analysis, commonly applied in transfer learning, is biased regularization (Schölkopf et al. 2001). Consider the following least-squares based algorithm.

4.2 Least-squares with biased regularization

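Given the target training set \(S = \{(\mathbf {x}_i, y_i)\}_{i=1}^m\), source hypotheses \(\{\mathbf {w}^{\text {src}}_i\}_{i=1}^n \subset \mathcal {H}\), parameters \(\varvec{\beta }\in \mathbb {R}^n\) and \(\lambda \in \mathbb {R}_+\), the algorithm generates the target hypothesis \(h(\mathbf {x}) = \left\langle \hat{\mathbf {w}}, \mathbf {x} \right\rangle \), where
\[
\hat{\mathbf {w}} = \mathop {\mathrm {arg\,min}}_{\mathbf {w}\in \mathcal {H}} \left\{ \frac{1}{m} \sum _{i=1}^m \left( \left\langle \mathbf {w}, \mathbf {x}_i \right\rangle - y_i \right) ^2 + \lambda \left\Vert \mathbf {w}- \mathbf {W}^{\text {src}}\varvec{\beta }\right\Vert _2^2 \right\} \tag{3}
\]
and \(\mathbf {W}^{\text {src}}= [\mathbf {w}^{\text {src}}_1, \ldots , \mathbf {w}^{\text {src}}_n]\) is the matrix whose columns are the source hypotheses.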

This problem has a simple intuitive interpretation: minimize the training error on the target training set while keeping the solution close to the linear combination of the source hypotheses. One can also arrive at (3) naturally from a probabilistic perspective: the solution \(\hat{\mathbf {w}}\) is a maximum a posteriori estimate when the conditional distribution is Gaussian and the prior is a Gaussian distribution with mean \(\mathbf {W}^{\text {src}}\varvec{\beta }\) and covariance \(\frac{1}{\lambda } \mathbf {I}\). Even though biased regularization is a simple idea, it has found success in a plethora of transfer learning applications, ranging from computer vision (Kienzle and Chellapilla 2006; Yang et al. 2007; Tommasi et al. 2010; Aytar and Zisserman 2011; Kuzborskij et al. 2013; Tommasi et al. 2014) to NLP (Daumé III 2007) and electromyography classification (Orabona et al. 2009; Tommasi et al. 2013).

Claim

Least-Squares with Biased Regularization is a special case of the Regularized ERM in (1).

Proof

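Introduce \(\mathbf {w}'\), such that \(\mathbf {w}' = \mathbf {w}- \mathbf {W}^{\text {src}}\varvec{\beta }\). Then problem (3) is equivalent to
\[
\hat{\mathbf {w}}' = \mathop {\mathrm {arg\,min}}_{\mathbf {w}'} \left\{ \frac{1}{m} \sum _{i=1}^m \left( \left\langle \mathbf {w}', \mathbf {x}_i \right\rangle + \sum _{j=1}^n \beta _j \left\langle \mathbf {w}^{\text {src}}_j, \mathbf {x}_i \right\rangle - y_i \right) ^2 + \lambda \Vert \mathbf {w}' \Vert _2^2 \right\} , \qquad \hat{\mathbf {w}} = \hat{\mathbf {w}}' + \mathbf {W}^{\text {src}}\varvec{\beta },
\]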

which in turn is a special version of (2) when \(h^{\text {src}}_i(\mathbf {x}) = \left\langle \mathbf {w}^{\text {src}}_i, \mathbf {x} \right\rangle \), we use the square loss, and \(\Vert \cdot \Vert _2^2\) as regularizer. \(\square \)
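To make the change of variables concrete, the following pure-Python sketch solves both problems through their normal equations and checks that \(\hat{\mathbf {w}} = \hat{\mathbf {w}}' + \mathbf {W}^{\text {src}}\varvec{\beta }\). We assume the \(\frac{1}{m}\sum _i\) normalization of the data term; setting the gradient of \(\frac{1}{m}\sum _i (\left\langle \mathbf {w}, \mathbf {x}_i \right\rangle - y_i)^2 + \lambda \Vert \mathbf {w}- \varvec{\mu }\Vert _2^2\) to zero gives \((\mathbf {A} + \lambda \mathbf {I})\mathbf {w} = \frac{1}{m}\sum _i y_i \mathbf {x}_i + \lambda \varvec{\mu }\) with \(\mathbf {A} = \frac{1}{m}\sum _i \mathbf {x}_i \mathbf {x}_i^{\top }\) and \(\varvec{\mu } = \mathbf {W}^{\text {src}}\varvec{\beta }\). The small Gaussian-elimination solver and the random data are illustrative choices of ours.

```python
import random


def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting (small dense A)."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))
        M[c], M[p] = M[p], M[c]
        for r in range(c + 1, n):
            f = M[r][c] / M[c][c]
            for k in range(c, n + 1):
                M[r][k] -= f * M[c][k]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][k] * z[k] for k in range(r + 1, n))) / M[r][r]
    return z


def normal_matrix(xs, lam):
    """(1/m) sum_i x_i x_i^T + lam * I, shared by both problems."""
    m, d = len(xs), len(xs[0])
    return [[sum(x[i] * x[j] for x in xs) / m + (lam if i == j else 0.0)
             for j in range(d)] for i in range(d)]


def biased_least_squares(xs, ys, mu, lam):
    """argmin_w (1/m) sum (<w,x_i> - y_i)^2 + lam ||w - mu||^2, with mu = W^src beta."""
    m, d = len(xs), len(xs[0])
    rhs = [sum(y * x[j] for x, y in zip(xs, ys)) / m + lam * mu[j] for j in range(d)]
    return solve(normal_matrix(xs, lam), rhs)


def erm_with_linear_sources(xs, ys, mu, lam):
    """argmin_w' (1/m) sum (<w',x_i> + <mu,x_i> - y_i)^2 + lam ||w'||^2."""
    m, d = len(xs), len(xs[0])
    resid = [y - sum(a * b for a, b in zip(mu, x)) for x, y in zip(xs, ys)]
    rhs = [sum(r * x[j] for x, r in zip(xs, resid)) / m for j in range(d)]
    return solve(normal_matrix(xs, lam), rhs)


rng = random.Random(0)
d, m, lam = 3, 10, 0.5
xs = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(m)]
ys = [rng.uniform(-1.0, 1.0) for _ in range(m)]
w_src = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(2)]  # columns of W^src
beta = [0.7, -0.2]
mu = [sum(b * w[j] for b, w in zip(beta, w_src)) for j in range(d)]  # W^src beta

w_hat = biased_least_squares(xs, ys, mu, lam)
w_prime = erm_with_linear_sources(xs, ys, mu, lam)
print(max(abs(a - (b + c)) for a, b, c in zip(w_hat, w_prime, mu)))
```

The printed discrepancy should be at the level of floating-point rounding.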

Albeit practically appealing, formulation (3) is limited in that the source hypotheses must be linear predictors living in the same space as the target predictor. In contrast, formulation (1) naturally generalizes biased regularization, allowing one to treat the source hypotheses as “black box” predictors.

5 Main results

In this section, we present the main results of this work: generalization and excess risk bounds for the Regularized ERM. In Sect. 5.1 we discuss the implications of these results in detail, while we defer the proofs to Sect. 6.

The first bound demonstrates the utility of a perfect combination of source hypotheses, while the second lets us observe the dependence on an arbitrary combination. In particular, the first bound makes explicit the intuition that, given a perfect source hypothesis, no learning is required. In other words, when \(R^{\text {src}}=0\) the empirical risk becomes equal to the risk with probability one.

Theorem 1

Let \(h_{\hat{\mathbf {w}}, \varvec{\beta }}\) be generated by Regularized ERM, given an m-sized training set S sampled i.i.d. from the target domain, source hypotheses \(\{h^{\text {src}}_i : \Vert h^{\text {src}}_i\Vert _\infty \le 1 \}_{i=1}^n\), any source weights \(\varvec{\beta }\) obeying \(\varOmega (\varvec{\beta }) \le \rho \), and \(\lambda \in \mathbb {R}_+\). Assume that \(\ell (h_{\hat{\mathbf {w}}, \varvec{\beta }}(\mathbf {x}), y) \le M\) for any \((\mathbf {x}, y)\) and any training set. Then, denoting \(\kappa = \frac{H}{\sigma }\) and assuming that \(\lambda \le \kappa \), we have with probability at least \(1 - e^{-\eta }, \ \forall \eta \ge 0\)

where \(u^{\text {src}}= R^{\text {src}}\left( m + \frac{\kappa \sqrt{m}}{\lambda } \right) + \kappa \sqrt{\frac{R^{\text {src}}m \rho }{\lambda }}\).
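A direct transcription of \(u^{\text {src}}\) helps to see how it scales: it vanishes when \(R^{\text {src}}= 0\), and for fixed \(R^{\text {src}}> 0\) its leading term grows linearly in m. The Python sketch below only evaluates this quantity; the parameter values are illustrative and chosen to satisfy \(\lambda \le \kappa \).

```python
import math


def u_src(r_src, m, kappa, lam, rho):
    """u^src = R^src (m + kappa sqrt(m) / lam) + kappa sqrt(R^src m rho / lam)."""
    if r_src < 0 or m < 1 or lam <= 0 or rho < 0:
        raise ValueError("expects R^src >= 0, m >= 1, lam > 0, rho >= 0")
    return r_src * (m + kappa * math.sqrt(m) / lam) + kappa * math.sqrt(r_src * m * rho / lam)


for r in (0.0, 0.001, 0.1):
    print(r, [round(u_src(r, m, kappa=1.0, lam=0.5, rho=2.0), 3) for m in (10, 100, 1000)])
```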

Now we focus on the consistency of HTL. Specifically, we show an upper bound on the excess risk of Regularized ERM, which depends on \(R^{\text {src}}\), the risk of the combined source hypothesis \(h^{\text {src}}_{\varvec{\beta }}\) on the target domain. We observe that, for a small \(R^{\text {src}}\), the excess risk shrinks at a fast rate of \(\mathcal {O}(1/m)\). In other words, good prior knowledge guarantees not only good generalization, but also fast recovery of the performance of the best hypothesis in the class.

This bound is similar in spirit to the results on localized complexities, as in the works of Bartlett et al. (2005) and Srebro et al. (2010); however, we focus on the linear HTL scenario rather than a generic learning setting. Later, in Sect. 5.1, we compare our bounds to these works and show that, in this setting, our analysis yields better results.

Theorem 2

Let \(h_{\hat{\mathbf {w}}, \varvec{\beta }}\) be generated by Regularized ERM, given the m-sized training set S sampled i.i.d. from the target domain, source hypotheses \(\{h^{\text {src}}_i : \Vert h^{\text {src}}_i\Vert _\infty \le 1\}_{i=1}^n\), any source weights \(\varvec{\beta }\) obeying \(\varOmega (\varvec{\beta }) \le \rho \), and \(\lambda \in \mathbb {R}_+\). Then, denoting \(\kappa = \frac{H}{\sigma }\), assuming that \(\lambda \le \kappa \le 1\), and setting the regularization parameter

for any choice of \(\tau \ge 0\), we have with high probability that

5.1 Implications

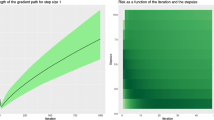

We start by discussing the effect of the source hypothesis combination on the generalization ability. Intuitively, a good source hypothesis combination should facilitate transfer learning, while a reasonable algorithm must not fail if we provide it with a bad one. A natural question to ask, then, is what makes a source hypothesis good or bad. As in previous works in transfer learning and domain adaptation, we capture this notion via a quantity that has a two-fold interpretation: (1) the performance of the source hypothesis combination on the target domain; (2) the relatedness of the source and target domains. In the theorems presented in the previous section we denoted it by \(R^{\text {src}}\), the risk of the source hypothesis combination on the target domain. In this section we consider various regimes of interest with respect to \(R^{\text {src}}\).

5.1.1 When the source is a bad fit

First consider the case when the source hypothesis combination \(h^{\text {src}}_{\varvec{\beta }}\) is useless for the purpose of transfer learning, for example, \(h^{\text {src}}_{\varvec{\beta }}(\mathbf {x}) = 0\) for all \(\mathbf {x}\). This corresponds to learning with no auxiliary information. Then we can assume that \(R^{\text {src}}\le M\), and from Theorem 1 we obtain \( R(h_{\hat{\mathbf {w}}}) - \hat{R}_S(h_{\hat{\mathbf {w}}}) \le \mathcal {O}\left( 1/ (\sqrt{m} \lambda ) \right) \). This rate matches the one in the analysis of regularized least-squares (Vito et al. 2005; Bousquet and Elisseeff 2002), whose square loss is a special case of the smooth losses that Regularized ERM employs. On the other hand, Srebro et al. (2010) showed a better worst-case rate \(\mathcal {O}(1/\sqrt{m \lambda })\). However, their framework builds upon a worst-case Rademacher complexity, which does not involve the expectation over the sample and does not lead to the dependence on \(R^{\text {src}}\) that we have obtained in Theorem 1. We discuss this issue in detail in Sect. 5.1.4.

5.1.2 When the source is a good fit

Here we consider the behavior of the algorithm in the finite-sample and asymptotic regimes. We first look at the regime of small m, in particular \(m = \mathcal {O}(1/R^{\text {src}})\). In this case, the fast-rate term dominates the bound, and we obtain a convergence rate of \(\mathcal {O}( \sqrt{\rho } / (m \sqrt{\lambda }) )\). In other words, we can expect faster convergence when m is small, where “small” depends on \(R^{\text {src}}\), the quality of the combined source hypotheses. Now consider the asymptotic behavior of the algorithm, when m goes to infinity. In this case, the algorithm exhibits a rate of \(\mathcal {O}\left( \frac{R^{\text {src}}}{\sqrt{m} \, \lambda } + \sqrt{\frac{R^{\text {src}}\rho }{m \lambda }}\right) \), so \(R^{\text {src}}\) controls the constant factor of the rate. Hence, the quantity \(R^{\text {src}}\) governs both the transient regime for small m and the asymptotic behavior of the algorithm, predicting faster convergence in both regimes when it is small.
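Where the two regimes meet can be read off by comparing the rate terms above with all constants dropped. This is a back-of-the-envelope calculation of ours rather than a statement taken from the theorems:

```latex
\frac{R^{\text{src}} / (\sqrt{m}\,\lambda)}{\sqrt{R^{\text{src}}\rho / (m\lambda)}}
  = \sqrt{\frac{R^{\text{src}}}{\lambda\rho}},
\qquad
\frac{\sqrt{\rho} / (m\sqrt{\lambda})}{\sqrt{R^{\text{src}}\rho / (m\lambda)}}
  = \frac{1}{\sqrt{m\,R^{\text{src}}}} .
```

So, when \(R^{\text {src}}\le \lambda \rho \), the second asymptotic term is the larger of the two, and the fast term exceeds it exactly when \(m < 1/R^{\text {src}}\), which is consistent with the regime \(m = \mathcal {O}(1/R^{\text {src}})\) described above.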

5.1.3 When source is a perfect fit

It is conceivable that the exploited source hypothesis is perfect, that is, \(R^{\text {src}}= 0\). In other words, the source hypothesis combination is a perfect predictor for the target domain. Theorem 1 then implies that \(R(h_{\hat{\mathbf {w}}, \varvec{\beta }}) = \hat{R}_S(h_{\hat{\mathbf {w}}, \varvec{\beta }})\) with probability one. We note that for many practically used smooth losses, such as the square loss, this setting is only realistic if the source and target domains match and the problem is noise-free. However, we can observe \(R^{\text {src}}= 0\), for example, when the squared hinge loss \(\ell (z,y) = \max \{0, 1 - zy\}^2\) is used and all target domain examples are classified correctly, with margin at least 1, by the source hypothesis combination, a case that is conceivable for related domains.
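A toy Python check of this point, with a made-up sample and a hypothetical source combination: with the squared hinge loss, the empirical counterpart of \(R^{\text {src}}\) is exactly zero once every target example has margin \(y\, h^{\text {src}}_{\varvec{\beta }}(\mathbf {x}) \ge 1\), whereas the square loss still charges the same predictions for overshooting the labels.

```python
def squared_hinge(z, y):
    return max(0.0, 1.0 - z * y) ** 2


def square(z, y):
    return (z - y) ** 2


def empirical_risk(h, loss, sample):
    return sum(loss(h(x), y) for x, y in sample) / len(sample)


def h_src_beta(x):
    # Hypothetical source combination; every point below gets margin y * h(x) >= 1.2.
    return 1.5 * x[0]


# Toy target sample with labels in {-1, +1}.
sample = [([-1.0], -1.0), ([-0.8], -1.0), ([0.8], 1.0), ([1.0], 1.0)]

print(empirical_risk(h_src_beta, squared_hinge, sample))  # 0.0
print(empirical_risk(h_src_beta, square, sample))         # positive
```

A zero empirical value on a finite sample is of course only evidence for, not a proof of, \(R^{\text {src}}= 0\).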

5.1.4 Fast rates

A number of works in the literature investigate rates of convergence faster than \(1/\sqrt{m}\) under different conditions. In particular, the localized Rademacher complexity bounds of Bartlett et al. (2005) and Bousquet (2002) can be used to obtain results similar to the second inequality of Theorem 1. Indeed, Theorem 4 shows a bound that is very similar to the localized ones, albeit with two differences. First, the r.h.s. of the first inequality in Theorem 4 vanishes when the loss class has zero variance. Though intuitively trivial, this allows us to prove an important result in the theory of transfer learning, as it quantifies the intuition that no learning is necessary if the source has perfect performance on the target task. Second, by applying the standard localized Rademacher complexity bounds of Bousquet (2002) and assuming a Lipschitz loss function, we do not achieve a fast rate of convergence, as can be seen from Theorem 8 in the ‘Appendix’. We suspect that assuming the smoothness of the loss function is crucial to prove fast rates in our formulation.

Fast rates for ERM with smooth losses have been thoroughly analyzed by Srebro et al. (2010). Yet, analyzing our HTL algorithm within their framework would yield a bound that is inferior to ours in two respects. The first concerns the scenario when the combined source hypothesis is perfect, that is \(R^{\text {src}}= 0\). The generalization bound of Srebro et al. (2010) does not offer a way to show that the empirical risk coincides with the risk with probability one; one can only hope to get a fast rate of convergence. The second is that such a bound would depend on the empirical performance of the combined source hypothesis. As noted before, the quantity \(R^{\text {src}}\) is essential because it captures the degree of relatedness between the two domains. In their bounds, one cannot obtain this relationship through the Rademacher complexity term, as we did in our analysis. The reason is the stronger notion of Rademacher complexity employed by that framework, which involves a supremum over the sample instead of an expectation. The expectation over the sample of the target distribution is crucial here, because it allows us to quantify how well the source domain is aligned with the target domain, with the source hypothesis acting as a link. However, one can attempt to bound the empirical risk in terms of \(R^{\text {src}}\). We prove such a bound in the ‘Appendix’, Theorem 6, and conclude that even with a good or perfect source hypothesis the rate is \(\mathcal {O}(1/\sqrt[4]{m^3})\), which is worse than ours.

5.2 Comparison to theories of domain adaptation and transfer learning

The setting in DA is different from the one we study; however, we will briefly discuss the theoretical relationship between the two. Typically in DA, one trains a hypothesis from an altered source training set, striving to achieve good performance on the target domain. The key question here is how to alter, or adapt, the source training set. To answer this question, the DA literature introduces the notion of domain relatedness, which quantifies the dissimilarity between the marginal distributions of the corresponding domains. In some cases, domain relatedness can be estimated in practice from a large set of unlabeled samples drawn from both the source and target domains. Theories of DA (Ben-David et al. 2010; Mansour et al. 2009; Ben-David and Urner 2012; Mansour et al. 2008; Cortes and Mohri 2014) have proposed a number of such domain relatedness criteria. Perhaps the best known are the \(d_{\mathcal {H}\varDelta \mathcal {H}}\)-divergence (Ben-David et al. 2010) and its more general counterpart, the Discrepancy Distance (Mansour et al. 2009). Typically, this divergence appears explicitly in the generalization bound, along with other terms controlling generalization on the target domain. Let \(R_{\mathcal {D}^{\text {trg}}}(h)\) and \(R_{\mathcal {D}^{\text {src}}}(h)\) denote the risks of the hypothesis h measured w.r.t. the target and source distributions. Then a well-known result of Ben-David et al. (2010) states that for all \(h \in \mathcal {H}\)
\[
R_{\mathcal {D}^{\text {trg}}}(h) \le R_{\mathcal {D}^{\text {src}}}(h) + \frac{1}{2} d_{\mathcal {H}\varDelta \mathcal {H}}(\mathcal {D}^{\text {src}},\mathcal {D}^{\text {trg}}) + \epsilon _{\mathcal {H}}^{\star } , \tag{6}
\]

where \(\epsilon _{\mathcal {H}}^{\star } = \min _{h \in \mathcal {H}}\left\{ R_{\mathcal {D}^{\text {trg}}}(h) + R_{\mathcal {D}^{\text {src}}}(h) \right\} \). This result implies that adaptation is possible provided that \(d_{\mathcal {H}\varDelta \mathcal {H}}(\mathcal {D}^{\text {src}},\mathcal {D}^{\text {trg}})\) and \(\epsilon _{\mathcal {H}}^{\star }\) are small. One can try to reduce them by controlling the complexity of the class \(\mathcal {H}\) and by minimizing the divergence \(d_{\mathcal {H}\varDelta \mathcal {H}}(\mathcal {D}^{\text {src}},\mathcal {D}^{\text {trg}})\). In practice, the latter can be manipulated through an empirical counterpart computed on unlabeled samples. Increasing the complexity of \(\mathcal {H}\) indeed reduces \(\epsilon _{\mathcal {H}}^{\star }\), but inflates \(d_{\mathcal {H}\varDelta \mathcal {H}}(\mathcal {D}^{\text {src}},\mathcal {D}^{\text {trg}})\). On the other hand, minimizing \(d_{\mathcal {H}\varDelta \mathcal {H}}(\mathcal {D}^{\text {src}},\mathcal {D}^{\text {trg}})\) alone puts us at risk of increasing \(\epsilon _{\mathcal {H}}^{\star }\), since the empirical divergence is reduced without taking the labelling into account.

Clearly, this bound cannot be directly compared to our result in Theorem 1. However, we note that the term \(R^{\text {src}}\) appearing in our results plays a role very similar to that of \(d_{\mathcal {H}\varDelta \mathcal {H}}\) in (6). In fact, by defining \(\mathcal {H}= \{\mathbf {x}\mapsto \left\langle \varvec{\beta }, \mathbf {h^{\text {src}}}(\mathbf {x}) \right\rangle \ : \ \varOmega (\varvec{\beta }) \le \tau \}\), where \(\mathbf {h^{\text {src}}}(\mathbf {x}) = [h^{\text {src}}_1(\mathbf {x}), \ldots , h^{\text {src}}_n(\mathbf {x})]^{\top }\), and fixing \(h = h^{\text {src}}_{\varvec{\beta }} \in \mathcal {H}\) in (6), we can write
\[
R^{\text {src}}= R_{\mathcal {D}^{\text {trg}}}(h^{\text {src}}_{\varvec{\beta }}) \le R_{\mathcal {D}^{\text {src}}}(h^{\text {src}}_{\varvec{\beta }}) + \frac{1}{2} d_{\mathcal {H}\varDelta \mathcal {H}}(\mathcal {D}^{\text {src}},\mathcal {D}^{\text {trg}}) + \epsilon _{\mathcal {H}}^{\star } . \tag{7}
\]

Plugging this into the generalization bound (5) and assuming that \(\lambda \le 1\) and \(\rho \le 1/\lambda \) we have for the target hypothesis \(h\) that

Although this inequality describes the generalization ability of the transfer learning algorithm, comparing it with (6) reveals several points. First, DA theory and our result agree that the divergence between the domains has to be small for good generalization. In the formulation we consider, this divergence is controlled in two ways: implicitly, by the choice of \(\mathbf {h^{\text {src}}}\), and through the complexity of the class \(\mathcal {H}\), that is, by the choice of \(\tau \). Second, in DA we expect a hypothesis to perform well on the target only if it performs well on the source; in our results, this requirement is relaxed. As a side note, (7) captures the intuitive notion that a good source hypothesis has to perform well on its own domain. Finally, the theory of DA assumes \(\epsilon _\mathcal {H}^\star \) to be small. Indeed, if \(\epsilon _\mathcal {H}^\star \) is large, no hypothesis performs well on both domains simultaneously, and adaptation is therefore hopeless. In our case, the algorithm can still generalize even when \(\epsilon _\mathcal {H}^\star \) is large, although this is due to the supervised nature of our framework.

We now turn our attention to previous theoretical works studying HTL-related settings. Few papers have addressed the theory of transfer learning in the setting where the only information passed from the source domain is a classifier or regressor. Mansour et al. (2008) addressed the problem of combining multiple source hypotheses, although in a different setting: in addition to the source hypotheses, the learner receives unlabeled samples drawn from the source distributions, which are used to weight and combine the source hypotheses. The authors presented a general theory of this scenario but did not study the generalization properties of any particular algorithm. The first analysis of the generalization ability of HTL in a context similar to ours was carried out by Kuzborskij and Orabona (2013). That work focused on L2-regularized least squares and proved a generalization bound involving the leave-one-out risk instead of the empirical one. The following result, obtained through an algorithmic stability argument (Bousquet and Elisseeff 2002), holds with probability at least \(1 - \delta \)

where \(R^{\text {src}}\) is the risk of a single fixed source hypothesis and \(h\) is the solution of a Regularized Least Squares problem. The shape of this bound is similar to the one obtained in this work, although with a number of differences. First, unlike our bounds, theirs assumes a single fixed source hypothesis, which is not even weighted by a coefficient. In practice, this is a very strong assumption, as one can receive an arbitrarily bad source and have no way to exclude it. Second, the bound (8) appears to vanish whenever the risk of the source \(R^{\text {src}}\) is equal to zero, but this comes at the cost of using a weaker concentration inequality; in Theorem 1 we obtain the same behavior with high probability. Finally, we obtain a better dependency on \(R^{\text {src}}\).

5.3 Combining source hypotheses in practice

So far we have assumed that problem (4) is supplied with a pre-made combination of source hypotheses; that is, we did not study a particular algorithm for tuning the weights \(\varvec{\beta }\). However, an analysis of our generalization bound (1) readily suggests algorithms for this purpose. In particular, by minimizing the bound w.r.t. \(\varvec{\beta }\), and assuming that the empirical risk \(\hat{R}_S(h^{\text {src}}_{\varvec{\beta }})\) converges uniformly to \(R^{\text {src}}\), we have with high probability that

Thus, at least in theory, given a fixed solution \(\hat{\mathbf {w}}\), it is enough to jointly minimize the error of the target hypothesis \(h_{\hat{\mathbf {w}}, \varvec{\beta }}\) and the error of the source combination on the target training set. This is particularly efficient when the square loss is used, since \(\hat{\mathbf {w}}\) can be expressed in terms of the inverse of a covariance matrix, which has to be computed only once (Orabona et al. 2009; Tommasi et al. 2014; Kuzborskij et al. 2015).
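For the square loss, the remark above can be made concrete. The following pure-Python sketch rests on assumptions of ours: \(\varOmega (\mathbf {w}) = \frac{1}{2}\Vert \mathbf {w}\Vert _2^2\), problem (4) read as \(\min _{\mathbf {w}} \frac{1}{m}\sum _i (\left\langle \mathbf {w}, \mathbf {x}_i \right\rangle + h^{\text {src}}_{\varvec{\beta }}(\mathbf {x}_i) - y_i)^2 + \lambda \varOmega (\mathbf {w})\), and F a hypothetical \(m \times n\) matrix of source predictions on the training set. Under these assumptions \(\hat{\mathbf {w}}(\varvec{\beta }) = (X^{\top } X + \frac{\lambda m}{2} I)^{-1} X^{\top }(\mathbf {y} - F\varvec{\beta })\), so the \(d \times d\) matrix is inverted once and reused for every \(\varvec{\beta }\). The joint objective (sum of the two training errors with equal weights) is our illustrative choice; it is quadratic in \(\varvec{\beta }\), so its minimizer solves a small linear system. All function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]


def matvec(a: Matrix, v: Vector) -> Vector:
    return [sum(x * y for x, y in zip(row, v)) for row in a]


def solve(a: Matrix, b: Vector) -> Vector:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - s) / aug[r][r]
    return x


def precompute(X: Matrix, lam: float) -> Matrix:
    """Return A = (X^T X + (lam m / 2) I)^{-1} X^T; this is the only inversion."""
    m, d = len(X), len(X[0])
    Xt = transpose(X)
    G = matmul(Xt, X)
    for j in range(d):
        G[j][j] += lam * m / 2.0
    # Column j of G^{-1} solves G c_j = e_j.
    G_inv = transpose([solve(G, [float(i == j) for i in range(d)]) for j in range(d)])
    return matmul(G_inv, Xt)


def w_hat(A: Matrix, F: Matrix, y: Vector, beta: Vector) -> Vector:
    """Closed-form target weights for a given source combination beta."""
    return matvec(A, [yi - fb for yi, fb in zip(y, matvec(F, beta))])


def risk(pred: Vector, y: Vector) -> float:
    """Empirical square-loss risk."""
    return sum((p - t) ** 2 for p, t in zip(pred, y)) / len(y)


def joint_objective(X: Matrix, F: Matrix, y: Vector, A: Matrix, beta: Vector) -> float:
    """Training error of h_{w_hat, beta} plus training error of the source combination."""
    src = matvec(F, beta)
    tgt = [a + b for a, b in zip(matvec(X, w_hat(A, F, y, beta)), src)]
    return risk(tgt, y) + risk(src, y)


def best_beta(X: Matrix, F: Matrix, y: Vector, A: Matrix) -> Vector:
    """Exact minimizer of joint_objective: the residual of h_{w_hat, beta} equals
    -(I - X A)(y - F beta), so the objective is a quadratic form in beta."""
    m = len(y)
    XA = matmul(X, A)
    P = [[float(i == j) - XA[i][j] for j in range(m)] for i in range(m)]
    Q = matmul(transpose(P), P)
    for i in range(m):
        Q[i][i] += 1.0
    FtQ = matmul(transpose(F), Q)
    return solve(matmul(FtQ, F), matvec(FtQ, y))
```

Since \(\hat{\mathbf {w}}\) minimizes the regularized objective and \(\mathbf {w} = \mathbf {0}\) is feasible with \(\varOmega (\mathbf {0}) = 0\), both \(\lambda \varOmega (\hat{\mathbf {w}})\) and \(\hat{R}_S(h_{\hat{\mathbf {w}}, \varvec{\beta }})\) are at most \(\hat{R}_S(h^{\text {src}}_{\varvec{\beta }})\) for every \(\varvec{\beta }\); the sketch makes this easy to observe.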

Many HTL-like algorithms can be captured by the formulation above through different choices of the loss function and of the regularizer \(\varOmega \). The simplest case is a plain concatenation of the source hypotheses' predictions with the original feature vector. However, by choosing different regularizers and parameters, we can treat the source hypotheses differently from the original features. For example, one might enforce sparsity over the source hypotheses while using the usual L2 regularizer on the target solution \(\hat{\mathbf {w}}\).
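One hypothetical instantiation of this last remark, given as a sketch rather than as an algorithm analyzed in this paper: concatenate the source predictions \(\mathbf {h^{\text {src}}}(\mathbf {x})\) to \(\mathbf {x}\), use the square loss, regularize the target part with \(\frac{\lambda }{2}\Vert \mathbf {w}\Vert _2^2\) and the source weights with \(\mu \Vert \varvec{\beta }\Vert _1\), and minimize by proximal gradient descent (ISTA). The function name, the parameter \(\mu \), and the step-size rule are our assumptions. Note that the plain L1 norm is not strongly convex, so this particular choice lies outside the assumptions of Theorem 1.

```python
from typing import List, Tuple


def soft_threshold(v: float, t: float) -> float:
    """Proximal operator of t * |.| (soft-thresholding)."""
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def fit_sparse_sources(
    X: List[List[float]], F: List[List[float]], y: List[float],
    lam: float, mu: float, iters: int = 500,
) -> Tuple[List[float], List[float], List[float]]:
    """ISTA for (1/m) sum_i (<w, x_i> + <beta, f_i> - y_i)^2
    + (lam / 2) ||w||^2 + mu ||beta||_1, where f_i holds the source predictions."""
    m, d, n = len(y), len(X[0]), len(F[0])
    Z = [xi + fi for xi, fi in zip(X, F)]  # concatenated features [x, h_src(x)]
    # Frobenius-norm upper bound on the Lipschitz constant of the smooth gradient.
    lip = 2.0 / m * sum(v * v for row in Z for v in row) + lam
    step = 1.0 / lip
    w, beta = [0.0] * d, [0.0] * n

    def objective() -> float:
        theta = w + beta
        fit = sum((sum(a * b for a, b in zip(zi, theta)) - t) ** 2 for zi, t in zip(Z, y)) / m
        return fit + lam / 2 * sum(v * v for v in w) + mu * sum(abs(v) for v in beta)

    history = [objective()]
    for _ in range(iters):
        theta = w + beta
        resid = [sum(a * b for a, b in zip(zi, theta)) - t for zi, t in zip(Z, y)]
        grad = [2.0 / m * sum(zi[j] * r for zi, r in zip(Z, resid)) for j in range(d + n)]
        # Plain gradient step on w (its L2 penalty is smooth).
        w = [wj - step * (gj + lam * wj) for wj, gj in zip(w, grad[:d])]
        # Gradient step on beta followed by the proximal step of the L1 penalty.
        beta = [soft_threshold(bj - step * gj, step * mu) for bj, gj in zip(beta, grad[d:])]
        history.append(objective())
    return w, beta, history
```

With a large \(\mu \), the soft-thresholding step keeps every source weight at exactly zero and the model falls back on the original features; with a small \(\mu \), sources whose predictions reduce the training error can receive non-zero weights. The step size \(1/L\) makes the objective non-increasing across iterations.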

6 Technical results and proofs

In this section we present general technical results that are used to prove our theorems.

First, we present the Rademacher complexity generalization bound of Theorem 4, which differs slightly from the usual ones. The difference lies in the assumption that the variance of the loss is uniformly bounded over the hypothesis class. This allows us to state a generalization bound in which the empirical risk converges at a fast rate when the class complexity is small. Second, we also show a generalization bound whose confidence term vanishes if the complexity of the class is exactly zero.

Next, we focus on the Rademacher complexity of the smooth loss function class. In Lemma 3 we prove a bound on the empirical Rademacher complexity of a loss class that depends on pointwise bounds on the loss function. This novel bound might be of independent interest.

Finally, we employ this result to analyze the effect of the source hypotheses on the complexity of the target hypothesis class in Theorem 5.

6.1 Fast rate generalization bound

The proof of the fast-rate, vanishing-confidence-term bound of Theorem 4 relies on the functional generalization of Bennett’s inequality due to Bousquet (2002, Theorem 2.11), which we report here for completeness.

Theorem 3

(Bousquet 2002) Let \(X_1, X_2, \ldots , X_m\) be independent and identically distributed random variables according to \(\mathcal {D}\). Let \(\mathcal {G}\) be a class of \(\mathcal {D}\)-measurable, square-integrable functions g such that \({{\mathrm{\mathbb {E}}}}_{X}[g(X)]=0\) for all \(g \in \mathcal {G}\) and \(\sup _{g \in \mathcal {G}} {{\mathrm{ess\,sup}}}g \le 1\), and denote

Let \(\sigma \) be a positive real number such that \(\sup _{g \in \mathcal {G}} \mathrm {Var}_{X \sim \mathcal {D}}[g(X)] \le \sigma ^2\) almost surely. Then for all \(t \ge 0\), we have that

where

The following technical lemma will be used to invert the right-hand side of (10).

Lemma 1

Let \(a,b>0\) such that \(b = (1+a) \log (1+a) - a\). Then \(a\le \frac{3 b}{2 \log (\sqrt{b}+1)}\).

Proof

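It is easy to verify that the inverse function \(f^{-1}(b)\) of \(f(a):=(1+a) \log (1+a) - a\) is

\[ f^{-1}(b) = \exp \left( 1 + W\left( \frac{b-1}{e} \right) \right) - 1, \]

where \(W\) is the principal branch of the Lambert function, defined on \([-1/e, \infty )\), which satisfies

\[ W(x)\, e^{W(x)} = x . \]

Hence, to obtain an upper bound on a, we need an upper bound on the Lambert function. We use Theorem 2.3 in Hoorfar and Hassani (2008), which states that, for all \(x \ge -1/e\) and \(C > 1/e\),

\[ W(x) \le \log \frac{x + C}{1 + \log C} . \]

Setting \(C=\frac{\sqrt{b}+1}{e}\), so that \(1 + \log C = \log (\sqrt{b}+1)\), we obtain

\[ a = f^{-1}(b) \le \frac{b + \sqrt{b}}{\log (\sqrt{b}+1)} - 1 = \frac{b + \sqrt{b} - \log (\sqrt{b}+1)}{\log (\sqrt{b}+1)} \le \frac{3 b}{2 \log (\sqrt{b}+1)}, \]

where in the last inequality we used the fact that \(x+\sqrt{x} - \log (\sqrt{x}+1) \le \frac{3}{2} x, \forall x\ge 0\), as can be easily verified by comparing the derivatives of both sides, which coincide at \(x = 0\). \(\square \)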
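Lemma 1, together with the intermediate Lambert-function bound \((b+\sqrt{b})/\log (\sqrt{b}+1) - 1\) obtained with \(C = (\sqrt{b}+1)/e\), can be sanity-checked numerically. The sketch below evaluates the chain \(a \le (b+\sqrt{b})/\log (\sqrt{b}+1) - 1 \le 3b/(2\log (\sqrt{b}+1))\) with \(b = (1+a)\log (1+a) - a\) on an arbitrarily chosen log-spaced grid of a. It is a check on a finite grid, not a proof, and the helper names are ours.

```python
import math
from typing import Iterable


def bennett_u(a: float) -> float:
    """f(a) = (1 + a) log(1 + a) - a, the function inverted in Lemma 1."""
    return (1.0 + a) * math.log1p(a) - a


def lambert_step_bound(b: float) -> float:
    """(b + sqrt(b)) / log(sqrt(b) + 1) - 1: the Hoorfar-Hassani bound on
    f^{-1}(b) with C = (sqrt(b) + 1) / e."""
    s = math.sqrt(b)
    return (b + s) / math.log1p(s) - 1.0


def lemma1_bound(b: float) -> float:
    """Right-hand side of Lemma 1: 3 b / (2 log(sqrt(b) + 1))."""
    return 3.0 * b / (2.0 * math.log1p(math.sqrt(b)))


def worst_ratio(grid: Iterable[float]) -> float:
    """Largest a / lemma1_bound(f(a)) over the grid; Lemma 1 says it is <= 1."""
    return max(a / lemma1_bound(bennett_u(a)) for a in grid)


# Log-spaced grid on [1e-3, 1e3]; very small a is avoided because evaluating
# f(a) then suffers from cancellation in floating point.
GRID = [10 ** (k / 10.0) for k in range(-30, 31)]
```

The ratio approaches \(2\sqrt{2}/3 \approx 0.94\) as \(a \rightarrow 0\), which shows that the constant \(3/2\) in Lemma 1 leaves little slack for small deviations.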

The following lemma is a standard tool (Mohri et al. 2012, (3.8)–(3.13); Bartlett and Mendelson 2003).

Lemma 2

(Symmetrization) For any \(f \in \mathcal {F}\), given random variables \(S=\{X_i\}_{i=1}^m\), we have

Now we are ready to present the proof of Theorem 4.

Theorem 4

Consider a non-negative loss function \(\ell : \mathcal {Y}\times \mathcal {Y}\mapsto \mathbb {R}_+\) such that \(0 \le \ell (h(\mathbf {x}), y) \le M\) for any \(h \in \mathcal {H}\) and any \((\mathbf {x}, y) \in \mathcal {X}\times \mathcal {Y}\). In addition, let the training set S of size m be sampled i.i.d. from a probability distribution over \(\mathcal {X}\times \mathcal {Y}\). Also, for any \(r \ge 0\), define the loss class with respect to the hypothesis class \(\mathcal {H}\) as

Then, for any \(\eta \ge 0\), with probability at least \(1 - e^{-\eta }\) over the draw of the training set S of size m, we have for all \(h \in \mathcal {H}\)

where \(v = 4 \mathfrak {R}(\mathcal {L}) + r\).

Proof

To prove the statement, we will consider the uniform deviations of the empirical risk. Namely, we will show an upper bound on the random variable \(\sup _{h \in \mathcal {H}}\left\{ R(h) - \hat{R}_S(h)\right\} \). For this purpose, we will use the functional generalization of Bennett’s inequality given by Theorem 3. Consider the random variable

Using Theorem 3, we have

where,

We now need two things: invert the r.h.s. of (11), treating it as a function of t, and provide an upper bound on v. For the first part, recall that \(u(y) = (1+y) \log (1+y) - y\). To upper-bound t, we apply Lemma 1 with \(a=\frac{t}{v}\) and \(b=\frac{1}{v}\eta \). This leads to the inequalities

Hence, for any \(\eta \ge 0\), with probability at least \(1-e^{-\eta }\),

Next we prove the bound on v. We first show that the variance of the centered loss function, \(\sigma ^2\), is uniformly bounded by the Rademacher complexity. From the definition of variance we have

The last inequality is due to the fact that \(\ell (h(\mathbf {x}), y) \le M\). Now we upper-bound the second term of v by applying Lemma 2,

We conclude the proof by upper-bounding the expectation terms in (13) and (14) using Lemma 2, and plugging the upper bound on v,

\(\square \)
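The inversion step in the proof above (treating the r.h.s. of (11) as a function of t and applying Lemma 1 with \(a = t/v\), \(b = \eta /v\)) can be illustrated numerically. Assuming the tail in (11) has Bennett's form \(\exp (-v\, u(t/v))\), setting it equal to \(e^{-\eta }\) gives \(v\, u(t/v) = \eta \). The sketch solves this equation for t by bisection and compares the root with the closed form \(t \le \frac{3\eta }{2\log (\sqrt{\eta /v}+1)}\) implied by Lemma 1; the values of v and \(\eta \) are arbitrary and the function names are ours.

```python
import math


def u(a: float) -> float:
    """u(a) = (1 + a) log(1 + a) - a."""
    return (1.0 + a) * math.log1p(a) - a


def exact_deviation(v: float, eta: float) -> float:
    """Solve v * u(t / v) = eta for t >= 0 by bisection (u is increasing on [0, inf))."""
    lo, hi = 0.0, 1.0
    while v * u(hi / v) < eta:  # enlarge the bracket until it contains the root
        hi *= 2.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if v * u(mid / v) < eta:
            lo = mid
        else:
            hi = mid
    return hi


def lemma1_deviation(v: float, eta: float) -> float:
    """Closed-form upper bound on t from Lemma 1 with a = t / v and b = eta / v."""
    return 3.0 * eta / (2.0 * math.log1p(math.sqrt(eta / v)))
```

As \(v \rightarrow 0\) the closed form shrinks, albeit only logarithmically in \(1/v\); this is the mechanism behind a confidence term that vanishes when the complexity of the class is exactly zero.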

6.2 Rademacher complexity of smooth loss class

In this section we study the Rademacher complexity of the hypothesis class populated by functions of the form (1), where the parameters \(\mathbf {w}\) and \(\varvec{\beta }\) are chosen by an algorithm with a strongly convex regularizer. For this purpose we employ the results of Kakade et al. (2008, 2012), who studied strongly convex regularizers in a more general setting. Furthermore, we will focus on the use of smooth loss functions as done by Srebro et al. (2010).

The proof of the main result of this section, Theorem 5, relies essentially on the following lemma, which bounds the empirical Rademacher complexity of an H-smooth loss class.

Lemma 3

Let \(\ell : \mathcal {Y}\times \mathcal {Y}\mapsto \mathbb {R}_+\) be an H-smooth loss function and, for a function class \(\mathcal {F}\), let the loss class be

Then, given a sample S of size m and the set

we have that

where the \(\varepsilon _i\) are independent random variables such that \(\mathbb {P}(\varepsilon _i=1) = \mathbb {P}(\varepsilon _i=-1) = \frac{1}{2}\).

Proof

This proof follows a line of reasoning similar to the proof of Talagrand’s lemma for Lipschitz functions, see for instance Mohri et al. (2012, p. 79). We will also use Lemma B.1 by Srebro et al. (2010) (arXiv extended version), stating that for any H-smooth non-negative function \(\phi : \mathbb {R}\mapsto \mathbb {R}_+\) and any \(x,z \in \mathbb {R}\),

Fix the sample S, then, by definition,

where \(u_{m-1}(f) = \sum _{i=1}^{m-1} \varepsilon _i \ell (f(\mathbf {x}_i), y_i)\). By the definition of the supremum, for any \(\delta > 0\) there exist \(f_1, f_2 \in \mathcal {F}\) such that

Thus for any \(\delta > 0\), by definition of \({{\mathrm{\mathbb {E}}}}_{\varepsilon _m}\),

To obtain the second inequality, we applied (16), where \(s_m = \text{ sgn }(f_1(\mathbf {x}_m) - f_2(\mathbf {x}_m))\). Since the inequality holds for all \(\delta > 0\), we have

Proceeding in the same way for all the other \(\varepsilon _i\), with \(i \ne m\), proves the lemma. \(\square \)
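Lemma 3 and Theorem 5 concern the empirical Rademacher complexity, which, consistently with the normalization used in the proof of Theorem 5, we take as \(\hat{\mathfrak {R}}_S(\mathcal {F}) = \mathbb {E}_{\varepsilon }[\sup _{f \in \mathcal {F}} \frac{1}{m}\sum _{i=1}^m \varepsilon _i f(z_i)]\). To make this quantity concrete, the sketch below computes it for a hypothetical finite class described by its values on the sample: exactly, by enumerating all \(2^m\) sign vectors, and approximately, by Monte Carlo. The classes studied here are infinite, so this only illustrates the definition, not the bound itself.

```python
import itertools
import random
from typing import Sequence


def rademacher_exact(values: Sequence[Sequence[float]]) -> float:
    """E_eps[sup_f (1/m) sum_i eps_i f(z_i)] for a finite class, each function
    given by its values on the sample; enumerates all 2^m sign vectors."""
    m = len(values[0])
    total = 0.0
    for eps in itertools.product((-1.0, 1.0), repeat=m):
        total += max(sum(e * v for e, v in zip(eps, f)) for f in values) / m
    return total / 2 ** m


def rademacher_mc(values: Sequence[Sequence[float]], n_draws: int = 20000,
                  seed: int = 0) -> float:
    """Monte Carlo estimate of the same quantity from random sign vectors."""
    rng = random.Random(seed)
    m = len(values[0])
    total = 0.0
    for _ in range(n_draws):
        eps = [rng.choice((-1.0, 1.0)) for _ in range(m)]
        total += max(sum(e * v for e, v in zip(eps, f)) for f in values) / m
    return total / n_draws
```

A class with a single function has zero complexity, since \(\mathbb {E}[\varepsilon _i] = 0\); the class \(\{f, -f\}\) with \(f \equiv 1\) on two points has complexity \(1/2\).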

To prove Theorem 5 we will also use the following lemma from Kakade et al. (2012, Corollary 4).

Lemma 4

(Kakade et al. 2012) If \(\varOmega \) is \(\sigma \)-strongly convex w.r.t. \(\Vert \cdot \Vert \) and \(\varOmega ^\star (\mathbf {0}) = 0\), then, denoting the partial sum \(\sum _{j \le i} \mathbf {v}_j\) by \(\mathbf {v}_{1:i}\), we have for any sequence \(\mathbf {v}_1, \ldots , \mathbf {v}_m\) and for any \(\mathbf {u}\),

Now we are ready to give the proofs of the Rademacher complexity results.

Theorem 5

Let \(\varOmega \) be a non-negative \(\sigma \)-strongly convex function w.r.t. a norm \(\Vert \cdot \Vert \), and let \(\mathbf {0}\) be its minimizer. Let risk and empirical risk be defined w.r.t. an H-smooth loss function \(\ell : \mathcal {Y}\times \mathcal {Y}\mapsto \mathbb {R}_+\). Finally, given the set of functions \(\{f_i : \mathcal {X}\mapsto \mathcal {Y}\}_{i=1}^n\) with \(\mathbf {f}(\mathbf {x}) := [f_1(\mathbf {x}), \ldots , f_n(\mathbf {x})]^{\top }\), a combination \(f_{\varvec{\beta }}(\mathbf {x}) = \left\langle \varvec{\beta }, \mathbf {f}(\mathbf {x}) \right\rangle \), a scalar \(\alpha > 0\), and any sample S drawn i.i.d. from distribution over \(\mathcal {X}\times \mathcal {Y}\), define classes

and the loss class

Then, assuming \(\sup _{\mathbf {x}\in \mathcal {X}} \Vert \mathbf {x}\Vert _\star \le B\) and \(\sup _{\mathbf {x}\in \mathcal {X}} \Vert \mathbf {f}(\mathbf {x})\Vert _\star \le C\), the loss class \(\mathcal {L}\) satisfies

Proof

The core of the proof consists of an application of Lemma 3. In particular, Lemma 3 allows us to introduce additional information about the loss class by providing bounds on the loss at each example. We bound the loss at each example using the definition of smoothness, extracting the empirical risk of the hypothesis, \(\hat{R}_S(f_{\varvec{\beta }})\). The last step is to upper-bound the empirical Rademacher complexity of a class regularized by a strongly convex function; for this, we follow the proof of Kakade et al. (2012, Theorem 7). First define the classes

and also define the altered samples \(S' := \{ \sqrt{\tau _i} \mathbf {x}_i \}_{i=1}^m\) and \(S'' := \{ \sqrt{\tau _i} \mathbf {f}(\mathbf {x}_i) \}_{i=1}^m\), where \(\tau _i\) is a quantity independent of \(\mathcal {W}\) and \(\mathcal {V}\). Then, by applying Lemma 3, we have

Having this, we will follow the proof of Kakade et al. (2012, Theorem 7) to bound the empirical Rademacher complexities \(\hat{\mathfrak {R}}_{S'}(\mathcal {H}_{\mathcal {W}})\) and \(\hat{\mathfrak {R}}_{S''}(\mathcal {H}_{\mathcal {V}})\) with quantities of interest. Let \(t > 0\) and apply Lemma 4 with \(\mathbf {u}= \mathbf {w}\) and \(\mathbf {v}_i = t \varepsilon _i \sqrt{\tau _i} \mathbf {x}_i\) to get

Now take expectation w.r.t. all the \(\varepsilon _i\) on both sides. The left hand side is \(m t \hat{\mathfrak {R}}_{S'}(\mathcal {H}_{\mathcal {W}})\) and the last term on the right hand side becomes zero since \({{\mathrm{\mathbb {E}}}}[\varepsilon _i]=0\). Denoting \(r = \frac{1}{m} \sum _{i=1}^m |\tau _i|\) and multiplying through by \(\frac{1}{m t}\), we get

Proceeding analogously for \(\hat{\mathfrak {R}}_{S''}(\mathcal {H}_{\mathcal {V}})\), we get

Optimizing over t gives us

Now we focus on upper-bounding r. First, we obtain bounds on each \(\tau _i\), starting with a bound on the loss function that exploits smoothness. Let \(\ell (\left\langle \mathbf {w}, \mathbf {x} \right\rangle + f_{\varvec{\beta }}(\mathbf {x}), y) = \phi (\left\langle \mathbf {w}, \mathbf {x} \right\rangle + f_{\varvec{\beta }}(\mathbf {x}))\), where \(\phi : \mathbb {R}\mapsto \mathbb {R}_+\) is a non-negative H-smooth function. From the definition of smoothness (Shalev-Shwartz and Ben-David 2014, (12.5)), we have that for all \(\mathbf {w}\) and \(\mathbf {v}\)

To obtain the last inequality we used the generalized Cauchy-Schwarz inequality and the fact that, for an H-smooth non-negative function \(\phi \), \(|\phi '(t)| \le \sqrt{4 H \phi (t)}\) (Srebro et al. 2010, Lemma 2.1). Now recall a property of a \(\sigma \)-strongly convex function F that holds for its minimizer \(\mathbf {v}\) and any \(\mathbf {w}\) (Shalev-Shwartz and Ben-David 2014, Lemma 13.5),

Since inequality (17) holds for any \(\mathbf {v}\), we set \(\mathbf {v}= \mathbf {0}\), which is also the minimizer of \(\varOmega (\cdot )\), and apply the aforementioned property to get

The last inequality comes from the definition of the class \(\mathcal {H}\). Now we consider the average and, by Jensen’s inequality,

This gives us

Taking expectation w.r.t. the sample on both sides and applying Jensen’s inequality gives the statement. \(\square \)
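The bound on the \(\tau _i\) in the proof above rests on the self-bounding property \(|\phi '(t)| \le \sqrt{4 H \phi (t)}\) of non-negative H-smooth functions (Srebro et al. 2010, Lemma 2.1). The sketch checks it on a grid for two common smooth losses chosen by us for illustration: the squared loss \((t-y)^2\), which is 2-smooth, and the logistic loss \(\log (1+e^{-t})\), which is 1/4-smooth. The label value and grid are arbitrary, and the function names are ours.

```python
import math
from typing import Callable, Iterable


def self_bound_holds(phi: Callable[[float], float], dphi: Callable[[float], float],
                     H: float, ts: Iterable[float]) -> bool:
    """Check |phi'(t)| <= sqrt(4 H phi(t)) at every grid point t."""
    return all(abs(dphi(t)) <= math.sqrt(4.0 * H * phi(t)) + 1e-12 for t in ts)


Y = 0.7  # label used for the squared loss (arbitrary)


def sq_loss(t: float) -> float:
    return (t - Y) ** 2  # second derivative 2, so H = 2


def d_sq_loss(t: float) -> float:
    return 2.0 * (t - Y)


def logistic_loss(t: float) -> float:
    return math.log1p(math.exp(-t))  # second derivative <= 1/4, so H = 1/4


def d_logistic_loss(t: float) -> float:
    return -1.0 / (1.0 + math.exp(t))


GRID = [k / 10.0 for k in range(-100, 101)]
```

Underestimating H breaks the inequality: for the squared loss with \(H = 1/2\), the right-hand side is \(\sqrt{2}|t-y| < 2|t-y|\) whenever \(t \ne y\).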

6.3 Proofs of main results

Proof of Theorem 1

To show the statement we will apply Theorem 4. In particular, we will consider any choice of \(\mathbf {w}\) and \(\varvec{\beta }\) within the set induced by a strongly-convex function \(\varOmega \). To apply Theorem 4, we need to upper bound the Rademacher complexity of the loss class \(\mathcal {L}\) and also the quantity \(r = \sup _{f \in \mathcal {L}} {{\mathrm{\mathbb {E}}}}_{(\mathbf {x},y)} [f(\mathbf {x}, y)]\).

We obtain the bound on the Rademacher complexity by applying Theorem 5. First define the loss class \( \mathcal {L}:= \left\{ (\mathbf {x}, y) \mapsto \ell (h(\mathbf {x}), y) \ : h \in \mathcal {H}\right\} , \) and the hypothesis class

To motivate the choice for the constraints observe that for

we have \(\varOmega (\hat{\mathbf {w}}) \le \lambda ^{-1} \hat{R}_S(h_{\mathbf {0}, \varvec{\beta }}) = \lambda ^{-1} \hat{R}_S(h^{\text {src}}_{\varvec{\beta }})\), and \(\hat{R}_S(h_{\hat{\mathbf {w}}, \varvec{\beta }}) \le \hat{R}_S(h^{\text {src}}_{\varvec{\beta }})\). That said, by applying Theorem 5 with \(\alpha = \frac{1}{\lambda }\) and \(f_{\varvec{\beta }}=h^{\text {src}}_{\varvec{\beta }}\) and assuming that \(\lambda \le \kappa \), we obtain

Next we obtain the bound on r

The last two inequalities come from Jensen’s inequality and the definition of the class \(\mathcal {H}\). Plugging the bounds on the Rademacher complexity and r into the statement of Theorem 4, and applying the inequality \(\sqrt{a+b} \le \sqrt{a}+ \frac{b}{2\sqrt{a}}\) to the \(\sqrt{v}\) term, gives the statement. \(\square \)
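For completeness, the elementary inequality used in the last step follows by squaring its right-hand side, valid for \(a > 0\) and \(b \ge 0\):

```latex
\[
  \Bigl(\sqrt{a} + \frac{b}{2\sqrt{a}}\Bigr)^{2}
  = a + b + \frac{b^{2}}{4a} \;\ge\; a + b
  \quad\Longrightarrow\quad
  \sqrt{a + b} \;\le\; \sqrt{a} + \frac{b}{2\sqrt{a}} .
\]
```

Equivalently, the right-hand side is the tangent line of the concave function \(x \mapsto \sqrt{x}\) at \(x = a\), evaluated at \(x = a + b\).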

Proof of Theorem 2

For any choice of \(\varvec{\beta }\) with \(\varOmega (\varvec{\beta }) \le \rho \), denote the best in the class by

By the definition of \(\hat{\mathbf {w}}\), we have

Now denote

Then, by following the proof of Theorem 1 until the application of inequality \(\sqrt{a+b} \le \sqrt{a} + \frac{b}{2 \sqrt{a}}\), ignoring constants, using the assumption (20), and assuming that \(\lambda \le \kappa \le 1\) we have that

Optimizing the r.h.s. over \(\lambda \) gives

We plug it back into (21) to obtain that

All that is left is to concentrate \(\hat{R}_S(h_{\mathbf {w}^\star , \varvec{\beta }})\) around its mean. Denoting the variance by

we apply Bernstein’s inequality

Setting

we have that with probability at least \(1-e^{-\eta }, \ \forall \eta \ge 0\)

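The last inequality comes from the observation that \(R(h_{\mathbf {w}^\star , \varvec{\beta }}) \le R(h_{\mathbf {0}, \varvec{\beta }}) = R^{\text {src}}\), since \(\mathbf {w}^\star \) is the best in the class, which contains \(\mathbf {0}\). Plugging this result into (22) completes the proof. \(\square \)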
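As an illustration of the concentration step (the display used in the proof is not reproduced here), the sketch below uses the standard one-sided form of Bernstein's inequality for the mean of m i.i.d. losses in \([0, M]\) with variance \(\mathrm {Var}\): with probability at least \(1 - e^{-\eta }\), the empirical mean exceeds the expectation by at most \(\sqrt{2\,\mathrm {Var}\,\eta /m} + \frac{2M\eta }{3m}\). Since a loss in \([0, M]\) has variance at most M times its mean, this deviation is of order \(1/m\) whenever the mean loss is small, which is the mechanism behind the fast rate when \(R^{\text {src}}\) is small. The Bernoulli losses, sample sizes, and function names are hypothetical; the simulation only checks that the observed failure frequency stays below \(e^{-\eta }\).

```python
import math
import random


def bernstein_deviation(var: float, M: float, m: int, eta: float) -> float:
    """One-sided Bernstein bound for the mean of m i.i.d. losses in [0, M]:
    with probability >= 1 - exp(-eta), mean - expectation <= this value."""
    return math.sqrt(2.0 * var * eta / m) + 2.0 * M * eta / (3.0 * m)


def hoeffding_deviation(M: float, m: int, eta: float) -> float:
    """Variance-free (Hoeffding) counterpart, for comparison."""
    return M * math.sqrt(eta / (2.0 * m))


def empirical_failure_rate(p: float, m: int, eta: float,
                           trials: int = 2000, seed: int = 0) -> float:
    """Fraction of simulated samples of m Bernoulli(p) losses (M = 1) whose mean
    exceeds p by more than the Bernstein deviation with var = p (1 - p)."""
    rng = random.Random(seed)
    dev = bernstein_deviation(p * (1.0 - p), 1.0, m, eta)
    failures = 0
    for _ in range(trials):
        mean = sum(rng.random() < p for _ in range(m)) / m
        failures += mean - p > dev
    return failures / trials
```

Replacing the variance by its upper bound \(M \cdot p\) keeps the deviation well below the Hoeffding one when p is small, e.g. for \(p = 0.05\), \(m = 200\), and \(\eta = 2\).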

7 Conclusions

In this paper we have formally captured and theoretically analyzed a general family of learning algorithms that transfer information from multiple supplied source hypotheses. In particular, our formulation stems from the regularized Empirical Risk Minimization principle with any non-negative smooth loss function and any strongly convex regularizer. We have theoretically analyzed the generalization ability and excess risk of this family of HTL algorithms. Our analysis showed that a good source hypothesis combination facilitates faster generalization, namely at the rate \(\mathcal {O}(1/m)\) instead of the usual \(\mathcal {O}(1/\sqrt{m})\). Furthermore, given a perfect source hypothesis combination, our analysis is consistent with the intuition that learning is not required. As a byproduct of our investigation, we obtained new results on the Rademacher complexity of smooth loss classes, which could be of independent interest.

Our conclusions highlight the key importance of a source hypothesis selection procedure. Indeed, when an algorithm is provided with an enormous pool of source hypotheses, how can it select the relevant ones on the basis of only a few labeled examples? This might sound similar to the feature selection problem under the condition \(n \gg m\); however, earlier empirical studies by Tommasi et al. (2014) with hundreds of sources did not find much corroboration for this hypothesis when applying L1 regularization. Thus, it remains unclear whether having a few good sources out of hundreds is a reasonable assumption.

Notes

Non-linear classifiers can be easily produced with the use of kernels.

References

Aytar, Y., & Zisserman, A. (2011). Tabula rasa: Model transfer for object category detection. In IEEE International Conference on Computer Vision (ICCV), (pp. 2252–2259). IEEE.

Bartlett, P. L., & Mendelson, S. (2003). Rademacher and Gaussian complexities: Risk bounds and structural results. Journal of Machine Learning Research, 3, 463–482.

Bartlett, P. L., Bousquet, O., & Mendelson, S. (2005). Local Rademacher complexities. Annals of Statistics, 33(4), 1497–1537.

Ben-David, S., & Urner, R. (2012). On the hardness of domain adaptation and the utility of unlabeled target samples. In Algorithmic learning theory, lecture notes in computer science (Vol. 7568, pp. 139–153). Springer.

Ben-David, S., & Urner, R. (2013). Domain adaptation as learning with auxiliary information. In New Directions in Transfer and Multi-Task - Workshop @ Advances in Neural Information Processing Systems.

Ben-David, S., Blitzer, J., Crammer, K., Kulesza, A., Pereira, F., & Vaughan, J. W. (2010). A theory of learning from different domains. Machine learning, 79(1–2), 151–175.

Bergamo, A., & Torresani, L. (2014). Classemes and other classifier-based features for efficient object categorization. In IEEE Transactions on Pattern Analysis and Machine Intelligence, (pp. 99).

Blum, A., & Mitchell, T. (1998). Combining labeled and unlabeled data with co-training. In Conference on Computational learning theory, ACM, (pp. 92–100).

Bousquet, O. (2002). Concentration inequalities and empirical processes theory applied to the analysis of learning algorithms. PhD thesis, Ecole Polytechnique.

Bousquet, O., & Elisseeff, A. (2002). Stability and Generalization. Journal of Machine Learning Research, 2, 499–526.

Caruana, R. (1997). Multitask learning. Machine Learning, 28(1), 41–75.

Chapelle, O., Schölkopf, B., Zien, A., et al. (2006). Semi-supervised learning (Vol. 2). Cambridge: MIT Press.

Cortes, C., & Mohri, M. (2014). Domain adaptation and sample bias correction theory and algorithm for regression. Theoretical Computer Science, 519, 103–126.

Daumé III, H. (2007). Frustratingly easy domain adaptation. In Proceedings of the 45th Annual Meeting of the Association for Computational Linguistics.

Daumé III, H., Kumar, A., & Saha, A. (2010). Frustratingly easy semi-supervised domain adaptation. In Proceedings of the 2010 Workshop on Domain Adaptation for Natural Language Processing, Association for Computational Linguistics, (pp. 53–59).

Duan, L., Tsang, I. W., Xu, D., & Chua, T. (2009). Domain adaptation from multiple sources via auxiliary classifiers. In International Conference on Machine Learning, (pp. 289–296).

Hoorfar, A., & Hassani, M. (2008). Inequalities on the Lambert W function and hyperpower function. Journal of Inequalities in Pure and Applied Mathematics, 9(2), 5–9.

Kakade, S. M., Sridharan, K., & Tewari, A. (2008). On the complexity of linear prediction: Risk bounds, margin bounds, and regularization. In Advances in Neural Information Processing Systems, 21, (pp. 793–800).

Kakade, S. M., Shalev-Shwartz, S., & Tewari, A. (2012). Regularization techniques for learning with matrices. Journal of Machine Learning Research, 13, 1865–1890.

Kienzle, W., & Chellapilla, K. (2006). Personalized handwriting recognition via biased regularization. In International Conference on Machine Learning, (pp. 457–464).

Kuzborskij, I., & Orabona, F. (2013). Stability and Hypothesis Transfer Learning. In International Conference on Machine Learning, (pp. 942–950).

Kuzborskij, I., Orabona, F., & Caputo, B. (2013). From N to N+1: Multiclass transfer incremental learning. In Conference on Computer Vision and Pattern Recognition, (pp. 3358–3365).

Kuzborskij, I., Orabona, F., & Caputo, B. (2015). Transfer Learning through Greedy Subset Selection. In International Conference on Image Analysis and Processing.

Li, L., Su, H., Xing, E. P., & Fei-Fei, L. (2010). Object bank: A high-level image representation for scene classification & semantic feature sparsification. In Advances in Neural Information Processing Systems, 23, (pp. 1378–1386).

Li, X., & Bilmes, J. (2007). A Bayesian divergence prior for classifier adaptation. In International Conference on Artificial Intelligence and Statistics, (pp. 275–282).

Luo, J., Tommasi, T., & Caputo, B. (2011). Multiclass transfer learning from unconstrained priors. In International Conference on Computer Vision, (pp. 1863–1870).

Mansour, Y., Mohri, M., & Rostamizadeh, A. (2008). Domain adaptation with multiple sources. In Advances in Neural Information Processing Systems, 21, (pp. 1041–1048).

Mansour, Y., Mohri, M., & Rostamizadeh, A. (2009). Domain adaptation: Learning bounds and algorithms. In The Conference on Learning Theory.

Mohri, M., Rostamizadeh, A., & Talwalkar, A. (2012). Foundations of machine learning. Cambridge: The MIT Press.

Oquab, M., Bottou, L., Laptev, I., & Sivic, J. (2014). Learning and transferring mid-level image representations using convolutional neural networks. In Conference on Computer Vision and Pattern Recognition, (pp. 1717–1724).

Orabona, F., Castellini, C., Caputo, B., Fiorilla, A., & Sandini, G. (2009). Model Adaptation with Least-Squares SVM for Adaptive Hand Prosthetics. In IEEE International Conference on Robotics and Automation, (pp. 2897–2903). IEEE.

Pan, S. J., & Yang, Q. (2010). A survey on transfer learning. IEEE Transactions on Knowledge and Data Engineering, 22(10), 1345–1359.

Schölkopf, B., Herbrich, R., & Smola, A. J. (2001). A generalized representer theorem. In Conference on Computational learning theory, (pp. 416–426). Springer.

Shalev-Shwartz, S., & Ben-David, S. (2014). Understanding machine learning: From theory to algorithms. Cambridge: Cambridge University Press.

Sharmanska, V., Quadrianto, N., & Lampert, C. H. (2013). Learning to rank using privileged information. In IEEE International Conference on Computer Vision (ICCV), (pp. 825–832). IEEE.

Srebro, N., Sridharan, K., & Tewari, A. (2010). Smoothness, low noise and fast rates. In J. Lafferty, C. K. I. Williams, J. Shawe-Taylor, R. S. Zemel, & A. Culotta (Eds.), Advances in neural information processing systems, 23 (pp. 2199–2207). Red Hook: Curran Associates, Inc.

Taylor, M. E., & Stone, P. (2009). Transfer learning for reinforcement learning domains: A survey. Journal of Machine Learning Research, 10, 1633–1685.

Thrun, S., & Pratt, L. (1998). Learning to learn. New York: Springer.

Tommasi, T., Orabona, F., & Caputo, B. (2010). Safety in numbers: Learning categories from few examples with multi model knowledge transfer. In The Twenty-Third IEEE Conference on Computer Vision and Pattern Recognition, CVPR, San Francisco, CA, USA, 13–18 June 2010, (pp. 3081–3088).

Tommasi, T., Orabona, F., Castellini, C., & Caputo, B. (2013). Improving control of dexterous hand prostheses using adaptive learning. IEEE Transactions on Robotics, 29(1), 207–219.

Tommasi, T., Orabona, F., & Caputo, B. (2014). Learning categories from few examples with multi model knowledge transfer. IEEE Transactions on Pattern Analysis and Machine Intelligence, 36(5), 928–941.

Vapnik, V., & Vashist, A. (2009). A new learning paradigm: Learning using privileged information. Neural Networks, 22(5), 544–557.

Vito, E. D., Caponnetto, A., & Rosasco, L. (2005). Model selection for regularized least-squares algorithm in learning theory. Foundations of Computational Mathematics, 5(1), 59–85.

Yang, J., Yan, R., & Hauptmann, A. (2007). Cross-Domain Video Concept Detection Using Adaptive SVMs. In Proceedings of the 15th international conference on Multimedia, ACM, (pp. 188–197).

Additional information

Editor: Tapio Elomaa.

Appendix: Additional proofs

Theorem 6

Let \(h_{\hat{\mathbf {w}}, \varvec{\beta }}\) be generated by Regularized ERM, given the m-sized training set S sampled i.i.d. from the target domain, source hypotheses \(\{h^{\text {src}}_i\}_{i=1}^n\), any source weights \(\varvec{\beta }\) obeying \(\varOmega (\varvec{\beta }) \le \rho \), and \(\lambda \in \mathbb {R}_+\). Assume that \(\ell (h_{\hat{\mathbf {w}}, \varvec{\beta }}(\mathbf {x}), y) \le M\) for any \((\mathbf {x}, y)\) and any training set. Then, denoting \(\kappa = \frac{H}{\sigma }\) and assuming that \(\lambda \le 1\), for any \(\eta \ge 0\) we have with probability at least \(1 - e^{-\eta }\)

Proof

To prove the statement we will use Theorem 1 of Srebro et al. (2010). In particular, we need to obtain bounds on the empirical risk and also to bound the worst case Rademacher complexity of the class

The corresponding loss class is

The constraint on \(\varOmega (\varvec{\beta })\) in \(\mathcal {H}\) comes from the statement of the theorem, while the constraint on \(\varOmega (\hat{\mathbf {w}})\) comes from the observation that, for

so we have \(\varOmega (\hat{\mathbf {w}}) \le \frac{\hat{R}_S(h_{\mathbf {0}, \varvec{\beta }})}{\lambda }\). The same argument immediately gives us a bound on the empirical risk, that is, \(\hat{R}_S(h_{\hat{\mathbf {w}}, \varvec{\beta }}) \le \hat{R}_S(h_{\mathbf {0}, \varvec{\beta }}) = \hat{R}_S(h^{\text {src}}_{\varvec{\beta }})\). Taking expectation on both sides gives the constraint of \(\mathcal {L}\).

By applying Theorem 1 of Kakade et al. (2008) and the subadditivity of Rademacher complexities (Bartlett and Mendelson 2003), we have

Note that this upper bound also bounds the worst-case Rademacher complexity, since no term depends on the sample.

All that is left to do is to bound the empirical risk in terms of \(R^{\text {src}}\). However, we cannot use Theorem 1 of Srebro et al. (2010) for this, since it is not symmetric. Instead, we use a similar localized bound of Bartlett et al. (2005, Corollary 3.5). To apply it, we need an upper bound on the Rademacher complexity of the loss class \(\mathcal {L}\) that is a sub-root function (Bousquet 2002, Definition 4.1). Using the fact that the loss function is bounded, we apply Talagrand’s lemma (Mohri et al. 2012) to get \(\hat{\mathfrak {R}}_S(\mathcal {L}) \le M \hat{\mathfrak {R}}_S(\mathcal {H})\); then, upper-bounding with the first inequality of (23) and applying Jensen’s inequality w.r.t. \({{\mathrm{\mathbb {E}}}}[\cdot ]\), we have

Since the upper bound is a sub-root function of \(R^{\text {src}}\), we obtain its fixed point \(r^\star \), as required by Corollary 3.5, and conclude that

Now we apply Corollary 3.5: for any \(K > 0\) and any \(\eta \ge 0\), with probability at least \(1 - e^{-\eta }\) the following holds

All that is left to do is to apply Theorem 1 of Srebro et al. (2010) to have

Using the assumption on \(\lambda \), we get the stated result. \(\square \)

1.1 Guarantees using localized Rademacher complexity bounds

The following theorem is due to Bousquet (2002, Theorem 6.1). Specifically, we state the penultimate inequality of its proof, as it better serves our purpose.

Theorem 7

(Bousquet 2002) Let \(\mathcal {F}\) be a class of non-negative functions such that \(\Vert f\Vert _\infty \le M\) almost surely. Let \(\phi _m\) be a function defined on \([0, \infty )\) that is non-negative, non-decreasing, not identically zero, and such that \(\phi _m(r)/\sqrt{r}\) is non-increasing. Moreover let \(\phi _m\) be such that for all \(r > 0\)

Define \(r^\star _m\) as the largest solution of the equation \(\phi _m(r) = r\). Then, for all \(\eta > 0\), with probability at least \(1 - e^{-\eta }\), for all \(f \in \mathcal {F}\) and any \(\{X_i\}_{i=1}^m\) drawn i.i.d.,

We use Theorem 7 to prove the following HTL generalization bound.

Theorem 8

Let \(h_{\hat{\mathbf {w}}, \varvec{\beta }}\) be generated by Regularized ERM, given the m-sized training set S sampled i.i.d. from the target domain, source hypotheses \(\{h^{\text {src}}_i\}_{i=1}^n\), any source weights \(\varvec{\beta }\) obeying \(\varOmega (\varvec{\beta }) \le \rho \), and \(\lambda \in \mathbb {R}_+\). Assume that \(\ell \) is an L-Lipschitz loss function and \(\ell (h_{\hat{\mathbf {w}}, \varvec{\beta }}(\mathbf {x}), y) \le M\) for any \((\mathbf {x}, y)\) and any training set. Then, for all \(\eta \ge 0\), with probability at least \(1 - e^{-\eta }\) we have

Proof

The core of the proof is an application of Theorem 7. In particular, we have to obtain the fixed point \(r^\star _m\) and upper-bound \(R(h)\) by the risk of the source hypothesis \(R^{\text {src}}\).
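For sub-root functions of a simple shape, the fixed point can be computed directly. The sketch below assumes \(\phi (r) = a\sqrt{r} + b\) with \(a, b > 0\), which is the shape of \(\phi _m\) defined later in this proof; the constants `a` and `b` are hypothetical. Substituting \(s = \sqrt{r}\) turns \(\phi (r) = r\) into \(s^2 = a s + b\), whose positive root gives \(r^\star = \big ((a + \sqrt{a^2 + 4b})/2\big )^2\). The code checks this closed form against plain fixed-point iteration \(r \leftarrow \phi (r)\), which, for a sub-root function started at any \(r_0 > 0\), moves monotonically toward the unique positive fixed point.

```python
import math


def fixed_point(phi, r0=1.0, tol=1e-12, max_iter=10_000):
    """Iterate r <- phi(r) from r0 > 0 until successive iterates differ by less than tol.

    For a sub-root phi (non-negative, non-decreasing, not identically zero, and with
    phi(r)/sqrt(r) non-increasing), the iterates move monotonically toward the
    unique positive fixed point, i.e. the largest solution of phi(r) = r.
    """
    if r0 <= 0:
        raise ValueError("r0 must be positive")
    r = r0
    for _ in range(max_iter):
        r_next = phi(r)
        if abs(r_next - r) < tol:
            return r_next
        r = r_next
    raise RuntimeError("fixed-point iteration did not converge")


def closed_form_fixed_point(a, b):
    """Positive solution of a*sqrt(r) + b = r, via s = sqrt(r) and s^2 = a*s + b."""
    s = (a + math.sqrt(a * a + 4.0 * b)) / 2.0
    return s * s


a, b = 0.3, 0.05  # hypothetical constants


def phi(r):
    return a * math.sqrt(r) + b


print(fixed_point(phi), closed_form_fixed_point(a, b))
```

Starting from above or below \(r^\star \) gives the same limit, which is a quick way to confirm that a candidate \(\phi \) behaves like a sub-root function near its fixed point.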

Taking the function class of Theorem 7 to be the class of L-Lipschitz losses \(\mathcal {L}:= \{(\mathbf {x}, y) \mapsto \ell (h(\mathbf {x}), y) ~:~ h \in \mathcal {H}\}\), Talagrand’s lemma (Mohri et al. 2012, Lemma 4.2) gives \(\hat{\mathfrak {R}}_S(\mathcal {L}) \le L \hat{\mathfrak {R}}_S(\mathcal {H})\). Furthermore, let the hypothesis class be

The choice of constraints is motivated by the same argument as in the proof of Theorem 1. With this class, we obtain the upper bound

Both terms follow from Theorem 7 of Kakade et al. (2012): in the first term we set \(f_{\text {max}} = R^{\text {src}}\), and in the second \(f_{\text {max}} = \rho \). Now define the function \(\phi _m(r) = L \sqrt{\frac{2 r}{m \lambda \sigma }} + L \sqrt{\frac{2 \rho }{m \sigma }}\), and observe that it satisfies the conditions of Theorem 7. Next, to upper-bound \(r_m^\star \), we solve \(L \sqrt{\frac{2 r}{m \lambda \sigma } + \frac{2 \rho }{m \sigma }} \le r\) and get that \( r_m^\star \le \frac{L (L + 1)}{m \lambda \sigma } + L \sqrt{\frac{2 \rho }{m \sigma }}. \) As in Theorem 1, we also get \(R(h) \le R^{\text {src}}\). Plugging the bounds on \(r_m^\star \) and \(R(h)\) into Theorem 7 yields the statement. \(\square \)
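The conditions that Theorem 7 places on \(\phi _m\) can also be spot-checked numerically. Analytically, \(\phi _m\) is non-decreasing and \(\phi _m(r)/\sqrt{r} = L\sqrt{2/(m\lambda \sigma )} + L\sqrt{2\rho /(m\sigma )}/\sqrt{r}\) is non-increasing. The minimal sketch below checks these properties on a grid, with hypothetical values for \(L, m, \lambda , \sigma , \rho \) (`lam` stands for \(\lambda \)); a finite grid can refute the properties but cannot prove them.

```python
import numpy as np


def phi_m(r, L, m, lam, sigma, rho):
    """phi_m(r) = L*sqrt(2r/(m*lam*sigma)) + L*sqrt(2*rho/(m*sigma)), as in the proof of Theorem 8."""
    return L * np.sqrt(2.0 * r / (m * lam * sigma)) + L * np.sqrt(2.0 * rho / (m * sigma))


def looks_sub_root(phi, grid, tol=1e-12):
    """Check on a grid of r > 0 the conditions of Theorem 7: phi non-negative,
    not identically zero, non-decreasing, and phi(r)/sqrt(r) non-increasing."""
    values = phi(grid)
    ratio = values / np.sqrt(grid)
    return (
        bool(np.all(values >= 0))
        and bool(np.any(values > 0))
        and bool(np.all(np.diff(values) >= -tol))
        and bool(np.all(np.diff(ratio) <= tol))
    )


grid = np.logspace(-6, 3, 500)
params = dict(L=1.0, m=100, lam=0.1, sigma=1.0, rho=0.5)  # hypothetical values
print(looks_sub_root(lambda r: phi_m(r, **params), grid))  # True
print(looks_sub_root(lambda r: r, grid))                   # False: r/sqrt(r) increases
```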


Cite this article

Kuzborskij, I., Orabona, F. Fast rates by transferring from auxiliary hypotheses. Mach Learn 106, 171–195 (2017). https://doi.org/10.1007/s10994-016-5594-4
