Abstract

In the current work, after generating experimental data points for different volume fraction of nanoparticles (\(\phi\)) and different temperatures, an algorithm to find the best neuron number in the hidden layer of artificial neural network (ANN) method is proposed to find the best architecture and then to predict the thermal conductivity (\(k_{\text{nf}}\)) of SiO2/water–ethylene glycol (50:50) nanofluid. This ANN is a feed-forward network with Levenberg–Marquardt for the learning algorithm. Regarding the experimental data points, a third-order function is obtained. In the fitting method, the mean square error is 2.7547e−05, and the maximum value of error is 0.0125. The correlation coefficient of the fitting method is 0.9919. This surface also shows the behavior of nanofluid based on the \(\phi\) and temperatures, and finally, the results of these methods have been compared. It can be seen that for 8 neuron numbers, the correlation coefficient for all outputs of ANN is 0.993861.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Fluid cooling or heating is very important for many industrial applications. Improving the thermal properties of heat transfer fluids can be a method for heat transfer. Nanofluids have attracted the attention of many scientists in recent years [1,2,3,4,5,6,7,8]. For example, small amounts of nanoparticles in working fluids increase the thermal conductivity of these fluids. To improve the thermal conductivity of liquids, researchers have investigated the thermo-physical properties of nanofluids. Recently, ANNs are used in many scientific projects. ANNs are systems that are inspired by the human brain and the process of data is similar to biological neurons. The process of learning in such systems happens by examples. ANNs are often including connected units which are called neurons. The birth of ANNs returns to the 1940s by Warren McCulloch and Walter Pitts. In the 1940’s, the Hebbian learning algorithm was proposed by Hebb was an unsupervised learning method. In 1954, Hebbian networks were simulated by Farley and Clark AND Holland, Rochester, Holland, Habit and Duda worked on computational methods [9,10,11,12,13,14,15]. In many cases, these ANNs can predict the behavior of the systems. One of the most critical aspects of these ANNs is in predicting the behavior of nonlinear systems or complex systems.

Jamal-Abadi et al. [16] optimized the \(k_{\text{nf}}\) of Al2O3/water nanofluid using ANN. Their results show the maximum enhancement of 42% for \(k_{\text{nf}}\). Tahani et al. [17] modeled the \(k_{\text{nf}}\) of GO/water nanofluid using ANN method. Their results indicate that the ANN can predict the \(k_{\text{nf}}\). Khosrojerdi et al. [18] modeled the \(k_{\text{nf}}\) of GO/water nanofluid by MLP-ANN. Their results show high accuracy of ANN modeling for predicting the \(k_{\text{nf}}\). Esfe et al. [19] used an ANN model to predict the \(k_{\text{nf}}\) of MWCNT–water nanofluid. They concluded that ANN could predict the \(k_{\text{nf}}\) more accurately. Aghayari et al. [20] compared the experimental and predicted data for the \(k_{\text{nf}}\) of Fe3O4/water nanofluid using ANNs. Afrand et al. [21] predicted the effects of MgO concentration and temperature on the thermal conductivity of water by using of ANN approach. Comparisons revealed that the ANN approach was accurate. Zhao and Li [22] predicted the \(k_{\text{nf}}\) of Al2O3–water nanofluids using ANNs. They found that the ANN provides an effective way to predict the properties of this type of nanofluids. Esfe et al. [23] evaluated the properties of EG-ZnO-DWCNT with ANN. Their results show the accuracy of ANN in modeling the \(k_{\text{nf}}\). Aghayari et al. [24] modeled the electrical conductivity of CuO/glycerol nanofluids. Kannaiyan et al. [25] modeled the \(k_{\text{nf}}\) of Al2O3/SiO2–water nanofluid using ANNs. They found that the predicted \(k_{\text{nf}}\) is satisfactory. Zendehboudi and Saidur [26] obtained a model to estimate the \(k_{\text{nf}}\) of 26 nanofluids under different situations.

In this study, after generating experimental data points for different volume fraction of nanoparticles \((\phi = 0,0.1,0.5,1,1.5,2,3\;{\text{and}}\;5\% )\) and different temperatures (25, 30, 35, 40, 45 and 50 °C), an algorithm to find the best neuron number in the hidden layer of ANN method is proposed to find the best architecture and then to predict the \(k_{\text{nf}}\) of SiO2/water–ethylene glycol (50:50) hybrid Newtonian nanofluid. Then, using the fitting method, a surface is fitted on the experimental data points. According to the authors’ research, there is no investigation with ANN into the \(k_{\text{nf}}\) of this type of nanofluids.

Experimental

In this study, a mixture of 60 to 40 volumes of water and ethylene glycol was used. The silica nanoparticles were suspended in water and ethylene glycol mixture (made by Germany’s Merk Corporation). The nanofluid is stabilized by combining the chemical and mechanical methods in the different \(\phi\). A minimum of 5 h of ultra-sonication is used to stabilize the nanofluid. This nanofluid is made of 7 different volume fraction of nanoparticles (0, 0.1, 0.5, 1, 1.5, 2, 3 and 5%) and different temperatures (25, 30, 35, 40, 45 and 50 °C). After suspending the nanoparticles, the \(k_{\text{nf}}\) is measured with a KD2 probe. The physical and chemical properties of materials are presented in Tables 1, 2, and 3.

After making nanofluids, each sample was monitored for three days with no deposition and settling. Figure 1 shows the variation of \(k_{\text{nf}}\) versus \(\phi\) at all experimental temperatures. As can be seen, the changes of \(k_{\text{nf}}\) at all temperatures have a similar general shape. It is observed that in a lower \(\phi\), the slope of \(k_{\text{nf}}\) is greater. This behavior is due to the fact that increasing the \(\phi\) increases the probability of localization and consequently decreasing the specific surface area. At 25 °C, by increasing the \(\phi\) from \(\phi = 0.1\) to \(\phi = 5\%\), the \(k_{\text{nf}}\) increases from 3.7 to 38.4% and the highest \(k_{\text{nf}}\) occurs at the highest volume fraction. At 30 °C, by increasing \(\phi\) from 0.1 to 5%, the \(k_{\text{nf}}\) increases from 3.9 to 31% and the highest \(k_{\text{nf}}\) occurs at the highest \(\phi\). At 35 °C, by increasing the \(\phi\) from 0.1 to 5%, the \(k_{\text{nf}}\) increases from 4.1 to 41.9% and the highest \(k_{\text{nf}}\) occurs at the highest \(\phi\). At 40 °C, by increasing the \(\phi\) from 0.1 to 5%, the \(k_{\text{nf}}\) increases from 4.3 to 43.1% and the highest \(k_{\text{nf}}\) occurs at the highest \(\phi\). At 50 °C, by increasing the \(\phi\) from 0.1 to 5%, the \(k_{\text{nf}}\) increases from 5.1 to 50.9% and the highest \(k_{\text{nf}}\) occurs at the highest \(\phi\).

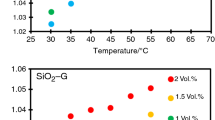

Figure 2 shows the changes in the \(k_{\text{nf}}\) versus temperature at the different \(\phi\). At \(\phi = 0.1\%\), the \(k_{\text{nf}}\) increases 6.6% with increasing temperature from 25 to 50 °C. The highest \(k_{\text{nf}}\) is related to T = 50 °C. At \(\phi = 0.5\%\), with increasing temperature from T = 25–50 °C the \(k_{\text{nf}}\) increases 9.9%. The \(k_{\text{nf}}\) increases by 9.9% compared to the \(k_{bf}\) at T = 25 °C.

At \(\phi = 1\%\), with increasing temperature from T = 25–50 °C, the \(k_{\text{nf}}\) increases 14.9%. At \(\phi = 1.5\%\) at 50 °C, the \(k_{\text{nf}}\) increased by 13.7%. At \(\phi = 2\%\), the \(k_{\text{nf}}\) increases 27.8% with increasing temperature from 25 to 50 °C. In \(\phi = 3\%\), with increasing temperature from T = 25–50 °C, the \(k_{\text{nf}}\) increases 8.9%. At \(\phi = 5\%\), with increasing temperature from T = 25–50 °C, the \(k_{\text{nf}}\) increases 45.5%.

As the temperature increases, the movement of the nanoparticles increases, resulting in a higher \(k_{\text{nf}}\). The increase in \(k_{\text{nf}}\) with increasing temperature and \(\phi\) can be attributed to the weakening of the molecular bonds in the fluid layers as well as the increase in the collision between the nanoparticles.

ANN method

ANNs are used for modeling the behavior of nanofluid. ANN is widely used in predicting the behavior of system especially nonlinear systems. But in this paper, an optimized ANN for predicting the \(k_{\text{nf}}\) is reached by changing different neuron numbers in the hidden layer and then comparing the performances and selecting the best neuron number. In fact, the architecture of ANN is modified to obtain the best neuron number for predicting the \(k_{\text{nf}}\). But some terms and descriptions about the basics of ANN has been presented as follows.

Mean square error (MSE) is presented as follows,

In Eq. 1, \(N\) is the number of data points, \(Y_{\text{k}}^{\text{ANN}}\) is the output and \(Y_{\text{k}}^{\exp }\) is the experimental value. In ANNs, the data are predicted by Eq. 2,

In Eq. 2, \(y_{\text{i}}\) is the output, \(\varPhi\) is activation function, \(w_{\text{ij}}\) is the weighting matrix, \(x_{\text{j}}\) is input and \(b_{\text{i}}\) is the bias. In this designed ANN, the activation function of input and hidden layer is tansig which is introduced in Eq. 3. Also, the activation function of output layer is purelin.

This ANN is a feed-forward network with Levenberg–Marquardt or damped least square for the learning algorithm. This algorithm was firstly introduced in 1944. The experimental data points are randomly divided into train, validation, and test parts. In the current work, there are 48 data points. 70% of data set is categorized as train, 15% for validation, and 15% for test data. Train data points are used to train the network; meanwhile, validation data points are used to modify the training process, and at the final step, the test data points are used to calculate the performance of the network. This ANN predicts the \(k_{\text{nf}}\) of aforementioned nanofluid. Therefore, only one neuron is used in the output layer. Obviously, the results of ANN depend on the neuron numbers of the hidden layer. This algorithm tries different neuron number and calculates the performance, and finally, the best ANN is selected as the best answer. In addition, in this algorithm, an inner iteration is defined to increase the reliability of the ANN. In fact, this inner iteration calculates the performance of each neuron number, and then, the algorithm generated another ANN and then calculates the performance, and at the final step on inner iteration, the mean value of performance for that neuron number is considered as the performance. This algorithm is shown in Fig. 3.

In this work, the number of inner iterations is considered 10. Different neuron numbers have been tested (from 6 to 35). The sorted neuron numbers based on the performances are presented in Table 4.

Considering Table 4, it can be seen that the ANN with 8 neurons has the best performance. The correlation is defined as:

In Eq. 4, \(E\) is the expected value, \(\text{cov}\) is covariance, \(\mu_{\text{X}}\) is the mean value of \(X\) and \(\mu_{\text{Y}}\) is the mean value of \(Y\) and \(\sigma_{\text{X}} ,\sigma_{\text{Y}}\) are standard deviations of \(X,Y\). The correlation coefficients for train, validation, test and all dataset are presented in Table 5. The correlation coefficient indicates that how closely data are along a straight line. The closer that the correlation coefficient to one, the better data points are aligned. Data sets with correlation coefficient close to zero show no first-order line relationship. The results of Eq. 4 for different neuron numbers are presented in Table 5. It can be seen that the ANN with 8 neurons in the hidden layer has the best correlation for overall data and its value is close to one.

The correlation coefficient indicates that how closely data are along a straight line. The closer that the correlation coefficient to one, the better data points are aligned. Data sets with correlation coefficient close to zero show no first-order line relationship. The results of Eq. 5 for different neuron numbers are presented in Table 5. It can be seen that the ANN with 8 neurons has the best correlation for overall data and its value is close to one. In many ANNs, the data points are divided into three main categories including train validation and test randomly. Then these data points are used for the network. Train data trains the network and generates masses and biases of the network. The validation data points modify the masses and biases and finally the test data points are used to evaluate the performance of the network. In Table 2 different neuron numbers have been tested and these neuron numbers are sorted based on their test performance. On the top of Table 2 it can be seen that the best neuron number is 8 because it has the best performance.

The train, validation, and test figures of ANN are presented in Figs. 4 to 7. Figure 4 shows ANN train outputs. In ANN train data outputs, MSE is 1.3040e−06, and maximum absolute value of error is 0.0027.

Figure 5 shows ANN validation outputs. In ANN validation outputs, MSE is 2.5222e−05 and maximum absolute value of error is 0.0073.

Figure 6 shows ANN test outputs. In ANN test outputs, MSE is 1.9024e−05 and maximum absolute value of error is 0.0084.

Figure 7 shows ANN all data outputs. In ANN all outputs, MSE is 7.3763e−06 and maximum absolute value of error is 0.0084. It can be seen that train, validation and test data points are accurately predicted by the ANN. In fact, this network can predict the \(k_{\text{nf}}\) for different temperatures and different \(\phi\). In the next part, another method for predicting the \(k_{\text{nf}}\) has been explained.

Surface fitting

In this part, since there are two inputs (\(\phi\) and temperature), we can fit a surface on experimental data points to predict the \(k_{\text{nf}}\). The fitted surface is shown in Fig. 8.

Different orders for functions have been tested. But, the third order had significantly better results compared to the second order. Although, the fourth order showed better results but there were no considerable differences between the third-order and the fourth-order results. In addition, the fourth-order function had more coefficients. Therefore, to avoid complexity in the formula, the third-order function is selected. Regarding the experimental data points, a third-order function is obtained. The fitted surface is presented in Eq. 5,

In Eq. 5, x is purity and y is temperature and \({\text{Fitresult}}\left( {x,y} \right)\) is the fitted surface. The coefficients of the fitted surface are presented in Table 6. In the fitting method, the MSE is 2.7547e−05, and the maximum value of error is 0.0125. The correlation coefficient of the fitting method is 0.9919. In Fig. 9 the experimental data points, ANN outputs and fitting results have been shown.

In Fig. 10, the absolute values of errors of ANN and fitting method have been compared. It can be seen that the ANN method has smaller absolute values of errors compared to the fitting method. In addition, the maximum error value of the ANN method is smaller than half of the fitting method. In Fig. 10 it can be seen that the ANN had smaller errors compared to the fitting method.

Conclusions

Based on the presented results, it can be concluded that the ANN had better ability in predicting the \(k_{\text{nf}}\) for \(\phi = 0,0.1,0.5,1.5,2,3,5\%\) and T = 25, 30, 35, 40, 45, 50 °C. Also, ANN showed better performance and better correlation and also smaller error in most of the predicted data points. Based on the presented results, it can be seen that:

-

The best neuron number is 8.

-

The correlation coefficient of the fitting method is 0.9919.

-

In the fitting method, the MSE is 2.7547e-05, and the maximum value of error is 0.0125.

-

The ANN had ability in predicting thermal conductivity for volume fraction of particles range (0, 0.1, 0.5, 1.5, 2, 3, 5%) and temperature range (25, 30, 35, 40, 45, 50 °C).

-

ANN method has smaller absolute values of errors compared to the fitting method.

-

The maximum error value of the ANN method is smaller than half of the fitting method.

Therefore, it can be said that the designed ANN had showed better results compared to the surface fitting method and the results were reliable and accurate. Lab costs for generating experimental data are high. By using such a network, the thermal conductivity of this nanofluid can be obtained and decreases the experimental costs.

References

Ruhani B, Toghraie D, Hekmatifar M, Hadian M. Statistical investigation for developing a new model for rheological behavior of ZnO–Ag (50%–50%)/water hybrid Newtonian nanofluid using experimental data. Phys A Stat Mech Appl. 2019;525:741–75.

Moradi A, Toghraie D, Isfahani AHM, Hosseinian A. An experimental study on MWCNT–water nanofluids flow and heat transfer in double-pipe heat exchanger using porous media. J Therm Anal Calorim. 2019. https://doi.org/10.1007/s10973-019-08076-0.

Keyvani M, Afrand M, Toghraie D, Reiszadeh M. An experimental study on the thermal conductivity of cerium oxide/ethylene glycol nanofluid: developing a new correlation. J Mol Liq. 2018;266:211–7.

Saeedi HA, Akbari M, Toghraie D. An experimental study on rheological behavior of a nanofluid containing oxide nanoparticle and proposing a new correlation. Physica E: Low-dimensional Syst Nanostruct. 2018;99:285–93.

Akhgar A, Toghraie D. An experimental study on the stability and thermal conductivity of water-ethylene glycol/TiO2-MWCNTs hybrid nanofluid: developing a new correlation. Powder Technol. 2018;338:806–18.

Deris Zadeh A, Toghraie D. Experimental investigation for developing a new model for the dynamic viscosity of silver/ethylene glycol nanofluid at different temperatures and solid volume fractions. J Therm Anal Calorim. 2018;131:1449–61.

Afshari A, Akbari M, Toghraie D, Eftekhari Yazdi M. Experimental investigation of rheological behavior of the hybrid nanofluid of MWCNT–alumina/water (80%)–ethylene-glycol (20%). J Therm Anal Calorim. 2018;132:1001–15.

Ahmadi Esfahani M, Toghraie D. Experimental investigation for developing a new model for the thermal conductivity of silica/water-ethylene glycol (40%–60%) nanofluid at different temperatures and solid volume fractions. J Mol Liq. 2017;232:105–12.

Hemmat Esfe M, Rostamian H, Afrand M, Karimipour A, Hassani M. Modeling and estimation of thermal conductivity of MgO–water/EG (60:40) by artificial neural network and correlation. Int Commun Heat Mass Transf. 2015;68:98–103.

Hemmat Esfe M, Saedodin S, Naderi A, Alirezaie A, Karimipour A, Wongwises S, Goodarzi M, Dahari M. Modeling of thermal conductivity of ZnO-EG using experimental data and ANN methods. Int Commun Heat Mass Transf. 2015;63:35–40.

Afrand M, Toghraie D, Sina N. Experimental study on thermal conductivity of water-based Fe3O4 nanofluid: development of a new correlation and modeled by artificial neural network. Int Commun Heat Mass Transf. 2016;75:262–9.

Afrand M, Najafabadi KN, Sina N, Safaei MR, Kherbeet AS, Wongwises S, Dahari M. Prediction of dynamic viscosity of a hybrid nano-lubricant by an optimal artificial neural network. Int Commun Heat Mass Transf. 2016;76:209–14.

Hemmat Esfe M, Hajmohammad H, Toghraie D, Rostamian H, Mahian O, Wongwises S. Multi-objective optimization of nanofluid flow in double tube heat exchangers for applications in energy systems. Energy. 2017;137:160–71.

Hemmat Esfe M, Yan W, Afrand M, Sarraf M, Toghraie D, Dahari M. Estimation of thermal conductivity of Al2O3/water (40%)–ethylene glycol (60%) by artificial neural network and correlation using experimental data. Int Commun Heat Mass Transf. 2016;74:125–8.

Hemmat Esfe M, Hassani Ahangar MR, Rejvani M, Toghraie D, Hadi Hajmohammad M. Designing an artificial neural network to predict dynamic viscosity of aqueous nanofluid of TiO2 using experimental data. Int Commun Heat Mass Transf. 2016;75:192–6.

Tajik Jamal-Abadi M, Zamzamian AH. Optimization of thermal conductivity of Al2O3 nanofluid by using ANN and GRG methods. Int J Nanosci Nanotechnol. 2013;9:177–84.

Tahani M, Vakili M, Khosrojerdi S. Experimental evaluation and ANN modeling of thermal conductivity of graphene oxide nanoplatelets/deionized water nanofluid. Int Commun Heat Mass Transf. 2016;76:358–65.

Khosrojerdi S, Vakili M, Yahyaei M, Kalhor K. Thermal conductivity modeling of graphene nanoplatelets/deionized water nanofluid by MLP neural network and theoretical modeling using experimental results. Int Commun Heat Mass Transf. 2016;74:11–7.

Hemmat Esfe M, Motahari K, Sanatizadeh E, Afrand M, Rostamian H, Hassani Ahangar MR. Estimation of thermal conductivity of CNTs-water in low temperature by artificial neural network and correlation. Int Commun Heat Mass Transf. 2016;76:376–81.

Aghayar R, Maddah H, Faramarzi AR, Mohammadiun H, Mohammadiun M. Comparison of the experimental and predicted data for thermal conductivity of iron oxide nanofluid using artificial neural networks. Nanomed Res J. 2016;1:15–22.

Afrand M, Hemmat Esfe M, Abedini E, Teimouri H. Predicting the effects of magnesium oxide nanoparticles and temperature on the thermal conductivity of water using artificial neural network and experimental data. Phys E. 2017;87:242–7.

Zhao N, Li Z. Experiment and artificial neural network prediction of thermal conductivity and viscosity for alumina–water nanofluids. Materials. 2017;10:552–60.

Hemmat Esfe M, Esfandeh S, Afrand M, Rejvani M, Rostamian SH. Experimental evaluation, new correlation proposing and ANN modeling of thermal properties of EG based hybrid nanofluid containing ZnODWCNT nanoparticles for internal combustion engines applications. Appl Therm Eng. 2018;133:452–63.

Aghayari R, Maddah H, Ahmadi MH, Yan W, Ghasemi N. Measurement and artificial neural network modeling of electrical conductivity of CuO/glycerol nanofluids at various thermal and concentration conditions. Energies. 2018;11:1190–8.

Kannaiyan S, Boobalan C, Castro Nagarajan F, Sivaraman S. Modeling of thermal conductivity and density of alumina/silica in water hybrid nanocolloid by the application of artificial neural networks. Chin J Chem Eng. 2019;27:726–36.

Zendehboudi A, Saidur R. A reliable model to estimate the effective thermal conductivity of nanofluids. Heat Mass Transf. 2019;55:397–411.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Rostami, S., Toghraie, D., Esfahani, M.A. et al. Predict the thermal conductivity of SiO2/water–ethylene glycol (50:50) hybrid nanofluid using artificial neural network. J Therm Anal Calorim 143, 1119–1128 (2021). https://doi.org/10.1007/s10973-020-09426-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10973-020-09426-z