Abstract

Viscosity can be mentioned as one of the most crucial properties of nanofluids due to its ability to describe the fluid resistance to flow, and as the result it affects other phenomena. The effects of nanofluids’ viscosity on different parameters can be enumerated as pressure drop, pumping power, feasibility of the nanofluid, and its convective heat transfer coefficient. In this investigation, the viscosity of TiO2/water nanofluid was compared and analyzed with experimental data. The primary goal of this investigation was to introduce a combination of experimental and modeling approaches to predict viscosity values using four different neural networks. Between MLP-ANN, ANFIS, LSSVM, and RBF-ANN methods, it was found that the LSSVM produced better results with the lowest deviation factor and reflected the most accurate responses between the proposed models. The regression diagram of experimental and estimated values shows an R2 coefficient of 0.995 and 0.993 for training and testing sections of the ANFIS model. These values for MLP-ANN, RBF-ANN, and LSSVM models were 0.998 and 0.999, 0.996 and 0.997, and 0.997 and 1.000 for their training and testing parts, respectively. Furthermore, the effect of different parameters was investigated using a sensitivity analysis which demonstrates that the average diameter can be considered as the most affecting parameter on the viscosity TiO2–water nanofluid with a relevancy factor of 0.992123.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Recently, the applications of nanofluids have been widely accepted by many scholars in numerous engineering problems like mechanical, chemical, and electrical engineering, due to their favorable properties compared to the conventional fluids, such as lubricating efficiency, cooling capacity, thermal characteristics, and viscosity behavior [1,2,3,4,5].

The viscosity augmentation can be achieved with the help of adding nanoparticles to the base fluid [6,7,8]. The nanofluids’ viscosity affects the convective heat transfer coefficient, pressure drop, pumping power, its workability in industrial application, and thereby the pressure reduction must be compensated by using higher quantity of power [9]. The addition of micrometer-sized particles to the base fluids causes vast changes in the properties of the operating fluid [10, 11]. In this way, it can be concluded that nanofluids express higher convective heat transfer and viscosity by comparison of nanofluids with liquids which conventionally utilized [12,13,14]. Thus, the optimization of heat transfer process can be obtained by applying nanotechnology. The examples of nanoparticles that are suspended in the fluids are metals, oxides, ceramics, and nanotubes [15,16,17,18,19]. Additionally, the range contributed to the nanoparticles’ size is approximately between 1 and 100 nm. As suspended particles have the characteristics of higher viscosity and thermal performance, auspicious properties associated with nanofluids can be achieved by adding these particles. In this way, some experimental investigations on the viscosity of nanofluids have been performed by many researchers [4]. Therefore, the influence of various parameters on nanofluids viscosity has been studied by many scholars [20,21,22,23,24]. The effects of temperature [9, 21, 22, 25,26,27,28,29,30,31,32,33], volumetric concentration of nanoparticle [1, 20, 21, 23, 27,28,29, 34,35,36,37,38,39], aggregation radius [40, 41], particle shape [42], thickness of nanolayers [43, 44], and packing fraction [45] have been widely provided in these studies.

The flow characteristic and thermal performance of TiO2/distilled water flowing through a vertical pipe in an upward direction have been investigated by He et al. [46]. The operation conditions can be stated as a constant heat flux boundary condition in turbulent and laminar flow regimes. In the investigation, the average diameter of 95 nm was utilized for TiO2 nanoparticles. As indicated in the results, the estimated quantity of the Einstein equation is considerably lower than the calculated viscosity of nanofluids [28]; the viscosity of TiO2/water nanofluid in the temperature range of 15–35 °C and volume concentration range of 0.2–2 vol% has been studied by Duangthongsuk et al. [28]. According to the experiments, the nanofluids’ viscosity reduced by increasing the temperature and particle concentrations. As stated in the results, the comparison of the experimental viscosity of nanofluids with estimated quantities from the previous correlations showed these two values were distinct. Therefore, new correlations were performed in order to predict the nanofluids’ viscosity [47].

In addition to the experimental investigations, vast variety of efforts were done to model the experimental results by proposing different correlations or by utilizing artificial neural networks. Various studies in different fields have recommended applying artificial intelligence like support vector machines, fuzzy inference systems, and the artificial neural networks (ANNs), which commonly lead to accurate results [48,49,50,51,52,53].

Radial basis function neural networks (RBF-NN) have been applied by Zhao et al. [34] for the aim of predicting the viscosity of CuO/water and Al2O3/water nanofluids. In this way, 721 experimental data contributed to the mentioned nanofluids were utilized. Also, for predicting the viscosity of nine various nanofluids with the help of hybrid self-organizing polynomial neural network based on GMDH, nine models have been developed by Atashrouz et al. [54].

Derakhshanfard and Mehralizadeh in 2018 published a paper on the impact of the efficiency of radial basis function method. They investigated the viscosity of crude oil under different ranges of temperatures and various mass fractions of five types of nanofluids. Increase in the concentration of TiO2, ZnO, and FeO3 nanoparticles led to decrease in the viscosity of their corresponding nanofluids; by contrast, any raise in the mass fraction of WO3 and NiO will result in higher viscosities [55].

Additionally, LSSVM approach has been employed by Meybodi et al. [56] for predicting the viscosity of water-based SiO2, CuO, TiO2, and Al2O3 nanofluids. For the aim of estimating the viscosity of TiO2/water nanofluid, an ANN has been developed by Esfe et al. [40] with considering volume fraction and temperature as input data. As stated in the results, the ANN model can precisely and reliably estimate the viscosity of TiO2/water nanofluid. Baghban et al. [57] used least square support vector machine algorithm (LSSVM) to examine the properties of 29 various nanofluids.

Furthermore, or the aim of predicting the viscosity of non-Newtonian EG–water/Fe3O4 nanofluids as a function of shear rate, volume fraction, and temperature, GMDH approach has been utilized by Atashrouz et al. [58]. According to the results, the GMDH approach can estimate the viscosity accurately. Heidari et al. [59] and Barati-Harooni et al. [60] proposed two distinct methods utilizing a similar data bank for predicting the viscosity based on MLP-ANN and RBF-ANN, respectively. Based on their results, both models have the ability to predict the nanofluids’ viscosity accurately [61].

Additionally, the ANN model has been used by Longo et al. [62] for estimating the thermal conductivity of Al2O3/water and TiO2/water nanofluids. Moreover, different investigations with the help of ANN approach for estimating the thermophysical properties of nanofluids containing various kinds of nanoparticles (Fe, Cu, Mg(OH)2, TiO2, MgO, Al2O3) and base fluids (ethylene glycol, water, and their mixture) have been stated by Esfe et al. [63,64,65,66,67]. Also, another study has been carried out by Aminian [68] which focused on the development of an ANN model for predicting the water-based nanofluid’s effective viscosity for an extensive group of experimental data [47]. In addition to the ANN approaches, Tafarroj et al. [69] utilized the computational fluid dynamics and predicted the efficiency of the absorption of nanofluids. The impact of concentration of nanofluid and the operating temperature was investigated through CFD and ANN approaches. They drew a comparison between these methods and discussed their benefits and disadvantages. In spite of the CFD approach, they proposed that MLP network is not able to either analyze the trends or predict anything beyond its training domain [70].

In the present study, we utilize various neural networks (ANFIS, MLP-ANN, LSSVM, and RBF-ANN) to model the experimentally obtained data of viscosity of TiO2–water nanofluid and develop four various models to predict the viscosity precisely, rapidly, and cost-effectively.

Theory

Multilayer perceptron artificial neural network (MLP-ANN)

A type of computational intelligence which is originated from biological nervous systems like the human brain is named artificial neural networks (ANNs). Complicated relations between outputs and inputs of a system can be found with the help of ANNs. Interconnections or links and processing elements are mentioned as two major elements for each ANN. In this way, interconnections and masses provide connections between neurons; however, nodes or neurons and the processing elements process the information [71, 72]. Radial basis function (RBF) and multilayer perceptron (MLP) are considered as the most prevailing ANNs. The mentioned networks differ fundamentally based on the approach in which the neurons process the information. Various layers are included in an MLP neural network, in which the input layer proportionate to the input data and the output layer proportionate to the output of the model are the first and the last layers, respectively. Additionally, the middle layers between the output and input layers are called hidden layers [73]. In general, hidden layers have an obligation for the internal appearance of the relation between the model’s inputs and the favorable output. The neurons’ number and input variables are equal; on the other hand, the outputs’ number is typically the one that is the appealing property/parameter. The numbers of neurons and hidden layers must be decided based on empirical approaches. A one-hidden-layer MLP is favorable in many problems [74]. Nonetheless, mainly two hidden layers are utilized for complex systems. Also, the whole neurons in the next and previous layer are connected to each neuron in the hidden layer [61]. In turn, the hidden neurons’ outputs perform as inputs to the output neuron where they undergo another transformation. The following equation describes the output of MLP neural network:

in which \(\beta_{\text{jk}}\) and \(\gamma_{\text{jk}}\) express bias mass for neuron j in layer k and the neuron j’s output from k’s layer, respectively. \(\omega_{\text{ijk}}\) indicates the model-fitting parameters, which are the link masses. These parameters have been chosen indiscriminately in the start of network training process. Additionally, \(F_{\text{k}}\) signifies the nonlinear activation transfer functions that are regarded in various forms like linear functions, Gaussian, bipolar sigmoid, binary sigmoid, binary step function, and identity function [75].

Adaptive neuro-fuzzy inference system (ANFIS)

The combination of multilayer artificial neural network and Sugeno fuzzy inference model (SFIM) is named ANFIS. Synaptic masses are not utilized in ANFIS approach; however, it applies nonadaptive and adaptive nodes in its different layers. The performing principle of ANFIS and SFIM is relatively identical [76]. In the initial phase of ANFIS, least square method and gradient descent with backpropagation algorithm identify model parameters and fuzzy membership function [77]. The analysis of viscosity values is performed with the help of using the fuzzy membership functions. Generally, a set of degrees and objects of membership is considered as a fuzzy set, which its values vary between 0 and 1. The analysis of ambiguous and subjective judgments is mentioned as the crucial role of the fuzzy logic. It is presumed that the ANFIS approach includes two inputs x and y, as well as one output f. For generating two if–then rules, the first-order Sugeno kind should be utilized as follows:

in which x and y express the input variables, and U1, U2, V1, and V2 indicate small, medium, and large fuzzy sets. Also, different design parameters like p1, q1, r1, p2, q2, and r2 define the linguistic labels in the training phase. A typical schematic of the ANSIS model is provided in Fig. 1.

Typical structure of the ANFIS [4]

Least squares support vector machine (LSSVM)

A machine learning technique utilized in regression pattern recognition, classification, and analysis amid input data is named support vector machine (SVM). Least square support vector machine (LSSVM) is considered as a novel version of SVM, which was proposed to obviate the prevailing problems of SVM approach. In this approach, regression error is added to the constraints associated with the optimization. To be clear, regression error is mathematically determined and solved in LSSVM approaches; nonetheless, it is optimized in the learning phase of SVM methods. Equation 2 defines the penalized function in this method as follows [78]:

in which T and γ indicate transpose matrix and regression errors’ summation. The succeeding constraints subject to the above equation:

in which, ek, T, y, b, and w express the N training objects’ regression error, the transpose matrix, the output vector contributed to the model, the bias or the intercept of linear regression, and the regression mass (the linear regression slope), respectively. Additionally, the following equation regularly expresses the mass coefficient (w):

By reformulating Eq. (4) with the help of LSSVM method, the succeeding equation is obtained [78]:

Therefore, the Lagrange multipliers can be described as follows:

By utilizing the succeeding Kernel function, the mentioned linear regression equation is reformulated:

Also, K(x, xk) indicates the Kernel function that is the result of dot product of x and xk vectors. Actually, the dot product of Φ(xk) and Φ(x)T is K(x, xk) like the succeeding expression [78]:

Radial basis Kernel function is considered as one of the most eminent Kernel function which has been applied in this investigation as follows:

This paper uses a PSO algorithm for the aim of optimizing the LSSVM approach. A schematic illustration of the PSO-LSSVM method is demonstrated in Fig. 2.

RBF-ANN

Radial basis function neural networks are referred as one of the firm-proved neural networks, which are applied in regression and classification. In fact, the theory of function approximation is the basis of the concept associated with RBF-ANN. The introduction of this method was rendered by Broomhead, which was a sort of feed-forward neural networks. Furthermore, numerous numerical and mathematical investigations have been carried out with the help of using these networks [79]. Generally, a three-layer feed-forward structure is included in a RBF neural network, that is, an input layer is connected to the output layer with the help of a hidden layer. In reality, p is the input nodes in the input layer which is similar to the input variables’ number of the model. The major part of RBF-ANN which transmits the data from input space to a hidden space is the hidden layer. Every point contributed to the hidden layer is centered at an exact radius. The distance between the input vector and its own center is measured in every neuron [80].

The configuration of an RBF-ANN system is similar to the structure of MLP-ANN, but a complex RBF function is applied to the hidden layers. The result of RBF-ANN is:

where x is an input pattern, yi (x) is ith output, and wki is the mass of connection from the kth interior element to the ith element of outcome layer. The || || symbol represents the Euclidean norm, and ck is the archetype of the middle of the kth interior element. Conventionally, the RBF (φ) is picked out as the Gaussian operator which is presented below:

The radius (r) and center (c) are parameters of Gaussian RBF. Away from the center, it decreases uniformly.

Methodology

Pre-analysis phase

The data used for modeling are extracted from the experimental studies [81,82,83,84,85]. There are 56 sets of data points in this study for predicting viscosity of TiO2-based water nanofluid as a function of temperature, volume fraction, and average diameter. The temperature ranges between 15 and 50 °C, while the volume fraction, average diameter, and viscosity range between 0.2 and 3, 21 and 25 nm, and 0.00057 and 0.00122 kg (mS)−1, respectively. In the current paper, four model-building procedures and five different statistical approaches were used in order to estimate and validate the viscosity of nanofluid. The resulted data from the experimental section of the study at the first step are used to train the models. In order to evaluate the globalization of models, we used dataset of nanofluid with a diameter of 21 nm for testing phase and other data points with a diameter of 25 nm were used for training stage. The suggested models can predict viscosity of TiO2 nanofluids with great accuracy for different inputs. Equation 12 shows the normalization procedure of every data:

where x is the value of the nth parameter. The absolute value of \(D_{\text{k}}\) will be less than unity. The other values are fed to the neural network systems, and the models are built to predict the viscosity as the main output.

Outlier detection

On the condition of implementing statistical approaches or training machine learning algorithms, outliers or anomalies could be mentioned as a severe concern. They are generally made due to the measurements’ errors or excellent systems conditions, as the result cannot illustrate the prevailing functioning of the underlying system. Certainly, applying an outlier removal phase before proceeding with additional investigation can be stated as the exceptional practice. The leverage value procedure is applied as an outlier detection method in this study. The Hat and the residual values of any input were calculated. This method’s principles are provided in Refs. [86, 87]. The succeeding equation is applied to calculate the Hat matrix:

X is a matrix of size N × P, in which N represents the data points’ total number and P denotes the input parameters’ number. T and − l are transposed and inverse operators, respectively. A warning leverage value is also defined using the following expression:

A rectangular area restricted to R = ± 3 and 0 ≤ H ≤ H* is considered as the feasible region.

Model development and verification methodology

As an essential step in developing a model, the validation of model must be carried out. This step aims to check the accuracy of the proposed models and see if they produce valid results [88]. To derive the representative models, outstanding approaches of MLP-ANN, LSSVM, ANFIS, and RBF-ANN were utilized. The accuracy of models was examined by Eqs. 15–19:

where output property is denoted by X, N represents the figure of total data points, ‘exp’ illustrates the experimental values and ‘simul’ is a notation for modeled values. \(X_{\text{i}}^{\text{avg}}\) is the average of experimentally obtained viscosities.

Results and discussion

The proposed MLP-ANN, RBF-ANN, ANFIS, and LSSVM strategies were associated with common optimization algorithms including Levenberg–Marquardt and particle swarm optimization (PSO). Figure 3 shows the bubble curve of viscosity versus the volume fraction and temperature in which the size of each bubble is dependent on the size of particles. The detailed information of MLP-ANN including the number of neurons in hidden and output layers is listed in Table 1. In this table, the amount of mass parameter for different inputs (temperature, volume fraction, and diameter of TiO2) and also the bias numbers for the interior and the output layers are presented. It is worth mentioning that several structures were evaluated and then the best one with four neurons in hidden layer was selected as good structure with minimum parameters. Based on the above procedure for MLP-ANN model, we tried several times to find the best structure of RBF-ANN with minimum parameters. The optimization algorithm to find the optimized RBF-ANN parameters was Levenberg–Marquardt.

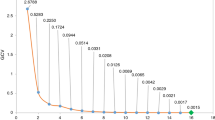

In association with ANFIS strategy, the particle swarm optimization (PSO) method is utilized for the aim of determining optimum parameters. Training results of membership functions for different parameters and various clusters are demonstrated in Fig. 4, where the plot of degree of membership versus average diameter of particles, percentage of volume fraction, and temperature is illustrated. Detailed information about the proposed models such as used membership and activation functions, number of clusters, interior and exterior layers, and the optimization methods is reported in Table 2. Two kinds of tuning parameters (γ and σ2) were used in the LSSVM machine. The optimized values for γ and σ2 are 5745.3831 and 2.0486028, respectively.

Model validation results

We applied both graphical and statistical approaches to evaluate the models’ performances regarding the estimation of the viscosity. Figure 5 illustrates the MSE error for the LM algorithm. Increasing the number of iterations results in the decrease in MSE error until it touches a final value of 4 × 10−4 after about 40 iterations. Figure 6 shows information about the performance of ANFIS method evaluated by PSO approach; it was seen that the corresponding root-mean-squared error was decreased rapidly in the first 100 iterations. Figure 7 shows the plot of the resulted viscosities obtained from the proposed models. In this figure, the results of prediction are plotted versus data index and shows the training and testing procedure results. From this figure, it can be seen that the LSSVM and RBF-ANN had a better prediction capability and led to a more precise results. The coefficient of determination (R2) indicates how close predicted values are to experimental values. This parameter usually lies between 0 and 1.0. Closer values to unity indicate more accurate predictions. Near-unity coefficients of determination for the proposed models represent their capability in predicting the viscosity. As is demonstrated in different parts of Fig. 8, the regression diagram of experimental and estimated values shows an R2 coefficient of 0.995 and 0.993 for training and testing sections of the ANFIS method in part a, and in the b, c and d parts of the diagram; the coefficients of determination were 0.998 and 0.999, 0.995 and 0.997, 0.997 and 1.000 for training and testing part of MLP-ANN, RBF-ANN, and LSSVM models. The majority of data points for both training and testing datasets are concentrated around the Y = X line which implies the accurate predictions of the proposed models. In addition to the conclusion derived from Fig. 7, Fig. 8 also verifies the accurateness and the prediction capability of LSSVM and the MLP-ANN approaches. Detailed information about the results of the evaluation methods is summarized in Table 3. Based on the acquired values, LSSVM showed an absolutely brilliant accurateness; it has had minimum MRE%, while having the maximum R-squared quantitates. Different parts of Fig. 9 illustrate the percentage of the relative deviation for the developed models. It was observed that the LSSVM model had the best accuracy than the others and its relative deviation does not exceed from 1.5% band. The relative deviation of MLP-ANN also lies between + 1.5 and − 1.5%.

Detection of suspicious dataset for different models was done based on the pre-mentioned strategy of outlier detection, and the results are illustrated in Fig. 10. According to these analyses, based on various plots of standard residual versus Hat values, in ANFIS, MLP-ANN, RBF-ANN, and LSSVM no data were considered as outlier.

Sensitivity analysis

A bunch of sensitivity analyses were carried out to find out how each input parameter affects the target variable, namely the viscosity. Quantitative effect of each parameter calculated using a relevancy factor is defined by the following expression:

where N, Xk,i, Yi, \(\bar{X}_{\text{k}}\), and \(\bar{Y}\) are the total number of data points, ith input value of the kth parameter, ith output value, average value of the kth input parameter, and mean value of the output parameter, respectively. The relevancy factor lies between − 1 and + 1, in which higher absolute values represent the higher effect of the corresponding parameter. The positive effect reflects the target variable’s increment as a specific input parameter increases, while the negative effect reflects the target variable’s decrement as a specific input parameter increases. From three main input parameters, all parameters showed direct impact on the results, which means any increase in anyone of the input parameters leads to increase in viscosity. Figure 11 illustrates the sensitivity analysis results, in which the average diameter had the highest positive effects with relevancy factor of 0.9922. The second most affecting parameter was temperature and showed a positive effect of 0.9722. It was seen that volume fraction also had a great impact on the viscosity of the nanofluid with relevancy factor of 0.9320.

Conclusions

Enhancement of heat transfer rates with the lowest utilization of energy attracted a lot of attention during recent decades. Carbon nanotubes (TiO2) are considered as promising nanomaterials and have been in the center of attention. In the present study, four soft computing-based approaches including MLP-ANN, ANFIS, LSSVM, and RBF-ANN were used in order to model the amount of viscosity of TiO2–water nanofluid system. Among MLP-ANN, ANFIS, LSSVM, and RBF-ANN methods, it was found that the LSSVM produced better results with the lowest deviation factor and reflected the most accurate responses. The regression diagram of experimental and estimated values shows the R2 coefficient of 0.9982 and 0.9969 for training and testing. The coefficients of determination were 0.9993 and 0.9989, 0.9975 and 0.9963, 0.9996 and 0.9993 for training and testing part of MLP-ANN, RBF-ANN, and LSSVM models. Furthermore, LSSVM model had the best accuracy than the others and its relative deviation does not exceed from 1.5% band. The relative deviation of MLP-ANN also lies between + 1.5 and − 1.5%. Results from the sensitivity analysis revealed that all parameters had direct impact on the viscosity which means increase in every parameter will increase the viscosity of TiO2–water nanofluid. The present study can be worthy to reach a better understanding of nanofluids and their applications in heat transfer phenomenon, especially when a high level of performance is needed.

References

Ahmadi MH, Tatar A, Seifaddini P, Ghazvini M, Ghasempour R, Sheremet MA. Thermal conductivity and dynamic viscosity modeling of Fe2O3/water nanofluid by applying various connectionist approaches. Numer Heat Transf A Appl. 2018;74:1301–22. https://doi.org/10.1080/10407782.2018.1505092.

Hemmat Esfe M, Kamyab MH, Afrand M, Amiri MK. Using artificial neural network for investigating of concurrent effects of multi-walled carbon nanotubes and alumina nanoparticles on the viscosity of 10 W-40 engine oil. Phys A Stat Mech Appl. 2018;510:610–24. https://doi.org/10.1016/j.physa.2018.06.029.

Hemmat Esfe M, Rostamian H, Esfandeh S, Afrand M. Modeling and prediction of rheological behavior of Al2O3-MWCNT/5W50 hybrid nano-lubricant by artificial neural network using experimental data. Phys A Stat Mech Appl. 2018;510:625–34. https://doi.org/10.1016/j.physa.2018.06.041.

Hemmat Esfe M, Nadooshan AA, Arshi A, Alirezaie A. Convective heat transfer and pressure drop of aqua based TiO2 nanofluids at different diameters of nanoparticles: data analysis and modeling with artificial neural network. Phys E Low-Dimens Syst Nanostruct. 2018;97:155–61. https://doi.org/10.1016/j.physe.2017.10.002.

Hemmat Esfe M, Tatar A, Ahangar MRH, Rostamian H. A comparison of performance of several artificial intelligence methods for predicting the dynamic viscosity of TiO2/SAE 50 nano-lubricant. Phys E Low-Dimens Syst Nanostruct. 2018;96:85–93. https://doi.org/10.1016/j.physe.2017.08.019.

Hemmat Esfe M, Rostamian H, Reza Sarlak M, Rejvani M, Alirezaie A. Rheological behavior characteristics of TiO2-MWCNT/10w40 hybrid nano-oil affected by temperature, concentration and shear rate: an experimental study and a neural network simulating. Phys E Low-Dimens Syst Nanostruct. 2017;94:231–40. https://doi.org/10.1016/j.physe.2017.07.012.

Afrand M, Hemmat Esfe M, Abedini E, Teimouri H. Predicting the effects of magnesium oxide nanoparticles and temperature on the thermal conductivity of water using artificial neural network and experimental data. Phys E Low-Dimens Syst Nanostruct. 2017;87:242–7. https://doi.org/10.1016/j.physe.2016.10.020.

Karimipour A, Hemmat Esfe M, Safaei MR, Semiromi D, Jafari S, Kazi SN. Mixed convection of copper–water nanofluid in a shallow inclined lid driven cavity using the lattice Boltzmann method. Phys A Stat Mech Appl. 2014;402:150–68. https://doi.org/10.1016/j.physa.2014.01.057.

Hemmat Esfe M, Saedodin S, Mahian O, Wongwises S. Efficiency of ferromagnetic nanoparticles suspended in ethylene glycol for applications in energy devices: effects of particle size, temperature, and concentration. Int Commun Heat Mass Transf. 2014;58:138–46. https://doi.org/10.1016/j.icheatmasstransfer.2014.08.035.

Nafchi PM, Karimipour A, Afrand M. The evaluation on a new non-Newtonian hybrid mixture composed of TiO2/ZnO/EG to present a statistical approach of power law for its rheological and thermal properties. Phys A Stat Mech Appl. 2019;516:1–18. https://doi.org/10.1016/J.PHYSA.2018.10.015.

Vafaei M, Afrand M, Sina N, Kalbasi R, Sourani F, Teimouri H. Evaluation of thermal conductivity of MgO-MWCNTs/EG hybrid nanofluids based on experimental data by selecting optimal artificial neural networks. Phys E Low-Dimens Syst Nanostruct. 2017;85:90–6. https://doi.org/10.1016/J.PHYSE.2016.08.020.

Ahmadi MH, Nazari MA, Ghasempour R, Madah H, Shafii MB, Ahmadi MA. Thermal conductivity ratio prediction of Al2O3/water nanofluid by applying connectionist methods. Colloids Surf A Physicochem Eng Asp. 2018. https://doi.org/10.1016/j.colsurfa.2018.01.030.

Ahmadi MH, Ahmadi MA, Nazari MA, Mahian O, Ghasempour R. A proposed model to predict thermal conductivity ratio of Al2O3/EG nanofluid by applying least squares support vector machine (LSSVM) and genetic algorithm as a connectionist approach. J Therm Anal Calorim. 2019;135:271–81. https://doi.org/10.1007/s10973-018-7035-z.

Kahani M, Ahmadi MH, Tatar A, Sadeghzadeh M. Development of multilayer perceptron artificial neural network (MLP-ANN) and least square support vector machine (LSSVM) models to predict Nusselt number and pressure drop of TiO2/water nanofluid flows through non-straight pathways. Numer Heat Transf A Appl. 2018. https://doi.org/10.1080/10407782.2018.1523597.

Hemmat Esfe M, Goodarzi M, Reiszadeh M, Afrand M. Evaluation of MWCNTs-ZnO/5W50 nanolubricant by design of an artificial neural network for predicting viscosity and its optimization. J Mol Liq. 2019;277:921–31. https://doi.org/10.1016/j.molliq.2018.08.047.

Hemmat Esfe M, Abbasian Arani AA, Esfandeh S. Experimental study on rheological behavior of monograde heavy-duty engine oil containing CNTs and oxide nanoparticles with focus on viscosity analysis. J Mol Liq. 2018;272:319–29. https://doi.org/10.1016/j.molliq.2018.09.004.

Hemmat Esfe M, Esfandeh S, Alirezaie A. A novel experimental investigation on the effect of nanoparticles composition on the rheological behavior of nano-hybrids. J Mol Liq. 2018;269:933–9. https://doi.org/10.1016/j.molliq.2017.11.147.

Alipour H, Karimipour A, Safaei MR, Semiromi DT, Akbari OA. Influence of T-semi attached rib on turbulent flow and heat transfer parameters of a silver-water nanofluid with different volume fractions in a three-dimensional trapezoidal microchannel. Phys E Low-Dimens Syst Nanostruct. 2017;88:60–76. https://doi.org/10.1016/J.PHYSE.2016.11.021.

Nojoomizadeh M, D’Orazio A, Karimipour A, Afrand M, Goodarzi M. Investigation of permeability effect on slip velocity and temperature jump boundary conditions for FMWNT/water nanofluid flow and heat transfer inside a microchannel filled by a porous media. Phys E Low-Dimens Syst Nanostruct. 2018;97:226–38. https://doi.org/10.1016/J.PHYSE.2017.11.008.

Anoop KB, Kabelac S, Sundararajan T, Das SK. Rheological and flow characteristics of nanofluids: Influence of electroviscous effects and particle agglomeration. J Appl Phys. 2009;106:034909. https://doi.org/10.1063/1.3182807.

Nguyen CT, Desgranges F, Roy G, Galanis N, Maré T, Boucher S, Angue Mintsa H. Temperature and particle-size dependent viscosity data for water-based nanofluids—hysteresis phenomenon. Int J Heat Fluid Flow. 2007;28:1492–506. https://doi.org/10.1016/j.ijheatfluidflow.2007.02.004.

Pastoriza-Gallego MJ, Casanova C, Legido JL, Piñeiro MM. CuO in water nanofluid: Influence of particle size and polydispersity on volumetric behaviour and viscosity. Fluid Phase Equilib. 2011;300:188–96. https://doi.org/10.1016/J.FLUID.2010.10.015.

Pak BC, Cho YI. Hydrodynamic and heat transfer study of dispersed fluids with submicron metallic oxide particles. Exp Heat Transf. 1998;11:151–70. https://doi.org/10.1080/08916159808946559.

Kwek D, Crivoi A, Duan F. Effects of temperature and particle size on the thermal property measurements of Al2O3–water Nanofluids. J Chem Eng Data. 2010;55:5690–5. https://doi.org/10.1021/je1006407.

Hemmat Esfe M, Saedodin S, Sina N, Afrand M, Rostami S. Designing an artificial neural network to predict thermal conductivity and dynamic viscosity of ferromagnetic nanofluid. Int Commun Heat Mass Transf. 2015;68:50–7. https://doi.org/10.1016/j.icheatmasstransfer.2015.06.013.

Hemmat Esfe M, Saedodin S. An experimental investigation and new correlation of viscosity of ZnO–EG nanofluid at various temperatures and different solid volume fractions. Exp Therm Fluid Sci. 2014;55:1–5. https://doi.org/10.1016/j.expthermflusci.2014.02.011.

Prasher R, Song D, Wang J, Phelan P. Measurements of nanofluid viscosity and its implications for thermal applications. Appl Phys Lett. 2006;89:133108. https://doi.org/10.1063/1.2356113.

Duangthongsuk W, Wongwises S. Measurement of temperature-dependent thermal conductivity and viscosity of TiO2-water nanofluids. Exp. Therm. Fluid Sci. 2009;33:706–14. https://doi.org/10.1016/J.EXPTHERMFLUSCI.2009.01.005.

Tavman I, Turgut A, Chirtoc M, Schuchmann HP, Tavman S. Archives of materials science and engineering international scientific journal published monthly as the organ of the Committee of Materials Science of the Polish Academy of Sciences. Cheltenham: International OCSCO World Press; 2007.

Meybodi MK, Daryasafar A, Koochi MM, Moghadasi J, Meybodi RB, Ghahfarokhi AK. A novel correlation approach for viscosity prediction of water based nanofluids of Al2O3, TiO2, SiO2 and CuO. J Taiwan Inst Chem Eng. 2016;58:19–27. https://doi.org/10.1016/J.JTICE.2015.05.032.

Yiamsawas T, Mahian O, Dalkilic AS, Kaewnai S, Wongwises S. Experimental studies on the viscosity of TiO2 and Al2O3 nanoparticles suspended in a mixture of ethylene glycol and water for high temperature applications. Appl Energy. 2013;111:40–5. https://doi.org/10.1016/J.APENERGY.2013.04.068.

Hemmat Esfe M, Saedodin S, Asadi A, Karimipour A. Thermal conductivity and viscosity of Mg(OH)2-ethylene glycol nanofluids. J Therm Anal Calorim. 2015;120:1145–9. https://doi.org/10.1007/s10973-015-4417-3.

Nabeel Rashin M, Hemalatha J. Viscosity studies on novel copper oxide–coconut oil nanofluid. Exp Therm Fluid Sci. 2013;48:67–72. https://doi.org/10.1016/j.expthermflusci.2013.02.009.

Zhao N, Wen X, Yang J, Li S, Wang Z. Modeling and prediction of viscosity of water-based nanofluids by radial basis function neural networks. Powder Technol. 2015;281:173–83. https://doi.org/10.1016/J.POWTEC.2015.04.058.

Vajjha RS, Das DK, Ray DR. Development of new correlations for the Nusselt number and the friction factor under turbulent flow of nanofluids in flat tubes. Int J Heat Mass Transf. 2015;80:353–67. https://doi.org/10.1016/J.IJHEATMASSTRANSFER.2014.09.018.

Maddah H, Ghazvini M, Ahmadi MH. Predicting the efficiency of CuO/water nanofluid in heat pipe heat exchanger using neural network. Int Commun Heat Mass Transf. 2019;104:33–40. https://doi.org/10.1016/J.ICHEATMASSTRANSFER.2019.02.002.

Hemmat Esfe M, Saedodin S, Mahmoodi M. Experimental studies on the convective heat transfer performance and thermophysical properties of MgO–water nanofluid under turbulent flow. Exp Therm Fluid Sci. 2014;52:68–78. https://doi.org/10.1016/j.expthermflusci.2013.08.023.

Abdellahoum C, Mataoui A, Oztop HF. Comparison of viscosity variation formulations for turbulent flow of Al2O3–water nanofluid over a heated cavity in a duct. Adv Powder Technol. 2015;26:1210–8. https://doi.org/10.1016/J.APT.2015.06.002.

Mehrabi M, Sharifpur M, Meyer JP. Viscosity of nanofluids based on an artificial intelligence model. Int Commun Heat Mass Transf. 2013;43:16–21. https://doi.org/10.1016/J.ICHEATMASSTRANSFER.2013.02.008.

Hemmat Esfe M, Ahangar MR, Rejvani M, Toghraie D, Hajmohammad MH. Designing an artificial neural network to predict dynamic viscosity of aqueous nanofluid of TiO2 using experimental data. Int Commun Heat Mass Transf. 2016;75:192–6. https://doi.org/10.1016/j.icheatmasstransfer.2016.04.002.

Jia-Fei Z, Zhong-Yang L, Ming-Jiang N, Ke-Fa C. Dependence of nanofluid viscosity on particle size and pH value. Chin Phys Lett. 2009;26:066202. https://doi.org/10.1088/0256-307X/26/6/066202.

Hari M, Joseph SA, Mathew S, Nithyaja B, Nampoori VPN, Radhakrishnan P. Thermal diffusivity of nanofluids composed of rod-shaped silver nanoparticles. Int J Therm Sci. 2013;64:188–94. https://doi.org/10.1016/J.IJTHERMALSCI.2012.08.011.

Kole M, Dey TK. Role of interfacial layer and clustering on the effective thermal conductivity of CuO–gear oil nanofluids. Exp Therm Fluid Sci. 2011;35:1490–5. https://doi.org/10.1016/J.EXPTHERMFLUSCI.2011.06.010.

Tso CY, Fu SC, Chao CYH. A semi-analytical model for the thermal conductivity of nanofluids and determination of the nanolayer thickness. Int J Heat Mass Transf. 2014;70:202–14. https://doi.org/10.1016/J.IJHEATMASSTRANSFER.2013.10.077.

Chen H, Ding Y, Tan C. Rheological behaviour of nanofluids. New J Phys. 2007;9:367. https://doi.org/10.1088/1367-2630/9/10/367.

He Y, Jin Y, Chen H, Ding Y, Cang D, Lu H. Heat transfer and flow behaviour of aqueous suspensions of TiO2 nanoparticles (nanofluids) flowing upward through a vertical pipe. Int J Heat Mass Transf. 2007;50:2272–81. https://doi.org/10.1016/J.IJHEATMASSTRANSFER.2006.10.024.

Ansari HR, Zarei MJ, Sabbaghi S, Keshavarz P. A new comprehensive model for relative viscosity of various nanofluids using feed-forward back-propagation MLP neural networks. Int Commun Heat Mass Transf. 2018;91:158–64. https://doi.org/10.1016/J.ICHEATMASSTRANSFER.2017.12.012.

Baghban A, Kardani MN, Habibzadeh S. Prediction viscosity of ionic liquids using a hybrid LSSVM and group contribution method. J Mol Liq. 2017;236:452–64. https://doi.org/10.1016/J.MOLLIQ.2017.04.019.

Bahadori A, Baghban A, Bahadori M, Lee M, Ahmad Z, Zare M, Abdollahi E. Computational intelligent strategies to predict energy conservation benefits in excess air controlled gas-fired systems. Appl Therm Eng. 2016;102:432–46. https://doi.org/10.1016/J.APPLTHERMALENG.2016.04.005.

Baghban A, Mohammadi AH, Taleghani MS. Rigorous modeling of CO2 equilibrium absorption in ionic liquids. Int J Greenh Gas Control. 2017;58:19–41. https://doi.org/10.1016/J.IJGGC.2016.12.009.

Baghban A, Bahadori M, Rozyn J, Lee M, Abbas A, Bahadori A, Rahimali A. Estimation of air dew point temperature using computational intelligence schemes. Appl Therm Eng. 2016;93:1043–52.

Baghban A, Bahadori A, Mohammadi AH, Behbahaninia A. Prediction of CO2 loading capacities of aqueous solutions of absorbents using different computational schemes. Int J Greenh Gas Control. 2017;57:143–61.

Baghban A, Ahmadi MA, Shahraki BH. Prediction carbon dioxide solubility in presence of various ionic liquids using computational intelligence approaches. J Supercrit Fluids. 2015;98:50–64.

Atashrouz S, Pazuki G, Alimoradi Y. Estimation of the viscosity of nine nanofluids using a hybrid GMDH-type neural network system. Fluid Phase Equilib. 2014;372:43–8. https://doi.org/10.1016/J.FLUID.2014.03.031.

Derakhshanfard F, Mehralizadeh A. Application of artificial neural networks for viscosity of crude oil-based nanofluids containing oxides nanoparticles. J Pet Sci Eng. 2018;168:263–72.

Meybodi MK, Naseri S, Shokrollahi A, Daryasafar A. Prediction of viscosity of water-based Al2O3, TiO2, SiO2, and CuO nanofluids using a reliable approach. Chemom Intell Lab Syst. 2015;149:60–9. https://doi.org/10.1016/J.CHEMOLAB.2015.10.001.

Baghban A, Habibzadeh S, Ashtiani FZ. Toward a modeling study of thermal conductivity of nanofluids using LSSVM strategy. J Therm Anal Calorim. 2018;10:1–10. https://doi.org/10.1007/s10973-018-7074-5.

Atashrouz S, Mozaffarian M, Pazuki G. Viscosity and rheological properties of ethylene glycol + water + Fe3O4 nanofluids at various temperatures: Experimental and thermodynamics modeling. Korean J Chem Eng. 2016;33:2522–9. https://doi.org/10.1007/s11814-016-0169-4.

Heidari E, Sobati MA, Movahedirad S. Accurate prediction of nanofluid viscosity using a multilayer perceptron artificial neural network (MLP-ANN). Chemom Intell Lab Syst. 2016;155:73–85. https://doi.org/10.1016/j.chemolab.2016.03.031.

Barati-Harooni A, Najafi-Marghmaleki A. An accurate RBF-NN model for estimation of viscosity of nanofluids. J Mol Liq. 2016;224:580–8. https://doi.org/10.1016/J.MOLLIQ.2016.10.049.

Hemmati-Sarapardeh A, Varamesh A, Husein MM, Karan K. On the evaluation of the viscosity of nanofluid systems: Modeling and data assessment. Renew Sustain Energy Rev. 2018;81:313–29. https://doi.org/10.1016/J.RSER.2017.07.049.

Longo GA, Zilio C, Ceseracciu E, Reggiani M. Application of Artificial Neural Network (ANN) for the prediction of thermal conductivity of oxide–water nanofluids. Nano Energy. 2012;1:290–6. https://doi.org/10.1016/J.NANOEN.2011.11.007.

Hemmat Esfe M, Yan W-M, Afrand M, Sarraf M, Toghraie D, Dahari M. Estimation of thermal conductivity of Al2O3/water (40%)–ethylene glycol (60%) by artificial neural network and correlation using experimental data. Int Commun Heat Mass Transf. 2016;74:125–8. https://doi.org/10.1016/j.icheatmasstransfer.2016.02.002.

Hemmat Esfe M, Afrand M, Wongwises S, Naderi A, Asadi A, Rostami S, Akbari M. Applications of feedforward multilayer perceptron artificial neural networks and empirical correlation for prediction of thermal conductivity of Mg(OH)2–EG using experimental data. Int Commun Heat Mass Transf. 2015;67:46–50. https://doi.org/10.1016/j.icheatmasstransfer.2015.06.015.

Hemmat Esfe M, Afrand M, Yan W-M, Akbari M. Applicability of artificial neural network and nonlinear regression to predict thermal conductivity modeling of Al2O3–water nanofluids using experimental data. Int Commun Heat Mass Transf. 2015;66:246–9. https://doi.org/10.1016/j.icheatmasstransfer.2015.06.002.

Esfe M, Wongwises S, Naderi A, Asadi A, Safaei MR, Rostamian H, Dahari M, Karimipour A. Thermal conductivity of Cu/TiO2–water/EG hybrid nanofluid: experimental data and modeling using artificial neural network and correlation. Int Commun Heat Mass Transf. 2015;66:100–4. https://doi.org/10.1016/j.icheatmasstransfer.2015.05.014.

Hemmat Esfe M, Rostamian H, Afrand M, Karimipour A, Hassani M. Modeling and estimation of thermal conductivity of MgO–water/EG (60:40) by artificial neural network and correlation. Int Commun Heat Mass Transf. 2015;68:98–103. https://doi.org/10.1016/j.icheatmasstransfer.2015.08.015.

Aminian A. Predicting the effective viscosity of nanofluids for the augmentation of heat transfer in the process industries. J Mol Liq. 2017;229:300–8. https://doi.org/10.1016/J.MOLLIQ.2016.12.071.

Tafarroj MM, Daneshazarian R, Kasaeian A. CFD modeling and predicting the performance of direct absorption of nanofluids in trough collector. Appl Therm Eng. 2019;148:256–69.

Manogaran G, Varatharajan R, Priyan MK. Hybrid recommendation system for heart disease diagnosis based on multiple kernel learning with adaptive neuro-fuzzy inference system. Multimed. Tools Appl. 2018;77:4379–99.

Mohaghegh S. Virtual-intelligence applications in petroleum engineering: part 3—fuzzy logic. J Pet Technol. 2000;52:82–7. https://doi.org/10.2118/62415-JPT.

Mohagheghian E, Zafarian-Rigaki H, Motamedi-Ghahfarrokhi Y, Hemmati-Sarapardeh A. Using an artificial neural network to predict carbon dioxide compressibility factor at high pressure and temperature. Korean J Chem Eng. 2015;32:2087–96. https://doi.org/10.1007/s11814-015-0025-y.

Lashkarbolooki M, Hezave AZ, Ayatollahi S. Artificial neural network as an applicable tool to predict the binary heat capacity of mixtures containing ionic liquids. Fluid Phase Equilib. 2012;324:102–7. https://doi.org/10.1016/J.FLUID.2012.03.015.

Hemmati-Sarapardeh A, Ghazanfari M-H, Ayatollahi S, Masihi M. Accurate determination of the CO2-crude oil minimum miscibility pressure of pure and impure CO2 streams: a robust modelling approach. Can J Chem Eng. 2016;94:253–61. https://doi.org/10.1002/cjce.22387.

Fausett L. Fundamentals of neural networks: architectures, algorithms, and applications. Prentice-Hall, Inc., 1994.

Alfarhan KA, Mashor MY, Saad AR, Azeez HA, Sabry MM. Effects of the window size and feature extraction approach for arrhythmia classification. J Biomim Biomater Biomed Eng. 2017;30:1–11. https://doi.org/10.4028/www.scientific.net/JBBBE.30.1.

Wang R, Du H, Zhou F, Deng D, Liu Y. An adaptive neural fuzzy network clothing comfort evaluation model and application in digital home. Multimed Tools Appl. 2014;71:395–410. https://doi.org/10.1007/s11042-013-1519-4.

Suykens JAK, Vandewalle J. Least squares support vector machine classifiers. Neural Process Lett. 1999;9:293–300. https://doi.org/10.1023/A:1018628609742.

Varamesh A, Hemmati-Sarapardeh A, Dabir B, Mohammadi AH. Development of robust generalized models for estimating the normal boiling points of pure chemical compounds. J Mol Liq. 2017;242:59–69. https://doi.org/10.1016/J.MOLLIQ.2017.06.039.

Panda SS, Chakraborty D, Pal SK. Flank wear prediction in drilling using back propagation neural network and radial basis function network. Appl Soft Comput. 2008;8:858–71. https://doi.org/10.1016/J.ASOC.2007.07.003.

Turgut A, Tavman I, Chirtoc M, Schuchmann HP, Sauter C, Tavman S. Thermal conductivity and viscosity measurements of water-based TiO2 nanofluids. Int J Thermophys. 2009;30(4):1213–26.

Duangthongsuk Weerapun, Wongwises Somchai. Measurement of temperature-dependent thermal conductivity and viscosity of TiO2–water nanofluids. Exp Therm Fluid Sci. 2009;33(4):706–14.

Murshed SMS, Leong KC, Yang C. Enhanced thermal conductivity of TiO2–water based nanofluids. Int J Therm Sci. 2005;44(4):367–73.

Murshed SMS, Leong KC, Yang C. Investigations of thermal conductivity and viscosity of nanofluids. Int J Therm Sci. 2008;47(5):560–8.

Bobbo Sergio, Fedele Laura, Benetti Anna, Colla Laura, Fabrizio Monica, Pagura Cesare, Barison Simona. Viscosity of water based SWCNH and TiO2 nanofluids. Exp Therm Fluid Sci. 2012;36:65–71.

Gramatica P. Principles of QSAR models validation: internal and external. Mol Inform. 2007;26:694–701.

Goodall CR. 13 Computation using the QR decomposition. Handb Stat. 1993;9:467–508.

Thacker BH, Doebling SW, Hemez FM, Anderson MC, Pepin JE, Rodriguez EA. Concepts of model verification and validation. Los Alamos: Los Alamos National Laboratory; 2004. https://doi.org/10.2172/835920.

Author information

Authors and Affiliations

Corresponding authors

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Ahmadi, M.H., Baghban, A., Ghazvini, M. et al. An insight into the prediction of TiO2/water nanofluid viscosity through intelligence schemes. J Therm Anal Calorim 139, 2381–2394 (2020). https://doi.org/10.1007/s10973-019-08636-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10973-019-08636-4