Abstract

This paper studies a Stieltjes-type moment problem defined by the generalized lognormal distribution, a heavy-tailed distribution with applications in economics, finance, and related fields. It arises as the distribution of the exponential of a random variable following a generalized error distribution, and hence figures prominently in the exponential generalized autoregressive conditional heteroskedastic (EGARCH) model of asset price volatility. Compared to the classical lognormal distribution it has an additional shape parameter. It emerges that moment (in)determinacy depends on the value of this parameter: for some values, the distribution does not have finite moments of all orders, hence the moment problem is not of interest in these cases. For other values, the distribution has moments of all orders, yet it is moment-indeterminate. Finally, a limiting case is supported on a bounded interval, and hence determined by its moments. For those generalized lognormal distributions that are moment-indeterminate, Stieltjes classes of moment-equivalent distributions are presented.

1 Introduction

The moment problem asks for a given distribution with distribution function (CDF) \(F\) with finite moments \(m_k (F) ~=~ \int ^\infty _{-\infty } x^k \ \text{ d}F(x)\) of all orders \(k = 1, 2, \dots , \) whether or not \(F\) is uniquely determined by the sequence of these moments. If \(F\) is uniquely determined by this sequence, \(F\) or a random variable \(X\) following this distribution are called moment-determinate (for brevity, M-det); otherwise \(F\) or \(X\) are called moment-indeterminate (M-indet). Cases where the support of the distribution \(F\) is the positive half-axis \(\mathbb R ^+ = [0, \infty )\) are called Stieltjes moment problems, cases where the support is the real line are called Hamburger moment problems, and cases where the support is a bounded interval are called Hausdorff moment problems.

Probably the most widely known example of an M-indeterminate distribution is the lognormal distribution, first described by Stieltjes [20] in a non-probabilistic setting and further developed by Heyde [8]. The lognormal distribution is a basic model for describing size phenomena in economics and related fields (see, e.g., [12]), including distributions of personal income, actuarial losses, or city sizes. It also arises in mathematical finance in the fundamental geometric Brownian motion model of asset price dynamics. Given the central role of the lognormal distribution in Stieltjes-type moment problems it is, therefore, of special interest to explore closely related distributions with respect to M-indeterminacy. Recently, Lin and Stoyanov [15] studied a generalization of the lognormal distribution derived from a skewed generalization of the normal distribution, finding that it is M-indeterminate for every value of the skewness parameter. The present paper explores a family of generalized lognormal distributions derived from a more classical symmetric generalization of the normal distribution, which compared to the normal distribution has an additional shape parameter. Like the classical lognormal distribution, this generalized version has been employed in financial economics as well as in modeling size distributions.

It turns out that this family of distributions sheds new light on the classical lognormal moment problem, in that M-determinacy now depends on the value of the shape parameter. Specifically, the family incorporates heavy-tailed distributions for which not all integer moments exist, moderately heavy-tailed distributions for which all moments exist yet the distributions are M-indeterminate, and, as a limiting case, a distribution with bounded support that is, therefore, determined by its moments. It also emerges that the classical lognormal distribution does not constitute an extreme case within the family: in the setting considered here, there exist more as well as less heavy-tailed M-indet distributions than the lognormal.

The paper is organized as follows: Sect. 2 provides some background on the generalized lognormal distribution. Section 3 contains a characterization of moment (in)determinacy for the family of generalized lognormal distributions in terms of their shape parameter, while Sect. 4 describes Stieltjes classes pertaining to the indeterminate cases. Section 5 concludes.

2 The Generalized Lognormal Distribution

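Being one of the basic distributions in probability and statistics, the normal distribution has triggered a number of generalizations. One such generalization is defined by the density

$$\begin{aligned} f(y) = \frac{1}{2 r^{1/r} \sigma \, \Gamma (1 + 1/r)} \exp \left\{ - \frac{1}{r \sigma ^r} | y - \mu | ^r \right\} , \quad y \in \mathbb R , \end{aligned} \tag{1}$$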

which includes the normal as the special case where \(r=2\). Here \(\mu \in \mathbb R \) is a location parameter and \(\sigma \in \mathbb R ^+\) is a scale parameter. The new parameter \(r \in \mathbb R ^+\) is a shape parameter measuring tail thickness, with lower values of \(r\) indicating heavier tails. The parameter \(r\) plays a crucial role below.

This distribution is fairly widely known; however, it is known under different names in different fields and it was (re)discovered several times in different contexts. Specifically, since \(r=2\) yields the normal distribution and \(r=1\) the Laplace distribution, the distribution (1) is known both as a generalized normal distribution, in particular in the Italian language literature [16, 27], and as a generalized Laplace distribution. It is also known as the normal distribution of order \(r\), again especially in the Italian literature (e.g., [29]), and as the generalized error distribution, notably in econometrics and finance (e.g., [17]). A further name is exponential power distribution [3], the name under which this distribution is presumably best known in the statistical literature. To the best of the author’s knowledge, the generalized form (1) was first proposed in a Russian journal by Subbotin [25], who sought an axiomatic basis for a generalized form of Gauss’s “law of error.” Hence the name Subbotin distribution is also in use, notably in econophysics (e.g., [1]). A multivariate generalization of (1) is the Kotz-type distribution [13].

In what follows we sometimes set \(\mu = 0\), since in the context of moment problems no extra generality is gained by including this location parameter. There exist different parameterizations of (1), notably regarding the scale parameter, but for the purposes of this paper the relevant parameter is \(r\), so this complication shall be ignored below.

The generalized lognormal distribution [28, 29], or perhaps logarithmic generalized normal distribution, is less widely known than the generalized normal distribution. In fact, most of the currently available works are written in Italian and published in Italian journals and collected volumes that are often not easily available outside of Italy. A more accessible source may be Kleiber and Kotz [12], Ch. 4.10, who summarize many basic properties. The distribution is defined as the distribution of \(X = \exp (Y)\), where \(Y\) follows Eq. (1), leading to the density

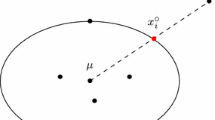

If a random variable \(X\) follows Eq. (2) this is denoted as \(X \sim \) GLN(\(\mu , \sigma , r\)). The distribution will sometimes be referred to as the generalized lognormal distribution of order \(r\) if further emphasis is needed. The case where \(r=2\) gives the classical lognormal distribution. In Eq. (2), \(e^{\mu }\) is a scale parameter, while \(\sigma \) and \(r\) are both shape parameters. The effect of the new parameter \(r\) is illustrated in Fig. 1. This Figure suggests that the density becomes more and more concentrated on a bounded interval with increasing \(r\). Specifically, for \(r=1.5\) the density is much like the classical lognormal density, but with slightly heavier tails, while for \(r=15\) several points of inflection and a more rapid decrease in the tails emerge. The limiting case where \(r \rightarrow \infty \) will also be explored below, see Theorem 3.
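As a minimal sketch of the density just described, the following Python function evaluates the GLN(\(\mu , \sigma , r\)) density. It assumes the parameterization in which the density of \(\ln X\) is proportional to \(\exp \{ -|y-\mu |^r / (r \sigma ^r) \}\) with normalizing constant \(1/(2 r^{1/r} \sigma \Gamma (1+1/r))\); the function names `gln_pdf` and `lognormal_pdf` are illustrative and not taken from the paper.

```python
import math


def gln_pdf(x, mu=0.0, sigma=1.0, r=2.0):
    """Density of GLN(mu, sigma, r) at x under the assumed parameterization
    f(x) = exp(-|ln x - mu|^r / (r sigma^r)) / (2 x r^(1/r) sigma Gamma(1 + 1/r))."""
    if x <= 0.0:
        return 0.0
    norm = 2.0 * r ** (1.0 / r) * sigma * math.gamma(1.0 + 1.0 / r)
    return math.exp(-abs(math.log(x) - mu) ** r / (r * sigma ** r)) / (x * norm)


def lognormal_pdf(x, mu=0.0, sigma=1.0):
    """Classical lognormal density, used only as a reference for the case r = 2."""
    if x <= 0.0:
        return 0.0
    z = (math.log(x) - mu) / sigma
    return math.exp(-0.5 * z * z) / (x * sigma * math.sqrt(2.0 * math.pi))


if __name__ == "__main__":
    # Far in the right tail, smaller r gives a larger density value.
    for r in (1.5, 2.0, 15.0):
        print(r, gln_pdf(50.0, r=r))
```

For \(r = 2\) the two functions agree, and evaluating `gln_pdf` far in the right tail for several values of \(r\) illustrates the ordering of tail thickness discussed above.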

Like the classical lognormal distribution, the generalized lognormal distribution has been employed in economics and finance. As mentioned above, it has been used as a model for the size distribution of personal incomes. In an application to Italian income data, Brunazzo and Pollastri [4] estimate \(r\) in the vicinity of 1.45, suggesting a model with even heavier tails than the classical lognormal distribution for their data. It will emerge below that their estimated model is not determined by its moments.

Perhaps more prominently, the distribution also arises in the widely used exponential GARCH (EGARCH) model of asset return dynamics [17], where it provides a more realistic specification of the innovation distribution in the volatility equation than the normal distribution. Recall that, in view of the exponential transformation employed in the EGARCH model, a widely used alternative to the normal distribution in GARCH modeling, the \(t\) distribution, leads to tails that are too heavy, in the sense that the distribution corresponding to the exponentiated random variable has no moments of any order. In contrast, it will emerge below that the less extreme members of the generalized lognormal distribution possess moments of all orders, yet they are M-indeterminate. Specifically, all models estimated by Nelson [17], with shape parameters \(r\) in the vicinity of 1.56–1.57, are not determined by their moments. More recent work (e.g., [26]) confirms that \(1 < r < 2\) is the empirically relevant range of the tail thickness parameter in this model. All of these objects are M-indeterminate.

3 Generalized Lognormal Distributions and the Moment Problem

How can one determine whether or not a given distribution with CDF \(F\) is determined by the sequence of its moments? Although necessary and sufficient conditions are known (see, e.g., [19]), they are not very practical. For M-determinacy, a sufficient condition is the existence of the moment generating function (MGF) \(m_X (t) \ = \ \mathbb E [e^{tX}] \ = \ \int _0 ^\infty e^{tx} \ \text{ d}F_X(x),\,|t| < t_0\), for some \(t_0 >0\).

From the expression for the density (2) of the generalized lognormal distribution it is immediate that, for any \(r \in \mathbb R ^+,\,\mathbb E [e^{t X}] = \infty \) for all \(t > 0\); hence the MGF does not exist. It remains to explore the existence of the moments themselves. (Note that in view of \(X > 0\) (a.s.) it is possible to consider moments of fractional order.) Without loss of generality, set \(\mu = 0\) since \(\exp (\mu )\) is a scale parameter. Substituting \(z = \ln x\) yields, for some \(C > 0\),

$$\begin{aligned} \mathbb E [X^k] = \int _0 ^\infty x^k f(x) \ \text{ d}x = C \int _{-\infty } ^\infty \exp \left\{ k z - \frac{1}{r \sigma ^r} | z | ^r \right\} \text{ d}z. \end{aligned} \tag{3}$$

This shows that convergence of the integral depends on the value of \(r\): for \(r > 1\) the integral is finite for all \(k\), for \(r = 1\) the condition \(|k| < 1/\sigma \) is needed, while for \(r < 1\) it does not converge for any \(k \ne 0\). The following proposition collects these observations:

Proposition 1

Suppose \(X \sim GLN(\mu , \sigma , r)\).

(a) The moment-generating function of \(X\) does not exist for any \(r \in (0, \infty )\).

(b) The \(k\)th moment \(\mathbb E [X^k]\) exists if and only if

- \(k = 0\), if \(r < 1\).

- \(|k| < 1/\sigma \), if \(r = 1\).

- \(k \in (-\infty , \infty )\), if \(r > 1\).
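The case distinction in part (b) can be written down directly, and for \(r > 1\) the moments can be approximated numerically. The sketch below assumes \(\mu = 0\) and a density of \(\ln X\) proportional to \(\exp \{ -|z|^r/(r\sigma ^r) \}\); the helper names and the truncation `half_width` are illustrative choices, and the truncated integral is only reliable when the integrand has decayed at the chosen bounds.

```python
import math


def moment_exists(k, sigma, r):
    """Proposition 1(b): does E[X^k] exist for X ~ GLN(mu, sigma, r)?"""
    if r < 1.0:
        return k == 0
    if r == 1.0:
        return abs(k) < 1.0 / sigma
    return True


def gln_moment(k, sigma=1.0, r=2.0, half_width=40.0, steps=200_000):
    """Trapezoidal approximation of E[X^k] for mu = 0 and r > 1, i.e. of the
    normalized integral of exp(k z - |z|^r / (r sigma^r)) over
    [-half_width, half_width] (an assumed truncation of the real line)."""
    if r <= 1.0:
        raise ValueError("this numerical sketch is only meant for r > 1")
    norm = 2.0 * r ** (1.0 / r) * sigma * math.gamma(1.0 + 1.0 / r)
    h = 2.0 * half_width / steps
    total = 0.0
    for i in range(steps + 1):
        z = -half_width + i * h
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * math.exp(k * z - abs(z) ** r / (r * sigma ** r))
    return total * h / norm


if __name__ == "__main__":
    # For r = 2 the result can be compared with the lognormal value exp(k^2 sigma^2 / 2).
    print(gln_moment(2.0, sigma=0.5, r=2.0), math.exp(0.5))
```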

Apart from the integral representation (3), it is also possible to obtain series expansions of the moments (when they exist). For \(r >1\), they are of the form

see Brunazzo and Pollastri [4] or Nelson [17] (Note 1).
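One way to see where a series of this kind comes from is sketched here. It assumes the parameterization in which \(Y = \ln X\) has density \(\exp \{ -|y-\mu |^r/(r\sigma ^r) \} / (2 r^{1/r} \sigma \Gamma (1+1/r))\), and it is a derivation under that assumption rather than a reproduction of the exact form given in [4] or [17]. Expanding the exponential, using the symmetry of \(Y - \mu \) (odd central moments vanish), and interchanging sum and expectation (which is permissible for \(r > 1\) since \(\mathbb E [e^{|k| |Y - \mu |}] < \infty \)) gives

$$\begin{aligned} \mathbb E [X^k] = e^{k \mu } \ \mathbb E [e^{k (Y - \mu )}] = e^{k \mu } \sum _{j=0} ^\infty \frac{k^{2j}}{(2j)!} \ \mathbb E [(Y - \mu )^{2j}], \qquad \mathbb E [|Y - \mu |^{m}] = \sigma ^m r^{m/r} \ \frac{\Gamma ((m+1)/r)}{\Gamma (1/r)}, \end{aligned}$$

so that

$$\begin{aligned} \mathbb E [X^k] = e^{k \mu } \sum _{j=0} ^\infty \frac{(k \sigma )^{2j}}{(2j)!} \ r^{2j/r} \ \frac{\Gamma ((2j+1)/r)}{\Gamma (1/r)}. \end{aligned}$$

As a check, for \(r = 2\) the \(j\)th term reduces to \((k^2 \sigma ^2 / 2)^j / j!\), and the series sums to the familiar lognormal moment \(\exp (k \mu + k^2 \sigma ^2 / 2)\).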

In view of Proposition 1 not all generalized lognormal distributions are of interest in the context of the moment problem. For \(r=1\), only some moments exist, for \(r < 1\) no moments exist. The cases where \(r < 1\), therefore, provide examples of distributions without any moments, integer or fractional. An earlier example was given by Kleiber [10]. For the remaining cases where \(1 < r < \infty \) all the moments are finite yet the MGF does not exist. These are circumstances under which M-indeterminacy may arise.

It remains to show that the distributions where \(1 < r < \infty \) are indeed M-indet. For M-indeterminacy, a useful sufficient condition is the Krein condition (e.g., [22]). In a Stieltjes-type moment problem, it requires, for a density \(f\) that is strictly positive for all \(x \ge a\), for some \(a > 0\), that the normalized logarithmic integral of the density

$$\begin{aligned} K_S[f] = \int _a ^\infty \frac{- \ln f(x^2)}{1 + x^2} \ \text{ d}x \end{aligned} \tag{4}$$

is finite. \(K_S[f]\) is called the Krein integral of \(f\).

The following Theorem shows that generalized lognormal distributions of orders \(1 < r < \infty \) are M-indeterminate:

Theorem 2

All generalized lognormal distributions \(GLN(\mu , \sigma , r)\) of order \(1 < r < \infty \) are M-indeterminate.

Proof

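Setting without loss of generality \(\mu = 0\) and \(\sigma = 1\), the Krein integral (4) is, for \(a > 0\) and \(C_r > 0\) the normalizing constant,

$$\begin{aligned} K_S[f] = \int _a ^\infty \frac{- \ln C_r + 2 \ln x + \frac{1}{r} | 2 \ln x | ^r}{1 + x^2} \ \text{ d}x. \end{aligned}$$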

Since for large \(x\) the integrand is eventually dominated by \(x^{-1-\delta }\), for any \(\delta \in (0,1)\), this integral is finite for all \(1 < r < \infty \), which gives the result. \(\square \)
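The finiteness claimed in the proof can be illustrated numerically. The sketch below assumes \(\mu = 0\), \(\sigma = 1\), \(a = 1\), and the normalizing constant \(C_r = 1/(2 r^{1/r} \Gamma (1+1/r))\); it substitutes \(x = e^u\), so that \(\text{d}x/(1+x^2) = \text{d}u/(2 \cosh u)\), and truncates the integral at \(x = e^{U}\). The function name and the truncation parameter are illustrative only.

```python
import math


def krein_integral(r, upper_log=30.0, steps=200_000):
    """Trapezoidal approximation of the Krein integral of the GLN(0, 1, r) density
    over [1, exp(upper_log)], computed in the variable u = ln x."""
    c_r = 1.0 / (2.0 * r ** (1.0 / r) * math.gamma(1.0 + 1.0 / r))

    def integrand(u):
        # -ln f(x^2) = -ln C_r + 2 ln x + (2 ln x)^r / r for x >= 1,
        # and dx / (1 + x^2) = du / (2 cosh u) when x = exp(u).
        return (-math.log(c_r) + 2.0 * u + (2.0 * u) ** r / r) / (2.0 * math.cosh(u))

    h = upper_log / steps
    total = 0.5 * (integrand(0.0) + integrand(upper_log))
    for i in range(1, steps):
        total += integrand(i * h)
    return total * h


if __name__ == "__main__":
    for r in (1.5, 2.0, 3.0):
        # Doubling the truncation point barely changes the value.
        print(r, krein_integral(r, 30.0), krein_integral(r, 60.0))
```

Doubling the truncation point leaves the value essentially unchanged for moderate \(r\), in line with the \(x^{-1-\delta }\) bound used in the proof. For large \(r\) the truncation point has to be increased, since the term \((2 \ln x)^r\) is dominated by \(x^2\) only for very large \(x\).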

Alternative proofs could employ results presented by Gut [7], Remark 6.2 or by Pakes, Hung and Wu [18], p. 110.

For \(X_i \sim \) GLN(\(\mu _i, \sigma _i, r_i\)), \(i = 1,2\), with \(r_i > 1\) and densities \(f_i\) it is easily seen that \(\lim _{x \rightarrow \infty } f_1 (x)/ f_2(x) = \infty \) iff \(r_1 < r_2\), hence the generalized lognormal distributions are, in a sense, “more M-indeterminate” for smaller \(r\). (Indeed, in view of Proposition 1 for \(r=1\) some moments no longer exist.) Specifically, the generalized lognormal distributions with \(1 < r < 2\) are even more extreme than the classical lognormal distribution (\(r=2\)). Also, the cases where \(2 < r < \infty \) are less extreme. It is also noteworthy that although the tails of the generalized lognormal distribution become lighter and lighter with increasing \(r\), the distribution is M-indet no matter how large \(r\). It is, therefore, natural to ask what happens in the limit, i.e., for \(r \rightarrow \infty \). The following Theorem addresses this case:

Theorem 3

For \(r \rightarrow \infty \), the generalized lognormal distribution GLN(\(\mu ,\sigma , r\)) tends to a distribution supported on a bounded interval. Hence this limiting distribution is M-det.

Proof

It is convenient to analyze the limiting case for the distribution of \(Y = \ln X\), i.e., the generalized normal distribution. Without loss of generality, set \(\mu = 0\) and \(\sigma = 1\). A random variable \(Y\) following a generalized normal distribution admits the mixture representation [5], p. 175

$$\begin{aligned} Y {\mathop {=}\limits ^{d}}U Z, \end{aligned} \tag{5}$$

where \(U\) is uniform on \([-1,1]\) and independent of \(Z\), and \(Z = r^{1/r} W^{1/r}\) with \(W \sim \) Ga(\(1+1/r, 1\)), i.e., a gamma distribution with scale 1 and shape parameter \(1+1/r\). Hence \(Z\) follows a generalized gamma (GG) distribution, specifically \(Z \sim \) GG(\(r, r^{1/r}, 1 + 1/r\)). The moments of \(Z\) are (see, e.g., [12], p. 151)

$$\begin{aligned} \mathbb E [Z^k] = r^{k/r} \ \frac{\Gamma (1 + 1/r + k/r)}{\Gamma (1 + 1/r)}. \end{aligned}$$

Now \(\lim _{r \rightarrow \infty } \mathbb E [Z^k] = 1\) for all \(k\), and it follows that \(Z = r^{1/r} W^{1/r}\) tends to a point mass at 1 by Fréchet-Shohat (e.g., [6], p. 81). Thus \(\lim _{r \rightarrow \infty } Y {\mathop {=}\limits ^{d}}U\), and the density of \(\exp (U)\) is given by

$$\begin{aligned} f(x) = \frac{1}{2x}, \quad e^{-1} \le x \le e. \end{aligned} \tag{6}$$

This distribution has compact support, hence it is determined by its moments. \(\square \)
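The convergence of the moments of \(Z\) used in the proof is easy to check numerically. The sketch below relies only on the gamma moment formula \(\mathbb E [W^s] = \Gamma (1 + 1/r + s)/\Gamma (1 + 1/r)\) for \(W \sim \) Ga(\(1+1/r, 1\)), applied with \(s = k/r\); the function names are illustrative. It also evaluates the moments \(\sinh (k)/k\) of \(\exp (U)\) for \(U\) uniform on \([-1,1]\), i.e., of the limiting distribution with \(\mu = 0\) and \(\sigma = 1\).

```python
import math


def z_moment(k, r):
    """E[Z^k] for Z = r^(1/r) W^(1/r) with W ~ Gamma(shape 1 + 1/r, scale 1),
    computed on the log scale for numerical stability."""
    log_moment = (
        (k / r) * math.log(r)
        + math.lgamma(1.0 + (k + 1.0) / r)
        - math.lgamma(1.0 + 1.0 / r)
    )
    return math.exp(log_moment)


def limit_moment(k):
    """E[exp(k U)] for U uniform on [-1, 1], which equals sinh(k) / k for k != 0."""
    return 1.0 if k == 0 else math.sinh(k) / k


if __name__ == "__main__":
    for r in (2.0, 10.0, 1e3, 1e6):
        print(r, [z_moment(k, r) for k in (1, 2, 3)])
    print([limit_moment(k) for k in (1, 2, 3)])
```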

Lunetta [16] provides an alternative derivation of the limiting distribution of the generalized normal distribution that analyzes the limit of its characteristic function. However, we prefer the approach involving a mixture representation presented here because it motivates further questions, on which more below.

Interestingly, Bomsdorf [2] observed that a distribution of the type described by Eq. (6) occurs as the distribution of prizes in lotteries, hence he calls it the prize competition distribution. Among other characteristics he also provides the MGF of this object.

4 Stieltjes Classes for M-Indeterminate Generalized Lognormal Distributions

The preceding section showed that generalized lognormal distributions of orders \(1 < r < \infty \) are M-indeterminate, by way of an existence proof. To round off the discussion, this section provides explicit examples of distributions that are equivalent, in the sense of having identical moments of all orders, to these indeterminate distributions.

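A Stieltjes class (a term coined by Stoyanov [23]) corresponding to a moment-indeterminate distribution \(F\) with density \(f\) is a set

$$\begin{aligned} S(f, p) = \left\{ f_\varepsilon (x) = f(x) [1 + \varepsilon \ p(x)], \ x \in \mathbb R ^+, \ \varepsilon \in [-1, 1] \right\} , \end{aligned}$$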

where \(p(x)\) is a perturbation function satisfying \(-1 \le p(x) \le 1\) and \(\mathbb E [X^k p(X)] = 0\) for all \(k = 0,1,2, \dots \).

It is possible to obtain Stieltjes classes for the generalized lognormal distributions of orders \(1 < r < \infty \) that generalize a recently derived Stieltjes class pertaining to the classical lognormal distribution. The construction of the required Stieltjes classes in the following Theorem is adapted from a construction presented by Stoyanov and Tolmatz [24], Theorem 3:

Theorem 4

Suppose \(X \sim GLN(\mu , \sigma , r)\) with density \(f_r,\,(\mu , \sigma , r) \in \mathbb R \times \mathbb R ^+ \times (1, \infty )\).

(a) The function

$$\begin{aligned} h_r(x) = \left\{ \begin{array}{ll} \sin \{ ( x - 1 )^{1/4} \} \exp \left\{ \frac{1}{r \sigma ^r } | \ln x - \mu | ^r + \ln x - ( x - 1 )^{1/4} \right\} ,&x > 1, \\ 0,&x \le 1, \end{array} \right. \end{aligned} \tag{7}$$

is bounded on \(\mathbb R ^+\) for all \((\mu , \sigma , r) \in \mathbb R \times \mathbb R ^+ \times (1, \infty )\), with \(\mathbb E [X^k h_r(X)] = 0\) for all \(k=0,1,2,\dots \).

(b) \(p_r {:}= h_r /H_r\), with \(H_r{:}=\sup _x |h_r(x)|\), defines a perturbation corresponding to \(f_r\).

(c) The family of functions \(f_{r,\varepsilon } (x) = f_r(x) [1 + \varepsilon \ p_r(x)],\,\varepsilon \in [-1, 1]\), defines a Stieltjes class comprising distributions whose moments are identical to those of \(f_r\) for any \(\varepsilon \in [-1, 1]\).

Proof

The function \(h_r\) is continuous on \((1, \infty )\), with \(\lim _{x \rightarrow 1^+} h_r(x) < \infty \) and \(\lim _{x\rightarrow \infty } h_r(x) = 0\), hence \(h_r\) is bounded on \(\mathbb R ^+\).

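By construction, with \(C_r > 0\) the normalizing constant of \(f_r\),

$$\begin{aligned} \mathbb E [X^k h_r(X)] = C_r \int _1 ^\infty x^k \sin \{ ( x - 1 )^{1/4} \} \exp \{ - ( x - 1 )^{1/4} \} \ \text{ d}x = 0 \end{aligned}$$

for \(k = 0,1,2, \dots \) in view of Lemma 1 of Stoyanov and Tolmatz [24] and the fact that

$$\begin{aligned} \int _0 ^\infty x^k \sin ( x^{1/4} ) \exp ( - x^{1/4} ) \ \text{ d}x = 0, \quad k = 0,1,2, \dots \end{aligned}$$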

This proves (a).

Since \(H_r {:}= \sup _x |h_r(x)| < \infty \) we may set \(p_r(x) = h_r(x) / H_r\), assuring \(|p_r(x)| \le 1\) for all \(x\). This gives (b). Finally, \(f_{r,\varepsilon } (x) = f_r(x) [1 + \varepsilon \ p_r(x)]\) defines a density for any \(\varepsilon \in [-1, 1]\), which gives (c). \(\square \)
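The vanishing of \(\mathbb E [X^k h_r(X)]\) can be illustrated numerically. Multiplying \(h_r\) from (7) by a density of the form \(f_r(x) = C_r x^{-1} \exp \{ -|\ln x - \mu |^r/(r \sigma ^r) \}\) (the form assumed here) cancels all dependence on \((\mu , \sigma , r)\) and leaves \(C_r \sin \{ (x-1)^{1/4} \} \exp \{ -(x-1)^{1/4} \}\) on \((1, \infty )\). The sketch below substitutes \(x = 1 + t^4\) and applies the trapezoidal rule; the function name, the truncation `t_max`, and the `with_sine` switch are illustrative choices.

```python
import math


def oscillating_integral(k, with_sine=True, t_max=80.0, steps=80_000):
    """Approximate the integral over (1, inf) of
        x^k sin((x - 1)^(1/4)) exp(-(x - 1)^(1/4)) dx
    via x = 1 + t^4, dx = 4 t^3 dt, truncated at t = t_max.
    With with_sine=False the sine factor is dropped, which shows the
    magnitude that the oscillation has to cancel."""
    h = t_max / steps

    def g(t):
        osc = math.sin(t) if with_sine else 1.0
        return 4.0 * t ** 3 * (1.0 + t ** 4) ** k * osc * math.exp(-t)

    interior = [g(i * h) for i in range(1, steps)]
    # fsum limits round-off in a sum with heavy cancellation.
    return h * (math.fsum(interior) + 0.5 * (g(0.0) + g(t_max)))


if __name__ == "__main__":
    for k in (0, 1, 2):
        print(k, oscillating_integral(k), oscillating_integral(k, with_sine=False))
```

The values with the sine factor are zero up to numerical error, while the corresponding integrals without it are large; this cancellation is what makes \(f_r [1 + \varepsilon p_r]\) share all integer moments with \(f_r\).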

It should be noted that the construction of Stoyanov and Tolmatz [24] is somewhat more general, in that the kernel \(k(x) {:}= (x-1)^{1/4}\) used here may be generalized to a three-parameter family of kernels defined by \(k(x;\xi , \delta , \beta ) {:}= (\delta x - \xi )^\beta \tan (\pi \beta )\), where \((\xi , \delta , \beta ) \in \mathbb R ^+ \times \mathbb R ^+ \times (0,1/2)\). Thus amending the kernel in this manner defines a four-parameter family of perturbations \(p_r(x;\xi , \delta , \beta )\) leading to Stieltjes classes that generalize the three-parameter family of Stieltjes classes for the classical lognormal distribution derived by Stoyanov and Tolmatz [24]. However, the Stieltjes class presented above already provides infinitely many distributions whose moments coincide with those of the generalized lognormal distribution.

In (7), the choice of \(\beta = 1/4\) was made because it is related to one of the classical examples of an M-indeterminate distribution that dates back to the pioneering work of Stieltjes [20]. Stieltjes considered the case where \(\xi = 0\) and the perturbation \(h(x) = \sin (x^{1/4}),\,x > 0\), used in the proof of part (a) of Theorem 4; it pertains to a certain generalized gamma distribution. Moreover, a shift \(\xi > 0\) is needed in (7), as otherwise the resulting object would exhibit a singularity at the origin, see also the discussion in Stoyanov and Tolmatz [24], Section 4.

5 Further Discussion and Concluding Remarks

The paper exhibited a family of distributions, occurring in economics and finance, that generalizes the lognormal distribution, the classical example of a moment-indeterminate distribution. It emerged not only that a large subfamily consists of moment-indeterminate distributions, but also that not all members share this property of the lognormal, for different reasons: some tails are so heavy that not enough moments exist, while a limiting case corresponds to a light-tailed distribution with compact support.

It may, therefore, be asked to what extent it is possible to characterize the generalized lognormal distributions with \(r=1\), i.e., the log-Laplace distributions (Note 2), for which \(\mathbb E [X^k] < \infty \) iff \(|k| < 1/\sigma \). If one leaves the classical setting of the moment problem, characterizations in terms of certain moments are possible. First, Theorem 1 of Lin [14] implies that characterizations in terms of fractional moments are feasible: for a sequence \(\{k_n \; | \; 0 < k_n < 1/\sigma ; n \in \mathbb N \}\) of positive and distinct numbers converging to some \(k_0 \in (0, 1/\sigma )\), the sequence \(\{ \mathbb E [X^{k_n}] \; | \; n \in \mathbb N \}\) of fractional moments characterizes the distribution. Second, observe that for \(r=1\) the first moment exists iff \(\sigma < 1\). It is well known that existence of the first moment permits characterization of the underlying distribution in terms of the triangular array of first moments of the associated order statistics, \(\{ \mathbb E [X_{k:n}] \; | \; k = 1,2, \ldots , n ; n \in \mathbb N \}\), where \( X_{1:n} \le X_{2:n} \le \ldots \le X_{n:n}\) are the order statistics in a sample of size \(n\). In fact, certain subsets of this array are already sufficient, see Huang [9] for a review. Such characterizations are meaningful in applications to income distribution [11], one of the fields where the generalized lognormal distribution has been employed. Note also that both characterizations, via fractional moments as well as via moments of order statistics, are available for all generalized lognormal distributions with \(r > 1\) since moments of arbitrary order exist in that case.
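For orientation, the fractional moments in the log-Laplace case can be written in closed form. The following is a sketch assuming \(\mu = 0\) and the parameterization in which \(Y = \ln X\) has the Laplace density \(e^{-|y|/\sigma }/(2\sigma )\), which is the case \(r = 1\) of the generalized normal density with normalizing constant \(1/(2 r^{1/r} \sigma \Gamma (1+1/r))\). Splitting the integral at the origin gives, for \(|k| < 1/\sigma \),

$$\begin{aligned} \mathbb E [X^k] = \frac{1}{2\sigma } \int _{-\infty } ^\infty e^{k y - |y|/\sigma } \ \text{ d}y = \frac{1}{2\sigma } \left( \frac{\sigma }{1 - k \sigma } + \frac{\sigma }{1 + k \sigma } \right) = \frac{1}{1 - k^2 \sigma ^2}, \end{aligned}$$

while the integral diverges for \(|k| \ge 1/\sigma \). In particular, the first moment equals \(1/(1-\sigma ^2)\) and is finite exactly when \(\sigma < 1\).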

It is natural to ask about M-determinacy of the more widely known distribution of \(\ln X\), the generalized error or Subbotin distribution (1). This is a Hamburger moment problem. The answer is already available in the literature, although not in a probabilistic setting: the family of generalized error distributions also admits M-indet examples, namely for \(r < 1\), and a Stieltjes class is given in Shohat and Tamarkin [19], p. 22.

It is also known that for some M-determinate distributions power transformations lead to M-indeterminacy and vice versa (e.g., [21]). The standard example is the generalized gamma distribution. For \(X \sim \) GLN(\(\mu , \sigma , r\)), it is easily seen that \(X^p \sim \) GLN(\(p\mu , p\sigma , r\)) for all \(p > 0\), showing that the distribution is closed under power transformations. Hence this well-known property of the classical lognormal distribution extends to the generalized version (2). Consequently, consideration of power transformations does not lead to new insights regarding the moment problem here.
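The closure property can be verified in one line. The sketch below assumes that \(Y = \ln X\) has density proportional to \(\exp \{ -|y - \mu |^r/(r \sigma ^r) \}\) with normalizing constant \(1/(2 r^{1/r} \sigma \Gamma (1+1/r))\). Since \(\ln X^p = pY\), the density of \(pY\) is, for \(p > 0\),

$$\begin{aligned} \frac{1}{p} \ f_Y \left( \frac{y}{p} \right) = \frac{1}{2 r^{1/r} (p \sigma ) \, \Gamma (1 + 1/r)} \exp \left\{ - \frac{1}{r \sigma ^r} \left| \frac{y}{p} - \mu \right| ^r \right\} = \frac{1}{2 r^{1/r} (p \sigma ) \, \Gamma (1 + 1/r)} \exp \left\{ - \frac{1}{r (p \sigma )^r} | y - p \mu | ^r \right\} , \end{aligned}$$

which is again of generalized normal form, with location \(p\mu \), scale \(p\sigma \), and unchanged shape \(r\). Because \(r\) is unchanged, the classification by \(r\) in Proposition 1 and Theorem 2 is unaffected by power transformations.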

However, it might be worthwhile to further explore aspects of the mixture representation (5). This representation is a special case of a general mixture representation for unimodal distributions known as Khinchine’s theorem. The exponentiated version states that \(\exp (Y) = \exp (U Z)\), i.e., a random variable following a generalized lognormal distribution can be obtained as the exponential of the product of a uniform and a transformed gamma random variable. It would be interesting to characterize the set of mixing distributions \(F_Z\) leading to indeterminate log-unimodal distributions.
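The exponentiated mixture representation also gives a simple way to simulate from the generalized lognormal distribution. The sketch below assumes that the representation extends to general location and scale as \(\ln X = \mu + \sigma U Z\), with \(U\) uniform on \([-1,1]\), independent of \(Z = (r W)^{1/r}\), and \(W \sim \) Ga(\(1+1/r, 1\)); the function name `sample_gln` is hypothetical, and Python's `random.gammavariate(alpha, beta)` is called with shape `alpha` and scale `beta`.

```python
import math
import random


def sample_gln(mu, sigma, r, rng):
    """Draw one variate X = exp(mu + sigma * U * Z) using the assumed
    location-scale extension of the mixture representation."""
    w = rng.gammavariate(1.0 + 1.0 / r, 1.0)  # W ~ Gamma(shape 1 + 1/r, scale 1)
    z = (r * w) ** (1.0 / r)                  # Z = r^(1/r) W^(1/r)
    u = rng.uniform(-1.0, 1.0)                # U ~ Uniform[-1, 1]
    return math.exp(mu + sigma * u * z)


if __name__ == "__main__":
    rng = random.Random(2014)
    logs = [math.log(sample_gln(0.3, 0.8, 2.0, rng)) for _ in range(40_000)]
    mean = sum(logs) / len(logs)
    var = sum((y - mean) ** 2 for y in logs) / len(logs)
    # For r = 2, ln X should be approximately N(0.3, 0.8^2).
    print(mean, var)
```

For \(r = 2\) the simulated values of \(\ln X\) should have mean close to \(\mu \) and variance close to \(\sigma ^2\), since \(\mathbb E [U^2] \, \mathbb E [Z^2] = (1/3) \cdot 3 = 1\) in that case.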

Notes

1. It should be noted that these works employ different parameterizations of the distribution. Also, Nelson [17] obtains expectations of somewhat more general objects. Setting \(\gamma = 0,\,p=0\), and \(\theta =1\) in his Theorem A1.2 yields the required moments. The resulting expressions can be shown to coincide with those presented by Brunazzo and Pollastri [4].

2. This question was raised by an anonymous reviewer.

References

1. Alfarano, S., Milaković, M., Irle, A., Kauschke, J.: A statistical equilibrium model of competitive firms. J. Econ. Dyn. Control 36, 136–149 (2012)

2. Bomsdorf, E.: The prize-competition distribution: a particular \(L\)-distribution as a supplement to the Pareto distribution. Stat. Pap. 18, 254–264 (1977)

3. Box, G.E.P., Tiao, G.: Bayesian Inference in Statistical Analysis. Addison-Wesley, Reading (1973)

4. Brunazzo, A., Pollastri, A.: Proposta di una nuova distribuzione: la lognormale generalizzata. In: Scritti in Onore di Francesco Brambilla, vol. 1. Bocconi Comunicazioni, Milan (1986)

5. Devroye, L.: Non-uniform Random Number Generation. Springer-Verlag, New York (1986)

6. Galambos, J.: Advanced Probability Theory, 2nd edn. Marcel Dekker, New York (1995)

7. Gut, A.: On the moment problem. Bernoulli 8, 407–421 (2002)

8. Heyde, C.C.: On a property of the lognormal distribution. J. R. Stat. Soc. Series B 25, 392–393 (1963)

9. Huang, J.S.: Moment problem of order statistics: a review. Int. Stat. Rev. 57, 59–66 (1989)

10. Kleiber, C.: A simple distribution without any moments. Math. Sci. 25, 59–60 (2000)

11. Kleiber, C., Kotz, S.: A characterization of income distributions in terms of generalized Gini coefficients. Soc. Choice Welfare 19, 789–794 (2002)

12. Kleiber, C., Kotz, S.: Statistical Size Distributions in Economics and Actuarial Sciences. Wiley, Hoboken (2003)

13. Kotz, S.: Multivariate distributions at a cross-road. In: Kotz, S., Ord, J.K., Patil, G.P. (eds.) Statistical Distributions in Scientific Work, vol. 1. D. Reidel Publishing Company, Dordrecht (1975)

14. Lin, G.D.: Characterizations of distributions via moments. Sankhyā A 54, 128–132 (1992)

15. Lin, G.D., Stoyanov, J.: The logarithmic skew-normal distributions are moment-indeterminate. J. Appl. Probab. 46, 909–916 (2009)

16. Lunetta, G.: Di una generalizzazione dello schema della curva normale. Annali della Facoltà di Economia e Commercio di Palermo 17, 237–244 (1963)

17. Nelson, D.B.: Conditional heteroskedasticity in asset returns: a new approach. Econometrica 59, 347–370 (1991)

18. Pakes, A.G., Hung, W.-L., Wu, J.-W.: Criteria for the unique determination of probability distributions by moments. Aust. N. Z. J. Stat. 43, 101–111 (2001)

19. Shohat, J.A., Tamarkin, J.D.: The Problem of Moments, revised edn. American Mathematical Society, Providence (1950)

20. Stieltjes, T.J.: Recherches sur les fractions continues. Annales de la Faculté des Sciences de Toulouse 8/9, 1–122, 1–47 (1894/1895)

21. Stoyanov, J.: Counterexamples in Probability, 3rd edn. Dover, New York (2013)

22. Stoyanov, J.: Krein condition in probabilistic moment problems. Bernoulli 6, 939–949 (2000)

23. Stoyanov, J.: Stieltjes classes for moment-indeterminate probability distributions. J. Appl. Probab. 41A, 281–294 (2004)

24. Stoyanov, J., Tolmatz, L.: Methods for constructing Stieltjes classes for M-indeterminate probability distributions. Appl. Math. Comput. 165, 669–685 (2005)

25. Subbotin, M.T.: On the law of frequency of error. Math. Sb. 31, 296–301 (1923)

26. Taylor, S.J.: Asset Price Dynamics, Volatility, and Prediction. Princeton University Press, Princeton (2005)

27. Vianelli, S.: La misura della variabilità condizionata in uno schema generale delle curve normali di frequenza. Statistica 23, 447–474 (1963)

28. Vianelli, S.: Sulle curve lognormali di ordine \(r\) quali famiglie di distribuzioni di errori di proporzione. Statistica 42, 155–176 (1982)

29. Vianelli, S.: The family of normal and lognormal distributions of order \(r\). Metron 41, 3–10 (1983)

Acknowledgments

I am grateful to Thomas Zehrt for helpful discussions and to an anonymous reviewer for a careful reading of an earlier draft.

Cite this article

Kleiber, C. The Generalized Lognormal Distribution and the Stieltjes Moment Problem. J Theor Probab 27, 1167–1177 (2014). https://doi.org/10.1007/s10959-013-0477-0

DOI: https://doi.org/10.1007/s10959-013-0477-0

Keywords

- Generalized error distribution

- Generalized lognormal distribution

- Lognormal distribution

- Moment problem

- Size distribution

- Stieltjes class

- Volatility model