Abstract

For an ergodic continuous-time birth and death process on the nonnegative integers, a well-known theorem states that the hitting time T 0,n starting from state 0 to state n has the same distribution as the sum of n independent exponential random variables. Firstly, we generalize this theorem to an absorbing birth and death process (say, with state −1 absorbing) to derive the distribution of T 0,n . We then give explicit formulas for Laplace transforms of hitting times between any two states for an ergodic or absorbing birth and death process. Secondly, these results are all extended to birth and death processes on the nonnegative integers with ∞ an exit, entrance, or regular boundary. Finally, we apply these formulas to fastest strong stationary times for strongly ergodic birth and death processes.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, we will study the distribution of passage time between any two states of an irreducible continuous-time birth and death process on the nonnegative integers {0,1,2,…}. This is an extension of a well-known theorem, which states that the passage time from state 0 to state d (<∞) has the same law as a sum of d independent exponential random variables with distinct rates. These rates are just the nonzero eigenvalues of the associated generator for the process absorbed at state d; cf. [3, 14, 16]. For historical comments, see Fill [10], Diaconis and Miclo [6], and references therein.

Very recently, Fill [10] gave a first stochastic proof for this theorem via duality. An excellent application of this theorem is to the distribution of fastest strong stationary time for an ergodic birth and death process on {0,1,…,d}. It is also the starting point of studying separation cut-off for birth and death processes in [7]. By a similar method, Fill [11] proved an analogous result for upward skip-free processes. Diaconis and Miclo [6] presented another probabilistic proof for birth and death processes, by using the “differential operators” for birth and death processes [8].

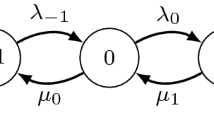

Consider a continuous-time birth and death process (X t ) t≥0 with generator Q=(q ij ) on E={0,1,2,…}. The (q ij ) are specified as follows:

Here {a i :i≥1} and {b i :i≥0} are two sequences of positive numbers, and a 0≥0. When a 0=0, state 0 is reflecting; when a 0>0, state 0 is not conservative, so by convention we can and do regard an extra state −1 as the absorbing state.

Let T i,n be the hitting time of state n starting from state i. A well-known theorem is the following (see [10, Theorem 1.1]).

Theorem 1.1

Let \(\lambda_{1}^{(n)}<\cdots<\lambda_{n}^{(n)}\) be all (positive) n eigenvalues of −Q (n), where

Then T 0,n is distributed as a sum of n independent exponential random variables with rate parameters \(\{\lambda_{1}^{(n)}, \ldots,\lambda_{n}^{(n)} \}\). That is,

We will investigate the distribution of hitting time T i,n for birth and death process (X t ) on the nonnegative integers. This includes four cases:

- Case I::

-

0≤i<n<∞;

- Case II::

-

0≤n<i≤N<∞, where N is a reflecting state;

- Case III::

-

0≤i<n=∞;

- Case IV::

-

0≤n<i≤∞.

Cases I and II can be easily deduced from Theorem 1.1. See Corollaries 2.1 and 2.2 in the next section.

When we deal with a birth and death process on E, we will first face the classification of infinity state ∞ concerning uniqueness of the corresponding Q-process. The classification of Q-process in (1.1) was due to Feller [8]. See also [2, Chap. 8] for more details. According to [8], there are four types of ∞ boundary: regular, exit, entrance, and natural boundaries.

Define

and \(\mu=\sum_{i=0}^{\infty}\mu_{i}\). Then (μ i ,i≥0) is the unique (up to scaling) invariant measure.

Let us also define

The ∞ boundary is called exit if R<∞,S=∞; entrance if R=∞,S<∞; regular if R<∞,S<∞; natural if R=∞=S. From [2, Sect. 8.1] we know that a necessary and sufficient condition for the boundary at ∞ to be an exit boundary is

a necessary and sufficient condition for the boundary at ∞ to be an entrance boundary is

Another difficulty for an infinite birth and death process is obviously determining all the eigenvalues or the spectrum of the generator. By the spectral theory established in [18] and [19], we can overcome this difficulty. Briefly speaking, we give distributions of T 0,∞ (the lifetime) for the minimal birth and death process corresponding to Q when ∞ is an exit boundary. We will use approximation by letting n→∞ to derive the distribution of T 0,∞ from that of T 0,n in Theorem 1.1. To deal with the eigenvalues for birth and death processes in infinite state spaces, we should utilize the powerful theory of Dirichlet forms. Dirichlet forms help one to obtain the variational formulas for eigenvalues and, more importantly, provides the approximation procedure. A similar situation appears when ∞ is an entrance boundary, from a view point of the duality method in [14]. The duality method was used successfully in [5] to study the estimation of the principal eigenvalue for birth and death processes.

To end this section, we mention the Dirichlet form associated to a birth and death process. Let

and  . Let

. Let

Then  is called regular if

is called regular if  is dense in

is dense in  with respect to the Dirichlet norm \(\Vert\cdot\Vert_{D}: \Vert f\Vert_{D}^{2}=\mu(f^{2})+D(f)\); see [4, Sects. 6.7–6.8]. From [4, Corollary 6.62] we know that the symmetric Q-process is unique if and only if

with respect to the Dirichlet norm \(\Vert\cdot\Vert_{D}: \Vert f\Vert_{D}^{2}=\mu(f^{2})+D(f)\); see [4, Sects. 6.7–6.8]. From [4, Corollary 6.62] we know that the symmetric Q-process is unique if and only if  is regular. Here the symmetric process Q-process means the transition function p

ij

(t) corresponding to Q satisfies μ

i

p

ij

(t)=μ

j

p

ji

(t) for all i,j,t. This means that for Q-matrix defined by (1.1), whenever

is regular. Here the symmetric process Q-process means the transition function p

ij

(t) corresponding to Q satisfies μ

i

p

ij

(t)=μ

j

p

ji

(t) for all i,j,t. This means that for Q-matrix defined by (1.1), whenever  is regular, the minimal process is the only symmetric Q-process.

is regular, the minimal process is the only symmetric Q-process.

It is proven in [5, Proposition 1.3] that  is regular if and only if

is regular if and only if

We remark that when ∞ is an exit or entrance boundary, the Dirichlet form is regular. For natural boundary (R=∞,S=∞), the situation is different. Although the Dirichlet form is unique, we will face the essential spectrum problem. In this case, the essential spectrum of the generator in L 2 may be nonempty. Thus, the formula for natural boundary may be totally different from Theorems 4.6 and 5.5 in this paper.

For regular boundary (R<∞,S<∞), the problem is that the Dirichlet form is not regular. Since now the corresponding Q-processes are not unique, we can do this in two ways. Firstly, we deal with the minimal process. In this case, we face the similar situation as in case of exit boundary. Secondly, we can deal with the “maximal” process. According to [4, Proposition 6.56] or [24, Theorem 1 in Sect. 13.2], this “maximal” process is the unique honest symmetric Q-process, which looks like a process with entrance boundary.

The rest of paper is organized as follows. In Sect. 2, we derive (Cases I and II) the distributions of hitting times T i,n for an ergodic finite birth and death process from Theorem 1.1. In Sect. 3, we give the distributions of hitting times for an absorbing finite birth and death process. In Sect. 4, we give (Case III) the distribution of the life time for a birth and death process with ∞ an exit boundary, starting from any i≥0. In Sect. 5, we give (Case IV) the distributions of T i,n (i>n) for a birth and death process with ∞ an entrance boundary. In Sect. 6, the distribution of (fastest) strong stationary time is derived. Finally in Sect. 7, we deal with the minimal and “maximal” processes, respectively, for a Q-matrix with regular boundary.

We remark that for one-dimensional diffusion process on a finite interval, Kent [17] investigated a similar problem and proved that the canonical measure corresponding to the distribution of a hitting time between two states can be identified with a spectral measure corresponding to the generator of the absorbing diffusion process.

2 Finite State Space with a 0=0

2.1 Case I

Let us first solve Case I from Theorem 1.1.

Corollary 2.1

For 0≤i<n<∞,

In particular,

Proof

Since T 0,n =T 0,i +T i,n by the skip-free property of birth and death process and T 0,i ,T i,n are independent by the strong Markov property, (2.1) follows immediately from Theorem 1.1.

Equation (2.2) follows by Theorem 1.1 and T 0,n =T 0,i +T i,n , or from (2.1) by a standard method to derive the moments from the Laplace transform. □

We remark that (2.2) is called the eigentime identity for absorbed birth and death processes; see [19]. It is different from that in [1, Chap. 3], where the eigentime identity for an ergodic finite Markov chain concerns the average hitting time. In [18], this kind of eigentime identity for continuous-time Markov chains on a countable state space was studied.

2.2 Case II

For 0≤n<N<∞, let \(\hat{\lambda}_{n,1}^{(N)}<\hat{\lambda}_{n,2}^{(N)}< \cdots<\hat{\lambda}_{n,N-n}^{(N)}\) be positive eigenvalues of \(-\hat{Q}_{n}^{(N)}\), where

This is the generator of the birth and death process on {n+1,…,N} with reflecting state N before the process reaches state n, which can be considered an absorbing state.

By using the mapping j↦j′=N−j on the state space, we can easily obtain the following results from Theorem 1.1 and Corollary 2.1.

Corollary 2.2

For 0≤n<i≤N<∞,

In particular,

and

Proof

The second equality in (2.4) can be found on [2, p. 264]. □

We remark that Miclo [21] gave via duality the distribution of hitting times for finite Markov chains by using a probabilistic method, which is close to that used in Fill [10]. As suggested kindly by a referee, it is interesting to study these problems for general Markov chains in infinite state spaces, as we have done in Theorems 4.6 and 5.5.

3 Finite State Space with a 0>0

In this section, we will study the hitting time distribution for absorbing finite space and give the answers for Case I and Case II.

In [10], Fill used a clever trick, duality method, to give a probabilistic proof for Theorem 1.1. This duality method was further used in [11] to establish a corresponding theorem for the skip-free process. See also Miclo [21]. In this subsection, we will give the distribution of T 0,n for the birth and death process when a 0>0, that is, for the process which is no longer conservative at state 0. Luckily, by modifying Fill’s duality method, we can finally carry out the result in Theorem 3.1 below.

Now fix n≥1 and assume that a 0>0. Set

Let 0=λ 0<λ 1<⋯<λ n−1<λ n be n+1 eigenvalues of −G, and denote by P(t) the probability transition function corresponding to G. Note that states n and −1 are absorbing states for P(t).

Let μ i be defined by (1.4), and further define

Theorem 3.1

With the notations given above, the hitting time T 0,n has the Laplace transform

To prove Theorem 3.1, we modify the argument in [10]. Define

Then we have the following lemma, see [10, Theorem 5.3].

Lemma 3.2

Define M 0=I and, for 1≤i≤n,

Let Λ=(Λ(i,j)) be Λ(i,j)=M i (0,j),0≤i,j≤n. Then

We remark that \(\prod_{k=0}^{i-1}(G+\lambda_{n-k}I)\) above are called spectral polynomials. See [10, 20]. Λ given in Lemma 3.2 is a little different from that given in [10]. In [10], Λ is a lower triangle stochastic matrix, but Λ in Lemma 3.2 is really substochastic. These polynomials, together with matrix Λ, satisfy the following properties.

Proposition 3.3

-

(i)

The matrices M i ,1≤i≤n, are nonnegative and substochastic.

-

(ii)

The matrix Λ is also nonnegative and substochastic. Actually, the sum of its ith row strictly decreases with i, and it is lower triangular with

$$\varLambda(0,0)=1,\qquad \varLambda(k,k)=\frac{b_0\cdots b_{k-1}}{\lambda_{n}\cdots\lambda_{n-k+1}}>0,\quad 1\le i\le n, $$which implies that Λ is nonsingular. So G and \(\hat{G}\) are similar.

Proof

(i) Let q=max0≤i<n {a i +b i } and A=G+q. Then A is nonnegative with eigenvalues \(\lambda^{A}_{i}=-\lambda_{i}+q\) and spectral polynomials satisfying

By [20, Theorem 3.2], the left side of the above equation is nonnegative, from which we get the nonnegativity of M i . In addition, from the definition in Lemma 3.2 it is easy to check by induction that the sum of every row for M i is not greater than 1, and thus each M i is substochastic.

(ii) From the definition in Lemma 3.2 we can derive the assertion by direct calculation. □

Lemma 3.4

where \(\hat{T}_{0,n}\) is the hitting time of n starting from 0 for the pure birth process \(\hat{P}(t)\) with generator \(\hat{G}\).

Proof

By Lemma 3.2 we have

Consider the (0,n)-entry of each side of above equality. Because Λ is a lower triangular matrix with Λ(0,0)=1, we have that

and

So,

To get Λ(n,n), let t→∞ in the above equation. Since \(\mathbb{P}(\hat{T}_{0,n}\le t)\rightarrow1\), we have

Note that T 0,n <∞ if and only if T 0,−1=∞. So,

It follows from [23, Theorem 3 in Sect. 5.1] that

This completes the proof. □

Lemma 3.4 is ready for the proof of Theorem 3.1. Let \(\hat{T}_{i,i+1}\) be the passage time from i to i+1 for \(\hat{P}(t)\). Since \(\hat{T}_{0,n}=\sum_{i=0}^{n-1}\hat{T}_{i,i+1}\) and \(\{\hat{T}_{i,i+1} \}\) are independent and distributed exponentially, we have

Next, we will give the distribution of T i,n for \(i\not=0\). For 1≤i<n, let \(\{\lambda_{\nu}^{(i)},1\le\nu\le i \}\) be the eigenvalues of −Q (i), where

This is the probability matrix of the process on {0,1,…,i−1} before the birth and death process P(t) reaches i.

The following conclusion is for the distribution of T i,n , the proof of which is similar to that of Corollary 2.2.

Corollary 3.5

For 0≤i<n, we have

and the eigentime identity holds:

Let us consider the hitting time T i,−1(0≤i<n). We use the same argument as in Case II by mapping j↦j′=n−j. For 0≤i<n−1, let \(\{{\lambda}_{\nu}^{(i,n)},1\le\nu\le n-1-i \}\) be the eigenvalues of −Q (i,n), where

Then we can easily obtain the following results from Theorem 3.1 and Corollary 3.5.

Corollary 3.6

and

Now we will consider the absorbing time, that is, the hitting time starting from i (0≤i<n) to the set {−1,n}. Let T

i

:=T

i,−1∧T

i,n

. Since either T

i,−1<∞ or T

i,n

<∞, we have  . Then we can derive the distribution of T

i

.

. Then we can derive the distribution of T

i

.

Theorem 3.7

For the birth and death process with generator (3.1), the absorbing time T i :=T i,−1∧T i,n has the Laplace transform

and the eigentime identity holds:

4 Case III: ∞ Is an Exit Boundary

In this section, we will derive the lifetime distribution for the minimal birth and death process when a 0=0 and Q-processes are not unique. We recall some facts about the uniqueness of a birth and death process, see, for example, [2, 4]. Then we study spectral theory for the minimal birth and death process. Spectral theory helps us to pass from finite states to infinite states; in particular, we will establish what are the limits of eigenvalues for finite birth and death processes as the state space goes to infinity.

Assume that a 0=0. When R<∞, the corresponding Q-processes are not unique; for details, see [2, Chap. 8] or [4, Chap. 4]. Let (X t ,t≥0) be the corresponding continuous-time Markov chain with minimal Q-function P(t)=(p ij (t)) on E, that is,

with ζ=lim n→∞ ξ n the lifetime, where ξ n are the epochs of successive jumps:

Proposition 4.1

-

(i)

When starting from state i, the lifetime ζ equals lim n→∞ T i,n a.s.

-

(ii)

Assume that R<∞. We have ℙ i [ζ=∞]=0 for any i∈E and \(\mathbb{E}_{0} \zeta=R\).

Proof

-

(i)

Let X 0=i. Note that ζ is the (first) time that the process has jumped infinitely many times. For any state n, before T i,n the process jumps only finite times, so that ζ≥T i,n for any n. Thus, ζ≥lim n→∞ T i,n a.s. Conversely, since the birth and death process jumps once to two nearest neighbors, we have ξ n ≤T i,n for any n. Thus, ζ=lim n→∞ ξ n ≤lim n→∞ T i,n a.s.

- (ii)

□

Denote by L 2(μ) the usual (real) Hilbert space on E. Then it is well known that Q (n),Q,P(t) are self-adjoint operators on L 2(μ). For a self-adjoint operator A on L 2(μ), denote by σ(A) and σ ess(A), respectively, the spectrum and the essential spectrum of A. Here, the essential spectrum consists of continuous spectrum and eigenvalues with infinite multiplicity. When σ ess(Q)=∅, denote by λ 1<λ 2<⋯ all the eigenvalues of −Q. Actually, all the eigenvalues under consideration in this paper are simple; for this, see Theorem 4.4 below.

The following result in [19] is our starting point of spectral theory for the minimal birth and death process.

Theorem 4.2

Assume that R<∞,S=∞, i.e., assume that ∞ is an exit boundary. Then P(t) is a Hilbert–Schmidt operator for any t>0, σ ess(Q)=∅, and

From the above equality we see that the spectrum has no accumulation point and can be ordered.

To get the distribution of lifetime, we need the following minimax principle for eigenvalues for birth and death processes. This extends the classical Courant–Fischer theorem for finite symmetric matrices. For the Courant–Fischer theorem, see, for example, [12, p. 149].

Proposition 4.3

Assume that σ ess(Q)=∅. Let λ ν (ν≥1) and \(\lambda_{\nu}^{(n)} (1\le\nu\le n)\) be eigenvalues for −Q in (1.1) and −Q (n) in (1.2), respectively. Then

-

(a)

for ν≥1,

$$ \lambda_\nu=\sup_{f^{(1)},\ldots,f^{(\nu-1)}\in L^2(\mu)}\inf \bigl\{D(f): \mu \bigl(f^2\bigr)=1\text{ \textit{and} } \mu\bigl(ff^{(i)}\bigr)=0 \text{ \textit{for} }1\le i<\nu \bigr\}, $$(4.1)where μ(g):=∑ i∈E μ i g i ;

-

(b)

for 1≤ν≤n,

(4.2)

(4.2)

Proof

-

(a)

We first prove the assertion for λ ν . Let e (ν) be the corresponding eigenfunction for λ ν . Then

$$\lambda_\nu=\inf \bigl\{D(f):\mu\bigl(f^2\bigr)=1 \text{ and } \mu \bigl(fe^{(j)}\bigr)=0 \text{ for } 1\le j<\nu \bigr\}. $$For arbitrary f∈L 2(μ), since \(f=\sum_{j=1}^{\infty}\mu (fe^{(j)})e^{(j)}\) and −Qe (j)=λ j e (j), we have

$$D(f)=\mu\bigl((-Qf)f\bigr)=\sum_{j=1}^\infty \lambda_j\mu\bigl(fe^{(j)}\bigr)^2, $$so that for f (i)∈L 2(μ)(1≤i<ν),

$$\begin{aligned} &\inf \bigl\{D(f):\mu\bigl(f^2\bigr)=1 \text{ and } \mu \bigl(ff^{(i)}\bigr)=0 \text{ for } 1\le i<\nu \bigr\} \\ &\quad{}=\inf \Biggl\{\sum_{j=1}^\infty \lambda_j\mu\bigl(fe^{(j)}\bigr)^2:\mu \bigl(f^2\bigr)=1 \text{ and } \mu\bigl(ff^{(i)}\bigr)=0 \text{ for } 1\le i<\nu \Biggr\} \\ &\quad{}\le\inf \Biggl\{\sum_{j=1}^\nu \lambda_j\mu\bigl(fe^{(j)}\bigr)^2:\mu \bigl(f^2\bigr)=1,\mu\bigl(ff^{(i)}\bigr)=0\ (1\le i<\nu), \mu\bigl(fe^{(j)}\bigr)=0\\ &\quad{}\phantom{\le\inf \Biggl\{}(j>\nu ) \Biggr\} \\ &\quad{}\le\sup \Biggl\{\sum_{j=1}^\nu \lambda_j\mu\bigl(fe^{(j)}\bigr)^2:\mu \bigl(f^2\bigr)=1 \text{ and } \mu\bigl(fe^{(j)}\bigr)=0 \text{ for } j>\nu \Biggr\} \\ &\quad{}=\lambda_\nu. \end{aligned} $$Therefore,

$$ \lambda_\nu\ge\sup_{f^{(1)},\ldots,f^{(\nu-1)}\in L^2(\mu)}\inf \bigl\{D(f):\mu \bigl(f^2\bigr)=1 \text{ and } \mu\bigl(ff^{(i)}\bigr)=0 \text{ for }1\le i<\nu \bigr\}. $$(4.3)If we choose f i =e (i),1≤i<ν, then

$$\begin{aligned} \text{RHS~(4.3)}&\ge\inf \bigl\{D(f):\mu\bigl(f^2 \bigr)=1 \text{ and } \mu\bigl(fe^{(i)}\bigr)=0 \text{ for } 1\le i<\nu \bigr\}=D\bigl(e^{(\nu)}\bigr) \\ &=\lambda_\nu=\text{LHS~(4.3)}; \end{aligned} $$thus, equality holds in (4.3).

-

(b)

For f|[n,∞)=0,

$$ D(f)=\sum_{i=0}^{n-2}\mu_ib_i(f_i-f_{i+1})^2+ \mu_{n-1}b_{n-1}f_{n-1}^2=:D^{(n)}(f); $$(4.4)then it is easy to check that D (n)(f) is the Dirichlet form for Q (n) in (1.2). The rest of proof is the same as above. □

As pointed out in Sect. 1, when ∞ is an exit boundary,  is a regular Dirichlet form. That is,

is a regular Dirichlet form. That is,  is the closure of

is the closure of  with respect to Dirichlet norm \(\Vert\cdot\Vert_{D}: \Vert f\Vert_{D}^{2}=\mu(f^{2})+D(f)\). Therefore, as is done in [4, Theorem 9.11], when ∞ is an exit boundary, it follows from Proposition 4.3 that

with respect to Dirichlet norm \(\Vert\cdot\Vert_{D}: \Vert f\Vert_{D}^{2}=\mu(f^{2})+D(f)\). Therefore, as is done in [4, Theorem 9.11], when ∞ is an exit boundary, it follows from Proposition 4.3 that

Theorem 4.4

Assume that R<∞,S=∞, i.e., assume that ∞ is an exit boundary. Then, for each ν≥1, we have

Moreover, all eigenvalues {λ ν ,ν≥1} of −Q are distinct (each of multiplicity one).

Proof

The proof will be split into three steps:

-

(a)

\(\lambda_{\nu}^{(n)}\downarrow\alpha_{\nu}(\text{say}) \text{as} n\uparrow \infty\);

-

(b)

α ν =λ ν ;

-

(c)

the eigenvalues {λ ν ,ν≥1} are distinct.

(a) We use Proposition 4.3 to prove the monotonicity for \(\lambda_{\nu}^{(n)}\). For any fixed 1≤ν<n and f (1),…,f (ν−1)∈L 2(μ), if f is such that f|[n,∞)=0 and μ(f 2)=1 and μ(ff (i))=0 for 1≤i<ν, then f|[n+1,∞)=0 and μ(f 2)=1 and μ(ff (i))=0 for 1≤i<ν, so that

and \(\lambda_{\nu}^{(n)}\ge\lambda_{\nu}^{(n+1)}\) for 1≤ν<n. This proves the monotonicity. Thus, the limit \(\lim_{n\rightarrow\infty }\lambda_{\nu}^{(n)}=:\alpha_{\nu}\) exists for any ν≥1.

(b) On the one hand, it follows from Proposition 4.3 that \(\lambda_{\nu}\le\lambda_{\nu}^{(n)}\) for n≥1, so λ ν ≤α ν . On the other hand, from Corollary 2.1 we have

Thus, it follows from Proposition 4.1 and the monotone convergence theorem that

But we already know from Theorem 4.2 that

Since λ ν ≤α ν for all ν≥1, it must hold that λ ν =α ν for every ν≥1.

(c) Next, we will prove that all eigenvalues {λ ν ,ν≥1} of −Q are distinct. For this, we only need to prove that the eigenspace for λ ν is of dimension one. Indeed, let −λ be an eigenvalue, and g a corresponding eigenfunction. From (Qg)(i)=−λg i ,i≥0, we have

Since μ i b i =μ i+1 a i+1 for i≥0, it follows from (4.7) that

This means that the eigenfunction g is determined uniquely once g 0 is given. □

Remark 4.5

For the first eigenvalue (ν=1) corresponding to the minimal process, Chen [5] proved that λ 1 is defined by the classical Poincaré variational formula:

without the assumption σ ess(Q)=∅.

The following is the main theorem of this section.

Theorem 4.6

Assume that ∞ is an exit boundary. Let ζ be the lifetime for the minimal process. Then

That is,

where \(Y_{\nu}\sim\operatorname{Exp}(\lambda_{\nu})\) (for 1≤ν<∞) are independent. Moreover, for any i≥0, let T i,∞=lim n→∞ T i,n . Then

Proof

We will prove the first assertion, while the second assertion then follows by independence as in Corollary 2.1.

Recall ζ=lim n→∞ T 0,n when X 0=0. So,

Take the logarithm of each side of above equation, and then by the monotone convergence theorem we can get that

that is,

□

By a similar argument, we can deal with the case a 0>0. Since the boundary ∞ now can be considered as an absorbing state, we can extend the results in Sect. 3 to infinite state space case. The details are then omitted here.

5 Case IV: ∞ Is an Entrance Boundary

In this section we will deal with Case IV for a birth and death process with ∞ an entrance boundary, i.e., R=∞,S<∞, and the corresponding Q-process is unique.

Since S<∞ and \(S\ge\frac{1}{\mu_{0}b_{0}}\sum_{j\ge1}\mu_{j}\), we have μ=∑ j≥0 μ j <∞. Let π i =μ i /μ. Then π:=(π i ,i≥0) is a probability measure on E, and the process is reversible with respect to π. Now we will consider the spectral theory for operators on the Hilbert space L 2(π).

For n≥0, let

be the generator of the birth and death process on {n+1,n+2,…} before the process reaches state n.

Let \(\hat{\pi}^{(n)}:=(\pi_{i}:i>n)\) and \(\hat{E}_{n}:= \{ n+1,n+2,\ldots \}\). It is easy to check that \(\hat{Q}_{n}\) is symmetric with respect to \(\hat{\pi}{(n)}\) and then that \(\hat{Q}_{n}\) is a self-adjoint operator in \(L^{2}(\hat{E}_{n},\hat{\pi}^{(n)})\). When \(\sigma_{\mathrm{ess}}(\hat{Q}_{n})=\emptyset\), denote by \(\hat{\lambda}_{n,1}<\hat{\lambda}_{n,2}<\cdots\) all the positive eigenvalues of \(-\hat{Q}_{n}\). By a similar proof as in Theorem 4.4, we see that each eigenvalue is of multiplicity one. When n=0, the subscript 0 is dropped.

Theorem 5.1

Assume that R=∞,S<∞, i.e., assume that ∞ is an entrance boundary. Then \(\sigma_{\mathrm{ess}}(\hat{Q}_{n})=\emptyset\), and for any n≥0,

Proof

It follows from [18, Theorem 1.4] that σ ess(Q)=∅, where Q is defined by (1.1). We can view \(\hat{Q}_{n}\) as an operator on L 2(E,π) by adding zero columns or rows; then \(Q-\hat{Q}_{n}\) is of finite rank. So their essential spectra are the same and empty (see, for example, [15, Theorem 5.35]).

Now we prove the identity in (5.2). For i,j>n, let

Let \(\hat{X}_{t}^{(n)}\) be the jump process with transition matrix \((\hat{p}^{(n)}_{ij}(t))\). By a similar method as in the proof of [19, Theorem 1.4], we can get that

where \(\hat{\tau}_{i}^{+}:=\inf \{t\ge\text{the first jump time}:\hat{X}^{(n)}_{t}=i \}\) is the return time to state i.

Now fix i>n, and we will calculate \(\mathbb{P}_{i}[\hat{\tau}_{i}^{+}=\infty ]\). Assume that \(\hat{X}^{(n)}_{0}=i\). Note that once \([\hat{\tau}_{i}^{+}=\infty]\) happens, \(\hat{X}_{t}^{(n)}\) must first jump to state i−1. If \(\hat{X}_{t}^{(n)}\) jumps first to state i+1, starting from state i+1, it will be back to state i eventually since the original Q-process X t is ergodic (see [4, Theorem 4.55]). Next when it comes to state i−1, \(\hat{X}_{t}^{(n)}\) must arrive at state n before it arrives at state i. Thus, we have

and by [23, Theorem 3 in Sect. 5.1]

So,

Finally, since S n ≤S<∞, we have \(\sum_{\nu\ge1}\hat{\lambda}_{n,\nu}^{-1}<\infty\). □

As in the last section, we also need the minimax principle for eigenvalues of \(-\hat{Q}_{n}\).

Proposition 5.2

For 0≤n<N<∞, let f have domain {n+1,…,N}, and

where \(\hat{Q}_{n}^{(N)}\) is defined in (2.3). Then for 1≤ν≤N−n,

Proof

The proof is direct and is omitted. □

Proposition 5.3

Assume that σ ess(Q)=∅. Let \(\hat{\lambda}_{n,\nu}\) be eigenvalues for \(-\hat{Q}_{n}\) in (5.1). Then, for ν≥1,

Proof

Note that for f such that f [0,n]=0, we have \(D(f)=\sum_{i=n+1}^{\infty}\mu_{i}b_{i}(f_{i}-f_{i+1})^{2}+\mu_{n}b_{n}f_{n+1}^{2}=\mu(-f\hat{Q}_{n}f)\), which is the Dirichlet form for \(\hat{Q}_{n}\). The rest of proof is similar to that of Proposition 4.3. □

We will make use of an approximation procedure as N→∞. For this purpose, we need to do more.

Fix n≥0 and define

and  . Define

. Define

with the domain  consisting of the functions in the closure of

consisting of the functions in the closure of  with respect to the norm \(\Vert\cdot\Vert_{D}: \Vert f\Vert_{D}^{2}=\mu(f^{2})+D(f)\).

with respect to the norm \(\Vert\cdot\Vert_{D}: \Vert f\Vert_{D}^{2}=\mu(f^{2})+D(f)\).

Since ∞ is an entrance boundary, the Dirichlet form is regular as explained in Sect. 1, and we can rewrite (5.4) as

This leads to the following:

Theorem 5.4

Assume that R=∞,S<∞, i.e., that ∞ is an entrance boundary. Then, for each ν≥1, we have

Proof

(a) Let  , and assume that f

[N+1,∞)=d. Then

, and assume that f

[N+1,∞)=d. Then

Here, in \(D_{n}^{(N)}(f)\), f is viewed as a function on {n+1,…,N}. From this we can easily deduce the monotonicity of \(\hat{\lambda}_{n,\nu}^{(N)}\) in N.

(b) On the one hand, it follows from (5.3) and (5.6) that \(\hat{\lambda}_{n,\nu}\le\hat{\lambda}_{n,\nu}^{(N)}\) for N≥1, from which we can get that \(\hat{\lambda}_{n,\nu }\le \lim_{N\rightarrow\infty}\hat{\lambda}_{n,\nu}^{(N)}=:\alpha_{n,\nu}\).

On the other hand, Eq. (2.4) in Corollary 2.2 states that

from which by letting N→∞ we have

But, for any ν≥1, we know that \(\hat{\lambda}_{n,\nu}\le \alpha_{n,\nu}\), and Theorem 5.1 asserts that

Thus, it must hold that \(\hat{\lambda}_{n,\nu}=\alpha_{n,\nu }=\lim_{N\rightarrow\infty}\hat{\lambda}_{n,\nu}^{(N)}\) for every ν≥1. □

The following theorem is the main result of this section.

Theorem 5.5

Assume that the birth and death process is such that ∞ is an entrance boundary.

-

(a)

For n≥1, we have

$$ \mathbb{E}e^{-sT_{n,0}}= \frac{\prod_{\nu=1}^\infty\frac{\hat{\lambda}_\nu }{s+\hat{\lambda}_{\nu}}}{\prod_{\nu=1}^\infty\frac{\hat{\lambda}_{n,\nu }}{s+\hat{\lambda}_{n,\nu}}},\quad s\ge0. $$(5.7) -

(b)

Let T ∞,0=lim n→∞ T n,0. Then

$$ \mathbb{E}e^{-sT_{\infty,0}}= {\prod _{\nu=1}^\infty\frac{\hat{\lambda}_\nu }{s+\hat{\lambda}_{\nu}}},\quad s\ge0. $$(5.8)

Proof

Recall that the numerator and denominator are well defined because the corresponding sums of inverse of eigenvalues are finite.

-

(a)

By using the monotone convergence theorem, we can get (5.7) from Theorem 5.4 and Corollary 2.2 immediately.

-

(b)

To pass from (5.7) to (5.8), we only need to show that

$$\prod_{\nu=1}^\infty \frac{\hat{\lambda}_{n,\nu }}{s+\hat{\lambda}_{n,\nu}}\rightarrow1\quad \text{as } n\rightarrow\infty. $$Using the inequality log(1+x)≤x (x≥−1), we have

$$-\log\prod_{\nu=1}^\infty \frac{\hat{\lambda}_{n,\nu }}{s+\hat{\lambda}_{n,\nu}}=\sum_{\nu=1}^\infty-\log \frac{\hat{\lambda}_{n,\nu }}{s+\hat{\lambda}_{n,\nu}}= \sum_{\nu=1}^\infty\log \biggl[1+ \frac{s}{\hat{\lambda}_{n,\nu}}\biggr] \le\sum_{\nu=1}^\infty \frac{s}{\hat{\lambda}_{n,\nu}}. $$Then Eq. (5.2) in Theorem 5.1 implies that

$$-\log\prod_{\nu=1}^\infty \frac{\hat{\lambda}_{n,\nu }}{s+\hat{\lambda}_{n,\nu}}\le s S_n. $$Since

$$S_n=\sum_{j=n}^\infty \frac{1}{\pi_jb_j}\sum_{i=j+1}^\infty \pi_i\le \sum_{j=0}^\infty \frac{1}{\pi_jb_j}\sum_{i=j+1}^\infty \pi_i=S<\infty, $$we have that S n converges monotonically to 0 as n→∞. That is,

$$\prod_{\nu=1}^\infty \frac{\hat{\lambda}_{n,\nu }}{s+\hat{\lambda}_{n,\nu}}\rightarrow1\quad \text{as } n\rightarrow\infty. $$

□

For the entrance boundary case, the distributions of T i,n for 0≤i,n≤∞ are all known. When 0≤i<n<∞, the distribution of T i,n is given by Corollary 2.1, while when 0≤i<n=∞, we have T i,∞=∞ a.s. since the lifetime ζ equals ∞ a.s. (see [2, Chap. 8]). When 0≤n<i≤∞, the distribution of T i,n is given by Theorem 5.5.

6 Application to the Fastest Strong Stationary Time

In this section, we apply the theorems to strong stationary times. We give the distribution of fastest strong stationary times.

First of all, we recall some facts about strong ergodicity for birth and death processes. A Markov transition function P(t)=(p ij (t)) is called strongly ergodic if

Let η be the average hitting time, \(\eta=\sum_{ij}\pi_{i}\pi_{j} \mathbb{E}T_{i,j}\); see [1, Chap. 3]. From [2, Chap. 8] we can eventually calculate that (cf. [18])

In the following theorem, we summarize facts about strong ergodicity.

Theorem 6.1

Assume that the process is unique (i.e., R=∞). The following statements are equivalent.

-

(i)

The boundary ∞ is an entrance boundary.

-

(ii)

S<∞.

-

(iii)

The process is strongly ergodic.

-

(iv)

η<∞.

-

(v)

σ ess(Q)=∅ and \(\sum_{\nu\ge 1}\lambda_{\nu}^{-1}<\infty\).

Furthermore, when R=∞ and σ ess(Q)=∅, then

Proof

The equivalence of (i) and (ii) is from the definition, and that of (ii) and (iii) was proved in [25]. The other equivalences and assertions in (6.1) were proved in [18]. □

We remark that (6.1) is an eigentime identity, which generalizes the eigentime identity for finite Markov chains in [1, Chap. 3]. For an ergodic birth and death process on {0,1,…,N}, (6.1) becomes

where \(\{\lambda^{(N)}_{\nu}:1\le\nu\le N \}\) are the corresponding positive eigenvalues, and \(\mu^{(N)}=\sum_{i=0}^{N}\mu_{i}\).

Now we study the distribution of a fastest strong stationary time for a strongly ergodic birth and death process. A strong stationary time (SST) is a randomized stopping time τ for (X t ) such that X τ has the distribution π and is independent of τ.

With the aid of Theorem 4.6 and a duality established in [9], we can obtain the following result, which extends the result in [11, Theorem 1.4(a)] in the special case of a birth and death chain to the denumerable case.

Theorem 6.2

For a strongly ergodic birth and death process (i.e., R=∞,S<∞) started at state 0, any fastest SST τ has distribution

where {λ ν :ν≥1} are the positive eigenvalues of −Q, and Q is the generator in (1.1).

Proof

We follow an argument in [9, Sect. 3.3] with minor modifications. Let

Then 1=H 0≤H i ≤∑ k μ k =μ<∞ for i=0,1,2,… .

Define a “dual” Q ∗-birth and death process with parameters \((a^{*}_{i},b_{i}^{*})\) given by

Also,

Noting that H i ≥H 0=1, we have

and as 1=H 0≤H j ≤H i (0≤j≤i),μ i b i =μ i+1 a i+1,i≥0, we have

Next,

Thus, the minimal Q ∗-process has an exit boundary at ∞. It follows from [9, Sect. 3.3] that this Q ∗-process is a strong stationary dual for the Q-process (cf. [9, Definition 1]). So τ has the same distribution as the lifetime ζ ∗ (the time to reach ∞) for the Q ∗-process started from 0. Applying Theorem 4.6, we have

where \(\{\lambda_{\nu}^{*},\nu\ge1 \}\) is the spectrum of −Q ∗. By Lemma 6.3 below, we have \(\{\lambda_{\nu}^{*},\nu\ge 1 \}= \{\lambda_{\nu},\nu\ge1 \}\). This completes the proof. □

Lemma 6.3

Let Q,Q ∗ be as above, and \(\{\lambda_{\nu},\nu\ge1 \}, \{\lambda_{\nu}^{*},\nu\ge1 \}\) be the positive eigenvalues of −Q in L 2(μ) and −Q ∗ in L 2(μ ∗), respectively. Then \(\{\lambda_{\nu}^{*},\nu\ge1 \}= \{\lambda_{\nu},\nu\ge1 \}\).

Proof

We will prove the assertion on finite state space and then use the approximation procedure to derive the final assertion.

-

(a)

We will prove that \(-\hat{Q}^{(n)}\) and −Q ∗(n) are similar, where

$$ \hat{Q}^{(n)}:=\left ( \begin{array}{c@{\quad}c@{\quad}c@{\quad}c@{\quad}c@{\quad}c@{\quad}c} -b_{0} & b_{0} & 0& 0 & \cdots& 0& 0 \\ a_{1} & -(a_{1}+b_{1}) & b_{1} & 0& \cdots& 0& 0 \\ \vdots& \vdots& \vdots& \vdots& \vdots& \vdots& \vdots\\ 0 & 0 & 0& 0 & \cdots& a_{n} & -a_{n} \\ \end{array} \right ) $$(6.5)and

$$Q^{*(n)}:=\left ( \begin{array}{c@{\quad}c@{\quad}c@{\quad}c@{\quad}c@{\quad}c@{\quad}c} -b_0^* & b_0^* & 0 & \cdots& 0& 0 & 0\\ \vdots& \vdots& \vdots& \vdots& \vdots& \vdots& 0\\ 0 & 0 & 0& \cdots& a_{n-1}^* & -(a_{n-1}^*+{ b_{n-1}^*})& 0 \\ 0 & 0 & 0& 0 & 0 & 0 & 0 \\ \end{array} \right ). $$Define the link matrix Λ=(Λ ij )0≤i,j≤n as Λ ij =1[j≤i] μ j /H i . It is a routine to check that \(\varLambda\hat{Q}^{(n)}=Q^{*(n)}\varLambda\); cf. [10, Sect. 3] or [9, Sect. 3.A]. Λ is a lower triangle matrix, and its determinant is positive, and thus Λ is invertible. So \(-\hat{Q}^{(n)}\) and −Q ∗(n) are similar.

-

(b)

Note that the eigenvalues of \(\hat{Q}^{(n)}\) or Q ∗(n) are distinct, as we proved in Theorem 4.4. Let \(0=\lambda^{(n)}_{0}<\lambda^{(n)}_{1}<\cdots<\lambda^{(n)}_{n}\) and \(0=\lambda^{*(n)}_{0}<\lambda^{*(n)}_{1}<\cdots<\lambda^{*(n)}_{n}\) be the eigenvalues of \(-\hat{Q}^{(n)}\) and −Q ∗(n), respectively. We have proved in (a) that \(\lambda^{(n)}_{\nu}=\lambda^{*(n)}_{\nu}~(1\le\nu\le n)\).

Since the Q ∗-process has ∞ as an exit boundary, it follows from Theorem 4.4 that

$$\forall\nu\ge1,\quad \text{we have } \lambda^{*(n)}_\nu\downarrow \lambda^*_\nu\quad \text{as } n\uparrow\infty. $$To complete the proof, we need only show that

$$ \forall\nu\ge1,\quad \text{we have } \lambda^{(n)}_\nu \downarrow \lambda_\nu\quad \text{as } n\uparrow\infty. $$(6.6)This can be proved by a similar argument as in Theorem 5.4 (in the case n=−1).

□

7 ∞ Is a Regular Boundary

Roughly speaking, the regular boundary is an interline between the exit boundary and the entrance boundary. Since for Q with the regular boundary, the corresponding transition functions are not unique, so we will next consider the minimal one and the “maximal” one. The minimal transition function behaves like a process with exit boundary, whilst the “maximal” one behaves like a process with entrance boundary.

7.1 The Minimal Process

As we explained just above, the minimal transition function behaves like a process with exit boundary, so we will follow the notations in Sect. 4. For simplicity, we will only present the distribution of lifetime for the minimal process when a 0=0.

Assume that a 0=0. Let ζ be the lifetime of the minimal process P min(t) with

Since R<∞, ζ<∞ a.s. From [19, Theorem 1.3] we know that P min(t) is a Hilbert–Schmidt operator for any t>0 and σ ess(L min)=∅. Here, L min is the generator of P min(t) in L 2(π), and π=(π i :i≥0):=(μ i /μ:i≥0).

Let Q (n) be as in (1.2). Q (n) is the generator of the process before hitting state n, and the corresponding process P (n)(t)=(p ij (t):i,j∈E) is given by

Note that for i≥n or j≥n, \(p_{ij}^{(n)}(t)=0\).

Denote again by \(\lambda_{\nu}^{(n)} (1\le\nu\le n)\) all eigenvalues of −Q (n). As proved in Theorem 4.4, for any ν≥1, \(\lambda_{\nu}^{(n)}\) is decreasing in n, and the limit is denoted by α ν . Then we can derive as in Sect. 4 the distribution of lifetime for the minimal process.

Theorem 7.1

Assume that ∞ is a regular boundary, i.e., R<∞,S<∞. Let ζ be the lifetime for the minimal process. Then

Remark 7.2

We remark that {α

ν

} is the spectrum of L

min. This minimal generator has domain smaller than  , which is the domain of the generator L

max for the “maximal” process in L

2(π). For example, since S<∞, \(\mu=\sum_{0}^{\infty}\mu_{i}<\infty\), so that 1∈L

2(π), and 0 is an eigenvalue of L

max. However, 0 is not an eigenvalue of L

min because α

ν

>0 by Remark 4.5.

, which is the domain of the generator L

max for the “maximal” process in L

2(π). For example, since S<∞, \(\mu=\sum_{0}^{\infty}\mu_{i}<\infty\), so that 1∈L

2(π), and 0 is an eigenvalue of L

max. However, 0 is not an eigenvalue of L

min because α

ν

>0 by Remark 4.5.

We have the following relation between P min(t) and P (n)(t). We prove that the process P (n)(t) converges in total variance to the minimal process.

Proposition 7.3

Assume that R<∞.

-

(1)

For any i≥0 and t≥0,

$$ \lim_{n\rightarrow\infty}\sum_{j\in E}\bigl|p^{\min }_{ij}(t)-p_{ij}^{(n)}(t)\bigr|=0. $$(7.2) -

(2)

Let \(\phi_{ij}^{\min}(\lambda)\) and \(\phi_{ij}^{(n)}(\lambda)\) be the Laplace transforms of \(p^{\min}_{ij}(t)\) and \(p_{ij}^{(n)}(t)\), respectively.

$$ \lim_{n\rightarrow\infty}\sup_{i}\sum _{j\in E}\bigl|\phi^{\min }_{ij}(\lambda )- \phi_{ij}^{(n)}(\lambda)\bigr|=0. $$(7.3)

Proof

-

(1)

Note that T i,n ≤ζ. We have \(p^{\min }_{ij}(t)\ge p_{ij}^{(n)}(t)\) and

$$\begin{aligned} \sum_{j\in E}\bigl|p^{\min}_{ij}(t)-p_{ij}^{(n)}(t)\bigr|&= \sum_{j\in E}p^{\min}_{ij}(t)-\sum _{j\in E}p_{ij}^{(n)}(t) \\ &=\mathbb{P}_i[t<\zeta]-\mathbb{P}_i[t<T_{i,n}]= \mathbb{P}_i[T_{i,n}\le t<\zeta ]\rightarrow0 \end{aligned} $$as n→∞, since with probability 1, lim n→∞ T i,n =ζ<∞.

-

(2)

Since \(p^{\min}_{ij}(t)\ge p_{ij}^{(n)}(t)\), we have \(\phi^{\min}_{ij}(\lambda)\ge\phi_{ij}^{(n)}(\lambda) \), so

(7.4)

(7.4)By the skip-free property, T 0,n =T 0,i +T i,n and T 0,i ,T i,n are independent, so for i≤n,

$$\mathbb{E}_i e^{-\lambda T_{i,n}}=\mathbb{E}_0 e^{-\lambda T_{0,n}}/\mathbb{E}_0 e^{-\lambda T_{0,i}}. $$As ζ=lim n→∞ T i,n , we have, for i≤n,

$$\mathbb{E}_i e^{-\lambda\zeta}=\mathbb{E}_0 e^{-\lambda\zeta }/\mathbb{E}_0 e^{-\lambda T_{0,i}}. $$Since \(\mathbb{E}_{0} e^{-\lambda T_{0,i}}\) is decreasing in i, it follows from (7.4) that for i≤n,

$$\begin{aligned} \sup_{i\le n}\sum_{j\in E}\bigl| \phi^{\min}_{ij}(\lambda)-\phi_{ij}^{(n)}( \lambda)\bigr|&=\sup_{i\le n}\frac{\mathbb{E}_0 e^{-\lambda T_{0,n}}-\mathbb{E}_0 e^{-\lambda\zeta}}{\lambda\mathbb{E}_0 e^{-\lambda T_{0,i}}} =\frac{\mathbb{E}_0 e^{-\lambda T_{0,n}}-\mathbb{E}_0 e^{-\lambda \zeta }}{\lambda\mathbb{E}_0 e^{-\lambda T_{0, n}}} \\ &=\frac{1}{\lambda}\biggl[1-\frac{\mathbb{E}_0 e^{-\lambda\zeta}}{\mathbb{E}_0 e^{-\lambda T_{0, n}}}\biggr]\rightarrow0 \text{\quad as $n \rightarrow\infty$.} \end{aligned} $$As for i≥n, T i,n =0, from (7.2) and (7.4) we have

$$\begin{aligned} \sup_{i\ge n}\sum_{j\in E}\bigl| \phi^{\min}_{ij}(\lambda)-\phi_{ij}^{(n)}( \lambda)\bigr|&=\sup_{i\ge n}\frac{1}{\lambda}\bigl[1-\mathbb{E}_i e^{-\lambda\zeta }\bigr] \\ &=\frac{1}{\lambda}\bigl[1-\mathbb{E}_n e^{-\lambda\zeta}\bigr]=\int _0^\infty e^{-\lambda t}\mathbb{P}_n[t<\zeta], \end{aligned} $$which tends to zero as n→∞ since \(\lim_{n\rightarrow\infty}\mathbb{E}_{n}\zeta=0\).

□

7.2 The “Maximal” Process

We now turn to the “maximal” process. In contrast to the minimal case, the “maximal” process is much more complicated. There are two ways to construct the “maximal” process: one is the probabilistic method in [24, Chap. 13], and another is via Dirichlet form theory in [13], see also [4, Proposition 6.56]. Here we will use the construction in [23]. We prove that the “maximal” process is just the limit process of a series of reflecting birth and death processes on {0,1,…,n} as n goes to infinity. Actually, we prove that the convergence is in operator norm in L 2(π) for the Laplace transform for these processes. Thus, we can use the standard perturbation theory for linear operators to derive the distribution for hitting times of the “maximal” process. For the argument, the reader is urged to refer [15, Chap. IV].

Assume that a 0=0. Let ζ be the lifetime for the minimal process as in Sect. 7.1. For λ>0, let \(X_{i}(\lambda)=\mathbb{E}_{i}e^{-\lambda\zeta}\), and \(\phi^{\min }_{ij}(\lambda)=\int_{0}^{\infty}e^{-\lambda t}p^{\min}_{ij}(t)\,dt\) be the Laplace transform for the minimal transition function \(p^{\min}_{ij}(t)\).

Following [24, Chap. 13], when R<∞,S<∞, there exists a unique reversible and honest Q-function P(t)=(p ij (t):i,j∈E), and its Laplace transform Ψ(λ)=(ψ ij (λ):i,j∈E)(λ>0) has the following form:

where μ i is defined by (1.4). It also satisfies both Kolmogorov backward and forward equations.

In the following, we will give a similar expression as in (7.5) connecting the process absorbed at state n and the process reflected at state n on state space {0,1,…,n}.

Theorem 7.4

Let \((p_{ij}^{(n)}(t))\) be the absorbing process defined in (7.1), and \(\phi_{ij}^{(n)}(\lambda)\) be its Laplace transform. Then the Laplace transform of the process reflected at state n can be expressed as follows: for 0≤i,j≤n,

where \(X^{(n)}_{i}(\lambda)=1-\lambda\sum_{k=0}^{n-1}\phi^{(n)}_{ik}(\lambda )=\mathbb{E}_{i}e^{-\lambda T_{i,n}}\).

Proof

It is easy to see that for all λ>0,0≤i,j≤n,

and the resolvent equation holds: Ψ (n)(λ)−Ψ (n)(μ)+(λ−μ)Ψ (n)(λ)Ψ (n)(μ)=0 for all λ,μ>0, where \(\varPsi^{(n)}(\lambda)=(\psi^{(n)}_{ij})\). Then according to [2, Sect. 1.3], we only need to show that \({\psi_{ij}^{(n)}(\lambda)}\) satisfies the following Q-condition for the reflecting process with generator \(\hat{Q}^{(n)}\) in (6.5):

The proof of (7.7) is based on the following facts:

-

(1)

Since \(\phi_{ij}^{(n)}(\lambda)\) is the absorbing process with generator (3.1), we have

$$ \lim_{\lambda\rightarrow\infty}\lambda\bigl(\lambda\phi^{(n)}_{ij}( \lambda)-\delta_{ij}\bigr)= \left \{ \begin{array}{l@{\quad}l} a_i & \hbox{for $1\le i\le n-1, j=i-1$;} \\ -(a_i+b_i) & \hbox{for $0\le i=j\le n-2$;} \\ b_i & \hbox{for $0\le i\le n-2, j=i+1$;} \\ -b_{n-1} & \hbox{for $i=j=n-1$;}\\ 0 & \hbox{otherwise.} \end{array} \right . $$(7.8) -

(2)

By definition (\(X^{(n)}_{i}(\lambda)=1-\lambda\sum_{k=0}^{n-1}\phi^{(n)}_{ik}(\lambda)\)), we get \(X_{n}^{(n)}(\lambda)=1\) and for 0≤i≤n−1,

$$ \lim_{\lambda\rightarrow\infty}X^{(n)}_i(\lambda)=1-\sum _{k=0}^{n-1}\lim_{\lambda \rightarrow\infty}\lambda \phi^{(n)}_{ik}(\lambda)=1-\sum_{k=0}^{n-1} \delta_{ik}=0, $$(7.9)and then

(7.10)

(7.10)-

(a)

Prove (7.7) for i,j≤n−1. This follows from (7.8) and for i,j≤n−1,

$$\lim_{\lambda\rightarrow\infty}\frac{\lambda X^{(n)}_i(\lambda)X^{(n)}_j(\lambda)\mu_j}{ \sum_{k=0}^n\mu_kX^{(n)}_k(\lambda)}= \lim_{\lambda\rightarrow\infty} \frac{\lambda X^{(n)}_i(\lambda)X^{(n)}_j(\lambda)\mu_j}{ \mu_n+\sum_{k=0}^{n-1}\mu_kX^{(n)}_k(\lambda)}=0. $$ -

(b)

Prove (7.7) for i=n,j≤n−1. Since \(\lambda\phi^{(n)}_{nj}(\lambda)=0\) and \(X_{n}^{(n)}(\lambda)=1\), by (7.10) we have

-

(c)

Prove (7.7) for i≤n−1,j=n. As in (b) above, we have

-

(d)

Prove (7.7) for i=j=n:

$$\begin{aligned} \lim_{\lambda\rightarrow\infty}\lambda\bigl(\lambda\psi^{(n)}_{nn}( \lambda)-\delta_{nn}\bigr)&= \lim_{\lambda\rightarrow\infty}\lambda\biggl[ \frac{\mu_n}{ \mu_n+\sum_{k=0}^{n-1}\mu_kX^{(n)}_k(\lambda)}-1\biggr] \\ &=-\lim_{\lambda\rightarrow\infty}\frac{\lambda\sum_{k=0}^{n-1}\mu_kX^{(n)}_k(\lambda)}{ \mu_n+\sum_{k=0}^{n-1}\mu_kX^{(n)}_k(\lambda)} \\ &=-\frac{b_{n-1}\mu_{n-1}}{\mu_n}=-a_n. \end{aligned} $$

-

(a)

□

Using Theorem 7.3 and Theorem 7.4, we can prove that the “maximal” process P(t) is the limit of the process \(\widetilde{P}^{(n)}\) reflected at state n as n goes infinity.

Proposition 7.5

Let ψ ij (λ) be given by (7.5), and \(\psi_{ij}^{(n)}(\lambda)\) by (7.6). Then, for λ≥0,

Proof

By Proposition 7.3, it suffices to prove that lim n→∞sup i ∑ j∈E B ij =0, where

Since \(1\ge X^{(n)}_{i}(\lambda)\downarrow X_{i}(\lambda)\), we have

which implies that

Noting that ∑ j μ j <∞ and recalling (7.3), the assertion follows by the dominated convergence theorem. □

From Proposition 7.5 we can get the approximation of eigenvalues of the “maximal” process by the processes that are reflected at state n.

Theorem 7.6

The essential spectrum of generator L max for the “maximal” process in L 2(π) is empty, and for each ν≥1, we have

where \(\{\lambda^{(n)}_{\nu},0\le\nu\le n \}\) and {λ ν ,ν≥0} are the eigenvalues of \(-\hat{Q}^{(n)}\) in (6.5) and −L max, respectively, both in increasing order with ν.

Proof

Let (L ∞,∥⋅∥) be the space of bounded functions on E. Then it is easy to check that when matrix A=(a ij ) is seen as an operator on L ∞,

Now fix λ>0. First, we rewrite (7.11) in Proposition 7.5 as

when Ψ(λ)=(ψ ij (λ)) and \(\varPsi^{(n)}(\lambda)=(\psi_{ij}^{(n)}(\lambda))\) are seen as operators on L ∞. Since \(\varPsi(\lambda)=(\psi_{ij}(\lambda)), \varPsi^{(n)}(\lambda )=(\psi_{ij}^{(n)}(\lambda))\) are bounded in L 1(π), an application of the Riesz–Thorin interpolation theorem (see [22, Theorem IX.17]) implies that

This means that Ψ(λ) is approximated in operator norm by a sequence of finite-rank and hence compact operators Ψ (n)(λ), so Ψ(λ) is compact, which implies that its spectrum is countable with 0 the unique accumulation point and other eigenvalues with finite multiplicity. Note that Ψ(λ) is just the resolvent operator (λ−L max)−1. By [15, Theorem III-6.29] we have σ ess(L max)=∅.

Now we prove the second assertion. As proved in Theorem 4.4, for any ν≥0, \(\lambda_{\nu}^{(n)}\) is decreasing in n, and the limit is denoted by α ν . We will prove that α ν =λ ν for ν≥0. In fact, by the spectral mapping theorem, the spectra of Ψ(λ), Ψ (n)(λ) are respectively {0}∪{β ν ,ν≥0} and \(\{0 \}\cup \{\beta_{\nu}^{(n)},0\le\nu\le n \}\), where β ν =(λ+λ ν )−1, \(\beta_{\nu}^{(n)}=(\lambda+\lambda_{\nu}^{(n)})^{-1}\); see [15, Problem III-6.16]. Since \(\beta_{\nu}^{(n)}\uparrow \gamma_{\nu}:=(\lambda+\alpha_{\nu})^{-1}\) as n↑∞, we only need to show that γ ν =β ν for ν≥0.

Indeed, on the one hand, by (7.12) and the upper semicontinuity of the spectrum for bounded operators (see [15, Remark IV-3.3]), we have γ ν ∈{β ν ,ν≥0}. On the other hand, by the continuity of finite system of eigenvalues in [15, IV-Sect. 3.5], for any fixed ν≥0, there exists a sequence \(\{\beta^{(n)}_{k(n)},n\ge0 \}\), where \(\beta^{(n)}_{k(n)}\) is the k(n)th (in decreasing order) eigenvalue of Ψ (n)(λ), that \(\beta^{(n)}_{k(n)}\rightarrow\beta_{\nu}\) as n→∞. Thus, if we take \(\varepsilon=\frac{1}{2}\min \{\beta_{\nu -1}-\beta_{\nu},\beta_{\nu}-\beta_{\nu+1} \}\), then there exists N∈ℤ+ such that for any n>N, \(|\beta^{(n)}_{k(n)}-\beta_{\nu}|<\varepsilon\) but \(|\beta^{(n)}_{k(n)}-\beta_{\nu\pm 1}|>\varepsilon\). Moreover, by the fact that \(\beta_{\nu}^{(n)}\uparrow\gamma_{\nu}\) for any ν≥0, we can find an integer n 0 large enough such that \(|\beta^{(n_{0})}_{k(n_{0})}-\gamma_{k(n_{0})}|<\varepsilon\). Since β ν is the unique eigenvalue of Ψ(λ) in the interval \((\beta^{(n_{0})}_{k(n_{0})}-\varepsilon,\beta^{(n_{0})}_{k(n_{0})}+\varepsilon)\), we have \(\gamma_{k(n_{0})}=\beta_{\nu}\). This shows that β ν ∈{γ ν ,ν≥0} and hence γ ν =β ν for ν≥0. This completes the proof. □

Next, we will give the distribution of the hitting time of state 0 starting from state n. For this, we need to study the birth and death process \(\hat{P}^{(n)}(t)\) on \(\hat{E}_{n}= \{n+1,n+2,\ldots \}\) before the maximal process P(t) gets to n, which can been seen as an absorbing state. By the probability construction of P(t), \(\hat{P}^{(n)}(t)\) is the maximal process on \(\hat{E}_{n}\) corresponding to \(\hat{Q}_{n}\) in (5.1), which also satisfies both the Kolmogorov backward and forward equations. Then by Theorem 1 in [23, Sect. 7.6] or [24, Sect. 6.6], its Laplace transform \(\hat{\varPsi}^{(n)}(\lambda)\) has the following form:

where \(\hat{X}^{(n)}_{i}(\lambda)=1-\lambda\sum_{k\ge n}\hat{\phi}^{(n)}_{ik}(\lambda)\), and \(\hat{\varPhi}^{(n)}(\lambda)=(\hat{\phi}^{(n)}_{ij}(\lambda ):i,j\ge n)\) is the Laplace transform of the minimal process with respect to \(\hat{Q}_{n}\) with two absorbing states n and ∞.

Let \(\hat{\pi}^{(n)}\) be as in Sect. 5. Then \(\hat{P}^{(n)}(t)\) is symmetric with respect to \(\hat{\pi}^{(n)}\). Denote by \(\hat{L}_{n}\) the generator of \(\hat{P}^{(n)}(t)\) in \(L^{2}(\hat{E}_{n},\hat{\pi}^{(n)})\). We have the following theorem.

Theorem 7.7

For any n≥0, the essential spectrum of \(\hat{L}_{n}\) is empty, and for each ν≥1, we have

where \(\{\hat{\lambda}_{n,\nu}^{(N)}:1\le\nu\le N-n \}\) and \(\{\hat{\lambda}_{n,\nu}:\nu\ge1 \}\) are the eigenvalues of \(-\hat{Q}_{n}^{(N)}\) in (2.3) and \(-\hat{L}_{n}\) respectively, both in increasing order with ν.

Proof

The first assertion follows from the fact that σ ess(L max)=∅, while the second one can be derived by a similar method as in Theorem 7.4, Proposition 7.5, and Theorem 7.6. □

Theorem 7.8

Assume that the birth and death process is such that ∞ is a regular boundary. Let T n,0 be the hitting time of state 0 starting from state n for the “maximal” process, and \(\{\hat{\lambda}_{\nu}\}\) (resp. \(\{\hat{\lambda}_{n,\nu}\} \)) be eigenvalues of generators for the “maximal” process before hitting to state 0 (resp. state n). Then, for n≥1, we have

Proof

For s≥0, let \(\hat{\varPsi}_{N}(s)= \{\hat{\psi}_{N,ij}(s): 0\le i,j\le N \}\) be the Laplace transform of the Q-function of \(\hat{Q}_{0}^{(N)}\) in (2.3). Then by a similar construction as in Theorem 7.4, we can get that for all 0≤i,j≤N,

So,

where \(\hat{T}^{(N)}_{n,0}\) is the hitting time of state 0 from state n for \(\hat{\varPsi}_{N}(s)\). Then (7.14) follows from Corollary 2.2 and Theorem 7.7. □

For the “maximal” process, the distributions of T i,n for 0≤i,n≤∞, are all known. When 0≤i<n<∞, the distribution of T i,n is given by Corollary 2.1, and when 0≤i<n=∞, it is given by Theorem 7.1, since for the “maximal” process, the particle moves as the minimal process at the beginning and thus T i,∞<∞ a.s. When 0≤n<i≤∞, the distribution of T i,n is given by Theorem 7.8.

References

Aldous, D.J., Fill, J.A.: Reversible Markov Chains and Random Walks on Graphs (2003). www.berkeley.edu/users/aldous/book.html

Anderson, W.: Continuous-Time Markov Chains. Springer, New York (1991)

Brown, M., Shao, Y.S.: Identifying coefficients in the spectral representation for first passage time distributions. Probab. Eng. Inf. Sci. 1, 69–74 (1987)

Chen, M.F.: From Markov Chains to Non-Equilibrium Particle Systems, 2nd edn. World Scientific, Singapore (2004)

Chen, M.-F.: Speed of stability for birth-death processes. Front. Math. China 5(3), 379–515 (2010)

Diaconis, P., Miclo, L.: On times to quasi-stationarity for birth and death processes. J. Theor. Probab. 22, 558–586 (2009)

Diaconis, P., Saloff-Coste, L.: Separation cut-offs for birth and death chains. Ann. Appl. Probab. 16, 2098–2122 (2006)

Feller, W.: The birth and death processes as diffusion processes. J. Math. Pures Appl. 38, 301–345 (1959)

Fill, J.A.: Strong stationary duality for continuous-time Markov chains. Part I: Theory. J. Theor. Probab. 5, 45–70 (1992)

Fill, J.A.: The passage time distribution for a birth-and-death chain: strong stationary duality gives a first stochastic proof. J. Theor. Probab. 22, 543–557 (2009)

Fill, J.A.: On hitting times and fastest strong stationary times for skip-free and more general chains. J. Theor. Probab. 22, 587–600 (2009)

Horn, R.A., Johnson, C.R.: Topics in Matrix Analysis, vol. II. Cambridge University Press, Cambridge (1994)

Hou, Z.-T., Chen, M.-F.: On the symmetrizability of a class of Q-processes. J. Beijing Norm. Univ. 3–4, 1–12 (1980) (in Chinese)

Karlin, S., McGregor, J.: Coincidence properties of birth and death processes. Pac. J. Math. 9, 1109–1140 (1959)

Kato, T.: Perturbation Theory for Linear Operators. Springer, Berlin (1966)

Keilson, J.: Markov Chain Models—Rarity and Exponentiality. Applied Mathematical Sciences, vol. 28. Springer, New York (1979)

Kent, J.T.: The spectral decomposition of a diffusion hitting time. Ann. Probab. 10, 207–219 (1982)

Mao, Y.-H.: Eigentime identity for continuous-time ergodic Markov chains. J. Appl. Probab. 41, 1071–1080 (2004)

Mao, Y.-H.: Eigentime identity for transient Markov chains. J. Math. Anal. Appl. 315, 415–424 (2006)

Micchelli, C.A., Willoughby, R.A.: On functions which preserve the class of Stieltjes matrices. Linear Algebra Appl. 23, 141–156 (1979)

Miclo, L.: On absorbtion times and Dirichlet eigenvalues. ESAIM Probab. Stat. 14, 117–150 (2010)

Reed, M., Simon, B.: Methods of Modern Mathematical Physics, II. Fourier Analysis, Self-Adjointness. Elsevier, Amsterdam (2003)

Wang, Z.K., Yang, X.Q.: Birth and Death Processes and Markov Processes. Science Press/Springer, Beijing/Berlin (1992)

Yang, X.-Q.: The Construction Theory of Denumerable Markov Processes. Wiley, New York (1990)

Zhang, H.J., Lin, X., Hou, Z.T.: Polynomial uniform convergence for standard transition functions. Chin. Ann. Math., Ser. A 21, 351–355 (2000) (in Chinese)

Acknowledgements

The authors thank Professors Mu-Fa Chen and Feng-Yu Wang for their valuable suggestions. The authors thank especially Professor James Allen Fill and an anonymous referee for their careful reading and instructional comments on the first two manuscripts.

Research supported in part by 985 Project, 973 Project (No 2011CB808000), NSFC (No 11131003), SRFDP (No 20100003110005) and the Fundamental Research Funds for the Central Universities.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Gong, Y., Mao, YH. & Zhang, C. Hitting Time Distributions for Denumerable Birth and Death Processes. J Theor Probab 25, 950–980 (2012). https://doi.org/10.1007/s10959-012-0436-1

Received:

Revised:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10959-012-0436-1

Keywords

- Birth and death process

- Eigenvalue

- Hitting time

- Strong ergodicity

- Strong stationary time

- Exit/entrance/regular boundary