Abstract

We generalize the exponential family of probability distributions. In our approach, the exponential function is replaced by a φ-function, resulting in a φ-family of probability distributions. We show how φ-families are constructed. In a φ-family, the analogue of the cumulant-generating function is a normalizing function. We define the φ-divergence as the Bregman divergence associated to the normalizing function, providing a generalization of the Kullback–Leibler divergence. A formula for the φ-divergence where the φ-function is the Kaniadakis κ-exponential function is derived.


1 Introduction

Let (T,Σ,μ) be a σ-finite, non-atomic measure space. We denote by \(\mathcal{P}_{\mu}=\mathcal{P}(T,\Sigma,\mu)\) the family of all probability measures on T that are equivalent to the measure μ. The probability family \(\mathcal{P}_{\mu}\) can be represented as (we adopt the same symbol \(\mathcal{P}_{\mu}\) for this representation)

\[\mathcal{P}_{\mu}=\bigl\{p\in L^{0}:p>0\text{ and }\mathbb{E}[p]=1\bigr\},\]

where \(L^{0}\) is the linear space of all real-valued, measurable functions on T, with equality μ-a.e., and \(\mathbb{E}[\cdot]\) denotes the expectation with respect to the measure μ.

The family \(\mathcal{P}_{\mu}\) can be equipped with a structure of \(C^{\infty}\)-Banach manifold, using the Orlicz space \(L^{\Phi _{1}}(p)=L^{\Phi_{1}}(T,\Sigma,p\cdot\mu)\) associated to the Orlicz function \(\Phi_{1}(u)=\exp(u)-1\), for u≥0. With this structure, \(\mathcal{P}_{\mu}\) is called the exponential statistical manifold, whose construction was proposed in [15] and developed in [3, 5, 14]. Each connected component of the exponential statistical manifold gives rise to an exponential family of probability distributions \(\mathcal{E}_{p}\) (for each \(p\in\mathcal{P}_{\mu}\)). Each element of \(\mathcal{E}_{p}\) can be expressed as

\[\boldsymbol{e}_{p}(u)=e^{u-K_{p}(u)}p,\quad u\in\mathcal{B}_{p},\tag{1}\]

for a subset \(\mathcal{B}_{p}\) of the Orlicz space \(L^{\Phi_{1}}(p)\). Here \(K_{p}\) is the cumulant-generating functional \(K_{p}(u)=\log\mathbb {E}_{p}[e^{u}]\), where \(\mathbb{E}_{p}[\cdot]\) is the expectation with respect to p⋅μ. If c is a measurable function such that \(p=e^{c}\), then (1) can be rewritten as

\[\boldsymbol{e}_{p}(u)(t)=e^{c(t)+u(t)-K_{p}(u)\cdot\boldsymbol{1}_{T}(t)},\quad u\in\mathcal{B}_{p},\tag{2}\]

where \(\boldsymbol{1}_{A}\) is the indicator function of a subset A⊆T. A generalization of expression (1) was given in [13], where the exponential function is replaced by a κ-exponential function. In our generalization, we make use of expression (2).

In the φ-family of probability distributions \(\mathcal {F}_{c}^{\varphi}\), which we propose, the exponential function is replaced by the so-called φ-function \(\varphi\colon T\times\overline{\mathbb {R}}\rightarrow[0,\infty]\). The function φ(t,⋅) has a “shape” which is similar to that of an exponential function, with an arbitrary rate of growth. For example, we found that the κ-exponential function satisfies the definition of φ-functions. As in the exponential family, the φ-families are the connected components of \(\mathcal{P}_{\mu}\), which is endowed with a structure of \(C^{\infty}\)-Banach manifold, using φ in the place of an exponential function. Let c be any measurable function such that φ(t,c(t)) belongs to \(\mathcal{P}_{\mu}\). The elements of the φ-family of probability distributions \(\mathcal{F}_{c}^{\varphi}\) are given by

\[\boldsymbol{\varphi}_{c}(u)(t)=\varphi\bigl(t,c(t)+u(t)-\psi(u)u_{0}(t)\bigr),\quad u\in\mathcal{B}_{c}^{\varphi},\tag{3}\]

for a subset \(\mathcal{B}_{c}^{\varphi}\) of a Musielak–Orlicz space \(L_{c}^{\varphi}\). The normalizing function \(\psi\colon\mathcal {B}_{c}^{\varphi}\rightarrow[0,\infty)\) and the measurable function \(u_{0}\colon T\rightarrow[0,\infty)\) in (3) replace \(K_{p}\) and \(\boldsymbol{1}_{T}\) in (2), respectively. The function \(u_{0}\) is not arbitrary. In the text, we will show how \(u_{0}\) can be chosen.

We define the φ-divergence as the Bregman divergence associated to the normalizing function ψ, providing a generalization of the Kullback–Leibler divergence. Then geometrical aspects related to the φ-family can be developed, since the Fisher information (on which the Information Geometry [1, 9] is based) is derived from the divergence. A formula for the φ-divergence where the φ-function is the Kaniadakis’ κ-exponential function [6, 11] is derived, which we called the κ-divergence.

We expect that an extension of our work will provide advances in other areas, like in Information Geometry or in the non-parametric, non-commutative setting [4, 12]. The rest of this paper is organized as follows. Section 2 deals with the topics of Musielak–Orlicz spaces we will use in the construction of the φ-family of probability distributions. In Sect. 3, the exponential statistical manifold is reviewed. The construction of the φ-family of probability distributions is given in Sect. 4. Finally, the φ-divergence is derived in Sect. 5.

2 Musielak–Orlicz Spaces

In this section we provide a brief introduction to Musielak–Orlicz (function) spaces, which are used in the construction of the exponential and φ-families. A more detailed exposition about these spaces can be found in [7, 10, 16].

We say that Φ:T×[0,∞]→[0,∞] is a Musielak–Orlicz function when, for μ-a.e. t∈T,

(i) Φ(t,⋅) is convex and lower semi-continuous,

(ii) \(\Phi(t,0)=\lim_{u\downarrow0}\Phi(t,u)=0\) and Φ(t,∞)=∞,

(iii) Φ(⋅,u) is measurable for all u≥0.

Items (i)–(ii) guarantee that Φ(t,⋅) is not equal to 0 or ∞ on the interval (0,∞). A Musielak–Orlicz function Φ is said to be an Orlicz function if the functions Φ(t,⋅) are identical for μ-a.e. t∈T.

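Define the functional \(I_{\Phi}(u)=\int_{T}\Phi(t,|u(t)|)\,d\mu\), for any \(u\in L^{0}\). The Musielak–Orlicz space, Musielak–Orlicz class, and Morse–Transue space are given by

\[L^{\Phi}=\{u\in L^{0}:I_{\Phi}(\lambda u)<\infty\text{ for some }\lambda>0\},\]

\[\tilde{L}^{\Phi}=\{u\in L^{0}:I_{\Phi}(u)<\infty\},\]

and

\[E^{\Phi}=\{u\in L^{0}:I_{\Phi}(\lambda u)<\infty\text{ for all }\lambda>0\},\]

respectively. If the underlying measure space (T,Σ,μ) has to be specified, we write \(L^{\Phi}(T,\Sigma,\mu)\), \(\tilde {L}^{\Phi}(T,\Sigma,\mu)\) and \(E^{\Phi}(T,\Sigma,\mu)\) in the place of \(L^{\Phi}\), \(\tilde{L}^{\Phi}\) and \(E^{\Phi}\), respectively. Clearly, \(E^{\Phi}\subseteq\tilde {L}^{\Phi}\subseteq L^{\Phi}\). The Musielak–Orlicz space \(L^{\Phi}\) can be interpreted as the smallest vector subspace of \(L^{0}\) that contains \(\tilde{L}^{\Phi}\), and \(E^{\Phi}\) is the largest vector subspace of \(L^{0}\) that is contained in \(\tilde{L}^{\Phi}\).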

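The Musielak–Orlicz space \(L^{\Phi}\) is a Banach space when it is endowed with the Luxemburg norm

\[\Vert u\Vert_{\Phi}=\inf\Bigl\{\lambda>0:I_{\Phi}\Bigl(\frac{u}{\lambda}\Bigr)\leq1\Bigr\},\]

or the Orlicz norm

\[\Vert u\Vert_{\Phi,0}=\sup\biggl\{\biggl|\int_{T}uv\,d\mu\biggr|:v\in\tilde{L}^{\Phi^{*}}\text{ and }I_{\Phi^{*}}(v)\leq1\biggr\},\]

where \(\Phi^{*}(t,v)=\sup\nolimits_{u\geq0}(uv-\Phi(t,u))\) is the Fenchel conjugate of Φ(t,⋅). These norms are equivalent and the inequalities \(\Vert u\Vert_{\Phi}\leq\Vert u\Vert_{\Phi,0}\leq2\Vert u\Vert_{\Phi}\) hold for all \(u\in L^{\Phi}\).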
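The Luxemburg norm has no closed form in general, but it can be located numerically because \(\lambda\mapsto I_{\Phi}(u/\lambda)\) is non-increasing. The following is a minimal sketch, not part of the original construction: it assumes a finite measure space with strictly positive weights (the paper works with a non-atomic space), uses the standard definition \(\Vert u\Vert_{\Phi}=\inf\{\lambda>0:I_{\Phi}(u/\lambda)\leq1\}\), and takes \(\Phi_{1}(u)=e^{u}-1\). The names `modular`, `luxemburg_norm` and `phi1` are hypothetical.

```python
import math

def phi1(x):
    """Orlicz function Phi_1(x) = exp(x) - 1, returning inf on overflow."""
    try:
        return math.exp(x) - 1.0
    except OverflowError:
        return math.inf

def modular(u, weights, phi):
    """I_Phi(u) = sum_t Phi(|u(t)|) * mu({t}) on a finite measure space."""
    return sum(w * phi(abs(x)) for x, w in zip(u, weights))

def luxemburg_norm(u, weights, phi, tol=1e-12):
    """Approximate inf{lam > 0 : I_Phi(u / lam) <= 1} by bisection."""
    if all(x == 0 for x in u):
        return 0.0
    lo, hi = 0.0, 1.0
    # Grow hi until it is feasible, i.e. I_Phi(u / hi) <= 1.
    while modular([x / hi for x in u], weights, phi) > 1.0:
        hi *= 2.0
    # Invariant: hi is feasible, lo is infeasible (or zero).
    while hi - lo > tol * hi:
        mid = 0.5 * (lo + hi)
        if modular([x / mid for x in u], weights, phi) <= 1.0:
            hi = mid
        else:
            lo = mid
    return hi

if __name__ == "__main__":
    # One atom of mass 1 and u = 1: the norm solves exp(1/lam) - 1 = 1.
    print(luxemburg_norm([1.0], [1.0], phi1), 1.0 / math.log(2.0))
```

Bisection is used here only because monotonicity is the single property the sketch relies on; any one-dimensional root finder would serve equally well.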

If we can find a non-negative function \(f\in\tilde{L}^{\Phi}\) and a constant K>0 such that

\[\Phi(t,2u)\leq K\Phi(t,u),\quad\text{for all }u\geq f(t),\]

then we say that Φ satisfies the Δ2-condition, or belongs to the Δ2-class (denoted by Φ∈Δ2). When the Musielak–Orlicz function Φ satisfies the Δ2-condition, \(E^{\Phi}\) coincides with \(L^{\Phi}\). On the other hand, if Φ is finite-valued and does not satisfy the Δ2-condition, then the Musielak–Orlicz class \(\tilde{L}^{\Phi}\) is not open and its interior coincides with

\[B_{0}(E^{\Phi},1)=\Bigl\{u\in L^{\Phi}:\inf_{v\in E^{\Phi}}\Vert u-v\Vert_{\Phi,0}<1\Bigr\},\]

or, equivalently, \(B_{0}(E^{\Phi},1)\varsubsetneq\tilde{L}^{\Phi }\varsubsetneq\overline{B}_{0}(E^{\Phi},1)\).

3 The Exponential Statistical Manifold

This section starts with the definition of a \(C^{k}\)-Banach manifold [8]. A \(C^{k}\)-Banach manifold is a set M and a collection of pairs \((U_{\alpha},\boldsymbol{x}_{\alpha})\) (α belonging to some indexing set), composed of open subsets \(U_{\alpha}\) of some Banach space \(X_{\alpha}\), and injective mappings \(\boldsymbol{x}_{\alpha}\colon U_{\alpha}\rightarrow M\), satisfying the following conditions:

(bm1) the sets \(\boldsymbol{x}_{\alpha}(U_{\alpha})\) cover M, i.e., \(\bigcup_{\alpha}\boldsymbol{x}_{\alpha}(U_{\alpha})=M\);

(bm2) for any pair of indices α,β such that \(\boldsymbol{x}_{\alpha}(U_{\alpha})\cap\boldsymbol{x}_{\beta}(U_{\beta})=W\neq\emptyset\), the sets \(\boldsymbol{x}_{\alpha}^{-1}(W)\) and \(\boldsymbol{x}_{\beta}^{-1}(W)\) are open in \(X_{\alpha}\) and \(X_{\beta}\), respectively; and

(bm3) the transition map \(\boldsymbol{x}_{\beta}^{-1}\circ \boldsymbol{x}_{\alpha}\colon\boldsymbol{x}_{\alpha}^{-1}(W)\rightarrow \boldsymbol{x}_{\beta}^{-1}(W)\) is a \(C^{k}\)-isomorphism.

The pair \((U_{\alpha},\boldsymbol{x}_{\alpha})\) with \(p\in\boldsymbol{x}_{\alpha}(U_{\alpha})\) is called a parametrization (or system of coordinates) of M at p; and \(\boldsymbol{x}_{\alpha}(U_{\alpha})\) is said to be a coordinate neighborhood at p.

The set M can be endowed with a topology in a unique way such that each \(\boldsymbol{x}_{\alpha}(U_{\alpha})\) is open, and the \(\boldsymbol{x}_{\alpha}\)’s are topological isomorphisms. We note that if k≥1 and two parametrizations \((U_{\alpha},\boldsymbol{x}_{\alpha})\) and \((U_{\beta},\boldsymbol{x}_{\beta})\) are such that \(\boldsymbol{x}_{\alpha}(U_{\alpha})\) and \(\boldsymbol{x}_{\beta}(U_{\beta})\) have a non-empty intersection, then from the derivative of \(\boldsymbol {x}_{\beta}^{-1}\circ\boldsymbol{x}_{\alpha}\) we see that \(X_{\alpha}\) and \(X_{\beta}\) are isomorphic.

Two collections \(\{(U_{\alpha},\boldsymbol{x}_{\alpha})\}\) and \(\{(V_{\beta},\boldsymbol{x}_{\beta})\}\) satisfying (bm1)–(bm3) are said to be \(C^{k}\)-compatible if their union also satisfies (bm1)–(bm3). It can be verified that the relation of \(C^{k}\)-compatibility is an equivalence relation. An equivalence class of \(C^{k}\)-compatible collections \(\{(U_{\alpha},\boldsymbol{x}_{\alpha})\}\) on M is said to define a \(C^{k}\)-differentiable structure on M.

Now we review the construction of the exponential statistical manifold. We consider the Musielak–Orlicz space \(L^{\Phi_{1}}(p)=L^{\Phi _{1}}(T,\Sigma,p\cdot\mu)\), where the Orlicz function \(\Phi_{1}\colon[0,\infty)\rightarrow[0,\infty)\) is given by \(\Phi_{1}(u)=e^{u}-1\), and p is a probability density in \(\mathcal{P}_{\mu}\). The space \(L^{\Phi_{1}}(p)\) corresponds to the set of all functions \(u\in L^{0}\) whose moment-generating function \(\widehat{u}_{p}(\lambda)=\mathbb{E}_{p}[e^{\lambda u}]\) is finite in a neighborhood of 0.

For every function \(u\in L^{0}\) we define the moment-generating functional

\[M_{p}(u)=\mathbb{E}_{p}[e^{u}],\]

and the cumulant-generating functional

\[K_{p}(u)=\log M_{p}(u).\]

Clearly, these functionals are not expected to be finite for every \(u\in L^{0}\). Denote by \(\mathcal{K}_{p}\) the interior of the set of all functions \(u\in L^{\Phi_{1}}(p)\) whose moment-generating functional \(M_{p}(u)\) is finite. Equivalently, a function \(u\in L^{\Phi_{1}}(p)\) belongs to \(\mathcal{K}_{p}\) if and only if \(M_{p}(\lambda u)\) is finite for every λ in some neighborhood of [0,1]. The closed subspace of p-centered random variables

\[B_{p}=\{u\in L^{\Phi_{1}}(p):\mathbb{E}_{p}[u]=0\}\]

is taken to be the coordinate Banach space. The exponential parametrization \(\boldsymbol{e}_{p}\colon\mathcal{B}_{p}\rightarrow \mathcal{E}_{p}\) maps \(\mathcal{B}_{p}=B_{p}\cap\mathcal{K}_{p}\) to the exponential family \(\mathcal{E}_{p}=\boldsymbol{e}_{p}(\mathcal{B}_{p})\subseteq \mathcal{P}_{\mu}\), according to

\[\boldsymbol{e}_{p}(u)=e^{u-K_{p}(u)}p,\quad u\in\mathcal{B}_{p}.\]

The map \(\boldsymbol{e}_{p}\) is a bijection from \(\mathcal{B}_{p}\) to its image \(\mathcal{E}_{p}=\boldsymbol{e}_{p}(\mathcal{B}_{p})\), whose inverse \(\boldsymbol{e}_{p}^{-1}\colon\mathcal{E}_{p}\rightarrow\mathcal{B}_{p}\) can be expressed as

\[\boldsymbol{e}_{p}^{-1}(q)=\log\Bigl(\frac{q}{p}\Bigr)-\mathbb{E}_{p}\Bigl[\log\Bigl(\frac{q}{p}\Bigr)\Bigr],\quad q\in\mathcal{E}_{p}.\]

Since \(K_{p}(u)<\infty\) for every \(u\in\mathcal{K}_{p}\), we find that \(\boldsymbol{e}_{p}\) can be extended to \(\mathcal{K}_{p}\). The restriction of \(\boldsymbol{e}_{p}\) to \(\mathcal{B}_{p}\) guarantees that \(\boldsymbol{e}_{p}\) is bijective.
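The exponential parametrization and its inverse can be checked by a round trip on a toy example. The sketch below is an illustration under stated assumptions rather than the paper's setting: it replaces the non-atomic space by a finite one with positive weights, takes \(\boldsymbol{e}_{p}(u)=e^{u-K_{p}(u)}p\) and \(\boldsymbol{e}_{p}^{-1}(q)=\log(q/p)-\mathbb{E}_{p}[\log(q/p)]\) as the maps, and uses hypothetical helper names (`expect`, `center`, `e_p`, `e_p_inv`).

```python
import math

def expect(f, density, weights):
    """E_p[f] = sum_t f(t) p(t) mu({t}) on a finite measure space."""
    return sum(fi * di * wi for fi, di, wi in zip(f, density, weights))

def center(u, p, weights):
    """Project u onto the p-centered functions, i.e. subtract E_p[u]."""
    mean = expect(u, p, weights)
    return [x - mean for x in u]

def e_p(u, p, weights):
    """Exponential parametrization: u -> exp(u - K_p(u)) * p."""
    cumulant = math.log(expect([math.exp(x) for x in u], p, weights))
    return [math.exp(x - cumulant) * pi for x, pi in zip(u, p)]

def e_p_inv(q, p, weights):
    """Inverse chart: q -> log(q/p) - E_p[log(q/p)]."""
    log_ratio = [math.log(qi / pi) for qi, pi in zip(q, p)]
    return center(log_ratio, p, weights)

if __name__ == "__main__":
    weights = [0.5, 0.25, 0.25]   # mu of each atom
    p = [1.2, 0.8, 0.8]           # density with E[p] = 1
    u = center([0.3, -1.0, 2.0], p, weights)
    q = e_p(u, p, weights)
    print(sum(qi * wi for qi, wi in zip(q, weights)))  # total mass of q
    print(e_p_inv(q, p, weights), u)                   # round trip
```

Centering u before applying `e_p` matters: without it the round trip returns the centered version of u, which is exactly why the chart is restricted to \(\mathcal{B}_{p}\).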

Given two probability densities p and q in the same connected component of \(\mathcal{P}_{\mu}\), the exponential probability families \(\mathcal{E}_{p}\) and \(\mathcal{E}_{q}\) coincide, and the exponential spaces \(L^{\Phi_{1}}(p)\) and \(L^{\Phi_{1}}(q)\) are isomorphic (see [14, Proposition 5]). Hence, \(\mathcal{B}_{p}=\boldsymbol {e}_{p}^{-1}(\mathcal{E}_{p}\cap\mathcal{E}_{q})\) and \(\mathcal{B}_{q}=\boldsymbol{e}_{q}^{-1}(\mathcal{E}_{p}\cap\mathcal {E}_{q})\). The transition map \(\boldsymbol{e}_{q}^{-1}\circ\boldsymbol {e}_{p}:\mathcal{B}_{p}\rightarrow\mathcal{B}_{q}\), which can be written as

\[\boldsymbol{e}_{q}^{-1}\circ\boldsymbol{e}_{p}(u)=u+\log\Bigl(\frac{p}{q}\Bigr)-\mathbb{E}_{q}\Bigl[u+\log\Bigl(\frac{p}{q}\Bigr)\Bigr],\]

is a \(C^{\infty}\)-function. Clearly, \(\bigcup_{p\in\mathcal{P}_{\mu }}\boldsymbol{e}_{p}(\mathcal{B}_{p})=\mathcal{P}_{\mu}\). Thus the collection \(\{(\mathcal{B}_{p},\boldsymbol{e}_{p})\}_{p\in \mathcal{P}_{\mu}}\) satisfies (bm1)–(bm3). Hence \(\mathcal{P}_{\mu}\) is a \(C^{\infty}\)-Banach manifold, which is called the exponential statistical manifold.

4 Construction of the φ-Family of Probability Distributions

The generalization of the exponential family is based on the replacement of the exponential function by a φ-function \(\varphi \colon T\times\overline{\mathbb{R}}\rightarrow[0,\infty]\) that satisfies the following properties, for μ-a.e. t∈T:

(a1) φ(t,⋅) is convex and injective,

(a2) φ(t,−∞)=0 and φ(t,∞)=∞,

(a3) φ(⋅,u) is measurable for all u∈ℝ.

In addition, we assume a positive, measurable function \(u_{0}\colon T\rightarrow(0,\infty)\) can be found such that, for every measurable function c:T→ℝ for which φ(t,c(t)) is in \(\mathcal{P}_{\mu}\), we have

(a4) \(\varphi(t,c(t)+\lambda u_{0}(t))\) is μ-integrable for all λ>0.

The choice for φ(t,⋅) injective with image [0,∞] is justified by the fact that a parametrization of \(\mathcal{P}_{\mu}\) maps real-valued functions to positive functions. Moreover, by (a1), φ(t,⋅) is continuous and strictly increasing. From (a3), the function φ(t,u(t)) is measurable if and only if u:T→ℝ is measurable. Replacing φ(t,u) by \(\varphi(t,u_{0}(t)u)\), a “new” function \(u_{0}=1\) is obtained, satisfying (a4).

Example 1

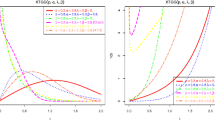

The Kaniadakis’ κ-exponential exp κ :ℝ→(0,∞) for κ∈[−1,1] is defined as

The inverse of exp κ is the Kaniadakis’ κ-logarithm

Some algebraic properties of the ordinary exponential and logarithm functions are preserved:

For a measurable function κ:T→[−1,1], we define the variable κ-exponential exp κ :T×ℝ→(0,∞) as

whose inverse is called the variable κ-logarithm:

Assuming that κ −=ess inf |κ(t)|>0, the variable κ-exponential exp κ satisfies (a1)–(a4). The verification of (a1)–(a3) is easy. Moreover, we notice that exp κ (t,⋅) is strictly convex. We can write for α≥1

By the convexity of exp κ (t,⋅), we obtain for any λ∈(0,1)

Thus any positive function u 0 such that \(\mathbb{E}[\exp_{\kappa }(u_{0})]<\infty\) satisfies (a4).
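The κ-exponential and κ-logarithm are simple enough to evaluate directly. The sketch below uses the standard Kaniadakis formulas \(\exp_{\kappa}(u)=(\kappa u+\sqrt{1+\kappa^{2}u^{2}})^{1/\kappa}\) and \(\ln_{\kappa}(x)=(x^{\kappa}-x^{-\kappa})/(2\kappa)\), with the ordinary exponential and logarithm at κ=0; the function names are hypothetical, and the direct formula for `exp_kappa` may lose accuracy for very negative arguments.

```python
import math

def exp_kappa(u, kappa):
    """Kaniadakis kappa-exponential; reduces to exp(u) when kappa == 0."""
    if kappa == 0.0:
        return math.exp(u)
    base = kappa * u + math.sqrt(1.0 + kappa * kappa * u * u)
    return base ** (1.0 / kappa)

def ln_kappa(x, kappa):
    """Kaniadakis kappa-logarithm (x > 0); reduces to log(x) when kappa == 0."""
    if kappa == 0.0:
        return math.log(x)
    return (x ** kappa - x ** (-kappa)) / (2.0 * kappa)

if __name__ == "__main__":
    for kappa in (0.3, -0.7, 1.0):
        for u in (-2.0, 0.5, 3.0):
            x = exp_kappa(u, kappa)
            # Inverse pair, and the identity exp_k(u) * exp_k(-u) = 1.
            print(kappa, u, ln_kappa(x, kappa), x * exp_kappa(-u, kappa))
```

Both functions are even in κ, which is why the condition in the example is stated in terms of |κ(t)|.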

Let c:T→ℝ be a measurable function such that φ(t,c(t)) is μ-integrable. We define the Musielak–Orlicz function

\[\Phi(t,u)=\varphi(t,c(t)+u)-\varphi(t,c(t)),\]

and denote \(L^{\Phi}\), \(\tilde{L}^{\Phi}\) and \(E^{\Phi}\) by \(L_{c}^{\varphi}\), \(\tilde{L}_{c}^{\varphi}\) and \(E_{c}^{\varphi}\), respectively. Since φ(t,c(t)) is μ-integrable, the Musielak–Orlicz space \(L_{c}^{\varphi}\) corresponds to the set of all functions \(u\in L^{0}\) for which φ(t,c(t)+λu(t)) is μ-integrable for every λ contained in some neighborhood of 0.

Let \(\mathcal{K}_{c}^{\varphi}\) be the set of all functions \(u\in L_{c}^{\varphi}\) such that φ(t,c(t)+λu(t)) is μ-integrable for every λ in a neighborhood of [0,1]. Denote by \(\boldsymbol{\varphi}\) the operator acting on the set of real-valued functions u:T→ℝ given by \(\boldsymbol{\varphi}(u)(t)=\varphi(t,u(t))\). For each probability density \(p\in\mathcal{P}_{\mu}\), we can take a measurable function c:T→ℝ such that \(p=\boldsymbol{\varphi}(c)\). The first important result in the construction of the φ-family is given below.

Lemma 2

The set \(\mathcal{K}_{c}^{\varphi}\) is open in \(L_{c}^{\varphi}\).

Proof

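Take any \(u\in\mathcal{K}_{c}^{\varphi}\). We can find ε∈(0,1) such that \(\mathbb{E}[\boldsymbol{\varphi}(c+\alpha u)]<\infty\) for every α∈[−ε,1+ε]. Let \(\delta=[ \frac {2}{\varepsilon}(1+\varepsilon)(1+ \frac{\varepsilon}{2})]^{-1}\). For any function \(v\in L_{c}^{\varphi}\) in the open ball \(B_{\delta}=\{w\in L_{c}^{\varphi}:\Vert w\Vert_{\Phi}<\delta\}\), we have \(I_{\Phi}( \frac{v}{\delta})\leq1\). Thus \(\mathbb {E}[\boldsymbol{\varphi}(c+ \frac{1}{\delta}|v|)]\leq2\). Taking any \(\alpha\in(0,1+ \frac{\varepsilon}{2})\), we denote \(\lambda=\frac{\alpha}{1+\varepsilon}\). In virtue of

\[\frac{\alpha}{1-\lambda}=\frac{\alpha(1+\varepsilon)}{1+\varepsilon-\alpha}\leq\frac{2}{\varepsilon}(1+\varepsilon)\Bigl(1+\frac{\varepsilon}{2}\Bigr)=\frac{1}{\delta},\]

it follows that

\[\mathbb{E}[\boldsymbol{\varphi}(c+\alpha(u+v))]\leq\lambda\mathbb{E}[\boldsymbol{\varphi}(c+(1+\varepsilon)u)]+(1-\lambda)\mathbb{E}\Bigl[\boldsymbol{\varphi}\Bigl(c+\frac{\alpha}{1-\lambda}v\Bigr)\Bigr]\leq\lambda\mathbb{E}[\boldsymbol{\varphi}(c+(1+\varepsilon)u)]+(1-\lambda)\mathbb{E}\Bigl[\boldsymbol{\varphi}\Bigl(c+\frac{1}{\delta}|v|\Bigr)\Bigr]<\infty.\tag{4}\]

For \(\alpha\in(- \frac{\varepsilon}{2},0)\), we can write

\[\mathbb{E}[\boldsymbol{\varphi}(c+\alpha(u+v))]\leq\frac{1}{2}\mathbb{E}[\boldsymbol{\varphi}(c+2\alpha u)]+\frac{1}{2}\mathbb{E}[\boldsymbol{\varphi}(c+2\alpha v)]\leq\frac{1}{2}\mathbb{E}[\boldsymbol{\varphi}(c+2\alpha u)]+\frac{1}{2}\mathbb{E}\Bigl[\boldsymbol{\varphi}\Bigl(c+\frac{1}{\delta}|v|\Bigr)\Bigr]<\infty.\tag{5}\]

By (4) and (5), we get \(\mathbb {E}[\boldsymbol{\varphi}(c+\alpha(u+v))]<\infty\), for any \(\alpha\in(- \frac{\varepsilon}{2},1+ \frac{\varepsilon}{2})\). Hence the ball of radius δ centered at u is contained in \(\mathcal{K}_{c}^{\varphi}\). Therefore, the set \(\mathcal {K}_{c}^{\varphi}\) is open. □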

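Clearly, for \(u\in\mathcal{K}_{c}^{\varphi}\) the function \(\boldsymbol{\varphi}(c+u)\) is not necessarily in \(\mathcal{P}_{\mu}\). The normalizing function \(\psi\colon\mathcal{K}_{c}^{\varphi}\rightarrow\mathbb{R}\) is introduced in order to make the density

\[\boldsymbol{\varphi}(c+u-\psi(u)u_{0})\]

contained in \(\mathcal{P}_{\mu}\), for any \(u\in\mathcal{K}_{c}^{\varphi}\). We have to find the functions for which the normalizing function exists. For a function \(u\in L_{c}^{\varphi}\), suppose that \(\boldsymbol{\varphi}(c+u-\alpha u_{0})\) is μ-integrable for some α∈ℝ. Then u is in the closure of the set \(\mathcal{K}_{c}^{\varphi}\). Indeed, for any λ∈(0,1),

\[\boldsymbol{\varphi}(c+\lambda u)=\boldsymbol{\varphi}\Bigl(\lambda(c+u-\alpha u_{0})+(1-\lambda)\Bigl(c+\frac{\lambda}{1-\lambda}\alpha u_{0}\Bigr)\Bigr)\leq\lambda\boldsymbol{\varphi}(c+u-\alpha u_{0})+(1-\lambda)\boldsymbol{\varphi}\Bigl(c+\frac{\lambda}{1-\lambda}\alpha u_{0}\Bigr).\]

Since the function \(u_{0}\) satisfies (a4), we see that \(\boldsymbol{\varphi}(c+\lambda u)\) is μ-integrable. Hence the maximal, open domain of ψ is contained in \(\mathcal{K}_{c}^{\varphi}\).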

Proposition 3

If the function u is in \(\mathcal{K}_{c}^{\varphi}\), then there exists a unique ψ(u)∈ℝ for which φ(c+u−ψ(u)u 0) is a probability density in \(\mathcal{P}_{\mu}\).

Proof

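We will show that if the function u is in \(\mathcal{K}_{c}^{\varphi}\), then \(\boldsymbol{\varphi}(c+u+\alpha u_{0})\) is μ-integrable for every α∈ℝ. Since u is in \(\mathcal {K}_{c}^{\varphi}\), we can find ε>0 such that \(\boldsymbol{\varphi}(c+(1+\varepsilon)u)\) is μ-integrable. Taking \(\lambda= \frac{1}{1+\varepsilon}\), we can write

\[\boldsymbol{\varphi}(c+u+\alpha u_{0})=\boldsymbol{\varphi}\Bigl(\lambda(c+(1+\varepsilon)u)+(1-\lambda)\Bigl(c+\frac{\alpha}{1-\lambda}u_{0}\Bigr)\Bigr)\leq\lambda\boldsymbol{\varphi}(c+(1+\varepsilon)u)+(1-\lambda)\boldsymbol{\varphi}\Bigl(c+\frac{\alpha}{1-\lambda}u_{0}\Bigr).\]

Thus \(\boldsymbol{\varphi}(c+u+\alpha u_{0})\) is μ-integrable. By the Dominated Convergence Theorem, the map \(\alpha\mapsto J(\alpha )=\mathbb{E}[\boldsymbol{\varphi}(c+u+\alpha u_{0})]\) is continuous, tends to 0 as α→−∞, and goes to infinity as α→∞. Since φ(t,⋅) is strictly increasing, it follows that J(α) is also strictly increasing. Therefore, there exists a unique ψ(u)∈ℝ for which \(\boldsymbol{\varphi}(c+u-\psi(u)u_{0})\) is a probability density in \(\mathcal{P}_{\mu}\). □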
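The proof is constructive in spirit: the total mass is continuous and strictly monotone in the shift along \(u_{0}\), so ψ(u) can be found by bracketing and bisection. The sketch below illustrates this on a finite measure space with positive weights, which is an assumption made for computability (the paper's space is non-atomic). The names `normalizer`, `exp_kappa` and `ln_kappa` are hypothetical, and φ is passed in as a plain function of one real argument.

```python
import math

def exp_kappa(u, kappa):
    """Kaniadakis kappa-exponential for kappa != 0."""
    return (kappa * u + math.sqrt(1.0 + kappa * kappa * u * u)) ** (1.0 / kappa)

def ln_kappa(x, kappa):
    """Kaniadakis kappa-logarithm for kappa != 0 and x > 0."""
    return (x ** kappa - x ** (-kappa)) / (2.0 * kappa)

def normalizer(c, u, u0, weights, phi):
    """Find psi with sum_t phi(c + u - psi * u0) * mu({t}) = 1 by bisection."""
    def mass(psi):
        return sum(w * phi(ci + ui - psi * u0i)
                   for ci, ui, u0i, w in zip(c, u, u0, weights))
    lo, hi = -1.0, 1.0
    while mass(lo) < 1.0:      # mass decreases in psi: move lo left
        lo *= 2.0
    while mass(hi) > 1.0:      # and hi right, until 1 is bracketed
        hi *= 2.0
    for _ in range(200):       # plain bisection on the bracket [lo, hi]
        mid = 0.5 * (lo + hi)
        if mass(mid) > 1.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

if __name__ == "__main__":
    weights = [0.5, 0.25, 0.25]
    p = [1.2, 0.8, 0.8]                      # density with E[p] = 1
    u, u0 = [0.4, -0.3, 0.1], [1.0, 1.0, 1.0]
    # With phi = exp and u0 = 1, psi is the cumulant-generating functional.
    c = [math.log(x) for x in p]
    print(normalizer(c, u, u0, weights, math.exp))
    # The same routine with a kappa-exponential in the place of exp.
    k = 0.5
    ck = [ln_kappa(x, k) for x in p]
    print(normalizer(ck, u, u0, weights, lambda x: exp_kappa(x, k)))
```

For the ordinary exponential with \(u_{0}=1\) the result can be compared against \(\log\mathbb{E}_{p}[e^{u}]\), which gives a quick sanity check of the routine.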

The function \(\psi\colon\mathcal{K}_{c}^{\varphi}\rightarrow\mathbb{R}\) can take both positive and negative values. However, if the domain of ψ is restricted to a subspace of \(L_{c}^{\varphi}\), its image will be contained in [0,∞). We denote by \(\boldsymbol {\varphi}_{+}'\) the operator acting on the set of real-valued functions u:T→ℝ given by \(\boldsymbol{\varphi}_{+}'(u)(t)=\varphi_{+}'(t,u(t))\), where \(\varphi_{+}'(t,\cdot)\) is the right-derivative of φ(t,⋅). Define the closed subspace

\[B_{c}^{\varphi}=\{u\in L_{c}^{\varphi}:\mathbb{E}[u\boldsymbol{\varphi}_{+}'(c)]=0\},\]

and let \(\mathcal{B}_{c}^{\varphi}=B_{c}^{\varphi}\cap\mathcal {K}_{c}^{\varphi}\). By the convexity of φ(t,⋅), we have

\[u\boldsymbol{\varphi}_{+}'(c)\leq\boldsymbol{\varphi}(c+u)-\boldsymbol{\varphi}(c).\]

Hence, for any \(u\in\mathcal{B}_{c}^{\varphi}\), we get

\[\mathbb{E}[\boldsymbol{\varphi}(c+u)]\geq\mathbb{E}[u\boldsymbol{\varphi}_{+}'(c)]+\mathbb{E}[\boldsymbol{\varphi}(c)]=1.\]

Thus ψ(u)≥0 is necessary for \(\boldsymbol{\varphi}(c+u-\psi(u)u_{0})\) to be in \(\mathcal{P}_{\mu}\).
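As a sanity check, the non-negativity of ψ can be worked out by hand in the classical case. The block below is a sketch under the assumptions \(\varphi(t,u)=e^{u}\), \(u_{0}=1\) and \(p=e^{c}\in\mathcal{P}_{\mu}\); it is meant only to show that the general statement reduces to Jensen's inequality for the cumulant-generating functional.

```latex
% Exponential special case: \varphi(t,u) = e^{u}, u_0 = 1, p = e^{c}.
\begin{align*}
\boldsymbol{\varphi}_{+}'(c) &= e^{c} = p,
  &&\text{so } \mathbb{E}[u\,\boldsymbol{\varphi}_{+}'(c)] = \mathbb{E}_{p}[u], \\
1 &= \mathbb{E}\bigl[e^{c+u-\psi(u)}\bigr] = e^{-\psi(u)}\,\mathbb{E}_{p}[e^{u}],
  &&\text{so } \psi(u) = \log\mathbb{E}_{p}[e^{u}] = K_{p}(u), \\
K_{p}(u) &\geq \mathbb{E}_{p}[u] = 0
  &&\text{for $p$-centered } u \text{ (Jensen's inequality).}
\end{align*}
```

In this case the subspace of functions u with \(\mathbb{E}[u\boldsymbol{\varphi}_{+}'(c)]=0\) is exactly the space of p-centered random variables used for the exponential statistical manifold.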

For each measurable function c:T→ℝ such that \(p=\boldsymbol{\varphi}(c)\) is a probability density in \(\mathcal {P}_{\mu}\), we associate a parametrization \(\boldsymbol{\varphi}_{c}\colon\mathcal {B}_{c}^{\varphi}\rightarrow\mathcal{F}_{c}^{\varphi}\) that maps any function u in \(\mathcal{B}_{c}^{\varphi}\) to a probability density in \(\mathcal{F}_{c}^{\varphi}=\boldsymbol{\varphi}_{c}(\mathcal {B}_{c}^{\varphi})\subseteq\mathcal{P}_{\mu}\) according to

\[\boldsymbol{\varphi}_{c}(u)=\boldsymbol{\varphi}(c+u-\psi(u)u_{0}).\]

Clearly, we have \(\mathcal{P}_{\mu}=\bigcup\{\mathcal{F}_{c}^{\varphi }:\boldsymbol{\varphi}(c)\in\mathcal{P}_{\mu}\}\). Moreover, the map \(\boldsymbol{\varphi}_{c}\) is a bijection from \(\mathcal{B}_{c}^{\varphi}\) to \(\mathcal{F}_{c}^{\varphi}\). If the functions \(u,v\in\mathcal{B}_{c}^{\varphi}\) are such that \(\boldsymbol{\varphi}_{c}(u)=\boldsymbol{\varphi}_{c}(v)\), then the difference \(u-v=(\psi(u)-\psi(v))u_{0}\) is in \(B_{c}^{\varphi}\). Consequently, ψ(u)=ψ(v) and then u=v.

Suppose that the measurable functions \(c_{1},c_{2}\colon T\rightarrow\mathbb{R}\) are such that \(p_{1}=\boldsymbol{\varphi}(c_{1})\) and \(p_{2}=\boldsymbol{\varphi}(c_{2})\) belong to \(\mathcal{P}_{\mu}\). The parametrizations \(\boldsymbol{\varphi }_{c_{1}}\colon\mathcal{B}_{c_{1}}^{\varphi}\rightarrow\mathcal {F}_{c_{1}}^{\varphi}\) and \(\boldsymbol{\varphi}_{c_{2}}\colon\mathcal{B}_{c_{2}}^{\varphi }\rightarrow\mathcal{F}_{c_{2}}^{\varphi}\) related to these functions have transition map

\[\boldsymbol{\varphi}_{c_{2}}^{-1}\circ\boldsymbol{\varphi}_{c_{1}}\colon\boldsymbol{\varphi}_{c_{1}}^{-1}(\mathcal{F}_{c_{1}}^{\varphi}\cap\mathcal{F}_{c_{2}}^{\varphi})\rightarrow\boldsymbol{\varphi}_{c_{2}}^{-1}(\mathcal{F}_{c_{1}}^{\varphi}\cap\mathcal{F}_{c_{2}}^{\varphi}).\]

Let \(\psi_{1}\colon\mathcal{B}_{c_{1}}^{\varphi}\rightarrow[0,\infty)\) and \(\psi_{2}\colon\mathcal{B}_{c_{2}}^{\varphi}\rightarrow[0,\infty)\) be the normalizing functions associated to \(c_{1}\) and \(c_{2}\), respectively. Assume that the functions \(u\in\mathcal {B}_{c_{1}}^{\varphi}\) and \(v\in\mathcal{B}_{c_{2}}^{\varphi}\) are such that \(\boldsymbol {\varphi}_{c_{1}}(u)=\boldsymbol{\varphi}_{c_{2}}(v)\in\mathcal {F}_{c_{1}}^{\varphi}\cap\mathcal{F}_{c_{2}}^{\varphi}\). Then we can write

\[v=c_{1}-c_{2}+u-(\psi_{1}(u)-\psi_{2}(v))u_{0}.\]

Since the function v is in \(B_{c_{2}}^{\varphi}\), if we multiply this equation by \(\boldsymbol{\varphi}_{+}'(c_{2})\) and integrate with respect to the measure μ, we obtain

\[0=\mathbb{E}[(c_{1}-c_{2}+u)\boldsymbol{\varphi}_{+}'(c_{2})]-(\psi_{1}(u)-\psi_{2}(v))\mathbb{E}[u_{0}\boldsymbol{\varphi}_{+}'(c_{2})].\]

Thus the transition map \(\boldsymbol{\varphi}_{c_{2}}^{-1}\circ \boldsymbol{\varphi}_{c_{1}}\) can be expressed as

\[\boldsymbol{\varphi}_{c_{2}}^{-1}\circ\boldsymbol{\varphi}_{c_{1}}(w)=c_{1}-c_{2}+w-\frac{\mathbb{E}[(c_{1}-c_{2}+w)\boldsymbol{\varphi}_{+}'(c_{2})]}{\mathbb{E}[u_{0}\boldsymbol{\varphi}_{+}'(c_{2})]}u_{0},\tag{6}\]

for every \(w\in\boldsymbol{\varphi}_{c_{1}}^{-1}(\mathcal {F}_{c_{1}}^{\varphi}\cap\mathcal{F}_{c_{2}}^{\varphi})\). Clearly, this transition map will be of class \(C^{\infty}\) if we show that the functions w and \(c_{1}-c_{2}\) are in \(L_{c_{2}}^{\varphi}\), and the spaces \(L_{c_{1}}^{\varphi}\) and \(L_{c_{2}}^{\varphi}\) have equivalent norms. It is not hard to verify that if two Musielak–Orlicz spaces are equal as sets, then their norms are equivalent (see [10, Theorem 8.5]). We make use of the following:

Proposition 4

Assume that the measurable functions \(\widetilde {c},c\colon T\rightarrow\mathbb{R}\) satisfy \(\mathbb{E}[\varphi(t,\widetilde{c}(t))]<\infty\) and \(\mathbb {E}[\varphi(t,c(t))]<\infty\). Then \(L_{\widetilde{c}}^{\varphi}\subseteq L_{c}^{\varphi}\) if and only if \(\widetilde{c}-c\in L_{c}^{\varphi}\).

Proof

Suppose that \(\widetilde{c}-c\) is not in \(L_{c}^{\varphi}\). Let \(A=\{t\in T:\widetilde{c}(t)<c(t)\}\). For λ∈[0,1], we have

Since \(\widetilde{c}-c\notin L_{c}^{\varphi}\), for any λ>0, there holds \(\mathbb{E}[\boldsymbol{\varphi}(c-\lambda(\widetilde {c}-c))]=\infty\). From

we see that \((c-\widetilde{c})\boldsymbol{1}_{A}\) does not belong to \(L_{c}^{\varphi}\). Clearly, \((c-\widetilde{c})\boldsymbol{1}_{A}\in L_{\widetilde{c}}^{\varphi}\). Consequently, \(L_{\widetilde{c}}^{\varphi}\) is not contained in \(L_{c}^{\varphi}\).

Conversely, assume \(\widetilde{c}-c\in L_{c}^{\varphi}\). Let w be any function in \(L_{\widetilde{c}}^{\varphi}\). We can find ε>0 such that \(\mathbb{E}[\boldsymbol{\varphi}(\widetilde{c}+\lambda w)]<\infty\), for every λ∈(−ε,ε). Consider the convex function

\[g(\alpha,\lambda)=\mathbb{E}[\boldsymbol{\varphi}(c+\alpha(\widetilde{c}-c)+\lambda w)].\]

This function is finite for λ=0 and α in the interval (−η,1], for some η>0. Moreover, g(1,λ) is finite for every λ∈(−ε,ε). By the convexity of g, we see that g is finite in the convex hull of the set \(\{1\}\times(-\varepsilon,\varepsilon)\cup(-\eta,1]\times\{0\}\). We find that g(0,λ) is finite for every λ in some neighborhood of 0. Consequently, \(w\in L_{c}^{\varphi}\). Since \(w\in L_{\widetilde{c}}^{\varphi}\) is arbitrary, the inclusion \(L_{\widetilde{c}}^{\varphi}\subseteq L_{c}^{\varphi}\) follows. □

Lemma 5

If the function u is in \(\mathcal{K}_{c}^{\varphi}\) and we denote \(\widetilde{c}=c+u-\psi(u)u_{0}\), then the spaces \(L_{c}^{\varphi}\) and \(L_{\widetilde{c}}^{\varphi}\) are equal as sets.

Proof

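The inclusion \(L_{\widetilde{c}}^{\varphi}\subseteq L_{c}^{\varphi}\) follows from Proposition 4, because \(\widetilde{c}-c=u-\psi(u)u_{0}\) is in \(L_{c}^{\varphi}\). Since \(u\in\mathcal {K}_{c}^{\varphi}\), we have

\[\mathbb{E}[\boldsymbol{\varphi}(\widetilde{c}+\lambda(-u+\psi(u)u_{0}))]=\mathbb{E}[\boldsymbol{\varphi}(c+(1-\lambda)(u-\psi(u)u_{0}))]<\infty,\]

for every λ in a neighborhood of 0. Thus \(c-\widetilde {c}=-u+\psi(u)u_{0}\) belongs to \(L_{\widetilde{c}}^{\varphi}\). From Proposition 4, with the roles of c and \(\widetilde{c}\) interchanged, we obtain \(L_{c}^{\varphi}\subseteq L_{\widetilde{c}}^{\varphi}\). □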

By Lemma 5, if we denote \(c_{1}+u-\psi _{1}(u)u_{0}=\widetilde{c}=c_{2}+v-\psi_{2}(v)u_{0}\), we find that the spaces \(L_{c_{1}}^{\varphi}\), \(L_{\widetilde {c}}^{\varphi}\) and \(L_{c_{2}}^{\varphi}\) are equal as sets. In (6), the function w is in \(L_{c_{2}}^{\varphi}\) and consequently \(c_{1}-c_{2}\) is in \(L_{c_{2}}^{\varphi}\). Therefore, the transition map \(\boldsymbol {\varphi}_{c_{2}}^{-1}\circ\boldsymbol{\varphi}_{c_{1}}\) is of class \(C^{\infty}\).

Since \(\boldsymbol{\varphi}_{c_{2}}^{-1}\circ\boldsymbol{\varphi}_{c_{1}}\) is of class \(C^{\infty}\), the set \(\boldsymbol{\varphi }_{c_{1}}^{-1}(\mathcal{F}_{c_{1}}^{\varphi}\cap\mathcal {F}_{c_{2}}^{\varphi})\) is open in \(B_{c_{1}}^{\varphi}\). The φ-families \(\mathcal {F}_{c}^{\varphi}\) are maximal in the sense that if two φ-families \(\mathcal {F}_{c_{1}}^{\varphi}\) and \(\mathcal{F}_{c_{2}}^{\varphi}\) have non-empty intersection, then they coincide.

Lemma 6

For a function u in \(\mathcal{B}_{c}^{\varphi}\), denote \(\widetilde{c}=c+u-\psi(u)u_{0}\). Then \(\mathcal{F}_{c}^{\varphi }=\mathcal{F}_{\widetilde{c}}^{\varphi}\).

Proof

Let v be a function in \(\mathcal{B}_{c}^{\varphi}\). Then there exists ε>0 such that, for every λ∈(−ε,1+ε), the function \(\boldsymbol{\varphi}(c+\lambda v+(1-\lambda)u)\) is μ-integrable. Consequently, \(\boldsymbol{\varphi}(\widetilde{c}+\lambda(v-u))\) is μ-integrable for all λ∈(−ε,1+ε). Thus the difference v−u is in \(\mathcal{K}_{\widetilde{c}}^{\varphi}\) and

\[w=(v-u)-\frac{\mathbb{E}[(v-u)\boldsymbol{\varphi}_{+}'(\widetilde{c})]}{\mathbb{E}[u_{0}\boldsymbol{\varphi}_{+}'(\widetilde{c})]}u_{0}\tag{7}\]

belongs to \(\mathcal{B}_{\widetilde{c}}^{\varphi}\). Let \(\widetilde{\psi }\colon\mathcal{B}_{\widetilde{c}}^{\varphi}\rightarrow[0,\infty)\) be the normalizing function associated to \(\widetilde{c}\). Then the probability density \(\boldsymbol{\varphi}(\widetilde{c}+w-\widetilde {\psi}(w)u_{0})\) is in \(\mathcal{F}_{\widetilde{c}}^{\varphi}\). This probability density can be expressed as \(\boldsymbol{\varphi}(c+v-ku_{0})\) for a constant k. According to Proposition 3, there exists a unique ψ(v)∈ℝ such that the probability density \(\boldsymbol{\varphi}(c+v-\psi(v)u_{0})\) is in \(\mathcal{F}_{c}^{\varphi}\), so that k=ψ(v). Therefore, \(\mathcal{F}_{c}^{\varphi }\subseteq\mathcal{F}_{\widetilde{c}}^{\varphi}\).

Using the same arguments as in the previous paragraph, we obtain \(c=\widetilde{c}+w-\widetilde{\psi}(w)u_{0}\), where the function \(w\in\mathcal{B}_{\widetilde{c}}^{\varphi}\) is given in (7) with v=0. Thus \(\mathcal{F}_{\widetilde{c}}^{\varphi}\subseteq\mathcal {F}_{c}^{\varphi}\). □

By Lemma 6, if we denote \(c_{1}+u-\psi _{1}(u)u_{0}=\widetilde{c}=c_{2}+v-\psi_{2}(v)u_{0}\), then we have the equality \(\mathcal{F}_{c_{1}}^{\varphi}=\mathcal {F}_{\widetilde{c}}^{\varphi}=\mathcal{F}_{c_{2}}^{\varphi}\).

The results obtained in these lemmas are summarized in the next proposition.

Proposition 7

Let c 1,c 2:T→ℝ be measurable functions such that the probability densities p 1=φ(c 1) and p 2=φ(c 2) are in \(\mathcal{P}_{\mu}\). Suppose \(\mathcal{F}_{c_{1}}^{\varphi}\cap\mathcal{F}_{c_{2}}^{\varphi }\neq\emptyset\). Then the Musielak–Orlicz spaces \(L_{c_{1}}^{\varphi}\) and \(L_{c_{2}}^{\varphi}\) are equal as sets, and have equivalent norms. Moreover, \(\mathcal {F}_{c_{1}}^{\varphi}=\mathcal{F}_{c_{2}}^{\varphi}\).

Thus we can state:

Proposition 8

The collection \(\{(\mathcal{B}_{c}^{\varphi},\boldsymbol {\varphi}_{c})\}_{\boldsymbol{\varphi}(c)\in\mathcal{P}_{\mu}}\) satisfies (bm1)–(bm3), equipping \(\mathcal{P}_{\mu}\) with a \(C^{\infty}\)-differentiable structure.

5 Divergence

In this section we define the divergence between two probability distributions. The entities found in Information Geometry [1, 9], like the Fisher information, connections, geodesics, etc., are all derived from the divergence taken in the considered family. The divergence we will find is the Bregman divergence [2] associated to the normalizing function \(\psi\colon\mathcal{K}_{c}^{\varphi }\rightarrow[0,\infty)\). We show that our divergence does not depend on the parametrization of the φ-family \(\mathcal{F}_{c}^{\varphi}\).

Let S be a convex subset of a Banach space X. Given a convex function f:S→ℝ, the Bregman divergence \(B_{f}\colon S\times S\rightarrow[0,\infty)\) is defined as

\[B_{f}(y,x)=f(y)-f(x)-\partial_{+}f(x)(y-x),\]

for all x,y∈S, where \(\partial_{+}f(x)(h)=\lim_{t\downarrow0}(f(x+th)-f(x))/t\) denotes the right-directional derivative of f at x in the direction of h. The right-directional derivative \(\partial_{+}f(x)(h)\) exists and defines a sublinear functional. If the function f is strictly convex, the divergence satisfies \(B_{f}(y,x)=0\) if and only if x=y.
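In finite dimensions, with a differentiable f, the directional derivative reduces to an inner product with the gradient and the Bregman divergence is a few lines of code. The sketch below assumes exactly that simplification (vectors in \(\mathbb{R}^{n}\), gradient supplied by the caller) and uses two textbook choices of f; the function names are hypothetical.

```python
import math

def bregman(f, grad_f, y, x):
    """B_f(y, x) = f(y) - f(x) - <grad f(x), y - x> for differentiable f."""
    gx = grad_f(x)
    return f(y) - f(x) - sum(g * (yi - xi) for g, yi, xi in zip(gx, y, x))

def half_sq_norm(x):
    """f(x) = 0.5 * ||x||^2, whose Bregman divergence is 0.5 * ||y - x||^2."""
    return 0.5 * sum(v * v for v in x)

def half_sq_norm_grad(x):
    return list(x)

def neg_entropy(x):
    """f(x) = sum x_i log x_i, whose Bregman divergence on the simplex is KL."""
    return sum(v * math.log(v) for v in x)

def neg_entropy_grad(x):
    return [math.log(v) + 1.0 for v in x]

if __name__ == "__main__":
    print(bregman(half_sq_norm, half_sq_norm_grad, [1.0, 2.0], [0.0, -1.0]))
    p, q = [0.2, 0.3, 0.5], [0.5, 0.25, 0.25]
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))
    print(bregman(neg_entropy, neg_entropy_grad, p, q), kl)
```

The second example is the finite-dimensional shadow of the fact used later in this section, that a suitable Bregman divergence generalizes the Kullback–Leibler divergence.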

Let X and Y be Banach spaces, and U⊆X be an open set. A function f:U→Y is said to be Gâteaux-differentiable at \(x_{0}\in U\) if there exists a bounded linear map A:X→Y such that

\[\lim_{t\rightarrow0}\Bigl\Vert\frac{f(x_{0}+th)-f(x_{0})}{t}-Ah\Bigr\Vert=0,\]

for every h∈X. The Gâteaux derivative of f at \(x_{0}\) is denoted by \(A=\partial f(x_{0})\). If the limit above can be taken uniformly for every h∈X such that ∥h∥≤1, then the function f is said to be Fréchet-differentiable at \(x_{0}\). The Fréchet derivative of f at \(x_{0}\) is denoted by \(A=Df(x_{0})\).

Now we verify that \(\psi\colon\mathcal{K}_{c}^{\varphi}\rightarrow \mathbb{R}\) is a convex function. Take any \(u,v\in\mathcal{K}_{c}^{\varphi}\) such that u≠v. Clearly, the function λu+(1−λ)v is in \(\mathcal{K}_{c}^{\varphi}\), for any λ∈(0,1). By the convexity of φ(t,⋅), we can write

\[\boldsymbol{\varphi}\bigl(c+\lambda u+(1-\lambda)v-\lambda\psi(u)u_{0}-(1-\lambda)\psi(v)u_{0}\bigr)\leq\lambda\boldsymbol{\varphi}(c+u-\psi(u)u_{0})+(1-\lambda)\boldsymbol{\varphi}(c+v-\psi(v)u_{0}).\]

The right-hand side has μ-integral equal to 1. Since \(\boldsymbol{\varphi}(c+\lambda u+(1-\lambda)v-\psi(\lambda u+(1-\lambda)v)u_{0})\) also has μ-integral equal to 1, we can conclude that the following inequality holds:

\[\psi(\lambda u+(1-\lambda)v)\leq\lambda\psi(u)+(1-\lambda)\psi(v).\]

So we can define the Bregman divergence \(B_{\psi}\) associated to the normalizing function ψ.

The Bregman divergence \(B_{\psi}\colon\mathcal{B}_{c}^{\varphi}\times \mathcal{B}_{c}^{\varphi}\rightarrow[0,\infty)\) associated to the normalizing function \(\psi\colon\mathcal {B}_{c}^{\varphi}\rightarrow[0,\infty)\) is given by

\[B_{\psi}(v,u)=\psi(v)-\psi(u)-\partial_{+}\psi(u)(v-u).\]

Then we define the divergence \(D_{\psi}\colon\mathcal{B}_{c}^{\varphi }\times\mathcal{B}_{c}^{\varphi}\rightarrow[0,\infty)\) related to the φ-family \(\mathcal{F}_{c}^{\varphi}\) as

\[D_{\psi}(u,v)=B_{\psi}(v,u).\]

The entries of \(B_{\psi}\) are inverted in order that \(D_{\psi}\) corresponds in some way to the Kullback–Leibler divergence \(D_{\mathrm{KL}}(p,q)=\mathbb{E}[p\log( \frac{p}{q})]\). Assuming that φ(t,⋅) is continuously differentiable, we will find an expression for ∂ψ(u).

Lemma 9

Assume that φ(t,⋅) is continuously differentiable. For any \(u\in\mathcal{K}_{c}^{\varphi}\), the linear functional \(f_{u}\colon L_{c}^{\varphi}\rightarrow\mathbb{R}\) given by \(f_{u}(v)=\mathbb{E}[v\boldsymbol{\varphi}'(c+u)]\) is bounded.

Proof

Every function \(v\in L_{c}^{\varphi}\) with norm ∥v∥Φ,0≤1 satisfies I Φ(v)≤∥v∥Φ,0. Then we obtain

Since \(u\in\mathcal{K}_{c}^{\varphi}\), we can find λ∈(0,1) such that \(\mathbb{E}[\boldsymbol{\varphi}(c+ \frac{1}{\lambda }u)]<\infty\). We can write

Thus the absolute value of \(f_{u}(v)=\mathbb{E}[v\boldsymbol{\varphi}'(c+u)]\) is bounded by some constant for ∥v∥Φ,0≤1. □

Lemma 10

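Assume that φ(t,⋅) is continuously differentiable. Then the normalizing function \(\psi\colon\mathcal{K}_{c}^{\varphi}\rightarrow \mathbb{R}\) is Gâteaux-differentiable and

\[\partial\psi(u)v=\frac{\mathbb{E}[v\boldsymbol{\varphi}'(c+u-\psi(u)u_{0})]}{\mathbb{E}[u_{0}\boldsymbol{\varphi}'(c+u-\psi(u)u_{0})]}.\tag{8}\]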

Proof

According to Lemma 9, the expression in (8) defines a bounded linear functional. Fix functions \(u\in\mathcal {K}_{c}^{\varphi}\) and \(v\in L_{c}^{\varphi}\). In virtue of Proposition 4, we can find ε>0 such that \(\mathbb{E}[\boldsymbol{\varphi }(c+u+\lambda|v|)]<\infty\), for every λ∈[−ε,ε]. Define

\[g(\lambda,k)=\mathbb{E}[\boldsymbol{\varphi}(c+u+\lambda v-ku_{0})],\]

for any λ∈(−ε,ε) and k≥0. Since \(\mathcal{K}_{c}^{\varphi}\) is open, there exists a sufficiently small \(\alpha_{0}>0\) such that u+λv+α|v| is in \(\mathcal {K}_{c}^{\varphi}\) for all \(\alpha\in[-\alpha_{0},\alpha_{0}]\). We can write

The function in the expectation above is dominated by the μ-integrable function \(\frac{1}{\alpha_{0}}\{\boldsymbol{\varphi}(c+u+\lambda v+\alpha_{0}|v|-ku_{0})-\boldsymbol{\varphi}(c+u+\lambda v-ku_{0})\}\). By the Dominated Convergence Theorem,

and, consequently,

Since v φ′(c+u+λv−ku 0) is dominated by the μ-integrable function |v|φ′(c+u+ε|v|−ku 0), we obtain for any sequence λ n →λ,

Thus \(\frac{\partial g}{\partial\lambda}(\lambda,k)\) is continuous with respect to λ. Analogously, it can be shown that

and \(\frac{\partial g}{\partial k}(\lambda,k)\) is continuous with respect to k. The equality \(g(\lambda,k(\lambda))=\mathbb {E}[\boldsymbol{\varphi}(c+u+\lambda v-k(\lambda)u_{0})]=1\) defines k(λ)=ψ(u+λv) as an implicit function of λ. Notice that \(\frac{\partial g(0,k)}{\partial k}<0\). By the Implicit Function Theorem, the function k(λ)=ψ(u+λv) is continuously differentiable in a neighborhood of 0, and has derivative

Consequently,

Thus the expression in (8) is the Gâteaux-derivative of ψ. □

Lemma 11

Assume that φ(t,⋅) is continuously differentiable. Then the divergence D ψ does not depend on the parametrization of \(\mathcal{F}_{c}^{\varphi}\).

Proof

For any \(w\in\mathcal{B}_{c}^{\varphi}\), we denote \(\widetilde {c}=c+w-\psi(w)u_{0}\). Given \(u,v\in\mathcal{B}_{c}^{\varphi}\), select \(\widetilde {u},\widetilde{v}\in\mathcal{B}_{\widetilde{c}}^{\varphi}\) such that \(\boldsymbol{\varphi}_{\widetilde{c}}(\widetilde {u})=\boldsymbol{\varphi}_{c}(u)\) and \(\boldsymbol{\varphi}_{\widetilde{c}}(\widetilde{v})=\boldsymbol {\varphi}_{c}(v)\). Let \(\widetilde{\psi}\colon\mathcal{B}_{\widetilde{c}}^{\varphi }\rightarrow[0,\infty)\) be the normalizing function associated to \(\widetilde{c}\). These definitions provide

and

Subtracting these equations, we obtain

and, consequently,

Therefore, \(D_{\widetilde{\psi}}(\widetilde{u},\widetilde{v})=D_{\psi}(u,v)\). □

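Let \(p=\boldsymbol{\varphi}_{c}(u)\) and \(q=\boldsymbol{\varphi}_{c}(v)\), for \(u,v\in\mathcal{B}_{c}^{\varphi}\). We denote the divergence between the probability densities p and q by

\[D(p\parallel q)=D_{\psi}(u,v).\]

According to Lemma 11, D(p∥q) is well-defined if p and q are in the same φ-family. We will find an expression for D(p∥q) where p and q are given explicitly. For u=0, we have \(D(p\parallel q)=D_{\psi}(0,v)=\psi(v)\), and then

\[D(p\parallel q)=\frac{\mathbb{E}[(\boldsymbol{\varphi}^{-1}(p)-\boldsymbol{\varphi}^{-1}(q))\boldsymbol{\varphi}'(\boldsymbol{\varphi}^{-1}(p))]}{\mathbb{E}[u_{0}\boldsymbol{\varphi}'(\boldsymbol{\varphi}^{-1}(p))]}.\]

Therefore, the divergence between probability densities p and q in the same φ-family can be expressed as

\[D(p\parallel q)=\frac{\mathbb{E}\Bigl[\dfrac{\boldsymbol{\varphi}^{-1}(p)-\boldsymbol{\varphi}^{-1}(q)}{(\boldsymbol{\varphi}^{-1})'(p)}\Bigr]}{\mathbb{E}\Bigl[\dfrac{u_{0}}{(\boldsymbol{\varphi}^{-1})'(p)}\Bigr]}.\tag{9}\]

Clearly, the expectation in (9) may not be defined if p and q are not in the same φ-family. We extend the divergence in (9) by setting D(p∥q)=∞ if p and q are not in the same φ-family. With this extension, the divergence is denoted by \(D_{\varphi}\) and is called the φ-divergence. By the strict convexity of φ(t,⋅), we have the inequality \(\varphi^{-1}(t,u)-\varphi^{-1}(t,v)\geq(\varphi^{-1})'(t,u)(u-v)\) for any u,v>0, with equality if and only if u=v. Hence \(D_{\varphi}\) is always non-negative, and \(D_{\varphi}(p\parallel q)\) is equal to zero if and only if p=q.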
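The claim that the φ-divergence generalizes the Kullback–Leibler divergence can be checked numerically. The sketch below rests on two assumptions that should be read as such: it takes (9) in the form \(D(p\parallel q)=\mathbb{E}[(\varphi^{-1}(p)-\varphi^{-1}(q))/(\varphi^{-1})'(p)]\,/\,\mathbb{E}[u_{0}/(\varphi^{-1})'(p)]\), as reconstructed from the derivation for u=0, and it works on a finite measure space with positive weights. The name `phi_divergence` and its parameters are hypothetical.

```python
import math

def phi_divergence(p, q, u0, weights, phi_inv, phi_inv_prime):
    """Ratio of expectations in the assumed form of (9), on a finite space."""
    num = sum(w * (phi_inv(pi) - phi_inv(qi)) / phi_inv_prime(pi)
              for pi, qi, w in zip(p, q, weights))
    den = sum(w * u0i / phi_inv_prime(pi)
              for pi, u0i, w in zip(p, u0, weights))
    return num / den

if __name__ == "__main__":
    weights = [0.2, 0.3, 0.5]   # mu of each atom
    p = [2.0, 1.0, 0.6]         # densities with E[p] = E[q] = 1
    q = [0.5, 1.0, 1.2]
    u0 = [1.0, 1.0, 1.0]
    # phi = exp: phi^{-1} = log and (phi^{-1})'(x) = 1/x.
    d = phi_divergence(p, q, u0, weights, math.log, lambda x: 1.0 / x)
    kl = sum(w * pi * math.log(pi / qi) for w, pi, qi in zip(weights, p, q))
    print(d, kl)
```

With φ=exp and \(u_{0}=1\) the denominator is \(\mathbb{E}[p]=1\) and the numerator is \(\mathbb{E}[p\log(p/q)]\), so the two printed values should agree up to rounding.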

Example 12

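With the variable κ-exponential \(\exp_{\kappa}(t,u)=\exp_{\kappa(t)}(u)\) in the place of φ(t,u), whose inverse \(\varphi^{-1}(t,u)\) is the variable κ-logarithm \(\ln_{\kappa}(t,u)=\ln_{\kappa(t)}(u)\), we rewrite (9) as

\[D(p\parallel q)=\frac{\mathbb{E}\Bigl[\dfrac{\ln_{\kappa}(p)-\ln_{\kappa}(q)}{\ln_{\kappa}'(p)}\Bigr]}{\mathbb{E}\Bigl[\dfrac{u_{0}}{\ln_{\kappa}'(p)}\Bigr]},\tag{10}\]

where \(\ln_{\kappa}(p)\) denotes \(\ln_{\kappa(t)}(p(t))\). Since the κ-logarithm \(\ln_{\kappa}(u)=\frac{u^{\kappa}-u^{-\kappa}}{2\kappa}\) has derivative \(\ln_{\kappa}'(u)= \frac{1}{u} \frac{u^{\kappa }+u^{-\kappa}}{2}\), the numerator and denominator in (10) result in

\[\mathbb{E}\Bigl[\frac{\ln_{\kappa}(p)-\ln_{\kappa}(q)}{\ln_{\kappa}'(p)}\Bigr]=\mathbb{E}\Bigl[\frac{1}{\kappa}\Bigl(\frac{p^{\kappa}-p^{-\kappa}}{p^{\kappa}+p^{-\kappa}}p-\frac{q^{\kappa}-q^{-\kappa}}{p^{\kappa}+p^{-\kappa}}p\Bigr)\Bigr]\]

and

\[\mathbb{E}\Bigl[\frac{u_{0}}{\ln_{\kappa}'(p)}\Bigr]=\mathbb{E}\Bigl[\frac{2}{p^{\kappa}+p^{-\kappa}}pu_{0}\Bigr],\]

respectively. Thus (10) can be rewritten as

\[D_{\kappa}(p\parallel q)=\frac{\mathbb{E}\Bigl[\dfrac{1}{\kappa}\Bigl(\dfrac{p^{\kappa}-p^{-\kappa}}{p^{\kappa}+p^{-\kappa}}p-\dfrac{q^{\kappa}-q^{-\kappa}}{p^{\kappa}+p^{-\kappa}}p\Bigr)\Bigr]}{\mathbb{E}\Bigl[\dfrac{2}{p^{\kappa}+p^{-\kappa}}pu_{0}\Bigr]},\]

which we call the κ-divergence.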
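A small numerical experiment makes the behaviour of the κ-divergence concrete. The sketch below is an illustration only: it assumes a constant κ≠0 (so that 1/κ factors out of the expectation), a finite measure space with positive weights, and the closed form obtained by inserting \(\ln_{\kappa}\) and its derivative into the ratio of expectations, namely numerator \(\frac{1}{\kappa}\mathbb{E}[p\,((p^{\kappa}-p^{-\kappa})-(q^{\kappa}-q^{-\kappa}))/(p^{\kappa}+p^{-\kappa})]\) and denominator \(\mathbb{E}[2pu_{0}/(p^{\kappa}+p^{-\kappa})]\). The function name is hypothetical.

```python
import math

def kappa_divergence(p, q, u0, weights, kappa):
    """kappa-divergence for constant kappa != 0 on a finite measure space."""
    num = 0.0
    den = 0.0
    for pi, qi, u0i, w in zip(p, q, u0, weights):
        s = pi ** kappa + pi ** (-kappa)
        dp = pi ** kappa - pi ** (-kappa)
        dq = qi ** kappa - qi ** (-kappa)
        num += w * pi * (dp - dq) / s      # numerator, up to the factor 1/kappa
        den += w * 2.0 * pi * u0i / s      # denominator
    return num / (kappa * den)

if __name__ == "__main__":
    weights = [0.2, 0.3, 0.5]
    p = [2.0, 1.0, 0.6]     # densities with E[p] = E[q] = 1
    q = [0.5, 1.0, 1.2]
    u0 = [1.0, 1.0, 1.0]
    kl = sum(w * pi * math.log(pi / qi) for w, pi, qi in zip(weights, p, q))
    for kappa in (0.5, 0.1, 1e-3):
        print(kappa, kappa_divergence(p, q, u0, weights, kappa), kl)
```

As κ shrinks, the printed values should approach the Kullback–Leibler divergence in the last column, consistent with the κ-exponential reducing to the ordinary exponential at κ=0.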

References

[1] Amari, S., Nagaoka, H.: Methods of Information Geometry. Translations of Mathematical Monographs, vol. 191. Am. Math. Soc., Providence (2000). Translated from the 1993 Japanese original by Daishi Harada

[2] Bregman, L.M.: The relaxation method of finding the common point of convex sets and its application to the solution of problems in convex programming. U.S.S.R. Comput. Math. Math. Phys. 7(3), 200–217 (1967)

[3] Cena, A., Pistone, G.: Exponential statistical manifold. Ann. Inst. Stat. Math. 59(1), 27–56 (2007)

[4] Gibilisco, P., Riccomagno, E., Rogantin, M.P., Wynn, H.P. (eds.): Algebraic and Geometric Methods in Statistics. Cambridge University Press, Cambridge (2010)

[5] Grasselli, M.R.: Dual connections in nonparametric classical information geometry. Ann. Inst. Stat. Math. 62(5), 873–896 (2010)

[6] Kaniadakis, G.: Statistical mechanics in the context of special relativity. Phys. Rev. E 66(5), 056125 (2002)

[7] Krasnosel’skiĭ, M.A., Rutickiĭ, J.B.: Convex Functions and Orlicz Spaces. Noordhoff, Groningen (1961). Translated from the first Russian edition by Leo F. Boron

[8] Lang, S.: Differential and Riemannian Manifolds. Graduate Texts in Mathematics, vol. 160, 3rd edn. Springer, New York (1995)

[9] Murray, M.K., Rice, J.W.: Differential Geometry and Statistics. Monographs on Statistics and Applied Probability, vol. 48. Chapman & Hall, London (1993)

[10] Musielak, J.: Orlicz Spaces and Modular Spaces. Lecture Notes in Mathematics, vol. 1034. Springer, Berlin (1983)

[11] Naudts, J.: Generalised Thermostatistics. Springer, London (2011)

[12] Petz, D.: Quantum Information Theory and Quantum Statistics. Theoretical and Mathematical Physics. Springer, Berlin (2008)

[13] Pistone, G.: κ-exponential models from the geometrical viewpoint. Eur. Phys. J. B 70(1), 29–37 (2009)

[14] Pistone, G., Rogantin, M.P.: The exponential statistical manifold: mean parameters, orthogonality and space transformations. Bernoulli 5(4), 721–760 (1999)

[15] Pistone, G., Sempi, C.: An infinite-dimensional geometric structure on the space of all the probability measures equivalent to a given one. Ann. Stat. 23(5), 1543–1561 (1995)

[16] Rao, M.M., Ren, Z.D.: Theory of Orlicz Spaces. Monographs and Textbooks in Pure and Applied Mathematics, vol. 146. Dekker, New York (1991)

Acknowledgements

We are indebted to the anonymous referees for significant comments and suggestions leading to the current version. This work received financial support from CAPES—Coordenação de Aperfeiçoamento de Pessoal de Nível Superior.


Cite this article

Vigelis, R.F., Cavalcante, C.C. On φ-Families of Probability Distributions. J Theor Probab 26, 870–884 (2013). https://doi.org/10.1007/s10959-011-0400-5
