Abstract

Analyzing how a local optimum of an optimization problem behaves, and whether it remains stable, when small perturbations are added to the objective functions is an important consideration in optimization. The tilt stability of a local minimum in a scalar optimization problem is a well-studied version of the Lipschitzian stability condition for local minima. In this paper, we define a new concept of stability pertinent to the study of multiobjective optimization problems. We prove that our new concept of stability is equivalent to tilt stability in the scalar case, i.e., when there is a single objective function. We then use our new notions of stability to establish new necessary and sufficient conditions under which locally strictly efficient solutions of a multiobjective optimization problem change only slightly when correspondingly small perturbations are added to the objective functions.


1 Introduction

Analyzing the sensitivity and stability of an optimal solution when small perturbations are introduced into an optimization problem is an important topic in optimization. In particular, the Lipschitzian stability of locally optimal solutions with respect to small perturbations is a well-studied concept. Several variants of Lipschitzian stability have been studied in [1,2,3,4,5]. A well-known notion of Lipschitzian stability is the tilt stability of a local minimizer, which was introduced by Poliquin and Rockafellar [6] in the context of scalar optimization. This notion has since been studied extensively in [6,7,8,9,10], and this work has led to necessary and sufficient conditions for the tilt stability of locally optimal solutions. In particular, the second-order subdifferential introduced by Mordukhovich in [11, 12] is employed in [6,7,8,9,10] as a tool for deriving exact criteria for the tilt stability of local minimizers.

Robust optimization is another popular and practical approach to optimization problems whose parameters are uncertain. Robustness has been studied from several points of view in [13,14,15,16,17,18,19,20,21,22]. Among the available notions of robustness, in this article we adopt the definition given in [19, 20]: an efficient point of a multiobjective optimization problem is robust if it remains efficient when small linear terms are added to the objective functions.

In Sect. 2, we review preliminary concepts that are used throughout the rest of the article. In Sect. 3, we introduce a new notion of stability in multiobjective optimization, modeled on tilt stability in scalar optimization, and we establish relations between the two concepts. Namely, we show that a tilt-stable local minimizer of the weighted sum problem is a stable locally efficient point of the multiobjective optimization problem. We also present sufficient conditions for stable locally efficient points based on specific properties of the objective functions, including linearity, convexity, differentiability, and Lipschitz continuity. Furthermore, we relate robust efficient solutions to our notion of stability and derive new necessary and sufficient conditions in this setting. Finally, we illustrate the new results and our methodology with a few representative examples. We conclude the article in Sect. 4 with some remarks and suggestions for future research building on these results.

2 Preliminaries

In this section, we review some basic notions, notation, and preliminary results in variational analysis, scalar optimization, and multiobjective optimization that are needed to state and prove our new results in Sect. 3. For detailed treatments of these topics, we refer the reader to Borwein and Zhu [23], Rockafellar and Wets [5], and Mordukhovich [4, 24].

2.1 Notation, Definitions and Conventions

For any \(\delta > 0\), the (open) \(\delta \)-neighborhood of a point \({\bar{x}} \in {\mathbb {R}}^n\) is the set

$$\begin{aligned} B({\bar{x}},\delta ) := \{ x \in {\mathbb {R}}^{n} : \Vert x - {\bar{x}} \Vert < \delta \}. \end{aligned}$$

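For any set \(\varOmega \subseteq {\mathbb {R}}^{n}\), we denote the closure of \(\varOmega \) by \({\text {cl}}(\varOmega )\). The conical hull (or positive hull) of \(\varOmega \) is the smallest convex cone containing \(\varOmega \), namely

$$\begin{aligned} \mathrm{Pos}(\varOmega ) := \Big \{ \sum _{i=1}^{k} t_{i} \omega _{i} : k \in {\mathbb {N}}, \ t_{i} \ge 0, \ \omega _{i} \in \varOmega \Big \}. \end{aligned}$$

For any collection of nonempty convex sets \(\varOmega _{1} , \ldots , \varOmega _{k} \subseteq {\mathbb {R}}^{n} \), it follows directly from this definition that

$$\begin{aligned} \mathrm{Pos}\Big ( \bigcup _{i=1}^{k} \varOmega _{i} \Big ) = \Big \{ \sum _{i=1}^{k} t_{i} \omega _{i} : t_{i} \ge 0, \ \omega _{i} \in \varOmega _{i} \Big \}. \end{aligned}$$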

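We adopt the following conventions to compare two vectors \(x,y\in {\mathbb {R}}^{n}\):

$$\begin{aligned} x \leqq y&\iff x_{i} \le y_{i}, \ i = 1,\ldots ,n,\\ x \le y&\iff x \leqq y \text { and } x \ne y,\\ x < y&\iff x_{i} < y_{i}, \ i = 1,\ldots ,n. \end{aligned}$$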

Suppose that \(\Vert . \Vert \) is a vector norm on both \({\mathbb {R}}^{n}\) and \({\mathbb {R}}^{p}\). Then the mapping \(|\Vert . \Vert |\) on \({\mathbb {R}}^{p\times n}\) defined by

$$\begin{aligned} |\Vert A \Vert | := \max _{x \ne 0} \frac{\Vert Ax \Vert }{\Vert x \Vert } = \max _{\Vert x \Vert = 1} \Vert Ax \Vert \end{aligned}$$

is called the matrix norm induced by \(\Vert . \Vert \) on the vector space \({\mathbb {R}}^{p \times n}\) of all real \(p \times n\) matrices.

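In this paper, we primarily use the matrix norm induced by the infinity norm \(\Vert x \Vert _{\infty } := \max _{1 \le j \le n} |x_{j}|\), which is the maximum absolute row sum:

$$\begin{aligned} |\Vert A \Vert |_{\infty } = \max _{1 \le i \le p} \sum _{j=1}^{n} |a_{ij}|. \end{aligned}$$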

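If \(|\Vert . \Vert |_{\gamma }\) denotes the standard matrix \(\gamma \)-norm on \({\mathbb {R}}^{p \times n}\) for \(1 \le \gamma < \infty \), then, since all norms on a finite-dimensional space are equivalent, there are positive constants \(C_1(\gamma )\) and \(C_2(\gamma ) \) such that

$$\begin{aligned} C_{1}(\gamma )\, |\Vert A \Vert |_{\gamma } \le |\Vert A \Vert |_{\infty } \le C_{2}(\gamma )\, |\Vert A \Vert |_{\gamma } \quad \text {for all } A \in {\mathbb {R}}^{p \times n}. \end{aligned}$$

Hence the results of this article, which are stated for \(|\Vert . \Vert |_{\infty }\), remain valid, with suitably rescaled constants, when another matrix norm is a more natural choice.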
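The matrix norm induced by \(\Vert . \Vert _{\infty }\) is the maximum absolute row sum of A. As a quick numerical illustration (a sketch in Python/NumPy; the helper `induced_inf_norm` and the random test matrix are our own choices, not part of the article), the ratio \(\Vert Ax \Vert _{\infty } / \Vert x \Vert _{\infty }\) never exceeds this row sum and attains it at the sign vector of the row with the largest absolute sum.

```python
import numpy as np

def induced_inf_norm(A):
    """|||A|||_inf = max_i sum_j |a_ij| (maximum absolute row sum)."""
    return np.abs(A).sum(axis=1).max()

rng = np.random.default_rng(0)
A = rng.normal(size=(3, 4))  # a p x n matrix with p = 3, n = 4

# The supremum of ||Ax||_inf / ||x||_inf is attained at x = sign of the row
# with the largest absolute row sum (then ||x||_inf = 1).
i_max = np.abs(A).sum(axis=1).argmax()
x_star = np.sign(A[i_max])
ratio_at_x_star = np.abs(A @ x_star).max() / np.abs(x_star).max()

# Random test vectors never exceed the induced norm.
X = rng.normal(size=(4, 1000))
ratios = np.abs(A @ X).max(axis=0) / np.abs(X).max(axis=0)

print(induced_inf_norm(A), ratio_at_x_star, ratios.max())
```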

2.2 Some Basics of Variational Analysis

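Consider the optimization problem

$$\begin{aligned} \min _{x \in X} \ g(x), \end{aligned}\tag{1}$$

where \(g:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) is a real-valued function and \(X \subseteq {\mathbb {R}}^n\) is the set of feasible solutions of (1). Let \(\delta _{X}\) denote the indicator function of X,

$$\begin{aligned} \delta _{X}(x) := {\left\{ \begin{array}{ll} 0, & x \in X,\\ +\infty , & x \notin X. \end{array}\right. } \end{aligned}$$

Problem (1) is equivalent to minimizing the extended real-valued function \( f : {\mathbb {R}}^{n} \longrightarrow \overline{{\mathbb {R}}}\) defined by

$$\begin{aligned} f(x) := g(x) + \delta _{X}(x). \end{aligned}$$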

The following proposition gives a necessary condition for \({\bar{x}} \in {\mathbb {R}}^n\) to be a local minimizer and, under convexity, a sufficient one.

Proposition 2.1

Let \(f : {\mathbb {R}}^{n} \rightarrow {\mathbb {R}}\) be differentiable at \({\bar{x}}\). If there is some \(\delta > 0\) such that \({\bar{x}}\) is an optimal solution of the problem

$$\begin{aligned} \min _{x \in B({\bar{x}},\delta )} f(x), \end{aligned}\tag{3}$$

then \(\nabla f({\bar{x}}) = 0\). Conversely, if f(x) is convex on \(B({\bar{x}},\delta )\) and \(\nabla f({\bar{x}}) = 0\), then \({\bar{x}}\) is an optimal solution of Problem (3).

Proof

See Theorem 4.1.2 and its corollary in [25]. \(\square \)

In scalar optimization, the Lipschitzian stability of locally optimal solutions when a small linear term is added to the objective function is of essential importance in applications. Tilt stability of local minimizers, introduced by Poliquin and Rockafellar in [6], is a version of this Lipschitzian stability:

Definition 2.1

(Tilt Stability of Local Minimizers) A point \({\bar{x}}\) is a tilt-stable local minimizer of the function \(f: {\mathbb {R}}^{n} \rightarrow \overline{{\mathbb {R}}}\) if and only if \(f({\bar{x}}) \) is finite and there exist \(\delta > 0\) and \(\epsilon > 0\) such that the mapping

$$\begin{aligned} M : v \mapsto \mathop {\mathrm{argmin}}\limits _{x \in {\text {cl}}(B({\bar{x}},\delta ))} \{ f(x) - \langle v , x \rangle \} \end{aligned}$$

is single-valued and Lipschitzian on \(\{ v \in {\mathbb {R}}^{n} : \Vert v \Vert < \epsilon \}\) with \( M(0) = \{ {\bar{x}} \} \).

Proposition 2.2

If the mapping M in Definition 2.1 is single-valued and Lipschitzian on \(\{ v \in {\mathbb {R}}^{n} : \Vert v \Vert < \epsilon \}\), then the value mapping

$$\begin{aligned} v \mapsto f(M(v)) \end{aligned}$$

is Lipschitzian there as well.

Proof

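Suppose that the mapping M is single-valued and Lipschitzian with constant \(\gamma \) for any \(v \in {\mathbb {R}}^{n}\) with \( \Vert v \Vert < \epsilon \). For two arbitrary vectors \(v_1\) and \(v_2\) with \( \Vert v_{1} \Vert , \Vert v_{2} \Vert < \epsilon \), let \(M(v_{1}) = \{ x_{1} \}\) and \(M(v_{2}) = \{ x_{2} \}\). Since \(x_{1}\) and \(x_{2}\) minimize \(f - \langle v_{1} , \cdot \rangle \) and \(f - \langle v_{2} , \cdot \rangle \), respectively, over \({\text {cl}}(B({\bar{x}},\delta ))\), we have

$$\begin{aligned} f(x_{1}) - \langle v_{1} , x_{1} \rangle \le f(x_{2}) - \langle v_{1} , x_{2} \rangle , \qquad f(x_{2}) - \langle v_{2} , x_{2} \rangle \le f(x_{1}) - \langle v_{2} , x_{1} \rangle . \end{aligned}$$

Without any loss of generality, suppose that \( f(x_{1}) \le f(x_{2})\). By the second inequality above, the Cauchy-Schwarz inequality (for the Euclidean norm), and the Lipschitzian property of the mapping M with constant \(\gamma \), we have

$$\begin{aligned} 0 \le f(x_{2}) - f(x_{1}) \le \langle v_{2} , x_{2} - x_{1} \rangle \le \Vert v_{2} \Vert \, \Vert x_{2} - x_{1} \Vert \le \epsilon \gamma \Vert v_{1} - v_{2} \Vert . \end{aligned}$$

\(\square \)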

Most of the results in this article rely on generalized differentiation from variational analysis. We next recall the first-order subdifferentials of extended real-valued functions.

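Let \(f : {\mathbb {R}}^{n} \rightarrow \overline{{\mathbb {R}}}\) with \(f ({\bar{x}})\) finite. The regular subdifferential of f at \({\bar{x}}\) is defined as

$$\begin{aligned} {\hat{\partial }} f({\bar{x}}) := \Big \{ v \in {\mathbb {R}}^{n} : \liminf _{x \rightarrow {\bar{x}}} \frac{f(x) - f({\bar{x}}) - \langle v , x - {\bar{x}} \rangle }{\Vert x - {\bar{x}} \Vert } \ge 0 \Big \}. \end{aligned}$$

Correspondingly, the Mordukhovich (basic, or limiting) subdifferential of f at \({\bar{x}}\) is defined as

$$\begin{aligned} \partial f({\bar{x}}) := \{ v \in {\mathbb {R}}^{n} : \exists \, x_{k} \rightarrow {\bar{x}}, \ f(x_{k}) \rightarrow f({\bar{x}}), \ v_{k} \in {\hat{\partial }} f(x_{k}), \ v_{k} \rightarrow v \}. \end{aligned}$$

If f is continuously differentiable around \({\bar{x}}\), then \({\hat{\partial }} f({\bar{x}}) = \partial f({\bar{x}}) = \{ \nabla f({\bar{x}}) \}\). If f is convex, then both coincide with the subdifferential of convex analysis:

$$\begin{aligned} {\hat{\partial }} f({\bar{x}}) = \partial f({\bar{x}}) = \{ v \in {\mathbb {R}}^{n} : f(x) \ge f({\bar{x}}) + \langle v , x - {\bar{x}} \rangle \ \text {for all } x \in {\mathbb {R}}^{n} \}. \end{aligned}$$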

If f is nonsmooth and nonconvex, \({\hat{\partial }} f ({\bar{x}})\) may be empty: for \(f(x) = -|x|\) on \({\mathbb {R}}\), we have \({\hat{\partial }} f(0) = \emptyset \), whereas \(\partial f(0) = \{ -1 , 1 \}\).
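The difference-quotient condition defining the regular subdifferential can be probed numerically in one dimension. The sketch below (Python/NumPy; the helper `is_regular_subgradient` and its sampling scheme are our own illustrative choices) replaces the liminf by a minimum over points approaching \({\bar{x}}\) from both sides, so it is only a heuristic, not a proof. For \(f(x) = |x|\) it recovers the interval \([-1, 1]\) at 0, while for \(f(x) = -|x|\) no candidate passes, illustrating that the regular subdifferential can be empty.

```python
import numpy as np

def is_regular_subgradient(f, v, xbar=0.0, tol=1e-9):
    """Heuristic 1-D test of v in the regular subdifferential of f at xbar.

    Evaluates (f(x) - f(xbar) - v (x - xbar)) / |x - xbar| at points approaching
    xbar from both sides and requires every quotient to be >= -tol, a
    finite-sample stand-in for the liminf condition.
    """
    h = np.geomspace(1e-8, 1e-3, 50)  # small positive step sizes
    xs = xbar + np.concatenate([-h, h])
    quotients = (f(xs) - f(xbar) - v * (xs - xbar)) / np.abs(xs - xbar)
    return bool(quotients.min() >= -tol)

candidates = np.linspace(-3.0, 3.0, 601)

# f(x) = |x|: the regular subgradients at 0 form [-1, 1].
abs_subgrad = [v for v in candidates if is_regular_subgradient(np.abs, v)]

# f(x) = -|x|: no candidate passes, so the regular subdifferential at 0 is empty.
neg_abs_subgrad = [
    v for v in candidates if is_regular_subgradient(lambda x: -np.abs(x), v)
]

print(min(abs_subgrad), max(abs_subgrad), len(neg_abs_subgrad))
```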

For a \(C^{2}\)-smooth function f, a necessary and sufficient condition for a stationary point \({\bar{x}}\) to be a tilt-stable local minimizer was established in [6, Prop. 1.2]; we recall it in the next proposition. According to this result, the tilt stability of a \(C^{2}\)-smooth function f at \({\bar{x}}\) reduces to the positive definiteness of the Hessian matrix \(\nabla ^2 f({\bar{x}})\).

Proposition 2.3

Let f be a \(C^{2}\)-smooth function. If \( \nabla f ( {\bar{x}} ) = 0\), then \({\bar{x}}\) is a tilt-stable local minimum of f if and only if \(\nabla ^{2} f ({\bar{x}})\) is positive definite.
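Proposition 2.3 can be illustrated on a hypothetical quadratic \(f(x) = \frac{1}{2} x^{t} H x\) with H positive definite and \({\bar{x}} = 0\). For small \(\Vert v \Vert \), the minimizer of \(f(x) - \langle v , x \rangle \) over a ball around 0 (say of hypothetical radius \(\delta = 0.1\)) lies in the interior, so \(M(v) = H^{-1} v\), which is Lipschitzian with constant \(\Vert H^{-1} \Vert _{2}\). The sketch below (Python/NumPy, Euclidean norms, data chosen only for illustration) checks this on random tilts, together with the bound \(|f(M(v_{1})) - f(M(v_{2}))| \le \epsilon \gamma \Vert v_{1} - v_{2} \Vert \) for the value mapping \(v \mapsto f(M(v))\).

```python
import numpy as np

# Hypothetical C^2 example (not from the article): f(x) = 0.5 x^T H x at xbar = 0,
# where grad f(0) = 0 and the Hessian is H.
H = np.array([[2.0, 0.5], [0.5, 1.0]])
eigenvalues = np.linalg.eigvalsh(H)  # all positive: H is positive definite

def f(x):
    return 0.5 * x @ H @ x

def M(v):
    """Minimizer of f(x) - <v, x>. For small ||v|| it lies inside the ball around 0,
    so the ball constraint is inactive and the minimizer solves H x = v."""
    return np.linalg.solve(H, v)

gamma = np.linalg.norm(np.linalg.inv(H), 2)  # spectral norm of H^{-1}

rng = np.random.default_rng(1)
eps = 1e-2
worst_M, worst_fM = 0.0, 0.0
for _ in range(1000):
    v1, v2 = rng.uniform(-eps / 2, eps / 2, size=(2, 2))  # each ||v|| < eps
    dv = np.linalg.norm(v1 - v2)
    worst_M = max(worst_M, np.linalg.norm(M(v1) - M(v2)) / dv)
    worst_fM = max(worst_fM, abs(f(M(v1)) - f(M(v2))) / dv)

print(eigenvalues, gamma, worst_M, worst_fM)
```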

2.3 Some Basics of Multiobjective Optimization

Consider the following multiobjective programming (MOP) problem:

$$\begin{aligned} \min _{x \in X} \ f(x) := (f_{1}(x), f_{2}(x), \ldots , f_{p}(x)), \end{aligned}\tag{4}$$

where \(X \subseteq {\mathbb {R}}^n\) is the feasible set and \(f_i\), \(i=1,2,\ldots ,p\), are the objective functions of the MOP problem. Since the objective functions usually conflict with one another, there is typically no feasible solution that minimizes all of them simultaneously. Therefore, in MOP the notions of efficient and weakly efficient solutions replace that of an optimal solution.

Definition 2.2

\({\overline{x}} \in X\) is a strictly efficient solution of Problem (4) iff there is no \(x \in X\) with \(x\ne {\overline{x}}\) such that \(f(x) \leqq f({\overline{x}})\). It is a locally strictly efficient solution iff, for some \(\delta >0\), there is no \(x \in X \cap B({\bar{x}}, \delta )\) with \(x\ne {\bar{x}}\) such that \(f(x) \leqq f({\overline{x}})\).

Definition 2.3

\({\overline{x}} \in X\) is a Pareto efficient solution of Problem (4) iff there is no \(x \in X\) such that \(f(x) \le f({\overline{x}})\). It is a locally Pareto efficient solution iff, for some \(\delta >0\), there is no \(x \in X \cap B({\bar{x}}, \delta )\) such that \(f(x) \le f({\overline{x}})\).

Definition 2.4

\({\overline{x}} \in X\) is a weakly efficient solution of Problem (4) iff there is no \(x \in X\) such that \(f(x) < f({\overline{x}})\). It is a locally weakly efficient solution iff, for some \(\delta >0\), there is no \(x \in X \cap B({\bar{x}}, \delta )\) such that \(f(x) < f({\overline{x}})\).

We denote by \(X_{SE}\), \(X_{E}\), and \(X_{WE}\) the sets of strictly, Pareto, and weakly efficient solutions of (4), respectively.

Remark 2.1

Definitions 2.2, 2.3, and 2.4 imply that every strictly efficient solution is Pareto efficient and every Pareto efficient solution is weakly efficient; the converse implications do not hold in general.

Under the conditions of Proposition 2.4 below, weakly efficient, Pareto efficient, and strictly efficient solutions of Problem (4) can be obtained by solving the single-objective optimization problem

$$\begin{aligned} \min _{x \in X} \ \sum _{k=1}^{p} \lambda _{k} f_{k}(x), \end{aligned}\tag{5}$$

where \(\lambda _{k} \ge 0\), \(k=1,2,\ldots ,p\), and \(\sum _{k=1}^{p}\lambda _k=1\). Problem (5) is called the weighted sum scalarization of the MOP problem (4).

Proposition 2.4

Suppose that \(x^{0}\) is an optimal solution of the weighted sum optimization problem (5). The following statements hold.

1. If \(\lambda \in {\mathbb {R}}^{p}_{\ge }\), then \(x^{0}\in X_{WE}.\)

2. If \(\lambda \in {\mathbb {R}}^{p}_{>}\), then \(x^{0}\in X_{E}.\)

3. If \(\lambda \in {\mathbb {R}}^{p}_{\ge }\) and \(x^{0}\) is the unique optimal solution of (5), then \(x^{0}\in X_{SE}\),

where \({\mathbb {R}}^{p}_{\ge } := \{x \in {\mathbb {R}}^{p} : x \ge 0 \}\) and \({\mathbb {R}}^{p}_{>} := \{x \in {\mathbb {R}}^{p} : x > 0 \}\).

Conversely, let all \(f_{i}\), \(i=1,2,\ldots ,p\), be convex functions and X be a convex set. If \( {\bar{x}}\in X_{WE}\), then there is \( \lambda = ( \lambda _{1} ,\ldots , \lambda _{p} ) \in {\mathbb {R}}^{p}_{\ge } \) such that \({\bar{x}}\) is an optimal solution of Problem (5).

Proof

Refer to [26]. \(\square \)
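As a small illustration of Proposition 2.4, consider the hypothetical convex bi-objective problem with \(X = {\mathbb {R}}^{2}\), \(f_{1}(x) = \Vert x - a \Vert ^{2}\), and \(f_{2}(x) = \Vert x - b \Vert ^{2}\). For \(\lambda \in {\mathbb {R}}^{2}_{>}\) with \(\lambda _{1} + \lambda _{2} = 1\), the weighted sum (5) has the unique minimizer \(x^{0} = \lambda _{1} a + \lambda _{2} b\), so \(x^{0}\) is strictly efficient. The Python/NumPy sketch below computes \(x^{0}\) and checks, on random samples only (not a proof), that no sampled point dominates it in the order \(\leqq \).

```python
import numpy as np

# Hypothetical bi-objective convex problem on X = R^2:
# f_1(x) = ||x - a||^2, f_2(x) = ||x - b||^2.
a = np.array([0.0, 0.0])
b = np.array([1.0, 2.0])

def F(x):
    return np.array([np.sum((x - a) ** 2), np.sum((x - b) ** 2)])

def weighted_sum_minimizer(lam):
    """Minimizer of lam[0] f_1 + lam[1] f_2 with lam >= 0, lam[0] + lam[1] = 1.

    Setting the gradient 2 lam[0] (x - a) + 2 lam[1] (x - b) to zero gives
    the convex combination lam[0] a + lam[1] b.
    """
    return lam[0] * a + lam[1] * b

lam = np.array([0.3, 0.7])
x0 = weighted_sum_minimizer(lam)

# Sampled check of strict efficiency: no sample y != x0 with F(y) <= F(x0) componentwise.
rng = np.random.default_rng(2)
samples = x0 + rng.normal(scale=0.5, size=(5000, 2))
dominating = [y for y in samples if np.all(F(y) <= F(x0)) and not np.allclose(y, x0)]

print(x0, len(dominating))
```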

3 A New Concept of Stability in Multiobjective Optimization

In this section, we introduce a new concept of stability in multiobjective optimization and study its properties. Consider the multiobjective optimization problem (4), where \(f_k: X \subseteq {\mathbb {R}}^n\rightarrow {\mathbb {R}}\), \(k=1,2,\ldots , p\), are real-valued objective functions. Using the indicator function \(\delta _X(x)\), (4) can be written as the unconstrained problem

$$\begin{aligned} \min _{x \in {\mathbb {R}}^{n}} \ F(x) := \big ( f_{1}(x) + \delta _{X}(x), \ldots , f_{p}(x) + \delta _{X}(x) \big ). \end{aligned}$$

In the above problem, \(F:{\mathbb {R}}^n\rightarrow \overline{{\mathbb {R}}}^{p} = ]- \infty , \infty ] \times \ldots \times \ ]- \infty , \infty ]\) is a vector-valued function with extended real components. If, for some index \(j\in \{1,2,\ldots ,p\}\), \(f_{j}({\bar{x}})\) is not finite at \({\bar{x}} \in {\mathbb {R}}^n\), then \( F({\bar{x}}) \) is not finite, and so \({\bar{x}}\) cannot be a candidate for a Pareto efficient (or weakly efficient) solution.

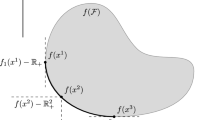

The concept of stability of locally efficient solutions introduced below is equivalent to tilt stability when there is a single objective function (\(p=1\)). To define it, we study the multiobjective optimization problem

$$\begin{aligned} \min _{x \in {\mathbb {R}}^{n}} \ F(x) := (f_{1}(x), f_{2}(x), \ldots , f_{p}(x)), \end{aligned}$$

where \(f_{i} : {\mathbb {R}}^{n} \rightarrow \overline{{\mathbb {R}} }, \ i = 1, 2 ,\ldots , p\), are extended real-valued functions and \({\bar{x}}\) is a strictly efficient solution.

To discuss stability, we perturb F by subtracting a linear mapping with sufficiently small norm from the objective functions:

$$\begin{aligned} \min _{x \in {\text {cl}}(B({\bar{x}},\delta ))} \ F(x) - Ax = \big ( f_{1}(x) - v_{1}^{t} x , \ldots , f_{p}(x) - v_{p}^{t} x \big ), \end{aligned}$$

where \(\delta \) is a positive real number, \(v_{1}^{t} , v_{2}^{t} ,\ldots , v_{p}^{t}\) (with \(v_{i} \in {\mathbb {R}}^{n}\)) are the rows of the matrix \(A_{p \times n}\), and \( \Vert | A \Vert | < \epsilon \) for a sufficiently small number \(\epsilon > 0\).

The essence of tilt stability is the Lipschitzian behavior of the solution mapping of the perturbed problems; the single-valuedness of the local minimizer comes together with this Lipschitzian property. Our definition, however, does not require the solution mapping of the perturbed problems to be Lipschitzian, because that requirement would force the efficient solution of a multiobjective optimization problem to be unique, which is not reasonable: in continuous multiobjective optimization, the set of efficient solutions is usually uncountable. We therefore use strict efficiency instead of unique efficiency in our concept of stability of locally efficient solutions. In other words, strict efficiency in multiobjective optimization plays the role of unique optimality in scalar optimization.

Accordingly, we present a new notion of stability in multiobjective optimization. Throughout the paper, \(M_{(\delta , F)} ({\bar{x}},A)\) denotes the optimization problem

$$\begin{aligned} \min _{x \in {\text {cl}}(B({\bar{x}},\delta ))} \ F(x) - Ax, \end{aligned}$$

where \(\delta > 0\) and A is a \(p \times n\) matrix. To extend the notion of tilt stability from scalar optimization to multiobjective optimization, we introduce the following definition.

Definition 3.1

\({\bar{x}}\) is a stable locally efficient point of the function \(F : {\mathbb {R}}^{n} \rightarrow \overline{{\mathbb {R}}}^{p} \), iff \(F({\bar{x}})\) is finite and there exist \(\delta > 0\), \(\epsilon > 0\), \(\gamma \ge 0\), and \( \lambda \ge 0\) such that

(a) \({\bar{x}} \) is a strictly efficient solution of problem \( M_{\delta ,F} ({\bar{x}} , 0_{p \times n})\), and for any \(p \times n\) matrix A with \(\Vert | A \Vert | < \epsilon \), there is a strictly efficient solution x of problem \(M_{\delta ,F} ({\bar{x}} , A)\) such that

$$\begin{aligned} \Vert x - {\bar{x}} \Vert \le \gamma \Vert | A \Vert |. \end{aligned}$$

(b) For any two \(p \times n\) matrices \(A_{1}\) and \(A_{2}\) with \(\Vert | A_{1} \Vert | , \Vert | A_{2} \Vert | < \epsilon \), there are strictly efficient solutions \(x_{1} \) and \( x_{2}\) of problems \(M_{\delta ,F} ({\bar{x}} , A_{1})\) and \(M_{\delta ,F} ({\bar{x}} , A_{2})\), respectively, such that

$$\begin{aligned} \Vert x_{1} - x_{2} \Vert \le \gamma \Vert | A_{1} - A_{2} \Vert |. \end{aligned}$$

(c) For any two strictly efficient solutions \(x_{1}\) and \(x_{2}\) of problems \(M_{\delta ,F} ({\bar{x}} , A_{1})\) and \(M_{\delta ,F} ({\bar{x}} , A_{2})\), respectively, with \( \Vert x_{1} - x_{2} \Vert \le \gamma \Vert | A_{1} - A_{2}\Vert | \) and \(\Vert | A_{1} \Vert | , \Vert | A_{2} \Vert | < \epsilon \), the following relation holds:

$$\begin{aligned} \Vert F(x_{1}) - F(x_{2}) \Vert \le \lambda \Vert | A_{1} - A_{2} \Vert |. \end{aligned}$$

Proposition 3.1

Stable local efficiency, as defined in Definition 3.1, is equivalent to the definition of the tilt stability of a local minimum when \(F : {\mathbb {R}}^{n} \rightarrow \overline{{\mathbb {R}}}\).

Proof

In scalar optimization (a single objective function), strict efficiency is equivalent to the existence of a unique optimal solution. When Definition 3.1 is specialized to one objective function, conditions (a) and (b) express the Lipschitzian behavior of the mapping M of Definition 2.1. Indeed, let \(A_{1}=v_{1}\) and \(A_{2}=v_{2}\) be \(1 \times n\) vectors, and let \(x_{1}\) and \(x_{2}\) be the optimal solutions of \(M_{\delta ,F} ({\bar{x}},v_{1})\) and \(M_{\delta ,F} ({\bar{x}},v_{2})\), respectively. Then condition (b) in Definition 3.1 is exactly the Lipschitzian property of M. Thus, stable local efficiency as introduced in Definition 3.1 with \(p=1\) implies tilt stability.

Conversely, by the Lipschitzian property of the mapping M in Definition 2.1 and by Proposition 2.2, conditions (a), (b), and (c) of Definition 3.1 hold directly when \(p=1\). \(\square \)

Proposition 3.2

If \({\bar{x}}\) is a stable locally efficient point of F(x) with constants \(\delta > 0\), \(\epsilon >0 \), and \(\gamma \ge 0\) as in Definition 3.1, then, after shrinking \(\epsilon \) if necessary, all relations in the definition remain true when \(\delta \) is replaced by any \({\hat{\delta }}\) with \(0< {\hat{\delta }} < \delta \).

Proof

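Suppose that \(0< {\hat{\delta }} < \delta \). Without any loss of generality (shrinking \(\epsilon \) if necessary), let

$$\begin{aligned} \gamma \epsilon \le {\hat{\delta }}, \end{aligned}$$

and let A be a \(p \times n\) matrix with \(\Vert | A \Vert | < \epsilon \). Since there is a strictly efficient solution \({\hat{x}}\) of problem \(M_{\delta ,F}({\bar{x}} , A)\) such that

$$\begin{aligned} \Vert {\hat{x}} - {\bar{x}} \Vert \le \gamma \Vert | A \Vert | \le \gamma \epsilon \le {\hat{\delta }}, \end{aligned}$$

the point \({\hat{x}}\) is feasible for problem \(M_{{\hat{\delta }},F}({\bar{x}} , A)\), whose feasible set \({\text {cl}}(B({\bar{x}},{\hat{\delta }}))\) is contained in \({\text {cl}}(B({\bar{x}},\delta ))\). Therefore, \({\hat{x}}\) is a strictly efficient solution of problem \(M_{{\hat{\delta }},F}({\bar{x}} , A)\). \(\square \)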

Proposition 3.3

If \(F(x) = ( f_{1}(x) , f_{2}(x) ,\ldots , f_{p}(x) )\), where each \(f_{i}\) is Lipschitzian with constant \(k_{i}\), \(i=1,2,\ldots ,p\), then there is \({\hat{\gamma }} \ge 0 \) such that, for all \(x_{1}\) and \(x_{2} \) with \( \Vert x_{1} - x_{2} \Vert \le \gamma |\Vert A_{1} - A_{2} \Vert | \), we have

$$\begin{aligned} \Vert F(x_{1}) - F(x_{2}) \Vert \le {\hat{\gamma }} |\Vert A_{1} - A_{2} \Vert |. \end{aligned}$$

Proof

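For any \(\wp \ge 1\) we have

$$\begin{aligned} \Vert F(x_{1}) - F(x_{2}) \Vert _{\wp } \le \Vert F(x_{1}) - F(x_{2}) \Vert _{1} = \sum _{i=1}^{p} |f_{i}(x_{1}) - f_{i}(x_{2})| \le \Big ( \sum _{i=1}^{p} k_{i} \Big ) \Vert x_{1} - x_{2} \Vert \le \gamma \Big ( \sum _{i=1}^{p} k_{i} \Big ) |\Vert A_{1} - A_{2} \Vert |, \end{aligned}$$

where \(\Vert .\Vert _\wp \) is the \(\ell ^{\wp }\)-norm. Letting \({\hat{\gamma }} := \gamma \sum _{i=1}^{p} k_{i}\) completes the proof. \(\square \)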
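The key estimate in the proof of Proposition 3.3 is \(\Vert F(x_{1}) - F(x_{2}) \Vert _{\wp } \le (\sum _{i} k_{i}) \Vert x_{1} - x_{2} \Vert \); multiplying by \(\gamma \) yields \({\hat{\gamma }} = \gamma \sum _{i} k_{i}\). The Python/NumPy sketch below checks this estimate on random pairs for hypothetical objectives \(f_{i}(x) = k_{i} \Vert x - c_{i} \Vert _{2}\), assuming the Euclidean norm on \({\mathbb {R}}^{n}\) and \(\wp \in \{1, 2, \infty \}\); it is a sanity check, not part of the argument.

```python
import numpy as np

# Hypothetical Lipschitz objectives f_i(x) = k_i * ||x - c_i||_2: each is Lipschitz
# with constant k_i with respect to the Euclidean norm on R^n.
k = np.array([1.0, 2.5, 0.5])
centers = np.array([[0.0, 0.0], [1.0, -1.0], [2.0, 3.0]])

def F(x):
    return k * np.linalg.norm(x - centers, axis=1)

rng = np.random.default_rng(3)
checks = []
for _ in range(2000):
    x1, x2 = rng.normal(size=(2, 2))
    diff = F(x1) - F(x2)
    bound = k.sum() * np.linalg.norm(x1 - x2)  # (sum_i k_i) * ||x1 - x2||
    for order in (1, 2, np.inf):
        checks.append(np.linalg.norm(diff, order) <= bound + 1e-12)

print(all(checks))
```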

Proposition 3.4

If there is \(\delta _{1} > 0\) such that each \(f_{i}\) is Lipschitzian on \(B ({\bar{x}} , \delta _{1}) \cap \mathrm{dom}F\) with constant \(k_{i}\), then, to verify the stability of the locally efficient solution \({\bar{x}}\), it suffices to check conditions (a) and (b) of Definition 3.1; condition (c) then holds with

$$\begin{aligned} \lambda := \gamma \sum _{i=1}^{p} k_{i}. \end{aligned}$$

Proof

If conditions (a) and (b) of Definition 3.1 are true at \({\bar{x}}\) with constant \(\delta > 0\), then according to Proposition 3.2, without any loss of generality, we can consider \(\delta < \delta _{1}\). From Proposition 3.3 condition (c) holds and the proof is completed. \(\square \)

Throughout this paper, we consider \( F(x) = (f_{1} (x),f_{2}(x), \ldots , f_{p}(x))\), where \(f_{i} : {\mathbb {R}}^{n} \rightarrow \overline{{\mathbb {R}}} \), \(i=1,2,\ldots ,p\). Moreover, we suppose that there is \(\delta _{1}>0\) such that each \(f_{i}\) is Lipschitzian on \(B ({\bar{x}} , \delta _{1}) \cap \mathrm{dom}F\). Furthermore, we use the norm

$$\begin{aligned} |\Vert A \Vert | := |\Vert A \Vert |_{\infty } = \max _{1 \le i \le p} \sum _{j=1}^{n} |a_{ij}|, \end{aligned}$$

where A is a \(p \times n\) matrix. Since, for each \( \wp \ge 1 \), there are \( \eta _{1} > 0 \) and \(\eta _{2} > 0 \) such that \( \eta _{1} |\Vert A \Vert |_{\wp } \le |\Vert A \Vert |_{\infty } \le \eta _{2} |\Vert A \Vert |_{\wp } \) for all A, conditions (a) and (b) of Definition 3.1 hold for \( |\Vert . \Vert |_{\wp } \) (with suitably rescaled \(\epsilon \) and \(\gamma \)) as soon as they hold for \( |\Vert . \Vert |_{\infty } \).

Lemma 3.1

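\({\hat{x}}\) is a strictly efficient solution of problem \(M_{(\delta ,F)} ({\bar{x}} , A)\) if and only if

$$\begin{aligned} \{ x \in {\text {cl}}(B({\bar{x}},\delta )) : F(x) - Ax \in F({\hat{x}}) - A{\hat{x}} - {\mathbb {R}}^{p}_{\geqq } \} = \{ {\hat{x}} \}, \end{aligned}\tag{7}$$

where \({\mathbb {R}}^{p}_{\geqq } := \{ d \in {\mathbb {R}}^{p} : d \geqq 0 \}\).

Proof

Suppose that \({\hat{x}}\) is a strictly efficient solution of problem \(M_{(\delta ,F)} ({\bar{x}} , A)\). Then there is no \( x_{1} \in {\text {cl}}(B ({\bar{x}} , \delta )) \) with \(x_{1} \ne {\hat{x}}\) such that \(F(x_{1}) - Ax_{1} \leqq F({\hat{x}}) - A {\hat{x}} \), that is, \(F(x_{1}) - Ax_{1} \notin F({\hat{x}}) - A{\hat{x}} - {\mathbb {R}}^{p}_{\geqq }\) for every such \(x_{1}\). Since \({\hat{x}}\) itself belongs to the set in (7), Relation (7) holds.

Now, suppose that Relation (7) is true and \({\hat{x}}\) is not a strictly efficient solution of problem \(M_{(\delta ,F)} ({\bar{x}} , A)\). Then, there is \( x_{1} \in {\text {cl}}(B ({\bar{x}} , \delta )) \) such that \( x_{1} \ne {\hat{x}} \) and \(F(x_{1}) - Ax_{1} \leqq F({\hat{x}}) - A {\hat{x}} \). This implies that there is \( d \in {\mathbb {R}}^{p}_{\geqq } \) such that \( F(x_{1}) - Ax_{1} = (F({\hat{x}}) - A {\hat{x}}) - d \). Therefore

$$\begin{aligned} F(x_{1}) - Ax_{1} \in F({\hat{x}}) - A{\hat{x}} - {\mathbb {R}}^{p}_{\geqq }. \end{aligned}$$

Thus

$$\begin{aligned} \{ {\hat{x}} , x_{1} \} \subseteq \{ x \in {\text {cl}}(B({\bar{x}},\delta )) : F(x) - Ax \in F({\hat{x}}) - A{\hat{x}} - {\mathbb {R}}^{p}_{\geqq } \} \end{aligned}$$

with \(x_{1} \ne {\hat{x}}\), which contradicts (7). \(\square \)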

For a function \(F : {\mathbb {R}}^{n} \rightarrow \overline{{\mathbb {R}}}^{p}\) and a point \( {\bar{x}} \in \mathrm{dom}F \), let \(\phi _{(\delta ,F)} (A , {\bar{x}} )\) denote the set of all strictly efficient solutions of problem \(M_{(\delta ,F)} ({\bar{x}} , A)\); by Lemma 3.1, it consists of the points \({\hat{x}} \in {\text {cl}}(B({\bar{x}},\delta ))\) satisfying Relation (7).
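Strictly efficient solutions of \(M_{(\delta ,F)}({\bar{x}}, A)\) can be approximated on a finite sample of \({\text {cl}}(B({\bar{x}},\delta ))\) by discarding every candidate that is dominated, in the order \(\leqq \), by another candidate. The Python/NumPy sketch below does this for the hypothetical one-dimensional objective \(F(x) = (x^{2}, (x-1)^{2})\) with \({\bar{x}} = 0.5\) and \(\delta = 1\), sampled on a grid of step 0.1; the helper name `strictly_efficient_points` is ours, and a grid only approximates the true set. With \(A = 0\) it keeps the grid points of \([0, 1]\); with both rows of A equal to 0.2, the perturbed objectives are minimized at 0.1 and 1.1, and the retained set shifts to the grid points of \([0.1, 1.1]\).

```python
import numpy as np

def strictly_efficient_points(points, F, A):
    """Finite-sample stand-in for phi(A, xbar): keep each candidate x for which no
    other candidate y satisfies F(y) - A y <= F(x) - A x componentwise."""
    values = [F(x) - A @ x for x in points]
    keep = []
    for i, x in enumerate(points):
        dominated = any(
            j != i and np.all(values[j] <= values[i]) for j in range(len(points))
        )
        if not dominated:
            keep.append(x)
    return keep

# Hypothetical data: F(x) = (x^2, (x - 1)^2) on R, xbar = 0.5, delta = 1, so
# cl(B(xbar, delta)) = [-0.5, 1.5] is sampled on an exact grid of step 0.1.
def F(x):
    return np.array([x[0] ** 2, (x[0] - 1.0) ** 2])

grid = [np.array([k / 10.0]) for k in range(-5, 16)]

unperturbed = strictly_efficient_points(grid, F, np.zeros((2, 1)))
perturbed = strictly_efficient_points(grid, F, np.array([[0.2], [0.2]]))

print([float(x[0]) for x in unperturbed])
print([float(x[0]) for x in perturbed])
```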

Proposition 3.5

\({\bar{x}} \in \mathrm{dom}F\) is a stable locally efficient point of \(F : {\mathbb {R}}^{n} \rightarrow \overline{{\mathbb {R}}}^{p}\) if and only if

(a) there are \(\delta > 0\), \( \epsilon > 0\), and \(\gamma \ge 0\) such that \( {\bar{x}} \in \phi _{(\delta ,F)} (0 , {\bar{x}} ) \) and, for any matrix \(A_{p \times n}\) with \(|\Vert A \Vert | < \epsilon \), there is \(x \in \phi _{(\delta ,F)} (A , {\bar{x}} )\) such that

$$\begin{aligned} \Vert x - {\bar{x}} \Vert \le \gamma \Vert | A \Vert |; \end{aligned}$$

(b) for any two \(p \times n\) matrices \(A_{1}\) and \(A_{2}\) with \(\Vert | A_{1} \Vert | , \Vert | A_{2} \Vert | < \epsilon \), there are \(x_{1} \in \phi _{(\delta ,F)} (A_{1} , {\bar{x}} )\) and \( x_{2} \in \phi _{(\delta ,F)} (A_{2} , {\bar{x}} )\) such that

$$\begin{aligned} \Vert x_{1} - x_{2} \Vert \le \gamma \Vert | A_{1} - A_{2} \Vert |. \end{aligned}$$

Proof

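By the standing Lipschitz assumption and Proposition 3.4, condition (c) of Definition 3.1 follows from conditions (a) and (b). The claim then follows from the definition of stable local efficiency and Lemma 3.1. \(\square \)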

The following proposition gives a necessary condition for the stability of a locally efficient solution of an MOP problem whose objective functions are all convex.

Proposition 3.6

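Suppose that \( F(x) = (f_{1}(x) , f_{2}(x) ,\ldots , f_{p} (x) ) \) and each \(f_{i}\) is convex and finite on some neighborhood \(B ({\bar{x}},{\hat{\delta }}) \) with \({\hat{\delta }} > 0\). If \({\bar{x}}\) is a stable locally efficient point of F(x), then for any \(\delta _{1} > 0\) we have

$$\begin{aligned} \bigcup _{x \in B({\bar{x}} , \delta _{1} )} \mathrm{Pos} \Big \{ \bigcup _{i=1}^{p} \partial f_{i} (x) \Big \} = {\mathbb {R}}^{n}. \end{aligned}$$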

Proof

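Suppose that \( 0 \ne d \in {\mathbb {R}}^{n} \) and \(\delta _{1} > 0\) have been given (the case \(d = 0\) is trivial). We show that \( d \in \cup _{x \in B({\bar{x}} , \delta _{1} )} \mathrm{Pos} \{ \cup _{i} \partial f_{i} (x) \} \). Since \( {\bar{x}} \) is a stable locally efficient point of F(x), there exist \(\delta > 0\), \(\epsilon > 0\), and \(\gamma \ge 0\) such that for any \(A_{p \times n}\) with \( |\Vert A \Vert | < \epsilon \) there is a strictly efficient solution x of problem \(M_{(\delta , F)} ({\bar{x}} , A)\) such that

$$\begin{aligned} \Vert x - {\bar{x}} \Vert \le \gamma |\Vert A \Vert |. \end{aligned}$$

Without any loss of generality, consider \(\gamma \ge 1\), \( \frac{\delta _{1}}{2} < \epsilon \), and \( \delta _{1}< \delta < {\hat{\delta }} \). Let

$$\begin{aligned} v_{i} := \frac{\delta _{1}}{2 \gamma } \frac{d}{\Vert d \Vert _{1}}, \quad i = 1, \ldots , p, \qquad A := \begin{bmatrix} v_{1}^{t} \\ \vdots \\ v_{p}^{t} \end{bmatrix}, \end{aligned}$$

in which \( |\Vert A \Vert | = \frac{\delta _{1}}{2 \gamma } < \epsilon \). This means that there is a strictly efficient solution \({\hat{x}} \in {\text {cl}}(B({\bar{x}}, \delta )) \) of problem \(M_{(\delta , F)}({\bar{x}} , A)\) such that

$$\begin{aligned} \Vert {\hat{x}} - {\bar{x}} \Vert \le \gamma |\Vert A \Vert | = \frac{\delta _{1}}{2} < \delta _{1}, \end{aligned}$$

and so \({\hat{x}} \in B({\bar{x}},\delta _{1}) \subseteq B({\bar{x}},\delta )\). For any \(i\in \{1,2,\ldots ,p\}\), the function \(f_{i}(x) - f_{i}({\bar{x}}) - v_{i}^{t} (x - {\bar{x}}) \) is convex on \({\text {cl}}(B({\bar{x}}, \delta ))\), and \({\hat{x}}\), being strictly efficient, is weakly efficient for \(M_{(\delta , F)}({\bar{x}} , A)\). Hence, according to Proposition 2.4, there exists a vector \( {\overline{\lambda }} \in {\mathbb {R}}_{\ge }^{p} \) such that the optimal solution of problem

$$\begin{aligned} \min _{x \in {\text {cl}}(B({\bar{x}},\delta ))} \ \sum _{i=1}^{p} {\overline{\lambda }}_{i} \big ( f_{i}(x) - f_{i}({\bar{x}}) - v_{i}^{t}(x - {\bar{x}}) \big ) \end{aligned}$$

is attained at \( {\hat{x}} \in B({\bar{x}},\delta ) \). Since \({\hat{x}}\) is an interior point of the feasible set, this means that

$$\begin{aligned} \Big ( \sum _{i=1}^{p} {\overline{\lambda }}_{i} \Big ) \frac{\delta _{1}}{2 \gamma } \frac{d}{\Vert d \Vert _{1}} = \sum _{i=1}^{p} {\overline{\lambda }}_{i} v_{i} \in \partial \Big ( \sum _{i=1}^{p} {\overline{\lambda }}_{i} f_{i} \Big ) ({\hat{x}}). \end{aligned}\tag{8}$$

Since each \(f_{i}\) is convex and finite on \(B({\bar{x}},{\hat{\delta }})\), it is locally Lipschitzian around \({\hat{x}}\); then, according to Theorem 3.36 of [24], we have

$$\begin{aligned} \partial \Big ( \sum _{i=1}^{p} {\overline{\lambda }}_{i} f_{i} \Big ) ({\hat{x}}) \subseteq \sum _{i=1}^{p} {\overline{\lambda }}_{i} \partial f_{i} ({\hat{x}}) \subseteq \mathrm{Pos} \Big ( \bigcup _{i=1}^{p} \partial f_{i} ({\hat{x}}) \Big ). \end{aligned}\tag{9}$$

Since \(\sum _{i} {\overline{\lambda }}_{i} > 0\), (8) and (9) give \( d \in \mathrm{Pos} ( \cup _{i} \partial f_{i} ({\hat{x}}) ) \subseteq \cup _{x \in B({\bar{x}} , \delta _{1} )} \mathrm{Pos} \{ \cup _{i} \partial f_{i} (x) \} \). \(\square \)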

Lemma 3.2

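Suppose that \( f : {\mathbb {R}}^{n} \mapsto \overline{{\mathbb {R}}} \) and \( {\bar{x}} \in \mathrm{dom}f \). If \({\bar{x}}\) is a tilt-stable local minimizer of f(x), then for any \(\delta _{1} > 0\) we have

$$\begin{aligned} \bigcup _{x \in B({\bar{x}} , \delta _{1} )} \mathrm{Pos} \{ \partial f (x) \} = {\mathbb {R}}^{n}. \end{aligned}$$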

Proof

Suppose that \( 0 \ne d \in {\mathbb {R}}^{n} \) and \(\delta _{1} > 0\) have been given. We show that \( d \in \cup _{x \in B({\bar{x}} , \delta _{1} )} \mathrm{Pos} \{ \partial f (x) \} \). Since \( {\bar{x}} \) is a tilt-stable local minimizer of f(x), there exist \(\delta > 0\), \(\epsilon > 0\), and \(\gamma \ge 0\) such that for any \( v \in {\mathbb {R}}^{n} \) with \( \Vert v \Vert < \epsilon \) there is a unique point x such that \(M(v) = \{x\}\) and

$$\begin{aligned} \Vert x - {\bar{x}} \Vert \le \gamma \Vert v \Vert , \end{aligned}$$

where M(.) is the mapping defined in Definition 2.1. Without any loss of generality, consider \(\gamma \ge 1\), \( \frac{\delta _{1}}{2} < \epsilon \), and \(\delta _{1} < \delta \). Let \( v := \frac{\delta _{1}}{2 \gamma } \frac{d}{\Vert d \Vert }\); then \( \Vert v \Vert = \frac{\delta _{1}}{2 \gamma } < \epsilon \). Hence there is \({\hat{x}} \in {\text {cl}}(B({\bar{x}}, \delta )) \) such that \( M(v) =\{ {\hat{x}} \} \) and

$$\begin{aligned} \Vert {\hat{x}} - {\bar{x}} \Vert \le \gamma \Vert v \Vert = \frac{\delta _{1}}{2} < \delta _{1}. \end{aligned}$$

So \({\hat{x}} \in B ({\bar{x}} , \delta _{1})\), which is an interior point of \({\text {cl}}(B({\bar{x}}, \delta ))\). By the definition of the mapping M(.), \({\hat{x}}\) is therefore a local minimizer of \(f - \langle v , \cdot \rangle \), and the Fermat rule gives \(v = \frac{\delta _{1}}{2 \gamma } \frac{d}{\Vert d \Vert } \in {\hat{\partial }} f ({\hat{x}}) \subseteq \partial f ({\hat{x}})\). Hence \( d \in \cup _{ x \in B({\bar{x}} , \delta _{1} )} \mathrm{Pos} (\partial f(x)) \). \(\square \)

Note that in the above lemma, function f is not necessarily convex.

Example 3.1

Consider the linear function \( f(x) := c^{t}x : {\mathbb {R}}^{n} \mapsto {\mathbb {R}}\). For any \( {\bar{x}} \in {\mathbb {R}}^{n} \) and \( \delta _{1} > 0 \), we have \(\partial f(x) = \{ c \}\) for all x, so

$$\begin{aligned} \bigcup _{x \in B({\bar{x}}, \delta _{1})} \mathrm{Pos} \{ \partial f(x) \} = \{ t c : t \ge 0 \} \ne {\mathbb {R}}^{n}. \end{aligned}$$

Hence, by Lemma 3.2, f has no tilt-stable local minimizer.

Proposition 3.7

Let \( F(x) := (f_{1}(x) , f_{2}(x) ,\ldots , f_{p} (x) ) \), where \(f_{i} : {\mathbb {R}}^{n} \longmapsto \overline{{\mathbb {R}}} \), \(i=1,2,\ldots ,p\). \({\bar{x}} \in \mathrm{dom}F \) is a stable locally efficient point of F(x) if and only if there is an index set \( I := \{ i_{1} , i_{2} ,\ldots , i_{k} \} \subseteq \{ 1 , 2,\ldots , p \}\) such that \({\bar{x}}\) is a stable locally efficient point of function \( G(x) := (f_{i_{1}}(x) , f_{i_{2}}(x) ,\ldots , f_{i_{k}} (x) )\).

Proof

(\(\Rightarrow \)) By letting \( I := \{ 1 , 2 ,\ldots , p\}\), the proof is clear.

(\( \Leftarrow \)) Conversely, let \({\bar{x}}\) be a stable locally efficient point of G(x). Then there are \(\delta > 0\), \(\epsilon > 0\), and \(\gamma \ge 0\) such that the conditions of Definition 3.1 are satisfied. Since \({\bar{x}}\) is a strictly efficient solution of problem \(M_{\delta ,G} ({\bar{x}} , 0_{|I| \times n})\), according to Corollary 2.32 of [26], \({\bar{x}}\) is a strictly efficient solution of problem \(M_{\delta ,F}({\bar{x}} , 0_{p \times n}) \). Now consider an arbitrary matrix \(A_{p \times n}\) with \(|\Vert A \Vert | < \epsilon \). Without any loss of generality, suppose that \(i_{1} < i_{2} < \ldots < i_{k} \). Let the matrix \(B_{|I| \times n} \) be

$$\begin{aligned} B := \begin{bmatrix} b_{1} \\ \vdots \\ b_{k} \end{bmatrix} := \begin{bmatrix} a_{i_{1}} \\ \vdots \\ a_{i_{k}} \end{bmatrix}, \end{aligned}$$

where \(b_{l}\) and \(a_{i_l}\), \(l = 1,2,\ldots , k\), are the rows of the matrices B and A, respectively. Because of the stable local efficiency of \( {\bar{x}}\) for G(x) and the inequality \( |\Vert B \Vert |\le |\Vert A \Vert | < \epsilon \) (the maximum absolute row sum over a subset of rows cannot exceed that over all rows), there is a strictly efficient solution x of problem \(M_{\delta ,G} ({\bar{x}} , B)\) such that

$$\begin{aligned} \Vert x - {\bar{x}} \Vert \le \gamma |\Vert B \Vert | \le \gamma |\Vert A \Vert |. \end{aligned}$$

Furthermore, according to Corollary 2.32 of [26], x is a strictly efficient solution of problem \(M_{\delta ,F} ({\bar{x}} , A)\), and so condition (a) is satisfied for F(x) at \({\bar{x}}\).

Now, consider \( p \times n\) matrices \(A_{1}\) and \(A_{2}\) with \( |\Vert A_{1} \Vert | , |\Vert A_{2} \Vert | < \epsilon \). As above, the \( | I | \times n\) matrices \(B_{1}\) and \(B_{2}\) are constructed from \(A_{1}\) and \(A_{2}\), respectively. There are strictly efficient solutions \(x_{1}\) and \(x_{2}\) of problems \(M_{\delta ,G} ({\bar{x}} , B_{1})\) and \(M_{\delta ,G} ({\bar{x}} , B_{2})\), respectively, and so, according to Corollary 2.32 of [26], \(x_{1}\) and \(x_{2}\) are strictly efficient solutions of problems \(M_{\delta ,F} ({\bar{x}} , A_{1})\) and \(M_{\delta ,F} ({\bar{x}} , A_{2})\), respectively, such that

$$\begin{aligned} \Vert x_{1} - x_{2} \Vert \le \gamma |\Vert B_{1} - B_{2} \Vert | \le \gamma |\Vert A_{1} - A_{2} \Vert |, \end{aligned}$$

which completes the proof. \(\square \)
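The inequalities \(|\Vert B \Vert | \le |\Vert A \Vert |\) and \(|\Vert B_{1} - B_{2} \Vert | \le |\Vert A_{1} - A_{2} \Vert |\) used in the proof of Proposition 3.7 hold because \(|\Vert . \Vert |_{\infty }\) is the maximum absolute row sum and B keeps only the rows indexed by I. A short Python/NumPy check with random matrices and a hypothetical index set (0-based, as usual in Python):

```python
import numpy as np

def induced_inf_norm(A):
    """|||A|||_inf: maximum absolute row sum."""
    return np.abs(A).sum(axis=1).max()

rng = np.random.default_rng(4)
A1 = rng.normal(size=(5, 3))  # p = 5 objectives, n = 3 variables
A2 = rng.normal(size=(5, 3))
I = [0, 2, 3]  # hypothetical index set i_1 < i_2 < i_3 (0-based)

# Keep only the rows indexed by I.
B1, B2 = A1[I, :], A2[I, :]

# Deleting rows can only lower the maximum row sum, and B1 - B2 is the
# corresponding row selection of A1 - A2.
print(induced_inf_norm(B1) <= induced_inf_norm(A1),
      induced_inf_norm(B1 - B2) <= induced_inf_norm(A1 - A2))
```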

Corollary 3.1

If \({\bar{x}}\) is a tilt-stable local minimum of one of the functions \(f_{i}\), \(i=1,2,\ldots ,p\), then \({\bar{x}}\) is a stable locally efficient point of F(x).

Proof

The proof follows directly from Proposition 3.1 (applied to \(G = f_{i}\)) together with Propositions 3.7 and 3.3. \(\square \)

We now present a necessary and sufficient condition for the stability of locally efficient solutions of multiobjective linear optimization problems. It is based on the notion of robustness defined by Georgiev et al. [19] for linear multiobjective optimization and extended by Zamani et al. [20] to general multiobjective optimization, as follows.

Definition 3.2

\({\bar{x}} \in X\) is a robust efficient solution of the problem

$$\begin{aligned} \min _{x \in X} \ F(x) \end{aligned}\tag{10}$$

iff \( {\bar{x}} \) is an efficient solution of Problem (10) and there is \(\epsilon > 0\) such that, for any \( p \times n \) matrix A with \( |\Vert A \Vert | < \epsilon \), the point \({\bar{x}}\) is an efficient solution of the problem

$$\begin{aligned} \min _{x \in X} \ F(x) + Ax. \end{aligned}$$

Now, we show that any robust efficient point is a stable point.

Proposition 3.8

If \({\bar{x}}\) is a robust efficient solution of Problem (10) with constant radius \(\epsilon \), then \({\bar{x}}\) is a strictly efficient point of Problem (10).

Proof

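By contradiction, suppose that \({\bar{x}}\) is a robust efficient solution of Problem (10) with constant \(\epsilon \), while it is not a strictly efficient point of Problem (10). Then there is \({\hat{x}} \in X\) with \({\hat{x}} \ne {\bar{x}}\) and \(F({\hat{x}}) \leqq F({\bar{x}})\); since \({\bar{x}}\) is efficient, \(F({\hat{x}}) \le F({\bar{x}})\) is impossible, and hence \(F({\hat{x}}) = F({\bar{x}})\).

Let

$$\begin{aligned} w := \frac{\epsilon }{2} \frac{{\bar{x}} - {\hat{x}}}{\Vert {\bar{x}} - {\hat{x}} \Vert _{1}}, \qquad A := \begin{bmatrix} w^{t} \\ \vdots \\ w^{t} \end{bmatrix} \in {\mathbb {R}}^{p \times n}. \end{aligned}$$

Now, we have

$$\begin{aligned} |\Vert A \Vert | = \Vert w \Vert _{1} = \frac{\epsilon }{2} < \epsilon . \end{aligned}\tag{11}$$

Furthermore,

$$\begin{aligned} \big ( F({\hat{x}}) + A{\hat{x}} \big ) - \big ( F({\bar{x}}) + A{\bar{x}} \big ) = A({\hat{x}} - {\bar{x}}) = - \frac{\epsilon }{2} \frac{\Vert {\bar{x}} - {\hat{x}} \Vert _{2}^{2}}{\Vert {\bar{x}} - {\hat{x}} \Vert _{1}} (1, \ldots , 1)^{t} < 0. \end{aligned}\tag{12}$$

By (11) and (12), \({\hat{x}}\) dominates \({\bar{x}}\) in the problem \(\min _{x \in X} F(x) + Ax\) with \(|\Vert A \Vert | < \epsilon \), which contradicts the assumption that \({\bar{x}}\) is a robust efficient solution with radius \(\epsilon \). \(\square \)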
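The mechanism behind Proposition 3.8 can be illustrated numerically: if two distinct feasible points share the same objective vector, an arbitrarily small linear perturbation separates them. The Python/NumPy sketch below uses hypothetical data (a linear F with two distinct points mapped to the same value) and the perturbation A whose rows all equal \(w^{t}\) with \(w = \frac{\epsilon }{2} ({\bar{x}} - {\hat{x}}) / \Vert {\bar{x}} - {\hat{x}} \Vert _{1}\); it assumes the perturbed objective \(F(x) + Ax\) and the norm \(|\Vert . \Vert |_{\infty }\).

```python
import numpy as np

# Hypothetical linear bi-objective F(x) = C x with two distinct points
# sharing the same objective vector.
C = np.array([[1.0, 1.0], [2.0, 2.0]])
x_bar = np.array([1.0, 0.0])
x_hat = np.array([0.0, 1.0])
assert np.allclose(C @ x_bar, C @ x_hat)  # F(x_hat) = F(x_bar)

eps = 0.1  # robustness radius being tested
w = (eps / 2) * (x_bar - x_hat) / np.abs(x_bar - x_hat).sum()
A = np.tile(w, (2, 1))  # every row equals w^T

norm_A = np.abs(A).sum(axis=1).max()  # |||A|||_inf = eps / 2 < eps
gap = (C @ x_hat + A @ x_hat) - (C @ x_bar + A @ x_bar)  # componentwise negative

print(norm_A, gap)
```

Since every component of `gap` is negative, \({\hat{x}}\) strictly improves on \({\bar{x}}\) in every objective of the perturbed problem, even though \(|\Vert A \Vert |_{\infty }\) is only \(\epsilon / 2\).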

Lemma 3.3

If \({\bar{x}}\) is a robust efficient solution of Problem (10) with constant radius \(\epsilon \), then for any matrix \(A_{p \times n}\) with \( |\Vert A |\Vert = \epsilon _{1} < \epsilon \), \({\bar{x}}\) is a robust efficient solution of problem

Proof

Let \( \epsilon _{2} := \epsilon - \epsilon _{1} > 0 \). For any matrix \(B_{p \times n}\) with \( |\Vert B \Vert | < \epsilon _{2}\), \({\bar{x}}\) is an efficient solution of problem

because \({\bar{x}}\) is a robust efficient solution of Problem (10) with constant \(\epsilon \) and also

\(|\Vert -A + B \Vert | \le |\Vert A \Vert | + |\Vert B \Vert | < \epsilon _{1} + \epsilon _{2} = \epsilon .\)

This completes the proof. \(\square \)

Corollary 3.2

If \({\bar{x}}\) is a robust efficient point of Problem (10) with constant radius \(\epsilon \), then for any matrix A with \(\Vert A \Vert < \epsilon ,\) \({\bar{x}}\) is a strictly efficient point of problem

Proof

The proof follows from Proposition 3.8 and Lemma 3.3. \(\square \)

Corollary 3.3

If there is \(\delta > 0\) such that \({\bar{x}}\) is a robust efficient point of problem

then \({\bar{x}}\) is a stable locally efficient point.

Proof

By Corollary 3.2, for any matrix A with \(\Vert A \Vert < \epsilon \), \({\bar{x}}\) is a strictly efficient solution of problem

so the conditions of Definition 3.1 are satisfied. \(\square \)

Proposition 3.9

Let X be a closed convex set, let \(f_{i}\) (\(i = 1,\ldots , p\)) be convex, and let \({\bar{x}}\) be an efficient solution of Problem (10). Then \({\bar{x}}\) is a robust efficient solution of (10) if and only if \(T_{X} ({\bar{x}}) \cap G ({\bar{x}}) = \{ 0 \}\), where \(G ({\bar{x}})\) is the set of all non-ascent directions, defined as

and \(T_{X} ({\bar{x}})\) is the tangent cone of X at \({\bar{x}}\) defined as

Proof

See the proof of Theorem 3.3 in [20]. \(\square \)

Proposition 3.10

Suppose that F(x) is a multiobjective linear function on a neighborhood of \({\bar{x}}\). Then \({\bar{x}}\) is a stable locally efficient point of F(x) if and only if there is \(\delta > 0\) such that \({\bar{x}}\) is a strictly efficient solution of problem \(M_{\delta ,F}({\bar{x}},0)\).

Proof

(\(\Rightarrow \)) If \({\bar{x}}\) is a stable locally efficient point of F(x), then, by Definition 3.1, there exists \(\delta >0\) such that \({\bar{x}}\) is a strictly efficient solution of \(M_{\delta ,F}({\bar{x}},0)\).

(\(\Leftarrow \)) Conversely, let \(F(x)= Cx \) on some neighborhood of \({\bar{x}}\), where C is a \(p \times n\) matrix. Since \( \partial f_{i} ({\bar{x}}) = \{ \nabla f_{i} ({\bar{x}}) \}= \{ c_{i} \}\), \(i = 1,\ldots , p\), where \(c_i\) is the transpose of the ith row of C, and \({\bar{x}}\) is a strictly efficient solution of problem \(M_{\delta ,F}({\bar{x}},0)\),

By Proposition 3.9, the point \({\bar{x}}\) is a robust efficient solution of problem

Thus, by Corollary 3.3 we conclude that \({\bar{x}}\) is a stable locally efficient point. \(\square \)

Remark 3.1

Consider the following multiobjective linear programming problem:

where C and H are \(p\times n\) and \(k \times n\) matrices, respectively. Because the objectives of (14) are linear and its feasible set is convex, every strictly locally efficient solution of (14) is a strictly (globally) efficient solution of (14). By Propositions 3.9 and 3.10, \({\bar{x}}\) is a stable locally efficient solution of (14) if and only if \({\bar{x}}\) is a robust efficient solution of (14). For further examples of robust efficiency in multiobjective linear programming, see [19]; those examples also serve as examples of stable local efficiency in multiobjective linear programming.
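For two-variable instances of (14), the condition \(T_{X} ({\bar{x}}) \cap G ({\bar{x}}) = \{ 0 \}\) of Proposition 3.9 can be tested exactly. The sketch below assumes that the feasible set is \(X = \{ x : Hx \leqq b \}\), so that \(T_{X} ({\bar{x}}) = \{ d : h_{j}^{T} d \le 0 \text{ for all active rows } j \}\), and that for linear objectives \(G ({\bar{x}}) = \{ d : Cd \leqq 0 \}\). The intersection is then \(\{ d : a^{T} d \le 0 \text{ for every collected row } a \}\), which equals \(\{ 0 \}\) exactly when no closed half-plane contains all of these rows, i.e., when every angular gap between consecutive row directions is smaller than \(\pi \). The function name is hypothetical, and the test presupposes that \({\bar{x}}\) is already known to be efficient.

```python
import math

def cone_is_trivial_2d(rows, tol=1e-12):
    """True iff {d in R^2 : a . d <= 0 for every a in rows} == {0}.

    Equivalent to: the nonzero rows positively span R^2, i.e. the largest
    angular gap between consecutive row directions is strictly below pi.
    """
    angles = sorted(math.atan2(a[1], a[0]) for a in rows if math.hypot(*a) > tol)
    if not angles:
        return False                                    # no constraints: every d qualifies
    gaps = [b - a for a, b in zip(angles, angles[1:])]
    gaps.append(2 * math.pi - (angles[-1] - angles[0]))  # wrap-around gap
    return max(gaps) < math.pi - tol

# Hypothetical instance: rows of C are (1, 0) and (0, 1); the active row of H is (-1, -1).
C_rows = [(1.0, 0.0), (0.0, 1.0)]
H_active = [(-1.0, -1.0)]
print(cone_is_trivial_2d(C_rows + H_active))   # robust in the sense of Proposition 3.9
print(cone_is_trivial_2d(C_rows))              # without the constraint, d = (-1, -1) qualifies
```

In the first call, the active constraint row closes the angular gap left by the rows of C, so the only feasible non-ascent direction is \(d = 0\); in the second, \(d = (-1,-1)^{T}\) decreases both objectives.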

As mentioned in the preliminaries on multiobjective optimization, MOP problems can be converted into scalar optimization problems by scalarization techniques, such as the weighted sum approach introduced in the previous section. Using the weighted sum approach, we now relate the stability of locally efficient solutions of an MOP problem to the tilt stability of locally optimal solutions of the weighted sum problem.

Proposition 3.11

Suppose that \( F : {\mathbb {R}}^{n} \rightarrow \overline{{\mathbb {R}}}^{p} \) and \({\bar{x}} \in \mathrm{dom} F \). If there is \( \lambda \in {\mathbb {R}}_{\ge }^{p} \) such that \({\bar{x}}\) is a tilt-stable local minimum of the function \( \sum _{i=1}^{p} \lambda _{i} f_{i} (x) \), then \({\bar{x}}\) is a stable locally efficient point of F(x).

Proof

Suppose that there is \( 0 \ne \lambda \in {\mathbb {R}}_{\ge }^{p} \) such that \({\bar{x}}\) is a tilt-stable local minimum of the function \( \sum _{i=1}^{p} \lambda _{i} f_{i} (x) \). Without loss of generality, suppose that \( \sum _{i=1}^{p} \lambda _{i} = 1 \) (otherwise replace each \(\lambda _{i}\) by \(\lambda _{i} / \sum _{j=1}^{p} \lambda _{j}\); multiplying a function by a positive constant preserves tilt stability, with the tilt parameter rescaled accordingly).

Therefore, there are \(\epsilon > 0\) and \(\delta > 0\) such that for any \( v \in {\mathbb {R}}^{n} \) with \(\Vert v \Vert < \epsilon \), the mapping

is single-valued and Lipschitzian. For any matrix \(A_{p \times n}\) with \( |\Vert A \Vert | < \epsilon \), let \( v = \sum _{i=1}^{p} \lambda _{i} v_{i}\), where \(v_i\) is the transpose of the ith row of A. Thus

Since the mapping

is single-valued and Lipschitzian with constant \(\gamma \ge 0 \), there is \(x \in cl(B({\bar{x}} , \delta ))\) such that \(M(v) = \{ x \}\). By Part 3 of Proposition 2.4, x is a strictly efficient solution of problem \(M_{\delta ,F} ({\bar{x}} , A)\), and we also have

Arguing as above, for any two \(p \times n \) matrices \(A_{1}\) and \(A_{2}\) with \( |\Vert A_{1} \Vert | , |\Vert A_{2} \Vert | < \epsilon \), there are strictly efficient solutions \(x_{1}\) and \(x_{2} \) of problems \(M_{\delta ,F} ({\bar{x}} , A_{1})\) and \(M_{\delta ,F} ({\bar{x}} , A_{2})\), respectively, such that

This completes the proof. \(\square \)
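The identity that turns the perturbed multiobjective problem into a tilt perturbation of the weighted sum can be written out as follows. This is a sketch under two assumptions: that the ith perturbed objective of \(M_{\delta ,F} ({\bar{x}} , A)\) is \(f_{i}(x) + \langle v_{i}, x\rangle \), i.e., the perturbed objective is \(F(x) + Ax\) (under the opposite sign convention, replace \(-v\) by \(v\) below), and that \(|\Vert A \Vert |\) bounds the Euclidean norm of every row of A, as the spectral and Frobenius norms do. Using the tilt convention \(f(x) - \langle w, x\rangle \), \(\lambda \ge 0\) and \(\sum _{i=1}^{p} \lambda _{i} = 1\):

\[ \sum_{i=1}^{p} \lambda_{i}\bigl(f_{i}(x) + \langle v_{i}, x\rangle\bigr) = \sum_{i=1}^{p} \lambda_{i} f_{i}(x) + \Bigl\langle \sum_{i=1}^{p} \lambda_{i} v_{i},\, x \Bigr\rangle = \sum_{i=1}^{p} \lambda_{i} f_{i}(x) - \langle -v, x\rangle , \]

\[ \Vert -v \Vert = \Bigl\Vert \sum_{i=1}^{p} \lambda_{i} v_{i} \Bigr\Vert \le \sum_{i=1}^{p} \lambda_{i} \Vert v_{i} \Vert \le \max_{1 \le i \le p} \Vert v_{i} \Vert \le |\Vert A \Vert | < \epsilon . \]

Hence the weighted sum of the perturbed objectives is a tilt perturbation of \(\sum _{i=1}^{p} \lambda _{i} f_{i}\) whose tilt vector has norm less than \(\epsilon \), which is precisely the range on which the tilt mapping is single-valued and Lipschitzian.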

Corollary 3.1 also follows from Proposition 3.11 by taking \(\lambda \) to be the ith unit vector. The converse of Proposition 3.11 does not hold in general, as the following example shows.

Example 3.2

Consider the following multiobjective optimization problem:

where

The point \({\overline{x}}=0\) is a strictly efficient solution of problem \(M_{\frac{1}{2} , F} (0 , 0_{2 \times 1})\). Furthermore, F(x) is a multiobjective linear function on B(0, 1), so, by Proposition 3.10, \({\overline{x}}\) is a stable locally efficient point of F(x). Moreover, for any \( \lambda = (\lambda _{1} , \lambda _{2}) \ge 0 \) and \( -1 \le x \le 1 \), let

Take \( \delta := 0.5 \); then

Thus

By Lemma 3.2, \({\overline{x}}=0\) is not a tilt-stable local minimum of \(\lambda _{1} f_{1} + \lambda _{2} f_{2}\) for any choice of weights \(\lambda _i\ge 0\), \(i=1,2\).
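Example 3.2 reflects a general phenomenon: a weighted sum that is linear near \({\bar{x}}\) is never tilt-stable there. The sketch below assumes, as in Example 3.2, that n = 1 and that every component is linear on the relevant interval, so that the weighted sum reduces to \(g(x) = s x\). Since \({\bar{x}} = 0\) minimizes g over \([-\delta , \delta ]\) only when \(s = 0\), the tilt mapping \(M(v) = \mathrm{argmin}_{|x| \le \delta } \{ g(x) - v x \}\) jumps from \(-\delta \) to \(\delta \) as v crosses 0 (and is the whole interval at v = 0), so no Lipschitz constant can exist. The function name is hypothetical.

```python
def tilt_argmin_linear(s, v, delta):
    """Minimizers of (s - v) * x over [-delta, delta], returned as an interval (lo, hi)."""
    slope = s - v
    if slope > 0:
        return (-delta, -delta)      # left endpoint is the unique minimizer
    if slope < 0:
        return (delta, delta)        # right endpoint is the unique minimizer
    return (-delta, delta)           # constant objective: every point minimizes

delta = 0.5
for v in (1e-2, 1e-4, 1e-6):
    x_plus = tilt_argmin_linear(0.0, v, delta)[0]
    x_minus = tilt_argmin_linear(0.0, -v, delta)[0]
    # |M(v) - M(-v)| / |v - (-v)| = 2 * delta / (2 * v) = delta / v grows without bound
    print(v, abs(x_plus - x_minus) / (2 * v))
```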

Proposition 3.12

Let \( F: {\mathbb {R}}^{n} \rightarrow \overline{{\mathbb {R}}}^{p} \), and let each \(f_{i}\), \(i \in I \subseteq \{ 1 , 2 ,\ldots , p \} \), be finite on some neighborhood of \({\bar{x}}\). Suppose that there are \(\lambda \in {\mathbb {R}}_{\ge 0}^{|I|}\) and \(\epsilon > 0\) such that the mapping \(\phi ^{-1} ( v ) = \{ x \ : \ v \in \sum _{i \in I} \lambda _{i} \partial f_{i} (x) \}\) is single-valued and Lipschitzian on \(\{ v \in {\mathbb {R}}^{n} : \Vert v \Vert < \epsilon \}\). If \(\phi ^{-1} (0) = \{ {\bar{x}} \}\), then \( {\bar{x}} \) is a stable locally efficient point of F(x).

Proof

Since each \(f_{i}\), \(i \in I\), is locally Lipschitzian on some neighborhood of \({\bar{x}}\), Theorem 3.36 of [24] implies that for any x in this neighborhood we have

Moreover, by Theorem 3.4 of [27], \({\bar{x}}\) is a tilt-stable local minimum of \(\sum _{i \in I} \lambda _{i} f_{i} (x)\). Using Propositions 3.7 and 3.11, we conclude that \({\bar{x}}\) is a stable locally efficient point of F(x). \(\square \)

Proposition 3.13

Let \(F : {\mathbb {R}}^{n} \rightarrow \overline{{\mathbb {R}}}^{p} \) and \( {\bar{x}} \in \mathrm{dom}F\). Suppose that there are an index set \(I \subseteq \{ 1 , 2 ,\ldots , p \} \) and \(\delta > 0\) such that, for every \(i \in I\), \(f_{i}\) is \(C^{2}\)-smooth on \(cl(B({\bar{x}} , \delta ))\) and \( \nabla ^{2} f_{i} ({\bar{x}}) \) is positive semi-definite, and that \(\nabla ^{2} f_{j} ({\bar{x}}) \) is positive definite for some \(j \in I\). If there exist \(\lambda \in {\mathbb {R}}_{>}^{|I|} \) and \({\hat{\delta }} > 0\) such that \({\bar{x}}\) is an optimal solution of the following problem

then \( {\bar{x}} \) is a stable locally efficient point of F(x).

Proof

Without loss of generality, let \( {\hat{\delta }} \le \delta \). Since \(\lambda _{j} > 0\), the matrix \(\sum _{i \in I} \lambda _{i} \nabla ^{2} f_{i} ({\bar{x}})\) is positive definite, so, by Propositions 2.1 and 2.3, \({\bar{x}}\) is a tilt-stable local minimum of the function \(\sum _{i \in I} \lambda _{i} f_{i} (x)\). Therefore, by Propositions 3.7 and 3.11, \({\bar{x}}\) is a stable locally efficient point of F(x). \(\square \)

The converse of Proposition 3.13 does not hold in general, as the following example shows.

Example 3.3

Consider \(F : {\mathbb {R}}^{2} \rightarrow {\mathbb {R}}^{3}\) as follows:

Let \( \lambda := (0.5 , 0.5 , 0)\); then \(x = (0, 0)\) is a tilt-stable locally optimal point of \( g(x) = \sum _{i=1}^{3} \lambda _{i} f_{i} (x) = 0.5 (x_{1}^{2} + x_{2}^{2}) \). By Proposition 3.11, \( x = (0, 0)\) is a stable locally efficient point of F(x).

Moreover, \(\nabla ^{2} f_{1}(0,0)\) and \(\nabla ^{2} f_{2}(0,0)\) are positive semi-definite and \(\nabla ^{2} f_{3}(0,0)\) is positive definite, so any index set I satisfying the hypotheses of Proposition 3.13 must contain the index 3, which forces \(\lambda _{3} > 0\). However, there are no \({\hat{\delta }} > 0\) and \(\lambda \in {\mathbb {R}}^{3}_{\ge }\) with \(\lambda _{3} > 0\) such that the optimal solution of the following problem is attained at \(x = (0 , 0)\),

because \(\sum _{i=1}^{3} \lambda _{i} \nabla f_{i} (0,0) = \lambda _{3} [2 ,2]^{t} \ne 0\), whereas a minimizer lying in the interior of the ball must be a stationary point of the weighted sum. Therefore, the converse of Proposition 3.13 does not hold in general.
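The second-order conditions used in Example 3.3 and Proposition 3.13 (stationarity of the weighted sum at \({\bar{x}}\) and positive definiteness of its Hessian, which, as the text applies Propositions 2.1 and 2.3, yield tilt stability) can be checked mechanically. The sketch uses a hypothetical choice of components consistent with the data stated in the example: \(f_{1} = x_{1}^{2}\), \(f_{2} = x_{2}^{2}\) and \(f_{3} = (x_{1}+1)^{2} + (x_{2}+1)^{2}\), so that \(0.5 f_{1} + 0.5 f_{2} = 0.5(x_{1}^{2} + x_{2}^{2})\), \(\nabla ^{2} f_{1}(0,0)\) and \(\nabla ^{2} f_{2}(0,0)\) are only positive semi-definite, \(\nabla ^{2} f_{3}(0,0) = 2I\), and \(\nabla f_{3}(0,0) = (2,2)^{t}\). Positive definiteness is tested with a Cholesky factorization; all function names are illustrative.

```python
import math

def is_positive_definite(M, tol=1e-12):
    """Cholesky test: True iff the symmetric matrix M (nested lists) is positive definite."""
    n = len(M)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = M[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= tol:
                    return False                 # non-positive pivot: not positive definite
                L[i][i] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return True

def weighted(lams, items):
    """Entrywise weighted sum of equally shaped vectors or matrices (nested lists)."""
    if isinstance(items[0], list):
        return [weighted(lams, [it[k] for it in items]) for k in range(len(items[0]))]
    return sum(l * it for l, it in zip(lams, items))

def second_order_tilt_check(lams, grads, hessians, tol=1e-12):
    """True iff sum_i lam_i grad f_i(x_bar) = 0 and sum_i lam_i Hess f_i(x_bar) is PD."""
    stationary = all(abs(c) <= tol for c in weighted(lams, grads))
    return stationary and is_positive_definite(weighted(lams, hessians))

# Hypothetical components at x_bar = (0, 0): f1 = x1^2, f2 = x2^2, f3 = (x1+1)^2 + (x2+1)^2.
grads = [[0.0, 0.0], [0.0, 0.0], [2.0, 2.0]]
hessians = [[[2.0, 0.0], [0.0, 0.0]],
            [[0.0, 0.0], [0.0, 2.0]],
            [[2.0, 0.0], [0.0, 2.0]]]
print(second_order_tilt_check([0.5, 0.5, 0.0], grads, hessians))   # weights of Example 3.3
print(second_order_tilt_check([0.4, 0.4, 0.2], grads, hessians))   # lambda_3 > 0 breaks stationarity
```

The first call reproduces the tilt-stable weighted sum of the example; the second shows why any admissible weight vector with \(\lambda _{3} > 0\) fails, since the weighted gradient equals \(\lambda _{3} (2,2)^{t} \ne 0\).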

Proposition 3.14

For the vector function F, let all \(f_{i} \) be \(C^{2}\)-smooth on some neighborhood \( B({\bar{x}} , \delta ) \). Suppose that there is an index set \( I \subseteq \{ 1 ,\ldots , p \} \) such that \( \nabla ^{2} f_{i}({\bar{x}}) \) is positive definite for all \(i\in I\) and \( f_{j} \) is linear on \(B({\bar{x}} , \delta )\) for all \(j\in I^{c}\). Then \({\bar{x}}\) is a stable locally efficient point of F(x) if and only if there are \( \lambda \in {\mathbb {R}}_{\ge }^{p} \) and \({\hat{\delta }} > 0\) such that the optimal solution of problem

is attained uniquely at \({\bar{x}}\).

Proof

\((\Leftarrow )\) Suppose that \({\hat{\delta }} < \delta \) and there is \(\lambda \ge 0\) such that the optimal solution of the following problem is attained uniquely at \({\bar{x}}\):

We claim that there is \( j \in I\) such that \(\lambda _{j} > 0\). Suppose, to the contrary, that \(\lambda _{j} = 0\) for all \( j \in I \). Let \( I_{1} := \{ i \in I^{c} : \lambda _{i} > 0 \} = \{i_1,i_2,\ldots ,i_k\} \) and \(G (x) := (f_{i_{1}}(x) , f_{i_2}(x),\ldots , f_{i_{k}}(x))\). Then G is linear on \( cl(B({\bar{x}}, {\hat{\delta }}))\), so the objective function of Problem (16), namely \(\sum _{i \in I_{1}} \lambda _{i} f_{i}(x)\), is a linear function of x on this ball. Since \({\bar{x}}\) is an interior point of \(B({\bar{x}},{\hat{\delta }})\), and a linear function attains its minimum over a ball at an interior point only if it is constant on that ball, every point of \(cl(B({\bar{x}},{\hat{\delta }}))\) is an optimal solution of (16). Thus, the optimal solution of (16) is not attained uniquely at \({\bar{x}}\), which is a contradiction. Therefore, there exists an index \(j\in I\) such that \(\lambda _j > 0\). Since \(\nabla ^{2} f_{i} ({\bar{x}}) = 0\) for \(i \in I^{c}\) (these functions are linear near \({\bar{x}}\)) and \(\nabla ^{2} f_{i} ({\bar{x}})\) is positive definite for \(i \in I\), the matrix \( \sum _{i} \lambda _{i} \nabla ^{2} f_{i} ({\bar{x}}) \) is positive definite. By Propositions 2.1 and 2.3, \({\bar{x}}\) is a tilt-stable local minimum of \( \sum _{i} \lambda _{i} f_{i} (x) \), and by Proposition 3.11, \({\bar{x}}\) is a stable locally efficient point of F(x).

\((\Rightarrow )\) Conversely, suppose that \({\bar{x}}\) is a stable locally efficient point of F(x), so there is \({\bar{\delta }} < \delta \) such that \({\bar{x}}\) is a strictly efficient solution of problem

Without any loss of generality suppose that \(I := \{ 1, 2,\ldots ,k \}\) and \( I^{c} := \{ k+1, k+2,\ldots , p\}\).

Let

Since \({\bar{x}} \) is a strictly efficient point of Problem (17) and \(f_{j}\) is a linear function on \(cl(B({\bar{x}},{\bar{\delta }}))\) for any \( j \in I^{c}\), we conclude that the system

has no solution. Hence, by Theorem 3.22 of [26], there are \( y^{1} \in {\mathbb {R}}^{k}_{\ge }\) and \( y^{2} \in {\mathbb {R}}^{p-k}_{\geqq } \) such that

Define \(\lambda \in {\mathbb {R}}^{p}_{\ge } \) as follows:

By (20) and (21), we have

In addition, since there is an index \( 1 \le j \le k\) such that \(\lambda _{j} > 0\) and \(\nabla ^{2} f_{j} ({\bar{x}})\) is positive definite, the Hessian \(\nabla ^{2} ( \sum _{i} \lambda _{i} f_{i})({\bar{x}})\) is positive definite, and, by Proposition 2.3, \({\bar{x}}\) is a tilt-stable local minimum of the function \(\sum _{i} \lambda _{i} f_{i}(x)\). The proof is completed by the definition of tilt stability, since the tilt mapping at \(v = 0\) is the singleton \(\{ {\bar{x}} \}\); that is, \({\bar{x}}\) is the unique optimal solution of (16) for a suitable \({\hat{\delta }} > 0\). \(\square \)

In Proposition 3.14, the existence of a nonempty index set I such that \(\nabla ^{2} f_{i} ({\bar{x}})\) is positive definite for all \(i \in I\) is essential, as Example 3.2 shows: there, \({\overline{x}}= 0\) is a stable locally efficient point of F(x), while there are no \( \lambda \in {\mathbb {R}}^{2}_{\ge }\) and \(\delta > 0\) such that \( {\overline{x}} = 0 \) is the unique optimal solution of Problem (16).

Likewise, in Proposition 3.14 the requirement that each \(f_{j}\), \(j \in I^{c}\), be linear on \(B({\bar{x}},\delta )\) cannot be replaced by positive semi-definiteness of \(\nabla ^{2}f_{j}({\bar{x}})\), \(j \in I^{c}\).

Example 3.4

To show that linearity cannot be replaced by positive semi-definiteness in Proposition 3.14, consider \(F(x)=(-x,x^{4},(x-1)^{2})\). The point \({\bar{x}} =0\) is the unique optimal point of the weighted sum problem (16) with weights \(\lambda _{1} = \lambda _{3} = 0 , \ \lambda _{2} = 1 \) and arbitrary \({\hat{\delta }} > 0\). Each \(f_i\) is convex, \( \nabla ^{2} f_{1} (0) \) and \( \nabla ^{2} f_{2} (0) \) are positive semi-definite, and \( \nabla ^{2} f_{3} (0) \) is positive definite. We show that \( {\bar{x}}=0\) is not a stable locally efficient point of F(x). Suppose, to the contrary, that it is; then there are \(\delta < 1\) and \( \epsilon > 0\) such that conditions (a) and (b) of Definition 3.1 hold for any matrix A with \(\Vert A \Vert < \epsilon \). Moreover, there is \(k \in {\mathbb {N}}\) such that \(\frac{1}{k^{\frac{1}{3}}} < \delta \) and \( \frac{1}{k} < \epsilon \). For any \( m \in {\mathbb {N}} \) with \(m \ge k\), let

then \( \Vert A_{m} \Vert < \epsilon \). The set of strictly efficient points of \( M_{\delta ,F} ({\bar{x}},A_{m}) \) is \( [ (\frac{1}{4m})^{\frac{1}{3}}, \delta ] \). Now we have

for any strictly efficient point of \(M_{\delta ,F} ({\bar{x}},A_{m})\). However, there is no \(\gamma \ge 0\) such that \((\frac{1}{4m})^{\frac{1}{3}} \le \gamma \Vert A_{m} \Vert =\frac{\gamma }{m} \) for all \( m \in {\mathbb {N}}\) with \(m\ge k\), since this inequality is equivalent to \(m^{\frac{2}{3}} \le 4^{\frac{1}{3}} \gamma \). This contradicts condition (a) of Definition 3.1.
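The computation in Example 3.4 can be reproduced numerically. The sketch assumes \(A_{m} = (0, -\frac{1}{m}, 0)^{T}\) and a perturbed objective \(F(x) + A_{m} x\); this is our assumption, chosen because it reproduces the stated efficient set \([ (\frac{1}{4m})^{\frac{1}{3}}, \delta ]\): the perturbed second objective \(x^{4} - \frac{x}{m}\) is minimized at \(x = (\frac{1}{4m})^{\frac{1}{3}}\), while the other two objectives decrease on \([-\delta , \delta ]\). The code approximates the efficient set on a grid by pairwise dominance and compares its left endpoint with \(\Vert A_{m} \Vert = \frac{1}{m}\); the function names are illustrative.

```python
def perturbed_objectives(x, m):
    """F(x) + A_m x for F(x) = (-x, x^4, (x-1)^2) and the assumed A_m = (0, -1/m, 0)^T."""
    return (-x, x ** 4 - x / m, (x - 1) ** 2)

def dominates(a, b):
    """Pareto dominance for minimization: a <= b componentwise and a != b."""
    return all(ai <= bi for ai, bi in zip(a, b)) and a != b

def efficient_left_endpoint(m, delta=0.5, n_grid=401):
    """Smallest grid point of [-delta, delta] not dominated by any other grid point."""
    h = 2 * delta / (n_grid - 1)
    xs = [-delta + k * h for k in range(n_grid)]
    vals = [perturbed_objectives(x, m) for x in xs]
    efficient = [x for x, v in zip(xs, vals) if not any(dominates(w, v) for w in vals)]
    return min(efficient), h

for m in (10, 100, 1000):
    left, h = efficient_left_endpoint(m)
    x_star = (1 / (4 * m)) ** (1 / 3)      # predicted left endpoint (1/(4m))^(1/3)
    # distance from x_bar = 0 divided by ||A_m|| = 1/m; this ratio grows like m^(2/3)
    print(m, round(left, 4), round(x_star, 4), round(left * m, 1))
```

The last column grows without bound as m increases, which is the numerical counterpart of the missing Lipschitz constant \(\gamma \).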

Proposition 3.15

If the conditions of Proposition 3.14 hold, then \({\bar{x}}\) is a stable locally efficient point of F(x) if and only if there is \(\delta > 0\) such that \({\bar{x}}\) is an efficient point of problem \(M_{\delta ,F}({\bar{x}},0)\).

Proof

\((\Leftarrow \)) Suppose that \({\bar{x}} \) is an efficient point of problem \(M_{\delta ,F}({\bar{x}},0)\), and let \({\bar{\delta }} \le \delta \) be such that \(f_{j}\) is linear on \( cl(B({\bar{x}},{\bar{\delta }}))\) for every \( j \in I^{c}\). Then the system

has no solution, where A and B are as defined in (18). The result then follows as in the proof of Proposition 3.14.

(\(\Rightarrow \)) The converse follows directly from Definition 3.1 of stable local efficiency. \(\square \)

Proposition 3.16

For the vector function F, let each \(f_{i} \) be convex and \(C^{2}\)-smooth on some neighborhood of \( {\bar{x}} \in \mathrm{dom}F \), with \( \nabla ^{2} f_{i}({\bar{x}}) \) positive definite for all i. Then \({\bar{x}}\) is a stable locally efficient point of F(x) if and only if there is \(\delta > 0\) such that \({\bar{x}}\) is a weakly efficient solution of problem \(M_{\delta ,F}({\bar{x}} , 0)\).

Proof

(\(\Leftarrow \)) Without any loss of generality, suppose that all \(f_{i}\) are convex and \(C^{2}\)-smooth on \(cl(B({\bar{x}}, \delta ))\). Since \({\bar{x}}\) is a weakly efficient solution of problem

there is \(\lambda \ge 0\), \(\lambda \ne 0\), such that the minimum of the following weighted sum problem is attained at \({\bar{x}}\),

and so \( \sum _{i} \lambda _{i} \nabla f_{i} ({\bar{x}}) = 0 \), since \({\bar{x}}\) is an interior point of the ball. Furthermore, there is \( j \in \{ 1 , 2 ,\ldots , p \}\) such that \(\lambda _{j} > 0\). Since \( \nabla ^{2} f_{i} ({\bar{x}}) \) is positive definite for all i, \( \sum _{i} \lambda _{i} \nabla ^{2} f_{i} ({\bar{x}}) \) is positive definite. By Proposition 2.3, \({\bar{x}}\) is a tilt-stable local minimum of \( \sum _{i} \lambda _{i} f_{i} (x) \), and so, by Proposition 3.11, \({\bar{x}}\) is a stable locally efficient point of F(x).

(\(\Rightarrow \)) The converse follows directly from Definition 3.1 of stable local efficiency. \(\square \)
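A one-dimensional illustration of the (\(\Leftarrow \)) argument: for two convex objectives whose derivatives \(g_{1} = f_{1}'({\bar{x}})\) and \(g_{2} = f_{2}'({\bar{x}})\) have opposite signs (or one of them vanishes), the weights \(\lambda _{1} = \frac{g_{2}}{g_{2} - g_{1}}\), \(\lambda _{2} = 1 - \lambda _{1}\) lie on the simplex and satisfy \(\lambda _{1} g_{1} + \lambda _{2} g_{2} = 0\). The sketch uses the hypothetical pair \(f_{1}(x) = (x-1)^{2}\), \(f_{2}(x) = (x+1)^{2}\) at \({\bar{x}} = 0\), where it recovers \(\lambda = (\frac{1}{2}, \frac{1}{2})\) and a positive weighted second derivative; the weighted sum \(x^{2} + 1\) then has the tilt mapping \(v \mapsto \frac{v}{2}\) for small \(|v|\), which is Lipschitzian with constant \(\frac{1}{2}\). The function name is illustrative.

```python
def simplex_weights_1d(g1, g2):
    """Weights (l1, l2) >= 0 with l1 + l2 = 1 and l1*g1 + l2*g2 = 0 (requires g1*g2 <= 0)."""
    if g1 * g2 > 0:
        raise ValueError("derivatives share a strict sign: x_bar is not weakly efficient")
    if g1 == g2:                 # then g1 == g2 == 0 and any weights work
        return 0.5, 0.5
    l1 = g2 / (g2 - g1)
    return l1, 1.0 - l1

x_bar = 0.0
g1, g2 = 2 * (x_bar - 1), 2 * (x_bar + 1)     # derivatives of (x-1)^2 and (x+1)^2 at x_bar
h1, h2 = 2.0, 2.0                             # second derivatives, both positive
l1, l2 = simplex_weights_1d(g1, g2)
weighted_hessian = l1 * h1 + l2 * h2
print((l1, l2), weighted_hessian)             # (0.5, 0.5) 2.0
```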

4 Conclusions

Tilt stability of a local minimum is a well-known tool for investigating the sensitivity and stability of an optimal solution; it is a version of the Lipschitzian stability of a local minimum in scalar optimization. In this paper, a new concept of stability in multiobjective optimization is introduced, in which small linear perturbations are added to the objective functions. Several propositions are established that identify whether a strictly efficient solution is stable. The introduced concept is also proved to be equivalent to tilt stability when there is only one objective function. In other words, the proposed concept can be regarded as an extension of tilt stability from scalar optimization to multiobjective optimization.

Studying the proposed concepts of stability and robustness by means of nonlinear scalarization methods is an interesting direction for future research.

References

Bonnans, J.F., Shapiro, A.: Perturbation Analysis of Optimization Problems. Springer, New York (2000)

Dontchev, A.L., Rockafellar, R.T.: Implicit Functions and Solution Mappings: A View from Variational Analysis. Springer, Berlin (2009)

Facchinei, F., Pang, J.-S.: Finite-Dimensional Variational Inequalities and Complementarity Problems. Springer, New York (2003)

Mordukhovich, B.S.: Variational Analysis and Generalized Differentiation, Vol. II: Applications. Grundlehren Math. Springer, Berlin (2006)

Rockafellar, R.T., Wets, R.J.B.: Variational Analysis, Grundlehren Math. Wiss., vol. 317. Springer, Berlin (2006)

Poliquin, R.A., Rockafellar, R.T.: Tilt stability of a local minimum. SIAM J. Optim. 8, 287–299 (1998)

Drusvyatskiy, D., Lewis, A.S.: Tilt stability, uniform quadratic growth, and strong metric regularity of the subdifferential. SIAM J. Optim. 23, 256–267 (2013)

Eberhard, A.C., Wenczel, R.: A study of tilt-stable optimality and sufficient conditions. Nonlinear Anal. 75, 1240–1281 (2012)

Mordukhovich, B.S., Outrata, J.V.: Tilt stability in nonlinear programming under Mangasarian–Fromovitz constraint qualification. Kybernetika 49, 446–464 (2013)

Mordukhovich, B.S., Rockafellar, R.T.: Second-order subdifferential calculus with application to tilt stability in optimization. SIAM J. Optim. 22, 953–986 (2012)

Mordukhovich, B.S.: Lipschitzian stability of constraint systems and generalized equations. Nonlinear Anal. 22, 173–206 (1994)

Mordukhovich, B.S.: Sensitivity analysis in nonsmooth optimization. In: Field, D.A., Komkov, V. (eds.) Theoretical Aspects of Industrial Design, SIAM Volume in Applied Mathematics, vol. 58, pp. 32–46 (1992)

Ben-Tal, A., El Ghaoui, L., Nemirovski, A.: Robust Optimization. Princeton University Press, Princeton (2009)

Bertsimas, D., Brown, D.B., Caramanis, C.: Theory and applications of robust optimization. SIAM Rev. 53(3), 464–501 (2011)

Bokrantz, R., Fredriksson, A.: On solutions to robust multiobjective optimization problems that are optimal under convex scalarization. arXiv:1308.4616v2 (2014)

Deb, K., Gupta, H.: Introducing robustness in multi-objective optimization. Evol. Comput. 14(4), 463–494 (2006)

Ehrgott, M., Ide, J., Schöbel, A.: Minmax robustness for multiobjective optimization problems. Eur. J. Oper. Res. 239(1), 17–31 (2014)

Fliege, J., Werner, R.: Robust multiobjective optimization applications in portfolio optimization. Eur. J. Oper. Res. 234(2), 422–433 (2014)

Georgiev, P.G., Luc, D.T., Pardalos, P.: Robust aspects of solutions in deterministic multiple objective linear programming. Eur. J. Oper. Res. 229(1), 29–36 (2013)

Zamani, M., Soleimani-damaneh, M., Kabgani, A.: Robustness in nonsmooth nonlinear multi-objective programming. Eur. J. Oper. Res. 247(2), 370–378 (2015)

Jeyakumar, V., Lee, G.M., Li, G.: Characterizing robust solution sets of convex programs under data uncertainty. J. Optim. Theory Appl. 164, 407–435 (2015)

Bot, R.I., Jeyakumar, V., Li, G.Y.: Robust duality in parametric convex optimization. Set-Valued Var. Anal. 21, 177–189 (2013)

Borwein, J.M., Zhu, Q.J.: Techniques of Variational Analysis. CMS Books Math., vol. 20. Springer, New York (2005)

Mordukhovich, B.S.: Variational Analysis and Generalized Differentiation, Vol. I: Basic Theory. Grundlehren Math. Springer, Berlin (2006)

Bazaraa, M.S., Sherali, H.D., Shetty, C.M.: Nonlinear Programming. Wiley, Hoboken (2016)

Ehrgott, M.: Multicriteria Optimization. Springer, Berlin (2005)

Mordukhovich, B.S., Rockafellar, R.T., Sarabi, M.: Characterizations of full stability in constrained optimization. SIAM J. Optim. 23, 1810–1849 (2013)

Acknowledgements

The authors are indebted to Prof. Boris Mordukhovich for his thorough discussions of an earlier draft, which enriched this manuscript. The authors are also grateful to the editor and the two anonymous referees for their valuable and constructive comments, which improved this manuscript. They would also like to thank Maxie Schmidt and Karim Rezaei for the linguistic editing of the final draft of this paper.

Additional information

Communicated by Fabián Flores-Bazán.

About this article

Cite this article

Sadeghi, S., Mirdehghan, S.M. Stability of Local Efficiency in Multiobjective Optimization. J Optim Theory Appl 178, 591–613 (2018). https://doi.org/10.1007/s10957-018-1312-7
