Abstract

In this paper, the epsilon and Ritz methods are applied to solve a general class of fractional constrained optimization problems. The goal is to minimize a functional subject to a number of constraints. The functional and constraints can have multiple dependent variables, multiorder fractional derivatives, and a group of initial and boundary conditions. The fractional derivative in the problem is in the Caputo sense. The constrained optimization problems include isoperimetric fractional variational problems (IFVPs) and fractional optimal control problems (FOCPs). In the presented approach, we first use the epsilon method to transform the given constrained optimization problem into an unconstrained problem; then, by applying the Ritz method with polynomial basis functions, we reduce the optimization problem to the problem of optimizing a real-valued function. The choice of polynomial basis functions makes it easy to impose initial and boundary conditions. The convergence of the method is studied analytically, and some illustrative examples including IFVPs and FOCPs are presented to demonstrate the validity and applicability of the new technique.

1 Introduction

This work develops an efficient approximate method for solving fractional constrained optimization problems. A fractional constrained optimization problem can be considered as an isoperimetric fractional variational problem (IFVP) or a fractional optimal control problem (FOCP), so an efficient approximate method for this class of problems covers both.

Fractional variational problems (FVPs), isoperimetric fractional variational problems (IFVPs), and FOCPs are three different types of fractional optimization problems. General optimality conditions have been developed for all three. For instance, in [1] the author obtains the necessary optimality conditions for FVPs and IFVPs with Riemann-Liouville derivatives. Hamiltonian formulas for fractional optimal control problems with Riemann-Liouville fractional derivatives are derived in [2, 3]. In [4] the authors present necessary and sufficient optimality conditions for a class of FVPs with respect to the Caputo fractional derivative. Agrawal [5] provides Hamiltonian formulas for FOCPs with Caputo fractional derivatives. Optimality conditions for fractional variational problems with functionals containing both fractional derivatives and integrals are presented in [6]. Such formulas are also developed for FVPs with other definitions of fractional derivatives in [7, 8]. Agrawal [9] discusses a general form of FVPs; the author claims that the derived equations are a general form of the Euler-Lagrange equations for problems with fractional Riemann-Liouville, Caputo, Riesz-Caputo, and Riesz-Riemann-Liouville derivatives. Other generalizations of the Euler-Lagrange equations for problems with free boundary conditions can be found in [10–13]. It is known that the optimal solutions of fractional variational and optimal control problems should satisfy Euler-Lagrange and Hamiltonian systems, respectively [1–3, 5]. Hence, solving the Euler-Lagrange equations or Hamiltonian systems leads to the optimal solution of FVPs or FOCPs. Except for some special cases of FVPs [14], it is hard to find exact solutions of the Euler-Lagrange and Hamiltonian equations, especially when the problem has boundary conditions. Examples of numerical simulations for fractional optimal control problems with Riemann-Liouville fractional derivatives can be found in [2, 3, 15–17].
There also exist some numerical methods for solving fractional variational problems. For instance, the finite element method in [18, 19] and the fractional variational integrator in [20] are developed and applied to some classes of FVPs. In [21], the classical discrete direct method for solving variational problems is generalized to FVPs. Numerical simulations for FOCPs with Caputo fractional derivatives are developed in [5] and [22], where the author solves the Hamiltonian equations approximately. A general class of FVPs in [23] and a class of FOCPs in [24] are solved directly, without using Euler-Lagrange and Hamiltonian formulas. Using the operational matrix of fractional integration and the Gauss quadrature formula, [25] presents an approximate direct method for solving a class of FOCPs. An approximate method for solving FVPs whose Lagrangian contains fractional integral operators is provided in [26]; the authors approximately transform the fractional problem into a regular problem by expanding the fractional integral operator as a finite series in terms of derivative operators. We refer readers interested in the fractional calculus of variations to [27].

The epsilon method was first introduced by Balakrishnan in [28]. Later, Frick [29, 30] developed the method for solving optimal control problems. In this paper, we apply a combination of the Ritz and epsilon methods to solve fractional constrained optimization problems. These problems can also have a group of boundary conditions. Our development of the Epsilon-Ritz method has the property that the approximate solutions satisfy all initial and boundary conditions of the problem. The method reduces the given constrained optimization problem to the problem of finding the optimal solution of a real-valued function. First, the unknown functions are expanded in a polynomial basis with unknown coefficients; this yields an algebraic function of the unknown coefficients, which is then optimized with respect to them. We study the convergence of the approximate method and present numerical examples to illustrate the applicability of the new approach.

This paper is organized as follows. Section 2 presents the problem formulation. In Sect. 3, the epsilon method is applied to reduce the constrained optimization problem of Sect. 2 to an unconstrained problem. In Sect. 4 we solve the unconstrained problem constructed in Sect. 3 using the Ritz method, to obtain an approximate solution of the main problem. Section 5 discusses the convergence of the method presented in Sect. 4, and Sect. 6 reports numerical findings and demonstrates the accuracy of the numerical scheme on some test examples. Section 7 consists of a brief summary.

2 Statement of the Problem

Consider the following fractional constrained optimization problem:

subject to

where \(n-1<\alpha _r\le n\), \(n\in \mathbb {N}\), \(L\) is the number of constraints, functions \(F\) and \(G\) are continuously differentiable with respect to all their arguments, and functional \(J\) is bounded from below, i.e., there exists \(\lambda \in \mathbb {R}\) such that \(J[y_1,\dots ,y_m]\ge \lambda \). The fractional derivatives are defined in the Caputo sense; for \(n-1<\alpha <n\),

$$\begin{aligned} ^C _{t_0}D^{\alpha }_{t}y(t)=\frac{1}{\Gamma (n-\alpha )}\int _{t_0}^{t}(t-\tau )^{n-\alpha -1}y^{(n)}(\tau )\,\mathrm{d}\tau . \end{aligned}$$

When \(\alpha =n\), the Caputo derivative is defined as \( ^C _{t_0}D^{\alpha }_{t}y(t)=y^{(n)}(t)\). In the above problem, the functions \(F\) and \(G\) can contain fractional derivatives of some of the variables \(y_j\), \(1 \le j \le m\) (not necessarily of all of them), and each variable can have initial or boundary conditions.
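For power functions the Caputo derivative has a well-known closed form, \( ^C _{0}D^{\alpha }_{t}t^{\beta }=\frac{\Gamma (\beta +1)}{\Gamma (\beta +1-\alpha )}t^{\beta -\alpha }\) for \(\beta >n-1\), while integer powers below \(n\) are mapped to zero. The following minimal sketch evaluates it for monomials and polynomials; the function names `caputo_power` and `caputo_polynomial` are hypothetical and the lower limit is assumed to be \(t_0=0\).

```python
from math import gamma, ceil

def caputo_power(beta, alpha, t):
    """Caputo derivative of order alpha (lower limit 0) of t**beta at t >= 0."""
    n = ceil(alpha)  # n - 1 < alpha <= n
    # Integer powers below n vanish under the n-th derivative inside the integral.
    if float(beta).is_integer() and beta < n:
        return 0.0
    if beta <= n - 1:
        raise ValueError("power rule requires beta > ceil(alpha) - 1")
    return gamma(beta + 1) / gamma(beta + 1 - alpha) * t ** (beta - alpha)

def caputo_polynomial(coeffs, alpha, t):
    """Caputo derivative of sum_k coeffs[k] * t**k, applied term by term."""
    return sum(c * caputo_power(k, alpha, t) for k, c in enumerate(coeffs))

if __name__ == "__main__":
    # Half-order derivative of t**1.5 at t = 1 (equals Gamma(2.5) / Gamma(2)).
    print(caputo_power(1.5, 0.5, 1.0))
    # For alpha = 1 the rule reduces to the ordinary derivative of 1 + t**2.
    print(caputo_polynomial([1.0, 0.0, 1.0], 1.0, 2.0))
```

Because the rule is applied term by term, polynomial trial functions can be differentiated to fractional order without numerical quadrature of the singular kernel.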

3 Epsilon Method

Without loss of generality, we let \(t_0=0\), \(t_1=1\), and \(t\in [0,1]\) in the problem of Sect. 2.

where \(n-1<\alpha _r\le n\), \(1\le r \le m\) and \(L\) is the number of constraints. In the problem (1) – (2) each variable \(y_j\), \(1\le j \le m\), can be considered in the following three cases:

(i) \(y_j\) has no derivative, neither initial nor boundary conditions.

(ii) \(y_j\) has fractional derivative of order at most \(\alpha _j\) and initial conditions

$$\begin{aligned} y_j^{(i)}(0)=y_{j0}^{i}, \quad 0 \le i \le \lceil \alpha _j \rceil -1. \end{aligned}$$

(iii) \(y_j\) has fractional derivative of order at most \(\alpha _j\) and initial and boundary conditions

$$\begin{aligned} y_j^{(i)}(0)=y_{j0}^{i},\quad y_j^{(i)}(1)=y_{j1}^{i}, \quad 0 \le i \le \lceil \alpha _j \rceil -1. \end{aligned}$$

In this paper, we assume that the constrained problem (1)–(2) attains its minimum \(\mu =J[y_{\mu }^1,\dots ,y_{\mu }^m]\) on

where

when \(y_j\) belongs to the case (i),

when \(y_j\) belongs to the case (ii), and

when \(y_j\) belongs to the case (iii).

Note that here \((C^n[0,1],\parallel . \parallel _n)\) is the Banach space

where

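Consider the following optimization problem:

$$\begin{aligned} \min J_{\epsilon }[y_1,\dots ,y_m]=\int _0^1 \Big [F(t,y_1,\dots ,y_m,\dots ,{^C _{0}D^{\alpha _r}_{t}y_r},\dots )+\frac{1}{\epsilon }\sum _{l=1}^L G_l^2(t,y_1,\dots ,y_m,\dots ,{^C _{0}D^{\alpha _r}_{t}y_r},\dots )\Big ]\mathrm{d}t, \end{aligned}$$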

where \(\epsilon >0\) is given.

We solve the unconstrained problem (3) instead of the constrained optimization problem (1)–(2) for a sufficiently small value of \(\epsilon \). Theorem 5.3 ensures that solving problem (3) with the Ritz method leads to an approximate solution of the problem (1)–(2).
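The effect of the penalty weight \(1/\epsilon \) can be seen on a finite-dimensional analogue. The sketch below is not the functional problem of this paper; it assumes a hypothetical toy problem, minimizing \(x^2+y^2\) subject to \(x+y-1=0\), and minimizes the penalized function \(x^2+y^2+\frac{1}{\epsilon }(x+y-1)^2\) for decreasing \(\epsilon \).

```python
import numpy as np

def penalized_minimizer(eps):
    """Minimize x^2 + y^2 + (x + y - 1)^2 / eps (hypothetical toy problem).

    Setting the gradient to zero gives the linear system
        (1 + 1/eps) x + (1/eps) y = 1/eps
        (1/eps) x + (1 + 1/eps) y = 1/eps
    """
    a = 1.0 / eps
    A = np.array([[1.0 + a, a], [a, 1.0 + a]])
    b = np.array([a, a])
    return np.linalg.solve(A, b)

if __name__ == "__main__":
    for eps in (1e-1, 1e-2, 1e-3, 1e-4):
        x, y = penalized_minimizer(eps)
        # Constraint violation shrinks as eps decreases.
        print(eps, x, y, abs(x + y - 1.0))
```

The constrained minimizer of the toy problem is \(x=y=\frac{1}{2}\); the penalized minimizers approach it from the infeasible side, with a constraint violation that decreases with \(\epsilon \), which is the behavior the epsilon method exploits.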

4 Ritz Approximation Method

Since Legendre polynomials are used to approximate functions in what follows, we state some basic properties of these polynomials. Other types of polynomials, such as Taylor or Bernstein polynomials, can of course also be used for the approximations.

4.1 Legendre Polynomials

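The Legendre polynomials are orthogonal polynomials on the interval \([-1,1]\) and can be determined with the following recurrence formula:

$$\begin{aligned} L_{m+1}(y)=\frac{2m+1}{m+1}\,y\,L_m(y)-\frac{m}{m+1}\,L_{m-1}(y),\quad m=1,2,\dots , \end{aligned}$$

where \(L_0(y)=1\) and \(L_1(y)=y\). The change of variable \(y=2t-1\) gives the well-known shifted Legendre polynomials. Let \(p_m(t)\) be the shifted Legendre polynomial of degree \(m\), defined on the interval \([0,1]\); these polynomials can be determined with the following recurrence formula

$$\begin{aligned} p_{m+1}(t)=\frac{2m+1}{m+1}\,(2t-1)\,p_m(t)-\frac{m}{m+1}\,p_{m-1}(t),\quad m=1,2,\dots ,\quad p_0(t)=1,\quad p_1(t)=2t-1. \end{aligned}$$

The shifted Legendre polynomial of degree \(i\), \(p_i(t)\), also has the following analytical form

$$\begin{aligned} p_i(t)=\sum _{k=0}^{i}(-1)^{i+k}\frac{(i+k)!}{(i-k)!}\frac{t^k}{(k!)^2}. \end{aligned}$$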

In Sect. 4.2, we minimize functional (3) on the set of all polynomials that satisfy initial and boundary conditions of the problem (1) – (2). Lemma 4.1 shows that all such polynomials have the same form.
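As a numerical aside on the basis of Sect. 4.1, the sketch below builds the shifted Legendre polynomials from the standard three-term recurrence with \(p_0(t)=1\) and \(p_1(t)=2t-1\), and checks their orthogonality on \([0,1]\). The helper names `shifted_legendre` and `inner` are hypothetical.

```python
import numpy as np
from numpy.polynomial import Polynomial

def shifted_legendre(k):
    """Return [p_0, ..., p_k] as numpy Polynomial objects on [0, 1]."""
    s = Polynomial([-1.0, 2.0])  # the map t -> 2t - 1
    p = [Polynomial([1.0]), s]
    for m in range(1, k):
        # (m + 1) p_{m+1} = (2m + 1)(2t - 1) p_m - m p_{m-1}
        p.append(((2 * m + 1) * s * p[m] - m * p[m - 1]) / (m + 1))
    return p[: k + 1]

def inner(p, q):
    """Exact L2 inner product on [0, 1] via the polynomial antiderivative."""
    antiderivative = (p * q).integ()
    return antiderivative(1.0) - antiderivative(0.0)

if __name__ == "__main__":
    basis = shifted_legendre(4)
    print(basis[2].coef)               # coefficients of p_2, lowest degree first
    print(inner(basis[2], basis[3]))   # orthogonality of distinct degrees
    print(inner(basis[3], basis[3]))   # squared norm of p_3
```

Distinct degrees are orthogonal, and the squared norm of \(p_i\) on \([0,1]\) is \(1/(2i+1)\).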

Lemma 4.1

Let \(p(t)\) be a polynomial that satisfies the following conditions

then \(p(t)\) has the following form

where \(k\in Z^+\), \(c_j \in \mathbb {R}\), and \(w(t)\) is the Hermite interpolating polynomial of degree at most \(2n+1\) that satisfies the above conditions.

Proof

Obviously we have

where

So we have

where \( s(t)=\sum _{j=0}^k c_j p_j(t)\). \(\square \)

Remark 4.1

By the above lemma, it is easy to see that a polynomial \(p(t)\) that satisfies the conditions

has the form

where \(k\in Z^+\), \(c_j \in \mathbb {R}\), and \(w(t)\) is the Hermite interpolating polynomial that satisfies the given conditions.
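The construction behind Lemma 4.1 can be sketched numerically. The code below assumes that the conditions prescribe \(p^{(i)}(0)\) and \(p^{(i)}(1)\) for \(0\le i\le n\), and that the trial polynomial is \(s(t)\,t^{n+1}(t-1)^{n+1}+w(t)\); both are our reading of the lemma (whose displayed formulas are not reproduced here), and the names `hermite_two_point` and `trial_function` are hypothetical.

```python
import numpy as np
from math import factorial
from numpy.polynomial import Polynomial

def hermite_two_point(left, right):
    """Polynomial w of degree <= 2n+1 with w^(i)(0) = left[i], w^(i)(1) = right[i]."""
    n1 = len(left)                     # n + 1 conditions at each endpoint
    size = 2 * n1
    A = np.zeros((size, size))
    rhs = np.zeros(size)
    for i in range(n1):
        A[i, i] = factorial(i)         # i-th derivative of t^i at t = 0
        rhs[i] = left[i]
        for k in range(i, size):       # i-th derivative of t^k at t = 1
            A[n1 + i, k] = factorial(k) / factorial(k - i)
        rhs[n1 + i] = right[i]
    return Polynomial(np.linalg.solve(A, rhs))

def trial_function(w, s, n):
    """Assumed trial form: s(t) * t^(n+1) * (t-1)^(n+1) + w(t)."""
    vanish = Polynomial([0.0, 1.0]) ** (n + 1) * Polynomial([-1.0, 1.0]) ** (n + 1)
    return s * vanish + w

if __name__ == "__main__":
    # Data of y(t) = t^(5/2): y(0) = y'(0) = 0, y(1) = 1, y'(1) = 5/2.
    w = hermite_two_point([0.0, 0.0], [1.0, 2.5])
    print(w.coef)
    y = trial_function(w, Polynomial([3.0, -2.0]), n=1)   # arbitrary s(t)
    print(y(0.0), y(1.0), y.deriv()(0.0), y.deriv()(1.0))
```

For these endpoint data the interpolant is \(\frac{1}{2}t^2+\frac{1}{2}t^3\), which agrees with the \(w(t)\) quoted in Example 6.3, and the endpoint values of the trial function do not depend on the choice of \(s(t)\).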

4.2 Approximation

Consider expansions \(y_{j,\epsilon }^k(t)\), \(1\le j \le m\), in the following forms:

when \(y_j\) belongs to the case (i),

when \(y_j\) belongs to the case (ii), where \(w_j\) is the Hermite interpolating polynomial that satisfies all initial conditions of \(y_j\), and

when \(y_j\) belongs to the case (iii), where \(w_j\) is the Hermite interpolating polynomial that satisfies all initial and boundary conditions of \(y_j\).

Substituting \(y_{j,\epsilon }^k\), \(1\le j \le m\), in (3), we achieve

which is an algebraic function of the unknowns \(c_{j,\epsilon }^i\), \(i=0,1,\dots ,k\), \(j=1,\dots ,m\). If the coefficients \(c_{j,\epsilon }^i\) are determined by minimizing the function \(J_{\epsilon }\), then (4)–(6) give functions that approximate the minimum value of \(J_{\epsilon }\) in (7) and also satisfy all initial and boundary conditions of the problem. According to differential calculus, the following system of equations is the necessary condition of optimality for the function (7):

$$\begin{aligned} \frac{\partial J_{\epsilon }}{\partial c_{j,\epsilon }^i}=0,\quad i=0,1,\dots ,k,\quad j=1,\dots ,m. \end{aligned}$$

By solving the system (8), we can determine the minimizing values of the coefficients \(c_{j,\epsilon }^i\), \(i=0,1,\dots ,k\), \(j=1,\dots ,m\), for the function (7). Hence, by (4)–(6) we obtain functions \(y_{j,\epsilon }^k\), \(1\le j \le m\), which approximate the minimum value of \(J\) by

while

and also satisfy all initial and boundary conditions of the problem.
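The whole procedure can be sketched on a small integer-order test case. The problem below is an assumption chosen for illustration, not taken from the displayed equations of this paper: minimize \(\frac{1}{2}\int _0^1 (x^2+u^2)\,\mathrm{d}t\) subject to \(x'=-x+u\), \(x(0)=1\). The trial functions are \(u=\sum _j b_jp_j(t)\) and \(x=1+t\sum _j a_jp_j(t)\), so \(x(0)=1\) holds for any coefficients. Because the penalized functional is quadratic in the coefficients, the stationarity system is linear, and the sketch solves it as a weighted least-squares problem with Gauss-Legendre quadrature. The function name `epsilon_ritz_lq` is hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre as leg

def epsilon_ritz_lq(k=8, eps=1e-5):
    """Epsilon-Ritz sketch for the ASSUMED problem
    min 1/2 int_0^1 (x^2 + u^2) dt,  x' = -x + u,  x(0) = 1."""
    # Gauss-Legendre rule on [0, 1]; exact for the polynomial integrands below.
    z, wz = leg.leggauss(k + 3)
    t, w = 0.5 * (z + 1.0), 0.5 * wz
    P = leg.legvander(z, k)                       # p_i(t) = L_i(2t - 1)
    dP = np.column_stack([2.0 * leg.legval(z, leg.legder(np.eye(k + 1)[i]))
                          for i in range(k + 1)])  # p_i'(t), chain-rule factor 2
    X = t[:, None] * P                            # x(t) - 1 = t * sum a_i p_i(t)
    dX = P + t[:, None] * dP                      # x'(t)
    Z = np.zeros_like(P)
    sw = np.sqrt(w)[:, None]
    # Residual r = A c + d with ||r||^2 = J_eps; unknowns c = (a, b).
    A = np.vstack([sw * np.hstack([X, Z]) / np.sqrt(2.0),          # x / sqrt(2)
                   sw * np.hstack([Z, P]) / np.sqrt(2.0),          # u / sqrt(2)
                   sw * np.hstack([dX + X, -P]) / np.sqrt(eps)])   # (x' + x - u) / sqrt(eps)
    d = np.concatenate([sw[:, 0] / np.sqrt(2.0),
                        np.zeros(len(t)),
                        sw[:, 0] / np.sqrt(eps)])
    c, *_ = np.linalg.lstsq(A, -d, rcond=None)
    r = A @ c + d
    m = len(t)
    cost = float(np.sum(r[: 2 * m] ** 2))              # value of J
    violation = float(eps * np.sum(r[2 * m:] ** 2))    # integral of G^2
    return c[: k + 1], c[k + 1:], cost, violation

if __name__ == "__main__":
    a, b, cost, violation = epsilon_ritz_lq()
    print("J =", cost, " int G^2 =", violation)
```

For this assumed problem, a Riccati computation places the constrained minimum near 0.1929, so the printed cost can be compared against that value; decreasing `eps` tightens the constraint at the price of a worse conditioned linear problem.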

5 Convergence

Let

where \(f_{0}^j\) and \(f_{1}^j\) are given constant values. The following lemma plays an important role in our discussion: it shows that the polynomial functions belonging to \(E[0,1]\) are dense in the metric space \(E[0,1]\).

Lemma 5.1

Let \(f(t)\in E[0,1]\). There exists a sequence of polynomial functions \(\{s_k(t)\}_{k\in N} \subset E[0,1]\) such that \(s_k \rightarrow f\) with respect to \(\parallel . \parallel _n\).

Proof

See [23]. \(\square \)

Consider the normed space \((F_m [0,1],\parallel . \parallel )\) as follows

where \(\parallel y_j \parallel _{E_j}=\parallel y_j \parallel _{\infty }\) when \(y_j\) belongs to the case (i), \(\parallel y_j \parallel _{E_j}=\parallel y_j \parallel _{\lceil \alpha _j \rceil } \) when \(y_j\) belongs to the case (ii) or (iii).

Consider \(H_m^k[0,1]\) as follows:

where \(< \{p_i\}_{i=0}^k >\) is the Banach space generated by the Legendre polynomials of degree at most \(k\). Of course \(H_m^k[0,1]\) is a subspace of \(F_m[0,1]\).

Let \(y\in C^{n}[0,1]\). For Caputo fractional derivative of order \(\alpha \), \(n-1<\alpha \le n\), we have \( ^C _{0}D^{\alpha }_{t}y(t) \in C[0,1] \) [31–33]. We also have

So

Now consider (3) as a functional \(J_{\epsilon }:F_m[0,1] \rightarrow \mathbb {R}.\) Lemma 5.2 shows that \(J_{\epsilon }\) is continuous on its domain. We use this important property later in Theorem 5.2. The following theorem from real analysis plays a key role in the proof of Lemma 5.2.

Theorem 5.1

Let \(f\) be a continuous mapping of a compact metric space \(X\) into a metric space \(Y\); then \(f\) is uniformly continuous.

Proof

See [34]. \(\square \)

Lemma 5.2

The functional \(J_{\epsilon }\) is continuous on \((F_m[0,1],\parallel . \parallel )\).

Proof

Let \((y_1^*,\dots ,y_m^*)\in F_m[0,1]\) and let \(\eta >0\) be given. Consider \(d>0\) and

where \( L=\max \{ \parallel y_1^* \parallel _{\infty },\dots ,\parallel y_m^* \parallel _{\infty },\dots , \parallel {^C _{0}D^{\alpha _r}_{t}y_r^*}\parallel _{\infty },\dots \}.\)

Obviously \(Y^*(t)=(t,y_1^*(t),\dots ,y_m^*(t),\dots ,{^C _{0}D^{\alpha _r}_{t}y_r^*(t)},\dots ) \in I\) for \(t\in [0,1]. \) Let \(\gamma >0\) be given. Let \(\delta >0\) and suppose \(\parallel (y_1,\dots ,y_m)-(y_1^*,\dots ,y_m^*)\parallel <\delta \); then \(\parallel y_{j}-y_{j}^*\parallel _{E_j}<\delta \), \(1\le j\le m,\) and according to (10) it is easy to see that for a small enough value of \(\delta \) we have

Since the functions \(F\) and \(G_l\), \( l=1,\dots , L,\) are continuous on \(I\) and \(I\) is a compact set, according to Theorem 5.1, \(R=F+\frac{1}{\epsilon }\sum _{l=1}^L G_l^2\) is uniformly continuous on \(I\). So if \(\gamma >0\) is sufficiently small, then \(\mid Y(t)-Y^*(t)\mid <\gamma \) implies that \(\mid R(Y(t))-R(Y^*(t)) \mid < \eta \), \(t\in [0,1]\), and hence \( \mid J_{\epsilon }[y_1,\dots ,y_m]-J_{\epsilon }[y_1^*,\dots ,y_m^*]\mid <\eta .\) \(\square \)
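The proof relies on an estimate of the Caputo derivative in terms of the \(C^n\) norm. One standard way to obtain such an estimate is sketched below; it is not necessarily the exact form of (10), and it assumes \(n-1<\alpha <n\), \(y\in C^n[0,1]\), and that \(\parallel y \parallel _n\) dominates \(\parallel y^{(n)} \parallel _{\infty }\).

```latex
\begin{aligned}
\left| {}^C_{0}D^{\alpha}_{t}y(t) \right|
 &\le \frac{1}{\Gamma(n-\alpha)} \int_0^t (t-\tau)^{n-\alpha-1}
      \left| y^{(n)}(\tau) \right| \mathrm{d}\tau \\
 &\le \frac{\parallel y^{(n)} \parallel_{\infty}}{\Gamma(n-\alpha)}
      \cdot \frac{t^{\,n-\alpha}}{n-\alpha}
  = \frac{t^{\,n-\alpha}}{\Gamma(n-\alpha+1)} \parallel y^{(n)} \parallel_{\infty} \\
 &\le \frac{1}{\Gamma(n-\alpha+1)} \parallel y \parallel_{n},
      \qquad t\in[0,1].
\end{aligned}
```

Applied to \(y_j-y_j^*\), this shows that a small \(\parallel y_j-y_j^*\parallel _{E_j}\) forces the corresponding Caputo derivatives to be uniformly close on \([0,1]\), which is what the continuity argument needs.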

Theorem 5.2

Let \(\mu _{\epsilon }\) be the minimum of the functional \(J_{\epsilon }\) on \(F_m[0,1]\) and also let \(\hat{\mu }_{\epsilon ,k}\) be the minimum of the functional \(J_{\epsilon }\) on \(H_m^k[0,1]\), then \(\lim _{k \rightarrow \infty }\hat{\mu }_{\epsilon ,k}=\mu _{\epsilon }.\)

Proof

For any given \(\eta >0\), let \((y_{1,\epsilon }^*,\dots ,y_{m,\epsilon }^*)\in F_m[0,1]\) be such that

Such \((y_{1,\epsilon }^*,\dots ,y_{m,\epsilon }^*)\) exists by the properties of the minimum. According to Lemma 5.2, \(J_{\epsilon }\) is continuous on \(( F_m[0,1],\parallel . \parallel )\), so we have

provided that \(\parallel (y_1,\dots ,y_m)-(y_{1,\epsilon }^*,\dots ,y_{m,\epsilon }^*)\parallel <\delta \). According to the Weierstrass theorem [34] and Lemma 5.1, for a large enough value of \(k\) there exists \((\gamma _1^k,\dots ,\gamma _m^k)\in H_m^k[0,1]\) such that \(\parallel (\gamma _1^k,\dots ,\gamma _m^k)-(y_{1,\epsilon }^*,\dots ,y_{m,\epsilon }^*) \parallel <\delta \). Moreover, let \((y_{1,\epsilon }^k,\dots ,y_{m,\epsilon }^k)\) be the element of \(H_m^k[0,1]\) such that \(J_{\epsilon }[y_{1,\epsilon }^k,\dots ,y_{m,\epsilon }^k]=\hat{\mu }_{\epsilon ,k}\); then using (11) we have

Since \(\eta >0\) is arbitrary, it follows that: \( \lim _{k \rightarrow \infty }\hat{\mu }_{\epsilon ,k}=\mu _{\epsilon }.\) \(\square \)

Theorem 5.3

Let \(\{\epsilon _j\}_{j \in N}\downarrow 0\) be a monotonically decreasing sequence of positive real numbers. Suppose \(\hat{\mu }_{\epsilon _j,k}=J_{\epsilon _j}[y_{1,\epsilon _j}^k,\dots ,y_{m,\epsilon _j}^k]\) is the minimum of the functional \(J_{\epsilon _j}\) on \(H_m^k[0,1]\); then for given \(\eta >0\) there exists a sequence of natural numbers \(\{k_j\}_{j\in N}\) such that

and

Proof

Let \(\eta >0\) be given. Suppose \(\mu _{\epsilon _j}=J_{\epsilon _j}[y_{1,\epsilon _j},\dots ,y_{m,\epsilon _j}]\) is the minimum of the functional \(J_{\epsilon _j}\) on \(F_m[0,1]\). It is obvious that

On the other hand according to Theorem 5.2 we have

So considering (12) and (13), \(\forall j \in N\) there exist \(k_j\in N\) such that

and we have

Now according to (14) and the assumption \(J[y_1,\dots ,y_m]\ge \lambda \), it is easy to see that

Thus, (15) leads to: \( \lim _{j \rightarrow \infty } \sum _{l=1}^L \int \limits _0^1 G_l^2(t, y_{1,\epsilon _j}^{k_j},\dots , y_{m,\epsilon _j}^{k_j},\dots ,{^C _{0}D^{\alpha _r}_{t} y_{r,\epsilon _j}^{k_j}},\dots )\mathrm{d}t=0.\) \(\square \)

6 Illustrative Test Problems

In this section we apply the method presented in Sect. 4.2 for solving the following test examples. The well-known symbolic software “Mathematica” has been employed for calculations and creating figures.

Example 6.1

Consider the one-dimensional integer-order FOCP

For the above problem, the optimal solution is

and the minimum value is \(J[x(t),u(t)]=0.192909\) [35]. Let

and \(\epsilon =0.00001\) in (3). Consider approximations (4) and (5), respectively, as \( u^8_\epsilon (t)=\sum _{j=0}^8 u_\epsilon ^jp_j(t)\) and \(x^8_\epsilon (t)=\sum _{j=0}^8 x_\epsilon ^jp_j(t)t+1. \) Substituting the approximations \(u^8_\epsilon (t)\) and \(x^8_\epsilon (t)\) into the functional \(J_{0.00001}[x,u]\) to obtain the function (7), and solving the system (8), we have

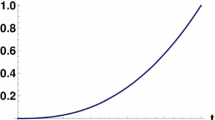

Figure 1 shows the approximate and exact solutions of the problem.

Example 6.2

Consider the following IFVP

subject to

For the given problem, the minimizing functions are \(y_1(t)=t^2+1\) and \(y_2(t)=t^{\frac{5}{2}}\), with \(J[y_1,y_2]=0\). The problem is solved by substituting approximations (6) with \(w_1(t)=1+t\), \(w_2(t)=t\) and \(k=4\) into (7) and solving the system (8). Table 1 shows \(\mu _{\epsilon ,k} \) and \(E_{\epsilon ,k}=\mid \int _0^1 [5(y_{1,\epsilon }^k(t)-1)^2+6{y_{2,\epsilon }^k}^2(t)]\mathrm{d}t-2 \mid \) for \(k=4\) and \(\epsilon =0.1,0.01,0.001\).

Example 6.3

Consider the IFVP

subject to

For the given problem, the minimizing function is \(y(t)=t^{\frac{5}{2}}\), with \(J[y]=0\). Applying approximation (6) with \(w(t)=t^2+\frac{1}{2}t^2(t-1)\) and solving the system (8), we obtain an approximate solution of the problem. Table 2 shows the values of \(\mu _{\epsilon ,k}\) and \(E_{\epsilon ,k}=\mid \int _0^1 {y_{\epsilon }^k}^2(t)\mathrm{d}t-\frac{1}{6} \mid \) for different values of \(\epsilon \) and different numbers of basis functions \(k\).
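The constants in the error measures \(E_{\epsilon ,k}\) of Examples 6.2 and 6.3 can be checked against the stated minimizers. The sketch below evaluates the two integrals with Gauss-Legendre quadrature and compares the endpoint values of the quoted interpolants \(w_1\), \(w_2\), and \(w\) with those of the minimizers; the helper name `integrate` and the variable names are hypothetical.

```python
import numpy as np
from numpy.polynomial import Polynomial

# Gauss-Legendre rule mapped from [-1, 1] to [0, 1].
z, wz = np.polynomial.legendre.leggauss(12)
t, w = 0.5 * (z + 1.0), 0.5 * wz

def integrate(values):
    """Approximate the integral over [0, 1] of samples taken at the nodes t."""
    return float(np.sum(w * values))

# Example 6.2: stated minimizers y1 = t^2 + 1 and y2 = t^(5/2).
y1, y2 = t**2 + 1.0, t**2.5
constraint_62 = integrate(5.0 * (y1 - 1.0) ** 2 + 6.0 * y2**2)

# Example 6.3: stated minimizer y = t^(5/2).
constraint_63 = integrate((t**2.5) ** 2)

# Interpolants quoted in the examples.
w1 = Polynomial([1.0, 1.0])             # 1 + t
w2 = Polynomial([0.0, 1.0])             # t
w3 = Polynomial([0.0, 0.0, 0.5, 0.5])   # t^2 + t^2 (t - 1) / 2

if __name__ == "__main__":
    print(constraint_62, constraint_63)
    print(w1(0.0), w1(1.0), w2(0.0), w2(1.0))
    print(w3(0.0), w3(1.0), w3.deriv()(0.0), w3.deriv()(1.0))
```

The integrals reproduce the constants 2 and \(\frac{1}{6}\) that appear in the two error measures, and the interpolants match the endpoint values (and, for Example 6.3, the endpoint slopes) of the stated minimizers.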

Example 6.4

Consider the two-dimensional integer-order FOCP

For the above problem, the optimal solution is

and the minimum value is \(J[x_1,x_2,u]=0.431984\) [17]. Let

and \(\epsilon =0.00001\) in (3). We consider approximation (4) for \(u(t)\) and approximation (5) for \(x_1(t)\) and \(x_2(t)\), with \(w_1(t)=w_2(t)=1\). Substituting the approximations into the functional \(J_{0.00001}[x_1,x_2,u]\) as in (7) and solving the system (8), we obtain the following approximate values for the problem:

Figure 2 demonstrates exact and approximate solutions of the problem.

Example 6.5

Consider the two-dimensional FOCP

subject to

For the above problem, the optimal solution \(x_1(t)=1+t^{\frac{3}{2}}\), \(x_2(t)=t^{\frac{5}{2}}\), \(u(t)=\frac{3\sqrt{\pi }}{4}t-t^{\frac{5}{2}}\) and the minimum value \(J[x_1,x_2,u]=0\) are available. Let

We solve the problem using approximation (4) for \(u(t)\) and approximation (5) for \(x_1(t)\) and \(x_2(t)\), with \(w_1(t)=1\) and \(w_2(t)=0\), respectively. Table 3 shows the values of the approximate minimum \(\mu _{\epsilon ,k}\) and

for different values of \(\epsilon \) and \(k\).

7 Conclusions

An approximate method based on the epsilon and Ritz methods is developed for solving a general class of fractional constrained optimization problems. First, by applying the epsilon method, the constrained optimization problem is reduced to an unconstrained problem; then, using the Ritz method with a special type of polynomial basis functions, the unconstrained optimization problem is reduced to the problem of finding the optimal solution of a real-valued function. The proposed polynomial basis functions satisfy the initial and boundary conditions by construction. The convergence of the method is discussed, and illustrative test examples including IFVPs and FOCPs are presented to demonstrate the efficiency of the new technique.

References

Agrawal, O.M.P.: Formulation of Euler-Lagrange equations for fractional variational problems. J. Math. Anal. Appl. 272, 368–379 (2002)

Agrawal, O.M.P.: A general formulation and solution scheme for fractional optimal control problems. Nonlinear Dyn. 38, 323–337 (2004)

Agrawal, O.M.P., Baleanu, D.: A Hamiltonian formulation and a direct numerical scheme for fractional optimal control problems. J. Vib. Cont. 13, 1269–1281 (2007)

Almeida, R., Torres, D.F.M.: Necessary and sufficient conditions for the fractional calculus of variations with Caputo derivatives. Commun. Nonlinear Sci. Numer. Simul. 16, 1490–1500 (2011)

Agrawal, O.M.P.: A formulation and numerical scheme for fractional optimal control problems. J. Vib. Cont. 14, 1291–1299 (2008)

Almeida, R., Torres, D.F.M.: Calculus of variations with fractional derivatives and fractional integrals. Appl. Math. Lett. 22, 1816–1820 (2009)

Agrawal, O.M.P.: Fractional variational calculus in terms of Riesz fractional derivatives. J. Phys. Math. Theo. 40, 6287–6303 (2007)

Almeida, R., Torres, D.F.M.: Fractional variational calculus for nondifferentiable functions. Comput. Math. Appl. 61, 3097–3104 (2011)

Agrawal, O.M.P.: Generalized variational problems and Euler-Lagrange equations. Comput. Math. Appl. 59, 1852–1864 (2010)

Agrawal, O.M.P.: Fractional variational calculus and transversality conditions. J. Phys. Math. Gen. 39, 10375–10384 (2006)

Agrawal, O.M.P.: Generalized Euler-Lagrange equations and transversality conditions for FVPs in terms of the Caputo Derivative. J. Vib. Cont. 13, 1217–1237 (2007)

Malinowska, A.B., Torres, D.F.M.: Generalized natural boundary conditions for fractional variational problems in terms of the Caputo derivative. Comput. Math. Appl. 59, 3110–3116 (2010)

Yousefi, S.A., Dehghan, M., Lotfi, A.: Generalized Euler-Lagrange equations for fractional variational problems with free boundary conditions. Comput. Math. Appl. 62, 987–995 (2011)

Baleanu, D., Trujillo, J.J.: On exact solutions of class of fractional Euler-Lagrange equations. Nonlinear Dyn. 52, 331–335 (2008)

Baleanu, D., Defterli, O., Agrawal, O.M.P.: A central difference numerical scheme for fractional optimal control problems. J. Vib. Cont. 15, 583–597 (2009)

Tricaud, C., Chen, Y.Q.: An approximate method for numerically solving fractional order optimal control problems of general form. Comput. Math. Appl. 59, 1644–1655 (2010)

Yousefi, S.A., Lotfi, A., Dehghan, M.: The use of a Legendre multiwavelet collocation method for solving the fractional optimal control problems. J. Vib. Cont. 17, 1–7 (2011)

Agrawal, O.M.P.: A general finite element formulation for fractional variational problems. J. Math. Anal. Appl. 337, 1–12 (2008)

Agrawal, O.M.P., Mehedi Hasan, M., Tangpong, X.W.: A numerical scheme for a class of parametric problem of fractional variational calculus. J. Comput. Nonlinear Dyn. 7, 021005–1 (2012)

Wang, D., Xiao, A.: Fractional variational integrators for fractional variational problems. Commun. Nonlinear Sci. Numer. Simul. 17, 602–610 (2012)

Pooseh, S., Almeida, R., Torres, D.F.M.: Discrete direct methods in the fractional calculus of variations. Comput. Math. Appl. 66, 668–676 (2013)

Agrawal, O.M.P.: A quadratic numerical scheme for fractional optimal control problems. J. Dyn. Sys. Measur. Cont. 130, 011010-1-011010-6 (2008)

Lotfi, A., Yousefi, S.A.: A numerical technique for solving a class of fractional variational problems. J. Comput. Appl. Math. 237, 633–643 (2013)

Lotfi, A., Dehghan, M., Yousefi, S.A.: A numerical technique for solving fractional optimal control problems. Comput. Math. Appl. 62, 1055–1067 (2011)

Lotfi, A., Yousefi, S.A., Dehghan, M.: Numerical solution of a class of fractional optimal control problems via the Legendre orthonormal basis combined with the operational matrix and the Gauss quadrature rule. J. Comput. Appl. Math. 250, 143–160 (2013)

Pooseh, S., Almeida, R., Torres, D.F.M.: Approximation of fractional integrals by means of derivatives. Comput. Math. Appl. 64, 3090–3100 (2012)

Malinowska, A.B., Torres, D.F.M.: Introduction to the Fractional Calculus of Variations. Imp. Coll. Press, London (2012)

Balakrishnan, A.V.: On a new computing technique in optimal control. SIAM J. Cont. 6, 149–173 (1968)

Frick, P.A.: An integral formulation of the \(\epsilon \)-problem and a new computational approach to control function optimization. J. Optim. Theo. Appl. 13, 553–581 (1974)

Frick, P.A., Stech, D.J.: Epsilon-Ritz method for solving optimal control problems: useful parallel solution method. J. Optim. Theo. Appl. 79, 31–58 (1993)

Samko, S.G., Kilbas, A.A., Marichev, O.I.: Fractional Integrals and Derivatives. Theory and Applications. Gordon and Breach Sci. Publ, Langhorne (1993)

Podlubny, I.: Fractional Differential Equations. Academic Press, San Diego (1999)

Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations. North-Holland Mathematics Studies, Amsterdam (2006)

Royden, H.L.: Real Analysis. Macmillan Publishing Company, U.S.A (1988)

Datta, K.B., Mohan, B.M.: Orthogonal Functions in Systems and Control. World Scientific, Singapore, New Jersey, London, Hong Kong (1995)


Cite this article

Lotfi, A., Yousefi, S.A. Epsilon-Ritz Method for Solving a Class of Fractional Constrained Optimization Problems. J Optim Theory Appl 163, 884–899 (2014). https://doi.org/10.1007/s10957-013-0511-5

DOI: https://doi.org/10.1007/s10957-013-0511-5