Abstract

In this paper, we consider the global error bound for the generalized linear complementarity problem over a polyhedral cone (GLCP). Based on the new transformation of the problem, we establish its global error bound under milder conditions, which improves the result obtained by Sun and Wang (2009) for GLCP by weakening the assumption.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In recent years, complementarity problems have been extended in many directions via innovative techniques to study a wide class of problems arising in pure and applied sciences. A useful and important generalization is called the generalized nonlinear complementarity problem over a polyhedral cone (GNCP). This problem was introduced first by Andreani et al. [1] in 2001, and further developed by Wang et al. in [2, 3]. The GNCP plays a significant role in economics, operation research, and nonlinear analysis, etc. [4].

The generalized linear complementarity problem over a polyhedral cone (GLCP) is a special case of the GNCP, and some theoretical issues such as the existence of solutions and the numerical solution methods for the GLCP (GNCP) have been discussed, e.g., [1–3]. It is well known that the global error bound is an important tool for theoretical analysis and numerical treatment for a mathematic problem [4, 5]. We are here concerned with the global error bound on the distance between a given point and the solution set of the GLCP in terms of some residual functions.

This paper is a follow-up to [6], as in this paper will establish the global error bound estimation of the GLCP under weaker conditions than that needed in [6]. To this end, we first develop some equivalent reformulations of the GLCP under weaker condition than that discussed in [6], and then establish the global error bound for the GLCP, which improves the result obtained in [6]. Compared with the error bound result in [6, 7], the requirement of a non-degenerate solution and the square root term of residual function in the error bound estimation are both removed here.

2 Preliminaries

Let ℝm be a real Euclidean space equipped with the standard inner product, and let mappings F(x)=Mx+p, G(x)=Nx+q, where M,N∈ℝm×n, p,q∈ℝm. The generalized linear complementarity problem over a polyhedral cone, abbreviated as GLCP, is to find vector x ∗∈ℝn such that

where \(\mathcal{K}\) is a polyhedral cone in ℝm such that there exist matrices A∈ℝs×m, B∈ℝt×m satisfying \(\mathcal{K} =\{v\in \mathbb{R}^{m}\mid Av\geq0,\ Bv=0\}\), and \(\mathcal{K}^{\circ}\) is its dual cone which admits the following form:

Throughout this paper, we denote the solution set of the GLCP by X ∗ and assume that it is nonempty throughout this paper.

Based on this, we can give the needed assumptions for our analysis.

Assumption 2.1

For the matrices A, M, N involved in the GLCP, we assume that

-

(A1)

the matrix M ⊤ N is positive semi-definite;

-

(A2)

the matrix A ⊤ has full-column rank.

Under this assumption, matrix A ⊤ has full-column rank and it has left inverse (AA ⊤)−1 A, which is also its pseudo-inverse of A ⊤. On the other hand, the condition that the matrix A ⊤ has full-column rank is weaker than that the matrix (A ⊤,B ⊤) has full-column rank discussed in [6].

To end this section, some notations used are in order. The norm ∥⋅∥ and ∥⋅∥1 denote the Euclidean 2-norm and 1-norm, respectively. We use \(\mathbb{R}^{n}_{+}\) to denote the orthogonal nonnegative orthant in ℝn, and use x + and x − to denote the projections of vector x onto \(\mathbb{R}^{n}_{+}\) and \(\mathbb{R}^{n}_{-}\), respectively.

3 Main Result

In the following, we first establish some equivalent reformulations to the GLCP. To this end, the following straightforward result is needed.

Theorem 3.1

A point x ∗∈ℝn is a solution of the GLCP if and only if there exist \(\lambda_{1}^{*}\in \mathbb{R}^{s}\), \(\lambda_{2}^{*}\in \mathbb{R}^{t}\), such that

From Theorem 3.1, under Assumption 2.1(A2), we can transform the second inequality and the last equality in (2) into a new system in which neither parameter λ 1 nor parameter λ 2 is involved. To this end, we need the following conclusion [8].

Lemma 3.1

Suppose that the non-homogeneous linear equation system Hy=b is consistent. Then y=H + b is the solution with the minimum 2-norm, where H + is the pseudo-inverse of H.

Since X ∗≠∅, we can establish the following result.

Lemma 3.2

Suppose that Assumption 2.1(A2) holds. Then, for any x∈ℝn, the following statements are equivalent:

-

(1)

There exist \(\lambda_{1}\in \mathbb{R}^{s}_{+}, \lambda_{2}\in \mathbb{R}^{t}\) such that Nx+q=A ⊤ λ 1+B ⊤ λ 2,

-

(2)

$$\left \{ \begin{array}{l} \bigl\{-A^{-1}_LB^\top\bigl[\bigl(A^\top A^{-1}_L-I\bigr)B^\top\bigr]^+\bigl[A^\top A^{-1}_L-I\bigr]+A^{-1}_L\bigr\}(Nx+q)\geq0,\\[5pt] \bigl\{A^\top\bigl\{-A^{-1}_L B^\top\bigl[\bigl(A^\top A^{-1}_L-I\bigr)B^\top\bigr]^+\bigl[A^\top A^{-1}_L-I\bigr]+A^{-1}_L\bigr\}\\[5pt] \quad{}+B^\top\bigl[\bigl(A^\top A^{-1}_L-I\bigr)B^\top\bigr]^+\bigl[A^\top A^{-1}_L-I\bigr]-I\bigr\}(Nx+q)=0,\end{array} \right . $$

where \(A^{-1}_{L}=(AA^{\top})^{-1}A\).

Proof

Set

Now, we show that these two sets are equal.

First, for any x∈X 1, there exist \(\lambda_{1}\in \mathbb{R}^{s}_{+}\), λ 2∈ℝt such that

Pre-multiplying (3) by \(A^{-1}_{L}:=(AA^{\top})^{-1}A\) gives

and combining this with (3), we have

i.e.,

Recalling Lemma 3.1, we further have

Combining this with (4) yields

Using the fact that λ 1≥0, by (6), one has

Combining this with (7) leads to that x∈X 2. This shows that X 1⊆X 2.

Second, for any x∈X 2, let

Then \(\lambda_{1}\in \mathbb{R}^{s}_{+}\), λ 2∈ℝt. From (7), one has

i.e., x∈X 1. Hence, X 2⊆X 1, and the desired result follows. □

Combining this conclusion with Theorem 3.1, we can establish the following equivalent formulation of the GLCP under Assumption 2.1(A2):

where

For system (8), by the first equality and the last equality, one has

Thus, system (8) can be further written as

For convenience of discussion, we denote

in the following. To proceed, we present the following assumption, which will be needed in the sequel.

Assumption 3.1

For system (10), there exists a point \(\hat{x}\in \varOmega\) such that

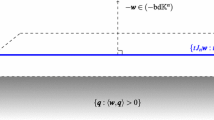

Obviously, Assumption 3.1 is weaker than the condition in Theorem 4.2 of [6]. Now, we consider the following set associated with the GLCP:

where ϵ≥0 and φ(x)=∥(−AF(x))+∥1+∥(−UG(x))+∥1+((AF(x))⊤(UG(x)))+.

Theorem 3.2

Suppose Assumptions 2.1 and 3.1 hold, and matrix \({A M\choose U N}\) is of full column rank, then \(\mathcal{L}(\epsilon)\) is bounded for any ϵ≥0.

Proof

For any \(x\in \mathcal{L}(\epsilon)\), we have

Then, for i=1,2,…,n, one has

By Assumption 2.1(A1),

where the last equality is obtained by a similar argument of (9). Certainly, (14) can be written as

We now break up the discussion into two cases.

First, if [AF(x)] i <0, then by (13), we have −ϵ<[AF(x)] i <0.

Second, if [AF(x)] i >0, combining (15) with \([UG(\hat{x})]_{i}>0\), we have

which shows that [AF(x)] i is bounded w.r.t. x.

Similar to discussion above, we can also prove that [UG(x)] i is bounded w.r.t. x.

Since the matrix \({A M\choose U N}\) is of column full rank, we know that

where \({A M\choose U N}_{L}^{-1}\) is left inverse of the matrix \({A M\choose U N}\). So \(\mathcal{L}(\epsilon)\) is bounded for any ϵ≥0. □

Lemma 3.3

Given constant μ>0, for any ∥x∥≤μ,x∈ℝn, then there exists constant ν>0 such that

where r(x)=∥min{AF(x),UG(x)}∥.

Proof

Using the fact that

we know that there exists constant c 1>0 such that

For any x∈ℝn with ∥x∥≤μ, there exists constant c 2>0 such that

Therefore, by the definition of φ(x), we have

where ν=max{2c 1,c 2}, the first inequality is by (16), and the second inequality follows from the fact that

□

By Lemma 3.3 and Theorem 3.2, we immediately obtain the following conclusion.

Lemma 3.4

Suppose that the hypothesis of Theorem 3.2 holds, then the set

is bounded.

Based on Lemma 3.4, we can establish the following error bound of the GLCP in X ε .

Lemma 3.5

Suppose that the hypothesis of Theorem 3.2 holds. Then there exists constant τ>0 such that

where \(\operatorname{dist}(x,X^{*})\) denotes the distance between the point x and the solution set X ∗, and r(x) defined in Lemma 3.3.

Proof

The proof uses a similar technique to that of Corollary 3.2 in [9]. For completeness, we include it.

Assume that the theorem is false. Then there exist ε 0>0, a positive sequence {τ k }, and a sequence \(\{x^{k}\}\subseteq X_{\varepsilon_{0}}\) such that τ k →∞ as k→∞ and

Hence,

Since \(X_{\varepsilon_{0}}\) is bounded and r(x) is continuous, by (19), we have r(x k)→0 as k→∞. Since \(X_{\varepsilon_{0}}\) is bounded again and \(\{x^{k}\}\subseteq X_{\varepsilon_{0}}\), there exists a subsequence \(\{x^{k_{i}}\}\) of {x k} such that \(\lim_{k_{i}\rightarrow \infty} x^{k_{i}}=\bar{x}\in X_{\varepsilon_{0}}\) with \(r(\bar{x})=0\). Hence \(\bar{x}\in X^{*}\). By (19), we further obtain

On the other hand, since AF(x) and UG(x) are both polynomial functions with powers 1, respectively, from \(x^{k_{i}}\rightarrow\bar{x}\) and \(r(x^{k_{i}})\rightarrow0\), we know that, for all sufficiently large k i ,

for some positive number θ, this contradicts (20). Thus, (17) holds. □

Based on Lemmas 3.4 and 3.5, we have the following conclusion.

Theorem 3.3

Suppose that the hypothesis of Theorem 3.2 holds. Then there exists constant ρ>0 such that

Proof

Assume that the conclusion is false. Then, for any ρ k >0 such that ρ k →∞ as k→∞, there exist x k∈Ω and \(\bar {x}\in X^{*}\), such that

Now, we claim that there exist k 0>0 and ε 0>0 such that

In fact, if not, for any ε∈(0,1), and for any k 0>0, there exists \(\bar{k}>k_{0}\) such that \(r(x^{\bar{k}})\leq\varepsilon\). Then set Θ ε ={x∈Ω|r(x)≤ε} is bounded. Combining this with Lemma 3.5, there exists constant τ>0, such that for \(x^{\bar{k}}\in \varTheta_{\varepsilon}\), there exists \(\bar {x}(x^{\bar{k}})\in X^{*}\) with that \(\|x^{\bar{k}}-\bar {x}(x^{\bar{k}})\|\leq \tau r(x^{\bar{k}})\). Combining this with (21), for \(x^{\bar{k}}\), and \(\bar {x}(x^{\bar{k}})\in X^{*}\), we have

i.e. \((\tau/\rho_{\bar{k}}) >1\). Letting \(\bar{k}\rightarrow\infty\) yields \((\tau/\rho_{\bar{k}})<1\). This is a contradiction. Hence (22) holds.

By (21) and (22), we have \(\|x^{k}\|>\rho_{k}\varepsilon_{0}-\|\bar {x}\|\), i.e., ∥x k∥→∞ as k→∞.

Let \(y^{k}=\frac{x^{k}}{\|x^{k}\|}\), then there exists a subsequence \(\{y^{k_{i}}\}\) of {y k}, such that \(y^{k_{i}}\rightarrow\bar{y}\) with \(\|\bar {y}\|=1\) as k i →∞. Thus, for subsequence \(\{x^{k_{i}}\}\) with \(\|x^{k_{i}}\|\rightarrow\infty\) as i→∞, if

does not hold, then there exists an index j such that

In this case, based on definition of \(\varphi(x^{k_{i}})/\|x^{k_{i}}\|\) in (11), we have

If the sequence \(\{x^{k_{i}}\}\) satisfies the condition (23), then

Clearly, \({A M\choose U N}\bar{y}\neq0\) and \({A M\choose U N}\bar{y}\geq0\) by condition (23). By Assumption 2.1(A1), using the technique used in (14) again, we have

where the deduction of the second equality uses a similar technique to that of (9). By (25), we obtain

Dividing both sides of (26) by \(\|x^{k_{i}}\|\) gives

where the last inequality follows from the properties of \({A M\choose U N}\bar{y}\) and Assumption 3.1. Based on definition of \(\varphi(x^{k_{i}})/\|x^{k_{i}}\|\) in (11) again, we can obtain (24).

Dividing both sides of (21) by \(\|x^{k_{i}}\|\), taking (24) into consideration, and letting k i →∞ yield

where the second inequality is by Lemma 3.3. This is contradiction, and the desired result follows. □

To establish a global error bound for GLCP, we give the following result from [10] on the error bound for a polyhedral cone.

Lemma 3.6

For polyhedral cone P={x∈ℝn∣D 1 x=d 1,D 2 x≤d 2} with D 1∈ℝl×n,D 2∈ℝm×n, d 1∈ℝl and d 2∈ℝm, there exists constant c>0 such that

Now, we arrive at the position to state our main results.

Theorem 3.4

Suppose that the hypothesis of Theorem 3.2 holds. Then there exists constant η>0 such that

Proof

For any x∈ℝn, there exists a vector \(\bar{x}\in \varOmega\) such that

Using the fact that

one has

where the second inequality is by non-expanding property of projection operator. Thus,

Combining Theorem 3.3 with (29), we have

where the last inequality is by Lemma 3.6, and

c is a constant. □

In the end of this section, we present two examples to compare the conditions in Theorem 3.4 in this paper and that in Theorem 4.2 in [6].

Example 3.1

For the matrices A, B, M, N, p, q involved in the GLCP, we set

It is easy to see that the solution set X ∗={0}. Obviously, M ⊤ N is positive semi-definite. By definition of U, V in (8), one has

For x(ϵ):=(0,ϵ)⊤, 0≤ϵ≤1, we have

as ϵ→0. Thus, the conclusion in Theorem 3.4 in this paper provides a global error bound for the GLCP. However, since matrix (A ⊤,B ⊤) has not full-column rank, thus, the error bound of Theorems 4.1 and 4.2 in [6] is not valid.

Example 3.2

For the matrices A, B, M, N, p, q involved in the GLCP, we set

It is easy to see that the solution set X ∗={0}. Hence it has no non-degenerate solution. Obviously, M ⊤ N is positive semi-definite. By definition of U, V in (8), one has

For x(ϵ):=(ϵ,−ϵ)⊤, 0≤ϵ≤1, we have

as ϵ→0. Thus, Theorem 3.4 in this paper provides a global error bound for the GLCP.

On the other hand, it is obvious that the matrix (A ⊤,B ⊤) has full-column rank. From Theorem 4.2 in [6], we can obtain

Using Theorem 4.2 in [6], for x(ϵ), we have

i.e.,

as ϵ→0. Thus, Theorem 4.2 in [6] fails in providing an error bound for this GLCP.

Overall, Theorem 4.2 in [6] cannot provide an error bound for these two problems as the problems has no non-degenerate solutions, while Theorem 3.4 in this paper can do.

In the end of this section, we will discuss the hypotheses used in our main results. First, without the requirement of a non-degenerate solution, the square root term in the error bound estimation is removed, as stated in Theorem 3.4 in this paper. Hence, the error estimation becomes more practical than that obtained in Theorem 4.1 in [6] as we may establish quick convergence rate of the Newton-type method for solving the GLCP [2] under the error bound assumption obtained in this paper instead of the nonsingular assumption [11]. Second, when GLCP reduces to the classical linear complementarity problems (LCP), Assumption 2.1(A1) coincides with the assumption in [7], and Assumption 2.1(A2) and the condition that the matrix \({A M\choose U N}\) is of column full rank are automatically satisfied for LCP. Hence, Assumption 3.1 is weaker than the condition that the LCP has a non-degenerate solution required in Theorem 2.6 of [7].

4 Conclusions and Discussions

In this paper, we established a global error bound on the generalized linear complementarity problems over a polyhedral cone, which improves the result obtained in [6] by weakening the assumption. Surely, under milder conditions, we may established global error bounds for GLCP with the mapping being nonmonotone, and may use the error bound estimation to establish quick convergence rate of the Newton-type method for solving the GLCP in [2] instead of the nonsingular assumption just as was done for nonlinear equations in [11]. This is a topic for future research.

References

Andreani, R., Friedlander, A., Santos, S.A.: On the resolution of the generalized nonlinear complementarity problem. SIAM J. Optim. 12, 303–321 (2001)

Wang, Y.J., Ma, F.M., Zhang, J.Z.: A nonsmooth L–M method for solving the generalized nonlinear complementarity problem over a polyhedral cone. Appl. Math. Optim. 52(1), 73–92 (2005)

Zhang, X.Z., Ma, F.M., Wang, Y.J.: A Newton-type algorithm for generalized linear complementarity problem over a polyhedral cone. Appl. Math. Comput. 169, 388–401 (2005)

Facchinei, F., Pang, J.S.: Finite-Dimensional Variational Inequality and Complementarity Problems. Springer, New York (2003)

Pang, J.S.: Error bounds in mathematical programming. Math. Program. 79, 299–332 (1997)

Sun, H.C., Wang, Y.J., Qi, L.Q.: Global error bound for the generalized linear complementarity problem over a polyhedral cone. J. Optim. Theory Appl. 142, 417–429 (2009)

Mangasarian, O.L.: Error bounds for nondegenerate monotone linear complementarity problems. Math. Program. 48, 437–445 (1990)

Horn, R.A., Johnson, C.R.: Topics in Matrix Analysis. Cambridge University Press, Cambridge (1991)

Xiu, N.H., Zhang, J.Z.: Global projection-type error bound for general variational inequalities. J. Optim. Theory Appl. 112(1), 213–228 (2002)

Hoffman, A.J.: On the approximate solutions of linear inequalities. J. Res. Natl. Bur. Stand. 49(4), 263–265 (1952)

Yamashita, N., Fukushima, M.: On the rate of convergence of the Levenberg–Marquardt method. Computing, Suppl. 15, 237–249 (2001)

Acknowledgements

The authors wish to express their sincere thanks to the associated editor and two anonymous referees for their valuable suggestions and helpful comments which improve the presentation of the paper.

This work was supported by the Natural Science Foundation of China (Grant Nos. 11171180, 11101303), and Specialized Research Fund for the doctoral Program of Chinese Higher Education (20113705110002), and Shandong Provincial Natural Science Foundation (ZR2010AL005, ZR2011FL017).

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Nobuo Yamashita.

Rights and permissions

About this article

Cite this article

Sun, H., Wang, Y. Further Discussion on the Error Bound for Generalized Linear Complementarity Problem over a Polyhedral Cone. J Optim Theory Appl 159, 93–107 (2013). https://doi.org/10.1007/s10957-013-0290-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10957-013-0290-z