Abstract

This paper studies the optimization of complex-order algorithms for the discrete-time control of linear and nonlinear systems. The fundamentals of fractional systems and genetic algorithms are introduced. Based on these concepts, complex-order control schemes and their implementation are evaluated from the perspective of evolutionary optimization. The results demonstrate not only that complex-order derivatives constitute a valuable alternative for deriving control algorithms, but also the feasibility of the adopted optimization strategy.

1 Introduction

Fractional calculus (FC) generalizes the classical differential calculus and deals with integrals and derivatives of real, or even complex, order [1–7]. In recent decades, a vast number of applications have emerged in physics and engineering, and active research is being pursued [8–19]. Fractional models have been shown to capture dynamic phenomena with long-range memory easily, in contrast to classical integer-order models, which have difficulty capturing those effects. Nevertheless, many aspects of this mathematical tool remain to be explored, and further research is needed to develop practical models. Furthermore, fractional derivatives (FDs) are more elaborate than their integer counterparts, and their calculation requires some type of approximation [20–26].

In recent years, evolutionary strategies [27, 28] have been proposed for optimizing fractional algorithms [29–36]. Combining FC concepts with evolutionary optimization is therefore a fruitful strategy for controller design.

Several studies have focused on the application of complex-order derivatives (CDs) [37–44]. CDs yield complex-valued results and therefore appear to be of limited interest. To overcome this difficulty, the use of conjugated-order differintegrals was proposed, that is, of pairs of derivatives whose orders are complex conjugates. These pairs allow the use of CDs while still producing real-valued responses and transfer functions. This article describes the adoption of CDs in control systems. Since CDs generalize FDs one step further, we can foresee significant advantages in the resulting algorithms.

Bearing these ideas in mind, this paper addresses the optimal tuning of controllers using CDs and is organized as follows. Section 2 introduces FDs and their numerical approximation, and formulates the problem of optimization through genetic algorithms (GAs). Section 3 formulates the concepts underlying CDs and presents a set of experiments that demonstrate the effectiveness of the proposed optimization strategy. Finally, Sect. 4 outlines the main conclusions.

2 Fundamental Concepts

2.1 Fractional Calculus

There are several definitions of FDs of order α of the function f(t). The most commonly adopted are the Riemann–Liouville, Grünwald–Letnikov, and Caputo definitions:

where Γ(⋅) is Euler’s gamma function, [x] means the integer part of x, and h is the step time increment.
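For reference, the three definitions can be sketched in their standard textbook form. The notation is an assumption made here for illustration: a denotes the lower terminal and n is the integer such that n−1<α<n.

```latex
\[
\begin{aligned}
\text{Riemann--Liouville:}\quad
{}_{a}D_{t}^{\alpha} f(t) &= \frac{1}{\Gamma(n-\alpha)}\,\frac{d^{n}}{dt^{n}}
  \int_{a}^{t} \frac{f(\tau)}{(t-\tau)^{\alpha-n+1}}\,d\tau, \\
\text{Gr\"unwald--Letnikov:}\quad
{}_{a}D_{t}^{\alpha} f(t) &= \lim_{h\to 0}\frac{1}{h^{\alpha}}
  \sum_{k=0}^{\left[\frac{t-a}{h}\right]} (-1)^{k}\binom{\alpha}{k} f(t-kh), \\
\text{Caputo:}\quad
{}_{a}^{C}D_{t}^{\alpha} f(t) &= \frac{1}{\Gamma(n-\alpha)}
  \int_{a}^{t} \frac{f^{(n)}(\tau)}{(t-\tau)^{\alpha-n+1}}\,d\tau .
\end{aligned}
\]
```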

The Laplace transform leads to the expression:

where s and \(\mathcal{L}\) represent the Laplace variable and operator, respectively.
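As an illustration, the standard Laplace-domain result for the Riemann–Liouville derivative is sketched below, assuming a lower terminal at t=0 and n−1<α≤n. Under zero initial conditions it reduces to multiplication by \(s^{\alpha}\), which is the property exploited in the controllers that follow.

```latex
\[
\mathcal{L}\left\{ {}_{0}D_{t}^{\alpha} f(t) \right\}
  = s^{\alpha}\,\mathcal{L}\left\{ f(t) \right\}
  - \sum_{k=0}^{n-1} s^{k}\; {}_{0}D_{t}^{\alpha-k-1} f(0^{+})
\]
```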

The long-standing discussion about the advantages of the different definitions is outside the scope of this paper. In short, while the Riemann–Liouville formulation involves initial conditions of fractional order, the Caputo counterpart requires integer-order initial conditions, which are easier to apply. The Grünwald–Letnikov expression is often adopted in real-time control systems because it leads directly to a discrete-time algorithm in which the time increment h is approximated by the sampling period \(T_s\). In fact, for converting expressions from continuous to discrete time, the Euler and Tustin formulae are often considered:

where z and \(T_s\) represent the Z-transform variable and the controller sampling period, respectively. Expression \(H_0\) is simply the Grünwald–Letnikov definition of the FD with the infinitesimal time increment h replaced by the sampling period \(T_s\). Weighting \(H_0\) and \(H_1\) by the factors p and 1−p leads to the arithmetic average:
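In the usual notation, the backward Euler and Tustin conversions of \(s^{\alpha}\) and their weighted average can be sketched as follows. The symbol \(H_{av}\) for the average is an assumption made here for illustration.

```latex
\[
\begin{aligned}
H_{0}\left(z^{-1}\right) &= \left[\frac{1}{T_{s}}\left(1-z^{-1}\right)\right]^{\alpha}, \\
H_{1}\left(z^{-1}\right) &= \left[\frac{2}{T_{s}}\,\frac{1-z^{-1}}{1+z^{-1}}\right]^{\alpha}, \\
H_{av}\left(z^{-1}\right) &= p\,H_{0}\left(z^{-1}\right) + (1-p)\,H_{1}\left(z^{-1}\right).
\end{aligned}
\]
```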

For obtaining rational expressions, the Taylor or Padé expansions of order r in the neighborhood of z=0 are usually adopted. In [45], several averages based on the generalized mean are evaluated, and in [46] the performances of series and fraction approximations for closed-loop discrete control systems are compared. In this paper, for simplicity, the Euler backward formula and the Taylor series expansion are considered. Therefore, for the real-valued fractional derivative and integral we have (α>0):
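The truncated series can be sketched in Python as follows. This is a minimal illustration rather than the implementation used in the experiments: it assumes the backward Euler operator \([(1-z^{-1})/T_s]^{\alpha}\) expanded in powers of \(z^{-1}\) and truncated at order r, and the function names are hypothetical. Passing a negative order gives the corresponding fractional integral.

```python
def gl_coefficients(alpha, r):
    """Coefficients c_0..c_r of (1 - z^-1)**alpha = sum_k c_k z^-k.

    Uses the recursion c_k = c_{k-1} * (1 - (alpha + 1) / k), which is
    equivalent to c_k = (-1)**k * binomial(alpha, k).
    """
    coeffs = [1.0]
    for k in range(1, r + 1):
        coeffs.append(coeffs[-1] * (1.0 - (alpha + 1.0) / k))
    return coeffs


def fractional_derivative(samples, alpha, Ts, r=10):
    """Approximate the order-alpha derivative at the newest sample.

    `samples` is ordered oldest to newest; only the last r + 1 values are used.
    """
    coeffs = gl_coefficients(alpha, r)
    recent = samples[::-1][: r + 1]  # newest sample first
    return sum(c * x for c, x in zip(coeffs, recent)) / Ts ** alpha
```

For α=1 the series collapses to the first-order backward difference, and for α=−1 every coefficient equals one, which recovers a rectangular-rule integrator over the truncation window.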

2.2 Genetic Algorithms

Genetic Algorithms (GAs) constitute a computational scheme for finding the solution of optimization problems. The GA computer simulation involves an evolving population of candidate solutions of the optimization problem, which are assessed by means of the fitness function J. The GA starts by initializing the population randomly and then evolves it towards better solutions through the iterative application of crossover, mutation, and selection operators. In each generation, a part of the population is selected to breed offspring. To avoid premature convergence towards sub-optimal solutions, and to guarantee diversity, some elements are modified randomly. The solutions are then evaluated through the fitness function, and fitter solutions are more likely to be selected to form the new population in the next iteration. The GA ends when a given termination criterion is met, for example, when a maximum number of generations N is reached or a satisfactory fitness value is attained. The GA pseudo-code is:

1. Generate an initial population.

2. Evaluate the fitness of each element in the initial population.

3. Repeat:

   (a) Select the elements with the best fitness for reproduction.

   (b) Generate a new generation through crossover, producing offspring, and evaluate their fitness.

   (c) Randomly mutate some elements in the population and evaluate their fitness.

   (d) Replace the worst-ranked part of the population with the best elements of the offspring.

4. Until the termination criterion is reached.

To ease GA convergence, a common scheme, known as elitism, consists of carrying the best elements of the population over to the next generation.
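The loop described above can be sketched in Python as follows. This is a simplified, real-coded variant under stated assumptions, not the GA used in the experiments: the blend crossover, the Gaussian mutation, the parameter defaults, and the function name `genetic_minimize` are all hypothetical choices made for illustration, and the fitness J is minimized.

```python
import random


def genetic_minimize(fitness, bounds, pop_size=40, generations=60,
                     elite=2, mutation_rate=0.2, seed=0):
    """Minimize `fitness` over the box `bounds` with a small elitist GA."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible

    def random_individual():
        return [rng.uniform(lo, hi) for lo, hi in bounds]

    population = [random_individual() for _ in range(pop_size)]
    history = []  # best fitness found in each generation

    for _ in range(generations):
        ranked = sorted(population, key=fitness)
        history.append(fitness(ranked[0]))
        parents = ranked[: pop_size // 2]               # selection
        offspring = [ind[:] for ind in ranked[:elite]]  # elitism
        while len(offspring) < pop_size:
            a, b = rng.sample(parents, 2)
            w = rng.random()
            # Blend crossover: a convex combination of the two parents.
            child = [w * x + (1.0 - w) * y for x, y in zip(a, b)]
            for i, (lo, hi) in enumerate(bounds):
                if rng.random() < mutation_rate:        # mutation
                    step = rng.gauss(0.0, 0.1 * (hi - lo))
                    child[i] = min(hi, max(lo, child[i] + step))
            offspring.append(child)
        population = offspring

    best = min(population, key=fitness)
    history.append(fitness(best))
    return best, history
```

Because the elite elements are copied unchanged, the best fitness recorded in `history` can never get worse from one generation to the next, which is the convergence aid that elitism provides.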

3 Complex-Order Controllers

3.1 Complex-Order Operators

FC theory can be adopted in control systems, and a typical case is the generalization of the classical Proportional-Integral-Derivative (PID) controller. The fractional PID, or FrPID, consists of an algorithm with the integer I and D actions replaced by their fractional generalizations of orders 0<α≤1 and 0<β≤1, yielding the transfer function:

where s denotes the Laplace variable, and \(K_p\), \(K_i\), and \(K_d\) represent the proportional, integral, and derivative gains, respectively. Therefore, the classical PID is simply a particular case where α=1 and β=1.
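As a quick numerical check of this structure, the sketch below evaluates a FrPID frequency response, assuming the usual form \(G_c(s)=K_p+K_i s^{-\alpha}+K_d s^{\beta}\). The function name is hypothetical.

```python
def frpid_response(Kp, Ki, Kd, alpha, beta, omega):
    """Frequency response G_c(j*omega) of a fractional PID, for omega > 0.

    Assumed form: G_c(s) = Kp + Ki * s**(-alpha) + Kd * s**beta,
    evaluated on the principal branch of the complex power.
    """
    s = 1j * omega
    return Kp + Ki * s ** (-alpha) + Kd * s ** beta
```

With α=β=1 the function returns the classical PID response \(K_p + K_i/(j\omega) + K_d\,j\omega\), in agreement with the particular case noted above.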

For a sine function, at steady-state, the CD of order α±ıβ is given by:

where \(\imath=\sqrt{-1}\), t denotes time, and ω is the angular frequency.

Since we are interested in applications, to obtain only real-valued results we can group the conjugate-order derivatives into the operators:

Figure 1 shows the polar diagram of the frequency response of expressions (12)–(13) for \((\alpha,\beta )= \{ (-1,1 ), (1,1 ), (\frac{1}{2},-1 ), (-\frac{1}{2},-1 ) \}\).
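The frequency response of a conjugate-order pair can be explored numerically with the sketch below. The particular grouping used here, a half-sum and a half-difference divided by 2ı of \(s^{\alpha+\imath\beta}\) and \(s^{\alpha-\imath\beta}\), is an assumption made for illustration, as are the sign convention and the function name.

```python
import cmath


def conjugate_pair(s, alpha, beta):
    """Evaluate two real-coefficient groupings of s**(alpha +/- i*beta).

    Returns (half_sum, half_diff) on the principal branch of the logarithm;
    s must not lie on the negative real axis.
    """
    order = complex(alpha, beta)
    upper = cmath.exp(order * cmath.log(s))              # s**(alpha + i*beta)
    lower = cmath.exp(order.conjugate() * cmath.log(s))  # s**(alpha - i*beta)
    half_sum = 0.5 * (upper + lower)
    half_diff = (upper - lower) / 2j
    return half_sum, half_diff
```

Both groupings satisfy \(G(\bar{s})=\overline{G(s)}\), the symmetry of a transfer function with a real-valued time response, and for β=0 they reduce to \(s^{\alpha}\) and zero, respectively.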

For real-time calculation, Taylor expansions of order r in the neighborhood of z=0 are adopted. Therefore, the pair of operators becomes:

where \(\psi_{1}(z^{-1})\) and \(\psi_{2}(z^{-1})\) are given by:

So, we can define the operators \(\varphi_{1}(z^{-1})\) and \(\varphi_{2}(z^{-1})\) such that

We verify that the pair of operators \(\{\varphi_{1}(z^{-1}),\varphi_{2}(z^{-1})\}\) represents a weighted average of \(\psi_{1}\) and \(\psi_{2}\) by means of the terms \(\cos[\beta\ln(T_{s})]\) and \(\sin[\beta\ln(T_{s})]\). These factors are due to the presence of \(\frac{1}{T_{s}^{\alpha\pm\imath\beta}}\) in (14)–(15). In the calculation of FDs of real order, the term \(\frac {1}{T_{s}^{\alpha}}\) is usually included in the control gain and only the z-series is considered. In this line of thought, the pair \(\{\psi_{1}(z^{-1}),\psi_{2}(z^{-1})\}\) is also considered as another set of possible complex-order operators.
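The role of the cosine and sine factors can be checked numerically with the sketch below. It rests on assumptions made for illustration only: the series coefficients of \((1-z^{-1})^{\alpha+\imath\beta}\) are split into real and imaginary parts, which are taken here as stand-ins for the two z-series, and the sign conventions and function names are hypothetical.

```python
import math


def complex_gl_coefficients(alpha, beta, r):
    """Coefficients of (1 - z^-1)**(alpha + i*beta) up to z^-r."""
    order = complex(alpha, beta)
    coeffs = [complex(1.0, 0.0)]
    for k in range(1, r + 1):
        coeffs.append(coeffs[-1] * (1.0 - (order + 1.0) / k))
    return coeffs


def psi_pair(alpha, beta, r):
    """Real and imaginary parts of the series, without the 1/Ts factor."""
    c = complex_gl_coefficients(alpha, beta, r)
    return [ck.real for ck in c], [ck.imag for ck in c]


def phi_pair(alpha, beta, Ts, r):
    """Same split after multiplying the series by Ts**-(alpha + i*beta)."""
    psi1, psi2 = psi_pair(alpha, beta, r)
    scale = Ts ** (-alpha)
    theta = beta * math.log(Ts)
    c, s = math.cos(theta), math.sin(theta)
    phi1 = [scale * (c * a + s * b) for a, b in zip(psi1, psi2)]
    phi2 = [scale * (c * b - s * a) for a, b in zip(psi1, psi2)]
    return phi1, phi2
```

Since \(T_{s}^{-(\alpha+\imath\beta)} = T_{s}^{-\alpha}\{\cos[\beta\ln(T_{s})]-\imath\sin[\beta\ln(T_{s})]\}\), multiplying the series by this factor mixes the real and imaginary parts through exactly these two weights, which is what `phi_pair` computes.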

3.2 Application in Control Systems

We start by defining an appropriate optimization index in the perspective of system control. We consider the integral square error (ISE) and integral time square error (ITSE) defined as:

where e(t) represents the closed-loop control system error and \(T_w\) is a time period sufficiently long for the response to settle close to steady state. Other optimization indexes, such as the IAE and ITAE, can also be adopted, leading to the same type of conclusions.
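Both indices are straightforward to evaluate from a sampled error signal. The sketch below assumes the standard definitions \(\mathrm{ISE}=\int_{0}^{T_w} e^{2}(t)\,dt\) and \(\mathrm{ITSE}=\int_{0}^{T_w} t\,e^{2}(t)\,dt\) and approximates the integrals with the trapezoidal rule; the function name and the choice of quadrature are assumptions made for illustration.

```python
def ise_itse(times, errors):
    """Trapezoidal estimates of ISE and ITSE from sampled error data.

    `times` and `errors` are equal-length sequences, with times increasing.
    """
    ise = 0.0
    itse = 0.0
    for k in range(1, len(times)):
        dt = times[k] - times[k - 1]
        f0 = errors[k - 1] ** 2
        f1 = errors[k] ** 2
        ise += 0.5 * dt * (f0 + f1)
        itse += 0.5 * dt * (times[k - 1] * f0 + times[k] * f1)
    return ise, itse
```

For a test signal such as \(e(t)=e^{-t}\) over a long window, the two estimates approach the analytical values 1/2 and 1/4, which gives a simple sanity check of the quadrature.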

The set of controllers to be compared consists of four options:

- PID,

- FrPID,

- proportional action and pair \(\{\psi_{1}(z^{-1}),\psi_{2}(z^{-1})\}\),

- proportional action and pair \(\{\varphi_{1}(z^{-1}),\varphi_{2}(z^{-1})\}\)

given by

where \(k_0\), \(k_1\), and \(k_2\) are gains.

Three systems of increasing dynamical difficulty are considered, namely:

- \(S_1\), a linear second order system with transfer function \(G_{p} (s )=\frac{1}{s (s+1 )}\),

- \(S_2\), a second order system \(G_{p} (s )=\frac{1}{s (s+1 )}\) followed by a static backlash [47, 48] with width Δ=1, and

- \(S_3\), a second order system with delay \(G_{p} (s )=\frac{1}{s (s+1 )}e^{-s}\).

For the experiments, either J=ISE or J=ITSE is optimized by means of a GA with a population of N=500 elements and I=200 iterations. Elitism is used and, during evolution, any element that yields an unstable system is eliminated and replaced by a new randomly generated element, so that the population size remains constant. The closed-loop system is excited by a unit step input, the sampling period is \(T_s=0.005\), the truncation order of the Taylor series is r=10, the system is simulated using a fourth-order Runge–Kutta algorithm, and the time window for the calculation of the optimization indices is \(T_w=15\).
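The simulation loop for the linear case \(S_1\) can be sketched as follows. This is an illustration under stated assumptions rather than the code used in the experiments: the controller is any callable that maps the sampled error to a control value held constant over one sampling period, the plant states start at zero, and the function name is hypothetical.

```python
def simulate_step(controller, Ts=0.005, Tw=15.0):
    """Unit-step response of G_p(s) = 1/(s(s+1)) in closed loop.

    Returns the sampling instants and the error e = 1 - y at each instant.
    """
    def plant(x, u):
        # States: x[0] = y and x[1] = dy/dt, so that y'' + y' = u.
        return (x[1], -x[1] + u)

    def rk4_step(x, u, h):
        # Classical fourth-order Runge-Kutta step with u held constant.
        k1 = plant(x, u)
        k2 = plant((x[0] + 0.5 * h * k1[0], x[1] + 0.5 * h * k1[1]), u)
        k3 = plant((x[0] + 0.5 * h * k2[0], x[1] + 0.5 * h * k2[1]), u)
        k4 = plant((x[0] + h * k3[0], x[1] + h * k3[1]), u)
        return (x[0] + h * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]) / 6.0,
                x[1] + h * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]) / 6.0)

    x = (0.0, 0.0)
    steps = int(round(Tw / Ts))
    times, errors = [], []
    for k in range(steps + 1):
        e = 1.0 - x[0]          # unit step reference minus output
        times.append(k * Ts)
        errors.append(e)
        u = controller(e)       # control sample for this period
        x = rk4_step(x, u, Ts)
    return times, errors
```

For example, `simulate_step(lambda e: 1.0 * e)` closes the loop with a unit proportional gain. A GA fitness function would wrap such a call, compute the chosen index from the returned error samples, and reject parameter sets whose response diverges.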

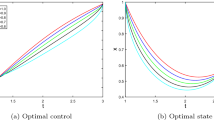

Figures 2, 3 and 4 show the closed-loop system time response for the ISE and ITSE indices under the action of the four controllers for systems \(S_1\), \(S_2\), and \(S_3\), respectively.

The results for the two optimization indices, four controllers, and three types of systems are summarized in Tables 1 and 2. We verify that the new complex-order controllers lead to better time responses in all cases. Regarding the two proposed variants, we observe almost identical behavior, with \(G_{c3}\) slightly better than \(G_{c4}\).

In conclusion, complex-order algorithms show promising performance and may constitute the next step in the development of non-integer controllers.

4 Conclusions

The advances in FC demonstrate the importance of this mathematical concept. In recent years, several control algorithms based on real-order FDs have been proposed. This paper proposed a further step of generalization by adopting complex-order operators. For that purpose, several combinations of CDs were examined. Two alternative complex operators were proposed and their performance was compared with classical integer-order and fractional-order control actions. The tuning of the controllers was accomplished by means of genetic algorithms and three systems were evaluated. The results reveal the superior performance and the adaptability of the complex-order operators.

References

Oldham, K., Spanier, J.: The Fractional Calculus: Theory and Application of Differentiation and Integration to Arbitrary Order. Academic Press, New York (1974)

Miller, K., Ross, B.: An Introduction to the Fractional Calculus and Fractional Differential Equations. Wiley, New York (1993)

Samko, S., Kilbas, A., Marichev, O.: Fractional Integrals and Derivatives: Theory and Applications. Gordon and Breach, London (1993)

Podlubny, I.: Fractional Differential Equations. Academic Press, San Diego (1999)

Kilbas, A., Srivastava, H.M., Trujillo, J.: Theory and Applications of Fractional Differential Equations. Elsevier, Amsterdam (2006)

Machado, J.T., Kiryakova, V., Mainardi, F.: Recent history of fractional calculus. Commun. Nonlinear Sci. Numer. Simul. 16(3), 1140–1153 (2011)

Baleanu, D., Diethelm, K., Scalas, E., Trujillo, J.J.: Fractional Calculus Models and Numerical Methods. Series on Complexity, Nonlinearity and Chaos. World Scientific, Singapore (2012)

Oustaloup, A.: La Commande CRONE: Commande Robuste D’Ordre Non Entier. Hermès, Paris (1991)

Machado, J.T.: Analysis and design of fractional-order digital control systems. Syst. Anal. Model. Simul. 27(2–3), 107–122 (1997)

Podlubny, I.: Fractional-order systems and \(PI^{\lambda}D^{\mu}\)-controllers. IEEE Trans. Autom. Control 44(1), 208–213 (1999)

Zaslavsky, G.: Hamiltonian Chaos and Fractional Dynamics. Oxford University Press, New York (2005)

Magin, R.: Fractional Calculus in Bioengineering. Begell House, Redding (2006)

Baleanu, D., Ozlem, D., Agrawal, O.: A central difference numerical scheme for fractional optimal control problems. J. Vib. Control 15(4), 583–597 (2009)

Diethelm, K.: The Analysis of Fractional Differential Equations. Springer, Berlin (2010)

Agrawal, O., Ozlem, D., Baleanu, D.: Fractional optimal control problems with several state and control variables. J. Vib. Control 16(13), 1967–1976 (2010)

Mainardi, F.: Fractional Calculus and Waves in Linear Viscoelasticity: an Introduction to Mathematical Models. Imperial College Press, London (2010)

Monje, C.A., Chen, Y., Vinagre, B.M., Xue, D., Feliu, V.: Fractional-order Systems and Controls: Fundamentals and Applications. Advances in Industrial Control. Springer, Berlin (2010)

Tarasov, V.E.: Fractional Dynamics: Applications of Fractional Calculus to Dynamics of Particles, Fields and Media. Nonlinear Physical Science. Springer, Berlin (2010)

Petráš, I.: Fractional-Order Nonlinear Systems: Modeling, Analysis and Simulation. Nonlinear Physical Science. Springer, Berlin (2011)

Al-Alaoui, M.A.: Novel digital integrator and differentiator. Electron. Lett. 29(4), 376–378 (1993)

Machado, J.T.: Discrete-time fractional-order controllers. Fract. Calc. Appl. Anal. 4(1), 47–66 (2001)

Tseng, C.C.: Design of fractional order digital FIR differentiators. IEEE Signal Process. Lett. 8(3), 77–79 (2001)

Chen, Y.Q., Moore, K.L.: Discretization schemes for fractional-order differentiators and integrators. IEEE Trans. Circuits Syst. I, Fundam. Theory Appl. 49(3), 363–367 (2002)

Vinagre, B.M., Chen, Y.Q., Petras, I.: Two direct Tustin discretization methods for fractional-order differentiator/integrator. J. Franklin Inst. 340(5), 349–362 (2003)

Chen, Y.Q., Vinagre, B.M.: A new IIR-type digital fractional order differentiator. Signal Process. 83(11), 2359–2365 (2003)

Barbosa, R.S., Machado, J.T., Silva, M.: Time domain design of fractional differintegrators using least squares approximations. Signal Process. 86(10), 2567–2581 (2006)

Holland, J.H.: Adaptation in Natural and Artificial Systems. University of Michigan Press, Ann Arbor (1975)

Goldberg, D.E.: Genetic Algorithms in Search, Optimization, and Machine Learning. Addison-Wesley, Reading (1989)

Cao, J.-Y., Cao, B.-G.: Design of fractional order controller based on particle swarm optimization. Int. J. Control. Autom. Syst. 4(6), 775–781 (2006)

Valério, D., Sá da Costa, J.: Tuning of fractional controllers minimising \(H_2\) and \(H_\infty\) norms. Acta Polytech. Hung. 3(4), 55–70 (2006)

Maiti, D., Acharya, A., Chakraborty, M., Konar, A., Janarthanan, R.: Tuning PID and \(PI^{\lambda}D^{\delta}\) controllers using the integral time absolute error criterion. In: 2008 IEEE Fourth International Conference on Information and Automation for Sustainability, Colombo, Sri Lanka (2008)

Biswas, A., Das, S., Abraham, A., Dasgupta, S.: Design of fractional-order \(PI^{\lambda}D^{\mu}\) controllers with an improved differential evolution. Eng. Appl. Artif. Intell. 22, 343–350 (2009)

Machado, J.T., Galhano, A., Oliveira, A.M., Tar, J.K.: Optimal approximation of fractional derivatives through discrete-time fractions using genetic algorithms. Commun. Nonlinear Sci. Numer. Simul. 15(3), 482–490 (2010)

Machado, J.T.: Optimal tuning of fractional controllers using genetic algorithms. Nonlinear Dyn. 62(1–2), 447–452 (2010)

Padhee, S., Gautam, A., Singh, Y., Kaur, G.: A novel evolutionary tuning method for fractional order PID controller. Int. J. Soft Comput. Eng. 1(3), 1–9 (2011)

Gao, Z., Liao, X.: Rational approximation for fractional-order system by particle swarm optimization. Nonlinear Dyn. 67(2), 1387–1395 (2012)

Hartley, T.T., Adams, J.L., Lorenzo, C.F.: Complex-order distributions. In: ASME International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Long Beach, CA, DETC2005-84952 (2005)

Hartley, T.T., Lorenzo, C.F., Adams, J.L.: Conjugated-order differintegrals. In: ASME International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, Long Beach, CA, DETC2005-84951 (2005)

Silva, M.F., Machado, J.T., Barbosa, R.S.: Complex-order dynamics in hexapod locomotion. Signal Process. 86(10), 2785–2793 (2006)

Adams, J.L., Hartley, T.T., Lorenzo, C.F.: Complex-order distributions using conjugated-order differintegrals. In: Sabatier, J., Agrawal, O.P., Tenreiro Machado, J.A. (eds.) Theoretical Developments and Applications in Physics and Engineering, pp. 347–360. Springer, Berlin (2007)

Barbosa, R.S., Machado, J.T., Silva, M.F.: Discretization of complex-order algorithms for control applications. J. Vib. Control 14(9–10), 1349–1361 (2008)

Adams, J.L., Hartley, T.T., Lorenzo, C.F.: Identification of complex order-distributions. J. Vib. Control 14(9–10), 1375–1388 (2008)

Pinto, C.M., Machado, J.T.: Complex-order Van der Pol oscillator. Nonlinear Dyn. 65(3), 247–254 (2011)

Pinto, C.M., Machado, J.T.: Complex order biped rhythms. Int. J. Bifurc. Chaos Appl. Sci. Eng. 21(10), 3053–3061 (2011)

Machado, J.T., Galhano, A., Oliveira, A.M., Tar, J.K.: Approximating fractional derivatives through the generalized mean. Commun. Nonlinear Sci. Numer. Simul. 14(11), 3723–3730 (2009)

Machado, J.T.: Multidimensional scaling analysis of fractional systems. Comput. Math. Appl. (2012). doi:10.1016/j.camwa.2012.02.069

Barbosa, R.S., Machado, J.T.: Describing function analysis of systems with impacts and backlash. Nonlinear Dyn. 29(1–4), 235–250 (2002)

Duarte, F., Machado, J.T.: Describing function of two masses with backlash. Nonlinear Dyn. 56(4), 409–413 (2009)

Cite this article

Machado, J.A.T. Optimal Controllers with Complex Order Derivatives. J Optim Theory Appl 156, 2–12 (2013). https://doi.org/10.1007/s10957-012-0169-4

DOI: https://doi.org/10.1007/s10957-012-0169-4