Abstract

This study aimed to develop and validate a problem-based learning environment inventory that would help teachers and researchers better understand students’ views of problem-based learning environments. The development of the inventory comprised four steps: Item Formulation, Content Validation, Construct Validation and Reliability Calculation. The inventory has 23 items allocated to four scales: (1) Student Interaction and Collaboration; (2) Teacher Support; (3) Student Responsibility; (4) Quality of Problem. Each item had a factor loading of at least 0.40 on its own scale and less than 0.40 on all other scales. The factor analysis showed that the four scales accounted for 53.72% of the total variance. Alpha reliability coefficients for the four scales ranged from 0.80 to 0.92. These findings indicate that the Problem-based Learning Environment Inventory is a valid and reliable instrument for use in education.


Introduction

The purpose of this study is to describe the development of an instrument for assessing undergraduate science students’ perceptions of problem-based learning (PBL) environments in higher education. Such an instrument is expected to make an important contribution to PBL studies, since it can be used across a wide range of domains. The Problem-based Learning Environment Inventory (PBLEI) is also expected to help teachers and researchers better understand students’ general and specific views of the PBL process. In addition, measures of PBL environments can assist curriculum developers in their efforts to change and improve curricula, and instruments that assess students’ perceptions of the learning environment contribute to building effective learning environments. An instrument of this kind should therefore help teachers, researchers and curriculum designers working with PBL to assess whether PBL is happening in a given curriculum implementation, and what kind. It should also help those evaluating or researching PBL to understand and explain the ‘outcomes’ found in their investigations and to assess the fidelity of implementation. This article first presents background on PBL and on the field of learning environments. Second, it describes in detail the stages in the development of the PBLEI, including its factorial validity and reliability. Finally, it discusses the contributions of the study to research in PBL, science education and learning environments.

What is Problem-Based Learning?

Researchers in the field of learning have long emphasized that acquiring thinking and problem-solving skills is a primary objective of education (Pellegrino et al. 2001). In a similar vein, Allan (1996) describes the aims of higher education in terms of desired learning outcomes: subject-based, personal transferable, and generic cognitive outcomes. As technology rapidly becomes more widespread, societies need graduates who not only possess a knowledge base but can also apply the skills to solve, analyse, synthesise, present and evaluate contemporary problems. However, educational practices have been criticised for not fostering such qualities. Research has shown that students acquire knowledge but cannot use it to solve complex everyday problems (Dahlgren et al. 1998). According to Tynjala (1999), the key challenge for today’s higher education is to develop and implement instructional practices that integrate subject-based knowledge with personal transferable and generic cognitive skills. To this end, many educational approaches based on a constructivist view of learning have been designed (e.g., Gijbels et al. 2006; Gibson and Chase 2002; Johnson et al. 1994; Boud and Feletti 1997). Such designs aim to develop and implement effective learning environments consistent with the constructivist theory of learning, in which students construct knowledge and skills through interaction with the environment.

The pedagogical implications of the constructivist theory of learning lead to the defining features of effective learning environments as follows:

- Knowledge is not absolute, but is rather constructed by the learner based on previous knowledge and overall views of the world (Savin-Baden and Major 2004).
- Learning is the process of constructing knowledge individually and in interaction with others.
- Problematic situations provide favorable conditions for learning (Roth 1994).
- Learning occurs in a context, and understanding of the context is part of what is learned.
- Assessment reflects an understanding of learning as a multidimensional process and of the learners’ development from novice to expert practitioner (MacDonald and Savin-Baden 2003).
- A teacher is a coach or a partner rather than a provider of knowledge.

These characteristics are consistent with the features of newer educational approaches such as problem-based learning (PBL). Educational approaches based on these principles embody a curriculum that seeks to incorporate the pedagogical implications of constructivism, and they are designed to create an effective learning environment. Various researchers have described the characteristics of PBL environments (Barrows 1986; Ward and Lee 2002; Hmelo-Silver 2004). PBL is a powerful vehicle for creating such environments: real-world problems become a context in which students learn, in depth, what they need to know as well as what they want to know (Checkly 1997). It aims to help students develop higher-order thinking skills and a substantial disciplinary knowledge base by placing them in the active role of practitioners confronted with a complex everyday problem (i.e., an ill-structured problem) that reflects the real world (Senocak et al. 2007).

Problem-based learning was originally developed in undergraduate college programs (Barrows and Tamblyn 1980) and later adapted for use in elementary and high school settings (Chin and Chia 2005; Tarhan et al. 2008). In PBL, students learn by solving problems and reflecting on their experiences (Barrows and Tamblyn 1980). PBL helps students become active learners by situating learning in real-life problems and making students responsible for their own learning. Ill-structured problems act as the stimulus and focus for student activity and learning (Boud and Feletti 1997). Because PBL starts with an ill-structured problem to be solved, students working in a PBL environment need to be skilled in problem solving or critical thinking. Gallagher et al. (1995) define an ill-structured problem as a real-life problem that can be solved in more than one way; such a problem is therefore presented without all the information needed to solve it. Ill-structured problems are those in which (a) the initial situation does not contain all the information necessary to develop a solution, (b) there is no single right way to approach the problem-solving task, (c) the problem definition changes as new information is gathered, and (d) students can never be completely sure that they have selected the correct solution (Gallagher et al. 1995; Greenwald 2000).

Hassan et al. (2004) state that PBL has several distinct characteristics that can be identified and used in designing a curriculum: (a) Problems drive the curriculum: the problems do not test skills; they assist the development of the skills themselves. (b) The problems are truly ill-structured: there is no single solution. (c) Students solve the problems: the teacher is a metacognitive coach or facilitator. (d) Students are given only guidelines for approaching the problems: there is no single formula for approaching any problem. (e) Authentic, performance-based assessment is used: this kind of assessment is a seamless part and end of the instruction. Barrows (1996) similarly defined six fundamental characteristics of PBL environments. First, learning needs to be student-centred. Second, learning has to occur in small student groups. Third, the teacher should act as a facilitator or metacognitive coach in the learning process. Fourth, ill-structured problems derived from everyday events should be encountered in the learning sequence before any information about the target knowledge is given. Fifth, the problems encountered should be used as a tool for acquiring the required knowledge and the problem-solving skills necessary to eventually solve the problem. Finally, new information is acquired through self-directed learning.

In PBL there is a shift in the roles of students and teachers. The student, not the teacher, takes primary responsibility for what is learned and how. Rather than acting as the “sage on the stage”, the teacher acts as a facilitator or metacognitive coach, raising questions that challenge student thinking and encourage self-directed learning, so that the search for meaning becomes a personal construction of the learner. PBL teachers act as models, thinking aloud with the students and practicing the behavior they want to instill in them. They use questions that stimulate students’ higher-order thinking, such as “What is going on here?”, “What do we need to know more about?” and “What did we do during the problem that was effective?”. In PBL, the teacher/facilitator is an expert learner, able to model good strategies for learning and thinking, rather than an expert in the content itself (Barrows 1996). Hmelo-Silver (2004) emphasized that the facilitator directly supports several of the goals of PBL: s/he not only models the problem-solving and self-directed learning processes but also helps students learn how to collaborate. The underlying assumption is that when facilitators support the learning and collaboration processes, students are better able to construct flexible knowledge for themselves.

One of the most characteristic features of PBL is small-group work. To enable students to solve ill-structured problems successfully, they are put to work in collaborative groups where they can build on each other’s knowledge. Working in such groups also helps students learn to articulate and justify their ideas, since one cannot work successfully in a group without both understanding others and making oneself clear to them. The teacher, acting as a facilitator, helps students to manage their collaboration, to stay on track in solving problems, and to reflect on their experiences so that they learn the broadest possible range of knowledge and skills from them.

Although many educators have emphasized the impact of assessment on the learning process, there is little agreement on assessment methodologies among PBL advocates (Boud and Feletti 1997). Specific instruments have been proposed for use in problem-based environments, but few complete, integrated systems have been presented. PBL recognizes the validity of differentiated learning outcomes, and students are free to choose and define their own learning goals. Therefore, the relationship between learning goals and assessment methods has to be looser than in a conventional course (Savin-Baden and Wilkie 2004). Savin-Baden (2004), for example, suggested involving students in the assessment process as one way of doing this.

Problem-based learning has become increasingly widespread across all educational levels and areas of teaching, from medical education (e.g., Albanese and Mitchell 1993; Barrows 1986), nursing (e.g., Habib et al. 1999; Rideout et al. 2002), pharmacy (e.g., Miller 2003), law (e.g., Driessen and Van Der Vleuten 2000) and engineering (e.g., Polonco et al. 2004) to, in recent years, science education (e.g., West 1992; Gallagher et al. 1995; Peterson and Treagust 1998; Ying 2003; Soderberg and Price 2003; Yuzhi 2003; Senocak et al. 2007). As an instructional approach, PBL has high potential for promoting inquiry in science classrooms. However, there is little research on what actually happens in PBL environments.

Background of the Field of Learning Environments

The focus of this study is the development of a learning environment instrument; it is therefore essential to examine the learning environment literature to ensure that the present study builds upon and extends research in the field. In the last three decades or so, considerable international interest has been shown in the study of learning environments. The first learning environment instruments were developed in the late 1960s and early 1970s (Walberg and Anderson 1968a, b). The Learning Environment Inventory (Walberg and Anderson 1968a) was developed to evaluate student perceptions of their secondary physics classrooms. At the same time, Moos began studying environments as diverse as psychiatric hospitals, university residences, conventional work sites and correctional institutions. His studies eventually led him to schools, where he developed the Classroom Environment Scale (Moos 1979; Moos and Trickett 1974). In the last 30 years, a significant number of studies have focused on the conceptualization, assessment and study of student perceptions of the psychological and social characteristics of the classroom learning environment (Fraser and Walberg 1991). Instruments from this work include the Learning Environment Inventory (Fraser et al. 1982), the Individualized Classroom Environment Questionnaire (Rentoul and Fraser 1979), the My Class Inventory (Fisher and Fraser 1981; Fraser et al. 1982), the College and University Classroom Environment Inventory (Fraser and Treagust 1986), the Constructivist Learning Environment Survey (Taylor et al. 1993), the Science Laboratory Environment Inventory (Fraser et al. 1995), the Science Outdoor Learning Environment Inventory (Orion et al. 1997) and the What Is Happening In this Class? questionnaire (Aldridge et al. 1999).

Fraser (2002a) states that learning environment research instruments have been used in multiple countries, at multiple educational levels and in many subject areas, by “hundreds of researchers, thousands of teachers, and millions of students around the world” (Fraser 2002a, p. 7). The strongest tradition in the learning environment literature has been the investigation of associations between students’ cognitive and affective learning outcomes and their perceptions of the learning environment (Fraser 1998). Across nations, languages, cultures, subject matter and educational levels, this research has consistently found appreciable associations between perceptions of the classroom environment and student outcomes (Fraser 2002b). Affective student outcomes are often considered integral to studies of educational environments (Walker and Fraser 2005, p. 294). These results show that learning environment characteristics are important in curriculum evaluation studies and can provide teachers with useful information for improving their classroom environments.

In parallel with learning environment research, much attention has been given to developing and using instruments that assess science learning environments from the students’ perspective (Fraser 1986, 1994; Fraser and Walberg 1991; Hofstein et al. 1999; Tsai 2003; Lang et al. 2005). Science educators have developed instruments such as the Science Laboratory Environment Inventory, the Science Outdoor Learning Environment Inventory and the Industrial Classroom Learning Environment Inventory to assess students’ or teachers’ perceptions of learning environments. Several researchers have used these instruments to examine associations between student outcomes and student perceptions of the learning environment in science classes. For example, Wong and Fraser (1996) used the Science Laboratory Environment Inventory to investigate the relationship between students’ perceptions and their attitudinal and cognitive outcomes. They found that the attitudes of a group of tenth graders towards chemistry were likely to be enhanced in chemistry environments where laboratory work was linked with the theory learned in non-laboratory classes and where clear rules were provided. Hofstein et al. (1999) used the Industrial Classroom Learning Environment Inventory to study how learning through industrial chemistry case studies affects students’ perceptions of their classroom learning environment and their interest in chemistry. They found that the case studies helped provide students with a relevant chemistry classroom learning environment, and that teachers who had attended an intensive training workshop were the most successful in presenting the relevance of chemistry in the case studies.
Learning environment researchers have commonly suggested that the findings of this type of study provide a better understanding of students’ perceptions of their existing learning environments, as well as useful information for teachers, administrators and other stakeholders about aspects of the learning environment that could improve students’ attitudes and achievement.

Although the field of learning environments research has a long and illustrious history involving a variety of instruments, few studies have focused on developing an instrument for PBL environments. This study is one of the first attempts to develop such an instrument, the PBLEI, using science students’ perceptions in undergraduate-level PBL-based science courses.

Method

Sample

The target population for this study was science students in PBL classes at universities and colleges. The sample consisted of 387 undergraduate students enrolled in PBL-based science courses in eleven classes during the academic years 2007, 2008 and 2009. The participants went through five consecutive stages during these courses. In stage 1, the students were given an ill-structured problem statement, told to read it carefully and encouraged to write down their ideas about the problem. In stage 2, the students identified learning issues related to the problem and organized them around three focus questions on a ‘Need-to-Know’ worksheet: (a) What do you know? (b) What do you need to know? (c) How can you find out what you need to know? Each group recorded its ideas and questions on this worksheet regularly. In stage 3, the students gathered data to answer their own questions. Some used the science laboratory to carry out investigations, some looked up information in print and electronic resources through library research and the internet, and others consulted expert professionals. In stage 4, the students reported on what they had done and prepared a report for presentation to the class. In stage 5, each group gave a 5–10 min oral presentation on what they had learned about their problem. The students also submitted a group report documenting the group’s findings and details of the inquiry process. The lecturer evaluated the groups using criteria related to both the process and the products of the project work, including the oral presentation. After the courses, the participants were asked to complete the inventory based on their experiences of PBL activities as learners in science courses.

Stages in the Development of the Problem-Based Learning Environment Inventory

The development of the PBLEI followed stages commonly used in developing learning environment instruments (Fraser 1986; Jegede et al. 1998). These stages comprise identifying crucial learning environment characteristics so as to cover Moos’ (1974) three dimensions of social organization; writing items that fit these characteristics; and item analysis, validation and reliability procedures. The steps involved in each stage are described below:

1. Item formulation
2. Content validation
3. Construct validation
4. Reliability calculation

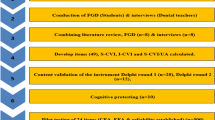

Stage 1: Item Formulation

This stage included three steps that led to the identification and development of the PBLEI. First, the literature on learning environments and PBL was reviewed extensively to identify the key characteristics of high-quality PBL environments. The key characteristics described in the literature (Schmidt 1983; Barrows 1986; Norman and Schmidt 1992; Gallagher et al. 1995; Macdonald 2001; Ward and Lee 2002; Savin-Baden 2004) include an ill-structured problem situated in a real-life context, a teacher acting as a metacognitive coach, students working in collaboration, and students’ reliance on acquiring knowledge through their own effort (Grasel et al. 2001; Ward and Lee 2002; Mackenzie et al. 2003; Hendry et al. 2003; Lindblom-Ylanne et al. 2003; Chin and Chia 2005; Savin-Baden and Major 2004; Spronken-Smith 2005). Second, the author wrote a large pool of items for the PBLEI. These items were based on the educational philosophy of PBL, which treats the following as key factors for meaningful learning: students in small groups deciding what they need to study in order to solve the ill-structured problem; a problem relevant to real-life situations that helps students link theory and practice; teachers acting as coaches and facilitators; students reflecting on the learning process; and teaching both a method of approach and an attitude towards problem solving. Finally, one science educator and one environmental tutor examined the items and revised them through discussion until they reached agreement. This step produced the initial 52-item version of the PBLEI.

Stage 2: Content Validation

For content validation, a panel of three science educators, two lecturers and one environmental tutor, all with experience in PBL, was given the list of 52 items. They were asked to assess the quality of each item, check its classification in the PBLEI, and suggest any necessary revisions. After this procedure, the panel agreed on 28 items.

Stage 3: Construct Validation

The 28-item version of the PBLEI was administered to 387 undergraduate students who had participated in PBL-based science activities. Construct validity was examined in two ways: factor analysis, to identify items whose removal would improve the factor structure of the inventory, and internal consistency reliability analysis, to determine the extent to which items within a scale measure the same construct as the other items in that scale (Crocker and Algina 1986). Before the factor analysis, the Kaiser-Meyer-Olkin (KMO) measure and Bartlett’s Test of Sphericity were used to examine whether the sample data were suitable for factor analysis (Kaiser 1974; Bartlett 1954). KMO values range from 0 to 1; values above 0.80 are regarded as meritorious and values above 0.90 as marvelous, indicating that the data are adequate for factor analysis (Kaiser 1974). Bartlett’s Test of Sphericity should be significant (Bartlett 1954). Here, Bartlett’s test was significant (reported as p = 0.00) and the KMO value was above 0.80, so the sample data were adequate for factor analysis. The data were then analyzed with principal component analysis to explore the component structure underlying the instrument. Two items (Items 26 and 28) were omitted because they did not adequately measure the components of the PBLEI, and three items (Items 15, 18 and 25) were eliminated because their loadings on their a priori factors were below 0.40. The final inventory therefore consisted of 23 items. Four factors were retained, accounting for 53.72% of the total variance. The results of the factor analysis are summarized in Table 1.
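Both suitability checks can be computed from the item correlation matrix R. The sketch below is a minimal pure-Python illustration under stated assumptions, not the software used in this study: it assumes R is a positive-definite p × p correlation matrix, uses Bartlett’s statistic χ² = −(n − 1 − (2p + 5)/6) ln|R| with p(p − 1)/2 degrees of freedom, and computes the overall KMO from the partial correlations derived from R⁻¹. The function names (`bartlett_sphericity`, `kmo`) are hypothetical. The p-value is not computed here; it could be obtained from the chi-square survival function, for example `scipy.stats.chi2.sf(chi2, df)`.

```python
import math

def invert(matrix):
    """Inverse of a square matrix by Gauss-Jordan elimination with partial pivoting."""
    n = len(matrix)
    aug = [[float(v) for v in row] + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

def log_det(matrix):
    """ln|R| for a positive-definite matrix (elimination pivots are all positive)."""
    n = len(matrix)
    a = [[float(v) for v in row] for row in matrix]
    total = 0.0
    for col in range(n):
        pivot = a[col][col]
        if pivot <= 0:
            raise ValueError("matrix is not positive definite")
        total += math.log(pivot)
        for r in range(col + 1, n):
            factor = a[r][col] / pivot
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return total

def bartlett_sphericity(R, n_obs):
    """Bartlett's chi-square statistic and degrees of freedom for H0: R = I."""
    p = len(R)
    chi2 = -(n_obs - 1 - (2 * p + 5) / 6) * log_det(R)
    df = p * (p - 1) // 2
    return chi2, df

def kmo(R):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    S = invert(R)
    p = len(R)
    r2 = a2 = 0.0
    for i in range(p):
        for j in range(p):
            if i != j:
                r2 += R[i][j] ** 2
                # Partial correlation of items i and j, controlling for all other items.
                partial = -S[i][j] / math.sqrt(S[i][i] * S[j][j])
                a2 += partial ** 2
    return r2 / (r2 + a2)

if __name__ == "__main__":
    # Hypothetical 3-item correlation matrix, not data from this study.
    R = [[1.0, 0.5, 0.5], [0.5, 1.0, 0.5], [0.5, 0.5, 1.0]]
    chi2, df = bartlett_sphericity(R, n_obs=387)
    print(f"Bartlett chi2 = {chi2:.2f}, df = {df}, KMO = {kmo(R):.3f}")
```

With a real 28-item correlation matrix, the same calls would return a 28 × 27 / 2 = 378 degree-of-freedom test and a single overall KMO value to compare against Kaiser’s thresholds.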

After examining the items in each factor, it was concluded that each factor covered a different dimension of the problem-based learning environment. The factors were therefore used as scales in further analyses, and each scale was given a title reflecting its general meaning. As seen in Table 1, the Teacher Support scale was developed with eight items, and factor analysis eliminated one. Of the seven items developed for Student Responsibility, only one was lost. The Student Interaction and Collaboration scale was developed with six items, none of which was lost. Finally, the Quality of Problem scale was conceived with five items, one of which was eliminated in the factor analysis. The final instrument thus consisted of four scales and 23 items.

Table 1 also reports the percentage of variance for each scale, as well as the factor loadings for the remaining 23 items. The Teacher Support scale accounted for the highest proportion of variance among the items, at 17.68%, followed by the Student Responsibility scale, which explained an additional 13.86%. Together these two scales accounted for 31.54% of the variance, more than half of the 53.72% explained by the PBLEI as a whole. To a lesser degree, the Student Interaction and Collaboration scale explained 11.40% of the variance and the Quality of Problem scale 10.78%. The cumulative variance explained by all four PBLEI scales was 53.72%. A brief description of the four scales is presented in Table 2, which gives the name of each scale, its meaning and a sample item for each dimension.
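The variance figures in this paragraph can be checked with a short calculation. The percentages below are those reported for the four scales; the running total assumes the scales are listed in the order in which they are reported above.

```python
# Percentage of total variance explained by each PBLEI scale, as reported above.
variance = {
    "Teacher Support": 17.68,
    "Student Responsibility": 13.86,
    "Student Interaction and Collaboration": 11.40,
    "Quality of Problem": 10.78,
}

def cumulative(shares):
    """Running total of explained variance, in the order the scales are listed."""
    total, out = 0.0, []
    for name, pct in shares.items():
        total += pct
        out.append((name, round(total, 2)))
    return out

steps = cumulative(variance)
first_two = steps[1][1]   # Teacher Support + Student Responsibility
overall = steps[-1][1]    # all four scales
share_of_explained = round(100 * first_two / overall, 1)

for name, running in steps:
    print(f"{name:<40} {running:6.2f}%")
print(f"First two scales: {first_two}% of total variance, "
      f"{share_of_explained}% of the explained variance")
```

The output shows a cumulative total of 53.72%, with the first two scales contributing 31.54%, or roughly 58.7% of the explained variance.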

Stage 4: Reliability Calculation

The internal consistency reliability of the four PBLEI scales was estimated by calculating Cronbach’s alpha coefficient for the same sample. This analysis aimed to determine the extent to which items within a scale measure the same construct as the other items in that scale. Table 3 lists the number of items in each scale together with the Cronbach alpha reliability coefficient for the final version. As Table 3 shows, internal consistency reliability (coefficient alpha) ranged from 0.80 to 0.92 across the four PBLEI scales. By a commonly applied “rule of thumb” (George and Mallery 2001), this range is considered good to excellent: the alpha for the Teacher Support scale (0.92) is considered ‘excellent’, while those for Quality of Problem (0.83), Student Interaction and Collaboration (0.82) and Student Responsibility (0.80) are considered ‘good’.
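For a scale of k items, Cronbach’s alpha is α = k/(k − 1) × (1 − Σσᵢ²/σₜ²), where σᵢ² are the item variances and σₜ² is the variance of respondents’ scale totals. The sketch below computes alpha from raw responses and applies the George and Mallery (2001) labels to the alphas reported above. It is an illustrative sketch, not the analysis software used in the study; `cronbach_alpha` and `label` are hypothetical names, and treating each cut-off as inclusive (so that 0.80 counts as ‘good’) is an assumption.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for one scale.

    responses: one list of item scores per respondent (k items each).
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    item_vars = [variance(column) for column in zip(*responses)]
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# George and Mallery (2001) rule of thumb; inclusive cut-offs are an assumption.
THRESHOLDS = [(0.9, "excellent"), (0.8, "good"), (0.7, "acceptable"),
              (0.6, "questionable"), (0.5, "poor")]

def label(alpha):
    for cut, name in THRESHOLDS:
        if alpha >= cut:
            return name
    return "unacceptable"

# Alphas reported for the four PBLEI scales.
reported = {
    "Teacher Support": 0.92,
    "Quality of Problem": 0.83,
    "Student Interaction and Collaboration": 0.82,
    "Student Responsibility": 0.80,
}
for scale, alpha in reported.items():
    print(f"{scale}: {alpha:.2f} ({label(alpha)})")
```

Because alpha depends only on the ratio of summed item variances to total-score variance, using sample or population variances gives the same result as long as the choice is applied consistently.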

Discussion

In this study, a 23-item instrument, the PBLEI, was developed to assess undergraduate science students’ perceptions of PBL environments (see “Appendix”). The instrument consists of four scales, with responses recorded on a five-point format ranging from Always to Never (5 = Always, 1 = Never). The maximum possible score on the instrument is therefore 115 and the minimum is 23.
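The scoring arithmetic can be sketched as follows, assuming that scores are simple sums of item responses with no reverse-scored items (consistent with the stated 23 to 115 range). The per-scale item counts (7, 6, 6 and 4) are taken from the construct-validation results; which items belong to which scale is given in the Appendix and is not reproduced here, so the hypothetical `score_respondent` helper expects responses already grouped by scale.

```python
# Items per scale after construct validation (7 + 6 + 6 + 4 = 23).
SCALE_ITEMS = {
    "Teacher Support": 7,
    "Student Responsibility": 6,
    "Student Interaction and Collaboration": 6,
    "Quality of Problem": 4,
}
NEVER, ALWAYS = 1, 5  # endpoints of the five-point response format

def score_range(n_items):
    """Minimum and maximum attainable score for n_items items."""
    return n_items * NEVER, n_items * ALWAYS

def score_respondent(answers):
    """Sum one respondent's answers per scale and overall.

    answers: dict mapping each scale name to that scale's item responses (1-5).
    Assumes simple summation with no reverse-scored items.
    """
    scores = {}
    for scale, n in SCALE_ITEMS.items():
        items = answers[scale]
        if len(items) != n:
            raise ValueError(f"{scale}: expected {n} responses, got {len(items)}")
        if not all(NEVER <= x <= ALWAYS for x in items):
            raise ValueError(f"{scale}: responses must lie between 1 and 5")
        scores[scale] = sum(items)
    scores["Total"] = sum(scores.values())
    return scores

for scale, n in SCALE_ITEMS.items():
    print(scale, score_range(n))
print("Total", score_range(sum(SCALE_ITEMS.values())))  # (23, 115)
```

Reporting per-scale sums alongside the total keeps the four dimensions visible, since a single total score would hide, for example, strong Teacher Support combined with weak Student Responsibility.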

The construct validity of the PBLEI was examined using factor analysis with varimax rotation. According to Tabachnick and Fidell (2001), our sample of 387 students is large enough to allow a meaningful factor analysis of the construct validity of the PBLEI. The factor analysis revealed a four-scale structure assessing Teacher Support, Student Responsibility, Student Interaction and Collaboration, and Quality of Problem. These scales agree with the relevant literature on the key dimensions of PBL (Barrows 1986; Boud and Feletti 1997; Bigelow 2004). The factor analysis led to the removal of only one item each from the Teacher Support, Student Responsibility and Quality of Problem scales. This was based on the decision to exclude any item that did not have a factor loading of at least 0.40 on its a priori scale and less than 0.40 on all other scales. The literature suggests a loading of 0.30 or higher (Lang et al. 2005; Martin-Dunlop and Fraser 2007); however, to differentiate clearly among the scales in this preliminary development of the instrument, a more conservative cut-off for item loadings (≥0.40) was selected. Together, the items accounted for 53.72% of the total variability in students’ PBLEI scores. Although this leaves about half of the variability unaccounted for, 53.72% explained variance is considered sufficient in social science research (Tabachnick and Fidell 2001; Buyukozturk 2002). Because this study was preliminary, future studies with larger samples might account for more of the variance. Overall, these results support the factorial validity of the PBLEI.
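The retention rule can be expressed as a small filter over a matrix of rotated loadings. This is a sketch under stated assumptions: the loadings and item names below are hypothetical, loadings are compared in absolute value (the text does not say how negative loadings were treated), and `apply_retention_rule` is an illustrative name rather than part of any statistics package.

```python
CUTOFF = 0.40

def apply_retention_rule(loadings, a_priori, cutoff=CUTOFF):
    """Keep an item only if |loading| >= cutoff on its a priori scale
    and |loading| < cutoff on every other scale.

    loadings: dict item -> dict scale -> rotated loading
    a_priori: dict item -> the scale the item was written for
    """
    keep, drop = [], []
    for item, row in loadings.items():
        own_scale = a_priori[item]
        strong_on_own = abs(row[own_scale]) >= cutoff
        weak_elsewhere = all(abs(v) < cutoff
                             for scale, v in row.items() if scale != own_scale)
        (keep if strong_on_own and weak_elsewhere else drop).append(item)
    return keep, drop

# Hypothetical rotated loadings on the four scales, for illustration only.
loadings = {
    "item_a": {"TS": 0.72, "SR": 0.15, "SIC": 0.10, "QP": 0.05},
    "item_b": {"TS": 0.35, "SR": 0.20, "SIC": 0.12, "QP": 0.08},  # weak on own scale
    "item_c": {"TS": 0.45, "SR": 0.41, "SIC": 0.02, "QP": 0.11},  # cross-loads on SR
}
a_priori = {"item_a": "TS", "item_b": "TS", "item_c": "TS"}

keep, drop = apply_retention_rule(loadings, a_priori)
print("keep:", keep, "drop:", drop)
```

Lowering `cutoff` to 0.30, the value suggested in the literature, would retain `item_b` as well, which illustrates how the more conservative 0.40 cut-off sharpens the separation between scales.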

Alpha reliability coefficients for the four scales were also examined. All coefficients were high enough to be considered adequate, and every item contributed to a higher alpha coefficient for its scale. Reliabilities for the scales ranged from 0.80 to 0.92, with the highest coefficients for Teacher Support and Quality of Problem. All reliability coefficients thus exceed 0.60, the value considered acceptable for research purposes (Nunnally 1967). Taken together, these results indicate that the 23-item PBLEI is a valid and reliable instrument for assessing undergraduate science students’ perceptions of PBL environments.
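One common way to check that every item contributes to its scale’s reliability is to recompute alpha with each item deleted in turn; an item whose removal would raise alpha is a candidate for revision. The sketch below shows this diagnostic on hypothetical responses; it is not taken from the study, and the function names are illustrative.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha; responses holds one list of item scores per respondent."""
    k = len(responses[0])
    item_vars = [variance(col) for col in zip(*responses)]
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

def alpha_if_item_deleted(responses):
    """Alpha of the scale recomputed with each item left out in turn."""
    k = len(responses[0])
    if k < 3:
        raise ValueError("need at least three items so two remain after deletion")
    return [cronbach_alpha([row[:i] + row[i + 1:] for row in responses])
            for i in range(k)]

def items_lowering_alpha(responses):
    """Indices of items whose removal would raise the scale's alpha."""
    full = cronbach_alpha(responses)
    return [i for i, a in enumerate(alpha_if_item_deleted(responses)) if a > full]

# Hypothetical 3-item scale answered by five respondents; item 2 is inconsistent.
example = [[1, 1, 3], [2, 2, 1], [3, 3, 2], [4, 4, 1], [5, 5, 3]]
print(round(cronbach_alpha(example), 3))   # 0.682
print(items_lowering_alpha(example))       # [2]
```

In this toy example the scale’s alpha is about 0.68, and dropping the third item would raise it to 1.0, so that item would be flagged; for a scale in which every item contributes, the returned list is empty.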

This study contributes to PBL research, science education studies and learning environment research. First, regarding PBL research, although PBL is not new, it has recently gained widespread acceptance, especially in higher education (Gallagher et al. 1995; Driessen and Van Der Vleuten 2000; Acar 2004; Spronken-Smith 2005; Senocak et al. 2007; Chiriac 2008). In light of the rapid expansion of problem-based education, this new instrument is timely. It is also relatively easy to administer and does not take up large amounts of valuable classroom time. Second, regarding science education, the results of research using this instrument can help teachers improve their instruction and, consequently, increase students’ motivation to learn science. Measures of PBL environments can also assist curriculum developers in their efforts to change and improve science curricula. Finally, regarding learning environment research, the PBLEI is designed to assess students’ perceptions of an effective learning environment (namely, a problem-based one) for which no instrument previously existed. Learning environment researchers, teachers and education practitioners seeking to develop high-quality problem-based environments now have an instrument for measuring what goes on in problem-based classes.

References

Acar S (2004) Analysis of an assessment method for problem-based learning. Eur J Eng Educ 29(2):231–240. doi:10.1080/0304379032000157213

Albanese M, Mitchell S (1993) Problem-based learning: a review of literature on its outcomes and implementation issues. Acad Med 68(1):52–81. doi:10.1097/00001888-199301000-00012

Aldridge JM, Fraser BJ, Huang ITC (1999) Investigating classroom environments in Taiwan and Australia with multiple research methods. J Educ Res 93:48–62

Allan J (1996) Learning outcomes in higher education. Stud High Educ 21:93–108. doi:10.1080/03075079612331381487

Barrows HS (1986) A taxonomy of problem-based learning methods. Med Educ 20:481–486. doi:10.1111/j.1365-2923.1986.tb01386.x

Barrows HS (1996) Problem-based learning in medicine and beyond: a brief overview. Jossey-Bass, San Francisco

Barrows HS, Tamblyn RS (1980) Problem-based learning: an approach to medical education. Springer, Berlin

Bartlett MS (1954) A further note on the multiplying factors for various χ² approximations in factor analysis. J R Stat Soc [Ser B] 16:296–298

Bigelow JD (2004) Using problem-based learning to develop skills in solving unstructured problems. J Manage Educ 28:591–609. doi:10.1177/1052562903257310

Boud D, Feletti GI (1997) The challenge of problem-based learning. Kogan Page, London

Buyukozturk S (2002) A handbook of data analysis for social sciences. Pegema, Ankara

Checkly K (1997) Problem-based learning. ASCD Curriculum Update, Summer, 3

Chin C, Chia L (2005) Problem-based learning: using students’ questions to drive knowledge construction. Sci Educ 88(5):707–727

Chiriac EH (2008) A scheme for understanding group processes in problem-based learning. High Educ 55:505–518. doi:10.1007/s10734-007-9071-7

Crocker L, Algina J (1986) Introduction to classical and modern test theory. Harcourt Brace Jovanovich College, Fort Worth

Dahlgren MA, Castensson R, Dahlgren LO (1998) PBL from teachers’ perspective. High Educ 36:437–447. doi:10.1023/A:1003467910288

Driessen E, Van Der Vleuten C (2000) Matching student assessment to problem-based learning: lessons from experience in a law faculty. Stud Contin Educ 22(2):235–248. doi:10.1080/713695731

Fisher DL, Fraser BJ (1981) Validity and use of my class inventory. Sci Educ 65:145–156. doi:10.1002/sce.3730650206

Fraser BJ (1986) Classroom environment. Croom Helm, London

Fraser BJ (1994) Research on classroom and school climate. In: Gabel D (ed) Handbook of research on science teaching and learning. Macmillan, New York, pp 493–541

Fraser BJ (1998) Classroom environment instruments: development, validity and applications. Learn Environ Res 1:7–33. doi:10.1023/A:1009932514731

Fraser BJ (2002a) Preface. In: Goh SC, Khine MS (eds) Studies in educational learning environments: an international perspective. World Scientific, River Edge, pp vii–viii

Fraser BJ (2002b) Learning environments research: yesterday, today and tomorrow. In: Goh SC, Khine MS (eds) Studies in educational learning environments: an international perspective. World Scientific, River Edge, pp 1–25

Fraser BJ, Treagust DF (1986) Validity and use of an instrument for assessing classroom psychosocial environment in higher education. High Educ 15:37–57. doi:10.1007/BF00138091

Fraser BJ, Walberg HJ (eds) (1991) Educational environments: evaluation, antecedents, and consequences. Pergamon, London

Fraser BJ, Anderson GJ, Walberg HJ (1982) Assessment of learning environments: manual for learning environment inventory (LEI) and my class inventory (MCI). Western Australian Institute of Technology, Perth

Fraser BJ, Giddings GJ, McRobbie CJ (1995) Evolution and validation of a personal form of an instrument for assessing science laboratory classroom environments. J Res Sci Teach 32:399–422. doi:10.1002/tea.3660320408

Gallagher SA, Stepien WJ, Sher BT, Workman D (1995) Implementing problem-based learning in science classroom. Sch Sci Math 95(3):136–146

George D, Mallery P (2001) SPSS for Windows step by step: a simple guide and reference 10.0 update, 3rd edn. Allyn and Bacon, Toronto

Gibson HL, Chase C (2002) Longitudinal impact of an inquiry-based science program on middle school students’ attitudes toward science. Sci Educ 86:693–705. doi:10.1002/sce.10039

Gijbels D, Van de Watering G, Dochy FA, Van der Bossche P (2006) New learning environments and constructivism: the students’ perspective. Instr Sci 34:213–226. doi:10.1007/s11251-005-3347-z

Grasel C, Fischer F, Mandl H (2001) The use of additional information in problem-oriented learning environments. Learn Environ Res 3:287–305. doi:10.1023/A:1011421732004

Greenwald NL (2000) Learning from problems. Sci Teach 67(4):28–32

Habib F, Eshra DK, Weaver J, Newcomer W, O’Donnell C, Neff-Smith M (1999) Problem based learning: a new approach for nursing education in Egypt. J Multicult Nurs Health 5(3):6–11

Hassan MAA, Yusof KM, Hamid MKA, Hassim MH, Aziz AA, Hassan SAHS (2004) A review and survey of problem-based learning application in engineering education. Paper presented at the Conference on Engineering Education, Kuala Lumpur, Malaysia

Hendry GD, Ryan G, Harris J (2003) Group problems in problem-based learning. Med Teach 25(6):609–616. doi:10.1080/0142159031000137427

Hmelo-Silver CE (2004) Problem-based learning: what and how do students learn? Educ Psychol Rev 16(3):235–266. doi:10.1023/B:EDPR.0000034022.16470.f3

Hofstein A, Kesner M, Ben-Zvi R (1999) Student perceptions of industrial chemistry classroom learning environments. Learn Environ Res 2:291–306. doi:10.1023/A:1009973908142

Jegede O, Fraser BJ, Fisher DL (1998) The distance and open learning environment scale: its development, validation and use. Paper presented at the annual meeting of the National Association for Research in Science Teaching, San Diego, CA

Johnson DW, Johnson RT, Holubec EJ (1994) Cooperative learning in the classroom. Association for Supervision and Curriculum Development, USA

Kaiser HF (1974) An index of factorial simplicity. Psychometrika 39:31–36. doi:10.1007/BF02291575

Lang QC, Wong AFL, Fraser BJ (2005) Student perceptions of chemistry laboratory environments, student-teacher interactions and attitudes in secondary school gifted education classes in Singapore. Res Sci Educ 35:299–321. doi:10.1007/s11165-005-0093-9

Lindblom-Ylanne S, Pihlajamaki H, Kotkas T (2003) What makes a student group successful? Student-student and student-teacher interaction in a problem-based learning environment. Learn Environ Res 6:59–76. doi:10.1023/A:1022963826128

Macdonald R (2001) Problem-based learning: implications for educational developers. Educ Dev 2(2):1–5

MacDonald R, Savin-Baden M (2003) A briefing on assessment in problem-based learning. LTSN Generic Centre Assessment Series no. 7. LTSN Generic Centre, York

Mackenzie AM, Johnstone AH, Brown RI (2003) Learning from problem based learning. Univ Chem Educ 7:13–26

Martin-Dunlop C, Fraser BJ (2007) Learning environment and attitudes associated with an innovative science course designed for prospective elementary teachers. Int J Sci Math Educ 6:163–190. doi:10.1007/s10763-007-9070-2

Miller SK (2003) A comparison of student outcomes following problem-based learning instruction versus traditional lecture learning in a graduate pharmacology course. J Am Acad Nurse Pract 15(12):550–556. doi:10.1111/j.1745-7599.2003.tb00347.x

Moos RH (1974) The social climate scales: an overview. Consulting Psychologists Press, Palo Alto

Moos RH (1979) Evaluating educational environments. Jossey-Bass, San Francisco

Moos RH, Trickett EJ (1974) Classroom environment scale manual. Consulting Psychologists Press, Palo Alto

Norman GR, Schmidt HG (1992) The psychological basis of problem-based learning: a review of the evidence. Acad Med 67:557–565

Nunnally J (1967) Psychometric theory. McGraw Hill, New York

Orion N, Hofstein A, Tamir P, Gidding GJ (1997) Development and validation of an instrument for assessing the learning environment of outdoor science activities. Sci Educ 81:161–171. doi:10.1002/(SICI)1098-237X(199704)81:2<161::AID-SCE3>3.0.CO;2-D

Pellegrino JW, Chudowsky N, Glaser R (eds) (2001) Knowing what students know. National Academy Press, Washington, DC

Peterson RF, Treagust DF (1998) Learning to teach primary science through problem-based learning. Sci Educ 82(2):215–237. doi:10.1002/(SICI)1098-237X(199804)82:2<215::AID-SCE6>3.0.CO;2-H

Polonco R, Calderon P, Delgado F (2004) Effects of a problem-based learning program on engineering students’ academic achievements in a Mexican university. Innov Educ Teach Int 4(2):145–155. doi:10.1080/1470329042000208675

Rentoul AJ, Fraser BJ (1979) Conceptualization of enquiry-based or open classroom learning environments. J Curric Stud 11:233–245. doi:10.1080/0022027790110306

Rideout E, England-Oxford V, Brown B, Fothergill-Bourbonnais F, Ingram C, Benson G, Ross M, Coates A (2002) A comparison of problem-based and conventional curricula in nursing education. Adv Health Sci Educ 7:3–7. doi:10.1023/A:1014534712178

Roth W-M (1994) Experimenting in a constructivist high school physics laboratory. J Res Sci Teach 31:197–223. doi:10.1002/tea.3660310209

Savin-Baden M (2004) Understanding the impact of assessment on students in problem-based learning. Innov Educ Teach Int 42(2):223–233

Savin-Baden M, Major CH (2004) Foundations of problem-based learning. Society for Research into Higher Education & Open University Press, New York

Savin-Baden M, Wilkie K (2004) Challenging research in problem-based learning. Society for Research into Higher Education & Open University Press, New York

Schmidt HG (1983) Problem-based learning: rationale and description. Med Educ 17:11–16. doi:10.1111/j.1365-2923.1983.tb01086.x

Senocak E, Taskesenligil Y, Sozbilir M (2007) A study on teaching gases to prospective primary science teachers through problem-based learning. Res Sci Educ 37:279–290. doi:10.1007/s11165-006-9026-5

Soderberg P, Price F (2003) An examination of problem-based teaching and learning in population genetics and evolution using EVOLVE, a computer simulation. Int J Sci Educ 25(1):35–55. doi:10.1080/09500690110095285

Spronken-Smith R (2005) Implementing a problem-based learning approach for teaching research methods in geography. J Geogr High Educ 29(2):203–221. doi:10.1080/03098260500130403

Tabachnick BG, Fidell LS (2001) Using multivariate statistics, 4th edn. Allyn & Bacon, Needham Heights

Tarhan L, Kayali Ayar, Ozturk H, Urek R, Acar B (2008) Problem-based learning in 9th grade chemistry class: intermolecular forces. Res Sci Educ 38:285–300. doi:10.1007/s11165-007-9050-0

Taylor P, Fraser B, Fisher D (1993) Monitoring the development of constructivist learning environments. Paper presented at the annual convention of the National Science Teachers Association, Kansas City, MO

Tsai C (2003) Taiwanese science students’ and teachers’ perceptions of the laboratory learning environments: exploring epistemological gaps. Int J Sci Educ 25(7):847–860. doi:10.1080/09500690305031

Tynjala P (1999) Towards expert knowledge? A comparison between a constructivist and a traditional learning environment in the university. Int J Educ Res 31:357–442. doi:10.1016/S0883-0355(99)00012-9

Walberg HJ, Anderson GJ (1968a) Classroom climate and individual learning. J Educ Psychol 59:414–419. doi:10.1037/h0026490

Walberg HJ, Anderson GJ (1968b) The achievement-creativity dimension of classroom climate. J Creat Behav 2:281–291

Walker SL, Fraser BJ (2005) Development and validation of an instrument for assessing distance education learning environments in higher education: the distance education learning environments survey (DELES). Learn Environ Res 8:289–308. doi:10.1007/s10984-005-1568-3

Ward JD, Lee CL (2002) A review of problem-based learning. J Fam Consum Sci Educ 20(1):16–26

West SA (1992) Problem-based learning: a viable addition for secondary school science. Sch Sci Rev 73(265):47–55

Wong AFL, Fraser BJ (1996) Environment–attitude associations in the chemistry laboratory classroom. Res Sci Technol Educ 14:91–102. doi:10.1080/0263514960140107

Ying Y (2003) Using problem-based teaching and problem-based learning to improve the teaching of electrochemistry. The China Papers July: 42–47

Yuzhi W (2003) Using problem-based learning in teaching analytical chemistry. The China Papers July: 28–33

Cite this article

Senocak, E. Development of an Instrument for Assessing Undergraduate Science Students’ Perceptions: The Problem-Based Learning Environment Inventory. J Sci Educ Technol 18, 560–569 (2009). https://doi.org/10.1007/s10956-009-9173-3
