Abstract

Finding a ground state of a given Hamiltonian of an Ising model on a graph \(G=(V,E)\) is an important but hard problem. The standard approach for this kind of problem is the application of algorithms that rely on single-spin-flip Markov chain Monte Carlo methods, such as the simulated annealing based on Glauber or Metropolis dynamics. In this paper, we investigate a particular kind of stochastic cellular automata, in which all spins are updated independently and simultaneously. We prove that (i) if the temperature is fixed sufficiently high, then the mixing time is at most of order \(\log |V|\), and that (ii) if the temperature drops in time n as \(1/\log n\), then the limiting measure is uniformly distributed over the ground states. We also provide some simulations of the algorithms studied in this paper implemented on a GPU and show their superior performance compared to the conventional simulated annealing.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction and Main Results

There are several occasions in real life when we have to choose one among extremely many options quickly. In addition, we want our choice to be optimal in a certain sense. The so-called combinatorial optimization problems [19, 24] are ubiquitous and possibly quite hard to be solved in a fast way. In particular, polynomial-time algorithms for NP-hard problems are not known to exist [11].

A possible approach as an attempt to provide an optimal solution for a given problem would be to translate it into the problem of minimizing the Hamiltonian of an Ising model on a finite graph such that each of its ground states corresponds to an optimal solution to the original problem and vice versa. See, for instance, [21] for a broad list of examples of such mappings. Let us consider a finite graph \(G=(V,E)\) with no multi- or self-edges. Given a collection of spin-spin coupling constants \(\{J_{x,y}\}_{x,y \in V}\) which is symmetric (that is, \(J_{x,y} = J_{y,x}\) for all x, y) and satisfies \(J_{x,y}=0\) whenever \(\{x,y\}\notin E\), and a collection of external fields \(\{h_x\}_{x\in V}\), the Hamiltonian of an Ising spin configuration \(\varvec{\sigma }=\{\sigma _x\}_{x\in V}\in \Omega \equiv \{\pm 1\}^V\) is defined by

Let GS denote the set of its ground states, the configurations at which the Hamiltonian attains its minimum value, i.e.,

A method that can possibly be considered in the search for ground states consists of using a Markov chain Monte Carlo (MCMC) to sample the Gibbs distribution \(\pi ^{\scriptscriptstyle \mathrm G}_\beta \propto e^{-\beta H}\) at the inverse temperature \(\beta \ge 0\) given by

It is straightforward to verify that the Gibbs distribution reaches its highest peaks on GS. There are several MCMCs that can be applied to generate the Gibbs distribution as the equilibrium measure, one of them is the Glauber dynamics [12], whose transition matrix \(P^{\scriptscriptstyle \mathrm G}_\beta \) is defined by

Notice that, by introducing the cavity fields

the transition probability \(P^{\scriptscriptstyle \mathrm G}_\beta (\varvec{\sigma },\varvec{\sigma }^x)\) can also be written as

The transition probability above can be interpreted as the probability of choosing the vertex x uniformly at random from V and then flipping its spin value with probability proportional to \(w^{\scriptscriptstyle \mathrm G}_\beta (\varvec{\sigma }^x)\). Furthermore, since \(P^{\scriptscriptstyle \mathrm G}_\beta \) is aperiodic, irreducible, and reversible with respect to \(\pi ^{\scriptscriptstyle \mathrm G}_\beta \) (i.e., the identity \(\pi ^{\scriptscriptstyle \mathrm G}_\beta (\varvec{\sigma })P^{\scriptscriptstyle \mathrm G}_\beta (\varvec{\sigma },\varvec{\tau }) =\pi ^{\scriptscriptstyle \mathrm G}_\beta (\varvec{\tau })P^{\scriptscriptstyle \mathrm G}_\beta (\varvec{\tau },\varvec{\sigma })\) holds for all \(\varvec{\sigma },\varvec{\tau }\in \Omega \)), it follows that the Glauber dynamics converges to its unique equilibrium distribution, namely, the Gibbs distribution \(\pi ^{\scriptscriptstyle \mathrm G}_\beta \).

In practice, the method that actually has been widely employed in several real-world applications is known as the simulated annealing algorithm [1, 6, 16, 18, 27, 28], which motivated the main subject of this paper. The standard approach consists of applying a discrete-time inhomogeneous Markov chain based on single-spin-flip dynamics (such as Glauber dynamics or Metropolis dynamics [22]) where the temperature drops every time a spin value is updated. If the temperature is set to decrease at an appropriate rate, then it is guaranteed that such a procedure will asymptotically converge to one of the ground states, see [1, 3, 13].

Note that, in the methods described above, the number of spin-flips per update is at most one, so, in principle, we may take some benefit if we consider methods that allow a larger number of spin-flips. In respect of ferromagnetic spin systems, the Swendsen-Wang algorithm [26] is a cluster-flip Markov chain, in which many spins can be flipped simultaneously, unlike in the Glauber and other single-spin-flip dynamics. However, forming a cluster to be flipped yields strong dependency among spin variables.

In recent studies such as in [23, 29], some algorithms that rely on parallel and independent spin-flips have shown significantly higher performance in approximating ground states compared to some of the well-established algorithms based on single-spin-flip dynamics. For that reason, such algorithms of that nature deserve some attention and a rigorous treatment from the mathematical point of view, in order to understand their mechanisms and limitations, becomes necessary. Furthermore, due to their main feature, the possibility of employing hardware accelerators such as annealing processors strongly relying on parallelization [2, 17, 29] to speed up simulations has a great appeal and may be advantageous for the computation of solutions of real-time and time-constrained problems. So, in Sect. 4 we include some analyses comparing the accuracy and the simulation times of Glauber dynamics and the algorithms present in this paper when implemented on a CPU and a GPU.

In this paper, we investigate a particular class of probabilistic cellular automata, or PCA, studied in [7, 25]. Since the acronym PCA has already been long used to stand for principal component analysis in statistics, we would rather use the term stochastic cellular automata (SCA). Let us start by considering the so-called pinning parameters \(\varvec{q}=\{q_x\}_{x\in V}\) and using them to introduce an extended version of the Hamiltonian, whose expression is given by

Note that the relationship between H and \({\tilde{H}}\) is given by

Then, we introduce

and define the SCA transition matrix \(P^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}\) by letting

Due to the rightmost expression from equation (1.10), we can interpret that all spins in the system are updated independently and simultaneously according to certain local probability rules. This implies that the SCA is allowed to move from any spin configuration to another in just one step, which, in principle, may potentially result in a faster convergence to equilibrium. Since \({\tilde{H}}\) is symmetric (due to the symmetry of the spin-spin coupling constants), i.e., \({\tilde{H}}(\varvec{\sigma },\varvec{\tau })={\tilde{H}}(\varvec{\tau },\varvec{\sigma })\), then, the middle expression in equation (1.10) implies that \(P^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}\) is reversible with respect to the equilibrium distribution \(\pi ^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}\) given by

Although this does not necessarily coincide with the Gibbs distribution, and therefore we cannot naively use it to search for the ground states, the total-variation distance (cf., [3, Definition 4.1.1 and (4.1.5)])

tends to zero as \(\min _xq_x\uparrow \infty \). This is a positive aspect of the SCA with large \(\varvec{q}\). On the other hand, since the off-diagonal entries of the transition matrix \(P^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}\) tends to zero as \(\min _xq_x\uparrow \infty \), the SCA with large \(\varvec{q}\) may well be much slower than expected. This is why we call \(\varvec{q}\) the pinning parameters.

Having in mind the development of a simulated annealing algorithm based on SCA aiming at determining the ground states of H, in Sect. 3 we investigate the SCA with the set of pinning parameters \(\varvec{q}\) satisfying

where C is an arbitrary subset of V and \(\lambda \) is the largest eigenvalue of the matrix \([-J_{x, y}]_{V\times V}\). This is a sufficient condition on \({\tilde{H}}\) that assures that its minimum value is attained on the diagonal entries, that is,

Condition (1.13) originated in [23], where on its supplemental material a rather more general result is proved, establishing that, under this assumption, the inequality

holds whenever \(\varvec{\sigma }\) and \(\varvec{\tau }\) are distinct, which, according to equation (1.8), implies that

In this paper, we prove the following two statements:

-

(i)

If \(\beta \) is sufficiently small and fixed, then the time-homogeneous SCA has a mixing time at most of order \(\log |V|\) (Theorem 2.2).

-

(ii)

If \(\beta _n\) increases in time n as \(\propto \log n\), then the time-inhomogeneous SCA weakly converges to \(\pi ^{\scriptscriptstyle \mathrm G}_\infty \), the uniform distribution over GS (Theorem 3.2).

The former result implies faster mixing than conventional single-spin-flip MCs, such as the Glauber dynamics (see Remark 2.3(i)). The latter refers to the applicability of the standard temperature-cooling schedule in the simulated annealing (see Remark 3.3(i)).

Although the two results above are proven mathematically rigorously, they may be difficult to be directly applied in practice. As mentioned earlier, the SCA is allowed to flip multiple spins in a single update, therefore, in principle, it potentially can reduce the mixing time compared to other single-spin-flip algorithms. However, to attain such a small mixing time as in (i), we have to keep the temperature very high (as comparable to the radius of convergence of the high-temperature expansion, see (2.5) below). Also, if we want to find a ground state by using an SCA-based simulated annealing algorithm, as stated in (ii), the temperature has to drop so slowly as \(1/\log n\) (with a large multiplicative constant \(\Gamma \), see (3.7) below), and therefore the number of steps required to come close to a ground state may well be extremely large. The problem seems to be due to the introduction of the total-variation distance. In order to make the distance \(\Vert \mu -\nu \Vert _{\scriptscriptstyle \mathrm TV}\) small, the two measures \(\mu \) and \(\nu \) have to be very close at every spin configuration. Moreover, since \(\Vert \pi ^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}-\pi ^{\scriptscriptstyle \mathrm G}_\beta \Vert _{\scriptscriptstyle \mathrm TV}\) is not necessarily small for finite \(\varvec{q}\), we cannot tell anything about the excited states \(\Omega \setminus \textrm{GS}\). In other words, we might be able to use the SCA under the condition (1.13) to find the best options in combinatorial optimization, but not the second- or third-best options. To overcome those difficulties, we will shortly discuss a potential replacement for the total-variation distance at the end of Sect. 3.

In practice, to avoid applying logarithmic cooling schedules and having to wait for long execution times, faster cooling is often considered. In Sect. 4, we introduce a variant of the SCA called \(\varepsilon \)-SCA and show in a series of examples that simulated annealing with exponential cooling schedules can be successfully applied to the Glauber dynamics, SCA, and \(\varepsilon \)-SCA. The results are consistent with the preliminary findings from [8, 9], which reveal that the SCA outperforms Glauber dynamics in most of the scenarios, while \(\varepsilon \)-SCA outperforms both algorithms in all tested models. Providing a mathematical foundation for the \(\varepsilon \)-SCA and proving a generalization of (ii) for exponential schedules comprise a future direction of our research.

2 Mixing Time for the SCA

In this section, we show that the mixing in the SCA is faster than in the Glauber dynamics when the temperature is sufficiently high. To do so, we first introduce some notions and notation.

For \(\varvec{\sigma },\varvec{\tau }\in \Omega \), we let \(D_{\varvec{\sigma },\varvec{\tau }}\) be the set of vertices at which \(\varvec{\sigma }\) and \(\varvec{\tau }\) disagree:

For a time-homogeneous Markov chain, whose t-step distribution \(P^t\) converges to its equilibrium \(\pi \), we define the mixing time as follows: given an \(\varepsilon \in [0,1]\),

In particular, we denote it by \(t_\textrm{mix}^{\scriptscriptstyle \mathrm SCA}(\varepsilon )\) when \(P=P^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}\) and \(\pi =\pi ^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}\). Then we define the transportation metric \(\rho _{\scriptscriptstyle \mathrm TM}\) between two probability measures on \(\Omega \) as

where \({\textbf {E}}_{\mu ,\nu }\) is the expectation against the coupling measure \({\textbf {P}}_{\mu ,\nu }\) whose marginals are \(\mu \) for X and \(\nu \) for Y, respectively. By [20, Lemma 14.3], \(\rho _{\scriptscriptstyle \mathrm TM}\) indeed satisfies the axioms of metrics, in particular the triangle inequality: \(\rho _{\scriptscriptstyle \mathrm TM}(\mu ,\nu )\le \rho _{\scriptscriptstyle \mathrm TM}(\mu ,\lambda )+\rho _{\scriptscriptstyle \mathrm TM}(\lambda ,\nu )\) holds for all probability measures \(\mu ,\nu ,\lambda \) on \(\Omega \).

The following is a summary of [20, Theorem 14.6 and Corollary 14.7], but stated in our context.

Proposition 2.1

If there is an \(r\in (0,1)\) such that \(\rho _{\scriptscriptstyle \mathrm TM}(P(\varvec{\sigma },\cdot ),P(\varvec{\tau },\cdot ))\le r|D_{\varvec{\sigma }, \varvec{\tau }}|\) for any \(\varvec{\sigma }, \varvec{\tau }\in \Omega \), then

Consequently,

It is crucial to find a coupling (X, Y) in which the size of \(D_{X,Y}\) is decreasing in average, as stated in the hypothesis of the above proposition. Here is the main statement on the mixing time for the SCA.

Theorem 2.2

For any non-negative \(\varvec{q}\), if \(\beta \) is sufficiently small such that, independently of \(\{h_x\}_{x\in V}\),

then \(\rho _{\scriptscriptstyle \mathrm TM}(P^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}(\varvec{\sigma },\cdot ),P^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}(\varvec{\tau },\cdot )) \le r|D_{\varvec{\sigma }, \varvec{\tau }}|\) for all \(\varvec{\sigma }, \varvec{\tau }\in \Omega \), and therefore \(t_\textrm{mix}^{\scriptscriptstyle \mathrm SCA}(\varepsilon )\) obeys (2.4).

Proof

It suffices to show \(\rho _{\scriptscriptstyle \mathrm TM}(P^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}(\varvec{\sigma },\cdot ),P^{\scriptscriptstyle \mathrm SCA}_{\beta , \varvec{q}}(\varvec{\tau },\cdot ))\le r\) for all \(\varvec{\sigma },\varvec{\tau }\in \Omega \) with \(|D_{\varvec{\sigma },\varvec{\tau }}|=1\). If \(|D_{\varvec{\sigma },\varvec{\tau }}|\ge 2\), then, by the triangle inequality along any sequence \((\varvec{\eta }_0,\varvec{\eta }_1,\dots ,\varvec{\eta }_{|D_{\varvec{\sigma },\varvec{\tau }}|})\) of spin configurarions that satisfy \(\varvec{\eta }_0=\varvec{\sigma }\), \(\varvec{\eta }_{|D_{\varvec{\sigma },\varvec{\tau }}|}=\varvec{\tau }\) and \(|D_{\varvec{\eta }_{j-1},\varvec{\eta }_j}|=1\) for all \(j=1,\dots ,|D_{\varvec{\sigma },\varvec{\tau }}|\), we have

Suppose that \(D_{\varvec{\sigma },\varvec{\tau }}=\{x\}\), i.e., \(\varvec{\tau }=\varvec{\sigma }^x\). For any \(\varvec{\sigma }\in \Omega \) and \(y\in V\), we let \(p(\varvec{\sigma },y)\) be the conditional SCA probability of \(\sigma _y\rightarrow 1\) given that the others are fixed (cf., (1.10)):

Notice that \(p(\varvec{\sigma },y)\ne p(\varvec{\sigma }^x,y)\) only when \(y=x\) or \(y\in N_x\equiv \{v\in V:J_{x,v}\ne 0\}\). Using this as a threshold function for i.i.d. uniform random variables \(\{U_y\}_{y\in V}\) on [0, 1], we define the coupling (X, Y) of \(P^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}(\varvec{\sigma },\cdot )\) and \(P^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}(\varvec{\sigma }^x,\cdot )\) as

Denote the measure of this coupling by \({\textbf {P}}_{\varvec{\sigma },\varvec{\sigma }^x}\) and its expectation by \({\textbf {E}}_{\varvec{\sigma },\varvec{\sigma }^x}\). Then we obtain

where, by using the rightmost expression in (2.7),

and for \(y\in N_x\),

Since \(|\tanh (a+b)-\tanh (a-b)|\le 2\tanh |b|\) for any a, b, we can conclude

as required. \(\square \)

Remark 2.3

-

(i)

It is known that, in a very general setting, the mixing time for the Glauber dynamics (1.4) is at least of order \(|V| \log |V|\), see [15]. Therefore, Theorem 2.2 implies that the SCA reaches equilibrium way faster than the Glauber dynamics, as long as the temperature is high enough. Even though the result above does not play a role in practical applications and the SCA cannot be used to sample the Gibbs distribution, it does give us a hint that we may extract some benefit by considering multiple spin-flip algorithms to speed up simulations.

-

(ii)

It is of some interest to investigate the average number of spin-flips per update, although it does not necessarily represent the speed of convergence to equilibrium. In [7], where \(q_x\) is set to be a common q for all x, the average number of spin-flips per update is conceptually explained to be \(O(|V|e^{-\beta q})\). Here, we show an exact computation of the SCA transition probability and approximate it by a binomial expansion, from which we claim that the actual average number of spin-flips per update is much smaller than \(O(|V|e^{-\beta q})\). First, we recall equation (1.10). Notice that

$$\begin{aligned} \frac{e^{\frac{\beta }{2} ({\tilde{h}}_x(\varvec{\sigma })+q_x\sigma _x)\tau _x}}{2\cosh (\frac{\beta }{2}({\tilde{h}}_x(\varvec{\sigma })+q_x\sigma _x))}&=\frac{e^{-\frac{\beta }{2}({\tilde{h}}_x(\varvec{\sigma })\sigma _x+q_x)}\mathbbm {1}_{\{x\in D_{\varvec{\sigma },\varvec{\tau }}\}}}{2\cosh (\frac{\beta }{2} ({\tilde{h}}_x(\varvec{\sigma })+q_x \sigma _x))}\\ \nonumber&+\frac{e^{\frac{\beta }{2} ({\tilde{h}}_x(\varvec{\sigma })\sigma _x+q_x)}\mathbbm {1}_{\{x\in V\setminus D_{\varvec{\sigma },\varvec{\tau }}\}}}{2\cosh (\frac{\beta }{2}({\tilde{h}}_x(\varvec{\sigma }) +q_x\sigma _x))}. \end{aligned}$$(2.13)Isolating the \(\varvec{q}\)-dependence, we can rewrite the first term on the right-hand side as

$$\begin{aligned} \frac{e^{-\frac{\beta }{2}({\tilde{h}}_x(\varvec{\sigma })\sigma _x+q_x)}\mathbbm {1}_{\{x\in D_{\varvec{\sigma }, \varvec{\tau }}\}}}{2\cosh (\frac{\beta }{2} ({\tilde{h}}_x(\varvec{\sigma })+q_x\sigma _x))}= \underbrace{\frac{e^{-\frac{\beta }{2}q_x}\cosh (\frac{\beta }{2}{\tilde{h}}_x(\varvec{\sigma }))}{\cosh (\frac{\beta }{2}({\tilde{h}}_x(\varvec{\sigma })+q_x\sigma _x))}}_{\equiv \,\varepsilon _x (\varvec{\sigma })}\,\underbrace{\frac{e^{-\frac{\beta }{2}{\tilde{h}}_x(\varvec{\sigma }) \sigma _x}}{2\cosh (\frac{\beta }{2} {\tilde{h}}_x(\varvec{\sigma }))}}_{\equiv \,p_x(\varvec{\sigma })}\mathbbm {1}_{\{x\in D_{\varvec{\sigma },\varvec{\tau }}\}}, \end{aligned}$$(2.14)and the second term as \((1-\varepsilon _x(\varvec{\sigma })p_x(\varvec{\sigma })) \mathbbm {1}_{\{x\in V{\setminus } D_{\varvec{\sigma },\varvec{\tau }}\}}\). As a result, we obtain

$$\begin{aligned} P^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}(\varvec{\sigma },\varvec{\tau })=\prod _{x\in D_{\varvec{\sigma },\varvec{\tau }}}\big (\varepsilon _x (\varvec{\sigma })p_x(\varvec{\sigma })\big )\prod _{y\in V\setminus D_{\varvec{\sigma },\varvec{\tau }}} \big (1-\varepsilon _y(\varvec{\sigma })p_y(\varvec{\sigma })\big ). \end{aligned}$$(2.15)Suppose that \(\varepsilon _x(\varvec{\sigma })\) is independent of x and \(\varvec{\sigma }\), which is of course untrue, and simply denote it by \(\varepsilon =O(e^{-\beta q})\). Then we can rewrite \(P^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}(\varvec{\sigma },\varvec{\tau })\) as

$$\begin{aligned} P^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}(\varvec{\sigma },\varvec{\tau })&\simeq \prod _{x\in D_{\varvec{\sigma },\varvec{\tau }}}\big ( \varepsilon p_x(\varvec{\sigma })\big )\prod _{y\in V\setminus D_{\varvec{\sigma },\varvec{\tau }}}\Big ((1 -\varepsilon )+\varepsilon \big (1-p_y(\varvec{\sigma })\big )\Big )\nonumber \\&=\prod _{x\in D_{\varvec{\sigma },\varvec{\tau }}}\big (\varepsilon p_x(\varvec{\sigma })\big )\sum _{S: D_{\varvec{\sigma },\varvec{\tau }}\subset S\subset V} (1-\varepsilon )^{|V\setminus S|}\prod _{y\in S\setminus D_{\varvec{\sigma },\varvec{\tau }}}\Big (\varepsilon \big (1-p_y(\varvec{\sigma })\big )\Big )\nonumber \\&=\sum _{S:D_{\varvec{\sigma },\varvec{\tau }}\subset S\subset V}\varepsilon ^{|S|} (1-\varepsilon )^{|V\setminus S|} \prod _{x\in D_{\varvec{\sigma },\varvec{\tau }}}p_x(\varvec{\sigma })\prod _{y\in S \setminus D_{\varvec{\sigma },\varvec{\tau }}}\big (1-p_y(\varvec{\sigma })\big ). \end{aligned}$$(2.16)This implies that the transition from \(\varvec{\sigma }\) to \(\varvec{\tau }\) can be seen as determining the binomial subset \(D_{\varvec{\sigma },\varvec{\tau }}\subset S\subset V\) with parameter \(\varepsilon \) and then changing each spin at \(x\in D_{\varvec{\sigma },\varvec{\tau }}\) with probability \(p_x(\varvec{\sigma })\). Therefore, \(|V|\varepsilon \) is much larger than the actual average number of spin-flips per update. Currently, the authors are investigating an MCMC inspired by (2.16) with a constant \(\varepsilon \in (0,1]\). Some numerical results have shown better performance than Glauber dynamics and SCA in finding ground states for several problems. For more details, see Sect. 4.

-

(iii)

In fact, we can compute the average number \(E^*[|D_{\varvec{\sigma },X}|] \equiv \sum _{\varvec{\tau }}|D_{\varvec{\sigma },\varvec{\tau }}|P^*(\varvec{\sigma },\varvec{\tau })\) of spin-flips per update, where X is an \(\Omega \)-valued random variable whose law is \(P^*(\varvec{\sigma },\cdot )\). For Glauber, we have

$$\begin{aligned} E^{\scriptscriptstyle \mathrm G}_\beta [|D_{\varvec{\sigma },X}|]&=\sum _{x\in V}P^{\scriptscriptstyle \mathrm G}_\beta (\varvec{\sigma },\varvec{\sigma }^x) =\frac{1}{|V|}\sum _{x\in V}\frac{e^{-\beta {\tilde{h}}_x(\varvec{\sigma }) \sigma _x}}{2\cosh (\beta {\tilde{h}}_x(\varvec{\sigma }))}\nonumber \\&=\frac{1}{|V|}\sum _{x\in V}\frac{1}{e^{2\beta {\tilde{h}}_x(\varvec{\sigma }) \sigma _x}+1}. \end{aligned}$$(2.17)For the SCA, on the other hand, since \(|D_{\varvec{\sigma },\varvec{\tau }}|=\sum _{x\in V}\mathbbm {1}_{\{\sigma _x\ne \tau _x\}}\), we have

$$\begin{aligned} E^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}[|D_{\varvec{\sigma },X}|]=\sum _{x\in V}\sum _{\varvec{\tau }:\tau _x\ne \sigma _x}P^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}(\varvec{\sigma },\varvec{\tau })&=\sum _{x\in V}\frac{e^{-\frac{\beta }{2}({\tilde{h}}_x(\varvec{\sigma })\sigma _x+q_x)}}{2\cosh (\frac{\beta }{2}({\tilde{h}}_x (\varvec{\sigma })\sigma _x+q_x))}\nonumber \\&=\sum _{x\in V}\frac{1}{e^{\beta ({\tilde{h}}_x(\varvec{\sigma })\sigma _x+q_x)}+1}. \end{aligned}$$(2.18)Therefore, the SCA has more spin-flips per update than Glauber, if \(|V|e^{2\beta {\tilde{h}}_x(\varvec{\sigma })\sigma _x}\ge e^{\beta ({\tilde{h}}_x(\varvec{\sigma }) \sigma _x+q_x)}\) for any \(x\in V\) and \(\varvec{\sigma }\in \Omega \), which is true when the temperature is sufficiently high such that

$$\begin{aligned} \max _{x\in V}\frac{\beta }{2}\bigg (q_x+|h_x|+\sum _{y\in V}|J_{x,y}|\bigg )\le \log \sqrt{|V|}. \end{aligned}$$(2.19)Compare this with the condition (2.5), which is independent of \(\{h_x\}_{x\in V}\), hence better than (2.19) in this respect. On the other hand, the bound in (2.19) can be made large as |V| increases, while it is always 1 in (2.5).

3 Simulated Annealing for the SCA

In this section, we show that, under a logarithmic cooling schedule \(\beta _t\propto \log t\), the simulated annealing for the SCA weakly converges to the uniform distribution over GS. To do so, we introduce the Dobrushin’s ergodic coefficient \(\delta (P)\) of the transition matrix \([P(\varvec{\sigma },\varvec{\tau })]_{\Omega \times \Omega }\) as

The following proposition is a summary of [3, Theorems 6.8.2 & 6.8.3], but stated in our context.

Proposition 3.1

Let \(\{X_n\}_{n=0}^\infty \) be a time-inhomogenous Markov chain on \(\Omega \) generated by the transition probabilities \(\{P_n\}_{n\in {\mathbb N}}\), i.e., \(P_n(\varvec{\sigma },\varvec{\tau })={\mathbb P}(X_n=\varvec{\tau }|X_{n-1}=\varvec{\sigma })\). Let \(\{\pi _n\}_{n\in {\mathbb N}}\) be their respective equilibrium distributions, i.e., \(\pi _n=\pi _nP_n\) for each \(n\in {\mathbb N}\). If

and if there is a strictly increasing sequence \(\{n_j\}_{j\in {\mathbb N}}\subset {\mathbb N}\) such that

then there is a probability distribution \(\pi \) on \(\Omega \) such that, for any \(j\in {\mathbb N}\),

where the supremum is taken over the initial distribution on \(\Omega \).

The second assumption (3.3) is a necessary and sufficient condition for the Markov chain to be weakly ergodic [3, Definition 6.8.1]: for any \(j\in {\mathbb N}\),

On the other hand, if (3.4) holds, then the Markov chain is called strongly ergodic [3, Definition 6.8.2]. The first assumption (3.2) is to guarantee strong ergodicity from weak ergodicity, as well as the existence of the limiting measure \(\pi \).

To apply this proposition to the SCA, it is crucial to find a cooling schedule \(\{\beta _t\}_{t\in {\mathbb N}}\) under which the two assumptions (3.2)–(3.3) hold, and to show that the limiting measure is the uniform distribution \(\pi ^{\scriptscriptstyle \mathrm G}_\infty \) over GS. Here is the main statement on the simulated annealing for the SCA.

Theorem 3.2

Suppose that the pinning parameters \(\varvec{q}\) satisfy the condition (1.13). For any non-decreasing sequence \(\{\beta _t\}_{t\in {\mathbb N}}\) satisfying \(\lim _{t\uparrow \infty }\beta _t=\infty \), we have

In particular, if we choose \(\{\beta _t\}_{t\in {\mathbb N}}\) as

then we obtain

As a result, for any initial \(j\in {\mathbb N}\),

Proof

Since (3.9) is an immediate consequence of Proposition 3.1, (3.6) and (3.8), it remains to show (3.6) and (3.8).

To show (3.6), we first define

where \(m=\min _{\varvec{\sigma },\varvec{\eta }}{\tilde{H}}(\varvec{\sigma },\varvec{\eta })\). Since \(\varvec{q}\) is chosen to satisfy (1.15), we can conclude that

Summing this over \(\varvec{\tau }\in \Omega \equiv \{\pm 1\}^V\) yields the second relation in (3.6). To show the first relation in (3.6), we note that

and that \({\mathbb E}_{\mu _\beta }[{\tilde{H}}]\equiv \sum _{\varvec{\sigma },\varvec{\tau }}{\tilde{H}} (\varvec{\sigma },\varvec{\tau })\,\mu _\beta (\varvec{\sigma },\varvec{\tau })\) tends to m as \(\beta \uparrow \infty \), due to (3.11). Therefore, \(\frac{\partial }{\partial \beta }\mu _\beta (\varvec{\sigma },\varvec{\tau })>0\) for all \(\beta \) if \({\tilde{H}}(\varvec{\sigma },\varvec{\tau })=m\), while it is negative for sufficiently large \(\beta \) if \({\tilde{H}}(\varvec{\sigma },\varvec{\tau })>m\). Let \(n\in {\mathbb N}\) be such that, as long as \(\beta \ge \beta _n\), (3.12) is negative for all pairs \((\varvec{\sigma },\varvec{\tau })\) satisfying \({\tilde{H}}(\varvec{\sigma },\varvec{\tau })>m\). As a result,

holds uniformly for \(N\ge n\). This completes the proof of (3.6).

To show (3.8), we use the following bound on \(P^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}\), which holds uniformly in \((\varvec{\sigma },\varvec{\tau })\):

Then, by (3.1), we obtain

which diverges, as required, under the cooling schedule (3.7). This completes the proof of the theorem. \(\square \)

Remark 3.3

-

(i)

The main message contained in the above theorem is that, in order to achieve weak convergence to the uniform distribution over the ground states, it is enough for the temperature to drop no faster than \(1/\log t\) with a large multiplicative constant \(\Gamma \). For logarithmic schedules, due to our approach, it is not trivial to ensure whether the value of \(\Gamma \) as in (3.7) is optimal for the SCA, contrasting with the Metropolis dynamics, whose optimal value can be theoretically determined, see [13].

-

(ii)

Simulated annealing with a logarithmic cooling schedule may not be so practical in finding a ground state within a feasible amount of time. Instead, an exponential cooling schedule is often used in engineering. In [17, 29], we have developed annealing processors called Amorphica and STATICA, that rely on the SCA with an exponential schedule. Experimental results have shown faster in searching for a ground state than conventional simulated annealing (based on the Glauber dynamics with an exponential schedule) and better performance in finding solutions to a max-cut problem. The authors are investigating the use of exponential schedules. We do not expect weak convergence to the uniform distribution over the ground states. Instead, we want to evaluate the probability that the SCA with an exponential schedule reaches a spin configuration \(\varvec{\sigma }\) such that \(H(\varvec{\sigma })-\min H\) is within a given error margin. A similar problem was considered by Catoni [5] for the Metropolis dynamics with an exponential schedule.

-

(iii)

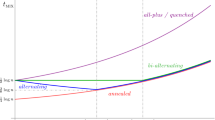

However, this may imply that we have not yet been able to make the most of the SCA’s independent multi-spin flip rule for better cooling schedules. The use of the total-variation distance may be one of the reasons why we have to impose such tight conditions on the temperature; if two measures \(\mu \) and \(\nu \) on \(\Omega \) are close in total variation, then \(|\mu (\varvec{\sigma })-\nu (\varvec{\sigma })|\) must be small at every \(\varvec{\sigma }\in \Omega \). We should keep in mind that the most important thing in combinatorial optmization is to know the ordering among spin configurations, and not to perfectly fit \(\pi ^{\scriptscriptstyle \mathrm G}_\beta \) by \(\pi ^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}\). For example, \(\pi ^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}\) does not have to be close to \(\pi ^{\scriptscriptstyle \mathrm G}_\beta \) in total variation, as long as we can say instead that \(H(\varvec{\sigma })\le H(\varvec{\tau })\) (or equivalently \(\pi ^{\scriptscriptstyle \mathrm G}_\beta (\varvec{\sigma })\ge \pi ^{\scriptscriptstyle \mathrm G}_\beta (\varvec{\tau })\)) whenever \(\pi ^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}(\varvec{\sigma })\ge \pi ^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}(\varvec{\tau })\) (see Fig. 1). In [14], we introduced a slightly relaxed version of this notion of closeness, which is also used in the stability analysis [10]. Given an error ratio \(\varepsilon \in (0,1)\), the SCA equilibrium measure \(\pi ^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}\) is said to be \(\varepsilon \)-close to the target Gibbs \(\pi ^{\scriptscriptstyle \mathrm G}_\beta \) in the sense of order-preservation if

$$\begin{aligned} \pi ^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}(\varvec{\sigma })\ge \pi ^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}(\varvec{\tau }) \quad \Rightarrow \quad H(\varvec{\sigma })\le H(\varvec{\tau })+\varepsilon R_H~~ \Big (\Leftrightarrow ~\pi ^{\scriptscriptstyle \mathrm G}_\beta (\varvec{\sigma })\ge \pi ^{\scriptscriptstyle \mathrm G}_\beta (\varvec{\tau })e^{-\beta \varepsilon R_H}\Big ), \end{aligned}$$(3.16)where \(R_H\equiv \max _{\varvec{\sigma },\varvec{\tau }}|H(\varvec{\sigma })-H(\varvec{\tau })|\) is the range of the Hamiltonian. By simple arithmetic [14] (with a little care needed due to the difference in the definition of \({\tilde{H}}\)), we can show that \(\pi ^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}\) is \(\varepsilon \)-close to \(\pi ^{\scriptscriptstyle \mathrm G}_\beta \) if, for all \(x\in V\),

$$\begin{aligned} q_x\ge |h_x|+\sum _y|J_{x,y}|+\frac{1}{\beta }\log \frac{2|V|(|h_x|+\sum _y|J_{x,y}|)}{\varepsilon R_H}. \end{aligned}$$(3.17)Unfortunately, this is not better than (1.13), which we recall is a sufficient condition for \(\pi ^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}\) to attain the highest peaks over GS, and not anywhere else. Since \(|V|(|h_x|+\sum _y|J_{x,y}|)/R_H\) in the logarithmic term in (3.17) is presumably of order 1, we can say that, if the assumption (1.13) is slightly tightened to \(q_x\ge |h_x|+\sum _y|J_{x,y}|+O_\varepsilon (\beta ^{-1})\), then the SCA can be used to find not only the best options in combinatorial optimization, but also the second- and third-best options, etc. In an ongoing project, we are also aiming to improve the cooling schedule under the new notion of closeness.

On the left, \(\Vert \pi ^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}-\pi ^{\scriptscriptstyle \mathrm G}_\beta \Vert _{\scriptscriptstyle \mathrm TV}\) is small, but the ordering among spin configurations is not preserved. On the right, \(\Vert \pi ^{\scriptscriptstyle \mathrm SCA}_{\beta ,\varvec{q}}-\pi ^{\scriptscriptstyle \mathrm G}_\beta \Vert _{\scriptscriptstyle \mathrm TV}\) is not small, but the ordering among spin configurations is preserved

4 Comparisons and Simulations

Based on the discussion from Remark 2.3(ii), let us propose a new algorithm derived from the SCA studied in this paper and make a quick comparison regarding their effectiveness in obtaining the ground states. Given a fixed inverse temperature \(\beta \ge 0\) and a number \(\varepsilon \in [0,1]\), let the transition matrix of the \(\varepsilon \)-SCA be defined by

where we recall that

is the probability of flipping the spin \(\sigma _x\) from the configuration \(\varvec{\sigma }\) disregarding a pinning parameter at x. Note that \(1-\varepsilon p_x(\varvec{\sigma }) = (1 - \varepsilon ) + \varepsilon (1 - p_x(\varvec{\sigma }))\). Therefore, we can visualize this new algorithm by decomposing it into two steps: in the first step, the spins which are eligible to be flipped are selected independently at random, where each spin is selected with probability \(\varepsilon \), while it remains unchanged with probability \(1 - \varepsilon \); in the second step all spins which were selected in the previous step are updated simultaneously and independently, where the probability of flipping the spin at x is equal to \(p_x(\varvec{\sigma })\).

Note that, in the particular case where \(\varepsilon = 1\), the update rule we have just introduced coincides with the SCA transition probability without the pinning parameters. Our experience [8, 9, 17] has shown that, for a fixed Hamiltonian, exponential cooling schedule and number of Markov chain steps, the simulated annealing algorithm based on \(\varepsilon \)-SCA with appropriately chosen parameter \(\varepsilon \) surpasses the performance of the algorithms based on SCA and Glauber dynamics with respect to the success probability in obtaining an approximation for a ground state. Later in this section, we will return to the question of how the performance of this algorithm is affected by the value of the parameter \(\varepsilon \).

Now, let us make a comparison between the performances of the simulated annealing algorithms based on Glauber dynamics, SCA (with pinning parameters uniformly taken as \(q_x = \frac{\lambda }{2}\)) and \(\varepsilon \)-SCA applied to the particular problems of determining the maximum cut of a given graph and to the minimization of a spin-glass Hamiltonian. Even though we only have rigorous results that justify the application of logarithmic cooling schedules to the Glauber dynamics [1, 3] and to the SCA, our practice has shown that exponential cooling schedules may also work for all these three algorithms, but we still do not have a solid theoretical justification for that. Let us restrict ourselves to the case where we have \(N = 128\) spin variables. The plots from Fig. 2 illustrate the histograms of the minimal energies achieved by running \(M = 1024\) independent trials starting from randomly chosen initial spin configurations, where for each trial, we applied \(L = 20000\) Markov chain steps and considered the exponential cooling schedule with initial temperature \(T_\text {init} = 1000\) and final temperature \(T_\text {fin} = 0.05\), explicitly, we considered

for \(t = 1, 2, \dots , L\).

First, we fixed a randomly generated Erdös-Rényi random graph G(N, p) with \(N = 128\) vertices and edge probability \(p = 0.25\), and considered the Hamiltonian corresponding to the max-cut problem on G(N, p), where \(h_x = 0\) for every vertex x, and \(J_{x,y} = -1\) if \(\{x,y\}\) is an edge of the graph, and \(J_{x,y} =0\) otherwise. The smallest energy obtained by the Glauber dynamics and the \(\varepsilon \)-SCA with parameter \(\varepsilon = 0.3\) was equal \(-476\), reached with success rates of \(4.69\%\) and \(83.50\%\), respectively, while the smallest energy obtained by the SCA was \(-474\), reached with a success rate of \(0.19\%\). Later, we fixed a spin-glass Hamiltonian on the complete graph \(K_N\), where \(N = 128\), whose spin-spin coupling constants were taken as the realizations of mutually independent standard normal random variables. In this case, the three algorithms obtained the same lowest energy, equal to \(-1052.57\), with a success rate of \(3.32\%\) for the Glauber dynamics, \(38.67\%\) for the SCA, while the obtained for the \(\varepsilon \)-SCA with parameter \(\varepsilon = 0.8\) was of \(61.33\%\). All such results are summarized in Table 1. These examples are consistent with our observations in [8, 9, 17], where it was possible to conclude that the SCA outperforms the Glauber dynamics in certain scenarios where anti-ferromagnetic interactions are not prevalent, and the \(\varepsilon \)-SCA outperforms both algorithms in all the studied scenarios.

The role played by the parameter \(\varepsilon \) in the \(\varepsilon \)-SCA is analogous to the one played by the pinning parameters \(\varvec{q}=\{q_x\}_{x\in V}\) in the SCA. However, the difference is that the pinning effect for the SCA gets stronger as we decrease the temperature, so, the system will tend to flip less and less spins and might get stuck in an energetic local minimum. Regarding the \(\varepsilon \)-SCA, due to the absence of the pinning parameters in the local transition probabilities and due to the effect of \(\varepsilon \) not being influenced by the temperature, the probability of flipping a certain spin will be larger compared to the SCA. Thus, this new algorithm allows the system to visit more configurations, especially at low temperatures, while preventing it from getting stuck in a local minimum. Nevertheless, a rigorous explanation that indicates what leads the system to converge to a ground state is still under investigation.

In order to get some intuition about the dependence on \(\varepsilon \) of the success rate of reaching a ground state, we considered the same max-cut problem and spin-glass Hamiltonian again and performed \(M=1024\) \(\varepsilon \)-SCA annealing trials for different values of \(\varepsilon \) with the same cooling schedule and number of Markov chain steps as before. Typically, for Hamiltonians containing predominantly anti-ferromagnetic pairwise interactions (such as the Hamiltonian corresponding to the max-cut problem), such parameter \(\varepsilon \) has to be taken relatively small since the system tends to show an oscillatory behavior and does not converge to a ground state as we allow a larger number of spins to be flipped at a time, see Fig. 3a. On the other hand, for the spin-glass Hamiltonian, there is a tendency of growth of the success rate as the parameter \(\varepsilon \) increases. However, when \(\varepsilon \) gets sufficiently close to 1, the algorithm behaves similarly to the SCA with pinning parameters \(q_x = 0\), so the success rate decreases since the system will not necessarily converge to a ground state of the Hamiltonian, see Fig. 3b. Differently from the SCA, in which we have a sufficient condition on the values for the pinning parameters that guarantee the convergence of the algorithm, it is still necessary to derive an analogous condition on \(\varepsilon \).

As we mentioned previously in this paper, the parallel spin update, which is the most notorious feature shared by the SCA and the \(\varepsilon \)-SCA, leads us to consider hardware accelerators that can fully take advantage of such parallel nature and be very decisive in solving large-scale combinatorial optimization problems within a shorter amount of time as compared to these algorithms implemented on an average consumer-level device. STATICA [29] and Amorphica [17] are cutting-edge annealing processors developed to accommodate SCA and \(\varepsilon \)-SCA annealing algorithms and have achieved results with higher precision, energy efficiency, and shorter execution times, compared to other state-of-art devices. In order to test the efficiency of our algorithms implemented in consumer-level processors, we evaluated the annealing time of Glauber dynamics, SCA, and \(\varepsilon \)-SCA, by executing each algorithm in C++ (for running on a CPU) and C++/CUDA (for running on a GPU). In this evaluation, we ran the C++ code on an Intel Core i7-9700 Processor and the GPU kernel on an NVIDIA GeForce RTX 2080 Ti. Let N be the number of spin variables involved and M the number of annealing trials/replicas. The execution time on a CPU is approximately proportional to \(N^2 \times M\). In contrast, thanks to the parallel computing capability, the execution efficiency can be improved on a GPU by increasing both N and M. Due to the relevance of fully connected spin systems in real applications, we have considered in this evaluation spin-glass Hamiltonians on complete graphs with \(N = 2^n\) (\(7 \le n \le 12\)), and we performed simulated annealing once (\(M = 1\)) or 128 times (\(M = 128\)), where each annealing trial consisted of executing \(L = 20000\) Markov chain steps. In the results summarized in Table 2, each value represents the total annealing time per replica (i.e., the total annealing time \(t_A\) divided by M) measured in milliseconds. Let us note that the algorithms implemented on a GPU have significantly shorter execution times compared to their CPU implementation counterparts. Although the annealing time per trial for the Glauber dynamics, SCA, and \(\varepsilon \)-SCA are approximately the same when implemented on a GPU, such an observation can be compensated by the fact that, as we will see in the following discussion, the \(\varepsilon \)-SCA and the SCA outperform Glauber dynamics in terms of the success rate of finding a ground state.

In order to understand the dependence of the performance of the algorithms with respect to certain variables, such as the system size N and the number of Markov Chain steps L, we performed simulations on a GPU specifically for the problem of minimization of a spin-glass Hamiltonian on a complete graph considering a fixed number of replicas \(M = 1024\) and a cooling schedule such as in equation (4.3) with initial temperature \(T_\text {init} = 1000\) and final temperature \(T_\text {fin} = 0.05\). In Table 3, corresponding to each algorithm, we analyze its success rate \(\rho _\text {min}\) of hitting the smallest energy reached among all algorithms, the average minimum energy \(H_{\text {mean}}^{\text {(min)}}\) obtained, and total annealing time per replica \(t_A / M\). As expected, we observe that for all algorithms, the success rates increase and the average minimum energy decrease as the number of Markov chain steps assume larger values. In that respect, the values of \(\rho _\text {min}\) and \(H_{\text {mean}}^{\text {(min)}}\) for SCA and \(\varepsilon \)-SCA (with \(\varepsilon = 0.8\)) are consistently better compared to the Glauber dynamics. Furthermore, the performances associated with the Glauber dynamics and SCA rapidly decrease as we consider problems with a larger number of vertices, while the \(\varepsilon \)-SCA keeps the highest performance among the three algorithms.

The greater effectiveness of the \(\varepsilon \)-SCA in reaching lower energy configurations in several observations is very intriguing due to the lack of any rigorous mathematical justification (at the moment) for that. Therefore, it raises several questions to be answered that bring us a new direction to be explored for the development of efficient algorithms for obtaining ground states of Ising Hamiltonians. Moreover, in future investigations, hardware-related questions should be addressed, such as memory bandwidth problems and efficient implementation of parallel spin-flip algorithms on a GPU, similar to those explored in [4].

References

Aarts, E., Korst, J.: Simulated Annealing and Boltzmann Machines: A Stochastic Approach to Combinatorial Optimization and Neural Computing. Wiley, Hoboken (1989)

Aramon, M., Rosenberg, G., Valiante, E., Miyazawa, T., Tamura, H., Katzgraber, H.G.: Physics-inspired optimization for quadratic unconstrained problems using a digital annealer. Front. Phys. 7, 48 (2019)

Brémaud, P.: Markov Chains: Gibbs Fields, Monte Carlo Simulation, and Queues. Texts in Applied Mathematics. Springer, New York (2001)

Cagigas-Muñiz, D., Diaz-del Rio, F., Sevillano, J.L., Guisado, J.L.: Efficient simulation execution of cellular automata on GPU. Simul. Modell. Pract. Theory 118, 102519 (2022)

Catoni, O.: Rough large deviation estimates for simulated annealing: application to exponential schedules. Ann. Probab. 20, 1109–1146 (1992)

Černý, V.: Thermodynamical approach to the traveling salesman problem: an efficient simulation algorithm. J. Optim. Theory Appl. 45(1), 41–51 (1985)

Dai Pra, P., Scoppola, B., Scoppola, E.: Sampling from a Gibbs measure with pair interaction by means of PCA. J. Stat. Phys. 149, 722–737 (2012)

Fukushima-Kimura, B.H., Kamijima, Y., Kawamura, K., Sakai, A.: Stochastic optimization via parallel dynamics: rigorous results and simulations. Proc. ISCIE Int. Symp. Stoch. Syst. Theory Appl. 65–71, 2022 (2022)

Fukushima-Kimura, B.H., Kamijima, Y., Kawamura, K., Sakai, A.: Stochastic optimization - Glauber dynamics versus stochastic cellular automata. Trans. Inst. Syst. Control Inf. Eng. 36(1), 9–16 (2023)

Fukushima-Kimura, B.H., Sakai, A., Toyokawa, H., Ueda, Y.: Stability of energy landscape for Ising models. Physica A 583, 126208 (2021)

Garey, M.R., Johnson, D.S.: Computers and Intractability: A Guide to the Theory of NP-Completeness. Mathematical Sciences Series, Freeman, Austin (1979)

Glauber, R.J.: Time-dependent statistics of the Ising model. J. Math. Phys. 4(2), 294–307 (1963)

Hajek, B.: Cooling schedules for optimal annealing. Math. Oper. Res. 13(2), 311–329 (1988)

Handa, S., Kamakura, K., Kamijima, Y., Sakai, A.: Finding optimal solutions by stochastic cellular automata. arXiv: Optimization and Control (2019)

Hayes, T.P., Sinclair, A.: A general lower bound for mixing of single-site dynamics on graphs. Ann. Appl. Probab. 17(3), 931–952 (2007)

Isakov, S.V., Zintchenko, I.N., Rønnow, T.F., Troyer, M.: Optimised simulated annealing for Ising spin glasses. Comput. Phys. Commun. 192, 265–271 (2015)

Kawamura, K., Yu, J., Okonogi, D., Jimbo, S., Inoue, G., Hyodo, A., Garcìa-Arias, A.L., Ando, K., Fukushima-Kimura, B.H., Yasudo, R., Van Chu 1, T., Motomura, M.: 2.3 Amorphica: 4-replica 512 fully connected spin 336mhz metamorphic annealer with programmable optimization strategy and compressed-spin-transfer multi-chip extension. In: Proceedings of the 2023 IEEE International Solid- State Circuits Conference (2023)

Kirkpatrick, S., Gelatt, C.D., Vecchi, M.P.: Optimization by simulated annealing. Science 220(4598), 671–680 (1983)

Lawler, E.L.: Combinatorial Optimization: Networks and Matroids. Holt, Rinehart and Winston, New York (1976)

Levin, D.A., Peres, Y.: Markov Chains and Mixing Times. American Mathematical Society, Providence (2017)

Lucas, A.: Ising formulations of many NP problems. Front. Phys. 2, 5 (2014)

Metropolis, N., Rosenbluth, A.W., Rosenbluth, M.N., Teller, A.H., Teller, E.: Equation of state calculations by fast computing machines. J. Chem. Phys. 21(6), 1087–1092 (1953)

Okuyama, T., Sonobe, T., Kawarabayashi, K., Yamaoka, M.: Binary optimization by momentum annealing. Phys. Rev. E 100(1), 012111 (2019)

Papadimitriou, C.H., Steiglitz, K.: Combinatorial Optimization: Algorithms and Complexity. Prentice-Hall Inc, Hoboken (1982)

Scoppola, B., Troiani, A.: Gaussian mean field lattice gas. J. Stat. Phys. 170(6), 1161–1176 (2018)

Swendsen, R.H., Wang, J.S.: Nonuniversal critical dynamics in Monte Carlo simulations. Phys. Rev. Lett. 58, 86–88 (1987)

van Laarhoven, P.J., Aarts, E.H.: Simulated Annealing: Theory and Applications. Mathematics and Its Applications. Springer, Dordrecht (1987)

Wong, D.F., Leong, H.W., Liu, H.W.: Simulated Annealing for VLSI Design. The Springer International Series in Engineering and Computer Science. Springer, New York (1988)

Yamamoto, K., Kawamura, K., Ando, K., Mertig, N., Takemoto, T., Yamaoka, M., Teramoto, H., Sakai, A., Takamaeda-Yamazaki, S., Motomura, M.: STATICA: A 512-spin 0.25M-weight annealing processor with an all-spin-updates-at-once architecture for combinatorial optimization with complete spin-spin interactions. IEEE J. Solid-State Circuits 56(1), 165–178 (2021)

Acknowledgements

We are grateful to the following members for continual encouragement and stimulating discussions: Masato Motomura from Tokyo Institute of Technology; Shinya Takamaeda-Yamazaki from the University of Tokyo; Hiroshi Teramoto from Kansai University; Takashi Takemoto, Takuya Okuyama, Normann Mertig and Kasho Yamamoto from Hitachi Ltd.; Masamitsu Aoki and Suguru Ishibashi from the Graduate School of Mathematics at Hokkaido University; Hisayoshi Toyokawa from Kitami Institute of Technology; Yuki Ueda from Hokkaido University of Education.

Funding

This work was supported by JST CREST Grant Number PJ22180021, Japan. All data generated or analyzed during this study are included in this published article. The authors have no relevant financial or non-financial interests to disclose.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Eric A. Carlen.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Fukushima-Kimura, B.H., Handa, S., Kamakura, K. et al. Mixing Time and Simulated Annealing for the Stochastic Cellular Automata. J Stat Phys 190, 79 (2023). https://doi.org/10.1007/s10955-023-03090-x

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s10955-023-03090-x