Abstract

A general approach for the derivation of nonlinear parameterizations of neglected scales is presented for nonlinear systems subject to an autonomous forcing. In that respect, dynamically-based formulas are derived subject to a free scalar parameter to be determined per mode to parameterize. For each high mode, this free parameter is obtained by minimizing a cost functional—a parameterization defect—depending on solutions from direct numerical simulation (DNS) but over short training periods of length comparable to a characteristic recurrence or decorrelation time of the dynamics. An important class of dynamically-based formulas, for our parameterizations to optimize, are obtained as parametric variations of manifolds approximating the invariant ones. To better appreciate the origins of the modified manifolds thus obtained, the standard approximation theory of invariant manifolds is revisited in Part I of this article. A special emphasis is put on backward–forward (BF) systems naturally associated with the original system, whose asymptotic integration provides the leading-order approximation of invariant manifolds. Part II presents then (i) the modifications of these approximating manifolds based also on integration of the same BF systems but this time over a finite time \(\tau \), and (ii) the variational approach aimed at making an efficient selection of \(\tau \) per mode to parameterize. The parametric class of leading interaction approximation (LIA) of the high modes obtained this way, is completed by another parametric class built from the quasi-stationary approximation (QSA); close to the first criticality, the QSA is an approximation to the LIA, but it differs as one moves away from criticality. Rigorous results are derived that show that—given a cutoff dimension—the best manifolds that can be obtained through our variational approach, are manifolds which are in general no longer invariant. The minimizers are objects, called the optimal parameterizing manifolds (PMs), that are intimately tied to the conditional expectation of the original system, i.e. the best vector field of the reduced state space resulting from averaging of the unresolved variables with respect to a probability measure conditioned on the resolved variables. Applications to the closure of low-order models of Atmospheric Primitive Equations and Rayleigh–Bénard convection are then discussed. The approach is finally illustrated—in the context of the Kuramoto–Sivashinsky turbulence—as providing efficient closures without slaving for a cutoff scale \(k_\mathfrak {c}\) placed within the inertial range and the reduced state space is just spanned by the unstable modes, without inclusion of any stable modes whatsoever. The underlying optimal PMs obtained by our variational approach are far from slaving and allow for remedying the excessive backscatter transfer of energy to the low modes encountered by the LIA or the QSA parameterizations in their standard forms, when they are used at this cutoff wavelength.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

A number of theories have been proposed to explain the phenomenon of turbulence in fluid dynamics, but none has been universally accepted. Landau [117] and Hopf [93] suggested that turbulence is the result of an infinite sequence of bifurcations, each adding another independent period to a quasi-periodic motion of increasingly greater complexity. More recently, it has been shown numerically that the original quasiperiodic Landau’s view of turbulence, with the amendment of the inclusion of stochasticity, may be well suited to describe certain turbulent behavior [105], at least for the motion of large eddies. In the 1970s it has been theoretically argued and confirmed by many experiments that dynamical systems may exhibit strange attractors which result in chaotic but deterministic behavior after a (very) few bifurcations have taken place. Ruelle and Takens [151] and others have suggested this as a mechanism underlying turbulence. In realistic physical problems one is seldomly able to carry out the mathematics beyond the first or second bifurcation, in particular regarding the derivation of reduced equations that capture effectively the amplitude and frequency content of the bifurcated solutions [42, 118]. Noteworthy is normal form reduction that have been carried for degenerate singularities with simultaneous onset of co-existing and possibly many instabilities, but still close to first criticality [4, 40, 59].

It is typical of many bifurcation problems that, as the condition for instability is exceeded, increasingly many modes become unstable. This circumstance considerably complicates an effective reduction because it often corresponds to going through higher-order bifurcations to reach possibly chaos, for which a failure of the slaving principle of the unresolved variables onto the resolved ones—mandatory for the success of standard reduction techniques—is typically observed.

Center manifold techniques [42, 81, 172] require such a slaving principle to provide an efficient reduction of the dynamics, and in that sense is reliable only in the vicinity of low-order bifurcations associated with the onset of instability. Center manifolds form a particular class of more general invariant manifolds associated with a fixed point, on which solutions obey de facto a slaving principle. A comprehensive treatment of the computational aspects relative to the underlying parameterizations can be found in [85]. The treatment in [85] is based on the so-called parameterization method [16,17,18] itself built upon the invariance equation (see Eq. (2.26) below) and the associated cohomological equations that the sought (slaving) parameterization solves at different orders. The parameterization method allows for efficient computations for not only the case of invariant manifolds associated with fixed points, but also for the cases of invariant tori for autonomous or quasi-periodically forced systems, averaging and periodic diffeomorphisms [27], invariant tori in Hamiltonian systems [85], as well as normally hyperbolic invariant tori. Other complementary approaches include e.g. the Lyapunov–Schmidt reduction [77, 125] and the Lyapunov–Perron method [88, 125], as well as the usage of symmetries [77, 83].

Despite the success for analyzing a broad class of bifurcations or detecting special solutions in dynamical systems such as quasi-periodic ones, these methods relying on invariant manifold theory, have failed to prove their efficiency for reducing complicated behaviors resulting from the presence of chaos. In a certain sense, the “story” of the inertial manifold (IM) constitutes perhaps an epitome of this failure. Despite appealing mathematical results showing existence of IMs for a broad class of dissipative systems [38, 62, 66, 130, 164], and convergence error estimates when e.g. slaving is not guaranteed to be satisfied (Approximate Inertial Manifold (AIM)) [48, 52, 98, 131], early promises [55, 64, 65, 95, 96] have been challenged due to practical shortcomings pointed out for efficient closure by IMs or AIMs for turbulent flows and route chaos [46, 68, 72, 80, 87, 97, 137].

Essentially, the current IM theory [180] predicts that the underlying slaving of the high modes to the low modes, holds when the cutoff wavenumber, \(k_\mathfrak {c}\), is taken sufficiently far within the dissipative range, especially in “strongly” turbulent regimes that correspond e.g. to the presence of many unstable modes. Still, as the AIM theory underlines, satisfactory closures may be expected to be derived for \(k_\mathfrak {c}\) corresponding to scales larger than what the IM theory predicts. Nevertheless, as one seeks to further decrease \(k_\mathfrak {c}\) within the inertial range, standard AIMs fail typically in providing relevant closures and one needs to rely on no longer a fixed cutoff but instead a dynamic one so as to avoid energy accumulation on the cutoff level [50, 54, 56].

In general, to aim at closing a given chaotic system at a fixed cutoff scale such that the neglected scales contain a non-negligible fraction of the energy,Footnote 1 makes, a priori, the closure problem difficult to address. This difficulty is often manifested by either an under- or over-parameterization of the small scales, i.e. a deficient or excessive parameterization of the small-scale energy, leading to an incorrect reproduction of the backscatter transfer of energy to the large scales [9, 94, 108, 121, 140]. Thus, a deficiency in the (nonlinear) parameterization of the high modes leads to errors in the backscatter transfer of energy which is due to nonlinear interactions between the modes, especially those near the cutoff scale. We can speak of an inverse error cascade, i.e. errors in the modeling of the parameterized (small) scales that contaminate gradually the larger scales, and may spoil severely the closure skills for the resolved variables.

To remedy such a pervasive issue, it is thus reasonable, given a cutoff scale to seek for nonlinear parameterizations (manifolds) that minimize as much as possible a defect of parameterization in order to reduce spurious backscatter transfer of energy to the large scales. Obviously such manifolds should coincide with the invariant ones as one approaches towards the first bifurcation.

This latter point explains the two-part structure of our article. We show here that an important class of dynamically-based formulas for our parameterizations are obtained as parametric variations of manifolds approximating the invariant ones. To better appreciate the origins of the modified manifolds thus obtained, the standard approximation theory of invariant manifolds is revisited in Part I of this article. A special emphasis is put on backward–forward (BF) systems naturally associated with the original system, whose asymptotic integration provides the leading-order approximation of invariant manifolds.

Part II presents then (i) the modifications of these approximating manifolds based also on integration of the same BF systems but this time over a finite time \(\tau \), and (ii) the variational approach aimed at making an efficient selection of \(\tau \) per mode to parameterize, in order to minimize a parameterization defect. The parametric class of leading interaction approximation (LIA) of the high modes obtained this way, is completed by another parametric class built from the quasi-stationary approximation (QSA); close to the first criticality, the QSA is an approximation to the LIA, but differs as one moves away from criticality.

In this article our formulations are general, but our primary motivations are geophysical fluid dynamics, and our numerical illustrations are with simple systems of this type. With this in mind, we elaborate our approach for a broad class of ordinary differential equations (ODEs), that includes forced-dissipative systems of the form

Here A denotes a linear \(N\times N\) matrix, B a quadratic nonlinearity (as in the fluid advection operator) and F a constant forcing, i.e. autonomous. Such systems with complex entries arise e.g. as equations for the perturbed variable around a mean state, when the latter are expressed in the eigenbasis \(\{\varvec{e}_j\}_{j=1}^N\) of the linearization at this mean state.

We decompose the phase space into the sum of the subspace, \(E_\mathfrak {c}\), of resolved variables (“coarse-scale”), and the subspace, \(E_\mathfrak {s}\), of unresolved variables (“small-scale”). In practice \(E_\mathfrak {c}\) is spanned by the first few eigenmodes with dominant real parts (e.g. unstable), and \(E_\mathfrak {s}\) by the rest. Within this framework, and given a cutoff dimension, m (i.e. dim(\(E_\mathfrak {c}\))=m), we consider for systems such as (1.1) parametric families of nonlinear parameterizations of the form

The purpose is to dispose of parameterizations that cover situations of slaving between the resolved and unresolved variables as well as situations for which slaving is not expected to occur (e.g. far from criticality), as \(\varvec{\tau }\) is varied. In that respect, we aim at determining a family of parameterizations that include the leading-order approximation of invariant manifolds when the system is placed near the first bifurcation value. The theory of approximation of invariant manifolds revisited in Part I teaches us that such a family can be produced by finite time-integration of auxiliary BF systems derived from Eq. (1.1); see e.g. (2.29) and (4.12) below. This gives rise to the LIA class, for which taking the limit (under appropriate non-resonance conditions) of \(H_n(\tau _n,\xi ) \) as \(\tau _n\rightarrow \infty \) provides the leading-order approximation of the invariant manifold; see Theorems 1 and 2 below.

We propose a variational approach to deal with situations far away from criticality. It consists of determining the optimal \(\tau _n\)-value, \(\tau _n^*\), by minimizing (relevant) cost functionals that depend on solutions from direct numerical simulation (DNS) but over a training interval of length comparable to a characteristic recurrence or decorrelation time of the dynamics; see Sects. 5 and 6 below for applications.

Given a solution y(t) of Eq. (1.1) available over an interval \(I_T\) of length T, one such cost functional on which a substantial part of this article focuses on is given by the following parameterization defect

Here \(\overline{(\cdot )}\) denotes the time-mean over \(I_T\) while \(y_n(t)\) and \(y_\mathfrak {c}(t)\) denote the projections onto the high-mode \(\varvec{e}_n\) and the reduced state space \(E_\mathfrak {c}\) of y(t), respectively. Our goal is then to optimize \(\mathcal {Q}_n(\tau _n,T)\) by solving for each \( m+1 \le n \le N\),

This procedure corresponds to minimizing the variance of the residual error per high mode in case \(y_n\) and \(H_n\) are zero-mean, and to minimizing the residual error as measured in a least-square sense, in the general case.

Geometrically, as shown in Sect. 4.2 below, the graph of \(H_{{\varvec{\tau }}}\) gives rise to a manifold \(\mathfrak {M}_{{\varvec{\tau }}}\) that satisfies

where \(\text {dist}(y(t),\mathfrak {M}_{{\varvec{\tau }}})\) denotes the distance of y(t) (lying on the attractor) to the manifold \(\mathfrak {M}_{{\varvec{\tau }}}\).

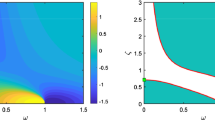

Thus minimizing each \(\mathcal {Q}_n(\tau _n,T)\) (in the \(\tau _n\)-variable) is a natural idea to enforce closeness of y(t) in a least-square sense to the manifold \(\mathfrak {M}_{{\varvec{\tau }}}\). The left panel in Fig. 1 illustrates (1.5) for the \(y_n\)-component: The optimal parameterization, \(H_n(\tau _n^*,\xi )\), minimizing (1.4) is shown; it illustrates a situation where the dynamics is transverse to it (i.e. absence of slaving) while \(H_n(\tau _n^*,\xi )\) provides the best (quadratic) parameterization in a least-square sense.

In practice, the following normalized parameterizing defect (for the nth mode), \(Q_n\), is a useful tool to compare the different parameterizations \(H_{n}(\tau ;\cdot )\) as \(\tau \) is varied. It is defined as

It provides a non-dimensional number to judge objectively of the quality of a parameterization. If \(Q_n(\tau ,T)=0\) for each \(n\ge m+1\), then \(H_{{\varvec{\tau }}}\) provides an exact slaving relation, and if \(H_n=0\) i.e. \(H_{{\varvec{\tau }}}\equiv 0\), corresponding to a standard Galerkin approximation, then \(Q_n(\tau ,T)=1\). Thus, the notion of (normalized) parameterizing defect allows us to bring another perspective on criticisms brought to the (approximate) inertial manifold theory [72, 90]: given a cutoff scale, if \(Q_n(\tau ,T)>1\) (over-parameterization) for several high modes, then a parameterization \(H_{{\varvec{\tau }}}\) may indeed lead to closure skills worse than those that would be obtained from a standard Galerkin scheme (cf. \(Q_p\) in Fig. 1; right). In other words, only a parameterization associated with a manifold that avoids such a situation is useful compared to a standard Galerkin scheme. This understanding alone is overlooked in the literature concerned with inertial manifolds and the like. We call such a manifold a parameterizing manifold (PM); see Definition 1 for a precise characterization of a PM.

Minimizing the parameterization defects leads thus to an optimal PM, for the cost functionals \(\mathcal {Q}_n\). We emphasize that each component \(H_n\), of the parameterization \(H_{{\varvec{\tau }}}\) given in (1.2), depends only on \(\tau _n\) (and not the other \(\tau _p\)’s for \(p\ne n\)), and thus the cost functionals, \(\mathcal {Q}_n\), may be minimized independently from each other.

Left panel: The optimal parameterization, \(H_n(\tau _n^*,\xi )\), minimizing (1.4) is shown (in gray). Here the dynamics (black curve) is transverse to it (i.e. absence of slaving) while \(H_n(\tau _n^*,\xi )\) provides the best (quadratic) parameterization in a least-square sense. See Fig. 4 below for a concrete example in the case of a truncated Primitive Equation model due to Lorenz [123]. The parameter \(\tau _n^*\) corresponds to the argmin of \(Q_n\) (red asterisk) shown in the right panel. Right panel: Dependence on \(\tau \) shown for two parameterization defects \(Q_n\) and \(Q_p\) given by (1.6), with \(p, n \ge m+1\). The minimum is marked by a red asterisk (Color figure online)

The parametric dependence on \({\varvec{\tau }}\) of \(H_{{\varvec{\tau }}}\) is of practical importance. To understand this, let us consider for a moment a parameterization, \(H_n\), given as a homogeneous quadratic polynomial of the m-dimensional \(\xi \)-variable with unknown coefficients (not depending on \(\tau _n\)). To learn these coefficients via a standard regression would lead to \(m(m-1)/2\) coefficients to estimate. Instead, adopting the parametric formulation given in (1.3), only the parameter \(\tau \) needs to be learned (per high-mode) in case each coefficient of \(H_n(\tau ,\xi )\) is given by a function of \(\tau \). This way, we benefit from a significant reduction of the amount \(N_T\) of snapshots \(y(t_k)\) required from numerical integration of Eq. (1.1) to obtain robust parameterizations (in a statistical sense). Roughly speaking, if \(N_T\) is smaller or comparable to \(m(m-1)/2\), then learning the unknown (and arbitrary) coefficients of a homogeneous quadratic parameterization (not given under the parametric form (1.3)) is either undetermined or not robust statistically.

Explicit formulas for the coefficients of \(H_n(\tau ,\xi )\) are derived in Sects. 4.3 and 4.4 below. These formulas are dynamically-based in the sense that these coefficients involve structural elements of the right-hand side (RHS) of Eq. (1.1) such as the eigenvalues \(\beta _j\) of A, projections onto the \(n^{\mathrm{th}}\) high-mode of nonlinear interactions \(B_{ij}^n\) between pairs of low eigenmodes \((\varvec{e}_i, \varvec{e}_j)\) of A (\(1 \le i,j \le m\)), as well as possible nonlinear interactions between these modes and the forcing term.

For instance, for the LIA class, the coefficients of the \(H_n(\tau ,\xi )\)’s monomials are given by \(D_{ij}^n (\tau )B_{ij}^n\) with

We emphasize that at an heuristic level, the coefficient \(D_{ij}^n (\tau )\) allows for balancing the denominator \(\delta _{ij}^n\) by the numerator \(1-e^{-\tau \delta _{ij}^n}\) when the former is small. Such compensating \(\tau \)-factors are in general absent from parameterizations built from invariant manifold or (approximate) inertial manifolds techniques.

From the approximation theory of invariant manifolds revisited in Part I below, one notes that \(D_{ij}^n (\tau )\) is equal to \(1/\delta _{ij}^n\) in the case of standard approximation formulas of invariant manifolds (Theorem 2), corresponding thus to the asymptotic case \(\tau \rightarrow \infty \) if \(\delta _{ij}^n>0\). When adopting these approximation formulas outside their domain of applicability (i.e. not for approximating an underlying invariant manifold), it corresponds typically to small \(\delta _{ij}^n\)’s which without the compensating \(\tau \)-factors lead to an over-parameterization and an incorrect reproduction of the backscatter transfer of energy to the large scales. This problem is typically encountered in invariant manifold approximation when small spectral gaps are present, regardless of whether the solution dynamics is simple or complicated; see the Supplementary Material for a simple example. It turns out that, to seek for an optimal backward integration time \(\tau \) actually helps alleviate this problem by introducing numerators balancing the small denominators present in standard LIA parameterizations such as provided by Theorem 2 below.

At the same time, \(\tau =0\) implies \(D_{ij}^n (\tau )=0\), which corresponds to the null parameterization, namely to a Galerkin approximation of dimension m. Thus, minimizing the \(Q_n\)’s gives rise to an intermediate (and optimized) parameterization compared to a Galerkin approximation (\(H_n=0\)) or an invariant manifold approximation (\(Q_n=0\)).

The right panel in Fig. 1 shows a typical dependence on \(\tau \) of the \(Q_n\)’s defined in (1.6) for the LIA class. Similar dependences hold for the QSA class. On a practical ground, the minimization problem (1.4) is greatly facilitated by exploiting the explicit formulas of Sects. 4.3 and 4.4. An efficient minimization can be indeed operated by application of a simple gradient-descent algorithm in the real variable \(\tau \), when the appropriate moments up to fourth order have been estimated; see Appendix.

We emphasize that the parameterization formulas of the LIA or QSA classes can be derived for dissipative nonlinear partial differential equations (PDEs) as well; see Sect. 6 below. The LIA class as rooted in the backward–forward method mentioned above was initially introduced for PDEs (possibly driven by a multiplicative linear noise) in [31, Chap. 4] and was applied to the closure of a stochastic Burgers equation in [31, Chaps. 6 & 7] and to optimal control in [26]. The main novelty compared to these previous works is the idea of optimizing per high mode the backward integration time, \(\tau _n\), by minimization of the parameterization defect \(Q_n\). Here, we also restrict ourselves to quadratic parameterizations that we prefer to optimize instead of computing higher-order terms that although being potentially useful make more cumbersome the numerical integration of the corresponding closure systems by adding too many extra terms in the RHS of the latter.

The justification of the variational approach proposed in this article relies on the ergodic theory of dissipative deterministic dynamical systems. In that respect, given the flow \(T_t\) associated with Eq. (1.1), we assume in Part II of this article that \(T_t\) possesses an invariant probability measure \(\mu \), which is physically relevant [37, 57], in the sense that time-average equals to ensemble average for trajectories emanating from Lebesgue almost every initial condition. More precisely, we say that the invariant measure, \(\mu \), is physical if the following property holds for y in a positive Lebesgue measure set \(B(\mu )\) (of \(\mathbb {C}^N\)) and for every continuous observable \(\varphi :\mathbb {C}^N\rightarrow \mathbb {C}\)

This property assures that meaningful averages can be calculated and the statistics of the dynamical system can be investigated by the asymptotic distribution of orbits starting from Lebesgue almost every initial condition in e.g. the basin of attraction \(B(\mu )\) of the statistical equilibrium, \(\mu \).

It can be proven for e.g. Anosov flows [13], partially hyperbolic systems [1], Lorenz-like flows [12], and observed experimentally for many others [28, 33, 57, 71] that a common feature of (dissipative) chaotic systems is the transformation (under the action of the flow) of the initial Lebesgue measure into a probability measure with finer and finer scales, reaching asymptotically an invariant measure \(\mu \) of Sinai–Ruelle–Bowen (SRB) type. This measure is singular with respect to the Lebesgue measure, is supported by the local unstable manifolds contained in the global attractor or the non-wandering set [37, Definition 6.14], and if it has no zero Lyapunov exponents it satisfies (1.8) [177]. This latter property is often referred to as the chaotic hypothesis that, roughly speaking, expresses an extension of the ergodic hypothesis to non-Hamiltonian systems [71].

At the core of our analysis, is the disintegration \(\mu _\xi \) of statistical equilibrium \(\mu \) with respect to the resolved variable \(\xi \) in \(E_\mathfrak {c}\); see [23, Sec. 3]. In our case, the probability measure \(\mu _\xi \) gives the conditional probability of the unresolved variables (in \(E_\mathfrak {s}\)), contingent upon the value taken by the resolved variable \(\xi \). Denoting by \(y_\mathfrak {s}(t)\) the high-mode projection of y(t), Theorem 4 below shows, under a natural boundedness assumption on the 2nd-order moments, that the optimal PM that minimizes the defect

with \(\varPsi \) denoting a square-integrable mappingFootnote 2 from \(E_\mathfrak {c}\) to \(E_\mathfrak {s}\), is given, when \(T \rightarrow \infty \), by

This formula shows that the optimal PM corresponds actually to the manifold that maps to each resolved variable \(\xi \) in \(E_\mathfrak {c}\), the averaged value of the unresolved variable \(\zeta \) in \(E_\mathfrak {s}\) as distributed according to the conditional probability measure \(\mu _\xi \). In other words, the optimal PM provides the best manifold (in a least-square sense) that averages out the fluctuations of the unresolved variable. The closure system that consists of approximating the unresolved variables by this optimal parameterization provides then, when the high-mode to high-mode interactions are small, the conditional expectation of the original system; see Theorem 5 below. The latter provides the best vector field of the reduced state space for which the effects of the unresolved variables are averaged out with respect to the probability measure \(\mu _\xi \) on the space of unresolved variables, itself conditioned on the resolved variables. For slow-fast systems, in the limit of infinite time-scale separation, it is well-known that the slow dynamics is approximated (on bounded time scales) by the conditional expectation of the multiscale system [100, 101, 138] and that slow trajectories may be obtained through a variational principle [119]. Nevertheless, the conditional expectation may be useful to approximate other global features of the multiscale dynamics when time-scale separation is lacking. For instance, the low-frequency variability dynamics may be well approximated for chaotic systems that do not exhibit distinguished fast variables but rather episodic bursts of fast oscillations punctuated by slow oscillations for each variable; see [32] and Sect. 3.4 below.

The optimal PM, \(\varPsi ^*\), comes with a normalized parameterization defect, \(Q_T(\varPsi ^*)=\mathcal {Q}_T(\varPsi ^*)/\overline{\Vert y_\mathfrak {s}(t)\Vert ^2}\), that satisfies necessarily (Theorem 4)

This variational view on the parameterization problem of the unresolved variables removes any sort of ambiguity that has surrounded the notion of (approximate) inertial manifold in the past. Indeed, within this paradigm shift, given an ergodic invariant measure \(\mu \) and a reduced dimension m, the optimal PM may have a parameterization defect very close to 1 and thus the best possible nonlinear parameterization one could ever imagine may not a priori do much better than a classical Galerkin approximation, and sometimes even worse. To the opposite, the smaller \(Q_T(\varPsi ^*)\) is (for T large), the better the parameterization. All sort of nuances are actually admissible, even when the parameterization defect is just below unity; see [32].

The parameterization defect analysis will be often completed by the evaluation of the correlation parameterization, c(t) (see (3.6)), that provides a measure of collinearity between the parameterized variable \(\varPsi (y_{\mathfrak {c}}(t))\) and the unresolved variable \(y_{\mathfrak {s}}(t)\), as time evolves. It allows thus for measuring how far from a slaving situation a given PM is on a more geometrical ground than with \(Q_T\) (Sect. 3.1). As we will see in applications, the parameterization correlation allows us, once an optimal PM has been determined, to select the dimension m of the reduced state space according to the following criterium: m should correspond to the lowest dimension of \(E_\mathfrak {c}\) for which the probability distribution function (PDF) of the corresponding parameterization angle, \(\alpha (t)=\arccos (c(t)), \) is the most skewed towards zero and the mode (i.e. the value that appears most often) of this PDF is the closest to zero. The basic idea is that one should not only parameterize properly the statistical effects of the neglected scales but also avoid to lose their phase relationships with the retained scales [132]. This is particularly important to derive closures that respect a certain phase coherence between the resolved and unresolved scales.

Although finite-time error estimates are easily accessible when PMs are used to derive surrogate low-dimensional systems in view of the optimal control of dissipative nonlinear PDEs (see e.g [26, Theorem 1 & Corollary 2]), error estimates that relate the parameterization defect to the ability of reproducing the original dynamics’s long term statistics by a surrogate system are difficult to produce for uncontrolled deterministic systems, in particular for chaotic regimes, due to the singular nature (with respect to the Lebesgue measure) of the invariant measure \(\mu \) satisfying (1.8). In the stochastic realm, this invariant measure becomes smooth for a broad class of systems and the tools of stochastic analysis make the obtention of such estimates more amenable albeit non trivial; see [21]. Nevertheless, as discussed above, considerations from ergodic theory and conditional expectations are already insightful for the deterministic systems dealt with in this article. They allow us to envision the addition of memory effects (non-Markovian terms) and/or stochastic parameterizations when a PM alone is not sufficient to provide an accurate enough closure. The addition of such ingredients are beyond the scope of this article, but are outlined in the Concluding Remarks (Sect. 7) as a natural direction to extend the present work. The latter sets up a framework for determining, via dynamically-based formulas to optimize, approximations of the Markovian terms arising in the Mori-Zwanzig formalism [34, 79]; this formalism providing a conceptual framework to study the reduction of nonlinear autonomous systems.

The structure of this article is as follows. In Sect. 2 we revisit the approximation formulas of invariant manifolds for equilibria. The leading-order approximation \(h_k\) to these manifolds is obtained as the pullback limit of the high-mode part of the solution to an auxiliary backward–forward system (Theorem 1) and explicit formulas of \(h_k\) are derived (Theorem 2). The resulting invariant manifold approximation formulas are applied to an El Niño-Southern Oscillation ODE model in the Supplementary Material, in the case of a subcritical Hopf bifurcation. In Sect. 3, we introduce the measure-theoretic framework in which our variational approach is formulated. Theorem 4 characterizes the minimizers (optimal PMs) of the parameterization defect, and Theorem 5 shows that optimal PMs relate naturally to conditional expectations. As a first application, in Sect. 3.4 the closure results of [32] concerning the low-order model atmospheric Primitive Equations of [123], are enlightened by new insights introduced in this article. Building upon the backward–forward systems of Sect. 2, we derive in Sect. 4 parametric formulas of dynamically-based parameterizations aimed at being optimized.

Applications to the closure of a low-order model of Rayleigh-Bénard convection are then discussed in Sect. 5, for which a period-doubling regime and a chaotic regime are analyzed. In Sect. 6 the approach is finally illustrated—in the context of the Kuramoto-Sivashinsky turbulence—as providing efficient closures without slaving and for cutoff scales placed well within the inertial range, keeping only the unstable modes in the reduced state space. It is shown that the variational approach introduced in this article allows for fixing the excessive backscatter transfer of energy to the low modes encountered by standard parameterizations. We conclude in Sect. 7 by outlining future directions of research.

2 Part I: Invariant Manifold Reduction Revisited

3 Approximation Formulas for Invariant Manifolds of Nonlinear ODEs

3.1 Local Invariant Manifolds for Equilibria: Validity and Motivations for Other Parameterizations

Our framework takes place with autonomous systems of ordinary differential equations (ODEs) in \(\mathbb {R}^N\) of the form:

for which the vector field F is assumed to be sufficiently smooth in the state variable Y.

Invariant manifold theory allows for the rigorous derivation of low-dimensional surrogate systems from which not only the system’s qualitative behavior near e.g. a steady state is preserved, but also quantitative features of the nonlinear dynamics are reasonably well approximated such as the solution’s amplitude or possible dominant periods. This aspect of the theory is recalled below in the Supplementary Material, for the unfamiliar reader.

To set the ideas, assuming that \(\overline{Y}\) is a steady state of the system (2.1), we rewrite the system (2.1) in terms of the perturbed variable, \(y = Y - \overline{Y}\), namely

where DF(x) denotes the Jacobian matrix of F at x.

From its definition, the nonlinear mapping, \(G:\mathbb {R}^N \rightarrow \mathbb {R}^N\), satisfies

As a consequence, G(y) admits the following expansion for y near the origin:

where

denotes a homogenous polynomial of order \(k\ge 2\). That is, \(G_k\) is the homogeneous part of lowest degree. Sometimes, \(G_k(y)\) will be used as a compact notation for \(G_k(y,\,\ldots \, , y)\).

The spectrum of A is denoted by \(\sigma (A)\), i.e.

where the \(\beta _j\)s denote the eigenvalues of A for which we have accounted for their algebraic multiplicity in the sense that if \(\lambda \) is a root of multiplicity p of the characteristic polynomial \(\chi _A\), then e.g. \(\beta _1=\lambda ,\ldots ,\beta _p=\lambda \). The corresponding generalized eigenvectors are denoted by

The index in (2.6) also accounts for an arrangement of the eigenvalues in lexicographical order, that is the eigenvalues are ordered so that their real parts decrease as the index increases, and for eigenvalues with the same real parts, they are arranged so that the imaginary parts decrease.

Taking into account this ordering, grouping the first m eigenvalues of A, and assuming

the spectrum of A is decomposed as follows

where

and

Note that due to (2.8) and the aforementioned lexicographical order, we have

This spectral decomposition implies a natural decomposition of \(\mathbb {C}^N\):

in terms of the generalized eigenspaces

This spectral decomposition of \(\mathbb {C}^N\) along with the corresponding canonical projectors \(\varPi _{\mathfrak {c}}\) and \(\varPi _{\mathfrak {s}}\) onto \(E_{\mathfrak {c}}\) and \(E_{\mathfrak {s}}\), respectively, are at the core of our dimension reduction of Eq. (2.2).

The theory of local invariant manifolds for equilibria says that the simple condition (2.12) combined with the tangency condition (2.3) about the nonlinear term G ensure the existence of a local m-dimensional invariant manifold, namely a manifold obtained as the local graph over an open ball \( \mathfrak {B}\) in \(E_{\mathfrak {c}}\) centered at the origin, that is

where \( h:E_{\mathfrak {c}} \rightarrow E_{\mathfrak {s}}\) is a \(C^1\)-smooth manifold function such that \(h(0) = 0\) and \(D h(0) = 0\), for which the following property holds:

-

(i)

any solution y(t) of Eq. (2.2) such that \(y(t_0)\) belongs to \(\mathfrak {M}\) for some \(t_0\), stays on \(\mathfrak {M}\) over an interval of time \([t_0,t_0+\alpha )\), \(\alpha >0\), i.e.

$$\begin{aligned} y(t)=y_\mathfrak {c}(t)+h(y_\mathfrak {c}(t)), \; t\in [t_0,t_0+\alpha ), \end{aligned}$$(2.16)where \(y_\mathfrak {c}(t)\) denotes the projection of y(t) onto the subspace \(E_{\mathfrak {c}}\).

Additionally, if \(\mathrm {Re}(\beta _{m+1})<0\) and \(\mathrm {Re}(\beta _{m})\ge 0\), then the local invariant manifold is the so-called local center-unstable manifold and the following property holds

-

(ii)

If there exists a trajectory \(t\mapsto y(t)\) such that \(y_\mathfrak {c}(t)\) belongs to \( \mathfrak {B}\) for all \(-\infty<t<\infty \), then the trajectory must lie on \(\mathfrak {M}\).

Property (ii) implies that an invariant set \(\varSigma \) of any type, e.g., equilibria, periodic orbits, invariant tori, must lie in \(\mathfrak {M}\) if its projection onto \(E_\mathfrak {c}\) is contained in \(\mathfrak {B}\), i.e. if \(\varPi _\mathfrak {c}\varSigma \subset \mathfrak {B}\). Property (2.16) holds then globally in time for the solutions that composed such invariant sets, and thus the knowledge of the m-dimensional variable, \(y_\mathfrak {c}(t)\), is sufficient to entirely determine any solution y(t) that belongs to such an invariant set. Furthermore, \(y_\mathfrak {c}(t)\) is obtained as the solution of the following reduced m-dimensional problem

which in turn characterizes the solution y(t) in \(\varSigma \), since the slaving relationship \(y_\mathfrak {s}(t)=h(y_\mathfrak {c}(t))\) holds for any solution y(t) that belongs to an invariant set \(\varSigma \) for which \(\varPi _\mathfrak {c}\varSigma \subset \mathfrak {B}\).

More generally, property (i) allows for \(y_\mathfrak {c}(t)\) to leave the neighborhood \(\mathfrak {B}\) for some time instance, t, and thus to violate the parameterization (2.16) for y(t), but does not exclude to have (2.16) to hold again over another interval \([t_1,t_1+\alpha _1)\) as soon as \(y(t_1)\) belongs to \(\mathfrak {M}\).

Regarding the neighborhood \(\mathfrak {B}\), the theory shows that it shrinks as the spectral gap,

gets small and the nonlinear term G deviates quickly from the tangency condition as one moves away from the origin, leaving possible an (exact) parameterization only for solutions with sufficiently small amplitude. Indeed, the existence of such a (local) exact parameterization or say in other words, of a local m-dimensional invariant manifold is subject to the following spectral gap condition:

where \(\text{ Lip }(G\vert _{\mathcal {V}})\) denotes the Lipschitz constant of the nonlinearity G, restricted to a neighborhood \(\mathcal {V}\) of the origin in \(\mathbb {C}^N\) such that \(\mathcal {V}\cap E_\mathfrak {c}=\mathfrak {B}\), and \(C >0\) is typically independent on \(\mathcal {V}\). Due to the tangency condition (2.3), the condition (2.18) always holds once \(\mathcal {V}\) (and thus \(\mathfrak {B}\)) is chosen sufficiently small. The theory of local invariant manifolds makes thus sense if solutions with sufficiently small amplitudes lie in the neighborhood \(\mathcal {V}\). This situation is encountered for many bifurcations, near criticality for which the system’s linear part has modes that become unstable, although a condition on the asymptotic stability of the origin is often required to have a local attractor that continuously unfolds from the origin as the bifurcation parameter is varied [125, Theorem 6.1]. In the context of e.g. nonlinear oscillations that bifurcate from a steady state, local invariant manifolds provide exact parameterizationsFootnote 3 of stable limit cycles near criticality in the case of a supercritical Hopf bifurcation, whereas it is the parameterization of the unstable limit cycle that emerges continuously from the steady state that is guaranteed to be exact, at least sufficiently close to criticality in the case of a subcritical Hopf bifurcation. In the Supplementary Material, we show that the approximation formulas of Sect. 2.2, allow for approximating not only the unstable “inner” unstable limit cycle but also the “outer” stable limit cycle arising in an El Niño-Southern Oscillation (ENSO) model via subcritical Hopf bifurcation.

In any event, local invariant manifolds by their local nature, although useful in many applications do not allow for an efficient dimension reduction of arbitrary or at least generic solutions. Attempts to extend the theory to a more global setting, have failed dramatically to systematically provide nonlinear parameterizations of type (2.16) for a broader set of solutions, since, in general, the same type of spectral gap condition as (2.18) is also encountered in such an endeavor. For instance, the theory of inertial manifolds is known to be conditioned on spectral gap conditions such as given by (2.18) for which the Lipschitz constant is global or taken over a neighborhood \(\mathcal {V}\) that contains the (projection onto \(E_\mathfrak {c}\) of the) global attractor.

Part II proposes a new framework to provide manifolds which are no-longer locally invariant—and thus not subject to a spectral gap condition—but still provide meaningful nonlinear parameterizations of nonlinear dynamics; these manifolds being called parameterizing manifolds (PMs). Nevertheless, the calculation of PMs departs from the theory of approximation of local invariant manifolds which we revisit in the next section, before presenting the main, new, analytical ingredients in Sect. 4.

The material presented in Sect. 2.2 below will serve to derive (approximate) parameterizations for perturbed variable taken with respect to a mean state \(\overline{Y}\), instead of a steady state; see Sect. 4.3. To set the ideas, we consider F(Y) to be given by \(L Y + B(Y,Y)\) with L linear, and B a quadratic homogeneous polynomial and symmetric, \(B(X,Y)=B(Y,X)\). The equation for the perturbed variable y then becomes

which adopting the notations of Eq. (2.2), corresponds to \(A=Ly + 2 B(y,\overline{Y})\) and \(G(y)=B(y,y) + { L \overline{Y}} + B(\overline{Y},\overline{Y})\). Since \(\overline{Y}\) is no longer a steady state, \(G(0)\ne 0\), and \({ L \overline{Y}} + B(\overline{Y},\overline{Y})\) is a time-independent forcing term. Thus the standard local invariant manifold theory for equilibria cannot be applied.

Nevertheless, as shown in Sect. 4 below, the theory underlying the derivation of approximation formulas for invariant manifolds is still relevant for their appropriate modification in view of providing approximate parameterizations in presence of forcing, once a good representation of these formulas is adopted; see Theorem 1 below for the representation of these approximation formulas (see (2.33)), and Sect. 4.3 for the modified parameterizations in presence of forcing.

3.2 Leading-Order Approximation of Invariant Manifolds

This section is devoted to the derivation of analytic formulas for the approximation of the (local) invariant manifold function h in (2.15). As shown below these formulas are easily obtained by relying only on the invariance property of \(\mathfrak {M}\), responsible for the invariance equation to be satisfied by h. We recall first the derivation of this fundamental equation; see also [88, pp. 169–171] and [42, VII. A. 1]. For the existence of the invariant/center manifolds for ODEs, we refer to [172].

In that respect, note first that by applying respectively the projectors \(\varPi _{\mathfrak {c}}\) and \(\varPi _{\mathfrak {s}}\) on both sides of Eq. (2.2) and by using that A leaves invariant the eigensubspaces \(E_{\mathfrak {c}}\) and \(E_{\mathfrak {s}}\), we obtain that Eq. (2.2) can be split as follows

with

Since \(\mathfrak {M}\) is locally invariant, any solution y(t) of Eq. (2.2) with initial datum on \(\mathfrak {M}\) stays on \(\mathfrak {M}\) as long as \(y_{\mathfrak {c}}(t)\) stays in \(\mathcal {B}\) (where \(\mathcal {B}\) is given in (2.15)), i.e.

provided that \(y_{\mathfrak {c}}(t)\) lies in \(\mathcal {B}\); see (2.16).

This implies, as long as \(y_{\mathfrak {c}}(t)\) belongs to \(\mathcal {B}\), that \(y_{\mathfrak {s}}(t)=h(y_{\mathfrak {c}}(t))\), which, when substituted into Eq. (2.20b) gives

On the other hand since h is differentiable, we have by using Eq. (2.20a),

Then (2.23) and (2.24) allow us to conclude that as long as \(y_{\mathfrak {c}}(t)\) belongs to \(\mathcal {B}\), h evaluated along the corresponding “segment” of trajectory satisfies

which can be recast into the aforementioned invariance equation to be satisfied by h, namely

This functional equation is a nonlinear system of first order PDEs that cannot be solved in closed form except in special cases. However, one can solve Eq. (2.26) approximately by representing \(h(\xi )\) as a formal power series. The solution is thus sought in terms of Taylor expansion in the \(\xi \)-variable and various numerical techniques—based, e.g., on the resolution of the multilinear Sylvester equations associated with the invariance equation—have been proposed in the literature to find the corresponding coefficients [10, 58]. Once a power series approximation has been found, a posteriori error estimates can be checked by applying for instance [19, Theorem 3, p. 5].Footnote 4

For a broad class of systems, the leading-order approximation of h can be efficiently and analytically calculated. It consists of dropping in Eq. (2.26) the terms involving nonlinear dependence on h. This operation leads to the following equation for the corresponding leading-order approximation \(h_k\) (see, e.g., [30, 88]):

where \(G_k\) is the leading-order term in the Taylor expansion of G about the origin; cf. Eq. (2.4).

Easily checkable conditions on the eigenvalues of A, allows then for guaranteeing an analytic solution to Eq. (2.27). For instance, in the case A is self-adjoint, it simply requires certain cross non-resonance conditions to be satisfied as stated in Theorem 2 below. Namely, for any given set of resolved modes for which their self-interactions (through the leading-order nonlinear term \(G_k\)) do not vanish when projected against an unresolved mode \(\varvec{e}_n\), it is required that some specific linear combinations of the corresponding eigenvalues dominate the eigenvalue associated with \(\varvec{e}_n\); see (NR) below.

In the general case, when A is not necessarily diagonal, the cross non-resonance condition is strengthened to the requirement that \(\mathrm {Re}(\beta _{m+1}) < k \, \mathrm {Re}(\beta _{m})\) which ensures that the following Lyapunov–Perron integral \(\mathfrak {I} :E_{\mathfrak {c}} \rightarrow E_{\mathfrak {s}}\),

is well defined and in fact provides a solution \(h_k\) to Eq. (2.27); see Theorem 1 below. This solutions provides actually the leading-order approximation of the (local) invariant manifold function h if we assume furthermore that \(\mathrm {Re}(\beta _{m+1}) < \min \{ 2k \mathrm {Re}(\beta _{m}), 0\}\); see Theorem 1 again.

This Lyapunov–Perron integral itself possesses a flow interpretation: it is obtained as the pullback limit constructed from the solution of the following backward–forward auxiliary system

Indeed, the solution to Eq. (2.29b) at \(s=0\) is given by

and taking the limit formally in (2.30) as \(\tau \rightarrow \infty \), leads to \(\mathfrak {I}\) given by (2.28).

The theorem below states more precisely the relationships between Eq. (2.27), the Lyapunov–Perron integral (2.28), and the solution to the backward–forward system (2.29).

Theorem 1

Consider Eq. (2.2). Let the subspaces \(E_\mathfrak {c}\) and \(E_{\mathfrak {s}}\) be given by (2.14) and let m be the dimension of \(E_{\mathfrak {c}}\). Assume (2.12) and furthermore that

where k denotes the leading order of the nonlinearity G; cf. (2.4).

Then, the Lyapunov–Perron integral

is well defined and is a solution to Eq. (2.27). Moreover, \(\mathfrak {I}\) is the pullback limit of the high-mode part of the solution to the backward–forward system (2.29):

where \(y^{(1)}_{\mathfrak {s}}[\xi ](0; -\tau )\) denotes the solution to Eq. (2.29b) at \(s=0\).

Finally, if we assume furthermore that

then \(\mathfrak {I}\) provides the leading-order approximation of the invariant manifold function h in the sense that

Proof

First, we outline how condition (2.31) combined with the fact that \(G_k\) is a homogeneous polynomial of order k, ensure that the Lyapunov–Perron integral \(\mathfrak {I}\) is well defined. In that respect, we note first that natural estimates about \(\Vert e^{t A_{\mathfrak {s}}} \varPi _{\mathfrak {s}}\Vert _{L(\mathbb {C}^N)}\) and \(\Vert e^{t A_{\mathfrak {c}}} \varPi _{\mathfrak {c}}\Vert _{L(\mathbb {C}^N)}\) hold.

This is essentially a consequence of (2.12). Indeed, any choice of real constants \(\eta _1\) and \(\eta _2\) such that

ensures the existence of a constant \(K > 0\) (depending on \(\eta _1\) and \(\eta _2\)) such that the following estimates hold:

The latter inequalities resulting essentially from the fact that \(\Vert e^{tB} \Vert _{L(\mathbb {C}^N)}\) is bounded for \(t\ge 0\) if \(\mathrm {Re} \lambda <0\) for all \(\lambda \) in \(\sigma (B)\).

Since \(G_k\) is a homogeneous polynomial of order k, there exists \(C>0\) such that

Now, by using (2.37) and (2.38), we obtain for each \(s \le 0\) that

Assumption (2.31) allows us to choose \(\eta _1\) and \(\eta _2\) in (2.36) such that \(\eta _2-k\eta _1 < 0\) which in turns leads to

We have thus shown that \(\mathfrak {I}\) is well defined.

We show next that \(\mathfrak {I}\) satisfies Eq. (2.27). To do so, for any \(\xi \) in \(E_{\mathfrak {c}}\) we introduce the following function

On one hand, by differentiating \(\psi (t) = \int _{-\infty }^t e^{(t-s)A_{\mathfrak {s}}} \varPi _{\mathfrak {s}} G_k(e^{sA_{\mathfrak {c}}}\xi ) \,\mathrm {d}s\), we obtain

On the other, using that \(\psi (t) = \mathfrak {I}(e^{tA_{\mathfrak {c}}} \xi )\), we have

It follows then that

Set \(t=0\) in the above equality, we then obtain

which is equivalent to

We have thus verified that \(\mathfrak {I}\) is a solution to Eq. (2.27).

Recall from Eq. (2.30) that the high-mode part of the solution to the backward–forward system (2.29) is given (at \(s=0\)) by:

By using the same type of estimates as in (2.39), it is easy to show that the limit, \(\lim _{\tau \rightarrow \infty } y^{(1)}_{\mathfrak {s}}[\xi ](0; -\tau )\), exists and it is equal to \(\mathfrak {I}(\xi )\).

The leading-order approximation property stated in (2.35) under the assumption (2.34) is a direct consequence of the general result [30, Corollary 7.1] proved for stochastic evolution equations in infinite dimension, driven by a multplicative white noise which thus applies to our finite dimensional and deterministic setting. Indeed, to apply [30, Corollary 7.1], we are only left with the checking of constants \(\eta _1\) and \(\eta _2\) for which [30, condition (7.1)] is verified, namely

with \(\eta _{\mathfrak {s}} = \mathrm {Re}(\beta _{m+1})\) and \(\eta _{\mathfrak {c}} = \mathrm {Re}(\beta _{m})\) here. One can readily check that this condition is guaranteed under the assumptions (2.12) and (2.34). Indeed, if \(\mathrm {Re}(\beta _{m+1})< 2k \mathrm {Re}(\beta _{m}) < 0\), we just need to choose

with sufficiently small positive \(\epsilon \); and if \(\mathrm {Re}(\beta _{m+1})< 0 < 2k \mathrm {Re}(\beta _{m})\), we just need to choose \(\eta _1= - \epsilon \) and \(\eta _2 = \mathrm {Re}(\beta _{m+1})+ \epsilon \) with again \(\epsilon \) sufficiently small. \(\square \)

The next Theorem shows, under a slightly relaxed spectral condition (see (NR) below), that if the matrix A is assumed to be diagonal, then even when the Lyapunov–Perron integral (2.32) is no longer defined, a solution \(h_k\) to Eq. (2.27) can still be derived and that this solution possesses even an explicit expression.

This expression consists of an expansion in terms of the eigenvectors \(\varvec{e}_n\) lying in the eigenspace \(E_\mathfrak {s}\), and whose coefficients are homogeneous polynomials of order k in the \(\xi \)-variable lying in eigenspace \(E_\mathfrak {c}\); the coefficients of these polynomials being themselves expressed in terms of ratios between the linear combinations of eigenvalues of A and the corresponding eigenmodes interactions through the leading-order nonlinear term \(G_k\); see (2.48). More precisely, we have

Theorem 2

Consider Eq. (2.2). Let the subspaces \(E_\mathfrak {c}\) and \(E_{\mathfrak {s}}\) be given by (2.14) and let m be the dimension of \(E_{\mathfrak {c}}\). Assume (2.12) and that the matrix A is diagonal under its eigenbasis \(\{\varvec{e}_j \in \mathbb {C}^N : j = 1,\ldots , N\}\). We denote by \(\{\varvec{e}_j^*, j=1,\ldots ,N\}\) the eigenvectors of the conjugate transpose \(A^*\).

Recalling that \(G_k\) denotes the leading-order homogeneous polynomial in the expansion of G (see (2.4)), let us assume furthermore that the eigenvalues \(\beta _j\) of A satisfies the following cross non-resonance condition:

where \(\mathcal {I}= \{1, \ldots , m\}\), and \(\langle \cdot , \cdot \rangle \) denotes the inner product on \(\mathbb {C}^N\) defined by

Then, a solution to Eq. (2.27) exists, and is given by

where \(h_{k,n}(\xi )\) is a homogeneous polynomial of degree k in the variables \(\xi _1, \ldots \), \(\xi _m\) given by

Remark 1

-

(i)

The formulas (2.47)–(2.48) for the case of real and symmetric matrices, are known; see e.g. [126, Appendix A]. The result presented in Theorem 2 extends nevertheless these formulas to cases for which A is diagonalizable in \(\mathbb {C}\), allowing in particular for an arbitrary number of complex conjugate eigenpairs. The case when the neutral/unstable modes correspond to a single complex conjugate pair has been dealt with in [126, Appendix A]. Even in this special case, our formulas are in contradistinction simpler than those given in [126, Eq. (A.1.15)]. This is due to the use of generalized eigenvectors adopted here and the method of proof of Theorem 2 which relies on the calculation of spectral elements of the homological operator \(\mathcal {L}_A\) naturally associated with Eq. (2.27); see (2.54) below.

-

(ii)

The case of eigenvalues of higher-order multiplicity is more involved. The presence of Jordan blocks makes indeed the derivation of general analytic formulas challenging but still possible by the method used in the derivation of the formulas (2.47)–(2.48). Communication about these formulas will be pursued elsewhere.

-

(iii)

By only assuming the (NR) condition, the solution to Eq. (2.27) given by the formulas (2.47)–(2.48) is not necessarily unique. This situation happens for instance when we have a k-uple \((i_1, \ldots , i_k)\) and an index n for which \(\langle G_k(\varvec{e}_{i_1}, \ldots , \varvec{e}_{i_k}), \varvec{e}_n^*\rangle = 0\) while \(\sum _{j=1}^{k} \beta _{i_j} - \beta _n = 0\). In this case, we can add to any solution \(h_k\) to Eq. (2.27) a monomial \(c x_{i_1} \cdots x_{i_k}\) with any scalar coefficient c and get another solution; see (2.63)–(2.64) below.

-

(iv)

Note that if the (NR) condition is strengthened to

$$\begin{aligned}&\text { } \forall \,\, (i_1, \ldots , i_k ) \in \mathcal {I}^k, \ n \in \{ m+1, \ldots , N\}, \text { it holds that} \nonumber \\&\Bigl (\langle G_k(\varvec{e}_{i_1}, \ldots , \varvec{e}_{i_k}), \varvec{e}_n^*\rangle \ne 0 \Bigr ) \Longrightarrow \biggl ( \sum _{j=1}^{k} \mathrm {Re}(\beta _{i_j}) - \mathrm {Re}(\beta _n) > 0 \biggr ), \end{aligned}$$(2.49)then the expression of \(h_k\) given by (2.47)–(2.48) results directly from the expression of Lyapunov–Perron integral \(\mathfrak {I}\). Indeed,

$$\begin{aligned} \mathfrak {I}(\xi )&= \int _{-\infty }^0 e^{-sA_{\mathfrak {s}}} \varPi _{\mathfrak {s}} G_k\Big (\sum _{i=1}^m e^{\beta _i s}\xi _i \varvec{e}_i \Big ) \,\mathrm {d}s\nonumber \\&= \int _{-\infty }^0 \sum _{j=m+1}^N e^{-s\beta _j} \Big \langle G_k\Big (\sum _{i=1}^m e^{\beta _i s}\xi _i \varvec{e}_i \Big ),\varvec{e}_{n} \Big \rangle \varvec{e}_{n} \,\mathrm {d}s \end{aligned}$$(2.50)i.e.

$$\begin{aligned} \mathfrak {I}(\xi )= \sum _{j=m+1}^N \sum _{(i_1, \ldots , i_k )\in \mathcal {I}^k} \, \Big \langle G_k\Big (\varvec{e}_{i_1}, \ldots , \varvec{e}_{i_k} \Big ),\varvec{e}_{n}^*\Big \rangle \xi _{i_1} \cdots \xi _{i_k} \varvec{e}_{n} \int _{-\infty }^0 e^{(\beta _{i_1} + \cdots + \beta _{i_k}- \beta _j)s} \,\mathrm {d}s, \end{aligned}$$(2.51)recalling that \(G_k(u)\) denotes \(G_k(u,\ldots ,u)\), a homogeneous polynomial or order k. The condition (2.49) ensures that the integrals in (2.51) are well-defined, leading to (2.47)–(2.48) after integration.

Of course, by assuming only (NR) instead of (2.49), the Lyapunov–Perron integral may not be well defined anymore. But as shown below, the solution to Eq. (2.27) still exists, and is given again by (2.47)–(2.48).

-

(v)

Finally, it is worth mentioning that cross non-resonance conditions of the form

$$\begin{aligned} \sum _{j=1}^{k}\beta _{i_j} - \beta _n \ne 0, \text { } \forall \,\, (i_1, \ldots , i_k ) \in \mathcal {I}^k, \ n \in \{ m+1, \ldots , N\}, \end{aligned}$$is also encountered for the study of normal forms on an invariant manifolds; see, e.g. [84, Sect. 3.2.1], [60, Thm. 2.4] and also [11, Thm. 3.1].

Proof of Theorem 2

The proof is inspired by Lie algebra techniques used in the derivation of normal forms for ODEs (see, e.g., [5, Chap. 5] and [11, Chap. 1]). We proceed in three steps.

Step 1 We seek a solution to Eq. (2.27) as a mapping \(h_{k} : E_\mathfrak {c}\rightarrow E_\mathfrak {s}\) that admits the following expansion:

Here, for each \((i_1, \ldots , i_k) \in \mathcal {I}^k\), the function \(\varPsi ^n_{i_1, \ldots , i_k}(\xi )\) is a complex-valued homogeneous polynomial of degree k given by

The task is then to determine the coefficients \(\varGamma ^n_{i_1, \ldots , i_k}\) (in \(\mathbb {C}\)) by using Eq. (2.27).

Step 2 In that respect, we introduce the following homological operator \(\mathcal {L}_{A}\):

where \(\phi :E_\mathfrak {c}\rightarrow E_\mathfrak {s}\) is a smooth function.

A key observation consists of noting that the \(E_\mathfrak {s}\)-valued function, \(\xi \mapsto \varPsi ^n_{i_1, \ldots , i_k}(\xi )\varvec{e}_n\), provides an eigenfunction of \(\mathcal {L}_{A}\) corresponding to the eigenvalue \(\sum _{j = 1}^k \beta _{i_j} - \beta _n\), in other words that the following identity holds

In order to check (2.55), we first calculate \(D \phi (\xi ) A_{\mathfrak {c}} \xi \) when \(\phi (\xi )=\varPsi ^n_{i_1, \ldots , i_k}(\xi ) \varvec{e}_n\). In that respect, denoting by \(e^n_j\) the \(j^{\mathrm {th}}\) component of \(\varvec{e}_n\), the Jacobian matrix \(D [\varPsi ^n_{i_1, \ldots , i_k}(\xi ) \varvec{e}_n]\), given by the following \(N\times m\) matrix,

possesses the following representation

where \(\varvec{B}(\xi )=(B_1(\xi ), \ldots , B_m(\xi ))\) is an m-dimensional row vector whose components are given for any j in \(\{1, \ldots , m\}\) by

where p denotes the number of indices in the set \(\{i_1,\ldots , i_k\}\) that equal j.

Thus,

which leads to

since A is assumed to be diagonal.

By noting that the product \( \varvec{B}(\xi ) \left( \beta _1 \xi _1, \ldots , \beta _m \xi _m \right) ^\mathrm {tr}\) is nothing else that \(\sum _{j = 1}^k \beta _j \xi _{i_1} \cdots \xi _{i_k},\) and recalling the expression of \(\varPsi ^n_{i_1, \ldots , i_k}(\xi )\) in (2.53), we infer from (2.60) that

On the other hand,

and recalling the definition of \(\mathcal {L}_A\) in (2.54), the identity (2.55) follows.

Step 3 By using the expansion of \(h_{k}(\xi )\) given by (2.52) in Eq. (2.27), and by using the fact that \(\varPsi ^n_{i_1, \ldots , i_k}(\xi )\varvec{e}_n\) are eigenvectors of the homological operator \(\mathcal {L}_{A}\) with eigenvalue \(\sum _{j = 1}^k \beta _{i_j} - \beta _n\) (cf. (2.55)), we get

Recalling from (2.53) that \(\varPsi ^n_{i_1, \ldots , i_k} = \varGamma ^n_{i_1, \ldots , i_k} \xi _{i_1} \cdots \xi _{i_k}\), we obtain

At the same time, since \(G_k\) is a homogeneous polynomial of order k and \(\xi = \sum _{i = 1}^m \xi _i \varvec{e}_i\), we obtain

By using the above identity in (2.63), we obtain the following formulas for the coefficients \(\varGamma ^n_{i_1, \ldots , i_k}\) in (2.53):

The formula of \(h_k\) given in (2.47)–(2.48) is thus derived by combining (2.52), (2.53) and (2.65). The proof is complete. \(\square \)

3.3 Analytic Formulas for Higher-Order Approximations

We discuss briefly here simple considerations to derive higher-order approximations of an invariant manifold. The approach relies on the use of a power series expansion of the manifold function h in the invariance equation (2.26). However, instead of keeping all the monomials at a given degree arising from this expansion, we filter out terms that carries significantly less energy compared with those that are kept. This elimination procedure relies on the assumption that the projected ODE dynamics onto the resolved subspace \(E_{\mathfrak {c}}\) contains most of the energy; an assumption which is often met in practical applications concerned with invariant manifold reduction. To present the idea in a simple setting, we consider below the case for which \(G(y) = G_2(y,y) + G_3(y,y,y)\) and a cubic approximation is sought.

When \(G = G_2 + G_3\), the leading-order approximation of h is \(h_2\) given by (2.47)–(2.48) with \(k=2\). Recall also \(h_2\) satisfies (2.27). To determine the approximation of order 3, we replace h in the invariance equation (2.26) by \(h^{\mathrm {app}}= h_2 + \psi \), where \(\psi \) represents the homogeneous cubic terms in the power expansion of h, to be determined. By identifying all the terms of order two, we recover (2.27) with \(k=2\) to be satisfied for \(h_2\), and by identifying all the terms of order three, we obtain the following equation for \(\psi \):

Notice that the LHS of (2.66) is \(\mathcal {L}_A\psi \), and that the RHS is a homogeneous cubic polynomial in the \(\xi \)-variable. If most of the energy of the ODE dynamics is contained in the low modes, one gets that the energy carried by \(y_\mathfrak {s}\) is much smaller than \(\Vert y_\mathfrak {c}\Vert ^2\). It is then reasonable to expect that the energy carried by \(h_2(\xi )\) is much smaller than \(\Vert \xi \Vert ^2\) for \(\xi = y_\mathfrak {c}(t)\) as t varies. This energy consideration implies that on the RHS of (2.66), the term \(\varPi _{\mathfrak {s}} G_3(\xi )\) dominates the other three terms provided that \(\Vert G_2(y, y)\Vert /\Vert y\Vert ^2\) is on the same order of magnitude as \(\Vert G_3(y, y,y)\Vert /\Vert y\Vert ^3\). Thus, it is reasonable to seek for a good approximation of \(\psi \) by simply solving the equation:

Note that this is exactly (2.27) with \(k=3\). In virtue of Theorem 2, the existence of \(h_3\) is guaranteed under the non-resonance condition (NR), and \(h_3\) is given by (2.47)–(2.48). We denote this cubic parameterization by

with \(\mathcal {I}= (1, \ldots , m)\). See the Supplementary Material for an application to the derivation of effective reduced models able to capture a subcritical Hopf bifurcation arising in an ENSO model.

In what precedes, we considered the case G of order 3, and determined approximations of order 3. We could nevertheless, seek for higher-order approximations of invariant manifolds, independently of the nonlinearity to be of high-order or not. For instance if \(G(y)=B(y,y)\), i.e. quadratic, we outline hereafter how recursive solutions to a hierarchy of homological equations arise naturally once we look for higher-order approximations.

In that respect, we introduce some notations. We denote by \(\text{ Poly }_k(E_\mathfrak {c};E_\mathfrak {s})\) (resp. \(\text{ Poly }_k(E_\mathfrak {c};E_\mathfrak {c})\)) the space of vectors in \(E_\mathfrak {s}\) (resp. \(E_\mathfrak {c}\)) whose components are homogeneous polynomials of order k in the \(E_\mathfrak {c}\)-variable. Given a polynomial \(\mathcal {P}\) in \(\text{ Poly }_k(E_\mathfrak {c};E_\mathfrak {s})\) or in \(\text{ Poly }_k(E_\mathfrak {c};E_\mathfrak {c})\), the symbol \(\big [ \mathcal {P}(\xi ) \big ]_k\) represents the collection of terms of order k in \(\mathcal {P}\).

By seeking a solution, \(\varPsi \), to the invariance equation Eq. (2.26) under the form,

we infer that the \(\varPsi _k\)’s satisfy the following recursive homological equations given by

where \(\varPhi _{<\ell }(\xi )\) denotes

Note that with the convention \(\sum _{2}^1 \equiv 0\), we recover the first homological equation, namely

In other words \(\varPsi _2=h_2\). We refer to [85] for a detailed account regarding the rigorous and computational aspects for the determination of solutions to Eq. (2.70). [109, Chap. 11] contains also a detailed survey of algorithms to compute numerically invariant manifolds for fast-slow systems.

4 Part II: Variational Approach to Closure

5 Optimal Parameterizing Manifolds

5.1 Variational Formulation

5.1.1 Parameterizing Manifolds (PM) and Parameterization Defect

A cornerstone of our approach presented below is the notion of parameterizing manifold (PM) that we recall below from [26, 31, 32]. Our framework takes place in finite dimension as in Part I, however here we consider more general systems of the form

where F denotes a time-independent forcing in \(\mathbb {C}^N\), A is a \(N\times N\) matrix with complex entries, while G is assumed to be a smooth nonlinearity for which we do not assume \(G(0)=0\) anymore. In practice Eq. (3.1) can be thought as derived in the perturbed variable from an original system, for which A is either the Jacobian matrix at a mean state (\(F\ne 0\)) or at a steady state (\(F=0\)), although the concepts presented below do not restrict to such situations. Hereafter we assume that A, F and G are such that classical solutions (at least \(C^1\)) exist and that the corresponding initial value problem possesses a unique solution, at least for initial data taken in an open domain \(\mathcal {D}\) of \(\mathbb {C}^N\). Dynamically-based formulas to design PMs for Eq. (3.1) are given in Sects. 4.3 and 4.4 below. For the moment we recall the definition of a PM, and introduce the notion of parameterization defect that will be used for the optimization of PMs.Footnote 5

Definition 1

Let \(T > 0\) and \(0\le t_1 <t_2 \le \infty \). Let y be a solution to Eq. (3.1), and \(\varPsi :E_{\mathfrak {c}} \rightarrow E_{\mathfrak {s}}\) be a continuous mapping satisfying the following energy inequality for all t in \([t_1,t_2)\)

where \(y_{\mathfrak {c}}(s)=\varPi _{\mathfrak {c}} y(s)\) and \(y_{\mathfrak {s}}(s)=\varPi _{\mathfrak {s}}y(s)\), with \(\varPi _\mathfrak {c}\) and \(\varPi _\mathfrak {s}\) that denote the canonical projectors onto \(E_\mathfrak {c}\) and \(E_\mathfrak {s}\), respectively (\(E_\mathfrak {c}\) and \(E_\mathfrak {s}\) being defined in (2.14)).

Then, the manifold, \( \mathfrak {M}_\varPsi \), defined as the graph of \(\varPsi \), i.e.

is a finite-horizon parameterizing manifold associated with the system of ODEs (3.1), over the time interval \([t_1,t_2)\). The time-parameter T measuring the length of the “finite-horizon” is independent on \(t_1\) and \(t_2\). If (3.2) holds for \(t_2=\infty \), then \( \mathfrak {M}_{\varPsi }\) is simply called a finite-horizon parameterizing manifold, and if it holds furthermore for all T, it is called a parameterizing manifold (PM).

Given a parameterization \(\varPsi \) of the unresolved variables (in \(E_\mathfrak {s}\)) in terms of the resolved ones (in \(E_\mathfrak {c}\)), a natural non-dimensional number, the parameterization defect, is defined as

Sometimes, the dependence on t will be secondary, and by making \(t=t_1\) in (3.4) with \(t_1\) sufficiently large so that for instance transient dynamics has been removed, we will denote \(Q_T(t,\varPsi )\) simply by \(Q_T(\varPsi )\). In any event, either \(Q_T(t,\varPsi )\) or \(Q_T(\varPsi )\) allows us to compare objectively two manifolds in their ability to parameterize the variables that lie in the subspace \(E_\mathfrak {s}\) by those that lie in the subspace \(E_{\mathfrak {c}}\). Clearly a situation corresponding to an exact slaving of the variables in \(E_\mathfrak {s}\) by those in \(E_\mathfrak {c}\) as encountered in the invariant manifold theory revisited in Part I, corresponds to \(Q_T(\varPsi )\equiv 0\) for any solution y that lies on the invariant manifold, \(\mathfrak {M}_{\varPsi }\), associated with the parameterization \(\varPsi \). If furthermore \(\mathfrak {M}_{\varPsi }\) attracts e.g. exponentially any trajectory like in the case of an inertial manifold, then \(Q_T(\varPsi )\rightarrow 0\), as \(T \rightarrow \infty \) whatever the solution y.

A standard m-dimensional Galerkin approximation based on the modes in \(E_\mathfrak {c}\) (with dim\((E_\mathfrak {c})=m\)), corresponds to \(\varPsi =0\) and thus to \(Q_T(\varPsi )\equiv 1\). Thus,

Clearly, given a parameterization \(\varPsi \), it may happen that the corresponding parameterization defect \(Q_T(\varPsi )\) fluctuates from solutions to solutions, and depends also substantially on the time interval \([t_1,t_2)\) over which the initial time t is taken to compute the integrals in (3.4), as well as the horizon T.

Nevertheless, given a set of solutions of interest, a horizon T, an interval \([t_1,t_2)\), and a set dimension of the reduced state space (i.e. dim(\(E_\mathfrak {c}\))\(=m\)), one is naturally inclined for seeking for parameterizations, \(\varPsi \), that come with the smallest parameterization defect. In other words, we aim at solving the following minimization problem

for which \(\mathcal {E}\) denotes a space of parameterizations that makes not only tractable the determination of a minimizer, but also that is not too greedy in terms of data. This latter requirement comes from important practical considerations. For instance, for high-dimensional systems (e.g. N of about few hundred thousands), one has typically y(t) available over a relatively small interval of time, and thus if e.g. \(m\sim N/100\) and the choice of \(\mathcal {E}\) is too naive, such as homogeneous polynomials in the \(E_\mathfrak {c}\)-variable, with arbitrary coefficients, one might easily face an overfitting problem in which too many coefficients have to be determined while not enough snapshots of y(s) are available over \([t, t+T]\). Section 4 below shows that the backward–forward system (2.29) provides a space \(\mathcal {E}\) of dynamically-based parameterizations that allow to bypass this difficulty as the coefficients to be determined are dependent only on a scalar parameter, the backward integration time \(\tau \) in (2.29).

These practical considerations are central in our approach but before providing their details, we consider in the next section other important theoretical questions. These questions deal with the existence (and uniqueness) of minimizers to (3.5) on one hand, and with the characterization of the closure system that is reached once (3.5) is solved, on the other. Thus, we show in Sect. 3.2 below that, under assumptions of ergodicity, reasonable for a broad class of forced-dissipative nonlinear systems such as arising in fluid dynamics, the minimization problem (3.5) possesses a unique solution, as \(T\rightarrow \infty \); see Theorem 4 and also [32, Theorem A.1 and Remark 4.1]. We call the corresponding minimizer, the optimal parameterizing manifold. We conclude finally by showing that an optimal PM, once used as a substitute of the unresolved variables, leads to a reduced system in \(E_\mathfrak {c}\) that gives the conditional expectation of the original system, i.e. the best vector field of the reduced state space resulting from averaging of the unresolved variables with respect to a probability measure conditioned on the resolved variables; see Theorem 5 below.

We emphasize that PMs have already demonstrated their utility in other applications. For instance, PMs have shown their usefulness for the effective determination of surrogate low-dimensional systems in view of the optimal control of dissipative nonlinear PDEs. In this case, rigorous error estimates show that parameterization defects arise naturally in the efficient model reduction of optimal control problems (see [26, Thm. 1 and Cor.2]) as furthermore supported by detailed numerical results (see [26, Sec. 5.5] and [22]). Speaking roughly, these estimates show that the smaller is the parameterization defect, the better a low-dimensional controller designed from the surrogate system, behaves. Error estimates that relate the parameterization defect to the ability of reproducing the original dynamics’ long term statistics by a surrogate system are difficult to produce for uncontrolled deterministic systems, in particular for chaotic regimes such as considered hereafter in Sects. 5 and 6, due to the singular nature (with respect to the Lebesgue measure) of the underlying invariant measure. In the stochastic realm, this invariant measure becomes smooth for a broad class of systems and the tools of stochastic analysis make the obtention of such estimates more amenable albeit non trivial; see [21]. Nevertheless, considerations from ergodic theory and conditional expectations are already insightful for the deterministic systems dealt with in this article as explained in Sect. 3.2 below.

5.1.2 Parameterization Correlation and Angle

Given a parameterization \(\varPsi \) that is not trivial (i.e. \(\varPsi \ne 0\)), we define the parameterization correlation as,

It provides a measure of collinearity between the parameterized variable \(\varPsi (y_{\mathfrak {c}}(t))\) and the unresolved variable \(y_{\mathfrak {s}}(t)\), as time evolves. In case of exact slaving, \(y_{\mathfrak {s}}(t)=\varPsi (y_{\mathfrak {c}}(t))\) and thus \(c(t)\equiv 1\).

The parameterization correlation, c(t), is another key quantity in our approach. Speaking roughly, we aim for not only at finding a PM with the smallest parameterization defect but also with a parameterization correlation, c(t), to be as much close to one as possible. The basic idea is to find parameterizations that approximate as much as possible an ideal slaving situation, for regimes in which slaving does not hold necessarily.

In particular, the parameterization correlation allows us, once an optimal PM has been determined, to select the dimension m of the reduced phase space according to the following criterium: m should correspond to the lowest dimension of \(E_\mathfrak {c}\) for which the probability distribution function (PDF) of the corresponding parameterization angle,

is the most skewed towards zero and the mode of this PDF (i.e. the value that appears most often) is the closest to zero; see Fig. 2.

As a rule of thumb, we aim at finding PMs, \(\varPsi \), such that:

-

1.

The parameterization defect, \(Q_T(\varPsi )\), is as small as possible, and

-

2.

The PDF of the parameterization angle \(\alpha (t)\) is skewed towards zero as much as possible, and its mode (i.e. the value that appears most often) is close to zero.

We illustrate in Sects. 3.4 and 5 below that, when breakdown of slaving principle occurs, these rules manifest as a natural framework to diagnose and select a parameterization. Nevertheless as the dimension of the original problem gets large, one may have to inspect a modewise version of \(Q_T\) (as discussed in Sect. 4.2) as well as of \(\alpha (t)\); see Sect. 6.3 for the latter. In any case, the idea is that one should not only parameterize properly the statistical effects of the neglected scales but also avoid to lose their phase relationships with the retained scales [132]. This is particularly important to derive closures that respect a certain phase coherence between the resolved and unresolved scales.

5.2 Optimal Parameterizing Manifold and Conditional Expectation

We present in this section the main results that serve as a foundational basis for the applications discussed hereafter. We denote by X the vector field associated with Eq. (3.1) i.e.

To simplify the presentation, we assume this vector field to be sufficiently smooth and dissipative on \(\mathbb {C}^{N}\), such that the corresponding flow, \(T_t\), is well-defined. We assume, furthermore, that \(T_t\) possesses an invariant probability measure \(\mu \), which is physically relevant [37, 57], in the sense that the following property holds for y in a positive Lebesgue measure set \(B(\mu )\) (of \(\mathbb {C}^N\)) and for every continuous observable \(\varphi :\mathbb {C}^N\rightarrow \mathbb {C}\)

This property assures that meaningful averages can be calculated and the statistics of the dynamical system can be investigated by the asymptotic distribution of orbits starting from Lebesgue almost every initial condition in e.g. the basin of attraction, \(B(\mu )\), of the statistical equilibrium \(\mu \).

Recall that, like all probability measures invariant under \(T_t,\) an invariant measure that satisfies (3.9) is supported by the global attractor \(\mathcal {A}\) when the latter exists; e.g. [24, Lemma 5.1]. In the case a global attractor is not known to exist, an invariant measure has its support in the non-wandering set, \(\varLambda \); see [69, Remark 1.4, p. 197].

It can be proven for e.g. Anosov flows [13], partially hyperbolic systems [1], Lorenz-like flows [12], and observed experimentally for many others [28, 33, 57, 71] that a common feature of (dissipative) chaotic systems is the transformation (under the action of the flow) of the initial Lebesgue measure into a probability measure with finer and finer scales, reaching asymptotically an invariant measure \(\mu \) of Sinai–Ruelle–Bowen (SRB) type. This measure is singular with respect to the Lebesgue measure, is supported by the local unstable manifolds contained in \(\mathcal {A}\) or in \(\varLambda \) [37, Def. 6.14], and if it has no zero Lyapunov exponents it satisfies (3.9) [177]. This latter property is often referred to as the chaotic hypothesis that, roughly speaking, expresses an extension of the ergodic hypothesis to non-Hamiltonian systems [71]. We work thus hereafter within this hypothesis and we assume furthermore that (3.9) holds for \(\varphi \) that lies in the space of integrable function, \(L_\mu ^1 (\mathbb {C}^N)\), with respect to the invariant measure \(\mu \).