Abstract

We study linear statistics of a class of determinantal processes which interpolate between Poisson and GUE/Ginibre statistics in dimension 1 or 2. These processes are obtained by performing an independent Bernoulli percolation on the particle configuration of a log-gas confined in a general potential. We show that, depending on the expected number of deleted particles, there is a universal transition for mesoscopic linear statistics. Namely, at small scales, the point process behaves according to random matrix theory, while, at large scales, it needs to be renormalized because the variance of any linear statistic diverges. The crossover is explicitly characterized as the superposition of an \(H^{1}\)- or \(H^{1/2}\)-correlated Gaussian noise, depending on the dimension, and an independent Poisson process. The proof consists of computing the limits of the cumulants of linear statistics using the asymptotics of the correlation kernel of the process.



1 Introduction and Results

1.1 Introduction

In these notes, we consider a log-gas, also known as a \(\beta \)-ensemble or one-component plasma, in dimension 1 or 2 at inverse temperature \(\beta =2\). Let \({\mathfrak {X}}= {\mathbb {R}}\) equipped with the Lebesgue measure \(d\mu = dx\) or \({\mathbb {C}}\) equipped with the area measure \(d\mu = d\mathrm {A}= \frac{r dr d\theta }{\pi }\). Let also \(V\in C^2({\mathfrak {X}})\) be a real-valued function such that, for some \(\nu >0\),

We consider the probability measure on \({\mathfrak {X}}^N\) with density \(G_N(x)=e^{-\beta {\mathscr {H}}^N_V(x)}/Z^N_V\) where the Hamiltonian is

Regardless of the dimension \(\mathrm {d}\), the condition \(\beta =2\) implies that if a configuration \((\lambda _1,\dots , \lambda _N)\) is sampled from \(G_N\), then the point process \(\Xi := \sum _{k=1}^N \delta _{\lambda _k}\) is determinantal with a correlation kernel

with respect to \(\mu \). Moreover, for all \(k\ge 0\),

where \(\{P_k \}_{k=0}^\infty \) is the sequence of orthonormal polynomials (Footnote 1) with respect to the weight \(e^{- 2N V(x)}\) on \(L^2(\mu )\). It turns out that for \(\beta =2\), the density \(G_N\) also corresponds to the joint law of the eigenvalues of the ensemble of Hermitian (or normal) matrices with weight \(e^{-2N {\text {Tr}}V(M)}\) on \({\mathfrak {X}}={\mathbb {R}}\) (or \({\mathfrak {X}}={\mathbb {C}}\)). In particular, when \(V(z)=|z|^2\), these correspond to the well-known Gaussian Unitary Ensemble (GUE) and Ginibre ensemble respectively. It is well known that if condition (1.1) holds, the thermodynamic limit of the log-gas is described by an equilibrium measure which has compact support. Moreover, if the potential \(V\in C^2({\mathfrak {X}})\), then the equilibrium measure is absolutely continuous and we let \(\varrho _V\) be its density. This implies that for any bounded test function \(f \in C({\mathfrak {X}})\), as \(N\rightarrow +\infty \),

where the expected density of states is given by \(u^N_V(x) = N^{-1}K^N_V(x,x)\). The asymptotics (1.5) follow either from potential theory for general \(\beta >0\) or from the asymptotics of the correlation kernel (1.3) when \(\beta =2\). We refer to [4, Section 2.6] for a proof of the large deviation principle in dimension 1 and to [18] for analogous results for Coulomb gases in higher dimensions and further references.

In the following, we consider the problem of describing the fluctuations of the so-called thinned log-gases in dimension \(\mathrm {d}=1,2\). In general, a thinned or incomplete point process is defined by performing a Bernoulli percolation on the configuration of the original process. That is, the incomplete log-gas, denoted by \({\widehat{\Xi }}\), is obtained by deleting each particle independently with probability \(q_N \in (0,1)\) or keeping it with probability \(p_N=1-q_N\). It turns out that the incomplete process \({\widehat{\Xi }}\) is also determinantal with correlation kernel \({\widehat{K}}^N_V(z,w) = p_NK^N_V(z,w)\); see Appendix A for a short proof. In the context of random matrix theory, this procedure was first considered by Bohigas and Pato [8, 9], who showed that it gives rise to a crossover to Poisson statistics, and the problem of rigorously analyzing this transition in the context of Coulomb gases was popularized by Deift in [23, Problem 2]. Indeed, transitions of this type are expected to arise in many different contexts in statistical physics, such as localization/delocalization phenomena, the crossover from chaotic to regular dynamics in the theory of quantum billiards, or the spectrum of certain band random matrices; see [27, 28, 50] and references therein. Although such transitions are believed to be non-universal, the model of Bohigas and Pato is arguably one of the most tractable for studying this phenomenon because it is determinantal. In a different context, the effect of thinning determinantal processes on statistical inference has recently been discussed in [40]. We emphasize that the general strategy explained in Sect. 2 applies to more general determinantal processes; see Theorem 2.2. For instance, our method applies to the Sine and the \(\infty \)-Ginibre processes, which describe the local limits of the log-gases in dimensions 1 and 2 respectively.
In fact, this paper is motivated by an analogous result recently obtained by Berggren and Duits for smooth linear statistics of the incomplete Sine and CUE processes [6]. Using the fact that these processes come from integrable operators, they fully characterized the transition for a large class of mesoscopic linear statistics and suggested that it should be universal for thinned point processes coming from random matrix theory. There are also results for the gap probabilities of the critical thinned ensembles. In [14, 15], for the Sine process, Deift et al. computed very detailed asymptotics for the crossover from the Wigner surmise to the exponential distribution, making rigorous a prediction of Dyson [26], and Charlier and Claeys obtained an analogous result for the CUE [19]. The contribution of this paper is to investigate this universality for smooth linear statistics of \(\beta \)-ensembles in dimension 1 or 2 when \(\beta =2\). Our proof relies on the determinantal structure of these models but, instead of the connection with Riemann–Hilbert problems used in previous works, we apply the cumulants' method, which appears to be very robust for studying the asymptotic fluctuations of smooth linear statistics.
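The claim that thinning a determinantal process with kernel \(K^N_V\) yields a determinantal process with kernel \(p_NK^N_V\) can be sanity-checked numerically at the level of Laplace transforms. The following is only a toy illustration under simplifying assumptions. It replaces \(K^N_V\) by a random rank-3 Hermitian projection matrix on 7 sites, which is a hypothetical finite stand-in and not the log-gas kernel. It then enumerates all configurations, deletes each point independently with probability \(q=1-p\), and compares the result with \(\det (I + pK\,\mathrm {diag}(e^{f}-1))\).

```python
import itertools

import numpy as np


def random_projection_kernel(num_sites, rank, seed=0):
    """Hermitian projection matrix of the given rank on a finite set of sites.

    Hypothetical finite-dimensional stand-in for a reproducing kernel K^N.
    """
    rng = np.random.default_rng(seed)
    a = rng.standard_normal((num_sites, rank)) + 1j * rng.standard_normal((num_sites, rank))
    u, _ = np.linalg.qr(a)  # columns of u are orthonormal
    return u @ u.conj().T


def thinned_laplace_bruteforce(K, f, p):
    """E[exp(sum of f over surviving points)] by direct enumeration.

    A projection DPP of rank r has exactly r points and P(X = S) = det K_S.
    Each point is kept with probability p, so it contributes (1 - p) + p * e^f.
    """
    rank = int(round(np.trace(K).real))
    total = 0.0
    for subset in itertools.combinations(range(K.shape[0]), rank):
        idx = list(subset)
        prob = np.linalg.det(K[np.ix_(idx, idx)]).real
        total += prob * np.prod(1.0 - p + p * np.exp(f[idx]))
    return total


def dpp_laplace(K, f):
    """E[exp(Xi(f))] = det(I + K diag(e^f - 1)) for a DPP with kernel K."""
    return np.linalg.det(np.eye(K.shape[0]) + K @ np.diag(np.expm1(f))).real


K = random_projection_kernel(num_sites=7, rank=3)
f = np.linspace(-0.5, 1.0, 7)
p = 0.6
print(thinned_laplace_bruteforce(K, f, p), dpp_laplace(p * K, f))
```

The two printed numbers agree up to rounding. This reflects the identity \(\prod _{x}(1 + p\,\varphi (x))\) for \(\varphi = e^{f}-1\), which turns the generating functional of the thinned process into that of the kernel \(pK\).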

Let us point out that based on the theory of [30], an alternative correlation kernel for the incomplete process is

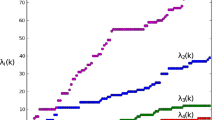

where \((J_k^N)_{k=0}^\infty \) is a sequence of i.i.d. Bernoulli random variables with expected values \({\mathbb {E}}[J_k^N]= p_N \mathbf {1}_{k<N} \). This shows that removing particles builds up randomness in the system and, when the disorder becomes sufficiently strong, the system behaves like a Poisson process rather than according to random matrix theory.
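The mixture representation with Bernoulli weights \(J_k^N\) above can also be checked numerically in a finite-dimensional toy setting. In this sketch, a random matrix U with 4 orthonormal columns in \({\mathbb {C}}^8\) is a hypothetical stand-in for the functions \(P_k e^{-NV}\), \(k<N\). The code averages the Laplace transform of the projection process with kernel \(U\,\mathrm {diag}(J)\,U^*\) over all outcomes of the Bernoulli variables, and compares the average with the Laplace transform for the kernel \(pUU^*\).

```python
import itertools

import numpy as np


def dpp_laplace(K, f):
    """E[exp(Xi(f))] = det(I + K diag(e^f - 1)) for a DPP with kernel K."""
    return np.linalg.det(np.eye(K.shape[0]) + K @ np.diag(np.expm1(f))).real


def mixture_laplace(U, f, p):
    """Average over J ~ Bernoulli(p)^n of the Laplace transform for U diag(J) U^*."""
    n_cols = U.shape[1]
    total = 0.0
    for J in itertools.product((0, 1), repeat=n_cols):
        weight = np.prod([p if j else 1.0 - p for j in J])
        K_J = U @ np.diag(J) @ U.conj().T  # random projection kernel
        total += weight * dpp_laplace(K_J, f)
    return total


rng = np.random.default_rng(1)
a = rng.standard_normal((8, 4)) + 1j * rng.standard_normal((8, 4))
U, _ = np.linalg.qr(a)  # 4 orthonormal columns in C^8
f = np.sin(np.arange(8.0))
p = 0.3
print(mixture_laplace(U, f, p), dpp_laplace(p * U @ U.conj().T, f))
```

The two values coincide up to rounding. By Sylvester's identity, \(\det (I + U\,\mathrm {diag}(J)\,U^*\Phi )\) is affine in each \(J_k\) separately. Its expectation over independent \(J_k\) is therefore its value at \(J_k = p\).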

To keep the analysis as simple as possible, we restrict ourselves to real-analytic V, although the results should be valid for more general potentials as well (especially in dimension 1, where the asymptotics of the correlation kernels have been studied in great generality). We keep track of the transition by looking at linear statistics \(\widehat{\Xi }(f) = \sum f(\lambda )\) for smooth test functions, where the sum is over the configuration of the incomplete log-gas. The random matrix regime is characterized by the property that the fluctuations of \(\widehat{\Xi }(f)\) are of order 1 and described by a universal Gaussian noise as the number of particles tends to infinity. On the other hand, in the Poisson regime, the variance of any non-trivial statistic diverges and, once properly renormalized, the point process converges in distribution to a white noise. In the remainder of this introduction, we first formulate our assumptions and main results for the fluctuations of the incomplete 2-dimensional log-gases. Then, we present analogous results in dimension 1.

In what follows, we let \({\mathcal {C}}^k_c({\mathscr {S}})\) be the set of functions with k continuous derivatives and compact support in \({\mathscr {S}}\subset {\mathfrak {X}}\) and we use the notation:

If \(\varrho _V\) is the equilibrium density function, we also denote

1.2 Main Results for 2-Dimensional Coulomb Gases

If the potential V is real-analytic and satisfies the condition (1.1), then the log-gas lives on the compact set \(\overline{{\mathscr {S}}_V} \subset {\mathbb {C}}\) which is called the droplet and the equilibrium density is given by \(\varrho _V = 2\Delta V \mathbf {1}_{{\mathscr {S}}_V}\). It is also well known that the bulk fluctuations of a two dimensional log-gas around its equilibrium configuration are described by a centered Gaussian process \(\mathrm {X}\) with correlation structure:

for any (real-valued) smooth functions f and g. Modulo constants, the RHS of formula (1.8) defines a Hilbert space, denoted \( H^{1}({\mathbb {C}})\), with norm:

Therefore the stochastic process \(\mathrm {X}\) is called a \(H^1\)-Gaussian noise. The central limit theorem (CLT) was first established for the Ginibre process by Rider and Virág [45] for \({\mathcal {C}}^1\) test functions with at most exponential growth at infinity. For general real-analytic potentials, it was proved in [3] that for any smooth function f with compact support, one has as \(N\rightarrow \infty \),

where \(f^\dagger \) is the (unique) continuous and bounded function on \({\mathbb {C}}\) such that \(f^\dagger = f\) on the droplet \(\overline{{\mathscr {S}}_V}\) and \(\Delta f^\dagger =0\) on \({\mathbb {C}}\backslash \overline{{\mathscr {S}}_V}\). In particular, when \({\text {supp}}(f) \subset {\mathscr {S}}_V \), we have \(f^\dagger = f\) on \({\mathbb {C}}\), and in this case the CLT was obtained previously in [2], which inspired part of our method. We also refer to [10, 41] for more recent proofs which hold for general \(\beta >0\). By convention, in (1.10) and below, \(\Rightarrow \) means that the convergence holds in distribution and that all moments of the random variable converge.

In order to describe the crossover from the \(H^1\)-Gaussian noise to white noise, let \(\Lambda _\eta \) be a mean-zero Poisson process with intensity \(\eta \in L^\infty ({\mathfrak {X}})\). This process is characterized by the fact that for any function \(f\in {\mathcal {C}}_c({\mathfrak {X}})\), the Laplace transform of the random variable \(\Lambda _\eta (f)\) is well-defined and given by

Theorem 1.1

Let \(\mathrm {X}\) be a \(H^1\)-Gaussian noise and \(\Lambda _{\tau \varrho _V}\) be an independent Poisson process with intensity \(\tau \varrho _V\), where \(\tau >0\), both defined on the same probability space. Let \(f \in {\mathcal {C}}^3_c({\mathscr {S}}_V)\), \(p_N = 1-q_N\), and let \(T_N = N q_N\). As \(N\rightarrow \infty \) and \(q_N \rightarrow 0\), we have

The proof of Theorem 1.1 is based on the cumulants’ method, and its strategy is explained in detail in Sect. 2. In particular, we formulate a result, Theorem 2.2, which is valid for general determinantal point processes and may be of independent interest. The details of the proof of Theorem 1.1 are given in Sect. 3.2. Our method relies on the approximations of the correlation kernel \(K^N_V\) from [2] (see Lemma 3.1 below), which restrict us to test functions supported inside the bulk. However, the result should remain true for general functions if we replace f by \(f^\dagger \) on the RHS of (1.12) and (1.14).

Theorem 1.1 can be interpreted as follows. In the regime \(Nq_N \rightarrow 0\), virtually no particles are deleted and linear statistics behave according to random matrix theory. On the other hand, in the regime \(Nq_N \rightarrow \infty \), the variance of a linear statistic diverges. So, if we renormalize the random variable \(\widehat{\Xi }(f)\), we obtain a classical CLT, and (1.13) shows that the limit is described by a white noise supported on \({\mathscr {S}}_V\) whose intensity is the equilibrium measure \(\varrho _V\). In the critical regime, when the expected number of deleted particles converges to \(\tau >0\), the limiting process is the superposition of a \(H^1\)-correlated Gaussian noise and an independent mean-zero Poisson process applied to \(-f\). Finally, by using formula (1.11), it is not difficult to check that as \(\tau \rightarrow \infty \), the random variable

so that the critical regime clearly interpolates between (1.12) and (1.13).

In fact, the crossover is more interesting at mesoscopic scales. Namely, the density of a log-gas is of order N and one can also investigate fluctuations at small scales by zooming inside the bulk of the process. If \(L_N \nearrow \infty \), \(x_0 \in {\mathscr {S}}_V\), and \(f\in {\mathcal {C}}_c({\mathbb {C}})\), we consider the test function

The regime \(L_N=N^{1/\mathrm {d}}\) is called microscopic and it was shown in [2, Proposition 7.5.1] that when \(\mathrm {d}=2\),

where the process \( \Xi ^\infty _{\rho }\) is called the \(\infty \)-Ginibre process with density \(\rho >0\). It is a determinantal process on \({\mathbb {C}}\) with correlation kernel

Based on the argument from [2], it is straightforward to verify that the incomplete process has a local limit as well:

For any \(0<p\le 1\), \( \widehat{\Xi }^{\infty }_{\varrho ; p}\) is a (translation invariant) determinantal process on \({\mathbb {C}}\) with correlation kernel \(p K^\infty _\varrho (z,w) \). This process is constructed by running an independent Bernoulli percolation with parameter p on the point configuration of the \(\infty \)-Ginibre process with density \(\rho >0\). In particular, (1.17) shows that one needs to delete a non-vanishing fraction of the N particles of the gas in order to get a local limit which is different from random matrix theory. It was proved in [44] that, as the density \(\rho \rightarrow \infty \), the fluctuations of the \(\infty \)-Ginibre process are of order 1 and described by the \(H^1\)-Gaussian noise:

for any \(f \in H^1\cap L^1({\mathbb {C}})\). Therefore it is expected that, in the mesoscopic regime, \(\text {i.e. }L_N = o(\sqrt{N})\), the asymptotic fluctuations of the linear statistic \(\Xi (f_N)\) are universal and described by \(\mathrm {X}(f)\). However, to the best of our knowledge, a proof was missing from the literature and, in Sect. 3, we show that this fact follows quite simply by combining the ideas from [44] and [2].

Theorem 1.2

Let \(x_0 \in {\mathscr {S}}_V\), \(f \in {\mathcal {C}}_c^3({\mathbb {C}})\), \(\alpha \in (0,1/2)\), and let \(f_N\) be given by formula (1.15) with \(L_N =N^\alpha \). Then, we have as \(N \rightarrow \infty \),

Using the same method, we can also describe the fluctuations of smooth mesoscopic linear statistics of an incomplete Coulomb gas.

Theorem 1.3

Let \(\mathrm {X}\) be a \(H^1\)-Gaussian noise and \(\Lambda _{\tau }\) be an independent Poisson process with constant intensity \(\tau >0\) on \({\mathbb {C}}\). Let \(x_0 \in {\mathscr {S}}_V\), \(f \in {\mathcal {C}}^3_c({\mathscr {S}}_V)\), \(\alpha \in (0,1/2)\), and let \(f_N\) be the mesoscopic test function given by formula (1.15) with \(L_N =N^\alpha \). We also let \(p_N = 1-q_N\) and \(T_N= N q_N L_N^{-2} \varrho _V(x_0)\). We have as \(N\rightarrow \infty \) and \(q_N\rightarrow 0\),

The proof of Theorem 1.3 follows the same strategy as that of Theorem 1.1, and the technical differences are explained in Sect. 3.2. This result shows that, at mesoscopic scales, the transition occurs when the mesoscopic density of deleted particles, given by the parameter \(T_N>0\), converges to a positive constant \(\tau \). In contrast to previous results, this transition appears to be non-Gaussian and, somewhat surprisingly, it can also be described in an elementary way. In dimension 1, one can obtain a crossover from GUE eigenvalues to a Poisson process by letting independent points evolve according to Dyson’s Brownian motion. This leads to a determinantal process, sometimes called the deformed GUE, whose kernel depends on the diffusion time; see [32]. This model also exhibits a transition which has been analyzed for mesoscopic linear statistics in [25], where it was proved that the critical fluctuations are Gaussian. One can also consider non-intersecting Brownian motions on a cylinder. It turns out that this point process describes the positions of free fermions confined in a harmonic trap at a temperature \(\tau >0\). It was established in [34], see also [21], that the corresponding grand canonical ensemble is determinantal with a correlation kernel of the form (1.6) with, for \(k\ge 0\),

For sufficiently small temperature, this system behaves like its ground-state, the GUE, while it behaves like a Poisson process at larger temperature. It was proved in [35] that this leads to yet another crossover where non-Gaussian fluctuations are observed at the critical temperature. However, to the author’s knowledge, in contrast to the incomplete ensembles considered here, the critical processes discovered in [35] cannot be described in simple terms like Theorem 1.5 below.

1.3 Main Results for Eigenvalues of Unitary Invariant Hermitian Random Matrices

For 1-dimensional log-gases, for general \(\beta >0\) and for a large class of potentials, Johansson established in [31] the existence of the equilibrium measure and also managed to describe the fluctuations around the equilibrium configuration. To state the result in a universal way, note that one can make an affine rescaling of the potential and assume that \({\mathscr {I}}_V \subset [-1,1] \). If V is a polynomial and \({\mathscr {I}}_V =(-1,1)\), Johansson proved that linear statistics of the process \(\Xi \) satisfy a central limit theorem:

for any \(f \in {\mathcal {C}}^2({\mathbb {R}})\) such that \(f'(x)\) grows at most polynomially as \(|x|\rightarrow \infty \). The process \(\mathrm {Y}\) is a centered Gaussian noise defined on \([-1,1]\) with covariance structure:

In (1.19), \(\mathrm {c}_k(f)\) denote the Fourier–Chebyshev coefficients of the function f:

where \((T_k)_{k=0}^\infty \) are the Chebyshev polynomials of the first kind (Footnote 2). The CLT (1.18) holds for more general potentials and for other orthogonal polynomial ensembles as well, see [43, Section 11.3] or [12, 17, 39], and it is known that the one-cut condition, i.e. the assumption that the support of the equilibrium measure is connected, is necessary. Otherwise, the asymptotic fluctuations of a generic linear statistic \(\Xi (f)\) are still of order 1 but are not Gaussian; see [13, 42, 46]. In fact, the one-cut condition is closely related to the fact that the recurrence coefficients [see formula (4.1)] which define the orthogonal polynomials \((P_k)_{k\ge 0}\) appearing in the correlation kernel (1.3) satisfy for any \(j\in {\mathbb {Z}}\),

see Remark 4.2 below. As for 2-dimensional Coulomb gases, we obtain analogous transitions for the eigenvalues of random unitary invariant Hermitian matrices.
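The Fourier–Chebyshev coefficients \(\mathrm {c}_k(f)\) are easy to compute numerically. This sketch assumes the normalization \(f = \frac{\mathrm {c}_0}{2} + \sum _{k\ge 1}\mathrm {c}_k(f)T_k\), i.e. \(\mathrm {c}_k(f) = \frac{2}{\pi }\int _0^\pi f(\cos \theta )\cos (k\theta )\,d\theta \). Under that normalization, Johansson's limiting variance for \(\beta =2\) on \([-1,1]\) reads \(\frac{1}{4}\sum _{k\ge 1} k\,\mathrm {c}_k(f)^2\). The displays (1.19) and (1.20) may use a differently scaled but equivalent convention. The integral is evaluated with the midpoint rule in \(\theta \) (Gauss–Chebyshev nodes). This rule is exact for a polynomial f of degree d whenever \(d+k<2m\). As a sanity check, \(f(x)=x\) gives 1/4. This matches \({\text {Var}}({\text {Tr}}M)=1/4\) for the GUE with weight \(e^{-2N{\text {Tr}}M^2}\), i.e. \(V(x)=x^2\), whose equilibrium measure is the semicircle law on \([-1,1]\).

```python
from math import cos, pi


def chebyshev_coefficients(f, kmax, m=256):
    """c_k(f) = (2/pi) * int_0^pi f(cos t) cos(k t) dt for k = 0..kmax.

    Midpoint rule at t_j = pi (j + 1/2) / m; exact for polynomial f of degree d when d + k < 2m.
    """
    nodes = [pi * (j + 0.5) / m for j in range(m)]
    values = [f(cos(t)) for t in nodes]
    return [2.0 / m * sum(v * cos(k * t) for v, t in zip(values, nodes))
            for k in range(kmax + 1)]


def limiting_variance_beta2(f, kmax=50):
    """(1/4) * sum_{k>=1} k c_k(f)^2, truncated at kmax (assumed normalization, see above)."""
    c = chebyshev_coefficients(f, kmax)
    return 0.25 * sum(k * c[k] ** 2 for k in range(1, kmax + 1))


print(limiting_variance_beta2(lambda x: x))       # approximately 1/4
print(limiting_variance_beta2(lambda x: x ** 2))  # approximately 1/8, since x^2 = (T_0 + T_2)/2
```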

Theorem 1.4

Let \(p_N = 1-q_N\), \(T_N = N q_N\), and suppose that the recurrence coefficients of the orthogonal polynomials \(\{P_k \}_{k=0}^\infty \) satisfy the conditions (1.21). Then, for any polynomial Q, we obtain as \(N\rightarrow \infty \) and \(q_N \rightarrow 0\),

where the Poisson process \( \Lambda _{\tau \varrho _V}\) is independent from the Gaussian process \(\mathrm {Y}\) and both are defined on \({\mathscr {I}}_V =(-1,1)\).

The proof of Theorem 1.4 is also based on the cumulants’ method and on Theorem 2.2. However, the technical details, which are explained in Sects. 4.1 and 4.2, rely on the formulation from [39] and are very different from that of the proof of Theorem 1.1.

We also obtain the counterpart of Theorem 1.4 for mesoscopic linear statistics. For any function \(f\in L^1({\mathbb {R}})\), we define its Fourier transform:

We let \(\mathrm {Z}\) be a mean-zero Gaussian process on \({\mathbb {R}}\) with correlation structure:

Since \({\text {Var}}\big [ \mathrm {Z}(f)\big ] = \Vert f\Vert _{H^{1/2}({\mathbb {R}})}\), the process \(\mathrm {Z}\) is usually called the \(H^{1/2}\)-Gaussian noise. It describes the mesoscopic fluctuations of the eigenvalues of Hermitian random matrices, see [16, 29, 38], as well as the mesoscopic fluctuations of the log-gases for general \(\beta >0\) [5], and of certain random band matrices in the appropriate regime [27, 28].

Theorem 1.5

We let \(x_0\in {\mathscr {I}}_V\), \(f\in {\mathcal {C}}^2_c({\mathbb {R}})\), \(\alpha \in (0,1)\), and \(f_N(x) = f\big (N^\alpha (x-x_0) \big )\). We also let \(p_N = 1-q_N\) and \(T_N = q_NN^{1-\alpha } \varrho _V(x_0)\). We obtain as \(N\rightarrow \infty \) and \(q_N\rightarrow 0\),

where the Poisson process \(\Lambda _{\tau }\) has constant intensity \(\tau >0\) on \({\mathbb {R}}\) and is independent of the \(H^{1/2}\)-Gaussian noise \(\mathrm {Z}\).

The proof of Theorem 1.5 is quite similar to that of Theorem 1.3. It follows the strategy explained in Sect. 2 and is based on the asymptotics for the correlation kernel \(K^N_V\) in terms of the sine-kernel; see [37]. The details rely on the method from [38] and are given in Sect. 4.3.

1.4 Overview of the Rest of the Paper

In Sect. 2, we present the strategy of the proofs of the results from Sects. 1.2 and 1.3. We begin by reviewing Soshnikov’s cumulants’ method. Then, we explain how to apply it to the incomplete ensemble \(\widehat{\Xi }\) and we obtain a general result, Theorem 2.2, which characterizes the transition from Gaussian to Poisson statistics for general determinantal point processes. The rest of the paper consists of verifying the assumptions of Theorem 2.2 for determinantal log-gases in dimensions 1 and 2. In Sect. 3, we prove Theorems 1.1–1.3 for the 2-dimensional log-gases. The proof relies on estimates for the correlation kernel (1.3) which come from the paper [2] and are collected in Appendix B. In Sect. 4, we provide the details of the proofs of Theorems 1.4 and 1.5 by relying on the methods from [39] and [38] respectively.

In the following, \(C>0\) denotes a numerical constant which changes from line to line. For any \(n\in {\mathbb {N}}\), we let for all \(\mathrm {x} \in {\mathfrak {X}}^n\),

If \((\mathrm {u}_N)\) and \((\mathrm {v}_N)\) are two sequences, we use the notation:

2 Outline of the Proof

In this section, we consider a general state space \({\mathfrak {X}}\) which is a complete separable metric space equipped with a Radon measure \(\mu \) as in [30, 47] and let \(\Xi \) be a sequence of determinantal point processes on \({\mathfrak {X}}\) with correlation kernels \(K^N\) which are reproducing:

One may think of the parameter \(N \in {\mathbb {N}}\) as the density of particles. Since it is generally the case in the context of random matrix theory, we shall also assume that the kernels \(K^N\) are continuous on \({\mathfrak {X}}\times {\mathfrak {X}}\), Hermitian symmetric, and that they define locally trace-class integral operators acting on \(L^2({\mathfrak {X}},\mu )\).

The cumulants’ method to analyze the asymptotic distribution of linear statistic of determinantal processes goes back to the work of Costin and Lebowitz [20] for count statistics of the Sine process. The general theory was developed by Soshnikov in [47,48,49] and subsequently applied to many different ensembles coming from random matrix theory, see for instance [2, 16, 17, 35, 38, 39, 44, 45].

In this section, we show how to implement the cumulants’ method to describe the asymptotic law of linear statistics of the incomplete ensemble \(\widehat{\Xi }\) with correlation kernel \(p_N K^N(z,w)\) when the density of particles \(0<p_N<1\) converges to 1 in the large N limit.

Let

and let \(\ell (\mathbf {k})= l\) denote the length of the tuple \(\mathbf {k}\). Let us denote the set of compositions of the integer \(n>0\) by

We also denote by \(n\in \mho \) the trivial composition. For any map \(\Upsilon : \mho \rightarrow {\mathbb {R}}\), for any function \(f: {\mathfrak {X}}\rightarrow {\mathbb {R}}\), and for any \(n\in {\mathbb {N}}\), we define for all \(x\in {\mathfrak {X}}^n\),

If \(\mathbf {k}\vdash n\), we let \(\displaystyle \mathrm {M}(\mathbf {k}) =\frac{n!}{k_1!\cdots k_l!}\) be the corresponding multinomial coefficient and for all integers \(n\ge 1\) and \( m \in \{0, \dots , n\}\), we define the coefficients

We will also use the notation: \(\displaystyle \delta _{k}(n) = {\left\{ \begin{array}{ll} 1 &{}\text {if}\ n=k \\ 0 &{}\text {else} \end{array}\right. }\) for any \(k\in {\mathbb {Z}}\).
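For small n, the compositions \(\mathbf {k}\vdash n\) and the multinomial coefficients \(\mathrm {M}(\mathbf {k})\) introduced above can be enumerated directly. This sketch is purely illustrative, and the function names are hypothetical. It checks two standard facts. First, there are \(2^{n-1}\) compositions of n. Second, \(\sum _{\mathbf {k}\vdash n}\mathrm {M}(\mathbf {k})\) counts the ordered set partitions of \(\{1,\dots ,n\}\), which are the Fubini numbers 1, 3, 13, 75, and so on.

```python
from math import factorial, prod


def compositions(n):
    """Yield all compositions of n: ordered tuples of positive integers summing to n.

    The case n = 0 yields the empty tuple and serves as the recursion base.
    """
    if n == 0:
        yield ()
        return
    for first in range(1, n + 1):
        for rest in compositions(n - first):
            yield (first,) + rest


def multinomial(k):
    """M(k) = n! / (k_1! ... k_l!) with n = k_1 + ... + k_l."""
    return factorial(sum(k)) // prod(factorial(part) for part in k)


for n in range(1, 5):
    comps = list(compositions(n))
    # n, number of compositions (2^(n-1)), sum of multinomial coefficients
    print(n, len(comps), sum(multinomial(k) for k in comps))
# prints one line per n: "1 1 1", "2 2 3", "3 4 13", "4 8 75"
```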

Lemma 2.1

For all \(n\in {\mathbb {N}}\), we have \(\gamma ^n_0 = \delta _{1}(n)\) and \( \gamma ^n_1 = (-1)^n\).

Proof

The coefficients (2.3) have the generating function:

In particular, setting \(q=0\), we see that \( \gamma ^n_0 = \delta _{1}(n) \). Moreover, since

we also see that \( \gamma ^n_1 = (-1)^n\) . \(\square \)

Given a test function \(f: {\mathfrak {X}}\rightarrow {\mathbb {R}}\), say locally integrable with compact support, the cumulant generating function of the random variable \(\Xi (f)\) is

It was proved by Soshnikov that under our general assumptions, the cumulants \({\text {C}}^n_{K}[f] \) characterize the law of the linear statistics \(\Xi (f)\) and that for any \(n\in {\mathbb {N}}\),

Under stronger assumptions, for instance if the kernel \(K^N\) has finite rank, this formula makes sense also for test functions which are not necessarily compactly supported. We use the convention that the variables \(x_0\) and \(x_l\) are identified in the previous integral. Since we assume that the correlation kernel \(K^N\) is reproducing, we can rewrite this formula:

where for any \(\mathbf {k}\in \mho \),

A simple observation which turns out to be very important when it comes to asymptotics is that, by Lemma 2.1, for all \(n\ge 2\),

For any \(m\in {\mathbb {N}}\), we define (Footnote 3)

These functions are constructed so that we have for all \(n,m\in {\mathbb {N}}\),

According to formula (2.4), the cumulants of the process \(\widehat{\Xi }\) with correlation kernel \({\widehat{K}}_N = p_N K^N\) are given by

Since the kernel \(K^N\) is reproducing, if we set \(q_N=1-p_N\), using the binomial formula, we obtain

We are now ready to state our general result from which Theorems 1.1, 1.3, 1.4 and 1.5 in the introduction follow.

Theorem 2.2

Let \(0<q_N<1\) be a sequence which converges to 0 as \(N\rightarrow +\infty \). Under our general assumptions above, let \(f_N\) be a sequence of functions for which the cumulants \({\text {C}}^n_{K^N}[f_N]\) are well-defined for all \(n, N \in {\mathbb {N}}\) and the following conditions hold:

1. There exists a (Radon) measure \(\eta \) on \({\mathfrak {X}}\), a function \(f\in L^p(\eta )\) for all \(p\ge 2\), and a sequence \(M_N \nearrow +\infty \) as \(N\rightarrow +\infty \) such that for all \(n\ge 1\),

$$\begin{aligned} \frac{1}{M_N} \int _{\mathfrak {X}}f_N(x)^n K^N(x,x) d\mu (x) \simeq \int _{\mathfrak {X}}f(x)^n d\eta (x). \end{aligned}$$(2.11)

2. For all \(n\ge 2\) and all \(m\ge 1\), as \(N\rightarrow +\infty \),

$$\begin{aligned} \left| \underset{x_{0} =x_n}{\int _{{\mathfrak {X}}^n}} \Upsilon ^n_m[f_N](x) \prod _{1\le j\le n} K^N(x_j, x_{j-1}) d\mu ^n(x) \right| = o\left( q_N^{-1} \vee M_N \right) . \end{aligned}$$(2.12)

3. There exists \(\sigma >0\) such that for all \(n\in {\mathbb {N}}\),

$$\begin{aligned} \lim _{N\rightarrow \infty } {\text {C}}^n_{K^N}[f_N] = {\left\{ \begin{array}{ll} \sigma ^2 &{}\text {if } n=2 \\ 0 &{}\text {if } n>2 \end{array}\right. }. \end{aligned}$$(2.13)

Then, depending on the parameter \(T_N = q_N M_N >0\), we distinguish three different asymptotic regimes for the linear statistic \(\widehat{\Xi }(f_N)\) of the thinned point process with density \(p_N=1-q_N\):

(i) If \(T_N \rightarrow 0\) as \(N\rightarrow +\infty \),

$$\begin{aligned} \widehat{\Xi }(f_N) - {\mathbb {E}}\big [\widehat{\Xi }(f_N) \big ] \Rightarrow {\mathcal {N}}(0,\sigma ^2) . \end{aligned}$$

(ii) If \(T_N \rightarrow \tau \) with \(\tau >0\) as \(N\rightarrow +\infty \),

$$\begin{aligned} \widehat{\Xi }(f_N) - {\mathbb {E}}\big [\widehat{\Xi }(f_N) \big ] \Rightarrow \mathrm {X} + \Lambda _{\tau \eta }(-f), \end{aligned}$$where \(\mathrm {X} \sim {\mathcal {N}}(0,\sigma ^2)\) and \(\Lambda _{\tau \eta }\) is a mean-zero Poisson process on \({\mathfrak {X}}\) with intensity \(\tau \eta \), independent of \(\mathrm {X}\).

(iii) If \(T_N \rightarrow +\infty \) as \(N\rightarrow +\infty \),

$$\begin{aligned} \frac{\widehat{\Xi }(f_N) - {\mathbb {E}}\big [\widehat{\Xi }(f_N) \big ]}{\sqrt{T_N}} \Rightarrow {\mathcal {N}}\Big (0, \int f(x)^2 d\eta (x) \Big ) . \end{aligned}$$

Before giving our proof of Theorem 2.2, let us make two remarks about the Assumptions (2.11)–(2.13).

Remark 2.1

For any function \(g:{\mathfrak {X}}\rightarrow {\mathbb {R}}_+\), we have \(\displaystyle {\mathbb {E}}[\Xi (g)] = \int _{\mathfrak {X}}g(x) K^N(x,x) d\mu (x)\), so that one can interpret (2.11) as a condition about the mean of the point process \(\Xi \). In contrast, (2.12) can be seen as a condition about the fluctuations of the incomplete point process. We also implicitly assume that for \(n=2\), the RHS of (2.11) is positive, so that the measure \(\eta \) is non-trivial and puts mass on the support of f. Then the random variables \({\mathcal {N}}\big (0, \int f(x)^2 d\eta (x) \big )\) and \(\Lambda _{\tau \eta }(f)\) are non-zero for any \(\tau >0\). In order to handle mesoscopic linear statistics, we have allowed our test functions to depend on the parameter N. However, for simplicity, one can think of the case where \(f_N = f\) is a smooth and compactly supported test function.

Remark 2.2

Instead of (2.13), we could assume that the cumulants of the linear statistics \(\Xi (f_N)\) converge to that of a random variable \(\mathrm {X}\) which is not necessarily Gaussian. Then, the conclusion (ii) of Theorem 2.2 remains true and we obtain a crossover from a non-Gaussian process to a Poisson process. For instance, this more general situation arises when considering linear statistics of 1-dimensional log-gases in the multi-cut regime.

Proof

Observe that it follows from formula (2.10) and the condition (2.12) that the cumulants of the linear statistic \(\widehat{\Xi }(f_N)\) satisfy for all \(n\ge 2\) as \(N\rightarrow +\infty \),

where \(T_N = q_N M_N\). Then, using the condition (2.11), we obtain for any \(n\ge 2\), as \(N\rightarrow +\infty \),

Let us observe that in the previous sum, regardless of the regime we consider, since \(q_N\rightarrow 0\) as \(N\rightarrow +\infty \), only the term \(m=0\) is asymptotically relevant. For instance, if we assume that \(T_N = \tau +o(1)\) with \(\tau \ge 0\), this implies that

On the one hand by Lemma 2.1, since \(\gamma ^n_1 = (-1)^n\) and using the condition (2.13), we obtain for any \(n\ge 2\)

In the regime (i), which corresponds to \(\tau =0\), this shows that the linear statistic \(\widehat{\Xi }(f_N)\), once centered, converges in distribution (as well as in the sense of moments) to a Gaussian random variable with variance \(\sigma ^2\). Let us observe that by formula (1.11), if \(\tau >0\), the second term on the RHS of (2.15) corresponds to the \(n^{\mathrm{th}}\) cumulant of the random variable \(\Lambda _{\tau \eta }(-f)\). This proves the claim in the regime (ii).

On the other hand, in the regime (iii) where \(T_N\rightarrow +\infty \), we see from (2.14) that the variance \({\text {C}}^2_{{\widehat{K}}^N}[f_N]\) diverges as \(N\rightarrow +\infty \). Thus, in order to obtain a non-trivial limit, we need to renormalize the linear statistic \(\widehat{\Xi }(f_N)\). Namely, we consider instead the test function \(g_N = f_N /\sqrt{T_N}\), and it follows from (2.14) that for all \(n\ge 2\), as \(N\rightarrow +\infty \),

These asymptotics show that in the regime (iii), \(\frac{\widehat{\Xi }(f_N) - {\mathbb {E}}\big [\widehat{\Xi }(f_N) \big ]}{\sqrt{T_N}}\) converges in distribution (as well as in the sense of moments) to a centered Gaussian random variable with variance \(\int _{\mathfrak {X}}f(x)^{2} d\eta (x)\). \(\square \)
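The mechanism behind the three regimes is already visible at the level of the variance. If each point of a determinantal process with Hermitian kernel \(K\) is kept independently with probability \(p = 1-q\), the law of total variance gives \(\mathrm{Var}\,\widehat{\Xi }(f) = p^2\,\mathrm{Var}\,\Xi (f) + pq\,{\mathbb {E}}[\Xi (f^2)]\). The first term is the rescaled random-matrix variance; the second is a Poisson-type term proportional to \(q\) times the expected number of points, which dominates once that product is large. The sketch below checks this identity against the kernel formula \(\mathrm{Var}\,\Xi (f) = \sum _x f(x)^2K(x,x) - \sum _{x,y} f(x)f(y)K(x,y)^2\) applied to the thinned kernel \(pK\). The toy rank-r projection kernel on a finite set, the test function and all function names are illustrative assumptions, not objects from the paper.

```python
import numpy as np

def toy_projection_kernel(M: int, r: int, seed: int = 0) -> np.ndarray:
    """Hypothetical toy kernel: a rank-r orthogonal projection on R^M, i.e. a
    determinantal process with exactly r points on the finite set {0, ..., M-1}."""
    rng = np.random.default_rng(seed)
    basis, _ = np.linalg.qr(rng.standard_normal((M, r)))  # M x r, orthonormal columns
    return basis @ basis.T

def linear_stat_variance(K: np.ndarray, f: np.ndarray) -> float:
    """Variance of Xi(f) = sum_i f(x_i) for the determinantal process with a real
    symmetric kernel 0 <= K <= I: sum_x f(x)^2 K(x,x) - sum_{x,y} f(x) f(y) K(x,y)^2."""
    return float(f**2 @ np.diag(K) - f @ (K**2) @ f)

M, r = 80, 30
K = toy_projection_kernel(M, r)
f = np.sin(np.linspace(0.0, 4.0, M))

for q in (0.0, 0.1, 0.5, 0.9):
    p = 1.0 - q                                        # retention probability
    thinned = linear_stat_variance(p * K, f)           # the thinned process has kernel p*K
    rmt_part = p**2 * linear_stat_variance(K, f)       # rescaled variance of the full process
    poisson_part = p * q * float(f**2 @ np.diag(K))    # p q E[Xi(f^2)]
    print(f"q={q:.1f}: {thinned:.6f} = {rmt_part:.6f} + {poisson_part:.6f}")
```

The identity itself is elementary; what Theorem 2.2 adds is the asymptotic control of all higher cumulants.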

3 Transition for Coulomb Gases in Two Dimensions

3.1 Asymptotics of the Correlation Kernel

In this section, we begin by reviewing the basics of the theory of eigenvalues of random normal matrices developed by Ameur, Hedenmalm and Makarov. In particular, we are interested in the properties of the correlation kernel (1.3) in the bulk of the gas. It has been established in [2] that, if the potential V is real-analytic, the equilibrium measure is \(\varrho _V = 2\Delta V \mathbf {1}_{{\mathscr {S}}_V}\) and the droplet \(\overline{{\mathscr {S}}_V}\) is a compact set with a nice boundary. Moreover, in order to compute the asymptotics of the cumulants of a smooth linear statistic, instead of working with the correlation kernel \(K^N_V\), one can use the so-called approximate Bergman kernel:

The functions \(b_0(z,w)\), \(b_1(z,w)\) and \(\Phi (z,w)\) are the (unique) bi-holomorphic functions defined in a neighborhood in \({\mathbb {C}}^2\) of the set \(\big \{ (z ,{\overline{z}}) : z\in {\mathscr {S}}_V \big \}\) such that \(b_0(z,{\overline{z}}) = 2 \Delta V(z)\), \(b_1(z,{\overline{z}}) = \frac{1}{2} \Delta \log ( \Delta V)(z)\), and \(\Phi (z,{\overline{z}}) = V(z)\).

Lemma 3.1

(Lemma 1.2 in [2], proved in [1, 7]) For any \(x_0 \in {\mathscr {S}}_V \), there exist \(\epsilon _0>0\) and \(C_0>0\) so that when the dimension N is sufficiently large, we have for all \(z, w \in {\mathbb {D}}(x_0 , \epsilon _0)\),

Moreover, at sufficiently small mesoscopic scale, up to a gauge transform, the asymptotics of the approximate Bergman kernel \(B^N_V\) is universal.

Lemma 3.2

Let \(\kappa >0\) and \(\epsilon _N= \kappa N^{-1/2}\log N \) for all \(N\in {\mathbb {N}}\). For any \(x_0 \in {\mathscr {S}}_V\), there exist \(\varepsilon _0>0\) and a function \({\mathfrak {h}}: {\mathbb {D}}(0,\varepsilon _0) \rightarrow {\mathbb {R}}\) such that if the parameter N is sufficiently large, the function

satisfies

uniformly for all \(u, v \in {\mathbb {D}}(0, \epsilon _N)\), where \(K^\infty _{N \varrho _V(x_0)}\) is the \(\infty \)-Ginibre kernel with density \(N \varrho _V(x_0)= 2N \Delta V(x_0)\).

A key ingredient in the paper [2], as well as in [44], is to reduce the domain of integration in formula (2.5), using the exponential off-diagonal decay of the correlation kernels \(K_V^N\), to a set where we can use the asymptotics (3.4); see the next lemma. For completeness, the proofs of Lemmas 3.2 and 3.3 are given in Appendix B.

Lemma 3.3

Let \(n\in {\mathbb {N}}\) and \(\epsilon _N = \kappa N^{-1/2} \log N\) for some constant \(\kappa >0\) which is sufficiently large compared to n. We let

Let \({\mathscr {S}}\) be a compact subset of \({\mathscr {S}}_V\), \(N_0\in {\mathbb {N}}\), and \(F_N : {\mathbb {C}}^{n+1} \rightarrow {\mathbb {R}}\) be a sequence of continuous functions such that

We have

Remark 3.1

Recall that \(K^\infty _\rho \), (1.16), denotes the correlation kernel of the \(\infty \)-Ginibre process with density \(\rho >0\). Using the fact that for all \(z, w\in {\mathbb {C}}\),

it is easy to obtain the counterpart of Lemma 3.3 for the \(\infty \)-Ginibre kernel, see Lemma 4.3.
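The \(\infty \)-Ginibre identities invoked here, and the reproducing property behind Lemma 3.5, can be checked numerically. The sketch below assumes the normalization \(K^\infty _\rho (z,w) = \rho \, e^{\rho z{\overline{w}} - \rho |z|^2/2 - \rho |w|^2/2}\) with respect to \(d\mathrm {A}= dx\,dy/\pi \); this is our assumption, and (1.16) may differ by a gauge factor \(e^{i(h(z)-h(w))}\), which changes neither \(|K^\infty _\rho |\) nor the projection property. It checks three things: the Gaussian off-diagonal decay \(|K^\infty _\rho (z,w)| = \rho e^{-\rho |z-w|^2/2}\), the scaling \(K^\infty _\rho (z,w) = \rho K^\infty _1(\sqrt{\rho }z, \sqrt{\rho }w)\), and the identity \(\int K^\infty _\rho (z,u)K^\infty _\rho (u,w)\,d\mathrm {A}(u) = K^\infty _\rho (z,w)\), approximated by a Riemann sum.

```python
import numpy as np

def ginibre_kernel(z, w, rho):
    # Assumed normalization: K_rho(z, w) = rho * exp(rho*z*conj(w) - rho*|z|^2/2 - rho*|w|^2/2)
    # with respect to dA = dx dy / pi, so that K_rho(z, z) = rho (the density).
    return rho * np.exp(rho * z * np.conj(w) - rho * np.abs(z)**2 / 2 - rho * np.abs(w)**2 / 2)

rho = 2.0
z, w = 0.3 + 0.2j, -0.1 + 0.4j

# 1) Off-diagonal Gaussian decay: |K_rho(z, w)| = rho * exp(-rho * |z - w|^2 / 2).
decay_gap = abs(abs(ginibre_kernel(z, w, rho)) - rho * np.exp(-rho * abs(z - w)**2 / 2))

# 2) Scaling: K_rho(z, w) = rho * K_1(sqrt(rho) z, sqrt(rho) w).
s = np.sqrt(rho)
scaling_gap = abs(ginibre_kernel(z, w, rho) - rho * ginibre_kernel(s * z, s * w, 1.0))

# 3) Projection (reproducing) property, as a Riemann sum on a square grid;
#    the integrand decays like exp(-rho |u|^2), so the box [-6, 6]^2 suffices.
R, n = 6.0, 601
xs = np.linspace(-R, R, n)
h = xs[1] - xs[0]
X, Y = np.meshgrid(xs, xs)
U = X + 1j * Y
integral = (ginibre_kernel(z, U, rho) * ginibre_kernel(U, w, rho)).sum() * h**2 / np.pi
reproducing_gap = abs(integral - ginibre_kernel(z, w, rho))

print(decay_gap, scaling_gap, reproducing_gap)  # all three should be close to 0
```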

3.2 Proof of Theorem 1.1

In this section, we show how to apply Theorem 2.2 to Coulomb gases in the global regime by relying on the asymptotics from Sect. 3.1 for the correlation kernel \(K^N_V\). First, observe that with \(f_N = f\) and \(M_N = N\), Assumption (2.11) follows immediately from the law of large numbers (1.5). Then the measure \(d\eta = \varrho _V d\mu \) is absolutely continuous with respect to \(\mu \) with compact support. Moreover, if \(f\in {\mathcal {C}}_c^3({\mathscr {S}}_V)\), then the conditions (2.13) are well known from [2, Theorem 4.4] with \(\sigma ^2 = {\mathbb {E}}[\mathrm {X}(f)^2]\) according to formula (1.8). So, our main technical challenge is to obtain the estimates (2.12) for a large class of test functions. The first step of the proof is the following approximation.

Proposition 3.4

Let \({\mathcal {K}}\subset {\mathbb {C}}\) be a compact set, \(f\in {\mathcal {C}}^3_c({\mathcal {K}})\) and let \(\Upsilon : \mho \rightarrow {\mathbb {R}}\) be any map such that \(\sum _{\mathbf {k}\vdash n} \Upsilon (\mathbf {k}) = 0\) for all \(n\ge 2\). Let \(L_N \) be an increasing sequence such that \(L_N^{-1} (\log N)^4 = o(1)\) and \(L_N = o \big (\sqrt{N}/(\log N)^{3}\big )\) as \(N\rightarrow +\infty \). We also denote by \(H^n(\lambda ; \mathrm {w})\) the second order Taylor polynomial at 0 of the function \(\mathrm {w}\in {\mathbb {C}}^n \mapsto \Upsilon ^{n+1}[f](\lambda , \lambda + \mathrm {w})\). Fix \(x_0 \in {\mathscr {S}}_V\) and let \(f_N(z)=f(L_N(z- x_0))\) as in (1.15). Then, we have for any \(n\ge 1\), as \(N\rightarrow +\infty \),

where the density is given by \(\eta _N(\lambda ) = N L_N^{-2} \varrho _V(x_0+ \lambda /L_N)\).

Remark 3.2

Observe that under the assumptions of Proposition 3.4, we have \(\eta _N(\lambda ) \rightarrow +\infty \) as \(N\rightarrow +\infty \) uniformly for all \(\lambda \in {\mathcal {K}}\). Moreover, it follows from the proof below that in the global regime where \(L_N =1\) and \(x_0 = 0\), provided that \({\mathcal {K}}\subset {\mathscr {S}}_V\), the estimates (3.9) remain valid with an extra error. Namely, we obtain for all \(n\ge 1\),

Proof

We let \(F_N = \Upsilon ^{n+1}[f_N]\) and

Since \(x_0\in {\mathscr {S}}_V\), there exists a compact set \({\mathscr {S}}\subset {\mathscr {S}}_V\) so that \({\text {supp}}(f_N) \subseteq {\mathscr {S}}\) when the parameter N is sufficiently large. Then, according to formula (2.2), the function \(F_N\) satisfies the Assumption (3.6). Thus, by Lemma 3.3, we obtain as \(N\rightarrow +\infty \)

By (3.5), the set

and we can apply Lemma 3.1 to replace the kernels \(K^N_V(z_j,z_{j+1})\) in formula (3.11). Namely, if \(z_{n+1} = z_0 \) and \(\mathrm {z}\in {\mathscr {A}}(z_0; \epsilon )\), then

where \(S_N = \sup \big \{ |B^N_V(z,w)| : z, w \in {\mathbb {D}}(z_0, n \epsilon _N) , z_0 \in {\mathscr {S}}\big \} \). By Lemma 3.2, we have for all \(u,v \in {\mathbb {D}}(0, n\epsilon _N) \),

and, by formula (3.8), this implies that \(S_N \le C N\). If we combine these estimates with formula (3.11), since the functions \(F_N\) are uniformly bounded, we obtain

where \(|{\mathscr {A}}|\) denotes the Lebesgue measure of the set \({\mathscr {A}}\). By definition, \(\epsilon _N = \kappa N^{-1/2} \log N\) so that \( \big | {\mathscr {A}}(z_0;\epsilon _N) \big | \le C N^{-n} (\log N)^{2n}\) for all \(z_0\in {\mathbb {C}}\). Thus, the previous error term converges to 0 like \((\log N)^{2n}/ N\). Hence, if we make the change of variables \(\mathrm {z}= z_0 +\mathrm {u}\) and the appropriate gauge transform in the previous integral, according to formula (3.3), we obtain

Note that in formula (3.12), the integral is over a small subset of the surface \(\{ \mathrm {u}\in {\mathbb {C}}^{n+2} : u_0 = u_{n+1} =0\} \) and we denote \(F_N(z_0 +\mathrm {u}) = F_N(z_0, z_0+u_1,\dots , z_0+u_n)\). Then, applying Lemma 3.2 to replace the kernel \( {\widetilde{B}}^N_{V, z_0}\) by \(K^\infty _{N \varrho _V(z_0)}\) in formula (3.12), we obtain

where \(\displaystyle \chi _N(z_0,\mathrm {u}) = 1 + \underset{N\rightarrow \infty }{O}\big ( (\log N)^{2}\epsilon _N \big )\) uniformly for all \(z_0\in {\mathscr {S}}\) and all \(\mathrm {u}\in {\mathscr {A}}(0; \epsilon _N)\).

Let \(F=\Upsilon ^{n+1}[f]\), \(\delta _N = \epsilon _N L_N\) and \(\eta _N(\lambda ) =N L_N^{-2} \varrho _V(x_0 + \lambda /L_N) \). By definition, \(F_N(z_0 +\mathrm {u}) = F\big (L_N(z_0- x_0 +\mathrm {u})\big ) \) and we can make the change of variables \(\lambda = L_N(z_0-x_0)\) and \(\mathrm {w}= L_N \mathrm {u}\) to get rid of the scale \(L_N\) and \(x_0\) in the previous integral. Using the obvious scaling property of the \(\infty \)-Ginibre kernel, (1.16), we obtain

where \(\displaystyle {\widetilde{\chi }}_N(\lambda ,\mathrm {w})= 1 + \underset{N\rightarrow \infty }{O}\big ( (\log N)^{2}\epsilon _N \big )\) uniformly for all \(\lambda \in {\mathcal {K}}\) and for all \(\mathrm {w}\in {\mathscr {A}}(0; \delta _N)\). Here we used that the test function f is supported in the set \({\mathcal {K}}\). The condition \(\sum _{\mathbf {k}\vdash n+1} \Upsilon (\mathbf {k}) = 0\) implies that \(F(\lambda +0)=0\) for all \(\lambda \in {\mathbb {C}}\) so that for all \(\mathrm {w}\in {\mathscr {A}}(0; \delta _N)\),

Moreover, by formula (3.8), we have for any \(n \in {\mathbb {N}}\),

where \(v_j = w_j - w_{j-1}\) for all \(j=1,\dots , n\). Hence, we see that

and, since \(\eta _N(\lambda ) \le C N L_N^{-2}\) for all \(\lambda \in {\mathcal {K}}\), we deduce from formula (3.13) that

Recall that \(\delta _N= L_N \epsilon _N\) and \(\epsilon _N=\kappa N^{-1/2}\log N\), so that the error term in (3.15) is of order \((\log N)^4 L_N^{-1}\). Moreover, if \(L_N = o\big ( \sqrt{N}/\log N \big )\), a Taylor approximation shows that for any \(\mathrm {w}\in {\mathscr {A}}(0; \delta _N) \),

Using the estimate (3.14) once more, by formula (3.15), this implies that

By Lemma 4.3, the leading term in formula (3.16) has the same limit (up to an arbitrarily small error term) as

and, since \(N L_N^{-2} \delta _N^3 \rightarrow 0\) when \(L_N = o \big ( \sqrt{N}/(\log N)^3 \big )\), this completes the proof. \(\square \)
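For the reader's convenience, here is the elementary computation behind the last scale condition, using only \(\delta _N = L_N\epsilon _N\) and \(\epsilon _N = \kappa N^{-1/2}\log N\):

\[ N L_N^{-2}\delta _N^3 = N L_N^{-2}\, L_N^{3}\,\kappa ^3 N^{-3/2}(\log N)^3 = \kappa ^3\, \frac{L_N (\log N)^3}{\sqrt{N}}, \]

which tends to 0 exactly when \(L_N = o\big (\sqrt{N}/(\log N)^3\big )\), while the first error term, of order \((\log N)^4 L_N^{-1}\), vanishes by the other assumption \(L_N^{-1}(\log N)^4 = o(1)\) of Proposition 3.4.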

Since the function \(\mathrm {w}\mapsto H^n(\lambda ; \mathrm {w})\) is a multivariate polynomial of degree 2, the leading term in the asymptotics (3.9) can be computed explicitly using the reproducing property of the \(\infty \)-Ginibre kernel; see for instance [44]. For any \(\rho >0\), the function \((z,w) \mapsto e^{\rho z {\overline{w}}}\) is the reproducing kernel for the Bergman space with weight \(\rho e^{-\rho |z|^2/2}\) on \({\mathbb {C}}\). This implies that for any \(w_1, w_2 \in {\mathbb {C}}\) and for all integers \(k\ge 0\),

As a basic application of these identities, we obtain the following Lemma.

Lemma 3.5

Let \(n \ge 1\) and \(\rho >0\). For any polynomial \(H(\mathrm {w})\) of degree at most 2 in the variables \(w_1,\dots , w_n, \overline{w_1}, \dots , \overline{w_n}\) such that \(H(0)=0\), we have

Under the assumptions of Proposition 3.4, since \(\eta _N(\lambda ) \rightarrow +\infty \) as \(N\rightarrow +\infty \) uniformly for all \(\lambda \in {\mathcal {K}}\), we deduce from Lemma 3.5 that for any test function \(f\in {\mathcal {C}}^3_c({\mathcal {K}})\), we have for all integers \(n\ge 1\) and \(m\ge 0\), as \(N\rightarrow +\infty \),

Here we used that according to formulae (2.7) and (2.9), we have for any \(m \ge 0\) and \(n\ge 1\),

In the macroscopic regime (\(L_N=1\), \(x_0=0\) and \({\mathcal {K}}= {\text {supp}}(f) \subset {\mathscr {S}}_V\)), by Remark 3.2, this also shows that for any \(n,m \ge 1\),

This shows that the estimate (2.12) with \(M_N = N\) holds for any sequence \(q_N \searrow 0\) as \(N\rightarrow +\infty \). By Theorem 2.2, this completes the proof of Theorem 1.1.

3.3 Mesoscopic Fluctuations for 2-Dimensional Coulomb Gases and the Proofs of Theorems 1.2 and 1.3

In the mesoscopic regime, we claim that the asymptotics (3.18) with \(m=0\) imply the Central Limit Theorem 1.2. Indeed, the fact that the cumulants of order \(n\ge 3\) vanish in the large N limit is a consequence of the following combinatorial lemma.

Lemma 3.6

([44], Lemma 9) For any \(n\ge 1\), let

We have \(\displaystyle {\mathscr {Y}}_n = {\left\{ \begin{array}{ll} 1 &{}\text {if } n=2 \\ 0 &{}\text {else} \end{array}\right. }. \)

Proof of Theorem 1.2

Let \(\lambda \in {\mathbb {C}}\) and \(\varvec{\lambda }=(\lambda ,\dots , \lambda )\in {\mathbb {C}}^{n+1}\). According to formula (2.2), an elementary computation shows that for any \(2 \le r < s\le n+1\),

and

Since, by integration by parts,

we deduce from formulae (3.19) and (3.20) that

When \( L_N =N^\alpha \) and \(0<\alpha <1/2\), formulae (2.5) and (3.18) with \(m=0\) imply that for any \(n\ge 1\),

By Lemma 3.6, this proves that for any test function \(f\in {\mathcal {C}}^3_0({\mathbb {C}})\) and any \(n\ge 2\),

This shows that the centered mesoscopic linear statistic \(\Xi (f_N) -{\mathbb {E}}\big [\Xi (f_N) \big ] \) converges in distribution as \(N\rightarrow \infty \) to the mean-zero Gaussian random variable \(\mathrm {X}(f)\). \(\square \)

We are now ready to finish the proof of Theorem 1.3. By Lemma 3.1, for any bounded function f with compact support, we have for any \(n\ge 1\),

Here we used that the potential V is smooth and \(\varrho _V = 2 \Delta V >0\) on a small neighborhood of the point \(x_0 \in {\mathscr {S}}_V\). This implies Assumption (2.11) with \(M_N = N L_N^{-2} \varrho _V(x_0) = N^{1-2\alpha } \varrho _V(x_0)\); since the parameter \(\alpha <1/2\), \(M_N \nearrow +\infty \) as \(N\rightarrow +\infty \). As we already pointed out, the asymptotics (3.18) yield Assumption (2.12) with an error which is O(1). Finally, Assumption (2.13) was proved just above (see (3.22)). So, by Theorem 2.2, this completes the proof of Theorem 1.3.
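The order of magnitude of \(M_N\) can be traced to a change of variables. As a heuristic sketch, assume that the one-point function \(K^N_V(z,z)\) may be replaced near \(x_0\) by its leading-order approximation \(N\varrho _V(z)\), and that \(\varrho _V\) is continuous at \(x_0\). Then, with \(\lambda = L_N(z-x_0)\), \(L_N = N^\alpha \) and \(d\mathrm {A}(z) = L_N^{-2}\,d\mathrm {A}(\lambda )\),

\[ N\int _{{\mathbb {C}}} f\big (L_N(z-x_0)\big )\,\varrho _V(z)\, d\mathrm {A}(z) = N L_N^{-2}\int _{{\mathbb {C}}} f(\lambda )\,\varrho _V\big (x_0+\lambda /L_N\big )\, d\mathrm {A}(\lambda ) = N^{1-2\alpha }\varrho _V(x_0)\Big (\int _{{\mathbb {C}}} f\, d\mathrm {A} + o(1)\Big ), \]

where the middle integral is \(\int f\,\eta _N\,d\mathrm {A}\) with the density \(\eta _N\) of Proposition 3.4, and the last step uses dominated convergence for bounded, compactly supported f.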

4 Transition for 1-Dimensional Log-Gases

4.1 Asymptotics of Orthogonal Polynomials

In this section, we begin by reviewing basic facts about the asymptotics of orthogonal polynomials which are required for the proofs of Theorems 1.4 and 1.5. A comprehensive reference for the results discussed in this section is the book of Deift [22]. We assume that the potential \(V \in C^2({\mathbb {R}})\) is a function which satisfies the condition (1.1) and we let \(\Xi \) and \(\widehat{\Xi }\) be the determinantal processes with correlation kernels \(K^N_V\) and \({\widehat{K}}^N_V = p_N K^N_V\) respectively.

The proof of Theorem 1.4 relies on a combinatorial method introduced in [17], which consists in using the three-term recurrence relation of the orthogonal polynomials \(\{P_k \}_{k=0}^\infty \) with respect to the measure \(d\mu _N = e^{- 2N V(x)}dx\) to compute the cumulants of polynomial linear statistics. For any \(N\in {\mathbb {N}}\), there exist two sequences \(a^N_k >0\) and \(b^N_k \in {\mathbb {R}}\) such that the orthogonal polynomials \(P_k\) in (1.4) satisfy

In particular, the completion \({\mathscr {L}}_N\) of the space of polynomials with respect to \(L^2({\mathbb {R}},\mu _N)\) is isomorphic to \(L^2({\mathbb {N}}_0)\) and formula (4.1) implies that the multiplication by x on \({\mathscr {L}}_N\) is unitarily equivalent to applying the Jacobi matrix

We also let \(\varvec{\Pi }_N\) be the orthogonal projection on \({\text {span}}\{e_0, \dots , e_{N-1}\}\) acting on \(L^2({\mathbb {N}}_0)\). The connection with eigenvalue statistics comes from the fact that for any polynomial Q and for any composition \(\mathbf {k}\vdash n\), one has

where \({\mathcal {G}}\) denotes the adjacency graph of the matrix \(Q(\mathbf {J})\) and

Given a path \(\pi \) of length n and a composition \(\mathbf {k}\vdash n\), we let

Observe that

so that by formula (2.5), the cumulants of a polynomial linear statistic are given by

By definition, there exists a constant \(M>0\) which only depends on the degree of Q and n so that \(\Phi _\pi ^N=0 \) for any path \(\pi \in \Gamma ^n_m\) as long as \(m < N - M\). Since \( \sum _{\mathbf {k}\vdash n}\Upsilon _0(\mathbf {k}) = 0\) for all \(n \ge 2\), formula (4.4) implies that

In particular, if the Jacobi matrix has a right-limit, i.e. there exists an (infinite) matrix \(\mathbf {L}\) such that for all \(i,j \in {\mathbb {Z}}\),

then

where \(\widetilde{{\mathcal {G}}}\) denotes the adjacency graph of the matrix \(Q(\mathbf {L})\) and

The condition (1.21) implies that the right-limit of the Jacobi matrix is a tridiagonal matrix \(\mathbf {L}\) such that \(\mathbf {L}_{jj}= 0\) and \(\mathbf {L}_{j, j\pm 1} =1/2\) for all \(j \in {\mathbb {Z}}\) and it was proved in [39], see also [17], that in this case:

The combinatorial method used in the previous section is well suited to investigating the global fluctuations of 1-dimensional log-gases, but it is difficult to implement in the mesoscopic regime since we cannot use polynomials as test functions. So, to describe the transition for mesoscopic linear statistics and to prove Theorem 1.5, we rely on the asymptotics of the correlation kernel \(K^N_V\) from [24] and the method from [38] that we review below. Recall that \(\varrho _V\) is the equilibrium density of the gas and define the integrated density of states:

Let us fix \(x_0\in {\mathscr {I}}_V\), \(0<\alpha <1\), and set

Based on the results of [24], we have for any \(\alpha \in (0,1]\),

uniformly for all x, y in compact subsets of \({\mathbb {R}}\); cf. [38, Proposition 3.5]. The main idea of the method of [38] is to compare the kernel (4.9) to the sine-kernel and use the results from Soshnikov [48] for the cumulants of linear statistics of the Sine process. We define the sine-kernel with density \(\rho >0\) on \({\mathbb {R}}\) by

We see by taking \(\alpha =1\) in formula (4.9) that the Sine process with correlation kernel (4.10) describes the local limit in the bulk of the 1-dimensional log-gases. In the mesoscopic regime, it was proved in [38] that, up to a change of variable, it is possible to replace the kernel \({\widetilde{K}}^N_{V,x_0}\) by an appropriate sine-kernel using the asymptotics (4.9) in the cumulant formulae. Namely, for any \(n\ge 2\),

where

Here, the function \(G_V\) denotes the inverse of the integrated density of states \(F_V\), (4.7). By the inverse function theorem, \(G_V\) exists in a neighborhood of the point \(F_V(x_0)\) when \(x_0\in {\mathscr {I}}_V\), and the map \(\zeta _N\) is well-defined on any compact subset of \({\mathbb {R}}\) as long as the parameter N is sufficiently large. Then, using Soshnikov’s main combinatorial Lemma, it was proved in [38] that

4.2 Proof of Theorem 1.4: The Global Regime

In this section, we modify the strategy described above in order to deduce Theorem 1.4 from our general Theorem 2.2. The first step is to verify the Assumption (2.11). By [43, Theorem 11.1.2], the expected density of states satisfies for all \(x\in {\mathbb {R}}\),

where C is a constant which depends only on the potential V. This implies that the law of large numbers (1.5) can be extended to all continuous functions with polynomial growth. Moreover, by (4.6), we obtain the asymptotics (2.13) for the cumulants \( {\text {C}}^n_{K_V^N}[Q]\) of the linear statistic \(\Xi (Q)\). Then, it only remains to verify that the estimates (2.12) hold for any polynomial test function. By (2.9), since \(\sum _{\mathbf {k}\vdash n} \Upsilon _m(\mathbf {k}) = 0\), the very same computation leading to (4.5) shows that for any integers \(n,m\ge 1\)

Since the (infinite) matrix \(\mathbf {L}\) is bounded (in fact \(\Vert \mathbf {L}\Vert = 1\)), we obtain the estimates (2.12) with an error which is O(1). This completes the proof of Theorem 1.4.
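For the Gaussian potential \(V(x)=x^2\), everything in this argument is explicit, which makes the crossover visible at finite N. For the weight \(e^{-2Nx^2}\), the orthonormal polynomials are rescaled Hermite polynomials with \(a^N_k = \sqrt{k/(4N)}\) and \(b^N_k = 0\), so the equilibrium measure is the semicircle law on \((-1,1)\), whose second moment is 1/4. In the Jacobi-matrix picture, the variance of a polynomial linear statistic for the kernel \(p_N K^N_V\) (write \(p = p_N\), \(q = 1-p\)) is \(p\,\mathrm {tr}\big (\varvec{\Pi }_N Q(\mathbf {J})^2\varvec{\Pi }_N\big ) - p^2\,\mathrm {tr}\big (\varvec{\Pi }_N Q(\mathbf {J})\varvec{\Pi }_N Q(\mathbf {J})\varvec{\Pi }_N\big )\). For \(Q(x)=x\) this equals \(p^2/4 + pqN/4\) exactly: a random-matrix term plus a Poisson term \(pqN\int x^2\varrho _V(x)\,dx\) that grows with the expected number \(qN\) of deleted points. The choice of V and all function names below are illustrative assumptions.

```python
import numpy as np

def jacobi_matrix(N: int, size: int) -> np.ndarray:
    # Assumed example V(x) = x^2: for the weight exp(-2N x^2) the orthonormal polynomials
    # are rescaled Hermite polynomials with a_k = sqrt(k / (4N)) and b_k = 0.
    a = np.sqrt(np.arange(1, size) / (4.0 * N))
    return np.diag(a, 1) + np.diag(a, -1)

def matrix_polynomial(coeffs, J: np.ndarray) -> np.ndarray:
    # Q(J) = sum_m coeffs[m] J^m by Horner's rule (coefficients listed from degree 0 upward).
    result = np.zeros_like(J)
    identity = np.eye(J.shape[0])
    for c in reversed(coeffs):
        result = result @ J + c * identity
    return result

def thinned_variance(N: int, p: float, coeffs) -> float:
    # p tr(Pi Q(J)^2 Pi) - p^2 tr(Pi Q(J) Pi Q(J) Pi), with Pi projecting onto e_0, ..., e_{N-1}.
    # Rows k < N of Q(J) only involve indices up to N - 1 + deg(Q), so truncating J at
    # size N + 2 deg(Q) + 1 leaves them equal to those of the infinite matrix.
    deg = len(coeffs) - 1
    Q = matrix_polynomial(coeffs, jacobi_matrix(N, N + 2 * deg + 1))
    first = np.sum(Q[:N, :] ** 2)    # tr(Pi Q^2 Pi), using that Q(J) is symmetric
    second = np.sum(Q[:N, :N] ** 2)  # tr(Pi Q Pi Q Pi)
    return float(p * first - p**2 * second)

N = 200
for p in (1.0, 0.9, 0.5):
    q = 1.0 - p
    # Q(x) = x: exact variance versus p^2/4 + p q N/4 (e.g. about 4.7025 for p = 0.9)
    print(p, thinned_variance(N, p, [0.0, 1.0]), p**2 / 4 + p * q * N / 4)
```

Without deletions (\(p=1\)) the same routine gives \(1/4 = (a^N_N)^2\) for \(Q(x)=x\) and \(1/8\) for \(Q(x)=x^2\), independently of N, in line with the right-limit formula.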

Remark 4.1

(Generalizations of Theorem 1.4) Note that we have formulated Theorem 1.4 for a log-gas at inverse temperature \(\beta =2\), but the previous proof can be generalized to other one-dimensional biorthogonal ensembles with a correlation kernel of the form:

The appropriate assumptions are that there exists an equilibrium density and a law of large numbers holds for all polynomials, the family \(\{\varphi _k^N \}_{k=0}^\infty \) satisfies a q-term recurrence relation for all \(N\in {\mathbb {N}}\), and the corresponding recurrence matrix \(\mathbf {J}\) has a right-limit \(\mathbf {L}\) as \(N\rightarrow \infty \). This applies to other orthogonal polynomial ensembles, such as the discrete point processes coming from domino tilings of hexagons, as well as some non-symmetric biorthogonal ensembles such as the Muttalib-Borodin ensembles, squared singular values of products of complex Ginibre matrices or certain two-matrix models; see [17, 39] for more details. Moreover, we only require that the right-limit \(\mathbf {L}\) exists; it need not be a Toeplitz matrix. Then, we obtain a crossover from a non-Gaussian process (described by \(\mathbf {L}\) in the regime where \(T_N\rightarrow 0\)) to a Poisson process (when \(T_N\rightarrow \infty \)). For instance, such a transition arises when considering linear statistics of the log-gases in the multi-cut regime [39].

Remark 4.2

It was proved in [31, Section 5] that when V is a convex polynomial, \({\mathscr {S}}_V=(-1,1)\) and the conditions (1.21) are satisfied. In fact, Johansson’s argument shows that these conditions are also necessary to have a CLT for polynomial test functions. Therefore, it is an interesting question whether (1.21) and the one-cut condition \({\mathscr {S}}_V=(-1,1)\) are equivalent.
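For the simplest convex potential \(V(x)=x^2\) (an illustrative assumption, with the weight \(e^{-2NV}\)), the recurrence coefficients \(a^N_k=\sqrt{k/(4N)}\) and \(b^N_k=0\) are explicit, and one can watch \(a^N_{N+j}\rightarrow 1/2\) for each fixed j, i.e. convergence to the right-limit \(\mathbf {L}\) with zero diagonal and off-diagonal entries 1/2. The \(n\times n\) truncations of \(\mathbf {L}\) have eigenvalues \(\cos (k\pi /(n+1))\), \(k=1,\dots ,n\), so \(\Vert \mathbf {L}\Vert =1\). A minimal sketch with hypothetical helper names:

```python
import numpy as np

def recurrence_coefficient(k: int, N: int) -> float:
    # Assumed example V(x) = x^2: weight exp(-2N x^2), a_k^N = sqrt(k / (4N)), b_k^N = 0.
    return float(np.sqrt(k / (4.0 * N)))

# Entries a^N_{N+j} near the index k = N approach 1/2 for each fixed shift j.
for N in (10, 1000, 100000):
    print(N, [round(recurrence_coefficient(N + j, N), 4) for j in (-2, 0, 2)])

def truncated_right_limit(n: int) -> np.ndarray:
    # n x n truncation of L: zero diagonal and 1/2 on the first off-diagonals.
    return 0.5 * (np.eye(n, k=1) + np.eye(n, k=-1))

n = 50
eigs = np.sort(np.linalg.eigvalsh(truncated_right_limit(n)))
expected = np.sort(np.cos(np.arange(1, n + 1) * np.pi / (n + 1)))
print(np.max(np.abs(eigs - expected)))               # tiny: eigenvalues are cos(k pi / (n+1))
print(np.linalg.norm(truncated_right_limit(n), 2))   # cos(pi / (n+1)), which tends to 1
```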

4.3 Proof of Theorem 1.5: The Mesoscopic Regime

Let us fix \(x_0\in {\mathscr {I}}_V\), \(0<\alpha <1\) and let \(f_N= f\big (N^\alpha ( \cdot -x_0) \big )\) where \(f\in {\mathcal {C}}^2_c({\mathbb {R}})\). First, observe that by a change of variable, we have for all \(n\ge 1\),

where \({\widetilde{K}}^N_{V,x_0}\) is given by (4.8). Using the asymptotics (4.9), we have that

uniformly for all \(x\in {\text {supp}}(f)\). If \(M_N = N^{1-\alpha } \varrho _V(x_0) \), this implies that for all \(n\in {\mathbb {N}}\),

Thus, we obtain the condition (2.11) with \(d\eta =dx\). Moreover, the Assumption (2.13) is given by (4.13). Then it just remains to prove the estimates (2.12). Let us observe that by a change of variables, we also have for all \(n, m \ge 1\),

Exactly like the proof of (4.11)—which corresponds to the case \(m=0\) by formula (2.5)—we deduce from the proof of [38, Proposition 2.2] that for any \(m \ge 1\),

where \(h_N = f\circ \zeta _N\) and \(\zeta _N\) is given by (4.12). To finish the proof, we need the following estimates.

Proposition 4.1

Suppose that \({\text {supp}}(f) \subset (-L, L)\). There exists \(N_0 >0\) such that for all \(N \ge N_0\), the functions \(h_N= f\circ \zeta _N\) are well-defined on \({\mathbb {R}}\), \( h_N\in C^2_c([-L,L])\) and for all \(u\in {\mathbb {R}}\),

Proof

When the potential V is analytic, the bulk \({\mathscr {I}}_V\) consists of finitely many open intervals and the equilibrium density \(\varrho _V\) is smooth on \({\mathscr {I}}_V\). Since \(x_0\in {\mathscr {I}}_V\), by formula (4.12), the function \(\zeta _N\) is increasing and smooth on the interval \([-L,L]\) with

Moreover, since \(\zeta _N(0)=0\) and, by the inverse function theorem (recall that \(F_V' = \varrho _V\)), \(\zeta '_N(0) =G_V'\big (F_V(x_0)\big ) \varrho _V(x_0) = \varrho _V(x_0)/F_V'(x_0) =1\), this implies that

uniformly for all \(x\in [-L,L]\). Since the open interval \((-L, L)\) contains the support of the test function f, this estimate shows that when the parameter N is large, we can define \(h_N(x) = f\big ( \zeta _N(x) \big )\) for all \(x\in [-L, L]\) and extend it by 0 on \({\mathbb {R}}\backslash [-L,L]\). Then \(h_N \in C^2_0({\mathbb {R}})\) and

for all \(x\in [-L,L]\). Moreover, we can use the estimate

to get the upper-bound (4.16). Plainly \(\Vert h_N \Vert _\infty \le \Vert f\Vert _\infty \) and it is easy to deduce from formula (4.17) that

\(\square \)
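The identity \(\zeta '_N(0) = G_V'\big (F_V(x_0)\big )\varrho _V(x_0) = 1\) used above is just the inverse function theorem applied to \(F_V\), whose derivative is \(\varrho _V\). As a minimal numerical sketch, we take the semicircle density \(\varrho (x) = \frac{2}{\pi }\sqrt{1-x^2}\) on \((-1,1)\) (an illustrative choice; it is the equilibrium density for \(V(x)=x^2\) with the weight \(e^{-2NV}\)), use the closed form of \(F(x) = \int _{-1}^x \varrho (t)\,dt\), and invert F by bracketing root-finding with SciPy's brentq.

```python
import numpy as np
from scipy.optimize import brentq

def rho_sc(x):
    # Illustrative example: semicircle equilibrium density on (-1, 1).
    return 2.0 / np.pi * np.sqrt(1.0 - x**2)

def F_sc(x):
    # Integrated density of states F(x) = int_{-1}^x rho_sc(t) dt, in closed form.
    return 0.5 + (x * np.sqrt(1.0 - x**2) + np.arcsin(x)) / np.pi

def G_sc(s):
    # Inverse of F_sc on (0, 1): F_sc(-1) - s < 0 < F_sc(1) - s brackets the root.
    return brentq(lambda x: F_sc(x) - s, -1.0, 1.0, xtol=1e-14)

x0, eps = 0.3, 1e-6
s0 = F_sc(x0)
G_prime = (G_sc(s0 + eps) - G_sc(s0 - eps)) / (2 * eps)  # central difference for G'(F(x0))
print(G_prime * rho_sc(x0))  # close to 1, as the inverse function theorem predicts
```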

To compute the limit of the RHS of (4.15), we also need the following asymptotics which come from the proof of Lemma 1 in Soshnikov’s paper [48] on linear statistics of the CUE and Sine process.

Lemma 4.2

Let \(n\ge 2\) and let \(\eta _N>0\) be such that \(\eta _N\nearrow \infty \) as \(N\rightarrow +\infty \). Suppose that \(h_N\) is a sequence of integrable functions such that

Then, for any map \(\Upsilon : \mho \rightarrow {\mathbb {R}}\) such that \(\sum _{\mathbf {k}\vdash n} \Upsilon (\mathbf {k}) =0\), we have

where for any \(u \in {\mathbb {R}}^n\) and for any composition \(\mathbf {k}\vdash n\),

Proof

Based on the formula

we obtain

Then, the condition \(\sum _{\mathbf {k}\vdash n} \Upsilon (\mathbf {k}) =0\) implies that

Since \( \big | \Psi _u(\mathbf {k})/2 \big | \le |u_1|+\cdots +|u_n|\) for any \(\mathbf {k}\vdash n\), the condition (4.19) is sufficient to obtain the asymptotics (4.20). \(\square \)

We are now ready to complete the proof of Theorem 1.5. Using the estimate (4.16), we see that when the parameter N is sufficiently large, there exists a constant \(C>0\) so that

A similar upper-bound shows that the sequence \(h_N = f\circ \zeta _N\) satisfies the condition (4.19) of Lemma 4.2. Hence, combining the asymptotics (4.15) and (4.20), we obtain

By (4.21), we see that the previous integral is uniformly bounded by a constant which depends only on the test function f and \(n,m \in {\mathbb {N}}\). Hence, by (4.14), we obtain the estimates (2.12) with an error which is O(1) and we can apply Theorem 2.2. This completes the proof of Theorem 1.5.

Notes

For any \(k \ge 0\), \(P_k\) is a polynomial of degree k and its leading coefficient is positive.

\(T_k(\cos \theta ) = \cos (k\theta )\) for any \(k\ge 0\) and \(\theta \in {\mathbb {R}}\).

By convention \( {l \atopwithdelims ()m} = 0 \) if \(m>l\).

References

Ameur, Y., Hedenmalm, H., Makarov, N.: Berezin transform in polynomial Bergman spaces. Commun. Pure Appl. Math. 63, 1533–1584 (2010)

Ameur, Y., Hedenmalm, H., Makarov, N.: Fluctuations of eigenvalues of random normal matrices. Duke Math. J. 159, 31–81 (2011)

Ameur, Y., Hedenmalm, H., Makarov, N.: Random normal matrices and Ward identities. Ann. Probab. 43, 1157–1201 (2015)

Anderson, G.W., Guionnet, A., Zeitouni, O.: An Introduction to Random Matrices. Cambridge Univ. Press, Cambridge (2010)

Bekerman, F., Lodhia, A.: Mesoscopic central limit theorem for general \(\beta \)-ensembles. Ann. Inst. Henri Poincaré Probab. Stat. 54(4), 1917–1938 (2018)

Berggren, T., Duits, M.: Mesoscopic fluctuations for the thinned circular unitary ensemble. Math. Phys. Anal. Geom. 20(3), 19 (2017)

Berman, R.J.: Bergman kernels for weighted polynomials and weighted equilibrium measures of \({\mathbb{C}}^n\). Indiana Univ. Math. J. 58, 1921–1946 (2009)

Bohigas, O., Pato, M.P.: Missing levels and correlated spectra. Phys. Lett. B 595, 171–176 (2004)

Bohigas, O., Pato, M.P.: Randomly incomplete spectra and intermediate statistics. Phys. Rev. E 74(3), 036212 (2006)

Bauerschmidt, R., Bourgade, P., Nikula, M., Yau, H.-T.: The two-dimensional Coulomb plasma: quasi-free approximation and central limit theorem. arXiv:1609.08582

Borodin, A.: Determinantal point processes. In: The Oxford Handbook of Random Matrix Theory, pp. 231–249. Oxford Univ. Press, Oxford (2011)

Borot, G., Guionnet, A.: Asymptotic expansion of beta matrix models in the one-cut regime. Commun. Math. Phys. 317(2), 447–483 (2013)

Borot, G., Guionnet, A.: Asymptotic expansion of beta matrix models in the multi-cut regime. arXiv:1303.1045

Bothner, T., Deift, P., Its, A., Krasovsky, I.: On the asymptotic behavior of a log gas in the bulk scaling limit in the presence of a varying external potential I. Commun. Math. Phys. 337, 1397–1463 (2015)

Bothner, T., Deift, P., Its, A., Krasovsky, I.: On the asymptotic behavior of a log gas in the bulk scaling limit in the presence of a varying external potential II. In: Large Truncated Toeplitz Matrices, Toeplitz Operators, and Related Topics, pp. 213–234. Birkhäuser/Springer, Cham (2017)

Breuer, J., Duits, M.: Universality of mesoscopic fluctuations for orthogonal polynomial ensembles. Commun. Math. Phys. 342, 491–531 (2016)

Breuer, J., Duits, M.: Central limit theorems for biorthogonal ensembles and asymptotics of recurrence coefficients. J. Am. Math. Soc. 30(1), 27–66 (2017)

Chafaï, D., Hardy, A., Maïda, M.: Concentration for Coulomb gases and Coulomb transport inequalities. J. Funct. Anal. 275(6), 1447–1483 (2018)

Charlier, C., Claeys, T.: Thinning and conditioning of the circular unitary ensemble. Random Matrices Theory Appl. 6(2), 1750007 (2017)

Costin, O., Lebowitz, J.: Gaussian fluctuations in random matrices. Phys. Rev. Lett. 75, 69–72 (1995)

Dean, D.S., Le Doussal, P., Majumdar, S.N., Schehr, G.: Finite temperature free fermions and the Kardar–Parisi–Zhang equation at finite time. Phys. Rev. Lett. 114(11), 110402 (2015)

Deift, P.: Orthogonal Polynomials and Random Matrices: A Riemann–Hilbert approach. Courant Lecture Notes in Mathematics, vol. 3. Courant Institute of Mathematical Sciences; American Mathematical Society, New York; Providence (1999)

Deift, P.: Some open problems in random matrix theory and the theory of integrable systems. II. SIGMA 13, 016 (2017)

Deift, P., Kriecherbauer, T., McLaughlin, K.T.-R., Venakides, S., Zhou, X.: Uniform asymptotics for polynomials orthogonal with respect to varying exponential weights and applications to universality questions in random matrix theory. Commun. Pure Appl. Math. 52(11), 1335–1425 (1999)

Duits, M., Johansson, K.: On mesoscopic equilibrium for linear statistics in Dyson’s Brownian motion. Mem. Am. Math. Soc. 255(1222), 118 (2018)

Dyson, F.J.: The Coulomb fluid and the fifth Painlevé transcendent. In: Liu, C.S., Yau, S.T. (eds.) Chen Ning Yang: A Great Physicist of the Twentieth Century, pp. 131–146. International Press, Cambridge (1995)

Erdős, L., Knowles, A.: The Altshuler–Shklovskii formulas for random band matrices I: the unimodular case. Commun. Math. Phys. 333, 1365–1416 (2015)

Erdős, L., Knowles, A.: The Altshuler–Shklovskii formulas for random band matrices II: the general case. Ann. Henri Poincaré 16, 709–799 (2015)

He, Y., Knowles, A.: Mesoscopic eigenvalue statistics of Wigner matrices. Ann. Appl. Probab. 27(3), 1510–1550 (2017)

Hough, B., Krishnapur, M., Peres, Y., Virág, B.: Determinantal processes and independence. Probab. Surv. 3, 206–229 (2006)

Johansson, K.: On fluctuations of eigenvalues of random Hermitian matrices. Duke Math. J. 91, 151–204 (1998)

Johansson, K.: Universality of the local spacing distribution in certain ensembles of Hermitian Wigner matrices. Commun. Math. Phys. 215, 683–705 (2001)

Johansson, K.: Random Matrices and Determinantal Processes. Mathematical Statistical Physics, pp. 1–55. Elsevier B. V, Amsterdam (2006)

Johansson, K.: From Gumbel to Tracy–Widom. Probab. Theory Relat. Fields 138, 75–112 (2007)

Johansson, K., Lambert, G.: Gaussian and non-Gaussian fluctuations for mesoscopic linear statistics in determinantal processes. arXiv:1504.06455

König, W.: Orthogonal polynomial ensembles in probability theory. Probab. Surv. 2, 385–447 (2005)

Kuijlaars, A.B.J.: Universality. In: The Oxford Handbook of Random Matrix Theory, pp. 103–134. Oxford Univ. Press, Oxford (2011)

Lambert, G.: Mesoscopic fluctuations for unitary invariant ensembles. Electron. J. Probab. 23(7), 33 (2018)

Lambert, G.: CLT for biorthogonal ensembles and related combinatorial identities. Adv. Math. 329, 590–648 (2018)

Lavancier, F., Møller, J., Rubak, E.: Determinantal point process models and statistical inference. J. R. Stat. Soc. Ser. B 77(4), 853–877 (2015)

Leblé, T., Serfaty, S.: Fluctuations of two-dimensional Coulomb gases. Geom. Funct. Anal. 28(2), 443–508 (2018)

Pastur, L.A.: Limiting laws of linear eigenvalue statistics for Hermitian matrix models. J. Math. Phys. 47, 103303 (2006)

Pastur, L.A., Shcherbina, M.: Eigenvalue Distribution of Large Random Matrices. Mathematical Surveys and Monographs, vol. 171. American Mathematical Society, Providence, RI (2011)

Rider, B., Virág, B.: Complex determinantal processes and \(H^1\) noise. Electron. J. Probab. 12, 1238–1257 (2007)

Rider, B., Virág, B.: The noise in the circular law and the Gaussian free field. Int. Math. Res. Not. 2007, rnm006 (2007). https://doi.org/10.1093/imrn/rnm006

Shcherbina, M.: Fluctuations of linear eigenvalue statistics of \(\beta \)-matrix models in the multi-cut regime. J. Stat. Phys. 151, 1004–1034 (2013)

Soshnikov, A.: Determinantal random point fields. Russ. Math. Surv. 55, 923–975 (2000)

Soshnikov, A.: The Central Limit Theorem for local linear statistics in classical compact groups and related combinatorial identities. Ann. Probab. 28, 1353–1370 (2000)

Soshnikov, A.: Gaussian limit for determinantal random point fields. Ann. Probab. 30, 1–17 (2001)

Spencer, T.: Random Banded and Sparse Matrices. In: The Oxford Handbook of Random Matrix Theory, pp. 471–488. Oxford Univ. Press, Oxford (2011)

Acknowledgements

G. L. was supported by the grant KAW 2010.0063 from the Knut and Alice Wallenberg Foundation and by the University of Zurich Forschungskredit grant FK-17-112. I thank Tomas Berggren and Maurice Duits, from whom I learned about the model of Bohigas and Pato, for sharing their inspiring work with me and for the valuable discussions which followed. I also thank Mariusz Hynek for many interesting discussions, as well as the referee whose comments helped to improve the structure of this paper.

Additional information

Communicated by Alessandro Giuliani.

Appendices

Appendix A: Incomplete Determinantal Processes

In this appendix, we review some background material on determinantal processes and give an alternative proof of the result of Bohigas and Pato [9] that the incomplete process is determinantal with correlation kernel \(p_NK^N_V(z,w)\). There are many excellent surveys on determinantal processes; we refer to [11, 33, 36, 47] for further details and an overview of the main examples.

Let \({\mathfrak {X}}\) be a complete separable metric space equipped with a Radon measure \(\mu \). The configuration space \({\mathscr {Q}}({\mathfrak {X}})\) is the set of integer-valued locally finite Borel measures on \({\mathfrak {X}}\), equipped with the topology generated by the maps

\[ \Xi \mapsto \Xi_A := \Xi(A) \]

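for every Borel set \(A\subseteq {\mathfrak {X}}\). A point process \({\mathbb {P}}\) is a Borel probability measure on the configuration space \({\mathscr {Q}}({\mathfrak {X}})\). A point process can be characterized by its correlation functions \((\rho _n)_{n=1}^\infty \). When it exists, \(\rho _n\) is a symmetric function on \({\mathfrak {X}}^n\) which satisfies the identity (with the convention that \(\Xi_A!/(\Xi_A-k)! = 0\) if \(k>\Xi_A\))

\[ \mathbb{E}\bigg[\prod_{j=1}^{\ell} \frac{\Xi_{A_j}!}{(\Xi_{A_j}-k_j)!}\bigg] = \int_{A_1^{k_1}\times \cdots \times A_\ell^{k_\ell}} \rho_n(x_1,\dots, x_n)\, d\mu^{\otimes n}(x_1,\dots,x_n) \tag{A.1} \]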

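for all compositions \(\mathbf {k} = (k_1,\dots ,k_\ell )\vdash n\) and for all disjoint Borel sets \(A_1,\dots , A_\ell \subseteq {\mathfrak {X}}\). A point process is called determinantal if all its correlation functions exist and are given by

\[ \rho_n(x_1,\dots,x_n) = \det\big[K(x_i,x_j)\big]_{i,j=1}^{n}, \qquad x_1,\dots,x_n \in \mathfrak{X},\ n\ge 1. \tag{A.2} \]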

The function \(K: {\mathfrak {X}}\times {\mathfrak {X}}\rightarrow {\mathbb {C}}\) is called the correlation kernel. For instance, given random points \((\lambda _1,\dots , \lambda _N)\) with the joint density \(G_N(x)=e^{-\beta {\mathscr {H}}^N_V(x)}/Z^N_V\) on \({\mathfrak {X}}^N\), see (1.2), the random measure \( \sum _{k=1}^N \delta _{\lambda _k}\) defines a point process and its correlation functions satisfy \(\rho _N = N! G_N\) and

\[ \rho_k(x_1,\dots,x_k) = \frac{1}{(N-k)!}\int_{\mathfrak{X}^{N-k}} \rho_N(x_1,\dots,x_N)\, d\mu^{\otimes(N-k)}(x_{k+1},\dots,x_N) \tag{A.3} \]

for all \(k<N\). When \(\beta =2\), it is easy to verify that

\[ G_N(x) = \frac{1}{N!}\det\big[K^N_V(x_i,x_j)\big]_{i,j=1}^{N}, \qquad x\in \mathfrak{X}^N, \]

and that, by formula (A.3), the process \(\Xi \) is determinantal with the correlation kernel \(K^N_V\) given by (1.3). In general, if K is a continuous, Hermitian-symmetric function which satisfies property (2.1), then there exists a determinantal process on \({\mathfrak {X}}\) with correlation kernel K; cf. [47, Theorem 3].
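In a discrete setting, the reproducing property behind this step can be checked by brute force. For a rank-N orthogonal projection K, integrating one variable out of an \((m+1)\times (m+1)\) determinant multiplies the \(m\times m\) determinant by \(N-m\), so that \(\int \det [K(x_i,x_j)]_{i,j=1}^N\, d\mu ^{\otimes (N-k)}(x_{k+1},\dots ,x_N) = (N-k)!\,\det [K(x_i,x_j)]_{i,j=1}^k\). The sketch below is only a toy analogue of this identity: it assumes counting measure on five points and a random real rank-3 projection in place of \(K^N_V\), and the helper names are hypothetical.

```python
import itertools
from math import factorial

import numpy as np


def projection_kernel(M, N, seed=0):
    """Random real orthogonal projection of rank N on R^M (toy stand-in for K^N_V)."""
    rng = np.random.default_rng(seed)
    Q, _ = np.linalg.qr(rng.standard_normal((M, N)))  # M x N, orthonormal columns
    return Q @ Q.T


def integrate_out(K, N, head):
    """Sum det[K(x_i, x_j)]_{i,j<=N} over x_{k+1},...,x_N with x_1..x_k = head fixed.

    The sum over the ground set {0,...,M-1} plays the role of the integral
    against mu^{N-k} when mu is counting measure.
    """
    M = K.shape[0]
    total = 0.0
    for tail in itertools.product(range(M), repeat=N - len(head)):
        x = list(head) + list(tail)
        total += np.linalg.det(K[np.ix_(x, x)])
    return total


K = projection_kernel(M=5, N=3)
# k = 0: total mass N! = 6, i.e. det / N! integrates to one.
print(integrate_out(K, 3, ()), factorial(3))
# k = 1: the one-point marginal is (N - 1)! K(x, x).
print(integrate_out(K, 3, (2,)), factorial(2) * K[2, 2])
```

Both printed pairs agree up to rounding, which is the discrete form of \(\int G_N\, d\mu ^{\otimes N}=1\) and of formula (A.3) with \(k=1\).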

Let \(q\in (0,1)\) and \(p=1-q\). Recall that X is a Binomial random variable with parameters p and \(N\in {\mathbb {N}}\) if for all \(k \in {\mathbb {N}}_0\),

\[ \mathbb{P}[X = k] = \binom{N}{k}\, p^k q^{N-k}. \tag{A.4} \]

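By convention, \(\binom{N}{k} = 0\) if \(k>N\). Let \(\Xi \) be a random (point) configuration and let \(\widehat{\Xi }\) be the configuration obtained after performing a Bernoulli percolation on \(\Xi \), that is, each point of \(\Xi \) is kept with probability p and deleted with probability q, independently of the others. By construction, for any Borel set \(A\subseteq {\mathfrak {X}}\), the conditional distribution of the random variable \(\widehat{\Xi }_A\) given \(\Xi \) is Binomial with parameters p and \(\Xi _A\), and, conditionally on \(\Xi \), \(\widehat{\Xi }_A\) is independent of \(\widehat{\Xi }_B\) for any Borel set B disjoint from A. Since formula (A.4) yields \(\mathbb{E}\big[X!/(X-k)!\big] = p^k N!/(N-k)!\) for all \(k\ge 0\), this implies that

\[ \mathbb{E}\bigg[\prod_{j=1}^{\ell} \frac{\widehat{\Xi}_{A_j}!}{(\widehat{\Xi}_{A_j}-k_j)!}\,\bigg|\,\Xi\bigg] = p^{n} \prod_{j=1}^{\ell} \frac{\Xi_{A_j}!}{(\Xi_{A_j}-k_j)!} \]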

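for any composition \(\mathbf {k}\vdash n\) and for all disjoint Borel sets \(A_1,\dots , A_\ell \subseteq {\mathfrak {X}}\). Hence, taking expectations and using formula (A.1) for \(\Xi \), we obtain

\[ \mathbb{E}\bigg[\prod_{j=1}^{\ell} \frac{\widehat{\Xi}_{A_j}!}{(\widehat{\Xi}_{A_j}-k_j)!}\bigg] = p^{n}\, \mathbb{E}\bigg[\prod_{j=1}^{\ell} \frac{\Xi_{A_j}!}{(\Xi_{A_j}-k_j)!}\bigg] = \int_{A_1^{k_1}\times \cdots \times A_\ell^{k_\ell}} p^n \rho_n(x_1,\dots, x_n)\, d\mu^{\otimes n}(x_1,\dots,x_n), \]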

so that, by formula (A.1), the correlation functions of the incomplete process \(\widehat{\Xi }\) are given by \(p^n \rho _n(x_1,\dots , x_n)\) for all \(n \ge 1\). In particular, since \(p^n\det [K(x_i,x_j)]_{i,j=1}^n = \det [pK(x_i,x_j)]_{i,j=1}^n\), we deduce from formula (A.2) that, if \(\Xi \) is a determinantal process with a correlation kernel K, then the point process \(\widehat{\Xi }\) is also determinantal with kernel pK.
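The argument can be checked exactly on a finite ground set, where the correlation functions are inclusion probabilities \(\rho _n(x_1,\dots ,x_n)=\mathbb{P}[\{x_1,\dots ,x_n\}\subseteq \Xi ]\) for distinct points. The sketch below is a discrete toy analogue, not the setting of this paper: it assumes counting measure on five points and a random real symmetric kernel K with spectrum in [0, 1], for which the law of the process is \(\mathbb{P}[\Xi = S] = (-1)^{|S^c|}\det (K - I_{S^c})\), where \(I_{S^c}\) is the diagonal indicator of the complement of S. It also checks, in exact rational arithmetic, the Binomial factorial-moment identity derived from formula (A.4). All function names are hypothetical.

```python
import itertools
from fractions import Fraction
from math import comb, perm

import numpy as np


def binomial_factorial_moment(N, k, p):
    """E[X!/(X-k)!] for X ~ Binomial(N, p), summed exactly from the pmf (A.4)."""
    q = 1 - p
    return sum(comb(N, j) * p**j * q**(N - j) * perm(j, k) for j in range(N + 1))


def random_kernel(n, seed=0):
    """Random real symmetric kernel on {0,...,n-1} with eigenvalues in [0, 1]."""
    rng = np.random.default_rng(seed)
    Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
    return (Q * rng.uniform(0.0, 1.0, size=n)) @ Q.T


def dpp_law(K):
    """Exact law P(Xi = S) = (-1)^{|S^c|} det(K - I_{S^c}) over all subsets S."""
    n = K.shape[0]
    law = {}
    for r in range(n + 1):
        for S in itertools.combinations(range(n), r):
            D = np.diag([0.0 if i in S else 1.0 for i in range(n)])
            law[S] = (-1) ** (n - r) * np.linalg.det(K - D)
    return law


def percolate(law, p):
    """Law after keeping each point independently with probability p."""
    out = {}
    for S, prob in law.items():
        for r in range(len(S) + 1):
            for T in itertools.combinations(S, r):
                out[T] = out.get(T, 0.0) + prob * p**r * (1 - p) ** (len(S) - r)
    return out


def correlation(law, T):
    """Discrete correlation function: P(T is contained in the configuration)."""
    return sum(prob for S, prob in law.items() if set(T) <= set(S))


p = 0.3
K = random_kernel(5)
thinned = percolate(dpp_law(K), p)
idx = [0, 3]
# Two-point function of the thinned process versus det[p K] restricted to {0, 3}.
print(correlation(thinned, tuple(idx)), np.linalg.det(p * K[np.ix_(idx, idx)]))
# E[X(X-1)] = N(N-1) p^2 for X ~ Binomial(6, 3/10), checked exactly.
print(binomial_factorial_moment(6, 2, Fraction(3, 10)) == perm(6, 2) * Fraction(3, 10) ** 2)
```

Enumerating all \(2^5\) configurations keeps the check exact up to floating-point error; a Monte Carlo simulation of the percolation would illustrate the same statement only approximately.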

Appendix B: Off-Diagonal Decay of the Correlation Kernel \(K^N_V\) in Dimension 2

In this appendix, we review some classical estimates for the correlation kernel (1.3) which have been used in [2] to prove the CLT (1.10). Then, we prove Lemma 3.3 and an analogous result for the cumulants of the \(\infty \)-Ginibre process. For completeness, we also give the proof of Lemma 3.2. We will use the formulation of Sect. 5 in [2], but the estimates (B.1) and (B.2) go back to the papers [7] and [1]. Suppose that the potential \(V:{\mathbb {C}}\rightarrow {\mathbb {R}}\) is real-analytic and satisfies the condition (1.1). Then, there exist a function \(\phi _V : {\mathbb {C}}\rightarrow {\mathbb {R}}^+\) such that \(\phi _V(z) \ge \nu \log |z|^2\) as \(|z| \rightarrow \infty \) and some constants \(C, c, \delta >0\) such that

and

for all \(w \in {\mathscr {S}}\) and \(z\in {\mathbb {C}}\).
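For orientation, the simplest case is the Ginibre ensemble. Assuming \(V(z)=|z|^2\) and the normalization \(K^N_V(z,w)=N\sum _{k=0}^{N-1}\frac{(Nz\overline{w})^k}{k!}\,e^{-N(|z|^2+|w|^2)/2}\) with respect to \(d\mathrm {A}\) (an assumption, since (1.2) and (1.3) are not reproduced here), the sketch below checks numerically the facts used in the proof of Lemma 3.3: \(\int K^N_V(z,z)\,d\mathrm {A}(z)=N\), \(K^N_V(z,z)\le N\), the Cauchy–Schwarz bound \(|K^N_V(z,w)|^2\le K^N_V(z,z)K^N_V(w,w)\), and the Gaussian off-diagonal decay \(|K^N_V(z,w)|\approx N e^{-N|z-w|^2/2}\) deep in the bulk, which is the kind of behavior that estimates such as (B.1) control for general V. The function names are hypothetical.

```python
import numpy as np


def ginibre_kernel(z, w, N):
    """Ginibre kernel w.r.t. dA = r dr dtheta / pi, assuming V(z) = |z|^2."""
    z = np.asarray(z, dtype=complex)
    w = np.asarray(w, dtype=complex)
    x = N * z * np.conj(w)
    term = np.ones_like(x)   # (N z conj(w))^k / k!, built recursively
    total = np.zeros_like(x)
    for k in range(N):
        total += term
        term = term * x / (k + 1)
    return N * total * np.exp(-N * (np.abs(z) ** 2 + np.abs(w) ** 2) / 2)


def trapezoid(f, t):
    """Trapezoid rule on a uniform grid (avoids version-specific NumPy helpers)."""
    h = t[1] - t[0]
    return h * (f.sum() - 0.5 * (f[0] + f[-1]))


N = 40
# With t = |z|^2, the area measure gives  int K(z,z) dA(z) = int_0^inf K dt.
t = np.linspace(0.0, 4.0, 40001)
density = ginibre_kernel(np.sqrt(t), np.sqrt(t), N).real
print("total mass:", trapezoid(density, t), "max density:", density.max())

# Cauchy-Schwarz for a reproducing kernel, at random pairs of points.
rng = np.random.default_rng(0)
z = rng.uniform(-1, 1, 200) + 1j * rng.uniform(-1, 1, 200)
w = rng.uniform(-1, 1, 200) + 1j * rng.uniform(-1, 1, 200)
lhs = np.abs(ginibre_kernel(z, w, N)) ** 2
rhs = ginibre_kernel(z, z, N).real * ginibre_kernel(w, w, N).real
print("Cauchy-Schwarz holds:", bool(np.all(lhs <= rhs * (1 + 1e-9))))

# Off-diagonal decay deep in the bulk: compare |K(z,w)| with N exp(-N |z-w|^2 / 2).
z0, w0 = 0.1 + 0.0j, 0.2 + 0.1j
print(abs(ginibre_kernel(z0, w0, N)), N * np.exp(-N * abs(z0 - w0) ** 2 / 2))
```

The last two printed numbers agree to high precision because, for \(|z|,|w|\) well inside the unit disk, the truncated exponential is essentially \(e^{Nz\overline{w}}\) and \(|e^{Nz\overline{w}}|\,e^{-N(|z|^2+|w|^2)/2} = e^{-N|z-w|^2/2}\).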

Proof of Lemma 3.3

We use the convention \(z_0=z_{n+1}\). Since the kernel \(K_V^N\) is reproducing, by the Cauchy–Schwarz inequality,

\[ |K_V^N(z,w)|^2 \le K_V^N(z,z)\, K_V^N(w,w) \]

for all \(z, w\in {\mathbb {C}}\), so that

Since \(\displaystyle \int _{\mathbb {C}}K_V^N(z,z) d\mathrm {A}(z) = N\) and \(K_V^N(z,z) \le C N \) [see the estimate (B.2)], we obtain

Then, it is easy to check that the estimates (B.1) and (B.2) imply that

Hence, if \(\epsilon _N = \kappa N^{-1/2} \log N \) and \(\kappa \ge (n+1)/ c\), we obtain

Moreover, since \(\sup \big \{ |F_N(z_0, \mathrm {z})| : \mathrm {z}\in {\mathbb {C}}^{n} , N \ge N_0 \big \} \le C \mathbf {1}_{z_0 \in {\mathscr {S}}}\) by (3.6), the estimate (B.3) implies that

Now, we can proceed by induction to get formula (3.7). If \({\mathscr {C}}_N = \{\mathrm {z}\in {\mathbb {C}}^{n+1} : z_{n+1} \in {\mathscr {S}}, |z_1-z_{n+1}| \le \epsilon _N \} \), the next step is to show that

Since the set \({\mathscr {S}}_V\) is open, there exists a compact set \({\mathscr {S}}' \subset {\mathscr {S}}_V\) such that \({\mathscr {S}}\subset {\mathscr {S}}'\) and \({\mathscr {C}}_N \subset \{\mathrm {z}\in {\mathbb {C}}^{n+1} : z_{n+1} , z_1 \in {\mathscr {S}}' \}\) for all sufficiently large N. Then, as before, we obtain

and formula (B.5) also follows directly from the estimate (B.3). Hence, by formula (B.4), this implies that

If we repeat this argument, we obtain (3.7). \(\square \)
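To see why \(\kappa \ge (n+1)/c\) is the natural threshold, here is a heuristic computation. It assumes, for illustration only, that (B.1) yields a bound of the form \(|K_V^N(z,w)| \le CN e^{-c\sqrt{N}\min (|z-w|,\delta )}\), which is the typical shape of such off-diagonal estimates but is not reproduced here. For N large enough that \(\epsilon _N\le \delta \), any pair with \(|z-w|\ge \epsilon _N\) then satisfies

\[
|K_V^N(z,w)| \le CN e^{-c\sqrt{N}\,\epsilon_N} = CN\, e^{-c\kappa \log N} = CN\cdot N^{-c\kappa} \le CN \cdot N^{-(n+1)} \qquad \text{whenever } \kappa \ge \frac{n+1}{c},
\]

that is, a gain of a factor \(N^{-(n+1)}\) over the trivial bound \(|K_V^N(z,w)|\le CN\), which follows from the Cauchy–Schwarz inequality and the estimate (B.2).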

Lemma 4.3

Let \(n \in {\mathbb {N}}\), \(w_0= w_{n+1} =0\), and let \(H(\mathrm {w})\) be a polynomial of degree at most 2 in the variables \(w_1,\dots , w_n, \overline{w_1},\dots , \overline{w_n}\). For any sequence \(\delta _N \ge k \rho _N^{-1/2} \sqrt{\log \rho _N}\) with \(k>0\), we have

where the set \({\mathscr {A}}(0; \delta _N)\) is given by formula (3.5).

Proof

We will first show that

Notice that if \(H =1\), since \(w_0=0\), by formula (3.14), we have

In general, there exists a constant \(C>0\), which only depends on the polynomial H, such that

or

Since, for any \(k=1,\dots , n\),

the estimate (B.6) follows directly from (B.7) and the leading contribution comes from the constant term. If we use the estimate

instead, the same argument shows that for any \(k= 1,\dots , n\),

Hence, the lemma follows from a union bound and the choice of the sequence \(\delta _N\). \(\square \)
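The choice of \(\delta _N\) in Lemma 4.3 converts Gaussian factors into powers of \(\rho _N\). Assuming, for illustration, that the terms removed by the union bound carry a factor of order \(e^{-\rho _N\delta _N^2}\) (as the \(\infty \)-Ginibre kernel would produce at scale \(\rho _N^{-1/2}\); the precise bound comes from (3.14), which is not reproduced here) and that \(\rho _N>1\), one has

\[
\delta_N \ge k\,\rho_N^{-1/2}\sqrt{\log\rho_N}
\quad\Longrightarrow\quad
\rho_N\,\delta_N^2 \ge k^2\log\rho_N
\quad\Longrightarrow\quad
e^{-\rho_N\delta_N^2} \le \rho_N^{-k^2},
\]

so that any prescribed power of \(\rho _N^{-1}\) is reached by taking k large enough.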

Proof of Lemma 3.2

The map \((z, w) \mapsto \Psi (z,w)\) is holomorphic in both variables in a neighborhood of \((x_0, \overline{x_0})\), so there exists \(0<\epsilon <1\) such that for all \(|u|, |v| \le \epsilon \),

\[ \Psi(x_0+u, \overline{x_0}+v) = \sum_{k,j\ge 0} a_{kj}\, u^k v^j. \]

By definition, \(\overline{\Psi (z,w)} = \Psi ({\overline{w}},{\overline{z}})\), so that the coefficients of the previous power series are Hermitian-symmetric: \(a_{kj} = \overline{a_{jk}}\) for all \(k, j \ge 0\). Moreover, by definition, we have

Let

Since \(V(x_0 + u) = \Psi (x_0 + u , \overline{x_0} + {\overline{u}} )\), we see that for any \(|u|, |v| \le \epsilon \),

By formula (3.1), this implies that for any \(|u|, |v| \le \epsilon _N = \log (N^\kappa ) N^{-1/2}\),

Together with formula (B.8) and the definition of the \(\infty \)-Ginibre kernel, this completes the proof. \(\square \)
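The Hermitian symmetry \(a_{kj}=\overline{a_{jk}}\) used in this proof follows by comparing coefficients. Assuming the expansion has the form \(\Psi (x_0+u,\overline{x_0}+v)=\sum _{k,j\ge 0}a_{kj}u^kv^j\), and using \(\overline{\Psi (z,w)}=\Psi ({\overline{w}},{\overline{z}})\) with \(z=x_0+u\), \(w=\overline{x_0}+v\),

\[
\sum_{k,j\ge 0}\overline{a_{kj}}\;\overline{u}^{\,k}\,\overline{v}^{\,j}
= \overline{\Psi(x_0+u,\overline{x_0}+v)}
= \Psi\big(x_0+\overline{v},\,\overline{x_0}+\overline{u}\big)
= \sum_{k,j\ge 0} a_{kj}\,\overline{v}^{\,k}\,\overline{u}^{\,j}
= \sum_{k,j\ge 0} a_{jk}\,\overline{u}^{\,k}\,\overline{v}^{\,j},
\]

so that \(\overline{a_{kj}}=a_{jk}\) for all \(k,j\ge 0\). In particular, \(V(x_0+u)=\sum _{k,j\ge 0}a_{kj}u^k{\overline{u}}^{\,j}\) is real, as it must be, since its complex conjugate \(\sum _{k,j}\overline{a_{kj}}\,{\overline{u}}^{\,k}u^j=\sum _{k,j}a_{jk}u^j{\overline{u}}^{\,k}\) is the same series.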

Cite this article

Lambert, G. Incomplete Determinantal Processes: From Random Matrix to Poisson Statistics. J Stat Phys 176, 1343–1374 (2019). https://doi.org/10.1007/s10955-019-02345-w
