Abstract

The linear response of a dynamical system refers to changes to properties of the system when small external perturbations are applied. We consider the little-studied question of selecting an optimal perturbation so as to (i) maximise the linear response of the equilibrium distribution of the system, (ii) maximise the linear response of the expectation of a specified observable, and (iii) maximise the linear response of the rate of convergence of the system to the equilibrium distribution. We also consider the inhomogeneous, sequential, or time-dependent situation where the governing dynamics is not stationary and one wishes to select a sequence of small perturbations so as to maximise the overall linear response at some terminal time. We develop the theory for finite-state Markov chains, provide explicit solutions for some illustrative examples, and numerically apply our theory to stochastically perturbed dynamical systems, where the Markov chain is replaced by a matrix representation of an approximate annealed transfer operator for the random dynamical system.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

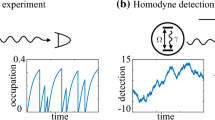

The notion of linear response crosses many disciplinary boundaries in mathematics and physics. At a broad level, one is interested in how various quantities respond to small perturbations in the dynamics. Historically, this response is often studied through the changes in the equilibrium probability distribution of the system. In certain cases, if the governing dynamics varies according to a parameter, one can formally express the change in the equilibrium probability distribution as a derivative of the governing dynamics with respect to this parameter.

Finite state Markov chains are one of the simplest settings in which to study formal linear response, and early work includes Schweitzer [36] who stated response formulae for invariant probability distributions under perturbations of the governing \(n\times n\) stochastic matrix P. The perturbations in [36] were either macroscopic or infinitesimal, and in the latter case the response was expressed as a derivative. Linear response has been heavily studied in the context of smooth or piecewise smooth dynamical systems. In the case of uniformly (and some nonuniformly) hyperbolic dynamics, there is a distinguished equilibrium measure, the Sinai-Bowen-Ruelle (SBR) measure, which is exhibited by a positive Lebesgue measure set of initial conditions. Ruelle [34] developed response formulae for this SBR measure for uniformly hyperbolic maps; this was extended to partially hyperbolic maps by Dolgopyat [13] and to uniformly hyperbolic flows [9, 35]. Modern approaches to proving linear response, such as [9, 18, 19] do not rely on coding techniques as in [34], but work directly with differentiability properties of transfer operators acting on anisotropic Banach spaces. For expanding and/or one-dimensional dynamics, linear response (and the lack thereof) for unimodal maps [4, 5] and intermittent maps [2, 6] has been treated; see also the surveys [3, 28]. Linear response results for stochastic systems using Markov (transfer) operator techniques have also been developed [21] and linear response for inhomogeneous Markov chains have also been considered [8]. There is a great deal of activity concerning the linear (or otherwise) response of the Earth’s climate system to external perturbations [1, 10, 33], and there have been recent extensions to the linear response of multipoint correlations of observables [30].

Much of the theoretical focus on linear response has been on establishing that for various classes of systems, there is a principle of linear response. Our focus in this work is in a much less studied direction, namely, determining those perturbations that lead to maximal response. This problem of optimizing response is of intrinsic mathematical interest, and also has practical implications: not only is it important to establish the maximal sensitivity of a system to small perturbations, but it is also of great interest to identify those specific perturbations that provoke a maximal system response. For example, a common application of linear response is the response of various models of the Earth’s climate to external (man-made) forcing. Mitigation strategies ought to specifically avoid those perturbations that lead to large and unpredictable responses, and it is therefore important to be able to efficiently identify these maximal response perturbations. This important avenue of research has relatively few precedents in the literature. One exception is [38] who consider optimal control of Langevin dynamics; using a linear response approach, they apply a gradient descent algorithm to minimise a specified linear functional.

The questions we ask are: (i) What is the perturbation that provokes the greatest linear response in the equilibrium distribution of the dynamics? (ii) What is the perturbation that maximally increases the value of a specified linear functional? (iii) What is the perturbation that has the greatest impact on the rate of convergence of the system to equilibrium? We answer these questions in the setting of finite state Markov chains, including the inhomogeneous situation. Question (i) turns out to be the most difficult because of its non-convex nature: we are maximising (not minimising) an \(\ell _2\) norm. We develop an efficient numerical approach, based on solving an eigenproblem. We are able to solve questions (ii) and (iii) in closed form, following some preliminary computations (solving a linear system and solving an eigenproblem, respectively).

In the numerics section, we apply our results to Ulam discretisations of stochastically perturbed dynamical systems in one dimension. These Ulam discretisations are large sparse stochastic matrices and thus our previous results readily apply. We limit ourselves to one-dimensional examples (dynamics on a circle or interval) to provide a clearer presentation of the results, but there is no obstacle to carrying out these computations in two- or three-dimensional systems. Examples of the types of dynamical systems that can be considered are:

-

1.

One has deterministic dynamics \(T:X\rightarrow X\), \(X=\mathbb {R}^d\) with stochastic perturbations that are an integral part of the model. There is a background i.i.d. stochastic process \(\{\xi _n\}_{n=0}^\infty \), with the random variables \(\xi :\Omega \rightarrow X\) creating the perturbed dynamics \(x_{n+1}=T(x_n)+\xi _n\), \(n\ge 0\).

-

2.

One has a collection of deterministic maps \(\{T_{\omega _n}\}_{n=0}^\infty \) which are composed in an i.i.d. fashion: \(\cdots \circ T_{\omega _k}\cdots \circ T_{\omega _2}\circ T_{\omega _1}\), where \(\omega \in \Omega \) is distributed according to a probability measure \(\mathbb {P}\) on \(\Omega \). In the special case where \(\Omega \subset X=\mathbb {R}^d\) and \(T_{\omega _i}x=Tx+\omega _i\) for some fixed T, this situation coincides with the previous one with \(\omega _i=\xi _i\).

In both cases, using the notation in point 2. above, one forms an annealed transfer operator \(\mathcal {P}f=\int _\Omega \mathcal {P}_{T_{\omega }}\ d\mathbb {P}(\omega )\). If \(\Omega \subset X\) and \(\mathbb {P}\) has density q with respect to Lebesgue measure we may write

Under mild conditions (see Sect. 7) \(\mathcal {P}:L^2(X)\rightarrow L^2(X)\) is compact and has a unique fixed point h, which can be normalised as \(\int _X h(x)\ dx=1\) to form an invariant density of the annealed stochastic dynamics. We refer the reader to Sect. 10.5 [26] for further background on this type of stochastic dynamics. One can ask how to alter the stochastic kernel q, which governs the stochastically perturbed dynamical system, to achieve maximal linear responses.

The first question we consider is “how should the new stochastic process be changed in order to produce the greatest linear response to the \(L^2\) norm of h?”. Given a small change in the kernel q we obtain a new invariant measure \(\mu '\). Denote \(\delta \mu =\mu '-\mu \) and \(\delta h\) the density of \(\delta \mu \) with respect to Lebesgue; we wish to select q so as to provoke the greatest change \(\delta h\) in an \(L^2\) sense. One motivation for this question is to determine the maximal sensitivity for all normalised observables \(c\in L^2(X)\). One has \(|\mathbb {E}_{\delta \mu }(c)|\le \Vert c\Vert _{L^2}\cdot \Vert \delta h\Vert _{L^2}\) and thus \(\sup _{\Vert c\Vert _{L^2}\le 1} |\mathbb {E}_{\delta \mu }(c)|\le \Vert \delta h\Vert _{L^2}\). In certain situations, if the density h is important in an energy sense, then the \(L^2\) norm of the response is important from an energy point of view. In a recent article [17] consider expanding maps of the interval and determine the perturbation of least (Sobolev-type) norm which produces a given linear response; see [24] for further work on this problem. Here we study the question of finding the perturbation of \(L^2\)-unit size that produces the linear response of greatest \(L^2\) norm.

Second, we consider the problem of maximising linear response of a specific observable \(c:X\rightarrow \mathbb {R}\) to a change in the stochastic perturbations. Given a small change in the kernel q we obtain a new invariant measure \(\mu '\), and we compare \(\mathbb {E}_\mu (c)\) with \(\mathbb {E}_{\mu '}(c)\). How should the stochastic process be changed in order that the expectation \(\mathbb {E}_\mu (c)\) increases at the greatest rate? Put another way, which is the most “c-sensitive direction” in the space of stochastic perturbations?

Third, we ask which perturbation of the kernel q produces the greatest change in the rate of convergence to the equilibrium measure of the stochastic process. This rate of convergence is determined by the magnitude of the second eigenvalue \(\lambda _2\) of the transfer operator \(\mathcal {P}\) and we determine the perturbation that pushes the eigenvalue farthest from the unit circle. Related perturbative approaches include [15], where the mixing rate of (possibly periodically driven) fluid flows was increased by perturbing the advective part of the dynamics and solving a linear program; [16], where similar kernel perturbation ideas were used to drive a nonequilibrium density toward equilibrium by solving a convex quadratic program with linear constraints; and [20] where a governing flow is perturbed deterministically so as to evolve a specified initial density into a specified final density over a fixed time duration, with the perturbation determined as the numerical solution of a convex optimisation problem. In the current setting, our perturbation acts on the stochastic part of the dynamics and we can find a solution in closed form after some preliminary computations.

An outline of the paper is as follows. In Sect. 2 we set up the fundamentals of linear response in finite dimensions. Section 3 tackles the problem of finding the perturbation that maximises the linear response of the equilibrium measure in an \(\ell _2\) sense. We first treat the easier case where the transition matrix for the Markov chain is positive, before moving to the situation of a general irreducible aperiodic Markov chain. In both cases we provide sufficient conditions for a unique optimum, and present explicit algorithms, including "MATLAB" code to carry out the necessary computations. We illustrate these algorithms with a simple analytic example. Section 4 solves the problem of maximising the linear response of the expectation with respect to a particular observable, while Sect. 5 demonstrates how to find the perturbation that maximises the linear response of the rate of convergence to equilibrium. In both of these sections, we provide sufficient conditions for a unique optimum, present explicit algorithms, code, and treat an analytic example. Section 6 considers the linear response problems for a finite sequence of (in general different) stochastic transition matrices. Section 7 applies the theory of Sect. 3–5 to stochastically perturbed one-dimensional chaotic maps. We develop a numerical scheme to produce finite-rank approximations of the transfer (Perron-Frobenius) operators corresponding to the stochastically perturbed maps. These finite-rank approximations have a stochastic matrix representation, allowing the preceding theory to be applied.

2 Notation and Setting

We follow the notation and initial setup of [29]. Consider a column stochastic transition matrix \(M=(M_{ij})\in \mathbb {R}^{n\times n}\) of a mixing Markov chain on a finite state space \(\{1,\dots ,n\}\). More precisely, we assume that M satisfies:

-

1.

\(0\le M_{ij}\le 1\) for every \(i,j \in \{1,\dots ,n \}\);

-

2.

\(\sum _{i=1}^n M_{ij} = 1\) for every \(j\in \{1,\dots ,n\}\);

-

3.

there exists \(N \in \mathbb {N}\) such that \(M^N_{ij}>0\) for every \(i,j \in \{1,\dots ,n \}\).

Let \(\mathbf{h }_M= (h_1,\dots ,h_n)^\top \in \mathbb {R}^n\) denote the invariant probability vector of M, i.e. the probability vector such that \(M\mathbf{h }_M = \mathbf{h }_M\). We note that the existence and the uniqueness of \(\mathbf{h }_M\) follow from the above assumptions on M. Moreover, let us consider perturbations of M of the form \(M+\varepsilon m\), where \(\varepsilon \in \mathbb {R}\) and \(m \in \mathbb {R}^{n\times n}\). In order to ensure that \(M+\varepsilon m\) is also a column stochastic matrix, we need to impose some conditions on m and \(\varepsilon \). For a fixed \(m=(m_{ij})\in \mathbb {R}^{n\times n}\), we require that

Furthermore, we assume that \(\varepsilon \in [\varepsilon _-,\varepsilon _+]\) and \(\varepsilon _-<\varepsilon _+\), where

and

Let us denote the invariant probability vector of the perturbed transition matrix \(M+\varepsilon m\) by \(\mathbf{h }_{M+\varepsilon m}\). We remark that by decreasing \(\left[ \varepsilon _-, \varepsilon _+\right] \) we can ensure that the invariant probability vector \(\mathbf{h }_{M+\varepsilon m}\) remains unique. If we write

where \(\varepsilon \in \mathbb {R}\) is close to 0, then \(\mathbf{u }_1\) is defined as the linear response of the invariant probability vector \(\mathbf{h }_M\) to the perturbation \(\varepsilon m\).

By summing the entries of both sides of (3) and comparing \(\varepsilon \) orders, we must have that the column sum of the vector \(\mathbf{u }_1\) is zero. On the other hand, since \(\mathbf{h }_{M+\varepsilon m}\) is an invariant probability vector of \(M+\varepsilon m\), we have that

By expanding the left-hand side of (4), we obtain that

Hence, it follows from (3) and (4) that the linear response \(\mathbf{u }_1\) satisfies equations

and

where \(\mathbf{1 }^\top = (1,\dots ,1)\in \mathbb {R}^n\). By Theorem 2 [23] the linear system (5)–(6) has the unique solution given by

where

is the fundamental matrix of M.

We note that (7) is a standard linear response formula, holding in more general settings, such as where M is replaced by a transfer operator with a spectral gap (see [3] and [18]). In the rest of the paper, we will denote \(\mathbf{h }_M\) simply by \(\mathbf{h }\).

3 Maximizing the Euclidean Norm of the Linear Response of the Invariant Measure

Our aim in this section is to find the perturbation m that will maximise the Euclidean norm of the linear response. We will start by considering the case when M has all positive entries and later we will deal with the general case when \(M\in \mathbb {R}^{n\times n}\) is the transition matrix of an arbitrary mixing Markov chain.

3.1 The Kronecker Product

In this subsection, we will briefly introduce the Kronecker product and some of its basic properties. These results will be used to convert some of our optimization problems into simpler, smaller, and more numerically stable forms.

Definition 1

Let \(A = (\mathbf a _1|\dots |\mathbf a _n) = (a_{ij})\) be an \(m\times n\) matrix and B a \(p\times q\) matrix. The \(mp\times nq\) matrix given by

is called the Kronecker product of A and B and is denoted by \(A\otimes B\). Furthermore, the vectorization of A is given by the vector

The following result collects some basic properties of the Kronecker product.

Proposition 1

([27]) Let A, B, C, D be \(m\times n\), \(p\times q\), \(n\times n\) and \(q\times q\) matrices respectively, and let \(\alpha \in \mathbb {R}\). Then, the following identities hold:

-

(i)

\((A\otimes B)(C\otimes D) = AC\otimes BD\);

-

(ii)

\(\alpha A = \alpha \otimes A = A\otimes \alpha \);

-

(iii)

\((A\otimes B)^\top = A^\top \otimes B^\top \), where \(A^\top \) denotes the transpose of A;

-

(iv)

\({{\mathrm{Rank}}}(A\otimes B) = ({{\mathrm{Rank}}}(A))\cdot ({{\mathrm{Rank}}}(B))\);

-

(v)

let \(\lambda _1,\dots ,\lambda _n\) be the eigenvalues of C and \(\mu _1,\dots ,\mu _q\) be the eigenvalues of D. Then, the nq eigenvalues of \(C\otimes D\) are given by \(\lambda _i\mu _j\), for \(i=1,\dots ,n\) and \(j=1,\dots ,q\). Moreover, if \(\mathbf{x }_1\dots ,\mathbf x _n\) are linearly independent right eigenvectors of C corresponding to \(\lambda _1,\dots ,\lambda _n\) and \(\mathbf y _1\dots ,\mathbf y _q\) are linearly independent right eigenvectors of D corresponding to \(\mu _1,\dots ,\mu _q\), then \(\mathbf{x }_i\otimes \mathbf y _j\) are linearly independent right eigenvectors of \(C\otimes D\) corresponding to \(\lambda _i\mu _j\);

-

(vi)

for any \(n\times p\) matrix E, we have \(\widehat{AEB} = (B^\top \otimes A)\widehat{E}.\)

3.2 An Alternative Formula for the Linear Response of the Invariant Measure

As a first application of the Kronecker product, we give an alternative formula for the linear response (7). Using Proposition 1(vi) and noting that \(Qm\mathbf{h }\) is an \(n\times 1\) vector, we can write

where \(W = \mathbf{h }^\top \otimes Q\). The dimension of W is \(n\times n^2\). We now have two equivalent formulas for the linear response: (7) in terms of the matrix m and (9) in terms of the vectorization \(\widehat{m}\). In Sects. 3.3 and 3.4 of the paper, the formula (9) will be predominately used.

3.3 Positive Transition Matrix M

We first suppose that the transition matrix is positive, i.e. \(M_{ij}>0\) for every \(i, j\in \{1, \ldots , n\}\). In some situations, positivity is a strong assumption; in Sect. 3.4 we handle general (aperiodic and irreducible) stochastic M. We will find the perturbation m that maximises the Euclidean norm of the linear response. More precisely, we consider the following optimization problem:

where \(\Vert \cdot \Vert _2\) is the Euclidean norm and \(\Vert \cdot \Vert _F\) is the Frobenius norm defined by \(\Vert A\Vert ^2_F = \sum _i\sum _j |a_{ij}|^2\), for \(A=(a_{ij})\). We note that the constraint (11) corresponds to the condition (2), while (12) is imposed to ensure the existence (finiteness) of the solution. Furthermore, we observe that a solution to the above optimization problem exists since we are maximising a continuous function on a compact subset of \(\mathbb {R}^{n\times n}\).

3.3.1 Reformulating the Problem (10)–(12) in Vectorized Form

We begin by reformulating the problem (10)–(12) in order to obtain an equivalent optimization problem over a space of vectors as opposed to a space of matrices. Using (9), we can write the objective function in (10) as \(\Vert W{\widehat{m}}\Vert _2^2\). Similarly, we can rewrite the constraint (11) in terms of \({\widehat{m}}\). More precisely, we have the following auxiliary result. Let \(\text {Id}_n\) denote an identity matrix of dimension n.

Lemma 1

The constraint (11) can be written in the form \(A\widehat{m}=\mathbf {0}\), where A is an \(n\times n^2\) matrix given by

Proof

We have that \(\mathbf{1 }^\top m\) is a \(1\times n\) vector and thus \(\widehat{{\mathbf{1 }}^\top m} =m ^\top \mathbf{1 }\). Furthermore, using Proposition 1(vi) we have that \(m^\top \mathbf{1 } = \widehat{{\mathbf{1 }}^\top m} = \widehat{{\mathbf{1 }}^\top m \text {Id}_n} = \left( \text {Id}_n\otimes \mathbf{1 }^\top \right) \widehat{m} = A\widehat{m}.\) \(\square \)

We also observe that \(\Vert m\Vert _F^2 = \sum _i\sum _j |m_{ij}|^2 = \Vert \widehat{m}\Vert _2^2.\) Consequently, we can rewrite constraint (12) in terms of the Euclidean norm of the vector \(\widehat{m}\). Let A be as in (13). Our optimization problem (10)–(12) is therefore equivalent to the following:

3.3.2 Reformulating the Problem (14)–(16) to Remove Constraint (15)

Finally, we reformulate the problem (14)–(16) to solve it as an eigenvalue problem. Consider the subspace V of \(\mathbb {R}^{n^2}\) given by

We can write V as \(V = \text {span}\{\mathbf{v }_1,\dots ,\mathbf{v }_{\ell }\}\), where \(\mathbf{v }_k \in {\mathbb {R}}^{n^2}\), \(k\in \{1,\ldots ,\ell \}\) form a basis of V. Note that \(\ell = n^2-n\). Indeed, it follows from Proposition 1(iv) and (13) that \({{\mathrm{Rank}}}(A) = {{\mathrm{Rank}}}(\text {Id}_n){{\mathrm{Rank}}}({\mathbf{1 }}^\top ) = n\), and thus by the rank-nullity theorem we have that \(\ell =n^2-n\).

Taking \(\widehat{m} \in V\) and writing

we conclude that there exists a unique \(\varvec{\alpha }\in \mathbb {R}^{\ell }\) such that \({\widehat{m}} = E\varvec{\alpha }\). Hence, \({\varvec{\alpha }} = E^+{\widehat{m}}\), where \(E^+\) denotes the left inverse of E given by \(E^+{:}= (E^\top E)^{-1}E^\top \). Note that since E has full rank, we have that \(E^\top E\) is non-singular (see p.43, [7]) and therefore \(E^+\) is well-defined. Using the above identities, we obtain

Let

Since the only assumption on \({\widehat{m}}\) was that \({\widehat{m}}\in V\), the problem (14)–(16) is equivalent to the following:

The solution \(\widehat{m}^*\) to the problem (21)–(22) is the \(\Vert \cdot \Vert _2\)-normalised eigenvector corresponding to the largest eigenvalue of the \(\ell \times \ell \) matrix \(U^\top U\) (see p.281, [31]).

In the particular case when \(\{ \mathbf v _1,\ldots ,\mathbf v _{\ell }\}\) is an orthonormal basis of V, we have that \(E^\top E = \text {Id}_\ell \) and therefore \(\Vert \widehat{m}\Vert _2^2 = \varvec{\alpha }^\top E^\top E\varvec{\alpha } = \varvec{\alpha }^\top \varvec{\alpha } = \Vert \varvec{\alpha }\Vert _2^2.\) Using (19), we conclude that the optimization problem (21)–(22) further simplifies to

where

The solution \(\varvec{\alpha }^*\) to (23)–(24) is the eigenvector corresponding to the largest eigenvalue of \(\widetilde{U}^\top \widetilde{U}\). Finally, we note that the relationship between solutions of (21)–(22) and (23)–(24) is given by

3.3.3 An Optimal Solution and Optimal Objective Value

For positive M, we can now derive an explicit expression for E and thus obtain an explicit form for the solution of the optimization problem (10)–(12). We will do this by considering the reformulation (23)–(24) of our original problem (10)–(12). Let \(V_0\) denote the null space of \(\mathbf{1 }^\top \). An orthonormal basis for \(V_0\) is the set \(\{\mathbf{x }_1, \ldots , \mathbf{x }_{n-1}\}\), where

and

Let B be an \(n\times (n-1)\) matrix given by

Therefore, we can take

in (18). Using Proposition 1(i), (9) and (25), we have \(\widetilde{U}=WE = \mathbf{h }^\top \otimes QB\). Hence, it follows from Proposition 1(i) and (iii) that \(\widetilde{U}^\top \widetilde{U} = \mathbf{h }\mathbf{h }^\top \otimes B^\top Q^\top QB.\) By Proposition 1(v), the eigenvector corresponding to the largest eigenvalue \(\lambda \) of \(\widetilde{U}^\top \widetilde{U}\) is given by \(\varvec{\alpha }^* = \mathbf{h }\otimes \mathbf y \), where \(\mathbf y \) is the eigenvector corresponding to the largest eigenvalue (which we denote by \(\kappa \)) of an \((n-1)\times (n-1)\) matrix \(B^\top Q^\top Q B\). From Proposition 1(v), \(\lambda =\kappa \Vert \mathbf{h }\Vert _2^2\) is the eigenvalue corresponding to \(\varvec{\alpha }^*\). Hence, it follows from (26) and (30) that an optimal perturbation is

Note that this expression for \(\widehat{m}^*\) is an improvement over computing an eigenvector of the \((n^2-n)\times (n^2-n)\) matrix \(\widetilde{U}^\top \widetilde{U}\) because we only need to find \(\mathbf y \), which is an eigenvector of an \((n-1)\times (n-1)\) matrix.

Taking into account (22), we must have \(\Vert \widehat{m}^* \Vert _2^2 =1\) and thus

as \(B^\top B=\text {Id}_{n-1}\) (columns of B form an orthonormal basis of \(V_0\)). So, \(\mathbf y \) must satisfy

Finally, using Proposition 1(ii), (9) and (31), we obtain

and therefore the optimal objective value is

We impose the normalization condition (32) for \(\mathbf y \) throughout the paper when dealing with positive M. Note that replacing \(\widehat{m}^*\) with \(-\widehat{m}^*\) in (33) yields the same Euclidean norm of the response.

The issue of dependency of optimal solutions \(\widehat{m}^*\) and optimal objective values \(\lambda \) on the selected set of orthonormal columns for B will be treated in full generality in Proposition 4. There we show the optimal objective value is independent of the orthonormal basis vectors forming the columns of B (or alternatively the columns of E), and provide a sufficient condition for an optimal \(m^*\) to be independent of this choice (up to sign).

3.4 General Transition Matrix M for Mixing Markov Chains

In the general setting, when M is a transition matrix of an arbitrary mixing Markov chain, we consider the following optimization problem:

The (complicating) constraint (37) models the natural situation of probabilistic fluctuations occurring only where nonzero probabilities already exist. We note that the solution to the optimization problem (34)–(37) exists since we are again maximising a continuous function on a compact subset of \(\mathbb {R}^{n\times n}\).

3.4.1 Reformulating the Problem (34)–(37) in Vectorized Form

As in the positive M case, we want to find a matrix A so that the constraints (35) and (37) can be written in terms of \(\widehat{m}\) in the linear form (15). Let \(\mathcal {M}{:} = \{ i: \widehat{M}_i \in \{0, 1\}\}\,{=}\,\{\gamma _1,\dots ,\gamma _j\}\subset \{1,2,\dots ,n^2\}\), where \(\widehat{M}\) denotes the vectorization of M. Proceeding as in the proof of Lemma 1, it is easy to verify that constraints (35) and (37) can be written in the form (15), where A is a \(k\times n^2\) matrix (\(k\ge n\)) given by

where the \(\mathbf e _i\)s in (38) are the i-th standard basis vectors in \(\mathbb {R}^{n^2}\). As in the positive M case, the term \(\text {Id}_n\otimes \mathbf{1 }^\top \) in (38) corresponds to the constraint (35), while all other entries of A are related to constraints (37). We conclude that we can reformulate the optimization problem (34)–(37) in the form (14)–(16) with A given by (38).

3.4.2 Explicit Construction of an Orthonormal Basis of the Null Space of the Matrix A in (38)

Proceeding as in the positive M case, we want to simplify the optimization problem (14)–(16) by constructing a matrix E as in (18), whose columns form an orthonormal basis for the null space of A. We first note that E is an \(n^2\times \ell \) matrix, where \(\ell \) is the nullity of A. Let us begin by computing \(\ell \) explicitly.

Lemma 2

The nullity of the matrix A in (38) is \(n^2 - (n+n_1)\), where n is the dimension of the square matrix M and \(n_1\) is the number of zero entries in M.

Proof

Let \(Y = \{\mathbf{v }=(v_1, \ldots , v_n)\in \mathbb {R}^n:v_i = 1\text { for some }1\le i\le n\}.\) Assume first that M doesn’t contain any columns that belong to Y and consider \(\mathbf{M }_j\), the j-th column of M. Note that the j-th row of A is given by

On the other hand, for every zero in \(\mathbf{M }_j\), we have the following row in A

where 1 is in a position corresponding to the position of the zero entry in \(\mathbf{M }_j\). Since \(\mathbf{M }_j \notin Y\), we have that the number of rows of the form (40) in A is at most \(n-2\). Therefore, we obviously have that the set spanned by row (39) and rows (40) is linearly independent. Moreover, since all other rows of A have only zeros on places where vectors in (39) and (40) have nonzero entries and since j was arbitrary, we conclude that rows of A are linearly independent and that \({{\mathrm{Rank}}}(A)=n+n_1\). This immediately implies that the nullity of A is \(n^2 - (n+n_1)\).

The general case when M can have columns that belong to Y can be treated similarly. Indeed, it is sufficient to note that each \(\mathbf{M }_{j} \in Y\) will generate \(n+1\) rows in A (given again by (39) and (40)) but only form a subspace of dimension \(n=1+(n-1)\) and \(n-1\) is precisely the number of zero entries in \(\mathbf{M }_j\). \(\square \)

For A given by (38) written in the form

let V be defined as in (17). We aim to construct a matrix E as in (18) whose columns form an orthonormal basis for V. We first need to introduce some additional notation. For a matrix \(J\in \mathbb {R}^{p_1\times p_2}\) and a set \(L=\{l_1,\dots ,l_s\}\subset \{1, \ldots , p_1\}\), we define J[L] to be the matrix consisting of the rows \(l_1,\ldots ,l_s\) of J. We note that J[L] is an \(s\times p_2\) matrix.

Note that \(A_i\) in (41) can be written as

where \(R_i{:}= \{j: M_{ji} \in \{0, 1\} \}\) and for some \(i_c\in \{0,1,\dots , n^2\}\), \(c\in \{1, 2, 3\}\) such that \(\sum _{c=1}^3 i_c = k-|R_i|-1\); recall A has k rows (see (38)). It follows from (42) that the null space of \(A_i\) is the same as the null space of the matrix \(\widetilde{A}_i {:}=\left( \begin{array}{c} \mathbf{1 }_n^\top \\ \text {Id}_n[R_i] \end{array}\right) .\) Let \(r_i\in \{0, \ldots , n-1\}\) denote the number of zeros in the i-th column of M. It follows from the arguments in the proof of Lemma 2 that

In particular, when \(r_i=n-1\), the nullity of \(\widetilde{A}_i\) is zero.

The first step in constructing an explicit E is provided by the following result, where \(\text{ diag }(B_1,\dots ,B_n)\) denotes the block matrix with diagonal blocks \(B_1,\ldots ,B_n\).

Proposition 2

Define the matrix \(E = \text {diag}(B_1,\dots ,B_n)\), where \(B_i\) is the matrix whose columns form an orthonormal basis of the null space of \(A_i\) (if this null space is trivial, we omit the block \(B_i\)). The columns of E form an orthonormal basis for the null space of A.

Proof

We begin by showing that \(V=\text {null}(A)\subset \text {col}(E)\) (the column space of E). For \(\mathbf w \in V\), we write \(\mathbf w =(\mathbf w _1^\top ,\dots ,\mathbf w _n^\top )^\top ,\) where \(\mathbf w _i\in \mathbb {R}^n\) for \(1\le i \le n\). From (41) we have that \(A\mathbf w =\sum _{i=1}^nA_i\mathbf w _i\). Using (42), we have

As \(A\mathbf w =\mathbf 0 \), we conclude that \(A_i\mathbf w _i=\mathbf 0 \) for each \(i\in \{1,\dots ,n\}\). Thus each \(\mathbf w _i\) can be written as a linear combination of columns of \(B_i\) and therefore \(\mathbf w \) can be written as a linear combination of columns of E; thus \(V\subset \) col(E). The orthonormality of the columns of E follows from the orthonormality of the columns of \(B_i\). As \(B_i\) has full column rank, the number of columns of E equals the sum of the rank of the \(B_i\)s. The number of columns of E can be computed as \(\sum _{i=1}^n\)Rank(\(B_i\)) = \(\sum _{i=1}^n\)Nullity(\(A_i\)) = \(\sum _{i=1}^n\) \(n-\)Rank(\(A_i\)) = \(n^2-(n+n_1)\)= Nullity(A), where the second last equality follows from (43) and the fact that \(n_1 = \sum _{i=1}^n r_i\), and the last equality follows from Lemma 2. Thus, the columns of E form a basis for the null space of A. \(\square \)

The final step is to construct the matrices \(B_i\), \(1\le i \le n\), explicitly.

Proposition 3

Assume that \(r_i<n-1\) and let \(\widetilde{B}_i = (\mathbf{x }_1|\dots |\mathbf{x }_{(n-1)-r_i})\in \mathbb {R}^{(n-r_i) \times ((n-1)-r_i)},\) where \(\mathbf{x }_i\) form the orthonormal basis of the null space of \(\mathbf{1 }^\top _{n-r_i}\) having the form (27). Furthermore, let \(B_i\in \mathbb {R}^{n \times ((n-1)-r_i)}\) be a matrix defined by the conditions:

The columns of \(B_i\) form an orthonormal basis for the null space of \(A_i\).

Proof

As the null spaces of matrices \(A_i\) and \(\widetilde{A}_i\) coincide, it is sufficient to show that columns of \(B_i\) form an orthonormal basis for the null space of \(\widetilde{A}_i\). We first note that the orthonormality of \(\mathbf{x }_1, \ldots ,\mathbf{x }_{n-1-r_i}\) in \(\mathbb {R}^{n-r_i}\) directly implies that the columns of \(B_i\) form an orthonormal set in \(\mathbb {R}^n\), since the j-th column of \(B_i\) is built from \(\mathbf{x }_j\) by adding zeroes on appropriate places that are independent of j. Furthermore, since \(\mathbf{x }_1, \ldots , \mathbf{x }_{n-1-r_i}\) are in the null space of \(\mathbf{1 }^\top _{n-r_i}\), we have that the columns of \(B_i\) belong to the null space of \(\mathbf{1 }^\top _{n}\). Moreover, it follows from the first equality in (44) that columns of \(B_i\) are also orthogonal to all other rows of \(\widetilde{A}_i\). Consequently, we conclude all columns of \(B_i\) lie in the null space of \(\widetilde{A}_i\). Finally, by (43) we have that the nullity of \(\widetilde{A}_i\) is \(n-r_i-1\) which is the same as the number of columns of \(B_i\) and therefore columns of \(B_i\) span the null space of \(\widetilde{A}_i\). \(\square \)

Using Propositions 2 and 3, and exploiting block structure we can arrive at a computationally convenient form of \(\widetilde{U}=WE\): First, noting that \(\mathbf{h }^\top \otimes Q = (1\otimes Q)(\mathbf{h }^\top \otimes \text {Id}_n) = Q(\mathbf{h }^\top \otimes \text {Id}_n)\), using Proposition 1(i) and (ii), respectively, we have

using (9) for the second equality and Proposition 2 for the third equality.

3.4.3 Solution to the Problem (34)–(37)

Now that we have constructed an appropriate E (Proposition 2 gives the form of E and Proposition 3 provides the specific components of E), we can reformulate our problem (14)–(16) (with the matrix A in (38)), to obtain the optimization problem (23)–(24) with \(\widetilde{U}\) as in (45). A vectorized solution to (34)-(37) is given by \(\widehat{m}^*\) as in (26), where \(\varvec{\alpha }^*\) again denotes the eigenvector corresponding to the largest eigenvalue, \(\lambda \), of the matrix \(\widetilde{U}^\top \widetilde{U}\). As for the positive M case, we have that both \(m^*\) and \(-m^*\) yield the same Euclidean norm of the response (34). Finally, the optimal value may be calculated as \(\Vert Qm^*\mathbf{h }\Vert _2^2 = \Vert W\widehat{m}^*\Vert _2^2=\Vert WE\varvec{\alpha }^*\Vert _2^2=\Vert \widetilde{U}\varvec{\alpha }^*\Vert _2^2 = \lambda \varvec{\alpha }^{*\top }\varvec{\alpha }^*=\lambda \), where the first three equalities follow by (9), (26) and (25), respectively.

3.4.4 A Sufficient Condition for a Unique Optimal Solution and Independence of the Choice of Basis of the Null Space of A

The following result provides an easily checkable sufficient condition (simplicity of the leading eigenvalue of \(\widetilde{U}^\top \widetilde{U}\)) for the uniqueness of the solution \(m^*\) (up to sign) to the problems (10)–(12) and (34)–(37). Under this condition, the specific choice of basis for the null space of the constraint matrix A is unimportant, and the \(m^*\) computed in Algorithms 1 and 2 in Sect. 3.5 is independent of this basis choice. Recall that \(W=\mathbf{h }^\top \otimes Q\) and A is the matrix of equality constraints (i.e. \(A\widehat{m}^* = \mathbf 0 \)).

Proposition 4

Consider two distinct orthonormal bases for the null space of A and construct matrices \(E_1\ne E_2\) from these bases as in (18).

-

1.

The matrices \(\widetilde{U}_i^\top \widetilde{U}_i\) (for \(\widetilde{U}_i = WE_i\)), \(i=1,2\) are similar.

-

2.

If the largest eigenvalue \(\lambda _1\) of \(\widetilde{U}_1^\top \widetilde{U}_1\) is simple, let \(\varvec{\alpha }_i^*\) denote the eigenvector of \(\widetilde{U}_i^\top \widetilde{U}_i\) corresponding to \(\lambda _1\), normalised so that \(\Vert \varvec{\alpha }_i^*\Vert _2=1\), \(i=1,2\). One has \(\widehat{m}^*_1\) equals \(\widehat{m}^*_2\), up to sign, when computed with (26).

Proof

As the columns of \(E_1\) and the columns of \(E_2\) span the same space, there exists some matrix \(R\in \mathbb {R}^{\ell \times \ell }\) such that \(E_2=E_1R\). Noting that \(E_i^\top E_i =\) Id\(_{\ell }\), \(i=1,2\), we have that Id\(_{\ell } = E_2^\top E_2 = R^\top E_1^\top E_1 R = R^\top R\); using the fact that R is square, we also have that \(R^\top = R^{-1}\) and hence R is orthogonal. As \(\widetilde{U}_1^\top \widetilde{U}_1 = E_1^\top W^\top W E_1\) and \(\widetilde{U}_2^\top \widetilde{U}_2 = R^{-1} E_1^\top W^\top W E_1 R\), the matrices \(\widetilde{U}_1^\top \widetilde{U}_1\) and \(\widetilde{U}_2^\top \widetilde{U}_2\) are similar. Using \(\varvec{\alpha }^*_1=\pm R \varvec{\alpha }^*_2\) we obtain \(\widehat{m}^*_1 = E_1 \varvec{\alpha }^*_1 = \pm E_1 R \varvec{\alpha }^*_2 = \pm E_2 \varvec{\alpha }^*_2 = \pm \widehat{m}^{*}_2.\) \(\square \)

3.5 Computations

In this section, we will discuss computational aspects of the content presented so far. We provide separate algorithms for positive M and general stochastic (mixing) M: Algorithms 1 and 2 respectively.

3.5.1 Algorithms for Solving (10)–(12) and (34)–(37)

We first present the algorithm for finding the solution \(m^*\) of the problem (10)–(12).

Next, we state the algorithm for solving (34)–(37).

3.6 Analytic Example

We now explicitly construct the solution for the problem (34)-(37) when \(M\in \mathbb {R}^{2\times 2}\). Since M is column stochastic and the columns of m sum to zero, we can write

and

We first note that without any loss of generality, we can assume that M is positive. Indeed, if \(M_{11} = 0\) then by (36) and (37), we have that \(m_{11}=0\) and \(m_{22}=\pm \frac{1}{\sqrt{2}}\). Similarly, if \(M_{22} = 0\) then \(m_{22}=0\) and \(m_{11}=\pm \frac{1}{\sqrt{2}}\). Furthermore, we note that \(M_{11} \ne 1\) and \(M_{22} \ne 1\) since otherwise M would not be a transition matrix of an ergodic Markov chain. One may calculate that

and

We see from (47) that the greatest \(\ell ^2\) response of the invariant probability vector \(\mathbf{h }=(M_{12},M_{21})/(M_{12}+M_{21})\) is achieved by increasing whichever of \(M_{12}\) or \(M_{21}\) is greatest. Furthermore, as expected \(\mathbf{h }\) is most sensitive when M is near diagonal. The minimum value of \(\Vert \mathbf{u }_1\Vert _2^2\) occurs when \(M_{12}=M_{21}=1\) (value of 1 / 8) and increases with decreasing values of \(M_{12}\) and \(M_{21}\). There is a singularity at \(M_{12}=M_{21}=0\) when the second eigenvalue merges with the eigenvalue 1; see Fig. 1.

Contour plot of \(\log _e(\Vert \mathbf {u}_1\Vert _2^2=\log _e((M_{12}^2+M_{21}^2)/(M_{12}+M_{21})^4))\) from Sect. 3.6

4 Maximising the Linear Response of the Expectation of an Observable

In this section, we consider maximizing the linear response of the expected value of a cost vector \(\mathbf c \) with respect to the invariant probability vector \(\mathbf{h }\). The computations developed in this section will be used in Sect. 7 to solve a discrete version of the problem of maximizing the linear response of an observable with respect to the invariant measure of a stochastically perturbed dynamical system.

We recall that the linear response to the invariant probability vector \(\mathbf{h }\) of an irreducible, aperiodic transition matrix M, under a perturbation matrix m, is denoted by \(\mathbf{u }_1\). We wish to select a perturbation matrix m so that we maximise \(\mathbf c ^T\mathbf{u }_1\). For \(\mathbf c \in \mathbb {R}^n\), using (7), we consider the following problem:

where \(N = \{(i,j)\in \{1,\ldots ,n\}^2: M_{ij}= 0 \text { or } 1\}\). Note that as \(m_{ij}\) takes the value 0 for all \((i,j)\in N\), we just need to determine \(m_{ij}\) for \((i,j)\not \in N\).

We employ Lagrange multipliers (see e.g. Sects. 12.3–12.5 [32]). Consider the Lagrangian function

where \(\mathbf w ^\top = \mathbf c ^\top Q \in \mathbb {R}^n\) and \(\varvec{\varrho }\in \mathbb {R}^n, \nu \in \mathbb {R}\) are the Lagrange multipliers. Differentiating (52) with respect to \(m_{ij}\), we obtain

Using the first-order optimality (KKT) conditions from the method of Lagrange multipliers (e.g. Theorem 12.1 [32]) we require

\(\Vert m\Vert _F=1\), and a regularity condition (LICQ).Footnote 1 Equation (53) yields \(\varrho _j = -2\nu m_{ij} +w_ih_j\) for \((i,j)\not \in N\). Using (54), we calculate

where \(N_j^c = \{i: (i,j)\not \in N\}\). Thus, substituting \(\varrho _j=(h_j/|N_j^c|)\sum _{l:(l,j)\not \in N}w_l\) we obtain

We now scale \(\nu \) to ensure \(\Vert m^*\Vert _F=1\). The matrix \(m^*\) satisfies the first-order equality constraints (49)–(51) and \(\frac{\partial L}{\partial m_{ij}}(m^*,\varvec{\varrho },\nu )=0\) for \((i,j)\not \in N\). Finally, we determine the sign of \(\nu \) by checking the standard sufficient second order conditions for \(m_{ij}^*\) to be a maximiser (namely (57) below); see e.g. Theorem 12.6 [32]. We compute

thus, the Hessian matrix of the Lagrangian function is \(H(m^*,\varvec{\varrho },\nu ) = -2\nu \) Id\(_{n^2-|N|}\). If \(\nu >0\) one has

for any \(\mathbf{s }\in \mathbb {R}^{n^2-|N|}\setminus \{\mathbf{0 }\}\) (indeed for any \(\mathbf{s }\in \mathbb {R}^{n^2}\setminus \{\mathbf{0 }\}\)).

4.1 Algorithm for Solving Problem (48)–(51)

We can solve problem (48)–(51) using the following algorithm.

Remark 1

When M is large, but sparse, to avoid creating the full matrix \(\mathbf{h }\mathbf{1 }^\top \) in Step 2 above, one can replace Step 2 with: Solve the following (sparse) linear system for \(\mathbf w \)

4.2 Analytic Example

Suppose that \(M\in \mathbb {R}^{2\times 2}\) and we would like to solve (48)–(51) for \(\mathbf c \in \mathbb {R}^2\), \(\mathbf c \ne a\mathbf{1 }\), where \(a\in \mathbb {R}\). As in the example in Sect. 3.6, we only need to consider the case when M is positive. Let \(\mathbf w =Q^\top \mathbf c \); one may calculate that

and

5 Maximising the Linear Response of the Rate of Convergence to Equilibrium

In this section, we consider maximizing the linear response of the rate of convergence of the Markov chain to its equilibrium measure. We achieve this by maximizing the linearised change in the magnitude of the (assumed simple) second eigenvalue \(\lambda _2\) of the stochastic matrix M. The computations in this section will be applied in Sect. 7 to solve a discrete version of the problem of maximizing the linear response of the rate of convergence to equilibrium for some stochastically perturbed dynamical system. A related perturbative approach [15] increases the mixing rate of (possibly periodically driven) fluid flows by perturbing the advective part of the dynamics and solving a linear program to increase the spectral gap of the generator (infinitesimal operator) of the flow. In [16] kernel perturbations related to those used in Sect. 7 were optimised to drive a nonequilibrium density toward equilibrium by solving a convex quadratic program with linear constraints.

Because M is irreducible and aperiodic, \(\lambda _1=1\) is the only eigenvalue on the unit circle. Let \(\lambda _2\in \mathbb {C}\) be the eigenvalue of M strictly inside the unit circle with largest magnitude, and assume that \(\lambda _2\) is simple. Denote by \(\mathbf l _2\in \mathbb {C}^n\) and \(\mathbf r _2\in \mathbb {C}^n\) the left and right eigenvectors of M corresponding to \(\lambda _2\). We assume that we have the normalisations \(\mathbf r _2^*\mathbf r _2=1\) and \(\mathbf l _2^*\mathbf r _2=1\). Considering the small perturbation of M to \(M+\varepsilon m\), by standard arguments (e.g. Theorem 6.3.12 [22]), one has

where \(\lambda _2(\varepsilon )\) is the second largest eigenvalue of \(M+\varepsilon m\). We wish to achieve a maximal decrease in the magnitude of \(\lambda _2\), or equivalently a maximal decrease in the real part of the logarithm of \(\lambda _2\). Denote by \(\mathfrak {R}(\cdot )\) and \(\mathfrak {I}(\cdot )\) the real and imaginary parts, respectively. Now \(d(\mathfrak {R}(\log \lambda _2(\varepsilon )))/d\varepsilon = \mathfrak {R}(d\log (\lambda _2(\varepsilon ))/d\varepsilon )= \mathfrak {R}((d\lambda _2(\varepsilon )/d\varepsilon )/\lambda _2(\varepsilon ))\), which, using (61) becomes

Similarly to Sect. 4 we now have the optimisation problem:

where \(N = \{(i,j)\in \{1,\ldots ,n\}^2: M_{ij}= 0 \text { or } 1\}\). Note that as \(m_{ij}\) takes the value 0 for all \((i,j)\in N\), we just need to solve (63)–(65) for \((i,j)\not \in N\).

Applying Lagrange multipliers, we proceed as in Sect. 4, with the only change being to replace the expression (53) with

where

Following the steps in Sect. 4 we obtain

where \((i,j)\not \in N\) and \(N_j^c = \{i: (i,j)\not \in N\}\). Note that because we are minimising (as opposed to maximising in Sect. 4) we select \(\nu <0\), scaled so that \(\Vert m^*\Vert _F=1\).

5.1 Algorithm

The following algorithm can be used to compute the optimal perturbation \(m^*\) to maximise the linear response of the rate of convergence to equilibrium.

5.2 Analytic Example

Suppose that \(M\in \mathbb {R}^{2\times 2}\) and we would like to solve (63)–(66). As in Sect. 3.6 for \(M\in \mathbb {R}^{2\times 2}\), we only need to consider the case when M is positive. One may calculate that

and

The optimal choice of \(m^*\) depends only on whether M is diagonally dominant or not: if M is diagonally dominant, perturb away from diagonal dominance, and if M is not diagonally dominant, perturb toward diagonal dominance. The linear response of \(\lambda _2\) has a fixed magnitude of 1.

6 Optimizing Linear Response for a General Sequence of Matrices

In this section we extend the ideas of Sects. 3 and 4 to derive the linear response of the Euclidean norm of a probability vector \(\mathbf{h }\) and the expectation of an observable \(\mathbf c \), when acted on by a finite sequence of matrices. We will then introduce and solve an optimization problem that finds the sequence of perturbation matrices that achieve these maximal values.

6.1 Linear Response for the Probability Vector \(\mathbf{h }\)

Let \(M^{(0)},M^{(1)},\dots ,M^{(\tau -1)}\) be a fixed finite sequence of column stochastic matrices. Furthermore, let \(m^{(t)}\), \(t\in \{0,\ldots ,\tau -1\}\) be a sequence of perturbation matrices. Take an arbitrary probability vector \(\mathbf{h }^{(0)}\) and set

We now want to derive the formula for the linear response of \(\mathbf{h }^{(\tau )}\). We require that

where \(\varepsilon \in \mathbb {R}\). We refer to \(\mathbf{u }^{(t)}_1\) as the linear response at time t. By expanding the left-hand side of (72), we have

Denoting for simplicity \(\mathbf{u }^{(t)}_1\) by \(\mathbf{u }^{(t)}\), it follows from (72) and (73) that

Set \(\mathbf{u }^{(0)}=0\). Iterating (74), we obtain that

6.1.1 The Optimization Problem

It follows from Proposition 1(vi) that

where

and \(W=\left( W^{(0)}|W^{(1)}|\dots |W^{(\tau -1)}\right) \). Note that the \(W^{(t)}\)s are \(n\times n^2\) matrices, W is an \(n\times \tau n^2\) matrix and \(\widehat{m}\) is a \(\tau n^2\)-vector.

We consider the following optimization problem, which maximises the response of the Euclidean norm of the response \(\mathbf{u }^{(\tau )}\):

where \(A^{(t)}\) is the constraint matrix (38) associated to the matrix \(M^{(t)}\) and conditions (35) and (37).

6.1.2 Solution to the Optimization Problem

We want to reformulate the optimization problem with the constraints (77) removed. We first note that (77) can be replaced by \(A\widehat{m} =\mathbf 0 \), where

Let \(E^{(t)}\) be an \(n^2\times \ell ^{(t)}\) matrix whose columns form an orthonormal basis of the null space of \(A^{(t)}\) for \(t=0,\dots ,\tau -1\), where \(\ell ^{(t)}\) denotes the nullity of \(A^{(t)}\). Then, \(E = \text {diag}(E^{(0)},\dots ,E^{(\tau -1)})\) is a matrix whose columns form an orthonormal basis of the null space of the matrix A in (79). Thus, if \(\widehat{m}\) is an element of the null space of A then, \(\widehat{m} = E\varvec{\alpha }\) for a unique \(\varvec{\alpha }\in \mathbb {R}^{\sum _{t=0}^{\tau -1}\ell ^{(t)}}\). Finally, as \(\sum _{t=0}^{\tau -1}\Vert \widehat{m}^{(t)}\Vert _2^2 = \Vert \widehat{m}\Vert _2^2 = \Vert E\varvec{\alpha }\Vert _2^2 = \Vert \varvec{\alpha }\Vert _2^2\), we can reformulate the optimization problem (76)–(78) as:

where

Arguing as in Sect. 3.4.3, we conclude that \(\widehat{m}^* = E\varvec{\alpha }^*\) maximises the Euclidean norm of the linear response \(\mathbf{u }^{(\tau )}\), where \(\varvec{\alpha }^*\in \mathbb {R}^{\sum _{t=0}^{\tau -1}\ell ^{(t)}}\) is the eigenvector corresponding to the largest eigenvalue of \(U^\top U\) (with U as in (82)). Finally, if we denote \(\mathbf{h }^{(t+1)}(\varepsilon ) = \left( M^{(t)}+\varepsilon m^{(t),*}\right) \mathbf{h }^{(t)} \), we choose the sign of \(m^{(t),*}\) so that \(\Vert \mathbf{h }^{(t)}\Vert _2<\Vert \mathbf{h }^{(t)}(\varepsilon )\Vert _2\) for small \(\varepsilon >0\) and for each \(t\in \{1,\dots ,\tau \}\); this is possible as \(\mathbf{h }^{(t)}\) is independent of \(m^{(t)}\).

6.2 Linear Response for the Expectation of an Observable

In this section, we consider maximising the linear response of the expected value of an observable \(\mathbf c \) with respect to the probability vector \(\mathbf{h }^{(\tau )}\), when acted on by a finite sequence of matrices. More explicitly, we consider the following problem: For \(\mathbf c \in \mathbb {R}^n\)

where \(N^{(t)} = \{(i,j)\in \{1,\ldots ,n\}^2: M^{(t)}_{ij} = 0\text { or }1\}\).

Multiplying (75) on the left by \(\mathbf c ^\top \) we obtain \(\mathbf c ^\top \mathbf{u }^{(\tau )} = \sum _{t=0}^{\tau -1}{} \mathbf w ^{(t)\top }m^{(t)}\mathbf{h }^{(t)}\), where \(\mathbf w ^{(t)\top }=\mathbf c ^\top M^{(\tau -1)}\dots M^{(t+1)}\) for \(t\in \{0,\dots ,\tau -2\}\) and \(\mathbf w ^{(\tau -1)\top } = \mathbf c ^\top \). Note that as the values of \(m^{(t)}_{ij}=0\) for \((i,j)\in N^{(t)}\), we just need to solve (83)–(85) for \((i,j)\not \in N^{(t)}\).

As in Sect. 4, we solve this problem using the method of Lagrange multipliers. We begin by considering the following Lagrangian function:

where \(\varvec{\varrho }^{(t)}\in \mathbb {R}^n\) and \(\nu \in \mathbb {R}\) are the Lagrange multipliers. Differentiating (87) with respect to \(m^{(t)}_{ij}\), we obtain

where \(w^{(t)}_{i},h^{(t)}_{j},\varrho ^{(t)}_{j}\in \mathbb {R}\) are the elements of the n-vectors \(\mathbf w ^{(t)},\mathbf{h }^{(t)}\) and \( \varvec{\varrho }^{(t)}\) respectively.

Using the first order optimality (KKT) conditions, we require

(85), and a regularity condition, analogous to that treated in Appendix 1, which follows similarly. We note that \(\varrho ^{(t)}_{j}= w^{(t)}_{i}h^{(t)}_{j}-2\nu m^{(t)}_{ij}\). Using (88), we calculate

where \(N_j^{(t),c} = \{i: (i,j)\not \in N^{(t)}\}\). Thus, we obtain

where \((i,j)\not \in N^{(t)}\). We scale \(\nu \) to ensure \(\sum _{t=0}^{\tau -1}\Vert {m}^{(t),*}\Vert _F^2=1\); all first-order optimality conditions are now satisfied. As in section 4, we determine the sign of \(\nu \) by checking the standard sufficient second order conditions for \(m^{(t),*}\), for \(t\in \{0,\dots ,\tau -1\}\), to be a maximiser. We note that the matrices \(m^{(t),*}\) satisfy (84)–(86) and

We compute

If \(\nu >0\) then \(H(m^{(0),*},\dots ,m^{(\tau -1),*},{\varvec{\varrho }}^{(0)},\dots , {\varvec{\varrho }}^{(\tau -1)},\nu )\), the Hessian of the Lagrangian function, satisfies \(\mathbf{s }^\top H(m^{(0),*},\dots ,m^{(\tau -1),*},{\varvec{\varrho }}^{(0)}, \dots ,{\varvec{\varrho }}^{(\tau -1)},\nu )\mathbf{s }<0\) for any \(\mathbf{s }\in \mathbb {R}^{\tau n^2}\setminus \{\mathbf{0 }\}\). Thus, the sufficient second order conditions for a maximiser are satisfied.

7 Numerical Examples of Optimal Linear Response for Stochastically Perturbed Dynamical Systems

We apply the techniques we have developed in Sects. 3–5 to randomly perturbed dynamical systems of the type introduced in Sect. 1. The annealed Perron-Frobenius (or transfer) operator defined by (1) is the linear (Markov) operator that pushes forward densities under the annealed (averaged) action of our random dynamical system. We will consider a connected, compact phase space \(X\subset \mathbb {R}^d\) and replace \(q(x-T(y))\) in (1) with a stochasticFootnote 2 kernel k(x, y) to handle perturbations near the boundary of X. The kernel k defines the integral operator \(\mathcal {P}f(x)=\int _X k(x,y)f(y)\ dy\). We will assume that \(k\in L^2(X\times X)\), which guarantees that \(\mathcal {P}\) is a compact operator on \(L^2(X)\); see e.g. Proposition II.1.6 [11]. A sufficient condition for \(\mathcal {P}\) possessing a unique fixed point in \(L^1\) is that there exists a j such that \(\int _X \inf _y k^{(j)}(x,y)\ dx>0\), where \(k^{(j)}\) is the kernel associated with \(\mathcal {P}^j\); see Corollary 5.7.1 [26]. This is a stochastic “covering” condition, which is satisfied by our examples, which are generated by transitive deterministic T with bounded additive uniform noise. In summary, we have a unique annealed invariant measure for our stochastically perturbed system and by compactness our transfer operator \(\mathcal {P}\) has a spectral gap on \(L^2(X)\) (i.e. the only element of \(\sigma (\mathcal {P})\) on the unit circle is \(\{1\}\), which is a simple eigenvalue).

7.1 Ulam Projection

In order to carry out numerical computations, we project the operator \(\mathcal {P}\) onto a finite-dimensional space spanned by indicator functions on a fine mesh of X. Let \(B_n=\{I_1,\ldots ,I_n\}\) denote a partition of X into connected sets, and set \(\mathcal {B}_n=\text{ span }\{\mathbf{1 }_{I_1},\ldots ,\mathbf{1 }_{I_n}\}\). Define a projection \(\pi _n:L^1(X)\rightarrow \mathcal {B}_n\) by \(\pi _n(f)=\sum _{i=1}^n \left( \frac{1}{\ell (I_i)}\int _{I_i} f\ dx \right) \mathbf{1 }_{I_i}\), where \(\ell \) is Lebesgue measure; \(\pi _n\) simply replaces \(f|_{I_i}\) with its expected value. We now consider the finite-rank operator \(\pi _n\mathcal {P}:L^1\rightarrow \mathcal {B}_n\); this general approach is known as Ulam’s method [37]. When Ulam’s method is applied to compact \(\mathcal {P}\) as above, one achieves convergence of \(\pi _n\mathcal {P}\) to \(\mathcal {P}\) in operator norm (and therefore \(L^2\) convergence of the leading eigenvector of \(\pi _n\mathcal {P}\) to that of \(\mathcal {P}\) via standard operator perturbation theory); see [12]. We calculate

Putting \(f=\sum _{j=1}^n f_j\mathbf{1 }_{I_j}\in \mathcal {B}_n\), where \(f_j\in \mathbb {R}, j=1,\ldots ,n\), we have

where M is the matrix representation of \(\pi _n\mathcal {P}:\mathcal {B}_n\rightarrow \mathcal {B}_n\).

In our examples below, \(X=[0,1]\) or \(X=S^1\), and \(k(x,y){:}=\mathbf{1 }_{B_\epsilon (Ty)}(x)/\ell (X\cap B_\epsilon (Ty))\), where \(B_\epsilon (Ty)\) denotes an \(\epsilon \)-ball centred at the point Ty. This definition of k ensures that we do not stochastically perturb points outside our domain X. Our random dynamical systems therefore comprise deterministic dynamics followed by the addition of uniformly distributed noise in an \(\epsilon \)-ball (with adjustments made near the boundary of X). This choice of k leads to

Combining (90) and (91) we obtain \(M_{ij}=\left( \int _{I_j} \ell (I_i\cap B_\epsilon (Ty))/\ell (X\cap B_\epsilon (Ty))\ dy\right) /\ell (I_i)\). From now on we assume that \(I_i=[(i-1)/n,i/n), i=1,\ldots ,n\), so that \(\mathcal {B}_n\) is an partition of X into equal length subintervals. We now have that \(\sum _{i=1}^n M_{ij}=1\) for each \(j=1,\ldots ,n\), and so M is a column stochastic matrix. We use the matrix M to numerically approximate the operator \(\mathcal {P}\) in the experiments below.

7.1.1 Consistent Scaling of the Perturbation m

In Sects. 7.2–7.4 we will think of the entries of the perturbation matrix m as resulting from the matrix representation of the Ulam projection of a perturbation \(\delta \mathcal {P}\) of \(\mathcal {P}\). To make this precise, we first write \(f\in \mathcal {B}_n\) as \(f=\sum _{j=1}^n \bar{f}_j\mathbf{1 }_{I_j}\), and introduce a projected version of \(\delta k\): \(\pi _n(\delta k)=\sum _{i,j}\bar{\delta k}_{ij}\mathbf{1 }_{I_i\times I_j}\), where the matrix \(\bar{\delta k}_{ij}=(1/(\ell (I_i)\ell (I_j)))\int _{I_i\times I_j}\delta k(x,y)\ dy dx\). We now explicitly compute the Ulam projection of \(\delta P\):

Thus, we have the relationship \(m_{ij}=\ell (I_j)\bar{\delta k}_{ij}\) between the matrix representation of the projected version of the operator \(\delta \mathcal {P}\) (namely m) and the elements of the projected version of the kernel (namely \(\bar{\delta k}\)).

We wish to fix the Hilbert-Schmidt norm of \(\pi _n\delta \mathcal {P}\) to 1.

Since \(\Vert m\Vert _F^2=\sum _{i,j=1}^n \ell (I_j)^2\bar{\delta k}_{ij}^2\), if we assume that \(\ell (I_i)=1/n\), \(1\le i\le n\), we obtain \(\Vert m\Vert _F=(1/n)^2\Vert \bar{\delta k}\Vert _F^2\) and by (92) we know \(\Vert \bar{\delta k}\Vert _F^2=n^2\). We thus conclude that enforcing \(\Vert m\Vert _F=1\) will ensure \(\Vert \pi _n\delta \mathcal {P}\Vert _{HS}=1\), as required.

7.1.2 Consistent Scaling for h and c

In Sects. 7.2–7.4 we will use vector representations of the invariant density h and an \(L^2\) function c. We write \(h=\sum _{i=1}^n \mathbf{h }_i\mathbf{1 }_{I_i}\), where \(\mathbf{h }\in \mathbb {R}^n\). We normalise so that \(\int _X h(x)\ dx=1\), which means that \(\sum _{i=1}^n \mathbf{h }_i=n\). Similarly, we write \(c=\sum _{i=1}^n \mathbf c _i\mathbf{1 }_{I_i}\), where \(\mathbf c \in \mathbb {R}^n\). We normalise so that \(\int _X c(x)^2\ dx=1\), which means that \(\sum _{i=1}^n \mathbf c _i^2=n\) or \(\Vert \mathbf {c}\Vert _2=\sqrt{n}\).

7.2 A Stochastically Perturbed Lanford Map

The first example we consider is the stochastically perturbed Lanford map [25]. We will use the numerical solution of the problems (34)–(37) and (48)–(51) for this map to solve the problem of maximising the \(L^2\) norm of the linear response of the invariant measure and maximising the linear response of the expectation of an observable.

Solution to the problem of maximising the \(L^2\) norm of the linear response of the stochastically perturbed Lanford map. a Colourmap of the stochastically perturbed Lanford map. The colourbar indicates the values of the elements of the matrix. b The invariant density h. c The optimal perturbation \(m^*\). The colourbar indicates the values of the elements of the matrix. Note that the aqua colour outside the support of the two branches corresponds to a zero perturbation. d The optimal linear response \(u^*_1\) of the invariant density

7.2.1 Maximising the Linear Response of the \(L^2\) Norm of the Invariant Measure

Let \(T:S^1\rightarrow S^1\) be the stochastically perturbed Lanford map defined by

where \(\xi \sim \mathcal {U}(0,\frac{1}{10})\) (uniformly distributed on an interval about 0 of radius 1/10). Let \(M\in \mathbb {R}^{n\times n}\) be Ulam’s discretization of the transfer operator of the map T with n subintervals. The matrix M will be mixing (aperiodic and irreducible) by arguments similar to those in Proposition 2.3 [14]. Using Algorithm 2, we solve the problem (34)–(37) for the matrix M for \(n=2000\) to obtain the optimal perturbation \(m^*\). The top two singular values of the matrix \(\widetilde{U}\), computed using MATLAB, are 35.08 and 33.32 (each with multiplicity one), which we consider to be strong numerical evidence that the leading singular value of \(\widetilde{U}\) has multiplicity one. By Proposition 4 we conclude that our computed \(m^*\) is the unique optimal perturbation for the discretized system (up to sign). The sign of the matrix \(m^*\) is chosen so that \(\Vert \mathbf{h }_M\Vert _2<\Vert \mathbf{h }_{M+\varepsilon m^*}\Vert _2\) for \(\varepsilon >0\). Figure 2a shows the Lanford map and Fig. 2b presents the approximation of the invariant density h of the Lanford map. Figure 2c presents the optimal perturbation matrix \(m^*\) which generates the maximal response. Figure 2d presents the approximation of the associated linear response \(u^*_1 = \sum _{i=1}^n\mathbf{u }_1^*\mathbf{1 }_{I_i}\), for the perturbation \(m^*\); for this example, we compute \(\Vert u_1^*\Vert _{L^2}^2 \approx 0.6154\). Figure 2c shows that the selected perturbation preferentially places mass in a neighbourhood of \(x=0.4\) and \(x=0.95\), consistent with local peaks in the response in Fig. 2d.

Having computed the optimal linear response for a specific n, we verify in Table 1 that for various partition cardinalities, the \(L^2\) norm of the approximation of the linear response \(u_1^*\) converges. We also verify that \(\Vert h_{M+\varepsilon m^*}-(h_M+\varepsilon u_1^*)\Vert _{L^2}^2\) is small for small \(\varepsilon >0\). The 10,000-fold improvement in the accuracy is consistent with the error terms of the linearization being of order \(\varepsilon ^{4}\) when considering the square of the \(L^2\) norm (because \(h_{M+\varepsilon m} = h_M+\varepsilon u_1 + O(\varepsilon ^2)\), when we decrease \(\varepsilon \) from 1 / 100 to 1 / 1000, the square of the error term of the linearization is changed by \(((1/10)^2)^2=1/10000\)). The table also illustrates the change in the norm of the invariant density when perturbed; we see that the norm of the invariant density increases when we perturb M by \(\varepsilon m^*\) and decreases when we perturb by \(-\varepsilon m^*\), consistent with the choice of sign of \(m^*\) noted above.

7.2.2 Maximising the Linear Response of the Expectation of an Observable

In this section we find the perturbation that generates the greatest linear response of the expectation

where \(c(x)=\sqrt{2}\sin (\pi x)\) and the underlying dynamics are given by the map (93). We consider problem (48)–(51) with the vector \(\mathbf c =(c_1,\dots ,c_n)\in \mathbb {R}^n\), where \(c_i = \sqrt{2n}\sin (\pi x_i)\) and \(x_i= \frac{i-1}{n}+\frac{1}{2n}\), \(i=1,\ldots ,n\). Let \(M\in \mathbb {R}^{n\times n}\) be the discretization matrix derived from Ulam’s method. We use Algorithm 3 to solve problem (48)–(51). Figure 3 presents the optimal perturbation \(m^*\) and the associated linear response \(u^*_1\) for this problem. Note that the response in Fig. 3b has positive values where c(x) is large and negative values where c(x) is small, consistent with our objective to increase the expectation of c. In this example (\(n=2000\)), we obtain \(\langle c, u^*_1\rangle _{L^2} \approx 0.2514\).

Solution to the problem of maximising the expectation of the response of observable c(x) for the stochastically perturbed Lanford map. a The optimal perturbation \(m^*\). The colourbar indicates the values of the elements of the matrix. b The optimal linear response \(u^*_1\) of the invariant density

Table 2 provides numerical results for various partition cardinalities n. We see that (i) the value of \( \langle c, u^*_1\rangle _{L^2}\) appears to converge when we increase n, (ii) the 100 fold improvement in accuracy is consistent with the error terms of the linearization being of order \(\varepsilon ^{2}\) as \(h_{M+\varepsilon m} = h_M+\varepsilon u_1 + O(\varepsilon ^2)\), and (iii) the expectation increases if we perturb in the direction \(\varepsilon m^*\) and decreases if we perturb in the direction \(-\varepsilon m^*\).

7.3 A Stochastically Perturbed Logistic Map

In this section, we consider the problems of maximising the \(L^2\) norm of the linear response of the invariant measure and maximising the linear response of the expectation of an observable. The underlying deterministic dynamics is given by the logistic map, and this map is again stochastically perturbed, yielding a linear response (see e.g. the survey [3] for a discussion of the failure of linear response for the deterministic logistic map).

Solution to the problem of maximising the \(L^2\) norm of the linear response of the stochastically perturbed logistic map. a Colourmap of the stochastically perturbed logistic map. The colourbar indicates the values of the elements of the matrix. b The invariant density h. c The optimal perturbation \(m^*\). The colourbar indicates the values of the elements of the matrix. d The optimal linear response \(u^*_1\) of the invariant density

7.3.1 Maximising the Linear Response of the \(L^2\) Norm of the Invariant Measure

Let \(T_\xi :[0,1]\rightarrow [0,1]\) be the logistic map with noise defined by

where \(\xi _x\sim \mathcal {U}(B_{\frac{1}{10}}(0)\cap [-x,1-x])\) and \(\mathcal {U}(I)\) denotes the uniform distribution on the interval I. Let \(M\in \mathbb {R}^{n\times n}\) be Ulam’s discretization of the transfer operator of the map \(T_\xi \) with n partitions. We use Algorithm 2 to solve the optimisation problem (34)–(37) with the matrix M for \(n=2000\) to obtain the optimal perturbation \(m^*\). The top two singular values of \(\widetilde{U}\), for this example, were computed in MATLAB to be 36.92 and 29.36 (each with unit multiplicity); thus, by Proposition 4, \(m^*\) is the unique optimal perturbation (up to sign). The sign of the matrix \(m^*\) is chosen so that \(\Vert \mathbf{h }_M\Vert _2<\Vert \mathbf{h }_{M+\varepsilon m^*}\Vert _2\) for \(\varepsilon >0\). Figure 4 shows the results for the stochastically perturbed logistic map; for this example we compute \(\Vert u^*_1\Vert _{L^2}^2 \approx 0.6815\). In the right branch of Fig. 4c, we see sharp increases in mass mapped to neighbourhoods of \(x=0.15\) and \(x=0.4\), as well as a sharp decrease in mass mapped to a neighbourhood of \(x=0.25\); these observations coincide with the local peaks and troughs of the response vector shown in Fig. 4d. Table 3 displays the corresponding numerical results.

Solution to the problem of maximising the expectation of the response of observable c(x) for the stochastically perturbed logistic map. a The optimal perturbation \(m^*\). The colourbar indicates the values of the elements of the matrix. b The optimal linear response \(u^*_1\) of the invariant density

7.3.2 Maximising the Linear Response of the Expectation of an Observable

Using (48)–(51), we calculate the perturbation achieving a maximal linear response of \(\langle c, h\rangle _{L^2}\) for \(c(x)=\sqrt{2}\sin (\pi x)\) for the stochastic dynamics (94). We again compute with the vector \(\mathbf c =(c_1,\dots ,c_n)\in \mathbb {R}^n\), where \(c_i = \sqrt{2n}\sin (\pi x_i)\) and \(x_i= \frac{i-1}{n}+\frac{1}{2n}\), \(i=1,\ldots ,n\). We compute the discretization matrix \(M\in \mathbb {R}^{n\times n}\) derived from Ulam’s method and make use of Algorithm 3.

The \(m^*\) provoking the greatest linear response in the expectation \(\langle c,h\rangle _{L^2}\) is shown in Fig. 5a. The linear response corresponding to \(m^*\) is shown in Fig. 5b; for this example, \( \langle c, u^*_1\rangle _{L^2}\approx 0.1187\). The response takes its minimal values at \(x=0, x=1\), where the values of the observable c is also least, and the response is broadly positive near the centre of the interval [0, 1], where the observable takes on large values; both of these observations are consistent with maximising the linear response of the observable c.

Numerical results for this example are provided in Table 4.

7.4 Double Lanford Map

In this last section, we consider the problem of maximising the linear response of the rate of convergence to the equilibrium. The underlying deterministic dynamics is given by a stochastically perturbed double Lanford map. More explicitly, we consider the map \(T:S^1\rightarrow S^1\) defined by

where \(T_{Lan}(x) = 2x+\frac{1}{2}x(1-x)\) and \(\xi \sim \mathcal {U}(0,\frac{1}{10})\) (uniformly distributed on an interval about 0 of radius 1/10). We have chosen this doubled version of the Lanford map in order to study a relatively slowly (but still exponentially) mixingFootnote 3 system. The subintervals [0, 1 / 2] and [1 / 2, 1] are “almost-invariant” because there is only a relatively small probability that points in each of these subintervals are mapped into the complementary subinterval; see Fig. 6a.

Solution to the problem of maximising the linear response of the rate of convergence to the equilibrium of the stochastically perturbed double Lanford map. a Colourmap of the stochastically perturbed double Lanford map. The colourbar indicates the values of the elements of the matrix. b The invariant density h. c The optimal perturbation \(m^*\). The colourbar indicates the values of the elements of the matrix. d The optimal linear response \(u^*_1\) of the invariant density

Let \(M\in \mathbb {R}^{n\times n}\) be Ulam’s discretization of the transfer operator of the map T with n partitions. Using Algorithm 4, we solve problem (63)–(66) for the matrix M for \(n=2000\). Figure 6 shows the double Lanford map and the approximation of the invariant density h of this map. Figure 6c shows the optimal perturbation matrix \(m^*\) that maximises the linear response of the rate of convergence to the equilibrium and Fig. 6d shows the corresponding linear response \(u_1^*\) of the invariant density h. We note that the sign of the matrix \(m^*\) is chosen such that the \(\nu \) in (69) is negative. The optimal objective is given by \(\rho = \frac{d(\mathfrak {R}(\log \lambda _2(\varepsilon )))}{d\varepsilon }|_{\varepsilon = 0} \approx -0.2843\). Figure 6c shows that most of the large positive values in the perturbation occur in the upper left and lower right blocks of the graph of the double Lanford map, precisely to overcome the almost-invariance of the subintervals [0, 1 / 2] and [1 / 2, 1]. In order to compensate for these increases, there are commensurate negative values in the lower left and upper right. The net effect is that more mass leaves each of the almost-invariant sets at each iteration of the stochastic dynamics, leading to an increase in mixing rate.

Table 5 illustrates the numerical results. The value of \(\rho \), namely the estimated derivative of the real part of \(\log (\lambda _2)\), minimised over all valid perturbations, is shown in the second column. As n increases, \(\rho \) appears to converge to a fixed value. Let r and l denote the discretised left and right eigenfunctions of \(\pi _n\mathcal {P}\) corresponding to the second largest eigenvalue, \(\pi _n\delta \mathcal {P}\) denote the discretised perturbation operator represented by \(m^*\), and \(\eta _2= \langle l, \pi _n\delta \mathcal {P} (r)\rangle _{L^2}\), the analogue of (61) in this discretised continuous setting. In the fourth column, we see that the absolute value of the linearization of the perturbed eigenvalue, \(|\lambda _2+\varepsilon \eta _2|\), is close to the absolute value of the optimally perturbed eigenvalue, \(|\lambda _2(\varepsilon )^*|\). Finally, to verify the parity of \(m^*\) is correct, in Table 5 we observe that the absolute value of the second eigenvalue increases when we perturb in the direction \(-\varepsilon m^*\) and decreases as we perturb in the direction \(\varepsilon m^*\), as required for the perturbation to increase the mixing rate.

Notes

The regularity condition is that the gradients of all (equality) constraints are linearly independent at the local optimum. A proof of this fact is in Appendix 1.

\(k(x,y)\ge 0\), \(\int _X k(x,y)\ dx=1\ \forall y\in X\).

Exponential mixing is guaranteed by expansivity and transitivity of \(T_{Lan}\), which together with the additive noise, yield the stochastic covering condition of Sect. 7.

References

Abramov, R.V., Majda, A.J.: A new algorithm for low-frequency climate response. J. Atmos. Sci. 66(2), 286–309 (2009)

Bahsoun, W., Saussol, B.: Linear response in the intermittent family: differentiation in a weighted \(C^0\)-norm. arXiv preprint arXiv:1512.01080, (2015)

Baladi, V.: Linear response, or else. In: Proceedings of International Congress of Mathematicians, vol. III, pp. 525–545. Seoul (2014)

Baladi, V., Benedicks, M., Schnellmann, D.: Whitney-Hölder continuity of the SRB measure for transversal families of smooth unimodal maps. Invent. Math. 201(3), 773–844 (2015)

Baladi, V., Smania, D.: Linear response formula for piecewise expanding unimodal maps. Nonlinearity 21(4), 677 (2008)

Baladi, V., Todd, M.: Linear response for intermittent maps. Commun. Math. Phys. 3(347), 857–874 (2016)

Ben-Israel, A., Greville, T.N.E.: Generalized Inverses: Theory and Applications, vol. 15. Springer Science & Business Media, Berlin (2003)

Bujorianu, M.L., MacKay, R.S.: Perturbation and sensitivity of inhomogeneous Markov chains in dynamic environments. In: Proceedings of 21st International Symposium on Mathematical Theory of Networks and System, July 7–11, 2014, Groningen, The Netherlands, pp. 184–191 (2014)

Butterley, O., Liverani, C.: Smooth Anosov flows: correlation spectra and stability. J. Modern Dyn. 1, 301–322 (2007)

Chekroun, M.D., Neelin, J.D., Kondrashov, D., McWilliams, J.C., Ghil, M.: Rough parameter dependence in climate models and the role of Ruelle-Pollicott resonances. Proc. Natl. Acad. Sci. 111(5), 1684–1690 (2014)

Conway, J.: A course in Functional Analysis, 2nd edn. Springer, New York (1990)

Dellnitz, M., Junge, O.: On the approximation of complicated dynamical behavior. SIAM J. Numer. Anal. 36(2), 491–515 (1999)

Dolgopyat, D.: On differentiability of SRB states for partially hyperbolic systems. Invent. Math, 155(2), 389–449 (2004)

Froyland, G.: Estimating physical invariant measures and space averages of dynamical systems indicators. PhD thesis, University of Western Australia (1997)

Froyland, G., Santitissadeekorn, N.: Optimal mixing enhancement. SIAM J. Appl. Math. 77(4), 1444–1470 (2017)

Froyland, G., González-Tokman, C., Watson, T.M.: Optimal mixing enhancement by local perturbation. SIAM Rev. 58(3), 494–513 (2016)

Galatolo, S., Pollicott, M.: Controlling the statistical properties of expanding maps. Nonlinearity 30(7), 2737 (2017)

Gouëzel, S., Liverani, C.: Banach spaces adapted to Anosov systems. Ergodic Theory Dyn. Syst. 26(01), 189–217 (2006)

Gouëzel, S., Liverani, C.: Compact locally maximal hyperbolic sets for smooth maps: fine statistical properties. J. Differ. Geom. 79(3), 433–477 (2008)

Grover, P., Elamvazhuthi, K.: Optimal perturbations for nonlinear systems using graph-based optimal transport. arXiv preprint arXiv:1611.06278, (2016)

Hairer, M., Andrew, J.M.: A simple framework to justify linear response theory. Nonlinearity 23(4), 909 (2010)

Horn, R.A., Johnson, C.R.: Matrix Analysis. Cambridge University Press, Cambridge (2012)

Kemeny, J.G.: Generalization of a fundamental matrix. Linear Algebra Its Appl. 38, 193–206 (1981)

Kloeckner, B.: The linear request problem. arXiv:1606.02428, to appear in Proceedings of AMS

Lanford III, O.E.: Informal remarks on the orbit structure of discrete approximations to chaotic maps. Exp. Math. 7(4), 317–324 (1998)

Lasota, A., Mackey, M.C.: Probabilistic properties of deterministic systems. Cambridge University Press, Cambridge (1985)

Laub, A.J.: Matrix Analysis for Scientists and Engineers. SIAM, New Delhi (2005)

Liverani, C.: Invariant measures and their properties. A functional analytic point of view. In: Dynamical Systems. Part II, Pubblicazioni del Centro di Ricerca Matematica Ennio de Giorgi, pp. 185–237. Scuola Norm. Sup., Pisa (2003)

Lucarini, V.: Response operators for Markov processes in a finite state space: radius of convergence and link to the response theory for Axiom A systems. J. Stat. Phys. 162(2), 312–333 (2016)

Lucarini, V., Wouters, J.: Response formulae for n-point correlations in statistical mechanical systems and application to a problem of coarse graining. J. Phys. A 50, 355003 (2017)

Meyer, C.D.: Matrix Analysis and Applied Linear Algebra, vol. 2. SIAM, New Delhi (2000)

Nocedal, J., Wright, S.: Numerical Optimization, second edition. Springer, Berlin (2006)

Ragone, F., Lucarini, V., Lunkeit, F.: A new framework for climate sensitivity and prediction: A modelling perspective. Clim. Dyn. https://doi.org/10.1007/s00382-015-2657-3, (2015)

Ruelle, D.: Differentiation of SRB states. Commun. Math. Phys. 187(1), 227–241 (1997)

Ruelle, D.: Differentiation of SRB states for hyperbolic flows. Ergod. Theory Dyn. Syst. 28(02), 613–631 (2008)

Schweitzer, P.J.: Perturbation theory and finite Markov chains. J. Appl. Probab. 5(02), 401–413 (1968)

Ulam, S.M.: A Collection of Mathematical Problems, vol. 8. Interscience Publishers, New York (1960)

Wang, H., Hartmann, C., Schütte, C.: Linear response theory and optimal control for a molecular system under non-equilibrium conditions. Mol. Phys. 111(22–23), 3555–3564 (2013)

Acknowledgements

The authors thank Guoyin Li for helpful remarks concerning Sect. 3, Jeroen Wouters for some literature suggestions, and two anonymous referees for constructive suggestions. FA is supported by the Australian Government Research Training Program Scholarship and the UNSW School of Mathematics and Statistics. DD is supported by an ARC Discovery Project DP150100017 and received partial support from the Croatian Science Foundation under the Grant IP-2014-09-2285. GF is partially supported by DP150100017.

Author information

Authors and Affiliations

Corresponding author

Appendix A: Proof of the LICQ Condition from Sect. 4

Appendix A: Proof of the LICQ Condition from Sect. 4

Let \(J=\{j:\exists i \text{ with } (i,j)\not \in N\}\). For \(j\in J\), let \(f_j(m)=\sum _{i=1}^n m_{ij}\). For \((i,j)\in N\), let \(g_{ij}(m)\) denote the left hand side of the equality in (51). Finally, let f(m) denote the left hand side of the equality in (50). For \(j\in J\) we have \(\frac{\partial f_j}{\partial m_{kl}}(m) = \delta _{jl}\). For \((i,j)\in N\) we have \(\frac{\partial g_{ij}}{\partial m_{kl}}(m) = \delta _{ik}\delta _{jl}\), and lastly \(\frac{\partial f}{\partial m_{kl}}(m) = 2m_{kl}\). The condition LICQ (Definition 12.4 [32]) is satisfied if

implies \(a_j=0\) for \(j\in J\), \(a_{ij}=0\) for \((i,j)\in N\), and \(a=0\).

-

1.