Abstract

This paper is about nonequilibrium steady states (NESS) of a class of stochastic models in which particles exchange energy with their “local environments” rather than directly with one another. The physical domain of the system can be a bounded region of \(\mathbb R^d\) for any \(d \ge 1\). We assume that the temperature at the boundary of the domain is prescribed and is nonconstant, so that the system is forced out of equilibrium. Our main result is local thermal equilibrium in the infinite volume limit. In the Hamiltonian context, this would mean that at any location x in the domain, local marginal distributions of NESS tend to a probability with density \(\frac{1}{Z} e^{-\beta (x) H}\), permitting one to define the local temperature at x to be \(\beta (x)^{-1}\). We prove also that in the infinite volume limit, the mean energy profile of NESS satisfies Laplace’s equation for the prescribed boundary condition. Our method of proof is duality: by reversing the sample paths of particle movements, we convert the problem of studying local marginal energy distributions at x to that of joint hitting distributions of certain random walks starting from x, and prove that the walks in question become increasingly independent as system size tends to infinity.


1 Introduction

This paper attempts to address, using highly idealized models, two of the major challenges in nonequilibrium statistical mechanics: One is the derivation of the Fourier Law, equivalently the heat equation, from microscopic principles (see, e.g., [2–5, 10, 11, 16, 23, 27]). The other is the proof of local thermal equilibrium (LTE), equivalently the well-definedness of local temperatures, for systems that are driven out of, and possibly far from, equilibrium (see [17] for a discussion of the physics, and e.g. [6, 14, 15, 20, 26]). Both of these topics are of fundamental importance, yet no satisfactory general theory has been proposed. In this paper we study the nonequilibrium steady states (NESS) of a specific class of particle systems with stochastic interactions. The models we consider are simple enough to be amenable to rigorous analysis, yet not overly specialized, so they may offer insight into more general situations. The physical space of our models can be \(\mathbb R^d\) for any \(d \ge 1\). Model behavior depends on d, necessitating different arguments in the proofs, but our results are valid for all \(d \ge 1\).

We begin with a rough model description; see Sect. 2.1 for more detail. For any \(d \ge 1\), let \(\mathcal D \subset \mathbb R^d\) be a bounded domain with smooth boundary, and let T be a prescribed temperature function on \(\partial \mathcal D\). We consider \(\sim L^d\) particles performing independent random walks on \(\mathcal D_L = \mathbb Z^d \cap L \mathcal D\) where \(L\gg 1\) is a real number and \(L \mathcal D\) is the dilation of \(\mathcal D\). These particles do not interact directly with one another, but only via their “local environments”, symbolized by a collection of random variables each representing the energy at a site in \(\mathcal D_L\). More precisely, each particle carries with it an energy. As it moves about, it exchanges energy with each of the sites it visits, and when it reaches the boundary of \(\mathcal D_L\), it abandons the energy it was carrying, replacing it by an energy drawn randomly from the “bath distribution” at the corresponding point in \(\partial \mathcal D\).

These stochastic models are modifications of the 1-D mechanical chains studied in [13] and their 2-D generalizations in [22]. In these mechanical models, energy transport occurs via particle-disk interactions, an idea borrowed from [24]. More precisely, there is an array of rotating disks evenly spaced in the domain. Particles do not interact with each other directly; they exchange energy with these disks upon collision. Our site energies are an abstraction of the kinetic energies of these disks, or the “tank energies” in the stochastic models in [13]. Further simplifications have been introduced in the present models to make the analysis feasible.

In our models, when the prescribed temperature function is constant on \(\partial \mathcal D\), i.e. \(T \equiv T_0\) for some \(T_0 \in \mathbb R^+\), it is easy to see that the unique invariant probability distribution is a product measure: particle numbers are independent and Poissonian, and particle and site energies are independent and exponentially distributed with mean \(T_0\). Let us refer to such a distribution as “the equilibrium distribution at temperature \(T_0\)”. For a nonconstant T (all that we require is that it be a continuous function), the system is forced out of equilibrium. It is not hard to see that there is still a unique NESS to which all initial states converge. Our two main results assert that the following hold in the infinite volume limit:

(1) mean energy profiles with respect to NESS, when scaled back to \(\mathcal D\), converge to the unique solution u(x) of

$$\begin{aligned} \Delta u=0 \quad \text{ on } \mathcal D, \qquad u|_{\partial \mathcal D} = T; \end{aligned}$$

(2) given any \(x \in \mathcal D\), for sites located near xL, marginal distributions of the NESS tend to the equilibrium distribution at temperature u(x).

These and other results are formulated precisely in Sect. 2.2.

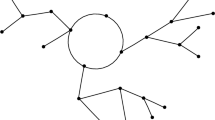

Our method of proof is duality, and the dual used here is similar to that in [26], which in turn borrowed its main idea from [20]. We differ from these earlier works in that we prove a more complete version of local equilibrium that includes the marginal distributions of particle energies, and our results are proved for all \(d\ge 1\). As in [20], our “dual process” (duality is with respect to a function, to be precise) keeps track of movements of certain discrete objects we call “packets” in this paper. Reasoning naively, marginal energy distributions at a site \(v \in \mathcal D_L\) are determined by what particles bring to this site from the bath, so of interest are the points of origin of these energies. The idea is that to identify these points of origin, we can place some packets at site v, to be carried around by particles in a manner analogous to the way energy is transported, run the particle trajectories “backwards”, and look at the hitting distributions of the packets on the boundary of \(\mathcal D_L\). As it turns out, packet movements in this process are effectively independent random walks when their trajectories do not meet, permitting us to leverage known results on hitting distributions of Brownian paths. This connection to Brownian motion is what makes duality a potentially very useful tool for studying local thermal equilibrium. From this line of thinking, one sees immediately that the proof of LTE is simpler in \(d \ge 3\), where independent random walkers tend not to meet. For \(d=1\), where independent walkers meet often, the idea of exchangeability, which was used in [20], is entirely natural and we use it here as well.

Our model falls into the category of “gradient systems”. There is a vast literature on gradient systems, but very few results on LTE for systems forced out of equilibrium. Two examples are [14, 15], where the entropy method is applied. We will comment on the connection to these results in Sect. 8.2.

2 Model and Results

2.1 Model Description

We fix a dimension \(d \ge 1\), and let \(\mathcal D \subset \mathbb R^d\) be either a bounded open rectangle or a bounded, connected open set for which \(\partial \mathcal D\) is a \(\mathcal C^2\) submanifold. A continuous function \(T : \partial \mathcal D \rightarrow \mathbb R_{+}\) to be thought of as temperature is prescribed. For \(L \gg 1\), the physical domain of our system is

$$\mathcal D_L = \mathbb Z^d \cap L \mathcal D,$$

and each lattice point in \(\mathcal D_L\) is referred to as a site. The bath is located at

where \(\partial \mathcal D_L = \{ v\in \mathbb Z^d: v\) has a neighbor in \(\mathcal D_L\) and a neighbor outside of \(\mathcal D_L\}\). Throughout this article, \(|\cdot |\) denotes the cardinality of a finite set. For any \(v \in \mathbb R^d\), \(\langle v \rangle \) denotes its closest point in \(\mathbb Z^d\) and \(\Vert v\Vert \) is the Euclidean norm.

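We consider a Markov process \(\varvec{X}_t = \varvec{X}_t^{(L)}\) with random variables

$$\underline{x} = \left( (\xi_v)_{v \in \mathcal D_L}, (\eta_i)_{i=1}^M, (X_i)_{i=1}^M \right) \in \mathbb R_+^{\mathcal D_L} \times \mathbb R_+^{M} \times \mathcal D_L^M,$$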

where \(\xi _{v}\) denotes the energy at site \(v \in \mathcal D_L\), \(M=M(L) \in \mathbb Z^+\) is the number of particles in the system, and \(\eta _i\) and \(X_i\) are the energy and location of particle i. The infinitesimal generator of this process has the form

$$G = G_1 + G_2,$$

where \(G_1\) and \(G_2\) describe respectively interactions within \(\mathcal D_L\) and with the bath: Each particle carries an exponential clock which rings at rate 1 independently of the clocks of other particles. When its clock rings, the particle exchanges energy with the site at which it is located; immediately thereafter it jumps to a neighboring site, choosing one of its 2d nearest neighbors with equal probability. The \(G_1\) part of the generator describes the action when the neighboring site chosen is in \(\mathcal D_L\):

where

If the particle jumps to a site \(v \in \mathcal B_L\), then its energy is updated according to the temperature at v, and it is returned immediately to its original site. More precisely, we extend T to a neighborhood of \(\partial \mathcal D\), and define

where \(\xi _v'\) is given by (1) and \(\beta (\frac{w}{L})=T(\frac{w}{L})^{-1}\).

This completes the definition of our model.
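To make the dynamics concrete, here is a minimal Python sketch of a one-dimensional instance, under assumptions that are ours rather than the paper's: \(\mathcal D = (0,1)\), so that \(\mathcal D_L = \{1, \dots, L-1\}\) with bath sites 0 and L; an energy exchange splits the pooled energy \(\xi_v + \eta_k\) by a Uniform(0, 1) fraction (the precise rule (1) is not reproduced here); and the continuous-time process is replaced by its jump chain, which is legitimate for time averages because all clocks ring at the same rate. The function name `simulate_mean_profile` and its parameters are hypothetical.

```python
import random


def simulate_mean_profile(L=5, M=10, T_left=1.0, T_right=2.0,
                          n_steps=400_000, burn_in=20_000, seed=0):
    """Jump-chain simulation of a hypothetical 1-D instance of X_t.

    Interior sites are 1, ..., L-1; the bath sites are 0 and L.
    Assumption: an exchange splits xi_v + eta_k by a Uniform(0, 1) fraction.
    Returns time-averaged site energies, an estimate of E^(L)(xi_v).
    """
    rng = random.Random(seed)
    sites = range(1, L)
    xi = {v: 1.0 for v in sites}                     # site energies
    eta = [1.0] * M                                  # particle energies
    pos = [rng.randint(1, L - 1) for _ in range(M)]  # particle positions
    sums = {v: 0.0 for v in sites}
    count = 0
    for step in range(n_steps):
        # All clocks ring at rate 1, so the next ringing clock is uniform over particles.
        k = rng.randrange(M)
        v = pos[k]
        # Exchange with the current site (assumed uniform splitting rule).
        total = xi[v] + eta[k]
        p = rng.random()
        xi[v], eta[k] = p * total, (1.0 - p) * total
        # Jump to one of the 2 nearest neighbours with equal probability.
        w = v + rng.choice((-1, 1))
        if w == 0:    # bath: fresh exponential energy with mean T; particle returns to v
            eta[k] = rng.expovariate(1.0 / T_left)
        elif w == L:
            eta[k] = rng.expovariate(1.0 / T_right)
        else:
            pos[k] = w
        if step >= burn_in:
            for u in sites:
                sums[u] += xi[u]
            count += 1
    return {v: sums[v] / count for v in sites}
```

With \(T = 1\) at the left bath and \(T = 2\) at the right, the time-averaged site energies should lie close to the linear profile \(1 + v/L\), the one-dimensional solution of Laplace's equation with these boundary values. This is consistent with Theorem 1 below, although a finite simulation of course proves nothing.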

Remark on distinguishable versus indistinguishable particles. As defined, the particles in \(\varvec{X}_t\) are named and distinguishable. Since our results pertain to infinite-volume limits, it is natural to work with models with indistinguishable particles. For each L, such a model can be obtained from \(\varvec{X}_t^{(L)}\) via the following identification: for \(\underline{x}, \underline{y} \in \mathbb R_+^{\mathcal D_{L}} \times \mathbb R_+^{M} \times \mathcal D_{L} ^M\), we let \(\underline{x} \sim \underline{y}\) if

$$\underline{x} = \left( (\xi_v)_{v \in \mathcal D_L}, (\eta_i)_{i=1}^M, (X_i)_{i=1}^M \right) \quad \text{and} \quad \underline{y} = \left( (\xi_v)_{v \in \mathcal D_L}, (\eta_{\sigma(i)})_{i=1}^M, (X_{\sigma(i)})_{i=1}^M \right),$$

where \(\sigma \) is a permutation of the set \( \{1,2,\dots , M\}\). It is easy to check that the quotient process \(\varvec{X}_t^{(L)}/_\sim \) is well defined and corresponds to \(\varvec{X}^{(L)}_t\) with indistinguishable particles. As the desired results for \(\varvec{X}_t^{(L)}/_\sim \) are deduced easily from those for \(\varvec{X}_t^{(L)}\), we will, for the most part, be working with \(\varvec{X}_t^{(L)}\).

2.2 Statement of Results

We begin with a result on the existence and uniqueness of the invariant measure in the equilibrium case, i.e., when the prescribed bath temperature T is constant.

Proposition 1

Let \(d \in \mathbb Z^+\) and \(\mathcal D \subset \mathbb R^d\) be as above, and let L be such that \(\mathbb Z^d\) restricted to \(\mathcal D_L\) is a connected graph. If the function T is constant, then with the notation \(\beta = 1/T\),

is the unique invariant probability measure of the process \(\varvec{X}_t^{(L)}\).

We are primarily interested in out-of-equilibrium settings defined by non-constant bath temperatures.

Proposition 2

Let \(d, \mathcal D\) and T be as in Sect. 2.1. We assume L is such that \(\mathbb Z^d\) restricted to \(\mathcal D_L\) is a connected graph. Then the process \({\varvec{X}_t^{(L)}}\) has a unique invariant probability measure \(\mu ^{(L)}\). Furthermore, the distribution of \({\varvec{X}_t^{(L)}}\) converges to \(\mu ^{(L)}\) as \(t \rightarrow \infty \) for any initial distribution of \({\varvec{X}_0^{(L)}}\).

The proofs of Propositions 1 and 2 are straightforward; thus we mention only the ideas and leave details to the reader. For Proposition 1, one can check by a direct computation that \(\mu ^{(L)}_e\) is invariant; uniqueness follows from Doeblin’s condition. Once Proposition 1 is established, tightness (at “\(\infty \)” and at “0”) for Proposition 2 can be proved as follows: Given \({\varvec{X}_t^{(L)}}\) defined by a continuous bath temperature function \(T|_{\partial \mathcal D}\), we consider two equilibrium processes corresponding to boundary conditions \(T^\mathrm{max} = \sup _{x \in \partial \mathcal D} T(x)\) and \(T^\mathrm{min} = \inf _{x \in \partial \mathcal D} T(x)\). Let \(\mu ^{(L)}_{e, \mathrm{max}}\) and \(\mu ^{(L)}_{e, \mathrm{min}}\) be the invariant probabilities of these two processes respectively. Coupling \(\varvec{X}^{(L)}_t\) to the process defined by \(T^\mathrm{max}\) in the natural way, with \(\varvec{X}^{(L)}_0\) distributed according to \(\mu ^{(L)}_{e, \mathrm{max}}\), we see that the distribution of \(\varvec{X}^{(L)}_t\) is stochastically dominated by \(\mu ^{(L)}_{e, \mathrm{max}}\). Likewise, coupling to the process defined by \(T^\mathrm{min}\), we see that the distribution of \(\varvec{X}^{(L)}_t\) is stochastically bounded from below by \(\mu ^{(L)}_{e, \mathrm{min}}\).

In Theorems 1–4 below, it is assumed that \(d, \mathcal D, T\) and L are as in Sect. 2.1. For given L, we let \(\mathbb E^{(L)}( \cdot )\) denote expectation with respect to the nonequilibrium steady state \(\mu ^{(L)}\). Our first result is on the profile of mean site energies.

Theorem 1

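(Mean site energy profiles) For any choice of \(M(L) \in \mathbb Z^+\) and for each \(x \in \mathcal D\),

$$\begin{aligned} \lim_{L \rightarrow \infty} \mathbb E^{(L)}\left( \xi_{\langle xL \rangle} \right) = u(x), \end{aligned}$$

where u is the unique solution of the equation

$$\begin{aligned} \Delta u=0 \quad \text{ on } \mathcal D, \qquad u|_{\partial \mathcal D} = T. \end{aligned} \tag{2}$$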
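The limit u in Theorem 1 is the harmonic extension of T. As a numerical companion (not the method of this paper), the following minimal sketch assumes \(\mathcal D\) is the unit square and approximates u by Jacobi iteration for the five-point discrete Laplacian, in which each interior value is replaced by the average of its four lattice neighbours. The function name `harmonic_extension` and its parameters are hypothetical.

```python
import numpy as np


def harmonic_extension(boundary_T, n=21, tol=1e-12, max_iter=100_000):
    """Jacobi iteration for Laplace's equation on the unit square (a sketch).

    boundary_T(x, y) gives the prescribed temperature on the boundary; the
    result approximates u on an n-by-n grid, u[i, j] ~ u(xs[i], xs[j]).
    """
    xs = np.linspace(0.0, 1.0, n)
    X, Y = np.meshgrid(xs, xs, indexing="ij")
    u = np.zeros((n, n))
    edge = np.zeros((n, n), dtype=bool)
    edge[0, :] = edge[-1, :] = edge[:, 0] = edge[:, -1] = True
    u[edge] = boundary_T(X[edge], Y[edge])        # Dirichlet data u = T on the boundary
    for _ in range(max_iter):
        new = u.copy()
        # Each interior value becomes the average of its 4 lattice neighbours.
        new[1:-1, 1:-1] = 0.25 * (u[2:, 1:-1] + u[:-2, 1:-1]
                                  + u[1:-1, 2:] + u[1:-1, :-2])
        if np.max(np.abs(new - u)) < tol:
            return xs, new
        u = new
    return xs, u
```

For instance, boundary data \(T(x,y) = x^2 - y^2\), which is harmonic, should be reproduced in the interior up to the iteration tolerance.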

Next we proceed to the definition of LTE for site energies. For \(x\in \mathcal D\) and a finite set \(S \subset \mathbb Z^d\), we denote by \(\mu _{x,S}^{(L)}\) the projection of \(\mu ^{(L)}\) to the coordinates \((\xi _{\langle xL \rangle + v})_{v \in S}\) and identify \(\mu _{x,S}^{(L)}\) with a measure on \(\mathbb R^S\) with coordinates \((\zeta _s)_{s \in S}\). Given \(M(L) \in \mathbb Z^+\), we say the site energies \(\xi _v\) of \({\varvec{X}_t}^{(L)}\) approach local thermodynamic equilibrium (LTE) as \(L \rightarrow \infty \) if for every \(x \in \mathcal D\) and every finite set \(S \subset \mathbb Z^d\),

$$\mu _{x,S}^{(L)} \Rightarrow \prod_{s \in S} \beta(x) e^{-\beta(x) \zeta_s} \, d\zeta_s \qquad \text{as } L \rightarrow \infty,$$

where \(\Rightarrow \) stands for weak convergence and \(\beta (x)=u(x)^{-1}\), u being the function in Theorem 1.

Theorem 2

(LTE for site energies) For any choice of \(M(L) \in \mathbb Z^+\), site energies \(\xi _v\) of \({\varvec{X}_t}^{(L)}\) approach LTE as \(L \rightarrow \infty \).

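In the case where the number of particles tends to a fixed positive density as \(L \rightarrow \infty \), i.e.,

$$\lim_{L \rightarrow \infty} \frac{M(L)}{L^d} = \alpha \mathop {\mathrm {vol}}\nolimits (\mathcal D)$$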

for some constant \(\alpha >0\), a more complete notion of LTE should include not only distributions of site energies but also those of particle energies. In preparation for the formal statement, we introduce the following notation:

Let \(\mu ^{(L)} /_\sim \) be the steady state distribution of the process \(\varvec{X}^{(L)}_t/_\sim \) (see the Remark at the end of Sect. 2.1). Given \(x \in \mathcal D\), \(S =\{ v_1, \ldots , v_s \}\subset \mathbb Z^d\), and non-negative integers \(K_1, \ldots , K_s\), we consider the conditional probability

$$\mu ^{(L)} /_\sim \left( \, \cdot \ \big| \ \text{exactly } K_j \text{ particles are at site } \langle xL \rangle + v_j, \ j = 1, \dots, s \right)$$

and project this measure to the site and particle energy coordinates on \(\langle xL \rangle + S\). The resulting measure, \(\nu ^{(L)}_{x, S, K_1, \dots , K_s}\), can be viewed as a measure on \( \Pi _{j =1}^s (\mathbb R_+ \times (\mathbb R_+^{K_j})/_\sim )\), where the relation in \((\mathbb R_+^K)/_\sim \) is defined by \(x \sim y\) if \(x=(x_1,\ldots ,x_K)\), \(y=(x_{\sigma (1)}, \ldots ,x_{\sigma (K)} )\), and \(\sigma \) is a permutation of the set \(\{1, \dots , K\}\). We are concerned with the limit of \(\nu ^{(L)}_{x, S, K_1, \dots , K_s}\) as \(L \rightarrow \infty \).

Theorem 3

(LTE for systems with positive density of particles) Suppose \(M(L)/L^d \rightarrow \alpha \mathop {\mathrm {vol}}\nolimits (\mathcal D) \) for some \(\alpha >0\). Then the system approaches LTE as \(L \rightarrow \infty \) in the sense of both site and particle energy distributions. That is to say, for every \(x \in \mathcal D\), \(S =\{ v_1, \ldots , v_s \}\subset \mathbb Z^d\), and non-negative integers \(K_1, \ldots , K_s\), we have the following limiting distributions as \(L \rightarrow \infty \):

(1) Let \(k^{(L)}_{v_j}\) be the number of particles at site \(\langle xL\rangle + v_j\), \(j=1,2, \dots , s\), seen as a random variable with respect to \(\mu ^{(L)}\). Then \(k^{(L)}_{v_1}, \dots , k^{(L)}_{v_s}\) tend in distribution to independent Poisson random variables with mean \(\alpha \).

(2) The measures \(\nu ^{(L)}_{x, S, K_1, \dots , K_s}\) converge weakly to

$$\begin{aligned} \Pi _{j=1}^s \ \left( \mathcal E_x \ \times (\mathcal E_x^{K_j})/_\sim \right) \qquad \text{ as } L \rightarrow \infty , \end{aligned} \tag{4}$$

where \(\mathcal E_x\) is the exponential distribution on \(\mathbb R_+\) with parameter \(\beta = u(x)^{-1}\), \(\mathcal E_x^K\) is the product of K copies of \(\mathcal E_x\), and \(\sim \) is the usual identification in \((\mathbb R_+^K)/_\sim \).
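The limiting local picture in Theorem 3 is the equilibrium distribution at temperature u(x): independent Poisson(\(\alpha\)) particle counts, and, given the counts, i.i.d. exponential site and particle energies with mean u(x). The sketch below samples from this limit law; the name `sample_local_equilibrium` is hypothetical, `temperature` stands in for u(x), and each tuple of particle energies is sorted, which is our way of representing an element of \((\mathbb R_+^K)/_\sim\).

```python
import numpy as np


def sample_local_equilibrium(alpha, temperature, s, n_samples, seed=0):
    """Samples from the limit law of Theorem 3 at a point x (a sketch).

    For each of s sites: a Poisson(alpha) particle count, one site energy and
    that many particle energies, all energies exponential with mean `temperature`.
    """
    rng = np.random.default_rng(seed)
    counts = rng.poisson(alpha, size=(n_samples, s))
    # NumPy's exponential is parameterized by its scale, i.e. the mean u(x) = 1/beta(x).
    site_energies = rng.exponential(temperature, size=(n_samples, s))
    # Sorting forgets particle labels, mimicking the identification ~.
    particle_energies = [[np.sort(rng.exponential(temperature, size=k)) for k in row]
                         for row in counts]
    return counts, site_energies, particle_energies
```

Empirical means of the counts and of the energies should then be close to \(\alpha\) and u(x), respectively.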

The notion of LTE considered so far describes marginal energy distributions in regions of microscopic sizes. These results can be extended to a version of LTE at mesoscopic scales:

Theorem 4

(LTE at mesoscopic scales) For any \(\vartheta \in (0,1)\), the results in Theorems 2 and 3 remain valid if we replace “\((\langle xL\rangle + v)_{v \in S}\), where \(S \subset \mathbb Z^d\) is any finite set” by “\(({\langle xL + L^{\vartheta }v \rangle })_{v \in S}\), where \(S \subset \mathbb R^d\) is any finite set”.
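To see the scales involved, the sites in Theorem 4 are spread over a window of size \(L^\vartheta\), which tends to infinity but is small compared with L. A small sketch of the map \(v \mapsto \langle xL + L^\vartheta v \rangle\) follows; the function name is hypothetical, and since the closest lattice point is only ambiguous at ties, we break ties with NumPy's round-half-to-even rule, which is our own choice.

```python
import numpy as np


def mesoscopic_sites(x, offsets, L, theta):
    """Lattice sites <xL + L**theta * v> for v in `offsets` (Theorem 4).

    <.> is coordinatewise rounding to the nearest integer (the closest point of
    Z^d); ties follow NumPy's round-half-to-even rule, an assumption of this sketch.
    """
    x = np.asarray(x, dtype=float)
    offsets = np.asarray(offsets, dtype=float)
    return np.rint(x * L + L**theta * offsets).astype(int)
```

For example, with \(L = 10^4\) and \(\vartheta = 1/2\), the offset v = (1, 0) is mapped 100 lattice units away from \(\langle xL \rangle\).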

3 Preliminaries from Probability Theory

We collect in this section some facts from probability theory that will be used. All the results cited are known, possibly with the exception of Proposition 3.

3.1 Random Walks

Here, we formulate some basic lemmas about random walks. We will use the terminology random walk for any Markov chain of the form

$$S_n = S_0 + \sum_{k=1}^n \xi_k, \qquad n \ge 0,$$

where the \(\xi _k\)’s are independent, identically distributed random variables with values in \(\mathbb Z^d\) (d is the dimension of the random walk). The special case where \(\xi _1\) is supported on the origin and its 2d nearest neighbors is called a nearest neighbor random walk, and the case where \(\xi _1\) is uniformly distributed on the 2d nearest neighbors of the origin is called a simple symmetric random walk (SSRW). The following statement is arguably the most important property of random walks with finite variance.

Lemma 1

(Invariance principle) Consider a d-dimensional random walk \(S_n = \sum _{k=1}^n \xi _k\), where the \(\xi_k\) are i.i.d. and \(\xi _1\) has zero expectation and finite covariance matrix \(\Sigma \), and let \(W_n =(W_n(t))_{t \in [0,1]}\) be the random process defined by \(W_n(\frac{k}{n}) =\frac{S_k}{ \sqrt{n}}\) for \(k = 0, 1, \dots , n\), with linear interpolation between \(\frac{k}{n}\) and \(\frac{k+1}{n}\). Then as \(n \rightarrow \infty \), \(W_n\) converges weakly to the d-dimensional Brownian motion on [0, 1] with covariance matrix \(\Sigma \).
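A quick numerical check of the \(\sqrt{n}\) normalization in Lemma 1: for a SSRW on \(\mathbb Z^d\), each step moves one uniformly chosen coordinate by \(\pm 1\), so its covariance matrix is \(\Sigma = I/d\), and the endpoint \(W_n(1) = S_n/\sqrt{n}\) should have covariance close to I/d. This is a hedged Monte Carlo sketch; the function name and sample sizes are our own.

```python
import numpy as np


def rescaled_endpoint_samples(n, d, n_walks=10_000, seed=0):
    """Samples of W_n(1) = S_n / sqrt(n) for a d-dimensional SSRW started at 0."""
    rng = np.random.default_rng(seed)
    axes = rng.integers(0, d, size=(n_walks, n))       # coordinate moved at each step
    signs = rng.choice((-1, 1), size=(n_walks, n))     # direction of that move
    S_n = np.zeros((n_walks, d))
    for a in range(d):
        # Sum the +-1 moves that were made along coordinate a.
        S_n[:, a] = np.where(axes == a, signs, 0).sum(axis=1)
    return S_n / np.sqrt(n)
```

Dividing by \(\sqrt{k}\) instead of \(\sqrt{n}\) would make every \(W_n(k/n)\) have covariance of order one, which is inconsistent with a Brownian limit started at 0.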

Lemma 2

(Harmonic measure) Let \(x \in \mathcal D \subset \mathbb R^d\) where \(\mathcal D\) is as above, and let \(\tau \) be the first hitting time of \(\partial \mathcal D\) for a Brownian motion \(B^x_t\) starting from x. Then

$$\mathbb E\left( T(B^x_\tau) \right) = u(x),$$

where u is given by (2).

The next result is a combination of the last two, together with a small perturbation in starting location. A version of this result likely exists in the literature, but we are unable to locate a reference. Since our proofs rely heavily on Proposition 3, we have included its proof in the Appendix.

Proposition 3

Let \(x \in \mathcal D \subset \mathbb R^d\) be as above, and let \(\varepsilon >0\) be given. Then there exist \(\delta >0\) and \(L_0\) such that the following holds true for all \(L>L_0\): Let \(S_n\) be a SSRW on \(\mathbb Z^d\) with \(\Vert S_0 - xL\Vert < \delta L\), and let \(\tau \) be the smallest n such that \(S_n \in \mathcal B_L\). Then
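Lemma 2 and Proposition 3 together say that, for large L, averaging T over the exit points of a SSRW started near xL approximates u(x). The following Monte Carlo sketch illustrates this under our own assumptions: \(\mathcal D\) is the unit square, and a walk is stopped at the first lattice point with a coordinate equal to 0 or L (our stand-in for hitting \(\mathcal B_L\)). The function names are hypothetical. For boundary data x or \(x^2 - y^2\), which are harmonic both in the continuum and for the lattice walk, the estimate should match the starting value closely.

```python
import numpy as np

STEPS = np.array([[1, 0], [-1, 0], [0, 1], [0, -1]])


def exit_points(start, L, n_walks=20_000, seed=0):
    """Run SSRWs from `start` until they leave {1, ..., L-1}^2 (D = unit square)."""
    rng = np.random.default_rng(seed)
    pos = np.tile(np.asarray(start, dtype=int), (n_walks, 1))
    alive = np.ones(n_walks, dtype=bool)
    while alive.any():
        idx = np.flatnonzero(alive)
        pos[idx] += STEPS[rng.integers(0, 4, size=idx.size)]
        # A walker stops at the first site with a coordinate equal to 0 or L.
        out = ((pos[idx] <= 0) | (pos[idx] >= L)).any(axis=1)
        alive[idx[out]] = False
    return pos


def hitting_estimate(T, start, L, **kwargs):
    """Monte Carlo estimate of E[T(S_tau / L)] for the walks above."""
    pts = exit_points(start, L, **kwargs) / L
    return T(pts[:, 0], pts[:, 1]).mean()
```

Starting from (6, 10) with L = 20, i.e. x = (0.3, 0.5), the two test functions should give about 0.3 and \(0.09 - 0.25 = -0.16\).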

We will also use the following estimate on moderate deviations. It is a consequence of e.g. Theorem 1 in [25], Section VIII.2:

Lemma 3

Let \(\xi _n\) be bounded i.i.d real-valued random variables with variance \(\sigma ^2>0\). There is a constant \(c_1\) such that for any \(n \ge 1\) and for any R with \(1 < R < n^{1/6}\),

The reason for the present review is that instead of studying \(\varvec{X}^{(L)}_t\) directly, we will transform the problem into one involving certain stochastic processes in which a finite number of walkers perform SSRW on \(\mathbb Z^d\). These walks are independent when the walkers are at distinct sites, but when they meet, there is a tendency for them to stick together for some random time. We will need to show that in terms of hitting distributions such as those in Lemma 2, the situation in the \(L \rightarrow \infty \) limit is as though the walks were independent. This is clearly related to the question of how often two walkers meet, a property well known to be dimension dependent.

(1) \(d=1\). The local time of a SSRW in dimension 1 at the origin up to time n is \(\sim \sqrt{n}\). More precisely, the local time up to n, rescaled by \(\sqrt{n}\), converges weakly to the absolute value of a standard normal random variable (see [8]). Since the typical time needed to leave the interval \([-L,L]\) is \(O(L^2)\) by the invariance principle, two independent walkers will meet \(\sim L\) times before leaving the interval \([-L,L]\).

(2) \(d=2\). The local time of a SSRW in dimension 2 at the origin up to time n is \(\sim \log n\), so that two independent walkers meet \(\sim \log L\) times before leaving the domain \(\mathcal D_L\).

(3) \(d>2\). SSRWs in dimensions \(d>2\) are transient, meaning two independent walkers almost surely meet only finitely many times.
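The one-dimensional claim in (1), that two independent walkers meet of order L times before leaving an interval of length of order L, can be probed numerically. In this sketch (our own setup, not from the paper) both walkers start at the midpoint of (0, L), step simultaneously, and we count the times they occupy the same site before either one reaches 0 or L; doubling L should roughly double the average count.

```python
import numpy as np


def mean_meetings_1d(L, n_trials=2_000, seed=0):
    """Average number of times two independent 1-D SSRWs, both started at L // 2,
    occupy the same site before either of them reaches 0 or L."""
    rng = np.random.default_rng(seed)
    a = np.full(n_trials, L // 2)
    b = np.full(n_trials, L // 2)
    alive = np.ones(n_trials, dtype=bool)
    meetings = np.zeros(n_trials, dtype=int)
    while alive.any():
        idx = np.flatnonzero(alive)
        a[idx] += rng.choice((-1, 1), size=idx.size)
        b[idx] += rng.choice((-1, 1), size=idx.size)
        exited = (a[idx] <= 0) | (a[idx] >= L) | (b[idx] <= 0) | (b[idx] >= L)
        alive[idx[exited]] = False
        still = idx[~exited]
        meetings[still] += (a[still] == b[still])   # count a meeting at this step
    return meetings.mean()
```

The same experiment in two or three dimensions would show much slower (logarithmic) growth or a bounded count, in line with (2) and (3).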

These observations have prompted us to proceed as follows: We will first treat the \(d=2\) case by comparing the process in question to independent walkers. Once that is done, we will observe that a simplified version of the argument immediately gives the results for \(d>2\). Dimension one is treated differently: the large number of encounters makes it difficult to compare the stochastic processes above to independent walkers. Instead, we make use of the meetings of the walkers to show that their identities can be switched; see Sect. 3.3.

We collect below two other dimension-dependent facts about random walks that will be used in the sequel.

Lemma 4

(\(d=2\)) Let \(\mathcal D \subset \mathbb R^2\) be as above. We fix \(\beta \in (0,1)\), \(C<\infty \) and \(\varepsilon >0\), and let \(W_n\) be a SSRW on \(\mathbb Z^2\). Then

for all L large enough.

It is a well known fact in the probabilistic literature that the left hand side of (6) converges to \(\beta \) as \(L \rightarrow \infty \) (see for instance Proposition 1.6.7 in [21]). This implies Lemma 4.

For completeness, we provide a heuristic justification for this cited result: Note that \(W_n\) converges to a Brownian motion after rescaling. Since \(\log \Vert B_t\Vert \) is a (local) martingale when \(B_t\) is a planar Brownian motion started away from the origin, it is not difficult to deduce that if \(B_0 \ne 0\), then the probability that \(\Vert B_t\Vert \) reaches \(2\Vert B_0\Vert \) before reaching \(\Vert B_0\Vert /2\) is 1/2. Consequently,

For \(k \in \mathbb Z\), let

and define the random variables \(t_j, k_j\) in the following way:

and \(k_1\) is such that \(W_{t_1} \in \partial \mathcal C_{2^{k_1}}\). Inductively, we define

and \(k_j\) such that \(W_{t_j} \in \partial \mathcal C_{2^{k_j}}\). Similarly to (7), we see that \(k_j \approx \log _2 \Vert W_{t_j}\Vert \) is approximately a one dimensional SSRW for L large with starting position \(\beta \log _2 L\). A simple computation (often referred to as gambler’s ruin) gives that
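The gambler's ruin computation invoked here is the standard fact that a one-dimensional SSRW started at a, with 0 < a < N, reaches N before 0 with probability a/N. Reading the heuristic as a ≈ β log₂ L and N ≈ log₂ L (our interpretation), this ratio is β. A small Monte Carlo sketch of the ruin probability, with hypothetical names:

```python
import numpy as np


def prob_hit_top_first(a, N, n_walks=20_000, seed=0):
    """Fraction of 1-D SSRWs started at a (0 < a < N) that reach N before 0."""
    rng = np.random.default_rng(seed)
    pos = np.full(n_walks, a)
    alive = np.ones(n_walks, dtype=bool)
    while alive.any():
        idx = np.flatnonzero(alive)
        pos[idx] += rng.choice((-1, 1), size=idx.size)
        # Walks are absorbed at either end of {0, ..., N}.
        alive[idx[(pos[idx] == 0) | (pos[idx] == N)]] = False
    return np.mean(pos == N)
```

For a = 3 and N = 10 the estimate should be close to 3/10.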

The following is a well known property of high dimensional random walks.

Lemma 5

(\(d>2\)) In dimensions \(d>2\), any non-degenerate random walk is transient, i.e., \(\Vert S_n\Vert \rightarrow \infty \) as \(n \rightarrow \infty \) with probability 1.

3.2 Moments of Exponential Random Variables

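Let \(\lambda > 0\) and \(s \in \mathbb Z^+\). The moments of s independent exponential random variables \(X_1, \dots , X_s\) with parameter \(\lambda \) are given by

$$\begin{aligned} \mathbb E\left( X_1^{n_1} \cdots X_s^{n_s} \right) = \prod_{i=1}^s \frac{n_i!}{\lambda^{n_i}}. \end{aligned} \tag{8}$$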

Conversely, if \(X_1, \dots , X_s\) are such that their joint moments are given by (8) for all \((n_1, \dots , n_s) \in \{0,1,2, \dots \}^s\), then they are independent exponential random variables with parameter \(\lambda \). See for instance [28].
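Formula (8) is easy to check numerically, keeping in mind that "parameter \(\lambda\)" is the rate, so each \(X_i\) has mean \(1/\lambda\). The sketch below compares the closed form with a Monte Carlo average; the function names are hypothetical.

```python
import math

import numpy as np


def exact_joint_moment(ns, lam):
    """Right-hand side of (8): prod_i n_i! / lam**n_i."""
    return math.prod(math.factorial(n) / lam**n for n in ns)


def mc_joint_moment(ns, lam, n_samples=1_000_000, seed=0):
    """Monte Carlo estimate of E[X_1^{n_1} ... X_s^{n_s}] for i.i.d. Exp(rate lam)."""
    rng = np.random.default_rng(seed)
    X = rng.exponential(1.0 / lam, size=(n_samples, len(ns)))   # scale = mean = 1/lam
    return np.prod(X ** np.asarray(ns), axis=1).mean()
```

For example, with \(\lambda = 2\) and \((n_1, n_2) = (1, 2)\), formula (8) gives \((1!/2)(2!/4) = 1/4\).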

3.3 Exchangeability

We also review very briefly the notion of exchangeable random variables, which will be used in the sequel.

Definition 1

The infinite sequence of random variables \(X_1, X_2, \ldots \) is called exchangeable if for any finite N and any permutation \(\sigma \) of \(\{1,2,\dots , N\}\), the random vectors \((X_1, \ldots , X_N)\) and \((X_{\sigma (1)}, \ldots , X_{\sigma (N)})\) have the same distributions.

The following result is well known; it was proved by de Finetti in [9]:

Theorem 5

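(de Finetti’s Theorem) If \(X_1, X_2, \ldots \) is a sequence of \(\{0,1\}\)-valued exchangeable random variables, then there exists a distribution function F on [0, 1] such that for all n and all \(x_i \in \{0,1\}\),

$$\mathbb P\left( X_1 = x_1, \dots , X_n = x_n \right) = \int_0^1 \theta^{\sum_{i=1}^n x_i} (1-\theta)^{n - \sum_{i=1}^n x_i} \, dF(\theta).$$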
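A classical illustration, not taken from this paper, is the Pólya urn started with one ball of each colour, where each drawn ball is returned together with one extra ball of the same colour. The resulting 0/1 draw sequence is exchangeable, and its de Finetti measure F is uniform on [0, 1], so a sequence with k ones among n draws has probability \(\int_0^1 \theta^k (1-\theta)^{n-k} d\theta = k!(n-k)!/(n+1)!\). The sketch below verifies this with exact rational arithmetic; the function names are hypothetical.

```python
from fractions import Fraction
from itertools import permutations
from math import factorial


def polya_sequence_prob(seq):
    """Exact probability of a 0/1 draw sequence from a Polya urn that starts with
    one ball of each colour and adds one ball of the drawn colour after each draw."""
    ones, zeros = 1, 1
    prob = Fraction(1)
    for x in seq:
        if x == 1:
            prob *= Fraction(ones, ones + zeros)
            ones += 1
        else:
            prob *= Fraction(zeros, ones + zeros)
            zeros += 1
    return prob


def mixture_prob(seq):
    """Integral of theta^k (1 - theta)^(n - k) against the uniform F on [0, 1]."""
    n, k = len(seq), sum(seq)
    return Fraction(factorial(k) * factorial(n - k), factorial(n + 1))
```

The probability depends on the sequence only through the number of ones, which is exactly exchangeability.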

4 Duality

In this section we introduce another process \({\varvec{Y}_t}\) and a function F, and show that \({\varvec{X}_t}\) and \({\varvec{Y}_t}\) are dual with respect to the function F in a sense to be made precise. We explain also how to leverage duality to prove some of the asserted results in Sect. 2.2.

4.1 Motivation and Definition of a “Dual Process”

The idea is as follows: For the process \(\varvec{X}_t=\varvec{X}^{(L)}_t\), consider the marginal energy distributions of \(\mu ^{(L)}\) at \(v \in \mathcal D_L\). These distributions reflect what the particles bring to site v from the bath, and that in turn is reflected in which parts of \(\mathcal B_L\) a particle visits prior to its arrival at site v; in reality, though, things are a bit more complicated: particles interact with all the sites they pass through on their way to v. Nevertheless, accepting this simplified picture for the moment, we can reverse the trajectories of the particles, and think of them as carrying certain “packets” from site v to the bath. The locations in \(\mathcal B_L\) at which these packets are deposited will then tell us from which parts of \(\mathcal B_L\) energies were drawn in the original process, thereby revealing the composition of the steady state distribution \(\mu ^{(L)}\) of \({\varvec{X}_t}\) at v. An advantage of studying the reverse process is that it is reminiscent of hitting distributions of Brownian motion, or harmonic measures on \(\partial \mathcal D\). These are the ideas behind the duality formulation discussed in this section.

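For \(\mathcal D \subset \mathbb R^d\) and L as in Sect. 2.1, we now introduce a Markov process \({\varvec{Y}^{(L)}_t}\) designed to carry packets from the sites in \(\mathcal D_L\) to \(\mathcal B_L\). This process also involves \(M=M(L)\) particles. Let \(\mathbb N\) denote the set of non-negative integers. The variables in \({\varvec{Y}^{(L)}_t}\) are

$$\left( (n_v)_{v \in \mathcal D_L}, (\hat{n}_v)_{v \in \mathcal B_L}, (\tilde{n}_j)_{j=1}^M, (Y_j)_{j=1}^M \right) \in \mathbb N^{\mathcal D_L} \times \mathbb N^{\mathcal B_L} \times \mathbb N^{M} \times \mathcal D_L^M.$$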

Here, \(n_{v}\) is to be interpreted as the number of packets at site \(v \in \mathcal D_L\), \(\hat{n}_{v}\) the number of packets at \(v \in \mathcal B_L\), \(\tilde{n}_{j}\) the number of packets carried by particle j, and \(Y_j\) the position of particle j. (We distinguish between packets that have been dropped off at a site and packets that are carried by particles: \(n_v\) counts packets that have been dropped off at site v, and does not include packets carried by particle j even when \(Y_j=v\).) The generator of the process \({\varvec{Y}_t}\) is given by

$$A_1 + A_2,$$

where \(A_1\) corresponds to movements inside \(\mathcal D_L\) and \(A_2\) is the part describing the interaction with the boundary. Formally, we have

where the quantity inside the parenthesis is

with

Note the difference between (1) and (9): In \({\varvec{X}_t}\), when a particle’s clock rings, it pools its energy together with that at the site at which it is located, and carries a random fraction of it as it jumps to a new site. In \({\varvec{Y}_t}\), the order is reversed: when a particle’s clock rings, it jumps to a new site, then pools together the packets it is carrying with those at the new site, and takes a random fraction, assuming this new site z is inside \(\mathcal D_L\). The second half of the generator treats the case where \(z \in \mathcal B_L\):

where the quantity inside the parenthesis is

with

That is to say, if particle k jumps from site \(v \in \mathcal D_L\) to site \(z \in \mathcal B_L\), then the following occurs instantaneously: it drops off all of the packets it is carrying at site z, returns to site v, and takes a random fraction of the packets located at site v. Once a packet is dropped off in \(\mathcal B_L\), it will remain there permanently, so that as time tends to infinity there will be no packets left in \(\mathcal D_L\).
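The packet dynamics just described can be sketched in a few lines for a one-dimensional toy version. Two ingredients are assumptions of ours and are labeled as such: the bath sites are 0 and L, and "taking a random fraction" of a pool of packets is modeled as keeping each packet independently with probability p, where p ~ Uniform(0, 1) (the paper's exact rule is given by formulas not reproduced here). As in the text, the total number of packets is conserved, and eventually every packet sits in the bath. All names are hypothetical.

```python
import random


def dual_step(state, L, rng):
    """One jump-chain step of a hypothetical 1-D version of Y_t.

    Interior sites are 1, ..., L-1; bath sites are 0 and L.
    Assumption: a random fraction of a pool keeps each packet w.p. p ~ U(0, 1).
    """
    n, n_hat, carried, pos = state["n"], state["n_hat"], state["carried"], state["pos"]
    k = rng.randrange(len(pos))          # particle whose clock rings
    v = pos[k]
    z = v + rng.choice((-1, 1))          # chosen nearest neighbour
    if 0 < z < L:                        # A_1 move: jump to z, then pool at z
        pos[k] = z
        site = z
    else:                                # A_2 move: drop all packets at bath site z, return to v
        n_hat[z] += carried[k]
        carried[k] = 0
        site = v
    pool = n[site] + carried[k]
    p = rng.random()
    take = sum(rng.random() < p for _ in range(pool))
    carried[k], n[site] = take, pool - take


def run_until_absorbed(L=6, M=4, start_site=3, packets=3, seed=1, max_steps=10**6):
    """Start with `packets` packets at `start_site`; run until none remain inside."""
    rng = random.Random(seed)
    n = [0] * (L + 1)
    n[start_site] = packets
    state = {"n": n, "n_hat": {0: 0, L: 0}, "carried": [0] * M,
             "pos": [rng.randint(1, L - 1) for _ in range(M)]}
    for step in range(max_steps):
        if sum(state["n"]) + sum(state["carried"]) == 0:
            return state["n_hat"], step
        dual_step(state, L, rng)
    raise RuntimeError("packets were not absorbed within max_steps")
```

The returned dictionary records where the packets were deposited, which is the information used to identify the points of origin of energies in \(\varvec{X}_t\).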

This completes the definition of the process \({\varvec{Y}^{(L)}_t}\).

4.2 Proof of Pathwise Duality

The function with respect to which duality will be proved is

where \(\underline{x}, \xi _v\) and \(\eta _j\) are as in the definition of \({\varvec{X}_t}\) and the rest are from the definition of \(\varvec{Y}_t\). Notice that F does not depend on the positions of the particles in either \({\varvec{X}_t}\) or \({\varvec{Y}_t}\). For reasons that will become clear, it is convenient to write

where \(\underline{\check{x}} \in \check{\mathcal X} := \mathbb R_+^{\mathcal D_{L}} \times \mathbb R_+^{M}\) denotes the energy coordinates and \(\bar{X} = (X_1, \dots , X_M)\) the positions of the particles. Writing \(\varvec{X}_t = (\underline{\check{x}}_t, \bar{X}_t)\), we observe that \(\bar{X}_t\) consists of M independent continuous-time random walks on \(\mathcal D_L\), with “reflection” at \(\partial \mathcal D_L\) as defined earlier. Likewise, \(\underline{\check{n}} \in \check{\mathcal Y} := \mathbb N^{\mathcal D_L} \times \mathbb N^{ \mathcal B_L} \times \mathbb N^{M}\) gives the number of packets at the various sites or carried by particles, while \(\bar{Y} = (Y_1, \dots , Y_M)\) denotes the positions of the particles; and if \(\varvec{Y}_t = (\underline{\check{n}}_t, \bar{Y}_t)\), then \(\bar{Y}_t\) has the same description as \(\bar{X}_t\). Notice that F is really a function of \((\underline{\check{x}}, \underline{\check{n}})\).

We now formulate a version of pathwise duality, counting sample paths of \(\bar{X}_t\) (or \(\bar{Y}_t\)) in the following way: We say a “move” in \(\bar{X}_t\) occurs when either (a) a particle jumps from \(z \in \mathcal D_L\) to \(w \in \mathcal D_L\) or (b) it jumps from \(z \in \mathcal D_L\) to \(w \in \mathcal B_L\) and jumps back immediately, with the convention that different \(w \in \mathcal B_L\) give distinct sample paths. Now fix a time interval \((0, \tau )\), and let \(t_1< t_2 < \dots < t_n\) be the times in \((0,\tau )\) at which \(\bar{X}_t\) moves. To avoid discussing \(t_i^\pm \) (i.e. just before or after \(t_i\)), we let \(s_0=0, s_n=\tau \), fix arbitrarily \(s_i \in (t_i, t_{i+1})\) for \(i=1,\dots , n-1\), and agree to abbreviate this sample path as \(\varvec{\sigma }= (\sigma _0, \sigma _1, \dots , \sigma _n)\) where \(\sigma _i = \bar{X}_{s_i}\), with the understanding that in case (b), both the particle and the bath location involved are specified. We also use the notation \(\varvec{\sigma }^{-1} = (\sigma _n, \dots , \sigma _0)\) to denote the sample path corresponding to \(\varvec{\sigma }\) parameterized backwards in time, i.e., for \(\varvec{\sigma }^{-1}\), moves are made at times \(\hat{t}_1 < \dots < \hat{t}_n\) where \(\hat{t}_i = \tau - t_{n+1-i}\); and at times \(\hat{t}_1, \hat{t}_2, \dots \), the moves of \(\varvec{\sigma }^{-1}\) are the reverse of those in \(\varvec{\sigma }\) at times \(t_n, t_{n-1}, \dots \).
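The reversal \(\varvec{\sigma }\mapsto \varvec{\sigma }^{-1}\) is purely combinatorial, and a short sketch may help fix the indexing \(\hat{t}_i = \tau - t_{n+1-i}\). The move encoding below is our own choice: a move of type (a) is `("inner", k, a, b)` for particle k jumping from a to b, and a move of type (b) is `("bath", k, z, w)` for a round trip from z to the bath site w and back, which is its own reverse.

```python
def reverse_path(times, moves, tau):
    """Reverse a sample path of moves on (0, tau) (a sketch with our own encoding).

    times: increasing move times t_1 < ... < t_n in (0, tau).
    moves: moves[i] is ("inner", k, a, b) or ("bath", k, z, w), made at times[i].
    Returns the times tau - t_{n+1-i} and the reversed moves, in order.
    """
    rev_times = [tau - t for t in reversed(times)]
    rev_moves = []
    for kind, k, a, b in reversed(moves):
        if kind == "inner":          # a jump a -> b inside D_L becomes b -> a
            rev_moves.append((kind, k, b, a))
        else:                        # a bath round trip z -> w -> z is unchanged
            rev_moves.append((kind, k, a, b))
    return rev_times, rev_moves
```

Reversing twice returns the original path, matching \((\varvec{\sigma }^{-1})^{-1} = \varvec{\sigma }\).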

Our one-step duality lemma reads as follows:

Lemma 6

For any fixed \(\underline{\check{x}} \in \check{\mathcal X}\), \(\underline{\check{n}} \in \check{\mathcal Y}\), and any sample path \(\varvec{\sigma }= (\sigma _0, \sigma _1)\) on \([0,\tau ]\), we have

Proof

The sample path \(\varvec{\sigma }= (\sigma _0, \sigma _1)\) describes exactly one move on the time interval \((0,\tau )\). We consider separately the two cases corresponding to the two terms in the generator G of \(\varvec{X}_t\) (see Sect. 2.1).

Case 1 Particle k jumps from site \(z \in \mathcal D_L\) to site \(w \in \mathcal D_L\). The term corresponding to k and w in \(G_1F\) can be written as \(I \cdot II\), where

and

From this we deduce that

Thus \(I \cdot II\) is the term corresponding to \(A_1F\) in the generator of \(\varvec{Y}_t\) with indices k and z.

Case 2 Particle k jumps from \(z \in \mathcal D_L\) to \(w \in \mathcal B_L\) and back. The term corresponding to k in \(G_2F\) and \(w \in \mathcal B_L\) can again be written as \(I \cdot II\), where I is as above and

From this we obtain

In the last equality, we used the simplified version of the computation of II in Case 1 (by letting \(\tilde{n}_k =0\)) and computed an elementary integral. We conclude that \(I \cdot II\) is the term corresponding to \(A_2F\) for the indices k and z. \(\square \)

Next we extend Lemma 6 to sample paths involving arbitrary numbers of moves.

Lemma 7

For any fixed \(\underline{\check{x}} \in \check{\mathcal X}\), \(\underline{\check{n}} \in \check{\mathcal Y}\), and any sample path \(\varvec{\sigma }= (\sigma _0, \sigma _1, \ldots , \sigma _m)\) on \([0, \tau ]\), we have

Proof

We proceed by induction on m, the number of moves. The case \(m=1\) is Lemma 6. Assume that the statement has been proved for paths with at most \(m-1\) moves. Letting \(s_1\) be as defined above, we have the following:

\(\square \)

Remarks

The duality statement above is a little more involved than that for Markov processes with disjoint phase spaces (see e.g. Proposition 1.2 in [19]). Here, the phase spaces intersect in the set of particle configurations, and duality is proved one sample path of particle movements at a time; that is why we call it pathwise duality. Note that reversed paths must be used in the dual process to guarantee that Lemma 6 can be applied from one step to the next. Also note that the expression “pathwise duality” has been used in Chap. 4 of [19] in a different context: both the “strong pathwise duality” and the “conditional pathwise duality” defined in [19] imply the usual duality, whereas our notion is a weakening of the usual duality. Finally, we will have to weaken our concept of duality further to treat systems with a positive density of particles; see Sect. 7.

Duality has been used to prove LTE for a number of situations; see [6] and the references therein. Many of the ideas above have their origins in [20], though modifications are needed as energy is not carried by particles in the KMP model. A similar set of ideas was also used in [26], which considers the same model as ours in one space dimension and with only one particle.

4.3 Consequences of Duality

Let \(\underline{\check{x}}_* \in \check{\mathcal X}\) and \(\underline{\check{n}}_* \in \check{\mathcal Y}\) be fixed. Integrating over all sample paths \(\varvec{\sigma }\) of \(\bar{X}_t\) on [0, t], Lemma 7 together with the fact that \(\mathbb P[\varvec{\sigma }] = \mathbb P[\varvec{\sigma }^{-1}]\) implies that

Letting \(t \rightarrow \infty \), the left and right sides of the equation above tend to the two sides of the formula below:

Lemma 8

For any fixed \(\underline{\check{n}}_* \in \check{\mathcal Y}\), we have

where \(\rho _{\underline{\check{n}}_*}\) is the asymptotic distribution of \({\varvec{Y}_t}\) as \(t\rightarrow \infty \) averaged over all \(\bar{Y}\) with uniform distribution, assuming that \({\varvec{Y}_0} = (\underline{\check{n}}_*, \bar{Y})\).

That the left side of (10) converges to the left side of (11) as \(t \rightarrow \infty \) follows from the fact that the distribution of \(\varvec{X}_t\) converges to \(\mu ^{(L)}\) (Proposition 2). The limit on the right side clearly exists, since all packets are eventually deposited in \(\mathcal B_L\), resulting in the simplified form of \(F(\check{\underline{n}}, \check{\underline{x}}_*)\) in the integrand. Notice also that the right side does not depend on \(\underline{\check{x}}_*\), consistent with the fact that the convergence to \(\mu ^{(L)}\) on the left is independent of initial condition.

We now identify the relevant choices of \(\check{\underline{n}}_*\): In the proof of LTE for site energies, for example, we fix \(x \in \mathcal D\), \(S \subset \mathbb Z^d\) and nonnegative integers \((n^*_v)_{v \in S}\). If \(\check{\underline{n}}_*\) is chosen so that

then the left side of (11) is equal to

a constant times the \((n^*_v)\)-moments of the distribution \(\mu ^{(L)}_{x,S}\) defined in Sect. 2.2.

Thus the key to understanding \(\mu ^{(L)}\) is \(\rho _{\underline{\check{n}}_*}\). To get a handle on this distribution, we find that instead of working with \(\varvec{Y}_t\), which describes the evolution of the density (or distribution) of packets, it is productive to switch to an equivalent model that focuses directly on the movements of individual packets. Moreover, since only asymptotic distributions matter, we may work with a discrete-time model, as long as the order of the steps is preserved.

Discrete-time version of \(\varvec{Y}_t\) focusing on movements of packets

Consider a Markov chain \({\varvec{Z}_k}= {\varvec{Z}_k}^{(L)}, \ k=0,1,2,\dots \), the variables of which are

with some fixed positive integer N (to be specified later) and the notation \(\uplus \) for disjoint union. The first N coordinates of \(\varvec{Z}_k\) describe the positions of the N (named) packets in the system, the position of packet i at step k being \({\varvec{Z}}_{i,k}\), and the final M coordinates give the positions of the M particles (abusing notation slightly by using Y in both the continuous-time and discrete-time models). The meaning of \({\varvec{Z}}_{i,k} \in \mathcal D_L \uplus \mathcal B_L\) is obvious, and \({\varvec{Z}}_{i,k} =j\) means packet i is carried by particle j at step k. The transition probabilities of \(\varvec{Z}_k\) are as follows: Given \(\varvec{Z}_{k}\), we choose with equal probability one of the M particles, say particle j, and choose with equal probability one of particle j’s neighboring sites, say w. If \(w \in \mathcal D_L\), then we set \(Y_{j,k+1}=w\) and mix the packets carried by particle j with those at site w by pooling them together and designating a random fraction of them to be carried by particle j and the rest to be left at site w. If \(w \in \mathcal B_L\), then all the packets carried by particle j are dropped off at site w, and \(Y_{j,k+1}=Y_{j,k}\). Since all the packets are eventually dropped off in \(\mathcal B_L\), \(\varvec{Z}_{i, \infty }:= \lim _{k \rightarrow \infty } \varvec{Z}_{i,k}\) exists for \(1 \le i \le N\) almost surely.
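To make the transition rule concrete, here is a minimal Monte Carlo sketch of \(\varvec{Z}_k\) in dimension 1, where (as in Sect. 6) \(\mathcal D_L=\{0,\dots ,L\}\) and \(\mathcal B_L=\{-1,L+1\}\). Two ingredients are assumptions made only for this illustration. First, particles are taken to move independently, so several may share a site. Second, the “random fraction” in the mixing step is modelled by drawing U uniformly from (0, 1) and letting the particle keep each pooled packet independently with probability U. This rule makes the number of kept packets uniform, which reproduces the probabilities 1/3 used in Lemma 10. The function name `simulate_packets` is hypothetical.

```python
import random


def simulate_packets(L, packet_sites, particle_sites, rng, max_steps=10**7):
    """Run the discrete-time packet chain on D_L = {0,...,L} with baths at -1 and L+1.

    packet_sites: initial sites (in D_L) of the N packets, all lying on sites at step 0.
    particle_sites: initial sites of the M particles.
    Returns the bath site (-1 or L+1) where each packet is finally deposited.
    Assumptions: independent particles; uniform-fraction mixing rule.
    """
    baths = (-1, L + 1)
    M = len(particle_sites)
    Y = list(particle_sites)                    # Y[j] = current site of particle j
    # loc[i] = ("site", z) if packet i lies at site z, ("carried", j) if carried by particle j
    loc = [("site", z) for z in packet_sites]
    for _ in range(max_steps):
        if all(kind == "site" and a in baths for kind, a in loc):
            return [a for _, a in loc]
        j = rng.randrange(M)                    # choose a particle uniformly
        w = Y[j] + rng.choice((-1, 1))          # choose one of its neighbours uniformly
        carried = [i for i, (kind, a) in enumerate(loc) if kind == "carried" and a == j]
        if w in baths:
            for i in carried:                   # drop every carried packet at the bath
                loc[i] = ("site", w)
            continue                            # the particle itself does not move
        Y[j] = w
        at_w = [i for i, (kind, a) in enumerate(loc) if kind == "site" and a == w]
        u = rng.random()                        # random fraction (assumed uniform)
        for i in carried + at_w:                # pool, then keep each packet w.p. u
            loc[i] = ("carried", j) if rng.random() < u else ("site", w)
    raise RuntimeError("packets did not all reach the bath within max_steps")
```

For example, `simulate_packets(4, [2, 2], [0, 3], random.Random(1))` returns the two bath sites at which the packets starting at site 2 are deposited. Repeating this over many seeds gives an empirical version of the joint law of \((\varvec{Z}_{1,\infty }, \varvec{Z}_{2,\infty })\).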

Let \(x \in \mathcal D, S \subset \mathbb Z^d\), and \(L \gg 1\) be fixed. Associated with each

is the Markov chain \(\varvec{Z}_k\) whose initial condition \(\varvec{Z}_0=(\check{\underline{n}}_0, \bar{Y}_0)\) is given by the following: \(\check{\underline{n}}_0\) is prescribed by \(\check{\underline{n}}_*\), i.e. at time 0, there are \(n^*_v\) packets at site \(\langle xL \rangle + v\) and \(\hat{n}^*_v\) packets at site \(v \in \mathcal B_L\), \(\tilde{n}^*_j\) packets are carried by particle j, and \(\bar{Y}_0\) is uniformly distributed among all particle configurations in \(\mathcal D_L\). We claim—and leave it to the reader to check—that \(\varvec{Y}_t\) and \(\varvec{Z}_k\) differ only in the identity of individual packets and time changes that preserve the order of the moves, so they have the same asymptotic distribution, i.e.

where \(\mathbb E\) is with respect to the evolution of the process \(\varvec{Z}_k\) and

For future reference,

where U is the uniform distribution over all particle configurations \(\bar{Y}\).

We are now ready to prove Theorem 1.

Proof of Theorem 1

Let \(\check{\underline{n}}_*\) be such that

Then the left side of (11) is equal to \(\mathbb E^{(L)}(\xi _{\langle xL \rangle })\), and the right side is given by \(\int T\left( \frac{v}{L} \right) \mathrm {d}\rho _{\underline{\check{n}}_*}\), where \(\rho _{\underline{\check{n}}_*}\) is the asymptotic distribution of \(\varvec{Z}_{1,\infty }\) in the Markov chain above with \(N=1\) and \(\varvec{Z}_{1,0} = \langle xL \rangle \). From the transition probabilities of \(\varvec{Z}_k\), it is clear that if we (i) disregard waiting times, i.e. times at which \(\varvec{Z}_{1,k}\) does not change, and (ii) view the location of the packet when it is carried by particle j as \(Y_{j,k}\), then the trajectories of \(\varvec{Z}_{1,k}\) are those of a SSRW on \(\mathcal D_L\). By Proposition 3, as \(L \rightarrow \infty \) the distribution of \(\varvec{Z}^{(L)}_{1,\infty }\) rescaled back to \(\partial \mathcal D\) is the hitting probability of Brownian motion starting from \(x \in \mathcal D\). Hence \(\int T\left( \frac{v}{L} \right) \mathrm {d}\rho _{\underline{\check{n}}_*} \rightarrow u(x)\) where u is the solution of Laplace’s equation with boundary condition T. \(\square \)
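For finite L, the quantity \(\int T\left( \frac{v}{L} \right) \mathrm {d}\rho _{\underline{\check{n}}_*}\) is the mean of T over the exit point of SSRW, so it solves a discrete Dirichlet problem. The sketch below takes \(\mathcal D\) to be the unit square purely for illustration. It uses lattice points \((i,j)\), \(0\le i,j\le L\), with the boundary points playing the role of \(\mathcal B_L\) and boundary data \(T(i/L,j/L)\). It then runs Gauss-Seidel sweeps of the mean-value property. The name `discrete_dirichlet` is hypothetical.

```python
def discrete_dirichlet(L, T, sweeps=10000, tol=1e-12):
    """Solve the discrete Dirichlet problem on the lattice square {0,...,L}^2.

    Boundary lattice points (i or j in {0, L}) carry the data T(i/L, j/L). Interior
    values satisfy the discrete mean-value property, so u[i][j] is the expected value
    of T at the exit point of SSRW started from (i, j).
    """
    u = [[0.0] * (L + 1) for _ in range(L + 1)]
    for i in range(L + 1):
        for j in range(L + 1):
            if i in (0, L) or j in (0, L):
                u[i][j] = T(i / L, j / L)
    for _ in range(sweeps):
        change = 0.0
        for i in range(1, L):
            for j in range(1, L):
                new = 0.25 * (u[i - 1][j] + u[i + 1][j] + u[i][j - 1] + u[i][j + 1])
                change = max(change, abs(new - u[i][j]))
                u[i][j] = new                   # Gauss-Seidel: reuse updated values
        if change < tol:
            break
    return u
```

With \(T(x,y)=x^2-y^2\), which is harmonic and also exactly discrete-harmonic on the lattice, the computed interior values agree with T. For example, the value at \((i,j)=(3,7)\) for \(L=10\) is approximately \(-0.4\).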

Next we observe that Theorem 2 reduces to the following proposition, whose proof will occupy the next two sections.

Proposition 4

Let \(d \ge 1\), \(\mathcal D \subset \mathbb R^d\), T on \(\partial \mathcal D,\ x \in \mathcal D\) and \(S \subset \mathbb Z^d\) be prescribed. We fix also \(\check{\underline{n}}_* =((n^*_v)_{ v \in S}, 0, 0, \dots , 0)\), and let \(\varvec{Z}^{(L)}_k\) be the Markov chain associated with \(\check{\underline{n}}_*\). Then letting \(N= \sum _{v\in S} n^*_v\), we have

Proof of Theorem 2 assuming Proposition 4

We first prove the result assuming tightness of the sequence \(\left( \mu ^{(L)}_{x,S} \right) _{L=1,2,\ldots }\). Let \(\mu _\infty \) be a weak limit point. Then putting together (11), (14) and Proposition 4, we see that the moments of \(\mu _\infty \) are those of a product of exponential distributions with parameter \(\beta (x)=u(x)^{-1}\). Hence \(\mu _\infty \) is such a product; see Sect. 3.2. Since this is true for all limit points of \(\mu ^{(L)}_{x,S}\), we conclude that \(\mu ^{(L)}_{x,S}\) converges weakly to the measure claimed.

It suffices to prove tightness one coordinate at a time, so we may assume \(S=\{v\}\). Then the same reasoning (with \(n^*_v=2\) in Proposition 4) implies that \(\sup _L \mathbb E^{(L)}(\xi _{\langle xL \rangle + v}^2) \le C\) for some \(C< \infty \). Chebyshev’s inequality (Markov’s inequality applied to \(\xi _{\langle xL \rangle + v}^2\)) then gives \(\mathbb P^{(L)}(\xi _{\langle xL \rangle + v} >n) \le \frac{C}{n^2}\) for all L, proving tightness. \(\square \)

We close this section with the following lemma.

Lemma 9

Let \({\varvec{Z}_k}\) be a system with N packets, \({\varvec{Z}^+_k}\) be another system with \(N+1\) packets, and suppose both have the same number of particles. Assume further that \(\varvec{Z}^+_{i,0} = \varvec{Z}_{i,0}\) for \(i=1,\dots , N\), and \(Y^+_{j,0}=Y_{j,0}\) for all j. Then with \(\varvec{Z}_k\) coupled to the corresponding coordinates in \(\varvec{Z}^+_k\) in the natural way, we have \(\varvec{Z}^+_{i,k}=\varvec{Z}_{i,k}\) for all \(i=1, \dots , N\) and \(k \ge 1\).

Proof

Without loss of generality, suppose that at step k, particle 1 jumps from site z to site w, and that the union of the packets carried by this particle or at site w prior to the mixing are labelled \(\{1, \dots , n\}\). Then the probability that after the mixing, the set of packets carried by particle 1 is exactly \(\{j_1, \ldots , j_l\} \subset \{1,2,\ldots ,n\}\) is given by

If packet \(N+1\) is neither carried by particle 1 nor at site w, then clearly the mixing of the first N packets is not disturbed. If it is among the pooled packets, we compute

Hence the dynamics of the first N packets are unaffected. They are also clearly unaffected if particle 1 drops off its packets at the bath. \(\square \)
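Lemma 9 rests on a consistency property of the mixing rule. Suppose, as an assumption for this illustration, that the rule is the uniform-fraction rule. Then a particular subset of size l among n pooled packets is kept with probability \(\int _0^1 u^l(1-u)^{n-l}\,\mathrm du = l!\,(n-l)!/(n+1)!\). Summing over whether packet \(n+1\) is kept gives \(\frac{l!(n-l)!}{(n+2)!}\big [(n+1-l)+(l+1)\big ] = \frac{l!(n-l)!}{(n+1)!}\), which is the n-packet probability. The sketch below checks this identity in exact rational arithmetic. The helper names are ours.

```python
from fractions import Fraction
from math import comb, factorial


def p_subset(n, l):
    """Probability that the particle keeps one particular subset of size l out of n
    pooled packets, under the assumed uniform-fraction rule: l! (n - l)! / (n + 1)!."""
    return Fraction(factorial(l) * factorial(n - l), factorial(n + 1))


def total_probability(n):
    """Sum of p_subset over all subsets of the n pooled packets; should equal 1."""
    return sum(comb(n, l) * p_subset(n, l) for l in range(n + 1))


def marginal_unchanged(n):
    """Adding packet n+1 (kept or left behind) does not change the law of the kept subset of 1..n."""
    return all(p_subset(n + 1, l) + p_subset(n + 1, l + 1) == p_subset(n, l)
               for l in range(n + 1))
```

For \(n=2\), each single packet is kept alone with probability \(p_{\text {subset}}(2,1)=1/6\). The chance of keeping exactly one of the two is therefore 1/3, in line with Scenario 2 of Lemma 10.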

Remark

An implication of Lemma 9 is that when \(N>1\), the motion of each individual packet, when seen in the light of (i) and (ii) in the proof of Theorem 1, is a SSRW. Thus Proposition 4 is proved if these SSRW are independent, or close enough to being independent. This is what we will show.

5 LTE for Site Energies: \(d\ge 2\)

In Sect. 5.1, we introduce, mostly for convenience, a small modification of the process \(\varvec{Z}_n\). This modified process is used a great deal in the pages to follow. Sect. 5.2 contains the proof of LTE for site energies (Theorem 2) for \(d=2\). Due to the transience of SSRW in dimensions \(d>2\), the proofs for \(d>2\) are simpler; they are given in Sect. 5.3, along with the proof of Theorem 4.

5.1 A Slightly Modified Process

We have seen in the proof of Theorem 1 that with a suitable modification of \(\varvec{Z}_n\), the movement of the packet becomes a SSRW. We now carry out the same type of modification systematically under more general conditions:

The phase space of \(\hat{\varvec{Z}}_n\) is \((\mathcal D_L \cup \mathcal B_L)^N\), and its dynamics are derived from those for \(\varvec{Z}_n\) in the following way: First, let \(\varvec{Z}'_{i,n}= \pi (\varvec{Z}_{i,n})\) where \(\pi (\varvec{Z}_{i,n})=\varvec{Z}_{i,n}\) if \(\varvec{Z}_{i,n} \in \mathcal D_L \cup \mathcal B_L\), and \(\pi (\varvec{Z}_{i,n})=Y_{\ell ,n}\) if \(\varvec{Z}_{i,n}=\ell \). We then let \(t_0=0\), and for \(j=1,2,\dots \), define

Finally, set \(\hat{\varvec{Z}}_{i,n}= \varvec{Z}'_{i,t_n}\). That is to say, first we confuse being at a site and being carried by a particle at that site, and then we collapse the times when there is no action according to this way of bookkeeping.
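The passage from \(\varvec{Z}_n\) to \(\hat{\varvec{Z}}_n\) can be sketched as follows. The sketch reads \(t_j\) as the first step after \(t_{j-1}\) at which the projected configuration \(\varvec{Z}'\) changes, which is our reading of the collapsing rule. Packet positions use the hypothetical encoding ("site", z) or ("carried", j), and the function name `collapse` is ours.

```python
def collapse(trajectory):
    """Build the hat-Z sequence from a Z trajectory (illustrative sketch).

    trajectory: list of (packets, particles) pairs, one per step, where packets[i] is
    ("site", z) or ("carried", j) and particles[j] is the site of particle j.
    Returns the projected packet configurations, keeping only the steps at which they change.
    """
    def project(packets, particles):
        # pi: a packet carried by particle j is placed at particle j's site
        return tuple(a if kind == "site" else particles[a] for kind, a in packets)

    hat = []
    for packets, particles in trajectory:
        z = project(packets, particles)
        if not hat or z != hat[-1]:             # collapse steps with no visible action
            hat.append(z)
    return hat
```

In the example below, a particle picks up packet 0 at site 2 without moving it, so that step is collapsed:
`collapse([([("site", 2), ("site", 2)], [1]), ([("carried", 0), ("site", 2)], [2]), ([("carried", 0), ("site", 2)], [3])])` gives `[(2, 2), (3, 2)]`.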

Remark

We recognize that \(\hat{\varvec{Z}}_n\) is not Markovian (and is not especially nice as a stochastic process). However, the order in which the N packets move about on \(\mathcal D_L \cup \mathcal B_L\) is preserved as we go from \(\varvec{Z}'_n\) to \(\hat{\varvec{Z}}_n\), even as time has been reparametrized. As a consequence, \(\hat{\varvec{Z}}_n\) has the following important properties:

1. For each L, the joint asymptotic distribution of \((\hat{\varvec{Z}}^{(L)}_{1, \infty }, \dots , \hat{\varvec{Z}}^{(L)}_{N, \infty })\) is identical to that of \((\varvec{Z}^{(L)}_{1, \infty }, \dots , \varvec{Z}^{(L)}_{N, \infty })\).

2. Each packet individually performs a SSRW on \(\mathcal D_L \cup \mathcal B_L\) modulo waiting times (during which it stands still).

3. The addition of new packets in the sense of Lemma 9 does not affect the order of movements of packets already under consideration.

When two packets are at the same site, their next moves are not independent. We prove a uniform bound on how long they are likely to stick together:

Lemma 10

Assume \(N=2\), and \(\hat{\varvec{Z}}_{1,k_0} = \hat{\varvec{Z}}_{2, k_0} \not \in \mathcal B_L\). Let \(\kappa \) be the smallest positive integer such that \(\hat{\varvec{Z}}_{1,k_0+\kappa } \ne \hat{\varvec{Z}}_{2, k_0+\kappa }\). Then

Proof

The only way to find out what happens in the \(\hat{\varvec{Z}}\)-process is to go back to the corresponding step in the \(\varvec{Z}\)-process. Below we enumerate all possible scenarios for \(\varvec{Z}_{i,t_{k_0}}, i=1,2\), that correspond to \(\hat{\varvec{Z}}_{1,k_0} = \hat{\varvec{Z}}_{2, k_0}\), and consider for each scenario the probability of the two packets staying together in the next one or two steps:

Scenario 1 At time \(k_0\), exactly one of the packets is carried by a particle, or the two packets are carried by different particles. In both cases, \(\kappa =1\), i.e., they will separate in the next step.

Scenario 2 Both packets are carried by the same particle. Then \(\mathbb P(\kappa =1) = 0\) but \(\mathbb P(\kappa =2) \ge \frac{1}{3}\). Reason: This particle jumps, carrying both packets to the next site, where with probability \(\frac{1}{3}\) it drops one packet and carries the other, a scenario that is guaranteed to lead to \(\kappa =2\).

Scenario 3 Neither packet is carried by a particle. Before the next move can occur, a particle has to enter the site, and with probability \((\frac{1}{3},\frac{1}{3},\frac{1}{3})\), picks up (i) neither, (ii) one, or (iii) both of the packets. If (i) occurs, Scenario 3 is repeated. (ii) and (iii) are followed by Scenarios 1 and 2 respectively. \(\square \)

Notation In this paper, we denote every universal constant by C, so that each occurrence of C may stand for a different number, even in the same line.

5.2 Proof of Theorem 2: \(d=2\)

We now focus on the planar case. Let \(\varvec{Z}_n=\varvec{Z}_n^{(L)}\) be as in Proposition 4, and \(\hat{\varvec{Z}}_n\) the modification of \(\varvec{Z}_n\) as defined in Sect. 5.1. For \(\beta , \delta \in (0,1)\) and \(i,j \in \{1,\dots , N\}\), we define

In general, the definitions of \(\tau _{i,j}\) and \(\mathcal T_i\) depend on packets other than i and j (due to the way we collapse time when going from \(\varvec{Z}_n\) to \(\hat{\varvec{Z}}_n\)), so let us first assume these are the only two packets present.

Lemma 11

Consider \(\mathcal D \subset \mathbb R^2\), and assume \(N=2\). Then for every \(\beta \in (0,1)\) and \(\delta \in (\frac{1-\beta }{3},1-\beta )\) there is a constant \(C= C(\beta , \delta )\) such that

Proof

Our plan is to write

and prove that each of the two terms above is \(<\frac{C}{L^{100}}\) for large enough L.

Consider first the second term. Decomposing the steps before \(\tau _{1,2}\) according to whether \(\hat{\varvec{Z}}_{1,n} = \hat{\varvec{Z}}_{2,n}\), we claim that

where |.| denotes the cardinality of a set. To see that, we let \(a_0 = 0\), and define

Then the process \(U_{n}\) is a planar SSRW as long as it is away from the origin. Whenever the SSRW would reach the origin, \(U_n\) performs two steps of the SSRW, thereby avoiding the origin (more precisely, for any \(x \in \mathbb Z^2\) and any \(e_i,e_j \in \{ (0,1),(0,-1),(1,0),(-1,0)\}\), \(\mathbb P(U_{n+1} = x+ e_i | U_n = x) = 1/4\) if \(x + e_i \ne (0,0)\) and \(\mathbb P(U_{n+1} = e_j | U_n = e_i) = 1/16\)). Observe that by construction

It is easy to show by the invariance principle that there is a \(p>0\) such that

holds for any starting position \(U_0\) with \(\Vert U_0\Vert < L^{\beta }\). In fact, the left hand side converges to \(e^{-1/2}\) as \(L \rightarrow \infty \), but we will not need this. By induction, \(\Vert U_n\Vert \) reaches \(L^{\beta }\) in time \(kL^{2 \beta }\) with probability at least \(1-(1-p)^k\). Since \(1-\beta -\delta >0\), the choice \(k = L^{1-\beta - \delta }\) gives (16).
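The induction step can be spelled out as follows. By the Markov property, each block of \(L^{2\beta }\) steps that starts inside the ball of radius \(L^{\beta }\) leaves it with probability at least p, whatever the starting point. Hence

$$\begin{aligned} \mathbb P\Big (\max _{n \le kL^{2\beta }} \Vert U_n\Vert < L^{\beta }\Big ) \le (1-p)^{k} \le e^{-pk}, \qquad k = L^{1-\beta -\delta }, \quad kL^{2\beta } = L^{1+\beta -\delta }. \end{aligned}$$

Since \(1-\beta -\delta >0\), the bound \(e^{-pL^{1-\beta -\delta }}\) decays faster than any power of L.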

Now it is well known that for two dimensional random walks, the number of returns to the origin up to time \(L^{1+\beta -\delta }\) is \(O(\log L)\) as \(L \rightarrow \infty \). Furthermore, formula (3.11) in [12] implies that the probability of the number of returns to the origin being bigger than \(\lceil \frac{100}{\pi } \rceil \log ^{2} L\) is bounded by \(C/L^{100}\). Letting \(\varsigma _k\) be the duration that the two packets stick together at their kth meeting, we note that these random variables are independent, and each is stochastically bounded by the geometric distribution in Lemma 10. Thus we have

This completes the proof of \(\mathbb P \left( \tau _{1,2} > CL^{1 + \beta - \delta } \right) < \frac{C}{L^{100}}\).
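The transition law of \(U_n\) described above can be tabulated exactly. The Python sketch below (names hypothetical) returns the law of \(U_{n+1}\) given \(U_n=x\ne 0\) as a dictionary of exact fractions. A step that would land on the origin is replaced by a second SSRW step out of it, which produces the weights 1/4 and 1/16 stated in the construction.

```python
from fractions import Fraction

STEPS = [(1, 0), (-1, 0), (0, 1), (0, -1)]


def kernel(x):
    """Law of U_{n+1} given U_n = x != (0, 0): one SSRW step, except that a step into
    the origin is replaced by a second SSRW step out of it."""
    if x == (0, 0):
        raise ValueError("U_n never visits the origin")
    law = {}
    for e in STEPS:
        y = (x[0] + e[0], x[1] + e[1])
        if y != (0, 0):
            law[y] = law.get(y, 0) + Fraction(1, 4)
        else:
            for f in STEPS:                     # forced second step out of the origin
                law[f] = law.get(f, 0) + Fraction(1, 16)
    return law
```

For example, `kernel((1, 0))` assigns 1/4 to each of \((2,0), (1,1), (1,-1)\) and 1/16 to each of the four unit vectors, including \((1,0)\) itself.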

To finish, we let \(W_n\) denote the SSRW corresponding to \(\hat{\varvec{Z}}_{1,k}\) with waiting times collapsed, and note that

Since our assumption \((1-\beta )/3 < \delta \) implies \( \beta + \delta > \frac{1}{2} (1 + \beta - \delta )\), it follows by moderate deviation theorems such as Lemma 3 (by projecting onto one coordinate, for example) that each of the probabilities in the last line is bounded above by a term of the form \(c_1 e^{-c_2 L^{c_3}}\) for some constants \(c_1, c_2, c_3>0\). Thus the sum is \(<\frac{C}{L^{100}}\) for large L.

The proof of the lemma is now complete. \(\square \)
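The proof of Lemma 11 uses two relations between the exponents, both consequences of \(\delta \in (\frac{1-\beta }{3},1-\beta )\). The first is \(1-\beta -\delta >0\), so that \(k=L^{1-\beta -\delta }\rightarrow \infty \). The second is \(\beta +\delta >\frac{1}{2}(1+\beta -\delta )\), which is equivalent to \(\beta +3\delta >1\). It says that a displacement of \(L^{\beta +\delta }\) exceeds the diffusive scale of a walk run for \(L^{1+\beta -\delta }\) steps. The short check below verifies both relations on a rational grid and for the choice \(\beta =1-2\varepsilon ,\ \delta =\varepsilon \) made in Lemma 12. The function name is ours.

```python
from fractions import Fraction


def exponents_ok(beta, delta):
    """Return (hypothesis of Lemma 11 holds, both exponent relations hold)."""
    in_range = (1 - beta) / 3 < delta < 1 - beta                     # hypothesis on delta
    gap = 1 - beta - delta > 0                                       # k = L^(1-beta-delta) -> infinity
    moderate = beta + delta > Fraction(1, 2) * (1 + beta - delta)    # beyond the diffusive scale
    return in_range, gap and moderate
```

Whenever the first component is `True`, so is the second. With \(\beta =1-2\varepsilon ,\ \delta =\varepsilon \) and \(0<\varepsilon <\frac{1}{2}\), both components are `True`.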

We now return to the case of N packets for arbitrary N, and let \(\mathcal T_i \) and \(\tau _{i,j}\) be as defined at the beginning of this subsection.

Lemma 12

Let \(\mathcal D \subset \mathbb R^2\). Given any \(\varepsilon >0\), let \(\beta = 1-2\varepsilon \) and \(\delta =\varepsilon \). Then for any \(i \in \{1, \dots , N\}\), the following holds for all sufficiently large L:

Proof

First we claim that for each \(i,j \in \{1, \dots , N\}\) with \(i \ne j\), we have

i.e., Lemma 11 in fact holds for any pair i, j in a process with N packets. To go from \(N=2\) to general N, observe that while the definitions of \(\mathcal T_i\) and \(\tau _{i,j}\) depend on the packets present (more time steps are collapsed when there are fewer packets), (18) concerns only the relation between \(\tau _{i,j}\) and \(\mathcal T_i\), not the actual values of these random variables, and relations of this type are not affected by the presence of other packets as noted in the Remark in Sect. 5.1.

Now for fixed \(j \ne i\), Lemma 4 applied to \(W_n=\hat{\varvec{Z}}_{i,n} - \hat{\varvec{Z}}_{j,n}\) tells us that with probability \(>1-3\varepsilon \), packets i and j do not meet after \(\tau _{i,j}\) for L large. (The result in Lemma 4 is not affected by waiting times.) Thus picking L large enough so the right side of (18) is \(<\varepsilon \), we have

Summing over all \(j \ne i\) gives (17). \(\square \)

For \(d=2\), we now prove Proposition 4, from which Theorem 2 follows as explained in Sect. 4.3.

Proof of Proposition 4 (d = 2)

We will prove

inducting on N. The case of \(N=1\) is Theorem 1. We now assume (19) has been proved for a process with \(N-1\) packets, and note that when embedded in a process with N packets, the same asymptotic distribution holds for packets \(1,2,\dots , N-1\).

Let an arbitrarily small \(\varepsilon >0\) be fixed. We consider L large enough for (17) to hold, and for such L, we define the stopping time \(\mathcal S\) to be the smallest \(n > \mathcal T_N\) such that \(\hat{\varvec{Z}}_{N,n}=\hat{\varvec{Z}}_{j,n} \in \mathcal {D}_{L} \) for some \(j \ne N\) (setting \(\mathcal S=\infty \) otherwise), and define another process \(\hat{\varvec{Z}}^*_{N,n}\) with the property that \(\hat{\varvec{Z}}^*_{N,n}=\hat{\varvec{Z}}_{N,n}\) for \(n \le \mathcal S\), and it is a SSRW independent of the movements of the other \(N-1\) packets after time \(\mathcal S\). This ensures (i) \(\hat{\varvec{Z}}^*_{N,\infty }\) is independent of the joint distribution of \(\hat{\varvec{Z}}_{j,\infty }, j=1,\dots , N-1\), and (ii) \(\mathbb P(\hat{\varvec{Z}}^*_{N,\infty } \ne \hat{\varvec{Z}}_{N,\infty }) < 4(N-1) \varepsilon \) for all large enough L.

Simplifying notation by dropping the superscript\(\ ^{(L)}\) and abbreviating \(T \left( \frac{\hat{\varvec{Z}}_{j, \infty }}{L}\right) \) as \(T(z_j)\) and \(T \left( \frac{\hat{\varvec{Z}}^*_{N, \infty }}{L}\right) \) as \(T(z^*_N)\), we have

Of the four lines on the right side, the first and second are \(O(\varepsilon )\), since T is bounded and \(\mathbb P(T(z_N) \ne T(z^*_N)) = O(\varepsilon )\); the third tends to 0 as \(L \rightarrow \infty \) by Theorem 1 and Proposition 3; and the fourth tends to 0 as \(L \rightarrow \infty \) by our inductive hypothesis. This completes the inductive step, and hence the proof of the proposition. \(\square \)

5.3 Related Proofs

Details aside, the proof of the \(d=2\) case of Theorem 2 can be summarized as follows: We fix two distinct length scales, \(L^{\beta + \delta }\) and \(L^\beta \), and consider \(\mathcal T_i\), the time it takes packet i to attain a net displacement of \(L^{\beta + \delta }\), and \(\tau _{i,j}\), the time it takes packets i and j to separate by a distance of \(L^\beta \). Notice that \(\hat{\mathbf{Z}}_{i,n} - \hat{\mathbf{Z}}_{j,n}\) is a SSRW except for the fact that the packets tend to stick together for a random time with finite expectation when they meet. We then showed that

(a) with high probability, \(\mathcal T_i > \tau _{i,j}\), owing to the difference in length scales and to the fact that the number of encounters between packets i and j before \(\tau _{i,j}\), which is \(O(\log L)\), is insignificant;

(b) two packets that are \(L^\beta \) apart are not likely to meet.

It follows from (a) and (b) that after time \(\mathcal T_i\), the trajectories of packets i and j are effectively independent, and the desired result follows from the harmonic measure characterization of hitting probabilities starting from \(x \in \mathcal D\).

The proofs below follow the same argument, with some simplifications.

Proof of Proposition 4 (\(d>2\)) Transience of SSRW in \(d>2\) simplifies the estimates. Specifically, let \(W_n\) be a SSRW in \(\mathbb Z^d\), \(d>2\). Then the number of encounters in (a) can be estimated by the fact that given \(\varepsilon >0\), there exists K such that

and (b) follows from the fact that given \(\varepsilon >0\), there exists \(C_1\) such that

Indeed the two length scales in the proof in Sect. 5.2 can, if one so chooses, be replaced by two suitably related constants \(C_1 < C_2\). \(\square \)

Proof of Theorem 4 for \(d \ge 2\) Theorem 4 differs from Theorem 2 in that the initial locations of the packets may be \(O(L^\vartheta )\) apart. As can be seen from the sketch of proof above, the following two places in the argument may be affected: (i) With regard to the number of encounters before \(\tau _{i,j}\) in (a), the probability of meeting at least once cannot be increased if the packets are farther apart, and once they meet, the probability of meeting again is independent of their initial separation. (ii) For each L, when rescaled back to \(\mathcal D\) the packets do not start from x but from \(x_j\) with \(|x_j-x| = O(L^{\vartheta -1})\). The convergence of hitting probabilities starting from these slightly perturbed initial conditions is covered by Proposition 3. \(\square \)

6 LTE for Site Energies: \(d=1\)

In dimension 1, independent random walkers meet too often for the type of argument in Sect. 5 to work. On the other hand, each meeting of two packets offers an opportunity to interchange their roles, which makes exchangeability arguments available; we will make use of this in our proof. We may assume without loss of generality that \(\mathcal D = [0,1]\), so that \(\mathcal B_L = \{-1,L+1\}\), and write \(\mathcal L = -1, \mathcal R = L+1\).

6.1 The Case of \(N=2\)

The argument in this subsection is borrowed from [26]; we need only the \(N=2\) case, which is considerably simpler. Starting from the usual initial conditions, we let \(A_{i} = \mathcal {L}\) or \(\mathcal {R}\), and define

Lemma 13

It is sufficient to show that

Proof

We know from 1d SSRW (or the gambler’s ruin problem) that

If \(P(\mathcal {R}, \mathcal {R}) = x^2\), then \(P(\mathcal L, \mathcal {R}) = P(*, \mathcal {R}) - P(\mathcal {R}, \mathcal {R}) =x-x^2 = x(1-x)\), which is also equal to \(P(\mathcal {R}, \mathcal L)\) by symmetry. Thus as \(L \rightarrow \infty \), \(\varvec{Z}_{1,\infty }\) and \(\varvec{Z}_{2,\infty }\) are independent Bernoulli random variables with weights \((1-x, x)\), giving

\(\square \)
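Here is a concrete instance of the gambler's-ruin fact used in Lemma 13. A SSRW on \(\{-1,0,\dots ,L+1\}\) started at k hits \(\mathcal R=L+1\) before \(\mathcal L=-1\) with probability \((k+1)/(L+2)\). This tends to x when k is a lattice point within distance 1 of xL. The sketch below (hypothetical name `hit_right`) obtains the formula by shooting on the discrete harmonic equation in exact fractions.

```python
from fractions import Fraction


def hit_right(L, k):
    """P(SSRW on {-1,...,L+1} started at k hits L+1 before -1).

    Solves h(k) = (h(k-1) + h(k+1)) / 2 with h(-1) = 0, h(L+1) = 1 by shooting:
    start from the trial values h(-1) = 0, h(0) = 1, then rescale so that h(L+1) = 1.
    """
    h = [Fraction(0), Fraction(1)]              # h(-1), h(0) for the trial solution
    for _ in range(L + 1):
        h.append(2 * h[-1] - h[-2])             # harmonicity: h(k+1) = 2 h(k) - h(k-1)
    scale = 1 / h[-1]                           # h[-1] is the trial value at site L+1
    return h[k + 1] * scale                     # list index k+1 corresponds to site k
```

For example, `hit_right(10, 4)` is `Fraction(5, 12)`. If the two limiting exit positions are independent, then \(P(\mathcal R,\mathcal R)=x^2\) and \(P(\mathcal L,\mathcal R)=x(1-x)\), as in the proof above.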

Lemma 14

\(P(\mathcal {R}, \mathcal {R}) \ge x^{2 } \)

Proof

Let \(f( x, y) = xy\). For each \(\hat{\mathbf{Z}}^{(L)}_n\)-process as defined in Sect. 5.1, define

Then \(\Delta f = 0\) for all situations except when \(\hat{\mathbf {Z}}_{1, n} = \hat{\mathbf {Z}}_{2, n}\), in which case we have

where i is the location of the packets.
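The sign of \(\Delta f\) at a meeting can be seen from a two-line computation. For this illustration, suppose f is evaluated at unscaled lattice positions; a positive rescaling does not affect signs. Suppose also that the packets sit at site i and that the step in question moves in direction \(e=\pm 1\) with probability \(\frac{1}{2}\) each. If both packets move, f changes on average by 1; if only one moves, the mean change is 0:

$$\begin{aligned} \mathbb E\big [(i+e)(i+e) - i\cdot i\big ]&= \tfrac{1}{2}\big [(i+1)^2 + (i-1)^2\big ] - i^2 = 1, \\ \mathbb E\big [(i+e)\, i - i \cdot i\big ]&= \tfrac{1}{2}\big [(i+1) + (i-1)\big ]\, i - i^2 = 0. \end{aligned}$$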

Therefore, for any finite L, \(f(\hat{\mathbf {Z}}_{1, n}, \hat{\mathbf {Z}}_{2, n})\) is a bounded submartingale. As \(n \rightarrow \infty \), \(f(\hat{\mathbf {Z}}_{1, n}, \hat{\mathbf {Z}}_{2, n})\) converges to \(f(\hat{\mathbf {Z}}_{1, \infty }, \hat{\mathbf {Z}}_{2, \infty })\) almost surely. Furthermore, by the submartingale property, we have \(E( f(\hat{\mathbf {Z}}_{1, \infty }, \hat{\mathbf {Z}}_{2, \infty })) \ge E( f( \hat{\mathbf {Z}}_{1, 0}, \hat{\mathbf {Z}}_{2, 0}))\). Thus

\(\square \)

Lemma 15

\(P(\mathcal {R}, \mathcal {R}) \le x^{2} \)

The proof of this lemma is simpler if we work with a process that differs from \(\hat{\mathbf {Z}}_n\) by a half-step. More precisely, in \(\varvec{Z}_n\), a particle first jumps and then picks up a random fraction of the packets at its destination. We let \(\varvec{Z}^+_n\) be \(\varvec{Z}_n\) with the order of jumping and mixing reversed, and apply the procedure at the beginning of Sect. 5.1 to \(\varvec{Z}^+_n\) to obtain a process we call \(\tilde{\mathbf {Z}}_n\) (instead of \(\hat{\mathbf {Z}}_n\)). Clearly, as \(L \rightarrow \infty \), \(\hat{\mathbf {Z}}_n, \tilde{\mathbf {Z}}_n\) and \(\varvec{Z}_n\) all have the same asymptotic packet distributions. The notation \(P(\cdot , \cdot )\) below refers to \(\tilde{\mathbf {Z}}_n\).

Proof of Lemma 15

We consider the function

where c is a constant to be determined. Let \(\Delta f\) be as before. Then it is easy to see that \(\Delta f = 0\) when \(\tilde{\mathbf {Z}}_{1, n } \ne \tilde{\mathbf {Z}}_{2, n}\). If \(\tilde{\mathbf {Z}}_{1, n} = \tilde{\mathbf {Z}}_{2, n}\), then \(\Delta f =1\) if the two packets move together in the next step, and \(\Delta f = -c\) if the next step involves exactly one of the packets. Now the two packets can move together only if they are both available to be picked up at step \(n+1\), and even in that case, the probability that exactly one of them is picked up by the particle in question is \(\frac{1}{3}\). Thus we conclude that, independently of what is going on in the \(\varvec{Z}^+\)-process,

Choosing \(c> 3\) therefore will ensure that \(\Delta f \le 0\) at each step. Arguing as above, we then obtain \(P(\mathcal {R}, \mathcal {R}) \le x^{2}\). \(\square \)

Notice that the left side of (21) can be zero if we use \(\hat{\mathbf {Z}}_n\) instead of \(\tilde{\mathbf {Z}}_n\), for two packets carried by the same particle will necessarily move together if the particle first jumps before it mixes. This is our reason for using \(\tilde{\mathbf {Z}}_n\).

6.2 Proofs of Theorems 2 and 4 in the Case \(d=1\)

Following [20], we use the method of exchangeable random variables to extend the results above to the case of N packets for arbitrary N.

Fix \(x \in [0,1]\) and \(S \subset \mathbb Z\). Let \(v_1, v_2, \dots \in S\) (repeats allowed). For each N and L, we consider the process \(\varvec{Z}_n=\varvec{Z}_n^{(L)}\) with \(Z_{i,0}=\langle xL \rangle + v_i, i=1,2, \dots , N\), and for \(A_j=\mathcal L\) or \(\mathcal R\), we define

where the probability is defined to be the average over all initial particle configurations \(\bar{Y}\). We begin with a lemma that sets the stage for exchangeability.

Lemma 16

For every \(N \in \mathbb Z^+\) and every permutation \(\sigma \) of \(\{1,\dots , N\}\),

Proof

Since transpositions generate the symmetric group, it suffices to consider \(\sigma \) with the property that for some \(i \ne j\), \(\sigma (i)=j, \sigma (j)=i\), and \(\sigma (\ell ) = \ell \) for all \(\ell \ne i,j\). For fixed L, we consider the process \(\varvec{Z}_n=\varvec{Z}_n^{(L)}\), and begin with the following observation:

Abbreviating \(p_{L,v_1, \dots , v_N}\) as p, we claim that

if F is one of the following two types of events:

(i) \(F= \{\varvec{Z}_{i,n}=\varvec{Z}_{j,n}\}\) for some n;

(ii) for two particles \(k \ne k'\) and for some n,

$$\begin{aligned} F=\{\varvec{Z}_{i,n}=k, \varvec{Z}_{j,n}=k', Y_{k,n}=Y_{k', n} \text{ and } \varvec{Z}_{\ell ,n} \ne k, k' \ \forall \ \ell \ne i,j \}. \end{aligned}$$

To see that the asymptotic distributions are as claimed in case (i), we simply switch the roles of packets i and j from time n on. In case (ii), packets i and j are carried by two different particles, which are at the same site at time n. In this case, we switch not only the roles of packets i and j but the sets of randomness for particles k and \(k'\) from this time on. The condition that these particles do not carry other packets at time n ensures that the asymptotic distributions of other packets are not affected. We will refer to an event corresponding to (i) or (ii) above as a “viable switching” for packets i and j.

Let \(\hat{\varvec{Z}}_n\) be the process obtained from \(\varvec{Z}_n\) as in Sect. 5.1. As explained in Sect. 5, modulo waiting times, \(\hat{\varvec{Z}}_{i,n} - \hat{\varvec{Z}}_{j,n}\) is a SSRW when it is \(\ne 0\). When \(\hat{\varvec{Z}}_{i,n} = \hat{\varvec{Z}}_{j,n}\), the duration the two packets spend together following each encounter is controlled by Lemma 10. As these durations are independent for different encounters and are bounded by random variables with finite expectations, it follows from the discussion in Sect. 3.1 that the number of times packets i and j meet before reaching the bath is O(L); in fact we need only that

Let \(\hat{\tau }_1< \hat{\tau }_2 < \dots \) be the times packets i and j meet in \(\mathcal D_L\) in the \(\hat{\varvec{Z}}_n\)-process, i.e. \(\hat{\varvec{Z}}_{i, \hat{\tau }_q}= \hat{\varvec{Z}}_{j, \hat{\tau }_q} \in \mathcal D_L\), and let \(\tau _q\) denote the corresponding times in the \(\varvec{Z}_n\)-process. We let \(F_q\) denote the event that a viable switching occurs at the qth meeting, and will show that there exists \(b=b(N)>0\) such that

Once we prove this, the assertion in the lemma will follow: We make the relevant switch the first time a viable switching occurs, and the probability that this occurs before the packets reach the baths tends to 1 as \(L \rightarrow \infty \).

To prove (23), suppose the qth meeting takes place at site v. Confusing \(\hat{\varvec{Z}}_n\) with \(\varvec{Z}_n\) momentarily, we assume for definiteness that packet i is the first to arrive at site v, where it remains through time \(\tau _q\), at which time packet j is brought to site v by particle \(k'\). Under these assumptions, there are the following possibilities:

Case 1 \(\varvec{Z}_{i,\tau _q}=v\). Since \(\mathbb P(\varvec{Z}_{j,\tau _q} =v) = \frac{1}{2}\), \(\mathbb P(F_q) = \frac{1}{2}\) in this case.

Case 2 \(\varvec{Z}_{i,\tau _q-1}=v\), and \(\varvec{Z}_{i,\tau _q}=k'\). In order for a viable switching to occur, packets i and j must be the only packets carried by particle \(k'\) at the end of step \(\tau _q\). There being a maximum of N packets available to be picked up by particle \(k'\) at this time, we have

Case 3 \(\varvec{Z}_{i,\tau _q-1}=k\) for some particle k. Since \(k \ne k'\), for a viable switching to occur, packet i must be the only packet picked up by particle k the last time it moved; that probability is \(\ge \frac{1}{N(N+1)}\). As packet j must also be the only packet carried by particle \(k'\) at the end of step \(\tau _q\), we have \(\mathbb P(F_q) \ge \left( \frac{1}{N(N+1)}\right) ^2\).

We thus have a lower bound b for \(\mathbb P(F_q)\) that depends only on N. \(\square \)

Notice that the argument above applies equally well to the setting of Theorem 4: even when the initial positions of the packets are \(O(L^\vartheta )\) apart, (22) still holds by the gambler's ruin estimate, and the rest of the argument is unchanged.
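The O(L) count of meetings used in the proof above can be illustrated on a toy model. As a simplifying assumption (this is not the paper's setting: waiting times and the geometry of \(\mathcal D_L\) are ignored), let the difference walk be a simple symmetric random walk on \(\{-L, \dots , L\}\) started at 0 and absorbed at \(\pm L\). First-step analysis gives the expected number of visits to 0, starting from x, as \(G(x) = L - |x|\), so the expected number of meetings is \(G(0) = L\). The sketch below, built around the hypothetical helper `expected_visits_to_origin`, solves the first-step equations exactly in rational arithmetic.

```python
from fractions import Fraction


def expected_visits_to_origin(L):
    """Expected number of visits to 0 of an SSRW absorbed at -L and +L.

    Returns a list G indexed by x + L for x = -L, ..., L (toy model only).
    """
    n = 2 * L - 1  # interior sites -L+1, ..., L-1
    # First-step equations: G(x) - (G(x-1) + G(x+1)) / 2 = 1_{x=0}, G(+-L) = 0.
    a = [Fraction(-1, 2)] * n  # sub-diagonal
    b = [Fraction(1)] * n      # diagonal
    c = [Fraction(-1, 2)] * n  # super-diagonal
    d = [Fraction(1) if i == L - 1 else Fraction(0) for i in range(n)]
    # Thomas algorithm for tridiagonal systems: forward sweep.
    cp = [Fraction(0)] * n
    dp = [Fraction(0)] * n
    cp[0] = c[0] / b[0]
    dp[0] = d[0] / b[0]
    for i in range(1, n):
        denom = b[i] - a[i] * cp[i - 1]
        cp[i] = c[i] / denom
        dp[i] = (d[i] - a[i] * dp[i - 1]) / denom
    # Back substitution.
    g = [Fraction(0)] * n
    g[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        g[i] = dp[i] - cp[i] * g[i + 1]
    # Re-attach the absorbing endpoints, where G vanishes.
    return [Fraction(0)] + g + [Fraction(0)]


G = expected_visits_to_origin(10)
print(G[10])  # expected number of meetings when started together
```

Exact fractions are used so that the identity \(G(0)=L\) can be checked without rounding error; a floating-point solver would serve equally well for large L.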

Proof of Theorems 2 and 4 for d = 1

Let \(v_1, v_2, \dots \in S\) and \(p_{L, v_1, \dots , v_N}\) be as defined at the beginning of this subsection. By compactness, for each N, \(p_{L,v_1, \dots , v_N}\) has a convergent subsequence as \(L \rightarrow \infty \). By a diagonal argument, it follows that there exists a single sequence \(L_n \rightarrow \infty \) such that for every N, as \(n\rightarrow \infty \),

Equivalently, if \(X_1^{(L)}, X_2^{(L)}, \dots \) are \(\{0, 1\}\)-valued random variables defined in such a way that when \(\{0,1\}\) is identified with \(\{\mathcal L, \mathcal R\}\), the joint distribution of \((X_1^{(L)}, \dots , X_N^{(L)})\) is equal to \(p_{L,v_1, \dots , v_N}\) for each N, then there is a sequence of random variables \((X_1, X_2, \dots )\) to which \((X_1^{(L_n)}, X_2^{(L_n)}, \dots )\) converges in distribution.

By Lemma 16, the sequence \((X_1, X_2, \dots )\) is exchangeable, and de Finetti’s Theorem applies (see Sect. 3.1). It follows from Sect. 6.1 that if m is the probability in Theorem 5, then

From these two integrals, together with Jensen’s Inequality, it follows that \(m=\delta _x\), the delta function at x. That in turn implies, by the characterization of the measure m in de Finetti’s Theorem, that \(X_1, \dots , X_N\) are independent. Since the analysis above applies to any limit point of \(p_{L, v_1, \dots , v_N}\) as \(L \rightarrow \infty \), we conclude that \(p_{L, v_1, \dots , v_N}\) converges to the product measure as claimed. \(\square \)
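The role of Jensen's Inequality can be seen in a small computation. For a de Finetti mixture with mixing measure m, \(\mathbb P(X_1=1)=\int p\, m(\mathrm dp)\) and \(\mathbb P(X_1=X_2=1)=\int p^2\, m(\mathrm dp)\), and Jensen's Inequality gives \(\int p^2\, m(\mathrm dp) \ge (\int p\, m(\mathrm dp))^2\), with equality exactly when m is a point mass. The sketch below uses hypothetical, finitely supported mixing measures and exact rational arithmetic to check that the joint law is a product measure precisely when the variance of m vanishes; it does not reproduce the two integrals from Sect. 6.1.

```python
from fractions import Fraction
from itertools import product


def joint_law(m, n):
    """Law of (X_1, ..., X_n) for a de Finetti mixture of i.i.d. Bernoulli(p).

    m maps a success probability p in [0, 1] to its weight (weights sum to 1).
    """
    law = {}
    for xs in product((0, 1), repeat=n):
        k = sum(xs)
        law[xs] = sum(w * p**k * (1 - p) ** (n - k) for p, w in m.items())
    return law


def is_product(law, n):
    """Check whether the joint law factorizes into its one-dimensional marginals."""
    # All marginals coincide by exchangeability, so the first one suffices.
    p1 = sum(w for xs, w in law.items() if xs[0] == 1)
    return all(w == p1 ** sum(xs) * (1 - p1) ** (n - sum(xs)) for xs, w in law.items())


def mixing_variance(m):
    """Var_m(p) = int p^2 dm - (int p dm)^2, nonnegative by Jensen's Inequality."""
    mean = sum(w * p for p, w in m.items())
    return sum(w * p * p for p, w in m.items()) - mean**2


point = {Fraction(1, 3): Fraction(1)}
mixed = {Fraction(1, 4): Fraction(1, 2), Fraction(3, 4): Fraction(1, 2)}
print(is_product(joint_law(point, 3), 3), is_product(joint_law(mixed, 3), 3))
```

Note that \(\mathbb P(X_1=X_2=1) - \mathbb P(X_1=1)^2\) equals the variance of m, so a vanishing covariance between two coordinates already forces m to be a point mass.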

7 LTE for Particle Numbers and Energies

This section is dedicated to the proof of Theorem 3.

We start with the following simple observation: The condition \(M(L)/L^d \rightarrow \alpha \mathop {\mathrm {vol}}\nolimits (\mathcal D)\) implies that for any \(v \in \mathcal D_L\),

A similar computation involving finitely many sites proves statement (A) of Theorem 3.
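For intuition about statement (A), here is a sketch under an explicit assumption that is not proved in this section: that under \(\mu ^{(L)}\) the M(L) particles are independently and uniformly distributed over the \(n \approx L^d \mathop {\mathrm {vol}}\nolimits (\mathcal D)\) sites of \(\mathcal D_L\). The number of particles at a fixed site is then Binomial(M, 1/n) with \(M/n \rightarrow \alpha \), which converges to Poisson(\(\alpha \)); by Le Cam's inequality the total variation distance is at most \(M/n^2\). The hypothetical function `tv_binomial_poisson` computes this distance numerically.

```python
import math


def tv_binomial_poisson(n_sites, alpha, kmax=80):
    """Total variation distance between Bin(M, 1/n_sites), M = alpha * n_sites, and Poisson(M / n_sites).

    Hypothetical setting: M particles placed independently and uniformly on
    n_sites >= 2 sites; the count at a fixed site is then Bin(M, 1/n_sites).
    """
    m = round(alpha * n_sites)
    p = 1.0 / n_sites
    lam = m * p  # equals alpha when alpha * n_sites is an integer
    b = (1.0 - p) ** m  # P(Bin = 0)
    q = math.exp(-lam)  # P(Poisson = 0)
    l1, sb, sq = 0.0, 0.0, 0.0
    for k in range(min(kmax, m) + 1):
        l1 += abs(b - q)
        sb += b
        sq += q
        # Recurrences for the next probability masses.
        b *= (m - k) / (k + 1) * p / (1.0 - p)
        q *= lam / (k + 1)
    # Mass beyond the truncation point, bounded by the sum of the two tails.
    tail = max(0.0, 1.0 - sb) + max(0.0, 1.0 - sq)
    return 0.5 * (l1 + tail)


for n in (100, 1000, 10000):
    print(n, tv_binomial_poisson(n, 2.0))
```

Because the tails are bounded rather than computed, the returned value is a slight overestimate of the true distance, which is harmless for illustrating the \(O(1/n)\) decay.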

We will give a proof of statement (B) of Theorem 3 in the case \(S=\{ 0\}\). This assumption is not necessary, but it simplifies the notation considerably, and the proof of the general case is entirely analogous.

To fix notation, let \(K \in \mathbb Z^+\) denote the number of particles at site \(\langle xL \rangle \), and fix arbitrary nonnegative integers \(n^*_0\), \(\tilde{n}^*_{[1]}, \ldots , \tilde{n}^*_{[K]}\), to be used as the orders of the moments of the site and particle energies. For L with \(M(L) \ge K\), let \((\mathcal T_1, \ldots ,\mathcal T_K)\) be an ordered list of distinct elements of \(\{ 1,2,\ldots , M(L)\}\). We let \(Q(\mathcal T_1, \ldots ,\mathcal T_K)\) denote the event that these are exactly the particles at site \(\langle xL \rangle \), and define \(\check{\underline{n}}_*= \check{\underline{n}}_*(\mathcal T_1, \ldots ,\mathcal T_K)\) by

Lemma 17

The following holds for all large enough L: Let \((\mathcal T_1, \ldots ,\mathcal T_K)\) be fixed. We define \(\check{\underline{n}}_*\) as above, and let \(N = n^*_0 + \sum _{j=1}^K \tilde{n}^*_{[j]}\). Then

Observe that the quantities on both sides of (26) are independent of \((\mathcal T_1, \ldots ,\mathcal T_K)\), as any two lists of K particle names are clearly interchangeable, so the “\(\sim \)” can be interpreted without ambiguity as convergence as \(L \rightarrow \infty \). Let us denote the right side of (26) by \(\mu ^{(L)}(Q_K) \cdot [u(x)]^N\).

Proof of Theorem 3 (B) assuming Lemma 17 and \(S=\{0\}\) Let

We project \(\mu ^{(L)} | A_K\), the conditional measure of \(\mu ^{(L)}\) on \(A_K\), to the site and particle energy coordinates on \(\langle xL \rangle \). The resulting probability, \(\nu ^{(L)}_{x,K}\), will be viewed as a measure on \(\mathbb R^{K+1}\) with coordinates \((\xi , \omega _1, \ldots , \omega _K)\), the \(\xi \)-coordinate corresponding to site energy.

Let \(n^*_0\), \(\tilde{n}^*_{[1]}, \ldots , \tilde{n}^*_{[K]}\) be fixed. Using the notation above, we have

The first two equalities are by definition. The convergence as \(L \rightarrow \infty \) and the last equality are from Lemma 17 and the comments following that lemma.

As this holds for all \(n^*_0\), \(\tilde{n}^*_{[1]}, \ldots , \tilde{n}^*_{[K]}\), we conclude, as in Sect. 4.3, that \(\nu ^{(L)}_{x,K}\) tends to a product of \(K+1\) exponential distributions, each with mean u(x). \(\square \)
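The limit identified here is characterized by its mixed moments: for independent exponential random variables \(\xi , \omega _1, \ldots , \omega _K\) with mean u, \(\mathbb E[\xi ^{n_0} \prod _j \omega _j^{n_j}] = u^{N} n_0! \prod _j n_j!\) with \(N = n_0 + \sum _j n_j\), and exponential distributions are determined by their moments. A minimal Monte Carlo sanity check of this formula follows; the function names, seeds and sample sizes are illustrative choices, not part of the proof.

```python
import math
import random


def exact_mixed_moment(orders, u):
    """E[xi^{n_0} omega_1^{n_1} ... omega_K^{n_K}] for i.i.d. exponentials with mean u."""
    n_total = sum(orders)
    return u**n_total * math.prod(math.factorial(n) for n in orders)


def mc_mixed_moment(orders, u, samples=200_000, seed=0):
    """Monte Carlo estimate of the same mixed moment."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        term = 1.0
        for n in orders:
            # random.expovariate takes the rate, i.e. 1 / mean.
            term *= rng.expovariate(1.0 / u) ** n
        total += term
    return total / samples


orders = (1, 2, 0)  # n_0 = 1 for the site energy, then K = 2 particle energies
print(exact_mixed_moment(orders, 1.5), mc_mixed_moment(orders, 1.5))
```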

Proof of Lemma 17

Let \((\mathcal T_1, \ldots , \mathcal T_K)\) be fixed. For a particle configuration \(\sigma \), we write \(\sigma \in Q\) if \(Q(\mathcal T_1, \ldots , \mathcal T_K)\) holds, and introduce the function

The assertion in Lemma 17 can then be rewritten as

As in Sect. 4, we approximate the left side of (27) by \(\mathbb E(F'(\check{\underline{n}}_*,\check{\underline{x}}_t, \bar{X}_t))\), and decompose into sample paths \(\varvec{\sigma }=(\sigma _0, \dots , \sigma _m)\) of particle movements on [0, t]:

The first equality above is the definition of \(F'\) and the second follows from Lemma 7. Informally, for an \(\varvec{X}_t\)-trajectory that ends in a state with a set of particles at a certain site, its “dual trajectory”, which is obtained by reversing the paths of the particles, should start with the same set of particles at the same site.
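The path-reversal idea can be checked on a toy model. Assume, for illustration only, that each particle performs a walk on a segment with a symmetric transition kernel \(P(x,y)=P(y,x)\) (the hypothetical `step_prob` below) and that particles move independently. Then a multi-particle trajectory and its reversal have the same probability, and the reversed trajectory starts where the original one ends, which is the informal content of the duality described above.

```python
from fractions import Fraction


def step_prob(x, y, n_sites):
    """One-step transition probability of a symmetric walk on {0, ..., n_sites - 1}.

    Hypothetical kernel: jump to each neighbour with probability 1/2; a jump that
    would leave the segment is suppressed (the walker stays put). P(x, y) = P(y, x).
    """
    if abs(x - y) == 1:
        return Fraction(1, 2)
    if x == y:
        return Fraction((x == 0) + (x == n_sites - 1), 2)
    return Fraction(0)


def path_prob(path, n_sites):
    """Probability of following the given sequence of sites."""
    prob = Fraction(1)
    for x, y in zip(path, path[1:]):
        prob *= step_prob(x, y, n_sites)
    return prob


def joint_path_prob(paths, n_sites):
    """Particles move independently, so the joint probability is a product."""
    prob = Fraction(1)
    for path in paths:
        prob *= path_prob(path, n_sites)
    return prob


def reverse_paths(paths):
    """Dual trajectory: reverse the path of every particle."""
    return [list(reversed(p)) for p in paths]


paths = [[2, 3, 4, 4, 3], [0, 0, 1, 2, 1], [4, 4, 3, 2, 1]]
print(joint_path_prob(paths, 5), joint_path_prob(reverse_paths(paths), 5))
```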

Taking the limit \(t \rightarrow \infty \) in lines (28) and (29) we obtain

To handle the right side of (30), we alter the definition of \(\varvec{Z}_k\) in Sect. 4.3 slightly by restricting \(\bar{Y}\) in \(\varvec{Z}_0\) to Q, everything else unchanged. Let \(U_Q\) denote this new probability distribution of \(\bar{Y}\), and define \(\mathbb E'(\cdot ) = \int \mathbb E_{(\check{\underline{n}}_*,\bar{Y})}(\cdot ) \ U_Q(\mathrm {d}\bar{Y})\) (cf. (15) in Sect. 4.3), so that the right side of (30) is equal to

To obtain (27), it remains to check that Proposition 4 holds with \(\mathbb E\) replaced by \(\mathbb E'\). For \(d \ge 2\), this is not an issue, since properties of \(\bar{Y}\) do not appear in the proof. For \(d=1\), one needs to check that the switching arguments in Sect. 6.2 are not affected by the restriction of \(\bar{Y}\) to Q, which is indeed the case. \(\square \)

8 Possible Extensions

8.1 Hydrodynamic Limit

In the case where the density of particles is positive, we expect that the method of the previous sections, namely duality, can be applied to identify the time dependent behavior of the system in the diffusive scaling limit, also called the hydrodynamic limit. We formulate a conjecture here and explain heuristically the form of the thermal conductivity.

As in the discussion before the statement of Theorem 3 in Sect. 2.2, we assume there is a number \(\alpha >0\) such that M(L), the number of particles in the system, satisfies \(M(L)/L^d \rightarrow \alpha \ \mathrm{vol}(\mathcal D)\) as \(L \rightarrow \infty \). Following the notation in Sect. 2.2, we define \(\nu ^{(L)}_{t,x,S,K_1, \ldots , K_S}\) analogously to \(\nu ^{(L)}_{x,S,K_1, \ldots , K_S}\), with the invariant measure \(\mu ^{(L)}\) used to define the latter replaced by the distribution of \(\varvec{X}_{tL^2}^{(L)}\). Unlike \(\nu ^{(L)}_{x,S,K_1, \ldots , K_S}\), the measure \(\nu ^{(L)}_{t,x,S,K_1, \ldots , K_S}\) (for fixed t) depends strongly on the initial condition. For simplicity, we consider initial conditions of the following kind: we fix a function f, an arbitrary positive continuous extension of T to \(\mathcal D\), and require that at time \(t=0\) the site energy \(\xi _v\) and the energies of all particles at site v are equal to f(v / L), whereas the initial particle configuration is uniformly distributed on \(\mathcal D_L\). Finally, for a given initial condition, we let \(\xi ^{(L)}_{v, tL^2}\) denote the coordinate of \(\varvec{X}_{tL^2}^{(L)}\) corresponding to the energy at site v.

Conjecture 1

The following hold in the setup above:

(a) Convergence to the heat equation For any \(x \in \mathcal D\) and \(t>0\),

where u(x, t) is the unique solution of

(b) LTE in the hydrodynamic limit For any \(x \in \mathcal D\) and \(t>0\), the conclusion of Theorem 3, with \(\nu ^{(L)}_{x,S,K_1, \ldots , K_S}\) replaced by \(\nu ^{(L)}_{t,x,S,K_1, \ldots , K_S}\) and u(x) replaced by u(x, t), remains valid.

We expect that Conjecture 1 can be proved by duality, but a complete proof will involve many technical details. As the subject of the present paper is NESS, we have elected not to include a proof here.

To explain the thermal conductivity heuristically, consider the environment seen from the packet. We claim that for L large, the invariant measure of this process is close to the following one: the packet is carried by a particle with probability \(\alpha /(\alpha +1)\), and, independently of whether the packet is carried by a particle or not, there is an independent Poissonian number of particles at each site with mean \(\alpha \). Now by the ergodic theorem for Markov chains, the number of jumps of the packet before time t is approximately \(t \alpha /(\alpha +1)\). Since each jump is a nearest-neighbor step in one of the 2d directions, chosen uniformly, it contributes covariance \(\frac{1}{d} Id\); consequently the packet's trajectory, when rescaled diffusively, converges to a Brownian motion with covariance matrix \(\frac{\alpha }{d(\alpha +1)} Id\).
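The heuristic covariance \(\frac{\alpha }{d(\alpha +1)} Id\) can be checked by simulation on a deliberately simplified model. Here, as an assumption, the carried/uncarried status of the packet is redrawn independently at each unit time step, with probability \(\alpha /(\alpha +1)\) of being carried, instead of evolving as a Markov chain, and a carried packet makes one nearest-neighbor step in one of the 2d directions chosen uniformly. The long-run fraction of carried time is unchanged by this simplification, so the empirical covariance of \(X_t/\sqrt{t}\) returned by the hypothetical `packet_covariance` should approach \(\frac{\alpha }{d(\alpha +1)} Id\).

```python
import random


def packet_covariance(alpha, d, t, trials=2000, seed=1):
    """Empirical covariance matrix of X_t / sqrt(t) for a toy packet walk in Z^d.

    Simplification (hypothetical): at each unit time step the packet is carried,
    independently, with probability alpha / (alpha + 1); when carried it makes
    one simple symmetric random walk step (+/- e_i, each with probability 1/(2d)).
    """
    rng = random.Random(seed)
    q = alpha / (alpha + 1.0)
    cov = [[0.0] * d for _ in range(d)]
    for _ in range(trials):
        x = [0] * d
        for _ in range(t):
            if rng.random() < q:  # packet carried during this step
                i = rng.randrange(d)
                x[i] += rng.choice((-1, 1))
        # The walk has mean zero, so E[X_a X_b] is the covariance.
        for a in range(d):
            for b in range(d):
                cov[a][b] += x[a] * x[b]
    return [[c / (trials * t) for c in row] for row in cov]


print(packet_covariance(1.0, 2, 200, trials=1000))
```

With \(\alpha =1\) and \(d=2\) the prediction is 0.25 on the diagonal and 0 off the diagonal.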

A result along the lines of Conjecture 1 for a similar model (allowing spatial inhomogeneity) is the subject of a forthcoming paper by the second-named author.

8.2 Remarks and Further Extensions

Our model belongs, in fact, to the category of “gradient systems”. To see this, let us denote the number of particles at site v by \(m_v = \sum _{i=1}^M \delta _{v} (X_i)\) and the total energy per site by

The current along an arbitrary edge \((v,v+e)\) is denoted by \(J_{v,v+e}\), that is, for any \(v \in \mathcal D_L \setminus \mathcal B_L\), we have

where \(e_i\) is the ith coordinate vector. Also by definition of the process \(\varvec{X}_t\), for any \(v, v+e \in \mathcal D_L\),

Since this relation expresses the current along each edge as a discrete gradient, the system is of gradient type.

The hydrodynamic limit of gradient systems has been studied by a number of authors using the entropy method of Guo, Papanicolaou and Varadhan [18]. Directly relevant to the present work are two papers by Eyink, Lebowitz and Spohn, which prove local equilibrium for a wide class of gradient systems [14, 15]. Note, however, that our setting is not covered by these papers, as the local dynamics in our models are more complicated; consequently, we also obtain a more refined version of LTE. While it is likely that the entropy method can be applied to our model, we do not pursue that here.

Finally, we mention some possible directions of future research.

(1) Can one consider some biased random walk in the dual process in the spirit of [7]? Some preliminary results in this direction will be included in a forthcoming paper.

(2) Consider the case when the particle density is not constant but is a function of time and space. Let us assume for the moment that M(L) is Poissonian with expectation \(\alpha |\mathcal D_L|\) and that the particles are indistinguishable. Then for any fixed constants u and \(\alpha \), one easily sees that the measure that assigns an independent Poissonian (with expectation \(\alpha \)) number of particles to each site and an independent exponential energy (with expectation u) to each site and each particle is invariant. Let us denote this measure by \(\prod _{v \in \mathcal D_L}\mu _{u, \alpha }\) (this is the equilibrium case, cf. Proposition 1). Now, in the nonequilibrium case (with initial conditions as in Conjecture 1), recalling the definition of E from (32), we expect that for large L the triple (\(\alpha _{xL}(tL^2),E_{xL}(tL^2),\xi _{xL}(tL^2)\)) is governed by the hydrodynamic equation