Abstract

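We consider n particles \(0\le x_1<x_2< \cdots < x_n < +\infty \), distributed according to a probability measure of the form

$$\frac{1}{Z_n}\prod _{1\le i<j\le n}(x_j-x_i)\prod _{1\le i<j\le n}(x_j^\theta -x_i^\theta )\prod _{j=1}^n x_j^\alpha e^{-x_j}\,\mathrm {d}x_j,\qquad \alpha >-1,\quad \theta >0,$$
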
where \(Z_n\) is the normalization constant. This distribution arises in the context of modeling disordered conductors in the metallic regime, and can also be realized as the distribution for squared singular values of certain triangular random matrices. We give a double contour integral formula for the correlation kernel, which allows us to establish universality for the local statistics of the particles, namely, the bulk universality and the soft edge universality via the sine kernel and the Airy kernel, respectively. In particular, our analysis also leads to new double contour integral representations of scaling limits at the origin (hard edge), which are equivalent to those found in the classical work of Borodin. We conclude this paper by relating the correlation kernels to those appearing in recent studies of products of M Ginibre matrices for the special cases \(\theta =M\in \mathbb {N}\).


1 Introduction and Statement of the Main Results

1.1 Biorthogonal Laguerre Ensembles

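The biorthogonal Laguerre ensembles refer to n particles \(x_1< \cdots < x_n\) distributed over the positive real axis, following a probability density function of the form

$$\frac{1}{Z_n}\Delta (x_1,\ldots ,x_n)\,\Delta (x_1^\theta ,\ldots ,x_n^\theta )\prod _{j=1}^n x_j^\alpha e^{-x_j},\qquad \alpha >-1,\quad \theta >0, \tag{1.1}$$

where

$$Z_n=\int _{0\le x_1<\cdots <x_n<\infty }\Delta (x_1,\ldots ,x_n)\,\Delta (x_1^\theta ,\ldots ,x_n^\theta )\prod _{j=1}^n x_j^\alpha e^{-x_j}\,\mathrm {d}x_j$$

is the normalization constant, and

$$\Delta (x_1,\ldots ,x_n)=\prod _{1\le i<j\le n}(x_j-x_i)$$

is the standard Vandermonde determinant.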

Densities of the form (1.1) were first introduced by Muttalib [38], who pointed out that, owing to the two-body interaction term \(\Delta (x_1,\ldots ,x_n)\Delta (x_1^\theta ,\ldots ,x_n^\theta )\), these ensembles provide a more effective description of disordered conductors in the metallic regime than classical random matrix theory. A more concrete physical example that leads to (1.1) (with \(\theta =2\)) can be found in [36], where the authors proposed a random matrix model for disordered bosons. These ensembles were further studied by Borodin [10] within the more general framework of biorthogonal ensembles. It is also worth mentioning the work of Cheliotis [12], who constructed, in terms of a Wishart matrix, certain triangular random matrices whose squared singular values are distributed according to (1.1); see also [22]. Note that when \(\theta =1\), (1.1) reduces to the well-known Wishart–Laguerre unitary ensemble, which plays a fundamental role in random matrix theory; cf. [6, 19].

A nice property of (1.1) is that, as proved in [38], these ensembles form so-called determinantal point processes [27, 45]. This means that there exists a correlation kernel \(K_n^{(\alpha , \theta )}(x,y)\) such that the joint probability density function (1.1) can be rewritten in the following determinantal form

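The kernel \(K_n^{(\alpha , \theta )}(x,y)\) has a representation in terms of the so-called biorthogonal polynomials (cf. [29] for a definition). Let \(p_j^{(\alpha ,\theta )}\) and \(q_k^{(\alpha ,\theta )}\), \(j,k=0,1,\ldots \), be two sequences of polynomials depending on the parameters \(\alpha \) and \(\theta \), of degree j and k, respectively, which satisfy the orthogonality conditions

$$\int _0^\infty p_j^{(\alpha ,\theta )}(x)\,q_k^{(\alpha ,\theta )}(x^\theta )\,x^\alpha e^{-x}\,\mathrm {d}x=\delta _{j,k},\qquad j,k=0,1,\ldots \tag{1.3}$$

Note that the polynomial \(q_k^{(\alpha , \theta )}\) is normalized to be monic. We then have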

The families \(\{p_j,j=0,1,\ldots \}\) and \(\{q_k,k=0,1,\ldots \}\), which are called Laguerre biorthogonal polynomials, exist uniquely, since the associated bimoment matrix is nonsingular; see (2.3) and (2.6) below. The study of these polynomials (with \(\theta =2\)) can be traced back to [46], in the context of investigations of the penetration and diffusion of X-rays through matter. Later, intensive studies were conducted on the case \(\theta \in \mathbb {N}=\{1,2,\ldots \}\) in [11, 23, 24, 30, 43, 44, 47], where general properties, including explicit formulas, recurrence relations, generating functions and Rodrigues formulas, were derived.
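A quick way to see the nonsingularity (a sketch of our own, not taken from the paper): by the Andréief identity, the normalization constant of (1.1), integrated over the ordered region, equals the determinant of the bimoment matrix \([\Gamma (\alpha +1+j+k\theta )]_{j,k=0}^{n-1}\). Writing \(\Gamma (\alpha +1+k\theta +j)=\Gamma (\alpha +1+k\theta )\,(\alpha +1+k\theta )_j\) and reducing the Pochhammer rows to powers leaves a Vandermonde determinant in the nodes \(\alpha +1+k\theta \), so the determinant equals \(\theta ^{n(n-1)/2}\prod _{k=0}^{n-1}k!\,\Gamma (\alpha +1+k\theta )>0\). The Python snippet below compares this closed form with a floating-point determinant; the helper names are hypothetical.

```python
import math

def bimoment_matrix(n, alpha, theta):
    # Entry (j, k) = int_0^inf x^j (x^theta)^k x^alpha e^{-x} dx = Gamma(alpha + 1 + j + k*theta)
    return [[math.gamma(alpha + 1 + j + k * theta) for k in range(n)] for j in range(n)]

def det(matrix):
    # Determinant by Gaussian elimination with partial pivoting (on a copy of the input)
    a = [row[:] for row in matrix]
    n = len(a)
    result = 1.0
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(a[r][c]))
        if a[p][c] == 0.0:
            return 0.0
        if p != c:
            a[c], a[p] = a[p], a[c]
            result = -result
        result *= a[c][c]
        for r in range(c + 1, n):
            factor = a[r][c] / a[c][c]
            for cc in range(c, n):
                a[r][cc] -= factor * a[c][cc]
    return result

def closed_form(n, alpha, theta):
    # theta^{n(n-1)/2} * prod_{k=0}^{n-1} k! * Gamma(alpha + 1 + k*theta)
    value = theta ** (n * (n - 1) / 2)
    for k in range(n):
        value *= math.factorial(k) * math.gamma(alpha + 1 + k * theta)
    return value

for n, alpha, theta in [(3, 0.0, 1.0), (4, 0.3, 1.7), (4, -0.5, 0.6)]:
    print(n, alpha, theta, det(bimoment_matrix(n, alpha, theta)) / closed_form(n, alpha, theta))
```

For \(\theta =1\), \(\alpha =0\), \(n=3\) the matrix is \([(j+k)!]\), whose determinant is 4, in agreement with the Laguerre unitary case.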

As with any determinantal point process, a fundamental problem is to establish the large n limit of the correlation kernel (1.4) in both the macroscopic and microscopic regimes. By expressing \(K_n^{(\alpha ,\theta )}(x,y)\) as a finite series expansion in terms of \(x^{k\theta }y^r\), \(k,r=0,1,\ldots ,n-1\), Borodin [10, Theorem 4.2] showed that

where \(J_{a,b}\) is Wright’s generalization of the Bessel function [17] given by

$$J_{a,b}(x)=\sum _{m=0}^{\infty }\frac{(-x)^m}{m!\,\Gamma (a+bm)};$$

see also [36] for the special case \(\theta =2\), \(\alpha \in \mathbb {N}\cup \{0\}\). These non-symmetric hard edge scaling limits generalize the classical Bessel kernels [18, 50] (corresponding to \(\theta =1\)) and possess some nice symmetry properties. Moreover, they also appear in the large n limits of correlation kernels for biorthogonal Jacobi and biorthogonal Hermite ensembles [10]. When \(\theta =M\in \mathbb {N}\) or \(1/\theta = M\), the limiting kernels coincide with the hard edge scaling limits, for specific parameters, arising from products of M Ginibre matrices [33], as shown in [32].
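To make Wright’s function concrete, here is a minimal sketch that sums the series \(J_{a,b}(x)=\sum _{m\ge 0}(-x)^m/(m!\,\Gamma (a+bm))\) directly, assuming \(a>0\), \(b>0\) and \(x\ge 0\) (the helper name and truncation length are our own choices). For \(b=1\) the series gives \(J_{\alpha +1,1}(x)=x^{-\alpha /2}J_\alpha (2\sqrt{x})\), which is how the Bessel case \(\theta =1\) enters; at \(x=1\) this yields \(J_0(2)\) and \(J_1(2)\).

```python
import math

def wright_J(a, b, x, terms=60):
    # J_{a,b}(x) = sum_{m>=0} (-x)^m / (m! * Gamma(a + b*m)); assumes a > 0, b > 0, x >= 0
    if x == 0:
        return 1.0 / math.gamma(a)
    total = 0.0
    for m in range(terms):
        # magnitude of the m-th term via log-gamma, to avoid overflow for large m
        log_mag = m * math.log(x) - math.lgamma(m + 1) - math.lgamma(a + b * m)
        total += (-1) ** m * math.exp(log_mag)
    return total

# theta = 1: J_{alpha+1,1}(x) = x^{-alpha/2} J_alpha(2 sqrt(x)); x = 1 gives Bessel values at 2
print(wright_J(1.0, 1.0, 1.0))  # J_0(2)
print(wright_J(2.0, 1.0, 1.0))  # J_1(2)
```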

The macroscopic behavior of the particles as \(n\rightarrow \infty \) has recently been investigated in [13], where the expressions for the associated equilibrium measures are given for quite general potentials and \(\theta \ge 1\). According to [13], as \(n\rightarrow \infty \), the (rescaled) particles in (1.1) are distributed over a finite interval \([0,(1+\theta )^{1+1/\theta }]\), with the density function given by

Here, \(I_{\pm }(x)\) (with \(\mathrm {Im}\,(I_+(x))>0\)) stand for two complex conjugate solutions of the equation

Moreover, by [13, Remark 1.9], the density blows up at a rate \(x^{-1/(1+\theta )}\) near the origin (hard edge), while it vanishes as a square root near \((1+\theta )^{1+1/\theta }\) (soft edge). In particular, this suggests non-trivial hard edge scaling limits (as shown in (1.5)), as well as the expectation that the classical bulk and soft edge universality [31] (via the sine kernel and the Airy kernel, respectively) should hold in the bulk and at the right edge, as in the case \(\theta =1\). A more explicit description was later given in [21]. After the change of variables \(x_i \rightarrow \theta x_i^{1/\theta }\), the (rescaled) particles are distributed over \(\left[ 0,(1+\theta )^{1+\theta }/\theta ^\theta \right] \) and the limiting mean distribution is recognized as the Fuss–Catalan distribution [5, 7, 40]. Its kth moment is given by the Fuss–Catalan number

$$\frac{1}{\theta k+1}\binom{(1+\theta )k}{k}=\frac{\Gamma ((1+\theta )k+1)}{\Gamma (k+1)\,\Gamma (\theta k+2)},\qquad k=0,1,\ldots $$

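The density function of the Fuss–Catalan distribution can be written down explicitly in several ways; cf. [42] in terms of Meijer G-functions (see e.g. [8, 37, 41] and the “Appendix” below for a brief introduction) or [34] in terms of multivariate integrals. For general \(\theta \), the simplest representation is perhaps obtained from the following parametrization of the argument [9, 21, 25, 39]:

$$x=\frac{\left( \sin ((1+\theta )\varphi )\right) ^{1+\theta }}{\sin \varphi \,\left( \sin (\theta \varphi )\right) ^{\theta }},\qquad 0<\varphi <\frac{\pi }{1+\theta }. \tag{1.9}$$

It is readily seen that x is a strictly decreasing function of \(\varphi \), and thus (1.9) gives a one-to-one mapping from \((0, \pi /(1+\theta ))\) to \((0, (1 + \theta )^{1 + \theta }/\theta ^\theta )\). The density function in terms of \(\varphi \) is then given by

$$\rho (\varphi )=\frac{1}{\pi }\,\frac{(\sin \varphi )^2\left( \sin (\theta \varphi )\right) ^{\theta -1}}{\left( \sin ((1+\theta )\varphi )\right) ^{\theta }}. \tag{1.10}$$
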
From (1.9) and (1.10), one can check directly that \(\rho \) blows up at a rate \(x^{-\theta /(1+\theta )}\) near the origin and vanishes as a square root near \((1+\theta )^{1+\theta }/\theta ^\theta \), which is compatible with the change of variables. We finally note that another description of the macroscopic behavior, based on the notion of a DT-element [16], can be found in [12].
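The parametrized form can be checked numerically. The sketch below assumes the explicit expressions \(x(\varphi )=\sin ^{1+\theta }((1+\theta )\varphi )/(\sin \varphi \,\sin ^{\theta }(\theta \varphi ))\) and \(\rho (\varphi )=\sin ^2\varphi \,\sin ^{\theta -1}(\theta \varphi )/(\pi \sin ^{\theta }((1+\theta )\varphi ))\) on \(0<\varphi <\pi /(1+\theta )\), rewrites \(\int x^k\rho (x)\,\mathrm {d}x\) as an integral in \(\varphi \), and compares the result with the Fuss–Catalan numbers \(\Gamma ((1+\theta )k+1)/(\Gamma (k+1)\Gamma (\theta k+2))\). The quadrature (midpoint rule) and all function names are our own illustrative choices.

```python
import math

def x_of_phi(phi, theta):
    # x = sin((1+theta) phi)^(1+theta) / (sin(phi) * sin(theta phi)^theta)
    return math.sin((1 + theta) * phi) ** (1 + theta) / (math.sin(phi) * math.sin(theta * phi) ** theta)

def rho_of_phi(phi, theta):
    # rho = sin(phi)^2 sin(theta phi)^(theta-1) / (pi sin((1+theta) phi)^theta)
    return (math.sin(phi) ** 2 * math.sin(theta * phi) ** (theta - 1)
            / (math.pi * math.sin((1 + theta) * phi) ** theta))

def dx_dphi(phi, theta):
    # derivative of x(phi), computed from its logarithmic derivative
    t = theta
    log_deriv = ((1 + t) ** 2 / math.tan((1 + t) * phi) - 1 / math.tan(phi)
                 - t ** 2 / math.tan(t * phi))
    return x_of_phi(phi, t) * log_deriv

def moment(k, theta, n=20000):
    # int x^k rho(x) dx = int_0^{pi/(1+theta)} x(phi)^k rho(phi) |x'(phi)| dphi, midpoint rule
    h = math.pi / (1 + theta) / n
    total = 0.0
    for i in range(n):
        phi = (i + 0.5) * h
        total += x_of_phi(phi, theta) ** k * rho_of_phi(phi, theta) * abs(dx_dphi(phi, theta))
    return total * h

def fuss_catalan(k, theta):
    # Gamma((1+theta)k+1) / (Gamma(k+1) Gamma(theta k+2))
    return math.exp(math.lgamma((1 + theta) * k + 1) - math.lgamma(k + 1) - math.lgamma(theta * k + 2))

for theta in (1.0, 1.5, 2.0):
    print(theta, [round(moment(k, theta), 6) for k in range(4)],
          [round(fuss_catalan(k, theta), 6) for k in range(4)])
```

For \(\theta =1\) this reduces to the Marchenko–Pastur law on \([0,4]\), with \(x=4\cos ^2\varphi \) and \(\rho =\tan \varphi /(2\pi )\).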

The main aim of this paper is to establish local universality for the biorthogonal Laguerre ensembles (1.1). Due to the lack of a simple Christoffel–Darboux formula for Laguerre biorthogonal polynomials, we have to adopt an approach that is different from the conventional one. The key ingredient is an explicit integral representation of \(K_n^{(\alpha ,\theta )}\). Our main results are stated in the next section.

1.2 Statement of the Main Results

Our first result is stated as follows:

Theorem 1.1

(Double contour integral representation of \(K_n^{(\alpha ,\theta )}\)) With \(K_n^{(\alpha ,\theta )}\) defined in (1.4), we have

for \(x,y>0\), where

and \(\Sigma \) is a closed contour going around \(0, 1, \ldots , n-1\) in the positive direction and \(\mathrm {Re}\,t > c\) for \(t \in \Sigma \).

We highlight that this contour integral representation resembles those appearing recently in studies of products of random matrices [20, 28, 32, 33], where the integrands of the double contour integral representations for the correlation kernels again consist of ratios of gamma functions. When \(\theta \in \mathbb {N}\), \(K_n^{(\alpha ,\theta )}\) is indeed related to certain correlation kernels arising from products of Ginibre matrices; see Sect. 3 below. We also note that, in the context of products of random matrices, the correlation kernels can be written as integrals involving Meijer G-functions; for biorthogonal Laguerre ensembles, however, this does not seem to be the case for general parameters \(\alpha \) and \(\theta \).

An immediate consequence of the above theorem is the following new representations of hard edge scaling limits.

Corollary 1.2

(Hard edge scaling limits of \(K_n^{(\alpha ,\theta )}\)) With \(\alpha >-1\), \(\theta \ge 1\) being fixed, we have

uniformly for x, y in compact subsets of the positive real axis, where

and where c is given in (1.12), \(\Sigma \) is a contour starting from \(+\infty \) in the upper half plane and returning to \(+\infty \) in the lower half plane which encircles the positive real axis and \(\mathrm {Re}\,t>c\) for \(t\in \Sigma \). Alternatively, by setting

and

where \(\gamma \) is a loop starting from \(-\infty \) in the lower half plane and returning to \(-\infty \) in the upper half plane which encircles the negative real axis, we have

In Corollary 1.2, we require \(\theta \ge 1\) to ensure that the integrals converge. Note that when \(\theta =1\), we have (see [41, formula 10.9.23])

where \(J_\alpha \) denotes the Bessel function of the first kind of order \(\alpha \). It then follows from (1.17) that

where

is the Bessel kernel of order \(\alpha \) that appears as the scaling limit of the Laguerre unitary ensembles at the hard edge [18, 50], as expected. Furthermore, a comparison of (1.5) and (1.13)–(1.14) gives us the following identity

For a direct proof of the above formula, see Remark 3.2 below.

We believe that the new integral representations (1.14) and (1.17) for \(K^{(\alpha ,\theta )}\) will also facilitate further investigations of related quantities, for example, the differential equations for the associated Fredholm determinants, as done in [48, 49, 51]. These aspects will be the topics of future research.

By performing an asymptotic analysis of the double contour integral representation (1.11), we are able to confirm the bulk and soft edge universality for biorthogonal Laguerre ensembles, which was left open in [13]. The relevant results are stated as follows.

Theorem 1.3

(Bulk and soft edge universality) For \(x_0\in (0, ( 1+\theta )^{1+\theta }/\theta ^\theta )\), which is parameterized through (1.9) by \(\varphi = \varphi (x_0) \in (0, \pi /(1+\theta ))\), we have, with \(\alpha ,\theta \) being fixed,

uniformly for \(\xi \) and \(\eta \) in any compact subset of \(\mathbb {R}\), where \(\rho (\varphi )\) is defined in (1.10) and

is the normalized sine kernel.

For the soft edge, we have

uniformly for \(\xi \) and \(\eta \) in any compact subset of \(\mathbb {R}\), where

and

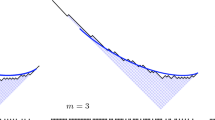

is the Airy kernel. In (1.23), \(\gamma _R\) and \(\gamma _L\) are symmetric with respect to the imaginary axis, and \(\gamma _R\) is a contour in the right half-plane going from \(e^{-\pi i/3}\cdot \infty \) to \(e^{\pi i/3}\cdot \infty \); see Fig. 1 for an illustration.
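As a numerical illustration of the Airy kernel (our own sketch, not part of the paper's argument), the code below evaluates \(\mathrm {Ai}\) and \(\mathrm {Ai}'\) from the contour integral \(\mathrm {Ai}(x)=\frac{1}{2\pi i}\int _{\gamma _R}e^{s^3/3-xs}\,\mathrm {d}s\), with \(\gamma _R\) deformed onto the rays \(\arg s=\pm \pi /3\), and compares the Christoffel–Darboux form \((\mathrm {Ai}(x)\mathrm {Ai}'(y)-\mathrm {Ai}'(x)\mathrm {Ai}(y))/(x-y)\) with \(\int _0^\infty \mathrm {Ai}(x+u)\mathrm {Ai}(y+u)\,\mathrm {d}u\). The latter is what a double contour representation produces after writing \(1/(s-t)=\int _0^\infty e^{-u(s-t)}\,\mathrm {d}u\) when \(\gamma _R\) lies to the right of \(\gamma _L\). Truncation points, step counts and helper names are assumptions chosen for illustration.

```python
import cmath
import math

def airy_pair(x, r_max=6.0, n=600):
    # Ai(x), Ai'(x) for real x from (1/2 pi i) int_{gamma_R} exp(s^3/3 - x s) ds, with gamma_R
    # deformed onto s = r e^{-i pi/3} (incoming) and s = r e^{i pi/3} (outgoing).
    # For real x the two rays contribute complex conjugates, so only the upper ray is summed.
    w = cmath.exp(1j * math.pi / 3)
    h = r_max / n                      # n must be even for Simpson's rule
    f0 = 0j
    f1 = 0j
    for i in range(n + 1):
        r = i * h
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)
        e = cmath.exp(-r ** 3 / 3 - x * r * w)   # s^3 = -r^3 on this ray
        f0 += weight * e
        f1 += weight * (-r * w) * e              # d/dx of the integrand brings down -s
    f0 *= h / 3
    f1 *= h / 3
    return (w * f0).imag / math.pi, (w * f1).imag / math.pi

def airy_kernel_cd(x, y):
    # (Ai(x) Ai'(y) - Ai'(x) Ai(y)) / (x - y), for x != y
    ax, dax = airy_pair(x)
    ay, day = airy_pair(y)
    return (ax * day - dax * ay) / (x - y)

def airy_kernel_int(x, y, u_max=10.0, n=400):
    # int_0^inf Ai(x+u) Ai(y+u) du, truncated at u_max, Simpson's rule
    h = u_max / n
    total = 0.0
    for i in range(n + 1):
        weight = 1 if i in (0, n) else (4 if i % 2 else 2)
        total += weight * airy_pair(x + i * h)[0] * airy_pair(y + i * h)[0]
    return total * h / 3

print(airy_pair(0.0))
print(airy_kernel_cd(0.3, -0.7), airy_kernel_int(0.3, -0.7))
```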

In the special case \(\theta =2\), \(\alpha \in \mathbb {N}\cup \{0 \}\), the bulk universality was first proved in [36].

Remark 1.1

The result of soft edge universality (1.21) also implies that the limiting distribution of the largest particle in biorthogonal Laguerre ensembles, after proper scaling, converges to the well-known Tracy–Widom distribution [6, Theorem 3.1.5].

1.3 Organization of the Rest of the Paper

The rest of this paper is organized as follows. Our main results are proved in Sect. 2. The proofs of Theorem 1.1 and Corollary 1.2 are given in Sects. 2.2 and 2.3, respectively, and rely on two propositions, established in Sect. 2.1, concerning contour integral representations of \(p_k^{(\alpha ,\theta )}\) and \(q_k^{(\alpha ,\theta )}\). These formulas may be viewed as extensions of those for the intensively studied case \(\theta \in \mathbb {N}\), and we give direct proofs here. Their nice structure then allows us to simplify (1.4) into a closed integral form and to obtain the hard edge scaling limits, following the idea in the recent work of the author with Kuijlaars [33]. The bulk and soft edge universality stated in Theorem 1.3 is proved in Sect. 2.4. We perform a steepest descent analysis of the double contour integral (1.11), whose integrand consists of products and ratios of gamma functions with large arguments. It turns out that the strategy developed by Liu et al. [35] (see also [1]) works well in the present case. Roughly speaking, the strategy is to approximate the logarithms of the gamma functions for large n by elementary functions, which play the role of phase functions. There are two complex conjugate saddle points in the bulk regime, corresponding to the sine kernel, while in the edge regime these two saddle points coalesce into a single one, which leads to the Airy kernel. A crucial feature of the analysis is the construction of suitable integration contours with the aid of the parametrization (1.9). Since the asymptotic analysis is carried out in a manner similar to that in [35], we emphasize the key steps and basic ideas in the proof of Theorem 1.3 and refer to [35] for some technical issues.

Finally, in Sect. 3 we focus on the case \(\theta =M \in \mathbb {N}\) and relate \(K_n^{(\alpha ,M)}\) to correlation kernels, with specific parameters, arising from products of M Ginibre matrices. We conclude the paper with some remarks based on this relation. For the convenience of the reader, we include a short introduction to the Meijer G-function in the “Appendix”.

2 Proofs of the Main Results

2.1 Contour Integral Representations of \(p_k^{(\alpha ,\theta )}(x)\) and \(q_k^{(\alpha ,\theta )}(x)\)

Proposition 2.1

We have for \(x>0\),

where \(\Sigma \) is a closed contour that encircles \(0, 1, \ldots , k\) once in the positive direction.

Proof

The first identity in (2.1) follows from the determinantal expressions for the polynomials \(q_k^{(\alpha ,\theta )}\). By setting the bimoments

we define

From the general theory of biorthogonal polynomials (cf. [15, Proposition 2]), it follows that

with \(q_0^{(\alpha ,\theta )}(x)=1\). With the aid of the functional relation

$$\Gamma (z+1)=z\,\Gamma (z), \tag{2.5}$$

a simple Gaussian elimination gives us

Similarly, by expanding the matrix in (2.4) along the last row and evaluating the associated minors, it follows

see also [30] for a proof of (2.7) by directly checking the orthogonality when \(\theta =M\).

To show the second identity in (2.1), we note that the integrand on the right-hand side of (2.1) is meromorphic on \(\mathbb C\) with simple poles at \(0, 1, \ldots , k\) (the poles of the numerator at the negative integers are canceled by the poles of the factor \(\Gamma (t+1)\) in the denominator). Hence, by the residue theorem and a straightforward calculation, we obtain

This completes the proof of Proposition 2.1. \(\square \)
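The residue evaluation produces a finite sum for \(q_k^{(\alpha ,\theta )}\). As a hedged illustration, the sketch below uses the closed form \(q_k(x)=\sum _{i=0}^k(-1)^{k-i}\binom{k}{i}\frac{\Gamma (\alpha +1+k\theta )}{\Gamma (\alpha +1+i\theta )}x^i\), which we derived independently: it is monic, and \(\int _0^\infty x^j q_k(x^\theta )x^\alpha e^{-x}\,\mathrm {d}x=\Gamma (\alpha +1+k\theta )\sum _i(-1)^{k-i}\binom{k}{i}(\alpha +1+i\theta )_j\) is a kth finite difference of a polynomial of degree \(j<k\) in i, hence zero. We expect it to agree with (2.7), but the function names and parameter values below are ours. The snippet verifies the orthogonality through the moments \(\Gamma (\alpha +1+j+i\theta )\) and compares \(\theta =1\) with the monic Laguerre polynomial \(x^2-5x+3.75\) for \(\alpha =0.5\).

```python
import math

def q_coeffs(k, alpha, theta):
    # c_i in q_k(x) = sum_i c_i x^i, with
    # c_i = (-1)^(k-i) * C(k, i) * Gamma(alpha+1+k*theta) / Gamma(alpha+1+i*theta)
    log_top = math.lgamma(alpha + 1 + k * theta)
    return [(-1) ** (k - i) * math.comb(k, i) * math.exp(log_top - math.lgamma(alpha + 1 + i * theta))
            for i in range(k + 1)]

def pairing(j, k, alpha, theta):
    # int_0^inf x^j q_k(x^theta) x^alpha e^{-x} dx = sum_i c_i Gamma(alpha+1+j+i*theta);
    # also returns sum |terms| as a scale for the rounding error
    terms = [c * math.gamma(alpha + 1 + j + i * theta) for i, c in enumerate(q_coeffs(k, alpha, theta))]
    return sum(terms), sum(abs(t) for t in terms)

alpha, theta = 0.4, 2.3
for k in range(1, 5):
    print(k, [pairing(j, k, alpha, theta)[0] for j in range(k)])  # all entries should be ~0
print(q_coeffs(2, 0.5, 1.0))  # monic Laguerre case: x^2 - 5x + 3.75
```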

Proposition 2.2

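For \( p_k^{(\alpha ,\theta )}\), we have the following Mellin–Barnes integral representation

$$x^\alpha e^{-x}p_k^{(\alpha ,\theta )}(x)=\frac{1}{2\pi i\,\Gamma (\alpha +1+k\theta )\,k!}\int _{c-i\infty }^{c+i\infty }\frac{\Gamma \left( \frac{s}{\theta }+1-\frac{1}{\theta }\right) }{\Gamma \left( \frac{s}{\theta }+1-\frac{1}{\theta }-k\right) }\Gamma (\alpha +s)x^{-s}\,\mathrm {d}s, \tag{2.9}$$

where \(c > \max \{-\alpha , 1-\theta \}\) and \(x>0\).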

Proof

Since all the poles of the integrand lie to the left of the line \(\mathrm {Re}\,s = c\), the integral on the right-hand side of (2.9) is well defined. By the uniqueness of biorthogonal functions, our strategy is to check that the integral representation satisfies

- the orthogonality conditions

$$\frac{1}{2\pi i\, \Gamma (\alpha +1+k\theta )\, k!}\int _0^\infty x^{j\theta } \int _{c-i\infty }^{c+i\infty } \frac{\Gamma \left( \frac{s}{\theta }+1-\frac{1}{\theta }\right) }{\Gamma \left( \frac{s}{\theta }+1-\frac{1}{\theta }-k\right) } \Gamma (\alpha +s)x^{-s} \,\mathrm {d}s \,\mathrm {d}x =\delta _{j,k}, \tag{2.10}$$

  for \(j=0,1,\ldots ,k\);

- the integral \(\frac{1}{2\pi i } \int _{c-i\infty }^{c+i\infty } \frac{\Gamma \left( \frac{s}{\theta }+1-\frac{1}{\theta }\right) }{\Gamma \left( \frac{s}{\theta }+1-\frac{1}{\theta }-k\right) } \Gamma (\alpha +s)x^{-s} \,\mathrm {d}s\) belongs to the linear span of \(x^\alpha e^{-x}, x^{\alpha +1}e^{-x}, \ldots , x^{\alpha +k}e^{-x}\).

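To show (2.10), we make use of the inversion formula for the Mellin transform (with \(x^{j\theta }=x^{z-1}\), \(z=j\theta +1\)) and obtain

$$\int _0^\infty x^{j\theta }\,\frac{1}{2\pi i}\int _{c-i\infty }^{c+i\infty }\frac{\Gamma \left( \frac{s}{\theta }+1-\frac{1}{\theta }\right) }{\Gamma \left( \frac{s}{\theta }+1-\frac{1}{\theta }-k\right) }\Gamma (\alpha +s)x^{-s}\,\mathrm {d}s\,\mathrm {d}x=\frac{\Gamma (j+1)}{\Gamma (j+1-k)}\,\Gamma (\alpha +1+j\theta )=\begin{cases} 0, & j<k,\\ k!\,\Gamma (\alpha +1+k\theta ), & j=k, \end{cases}$$

which is equivalent to (2.10).
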
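To check the second statement, recall the Pochhammer symbol \((a)_k=\frac{\Gamma (a+k)}{\Gamma (a)}=a(a+1)\cdots (a+k-1)\). It is then readily seen that

$$\frac{\Gamma \left( \frac{s}{\theta }+1-\frac{1}{\theta }\right) }{\Gamma \left( \frac{s}{\theta }+1-\frac{1}{\theta }-k\right) }=\left( \frac{s}{\theta }+1-\frac{1}{\theta }-k\right) _k$$

is a polynomial of degree k in s, so the integral is a linear combination of the functions \(w_j^{(\alpha )}(x)\), \(j=0,\ldots ,k\), where

$$w_j^{(\alpha )}(x)=\frac{1}{2\pi i}\int _{c-i\infty }^{c+i\infty }s^j\,\Gamma (\alpha +s)\,x^{-s}\,\mathrm {d}s. \tag{2.12}$$
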
Thus, it suffices to check that \(w_j^{(\alpha )}(x)\) belongs to the linear span of \(x^\alpha e^{-x}, x^{\alpha +1}e^{-x}, \ldots , x^{\alpha +j}e^{-x}\). We now expand the monomial \(s^j\) in terms of the basis \((\alpha +s)_l\), \(l=0,\ldots ,j\), i.e.,

$$s^j=\sum _{l=0}^{j}a_l\,(\alpha +s)_l,$$

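for some constants \(a_l\) with \(a_j=1\). Inserting the above formula into (2.12), it follows that

$$w_j^{(\alpha )}(x)=\sum _{l=0}^{j}a_l\,\frac{1}{2\pi i}\int _{c-i\infty }^{c+i\infty }\Gamma (\alpha +s+l)\,x^{-s}\,\mathrm {d}s=\sum _{l=0}^{j}a_l\,x^{\alpha +l}e^{-x},$$

as desired, where we have made use of the fact that

$$\frac{1}{2\pi i}\int _{c-i\infty }^{c+i\infty }\Gamma (\alpha +s+l)\,x^{-s}\,\mathrm {d}s=x^{\alpha +l}e^{-x},\qquad c>-\alpha ;$$
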
see (4.4) below.

This completes the proof of Proposition 2.2. \(\square \)
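Closing the contour in (2.9) to the left picks up the poles \(s=-\alpha -m\), \(m=0,1,\ldots \), of \(\Gamma (\alpha +s)\), while the gamma ratio is the polynomial \(\pi _k(s)=\left( \frac{s}{\theta }+1-\frac{1}{\theta }-k\right) _k\). For a polynomial g, \(\sum _{m\ge 0}\frac{y^m}{m!}g(m)=e^y\sum _l\frac{y^l}{l!}\Delta ^l g(0)\), so the residue series collapses to \(p_k^{(\alpha ,\theta )}(x)=\frac{1}{\Gamma (\alpha +1+k\theta )\,k!}\sum _{l=0}^k\frac{(-x)^l}{l!}\Delta ^l g(0)\) with \(g(m)=\pi _k(-\alpha -m)\). The sketch below is our own illustration of this computation (helper names are hypothetical); it checks the conditions (2.10) using the moments \(\Gamma (\alpha +1+l+j\theta )\), and for \(\theta =1\), \(k=1\) it reproduces \(p_1(x)=(x-\alpha -1)/\Gamma (\alpha +2)\).

```python
import math

def rising(a, k):
    # Pochhammer symbol (a)_k = a (a+1) ... (a+k-1)
    out = 1.0
    for i in range(k):
        out *= a + i
    return out

def p_coeffs(k, alpha, theta):
    # g(m) = (s/theta + 1 - 1/theta - k)_k evaluated at the pole s = -alpha - m, m = 0..k
    g = [rising((-alpha - m - 1) / theta + 1 - k, k) for m in range(k + 1)]
    # forward differences Delta^l g(0), l = 0..k
    diffs, row = [], g
    for _ in range(k + 1):
        diffs.append(row[0])
        row = [row[i + 1] - row[i] for i in range(len(row) - 1)]
    norm = math.gamma(alpha + 1 + k * theta) * math.factorial(k)
    # p_k(x) = (1/norm) * sum_l Delta^l g(0) (-x)^l / l!
    return [diffs[l] * (-1) ** l / (math.factorial(l) * norm) for l in range(k + 1)]

def pairing(j, k, alpha, theta):
    # int_0^inf x^{j theta} p_k(x) x^alpha e^{-x} dx = sum_l c_l Gamma(alpha+1+l+j*theta); cf. (2.10)
    return sum(c * math.gamma(alpha + 1 + l + j * theta) for l, c in enumerate(p_coeffs(k, alpha, theta)))

alpha, theta = 0.4, 2.3
for k in range(5):
    print(k, [round(pairing(j, k, alpha, theta), 12) for j in range(k + 1)])  # expect [0, ..., 0, 1]
```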

2.2 Proof of Theorem 1.1

With a change of variable \(s\rightarrow \theta s+1-\theta \) in (2.9) and contour deformation, we rewrite \(x^\alpha e^{-x} p_k^{(\alpha ,\theta )}(x)\) as

where \(c>\max \{0,1-\frac{\alpha +1}{\theta }\}\). This, together with (1.4) and (2.1), implies that

We now follow the idea in [33]. From the functional equation (2.5), one can easily check that

which means that there is a telescoping sum

To make sure that \(s-t-1 \ne 0\) when \(s \in c + i\mathbb R\) and \(t \in \Sigma \), we proceed as follows. Since \(\max \{0,1-\frac{\alpha +1}{\theta }\}<1\) for \(\alpha >-1\) and \(\theta >0\), we take

and let \(\Sigma \) go around \(0, 1, \ldots , n-1\) but with \(\mathrm {Re}\,t > c-1\) for \(t \in \Sigma \). Then we insert (2.16) into (2.15) and get

The t-integral in the second double integral vanishes by Cauchy’s theorem, since the integrand has no singularities inside \(\Sigma \). With the change of variable \(s \mapsto s+1\) in the first double integral, we obtain (1.11).

This completes the proof of Theorem 1.1.

2.3 Proof of Corollary 1.2

The proof is now straightforward by taking limits in (1.11), as in [33]. Recall the reflection formula of the gamma function

$$\Gamma (z)\Gamma (1-z)=\frac{\pi }{\sin (\pi z)},\qquad z\notin \mathbb {Z},$$

it is readily seen that

As \(n \rightarrow \infty \), we have (cf. [41, formula 5.11.13])

which can be easily verified by using Stirling’s formula for the gamma function. By modifying the contour \(\Sigma \) in (1.11) from a closed contour around \(0, 1, \ldots , n-1\) to a two-sided unbounded contour starting from \(+\infty \) in the upper half plane and returning to \(+\infty \) in the lower half plane, which encircles the positive real axis and satisfies \(\mathrm {Re}\,t>c\) for \(t\in \Sigma \), we obtain the scaling limits (1.14). The interchange of limit and integrals can be justified by combining elementary estimates of the sine and gamma functions with the dominated convergence theorem, as explained in [33].
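The \(n\rightarrow \infty \) limit used here rests on the standard large-z behaviour \(\Gamma (z+a)/\Gamma (z+b)\sim z^{a-b}\) [41, formula 5.11.13], whose first correction term is \((a-b)(a+b-1)/(2z)\). A minimal Python check in log form follows; the values of a, b and the helper name are our own illustrative choices.

```python
import math

def scaled_ratio(z, a, b):
    # Gamma(z+a)/Gamma(z+b) * z^(b-a), computed via log-gamma; tends to 1 as z -> +inf
    return math.exp(math.lgamma(z + a) - math.lgamma(z + b) + (b - a) * math.log(z))

a, b = 0.3, -1.2
for z in (1e2, 1e3, 1e4):
    r = scaled_ratio(z, a, b)
    # z * (r - 1) should approach (a - b)(a + b - 1)/2 = -1.425
    print(z, r, z * (r - 1))
```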

To show (1.17), we note that

and, by (2.17),

Inserting the above two formulas into (1.14), it is readily seen that

The change of variables \(s \mapsto \theta s+1\) and \(t \mapsto -\theta t\) takes the two integrals into the two functions \(p^{(\alpha ,\theta )}\) and \(q^{(\alpha ,\theta )}\) defined in (1.15) and (1.16), respectively. The identity (1.17) then follows.

This completes the proof of Corollary 1.2.

2.4 Proof of Theorem 1.3

We start with a scaling of the correlation kernel \(K_n^{(\alpha ,\theta )}(x,y) \rightarrow K_n^{(\alpha ,\theta )}(\theta x^{\frac{1}{\theta }},\theta y^{\frac{1}{\theta }})\). By (1.11), it then follows that

where \(\mathcal {C}\) and \(\Sigma \) are two contours to be specified later, depending on the choices of reference points.

By setting

where the branch cut for the logarithmic function is taken along the negative axis and the value of \(\log z\) for \(z \in (-\infty , 0)\) is understood as the continuation from above, we can rewrite (2.21) as

We will then perform an asymptotic analysis of (2.23). The basic idea is as follows. The function F in (2.23) plays the role of a phase function. For large z and proper scalings, F can be approximated by a more elementary function \(\hat{F}\) (see (2.29) below) with the help of Stirling’s formula for the gamma function. In general, \(\hat{F}\) has two complex conjugate saddle points \(w_{\pm }\) (see (2.32) below). In the proof of bulk universality, the two contours are deformed so that one of them passes through the pair of saddle points. It turns out that the main contribution to the integral comes not from the saddle points alone, but from the vertical line segment connecting them. In the proof of soft edge universality, the two saddle points coalesce into a single real one. The phase function then behaves like a cubic polynomial around the saddle point (see (2.47) below), which explains the appearance of the Airy kernel.

We also note the possible contour deformations in (2.23). Firstly, the integration contour for s can be replaced by any infinite contour \(\mathcal {C}\) oriented from \(-i\infty \) to \(i\infty \), as long as \(\Sigma \) is on the right side of \(\mathcal {C}\). One can further deform \(\mathcal {C}\) so that \(\Sigma \) is on its left, and the resulting double contour integral remains the same. To see this, let \(\mathcal {C}\) and \(\mathcal {C}'\) be two infinite contours from \(-i\infty \) to \(i\infty \) such that \(\Sigma \) lies between \(\mathcal {C}\) and \(\mathcal {C}'\). Applying the residue theorem to the integral over \(\mathcal {C} \cup \mathcal {C}'\) gives

Hence, the double contour integral does not change if \(\mathcal {C}\) is replaced by \(\mathcal {C}'\). We will use this kind of contour deformation in the proof of the soft edge universality. Similarly, one can show that if \(\Sigma \) is split into two disjoint closed counterclockwise contours \(\Sigma = \Sigma _1 \cup \Sigma _2\), which jointly enclose the poles \(0, 1, \ldots , n-1\), and \(\mathcal {C}\) is an infinite contour from \(-i\infty \) to \(i\infty \) such that \(\Sigma _1\) is on the left side of \(\mathcal {C}\) and \(\Sigma _2\) is on the right side of \(\mathcal {C}\), then formula (2.23) is still valid. We will use such contours in the proof of the bulk universality.

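We now derive the asymptotic behavior of F. Recall that Stirling’s formula for the gamma function [41, formula 5.11.1] reads

$$\log \Gamma (z)=\left( z-\frac{1}{2}\right) \log z-z+\frac{1}{2}\log (2\pi )+\mathcal {O}(z^{-1}),$$
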
as \(z\rightarrow \infty \) in the sector \(|\arg z |\le \pi -\epsilon \) for some \(\epsilon > 0\). It then follows that if \(|z |\rightarrow \infty \) and \(|z - n |\rightarrow \infty \), while \(\arg z\) and \(\arg (z - n)\) are in \((-\pi + \epsilon , \pi - \epsilon )\), then uniformly

where

Furthermore, we have

where

Note that if \(\theta =M\in \mathbb {N}\), we encounter the same \(\tilde{F}(z; a)\) and \(\hat{F}(z; a)\) as in [35].

Since

the saddle point of \(\hat{F}(z;x)\) satisfies the equation

In particular, if \(x=x_0\in (0, (1 + \theta )^{1 + \theta }/\theta ^\theta )\), which is parameterized through (1.9) by \(\varphi = \varphi (x_0) \in (0, \pi /(1+\theta ))\), one can find two complex conjugate solutions of (2.31) explicitly given by

For later use, we also define a closed contour

which passes through \(w_\pm \) and intersects the real line only at 0 (when \(\phi = \pm \pi /(1 + \theta )\)) and at \(1 + \theta ^{-1}\) (when \(\phi = 0\)). Since 0 is one of the poles of the integrand of (2.23), we further deform \(\tilde{\Sigma }\) slightly near the origin by setting

with counterclockwise orientation.

With the above preparations, we are ready to prove the bulk and soft edge universality for \(K_n^{(\alpha ,\theta )}\).

Proof of (1.19) In view of (1.19), we scale the arguments x and y in (2.23) such that

where \(\xi \) and \(\eta \) are in a compact subset of \(\mathbb {R}\) and \(\rho (\varphi )\) is given in (1.10).

The contours \(\mathcal {C}\) and \(\Sigma \) are chosen as follows. The contour \(\mathcal {C}\) is simply taken to be an upward straight line passing through the two scaled saddle points \(n w_{\pm }\). This line divides \(n \tilde{\Sigma }^{r}\) into two parts, where r is a small parameter depending on \(\theta \). By further separating these two parts, we define

where \(\Sigma _{{{\mathrm{cur}}}}\) is the part coming from \(n \tilde{\Sigma }^{r}\), and \(\Sigma _{{{\mathrm{ver}}}}\) consists of two vertical segments connecting the endpoints of \(\Sigma _{{{\mathrm{cur}}}}\). The distance between these two segments is taken to be \(2\epsilon \), with \(\mathcal {C}\) lying midway between them; see Fig. 2 for an illustration. The key point is that, with these choices of \(\mathcal {C}\) and \(\Sigma \), \(\mathrm {Re}\,\hat{F}(z; x_0)\) defined in (2.29) attains its global maximum at \(z=w_{\pm }\) for \(nz \in \mathcal {C}\) and its global minimum at \(z = w_{\pm }\) for \(z \in \tilde{\Sigma }\), which can be proved rigorously with estimates as in [35, Lemma 3.1].

Letting \(\epsilon \rightarrow 0\), we obtain

where (\({{\mathrm{p.v.}}}\) means the Cauchy principal value)

and, by interchanging the order of integration and applying Cauchy’s theorem,

Here we note that, as \(\epsilon \rightarrow 0\), the vertical segment \((nw_-,nw_+)\) is enclosed by \(\Sigma _{{{\mathrm{ver}}}}\); hence Cauchy’s theorem is applicable in the first step.

With the values of x, y given in (2.35) and \(w_\pm \) given in (2.32), a straightforward calculation gives us

On the other hand, in a manner similar to the estimates in [35, Lemma 2.1], one can show that \(\mathrm {Re}\,F(z; n^\theta x_0)\) attains its global maximum at \(z=nw_{\pm }\) for \(z \in \mathcal {C}\) and its global minimum at \(z = n w_{\pm }\) for \(z \in \tilde{\Sigma }\), which leads to \(I_1(z)=\mathcal {O}(n^{-1/2})\). This, together with (2.37) and (2.40), implies (1.19).

Proof of (1.21) On account of the scalings of x, y in (1.21), we set

where \(\xi ,\eta \in \mathbb {R}\), \(x_*\) and \(c_*\) are given in (1.22).

In this case, the two saddle points \(w_\pm \) coalesce into a single one, i.e.,

We now select the contours \(\Sigma \) and \(\mathcal {C}\) as illustrated in Fig. 3.

The contour \(\Sigma \) is still a deformation of \(n\tilde{\Sigma }^{r}\), while near the scaled saddle point \(nz_0\), the local part \(\Sigma _{{{\mathrm{loc}}}}\) is defined by

where

The contour \(\mathcal {C}\) is obtained by deforming a straight line. Around \(nz_0\), the local part is defined by

As in [35, Eq. 2.69], one can show that the main contribution to the integral (2.23), as \(n\rightarrow \infty \), comes from the part \(\mathcal {C}_{\mathrm {loc}} \times \Sigma _{\mathrm {loc}}\), and the remaining part of the integral is negligible. When \((s,t)\in \mathcal {C}_{\mathrm {loc}} \times \Sigma _{\mathrm {loc}}\), we can approximate \(F(s; n^\theta x_*)\) and \(F(t; n^\theta x_*)\) by \(\tilde{F}\) given in (2.26), and further by \(\hat{F}\) that is defined in (2.29).

With \(z_0\) given in (2.42), it is readily seen that

Hence,

By changes of variables

it follows from (2.23), (2.26), (2.41), (2.44) and (2.47) that

where \(\Sigma _0\) and \(\mathcal {C}_0\) are the images of \(\Sigma _{\mathrm {loc}}\) and \(\mathcal {C}_{\mathrm {loc}}\) (see (2.43) and (2.45)) under the change of variables (2.48), and the last equality follows from the integral representation of the Airy kernel in (1.23).

This completes the proof of Theorem 1.3.

3 The Cases when \(\theta =M\in \mathbb {N}\)

In this section, we show a remarkable connection between \(K^{(\alpha ,\theta )}_n\) and the correlation kernels arising from products of Ginibre random matrices when \(\theta \in \mathbb {N}\). In the limiting case, this relation was established in [32]. Our result gives new insight into the relation between these two different determinantal point processes. In particular, it provides another perspective on the appearance of the Fuss–Catalan distribution in biorthogonal Laguerre ensembles; see Remark 3.1 below. We start with an introduction to the correlation kernels appearing in recent investigations of products of Ginibre matrices.

3.1 Correlation Kernels Arising from Products of M Ginibre Matrices

Let \(X_j\), \(j=1,\ldots ,M\) be independent complex matrices of size \((n+\nu _j)\times (n+\nu _{j-1})\) with \(\nu _0=0\) and \(\nu _j\ge 0\). Each matrix has independent and identically distributed standard complex Gaussian entries. These matrices are also known as Ginibre random matrices. We then form the product

When \(M=1\), \(Y_1 = X_1\) defines the Wishart–Laguerre unitary ensemble and it is well-known that the squared singular values of \(Y_1\) form a determinantal point process with the correlation kernel expressed in terms of Laguerre polynomials. Recent studies show that the determinantal structures still hold for general M [2, 4]. According to [2], the joint probability density function of the squared singular values is given by (see [2, formula 18])

where the function \(w_k\) is a Meijer G-function

and the normalization constant (see [2, formula 21]) is

Note that the Meijer G-function \(w_k(x)\) can be written as a Mellin–Barnes integral

with \(c > 0\). As a consequence of (4.4), it is readily seen that if \(M=1\), (3.2) is equivalent to (1.1) with \(\theta =1\).

The determinantal point process (3.2) again is a biorthogonal ensemble. Hence, one can write the correlation kernel as

where \(\nu \) stands for the collection of parameters \(\nu _1,\ldots ,\nu _M\) and the biorthogonal functions \(P_k^\nu \) and \(Q_k^\nu \) are defined as follows. For each \(k = 0, 1, \ldots ,n-1\), \(P_k^\nu \) is a monic polynomial of degree k and \(Q_k^\nu \) is a linear combination of \(w_0, \ldots , w_k\), uniquely determined by the orthogonality

In particular, we have the following explicit formulas of \(P_k^\nu \) and \(Q_k^\nu \) in terms of Meijer G-functions [2]:

and

where

is the generalized hypergeometric function with

being the Pochhammer symbol; see (4.2) for the second equality in (3.8). The polynomials \(P_k^\nu \) can also be interpreted as multiple orthogonal polynomials [26] with respect to the first M weight functions \(w_j\), \(j=0,\ldots ,M-1\), as shown in [33]. More properties of these polynomials (or of their special cases) can be found in [14, 33, 39, 52, 53, 55].

With the aid of (3.7) and (3.8), it is shown in [33, Proposition 5.1] that the correlation kernel admits the following double contour integral representation

where \(\Sigma \) is a closed contour going around \(0, 1, \ldots , n-1\) in the positive direction and \(\mathrm {Re}\,t > -1/2\) for \(t \in \Sigma \). For recent progress in the study of products of random matrices, see [3].

We point out that the kernel (3.11) (as well as the biorthogonal functions \(P_k^\nu \) and \(Q_k^\nu \)) is well defined as long as \(\nu _i>-1\), \(i=1,\ldots ,M\), and has a random matrix interpretation if the \(\nu _i\) are non-negative integers, in which case the particles correspond to the squared singular values of the matrix \(Y_M\).

3.2 Connections Between \(K_n^{(\alpha ,M)}\) and \(K_n^\nu \)

Our final result of this paper is stated as follows.

Theorem 3.1

(Relating \(K_n^{(\alpha ,M)}\) to \(K_n^\nu \)) Let \(p_k^{(\alpha ,\theta )}\), \(q_k^{(\alpha ,\theta )}\), \(P_k^\nu \) and \(Q_k^\nu \) be the functions defined through the biorthogonality conditions (1.3) and (3.6), respectively. If \(\theta =M\in \mathbb {N}\), we have

where the parameter \(\tilde{\nu }\) is given by an arithmetic sequence

As a consequence, we have

where \(K_n^{(\alpha ,\theta )}\) and \(K_n^\nu \) are two correlation kernels defined in (1.4) and (3.5), respectively.

Proof

Suppose now that the parameters in \(P_k^\nu \) are given by \(\tilde{\nu }\) in (3.13). We then see from (3.8) that

where the second equality follows from the definition (3.10) of the Pochhammer symbol. In view of Gauss's multiplication formula [41, formula 5.5.6]

$$\begin{aligned} \Gamma (nz)=(2\pi )^{(1-n)/2}\,n^{nz-1/2}\prod _{k=0}^{n-1}\Gamma \left( z+\frac{k}{n}\right) \end{aligned}\tag{3.16}$$

with \(z=i+\frac{\alpha +1}{M}\) and \(n=M\), we can further simplify (3.15) to get

Combining (3.17) with (2.7), it is readily seen that

which is the first identity in (3.12). Note that both \(q_k^{(\alpha ,M)}\) and \(P_{k}^{\tilde{\nu }}\) are monic polynomials of degree k.
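The simplification above rests on Gauss's multiplication formula \(\Gamma (nz)=(2\pi )^{(1-n)/2}n^{nz-1/2}\prod _{k=0}^{n-1}\Gamma (z+k/n)\) [41, formula 5.5.6] at \(z=i+\frac{\alpha +1}{M}\), \(n=M\). The Python sketch below (standard library only; function names are ours) checks it numerically in logarithmic form. It also checks the grouped form \(\prod _{j=1}^{M}\Gamma \left( i+\frac{\alpha +j}{M}\right) =(2\pi )^{(M-1)/2}M^{1/2-Mi-\alpha -1}\,\Gamma (Mi+\alpha +1)\), which is how the formula would enter if \(\tilde{\nu }_j+1=(\alpha +j)/M\); that reading of (3.13) is our assumption, consistent with the stated choice of z but not taken from the display itself.

```python
import math


def log_gauss_rhs(z: float, n: int) -> float:
    """log of (2*pi)^((1-n)/2) * n^(n*z - 1/2) * prod_{k=0}^{n-1} Gamma(z + k/n), for z > 0."""
    total = 0.5 * (1 - n) * math.log(2 * math.pi) + (n * z - 0.5) * math.log(n)
    for k in range(n):
        total += math.lgamma(z + k / n)
    return total


def gauss_mismatch(i: int, alpha: float, M: int) -> float:
    """Relative mismatch in Gamma(M z) = RHS at z = i + (alpha + 1)/M, alpha > -1."""
    z = i + (alpha + 1) / M
    return abs(math.expm1(log_gauss_rhs(z, M) - math.lgamma(M * z)))


def grouped_mismatch(i: int, alpha: float, M: int) -> float:
    """Relative mismatch in
    prod_{j=1}^M Gamma(i + (alpha+j)/M) = (2*pi)^((M-1)/2) * M^(1/2 - M*i - alpha - 1) * Gamma(M*i + alpha + 1).
    The left-hand arguments are exactly z + k/M, k = 0..M-1, with z = i + (alpha+1)/M."""
    lhs = sum(math.lgamma(i + (alpha + j) / M) for j in range(1, M + 1))
    rhs = (0.5 * (M - 1) * math.log(2 * math.pi)
           + (0.5 - M * i - alpha - 1) * math.log(M)
           + math.lgamma(M * i + alpha + 1))
    return abs(math.expm1(lhs - rhs))


for M in (1, 2, 3, 5):
    worst = max(
        max(gauss_mismatch(i, a, M), grouped_mismatch(i, a, M))
        for i in range(8)
        for a in (-0.5, 0.0, 1.7)
    )
    print(M, worst)
```

Comparing logarithms via `lgamma` avoids overflow of \(\Gamma (Mi+\alpha +1)\) for larger i; values of `worst` at the level of floating-point rounding indicate agreement.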

To show the second identity in (3.12), we obtain from (3.7) and (3.13) that

where we have made use of (3.16) again in the second step. This, together with (2.14), implies that

as desired.

Finally, the relation (3.14) follows immediately from a combination of (1.4), (3.5) and (3.12). It can also be checked directly from the double contour integral representations (1.11) and (3.11) with the help of the multiplication formula (3.16).

This completes the proof of Theorem 3.1. \(\square \)
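The first identity in (3.12) can be illustrated at the level of coefficients with a Python sketch, under explicit assumptions because the displays (1.3), (3.12) and (3.13) are not reproduced here: (i) \(\tilde{\nu }_j=\frac{\alpha +j}{M}-1\), \(j=1,\ldots ,M\); (ii) \(q_k^{(\alpha ,M)}(y)=(-1)^k\Gamma (Mk+\alpha +1)\sum _{i=0}^k(-1)^i\binom{k}{i}\frac{y^i}{\Gamma (Mi+\alpha +1)}\), a monic Konhauser-type polynomial for which \(q_k(x^M)\) is orthogonal to \(1,x,\ldots ,x^{k-1}\) with respect to \(x^\alpha e^{-x}\) on \((0,\infty )\); (iii) \(P_k^{\tilde{\nu }}\) has the hypergeometric form \((-1)^k\prod _j(\tilde{\nu }_j+1)_k\,{}_1F_M(-k;\tilde{\nu }+1;y)\). Under these assumptions the multiplication formula gives \(q_k^{(\alpha ,M)}(x)=M^{Mk}P_k^{\tilde{\nu }}(x/M^M)\); this scaling is our own computation and may differ from the normalization in (3.12). All function names are hypothetical.

```python
import math


def pochhammer(a: float, k: int) -> float:
    """Rising factorial (a)_k = a (a+1) ... (a+k-1)."""
    result = 1.0
    for i in range(k):
        result *= a + i
    return result


def nu_tilde(alpha: float, M: int) -> list[float]:
    """ASSUMED arithmetic sequence nu_j = (alpha + j)/M - 1, j = 1, ..., M."""
    return [(alpha + j) / M - 1 for j in range(1, M + 1)]


def q_coeffs(k: int, alpha: float, M: int) -> list[float]:
    """ASSUMED monic q_k^{(alpha,M)}(y) = (-1)^k Gamma(Mk+alpha+1) sum_i (-1)^i binom(k,i) y^i / Gamma(Mi+alpha+1)."""
    return [
        (-1) ** (k + i) * math.comb(k, i)
        * math.exp(math.lgamma(M * k + alpha + 1) - math.lgamma(M * i + alpha + 1))
        for i in range(k + 1)
    ]


def P_coeffs(k: int, nus: list[float]) -> list[float]:
    """ASSUMED monic P_k^nu(y) = (-1)^k prod_j (nu_j+1)_k 1F_M(-k; nu_1+1, ..., nu_M+1; y)."""
    coeffs = []
    for i in range(k + 1):
        c = float((-1) ** (k + i) * math.comb(k, i))
        for nu in nus:
            c *= pochhammer(nu + 1 + i, k - i)
        coeffs.append(c)
    return coeffs


def identity_mismatch(k: int, alpha: float, M: int) -> float:
    """Max relative gap between the coefficients of q_k(x) and M^{Mk} P_k^{nu_tilde}(x / M^M).
    The coefficient of x^i on the right is M^{M(k-i)} times the i-th coefficient of P_k."""
    q = q_coeffs(k, alpha, M)
    P = P_coeffs(k, nu_tilde(alpha, M))
    return max(abs(M ** (M * (k - i)) * P[i] - q[i]) / abs(q[i]) for i in range(k + 1))


def q_pairing(k: int, l: int, alpha: float, M: int) -> tuple[float, float]:
    """int_0^oo x^l q_k(x^M) x^alpha e^{-x} dx via Gamma moments, plus the sum of |terms|."""
    terms = [c * math.gamma(M * i + alpha + l + 1) for i, c in enumerate(q_coeffs(k, alpha, M))]
    return sum(terms), sum(abs(t) for t in terms)


for M in (1, 2, 3):
    print(M, max(identity_mismatch(k, 0.4, M) for k in range(7)))
```

The orthogonality check in `q_pairing` is there to confirm that assumption (ii) is at least a consistent reading of (1.3); the coefficient comparison then shows how Gauss's formula turns the Pochhammer products in \(P_k^{\tilde{\nu }}\) into the single gamma ratios of \(q_k^{(\alpha ,M)}\).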

Remark 3.1

By setting \(x=y\) in (3.14), we see that the one-point densities \(K_n^{(\alpha ,M)}(x,x)\) and \(K_n^{\tilde{\nu }}(x,x)\) are related via an Mth root transformation. Letting \(n\rightarrow \infty \), this provides another explanation for the appearance of the Fuss–Catalan distribution in biorthogonal Laguerre ensembles, since the Fuss–Catalan distribution is well known to characterize the limiting mean distribution of squared singular values of products of random matrices [5, 7, 40]. As a concrete example, consider the case \(\theta =M=2\). According to [33, 54], the empirical measure of the scaled squared singular values of the product of two Ginibre matrices converges weakly and in moments to a probability measure on the real axis with density

On the other hand, by [36] (see also [13]), the limiting mean distribution of the scaled particles in the biorthogonal Laguerre ensemble (1.1) with \(\theta =2\) has density

Clearly, the density (3.20) can be reduced to (3.19) via a change of variable \(x\rightarrow 2\sqrt{x}\), as expected.

Remark 3.2

From [33, Theorem 5.3], it follows that, with \(K_n^\nu \) defined in (3.5) and \(\nu _1, \ldots , \nu _M\) being fixed,

uniformly for x, y in compact subsets of the positive real axis, where

and where \(\Sigma \) is a contour that starts at \(+\infty \) in the upper half plane, encircles the positive real axis, and returns to \(+\infty \) in the lower half plane, with \(\mathrm {Re}\,t>-1/2\) for \(t\in \Sigma \). This fact, together with our relation (3.14) and Borodin's hard edge scaling limits (1.5), implies that

The identity (3.23) was first proved in [32], where the authors gave a direct proof by noting that the Wright generalized Bessel functions \(J_{a,b}\) defined in (1.6) can be expressed in terms of Meijer G-functions if b is rational. Since it is easily seen from (3.22) and the multiplication formula (3.16) that

where \(K^{(\alpha ,M)}(x,y)\) is given in (1.14), the proof presented in [32] also gives a direct proof of identity (1.18) if \(\theta =M\in \mathbb {N}\). To show (1.18) for general \(\theta \ge 1\), we first observe from (1.16), the residue theorem and (1.6) that

Similarly, by deforming the vertical line in (1.15) to be a loop starting from \(-\infty \) in the lower half plane and returning to \(-\infty \) in the upper half plane which encircles the negative real axis, we again obtain from the residue theorem that

Combining the above two formulas with (1.14) and (1.17) yields (1.18).

References

Adler, M., van Moerbeke, P., Wang, D.: Random matrix minor processes related to percolation theory. Random Matrices Theory Appl. 2, 1350008 (2013)

Akemann, G., Ipsen, J.R., Kieburg, M.: Products of rectangular random matrices: singular values and progressive scattering. Phys. Rev. E 88, 052118 (2013)

Akemann, G., Ipsen, J.R.: Recent exact and asymptotic results for products of independent random matrices. arXiv:1502.01667 (preprint)

Akemann, G., Kieburg, M., Wei, L.: Singular value correlation functions for products of Wishart random matrices. J. Phys. A 46, 275205 (2013)

Alexeev, N., Götze, F., Tikhomirov, A.: Asymptotic distribution of singular values of powers of random matrices. Lith. Math. J. 50, 121–132 (2010)

Anderson, G.W., Guionnet, A., Zeitouni, O.: An Introduction to Random Matrices. Cambridge Studies in Advanced Mathematics, vol. 118. Cambridge University Press, Cambridge (2010)

Banica, T., Belinschi, S.T., Capitaine, M., Collins, B.: Free Bessel laws. Can. J. Math. 63, 3–37 (2011)

Beals, R., Szmigielski, J.: Meijer \(G\)-functions: a gentle introduction. Not. Am. Math. Soc. 60, 866–872 (2013)

Biane, P.: Processes with free increments. Math. Z. 227, 143–174 (1998)

Borodin, A.: Biorthogonal ensembles. Nucl. Phys. B 536, 704–732 (1999)

Carlitz, L.: A note on certain biorthogonal polynomials. Pac. J. Math. 24, 425–430 (1968)

Cheliotis, D.: Triangular random matrices and biorthogonal ensembles. arXiv:1404.4730 (preprint)

Claeys, T., Romano, S.: Biorthogonal ensembles with two-particle interactions. Nonlinearity 27, 2419–2443 (2014)

Coussement, E., Coussement, J., Van Assche, W.: Asymptotic zero distribution for a class of multiple orthogonal polynomials. Trans. Am. Math. Soc. 360, 5571–5588 (2008)

Desrosiers, P., Forrester, P.J.: A note on biorthogonal ensembles. J. Approx. Theory 152, 167–187 (2008)

Dykema, K., Haagerup, U.: DT-operators and decomposability of Voiculescu’s circular operator. Am. J. Math. 126, 121–189 (2004)

Erdélyi, A., Magnus, W., Oberhettinger, F., Tricomi, F.G.: Higher Transcendental Functions. Based, in Part, on Notes left by Harry Bateman, vol. 3. McGraw-Hill Book Company, Inc., New York (1955)

Forrester, P.J.: The spectrum edge of random matrix ensembles. Nucl. Phys. B 402, 709–728 (1993)

Forrester, P.J.: Log-Gases and Random Matrices. Princeton University Press, Princeton (2010)

Forrester, P.J.: Eigenvalue statistics for product complex Wishart matrices. J. Phys. A 47, 345202 (2014)

Forrester, P.J., Liu, D.-Z.: Raney distributions and random matrix theory. J. Stat. Phys. 158, 1051–1082 (2015)

Forrester, P.J., Wang, D.: Muttalib-Borodin ensembles in random matrix theory—realisations and correlation functions. arXiv:1502.07147 (preprint)

Genin, R., Calvez, L.-C.: Sur les fonctions génératrices de certains polynômes biorthogonaux. C. R. Acad. Sci. Paris Sér. A–B 268, A1564–A1567 (1969)

Genin, R., Calvez, L.-C.: Sur quelques propriétés de certains polynômes biorthogonaux. C. R. Acad. Sci. Paris Sér. A–B 269, A33–A35 (1969)

Haagerup, U., Möller, S.: The law of large numbers for the free multiplicative convolution. In: Operator Algebra and Dynamics. Springer Proceedings in Mathematics & Statistics, vol. 58, pp. 157–186. Springer, Heidelberg (2013)

Ismail, M.E.H.: Classical and Quantum Orthogonal Polynomials in One Variable. Encyclopedia of Mathematics and its Applications, vol. 98. Cambridge University Press, Cambridge (2005)

Johansson, K.: Random Matrices and Determinantal Processes. Mathematical Statistical Physics (Lecture Notes of the Les Houches Summer School). Elsevier, Amsterdam (2006)

Kieburg, M., Kuijlaars, A.B.J., Stivigny, D.: Singular value statistics of matrix products with truncated unitary matrices. arXiv:1501.03910 (preprint), to appear in Int. Math. Res. Notices

Konhauser, J.D.E.: Some properties of biorthogonal polynomials. J. Math. Anal. Appl. 11, 242–260 (1965)

Konhauser, J.D.E.: Biorthogonal polynomials suggested by the Laguerre polynomials. Pac. J. Math. 21, 303–314 (1967)

Kuijlaars, A.B.J.: Universality. In: Akemann, G. (ed.) The Oxford Handbook of Random Matrix Theory. Oxford University Press, Oxford (2011)

Kuijlaars, A.B.J., Stivigny, D.: Singular values of products of random matrices and polynomial ensembles. Random Matrices Theory Appl. 3, 1450011 (2014)

Kuijlaars, A.B.J., Zhang, L.: Singular values of products of Ginibre random matrices, multiple orthogonal polynomials and hard edge scaling limits. Commun. Math. Phys. 332, 759–781 (2014)

Liu, D.-Z., Song, C., Wang, Z.-D.: On explicit probability densities associated with Fuss-Catalan numbers. Proc. Am. Math. Soc. 139, 3735–3738 (2011)

Liu, D.-Z., Wang, D., Zhang, L.: Bulk and soft-edge universality for singular values of products of Ginibre random matrices. arXiv:1412.6777 (preprint), to appear in Ann. Inst. Henri Poincaré Probab. Stat.

Lueck, T., Sommers, H.-J., Zirnbauer, M.R.: Energy correlations for a random matrix model of disordered bosons. J. Math. Phys. 47, 103304 (2006)

Luke, Y.L.: The Special Functions and Their Approximations. Academic Press, New York (1969)

Muttalib, K.A.: Random matrix models with additional interactions. J. Phys. A 28, L159–L164 (1995)

Neuschel, T.: Plancherel-Rotach formulae for average characteristic polynomials of products of Ginibre random matrices and the Fuss-Catalan distribution. Random Matrices Theory Appl. 3, 1450003 (2014)

Nica, A., Speicher, R.: Lectures on the Combinatorics of Free Probability. London Mathematical Society Lecture Note Series, vol. 335. Cambridge University Press, Cambridge (2006)

Olver, F.W.J., Lozier, D.W., Boisvert, R.F., Clark, C.W. (eds.): NIST Handbook of Mathematical Functions. Cambridge University Press, Cambridge (2010). Print companion to the NIST Digital Library of Mathematical Functions

Penson, K.A., Życzkowski, K.: Product of Ginibre matrices: Fuss-Catalan and Raney distributions. Phys. Rev. E 83, 061118 (2011)

Prabhakar, T.R.: On a set of polynomials suggested by Laguerre polynomials. Pac. J. Math. 35, 213–219 (1970)

Preiser, S.: An investigation of biorthogonal polynomials derivable from ordinary differential equations of the third order. J. Math. Anal. Appl. 4, 38–64 (1962)

Soshnikov, A.: Determinantal random point fields. Russ. Math. Surv. 55, 923–975 (2000)

Spencer, L., Fano, U.: Penetration and diffusion of X-rays. Calculation of spatial distribution by polynomial expansion. J. Res. Nat. Bur. Stand. 46, 446–461 (1951)

Srivastava, H.M.: On the Konhauser sets of biorthogonal polynomials suggested by the Laguerre polynomials. Pac. J. Math. 49, 489–492 (1973)

Strahov, E.: Differential equations for singular values of products of Ginibre random matrices. J. Phys. A 47, 325203 (2014)

Tracy, C.A., Widom, H.: Level-spacing distributions and the Airy kernel. Commun. Math. Phys. 159, 151–174 (1994)

Tracy, C.A., Widom, H.: Level spacing distributions and the Bessel kernel. Commun. Math. Phys. 161, 289–309 (1994)

Tracy, C.A., Widom, H.: Fredholm determinants, differential equations and matrix models. Commun. Math. Phys. 163, 33–72 (1994)

Van Assche, W., Yakubovich, S.B.: Multiple orthogonal polynomials associated with Macdonald functions. Integr. Transform. Spec. Funct. 9, 229–244 (2000)

Van Assche, W.: Mehler-Heine asymptotics for multiple orthogonal polynomials. arXiv:1408.6140 (preprint)

Zhang, L.: A note on the limiting mean distribution of singular values for products of two Wishart random matrices. J. Math. Phys. 54, 083303 (2013)

Zhang, L., Román, P.: The asymptotic zero distribution of multiple orthogonal polynomials associated with Macdonald functions. J. Approx. Theory 163, 143–162 (2011)

Acknowledgments

The author thanks Peter Forrester and Dong Wang for helpful communications and for providing me with an early copy of the preprint [22] on a related study of the Laguerre biorthogonal ensemble upon completion of the present work. The author also thanks the anonymous referees for their careful reading and constructive suggestions. This work is partially supported by The Program for Professor of Special Appointment (Eastern Scholar) at Shanghai Institutions of Higher Learning (No. SHH1411007) and by Grant EZH1411513 from Fudan University.

Ethics declarations

Conflict of interest

The author declares that he has no conflict of interest.

Appendix: The Meijer G-Function

For the convenience of the reader, we give in this appendix a brief introduction to the Meijer G-function, including its definition and some properties used in this paper.

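By definition, the Meijer G-function is given by the following contour integral in the complex plane:

$$\begin{aligned} G^{m,n}_{p,q}\left( {a_1,\ldots ,a_p \atop b_1,\ldots ,b_q}\,\Big |\,z\right) =\frac{1}{2\pi i}\int _{\gamma }\frac{\prod _{j=1}^{m}\Gamma (b_j+u)\prod _{j=1}^{n}\Gamma (1-a_j-u)}{\prod _{j=m+1}^{q}\Gamma (1-b_j-u)\prod _{j=n+1}^{p}\Gamma (a_j+u)}\,z^{-u}\,\mathrm {d}u, \end{aligned}$$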

where \(\Gamma \) denotes the usual gamma function and the branch cut of \(z^{-u}\) is taken along the negative real axis. It is also assumed that

- \(0\le m\le q\) and \(0\le n \le p\), where m, n, p and q are integers;
- the real or complex parameters \(a_1,\ldots ,a_p\) and \(b_1,\ldots ,b_q\) satisfy the conditions

$$\begin{aligned} a_k-b_j \ne 1,2,3, \ldots , \quad \text {for }k=1,2,\ldots ,n\text { and }j=1,2,\ldots ,m, \end{aligned}$$

i.e., none of the poles of \(\Gamma (b_j+u)\), \(j=1,2,\ldots ,m\), coincides with any pole of \(\Gamma (1-a_k-u)\), \(k=1,2,\ldots ,n\).

The contour \(\gamma \) is chosen so that all the poles of \(\Gamma (b_j+u)\), \(j=1,\ldots ,m\), lie to the left of the path, while all the poles of \(\Gamma (1-a_k-u)\), \(k=1,\ldots ,n\), lie to the right; it is usually taken to go from \(-i\infty \) to \(i\infty \). For more details, we refer to [37, 41].
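The definition becomes concrete when \(n=p=0\) and \(m=q\). The integrand is then \(\prod _{j=1}^q\Gamma (b_j+u)\,z^{-u}\), and closing \(\gamma \) to the left picks up the poles at \(u=-b_j-l\), \(l=0,1,2,\ldots \), where \(\Gamma (b_j+u)\) has residue \((-1)^l/l!\); these poles are simple provided no two \(b_j\) differ by an integer. The Python sketch below (standard library only; names ours) sums these residues and compares the result with the closed form \(G^{M,0}_{0,M}\left( {- \atop c,\,c+\frac{1}{M},\ldots ,c+\frac{M-1}{M}}\,\big |\,z\right) =(2\pi )^{(M-1)/2}M^{-1/2}z^ce^{-Mz^{1/M}}\). That closed form is our own derivation (take Mellin transforms and apply Gauss's multiplication formula), not a statement from the text; for \(M=1\) it reduces to \(z^ce^{-z}\).

```python
import math


def meijer_g_residue_sum(b: list[float], z: float, terms: int = 80) -> float:
    """G^{q,0}_{0,q}(-; b_1, ..., b_q | z) for z > 0, q = len(b), as a sum of residues.

    Closing the contour to the left encloses the poles of Gamma(b_j + u) at
    u = -b_j - l (l = 0, 1, 2, ...), where Gamma(b_j + u) has residue (-1)^l / l!.
    Assumes no two b_j differ by an integer, so every pole is simple.
    """
    total = 0.0
    for j, bj in enumerate(b):
        for l in range(terms):
            term = (-1) ** l / math.factorial(l) * z ** (bj + l)  # residue times z^{-u}
            for i, bi in enumerate(b):
                if i != j:
                    term *= math.gamma(bi - bj - l)  # remaining Gamma factors at the pole
            total += term
    return total


def arithmetic_params(c: float, M: int) -> list[float]:
    """Parameters c, c + 1/M, ..., c + (M-1)/M forming an arithmetic sequence."""
    return [c + k / M for k in range(M)]


def arithmetic_closed_form(c: float, M: int, z: float) -> float:
    """Derived closed form (2*pi)^((M-1)/2) * M^(-1/2) * z^c * exp(-M z^(1/M))."""
    return (2 * math.pi) ** ((M - 1) / 2) / math.sqrt(M) * z**c * math.exp(-M * z ** (1 / M))


for M in (1, 2, 3):
    for z in (0.1, 0.7, 2.0):
        series = meijer_g_residue_sum(arithmetic_params(0.3, M), z)
        print(M, z, series, arithmetic_closed_form(0.3, M, z))
```

A plain residue sum is adequate here because, for \(G^{q,0}_{0,q}\), the terms decay roughly like \(z^l/(l!)^q\); it is not a general-purpose Meijer G evaluator (coalescing poles, \(n>0\) or \(p>0\) would need more care).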

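Many known special functions are special cases of the Meijer G-function. For instance, with the generalized hypergeometric function \({\; }_p F_q\) given in (3.9), one has [41, formula 16.18.1]

$$\begin{aligned} {}_pF_q(a_1,\ldots ,a_p;b_1,\ldots ,b_q;z)=\frac{\prod _{k=1}^{q}\Gamma (b_k)}{\prod _{k=1}^{p}\Gamma (a_k)}\,G^{1,p}_{p,q+1}\left( {1-a_1,\ldots ,1-a_p \atop 0,1-b_1,\ldots ,1-b_q}\,\Big |\,-z\right) . \end{aligned}$$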

This, together with the fact that

gives us

Cite this article

Zhang, L. Local Universality in Biorthogonal Laguerre Ensembles. J Stat Phys 161, 688–711 (2015). https://doi.org/10.1007/s10955-015-1353-3
