Abstract

We consider a new class of non Markovian processes with a countable number of interacting components. At each time unit, each component can take two values, indicating if it has a spike or not at this precise moment. The system evolves as follows. For each component, the probability of having a spike at the next time unit depends on the entire time evolution of the system after the last spike time of the component. This class of systems extends in a non trivial way both the interacting particle systems, which are Markovian (Spitzer in Adv. Math. 5:246–290, 1970) and the stochastic chains with memory of variable length which have finite state space (Rissanen in IEEE Trans. Inf. Theory 29(5):656–664, 1983). These features make it suitable to describe the time evolution of biological neural systems. We construct a stationary version of the process by using a probabilistic tool which is a Kalikow-type decomposition either in random environment or in space-time. This construction implies uniqueness of the stationary process. Finally we consider the case where the interactions between components are given by a critical directed Erdös-Rényi-type random graph with a large but finite number of components. In this framework we obtain an explicit upper-bound for the correlation between successive inter-spike intervals which is compatible with previous empirical findings.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

A biological neural system has the following characteristics. It is a system with a huge (about 1011) number of interacting components, the neurons. This system evolves in time, and its time evolution is not described by a Markov process [6]. In particular, the times between successive spikes of a single neuron are not exponentially distributed (see, for instance, [5]).

This is the motivation for the introduction of the class of models that we consider in the present paper. To cope with the problem of the large number of components it seems natural to consider infinite systems with a countable number of components. In this new class of stochastic systems, each component depends on a variable length portion of the history. Namely, the spiking probability of a given neuron depends on the accumulated activity of the system after its last spike time. This implies that the system is not Markovian. The time evolution of each single neuron looks like a stochastic chain with memory of variable length, even if the influence from the past is actually of infinite order. This class of systems represents a non trivial extension of the class of interacting particle systems introduced in 1970 by Spitzer [25]. It is also a non trivial extension of the class of stochastic chains with memory of variable length introduced in 1983 by Rissanen [24].

The particular type of dependence from the past considered here is motivated both by empirical as well as theoretical considerations.

From a theoretical point of view, Cessac [6] suggested the same kind of dependence from the past. In the framework of leaky integrate and fire models, he considers a system with a finite number of membrane potential processes. The image of this process in which only the spike times are recorded is a stochastic chain of infinite order where each neuron has to look back into the past until its last spike time. Cessac’s process is a finite dimensional version of the model considered here.

Finite systems of point processes in discrete or continuous time aiming to describe biological neural systems have a long history whose starting points are probably Hawkes [19] from a probabilistic point of view and Brillinger [5] from a statistical point of view, see also the interesting paper by Krumin et al. [21] for a review of the statistical aspects. For non-linear Hawkes processes, but in the frame of a finite number of components, Brémaud and Massoulié [3] address the problem of existence, uniqueness and stability. Møller and coauthors propose a perfect simulation algorithm in the linear case, see [22]. In spite of the great interest in Hawkes processes during the last years, especially in association with modeling problems in finance and biology, all the studies are reduced to the case of systems with a finite number of components. Here we propose a new approach which enables us to deal also with infinite systems with a countable number of components, without any assumption of the type linearity or attractiveness.

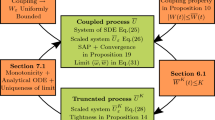

This paper is organized as follows. In Sect. 2 we state two theorems proving the existence and uniqueness of infinite systems of interacting chains with memory of variable length, under suitable conditions. Our main technical tool is a Kalikow-type decomposition of the infinite order transition probabilities which is a non trivial extension of previous results of the authors in the case of Markovian systems, cf. [15]. The decomposition considered here has two major differences with respect to what has been done before. Firstly this is due to the non-Markovian nature of the system. Secondly, and most importantly, the structure of the transition laws leads to the need of either considering a decomposition depending on a random environment or considering a space-time decomposition. Using the Kalikow-type decomposition we prove the existence, the uniqueness as well as a property of loss of memory of the stationary process.

In Sect. 3 we study the correlation between successive inter-spike intervals (ISI). This aims at explaining empirical results presented in the neuroscientific literature. Gerstner and Kistler [16], quoting Goldberg et al. [17], observe that in many experimental setups the empirical correlation between successive inter-spike intervals is very small “indicating that a description of spiking as a stationary renewal process is a good approximation”. However, Nawrot et al. [23] find statistical evidence that neighboring inter-spike intervals are correlated, having negative correlation. We show that we can account for these apparently contradictory facts within our model. This requires the introduction of a new setup in which the synaptic weights define a critical directed Erdös-Rényi random graph with a large but finite number of components. We obtain in Theorem 3 an explicit upper bound for the correlations involving the number of components of the system, as well as the typical length of one inter-spike interval. For a system having a large number of components, our result is compatible with the discussion in [16]. Gerstner and Kistler [16] deduce from this that spiking can be described by a renewal process. However, for systems with a small number of components, the correlation might as well be quite big, as reported by Nawrot et al. (2007) who show that neighboring inter-spike intervals are negatively correlated. Therefore, both features are captured by our model, depending on the scale we are working in.

The proofs of all the results are presented in Sects. 4, 5 and 6.

2 Systems of Interacting Chains with Memory of Variable Length: Existence, Uniqueness and Loss of Memory

We consider a stochastic chain \((X_{t} )_{ t \in {\mathbb{Z}}}\) taking values in {0,1}I for some countable set of neurons I, defined on a suitable probability space \(( \varOmega, \mathcal{A} , P ) \). For each neuron i at each time \(t \in {\mathbb{Z}}\), X t (i)=1 if neuron i has a spike at that time t, and X t (i)=0 otherwise. The global configuration of neurons at time t is denoted X t =(X t (i),i∈I). We define the filtration

For each neuron i∈I and each time \(t \in {\mathbb{Z}}\) let

be the last spike time of neuron i strictly before time t. We introduce a family of “synaptic” weights \(W_{j \to i} \in {\mathbb{R}}\), for j≠i, W j→j =0 for all j. W j→i is the “synaptic weight of neuron j on neuron i”. We suppose that the synaptic weights have the following property of uniform summability

Now we are ready to introduce the dynamics of our process. At each time t, conditionally on the whole past, sites update independently. This means that for any finite subset J⊂I, a i ∈{0,1},i∈J, we have

Moreover, the probability of having a spike in neuron i at time t is given by

where \(\phi_{i} : {\mathbb{R}}\times {\mathbb{N}}\to[ 0, 1 ]\) and \(g_{j} : {\mathbb{N}}\to {\mathbb{R}}_{+} \) are measurable functions for all i∈I,j∈I. We assume that ϕ i is uniformly Lipschitz continuous, i.e. there exists a positive constant γ such that for all \(s, s' \in {\mathbb{R}}\), \(n \in {\mathbb{N}}\), i∈I,

Observe that in the case where the function ϕ i is increasing with respect to the first coordinate, the contribution of components j is either excitatory or inhibitory, depending on the sign of W j→i . This is reminiscent of the situation in biological neural nets in which neurons can either stimulate or inhibit the expression of other neurons.

It is natural to ask if there exists at least one stationary chain which is consistent with the above dynamics, and if so, if this process is unique. In what follows we shall construct a probability measure P on the configuration space \(\varOmega= \{ 0 , 1 \}^{ I \times {\mathbb{Z}}} \) of all space-time configurations of spike trains, equipped with its natural sigma algebra \(\mathcal{A} \). On this probability space, we consider the canonical chain \((X_{t})_{ t \in {\mathbb{Z}}} \) where for each neuron i and each time t, X t (i)(ω)=ω t (i) is the projection of ω onto the (i,t) coordinate of ω.

For each neuron i, we introduce

the set of all neurons that have a direct influence on neuron i. Notice that in our model, \(\mathcal{V}_{\cdot\to i} \) can be both finite or infinite. We fix a growing sequence (V i (k)) k≥−1 of subsets of I such that V i (−1)=∅, V i (0)={i}, V i (k)⊂V i (k+1), V i (k)≠V i (k+1) if \(V_{i} (k ) \neq\mathcal{V}_{\cdot\to i} \cup\{ i \}\) and \(\bigcup_{k} V_{i} (k) =\mathcal{V}_{\cdot\to i} \cup\{ i \} \).

We consider two types of systems. The first system incorporates spontaneous spike times, see condition (2.6) below. These spontaneous spikes can be interpreted as external stimulus or, alternatively, as autonomous activity of the brain. The existence and uniqueness of this class is granted in our first theorem.

Theorem 1

(Existence and uniqueness in systems with spontaneous spikes)

Grant conditions (2.2) and (2.5). Assume that the functions ϕ i and g j satisfy moreover the following assumptions:

-

1.

There exists δ>0 such that for all \(i \in I, s \in {\mathbb{R}}, n \in {\mathbb{N}}\),

$$ \phi_i ( s,n ) \geq\delta. $$(2.6) -

2.

We have that

$$ G (1) + \sum_{ n = 2 }^\infty( 1 - \delta)^{ n - 2} n^2 G ( n) < \infty, $$(2.7)where \(G ( n) = \sup_{i} \sum_{ m = 1}^{n} g_{i} (m) \) and where δ is as in condition 1.

-

3.

We have fast decay of the synaptic weights, i.e.

$$ \sup_i \sum _{ k \geq1 } \big| V_i (k ) \big| \biggl( \sum _{ j \notin V_i (k - 1 ) } |W_{j \to i }| \biggr) < \infty. $$(2.8)

Then under these conditions (2.6)–(2.8), there exists a critical parameter δ ∗∈ ]0,1[ such that for any δ>δ ∗, there exists a unique probability measure P on \(\{ 0 , 1 \}^{ I \times {\mathbb{Z}}} \), under which the canonical chain satisfies (2.3) and (2.4).

Remark 1

The stochastic chain \((X_{t})_{t \in {\mathbb{Z}}}\) introduced in Theorem 1 is a chain having memory of infinite order (cf. [1, 4, 8, 9, 18, 20]). The setup we consider here extends what has been done in the above cited papers. First of all, the chain we consider takes values in the infinite state space {0,1}I. Moreover, in Theorem 1 no summability assumption is imposed on the functions g j . In particular, the choice g j (t)≡1 is possible. This implies that the specification of the chain is not continuous. More precisely, introducing

where \(L_{t}^{i} (x) = \sup\{ s < t : x_{s} (i ) = 1 \} \), we have that

in the case g j (t)≡1 for all j, which can be seen by taking configurations x and y such that \(L_{t}^{i} (x) < t - k \) and \(L_{t}^{i} (y ) < t-k \). A similar type of discontinuity has been considered in [14] for stochastic chains with memory of variable length taking values in a finite alphabet.

As an illustration of Theorem 1 we give the following example of a system with interactions of infinite range.

Example 1

We give an example of a system satisfying the assumptions of Theorem 1. Take \(I = {\mathbb{Z}}^{d} \), g j (s)=1 for all j,s, and \(W _{ i \to j } = \frac{ 1 }{ \|j- i\|_{1}^{2 d + \alpha} }\) for some fixed α>1, where ∥⋅∥1 is the L 1-norm of \({\mathbb{Z}}^{d} \). In this case, if we choose \(V_{i} (k ) = \{ j \in {\mathbb{Z}}^{d} = \| j - i \| _{1} \leq k \}\), we have |V i (k)|=(k+1)d, and condition (2.8) is satisfied, since

as α>1.

The next theorem deals with the second type of system. Now we don’t assume a minimal spiking rate. But additionally to the fast decay of the synaptic weights we also assume a sufficiently fast decay of the aging factor g j , see condition (2.9) below. This additional assumption implies that the specification of the chain is continuous. This is the main difference with the setup of Theorem 1.

Theorem 2

(Existence and uniqueness in systems with uniformly summable memory)

Suppose that ϕ i (s,n)=ϕ i (s) does not depend on n. Assume conditions (2.2) and (2.5) and suppose moreover that

where γ is given in (2.5).

Then there exists a unique probability measure P on \(\{ 0 , 1 \}^{ I \times {\mathbb{Z}}} \) such that under P, the canonical chain satisfies (2.3) and (2.4).

Now, for any \(s < t \in {\mathbb{Z}}\), let \(X_{s}^{t} (i) = ( X_{s} (i), X_{ s+1} ( i ) , \ldots, X_{t} (i) ) \) the trajectory of X(i) between times s and t. As a byproduct of the proof of Theorems 1 and 2 we obtain the following loss of memory property.

Corollary 1

-

1.

Under the assumptions of either Theorem 1 or Theorem 2, there exists a non increasing function \(\varphi: {\mathbb{N}}\to {\mathbb{R}}_{+} \), such that for any \(0 < s < t \in {\mathbb{N}}\) the following holds. For all i∈I, for all bounded measurable functions \(f : \{ 0, 1 \}^{ [ s, t ] } \to {\mathbb{R}}_{+} \),

$$ \big| E \bigl[ f \bigl( X_s^t (i) \bigr) \big| \mathcal{F}_0 \bigr] - E \bigl[ f \bigl( X_s^t (i) \bigr) \bigr] \big| \leq ( t-s +1 ) \| f \|_\infty\, \varphi(s) . $$(2.10)Moreover, \(\varphi( n) \leq C \frac{1}{n- 1} \) for some fixed constant C.

-

2.

Under the assumptions of Theorem 2, suppose moreover that there exists a constant C>0 such that

$$ g_j (n ) \leq C e^{ - \beta n } \quad \mbox{\textit{and}}\quad \sup_{i } \sum_{ j \notin V_i (n) } | W_{ i \rightarrow j } | \leq C e^{ - \beta n }, $$(2.11)for all \(j \in I , n \in {\mathbb{N}}\), for some β>0.

Then there exists a critical parameter β ∗ such that if β>β ∗, (2.10) holds with

$$ \varphi( s) = C \varrho^s \quad \mbox{\textit{for some }} \varrho\in\,] 0 , 1 [\mbox{ \textit{depending only on} } \beta. $$(2.12)

The proof of Theorem 1 is given in Sect. 4 below. It is based on a conditional Kalikow-type decomposition of the transition probabilities ϕ i , where we decompose with respect to all possible interaction neighborhoods of site i. A main ingredient is the construction of an associated branching process in random environment. The setup of Theorem 2 is conceptually less difficult, since in this case the transition probabilities are continuous. This follows from the summability of the aging factors g j . The proof of Theorem 2 relies on a space-time Kalikow-type decomposition presented in Sect. 5.

Remark 2

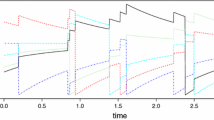

The proofs of both Theorem 1 and Theorem 2 imply the existence of a perfect simulation algorithm of the stochastic chain \((X_{t})_{t \in {\mathbb{Z}}} \). By a perfect simulation algorithm we mean a simulation which samples in a finite space-time window precisely from the stationary law P. In the setup of Theorem 2 the simulation can be implemented analogously to what is presented in Galves et al. [15]. The setup of Theorem 1 requires a conditional approach, conditionally on the realization of the spontaneous spike times.

3 Correlations Between Inter-Spike Intervals in the Critical Directed Erdös-Rényi Random Graph

We consider a finite system consisting of a large number of N neurons with random synaptic weights W i→j ,i≠j. The sequence W i→j ,i≠j, is a sequence of i.i.d. Bernoulli random variables defined on some probability space \(( \tilde{\varOmega}, \tilde{\mathcal{A}} , \tilde{P} ) \) with parameter p=p N , i.e.

where

If we represent this as a directed graph where the directed link i→j is present if and only if W i→j =1, we obtain what is called a “critical directed Erdös-Rényi random graph”. For a general reference on random graphs we refer the reader to the classical book by Bollobás [2].

We put W j→j ≡0 for all j. Notice that the synaptic weights W i→j and W j→i are distinct and independent random variables. Conditionally on the choice of the connectivities W=(W i→j ,i≠j), the dynamics of the chain are then given by

Here we suppose that ϕ i is a function only of the accumulated and weighted number of spikes coming from interacting neurons, but does not depend directly on the time elapsed since the last spike.

P W denotes the conditional law of the process, conditioned on the choice of W. We write \({\mathbb{P}}\) for the annealed law where we also average with respect to the random weights, i.e. \({\mathbb{P}}= \tilde{E} [ P^{W} ( \cdot) ] \), where \(\tilde{E} \) denotes the expectation with respect to \(\tilde{P}\).

Fix a neuron i and consider its associated sequence of successive spike times

where

and

Let us fix W. We are interested in the covariance between successive inter-spike intervals

for any k≠0,1. Since the process is stationary, the above covariance does not depend on the particular choice of k. The next theorem shows that neighboring inter-spike intervals are asymptotically uncorrelated as the number of neurons N tends to infinity.

Theorem 3

Assume that (2.5), (2.6) and (2.7) are satisfied. Then there exists a measurable subset \(A \in\tilde{\mathcal{A}} \), such that on A,

where δ is the lower bound appearing in condition (2.6). Moreover,

For large N, if the graph of synaptic weights belongs to the “good” set A, the above result is compatible with the discussion in [16]. Gerstner and Kistler [16] deduce from this that spiking can be described by a renewal process. However, for small N or on A c, the correlation might as well be quite big, as reported by Nawrot et al. (2007) who show that neighboring inter-spike intervals are negatively correlated. Therefore, both features are captured by our model, depending on the scale we are working in.

The proof of the above theorem is given in Sect. 6 below.

4 Conditional Kalikow-Type Decomposition and Proof of Theorem 1

In order to prove existence and uniqueness of a chain consistent with (2.3) and (2.4), we introduce a Kalikow-type decomposition of the infinite order transition probabilities. This type of decomposition was considered in the papers by Ferrari, Maass, Martínez and Ney [13], Comets, Fernández and Ferrari [7] and Galves et al. [15]. All these papers deal with the case in which the transition probabilities are continuous. This is not the case here. We are dealing with a more general case in which the transition probabilities might as well be discontinuous, see the discussion in Remark 1. This makes our approach new and interesting by itself. The new ingredient is a construction of a decomposition depending on a random environment. This random environment is given by the realization of the spontaneous spikes.

More precisely, condition (2.6) allows to introduce a sequence of i.i.d. Bernoulli random variables \((\xi_{t} (i ) , i \in I, t \in {\mathbb{Z}})\) of parameter δ, such that positions and times (i,t) with ξ t (i)=1 are spike times for any realization of the chain. We call these times “spontaneous spike times”. We work conditionally on the choice of \(\xi_{t} (i ) ,t \in {\mathbb{Z}}, i \in I \). In particular we will restrict everything to the state space

which is the space of all configurations compatible with ξ, i.e. all neural systems x such that every spike time of ξ is also a spike time of x. We write

for the last spontaneous spike time of neuron i before time t. Moreover, for \(x \in\mathcal{ S}^{\xi}\), we put \(L_{t}^{i} = L_{t}^{i} (x) = \sup \{ s < t : x_{s} (i ) = 1 \} \).

Consider a couple (i,t) with ξ t (i)=0. In order to prove Theorem 1 we need to introduce the following quantities which depend on the realization of ξ, namely

which is the minimal probability that neuron i spikes at time t, uniformly with respect to all configurations, and

which is the minimal probability that neuron i does not spike at time t.

Notice that for all \(x \in{ \mathcal{S}^{\xi}}\), \(L_{t}^{i} (x) \geq R_{t}^{i} \). Hence in the above formulas, only a finite time window of the configuration x is used, and uniformly in \(x \in{ \mathcal{S}^{\xi}}\), this time window is contained in \((x_{s} (j), R_{t}^{i} \leq s \leq {t- 1} , j \in I ) \). In particular the above quantities are well-defined.

Now fix \(x \in{ \mathcal{S}^{\xi}}\). For any k≥0, we write for short \(x_{L_{t}^{i} }^{t-1} (V_{i}(k)) \) for the space-time configuration

and put

In what follows and whenever there is no danger of ambiguity, we will write for short x(V i (k)) and \(r_{(i,t)}^{[k]} ( a | x (V_{i}(k)))\) or \(r_{(i,t)}^{[k]} ( a |x) \) instead of \(x_{L_{i}^{t} }^{t-1} (V_{i}(k))\) and \(r_{(i,t)}^{[k]} ( a | x_{L_{t}^{i} }^{t-1} (V_{i}(k)))\).

We put

and

Note that λ (i,t)(k)∈[0,1] and that ∑ k≥−1 λ (i,t)(k)=1 almost surely with respect to the realization of \((\xi_{t} (i ) , i \in I, t \in {\mathbb{Z}})\).

Remark 3

The λ (i,t)(k),k≥0, are random variables that depend only on the realization of \((\xi_{t} (i ) , i \in I, t \in {\mathbb{Z}})\). More precisely, for any i,t and k, λ (i,t)(k) is measurable with respect to the sigma-algebra \(\sigma( \xi_{s} (j) , R_{t}^{i} \leq s < t , j \in I )\). We write \(\lambda_{(i,t)}^{\xi}( k) , k \geq0\), in order to emphasize the dependence on the external field ξ.

We introduce the short hand notation

and

The proof of Theorem 1 is based on the following proposition.

Proposition 1

Given ξ, for all (i,t) with ξ t (i)=0, there exists a family of conditional probabilities \((p_{(i,t)}^{[k], \xi} (a|x))_{ k \geq0 } \) satisfying the following properties.

-

1.

For all a, k≥0, \({\mathcal{S}^{\xi}}\ni x \mapsto p_{(i,t)}^{[k], \xi} ( a | x ) \) depends only on the variables \((x_{s} ( j ): L_{t}^{i} \leq s \leq t-1 , j \in V_{i} (k) ) \).

-

2.

For all \(x \in{ \mathcal{S}^{\xi}}\), k≥0, \(p_{(i,t)}^{[k] , \xi} ( 1 | x ) \in[0, 1 ]\), \(p_{(i,t)}^{[k], \xi} ( 0| x ) + p_{(i,t)}^{[k], \xi } ( 1 | x ) = 1 \).

-

3.

For all a,x,k≥0, \(p_{(i,t)}^{[k], \xi} ( a | x ) \) is a \(\sigma( \xi_{s} (j) , R_{t}^{i} \leq s \leq t-1 , j \in I)\)-measurable random variable.

-

4.

For all \(x \in{ \mathcal{S}^{\xi}}\), we have the following convex decomposition.

$$ p_{(i,t)} ( a | x ) = \lambda_{(i,t)} ( -1 ) p_{(i,t) }^{[-1] , \xi} ( a) + \sum_{ k \geq0 } \lambda_{(i,t)} ( k ) p_{(i,t) }^{[k] , \xi} \bigl( a\big| x \bigl( V_i (k) \bigr) \bigr) , $$(4.24)where

$$p_{(i,t) }^{[-1], \xi} ( a) = \frac{ r_{(i,t)}^{[-1]} ( a)}{ \lambda _{(i,t)} ( -1 ) } . $$

From now on, we shall omit the subscript ξ whenever there is no danger of ambiguity and write \(p_{(i,t)}^{[ k ]} \) instead of \(p_{(i,t) }^{[k] , \xi} \).

Remark 4

The decomposition (4.24) of the transition probability p (i,t)(⋅|x) can be interpreted as follows. In a first step, we choose a random spatial interaction range k∈{−1,0,1,…} according to the probability distribution {λ (i,t)(k),k≥−1}. Once the range of the spatial interaction is fixed, we then perform a transition according to \(p_{(i,t) }^{[k]} \) which depends only on the finite space-time configuration \(x_{L_{t}^{i} }^{t-1} (V_{i}(k)) \). A comprehensive introduction to this technique can be found in the lecture notes of Fernández et al. [10].

Example 2

Suppose that all interactions are excitatory, i.e. W j→i ≥0 for all i≠j. Suppose further that g i (s)=1 for all \(s \in {\mathbb{N}}, i \in I\), and that Φ i (s,n)=Φ i (s) does not depend on n and is non-decreasing in s. Then

Hence,

Similarly,

and

Proof of Proposition 1

We have for any N≥1, a∈{0,1} and \(x \in{ \mathcal{S}^{\xi}}\),

where

Now, due to condition (2.5),

as N→∞ due to (2.2). In the above upper bound we used that

almost surely, which is a consequence of (2.7). Therefore we obtain the following decomposition.

Now let

Moreover, for k≥0, put

and for any (i,t),k such that \(\tilde{\lambda}_{(i, t)} (k, x (V_{i} (k ))) > 0\), we define

For (i,t),k such that \(\tilde{\lambda}_{(i, t)} (k, x (V_{i} (k )))= 0\), define \(\tilde {p}_{(i,t)}^{[k]} (a | x(V_{i} (k )) )\) in an arbitrary fixed way. Hence

In (4.27) the factors \(\tilde{\lambda}_{(i,t)} (k, x(V_{i}(k))), k \geq0\), still depend on \(x^{t-1}_{L_{t}^{i}} (V_{i}(k)) \). To obtain the desired decomposition, we must rewrite it as follows.

For any (i,t), take the sequences α (i,t)(k),λ (i,t)(k),k≥−1, as defined in (4.21) and (4.22), respectively. Define the new quantities

and notice that

is the total mass associated to \(r_{(i,t)}^{[k]} (\cdot, x( V_{i} ( k ))) \). From now on and for the rest of the proof we shall write for short α (i,t)(k,x) instead of writing α (i,t)(k,x(V i (k))).

Reading (4.27) again, this means that for any k≥0, we have to use the transition probability \(\tilde{p}_{(i,t)}^{[k]} \) on the interval ]α (i,t)(k−1,x),α (i,t)(k,x)].

By definition of α (i,t)(k) in (4.21), α (i,t)(k) is the smallest total mass associated to \(r_{(i,t)}^{[k]}\), uniformly with respect to all possible neighborhoods x(V i (k)). Hence, in order to get the decomposition (4.24) with weights λ (i,t)(k) not depending on the configuration, we have to define a partition of the interval [0,α (i,t)(k,x)] according to the values of α (i,t)(k) and we have to define probabilities \(p_{(i,t)}^{[k]} \) working on the intervals ]α (i,t)(k−1),α (i,t)(k)]. This can be done as follows.

Fix k≥0 and suppose that for some l′≤l≤k−1,

and therefore

Hence the probability \(p_{(i,t)}^{[k]} \) that has to be defined on the interval ]α (i,t)(k−1),α (i,t)(k)] has to be decomposed according to the above decomposition into sub-intervals. On the first interval ]α (i,t)(k−1),α (i,t)(l′,x)], we have to use the original probability \(\tilde{p}^{[l']}_{(i,t)} \), on each of the intervals ]α (i,t)(m−1,x),α (i,t)(m,x)], we have to use \(\tilde{p}_{(i,t)}^{ [m]} \), and finally on ]α (i,t)(l,x),α (i,t)(k)], we use \(\tilde{p}_{(i,t)}^{ [ l+1 ] } \).

This yields, for any k≥0, the following definition of the conditional finite range probability densities.

Note that by construction, the above defined probability \(p_{(i,t)}^{[k]} \) depends only on the configuration x(V i (l+1)), hence at most on x(V i (k)), since l≤k−1. Multiplying the above formula with λ (i,t)(k) and summing over all k shows that the decomposition (4.27) implies the desired decomposition (4.24). This finishes our proof. □

Thanks to condition (2.5), the following estimate holds. It will be useful in the sequel.

Lemma 1

We have for all k≥1,

We use this estimate in

Proof of Theorem 1

We work conditionally on the realization of the process \(\xi_{t} (i ) , t \in {\mathbb{Z}}, i \in I \). Take a couple \(( i, t) , i \in I , t \in {\mathbb{Z}}\), such that ξ t (i)=0. We have to show that it is possible to decide in a uniquely determined manner if t will be a spike time or not. In order to achieve this decision, we have to calculate p (i,t)(⋅|x), where x is the unknown history of the process.

We will construct a sequence of sets \((C_{n}^{(i,t)})_{n} \), \(C_{n}^{(i,t)} \subset I \times]- \infty, t- 1] \), which contain the sets of sites and anterior spike times that have an influence on the appearance of a spike at time t for neuron i. The choice of these sets is based on the decomposition (4.24).

First, for any couple (j,s) with ξ s (j)=0, we choose, independently from anything else, an interaction neighborhood \(\mathcal {V}_{(j,s)} = V_{j} (k) , k \geq-1 \), with probability \(\lambda _{(j,s)}^{\xi}( k) \). Here, we put V j (−1)=∅. A choice k=−1 for a couple (j,s) implies that we can immediately decide to accept a spike at time s for neuron j with probability

and to reject it with probability

We suppose that the choice of all \(\mathcal{V}_{(j,s)} \) is fixed. Then by (4.24), the decision concerning the couple (i,t) depends on the configuration of the past \(x_{L_{t}^{i} }^{t-1} ( \mathcal{V}_{(i,t)} )\). Since we do not know \(L_{t}^{i} \), we use the a priori estimate on \(L_{t}^{i} \) which is given by \(R_{t}^{i} \). Thus we consider the worst case in which we have to evaluate the past up to time \(R_{t}^{i} \).

In order to decide about the length \(t - L_{t}^{i} \), we have to assign values 0 or 1 to all couples \(( i, s ), R_{t}^{i} < s \leq t \). All these couples are influenced by their associated interaction region \(\mathcal{V}_{ (i, s)} \). So we consider

It is clear that if we know the values of all couples belonging to \(C_{1}^{(i,t)} \) then we are able to assign a value to any \((i, s), R_{t}^{i} < s \leq t \). Therefore, we call \(C_{1}^{(i,t)}\) the set of ancestors of generation 1 of (i,t). Continuing this procedure, any couple \(( j,s) \in C_{1}^{ (i,t)} \) itself has to be replaced by the set of its ancestors \(C_{1}^{(j,s)} \). We put

where \(C_{1}^{(j,s )}\) is defined as in (4.30), and then recursively,

We will show below that almost surely there exists a first time n<∞ such that \(C_{n}^{(i,t)} = \emptyset\). Then necessarily \(C_{n-1}^{(i,t)} \) consists only of couples (j,s) which chose an interaction neighborhood of range −1 or which interact only with couples representing spontaneous spiking. Thus we can decide to accept or to reject a spike for any of them independently of anything else. Once the values associated to all elements of \(C_{n-1}^{(i,t)} \) are known, we can realize all decisions needed in order to attribute values to the elements of \(C_{n-2}^{(i,t)} \). In this way, we will be able to assign values in a recursive way to all ancestor sets up to the first set, \(C_{1 }^{(i,t)} \), which allows us finally to assign a value for neuron i at time t. We call this procedure the forward coloring procedure and we call Y t (i) the value of neuron t at time t obtained at the end of this procedure.

1. We first show that the probability constructed above is the law of X t (i) under the unique invariant measure P. Put \(C_{\infty}^{(i,t)} = \bigcup_{ n \geq1 } C_{n}^{(i,t)} \) and let

Fix some initial space-time configuration \(\eta\in\{ 0, 1 \}^{ I \times- {\mathbb{N}}}\) such that η 0(i)=1 for all i∈I. Let \(X_{t}^{\eta}\) be the chain that evolves according to (2.3) and (2.4), conditionally on \(X_{- \infty}^{0} = \eta \). Recall that Y t (i) is the value obtained at the end of the above described forward coloring procedure. Then for any \(f: \{ 0, 1 \} \to {\mathbb{R}}_{+} \),

But

Hence we obtain that

since \(1_{\{ T^{(i,t)}_{\mathit {STOP}} < t \} } \to1 \) almost surely.

This implies that P is the unique invariant measure of the process.

2. We now show that the above procedure stops after a finite time almost surely. For that sake we put

Our goal is to show that \(N_{\mathit {STOP}}^{(i,t)} < \infty\) almost surely. Notice that this implies that \(T_{\mathit {STOP}}^{(i,t)} < \infty\) as well. In order to do so, let

be the cardinal of the set of ancestors after n steps of the above procedure. Then

As a consequence, it is sufficient to show that \(E( | C_{n}^{(i,t)} |) \to0 \) as n→∞. For that sake we compare \(( | C_{n}^{(i,t)} |)_{n}\) to a branching process in random environment, where the environment is given by the i.i.d. field ξ.

Recall the definition of \(G(n) = \sum_{ m=1}^{n} \sup_{i} g_{i} (m)\) and the upper bound (4.29). Thus for any k≥1,

Let

Notice that \(\bar{\lambda}_{(i,t)} (k) \) depends only on the realization of the i.i.d. field ξ through the value of \(t- R_{t}^{i} \).

Since \(\lambda_{(i,t)} (k ) \leq \bar{\lambda}_{(i,t)} (k) \) for all k≥1, it is possible, by standard branching process coupling arguments, to construct a sequence \(( \bar{C}_{n}^{(i,t)})_{n} \) coupled with the original sequence \(( C_{n}^{(i,t)})_{n} \) satisfying the following properties.

-

1.

\(\bar{C}_{n}^{(i,t)} \) is defined through (4.30) and (4.31) by using the family \((\bar{\lambda}_{(i,t)} (k ) ) \) instead of (λ (i,t)(k)).

-

2.

For all n, \(|C_{n}^{(i,t)}| \leq |\bar{C}_{n}^{(i,t)}|\).

Hence it is sufficient to show that \(E ( |\bar{C}_{n}^{(i,t)} | ) \) tends to 0 as n→∞. In order to do so, we will control the reproduction mean depending on the environment ξ. Here, reproduction mean stands for the mean number of sites belonging to \(\bar{C}_{1}^{(j,s)}\), for any fixed couple (j,s), where the expectation is taken with respect to the choices of the interaction neighborhoods \(\bar{\mathcal{V}}_{(i,s)}\), conditionally on the realization of ξ. More precisely, given \(\bar{C}_{n}^{(i,t)} = c\), the reproduction mean of any couple (j,s) belonging to \(\bar{C}_{n}^{(i,t)} \) is given by

where E ξ denotes expectation conditionally on ξ.

In what follows we upper bound the above expression. We first use that, by definition (4.37), for \(\tilde{s} \leq s\), \(\bar{\lambda}_{(j,\tilde{s})} (k ) \leq \bar{\lambda}_{(j, s)} (k ) \). Therefore,

Recalling the explicit form of \(\bar{\lambda}_{(j,s)} (k) \) in (4.37) and using moreover the upper bound

on the event \(R_{j}^{s} < s- 1 \), we obtain

Observe that \(\bar{m}_{(j,s)}^{ c }\) depends on ξ, but to avoid too cumbersome notation, we omit the superscript ξ.

Taking conditional expectation, conditionally with respect to ξ, we get

Therefore, taking expectation with respect to ξ,

Now note that the event

depends only on \(R_{\tilde{s} }^{k} \). Hence, recalling (4.38), the above event is independent of \(\bar{m}_{(j,s)}^{ \bar{C}_{n-1}^{(i,t)}} \) which depends only on \(s - R_{s}^{j} \). As a consequence,

But

We put

and define

Then

Note that e(δ) is decreasing as a function of δ and tends to 0 for δ→1. In particular, if we put

then e(δ)<1 for all δ≥δ ∗. Now we may iterate (4.40) and (4.43) and obtain

Since e(δ)n→0 as n→∞ for δ≥δ ∗, this implies our result. □

Remark 5

Note that the value of δ ∗ given in (4.44) can be explicitly calculated depending on the specific structure of the aging functions g j (s). For instance, if g j (s)=1 for all j,s, then G(n)=n for all n, and the value of δ ∗ follows from standard evaluations of the third moment of a geometrical distribution.

Remark 6

The above proof uses a non-trivial extension of the so-called Clan of ancestors method employed by Fernàndez et al. [11, 12]. In these papers the authors study the Clan of ancestors of a given vertex (or object) in a random system. They prove that this set is almost surely finite and deduce a perfect simulation algorithm.

Proof of Corollary 1, part 1

We prove the first part of Corollary 1. We keep the notation of the proof of Theorem 1. Put \(C_{\infty}^{(i, t)} = \bigcup_{n} C_{n}^{(i,t)} \). Then the random variable \(T_{\mathit {STOP}}^{(i,t)} \) defined in (4.33) can be trivially upper bounded by

which is the total number of elements appearing in the ancestor process. This is a very rough upper bound on the number of steps that we have to look back into the past in order to choose a value for X i (t).

By construction, the value X i (t) depends on all choices of interaction regions \(\mathcal{V}_{ (j, s)} \) such that \((j, s) \in C_{\infty}^{(i, t)} \) and on the values of the i.i.d. Bernoulli field ξ u for \(t - T_{\mathit {STOP}}^{(i,t)} \leq u \leq t \). Moreover, for any \((j,s) \in C_{\infty}^{(i, t)} \), a random decision has to be made whether to associate the value +1 or 0 to this couple. These decisions can be realized based on a sequence of i.i.d. uniform random variables \((U_{t} (i), i \in I, t \in {\mathbb{Z}})\), which are uniform on [0,1]. As a consequence, X t (i) is measurable with respect to the sigma-algebra

Writing

we deduce that

Let \(A \in {\mathcal{F}}_{0} \). We write E ξ for conditional expectation, conditionally with respect to ξ. Then we have

Clearly, \(f ( X_{s}^{t} (i)) 1_{\{ R > 0 \}} \) and A are independent, which follows from (4.48). Hence

We take expectation with respect to ξ and use the fact that \(E^{\xi}[ f( X_{s}^{t} (i)) 1_{ \{ R > 0 \}} ] \) is measurable with respect to σ{ξ u :1≤u≤t} and P ξ(A) is measurable with respect to σ{ξ u :u≤0}. Hence by independence

and therefore

As a consequence,

Here we have used that {R≤1} is independent of A. Indeed, the event {R≤1} does only depend on choices of \(\mathcal{V}_{ (j,s)} \) and ξ s (j) having time component s≥1. Now we conclude as follows. By definition of R,

Using (4.46), we obtain

since

where we have used (4.45). This implies the result. □

5 A Space-Time Kalikow-Type Decomposition and Proof of Theorem 2

The additional condition (2.9) allows to introduce a space-time Kalikow-type decomposition without the assumption of existence of spontaneous spikes. We will decompose with respect to increasing space-time neighborhoods V i (k)×[−k−1,−1],k≥−1, where V i (−1)=∅. Write \(\mathcal{ S} = \{ 0, 1 \}^{I \times {\mathbb{Z}}} \) for the state space of the process and introduce, analogously to (4.16)–(4.19),

and then for any k≥1,

Putting

we obtain the following space-time Kalikow-type decomposition for

Proposition 2

Under the conditions (2.2), (2.5) and (2.9), for any i∈I, the above defined quantities λ i (k) take values in [0,1] and

Moreover, there exists a family of conditional probabilities \((p_{(i,t)}^{[k]} ( a |x ) )_{ k \geq0 } \) satisfying the following properties.

-

1.

For all a, \(\mathcal{ S} \ni x \mapsto p_{(i,t)}^{[0] } ( a | x ) \) depends only on the variable x t−1(i).

-

2.

For all a, k≥1, \(\mathcal{ S} \ni x \mapsto p_{(i,t)}^{[k] } ( a | x ) \) depends only on the variables (x s (j):t−k−1≤s≤t−1,j∈V i (k)).

-

3.

For all \(x \in\mathcal{ S} \), k≥0, \(p_{(i,t)}^{[k] } ( 1 | x ) \in[0, 1 ]\), \(p_{(i,t)}^{[k] } ( 0| x ) + p_{(i,t)}^{[k]} ( 1 | x ) = 1 \).

-

4.

For all \(x \in\mathcal{ S}\), we have the following convex decomposition

$$ p_{(i,t)} ( a | x ) = \lambda_{i} ( -1 ) p_{(i,t) }^{[-1] } ( a) + \sum_{ k \geq0 } \lambda_{i } ( k ) p_{(i,t) }^{[k] } ( a| x) , $$(5.56)where

$$p_{(i,t) }^{[-1] } ( a) = \frac{ r_{i}^{[-1]} ( a)}{ \lambda_{i} ( -1 ) } . $$

The proof of this proposition follows the lines of the proof of Proposition 1. Moreover, the following estimates hold.

and for all k≥1,

Proof of Theorem 2

We construct again a sequence of sets \((C_{n}^{(i,t)})_{n} \subset I \times\,]- \infty, t- 1] \) which contain the sets of sites and anterior spike times that have an influence on the appearance of a spike at time t for neuron i. The choice of these sets is based on the decomposition (5.56).

First, we choose for any couple (j,s), independently from anything else, a space-time interaction neighborhood \(\mathcal{ O}_{(j,s)} \subset I \times[ - \infty, s- 1 ] \)

Then we put

The process \(| C_{n}^{(i,t)} | \) can be compared to a classical multi-type branching process with reproduction mean

Now, condition (2.9) together with the estimates (5.57) and (5.58) shows that m:=sup i m i <1. As a consequence,

and this finishes the proof. □

Proof of Corollary 1

The proof of the first part of corollary 1 is analogous to the proof under the conditions of Theorem 1. We give the proof of the second part of the corollary. We keep the notation of the proof of Theorem 2. We define the projection on the time coordinate

Define recursively

and let finally

Then as in the proof of the first part of the corollary,

where

We have

where

In what follows, C will denote a constant that might change from line to line, but that does not depend on β.

We wish to compare \(u- T^{(u,t) }_{\mathit {STOP}}\) to the total offspring of a classical Galton-Watson branching process. In order to do so, notice first that using (5.57) and (5.58), we get for any k≥1,

where we have used (2.11). Moreover,

It is immediate to see that for β sufficiently large, \(\sum_{ k \geq0} \bar{\lambda}(k) < 1 \), since

as β→∞. Therefore we can couple \(u- T^{(i,u) }_{\mathit {STOP}} \) with the total offspring \(\mathcal{ T}\) of a classical Galton-Watson process, where each particle has k+1 offspring, k≥0, with probability \(\bar{\lambda}(k ) \), and offspring 0 with probability \(1 - \sum_{ k \geq0 } \bar{\lambda}( k) \). This coupling can be done such that \(u- T^{(i,u) }_{\mathit {STOP}} \leq \mathcal{ T} \). Thus it is sufficient to evaluate the law of the total offspring \(\mathcal{ T}\). For that sake let W n be a random walk starting from 0 at time 0, with step size distribution η, where

Then \(\mathcal{ T} \stackrel{\mathcal{L}}{=} T_{-1} = \inf\{ n : W_{n} = - 1 \} \). Hence for any λ∈ ]0,β[, since u≥s,

where

Now, fix λ=1, then there exists β ∗ such that for all β≥β ∗, φ(1)<1. Putting ϱ=φ(1) and C=ϱ −2 yields the desired result. □

6 Proof of Theorem 3

In order to prove Theorem 3, we introduce the sequence of sets

Note that \(j \in\mathcal{V}_{i \to\cdot}\) if and only if neuron i has a direct influence on the spiking behavior of neuron j. We put

This is the first time that an information emitted by neuron i can return to neuron i itself.

Recall that λ=1+ϑ/N and define \(\mu= \frac {N-2}{N} \lambda\). We have the following lower bound.

Proposition 3

For any k the following inequality holds.

Proof

Put

The sequence of sets \(\tilde{\mathcal{V}}^{n}_{i \to\cdot} , n \geq1 \), equals the original sequence \(\mathcal{V}^{n}_{i \to\cdot} , n \geq1 \), except that we excluded the choice of i itself. On {τ i>k}, clearly \(\bigcup_{ n \leq k- 1 } \mathcal{V}^{n}_{i \to\cdot} = \bigcup_{ n \leq k- 1 } \tilde{\mathcal{V}}^{n}_{i \to\cdot}\), and we can write

Since in the definition of \(\tilde{\mathcal{V}}^{n}_{i \to\cdot} \), no choice W ⋅→i has been made, we can condition with respect to \(\bigcup_{ n \leq k- 1 } \tilde{\mathcal{V}}^{n}_{i \to\cdot } \), use the fact that for any \(j \in\bigcup_{ n \leq k- 1 } \tilde{\mathcal{V}}^{n}_{i \to\cdot}\), the random variable W j→i is independent of \(\bigcup_{ n \leq k- 1 } \tilde{\mathcal{V}}^{n}_{i \to \cdot } \), and obtain the following equality

We conclude as follows. We can couple the process \(| \tilde{\mathcal{V}}^{n}_{i \to\cdot} |, n \geq2 \), with a classical Galton-Watson process Z n ,n≥2, starting from \(Z_{1} = \mathcal{V}^{1}_{i \to\cdot} \), such that \(| \tilde{\mathcal{V}}^{n}_{i \to\cdot} | \leq Z_{n} \) for all n≥2. The Galton-Watson process has offspring mean \(\mu= (N- 2 ) \frac{ \lambda}{N} \). Here, the factor N−2 comes from the fact that any j has N−2 choices of choosing arrows W j→⋅, since j itself and i are excluded.

Therefore,

Write Σ k−1=Z 1+⋯+Z k−1 and let \(\tilde{E} (s^{\varSigma_{k-1}}), s \leq 1 \), be its moment generating function. Using the convexity of the moment generating function, we have that

Using that \(\tilde{E} ( Z_{1} ) = \frac{N-1}{N} \lambda\) and that the offspring mean equals μ, the claim follows from

since μ≤λ and λ≥1. Hence, evaluating the above lower bound in s=1−p N , we obtain

and therefore,

since p N =λ/N. Using that λ=1+ϑ/N, we obtain the assertion. □

In what follows, \(a_{-k}^{-1} \) denotes the finite sequence (a −k ,…,a −1). In particular, the notation \(a_{-l}^{-1} 1 0^{k-1} \) denotes the sequence given by (a −l ,…,a −1,1,0,…,0). We write for short

for the transition probability of neuron i, given a fixed choice of synaptic weights W. However, conditionings will be read from the left to the right. In particular, we write

The following proposition shows that on the event {τ i>k+l}, the two transition probabilities \(p^{(W,i)} (1 | 0^{k-1} 1 a_{-l}^{-1} ) \) and p (W,i)(1|0k−11) necessarily coincide.

Proposition 4

For any k≥1,l≥1,

Proof

Let W be fixed. From now on, since we will work for this fixed choice of W, we will omit the superscript W and write for short \(p^{i} ( a | a_{-k}^{-1} ) \) instead of \(p^{(W,i)} ( a | a_{-k}^{-1} ) \). We have

Thus,

The same calculus shows that

This shows that in order to ensure that \(p^{i} (1 | 0^{k-1} 1 a_{-l}^{-1} ) = p^{i} ( 1 | 0^{k-1} 1) \), it is sufficient to have

for all possible choices of \(z_{0}^{k-1} (j) , j \in\mathcal{V}_{i} \), which is implied by τ i>k+l. □

Proof of Theorem 3

In this proof, without loss of generality and to simplify the presentation, we suppose that \(\tilde{\varOmega}\) is the canonical state space of W. We will use the spontaneous spike times \(\{ n \in {\mathbb{Z}}: \xi_{n} (i) = 1 \} \) introduced in the proof of Theorem 1, in Sect. 4 above. We recall that these are independent Bernoulli random variables with P(ξ n (i)=1)=δ for all i∈{1,…,N}, for all \(n \in {\mathbb{Z}}\). Write \(l = \sup\{ n < S^{i}_{2} : \xi_{n} (i) = 1 \} \) and \(r = \inf\{ n > S^{i}_{2} : \xi_{n} (i ) = 1 \} \). Put

where k(N) is such that k(N)→∞ as N→∞ and k(N)≤N. We will fix the choice of k(N) later. We have for any realization of W∈A,

Using that conditionally on \(S^{i}_{2} \), \(r- S^{i}_{2}\) and \(S^{i}_{2} - l\) are independent and geometrically distributed, we obtain a first upper bound

We now consider the second term and use that τ i>2k(N). We have

where

Now, since \(S^{i}_{3} \leq r\),

Notice that

Now we use Proposition 4. Since we are working on {τ i>2k(N)}, we have

for all n≤k(N). Therefore,

We conclude that on A, using successively (6.63)–(6.66),

In a second step, we are seeking for lower bounds. We start with

Then on \(\{ S_{2}^{i} = t \} \),

Now, on \(\{ S_{2}^{i} = t \} \),

Therefore, for any realization W∈A,

Putting things together and supposing that k(N)+2≤k(N)2, we obtain finally

It remains to find an upper bound for \({\mathbb{P}}( A^{c} ) \). Clearly, applying Proposition 3, since k(N)≤N, we have

It is enough to choose \(k(N) = \sqrt{N} \) to conclude the proof. □

References

Berbee, H., Chains with infinite connections: uniqueness and Markov representation. Probab. Theory Relat. Fields 76, 243–253 (1987)

Bollobás, B.: Random Graphs, 2nd edn. Cambridge Studies in Advanced Mathematics, vol. 73. Cambridge University Press, Cambridge (2001)

Brémaud, P., Massoulié, L.: Stability of nonlinear Hawkes processes. Ann. Probab. 24(3), 1563–1588 (1996)

Bressaud, X., Fernández, R., Galves, A.: Decay of correlations for non-Hölderian dynamics. A coupling approach. Elect. J. Prob. 4, 1–19 (1999)

Brillinger, D.: Maximum likelihood analysis of spike trains of interacting nerve cells. Biol. Cybern. 59(3), 189–200 (1988)

Cessac, B.: A discrete time neural network model with spiking neurons: II: Dynamics with noise. J. Math. Biol. 62, 863–900 (2011)

Comets, F., Fernández, R., Ferrari, P.A.: Processes with long memory: regenerative construction and perfect simulation. Ann. Appl. Probab. 12(3), 921–943 (2002)

Doeblin, W., Fortet, R.: Sur les chaînes à liaisons complétes. Bull. Soc. Math. Fr. 65, 132–148 (1937)

Fernández, R., Maillard, G.: Chains with complete connections and one-dimensional Gibbs measures. Electron. J. Probab. 9(6), 145–176 (2004)

Fernández, R., Ferrari, P.A., Galves, A.: Coupling, renewal and perfect simulation of chains of infinite order. Notes for a minicourse given at the Vth Brazilian School of Probability. Manuscript (2001). http://www.univ-rouen.fr/LMRS/Persopage/Fernandez/resucoup.html

Fernández, R., Ferrari, P.A., Garcia, N.L.: Loss network representation of Peierls contours. Ann. Probab. 29(2), 902–937 (2001)

Fernández, R., Ferrari, P.A., Garcia, N.L.: Perfect simulation for interacting point processes, loss networks and Ising models. Stoch. Process. Appl. 102(1), 63–88 (2002)

Ferrari, P.A., Maass, A., Martínez S., Ney, P.: Cesàro mean distribution of group automata starting from measures with summable decay. Ergod. Theory Dyn. Syst. 20(6), 1657–1670 (2000)

Gallo, S.: Chains with unbounded variable length memory: perfect simulation and visible regeneration scheme. Adv. Appl. Probab. 43, 735–759 (2011)

Galves, A., Garcia, N., Löcherbach, E., Orlandi, E.: Kalikow-type decomposition for multicolor infinite range particle systems (2013)

Gerstner, W., Kistler, W.M.: Spiking Neuron Models. Single Neurons, Populations, Plasticity. Cambridge University Press, Cambridge (2002)

Goldberg, J.M., Adrian, H.O., Smith, F.D.: Response of neurons of the superior olivary complex of the cat to acoustic stimuli of long duration. J. Neurophysiol. 27, 706–749 (1964)

Harris, T.E.: On chains of infinite order. Pac. J. Math. 5, 707–724 (1955)

Hawkes, A.G.: Point spectra of some mutually exciting point processes. J. R. Stat. Soc. B 33, 438–443 (1971)

Johansson, A., Öberg, A.: Square summability of variations of g-functions and uniqueness of g-measures. Math. Res. Lett. 10(5–6), 587–601 (2003)

Krumin, M., Reutsky, I., Shoham, S.: Correlation-based analysis and generation of multiple spike trains using Hawkes models with an exogenous input. Front Comput Neurosci. 4, 147 (2010). doi:10.3389/fncom.2010.00147

Møller, J., Rasmussen, J.G.: Perfect simulation of Hawkes processes. Adv. Appl. Probab. 37(3), 629–646 (2005)

Nawrot, M.P., Boucsein, C., Rodriguez-Molina, V., Aertsen, A., Grün, S., Rotter, S.: Serial interval statistics of spontaneous activity in cortical neurons in vivo and in vitro. Neurocomputing 70, 1717–1722 (2007)

Rissanen, J.: A universal data compression system. IEEE Trans. Inf. Theory 29(5), 656–664 (1983)

Spitzer, F.: Interaction of Markov processes. Adv. Math. 5(2), 246–290 (1970)

Acknowledgements

We thank D. Brillinger, B. Cessac, S. Ditlevsen, M. Jara, M. Kelbert, Y. Kohayakawa, C. Landim, R.I. Oliveira, S. Ribeiro, L. Triolo, C. Vargas and N. Vasconcelos for many discussions on Hawkes processes, random graphs and neural nets at the beginning of this project.

This work is part of USP project “Mathematics, computation, language and the brain”, FAPESP project “NeuroMat” (grant 2011/51350-6), USP/COFECUB project “Stochastic systems with interactions of variable range” and CNPq project “Stochastic modeling of the brain activity” (grant 480108/2012-9). A.G. is partially supported by a CNPq fellowship (grant 309501/2011-3), A.G. and E.L. have been partially supported by the MathAmSud project “Stochastic structures of large interacting systems” (grant 009/10). E.L. thanks Numec, USP, for hospitality and support.

Author information

Authors and Affiliations

Corresponding author

Additional information

Dedicated to Errico Presutti, frateddu e mastru.

Rights and permissions

About this article

Cite this article

Galves, A., Löcherbach, E. Infinite Systems of Interacting Chains with Memory of Variable Length—A Stochastic Model for Biological Neural Nets. J Stat Phys 151, 896–921 (2013). https://doi.org/10.1007/s10955-013-0733-9

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10955-013-0733-9