Abstract

The application of the Late Acceptance Hill-Climbing (LAHC) to solve the High School Timetabling Problem is the subject of this manuscript. The original algorithm and two variants proposed here are tested jointly with other state-of-art methods to solve the instances proposed in the Third International Timetabling Competition. Following the same rules of the competition, the LAHC-based algorithms noticeably outperformed the winning methods. These results, and reports from the literature, suggest that the LAHC is a reliable method that can compete with the most employed local search algorithms.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

The High School Timetabling problem (HSTP) is faced by many educational institutions around the world. A solution for this problem consists of an assignment of timeslots and resources to the events, respecting several constraints. Generally, this assignment is repeated weekly, until the end of the semester. Beyond its practical importance, this problem is \({\mathcal {NP}}\)-Hard (Garey and Jonhson 1979), which justifies the intense efforts dedicated by the Operations Research and Computational Intelligence communities in proposing methods for solving it (Dorneles et al. 2014; Moura and Scaraficci 2010; Pillay 2013).

The problem relevance and complexity motivated the organization of three International Timetabling Competitions (ITC), in which researchers could test their approaches in the same computational environment. The first competition (ITC2003) (IDSIA 2012) was won by Kostuch (2005), with a 3-phase local search-based algorithm. The second one (ITC2007) (McCollum 2012) was won by Muller (2009), with a variation of the Simulated Annealing algorithm (SA). The last one (ITC2011) (McCollum 2012) was won by Fonseca et al. (2014), who proposed a hybrid approach combining SA and Iterated Local Search (namely SA-ILS).

The competition results indicate that local search methods are currently leading to the best results of the HSTP. Among these methods, it is possible to highlight the SA algorithm (Kirkpatrick et al. 1983), which was used by the three competition winners. Approaches based on integer programming were also proposed (Kristiansen et al. 2014; Santos et al. 2012), but they are restricted to small instances due to their computational complexity.

A new technique, applied to the HSTP model introduced in ITC2011, is proposed in this paper. This technique is based on a local search method called Late Acceptance Hill-Climbing (LAHC), proposed by Burke and Bykov (2012). This algorithm was modified, generating two new variants that intend to overcome some limitations of the original method. The new algorithms obtained remarkable results, outperforming the best marks from the ITC2011 winner. Moreover, the implementation of the algorithm is quite easy, and, according to the author, it can be extended to other combinatorial optimization problems without major adaptations.

The outline of this paper is structured as follows. Section 2 presents the model of the HSTP adopted in ITC2011. The proposed solution approaches are presented in Sect. 3. Results for computational experiments are given in Sect. 4. Finally, concluding remarks are drawn in Sect. 5.

2 High school timetabling problem model

The Third International Timetabling Competition motivated the development of methods for solving high school timetabling problems. It also encouraged the alignment of research and practice, making real-world instances available. The organizers also provided a benchmark to adjust processing times and a solution validator.

The instances were specified in the XHSTT format, which is an XML (extensible markup language)-based format adapted to describe timetabling problems. Post et al. (2014) also highlighted that this format can specify instances of other timetabling problems, beyond the scholar context.

The considered model of HSTP came up with the goal of providing a generic model capable of addressing various features of the HSTP in real-world situations (de Haan et al. 2007; Kingston 2005; Nurmi and Kyngas 2007; Post et al. 2014; Santos et al. 2012; Valourix and Housos 2003; Wright 1996). The model is split into four main entities, which are described in the following subsections.

2.1 Times

The times entity consists of a single Time (timeslot) or a set of times, called a Time Group. The timeslots are commonly grouped by Day (e.g., timeslots for Monday).

2.2 Resources

The resources entity consists of a single Resource, a set of resources (Resource Group), or a Resource Type. Each single resource belongs to a specific resource type. In the context of school timetabling, the most common resource types are (Post et al. 2014):

- class :

-

a group of students who attend the same events. Important constraints to the classes are controlling idle times and the number of lessons per day;

- teacher :

-

a teacher can be preassigned to attend an event. In some cases, pre-assignment is not possible, and the teacher should be assigned according to qualifications and workload limits; and

- room :

-

most events take place in a room. One room has a certain capacity and a set of features.

2.3 Events

An Event usually represents a set of lessons about a subject. It demands a set of times and resources to occur. This assignment is the main goal of any timetabling solver. Events may be grouped into an Event Group. A timeslot assigned to an event is called a Meet, and a resource assigned to an event is called a Task. Every XHSTT solver is also responsible for breaking an event into sub-events to be spread over the days whenever it is necessary. Other kinds of events, such as meetings, are allowed by the model (Post et al. 2014). An event has the following attributes:

-

duration, which represents the number of times that should be assigned to an event;

-

workload, which will be added to the total workload of resources assigned to the event (optional);

-

preassigned resources to attend the event (optional); and

-

preassigned timeslots to attend the event (optional).

2.4 Constraints

Post et al. (2014) groups the Constraints into three categories: basic scheduling constraints, event constraints, and resource constraints. The objective function f(.) is calculated in terms of violations of the constraints. These violations are penalized according to the weight of each constraint, defining a minimization problem. They are also divided into hard constraints, whose attendance is mandatory, and soft constraints, whose attendance is desirable. Each instance can define whether a constraint is hard or soft and its weight. For more details, please refer to Post et al. (2014). A mathematical programming formulation of all XHSTT constraints is given by Kristiansen et al. (2014).

2.4.1 Basic scheduling constraints

-

Assign Time assigns the required number of timeslots to each event;

-

Assign Resource assigns the required resources to each event;

-

Prefer Times indicates that some events have preference for particular timeslots; and

-

Prefer Resources indicates that some events have preference for particular resources.

2.4.2 Event constraints

-

Link Events Schedules a set of events to the same timeslots;

-

Spread Events Specifies that the number of occurrences of an event in a timeslot group should lie between a minimum and a maximum value. This constraint can be used, for example, to define a daily limit of lessons of a given subject;

-

Avoid Split Assignments Assigns the same resources to all occurrences of the same event. With this constraint, for example, one can enforce the assignment of all occurrences of an event to the same room;

-

Distribute Split Events Places limits on the number of sub-events of a particular duration that may be derived from an event. This constraint may be important in some institutions, since a large number of consecutive lessons of the same subject can affect the performance of the students; and

-

Split Events Limits the number of non-consecutive meets that an event can be scheduled and its duration. One example of this constraint is to ensure that an event of duration four is split into two sub-events of duration two.

2.4.3 Resource constraints

-

Avoid Clashes Assigns the resources without clashes (i.e., without assigning the same resource in more than one event at a given time);

-

Avoid Unavailable Times States that some resources are unavailable to attend any event at certain times. For instance, this constraint can be used to avoid assigning a teacher to a timeslot that they cannot attend;

-

Limit Workload Restricts the workload of the resources between minimum and maximum bounds;

-

Limit Idle Times Sets the number of idle times in each time group to lie between a minimum and a maximum bound for each resource. Typically, a time group consists of all timeslots of a given day of the week. This constraint is used to avoid inactive timeslots between active ones in the schedule of a given resource;

-

Limit Busy Times The number of busy times in each day should lie between minimum and maximum bounds for each resource. A high number of allocations in the same day can affect student and teacher performances; and

-

Cluster Busy Times The number of time groups with a timeslot assigned to a resource should lie between minimum and maximum limits. This can be used, for example, to concentrate teacher’s activities into a minimum number of days.

3 Solution approach

The proposed approach is composed of two main steps: (i) an initial solution is generated using the Kingston High School Timetabling Engine (KHE) constructive algorithm (Kingston 2012a); (ii) this solution is used as a starting point for the LAHC metaheuristic, or one of our proposed variants, in order to find improved solutions using multi-neighborhood local search. These elements are explained in the next subsections.

Example of event swap (Fonseca et al. 2014)

Example of event move (Fonseca et al. 2014)

3.1 Build method

The KHE is a platform for handling instances of the addressed problem. It also provides a solver, which is used to generate initial solutions because it can find solutions of reasonable quality in a short time (Kingston 2012b). A very brief description of the KHE will be given in the next paragraphs. For more details, please refer to Kingston (2014, 2012b).

The KHE generates a solution through a three-step approach. The first one is the structural phase. It constructs an initial solution with no time or resource assignments and it creates structures for the next phases. The structural phase splits events into sub-events whose durations depend on constraints related to how events should be split (namely, split events, distribute split events, and spread events), and groups the sub-events (the so-called meets) into sets called nodes. Sub-events derived from the same event go into the same node. Sub-events whose original events are connected by spread events or avoid a split-assignments constraint also lie in the same node. Events connected by link-events constraints have their meets connected in such a way that whenever a time is assigned to one of these meets, this assignment is also extended to the other connected meets. Each meet also contains a set of times called domain. Only times from this set may be assigned to the meet. Domains are chosen based on preferred time constraints. A meet contains one task for each demanded resource in the event that it was derived from. Each task also contains a set of resources of the proper type called a domain. When the resource is preassigned, the domain contains only the preassigned resource; otherwise, this domain is based on preferred resource constraints. This step also assigns preassigned times and resources.

Next, the time assignment phase assigns a time to each meet. For each resource to which a hard avoid-clashes constraint applies, it builds a layer—the set of nodes containing meets preassigned to that resource. After merging layers wherever one node is a subset of another, and sorting them in such a way that the most difficult layers (with fewer available choices for assignment) come first, it assigns times to the meets of each layer. This assignment is made through a minimum-cost matching between meets of the given layer and times. Each edge of such a graph has a cost according to the objective function cost of this assignment.

Finally, comes the resource assignment phase. For each resource type, an iteration of the following procedure is performed. If the resource assignment for this resource type is constrained by an avoid split assignments constraint, a resource packing algorithm is invoked. Otherwise, a simple heuristic is used. A packing of a resource consists in finding assignments of tasks to the resource that makes the solution cost as small as possible, using the resource as much as possible under its workload limits. The resources are placed in a priority queue in which the most demanded are prioritized. At each iteration, a resource is dequeued and processed. The packing procedure consists of a simple binary tree search over the elective tasks of a given resource. For each task, from the most constrained to the least, the simple heuristic consists in assigning the resource that provides more improvement on the objective function. It is possible to estimate the amount of tasks whose resource assignment is impossible (ideally 0). This is performed through a maximum matching in an unweighed bipartite graph, where tasks are demand nodes and resources are supply nodes. This estimate is called resource assignment invariant and it is kept minimal through the whole resource assignment process.

3.2 Neighborhood structure

The neighborhood structure N(s) considered in the proposed methods is composed of six types of moves.Footnote 1 This neighborhood structure is very similar to the one proposed by the winner of ITC2011 (Fonseca et al. 2012, 2014), except that the move Permute Resources was removed. This move is computationally expensive and it was not contributing significantly to achieve good solutions. The considered moves are presented in the following subsections.

3.2.1 Event swap (es)

Two events \(e_1\) and \(e_2\) are selected and have their timeslots \(t_1\) and \(t_2\) swapped. Figure 1 presents an example of this move.

3.2.2 Event move (em)

An event \(e_1\) is moved from its original timeslot \(t_1\) to a new timeslot \(t_2\). Figure 2 presents an example of this move.

3.2.3 Event block swap (ebs)

Similarly to es move, the Event Block Swap swaps the timeslots of two events \(e_1\) and \(e_2\), but, when the events have different durations, \(e_1\) is moved to the last timeslot occupied by \(e_2\). This move allows timeslot swaps without losing the allocation contiguity. Figure 3 presents an example of this move.

Example of event block swap (Fonseca et al. 2014)

3.2.4 Resource swap (rs)

Two events \(e_1\) and \(e_2\) have their assigned resources \(r_1\) and \(r_2\) swapped. Such an operation is only allowed if the resources \(r_1\) and \(r_2\) are of the same type (e.g., both have to be teachers). Figure 4 presents an example of this move.

Example of resource swap (Fonseca et al. 2014)

3.2.5 Resource move (rm)

The resource \(r_{1}\) assigned to an event \(e_1\) is replaced by a new resource \(r_{2}\), randomly selected from the available resources that can be used to attend \(e_1\). Figure 5 presents an example of this move.

Example of resource move (Fonseca et al. 2014)

3.2.6 Kempe move (km)

Two timeslots \(t_1\) and \(t_2\) are selected. The events assigned to \(t_1\) and \(t_2\) are listed and represented as nodes in a graph. If two nodes (events) \(n_1\) and \(n_2\) in this graph share resources, they are connected with an edge. Edges are created only between nodes assigned in distinct timeslots; thus, the generated graph is a bipartite graph known as conflict graph. Every edge in the conflict graph also has a weight, formed by the cost difference in the objective function assuming the exchange of timeslots between the events in the pair (\(n_1\), \(n_2\)). Afterward, the method looks for the path with the lowest cost in the conflict graph and it makes the exchange of timeslots in the chain. This procedure is similar to that proposed by Tuga et al. (2007). Figure 6 presents an example of this move.

Example of kempe move (Fonseca et al. 2014)

3.2.7 Move selection

The move k in N(s) is randomly selected in order to generate a neighbor. If the instance requires the assignment of resources (i.e., has at least one Assign Resource constraint), the moves are chosen based on the following probabilities: \(\textsc {es} = 0.20\), \(\textsc {em} = 0.38\), \(\textsc {ebs} = 0.10\), \(\textsc {rs} = 0.20\), \(\textsc {rm} = 0.10\), and \(\textsc {km} = 0.02\). Otherwise, the moves \(\textsc {rs}\) and \(\textsc {rm}\) are not used and the probabilities become \(\textsc {es} = 0.40\), \(\textsc {em} = 0.38\), \(\textsc {ebs} = 0.20\), and \(\textsc {km} = 0.02\). These values were adjusted based on empirical observation.

3.3 Late acceptance hill-climbing

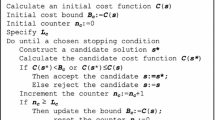

The LAHC metaheuristic was proposed by Burke and Bykov (2008). This algorithm is an adaptation of the classical Hill-Climbing method. It relies on comparing a new candidate solution with the last lth solution considered in the past, in order to accept or to reject it. Note that the candidate solution may be accepted even if it is worse than the current solution, since it is compared to the solution of l iterations before.

This metaheuristic was created with three goals in mind: to be a one-point search procedure that does not employ an artificial cooling schedule, such as SA; to effectively use the information collected during previous iterations of the search; and to employ a simple acceptance mechanism (i.e., almost as simple as Hill-Climbing) (Burke and Bykov 2012).

In this method, a vector \(\mathbf {p} = \mathbf {p}_0, \ldots \mathbf {p}_{l-1}\) with costs of previous solutions is stored. Initially, this list is filled with the cost of the initial solution s: \(\mathbf {p}_k \leftarrow f(s) \ \forall k \in \) {\(0, ..., l-1\)}. At each iteration i, a candidate solution \(s'\) is generated. The candidate solution is accepted if its cost is less than or equal to the cost stored on the \(i \mod l\) position of \(\mathbf {p}\). Moreover, if this solution is better than the best solution \(s^{*}\) found so far, a new incumbent solution is stored. Afterward, the position \(v = i \mod l\) of \(\mathbf {p}\) is updated: \(\mathbf {p}_{v} \leftarrow f(s^{'})\). This process repeats until a stopping condition is met.

The implementation of the LAHC is illustrated in Algorithm 1. Note that timeout was adopted as the stopping condition for the algorithm. This decision is discussed in Sect. 4. Some successful examples of application of LAHC can be found in Abuhamdah (2010), Özcan et al. (2009), Verstichel and Vanden Berghe (2009).

Since it is relatively recent, variations of the LAHC metaheuristic were not extensively explored yet. Therefore, the combination of LAHC with other methods and strategies is an open field for experimentation (Burke and Bykov 2012). In this paper we propose and evaluate computationally two LAHC variants, one of which is a hybrid version including SA.

3.3.1 Stagnation-free LAHC

In late stages of the LAHC execution, it is often very hard to improve the current solution. The algorithm can lead to a list with all l positions occupied with the same cost value, even for large values of l. This behavior can make the LAHC incapable of escaping from local minima, since worse solutions are never accepted. A new variation of the LAHC, the so-called Stagnation-Free LAHC or simply sf-LAHC, is proposed in this paper in order to handle such situations.

In the sf-LAHC method, the algorithm reheats the system when it reaches a stagnation condition. In the proposed implementation, the reheat consists of retrieving the vector of costs from the last time in which one improvement occurred, denoted by \(\mathbf {p}^{\prime }\). This means that various worsening moves may become acceptable after this list update. The algorithm is considered on stagnation when it performs n iterations without improvement. The authors suggest to set n as a function of l, in order to simplify the parameter tuning process. The Stagnation-Free variant of LAHC is presented in Algorithm 2. In the experiments, we considered always \(n = 1000 \times l\).

3.3.2 Simulated annealing—LAHC

Proposed by Kirkpatrick et al. (1983), the metaheuristic SA is a probabilistic method based on an analogy to thermodynamics, simulating the cooling of a set of heated atoms. This technique starts its search from any initial solution. The main procedure consists of a loop that randomly generates, at each iteration, one neighbor \(s^{\prime }\) of the current solution s. Movements are probabilistically selected considering a temperature T and the cost variation obtained with the move, \(\Delta \).

This algorithm was part of the solvers in all ITC winners (Fonseca et al. 2012; Kostuch 2005; Muller 2009). It also achieved good results in this model of the problem, especially for larger instances. Therefore, it was evaluated in a hybrid approach with the LAHC algorithm. Since SA performance is not strongly affected by the fitness of the initial solution, it has been considered a mixed algorithm, with the SA algorithm being executed in the initial solution, generating a \(s^{*}\) solution, and the LAHC method being executed further, to polish this solution, generating a final solution \(s^{**}\). A combination of SA and sf-LAHC variant of LAHC was also tested. A mixed approach with as-LAHC was not presented because it achieved poor results.

The implementation of SA which is used in this work is described in Algorithm 3. Parameters were set as \(\alpha = 0.97\), \(T_{0} = 1\), and \( SAmax = 10{,}000\). The method selectMovement() chooses a move according to the neighborhood probabilities previously defined.

4 Computational experiments

All experiments were executed on an Intel ® i5 2.4 GHz computer, 4 GB of RAM, under an Ubuntu 11.10 operating system. The software was coded in C++ and compiled with GCC 4.6.1. The obtained results were validated by a HSEval validator.Footnote 2 The stopping criterion was 1,500 s timeout, adjusted according to the ITC2011 provided benchmark.

The results are expressed by the pair x / y, where x stands for the feasibility measure and y for the quality measure. The proposed solver, along with solutions and reports, can be found at GOAL-UFOP website.Footnote 3 The interested reader is invited to validate the results.

4.1 Dataset characterization

The set of instances available from ITC2011 (Post et al. 2014) is composed of problems from many countries, ranging from small to large and challenging instances. The main features of the considered instances are presented in Table 1.

4.2 Parameter setting

One of the key advantages of LAHC is the small number of parameters to be set. Actually, the algorithm has only one parameter, which is the length l of \(\mathbf {p}\) vector. As mentioned by Burke and Bykov (2008), higher values of l make the search not only more suitable to find better results but also imply a higher processing time. On the other hand, low values of l make the search faster but it can lead to poor results. For instance, if one considers \(l = 1\), the method performs exactly like the classical Hill-Climbing method.

In this sense, experiments considering many values of l: l = {1, 10, 100, 500, 1000, 5000, 10,000, 20,000, 50,000} have been executed. The instances BrazilInstance2, ItalyIntance4, SpainSchool, KosovaInstance, and NetherlandsKottenpark2009 have been chosen to determinate which value has the better average performance. These instances were chosen since they have different sizes and features. Tables 2 and 3 present the results obtained under the considered configurations.

The poor performance observed for \(l = 1\) was expected, since the algorithms become identical to the original Hill-Climbing method. In general, it is possible to detect two different behaviors:

-

for small instances, higher l values imply better performance, since the algorithm capacity of escaping from local optima increases. This can be seen for instance BrazilInstance2 in Table 3.

-

for large instances, the performance of the method increases with l, but after some point it starts to decrease because the algorithm does not reach convergence before timeout in these cases. The instance KosovaInstance1, whose convergence curves are shown in Fig. 7, is an example of such a case. From this figure, it is possible to note that both, excessively high or excessively low values of l lead to bad results.

Based on the overall performances of the methods, we fixed \(l = 500\) to perform the remaining experiments. This size has been chosen because it has shown to be a good compromise between small and large instances.

4.3 Obtained results

Table 4 presents the results obtained with the LAHC method and its variants. The results obtained with the KHE engine (initial solution), the ITC2011 winner approach (SA-ILS), and the stand alone SA are also presented for comparison. The results presented are average values of five runs, with random seeds. The value of “Average ranking” was calculated following the ITC2011 rules: each solution method was ranked between 1 and 5 on each instance (1 being the best and 5 being the worst), and the average of these ranks was taken. The best results are highlighted in bold.

The Algorithm SA-sf-LAHC has been compared with the other results from ITC2011, since it was the algorithm with better performance among the proposed methods. Such a comparison is shown in Table 5, where bold values highlight the best result found for each instance. Decimal values were rounded to the nearest integer. Again, the “Average ranking” is calculated following the ITC2011 rule. In a brief description of ITC2011 finalists, GOAL team (Fonseca et al. 2012) developed a SA-ILS hybrid local search approach; HySTT team (Kheiri et al. 2012) developed a method based on Hyper-heuristics; Lectio team (Srensen et al. 2012) used an Adaptive Large Neighborhood Search; and HTF team (Romrs and Homberger 2012) used an Evolutionary Algorithm.

4.4 Discussion of results

In some instances, even the production of feasible solutions is complicated, specially when most constraints are set as hard ones. The LAHC method and its variants were able to find 12 feasible solutions out of the 18 instances in the considered dataset, one more than the ITC2011 winner. In Table 4, it is possible to see that the LAHC algorithm and its variants outperformed the SA-ILS solver.

When compared to the other methods, the stand-alone SA worked better in large instances than in small ones. Surprisingly, the SA algorithm had better performance than the hybrid SA-ILS approach. When it is compared to the original LAHC, it is possible to note that the LAHC was slightly better than the SA. This is an interesting result, since LAHC is a new metaheuristic and it is still open for improvements. In addition, the SA is known as a good algorithm for dealing with scheduling problems, which makes the observed result a good achievement.

The Stagnation-Free version of LAHC obtained good results, outperforming its original version in several instances. This could be noted specially in small instances, in which sf-LAHC can keep some improvement until the timeout is reached instead of the original LAHC, which probably got stuck at a local optima. Finally, it is important to highlight the remarkable performance observed for the combination of LAHC and SA proposed in this work (SA-sf-LAHC). This heuristic obtained the best results and, compared to the finalist results (see Table 5), it is possible to conclude that it would win the competition by a large margin: it reached the best result in 14 out of 18 instances, leading to an overall ranking of 1.42. A two-tail Welchs T-test, comparing GOAL and SA-sf-LAHC rankings, reinforced the assumption of SA-sf-LAHC superiority: it has obtained a p-value of 8.0254e\(-\)06, which widely supports the rejection of the null hypothesis (equivalent algorithms) under the confidence level of 95 %.

5 Concluding remarks

This work presented an application of the Late Acceptance Hill-Climbing algorithm to the HSTP model proposed in the ITC2011. In addition, some variants of the LAHC method were proposed and evaluated computationally.

The LAHC algorithm obtained good results. It was able to outperform the stand-alone SA approach and the ITC2011 winner approach, a SA-ILS method. The LAHC variants proposed in this paper also reached promising results. The Stagnation-Free LAHC (sf-LAHC) was able to outperform its original version. The combinations of LAHC and sf-LAHC with SA were tested, and the mixed SA-sf-LAHC algorithm achieved the best results to this problem up to now. One great feature of LAHC is its simplicity: it is very easy to implement and it relies only on one parameter to be tuned.

Some possible future extensions of this work are (i) to develop and to evaluate other variations of LAHC as suggested by Burke and Bykov (2008; ii) to implement and to evaluate other neighborhood moves; and (iii) to develop a graphical user interface to allow the use of the solver by schools and universities.

Notes

We denote by \(N_k(s)\) the subset of \(N_k(s)\) involving only moves of type k.

Code, solutions and reports are available at http://www.goal.ufop.br/softwares/hstt.

References

Abuhamdah, A. (2010). Experimental result of late acceptance randomized descent algorithm for solving course timetabling problems. IJCSNS-International Journal of Computer Science and Network Security, 10(1), 192–200.

Burke, E. K., & Bykov, Y. (2008). A late acceptance strategy in hill-climbing for exam timetabling problems. In PATAT’08 proceedings of the 7th international conference on the practice and theory of automated timetabling.

Burke, E. K., & Bykov, Y. (2012). The late acceptance hill-climbing heuristic. Technical Report CSM-192, Department of Computing Science and Mathematics, University of Stirling.

de Haan, P., Landman, R., Post, G., & Ruizenaar, H. (2007). A case study for timetabling in a dutch secondary school. In Lecture notes in computer science: VI. Practice and theory of automated timetabling (Vol. 3867, pp. 267–279). Berlin: Springer.

Dorneles, Á. P., de Araújo, O. C., & Buriol, L. S. (2014). A fix-and-optimize heuristic for the high school timetabling problem. Computers & Operations Research, 52, 29–38.

Fonseca, G., Santos, H., Toffolo, T., Brito, S., & Souza, M. (2012). A SA-ILS approach for the high school timetabling problem. In PATAT’12 proceedings of the 9th international conference on the practice and theory of automated timetabling.

Fonseca, G. H. G., Santos, H. G., Toffolo, T. A. M., Brito, S. S., & Souza, M. J. F. (2014). GOAL solver: A hybrid local search based solver for high school timetabling. Annals of Operations Research, 1–21. doi:10.1007/s10479-014-1685-4.

Garey, M. R., & Jonhson, D. S. (1979). Computers and intractability: A guide to the theory of NP-completeness. San Francisco, CA: Freeman.

IDSIA (2012). International Timetabling Competition 2002 (2012). Retrieved December, 2012 from http://www.idsia.ch/Files/ttcomp2002/.

Kheiri, A., Ozcan, E., & Parkes, A. J. (2012). Hysst: Hyper-heuristic search strategies and timetabling. In Proceedings of the ninth international conference on the practice and theory of automated timetabling (PATAT 2012) (pp. 497–499).

Kingston, J. (2014). KHE14 an algorithm for high school timetabling. In 10th international conference on the practice and theory of automated timetabling (pp. 26–29).

Kingston, J. H. (2005) A tiling algorithm for high school timetabling. In Lecture notes in computer science: V. Practice and theory of automated timetabling (Vol. 3616, pp. 208–225). Berlin: Springer.

Kingston, J. H. (2012). A software library for school timetabling (2012). Retrieved May, 2012, from http://sydney.edu.au/engineering/it/~jeff/khe/.

Kingston, J. H. (2012). A software library for school timetabling (2012). Retrieved December 2012, from http://sydney.edu.au/engineering/it/~jeff/khe/.

Kirkpatrick, S., Gelatt, C. D., & Vecchi, M. P. (1983). Optimization by simulated annealing. Science, 220, 671–680.

Kostuch, P. (2005). The university course timetabling problem with a three-phase approach. In Proceedings of the 5th international conference on practice and theory of automated timetabling, PATAT’04 (pp. 109–125). Berlin: Springer. doi:10.1007/11593577_7.

Kristiansen, S., Srensen, M., Stidsen, T. (2014). Integer programming for the generalized high school timetabling problem. Journal of Scheduling, 1–16. doi:10.1007/s10951-014-0405-x.

McCollum, B. (2012). International timetabling competition 2007. Retrieved December, 2012 from http://www.cs.qub.ac.uk/itc2007/.

Moura, A. V., & Scaraficci, R. A. (2010). A grasp strategy for a more constrained school timetabling problem. International Journal of Operational Research, 7(2), 152–170.

Muller, T. (2009). ITC2007 solver description: a hybrid approach. Annals OR 172(1), 429–446. http://dblp.uni-trier.de/db/journals/anor/anor172.html#Muller09.

Nurmi, K., & Kyngas, J. (2007). A framework for school timetabling problem. In Proceedings of the 3rd multidisciplinary international scheduling conference: theory and applications, Paris (pp. 386–393).

Özcan, E., Bykov, Y., Birben, M., & Burke, E. K. (2009). Examination timetabling using late acceptance hyper-heuristics. In Proceedings of the eleventh conference on congress on evolutionary computation, CEC’09 (pp. 997–1004). IEEE Press, Piscataway, NJ http://dl.acm.org/citation.cfm?id=1689599.1689731.

Pillay, N. (2013) A survey of school timetabling research. Annals of Operations Research, 1–33.

Post, G., Kingston, J., Ahmadi, S., Daskalaki, S., Gogos, C., Kyngas, J., et al. (2014). XHSTT: An XML archive for high school timetabling problems in different countries. Annals of Operations Research, 218(1), 295–301.

Romrs, J., & Homberger, J. (2012). An evolutionary algorithm for high school timetabling. In PATAT’12 proceedings of the 9th international conference on the practice and theory of automated timetabling.

Santos, H. G., Uchoa, E., Ochi, L. S., & Maculan, N. (2012). Strong bounds with cut and column generation for class-teacher timetabling. Annals OR, 194(1), 399–412.

Srensen, M., Kristiansen, S., & Stidsen, T. (2012). International timetabling competition 2011: An adaptive large neighborhood search algorithm (pp. 489–492).

Tuga, M., Berretta, R., & Mendes, A. (2007) A hybrid simulated annealing with kempe chain neighborhood for the university timetabling problem. In 6th IEEE/ACIS international conference on computer and information science, 2007. ICIS 2007, IEEE (pp. 400–405).

Valourix, C., & Housos, E. (2003). Constraint programming approach for school timetabling. In Computers & Operations Research (pp. 1555–1572).

Verstichel, J., & Vanden Berghe, G. (2009). A late acceptance algorithm for the lock scheduling problem. In S. Voss, J. Pahl, & S. Schwarze (Eds.), Logistik management (pp. 457–478). Dordrecht: Springer.

Wright, M. (1996). School timetabling using heuristic search. Journal of Operational Research Society, 47, 347–357.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Fonseca, G.H.G., Santos, H.G. & Carrano, E.G. Late acceptance hill-climbing for high school timetabling. J Sched 19, 453–465 (2016). https://doi.org/10.1007/s10951-015-0458-5

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10951-015-0458-5