Abstract

For population pharmacometric modeling, a variety of mathematical algorithms are implemented in major modeling software packages to facilitate maximum likelihood modeling, such as FO, FOCE, Laplace, ITS and EM. These methods are all designed to estimate the set of parameters that maximizes the joint likelihood of observations in a given problem. While FOCE is currently the most widely used method in population modeling, EM methods are becoming more popular as the current-generation methods of choice because of their robustness with more complex models and sparse data structures. Several implementations of the EM method are available in public modeling software packages. Although several studies and reviews have compared the performance of different methods in handling relatively simple models, there has not been a dedicated study comparing different versions of EM algorithms in solving complex PBPK models. This study took everolimus as a model drug and simulated PK data based on published results. The three most popular EM methods (SAEM, IMP and QRPEM) and FOCE (as a benchmark reference) were evaluated for their estimation accuracy and convergence speed when solving models of increasing complexity. Both sparse and rich sampling data structures were tested. We concluded that FOCE was superior to EM methods for simple structured models. For more complex models and/or sparse data, EM methods were much more robust. While the estimation accuracy was very close across EM methods, the general ranking of speed (fastest to slowest) was: QRPEM, IMP and SAEM. IMP gave the most realistic estimation of parameter standard errors, while over- and underestimation of standard errors were observed with the SAEM and QRPEM methods, respectively.

Introduction

In the field of pharmacometrics, population analysis is often performed by maximum likelihood modeling [1]. A variety of mathematical algorithms have been developed and implemented in publicly available software to facilitate such analysis [2–5]. Some of the most popular methods include first-order conditional estimation (FOCE), Laplace, iterative two-stage (ITS) and expectation-maximization (EM) methods. Regardless of the differences in mathematical derivation, all of these methods are designed to compute the parameter estimates that maximize the joint marginal likelihood for population pharmacometric problems. There have been several studies and reviews comparing these methods in solving relatively simple pharmacometric models [4–6]. Because the exact likelihood is difficult to compute, current algorithms calculate this value with different approximation approaches. The specific approximation strategy is what distinguishes each algorithm from the others.

Linearization methods (FO, FOCE and Laplace) are among the earliest developed methods. They approximate the likelihood (internally referred to as the objective function value, or OFV, in the software, in the form of −2 log likelihood) using the Laplace approximation and Taylor linearization [7]. For simple structured and low-dimensional models, these algorithms perform sufficiently well and converge rapidly. However, convergence failure and estimation bias become more significant as model complexity increases. With increasing demands to depict the pharmacokinetics and pharmacodynamics of investigated medications more precisely, models of high complexity (such as physiologically-based pharmacokinetic models) are being employed more often in pharmacometric analysis [8, 9]. It is reasonable to expect that linearization methods will eventually not suffice for such demands.

The EM algorithms, on the other hand, are considered the current-generation algorithms by developers and end-users. The major advantage of these methods is that they are designed to calculate (still with approximation) the exact likelihood [10]. This is done by sampling and summing over the probability density function space, which in theory approaches the true likelihood as the number of samples goes to infinity. In practice, the number of samples is set to a large value (usually 300–10,000) as an approximation. EM algorithms generally take longer to run than the linearization algorithms because of the sampling step, but are much more robust with high-dimensional problems.
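The sampling idea can be illustrated with a minimal Python sketch. It is not the algorithm of any of the software packages discussed here: it uses plain Monte Carlo sampling from the random-effect prior for a single subject, and the model (a constant prediction theta·exp(eta) with additive normal error), the function names and all numerical values are assumptions chosen for illustration. A fine-grid quadrature serves as the "exact" reference.

```python
import math
import random


def normal_pdf(x, mean, sd):
    """Density of a normal distribution evaluated at x."""
    z = (x - mean) / sd
    return math.exp(-0.5 * z * z) / (sd * math.sqrt(2.0 * math.pi))


def conditional_likelihood(eta, observations, theta, sigma):
    """p(y | eta) for a toy model: prediction = theta * exp(eta), additive error."""
    prediction = theta * math.exp(eta)
    likelihood = 1.0
    for y in observations:
        likelihood *= normal_pdf(y, prediction, sigma)
    return likelihood


def mc_marginal_likelihood(observations, theta, omega, sigma, n_samples, rng):
    """Plain Monte Carlo: average p(y | eta) over draws eta ~ N(0, omega^2)."""
    total = 0.0
    for _ in range(n_samples):
        eta = rng.gauss(0.0, omega)
        total += conditional_likelihood(eta, observations, theta, sigma)
    return total / n_samples


def grid_marginal_likelihood(observations, theta, omega, sigma, n_grid=4001):
    """Reference value: trapezoidal integration of p(y | eta) * p(eta) over eta."""
    lower, upper = -8.0 * omega, 8.0 * omega
    step = (upper - lower) / (n_grid - 1)
    total = 0.0
    for k in range(n_grid):
        eta = lower + k * step
        weight = 0.5 if k in (0, n_grid - 1) else 1.0
        total += (weight
                  * conditional_likelihood(eta, observations, theta, sigma)
                  * normal_pdf(eta, 0.0, omega))
    return total * step


def minus_two_log_likelihood(likelihood):
    """Objective function value in the -2 log likelihood form."""
    return -2.0 * math.log(likelihood)


if __name__ == "__main__":
    rng = random.Random(2024)
    obs = [9.0, 11.0, 10.5]  # hypothetical observations
    for n in (300, 3000, 30000):
        mc = mc_marginal_likelihood(obs, 10.0, 0.3, 2.0, n, rng)
        print(n, minus_two_log_likelihood(mc))
    print("grid", minus_two_log_likelihood(grid_marginal_likelihood(obs, 10.0, 0.3, 2.0)))
```

As the number of samples grows, the Monte Carlo value settles toward the quadrature reference, which is the behavior the paragraph above describes.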

Currently, different implementations of the EM algorithm are available in major modeling software packages. Each package features its own version and may also provide other versions for users to select. Some examples include Monte Carlo Parametric EM (MCPEM) in S-ADAPT, NONMEM and Phoenix; Stochastic Approximation EM (SAEM) in Monolix and NONMEM; Monte Carlo Importance Sampling Parametric EM (IMP) in NONMEM; and Quasi-Random Parametric EM (QRPEM) in Phoenix NLME. The essential difference between these methods lies in the Monte Carlo (MC) sampling strategy applied, each of which aims to make the sampling process more efficient than the plain MC method. While in theory all these methods should function similarly, there has not been a dedicated study comparing their performance in solving complex pharmacometric models. This work aims to compare the three most popular EM methods (SAEM, IMP and QRPEM) on their performance in handling complex population pharmacokinetic models.

For demonstration purposes, the anti-cancer drug everolimus was selected as the model drug. Data sets in this work were simulated based on published PK results of everolimus [11, 12]. A total of four model structures with increasing complexity were tested: a classical 1-compartment PK model, a highly reduced 4-compartment PBPK model, a reduced 6-compartment PBPK model, and a 9-compartment PBPK model. Both rich and sparse sampling data structures were simulated and tested. The algorithm performance was assessed on parameter estimation accuracy (deviation from true values) and speed (computation time).

Methods

Models

Classical 1-compartment model

A classical 1-compartment model with first-order oral absorption and first-order elimination was used to describe everolimus PK in human adults. The model was developed in NONMEM 7.3 using ADVAN2 TRANS2 and in Phoenix NLME using PML.
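For readers less familiar with this structure, the standard closed-form solution of a 1-compartment model with first-order absorption and elimination can be sketched in Python as follows. The parameter values in the usage section are hypothetical placeholders, not the values listed in Table 1, and the function names are introduced here for illustration only.

```python
import math


def conc_one_compartment_oral(t, dose, cl, v, ka, f=1.0):
    """Concentration at time t after a single oral dose.

    dose in mg, cl in L/h, v in L, ka in 1/h, giving mg/L.
    Assumes ka differs from the elimination rate constant ke = cl / v.
    """
    ke = cl / v
    if math.isclose(ka, ke):
        raise ValueError("ka equal to ke needs the limiting-case formula")
    scale = f * dose * ka / (v * (ka - ke))
    return scale * (math.exp(-ke * t) - math.exp(-ka * t))


def time_of_peak(cl, v, ka):
    """Time of maximum concentration for the same model."""
    ke = cl / v
    return math.log(ka / ke) / (ka - ke)


if __name__ == "__main__":
    # Hypothetical values for illustration only
    dose, cl, v, ka = 10.0, 20.0, 100.0, 1.5
    for t in (0.0, 0.17, 0.5, 1.0, 4.0, 8.0, 12.0, 24.0):
        print(t, conc_one_compartment_oral(t, dose, cl, v, ka))
    print("tmax (h):", time_of_peak(cl, v, ka))
```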

PBPK models

Three PBPK models (4-compartment, 6-compartment and 9-compartment) were used to describe everolimus PK in mice. First-order oral absorption and first-order hepatic elimination were assumed. Perfusion-limited partitioning was assumed in all tissues. The models were developed in NONMEM 7.3 using ADVAN13 and in Phoenix NLME using PML. The systems of differential equations describing the model structures are listed below:

4-Comp model

Blood:

Lung:

Carcass:

Liver:

Absorption depot:

6-Comp model

Blood:

Lung:

Carcass:

Liver:

Pancreas and spleen:

GI:

Absorption:

9-Comp model

Blood:

Lung:

Other tissues (carcass, brain, adipose, pancreas, and spleen)

Liver:

GI:

Absorption:

A is the drug amount in each compartment. Q is the blood flow rate. V is the tissue weight or volume (blood). K is the tissue specific partition coefficient [13]. CL is the clearance. FA is the fraction absorbed. KA is the absorption rate constant. The physiological parameters used are listed in Supplemental Tables 1 and 2.
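As a generic illustration of the perfusion-limited assumption (not a reproduction of the model equations of this study), the sketch below couples a blood compartment to a single tissue, with the tissue amount changing at a rate Q·(A_blood/V_blood − A_tissue/(V_tissue·K)). The two-compartment closed loop, the Runge-Kutta integrator and all parameter values are assumptions made for this example; absorption and elimination are deliberately left out so that mass balance can be checked.

```python
def derivatives(amounts, q, v_blood, v_tissue, kp):
    """Perfusion-limited exchange between blood and one tissue (no elimination)."""
    a_blood, a_tissue = amounts
    c_blood = a_blood / v_blood
    c_leaving_tissue = a_tissue / (v_tissue * kp)  # venous outflow concentration
    flux = q * (c_blood - c_leaving_tissue)
    return (-flux, flux)


def rk4_step(amounts, dt, **params):
    """One classical fourth-order Runge-Kutta step."""
    def shifted(slopes, factor):
        return tuple(a + factor * s for a, s in zip(amounts, slopes))

    k1 = derivatives(amounts, **params)
    k2 = derivatives(shifted(k1, 0.5 * dt), **params)
    k3 = derivatives(shifted(k2, 0.5 * dt), **params)
    k4 = derivatives(shifted(k3, dt), **params)
    return tuple(
        a + dt / 6.0 * (s1 + 2.0 * s2 + 2.0 * s3 + s4)
        for a, s1, s2, s3, s4 in zip(amounts, k1, k2, k3, k4)
    )


def simulate(dose, t_end, dt, **params):
    """Start with the whole dose in blood and integrate to t_end."""
    amounts = (dose, 0.0)
    for _ in range(round(t_end / dt)):
        amounts = rk4_step(amounts, dt, **params)
    return amounts


if __name__ == "__main__":
    # Hypothetical flow, volumes and partition coefficient
    a_blood, a_tissue = simulate(100.0, 5.0, 0.01,
                                 q=10.0, v_blood=1.7, v_tissue=15.0, kp=3.0)
    print("blood:", a_blood, "tissue:", a_tissue)
    print("tissue/blood concentration ratio:", (a_tissue / 15.0) / (a_blood / 1.7))
```

At equilibrium the tissue-to-blood concentration ratio approaches the partition coefficient K, which is the defining property of perfusion-limited distribution.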

Data sets

The data were simulated based on published results [11, 12]. Modifications to the original published data were made for convenience of calculation (Table 1). A uniform body weight of 20 g was assumed for the PBPK models. Physiological parameters were based on literature values [14, 15]. Log-normal between-subject variability with a diagonal OMEGA matrix and normal intra-subject variability were assumed. An additive error model was used to describe residual errors. Data sets were simulated using the NONMEM 7.3 $SIMULATION module, with R packages used for formatting. For each model, both rich and sparse sampling data were simulated.

The dosing regimen was designed as a single oral bolus dose of everolimus at 10 mg/subject for the classical 1-compartment model, and 100 µg/subject for the PBPK models. The dosing records were simulated as random numbers, ranging between 9 and 11 for the 1-compartment model, and between 90 and 110 for the PBPK models. The designed sampling times were 0, 0.17, 0.5, 1, 4, 8, 12 and 24 h after dosing for the 1-compartment model, and 0, 0.17, 0.5, 1, 2, 4, 6, 8 and 12 h after dosing for the PBPK models. The observation records (ng/ml) were simulated within ±0.1 h of the designed sampling times. Rich data had all designed observations for each subject, while sparse data had only one designed observation, randomly selected, for each subject. The numbers of subjects and observations for the sparse and rich data sets are listed in Table 2. Simulated data sets for each case study are presented in Supplemental Figure 1.
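The rich and sparse designs described above can be sketched in a few lines of Python. This is only an illustration of the design logic, not the NONMEM/R workflow used in the study; the uniform distributions for the dose and the time jitter, the clamping of jittered times at zero, and the function and field names are all assumptions.

```python
import random

# Designed sampling times (h) for the 1-compartment model, as stated in the text
DESIGN_TIMES_1CMT = [0, 0.17, 0.5, 1, 4, 8, 12, 24]


def simulate_design(n_subjects, design_times, dose_range, sparse, rng):
    """Build per-subject dose and sampling-time records.

    Rich design: every designed time, each jittered within +/-0.1 h.
    Sparse design: one designed time picked at random, then jittered.
    """
    records = []
    for subject_id in range(1, n_subjects + 1):
        dose = rng.uniform(dose_range[0], dose_range[1])
        nominal = [rng.choice(design_times)] if sparse else list(design_times)
        # Assumption: jittered times are not allowed to fall before dosing
        times = [max(0.0, t + rng.uniform(-0.1, 0.1)) for t in nominal]
        records.append({"id": subject_id, "dose": dose, "times": times})
    return records


if __name__ == "__main__":
    rng = random.Random(1)
    for record in simulate_design(3, DESIGN_TIMES_1CMT, (9.0, 11.0), True, rng):
        print(record)
```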

Hardware and software

The specifications of the computer used in this work are as follows: Windows 7 Professional 64-bit environment, two Intel Xeon E5-2609 quad-core processors, gfortran 4.6.3 FORTRAN compiler, ActivePerl 5.20.2 Perl interpreter, and MPICH2 1.4.1 message passing interface (MPI) implementation. NONMEM 7.3 with PsN4 and Phoenix 6 NLME 1.3 were used to build the models.

Estimation methods

For each model, FOCE (NONMEM), IMP (NONMEM), SAEM (NONMEM) and QRPEM (Phoenix NLME) methods were separately run to obtain parameter estimates and statistics. FOCE was assessed as a benchmark reference. Initial estimates remained the same within each model across methods (Table 1).

For PBPK models built in NONMEM, MU-referencing was applied for the EM methods (SAEM and IMP), with each estimated parameter expressed in MU-referenced form. In this work, all methods implemented in NONMEM were applied using the ‘auto’ option unless modified by the authors. In Phoenix NLME (QRPEM), MU-referencing is not necessary [2] and is not available. All default settings of the QRPEM method were used. Parallel computing was applied in both NONMEM and Phoenix. Detailed algorithm options are listed in Supplemental Table 3.

Evaluation metrics

The true parameter values used for simulation were taken as the expected parameter estimates. The confidence intervals were generated using the PFIM 4.0 evaluation module (Fig. 1).

Model structures. a: A highly reduced 4-compartment PBPK model. A1, drug amount in blood; Q1, total blood flow rate; A2, drug amount in lung; Q2, blood flow rate through lung; A3, drug amount in carcass; Q3, blood flow rate through carcass; A4, drug amount in liver; Q4, blood flow rate through liver; CL, hepatic drug clearance; A5, drug amount in absorption depot; FA, fraction absorbed; KA, absorption rate constant. b: A reduced 6-compartment PBPK model. A1, drug amount in blood; Q1, total blood flow rate; A2, drug amount in lung; Q2, blood flow rate through lung; A3, drug amount in carcass; Q3, blood flow rate through carcass; A4, drug amount in liver; Q4, blood flow rate through liver; A5, drug amount in pancreas and spleen lumped; Q5, blood flow rate through pancreas and spleen lumped; A6, drug amount in GI tract; Q6, blood flow rate through GI tract; CL, hepatic drug clearance; A7, drug amount in absorption depot; FA, fraction absorbed; KA, absorption rate constant. c: A 9-compartment PBPK model. A1, drug amount in blood; Q1, total blood flow rate; A2, drug amount in lung; Q2, blood flow rate through lung; A3, drug amount in carcass; Q3, blood flow rate through carcass; A4, drug amount in brain; Q4, blood flow rate through brain; A5, drug amount in adipose; Q5, blood flow rate through adipose; A6, drug amount in liver; Q6, blood flow rate through liver; A7, drug amount in pancreas; Q7, blood flow rate through pancreas; A8, drug amount in spleen; Q8, blood flow rate through spleen; A9, drug amount in GI tract; Q9, blood flow rate through GI tract; CL, hepatic drug clearance; A10, drug amount in absorption depot; FA, fraction absorbed; KA, absorption rate constant

Relative estimation errors (RER), root mean squared error (RMSE) and standardized RMSE (sRMSE) were computed to evaluate the accuracy and precision of the parameter estimates by each method [16, 17]. The mathematical definitions of these metrics are as follows:

\({}_{p}^{a} \widehat{\theta }\) is the estimate of parameter p by algorithm a. \({}_{p}^{a} \theta\) is the true value of parameter p used for simulation. For each parameter p, the standardized RMSE is calculated by dividing RMSE (returned by algorithm a) by the minimum RMSE of all tested algorithms for parameter p.
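A minimal Python sketch of these metrics is given below. The sRMSE follows the description above (RMSE divided by the minimum RMSE across algorithms). The percentage form of the RER and the computation of the RMSE from the RER values over a set of estimates are assumptions based on common usage in method-comparison studies such as [16, 17]; the function names and the numbers in the usage example are hypothetical.

```python
import math


def relative_estimation_error(estimate, true_value):
    """RER in percent: signed deviation of the estimate from the true value."""
    return 100.0 * (estimate - true_value) / true_value


def rmse(estimates, true_value):
    """Root mean squared error of the RER over a set of estimates."""
    errors = [relative_estimation_error(e, true_value) for e in estimates]
    return math.sqrt(sum(err * err for err in errors) / len(errors))


def standardized_rmse(rmse_by_algorithm):
    """Divide each algorithm's RMSE by the smallest RMSE for that parameter."""
    best = min(rmse_by_algorithm.values())
    if best == 0.0:
        raise ValueError("minimum RMSE is zero; sRMSE is undefined")
    return {name: value / best for name, value in rmse_by_algorithm.items()}


if __name__ == "__main__":
    # Hypothetical estimates of one parameter whose true value is 10
    estimates = {"A": [10.2, 9.9], "B": [11.0, 9.0]}
    rmse_values = {name: rmse(values, 10.0) for name, values in estimates.items()}
    print(rmse_values)
    print(standardized_rmse(rmse_values))
```

With this definition the best-performing algorithm for a given parameter has an sRMSE of 1, and larger values indicate proportionally larger error.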

Results

Classical 1-compartment model

The parameter estimates (PEs) and 95 % confidence intervals (CIs) are presented in Fig. 2. The CIs were provided by the software output and based on the calculation of standard errors of corresponding PEs. The solid horizontal line represents the expected PEs (simulation values). The dashed horizontal lines represent the 95 % CIs of the expected PEs calculated using PFIM 4.0. The empty circles represent the PEs obtained from each estimation method. The crosses represent the 95 % CIs output by each method. The same notation will be used across models. The iteration numbers until convergence and computation time are listed in Table 3.

1-compartment model: the parameter estimates and confidence intervals. Both rich and sparse data scenarios are shown. CL, clearance; V, volume of distribution; KA, absorption rate constant. Fixed, estimates of population mean of fixed effects; Random, estimates of variance of random effects. Solid horizontal line, expected value of mean; dashed horizontal lines, expected confidence interval. Circles, parameter estimates; crosses, 95 % confidence intervals of estimates

In this simplest model (only 2 ETA terms), the parameter estimation accuracy and convergence speed were compared across the FOCE, IMP, SAEM and QRPEM methods. For the rich data scenario, most PEs and CIs were estimated with values very close to the reference. The only exception was the CI span of CL by the IMP method, which was approximately twice the reference value.

For the sparse data scenario, which is more computationally intensive, the parameters were still estimated with very high accuracy. Most PEs were within 5 % of the reference values (Supplemental Table 4). The PE and CI of omega2 CL obtained by the SAEM method deviated the most.

Despite the similar estimation results across methods, the computation time varied significantly for this simple model (Table 3). FOCE was overall the fastest to converge. The ranking of speed for the EM methods (fastest to slowest) was: QRPEM, IMP and SAEM for the rich data; QRPEM, SAEM and IMP for the sparse data.

Highly reduced 4-compartment PBPK model

To benchmark the methods under more computationally intensive conditions, a highly reduced 4-compartment PBPK model (5 ETA terms) was tested (Fig. 3). For the rich data scenario, all methods converged successfully. The PEs were almost identical across methods and all very close to the reference values. For the CIs of the fixed effects, the spans estimated by QRPEM were similar to the reference, while overestimation by FOCE, SAEM and IMP was observed. The CI spans of the random effects were generally estimated accurately, except for omega2 CL by IMP and omega2 K2 by SAEM, which were both overestimated.

4-Compartment PBPK model: the parameter estimates and confidence intervals. Both rich and sparse data scenarios are shown. CL, hepatic clearance; K2-4, partition coefficient in lung, carcass and liver, respectively. Fixed, estimates of population mean of fixed effects; Random, estimates of variance of random effects. Solid horizontal line, expected value of mean; dashed horizontal lines, expected confidence interval. Circles, parameter estimates; crosses, 95 % confidence intervals of estimates

For the sparse data scenario, the FOCE method failed to converge and therefore no parameter estimates were obtained. PEs from all EM methods were close to the reference values. The CI spans of the fixed effects estimated by the SAEM and IMP methods were similar to the reference values, but were underestimated by the QRPEM method. All random-effect estimates and CIs were almost identical to the reference values across EM methods.

In this more complex model, the FOCE method was the slowest to converge in the rich data scenario and failed to converge in the sparse data scenario (Table 3). The ranking of speed across the EM methods (fastest to slowest) was: QRPEM, IMP and SAEM.

Reduced 6-compartment PBPK model

To further distinguish the method performance, a more complex 6-compartment PBPK model (7 ETA terms) was tested (Fig. 4). For the rich data scenario, all methods converged successfully. The PEs of both fixed and random effects were close to the reference values. CI spans were most overestimated by the SAEM method for all parameters, and were closest to the reference with the QRPEM method for the fixed-effect parameters.

6-Compartment PBPK model: the parameter estimates and confidence intervals. Both rich and sparse data scenarios are shown. CL, hepatic clearance; K2-6, partition coefficient in lung, carcass, liver, pancreas/spleen lumped, and GI, respectively. Fixed, estimates of population mean of fixed effects; Random, estimates of variance of random effects. Solid horizontal line, expected value of mean; dashed horizontal lines, expected confidence interval. Circles, parameter estimates; crosses, 95 % confidence intervals of estimates

For the sparse data scenario, all EM methods converged successfully, but FOCE failed to converge. The PEs upon convergence were all very close to reference values. CI spans of fixed-effect parameters were all underestimated by QRPEM method.

As with the 4-compartment PBPK model, the FOCE method was the slowest to converge for rich data (Table 3). The same ranking of EM method speed was also observed (Table 3).

9-Compartment PBPK model

In the most complex model tested in this study (10 ETA terms), the FOCE method failed to converge for both rich and sparse data. However, all EM methods converged successfully in both scenarios.

The same pattern of results was observed as in the 6-compartment model (Fig. 5). For the rich data scenario, the CI spans of all parameters were most overestimated by the SAEM method. CI spans of the fixed-effect parameters were most accurately estimated by the QRPEM method. For the sparse data scenario, the CI spans of the fixed-effect parameters were underestimated by the QRPEM method. The same speed ranking of EM methods was observed.

9-Compartment PBPK model: the parameter estimates and confidence intervals. Both rich and sparse data scenarios are shown. CL, hepatic clearance; K2-9, partition coefficient in lung, carcass, brain, adipose, liver, pancreas, spleen and GI, respectively. Fixed, estimates of population mean of fixed effects; Random, estimates of variance of random effects. Solid horizontal line, expected value of mean; dashed horizontal lines, expected confidence interval. Circles, parameter estimates; crosses, 95 % confidence intervals of estimates

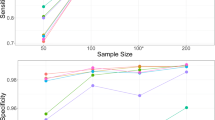

The running times of all tested methods are presented in Fig. 6. QRPEM was overall the fastest to converge in all tested cases, while SAEM was the slowest. The summary of the average sRMSE is presented in Table 4. QRPEM had the least biased parameter estimates of all methods. The summary of RER for each case study is presented in Supplemental Table 4.

Discussion

This work evaluated the performance of FOCE and three EM methods in modeling problems with increasing complexity, with rich or sparse data. It demonstrated the advantages of using EM methods over FOCE method for more complex models and sparse data. With tested models, the computation speed varied significantly among SAEM, IMP and QRPEM methods.

In this study, everolimus was used as a model drug and a total of 8 simulated PK cases were studied. Model structures included 1, 4, 6 and 9 compartments, and data sampling strategies included both rich and sparse designs for each model. Among these cases, only the 1-compartment model (human, rich sampling) and the 9-compartment model (mouse, sparse sampling) had direct references to published results [11, 12]. The other cases were examined to construct a complete model/data-structure space and to demonstrate a consistent trend when comparing algorithm performance with increasing problem complexity. For some extreme cases (e.g. the 9-compartment PBPK model with rich sampling), direct experimental or clinical references are still lacking. However, active research in analytical method development has shown promise to fill this gap. Ekstrom et al. [18, 19] reported studies in which an in situ microdialysis technique was applied to simultaneously monitor methotrexate PK in plasma, liver, kidney and muscle in a rat model. Other groups have also reported studies using the same technique to continuously monitor drug concentrations in other tissues including brain, skin, adipose, heart, lung, eye, etc. [20–27]. All of this evidence demonstrates the technical feasibility of conducting PK studies with rich sampling in multiple tissues. It is outside the scope of the current study to further validate and push the limits of these techniques in PK research. However, it is reasonable to expect that, with the active progress in analytical method development, more experimental evidence will become available as references for the simulated cases of the current study.

For population pharmacometric analysis, the complexity of a model comes from both the deterministic and stochastic aspects of a problem. The deterministic aspect can be attributed to the complexity of the PK/PD model structure, such as the number of conceptualized compartments and the kinetic relationships between compartments (THETAs). Models of high deterministic complexity are usually better expressed as sets of differential equations than in integrated forms. For such problems, an ODE (ordinary differential equation) solver is applied to determine the amount in each compartment before the actual joint likelihood computation. In most modeling software packages, ODE solvers for different conditions of the system (stiff, non-stiff, or auto-detect) are available. Users can select the most efficient solver for their specific problems (with prior knowledge or simply by testing all candidate solvers). In this study, models were tested with stiff, non-stiff and auto-detect ODE solvers. Only the results using the auto-detect solver are presented. The software output files were examined to confirm that no ODE integration difficulties were reported. Since all methods were run using the same type of solver within each model, the ODE solving process was assumed to be the same and not to influence the comparison.

The stochastic complexity of a model is largely determined by the number of random-effect parameters (between-subject variabilities, ETAs) to be estimated and the way they interact. Models with many ETA terms are also referred to as high-dimensional. The exact joint likelihood of such problems is very difficult to compute, and therefore a variety of algorithms have been developed. The essential difference between maximum likelihood methods is their strategy for handling the stochastic aspect of the complexity. In other words, methods differ in the calculation of the objective function value (OFV), which represents the joint marginal likelihood of all observations.

FOCE is currently the most popular method among users and was therefore tested as a benchmark reference in this work. Like the related FO and Laplace methods, it approximates the OFV by linearization instead of computing the exact value. As shown here, FOCE works sufficiently well for simple models, but is not robust with complex models and sparse data. Discussion of the specific reasons for its failure to converge for complex models or sparse data is beyond the scope of this study.

All EM methods converged successfully in the tested models. The parameter estimates were generally close to the true values and similar across methods. However, the run time differed significantly, and the speed ranking of the methods was consistent across models. This can be attributed to the different sampling strategies applied in each method. SAEM is a ‘two-phase’ algorithm which consists of a stochastic ‘burn-in’ phase and an accumulation phase. The ‘burn-in’ phase is the sampling step that consumes most of the running time. This step ran longer as the model complexity increased and as data became sparse. The IMP and QRPEM methods both apply the importance sampling technique when sampling across the probability density space. QRPEM uses Sobol sequences to obtain more evenly distributed samples, which greatly shortens the sampling time [2]. In the tested models, QRPEM was consistently the fastest to converge among EM methods, demonstrating the advantage of this approach. The IMP method applies importance sampling without Sobol sequences and therefore ran slower than QRPEM. However, it still converged much faster than the SAEM method.
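The benefit of evenly distributed samples can be illustrated with a small Python sketch. It is not the QRPEM algorithm: a one-dimensional base-2 van der Corput sequence is used here as a simple stand-in for a Sobol sequence, no importance sampling is performed, and the integrand exp(−η²) is only a Gaussian-shaped placeholder for a conditional likelihood. Its expectation under η ~ N(0, 1) is 1/√3, which gives an exact reference.

```python
import math
import random
from statistics import NormalDist


def van_der_corput(index, base=2):
    """Low-discrepancy point in (0, 1) for a positive integer index."""
    value, denominator = 0.0, 1.0
    while index > 0:
        index, digit = divmod(index, base)
        denominator *= base
        value += digit / denominator
    return value


def integrand(eta):
    """Gaussian-shaped placeholder for a conditional likelihood."""
    return math.exp(-eta * eta)


def quasi_random_estimate(n_points):
    """Map low-discrepancy points to N(0, 1) by the inverse CDF and average."""
    std_normal = NormalDist()
    total = 0.0
    for i in range(1, n_points + 1):  # start at 1 to avoid the point u = 0
        total += integrand(std_normal.inv_cdf(van_der_corput(i)))
    return total / n_points


def pseudo_random_estimate(n_points, rng):
    """Plain Monte Carlo average with pseudo-random normal draws."""
    return sum(integrand(rng.gauss(0.0, 1.0)) for _ in range(n_points)) / n_points


TRUE_VALUE = 1.0 / math.sqrt(3.0)  # E[exp(-eta^2)] for eta ~ N(0, 1)

if __name__ == "__main__":
    n = 1024
    print("quasi-random error :", abs(quasi_random_estimate(n) - TRUE_VALUE))
    errors = [abs(pseudo_random_estimate(n, random.Random(seed)) - TRUE_VALUE)
              for seed in range(20)]
    print("mean pseudo-random error:", sum(errors) / len(errors))
```

For the same number of points, the quasi-random estimate is typically much closer to the reference, so fewer samples are needed for a given accuracy. In higher dimensions the gain is generally smaller than in this one-dimensional example.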

A significant feature of EM methods is the adjustability of the sampling process. In theory, this process can be made as accurate as desired by providing an extremely large number of samples. In practice, this is rarely done given the time and computing capacity required. In this study, sampling numbers from 300 to 10,000 were tested. Only the results using 300 samples (ISAMPLE = 300) are presented because the estimation accuracy was not significantly improved when ISAMPLE went beyond 300. Another control of the EM method run is based on the convergence criteria. In NONMEM, this can be implemented by setting NITER, CTYPE, CITER, CINTERVAL and ALPHA for the SAEM and IMP methods (and for other methods not discussed here). In Phoenix NLME, this option is internally set and not available to end-users. A proper convergence criterion saves computer time while retaining estimation accuracy. On the other hand, an inappropriate criterion may cause the model to run excessively long, or to stop before the parameter estimates become stable. Since NONMEM version 7.3, an ‘easy button’ AUTO = 1 for the new methods is available to users. While this option is very convenient to apply and generally works well for most problems (based on the authors’ experience), it is not guaranteed to be the optimal option for every specific problem. In this study, the AUTO = 1 option (see Supplemental Table 3 for detailed settings) was initially applied for EM methods in NONMEM, and optimized options were tested and used if significant improvement in method implementation was observed.

Among the tested EM methods, IMP was the most efficient considering the tradeoff between speed and accuracy. SAEM was slower to converge and its estimated CI span was more prone to overestimation. QRPEM was faster to converge; however, its CI span was overall underestimated, especially for sparse data. The inaccuracy of the CI span estimation was due to the biased estimation of the standard error. A detailed discussion of the standard error calculation algorithm is beyond the scope of this work. Nonetheless, the proper use of estimated standard errors has been a long-debated topic in the pharmacometric community. A typical example of the improper use of estimated standard errors is the calculation of the confidence interval of random-effect (ETA) variances (Omega2). By default, NONMEM calculates the CI by adding and subtracting 1.96 times the standard error to and from the estimate, assuming a normal distribution of these variables. Such a calculation is valid for ETA, whose distribution was assumed to be normal. However, the estimated variance of a normally distributed random variable (such as ETA) theoretically follows a scaled chi-square distribution. This is not currently reflected in the calculation of ‘omega (n,n)’ by most modeling software packages. In the current study, PFIM-predicted CIs of parameters are used as a reference for all tested methods. The validity of the PFIM-predicted standard errors (based on study designs) has been previously demonstrated in relatively simple structured models [28]. It is reasonable to speculate that this may still hold for more complex models. However, caution should still be exercised when using PFIM predictions as a reference for CI estimates, and more research is needed to further validate its performance in high-dimensional problems.
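The contrast between the two interval constructions can be written out explicitly. The symmetric (Wald-type) interval is the one described above. The chi-square interval is the textbook result for the variance of n directly observed, independent normal values with sample variance s², and is shown only as an illustration under that assumption, since ETAs are not observed directly in a population model.

```latex
% Symmetric (Wald-type) 95% interval based on the estimated standard error
\[
\hat{\omega}^{2} \pm 1.96 \cdot \mathrm{SE}\!\left(\hat{\omega}^{2}\right)
\]

% Textbook interval for a normal variance (n directly observed values,
% sample variance s^2); \chi^{2}_{q,\,n-1} denotes the q-quantile of the
% chi-square distribution with n-1 degrees of freedom
\[
\frac{(n-1)\,s^{2}}{\chi^{2}_{1-\alpha/2,\;n-1}}
\;\le\; \sigma^{2} \;\le\;
\frac{(n-1)\,s^{2}}{\chi^{2}_{\alpha/2,\;n-1}}
\]
```

The second interval is asymmetric around s² and cannot extend below zero, whereas the symmetric interval can include negative values when the standard error is large relative to the estimate.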

SAEM method is also robustly implemented in Monolix (Lixoft, France). While in theory the implementation of the method should not differ dramatically across platforms, inference about SAEM performance in Monolix is not readily drawn based on results in this study. The comparison conclusions only apply to methods available in tested software packages (NONMEM and Phoenix NLME).

Conclusion

The computation time varied significantly among tested methods. FOCE method was much faster to converge than EM methods for simple classical population PK models, and provided parameter estimates with high accuracy. However, EM methods were faster and more stable than FOCE method for complex models and sparse data. Among the tested EM methods, QRPEM was the fastest to converge and SAEM was generally the slowest.

The parameter estimates from tested methods were all very close to reference values upon convergence. For the estimated CI of fixed-effect parameters, the span was overestimated by SAEM method for complex models with rich data, and was underestimated by QRPEM method for the complex models. The estimated span of CI from IMP method was overall the most accurate among all tested EM methods.

References

Kuhn E, Lavielle M (2005) Maximum likelihood estimation in nonlinear mixed effects models. Comput Stat Data Anal 49(4):1020–1038

Leary B, Chittenden J, Matzuka B, Guzy S (2011) QRPEM—a new standard of accuracy, precision, and efficiency in NLME population PK/PD methods

Roe DJ (1997) Comparison of population pharmacokinetic modeling methods using simulated data: results from the Population Modeling Workgroup. Stat Med 16(11):1241–1257; discussion 1257–1262

Aarons L (1999) Software for population pharmacokinetics and pharmacodynamics. Clin Pharmacokinet 36(4):255–264. doi:10.2165/00003088-199936040-00001

Bauer RJ, Guzy S, Ng C (2007) A survey of population analysis methods and software for complex pharmacokinetic and pharmacodynamic models with examples. AAPS J 9(1):E60–E83. doi:10.1208/aapsj0901007

Gibiansky L, Gibiansky E, Bauer R (2012) Comparison of Nonmem 7.2 estimation methods and parallel processing efficiency on a target-mediated drug disposition model. J Pharmacokinet Pharmacodyn 39(1):17–35. doi:10.1007/s10928-011-9228-y

Wang Y (2007) Derivation of various NONMEM estimation methods. J Pharmacokinet Pharmacodyn 34(5):575–593. doi:10.1007/s10928-007-9060-6

Gibiansky L, Gibiansky E (2014) Target-mediated drug disposition model and its approximations for antibody-drug conjugates. J Pharmacokinet Pharmacodyn 41(1):35–47. doi:10.1007/s10928-013-9344-y

Cao Y, Balthasar JP, Jusko WJ (2013) Second-generation minimal physiologically-based pharmacokinetic model for monoclonal antibodies. J Pharmacokinet Pharmacodyn 40(5):597–607. doi:10.1007/s10928-013-9332-2

Dempster AP, Laird NM, Rubin DB (1977) Maximum likelihood from incomplete data via the EM algorithm. J R Stat Soc Ser B (Methodol) 39(1):1–38

Pawaskar DK, Straubinger RM, Fetterly GJ, Hylander BH, Repasky EA, Ma WW, Jusko WJ (2013) Physiologically based pharmacokinetic models for everolimus and sorafenib in mice. Cancer Chemother Pharmacol 71(5):1219–1229. doi:10.1007/s00280-013-2116-y

Lemaitre F, Bezian E, Goldwirt L, Fernandez C, Farinotti R, Varnous S, Urien S, Antignac M (2012) Population pharmacokinetics of everolimus in cardiac recipients: comedications, ABCB1, and CYP3A5 polymorphisms. Ther Drug Monit 34(6):686–694. doi:10.1097/FTD.0b013e318273c899

Jones HM, Chen Y, Gibson C, Heimbach T, Parrott N, Peters SA, Snoeys J, Upreti VV, Zheng M, Hall SD (2015) Physiologically based pharmacokinetic modeling in drug discovery and development: a pharmaceutical industry perspective. Clin Pharmacol Ther 97(3):247–262. doi:10.1002/cpt.37

Brown RP, Delp MD, Lindstedt SL, Rhomberg LR, Beliles RP (1997) Physiological parameter values for physiologically based pharmacokinetic models. Toxicol Ind Health 13(4):407–484

Davies B, Morris T (1993) Physiological parameters in laboratory animals and humans. Pharm Res 10(7):1093–1095

Johansson AM, Ueckert S, Plan EL, Hooker AC, Karlsson MO (2014) Evaluation of bias, precision, robustness and runtime for estimation methods in NONMEM 7. J Pharmacokinet Pharmacodyn 41(3):223–238. doi:10.1007/s10928-014-9359-z

Plan EL, Maloney A, Mentre F, Karlsson MO, Bertrand J (2012) Performance comparison of various maximum likelihood nonlinear mixed-effects estimation methods for dose-response models. AAPS J 14(3):420–432. doi:10.1208/s12248-012-9349-2

Ekstrom PO, Andersen A, Warren DJ, Giercksky KE, Slordal L (1996) Determination of extracellular methotrexate tissue levels by microdialysis in a rat model. Cancer Chemother Pharmacol 37(5):394–400. doi:10.1007/s002800050403

Ekstrom PO, Andersen A, Warren DJ, Giercksky KE, Slordal L (1995) Pharmacokinetics of different doses of methotrexate at steady state by in situ microdialysis in a rat model. Cancer Chemother Pharmacol 36(4):283–289

Azeredo FJ, Dalla Costa T, Derendorf H (2014) Role of microdialysis in pharmacokinetics and pharmacodynamics: current status and future directions. Clin Pharmacokinet 53(3):205–212. doi:10.1007/s40262-014-0131-8

de la Pena A, Liu P, Derendorf H (2000) Microdialysis in peripheral tissues. Adv Drug Deliv Rev 45(2–3):189–216

Eslam RB, Burian A, Vila G, Sauermann R, Hammer A, Frenzel D, Minichmayr IK, Kloft C, Matzneller P, Oesterreicher Z, Zeitlinger M (2014) Target site pharmacokinetics of linezolid after single and multiple doses in diabetic patients with soft tissue infection. J Clin Pharmacol 54(9):1058–1062. doi:10.1002/jcph.296

Rigby JH, Draper DO, Johnson AW, Myrer JW, Eggett DL, Mack GW (2015) The time course of dexamethasone delivery using iontophoresis through human skin, measured via microdialysis. J Orthop Sports Phys Ther 45(3):190–197. doi:10.2519/jospt.2015.5308

Srirangam R, Hippalgaonkar K, Majumdar S (2012) Intravitreal kinetics of hesperidin, hesperetin, and hesperidin G: effect of dose and physicochemical properties. J Pharm Sci 101(4):1631–1638. doi:10.1002/jps.23047

Cremers TI, Flik G, Folgering JH, Rollema H, Stratford RE Jr (2016) Development of a rat plasma and brain extracellular fluid pharmacokinetic model for bupropion and hydroxybupropion based on microdialysis sampling, and application to predict human brain concentrations. Drug Metab Dispos 44(5):624–633. doi:10.1124/dmd.115.068932

Kuzmin AI, Tskitishvili OV, Serebryakova LI, Kapelko VI, Majorova IV, Medvedev OS (1995) Allopurinol: kinetics, inhibition of xanthine oxidase activity, and protective effect in ischemic-reperfused canine heart as studied by cardiac microdialysis. J Cardiovasc Pharmacol 25(4):564–571

Eisenberg EJ, Conzentino P, Eickhoff WM, Cundy KC (1993) Pharmacokinetic measurement of drugs in lung epithelial lining fluid by microdialysis: aminoglycoside antibiotics in rat bronchi. J Pharmacol Toxicol Methods 29(2):93–98

Nguyen TT, Bazzoli C, Mentre F (2012) Design evaluation and optimisation in crossover pharmacokinetic studies analysed by nonlinear mixed effects models. Stat Med 31(11–12):1043–1058. doi:10.1002/sim.4390

Cite this article

Liu, X., Wang, Y. Comparing the performance of FOCE and different expectation-maximization methods in handling complex population physiologically-based pharmacokinetic models. J Pharmacokinet Pharmacodyn 43, 359–370 (2016). https://doi.org/10.1007/s10928-016-9476-y

DOI: https://doi.org/10.1007/s10928-016-9476-y