Abstract

Listening with a stethoscope is a primary method that physicians use to distinguish normal from abnormal cardiac function. By listening through the stethoscope to the sounds that the cardiac valves produce as blood flows through the heart, physicians examine whether the heart shows any abnormality. However, this method has a number of limitations, because the interpretation of heart sounds depends on the hearing ability, experience, and skill of the physician. Such limitations may be reduced by developing biomedical decision support systems. In this study, a biomedical decision support system was developed for classifying heart sound signals recorded with a stethoscope from 120 subjects who were normal or had pulmonary stenosis or mitral stenosis heart valve disease. The system comprises three stages: feature extraction, dimension reduction, and classification. In the feature extraction stage, features representing the heart sounds in the frequency domain were obtained with the Discrete Fourier Transform (DFT) and the Burg autoregressive (AR) spectrum analysis method. The dimension of the feature set was then reduced with Principal Component Analysis (PCA). The heart sounds were classified by applying the reduced features as input to an Artificial Neural Network (ANN). The classification results show that dimension reduction with PCA has a positive effect on the classification of heart sounds.


Introduction

The heart is one of the organs vital to human life. Since 1985, deaths from heart disease have ranked second worldwide, right after those from brain infarction [1]. It is therefore critical to diagnose any heart-related disease.

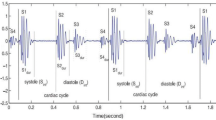

The relationship between the volume, pressure, and flow of blood in the heart determines the opening and closing of the cardiac valves. Normal heart sounds originate from the closing of the valves. In addition, sounds produced by the flow of blood inside the heart and vessels are components of the heart sounds [2]. In general, heart sounds and murmurs arise from the movements of the myocardial walls, the opening and closing of the valves, and the flow of blood into and out of the chambers [3]. The sound emitted by a human heart during a single cardiac cycle consists of two dominant events, known as the first heart sound (S1) and the second heart sound (S2). S1 arises from the closing of the mitral and tricuspid valves, whereas S2 arises from the closing of the aortic and pulmonary valves [4].

For the analysis of heart sounds, and for naming them in the literature, the heart is divided into four regions: mitral, tricuspid, pulmonary, and aortic. These regions do not correspond to the anatomical locations of the heart valves but to the direction of blood flow through them. By comparing the sounds from each region with those from the other regions, the affected region and the cause of the problem can be identified [5]. In our study, heart sounds obtained from the mitral and pulmonary regions were used to diagnose mitral stenosis and pulmonary stenosis.

Mitral stenosis is a narrowing of the opening of the mitral valve, which directs blood from the left atrium to the left ventricle and prevents its return [5]. Many congenital or acquired conditions can impair the filling of the left ventricle and cause mitral stenosis. Rheumatic valve disease is the primary cause of mitral stenosis in adults [6].

Pulmonary stenosis, on the other hand, occurs when the pulmonary valve (located between the right ventricle and the pulmonary artery) hampers the flow of blood into the lungs [5]. Pulmonary stenosis is the second most common congenital heart disease among adults. Although most cases are mild and require no treatment, it frequently accompanies other congenital heart diseases. If pulmonary stenosis is left untreated, right ventricular failure may develop [6].

Abnormalities in the structure of the heart are mostly reflected in the heart sounds [7]. To identify such abnormalities, physicians therefore listen to the mitral, tricuspid, pulmonary, and aortic regions in turn. Today, listening with a stethoscope is the method physicians most commonly use to diagnose cardiac diseases [8]. By listening to the sounds that the cardiac valves produce as blood flows through the heart, physicians examine whether the heart shows any abnormality. However, the method has numerous limitations. It depends on the physician's ability to interpret different heart sounds, and on his or her hearing ability, experience, and skill [9]. The required experience and skill are acquired through examinations conducted over many years. Newly graduated and intern physicians in particular may lack this experience and skill, and unsuitable environmental conditions or poor patient cooperation may also lead to inadequate diagnoses [10]. Decision support systems built on signal processing, pattern recognition, and classification methods can help physicians interpret heart sounds and, consequently, diagnose heart diseases despite such deficiencies. Such systems are also accurate, easy to use, and cost-efficient. If heart sounds can be identified or diagnosed by computer software, the problems mentioned above would largely be solved.

Many studies in the literature address the diagnosis of heart diseases from heart sounds. Sharif et al. achieved a classification success rate of 70% for normal heart sound, mitral stenosis, and mitral regurgitation by estimating frequencies from heart sound signals [11]. Segaier et al. developed an algorithm for detecting the first (S1) and second (S2) heart sounds, using the DFT to analyze heart signals from pediatric patients [12]. Folland et al. applied the Fast Fourier Transform and the Levinson-Durbin algorithm to heart sounds in order to analyze abnormalities. They classified the resulting features with Multi-Layer Perceptron (MLP) and Radial Basis Function (RBF) neural network classifiers, obtaining classification success rates of 84% and 88%, respectively [13]. Bhatikar et al. aimed to develop a reliable screening device for diagnosing heart murmurs in pediatric patients. They used the Fast Fourier Transform to extract the energy spectrum in the frequency domain and classified the murmurs by applying the features as input to an ANN. With this classifier they achieved 83% sensitivity and 90% specificity in discriminating between innocent and pathological heart murmurs [14]. Reed et al. used the wavelet transform and an ANN to analyze and classify heart sounds [15]. Sinha et al. analyzed heart sounds from healthy persons and from mitral stenosis patients with the wavelet transform and an ANN [16]. Voss et al. extracted features from normal and aortic heart sound signals with the wavelet and Fourier transforms and classified them with linear discriminant function analysis [17]. Pavlopoulos et al. developed a decision tree-based method that uses heart sounds to diagnose aortic stenosis and mitral regurgitation [18].

In this study, a biomedical application was developed for classifying heart sound signals recorded with a stethoscope from 120 subjects who were normal or had pulmonary stenosis or mitral stenosis heart valve disease. The application comprises three stages: feature extraction, dimension reduction, and classification. In the feature extraction stage, the frequency spectrum of the heart sound signals was obtained with the DFT. In addition, the power spectral density of each signal was calculated with the Burg AR method so that the two feature extraction methods could be compared. Converting the heart sound signals from the time domain to the frequency domain in this way yields features that represent the heart sounds. The classification stage then applies these features as input to an ANN. However, because the number of variables is large, over-fitting must be avoided when choosing the ANN input variables [19]. The dimension of the data set can be reduced with PCA. Applying the reduced features as input to the ANN helps to avoid over-fitting, and an ANN with fewer parameters also takes less time to train. For these reasons, the features obtained with the DFT and the Burg AR method were reduced with PCA before being applied as input to the ANN. The proposed system achieved a classification success rate of 95% for both feature extraction methods. The classification results show that the proposed system is effective in classifying heart sounds.

The rest of the article is organized as follows. “Materials and methods” describes how the heart sound signals were obtained and explains the background theory of the DFT, PCA, and ANN methods. “Experimental results” presents the effectiveness of the method for classifying the heart sound signals used in the diagnosis of heart valve diseases. “Discussion and conclusion” contains the conclusion and recommendations.

Materials and methods

Figure 1 shows the procedure used in the development of the classification system. It consists of five parts: (a) measurement of heart sound signals with a stethoscope, (b) feature extraction from the heart sound signals using the DFT and the Burg AR method, (c) dimension reduction with PCA, (d) classification of the heart sound signals, and (e) classification results (normal, mitral stenosis, or pulmonary stenosis).

Raw data obtainment

This study uses heart sounds that Güraksın obtained at the cardiology clinic of the Faculty of Medicine of Afyon Kocatepe University in the course of his Master's thesis work [20]. Heart sounds are heard simply by placing the stethoscope lightly on the chest wall. A Littmann Model 4100 electronic stethoscope was used to obtain the heart sounds. This stethoscope can record six different sounds, so heart sounds from six patients can be stored within the stethoscope itself. The heart sounds obtained with the stethoscope were sampled at 8 kHz. The noise reduction technology built into the stethoscope reduced ambient noise by an average of 75% (−12 dB) without eliminating critical body sounds. Sounds recorded with the Littmann Model 4100 electronic stethoscope are stored in “e4k” format [20]. This format was converted to “wav” format with a program supplied by Littmann, and the heart sounds in wav format were then converted to numerical form with a C# application.

Discrete Fourier transform (DFT)

Most signals encountered in practice are time-domain signals, that is, the measured quantity is a function of time. To extract information from such a signal, it is often transferred to a different domain by a mathematical transform, and the information is obtained from the components that represent the signal in that domain. The Fourier transform yields the frequency spectrum of the signal, so that information hidden in the time domain is revealed in the frequency domain [5].

Unlike some theoretically defined sequences, the Fourier transform of real-world sequences cannot be computed directly. The Fourier transform is therefore not suitable for digital signals, mainly because frequency is a continuous variable and infinitely many samples are required. Moreover, although the Fourier transform reveals which frequency components a signal contains, it gives no information about when these components occur. Sounds with the same frequency that occur at different times will therefore fall in the same region of the frequency spectrum, which may cause the classifier to yield inaccurate results. In view of these difficulties, and given the importance of the Fourier transform in signal processing, a more practical transform is needed. This more practical transform, evaluated at N evenly spaced frequency points around the unit circle for N samples of the sequence x(n), is called the DFT [20].

DFT calculations are used in numerous engineering applications. The transform is invertible, so the time series can be recovered from it, and its mathematical properties closely parallel those of the Fourier integral transform. In particular, it identifies the spectrum of a time series [21]. Two important features of the DFT are that the product of two DFTs corresponds, in the time domain, to the convolution sum of the two series, and that numerous spectrum analysis methods are based on it [20].

The DFT is defined by Eq. 1:

where \( A_r \) is the r-th DFT coefficient and \( X_k \) is the k-th sample of a time series consisting of N samples. The \( X_k \) may be complex numbers, and the \( A_r \) are in general complex.
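For reference, a standard form of the DFT that is consistent with the symbols defined above is sketched below. The negative sign in the exponent and the absence of a 1/N scaling factor are assumptions about the convention; some texts place a 1/N factor on the forward transform instead of the inverse.

```latex
A_r = \sum_{k=0}^{N-1} X_k \, e^{-2\pi j\, r k / N}, \qquad r = 0, 1, \ldots, N-1
```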

Burg autoregressive (AR) method

Spectrum estimation methods are either nonparametric or parametric. Nonparametric methods estimate the power spectral density (PSD) directly from the signal. The periodogram is the simplest example, and the Welch method is a widely used refinement of it. Parametric methods, in contrast, use a model to estimate the power spectrum. The Burg and Yule–Walker methods are among the most common parametric methods.

Parametric methods estimate the spectrum in two steps. First, the model parameters are estimated from the data series x(n),\( 0 \leqslant n \leqslant N - 1 \). Second, the PSD estimate is computed from these parameter estimates. The AR model is often preferred for spectrum analysis because its parameters are easy to estimate with algorithms such as Yule–Walker, Burg, covariance, least squares, and maximum likelihood estimation. Unlike other AR estimation methods, the Burg method does not calculate the autocorrelation function but estimates the reflection coefficients directly. The main advantages of the Burg method are that it resolves closely spaced sinusoids in signals with low noise levels, that it performs well on short data records, and that its PSD estimates are close to the true values for AR signals [22]. Moreover, the Burg AR method is efficient and gives stable estimates. Because of these advantages, the Burg method was used in our study. In the Burg method, the PSD estimate is defined by Eq. 2.

where \( {\hat{a}_p}(k) \) are the estimates of the AR parameters obtained from the reflection coefficients, \( {\hat{e}_p} \) is the total least-squares error, p denotes the model order, and f denotes the frequency.
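A commonly quoted form of the Burg PSD estimate that matches these symbols is sketched below. It assumes that f is the normalized frequency in cycles per sample and that the AR polynomial has a leading coefficient of 1; other texts absorb the sampling interval into the numerator.

```latex
\hat{P}_{\mathrm{Burg}}(f) = \frac{\hat{e}_p}{\left| 1 + \sum_{k=1}^{p} \hat{a}_p(k)\, e^{-j 2\pi f k} \right|^{2}}
```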

Selecting the model order is a key step in parametric methods. The optimal model order can be determined with various techniques [23–25]. One of these is the Akaike information criterion (AIC), proposed by Akaike [25]. With the AIC, the model order is selected by minimizing Eq. 3.

where \( {\hat{\sigma }^2} \) is the estimated variance of the linear prediction error. In this study, the model order of the AR method was set to 12 using Eq. 3.
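The order selection step can be illustrated with a short sketch. It assumes one common form of the criterion, AIC(p) = ln(σ̂²) + 2p/N, where N is the number of samples; the function names and the variance values are hypothetical and serve only to show how the minimum is located.

```python
import math


def aic(error_variance, order, n_samples):
    """AIC(p) = ln(sigma^2_p) + 2p/N (one common form; assumed here)."""
    return math.log(error_variance) + 2.0 * order / n_samples


def select_order(error_variances, n_samples):
    """Return (best_order, scores); error_variances[p - 1] belongs to the order-p model."""
    scores = [aic(v, p, n_samples) for p, v in enumerate(error_variances, start=1)]
    best_index = min(range(len(scores)), key=scores.__getitem__)
    return best_index + 1, scores


# Hypothetical prediction-error variances that drop quickly and then flatten.
variances = [1.00, 0.40, 0.20, 0.19, 0.189]
best_order, scores = select_order(variances, n_samples=64)
print(best_order)
```

The penalty term 2p/N grows with the order, so once the error variance stops falling noticeably the criterion starts to rise again.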

Artificial neural network (ANN)

An ANN is a computational system inspired by the structure and the learning characteristics of neural cells. It has been applied successfully to tasks that resemble functions of the human brain, such as learning, optimization, prediction, clustering, generalization, and classification. The main reasons for the frequent use of ANNs in classification applications are as follows: (a) their simple structure, which makes them easy to use on hardware platforms; (b) their ability to map features with complex class distributions easily; (c) their ability to generalize, that is, to produce appropriate results for input vectors that are not in the training set; (d) the weights that determine the result are found through repeated training [26].

The physical structure of an ANN is important for the task it performs. Different structures arise from the way the neurons are connected and from the training rule that is applied. A group of neurons forms a layer, and an ANN generally has three layers: an input layer, which connects the network to the outside world; a hidden layer, which processes the incoming information; and an output layer, which transmits the decisions of the ANN to the outside world. Processing elements in the input layer pass the given information to the neurons in the hidden layer without changing it. The information that the network holds resides in the weights on the connections between the neurons. These weights constitute the memory of the ANN, in other words the database of the system in which the ANN is used after training [27]. The number of layers in an ANN and the number of neurons in each layer generally depend on the application. Figure 2 shows the structure of an ANN with one input layer, one hidden layer, and one output layer.

Although all ANN models can be used as classifiers, the most widely used model is the feed-forward Multi-Layer Perceptron (MLP) neural network. Various training algorithms are used to train feed-forward MLP networks, and the best results have been obtained with the back-propagation algorithm, which is based on the gradient-descent method [28]. After calculating the error at the output of the network, the back-propagation algorithm adjusts the weights of the neurons. The adjustment is propagated backwards through the layers in order to reduce the output error [28, 29]. In our study, a three-layer feed-forward MLP neural network trained with the back-propagation algorithm was used.
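The training procedure described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the network used in the study: the class name `TinyMLP`, the sigmoid activations, the hidden layer size, the learning rate, and the toy data are all assumptions, and the momentum term mentioned later in the text is omitted for brevity.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyMLP:
    """Input layer -> one hidden layer -> output layer, trained by online back-propagation."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = random.Random(seed)
        # Each row holds the weights of one neuron; the last entry is its bias.
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(n_hidden)]
        self.w2 = [[rng.uniform(-0.5, 0.5) for _ in range(n_hidden + 1)] for _ in range(n_out)]

    def forward(self, x):
        h = [sigmoid(sum(w * v for w, v in zip(row, x + [1.0]))) for row in self.w1]
        y = [sigmoid(sum(w * v for w, v in zip(row, h + [1.0]))) for row in self.w2]
        return h, y

    def train_step(self, x, target, lr=0.5):
        h, y = self.forward(x)
        # Output deltas: gradient of the squared error through the sigmoid.
        d_out = [(yk - tk) * yk * (1.0 - yk) for yk, tk in zip(y, target)]
        # Hidden deltas: output deltas propagated back through the output weights.
        d_hid = [hj * (1.0 - hj) * sum(d_out[k] * self.w2[k][j] for k in range(len(y)))
                 for j, hj in enumerate(h)]
        for k, row in enumerate(self.w2):
            for j, v in enumerate(h + [1.0]):
                row[j] -= lr * d_out[k] * v
        for j, row in enumerate(self.w1):
            for i, v in enumerate(x + [1.0]):
                row[i] -= lr * d_hid[j] * v

    def mse(self, data):
        total = 0.0
        for x, t in data:
            _, y = self.forward(x)
            total += sum((yk - tk) ** 2 for yk, tk in zip(y, t))
        return total / len(data)


# Hypothetical three-class toy data with one-hot targets.
data = [([0.0, 0.0], [1, 0, 0]), ([0.1, 0.1], [1, 0, 0]),
        ([1.0, 0.0], [0, 1, 0]), ([0.9, 0.1], [0, 1, 0]),
        ([0.0, 1.0], [0, 0, 1]), ([0.1, 0.9], [0, 0, 1])]
net = TinyMLP(n_in=2, n_hidden=4, n_out=3)
initial_error = net.mse(data)
for _ in range(500):
    for x, t in data:
        net.train_step(x, t)
final_error = net.mse(data)
```

The hidden deltas are computed before the output weights are updated, so each step uses the gradient of a single, consistent set of weights.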

Principal component analysis (PCA)

PCA is a statistical technique for extracting information from a multivariate data set. It does so by identifying principal components, which are linear combinations of the original variables. The first principal component represents the direction of maximum variability in the original data set, the second principal component represents the maximum of the remaining variability, and the process continues in this way, with each subsequent principal component representing the maximum of the variability that remains. Let m be the number of all principal components and p the number of significant principal components. Then p is the number of principal components of the m-dimensional data set with the highest variance values, and clearly p ≤ m. PCA can therefore be regarded as a data reduction technique, that is, a technique for producing a lower-dimensional version of the original data set [30].

Let X be an m-dimensional data set and \( T_1, T_2, \ldots, T_p \) its p principal axes, with \( 1 \leq p \leq m \). The principal axes are given by the leading eigenvectors of the sample covariance matrix in Eq. 4.

where \( x_i \in X \), \( \mu \) is the sample mean, and N is the number of samples, so that:

where \( \lambda_i \) is the i-th largest eigenvalue of S. The p principal components of a given observation vector \( x_i \in X \) are as follows:

In multi-class problems, the variation of the data is determined on a global basis, that is, the principal axes are derived from a global covariance matrix.

where \( \mu \) is the global mean of all samples, K is the number of classes, \( N_j \) is the number of samples in class j, \( N = \sum\nolimits_{j = 1}^K {{N_j}} \), and \( x_{ji} \) represents the i-th observation from class j. The principal axes \( T_1, T_2, \ldots, T_p \) are the p leading eigenvectors of \( \hat{S} \).

where \( {\hat{\lambda }_i} \) is the i-th largest eigenvalue of \( \hat{S} \) [31].
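The relations referred to in this subsection can be written out in a standard form that is consistent with the symbols defined above. This is a sketch under stated assumptions: the 1/N normalization (rather than 1/(N − 1)), the projection of the raw observation rather than the mean-centered one, and the equation numbers 5 to 8 are not confirmed by the text.

```latex
\begin{align}
S &= \frac{1}{N} \sum_{i=1}^{N} (x_i - \mu)(x_i - \mu)^{T} \tag{4} \\
S\,T_i &= \lambda_i T_i, \qquad i = 1, \ldots, p \tag{5} \\
y_i &= \left[\, T_1^{T} x_i,\; T_2^{T} x_i,\; \ldots,\; T_p^{T} x_i \,\right] \tag{6} \\
\hat{S} &= \frac{1}{N} \sum_{j=1}^{K} \sum_{i=1}^{N_j} (x_{ji} - \mu)(x_{ji} - \mu)^{T} \tag{7} \\
\hat{S}\,T_i &= \hat{\lambda}_i T_i, \qquad i = 1, \ldots, p \tag{8}
\end{align}
```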

Evaluation of performance

The performance of the proposed system was evaluated with the techniques described below.

Classification accuracy, sensitivity and specificity analysis

In this study, the classification accuracy for the dataset was evaluated according to Eq. 9.

where T is the test dataset, t is a data item of T (\( t \in T \)), t.c is the class of item t, and classify(t) returns the class assigned to t by the ANN.

Sensitivity and specificity analyses are important measures of the performance of diagnostic tests. Sensitivity and specificity are defined as:

\( \text{Sensitivity} = \dfrac{TP}{TP + FN} \)

\( \text{Specificity} = \dfrac{TN}{TN + FP} \)

where TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.
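The three measures in this subsection can be computed as in the sketch below. Accuracy is taken as the fraction of test items whose predicted class equals their true class, which matches the description of Eq. 9; the threshold classifier and the four test items are hypothetical stand-ins for the ANN and the test set.

```python
def accuracy(test_items, classify):
    """Fraction of items t in the test set for which classify(t) equals the class of t."""
    correct = sum(1 for features, label in test_items if classify(features) == label)
    return correct / len(test_items)


def sensitivity(tp, fn):
    """True positives divided by all actual positives."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """True negatives divided by all actual negatives."""
    return tn / (tn + fp)


# Hypothetical one-feature test set and threshold classifier.
test_items = [(0.2, "normal"), (0.9, "abnormal"), (0.7, "abnormal"), (0.4, "abnormal")]


def classify(value):
    return "abnormal" if value > 0.5 else "normal"


acc = accuracy(test_items, classify)   # 3 of the 4 items are classified correctly
sens = sensitivity(tp=2, fn=1)         # two abnormal items found, one missed
spec = specificity(tn=1, fp=0)         # the single normal item is recognized
```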

Confusion matrix

A confusion matrix is a basic tool for displaying the classification results of a classifier. It is formed by labeling the rows with the requested classification and the columns with the actual classifier outputs. For each exemplar, the cell defined by its requested classification and its actual classifier output is increased by 1. Since the actual classifier outputs should be identical to the requested classification, the optimum is attained when all exemplars lie on the diagonal of the matrix [32].
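The counting rule described above can be sketched as follows. The function name and the five example labels are hypothetical; rows correspond to the requested classification and columns to the classifier output, as in the text.

```python
def confusion_matrix(requested, predicted, classes):
    """Rows: requested (true) class; columns: actual classifier output."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for r, p in zip(requested, predicted):
        matrix[index[r]][index[p]] += 1
    return matrix


classes = ["normal", "mitral stenosis", "pulmonary stenosis"]
requested = ["normal", "normal", "mitral stenosis",
             "pulmonary stenosis", "pulmonary stenosis"]
predicted = ["normal", "pulmonary stenosis", "mitral stenosis",
             "pulmonary stenosis", "mitral stenosis"]
matrix = confusion_matrix(requested, predicted, classes)
for name, row in zip(classes, matrix):
    print(name, row)
```

Off-diagonal entries show directly which class was mistaken for which, something a single accuracy figure cannot convey.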

Experimental results

In this study, a PCA-ANN-based biomedical system was developed for diagnosing heart valve disease from heart sounds obtained from a total of 120 subjects. Of these heart sounds, 40 are normal, 40 are from subjects with mitral stenosis, and the remaining 40 are from subjects with pulmonary stenosis. The group consists of 55 males, and 65 males within an age range of 4 to 65. The study comprises three stages: feature extraction, dimension reduction, and classification. In the feature extraction stage, features representing the heart sound signals were obtained with the DFT and the Burg AR spectrum analysis method. The dimension of the feature set was then reduced with PCA. The reduced features were applied as inputs to the ANN, which assigned the heart sounds to three classes: normal, mitral stenosis, and pulmonary stenosis. The feature extraction, dimension reduction, and classification stages were implemented with the Matlab software package.

Feature extraction via DFT

In practice, calculations are carried out on a computer, and the signals under study are sampled and converted to digital form. The mathematical transforms applied to them must therefore be applicable to signals made up of discrete elements. The DFT was developed for this purpose: it computes the Fourier transform from a finite number of samples of a signal.

In the feature extraction stage, the frequency spectrum of the heart sound signals was obtained with the DFT. Converting the heart sound signals from the time domain to the frequency domain in this way yields features that represent the heart sounds. The 0–300 Hz band of the frequency spectrum obtained from the DFT was chosen as the feature set, as in [4].
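A minimal sketch of this feature extraction step is given below. It evaluates the DFT directly from its definition and keeps the magnitudes of the bins below a cut-off frequency. The function name, the use of magnitudes as features, and the segment length are assumptions; with a one-second segment sampled at 8 kHz, the bins below 300 Hz would number 300, which agrees with the feature count reported later. The demonstration uses a shorter synthetic tone so that it runs quickly, and a real implementation would normally use an FFT routine.

```python
import cmath
import math


def dft_band_magnitudes(signal, fs, f_max):
    """Magnitudes |A_r| of all DFT bins whose frequency r * fs / N lies below f_max (Hz)."""
    n = len(signal)
    n_bins = int(math.ceil(f_max * n / fs))
    features = []
    for r in range(n_bins):
        a_r = sum(x * cmath.exp(-2j * math.pi * r * k / n) for k, x in enumerate(signal))
        features.append(abs(a_r))
    return features


# Synthetic test tone: 10 Hz sine, sampled at 800 Hz for one second (hypothetical values).
fs = 800
tone = [math.sin(2 * math.pi * 10 * k / fs) for k in range(fs)]
features = dft_band_magnitudes(tone, fs, f_max=30)
peak_bin = features.index(max(features))
```

Because the segment lasts one second, the bin index equals the frequency in hertz, so the peak appears in bin 10.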

Figure 3(a) and (b) show, respectively, the waveform of a heart sound obtained from a normal subject and its DFT.

Figure 4(a) and (b) show, respectively, the waveform and the DFT of a heart sound obtained from a subject with mitral stenosis. Similarly, Fig. 5(a) and (b) show the waveform and the DFT of a heart sound obtained from a subject with pulmonary stenosis. The plots in Figs. 3, 4, and 5 are drawn over one cardiac cycle.

As Figs. 3, 4, and 5 show, the heart sound of a normal subject differs clearly from the heart sounds of patients with mitral or pulmonary stenosis. These differences in the waveforms are also largely reflected in the DFT plots. The heart sound waveform varies with the particular disease, and so does its DFT. A classification system that takes these differences in the DFT into account can therefore decide between the respective diseases.

Feature extraction via Burg AR method

In our study, before the Burg AR spectrum analysis method was applied, the sampled heart sound signals were grouped into frames containing a fixed number of samples. The most common frame lengths are 64, 128, and 256. The optimal frame length depends on the duration of the signal and on the sampling frequency. In this study, a frame length of 64 was used because the number of samples was small [33]. The power spectral density of each frame was then calculated with the Burg AR method. In this way, the number of values for each subject was reduced to 33. The 33 features obtained by calculating the PSDs of the heart sound signals were used as ANN input parameters.
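The sketch below shows one way to compute a Burg AR spectrum for a single 64-sample frame with model order 12. It is an illustration under assumptions, not the Matlab routine used in the study: the function names are hypothetical, the frame is a synthetic noisy tone, and the PSD is evaluated at 64/2 + 1 = 33 frequencies, which is one plausible reading of the 33 features per subject. How the frames of one recording are combined is not specified in the text and is not modeled here.

```python
import cmath
import math
import random


def burg_ar(x, order):
    """Return (a, e): AR coefficients a[0..order] with a[0] = 1, and the final error power."""
    a = [1.0]
    e = sum(v * v for v in x) / len(x)
    ef = list(x[1:])     # forward prediction errors
    eb = list(x[:-1])    # backward prediction errors, delayed by one sample
    for m in range(1, order + 1):
        num = -2.0 * sum(f * b for f, b in zip(ef, eb))
        den = sum(f * f for f in ef) + sum(b * b for b in eb)
        k = num / den    # reflection coefficient of stage m, estimated without autocorrelation
        a_ext = a + [0.0]
        a = [a_ext[i] + k * a_ext[m - i] for i in range(m + 1)]
        e *= 1.0 - k * k
        ef_new = [f + k * b for f, b in zip(ef, eb)]
        eb_new = [b + k * f for f, b in zip(ef, eb)]
        ef, eb = ef_new[1:], eb_new[:-1]
    return a, e


def ar_psd(a, e, nfft):
    """PSD at nfft // 2 + 1 frequencies between 0 and half the sampling rate."""
    psd = []
    for i in range(nfft // 2 + 1):
        w = 2.0 * math.pi * i / nfft
        denom = sum(c * cmath.exp(-1j * w * k) for k, c in enumerate(a))
        psd.append(e / abs(denom) ** 2)
    return psd


# Synthetic frame: a tone at 1/8 of the sampling rate plus weak noise (hypothetical data).
rng = random.Random(0)
frame = [math.cos(2 * math.pi * 0.125 * t) + 0.05 * rng.gauss(0.0, 1.0) for t in range(64)]
coeffs, err = burg_ar(frame, order=12)
psd = ar_psd(coeffs, err, nfft=64)
```

The reflection coefficients are bounded by 1 in magnitude by construction, which is why the Burg method yields a stable model.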

Figure 6(a), (b), and (c) present sample PSDs of the heart sound signals for the normal and the abnormal subjects, respectively. According to Fig. 6, the heart sound PSD values of the normal subject and of the abnormal subjects, who suffer from mitral stenosis and pulmonary stenosis, show different characteristics.

Dimension reduction via PCA

The 0–300 Hz band of the frequency spectrum obtained from the DFT was chosen as the feature set, as in [4]. In addition, the power spectral density of each signal was calculated with the Burg AR method so that the two feature extraction methods could be compared, which yielded 33 features. However, because the number of input variables is large, over-fitting must be avoided when choosing the ANN input variables [19]. Moreover, an excess of redundant, irrelevant, and noisy input variables may hide the meaningful variables in the data set, and too many input parameters may make it harder to determine the optimum ANN model [34]. PCA, a linear dimension reduction technique, transforms the data set from its original m-dimensional space to a new p-dimensional space. A lower-dimensional data set helps to avoid over-fitting, and an ANN with fewer parameters takes less time to train. For these reasons, reducing the input variables of the data set with PCA can improve the classification performance of the ANN [30].

The number of principal components obtained from PCA equals the number of variables. One of the basic advantages of PCA is that it can represent m variables by p variables, with p ≤ m. However, the p principal components chosen from among all principal components should be those that represent the data best. There are several criteria for determining the optimal number of principal components, including the broken-stick model, Velicer's partial correlation procedure, cross-validation, Bartlett's test for equality of eigenvalues, Kaiser's criterion, Cattell's scree test, and the cumulative percentage of variance [35]. In our study, the cumulative percentage of variance criterion was used because of its simplicity and adequate performance [36]. According to this criterion, the leading principal components are retained until their cumulative percentage of variance exceeds a prescribed threshold. A sensible threshold is very often in the range 70% to 90%, although it can be higher or lower depending on the practical details of a particular data set, and the best value generally becomes smaller as the number of variables increases. Some authors point out, however, that there is no ideal solution to the problem of dimensionality in PCA [37]. The threshold is therefore often chosen heuristically [38]. In this study, the threshold was set to 75%.
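The cumulative percentage of variance criterion can be sketched as below. The function name and the eigenvalues are hypothetical, and the comparison uses "at least the threshold" where the text speaks of exceeding it; the two differ only when the cumulative share lands exactly on the threshold.

```python
def components_for_threshold(eigenvalues, threshold):
    """Smallest p whose leading eigenvalues explain at least `threshold` of the total variance."""
    ordered = sorted(eigenvalues, reverse=True)
    total = sum(ordered)
    running = 0.0
    for p, value in enumerate(ordered, start=1):
        running += value
        if running / total >= threshold:
            return p
    return len(ordered)


# Hypothetical eigenvalues of a covariance matrix (they sum to 10).
eigenvalues = [5.0, 2.0, 1.0, 1.0, 0.5, 0.5]
p = components_for_threshold(eigenvalues, threshold=0.75)
print(p)
```

Here the cumulative shares are 50%, 70%, and 80%, so three components are needed to pass a 75% threshold.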

PCA of the 300 features obtained from the DFT produced 300 principal components. As Table 1 shows, the cumulative percentage of variance of the first 8 of these 300 principal components exceeds the threshold of 75%. This means that the data are highly correlated and can be represented by eight principal components. The remaining 292 principal components account for less than 25% of the variation and were not considered important. In this way, the 300 features obtained from the DFT were reduced to eight features.

Similarly, PCA of the 33 features obtained from the Burg AR method produced 33 principal components. As Table 2 shows, the cumulative percentage of variance of the first 6 of these 33 principal components exceeds the threshold of 75%. This means that the data are highly correlated and can be represented by six principal components. In this way, the 33 features obtained from the Burg AR method were reduced to six features.

Classification using artificial neural network

After dimension reduction with PCA, the heart sounds were classified. The MLP ANN structure described above was used for this purpose. Normal heart sounds and the mitral stenosis and pulmonary stenosis diseases were considered in the ANN classification.

Table 3 shows the distribution of the training and test sets used as input to the ANN. The distribution follows that in [4] so that the proposed method can be compared directly with [4].

The feature vectors of the training set, obtained with the DFT-PCA and Burg AR-PCA methods and distributed according to Table 3, were applied as inputs to the ANN classifier. Table 4 lists the training parameters and the structure of the ANN. The values in Table 4 were determined by varying parameters such as the number of hidden layers, the learning rate, the momentum constant, and the type of activation function in order to obtain the best classification performance. In addition, the classification process was repeated 30 times with random initial weight values.

Figure 7 shows the training performance of DFT-PCA-ANN. Over the 30 repetitions, the mean-squared error fell below \( 10^{-5} \) in an average of 35 steps. At the end of training, the DFT-PCA-ANN classifier classified the training set 100% correctly.

Fig. 8 shows the training performance of Burg AR-PCA-ANN. Over the 30 replications, the mean-squared error fell below 10⁻⁵ in an average of 28 steps. At the end of training, the Burg AR-PCA-ANN classifier classified the training set 100% correctly.
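The replication procedure (train the same network 30 times from different random initial weights and average the number of steps needed to reach the error goal) might look roughly like the sketch below. Everything specific here is an assumption for illustration: the training algorithm (batch gradient descent with momentum), the network size, the hyperparameter values, the looser error goal of 10⁻², and the synthetic six-feature data do not come from Table 4 or from the study's recordings.

```python
import numpy as np

def train_mlp(X, T, seed, hidden=5, lr=0.5, momentum=0.9, goal=1e-2, max_epochs=2000):
    """Train a one-hidden-layer MLP by batch gradient descent with momentum.

    Returns (epochs_used, last_mse). Training stops as soon as the
    mean-squared error drops below `goal` or `max_epochs` is reached.
    """
    rng = np.random.default_rng(seed)            # the seed fixes the random initial weights
    W1 = rng.normal(scale=0.5, size=(X.shape[1], hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(scale=0.5, size=(hidden, T.shape[1]))
    b2 = np.zeros(T.shape[1])
    params = [W1, b1, W2, b2]
    velocity = [np.zeros_like(p) for p in params]
    mse = np.inf
    for epoch in range(1, max_epochs + 1):
        H = np.tanh(X @ W1 + b1)                     # hidden layer (tanh, assumed)
        Y = 1.0 / (1.0 + np.exp(-(H @ W2 + b2)))     # sigmoid outputs (assumed)
        err = Y - T
        mse = float(np.mean(err ** 2))
        if mse < goal:
            return epoch, mse
        dZ2 = 2.0 * err * Y * (1.0 - Y) / err.size   # MSE gradient at output pre-activations
        dZ1 = (dZ2 @ W2.T) * (1.0 - H ** 2)          # back-propagated to the hidden layer
        grads = [X.T @ dZ1, dZ1.sum(axis=0), H.T @ dZ2, dZ2.sum(axis=0)]
        for p, v, g in zip(params, velocity, grads):
            v *= momentum
            v -= lr * g
            p += v                                   # in-place weight update
    return max_epochs, mse

# Hypothetical data: 3 classes x 20 samples, 6 features (mirroring six PCA components).
rng = np.random.default_rng(42)
labels = np.repeat(np.arange(3), 20)
X = 2.0 * np.eye(3, 6)[labels] + 0.3 * rng.normal(size=(60, 6))
T = np.eye(3)[labels]                                # one-hot targets

runs = [train_mlp(X, T, seed) for seed in range(30)] # 30 replications
epochs = [e for e, _ in runs]
print("mean epochs over 30 runs:", np.mean(epochs))
```

Averaging over replications in this way reduces the dependence of the reported training behaviour on any single random initialization.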

Following the training stage, the feature vectors of the 60 test samples were applied as inputs to the trained DFT-PCA-ANN and Burg AR-PCA-ANN classifiers. The classification results show that the two classifiers, which differ only in their feature extraction method, perform identically. The confusion matrix of the classification results is given in Table 5.

According to the confusion matrix, one normal subject was incorrectly classified as having pulmonary stenosis, one subject with mitral stenosis was incorrectly classified as normal, and one subject with pulmonary stenosis was incorrectly classified as having mitral stenosis.

In addition, the classification success of the DFT-PCA-ANN and Burg AR-PCA-ANN based methods was compared with that of the DFT-ANN based method [4] by means of the statistical parameters sensitivity and specificity. In these studies, the training and test data sets, as well as the DFT feature extraction stage, are exactly the same. The comparison results are given in Table 6.
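As an illustration of how sensitivity and specificity can be derived from a three-class confusion matrix, the sketch below uses a one-vs-rest definition per class. The three off-diagonal errors are the ones described in the text; the diagonal counts assume 20 test subjects per class, which is a hypothetical split (the actual distribution is given in Table 3), and the one-vs-rest definition is likewise an assumption that may differ from the one behind Table 6.

```python
import numpy as np

def sensitivity_specificity(confusion):
    """Per-class (sensitivity, specificity), treating each class one-vs-rest.
    Rows are true classes, columns are predicted classes."""
    confusion = np.asarray(confusion, dtype=float)
    total = confusion.sum()
    results = []
    for k in range(confusion.shape[0]):
        tp = confusion[k, k]
        fn = confusion[k, :].sum() - tp      # class-k subjects assigned elsewhere
        fp = confusion[:, k].sum() - tp      # other subjects assigned to class k
        tn = total - tp - fn - fp
        results.append((tp / (tp + fn), tn / (tn + fp)))
    return results

# Class order: normal, mitral stenosis, pulmonary stenosis.
# Off-diagonal errors follow the text; diagonal values assume a
# HYPOTHETICAL 20 test subjects per class.
confusion = [[19, 0, 1],    # one normal -> pulmonary stenosis
             [1, 19, 0],    # one mitral stenosis -> normal
             [0, 1, 19]]    # one pulmonary stenosis -> mitral stenosis

accuracy = np.trace(np.asarray(confusion)) / np.sum(confusion)
per_class = sensitivity_specificity(confusion)
print("accuracy:", accuracy)
for name, (sens, spec) in zip(["normal", "mitral", "pulmonary"], per_class):
    print(name, "sensitivity:", sens, "specificity:", spec)
```

Whatever the true per-class split, three errors among 60 test samples give an overall accuracy of 57/60 = 95%.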

According to Table 6, the proposed DFT-PCA-ANN and Burg AR-PCA-ANN based classifiers perform identically. However, both proposed methods achieve much better classification results than the DFT-ANN based method applied in [4]. These results show that dimension reduction via PCA has a positive effect on the classification of heart sound signals.

ROC curves were plotted in order to compare the performance of the classifiers in terms of sensitivity and specificity. A ROC curve provides a view of the whole spectrum of sensitivities and specificities, because the sensitivity/specificity pairs for a test set are plotted [39]. A classifier performs well when sensitivity rises rapidly while 1 − specificity hardly increases until sensitivity becomes high. The ROC curve in Fig. 9 shows the classification performance of the DFT-PCA-ANN/Burg AR-PCA-ANN method on the test data set. In addition to the ROC curves, the areas under the ROC curves were calculated. They were found to be 0.925 for the DFT-ANN method and 0.950 for the DFT-PCA-ANN/Burg AR-PCA-ANN methods. According to these results, the proposed methods classify better than the DFT-ANN classification method in [4].
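For readers unfamiliar with how a ROC curve and its area are obtained, the following sketch sweeps a decision threshold over classifier scores and integrates the resulting curve with the trapezoidal rule. The function names and the ten labelled scores are hypothetical and chosen only to make the arithmetic easy to follow; they are not outputs of the classifiers in this study.

```python
import numpy as np

def roc_curve_points(labels, scores):
    """Return (false-positive rate, sensitivity) pairs for every threshold.
    labels: 1 = diseased, 0 = healthy; scores: higher means more likely diseased."""
    labels = np.asarray(labels)
    scores = np.asarray(scores, dtype=float)
    # Start above every score so the curve begins at (0, 0).
    thresholds = np.r_[np.inf, np.unique(scores)[::-1]]
    n_pos = (labels == 1).sum()
    n_neg = (labels == 0).sum()
    tpr = np.array([((scores >= t) & (labels == 1)).sum() / n_pos for t in thresholds])
    fpr = np.array([((scores >= t) & (labels == 0)).sum() / n_neg for t in thresholds])
    return fpr, tpr

def auc_trapezoid(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))

# Hypothetical scores: one healthy subject (0.55) outranks one diseased subject (0.5).
labels = [1, 1, 1, 1, 0, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.5, 0.4, 0.3, 0.2, 0.1]

fpr, tpr = roc_curve_points(labels, scores)
auc = auc_trapezoid(fpr, tpr)
print("AUC:", auc)   # 24 of 25 diseased/healthy pairs are ranked correctly -> 0.96
```

Here 1 − specificity is the false-positive rate on the horizontal axis, so an area close to 1 corresponds to a curve that reaches high sensitivity before the false-positive rate starts to grow.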

Discussion and conclusion

In this study, a biomedical-based system was developed for the classification of heart sound signals obtained via stethoscope from 120 subjects who were normal or had pulmonary or mitral stenosis heart valve disease. The developed system comprises three stages: feature extraction, dimension reduction, and classification. At the feature extraction stage, features representing the heart sound signals are obtained with the Fourier transform. In addition to the DFT, the power spectral density of each signal was calculated with the Burg AR method so that the two feature extraction methods could be compared. The obtained features were reduced to a lower dimension via PCA, used as the dimension reduction technique. This lessens the processing load of the ANN and is intended to avoid over-fitting. The heart sounds were classified by applying the reduced features as input to the ANN. The classification results show that the proposed DFT-PCA-ANN and Burg AR-PCA-ANN based classifiers perform identically, and that both achieve much better results than the DFT-ANN based method. With the proposed methods, heart sounds from three classes, namely normal, mitral stenosis, and pulmonary stenosis, were classified with an accuracy of 95%. In conclusion, the classification results show that dimension reduction via PCA has a positive effect on the classification of heart sounds. Above all, the development of decision-support systems of this kind will assist physicians who lack experience and skill in interpreting heart sounds by simplifying the diagnostic process.

References

Jiang, Z., and Choi, S., A cardiac sound characteristic waveform method for in-home heart disorder monitoring with electric stethoscope. Expert Syst Appl 31(2):286–298, 2006.

Ahlström, C., Processing of the Phonocardiographic Signal-Methods for the intelligent stethoscope, Ms Thesis, Linköping University, Institute of Technology, Linköping, Sweden, 2006.

Kara, S., Classification of mitral stenosis from Doppler signals using short time Fourier transform and artificial neural networks. Expert Syst Appl 33:468–475, 2007.

Güraksın, G. E., and Ergün, U., Classification of the Heart Sounds via Artificial Neural Network, International Symposium on Innovations in Intelligent Systems and Applications 507–511, Trabzon Turkey 2009.

Say, Ö., Analysis of heart sounds and classification of by using artificial neural networks, Ms Thesis, Institute of Natural and Applied Science, İstanbul Technical University, İstanbul, Turkey, 2002.

Crawford, M. H., Current diagnosis & treatment in cardiology, chapter 23 of congenital heart disease in adults, 2. McGraw-Hill, USA, 2002. 403 p.

Leung, T. S., White, P. R., Collis, W. B., Brown, E., and Salmon, A. P., Classification of heart sounds using time-frequency method and artificial neural networks. Proceedings of the 22nd Annual International Conference of the IEEE Engineering in Medicine and Biology Society 2:988–991, 2006.

Sinha, R. K., Artificial neural network detects changes in electro-encephalogram power spectrum of different sleep-wake states in an animal model of heat stress. Med Biol Eng Comput 41:595–600, 2003.

Kandaswamy, A., Kumar, C., Ramanathan, R., Jayaraman, S., and Malmurugan, N., Neural classification of lung sounds using wavelet coefficients. Comput Biol Med 34(6):523–537, 2004.

O’Rourke, R. A., Cardiovascular disease: foreword. Curr Probl Cardiol 25(11):786–825, 2000.

Sharif, Z., Zainal, M. S., Sha’ameri, A. Z., and Salleh, S. H. S., Analysis and classification of heart sounds and murmurs based on the instantaneous energy and frequency estimations. Tencon 41:130–134, 2000.

El-Segaier, M., Lilja, O., Lukkarinen, S., Sörnmo, L., Sepponen, R., and Pesonen, E., Computer-based detection and analysis of heart sound and murmur. Ann Biomed Eng 33(7):937–942, 2005.

Folland, R., Hines, E. L., Boilot, P., and Morgan, D., Classifying coronary dysfunction using neural networks through cardiovascular auscultation. Med Biol Eng Comput 40:339–343, 2002.

Bhatikar, S. R., DeGroff, C., and Mahajan, R. L., A classifier based on the artificial neural network approach for cardiologic auscultation in pediatrics. Artif Intell Med 33:251–260, 2005.

Reed, T. R., Reed, N. E., and Fritzson, P., Heart sound analysis for symptom detection and computer-aided diagnosis. Simul Model Practice Theory 12(2):129–146, 2004.

Sinha, R. K., Aggarwal, Y., and Das, B. N., Backpropagation artificial neural network classifier to detect changes in heart sound due to mitral valve regurgitation. J Med Syst 31:205–209, 2007.

Voss, A., Mix, A., and Huebner, T., Diagnosing aortic valve stenosis by parameter extraction of heart sound signals. Ann Biomed Eng 33:1167–1174, 2005.

Pavlopoulos, S., Stasis, A., and Loukis, E., A decision tree-based method for the differential diagnosis of aortic stenosis from mitral regurgitation using heart sounds. BioMed Eng OnLine (June 3) 1–5, 2004, Available at: http://www.biomedical-engineering-online.com/content/3/1/21.

Tetko, I. V., Luik, A. I., and Poda, G. I., Applications of neural networks in structure-activity relationships of a small number of molecules. J Med Chem 36(7):811–814, 1993.

Güraksın, G. E., Classification of the heart sounds via artificial neural network, Ms Thesis, Institute of Natural and Applied Science, Afyon Kocatepe University, Afyonkarahisar, Turkey, 2009.

Cochran, W. T., Cooley, J. W., Favin, D. L., Helms, H. D., Kaenel, R. A., Lang, W. W., Maling, G. C., Nelson, D. E., Rader, C. M., and Welch, P. D., What is the fast Fourier transform. Trans Audio Electroacoust 15:45–55, 1967.

Faust, O., Acharya, R. U., Allen, A. R., and Lin, C. M., Analysis of EEG signals during epileptic and alcoholic states using AR modeling techniques. IRBM 29(1):44–52, 2008.

Proakis, J. G., and Manolakis, D. G., Digital signal processing principles, algorithms, and applications. Prentice-Hall, New Jersey, 1996.

Kay, S. M., Modern spectral estimation: theory and application. Prentice-Hall, New Jersey, 1988.

Akaike, H., A new look at the statistical model identification. IEEE Trans Autom Control 19:716–723, 1974.

Ölmez, T., and Dokur, Z., Classification of heart sound using an artificial neural network. Pattern Recogn Lett 24:617–629, 2003.

Türkoğlu, İ., An Intelligent pattern recognition for nonstationary signals based on the time-frequency entropies, Phd Thesis, Institute of Natural and Applied Science, Fırat University, Elazığ, Turkey, 2002.

Haykin, S., Neural Networks. A Comprehensive Foundation. Macmillan College Publishing Company Inc., New York, 1–60 p., 417 p., 1994.

Basheer, I. A., and Hajmeer, M., Artificial neural networks: Fundamentals, computing, design and application. J Microbiol Methods 43:3–31, 2000.

Zhang, Y. X., Artificial neural networks based on principal component analysis input selection for clinical pattern recognition analysis. Talanta 73:68–75, 2007.

Wang, X., and Paliwal, K. K., Feature extraction and dimensionality reduction algorithms and their applications in vowel recognition. Pattern Recogn 36:2429–2439, 2003.

Beksaç, M. S., Başaran, F., Eskiizmirliler, S., Erkmen, A. M., and Yörükan, S., A computerized diagnostic system for the interpretation of umbilical artery blood flow velocity waveforms. Eur J Obstet Gynecol Reprod Biol 64(1):37–42, 1996.

Akın, M., and Kıymık, M. K., Application of periodogram and AR spectral analysis to EEG signals. J Med Syst 24(4):247–256, 2000.

Seasholtz, M. B., and Kowalski, B., The parsimony principle applied to multivariate calibration. Anal Chim Acta 277:165–177, 1993.

Ferré, L., Selection of components in principal component analysis: a comparison of methods. Computat Stat Data Anal 19:669–682, 1995.

Valle, S., Li, W., and Qin, S. J., Selection of the number of principal components: The variance of the reconstruction error criterion with a comparison to other methods. Ind Eng Chem Res 38:4389–4401, 1999.

Jolliffe, I. T., Principal component analysis, 2nd edition. Springer, New York, 2002.

Warne, K., Prasad, G., Rezvani, S., and Maguire, L., Statistical and computational intelligence techniques for inferential model development: a comparative evaluation and a novel proposition for fusion. Eng Appl Artif Intell 17:871–885, 2004.

Centor, R. M., Signal detectability: The use of ROC curves and their analysis. Med Decis Making 11:102–106, 1991.

Acknowledgement

This study has been supported by Scientific Research Project of Selcuk University.

About this article

Cite this article

Uğuz, H. A Biomedical System Based on Artificial Neural Network and Principal Component Analysis for Diagnosis of the Heart Valve Diseases. J Med Syst 36, 61–72 (2012). https://doi.org/10.1007/s10916-010-9446-7

DOI: https://doi.org/10.1007/s10916-010-9446-7