Abstract

An initial-boundary value problem of subdiffusion type is considered; the temporal component of the differential operator has the form \(\sum _{i=1}^{\ell }q_i(t)\, D _t ^{\alpha _i} u(x,t)\), where the \(q_i\) are continuous functions, each \(D _t ^{\alpha _i}\) is a Caputo derivative, and the \(\alpha _i\) lie in (0, 1]. Maximum/comparison principles for this problem are proved under weak hypotheses. A new positivity result for the multinomial Mittag-Leffler function is derived. A posteriori error bounds are obtained in \(L_2(\Omega )\) and \(L_\infty (\Omega )\), where the spatial domain \(\Omega \) lies in \({\mathbb {R}}^d\) with \(d\in \{1,2,3\}\). An adaptive algorithm based on this theory is tested extensively and shown to yield accurate numerical solutions on the meshes generated by the algorithm.

1 Introduction

The numerical solution of fractional differential equations (FDEs) is currently the subject of much research (see for example [11, 23]), since such equations model many physical processes but their exact solution is generally impossible. Of course this is also true for classical integer-order differential equations, where mesh-adaptive numerical methods based on a posteriori error analyses have played a significant role for many years. Methods of this type have very general usefulness since they require no knowledge of the properties of the unknown solution to the problem. But for FDEs, there has been little progress in theory-based adaptive numerical methods; their development has been impeded by the absence of a satisfactory a posteriori theory for their error analysis.

As it is often difficult to analyse the regularity and other fundamental properties of the unknown solutions to FDEs, it can be impossible to give any rigorous a priori error analysis of numerical methods for their solution. This makes it even more desirable to devise an a posteriori error analysis that does not require any information about the unknown solution.

Recently a new and very promising a posteriori error estimation methodology for FDEs appeared in [14], which considered initial-value and initial-boundary value time-fractional subdiffusion problems whose differential equations contained a single temporal derivative of fractional order. It is clearly desirable to extend this theory to time-fractional FDEs containing several fractional derivatives, as these offer more powerful modelling capabilities. Our primary aim in the current paper is to develop the a posteriori theory for this extension and to show experimentally that an adaptive algorithm based on our theory is able to compute accurate numerical solutions to problems whose solutions have singularities (as is usually the case with FDEs). It should be noted that these accurate solutions are computed on nonuniform meshes that are constructed automatically by the algorithm — the user does not have to provide any special mesh, nor input any attributes of the unknown solution.

The relationship between our paper, which studies a multiterm fractional derivative operator, and [14], where only a single fractional derivative appears, is as follows. Section 3 below points out similarities between Lemma 3.1, Theorem 3.2 and Corollary 3.3 and results from [14]. While Corollary 3.4 is analogous to the second bound in [14, Corollary 2.4], its proof is much deeper, since it involves hypergeometric functions whereas [14] needed only elementary functions. Outside Sect. 3 there are significant differences between our paper and [14]: see Theorem 2.5, Remark 2.6, Lemma 2.9 and eq. (2.7). Moreover, Lemma 2.11 would be trivial in the single-term case, and the multinomial Mittag-Leffler function of Definition 2.7 that is needed for the multiterm case is less tractable than the more familiar two-parameter Mittag-Leffler function that suffices for the single-term case; thus all of the rather technical Appendix A is new.

The paper is structured as follows. Section 1.1 describes the multiterm time-fractional initial-boundary value problem of subdiffusion type that will be studied. In Sect. 2, maximum/comparison principles and some of their consequences are derived for the associated fractional initial-value problem; existence of a solution for that problem is also discussed. A posteriori error bounds in \(L_2(\Omega )\), where the spatial domain \(\Omega \) lies in \({\mathbb {R}}^d\) with \(d\in \{1,2,3\}\), are established in Sect. 3. A variant of this theory in Sect. 4 gives a posteriori error bounds in \(L_\infty (\Omega )\). Then in Sect. 5 we perform extensive numerical experiments to demonstrate the effectiveness and reliability of the theory of Sects. 3 and 4. Finally, an Appendix proves a new positivity result for the multinomial Mittag-Leffler function, then uses it to give an alternative version of a result from Sect. 2.

1.1 The Multiterm Time-fractional Subdiffusion Problem

We shall study the multiterm time-fractional subdiffusion problem

with initial and boundary conditions

Here \(\ell \) is a positive integer, the constants \(\alpha _i\) (for \(i=1,2,\dots , \ell \)) satisfy

while each \(q_i\in C[0,T]\) with

This problem is posed in a bounded Lipschitz domain \(\Omega \subset {\mathbb {R}}^d\) (where \(d\in \{1,2,3\}\)), and involves a spatial linear second-order elliptic operator \({{\mathcal {L}}}\). Each Caputo temporal fractional derivative \(D_t^{\alpha _i}\) is defined [6] for \(0<\alpha _i<1\) and \(t>0\) by

\[ D_t^{\alpha _i} u(x,t) := \frac{1}{\Gamma (1-\alpha _i)} \int _0^t (t-s)^{-\alpha _i}\, \partial _s u(x,s)\, ds, \qquad (1.4) \]

where \(\Gamma (\cdot )\) is the Gamma function, and \(\partial _s\) denotes the partial derivative in s. From [6, Theorem 2.20 and Lemma 3.4] it follows that \(\lim _{\alpha \rightarrow 1^-}D_t^{\alpha } u(x,t)=\partial _t u(x,t)\) for each \((x,t)\in \Omega \times (0,T]\) when \(u(x,\cdot )\in C^1[0,T]\), so for \(\alpha _1=1\) we take \(D_t^{\alpha _1} u=D_t^{1} u:=\partial _t u\).
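As a quick sanity check of the limit \(\alpha \rightarrow 1^-\), one can use the standard closed form for the Caputo derivative of a monomial, \(D_t^{\alpha } t^k = \Gamma (k+1)\,t^{k-\alpha }/\Gamma (k+1-\alpha )\) for integer \(k\ge 1\) (a textbook identity, not taken from this paper). The Python sketch below, whose helper name `caputo_monomial` is hypothetical, shows the values approaching the classical derivative \(2t\) of \(t^2\) as \(\alpha \) increases to 1.

```python
import math


def caputo_monomial(k: int, alpha: float, t: float) -> float:
    """Caputo derivative of order alpha in (0, 1) of s -> s**k at t > 0, for integer k >= 1.

    Uses the closed form Gamma(k+1) / Gamma(k+1-alpha) * t**(k-alpha).
    """
    return math.gamma(k + 1) / math.gamma(k + 1 - alpha) * t ** (k - alpha)


if __name__ == "__main__":
    t = 1.5
    for alpha in (0.5, 0.9, 0.99, 0.999):
        print(f"alpha = {alpha}: D^alpha t^2 = {caputo_monomial(2, alpha, t):.6f}")
    print(f"classical derivative 2t = {2 * t:.6f}")
```

The printed values are only an illustration for one smooth function; the general statement is the one cited from [6].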

Remark 1.1

One might wonder whether the presence of lower-order fractional derivatives in the differential operator would invalidate the above presumption that \(u(x,\cdot )\in C^1[0,T]\) if \(\alpha _1=1\), but when \(\alpha _1=1\) (and \(q_1(t)>0\) for all t) and the data are continuous, in Lemma 2.11 we prove that the solution of the associated initial-value problem does lie in \(C^1[0,T]\). See also Remark 2.6, where it is shown that if \(\alpha _1=1\) then at \(t=0\) the solution is better behaved than if \(\alpha _1<1\). Furthermore, in the case of constant coefficients \(q_i\), when \(\alpha _1=1\) one can deduce that the solution of the initial-value problem lies in \(C^1[0,T]\) from the explicit solution given by Remark 2.10 and eq. (2.7), though we omit the details.

In the case where each \(q_i\) is a positive constant and \(\alpha _1<1\), existence of a solution to (1.1) follows from [15, Theorems 2.1 and 2.2]. For the general case of variable \(q_i\) satisfying (1.3), one can show uniqueness of a solution to (1.1) by imitating the argument of [17, Theorem 4].

The problem (1.1) with constant \(q_i\) was considered in [5, 15] and their references. Two-term fractional differential equations (i.e., \(\ell =2\) in (1.3)) appear in [19] modelling anomalous transport and in [22] modelling solute transport in aquifers. In [22, eq. (14)], the time-fractional PDE

is used to model solute transport in aquifers, where \(C= C(x,t)\) denotes concentration and \(\alpha \in (0,1)\). This is the particular case of our fractional PDE (1.1a) where \(\ell =2\), \(\alpha _1=1\) and \( \alpha _2=\alpha \), with \(q_1=1\) and \(q_2=\beta >0\) so (1.3) is satisfied. The “fractal immobile capacity” \(\beta \) in (1.5) may be time-dependent; for example in [22, Figure 4] the authors take \(\beta = 0.08 d^{-0.67}\) where d is time measured in days. Thus it is of interest to consider time-dependent \(q_i\) in (1.1).

Alternatively, to incorporate uncertainties in the data of the physical problem, one can use a variably distributed-order subdiffusion problem like that of [26], where the distributed-order fractional derivative is defined by \({{\tilde{D}}}_t^\rho u(x,t) = \int _0^1 \rho (\alpha ) D_t^\alpha u(x,t)\,d\alpha \) with \(\rho =\rho (\alpha )\) a probability density function. To handle this numerically one must apply a quadrature rule to \({{\tilde{D}}}_t^\rho u\), which can lead to a PDE such as (1.1a) that satisfies the hypothesis (1.3).
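To make this step concrete, suppose (purely as an illustrative assumption; no particular rule is specified here) that the quadrature rule has nodes \(\alpha _i\in (0,1]\) and nonnegative weights \(\omega _i\) for \(i=1,\dots ,\ell \). Then

\[ {{\tilde{D}}}_t^\rho u(x,t) = \int _0^1 \rho (\alpha )\, D_t^\alpha u(x,t)\,d\alpha \;\approx \; \sum _{i=1}^{\ell } \omega _i\,\rho (\alpha _i)\, D_t^{\alpha _i} u(x,t), \]

which is a multiterm operator of the type considered in this paper with coefficients \(q_i=\omega _i\,\rho (\alpha _i)\ge 0\), since \(\rho \) is a probability density function. If \(\rho \) is also allowed to depend on t, the same computation gives time-dependent coefficients \(q_i(t)\).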

It appears that (1.1) with variable \(q_i(t)\) has never been rigorously studied in the mathematics literature. This variant, however, is of some interest since it is a simple (and hence attractive) alternative to models with variable-fractional-order equations, which have received a lot of attention in recent years (see [27] and its references).

Notation. We use the standard inner product \(\langle \cdot ,\cdot \rangle \) and the norm \(\Vert \cdot \Vert \) in the space \(L_2(\Omega )\), as well as the standard spaces \(L_\infty (\Omega )\), \(H^1_0(\Omega )\), \(L_{\infty }(0,t;\,L_2(\Omega ))\), and \(W^{1,\infty }(t',t'';\,L_2(\Omega ))\) (see [7, Section 5.9.2] for the notation used for functions of x and t). The notation \(v^+:=\max \{0,\,v\}\) is used for the positive part of a generic function v. For convenience we sometimes write

2 Nonnegative Solutions of Certain Initial-Value Problems

Our a posteriori analysis will rely on the property that the solutions of certain multiterm fractional initial-value problems are nonnegative; we derive this result in this section after presenting a reformulation of the definition (1.4) of the fractional derivative \(D_t^{\alpha _i}y(\cdot , t)\) that can be applied to a more general class of functions.

For simplicity, in this section we write y(t) instead of y(x, t) since the dependence on x is irrelevant here.

2.1 Function Regularity and Reformulated Caputo Derivative

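In (1.4) one can integrate by parts to reformulate the definition of \(D_t^{\alpha _i}y(t)\) for \(\alpha _i<1\) as

\[ D_t^{\alpha _i} y(t) = \frac{1}{\Gamma (1-\alpha _i)} \left\{ t^{-\alpha _i}\left[ y(t)-y(0)\right] + \int _0^t\!\alpha _i(t-s)^{-\alpha _i-1}\, \left[ y(t)- y(s)\right] \, ds \right\} \qquad (2.1) \]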

for \(0<t \le T\). This reformulation appeared already in [14, eq. (2.4)], and in, e.g., [4, Lemma 3.1], [10, Lemma 2.10], [16, Proof of Theorem 1], and [25, Theorem 5.2]. We will show that it has the advantage that it permits the use of less smooth functions y than (1.4); this attribute is needed, for example, to prove Lemma 3.1 below.

Recall that (2.1) was obtained from (1.4) by integration by parts. From the proof of the integration by parts formula, one sees that this calculation is valid if for each \(t'\in [0,t)\) the function \(\psi (t;\cdot )\) defined by \(\psi (t;s) := (t-s)^{-\alpha _i}[y(s)-y(t)]\) is absolutely continuous on \([0,t']\) and satisfies \(\lim _{t'\rightarrow t^-}\psi (t;t')=0\), because one can integrate by parts \(\int _{0}^{t'}(t-s)^{-\alpha _i}\, y'(s)\, ds\), then take \(\lim _{t'\rightarrow t^-}\).

For example, if y lies in the standard Hölder space \(C^\beta [0,T]\) for some \(\beta >\alpha _i\), then this derivation of (2.1) from (1.4) is valid; see [4, Lemma 3.1].

As in [14], we consider now a more general class of functions for which the definition (1.4) is unsuitable but (2.1) can be used.

Let us assume that

The hypothesis that \(y\in W^{1,\infty }(\epsilon ,t)\) is equivalent to assuming that y is Lipschitz continuous on each interval \([\epsilon , t]\); see [9, p.154]. If \(\alpha _1=1\), then we strengthen (2.2) by assuming that \(y\in C[0,T]\) and \(y'\) is a left-continuous function on (0, T] that may have jump discontinuities; see Sect. 2.2.

Fix \(t\in (0,T]\). The integral \( \int _0^{t/2}\!\alpha _i(t-s)^{-\alpha _i-1}\, \left[ y(t)- y(s)\right] \, ds\) is defined and finite as its integrand lies in \(C\left[ 0, \frac{1}{2} t\right] \). For \( \int _{t/2}^t\!\alpha _i(t-s)^{-\alpha _i-1}\, \left[ y(t)- y(s)\right] \, ds\), since \(y\in W^{1,\infty }\left( \frac{1}{2}t,t\right) \) one has \(\vert y(t)- y(s) \vert \le C(t-s)\) with a constant C that depends on t but is independent of s, which implies that the integral exists and is finite. Thus for all y satisfying (2.2), we can define \(D_t^{\alpha _i} y(t)\) by (2.1).
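The following Python sketch evaluates the reformulated derivative numerically and compares it with a known closed form. It assumes that (2.1) has the standard shape \(\Gamma (1-\alpha )\,D_t^{\alpha }y(t) = t^{-\alpha }[y(t)-y(0)] + \int _0^t \alpha (t-s)^{-\alpha -1}[y(t)-y(s)]\,ds\), which is consistent with the two integrals discussed above. The function name `caputo_reformulated`, the midpoint rule and the graded substitution \(t-s = t\,x^{1/(1-\alpha )}\) are choices made only for this illustration.

```python
import math


def caputo_reformulated(y, t: float, alpha: float, n: int = 4000) -> float:
    """Approximate D_t^alpha y(t), 0 < alpha < 1, from the integrated-by-parts form.

    Assumed form: Gamma(1-alpha) * D^alpha y(t)
        = t**(-alpha) * (y(t) - y(0))
          + int_0^t alpha * (t-s)**(-alpha-1) * (y(t) - y(s)) ds.
    The substitution r = t - s = t * x**p with p = 1/(1-alpha) removes the
    integrable r**(-alpha) singularity for Lipschitz y; then the midpoint rule is used.
    """
    p = 1.0 / (1.0 - alpha)
    h = 1.0 / n
    yt = y(t)
    integral = 0.0
    for k in range(n):
        x = (k + 0.5) * h                   # midpoint in the transformed variable
        r = t * x ** p                      # distance t - s from the singular endpoint
        dr_dx = t * p * x ** (p - 1.0)      # Jacobian of the substitution
        integral += alpha * r ** (-alpha - 1.0) * (yt - y(t - r)) * dr_dx * h
    boundary = t ** (-alpha) * (yt - y(0.0))
    return (boundary + integral) / math.gamma(1.0 - alpha)


if __name__ == "__main__":
    alpha, t = 0.5, 1.0
    approx = caputo_reformulated(lambda s: s * s, t, alpha)
    exact = 2.0 * t ** (2.0 - alpha) / math.gamma(3.0 - alpha)  # Caputo derivative of s**2
    print(approx, exact)
```

Only values of y are used, never \(y'\), which is the practical content of the remark that (2.1) applies to less smooth functions than (1.4).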

The next two remarks describe weakenings of the hypothesis (2.2) on the function y that still allow us to define \(D_t^{\alpha _i} y(t)\) by (2.1).

Remark 2.1

One could replace \(y\in W^{1,\infty }(\epsilon ,t)\) in (2.2) by \(y\in C^{\beta }(\epsilon , T]\) for all \(\epsilon , t\) satisfying \(0<\epsilon <t\le T\), where \(\beta \) is any constant satisfying \(\alpha _i < \beta \le 1\) and \(C^{\beta }(\epsilon , T]\) is a standard Hölder space.

Remark 2.2

(initial discontinuity in y) Note that \(y\in W^{1,\infty }(\epsilon ,t)\) for all \(\epsilon , t\) satisfying \(0<\epsilon <t\le T\) implies \(y\in C(0,T]\). In (2.2) one could replace the hypothesis that \(y\in C[0,T]\) by an assumption that \(y\in L_\infty (0,T)\), and still work with (2.1). In particular we can replace C[0, T] in (2.2) by an assumption that \(\lim _{t\rightarrow 0^+}y(t)\) exists; this will be useful in the forthcoming error analysis.

2.2 The Initial-Value Problem

Consider the initial-value problem

where we assume that the parameter \(\lambda \ge 0\). (We shall use the notation \(u(x,t)\) for the solution of (1.1) and w(t) for the solution of (2.3).)

In the next lemma we specify hypotheses allowing, for example, the possibility that w is a piecewise polynomial. Given a function g that has a jump discontinuity at a finite number of points in (0, T) but is continuous otherwise on (0, T], at any point of discontinuity \(\tau \) we take \(g(\tau )= \lim _{t\rightarrow \tau ^-}g(t)\). That is, we regard g as left-continuous on (0, T].

Lemma 2.3

(Comparison principle for the initial-value problem) Consider (2.3) where \(v\ge 0\) may have a finite number of jump discontinuities in (0, T) and is left-continuous on (0, T]. Suppose that w satisfies the regularity hypothesis (2.2). Define \(D_t^{\alpha _i} w\) by (2.1) if \(\alpha _i<1\). If \(\alpha _1=1\), suppose also that \(w'\) may have jump discontinuities but is a left-continuous function on (0, T]. Assume that \(w_0\ge 0\). Then \(w(t)\ge 0\) for \(t\in [0,T]\).

Proof

Suppose that the result is false. Then since \(w\in C[0,T]\) and \(w(0)\ge 0\), there exists a point \(t_0\in (0,T]\) such that \(w(t_0)<0 \le w(0)\) and \(w(t_0)\le w(t)\) for all \(t\in [0,T]\). From (2.1) one sees immediately that each \(D_t^{\alpha _i} w(t_0) < 0\) if \(\alpha _i<1\), while if \(\alpha _i=1\) then \(w'(t_0)\le 0\) (consider the interval \([0, t_0]\) and use the left-continuous property of \(w'(t)\) at \(t_0\)). Hence \(D_t^{{\bar{\alpha }}} w(t_0) + \lambda w(t_0)<0 \le v(t_0)\), so w cannot be a solution of (2.3). (The case where \(q_i(t_0)=0\) for \(i=2,3,\dots ,\ell \) is exceptional, as we then get only \(w'(t_0) + \lambda w(t_0) \le 0\); to derive a contradiction, one can make a change of variable \({{\tilde{w}}}(t) := e^{-\mu t}w(t)\) for suitable \(\mu \) as in [12, Section 2] and consider the initial-value problem satisfied by \({{\tilde{w}}}\).) \(\square \)
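The comparison principle can also be observed numerically. The Python sketch below is not part of the analysis: it assumes that (2.3) reads \(\sum _{i=1}^{\ell } q_i(t)\,D_t^{\alpha _i}w(t)+\lambda w(t)=v(t)\) with \(w(0)=w_0\), and it discretises each fractional derivative on a uniform mesh by the standard L1 scheme (backward Euler when \(\alpha _i=1\)), a discretisation chosen here only for illustration. The name `l1_multiterm` and the sample data are hypothetical.

```python
import math


def l1_multiterm(alphas, qs, lam, v, w0, T, N):
    """Uniform-mesh L1 sketch for sum_i q_i(t) D_t^{alpha_i} w + lam * w = v, w(0) = w0.

    alphas: orders in (0, 1]; qs: callables t -> q_i(t) >= 0; v: callable t -> v(t).
    Returns the list [w_0, w_1, ..., w_N] of nodal values at t_n = n * T / N.
    """
    tau = T / N
    w = [w0]
    for n in range(1, N + 1):
        tn = n * tau
        diag = lam       # coefficient multiplying the unknown w_n
        rhs = v(tn)
        for alpha, q in zip(alphas, qs):
            qn = q(tn)
            if alpha == 1.0:
                # backward Euler for the classical first derivative
                diag += qn / tau
                rhs += qn * w[n - 1] / tau
                continue
            c = 1.0 / (tau * math.gamma(2.0 - alpha))

            def weight(k):
                # L1 weight of the increment w_k - w_{k-1} as seen from t_n
                return c * ((tn - (k - 1) * tau) ** (1.0 - alpha)
                            - (tn - k * tau) ** (1.0 - alpha))

            diag += qn * weight(n)
            rhs += qn * weight(n) * w[n - 1]
            for k in range(1, n):
                rhs -= qn * weight(k) * (w[k] - w[k - 1])
        w.append(rhs / diag)
    return w


if __name__ == "__main__":
    w = l1_multiterm([0.7, 0.3], [lambda t: 1.0 + t, lambda t: 0.5],
                     lam=1.0, v=lambda t: 0.0, w0=1.0, T=1.0, N=100)
    print(min(w), w[-1])
```

With \(v\ge 0\) and \(w_0\ge 0\) the computed values stay nonnegative, mirroring the statement of Lemma 2.3 at the discrete level.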

The following extension of Lemma 2.3 weakens the requirement that \(w\in C[0,T]\). It will be needed to deal with the discontinuous function \({{\mathcal {E}}}_0\) of Sect. 3.

Corollary 2.4

In Lemma 2.3, replace the hypothesis that \(w\in C[0,T]\) by the assumption that \(\lim _{t\rightarrow 0^+}w(t)\) exists and is nonnegative. Then \(w(t)\ge 0\) for \(t\in (0,T]\).

Proof

Recalling Remark 2.2, one can use the same argument as for Lemma 2.3, with minor modifications. \(\square \)

We now use Lemma 2.3 to derive a stronger bound on w. First, recall the well-known two-parameter Mittag-Leffler function \(E_{\alpha ,\beta }(s) := \sum _{k=0}^\infty s^k/\Gamma (\alpha k+\beta )\).
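For readers who wish to experiment, the series above can be summed directly when \(\vert s\vert \) is moderate. The Python sketch below does this; the function name `mittag_leffler`, the stopping tolerance and the cap on the Gamma-function argument are assumptions of the sketch, and direct summation is not a robust method for large \(\vert s\vert \), where cancellation destroys accuracy.

```python
import math


def mittag_leffler(alpha: float, beta: float, s: float,
                   tol: float = 1e-17, max_arg: float = 170.0) -> float:
    """Truncated series sum_k s**k / Gamma(alpha*k + beta) for alpha > 0, beta > 0.

    Stops when a term is negligible or when the Gamma argument would overflow.
    Intended only for moderate |s|.
    """
    total, power, k = 0.0, 1.0, 0
    while alpha * k + beta <= max_arg:
        term = power / math.gamma(alpha * k + beta)
        total += term
        if k > 0 and abs(term) < tol * max(1.0, abs(total)):
            break
        power *= s
        k += 1
    return total


if __name__ == "__main__":
    # Two classical special cases: E_{1,1}(s) = exp(s), E_{1/2,1}(-x) = exp(x^2) erfc(x).
    print(mittag_leffler(1.0, 1.0, -1.0), math.exp(-1.0))
    print(mittag_leffler(0.5, 1.0, -1.0), math.exp(1.0) * math.erfc(1.0))
```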

Theorem 2.5

Consider (2.3), where \(v\in C[0,T]\) with \(v\ge 0\) and \(w_0\ge 0\). Suppose that w satisfies the regularity hypothesis (2.2). Define \(D_t^{\alpha _i} w\) by (2.1) if \(\alpha _i<1\). If \(\alpha _1=1\), suppose also that \(w\in C^1(0,T]\). Set \({\underline{q}}_j = \min _{t\in [0,T]}q_j(t)\) for \(j=1,\dots ,\ell \). Then

where one sets \(E_{\alpha _j,1}(-\lambda t^{\alpha _j}/{\underline{q}}_j) \equiv 0\) if \({\underline{q}}_j=0\).

Proof

Fix \(j\in \{1,\dots ,\ell \}\). Define the barrier function \(B_j\) by \({\underline{q}}_jD _t ^{\alpha _j} B_j(t) + \lambda B_j(t) = 0\) for \(0<t\le T\), \(B_j(0)=w_0\). Then \(B_j(t) = w_0 E_{\alpha _j,1}(-\lambda t^{\alpha _j}/{\underline{q}}_j) \) by [10, Example 3.1]; this function is completely monotonic [10, Theorem 3.5], which says in particular that \(B_j(t)\ge 0\) and \(B_j'(t)\le 0\). (In the case \({\underline{q}}_j=0\) one takes \(B_j\equiv 0\).) Consequently \((w-B_j)(0)\ge 0\) and

Lemma 2.3 now yields \(w(t)\ge B_j(t)\) for all \(t\in [0,T]\), which implies the desired result since \(j\in \{1,\dots ,\ell \}\) was arbitrary. \(\square \)

In the case \(\ell =1\), constant \(q_1>0\), and \(v\equiv 0\), the bound of the theorem is sharp.

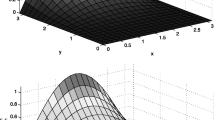

In the conclusion (2.4) of Theorem 2.5, the value of j such that \(E_{\alpha _j,1}(-\lambda t^{\alpha _j}/{\underline{q}}_j)\) is the dominant term may change as t varies. This phenomenon is illustrated in Fig. 1, where \(\ell =3\), \(w_0=\lambda = {\underline{q}}_j =1\) for each j, and \(\alpha _j \in \{1, 0.7, 0.3\}\); one sees that Theorem 2.5 yields \(w(t)\ge E_{1,1}(-t)\) for \(0\le t < 0.7\) (approx.) but \(w(t)\ge E_{0.3,1}(-t^{0.3})\) for \(0.7< t\le 2\).

Fig. 1 Illustration of the result of Theorem 2.5: graphs of \(E_{\alpha ,1}(-t^{\alpha })\) for \(\alpha =1, 0.7, 0.3\)
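The change of dominant term can be reproduced with a few lines of Python. The sketch assumes, in line with the proof of Theorem 2.5 (where \(w\ge B_j\) for every j), that the bound (2.4) amounts to \(w(t)\ge w_0\max _j E_{\alpha _j,1}(-\lambda t^{\alpha _j}/{\underline{q}}_j)\). The helper names `ml` and `sharpest_bound` are hypothetical, and the series summation is adequate only for the moderate arguments used here.

```python
import math


def ml(alpha: float, s: float, max_arg: float = 170.0) -> float:
    """Truncated power series for E_{alpha,1}(s); adequate only for moderate |s|."""
    total, power, k = 0.0, 1.0, 0
    while alpha * k + 1.0 <= max_arg:
        total += power / math.gamma(alpha * k + 1.0)
        power *= s
        k += 1
    return total


def sharpest_bound(t, alphas, lam=1.0, q_min=None, w0=1.0):
    """Return (alpha_j, value) for the largest lower bound w0 * E_{alpha_j,1}(-lam t^alpha_j / q_j)."""
    q_min = q_min or [1.0] * len(alphas)
    values = [w0 * ml(a, -lam * t ** a / q) if q > 0 else 0.0
              for a, q in zip(alphas, q_min)]
    j = max(range(len(alphas)), key=lambda i: values[i])
    return alphas[j], values[j]


if __name__ == "__main__":
    for t in (0.2, 0.5, 1.0, 2.0):
        print(t, sharpest_bound(t, [1.0, 0.7, 0.3]))
```

Scanning t on a finer grid locates the crossover between the \(\alpha =1\) and \(\alpha =0.3\) curves.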

In the next remark we discuss the behaviour of \(w'(t)\) as \(t\rightarrow 0^+\).

Remark 2.6

Assume the hypotheses of Theorem 2.5 and that \(v\in C^1[0,T]\). Assume also that \(q_1(0)\ne 0\) and \(q_1\in C^1[0,T]\); without loss of generality we can take \(q_1(0)=1\). Set \(\phi (t):=t^{\alpha _1}/\Gamma (1+\alpha _1)\). Then

so \(\left( D_t^{{\bar{\alpha }}}+\lambda \right) [1-\lambda \phi (t)]=O(t^{\alpha _1-\alpha _2})\).

Let \(\delta \) be a nonzero constant. Set \({{\widetilde{w}}}_{\delta }(t):=w_0\,[1-\lambda \phi (t)]+[v(0)+\delta ]\,\phi (t)\). Then \({{\widetilde{w}}}_{\delta }(0) = w_0\) and

since \(v(t)=v(0)+O(t)\). Now choose \(\delta \) to be a small positive constant. Then choose \(\epsilon >0\) such that for \(t\in (0,\epsilon )\) one has \( \vert O(t^{\alpha _1-\alpha _2}) \vert \le \delta \) in the previous equation. Now the comparison principle (Lemma 2.3) yields \({{\widetilde{w}}}_{-\delta }(t)\le w(t)\le {{\widetilde{w}}}_{\delta } (t)\) for \(0\le t\le \epsilon \). That is,

Hence \((w(t)-w(0))/t\approx [v(0)-\lambda w_0]\,\phi (t)/t\) as \(t\rightarrow 0\), and assuming that \(v(0)\ne \lambda w_0\), one has \((w(t)-w(0))/t = O(t^{\alpha _1-1})\) as \(t\rightarrow 0\).

Thus, when \(\alpha _1<1\) we expect that \(\vert w'(t)\vert \rightarrow \infty \) as \(t\rightarrow 0^+\) (with the sign of \(w'(t)\) determined by the sign of \(v(0)-\lambda w_0\)), but if \(\alpha _1=1\), then the behaviour of the solution is quite different: we expect that \(w'(t)\) remains bounded as \(t\rightarrow 0^+\). This behaviour when \(\alpha _1=1\) concurs with the existence result for (2.3) that we shall prove rigorously in Lemma 2.11.

Notation. From Lemma 2.3 it follows that any solution of (2.3) is unique. We shall use the notation \(\left( D_t^{{\bar{\alpha }}}+\lambda \right) ^{-1}\!v\) for this unique solution when the initial condition \(w_0=0\).

When the \(q_i\) are positive constants, then the solution of (2.3) exists and can be written in an explicit form; this will be seen in Sect. 2.3. For the general case of variable \(q_i(t)\), existence of the solution to (2.3) seems reasonable but it does remain an open question; nevertheless, almost all of our analysis does not require this existence result — the only exception is Corollary 3.5.

2.3 Solution of (2.3) for Constant-Coefficient \(D_t^{{\bar{\alpha }}}\)

Throughout Sect. 2.3, let all \(q_i\) in (1.6) be positive constants. Without loss of generality, we assume that \(q_1=1\).

Then the structure of the solution of (2.3) is intimately related to the following multinomial Mittag-Leffler function, which is a generalisation of the two-parameter Mittag-Leffler function \(E_{\alpha ,\beta }(s)\).

Definition 2.7

[15, 18] Let \(\beta _0 \in (0,2)\). For \(j=1,\dots ,\ell \), let \(0<\beta _j\le 1, \ s_j \in {\mathbb {R}}\) and \(k_j\in {\mathbb {N}}_0\). Then the multinomial Mittag-Leffler function is defined by

where the multinomial coefficient

Remark 2.8

The symmetry of Definition 2.7 implies that the value of \(E_{(\beta _1,\dots ,\beta _\ell ),\beta _0}(s_1,\dots ,s_\ell )\) remains unaltered if we perform any permutation of \((\beta _1,\dots ,\beta _\ell )\), provided that we also perform the same permutation of \((s_1,\dots ,s_\ell )\). In particular one has \(E_{(\beta _1,\dots ,\beta _\ell ),\beta _0}(s_1,\dots ,s_\ell ) = E_{(\beta _\ell ,\dots ,\beta _1),\beta _0}(s_\ell ,\dots ,s_1)\).
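The symmetry can be checked numerically. The Python sketch below assumes that Definition 2.7 is the standard series \(\sum _{k=0}^\infty \sum _{k_1+\dots +k_\ell =k} \frac{k!}{k_1!\cdots k_\ell !}\, \prod _{j=1}^{\ell } s_j^{k_j} \big /\, \Gamma \bigl (\beta _0+\sum _{j=1}^{\ell }\beta _jk_j\bigr )\), truncated at a user-chosen level K. The names `compositions` and `multinomial_ml` are hypothetical, and the truncated sum is reliable only for small arguments \(s_j\).

```python
import math


def compositions(k: int, parts: int):
    """Yield all tuples of `parts` nonnegative integers that sum to k."""
    if parts == 1:
        yield (k,)
        return
    for first in range(k + 1):
        for rest in compositions(k - first, parts - 1):
            yield (first,) + rest


def multinomial_ml(betas, beta0: float, s, K: int = 80) -> float:
    """Truncated multinomial Mittag-Leffler series (assumed standard form), levels k = 0..K."""
    total = 0.0
    for k in range(K + 1):
        for ks in compositions(k, len(betas)):
            coeff = math.factorial(k)
            for kj in ks:
                coeff //= math.factorial(kj)     # exact multinomial coefficient
            prod = 1.0
            for sj, kj in zip(s, ks):
                prod *= sj ** kj
            arg = beta0 + sum(b * kj for b, kj in zip(betas, ks))
            total += coeff * prod / math.gamma(arg)
    return total


if __name__ == "__main__":
    a = multinomial_ml((0.7, 0.3), 1.0, (-0.5, -0.3))
    b = multinomial_ml((0.3, 0.7), 1.0, (-0.3, -0.5))   # same permutation applied to both tuples
    print(a, b)
```

For \(\ell =1\) the series reduces to the two-parameter function \(E_{\beta _1,\beta _0}(s_1)\), which gives a second simple check.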

We shall also use the more succinct notation of [2, eq. (2.4)]:

for \(t>0\), any positive integer m, \(\beta \in (-\infty ,2)\), \(0<\mu _j <1\) for each j, and any real constants \(a_1,\dots ,a_m\).

Lemma 2.9

Suppose that \(0< \mu _m<\dots < \mu _1 \le \beta \le 1\) and \(a_j>0\) for \(j=1,\dots , m\). Then \(E_{(\mu _1, \mu _2,\dots ,\mu _m),\beta }(-a_1t^{\mu _1},-a_2t^{\mu _2},\dots ,-a_mt^{\mu _m})\ge 0 \) for all \(t> 0\).

Proof

Taking \(\delta =1\) in [2, Theorem 3.2] shows that \(t\mapsto {{\mathcal {F}}}_{(\mu _1, \mu _2,\dots ,\mu _m),\beta }(t; a_1, a_2, \dots , a_m)\) is a completely monotone function, which implies that \({{\mathcal {F}}}_{(\mu _1, \mu _2,\dots ,\mu _m),\beta }(t; a_1, a_2, \dots , a_m) \ge 0\). The desired result now follows from (2.6). \(\square \)

If \(w_0=0\), then [18, Theorem 4.1] gives the solution of (2.3) as

where we used Remark 2.8 and (2.6). The formula (2.7) is the multiterm generalisation of [14, eq.(2.1)].

Remark 2.10

It is easy to see that \(\left( D_t^{{\bar{\alpha }}}+\lambda \right) [1]=\lambda \). Hence if \(w_0\ne 0\), then the initial-value problem (2.3), with positive constant coefficients \(q_i\), has the unique solution

Furthermore, an explicit solution representation for \( \left( D_t^{{\bar{\alpha }}}+\lambda \right) ^{-1}[v-\lambda w_0]\) is provided by (2.7) with \(v(t-s)\) replaced by \(v(t-s)-\lambda w_0\).
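The verification behind this remark is short. Assuming, as the preceding sentence indicates, that the solution in question is \(w = w_0 + \left( D_t^{{\bar{\alpha }}}+\lambda \right) ^{-1}[v-\lambda w_0]\), linearity of \(D_t^{{\bar{\alpha }}}+\lambda \) and the identity \(\left( D_t^{{\bar{\alpha }}}+\lambda \right) [1]=\lambda \) give

\[ \left( D_t^{{\bar{\alpha }}}+\lambda \right) w = w_0\left( D_t^{{\bar{\alpha }}}+\lambda \right) [1] + \left( v-\lambda w_0\right) = \lambda w_0 + v - \lambda w_0 = v, \]

while \(w(0)=w_0+0=w_0\) because \(\left( D_t^{{\bar{\alpha }}}+\lambda \right) ^{-1}\) denotes the solution operator with zero initial condition.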

2.4 The Special Case \(\alpha _1=1\) and \(q_1(t)>0\)

In this subsection we consider the special case where \(\alpha _1=1\) and \(q_1(t)>0\) for all \(t\in [0,T]\). In this setting we are able to prove existence of a solution w to the variable-coefficient initial-value problem (2.3), and moreover this solution lies in \(C^1[0,T]\).

Lemma 2.11

Assume that \(\alpha _1=1\) and \(q_1(t)>0\) for all \(t\in [0,T]\), with \(v,q_i\in C[0,T]\) for all i. Then the initial-value problem (2.3) has a solution \(w\in C^1[0,T]\), and this solution is unique.

Proof

Lemma 2.3 implies that any solution of (2.3) is unique. To show existence of a solution we assume without loss of generality that \(q_1(t)\equiv 1\) for \(t\in [0,T]\), since one can divide (2.3) by \(q_1(t)\). Set \(\phi (t) = w'(t)\). Using the definition (1.4), write (2.3) as

This is a Volterra integral equation of the second kind in the unknown function \(\phi \). Observe first that any solution of (2.8) in C[0, T] must be unique, because two distinct solutions \(\phi _1, \phi _2\) would yield two distinct solutions \(w_i(t) := w_0 + \int _0^t\phi _i(s)\,ds\) (\(i=1,2\)) of (2.3). It is well known (see, e.g., [3, Appendix A.2.2]) that each of the operators

is a compact operator from the Banach space \((C[0,T], \Vert \cdot \Vert _\infty )\) to itself, and a finite sum of compact operators is again compact. Since we have already seen that any solution of (2.8) is unique, we can apply the Fredholm Alternative Theorem [3, Theorem A.2.17] to conclude that (2.8) has a solution \(\phi \in C[0,T]\). Hence (2.3) has the solution \(w(t) := w_0 + \int _0^t\phi (s)\,ds\), and this solution clearly lies in \(C^1[0,T]\). \(\square \)

3 \(L_2(\Omega )\) a Posteriori Error Estimates

Let \(u_h\) be our approximate solution. We assume throughout our analysis that \(u_h(\cdot ,0)=u(\cdot ,0)\) on \(\Omega \) and \(u_h(x,t)=u(x,t)\) for \(x\in \partial \Omega \) and \(t>0\). For the case \(u_h(\cdot ,0)\ne u(\cdot ,0)\), see [14, Corollary 2.5].

Lemma 3.1

Suppose that \(r(\cdot ,0)=0\) and \(r\in L_{\infty }(0,T;\,L_2(\Omega )) \cap W^{1,\infty }(\epsilon ,T;\,L_2(\Omega ))\) for each \(\epsilon \in (0,T]\). Then

Proof

One can use the same proof as for [14, Lemma 2.8], based on the reformulation (2.1) and recalling Remark 2.2. \(\square \)

Define the residual

Theorem 3.2

In (1.1a) assume that \(\langle {{\mathcal {L}}}r,r\rangle \ge \lambda \Vert r\Vert ^2\) for all \(r\in H_0^1(\Omega )\), where \(\lambda \ge 0\) is some constant. Suppose that a unique solution u of (1.1) and its approximation \(u_h\) are in \(C([0,T];\,L_2(\Omega )) \cap W^{1,\infty }(\epsilon ,T;\,L_2(\Omega ))\) for each \(\epsilon \in (0,T]\), and also in \(H^1_0(\Omega )\) for each \(t>0\). Suppose also that

for some barrier function \({{\mathcal {E}}}\) that satisfies the regularity condition (2.2), with moreover \({{\mathcal {E}}}(t)\ge 0\ \forall \,t\ge 0\). Then \(\Vert (u_h-u)(\cdot ,t)\Vert \le {{\mathcal {E}}}(t)\) \(\forall \,t\ge 0\).

Proof

(This is similar to the proof of [14, Theorem 2.2 and Corollary 2.3].)

Set \(e: = u_h-u\), so \(e(\cdot ,0)=0,\ e(x,t)=0\) for \(x\in \partial \Omega \), and \(\left( D _t ^{{\bar{\alpha }}} + {{\mathcal {L}}}\right) e(\cdot ,t) = R_h(\cdot ,t)\) for \(t>0\). Multiply this equation by \(e(\cdot ,t)\) then integrate over \(\Omega \); invoking Lemma 3.1 and \(\langle {{\mathcal {L}}}v,v\rangle \ge \lambda \Vert v\Vert ^2\), we get

Combining this with our hypothesis (3.1), one has \((D_t^{{\bar{\alpha }}}+\lambda )({{\mathcal {E}}}-\Vert e(\cdot ,t)\Vert )\ge 0\). Now an application of Lemma 2.3 yields \(\Vert (u_h-u)(\cdot ,t)\Vert \le {{\mathcal {E}}}(t)\) \(\forall \,t\ge 0\). \(\square \)

In Theorem 3.2, one can replace the condition \({{\mathcal {E}}}\in C[0,T]\) of (2.2) by the assumption that \(\lim _{t\rightarrow 0^+}{{\mathcal {E}}}(t)\) exists and is nonnegative; see Remark 2.2 and Corollary 2.4.

Note that the proof of Theorem 3.2 did not require existence of a solution of (2.3), which we have proved only for the constant-coefficient case of Sect. 2.3 and the case \(\alpha _1=1\) and \(q_1(t)>0\) of Sect. 2.4.

The next corollary presents a possible choice of \({{\mathcal {E}}}(t)\) to use in (3.1).

Corollary 3.3

Assume the hypotheses of Theorem 3.2. Then the error \(e=u_h-u\) satisfies

where \(j=1\) if \(\alpha _1<1\) and \(j=2\) if \(\alpha _1=1\).

Proof

(The proof is similar to part of the proof of [14, Corollary 2.4].)

Set \(\kappa = \sup _{0<s\le t}\!\left\{ \Vert R_h(\cdot ,s)\Vert /{{\mathcal {R}}}_0(s) \right\} \). If \(\kappa =\infty \) the result is trivial, so assume that \(0\le \kappa \in {\mathbb {R}}\). Define the barrier function \({{\mathcal {E}}}_0(t)\) by \({{\mathcal {E}}}_0(t):=1\) for \(t>0\) and \({{\mathcal {E}}}_0(0):=0\). Note that \({{\mathcal {E}}}_0\) satisfies the conditions of Theorem 3.2. From (2.1) (see also [14, Remark 2.9]) one has \(D _t ^{\alpha _i} {{\mathcal {E}}}_0(t) = t^{-\alpha _i}/\Gamma (1-\alpha _i)\) for \(t>0\) if \(\alpha _i<1\), while \(D_t^{\alpha _1}{{\mathcal {E}}}_0(t)=0\) for \(t>0\) if \(\alpha _1=1\), so \( \left( D _t ^{{\bar{\alpha }}} +\lambda \right) \kappa {{\mathcal {E}}}_0(t) = \kappa {{\mathcal {R}}}_0(t)\) in all cases. Thus we can apply Theorem 3.2 with \({{\mathcal {E}}}= \kappa {{\mathcal {E}}}_0\) to finish the proof. \(\square \)
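The computation of \(D _t ^{\alpha _i} {{\mathcal {E}}}_0\) used in this proof can be written out explicitly. Assuming that (2.1) has the standard form \(\Gamma (1-\alpha _i)\,D_t^{\alpha _i}y(t) = t^{-\alpha _i}[y(t)-y(0)] + \int _0^t \alpha _i(t-s)^{-\alpha _i-1}[y(t)-y(s)]\,ds\), for \(t>0\) one has \({{\mathcal {E}}}_0(t)-{{\mathcal {E}}}_0(0)=1\) and \({{\mathcal {E}}}_0(t)-{{\mathcal {E}}}_0(s)=0\) for \(0<s<t\), so when \(\alpha _i<1\)

\[ D _t ^{\alpha _i} {{\mathcal {E}}}_0(t) = \frac{1}{\Gamma (1-\alpha _i)} \left\{ t^{-\alpha _i}\cdot 1 + \int _0^t\!\alpha _i(t-s)^{-\alpha _i-1}\cdot 0 \, ds \right\} = \frac{t^{-\alpha _i}}{\Gamma (1-\alpha _i)} . \]

Under the further assumption that \(D_t^{{\bar{\alpha }}}=\sum _{i=1}^{\ell }q_i(t)\,D_t^{\alpha _i}\), summing over i gives \(\left( D _t ^{{\bar{\alpha }}} +\lambda \right) {{\mathcal {E}}}_0(t) = \lambda + \sum _{i=j}^{\ell } q_i(t)\, t^{-\alpha _i}/\Gamma (1-\alpha _i)\) for \(t>0\), with j as in the statement of the corollary.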

We shall present a second possible choice of \({{\mathcal {E}}}(t)\) after we list some properties of the hypergeometric function \(_2F_1(\alpha _i,-\beta \,;\,\alpha _1\,;\,s)\) that is discussed in [1, Section 15] and [20].

Set \(\beta :=1-\alpha _1\). Then \(\frac{d}{ds}\bigl (s^{-\beta }{}_2F_1(\alpha _i,-\beta \,;\,\alpha _1\,;\,s)\bigr ) =-\beta s^{-\beta -1}{}_2F_1(\alpha _i,-\beta \,;\,-\beta \,;\,s)\) [1, Section 15.2.4] [20, item 15.5.4], while by [1, Section 15.1.8] [20, item 15.4.6] one gets \({}_2F_1(\alpha _i,-\beta \,;\,-\beta \,;\,s)=(1-s)^{-\alpha _i}\). Hence

Furthermore,

by [1, Section 15.1.20][20, item 15.4.20].

Corollary 3.4

Assume the hypotheses of Theorem 3.2 and that \(\alpha _1<1\). Then the error \(e=u_h-u\) satisfies

where

with \(\beta :=1-\alpha _1\), \( {\hat{\tau }} := \tau /t\) and \({{\mathcal {E}}}_1(t):= (\max \{\tau , t\})^{\alpha _1-1}\) for \(t>0, \ {{\mathcal {E}}}_1(0):=0\). Here \(\tau >0\) is an arbitrary user-chosen parameter.

Furthermore, in (3.6) one can replace \(t^{\alpha _1-1}\) by \({{\mathcal {E}}}_1(t)\) if desired.

Proof

Set \(\kappa = \sup _{0<s\le t}\!\left\{ \Vert R_h(\cdot ,s)\Vert /{{\mathcal {R}}}_1(s) \right\} \). If \(\kappa =\infty \) the result is trivial, so assume that \(0\le \kappa \in {\mathbb {R}}\). Observe that \({{\mathcal {E}}}_1(t)=\tau ^{-\beta }{{\mathcal {E}}}_0(t) - (\tau ^{-\beta }-t^{-\beta })^+\), where \({{\mathcal {E}}}_0\) was defined in the proof of Corollary 3.3. From (2.1) one has \(D _t ^{\alpha _i} {{\mathcal {E}}}_0(t) = t^{-\alpha _i}/\Gamma (1-\alpha _i)\) for \(t>0\) and \(i=1,\dots ,\ell \), so for \(t\le \tau \) (i.e., \(\hat{\tau }\ge 1\)) we get \( \left( D _t ^{{\bar{\alpha }}} +\lambda \right) \kappa {{\mathcal {E}}}_1(t) = \kappa {{\mathcal {R}}}_1(t)\) since in \({{\mathcal {R}}}_1\) one has \(\rho _i({\hat{\tau }})=0\) for all i.

For \(t>\tau \), since \(\partial _s (\tau ^{-\beta }-s^{-\beta })^+ =-\partial _s(s^{-\beta }) =\beta s^{-\beta -1} \), for \(i=1,2,\dots ,\ell \) we have

from (3.4) and (3.7). But \(t^{-\beta -\alpha _i}\,{\hat{\tau }}^{-\beta }=\tau ^{-\beta }t^{-\alpha _i}\), so \(\Gamma (1-\alpha _i)\,D^{\alpha _i}_t {{\mathcal {E}}}_1(t)=\tau ^{-\beta }t^{-\alpha _i}[1-\rho _i({\hat{\tau }})]\). Hence, \( \left( D _t ^{{\bar{\alpha }}} +\lambda \right) \kappa {{\mathcal {E}}}_1(t) \ge \kappa {{\mathcal {R}}}_1(t)\) for \(t> \tau \).

For the bound on \(\rho _i\) in (3.7), the above argument shows that

where we also used \((1-{{\hat{s}}})^{-\alpha _i}/(1-{{\hat{s}}})^{-\alpha _1}\le (1-{\hat{\tau }})^{\alpha _1-\alpha _i}\) as \(\alpha _1\ge \alpha _i\). Hence, \(\rho _i({\hat{\tau }})\le (1-{\hat{\tau }})^{1-\alpha _i}\), as desired.

We can now apply Theorem 3.2 with \({{\mathcal {E}}}= \kappa {{\mathcal {E}}}_1\) to obtain the bound

then \({{\mathcal {E}}}_1(t) \le t^{\alpha _1-1}\) completes the proof. \(\square \)

One could extend the proof of Corollary 3.4 to include the case \(\alpha _1=1\), but in this case the result becomes the same as that of Corollary 3.3.

Note that (3.7) for \(i=1\) simplifies to \(\rho _1(s)=\left( (1-s)^+\right) ^{1-\alpha _1}\) because of [1, Section 15.1.8] [20, item 15.4.6] and \(\{\Gamma (\alpha _1-\alpha _i)\}^{-1}=0\).

Finally, we give a general result that relates \(\Vert (u_h-u)(\cdot ,t)\Vert \) to \(\Vert R_h(\cdot , t)\Vert \) without involving any barrier function — but this result, unlike Corollaries 3.3 and 3.4, depends on the assumption that \(\left( D _t ^{{\bar{\alpha }}} +\lambda \right) ^{-1}\Vert R_h(\cdot , t)\Vert \) exists.

Corollary 3.5

Assume the hypotheses of Theorem 3.2. Recall the definition of \(\left( D _t ^{{\bar{\alpha }}} +\lambda \right) ^{-1}\) in Sect. 2. If \(\left( D _t ^{{\bar{\alpha }}} +\lambda \right) ^{-1}\Vert R_h(\cdot , t)\Vert \) exists, then

Proof

Set \({{\mathcal {E}}}(t):= (D_t^{{\bar{\alpha }}}+\lambda )^{-1}\Vert R_h(\cdot , t)\Vert \). Then \({{\mathcal {E}}}(0)=0\) and \((D_t^{{\bar{\alpha }}}+\lambda ){{\mathcal {E}}}(t)=\Vert R_h(\cdot , t)\Vert \) imply \({{\mathcal {E}}}(t)\ge 0\) by Lemma 2.3. Thus we can invoke Theorem 3.2 to get (3.8). \(\square \)

4 \(L_\infty (\Omega )\) a Posteriori Error Estimates

Throughout Sect. 4, let \({{\mathcal {L}}}u := \sum _{k=1}^d \left\{ -a_k(x)\,\partial ^2_{x_k}\!u + b_k(x)\, \partial _{x_k}\!u \right\} +c(x)\, u\) in (1.1), with sufficiently smooth coefficients \(\{a_k\}\), \(\{b_k\}\) and c in \(C({\bar{\Omega }})\). Assume also that for each k one has \(a_k>0\) in \({\bar{\Omega }}\), and that \(c\ge \lambda \ge 0\).

The condition \(\langle {{\mathcal {L}}}v,v\rangle \ge \lambda \Vert v\Vert ^2\) is not required in this section.

Lemma 4.1

(Comparison principle for the initial-boundary value problem) Suppose that

where \(v(\cdot , t)\in C^2(\Omega )\) for each \(t>0\), and for each \(x\in \Omega \) we have \(v(x,\cdot )\in W^{1,\infty }(\epsilon ,t)\) for all \(\epsilon , t\) satisfying \(0<\epsilon <t\le T\), and \(\lim _{t\rightarrow 0^+}v(x,t)\ge 0\) exists. In (4.1) define \(D_t^{\alpha _i} v(x,\cdot )\) for each \(x\in \Omega \) by (2.1) if \(\alpha _i<1\). If \(\alpha _1=1\), suppose also that \(v_t(x,\cdot )\) (for each \(x\in \Omega \)) may have jump discontinuities but is a left-continuous function on (0, T]. Assume that \(v(x,0)\ge 0\) for \(x\in \Omega \) and that \(v(x, t)\ge 0\) for \(x\in \partial \Omega \) and \(0\le t\le T\). Then \(v(x,t)\ge 0\) for all \((x,t)\in \Omega \times (0,T]\).

Proof

Imitate the argument of Corollary 2.4, with the extra detail that \({{\mathcal {L}}}v(x_0,t_0)\le 0\) at any point \((x_0, t_0)\in \Omega \times (0,T]\) where v(x, t) attains a negative minimum. \(\square \)

A result similar to Lemma 4.1 is proved in [17, Theorem 2] under the stronger hypothesis that \(v(x,\cdot )\in C^1(0,T]\cap W^{1,1}(0,T)\) for each \(x\in \Omega \). See also [4, Lemma 3.1].

Theorem 4.2

Assume that a unique solution u of (1.1) and its approximation \(u_h\) each satisfy the regularity hypotheses imposed on v in Lemma 4.1. Then the error bounds of Theorem 3.2 and Corollaries 3.5, 3.3 and 3.4 remain true with \(\Vert \cdot \Vert =\Vert \cdot \Vert _{L_2(\Omega )}\) replaced by \(\Vert \cdot \Vert _{L_\infty (\Omega )}\).

Proof

Note that \({{\mathcal {L}}}{{\mathcal {E}}}(t) = c{{\mathcal {E}}}(t) \ge \lambda {{\mathcal {E}}}(t)\) for \(t>0\). Consider first Theorem 3.2, whose hypothesis now becomes \(\Vert R_h(\cdot ,t)\Vert _{L_\infty (\Omega )}\le (D_t^{{\bar{\alpha }}}+\lambda ){{\mathcal {E}}}(t)\) for \(t>0\). But \((D_t^{{\bar{\alpha }}}+\lambda ){{\mathcal {E}}}(t)\le (D_t^{{\bar{\alpha }}}+{{\mathcal {L}}}){{\mathcal {E}}}(t)\) and \(R_h(x,t) = (D_t^{{\bar{\alpha }}}+{{\mathcal {L}}})(u_h-u)(x,t)\), so we have \(\vert (D_t^{{\bar{\alpha }}}+{{\mathcal {L}}})(u_h-u)(x,t)\vert \le (D_t^{{\bar{\alpha }}}+{{\mathcal {L}}}){{\mathcal {E}}}(t)\) for \(x\in \Omega \) and \(t>0\). Thus one can invoke Lemma 4.1 to get \(\vert (u_h-u)(x,t)\vert \le {{\mathcal {E}}}(t)\) on \(\Omega \times [0,T]\), i.e., Theorem 3.2 is valid in the \(L_\infty (\Omega )\) setting.

We can now deduce \(L_\infty (\Omega )\) variants of the other results. To get the new Corollary 3.5, take \({{\mathcal {E}}}(t):=(D_t^{{\bar{\alpha }}} +\lambda )^{-1}\Vert R_h(\cdot ,t)\Vert _{L_\infty (\Omega )}\) in the new Theorem 3.2. For the new Corollaries 3.3 and 3.4, use their old proofs with \(\Vert R_h\Vert \) replaced by \(\Vert R_h\Vert _{L_\infty (\Omega )}\) and appeal to the new Theorem 3.2. \(\square \)

5 Application to the L1 Method. Numerical Experiments

In this section we examine in detail the practical application of our a posteriori analysis to the well-known L1 discretisation of each fractional derivative \( D _t ^{\alpha _i}\). Other discretisations will be discussed in a future paper [8].

Given an arbitrary temporal mesh \(\{t_j\}_{j=0}^M\) on [0, T], let \(\{u_h^j\}_{j=0}^M\) be the semi-discrete approximation for (1.1) obtained using the popular L1 method [23]. Then its standard Lagrange piecewise-linear-in-time interpolant \(u_h\), defined on \({\bar{\Omega }}\times [0,T]\), satisfies

\[ \Bigl( \sum _{i=1}^{\ell }q_i(t_j)\, D _t ^{\alpha _i}+{{\mathcal {L}}}\Bigr) u_h(x,t_j)=f(x,t_j) \quad \text{for } x\in \Omega ,\ j=1,\dots ,M, \]

subject to \(u_h^0:=u_0\) and \(u_h=0\) on \(\partial \Omega \). In the case of \(\alpha _1=1\), the term \(D _t ^{\alpha _1}u_h(x, t_j)=[u_h(x, t_j)-u_h(x, t_{j-1})]/(t_j-t_{j-1})\), which corresponds to \(\partial _t u_h\) treated as a left-continuous function in time.
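To make the L1 discretisation concrete, the following Python sketch evaluates the L1 approximation of a single Caputo derivative \(D_t^{\alpha }\) with \(\alpha <1\) at a mesh point \(t_j\), that is, the exact Caputo derivative of the piecewise-linear interpolant of the nodal values. It is a minimal illustration and not the authors' implementation; the function name `l1_caputo` and the list-based data layout are assumptions made here.

```python
import math

def l1_caputo(t, u, alpha, j):
    """L1 approximation of the Caputo derivative of order alpha in (0, 1) at t[j].

    t : increasing mesh points with t[0] = 0 (hypothetical data layout)
    u : nodal values, u[k] approximating u(t[k])
    """
    total = 0.0
    for k in range(1, j + 1):
        # slope of the piecewise-linear interpolant on (t[k-1], t[k])
        slope = (u[k] - u[k - 1]) / (t[k] - t[k - 1])
        # (1 - alpha) times the exact integral of (t[j] - s)^(-alpha) over (t[k-1], t[k])
        weight = (t[j] - t[k - 1]) ** (1 - alpha) - (t[j] - t[k]) ** (1 - alpha)
        total += slope * weight
    # 1 / ((1 - alpha) * Gamma(1 - alpha)) equals 1 / Gamma(2 - alpha)
    return total / math.gamma(2 - alpha)
```

Since the L1 formula differentiates the piecewise-linear interpolant exactly, it reproduces \(D_t^{\alpha }t=t^{1-\alpha }/\Gamma (2-\alpha )\) on any mesh, which gives a convenient sanity check.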

First, consider the case \(\alpha _1<1\). For the residual of \(u_h\) one immediately gets \(R_h(\cdot , t_j)=0\) for \(j\ge 1\), i.e., the residual is a non-symmetric bubble on each \((t_{j-1},t_j)\) for \(j>1\). Hence, for the piecewise-linear interpolant \(R_h^I\) of \(R_h\) one has \(R_h^I=0\) for \(t\ge t_1\), and, more generally, \(R_h^I=[{{\mathcal {L}}}u_0-f(\cdot , 0)](1-t/t_1)^+\) for \(t>0\) (where we used \(R_h(\cdot ,0)={{\mathcal {L}}}u_0-f(\cdot ,0)\) because \(D_t^{\alpha _i} u_h^0(\cdot , 0)=0\)). Finally, note that \(R_h-R_h^I=(D_t^{{\bar{\alpha }}} u_h-f)-(D_t^{{\bar{\alpha }}} u_h-f)^I\) since \(({{\mathcal {L}}}u_h)^I ={{\mathcal {L}}}u_h\). In other words, one can compute \(R_h\) by sampling, using parallel/vector evaluations, without a direct application of \({{\mathcal {L}}}\) to \(\{u^j_h\}\).

Next, consider the case \(\alpha _1=1\). Then \(D _t ^{\alpha _1}u_h=\partial _t u_h\) is piecewise constant in time, and it is convenient to treat it as a left-continuous function, viz., \(\partial _t u_h=\delta _t^ju_h:=[u_h(\cdot , t_j)-u_h(\cdot , t_{j-1})]/(t_j-t_{j-1})\) is constant in time on each time interval \((t_{j-1},t_j]\). As before, one gets \(R_h(\cdot , t_j)=0\) for \(j\ge 1\), so \(R_h^I=[{{\mathcal {L}}}u_0-f(\cdot , 0)](1-t/t_1)^+\) for \(t>0\), but \(R_h\) is no longer continuous in time. (To be precise, \(R_h-q_1(t)\, \partial _t u_h\) is continuous on [0, T], assuming that \(u_0\) is smooth; a modification for the case when \({{\mathcal {L}}}u_0\notin L_2(\Omega )\) is discussed in [14, Remark 2.7].) Nevertheless, we can still employ \(R_h-R_h^I=(D_t^{{\bar{\alpha }}} u_h-f)-(D_t^{{\bar{\alpha }}} u_h-f)^I\), but one needs to be more careful when evaluating the component \((q_1 \partial _t u_h)^I\) of \((D_t^{{\bar{\alpha }}}u_h)^I\): on each \((t_{j-1},t_j]\) with \(j>1\), one gets

\[ (q_1\, \partial _t u_h)^I(t) = q_1(t_{j-1})\,\delta _t^{j-1}u_h\,\frac{t_j-t}{t_j-t_{j-1}} + q_1(t_j)\,\delta _t^{j}u_h\,\frac{t-t_{j-1}}{t_j-t_{j-1}} \]

(to check this formula, observe that it is linear in time and equals \(q_1(t_j)\,\delta _t^j u_h \) at \(t_j\) and \(q_1(t_{j-1})\,\delta _t^{j-1} u_h \) at \(t_{j-1}\)). On \([0,t_1]\), i.e., when \(j=1\), the situation is simpler as \(u_h\) is continuous in time, so \((q_1\, \partial _t u_h)^I=q_1^I\,\delta _t^1 u_h\), so one can still employ the above formula after setting \(\delta _t^{0} u_h:=\delta _t^{1} u_h\). Thus, even when \(\alpha _1=1\), one can still compute \(R_h\) by sampling, using parallel/vector evaluations, without a direct application of \({{\mathcal {L}}}\) to \(\{u^j_h\}\).
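The interpolation rule just described can be sketched in a few lines of Python for a scalar problem. The sketch returns the linear function on \((t_{j-1},t_j]\) that takes the value \(q_1(t_{j-1})\,\delta _t^{j-1}u_h\) at \(t_{j-1}\) and \(q_1(t_j)\,\delta _t^{j}u_h\) at \(t_j\), with the convention \(\delta _t^{0}u_h:=\delta _t^{1}u_h\). The function name `interp_q1_dtu` and its arguments are hypothetical and chosen here only for illustration.

```python
def interp_q1_dtu(t, u, q1, j, s):
    """Value at time s in (t[j-1], t[j]] of the piecewise-linear interpolant
    of the left-continuous function q1 * du_h/dt (scalar case).

    t, u : mesh points and nodal values; q1 : callable coefficient; 1 <= j < len(t).
    """
    def delta(i):
        i = max(i, 1)  # convention delta^0 := delta^1 on the first interval
        return (u[i] - u[i - 1]) / (t[i] - t[i - 1])

    left = q1(t[j - 1]) * delta(j - 1)   # nodal value at t[j-1]
    right = q1(t[j]) * delta(j)          # nodal value at t[j]
    theta = (s - t[j - 1]) / (t[j] - t[j - 1])
    return (1.0 - theta) * left + theta * right
```

On the first interval this reduces to \(q_1^I\,\delta _t^1 u_h\), in agreement with the text.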

Finally, for completeness we include in Fig. 2 a description of the adaptive algorithm of [14], to aid the reader’s understanding of the numerical results that follow. This algorithm is motivated by (3.3) and (3.6); it constructs a temporal mesh such that \(\Vert R_h(\cdot ,t)\Vert \le { TOL}\cdot {{\mathcal {R}}}_p(t)\) for \(p=0,1\), with \(Q:=1.1, \ \tau _{**}:=0\) and \(\tau _*:=5t_1\) in \({{\mathcal {R}}}_1\). (Experiments with larger values of Q and a discussion of implementation of the algorithm are given in [8].) Note that the computation of the mesh in the algorithm is one-dimensional in nature and is independent of the number of spatial dimensions in (1.1), since it is based on the scalar quantity \(\Vert R_h(\cdot ,t)\Vert \).

5.1 Numerical Results with \(\alpha _1<1\)

We start our numerical experiments with three initial-value problems of the form (2.3) to illustrate orders of convergence, since time discretisation is the main focus of our paper. A subdiffusion test problem of the form (1.1) (i.e., containing spatial and temporal derivatives) will then be considered.

As well as results computed on our adaptive mesh, some of the figures compare the adaptive mesh itself with the \((M+1)\)-point graded mesh \(t_k := T(k/M)^r\) for \(k=0,1,\dots ,M\) that is often used in conjunction with the L1 scheme (see [23]). Here \(r\ge 1\) is a user-chosen mesh grading parameter and it is known [13, 24] that when \(\ell =1\) the choice \(r=(2-\alpha )/\alpha \) yields the optimal mesh grading for the problem (1.1); we make an analogous choice of r in our experiments. We shall see that the adaptive mesh constructed by our algorithm — without using any information about the exact solution and without any guidance from the user — is remarkably similar to the optimal graded mesh. Of course this holds great promise for the performance of the algorithm in problems where no a priori analysis of the exact solution (and therefore no optimal a priori mesh) is available.
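For reference, the graded mesh \(t_k = T(k/M)^r\) with \(r=(2-\alpha )/\alpha \) can be generated as in the short Python sketch below. The function name `graded_mesh` and the sample values of \(T\), \(M\) and \(\alpha \) are illustrative assumptions and are not taken from the experiments.

```python
def graded_mesh(T, M, alpha):
    """Return the (M+1)-point graded mesh t_k = T * (k / M)**r with r = (2 - alpha) / alpha."""
    r = (2.0 - alpha) / alpha
    return [T * (k / M) ** r for k in range(M + 1)]

mesh = graded_mesh(T=1.0, M=10, alpha=0.4)   # r = 4 for alpha = 0.4
first_step = mesh[1] - mesh[0]               # fine step next to t = 0
last_step = mesh[-1] - mesh[-2]              # coarse step next to t = T
```

The first step is several orders of magnitude smaller than the last one, which is how the mesh resolves the weak singularity at \(t=0\).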

To begin, we present three initial-value examples to demonstrate that an adaptive approach based on our a posteriori analysis works well in widely-differing regimes.

Example 5.1

Consider (1.1) without spatial derivatives, with \({{\mathcal {L}}}:=1\), \(T=1\), and \(\ell =2\), and

where \(\alpha \in (0,1)\). In this example one has \(q_1(t)>0\) and \(q_2(t)>0\) for all t. The unknown exact solution is replaced by a reference solution (computed on a considerably finer mesh). See Figs. 3 and 4 for errors in the computed solutions and the meshes generated.

Fig. 3 Adaptive algorithm with \({{\mathcal {R}}}_0(t)\) for Example 5.1: loglog graphs of \(\max _{[0,T]}\vert e(t)\vert \) on the adaptive mesh and the corresponding \({ TOL}\), for \(\alpha =0.4\) (left) and \(\alpha =0.9\) (centre). Right: loglog graphs of \(\{t_j\}_{j=0}^M\) as a function of j/M for our adaptive mesh and the standard graded mesh with \(r=(2-\alpha )/\alpha \), \(\alpha =0.4\), \({ TOL}=10^{-3}\), \(M=51\)

Fig. 4 Adaptive algorithm with \({{\mathcal {R}}}_1(t)\) for Example 5.1: \(\vert e(1)\vert \) on the adaptive mesh and the corresponding \({ TOL}\), for \(\alpha =0.4\) (left) and \(\alpha =0.7\) (centre). Right: loglog graph of the pointwise error \(\vert e(t_j)\vert \) on the adaptive mesh and \({ TOL}\cdot t^{\alpha -1}\) for \(\alpha =0.4\), \({ TOL}=10^{-5}\), \(M=346\)

Example 5.2

We modify Example 5.1 by resetting

while retaining \(u(0)=0\) and \(f(t)\equiv 1\). Now the coefficient of the highest-order derivative vanishes for \(t\ge 1/2\). Loglog graphs of reference solutions indicate that the solution to this problem has an initial singularity of type \(t^{\alpha _1}\) (compare the constant-coefficient analysis of Sect. 2.3) and remains smooth away from \(t=0\). See Fig. 5 for errors in the computed solutions and the mesh generated. We also display (see rightmost figure) the meshes generated when \(f(t)=\cos (5t^2)\) to show that the algorithm continues to perform well when f changes rapidly.

Fig. 5 Adaptive algorithm with \({{\mathcal {R}}}_0(t)\) for Example 5.2: loglog graphs of \(\max _{[0,T]}\vert e(t)\vert \) on the adaptive mesh and the corresponding \({ TOL}\) for \(\alpha =0.4\) (left) and \(\alpha =0.8\) (centre). Right: Change f to \(f(t)=\cos (5t^2)\); loglog graphs of \(\{t_j\}_{j=0}^M\) as a function of j/M for our adaptive mesh and the standard graded mesh with \(r=(2-\alpha )/\alpha \) for \(\alpha =0.6\), \({ TOL}=10^{-3}\), \(M=139\)

Example 5.3

We modify Example 5.1 by resetting

Here the situation is opposite to that of Example 5.2: the coefficient of the highest-order derivative vanishes for \(t< 1/2\). Loglog graphs of reference solutions indicate that the solution to this problem has an initial singularity of type \(t^{\alpha _2}\) (one could show this analytically by an extension of Remark 2.6) and remains smooth away from \(t=0\). See Fig. 6 for errors in the computed solutions and the mesh generated.

Fig. 6 Adaptive algorithm with \({{\mathcal {R}}}_0(t)\) for Example 5.3: loglog graphs of \(\max _{[0,T]}\vert e(t)\vert \) on the adaptive mesh and the corresponding \({ TOL}\) for \(\alpha =0.4\) (left) and \(\alpha =0.8\) (centre). Right: loglog graphs of \(\{t_j\}_{j=0}^M\) as a function of j/M for our adaptive mesh and the standard graded mesh with \(r=(2-\alpha _2)/\alpha _2\), \(\alpha =\alpha _1=0.6\), \({ TOL}=10^{-3}\), \(M=54\)

Example 5.4

Now we consider the subdiffusion analogue (1.1) of (5.2): retain the values of \(\alpha _1, \alpha _2, q_1, q_2\) and set

Note that the initial data \(u_0\) has only limited compatibility with the other data at the corner \((\pi ,0)\) of the space-time domain. Nevertheless the algorithm performs satisfactorily. (Related examples where either the exact solution is known, or the initial condition is piecewise linear, were tested in [14].) See Fig. 7 for errors in the computed solutions.

Fig. 7 Example 5.4 adaptive algorithm results: (left) for \({{\mathcal {R}}}_1(t)\) with \(\alpha =0.4\), showing \(\Vert e(1)\Vert \) and \({ TOL}\); for \({{\mathcal {R}}}_0(t)\) with \(\alpha =0.4\) (centre) and \(\alpha =0.8\) (right), \(\max _{t_j\in (0,T]}\Vert e(t_j)\Vert \) on the adaptive mesh and the corresponding \({ TOL}\)

5.2 Numerical Results with \(\alpha _1=1\)

Example 5.5

Consider the IVP (2.3) with \(\alpha _1=1, \lambda =1\) and

See Fig. 8 for results for \(c_1=1\) and Fig. 9 for those for \(c_1=\frac{1}{2}\). When \(c_1=1\), the solution has no initial singularity and we used the exponential barrier function \({{\mathcal {E}}}(t):=1-\exp (-10t)\) since it gives better results in this case. For \(c_1=\frac{1}{2}\) one has \(q_2(0)>0\), so we employed \({{\mathcal {E}}}_0\) and hence \({{\mathcal {R}}}_0\) as in the earlier examples for \(\alpha _1<1\).

Note: when evaluating \({{\mathcal {R}}}(t) :=\left( \sum _{i=1}^{\ell } q_i(t) D _t ^{\alpha _i} + \lambda \right) {{\mathcal {E}}}(t)\) in (3.1), \(D_t^{\alpha _1}{{\mathcal {E}}}={{\mathcal {E}}}'(t)\) is computed explicitly, while \(D_t^{\alpha _2}{{\mathcal {E}}}\) is computed using quadrature.
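The quadrature rule used for \(D_t^{\alpha _2}{{\mathcal {E}}}\) is not specified above, so the following Python sketch shows only one possible choice, stated here as an assumption: the substitution \(v=(t-s)^{1-\alpha }\) removes the weak singularity of the Caputo kernel, and the resulting integral is approximated by the composite midpoint rule. The function name `caputo_by_quadrature` and the default number of subintervals are hypothetical.

```python
import math

def caputo_by_quadrature(dE, t, alpha, n=2000):
    """Approximate D_t^alpha E(t) = (1/Gamma(1-alpha)) * int_0^t (t-s)^(-alpha) E'(s) ds
    for alpha in (0, 1), given the derivative dE = E'.

    With v = (t - s)^(1 - alpha) the integral becomes
        (1/(1-alpha)) * int_0^{t^(1-alpha)} E'(t - v^(1/(1-alpha))) dv,
    which has a bounded integrand and is handled by the composite midpoint rule.
    """
    p = 1.0 / (1.0 - alpha)
    vmax = t ** (1.0 - alpha)
    h = vmax / n
    total = sum(dE(t - ((i + 0.5) * h) ** p) for i in range(n))
    return total * h * p / math.gamma(1.0 - alpha)

# Example with the exponential barrier E(t) = 1 - exp(-10 t), so E'(t) = 10 exp(-10 t)
value = caputo_by_quadrature(lambda s: 10.0 * math.exp(-10.0 * s), t=0.5, alpha=0.3)
```

For this particular barrier the result can be cross-checked against the series \(10\,t^{1-\alpha }\sum _{k\ge 0}(-10t)^k/\Gamma (k+2-\alpha )\), obtained by differentiating the exponential series term by term.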

Fig. 8 Adaptive algorithm with \({{\mathcal {R}}}(t)\) generated by \({{\mathcal {E}}}=1-\exp (-10t)\) for Example 5.5 with \(\alpha _1=1\) and \(c_1=1\): loglog graphs of \(\max _{[0,T]}\vert e(t)\vert \) on the adaptive mesh and the corresponding \({ TOL}\) for \(\alpha _2=0.3\) (left) and \(\alpha _2=0.8\) (centre). Right: computed solutions for this test problem obtained using \({ TOL}=10^{-2}\)

Fig. 9 Adaptive algorithm with \({{\mathcal {R}}}_0(t)\) for Example 5.5 with \(\alpha _1=1\) and \(c_1=\frac{1}{2}\): loglog graphs of \(\max _{[0,T]}\vert e(t)\vert \) on the adaptive mesh and the corresponding \({ TOL}\) for \(\alpha _2=0.3\) (left) and \(\alpha _2=0.8\) (centre). Right: computed solutions for this test problem obtained using \({ TOL}=10^{-2}\)

In the next example we return to our subdiffusion problem (1.1).

Example 5.6

Take \(q_1\), \(q_2\) and f as in Example 5.5, with \(c_1=\frac{1}{2}\), while \({{\mathcal {L}}}\), \(u_0\), \(\Omega \) and \(\lambda =1\) are taken from Example 5.4. Now we choose the temporal grid a priori to be uniform. Once the computed solution is obtained, we compute the residual \(\Vert R_h(\cdot , t)\Vert \) on a finer mesh, with 15 equidistant additional points between any consecutive time layers.

Assuming that there exists a solution \({{\mathcal {E}}}\) of \(\left( \sum _{i=1}^{\ell } q_i(t)\, D _t ^{\alpha _i} +\lambda \right) {{\mathcal {E}}}=\Vert R_h(\cdot , t)\Vert \), inequality (3.8) gives an upper bound for the error, viz., \(\Vert (u_h-u)(\cdot ,t)\Vert \le {{\mathcal {E}}}\). In practice, one finds a numerical approximation \({{\mathcal {E}}}_h\) of \({{\mathcal {E}}}\) on the above fine grid.

It is important to note that, while the computed solution \(u_h\) approximates the solution of a fractional subdiffusion problem with spatial derivatives, the computation of \({{\mathcal {E}}}_h\) is inexpensive even though it is carried out on a much finer temporal grid, since \({{\mathcal {E}}}(t)\) is a solution of an initial-value problem without spatial derivatives.
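The text does not state how \({{\mathcal {E}}}_h\) is computed. As one possible realisation, offered only as an assumption, the Python sketch below applies L1-type time stepping to the scalar problem \(\left( \sum _{i} q_i(t)\, D _t ^{\alpha _i} +\lambda \right) {{\mathcal {E}}}=g(t)\), \({{\mathcal {E}}}(0)=0\), where \(g\) stands for the sampled residual norm. The name `solve_multiterm_ivp` and its argument layout are hypothetical.

```python
import math

def solve_multiterm_ivp(t, alphas, qs, lam, g):
    """L1-type time stepping for sum_i q_i(t) D_t^{alpha_i} E + lam * E = g(t), E(0) = 0.

    t      : increasing mesh with t[0] = 0
    alphas : orders in (0, 1]; qs : matching list of callables q_i(t)
    Returns the list of nodal values E[j] approximating E(t[j]).
    """
    E = [0.0]
    for j in range(1, len(t)):
        tau = t[j] - t[j - 1]
        diag, rhs = lam, g(t[j])
        for alpha, q in zip(alphas, qs):
            # coefficient multiplying (E_j - E_{j-1}) in the discrete derivative
            c = q(t[j]) * tau ** (-alpha) / math.gamma(2 - alpha)
            hist = 0.0
            if alpha < 1:
                # contribution of the earlier intervals (the memory term)
                for k in range(1, j):
                    w = (t[j] - t[k - 1]) ** (1 - alpha) - (t[j] - t[k]) ** (1 - alpha)
                    hist += (E[k] - E[k - 1]) / (t[k] - t[k - 1]) * w
                hist *= q(t[j]) / math.gamma(2 - alpha)
            diag += c
            rhs += c * E[j - 1] - hist
        E.append(rhs / diag)
    return E
```

Each step solves one scalar linear equation, so the total cost is quadratic in the number of fine-grid points and independent of the spatial discretisation, which is consistent with the remark above that this computation is inexpensive.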

See Fig. 10 for results.

Fig. 10 A posteriori error estimation on the uniform temporal mesh for Example 5.6 with \(\alpha _1=1\) and \(c_1=\frac{1}{2}\): loglog graphs of \(\max _{[0,T]}\Vert e(t_j)\Vert \) and the corresponding estimator \(\max _{t_j\in [0,T]}{{\mathcal {E}}}_h(t_j)\) for \(\alpha _2=0.3\) (left) and \(\alpha _2=0.8\) (centre). Right: pointwise-in-time error \(\Vert e(t_j)\Vert \) and pointwise estimator \({{\mathcal {E}}}_h(t_j)\) for \(\alpha _2=0.8\), \(M=32\)

The numerical results in this section demonstrate that, for many different types of data, our algorithm based on the L1 scheme automatically adapts the given initial mesh to compute accurate numerical solutions. It gives excellent results for problems whose solutions have a weak singularity at \(t=0\), without requiring the user to choose a suitable mesh — while if the mesh is prescribed a priori, it can estimate the error in the solution computed on this mesh (see Fig. 10). It is equally good in cases where this weak singularity is absent.

Availability of Data and Materials

Not applicable.

References

Abramowitz, Milton, Stegun, Irene A.: Handbook of mathematical functions with formulas, graphs, and mathematical tables. National Bureau of Standards Applied Mathematics Series, No. 55. U. S. Government Printing Office, Washington, D.C., (1964). For sale by the Superintendent of Documents

Bazhlekova, Emilia: Completely monotone multinomial Mittag-Leffler type functions and diffusion equations with multiple time-derivatives. Fract. Calc. Appl. Anal. 24(1), 88–111 (2021)

Brunner, Hermann: Volterra integral equations, volume 30 of Cambridge Monographs on Applied and Computational Mathematics. Cambridge University Press, Cambridge, 2017. An introduction to theory and applications

Brunner, Hermann, Han, Houde, Yin, Dongsheng: The maximum principle for time-fractional diffusion equations and its application. Numer. Funct. Anal. Optim. 36(10), 1307–1321 (2015)

Chen, Hu, Stynes, Martin: Using complete monotonicity to deduce local error estimates for discretisations of a multi-term time-fractional diffusion equation. Comput. Methods Appl. Math. 22(1), 15–29 (2022)

Diethelm, Kai: The analysis of fractional differential equations, volume 2004 of Lecture Notes in Mathematics. Springer-Verlag, Berlin, 2010. An application-oriented exposition using differential operators of Caputo type

Evans, Lawrence C.: Partial differential equations, volume 19 of Graduate Studies in Mathematics. American Mathematical Society, Providence, RI, second edition (2010)

Franz, Sebastian, Kopteva, Natalia: Pointwise-in-time a posteriori error control for higher-order discretizations of time-fractional parabolic equations. In preparation

Gilbarg, David, Trudinger, Neil S.: Elliptic partial differential equations of second order. Classics in Mathematics. Springer-Verlag, Berlin (2001). Reprint of the 1998 edition

Jin, Bangti: Fractional differential equations: an approach via fractional derivatives, volume 206 of Applied Mathematical Sciences. Springer, Cham (2021)

Jin, Bangti, Lazarov, Raytcho, Zhou, Zhi: Numerical methods for time-fractional evolution equations with nonsmooth data: a concise overview. Comput. Methods Appl. Mech. Engrg. 346, 332–358 (2019)

Kopteva, N.: Maximum principle for time-fractional parabolic equations with a reaction coefficient of arbitrary sign. Appl. Math. Lett. 132, 108209 (2022)

Kopteva, Natalia: Error analysis of the L1 method on graded and uniform meshes for a fractional-derivative problem in two and three dimensions. Math. Comp. 88(319), 2135–2155 (2019)

Kopteva, Natalia: Pointwise-in-time a posteriori error control for time-fractional parabolic equations. Appl. Math. Lett. 123, 107515, 8 (2022)

Li, Zhiyuan, Liu, Yikan, Yamamoto, Masahiro: Initial-boundary value problems for multi-term time-fractional diffusion equations with positive constant coefficients. Appl. Math. Comput. 257, 381–397 (2015)

Luchko, Yury: Maximum principle for the generalized time-fractional diffusion equation. J. Math. Anal. Appl. 351(1), 218–223 (2009)

Luchko, Yury: Initial-boundary problems for the generalized multi-term time-fractional diffusion equation. J. Math. Anal. Appl. 374(2), 538–548 (2011)

Luchko, Yurii, Gorenflo, Rudolf: An operational method for solving fractional differential equations with the Caputo derivatives. Acta Math. Vietnam. 24(2), 207–233 (1999)

Metzler, R., Klafter, J., Sokolov, I.M.: Anomalous transport in external fields: Continuous time random walks and fractional diffusion equations extended. Phys. Rev. E 58(2), 1621–1633 (1998)

Olde Daalhuis, A.B.: Digital Library of Mathematical Functions, Chapter 15 Hypergeometric Function. https://dlmf.nist.gov/15. [Online; accessed 21-Jan-2022]

Popov, A. Yu., Sedletskiĭ, A.M.: Distribution of roots of Mittag-Leffler functions. Sovrem. Mat. Fundam. Napravl. 40, 3–171 (2011). Translation in J. Math. Sci. (N.Y.) 190(2):209–409, 2013

Schumer, Rina, Benson, David A., Meerschaert, Mark M., Baeumer, Boris: Fractal mobile/immobile solute transport. Water Resour. Res. 39(10), 1286 (2003)

Stynes, Martin: A survey of the L1 scheme in the discretisation of time-fractional problems. Numer. Math. Theor. Meth. Appl. (2022). (To appear)

Stynes, Martin, O’Riordan, Eugene, Gracia, José Luis: Error analysis of a finite difference method on graded meshes for a time-fractional diffusion equation. SIAM J. Numer. Anal. 55(2), 1057–1079 (2017)

Vainikko, Gennadi: Which functions are fractionally differentiable? Z. Anal. Anwend. 35(4), 465–487 (2016)

Yang, Zhiwei, Zheng, Xiangcheng, Wang, Hong: A variably distributed-order time-fractional diffusion equation: analysis and approximation. Comput. Methods Appl. Mech. Engrg. 367, 113118, 16 (2020)

Zheng, Xiangcheng, Wang, Hong: Optimal-order error estimates of finite element approximations to variable-order time-fractional diffusion equations without regularity assumptions of the true solutions. IMA J. Numer. Anal. 41(2), 1522–1545 (2021)

Funding

The research of Natalia Kopteva is supported in part by Science Foundation Ireland under grant 18/CRT/6049. The research of Martin Stynes is supported in part by the National Natural Science Foundation of China under grants 12171025 and NSAF-U1930402.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

A Variant of Lemma 2.3

In this appendix we shall prove a result (Lemma A.3) that complements Lemma 2.3. This is done by using an explicit complex contour integral formula to derive a positivity property of the multinomial Mittag-Leffler function (Lemma A.2) that appears to be new.

Our argument starts with the following elementary result.

Lemma A.1

Let m and n be nonnegative integers with \(m<n\). Set \(S(s) = \sum _{j=0}^n k_js^{\gamma _j}\) for \(s\in [0,\infty )\), where \(0 = \gamma _0< \gamma _1<\dots < \gamma _n \le 1\) and \(k_j>0\) for \(0\le j\le m\), \(k_j<0\) for \(m<j\le n\). Then the equation \(S(s) =0\) has a unique solution \(s_0\in (0,\infty )\), with \(S(s)>0\) for \(0\le s <s_0\) and \(S(s)<0\) for \(s_0<s < \infty \).

Proof

If \(t\in (0,\infty )\), then

Hence

One has \(S(0)=k_0>0\). As \(s\rightarrow \infty \), the term \(k_ns^{\gamma _n}\) in S(s) will dominate; it follows that \(S(s)<0\) for all sufficiently large s. Hence \(S(s)=0\) has at least one solution \(s_0\) in \((0,\infty )\).

From (A.1) it follows that \(S'(s_0)<0\), so \(S(s)<0\) on some interval \((s_0, s_0+\delta )\). Now (A.1) ensures that S can never reach a minimum on \((s_0, \infty )\), which implies that \(S(s)<0\) for \(s\in (s_0,\infty )\). Thus \(s_0\) is the unique solution of \(S(s)=0\). \(\square \)
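As a numerical illustration of Lemma A.1 (not a substitute for the proof), the Python sketch below takes one sample function of the required form, \(S(s)=1+2s^{0.3}-s^{0.8}\), locates its sign change by bisection and leaves the sign pattern to be checked on a grid. The coefficients, exponents and function names are chosen here purely for illustration.

```python
def S(s, k=(1.0, 2.0, -1.0), gamma=(0.0, 0.3, 0.8)):
    """Sample function of the form in Lemma A.1: positive coefficients first,
    then negative ones, with exponents 0 = gamma_0 < gamma_1 < gamma_2 <= 1."""
    return sum(kj * s ** gj for kj, gj in zip(k, gamma))

def sign_change_point(f, lo, hi, iters=200):
    """Bisection for a root of f on [lo, hi], assuming f(lo) > 0 > f(hi)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if f(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

s0 = sign_change_point(S, 0.0, 100.0)   # S(0) = 1 > 0 and S(100) < 0
```

In agreement with the lemma, sampling \(S\) on a fine grid shows positive values to the left of `s0` and negative values to the right.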

We now prove a new positivity property of the multinomial Mittag-Leffler function that is related to Lemma 2.9; this proof is in the spirit of classical analyses of Mittag-Leffler functions. The argument used is partly based on [21, pp.215–216], where a similar result was obtained for the simpler case of the two-parameter Mittag-Leffler function \(E_{\alpha ,\beta }(t)\).

Lemma A.2

Assume that \(1< \beta < 1+\alpha _1\) and \(\lambda >0\). Then

for all \(t> 0\).

Proof

For each \(t>0\), by Remark 2.8 and [18, eq.(47)] we have

where \(r := \max \left\{ 1, \left( \lambda +\sum q_j\right) ^{1/(\alpha _1-\alpha _2)} \right\} \), and \({\gamma (R, \theta _1,\theta _2)}\) (for \(R\ge 0\) and \(-\pi \le \theta _1\le \theta _2\le \pi \)) denotes the complex-plane Hankel contour that comprises the ray \(\arg \zeta = \theta _1\) with \(\vert \zeta \vert \ge R\), the arc \(\vert \zeta \vert =R\) with \(\theta _1\le \arg \zeta \le \theta _2\), and the ray \(\arg \zeta = \theta _2\) with \(\vert \zeta \vert \ge R\), and the contour is traversed in the direction of increasing \(\arg \zeta \).

The substitution \(w= \zeta ^{\alpha _1}\) gives

Observe that if \(w\in \gamma (r^{\alpha _1}, -\alpha _1\pi , \alpha _1\pi )\), then \(\vert \arg w\vert \le \alpha _1\pi \) independently of r; hence

so (recall that \(\lambda >0\)) the denominator of the integrand will not vanish if we change the value of r in the contour, and consequently the value of the integral will not change (by Cauchy’s integral theorem). Furthermore, we can permit \(r\rightarrow 0\) because \(\beta <1+\alpha _1\) ensures that the integral remains finite. Thus we can replace the contour \(\gamma (r^{\alpha _1}, -\alpha _1\pi , \alpha _1\pi )\) in (A.2) by \(\gamma (0, -\alpha _1\pi , \alpha _1\pi )\).

Next, set \(w = se^{\pm i\alpha _1\pi }\) along the ray \(\arg w = \pm \alpha _1\pi \) (taking the same sign in both expressions). This yields

where \(\xi := \sum _{j=2}^\ell q_js^{\alpha _j/\alpha _1}e^{i(\alpha _1-\alpha _j)\pi } +\lambda e^{i\alpha _1\pi }\) and \({\bar{\xi }}\) is its complex conjugate. Now

where \(v(s) := s \sin \beta \pi + \sum _{j=2}^\ell q_j s^{\alpha _j/\alpha _1} \sin (\beta -\alpha _1+\alpha _j)\pi + \lambda \sin (\beta -\alpha _1)\pi \). Hence (A.3) becomes

Note that v(s) has exactly the same structure as S(s) in Lemma A.1, since \(0< \alpha _j/\alpha _1 <1\), \(1< \beta < 1+\alpha _1\) and \(\lambda >0\). Thus there exists \(s_0>0\) such that \(v(s)>0\) for \(0<s<s_0\) and \(v(s)<0\) for \(s>s_0\). From Definition 2.7 we get \({{\tilde{E}}}(0) = 1/\Gamma (\beta )>0\). By continuity we can choose \(t_0>0\) such that \({{\tilde{E}}}(t)>0\) on \((0,t_0]\), which implies \(I(t_0)>0\). That is, recalling the properties of \(s_0\),

Then for any \(t>t_0\), using (A.5) we get

Now move the integral \( \int _{s_0}^\infty \dots \) to the left-hand side; this gives \(I(t)>0\). Hence \({{\tilde{E}}}(t)>0\) for \(t>t_0\) and we are done. \(\square \)

We can now prove our variant of Lemma 2.3.

Lemma A.3

Consider the homogeneous version of the initial-value problem (2.3):

where the \(q_i\) are constants and \(\lambda \ge 0\). Then this problem has a solution y, with \(y(t)\ge 0\) for \(t\in [0,T]\).

Proof

If \(\lambda =0\) then \(y(t)\equiv 1\) is the unique solution of (A.6) by [18, Theorem 4.1]. Thus we can assume that \(\lambda >0\). From [17, Theorem 6] the solution of (A.6) is

But [15, Lemma 3.1] states that for \(m\ge 1\) one has

for \(0<\beta _0 <2\) and \(0<\beta _j<1\) (\(j=1,\dots ,m\)) and any \(z_j\in {\mathbb {R}}\). In particular this implies that

Hence, using Remark 2.8, we get

The result now follows by applying Lemma A.2 to the term \({{\mathcal {F}}}_{(\dots ),1}\) and Lemma 2.9 to each term \({{\mathcal {F}}}_{(\dots ),1+\alpha _1-\alpha _j}\). \(\square \)

Kopteva, N., Stynes, M. A Posteriori Error Analysis for Variable-Coefficient Multiterm Time-Fractional Subdiffusion Equations. J Sci Comput 92, 73 (2022). https://doi.org/10.1007/s10915-022-01936-2

DOI: https://doi.org/10.1007/s10915-022-01936-2