Abstract

We examine the long time error behavior of discontinuous Galerkin spectral element approximations to hyperbolic equations. We show that the choice of numerical flux at interior element boundaries affects the growth rate and asymptotic value of the error. With the upwind flux, the error reaches its asymptotic value faster, and that value is lower than with a central flux, especially for low resolution computations. The differences in the error caused by the numerical flux choice decrease as the solution becomes better resolved.


1 Introduction

To compute long time behavior of hyperbolic wave propagation problems accurately, the error should not grow large over time. Stability of a numerical scheme ensures that the solution remains bounded in some norm for fixed time, but the equation that describes the time variation of the error includes a forcing term generated by the approximation or truncation errors. That forcing term can lead to unbounded growth in the error for long times even though the solution remains bounded.

Examples of both linear and bounded temporal error growth are observed in computations presented in the literature. Linear growth of the error in approximations of hyperbolic systems has been noted for both finite difference [5, 11] and discontinuous Galerkin [4, 7] approximations. In [7], the authors prove linear growth of the error and note that the bound is sharp, meaning that slower than linear growth cannot be guaranteed, though the growth rate is controlled by the order of the approximation. On the other hand, bounded error behavior is observed for some finite difference approximations, e.g. [1, 2, 8].

An explanation for when the error is bounded or not was presented in [10]. Linear growth is observed when waves are trapped in cavities or in periodic geometries, which is what was studied in [7]. Bounded growth occurs when waves are present in the domain for finite amounts of time, as in an inflow-outflow problem. The idea was explained at the partial differential equation (PDE) level in [10] in terms of a model problem with forcing. The analysis of SBP-SAT (Summation-By-Parts/Simultaneous-Approximation-Term) finite difference approximations in that paper predicted the same behavior. The conclusion was that the error is bounded if a sufficiently dissipative boundary procedure is used; the boundedness is not a result of the internal discretization. The error levels were significantly lower using characteristic boundary conditions than noncharacteristic ones. Since the error is bounded, arbitrarily high order accuracy can be obtained at any time.

In this paper we examine the long time behavior of the error for discontinuous Galerkin spectral element methods (DGSEM). We show that although the bounded error property is a result of dissipative boundary conditions as was shown in [10], the behavior of the error and its bound are influenced by the internal approximation. In particular, we show that the choice of the numerical flux at interior element interfaces affects both the rate at which the error grows and the asymptotic value it attains. The presence of inter-element dissipation introduced by the numerical flux is a feature of the DGSEM not found in single domain SBP finite difference approximations. The results, however, apply to multidomain or multiblock versions of those methods.

2 The Model Problem in One Space Dimension

To show the boundedness of the energy when characteristic boundary conditions are applied, we study the error equation for the DGSEM approximation of the scalar constant coefficient initial boundary value problem with a non-periodic boundary condition

For truncation errors of the approximation to be bounded in time we assume that the initial and boundary values are constructed so that \(u(x,t) \in H^{m}(0,L)\) for \(m> 1\) and that its norm \(\left\| u\right\| _{H^{m}}\) is uniformly bounded in time. Such conditions are physically meaningful and describe problems where the boundary input is, for example, sinusoidal.

The energy of the solution of the initial boundary value problem, measured by the \(\mathbb {L}^{2}\) norm \({\left\| u \right\| ^2} = (u,u) = \int _0^L {{u^2}dx}\), is increased through the addition of energy at the left boundary, and dissipated as waves move out through the boundary at the right. To see this, construct a weak form of the equation by multiplying it with a test function \(\phi \in \mathbb {L}^{2}(0,L)\) and integrating over the domain

Replacing \(\phi \) with u yields

Integration by parts implies that when the boundary condition at the left is applied,

Integrating in time over an interval [0, T] leads to

Thus, the energy at time T is the initial energy, plus energy added at the left through the boundary condition minus the energy lost through the right boundary. It is this behavior that the numerical approximation should emulate.
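The balance can be checked numerically for a concrete solution. The sketch below assumes that the model problem is the unit-speed advection equation \(u_{t}+u_{x}=0\) with inflow data \(g(t)\) at \(x=0\) (consistent with the relation \(u_{t}=-u_{x}\) used in Sect. 4) and uses the plane wave of Sect. 6.1 as the exact solution. The helper `gauss_integral` and all variable names are illustrative and not part of the paper.

```python
import numpy as np

def gauss_integral(f, a, b, n=128):
    """Integrate f over [a, b] with an n-point Gauss-Legendre rule."""
    x, w = np.polynomial.legendre.leggauss(n)
    return 0.5 * (b - a) * np.sum(w * f(0.5 * (b - a) * x + 0.5 * (a + b)))

L, T = 2.0 * np.pi, 3.0

def u(x, t):
    # Exact solution of the assumed problem u_t + u_x = 0
    return np.sin(12.0 * (x - t - 0.1))

energy_T = gauss_integral(lambda x: u(x, T) ** 2, 0.0, L)       # ||u(T)||^2
energy_0 = gauss_integral(lambda x: u(x, 0.0) ** 2, 0.0, L)     # ||u(0)||^2
added_left = gauss_integral(lambda t: u(0.0, t) ** 2, 0.0, T)   # g(t) = u(0, t)
lost_right = gauss_integral(lambda t: u(L, t) ** 2, 0.0, T)     # outflow at x = L

# Energy at T minus (initial energy + added at left - lost at right)
residual = energy_T - (energy_0 + added_left - lost_right)
print(f"energy balance residual: {residual:.2e}")
```

The residual should vanish to quadrature accuracy, which is the statement that energy enters only through the left boundary and leaves only through the right.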

3 The DGSEM Approximation of the Model Problem

To construct the DGSEM, we subdivide the interval into elements \(e^{k}=\left[ x_{k-1},x_{k}\right] \), \(k=1,2,\ldots ,K\), where the \(x_{k},\;k=0,1,\ldots ,K\) are the element boundaries with \(x_{0}=0\) and \(x_{K}=L\). Then

Since \(\phi \in \mathbb {L}^{2}\), we can choose \(\phi \) to be nonzero selectively in each element, which tells us that on each element the solution satisfies

To allow us to use a Legendre polynomial approximation of the solution, we map the element \(\left[ x_{k-1},x_{k}\right] \) onto the reference element \(E=[-1,1]\) by the linear transformation

where \(\Delta x_{k} = x_{k}-x_{k-1}\) is the length of the element. Under this transformation, \(u_{x}= 2u_{\xi }/\Delta x_{k}\) so the elemental contribution is

We then integrate the term with the space derivative by parts to get the elemental weak form

Finally, we define the elemental inner product and norm by

and write the elemental contribution as

Since it is unlikely to cause confusion, we will typically drop the subscript E.

We are now ready to construct the DGSEM approximation. Let \(\mathbb {P}^{N}\) be the space of polynomials of degree \(\le N\) and let \(\mathbb {I}^{N}:\mathbb {L}^{2}(-1,1)\rightarrow \mathbb {P}^{N}(-1,1)\) be the interpolation operator. We approximate the solution by a polynomial interpolant, \(u\approx U\in \mathbb {P}^{N}\), which we write in Lagrange (nodal) form

where \(\ell _{j}(\xi )\in \mathbb {P}^{N}\) is the jth Lagrange interpolating polynomial that satisfies \(\ell _{j}\left( \xi _{i}\right) = \delta _{ij}\). The interpolation nodes, \(\xi _{j},\; j=0,1,\ldots ,N\) are the nodes of the Gauss-Lobatto quadrature

Then we can define the discrete inner product and norm in terms of the Legendre–Gauss–Lobatto quadrature as

We choose the Gauss–Lobatto points here because they allow for the derivation of provably stable approximations in multiple dimensions and on curved elements [9]. The discrete norm is equivalent to the continuous norm ([3], after (5.3.2)) in that for all \(U\in \mathbb {P}^{N}\),

The Gauss–Lobatto quadrature has the property [3] that

Furthermore, with the interpolation property \(\mathbb {I}^{N}(u)\left( \xi _{j}\right) = u\left( \xi _{j}\right) = u_{j}\),

which says that the interpolation operator is the orthogonal projection of \(\mathbb {L}^{2}\) onto the space of polynomials with respect to the discrete inner product \(\left( \cdot ,\cdot \right) _{N}\).
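The quadrature properties used above can be illustrated with a few lines of NumPy. This is a minimal sketch, assuming the usual Legendre–Gauss–Lobatto construction (interior nodes at the zeros of \(P_{N}'\), weights \(2/(N(N+1)P_{N}(\xi _{j})^{2})\)); the function name `lgl_nodes_weights` is hypothetical. It checks exactness for a polynomial of degree \(\le 2N-1\) and evaluates the ratio of discrete to continuous norms for \(U=P_{N}\), the case in which the quadrature is no longer exact.

```python
import numpy as np
from numpy.polynomial import legendre as leg

def lgl_nodes_weights(N):
    """Legendre-Gauss-Lobatto nodes and weights on [-1, 1] for degree N >= 1."""
    PN = leg.Legendre.basis(N)
    interior = np.sort(np.real(PN.deriv().roots()))   # zeros of P_N'
    x = np.concatenate(([-1.0], interior, [1.0]))
    w = 2.0 / (N * (N + 1) * PN(x) ** 2)
    return x, w

N = 4
x, w = lgl_nodes_weights(N)
PN = leg.Legendre.basis(N)

# Exactness: x^(2N-2) has degree <= 2N-1, and its exact integral is 2/(2N-1).
quad_err = abs(np.sum(w * x ** (2 * N - 2)) - 2.0 / (2 * N - 1))

# Norm comparison for U = P_N (degree 2N integrand, quadrature not exact).
discrete_sq = np.sum(w * PN(x) ** 2)      # ||P_N||_N^2
continuous_sq = 2.0 / (2 * N + 1)         # ||P_N||^2, known in closed form
ratio_sq = discrete_sq / continuous_sq

print(f"quadrature error: {quad_err:.2e}, squared norm ratio: {ratio_sq:.6f}")
```

For \(U=P_{N}\) the squared ratio works out to \(2+1/N\), so the discrete norm overestimates the continuous one by a bounded factor, which is the kind of two-sided equivalence the analysis relies on.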

In addition to the solution, three more quantities need to be approximated. We use the Gauss-Lobatto quadrature to approximate the inner products in (12). We restrict the test function to be \(\phi ^{k}\in \mathbb {P}^{N}\subset \mathbb {L}^{2}\). Finally, we introduce the continuous numerical “flux” \(U^{*}=U^{*}\left( U^{L},U^{R}\right) \) to couple the elements at the boundaries to create the weak form of the DGSEM

In this work we will choose the numerical flux to have the form

where \(U^{L,R}\) are the states on the left and the right and \(\sigma \in [0,1]\). The numerical flux includes both the upwind (\(\sigma =1\)) and central (\(\sigma =0\)) fluxes
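As an illustration only, a flux with these two limits can be written as a \(\sigma \)-weighted blend of the average and the jump. The form below is an assumption for the unit-speed, right-moving problem, chosen so that \(\sigma =1\) returns the left (upwind) state and \(\sigma =0\) returns the average; the function name is hypothetical.

```python
def numerical_flux(u_left, u_right, sigma):
    """Assumed blend of central and upwind fluxes for right-moving unit-speed advection."""
    return 0.5 * (u_left + u_right) + 0.5 * sigma * (u_left - u_right)

upwind_value = numerical_flux(1.0, 3.0, sigma=1.0)    # picks the left state
central_value = numerical_flux(1.0, 3.0, sigma=0.0)   # arithmetic mean
print(upwind_value, central_value)
```

Intermediate values of \(\sigma \) interpolate between the two, so \(\sigma \) acts as a dial for the amount of interface dissipation.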

In shorthand, the approximation on the \(k^{th}\) element satisfies

3.1 Stability of the DGSEM

The DGSEM is stable in the sense that the energy of the approximate solution approximates (5) if the upwind numerical flux is used at the physical boundaries. To show this, we let \(\phi ^{k} = U^{k}\) to get the energy equation on an element

The quadrature in the discrete inner product on the right is exact. Alternatively, we can say that the discrete inner product satisfies the summation by parts rule [9]

Therefore, the elemental contribution to the energy is

Summing over all the elements gives the time rate of change of the total energy

The sum over the element endpoints splits into three parts: One for the left physical boundary, one for the right physical boundary and a sum over the internal element endpoints. The internal term has contributions from the elements to the left and the right of the interface and uses the fact that the numerical flux \(U^{*}\) is unique at the interface. Therefore,

where \(U_{ext}\) is some external state required by the numerical flux function.

Using the central solver at the boundaries does not give conditions that match those of the PDE seen in (5). On the other hand, when the upwind flux is used,

The terms in the sum over the internal faces are each of the form

where \(\llbracket V\rrbracket = {V^L} - {V^R}\) is the jump in the argument. This quantity is non-negative for either the upwind or central numerical flux. Direct calculation shows that

Therefore,

Let us now define the global norm by

Then, if we define U(0) as the interpolant of the initial condition \(u_{0}\) on the element,

which also satisfies

Equation (33) matches (5) except for the additional dissipation that comes from the weak imposition of the boundary condition, and dissipation from the jumps in the solution at the element interfaces if the upwind flux (\(\sigma =1\)) is used. Therefore, the DGSEM for the (constant coefficient) problem is strongly stable and the energy at any time T is bounded by the initial energy plus the energy added at the left boundary minus the energy lost from the right if the upwind flux (i.e. characteristic boundary condition) is used at the endpoints of the domain.
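The energy statement can be verified directly on random data. The following sketch assumes the unit-speed problem \(u_{t}+u_{x}=0\), the \(\sigma \)-blended flux \(U^{*}=\tfrac{1}{2}(U^{L}+U^{R})+\tfrac{\sigma }{2}(U^{L}-U^{R})\), upwind fluxes at both physical boundaries, and a strong-form implementation that is algebraically equivalent to the weak form through summation by parts. All function names are illustrative. It compares the computed rate of change of the discrete energy with the boundary and jump terms derived by hand under those assumptions.

```python
import numpy as np
from numpy.polynomial import legendre as leg

def lgl(N):
    """Legendre-Gauss-Lobatto nodes and weights on [-1, 1]."""
    PN = leg.Legendre.basis(N)
    x = np.concatenate(([-1.0], np.sort(np.real(PN.deriv().roots())), [1.0]))
    return x, 2.0 / (N * (N + 1) * PN(x) ** 2)

def diff_matrix(x):
    """Nodal differentiation matrix from barycentric weights."""
    n = len(x)
    dx = x[:, None] - x[None, :] + np.eye(n)      # ones on the diagonal avoid 0/0
    bw = 1.0 / np.prod(dx, axis=1)
    D = (bw[None, :] / bw[:, None]) / dx
    np.fill_diagonal(D, 0.0)
    np.fill_diagonal(D, -D.sum(axis=1))           # rows of D sum to zero
    return D

def rhs(U, g, sigma, h, D, w):
    """dU/dt for u_t + u_x = 0; U has shape (K, N+1), uniform element size h."""
    K = U.shape[0]
    left = np.concatenate(([g], U[:-1, -1]))           # state left of each left face
    right = np.concatenate((U[1:, 0], [U[-1, -1]]))    # state right of each right face
    s_l = np.full(K, float(sigma)); s_l[0] = 1.0       # upwind at the inflow boundary
    s_r = np.full(K, float(sigma)); s_r[-1] = 1.0      # upwind at the outflow boundary
    flux_l = 0.5 * (1 + s_l) * left + 0.5 * (1 - s_l) * U[:, 0]
    flux_r = 0.5 * (1 + s_r) * U[:, -1] + 0.5 * (1 - s_r) * right
    R = U @ D.T
    R[:, 0] -= (flux_l - U[:, 0]) / w[0]
    R[:, -1] += (flux_r - U[:, -1]) / w[-1]
    return -(2.0 / h) * R

def energy_rate(U, g, sigma, h, D, w):
    """d/dt of sum_k (h/2) ||U^k||_N^2, evaluated from the semi-discrete equations."""
    return h * np.sum(w * U * rhs(U, g, sigma, h, D, w))

def predicted_rate(U, g, sigma):
    """Hand-derived rate: inflow energy minus boundary and interface dissipation."""
    jumps = U[:-1, -1] - U[1:, 0]
    return g**2 - (U[0, 0] - g) ** 2 - U[-1, -1] ** 2 - sigma * np.sum(jumps**2)

N, K, h, g = 4, 6, 0.3, 0.7                       # arbitrary test values
xi, w = lgl(N)
D = diff_matrix(xi)
U = np.random.default_rng(0).standard_normal((K, N + 1))
for sigma in (0.0, 1.0):
    print(sigma, energy_rate(U, g, sigma, h, D, w), predicted_rate(U, g, sigma))
```

With \(\sigma =0\) the interface sum drops out and only the two physical boundaries dissipate energy; with \(\sigma =1\) every interior jump contributes additional damping.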

4 The Error Equation

We now study the time behavior of the error, whose elemental contribution is \(E^{k}=u\left( x\left( \xi \right) ,t\right) - U^{k}\left( \xi ,t \right) \). For a more general derivation and for multidimensional problems, although with exact integration, see [7] and [12].

We compute the error in two parts as

so that \(\varepsilon ^{k}\in \mathbb {P}^{N}\). The triangle inequality allows us to bound the two parts separately

The interpolation error, \(\varepsilon ^{k}_{p}\), is independent of the approximate solution and is the sum of the series truncation error and the aliasing error. Its continuous norm converges spectrally fast as [3] (5.4.33)

where

On an element itself (as opposed to the reference element), the interpolation error is bounded by [3] (5.4.42)

for \(n=0,1\). Equivalence of the discrete and continuous norms allows us to bound the discrete norm in terms of the continuous one, so the contribution of \(\varepsilon ^{k}_{p}\) in (36) decays spectrally fast.
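The spectral decay of the interpolation error is easy to observe for a smooth function. The sketch below interpolates one element's worth of a sinusoid at the Gauss–Lobatto nodes and measures the maximum error on a fine grid. The element size \(2\pi /50\), the wavenumber 12, and the phase shift are illustrative choices modeled on Sect. 6.1, and the function names are hypothetical.

```python
import numpy as np
from numpy.polynomial import legendre as leg

def lgl_nodes(N):
    """Legendre-Gauss-Lobatto nodes on [-1, 1]."""
    PN = leg.Legendre.basis(N)
    return np.concatenate(([-1.0], np.sort(np.real(PN.deriv().roots())), [1.0]))

def interpolation_error(f, N, n_fine=400):
    """Max-norm error of the degree-N interpolant of f at the LGL nodes."""
    xi = lgl_nodes(N)
    # Solve for Legendre coefficients of the interpolant (nodal -> modal).
    coeffs = np.linalg.solve(leg.legvander(xi, N), f(xi))
    xf = np.linspace(-1.0, 1.0, n_fine)
    return np.max(np.abs(leg.legval(xf, coeffs) - f(xf)))

half_dx = 0.5 * (2.0 * np.pi / 50)                 # half the element length

def f(xi):
    # Sinusoid restricted to one element and mapped to the reference element
    return np.sin(12.0 * half_dx * xi + 0.3)

errors = {N: interpolation_error(f, N) for N in (2, 4, 6, 8)}
for N, err in errors.items():
    print(f"N = {N}: max interpolation error = {err:.3e}")
```

The error should fall by several orders of magnitude with each increase in \(N\), faster than any fixed algebraic rate would give.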

The part of the error that depends on U, namely \(\varepsilon ^{k}\), depends on the spatial approximation. To find the equation that \(\varepsilon ^{k}\) satisfies, note that u satisfies the continuous equation (12) and that \(u=\mathbb {I}^{N}(u) + \varepsilon ^{k}_{p}\). Then when we replace u by this decomposition and restrict \(\phi \) to \(\mathbb {P}^{N}\subset \mathbb {L}^{2}\),

Note that the endpoints of the interval are Gauss-Lobatto points, so the interpolant equals the solution there and \(\varepsilon ^{k}_{p}=0\) at the endpoints. Also, we can integrate the last term by parts so the boundary terms on the right vanish and

Next,

where the term in the braces is the error associated with the Gauss-Lobatto quadrature, which is spectrally small through [3] (5.4.38)

for all \(\phi \in \mathbb {P}^{N}\) and \(m\ge 1\) and some C independent of m and u. The error bound (43) comes from applying the Cauchy–Schwarz inequality, the interpolation error estimate, exactness of the quadrature and the norm equivalence to

where \(\varPi ^{N}:\mathbb {L}^{2}\rightarrow \mathbb {P}^{N}\) is the \(\mathbb {L}^{2}\) orthogonal projection (series truncation) operator.

Also when \(\phi \) is restricted to \(\mathbb {P}^{N}\), the volume term in (41) is equal to the quadrature

Finally, the value of the interpolant at a point can be represented in terms of the limits from the left, \({\mathbb {I}^N}{{(u)}^ - }\), and the right, \({\mathbb {I}^N}{{(u)}^ + }\) as

At the element interfaces, u is continuous (\(m>1\)), so that the error term in the braces is zero. Thus, at \(\xi =\pm 1\), \({\mathbb {I}^N}(u) = {U^*}\left( {{\mathbb {I}^N}{{(u)}^ - },{\mathbb {I}^N}{{(u)}^ + }} \right) \).

Making these substitutions,

The right hand side of (47) is the amount by which the exact solution u fails to satisfy the approximation (22), in other words it is the spectrally small “truncation error”. Therefore, we use (44) and write

where

and

The quantity \(\mathbb Q\) measures the projection error of a polynomial of degree N onto a polynomial of degree \(N-1\). It is bounded under the assumptions on the boundedness of u. The remaining parts of \(\mathbb T\) satisfy bounds determined by (39). Specifically,

which is convergent in N when \(m>1\) and the Sobolev norm of the solution is uniformly bounded in time. (It is for this reason the initial and boundary conditions for (1) have the specified smoothness.) The norm of the time derivative term is similarly bounded in time since \(u_{t}=-u_{x}\).

When we subtract (22) from (48), we get an equation for the error, \(\varepsilon ^{k}\)

where by linearity of the numerical flux,

We get the energy equation for the error by letting \(\phi ^{k} = \varepsilon ^{k}\). Then

As before, summation by parts says that

Therefore,

We now sum over all of the elements

to get the global energy equation

which is of the same form as (26) except for the right hand side generated by the approximation errors.

We now bound the right hand side of (58). We re-write it as

and use the Cauchy–Schwarz inequality on the inner products to get the bound

We then use the Cauchy–Schwarz inequality

to see that

Using the definition of the global norm (32) and the equivalence between the continuous and discrete norms, (16),

Therefore, the global error equation is

Again, the sum over the element endpoints splits into three parts: One for the left physical boundary, one for the right physical boundary and a sum over the internal element endpoints. The last has contributions from the elements to the left and the right of the interface

The external states for the physical boundary contributions are zero because \(\mathbb {I}^{N}(u)=g\) at the left boundary and the external state for \(U^{1}\) is set to g. At the right boundary, where the upwind numerical flux is used, it does not matter what we set for the external state, since its coefficient in the numerical flux is zero.

The inner element boundaries contribute as in the stability proof

At the left boundary, let \(e\leftarrow {\varepsilon ^1}( - 1)\) to simplify the notation. Then

At the right, with \(e\leftarrow {\varepsilon ^K}( 1)\),

Therefore, the energy growth rate is bounded by

Grouping the boundary and interface terms,

where

Note that \(BTs\ge 0\). We also note that (69) is the same kind of estimate found for summation by parts finite difference approximations [10] except for the additional sum over the squares of the element endpoint jumps, which represents additional damping (when \(\sigma >0\)) that does not exist in the single block finite difference approximation. However, in the multi-block version, it does, see Remark 2 below.

5 Bounded Error in Time for the DGSEM

Using the product rule, we write (70) as

As noted in [10], one should not throw away the dissipation contributed by the boundary terms. So we leave them in and write

In [10], it is argued that the mean value of \(\eta (t)\) over any finite time interval, \(\bar{\eta }\), is bounded from below by a positive constant, i.e., \(\bar{\eta }\geqslant {\delta _0} > 0\). Furthermore, the truncation and quadrature errors are bounded in time under the assumption that u and its time and space derivatives are bounded in time. An integrating factor allows one to integrate (73) to get a bound on the error at any time t

where, by the boundedness assumption on the exact solution, \(M = \mathop {\max }\limits _{s \in [0,\infty )} \mathbb E(s)<\infty \).

Equation (74) says that for bounded truncation error the dissipative boundary conditions keep the error bounded for large time.

We now make four predictions from (74) about the behavior of the error, which come from the fact that \(\delta _{0}\) is a lower bound on the average of \(\eta (t)\), which in turn depends on the size of the contributions of the element boundaries. Before doing so, we modify the boundary terms to explicitly incorporate the upwind flux (\(\sigma = 1\)) at the physical boundaries. We now write

The model (74) predicts:

P1. Using the upwind flux at the physical boundaries and either the upwind flux or the central flux at the interior element interfaces, the error growth is bounded asymptotically in time.

Under these conditions, \(BTs\ne 0\) for all time, leading to (74). For large time, the error \({\left\| {\varepsilon (t)} \right\| _N}\rightarrow M/\delta _{0}\). Equivalence of the norms implies that the same holds true in the continuous norm.

P2. Using the upwind flux \(\sigma = 1\) in the interior will lead to a smaller asymptotic error than using the central flux, \(\sigma = 0\). This will be especially true for under-resolved approximations.

As time increases the error approaches \(M/\delta _{0}\), so the larger \(\delta _{0}\) is the smaller the asymptotic error. Using the upwind flux in the interior, \(\sigma =1\), increases the contribution of the boundary terms, BTs, and hence the size of the mean, \(\bar{\eta }\). The interface jumps in (75) are larger when the resolution is low, so the effect will be more pronounced at low resolution.

P3. As the resolution increases, the difference between the asymptotic error from the central and upwind fluxes should decrease.

Following the argument of prediction P2, the size of the jumps decreases as the solution converges, therefore decreasing the effects of the inter-element jump terms in BTs so that \(\delta _{0}\) approaches the same value.

P4. The error growth rate will be larger when the upwind flux is used compared to when the central flux is used. Equivalently, the upwind flux solution should approach its asymptotic value faster than the central flux solution.

The rate at which the error approaches the asymptotic value depends on \(\delta _{0}\), which is larger with the upwind flux due to the presence of the jump terms in the interior.
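The role of \(\delta _{0}\) in these predictions can be seen in a scalar caricature of the error model. The sketch below assumes zero initial error, replaces \(\eta (t)\) by the constant \(\delta _{0}\), and treats the inequality as an equality, so the bound solves \(y' = -\delta _{0}y + M\) with \(y(0)=0\). The numerical values of \(M\) and \(\delta _{0}\) are hypothetical and chosen only to contrast a weakly and a strongly dissipative case.

```python
import numpy as np

def error_bound(t, delta0, M):
    """Closed-form solution of y' = -delta0 * y + M with y(0) = 0."""
    return M * (1.0 - np.exp(-delta0 * t)) / delta0

def time_to_fraction(delta0, fraction=0.95):
    """Time at which the bound reaches `fraction` of its asymptote M / delta0."""
    return -np.log(1.0 - fraction) / delta0

M = 1.0e-3                                   # hypothetical truncation error level
cases = {"weak dissipation": 0.5, "strong dissipation": 2.0}   # hypothetical delta0
for label, delta0 in cases.items():
    asymptote = M / delta0
    t95 = time_to_fraction(delta0)
    print(f"{label}: asymptote = {asymptote:.2e}, time to 95% = {t95:.2f}")
```

In this simplified model a larger \(\delta _{0}\) lowers the asymptote \(M/\delta _{0}\) (P2) and shortens the time needed to reach it (P4), while the difference between the two cases shrinks as the two values of \(\delta _{0}\) approach each other (P3).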

Remark 1

The fundamental bounded error behavior P1 was shown to hold for SBP-SAT finite difference approximations in [10]. The predictions P2–P4 are new.

Remark 2

We have also revisited [10] and derived the error bounds for the multi-block finite difference approximation. The relations (73), (74) and (75) also hold, i.e. the result is almost identical. The bound M now corresponds to the maximum truncation error and \(\varepsilon \) represents the difference between the numerical solution and the exact one at each grid-point. The main difference between the DGSEM and SBP-SAT results is that, in practice, the number of interfaces in a typical DGSEM application is larger because of the different types of meshes used (unstructured vs. block structured).

6 Numerical Examples

In this section we present numerical examples to illustrate the bounded error properties of the DGSEM for the boundary value problem (1) as predicted by the model, (74). We also present a two dimensional example to show that the same behaviors appear for systems of equations in multiple space dimensions.

6.1 Error Behavior in One Space Dimension

We illustrate the behavior of the error for \(L=2\pi \) and the initial condition \(u_{0}=\sin (12(x-0.1))\), with the boundary condition g(t) chosen so that the exact solution is \(u(x,t) = \sin (12(x - t - 0.1))\). We approximate the PDE with the DGSEM in space, and integrate in time with a low storage third order Runge-Kutta method, with the time step chosen so that the time integration error is negligible. In all the one dimensional tests, the elements will be of uniform size.

Figure 1 shows the error as a function of time for 50 elements with a fourth order polynomial approximation. The error is bounded as time increases for both the upwind and central fluxes (P1) and the error bound for the central flux is larger than that of the upwind flux (P2). The upwind flux error also reaches its asymptotic value much sooner (\(t \lesssim 1/2\) vs. \(t \approx 3\)) than the central flux error (P4).
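An experiment of this kind can be sketched in a few dozen lines. The code below is not the authors' implementation: it assumes the unit-speed problem \(u_{t}+u_{x}=0\), the \(\sigma \)-blended interface flux, upwind fluxes at the physical boundaries, a strong-form DGSEM, and Williamson's low storage third order Runge–Kutta coefficients as one possible choice of integrator. The function names, the time step, and the final time are illustrative.

```python
import numpy as np
from numpy.polynomial import legendre as leg

A_RK = (0.0, -5.0 / 9.0, -153.0 / 128.0)     # Williamson low storage RK3 (assumed choice)
B_RK = (1.0 / 3.0, 15.0 / 16.0, 8.0 / 15.0)
C_RK = (0.0, 1.0 / 3.0, 3.0 / 4.0)

def lgl(N):
    """Legendre-Gauss-Lobatto nodes and weights on [-1, 1]."""
    PN = leg.Legendre.basis(N)
    x = np.concatenate(([-1.0], np.sort(np.real(PN.deriv().roots())), [1.0]))
    return x, 2.0 / (N * (N + 1) * PN(x) ** 2)

def diff_matrix(x):
    """Nodal differentiation matrix from barycentric weights."""
    n = len(x)
    dx = x[:, None] - x[None, :] + np.eye(n)
    bw = 1.0 / np.prod(dx, axis=1)
    D = (bw[None, :] / bw[:, None]) / dx
    np.fill_diagonal(D, 0.0)
    np.fill_diagonal(D, -D.sum(axis=1))
    return D

def rhs(U, g, sigma, h, D, w):
    """dU/dt for u_t + u_x = 0 with the blended flux; U has shape (K, N+1)."""
    K = U.shape[0]
    left = np.concatenate(([g], U[:-1, -1]))
    right = np.concatenate((U[1:, 0], [U[-1, -1]]))
    s_l = np.full(K, float(sigma)); s_l[0] = 1.0      # upwind at physical boundaries
    s_r = np.full(K, float(sigma)); s_r[-1] = 1.0
    flux_l = 0.5 * (1 + s_l) * left + 0.5 * (1 - s_l) * U[:, 0]
    flux_r = 0.5 * (1 + s_r) * U[:, -1] + 0.5 * (1 - s_r) * right
    R = U @ D.T
    R[:, 0] -= (flux_l - U[:, 0]) / w[0]
    R[:, -1] += (flux_r - U[:, -1]) / w[-1]
    return -(2.0 / h) * R

def run(N, K, sigma, kappa=12.0, L=2.0 * np.pi, T=2.0, dt=1.0e-3):
    """Return times and discrete L2 errors for u = sin(kappa * (x - t - 0.1))."""
    xi, w = lgl(N)
    D, h = diff_matrix(xi), L / K
    x = h * (np.arange(K)[:, None] + 0.5 * (xi[None, :] + 1.0))   # physical nodes
    exact = lambda t: np.sin(kappa * (x - t - 0.1))
    g = lambda t: np.sin(kappa * (-t - 0.1))                      # inflow data at x = 0
    U, t, times, errors = exact(0.0), 0.0, [], []
    for _ in range(int(round(T / dt))):
        G = np.zeros_like(U)
        for a, b, c in zip(A_RK, B_RK, C_RK):
            G = a * G + rhs(U, g(t + c * dt), sigma, h, D, w)
            U = U + b * dt * G
        t += dt
        times.append(t)
        errors.append(np.sqrt(0.5 * h * np.sum(w * (U - exact(t)) ** 2)))
    return np.array(times), np.array(errors)

for name, sigma in (("central", 0.0), ("upwind", 1.0)):
    times, errors = run(N=4, K=50, sigma=sigma)
    print(f"{name}: max error on [0, {times[-1]:.1f}] = {errors.max():.3e}")
```

Plotting `errors` against `times` for the two values of `sigma`, and extending `T`, gives error histories of the type discussed here; the time step should be reduced until the curves no longer change, so that the spatial error dominates.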

We observe in Fig. 1 that the central flux error is significantly noisier than the upwind flux error. This observation is typical for all of the meshes and polynomial orders tested. We attribute this to the fact that, when the central flux is used in the interior, the only dissipation comes from the upwind flux at the physical boundaries, as seen in the plot on the left of Fig. 2, which shows the eigenvalues of the discrete spatial operator. The eigenvalues for the upwind flux, shown on the right of Fig. 2, all have negative real parts, indicating dissipation in all modes.

At better resolution, P3 suggests that the difference between the asymptotic errors from the upwind and central fluxes should decrease. Figure 3 on the left shows the time behavior of the error for \(N=7\) and \(K=50\), where the polynomial order is increased but the number of elements stays fixed. The asymptotic error has decreased and the central flux still gives a larger error. There is also much less difference between the time it takes for the two approximations to reach the error bound, which is consistent with the argument leading to P4. Using the same number of degrees of freedom but lower order and more elements also supports P3. The asymptotic errors are closer than for \(N=4\), \(K=50\), but more elements means more jumps to dissipate energy and the dissipation effect is stronger at the lower order [6].

In general, we would expect spectral convergence of the error for a spectral element method. Indeed, we have seen that the quantity \(\mathbb {E}\) depends only on the smoothness of the solution, u. However, we expect the time asymptotic error to be bounded by \(\mathbb {E}/\delta _{0}\) where \(\delta _{0}\) depends on the size of the jumps at the element interfaces. So the question is whether \(1/\delta _{0}\) increases faster or slower than the approximation errors in \(\mathbb {E}\) decrease. The arguments in [10] leading to the estimate (74) are not precise enough to answer that question. Experimentally, Fig. 4 shows that the upper bound of the error for the upwind flux (which is less noisy and hence more easily measured) as a function of polynomial order is clearly spectral. This suggests that the approximation errors decay faster than \(1/\delta _{0}\) grows.

6.2 Error Behavior in Two Space Dimensions

To see that the conclusions derived from the one dimensional approximation extend to multiple space dimensions, we compute solutions to the symmetric linear wave equation in first order system form

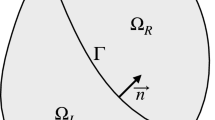

with wavespeed \(c=1\) on the circular domain with a hole shown in Fig. 5.

We choose the initial and boundary conditions so that the exact solution is the sinusoidal plane wave

with wavevector \(\left( k_{x},k_{y}\right) =\left( \sqrt{3}/2,1/2\right) \). The computed solution contours at \(t=10\) are shown in Fig. 6.

Since the element boundaries are curved in this test problem, the metric terms associated with the transformations from the elements to the reference element \([-1,1]^{2}\) are not constant. To ensure that the approximation is stable, we use the skew-symmetric DGSEM approximation developed in [9]. With the skew-symmetric approximation, the volume terms for the constant coefficient problem vanish in the stability and error proofs leaving only the boundary terms, just as in one space dimension. For the time integration, we again use a third order low storage Runge–Kutta method with the time step chosen so that the time integration error is negligible.

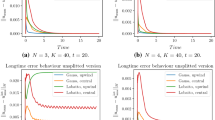

The time history of the error for the two-dimensional example is shown in Fig. 7. The features predicted by the one dimensional analysis still hold: For both the upwind and central fluxes, the error is bounded in time (P1), rather than growing linearly. The error bound for the central flux is once again larger than that of the upwind flux (P2). Finally, the central flux takes longer than the upwind flux to reach its time asymptotic state, where the pattern starts repeating, \(T\approx 8\) vs. \(T\approx 2\) (P4).

7 Conclusions

We have shown that when characteristic boundary conditions are implemented through the numerical flux, the discontinuous Galerkin spectral element method exhibits bounded error growth, just as has been observed in the past for finite difference approximations. The numerical flux used at element interfaces affects the speed at which the asymptotic error is reached and the magnitude of that error. The use of the upwind flux leads to a shorter time to, and a smaller value of, the asymptotic error. This effect decreases as the resolution increases and the jumps at the interfaces decrease. Numerical experiments in both one and two space dimensions show the behavior predicted by the error growth model.

References

[1] Abarbanel, S., Ditkowski, A., Gustafsson, B.: On error bounds of finite difference approximations to partial differential equations temporal behavior and rate of convergence. J. Sci. Comput. 15(1), 79–116 (2000)

[2] Abarbanel, S.S., Chertock, A.E.: Strict stability of high-order compact implicit finite-difference schemes: the role of boundary conditions for hyperbolic PDEs. J. Comput. Phys. 160, 42–66 (2000)

[3] Canuto, C., Hussaini, M.Y., Quarteroni, A., Zang, T.A.: Spectral Methods: Fundamentals in Single Domains. Springer, Berlin (2006)

[4] Cohen, G., Ferrieres, X., Pernet, S.: A spatial high-order hexahedral discontinuous Galerkin method to solve Maxwell’s equations in time domain. J. Comput. Phys. 217(2), 340–363 (2006)

[5] Ditkowski, A., Dridi, K., Hesthaven, J.S.: Convergent cartesian grid methods for Maxwell’s equations in complex geometries. J. Comput. Phys. 170, 39–80 (2001)

[6] Gassner, G., Kopriva, D.A.: A comparison of the dispersion and dissipation errors of Gauss and Gauss–Lobatto discontinuous Galerkin spectral element methods. SIAM J. Sci. Comput. 33(5), 2560–2579 (2010)

[7] Hesthaven, J.S., Warburton, T.: Nodal high-order methods on unstructured grids. I. Time-domain solution of Maxwell’s equations. J. Comput. Phys. 181, 186–221 (2002)

[8] Koley, U., Mishra, S., Risebro, N.H., Svärd, M.: Higher order finite difference schemes for the magnetic induction equations. BIT Numer. Math. 49, 375–395 (2009)

[9] Kopriva, D.A., Gassner, G.J.: An energy stable discontinuous Galerkin spectral element discretization for variable coefficient advection problems. SIAM J. Sci. Comput. 34(4), A2076–A2099 (2014)

[10] Nordström, J.: Error bounded schemes for time-dependent hyperbolic problems. SIAM J. Sci. Comput. 30(1), 46–59 (2007)

[11] Nordström, J., Gustafsson, R.: High order finite difference approximations of electromagnetic wave propagation close to material discontinuities. J. Sci. Comput. 18(2), 215–234 (2003)

[12] Warburton, T.: A low-storage curvilinear discontinuous Galerkin method for wave problems. SIAM J. Sci. Comput. 35(4), A1987–A2012 (2013)

Acknowledgements

The authors would like to thank Tim Warburton for supplying the intermediate steps to his convergence proofs.


Cite this article

Kopriva, D.A., Nordström, J. & Gassner, G.J. Error Boundedness of Discontinuous Galerkin Spectral Element Approximations of Hyperbolic Problems. J Sci Comput 72, 314–330 (2017). https://doi.org/10.1007/s10915-017-0358-2


DOI: https://doi.org/10.1007/s10915-017-0358-2