Abstract

Multicollinearity exists when some explanatory variables of a multiple linear regression model are highly correlated. High correlation among explanatory variables reduces the reliability of the analysis. To eliminate multicollinearity from a linear regression model, we consider how to select a subset of significant variables by means of the variance inflation factor (VIF), which is the most common indicator used in detecting multicollinearity. In particular, we adopt the mixed integer optimization (MIO) approach to subset selection. The MIO approach was proposed in the 1970s, and recently it has received renewed attention due to advances in algorithms and hardware. However, none of the existing studies have developed a computationally tractable MIO formulation for eliminating multicollinearity on the basis of VIF. In this paper, we propose mixed integer quadratic optimization (MIQO) formulations for selecting the best subset of explanatory variables subject to the upper bounds on the VIFs of selected variables. Our two MIQO formulations are based on the two equivalent definitions of VIF. Computational results illustrate the effectiveness of our MIQO formulations by comparison with conventional local search algorithms and MIO-based cutting plane algorithms.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Multiple regression analysis is a statistical process for estimating the relationship between explanatory and response variables. When some of explanatory variables are highly correlated, the reliability of the analysis is decreased because of the low quality of the resultant estimates. This problem is known as multicollinearity [4, 11, 12].

There are several approaches to avoiding the deleterious effects of multicollinearity, such as principal component regression [21, 28], partial least squares regression [39, 40], ridge regression [17], and subset selection [16, 30]. This paper is focused on subset selection, a commonly used method for eliminating multicollinearity. Conventionally in this method, explanatory variables are removed one at a time on the basis of indicators for detecting multicollinearity, such as condition number of the correlation matrix and variance inflation factor (VIF) [11]. On the other hand, the potential disadvantage of this iterative procedure is that the best (e.g., in the least-squares sense) subset of variables is not necessarily found. More precisely, the iterative procedure may fail to provide an optimal solution to the following problem: Find a subset of variables that minimizes the residual sum of squares under the constraint that multicollinearity measured by the VIF is undetected when using that subset.

Multiple regression analysis has two primary purposes: prediction and description [18]. Subset selection is particularly beneficial for description purposes because it promotes better understanding of the causal relationships between explanatory and response variables. Various computational algorithms have been proposed for subset selection [10, 14, 23, 26], and many of them are categorized as heuristic algorithms. However, these heuristic algorithms are often unsuitable for description purposes because they can yield low-quality solutions that lead to incorrect conclusions about causality.

For this reason, we adopt a mixed integer optimization (MIO) approach to subset selection. This approach was first proposed in the 1970s [1], and recently it has received renewed attention due to advances in algorithms and hardware [9, 15, 24, 37, 38]. In contrast to heuristic algorithms, the MIO approach has the potential to provide the best subset of variables with respect to several criterion functions, which include Mallows’ \(C_p\) [31], adjusted \(R^2\) [32], discrete Dantzig selector [29], and some information criteria [22, 32]. Due to its usefulness and good performance, MIO-based subset selection has extended the range of applications to areas such as logistic regression [8, 34], sequential logit models [35], support vector machines [27], cluster analysis [5], and classification trees [6].

To avoid multicollinearity in MIO-based subset selection, Bertsimas and King [7] suggested the use of a cutting plane algorithm, which iteratively adds valid inequalities to cut off sets of collinear variables. These valid inequalities can be strengthened by means of a local search algorithm [36]; however, the cutting plane algorithm must solve a series of MIO problems, each of which is NP-hard.

Meanwhile, Tamura et al. [36] devised a mixed integer semidefinite optimization (MISDO) formulation for subset selection to eliminate multicollinearity. In contrast with the cutting plane algorithm, this approach merely needs to solve a single MISDO problem. In this MISDO formulation, however, only the condition number can be adopted as an indicator for detecting multicollinearity. Although VIF is better-grounded in statistical theory [4, 11], to the best of our knowledge, none of the existing studies have developed a computationally tractable MIO formulation for eliminating multicollinearity on the basis of VIF.

The purpose of this paper is to devise mixed integer quadratic optimization (MIQO) formulations that can be used to select the best subset of explanatory variables subject to the upper bounds on the VIFs of selected variables. Our two MIQO formulations are based on two equivalent definitions of VIF. The effectiveness of our MIQO formulations is assessed through computational experiments using several datasets from the UCI Machine Learning Repository [25].

The main contributions of the present paper are as follows.

-

We obtain computationally tractable MIQO formulations for best subset selection under the upper-bound constraints on VIF. Although the cutting plane algorithm was the only way to exactly solve this subset selection problem, we successfully reformulated the problem as a convex MIQO problem, which can be handled using standard MIO software.

-

Our MIQO formulations are capable of verifying the optimality of the selected subset of variables. We verify through computational experiments that when the number of candidate explanatory variables is less than 30, our MIQO problems can be solved to optimality within a few tens of seconds.

-

The proposed MIQO formulations provide solutions of good quality even if the computation is terminated before verifying optimality. The computational results demonstrate that even when the number of candidate explanatory variables is more than 30, our MIQO formulations with some preprocessing can find, within a time limit of 10,000 s, better subsets of variables than those obtained using local search algorithms.

2 Multiple linear regression and variance inflation factor

Let us suppose that we are given n samples, \((y_i;x_{i1},x_{i2},\ldots ,x_{ip})\) for \(i = 1,2,\ldots ,n\). Here, \(y_i\) is a response variable and \(x_{ij}\) is the jth explanatory variable for each sample \(i=1,2,\ldots ,n\). The index set of all candidate explanatory variables is denoted by \(P := \{1,2,\ldots ,p\}\).

For simplicity of explanation, in Sects. 2 and 3 we assume that all explanatory and response variables are centered and scaled for unit length; that is,

for all \(j \in P\). The multiple linear regression model is then formulated as follows:

where \({\varvec{y}} := (y_1,y_2,\ldots ,y_n)^{\top }\), \({\varvec{a}}:=(a_1,a_2,\ldots ,a_p)^{\top }\), \({\varvec{\varepsilon }} := (\varepsilon _1,\varepsilon _2,\ldots ,\varepsilon _n)^{\top }\), and

Here, \({\varvec{a}}\) is a vector of regression coefficients to be estimated, and \({\varvec{\varepsilon }}\) is a vector composed of a prediction residual for each sample \(i=1,2,\ldots ,n\).

In what follows, we consider selecting a subset \(S \subseteq P\) of explanatory variables to mitigate the negative influence of multicollinearity on regression estimates. On account of assumption (1), the correlation matrix of selected variables is calculated as

where \({\varvec{X}}_{S} := ({\varvec{x}}_j)_{j \in S}\) is the submatrix of \({\varvec{X}}\) corresponding to the set S.

The variance inflation factor, VIF, for detecting multicollinearity is defined for each \(\ell \in S\). Specifically, VIF of the \(\ell \)th explanatory variable is defined as the \(\ell \)th diagonal entry of the inverse of \({\varvec{R}}_S\), that is,

When some of the selected variables are highly correlated, \({\varvec{R}}_S\) is close to singular, and the corresponding VIF value is very large. Hence, the following upper-bound constraints should be imposed on the set S:

where \(\alpha \) is a user-defined parameter larger than one.

On the other hand, VIF has another easily interpretable definition. To describe this, we consider a linear regression model that explains the relationship between the \(\ell \)th explanatory variable and other variables in the set S,

where \({\varvec{a}}^{(\ell ,S)} \in \mathbb {R}^{|S|-1}\) and \({\varvec{\varepsilon }}^{(\ell ,S)} \in \mathbb {R}^n\) are vectors of regression coefficients and residuals.

To estimate the regression coefficients, \({\varvec{a}}^{(\ell ,S)}\), the ordinary least squares (OLS) method minimizes the residual sum of squares (RSS),

This is equivalent to solving the well-known normal equation:

where \(\hat{{\varvec{a}}}^{(\ell ,S)}\) is called the OLS estimator.

The goodness-of-fit of regression model (4) is measured by the coefficient of determination. Due to assumption (1), it is calculated based on the OLS estimator as follows:

When \(R^2(\ell ,S)\) is close to one, the \(\ell \)th explanatory variable has a strong linear relationship with other variables in the set S. It is known that VIF of the \(\ell \)th explanatory variable can also be defined as follows [4, 12]:

Here we briefly explain an advantage to using VIF over using the condition number as an indicator of multicollinearity. To eliminate multicollinearity, we select explanatory variables and construct a model in which VIF or the condition number does not exceed a specified upper bound; 10 and 225, respectively, are frequently used as upper bounds for VIF and the condition number [11]. From a modeling perspective, we can directly control the upper bound of the degree of multicollinearity by using VIF. For example, when we need to tighten the upper bound of \(R^2(\ell ,S)\) from 0.9 to 0.8, we change the upper bound of VIF\((\ell ,S)\) from \(1/(1-0.9)=10\) to \(1/(1-0.8)=5\) due to the definition (7). On the other hand, such control is not obvious when using the condition number.

3 Mixed integer quadratic optimization formulations

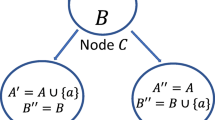

In this section, we consider minimizing RSS of a subset regression model under the upper-bound constraints (3) on VIFs. Let \({\varvec{z}}:=(z_1,z_2,\ldots ,z_p)^{\top }\) be a vector of 0–1 decision variables for subset selection. Accordingly, \(S({\varvec{z}}) := \{j \in P \mid z_j = 1\}\) is a selected subset of explanatory variables. The subset selection problem for eliminating multicollinearity based on VIF is posed as an MIO problem:

If \(z_j = 0\), then the jth explanatory variable is deleted from the regression model because its coefficient is set to zero by the logical implications (9). The VIF constraints (3) are imposed in the form of logical implications (10). It is known that these logical implications can be represented by using a big-M method or a special ordered set type 1 (SOS 1) constraint [2, 3].

However, other than logical implications, constraints (10) still contain difficulty to be handled in an MIO formulation. In the following two subsections, we derive two tractable formulations of these VIF constraints.

3.1 Normal-equation-based formulation

We first propose an MIQO formulation based on the definition (7) of VIF. Let us introduce a vector of decision variables, \({\varvec{a}}^{(\ell )} := (a^{(\ell )}_j)_{j \in P \setminus \{\ell \}} \in \mathbb {R}^{p-1}~(\ell \in P)\). To convert the VIF constraints (10) into a set of linear constraints, we exploit the normal-equation-based constraints proposed by Tamura et al. [36]:

Theorem 1

Suppose that \(({\varvec{a}}^{(\ell )},{\varvec{z}}) \in \mathbb {R}^{p-1} \times \{0,1\}^p\) satisfies constraints (12)–(13). Then, we have

Proof

Let s be the number of nonzero elements of \({\varvec{z}}\). Without loss of generality, we may assume that \(S({\varvec{z}}) = \{1,2,\ldots ,s\}\). According to \(S({\varvec{z}})\), we partition \({\varvec{a}}^{(\ell )}\) as

where \({\varvec{a}}^{(\ell )}_2 = {\varvec{0}}\) due to constraints (13). Therefore constraints (12) correspond to the normal equation (6) for \(S = S({\varvec{z}})\); that is,

Since the OLS estimator provides the minimum value of RSS (5), it follows that

Hence, the definition (7) of VIF completes the proof. \(\square \)

The VIF constraints (10) can be rewritten by Theorem 1 as follows:

However, these are reverse convex constraints, which are very difficult to handle in an MIO formulation. To resolve this reverse-convexity, we exploit the normal equation (14) as follows:

Consequently, the subset selection problem (8)–(11) can be formulated as an MIQO problem, which we call the normal-equation-based formulation:

3.2 Inverse-matrix-based formulation

We next propose another MIQO formulation based on the definition (2) of VIF. Let us introduce square matrices of decision variables, \({\varvec{Q}} := (q_{\ell j})_{(\ell ,j) \in P \times P}\) and \({\varvec{U}} := (u_{\ell j})_{(\ell ,j) \in P \times P}\). To compute the inverse of the correlation matrix \({\varvec{R}}_{S({\varvec{z}})}\), we make use of the following constraints:

where \({\varvec{I}}_p\) is the identity matrix of size p. To promote an understanding of constraints (22), we consider a case in which all candidate explanatory variables are selected (i.e., \(z_j = 1~(j \in P)\)). In this case, \({\varvec{U}}\) becomes the zero matrix because of constraints (23). Consequently, we have \({\varvec{Q}} {\varvec{R}}_P = {\varvec{I}}_p\); that is, \({\varvec{Q}}\) is the inverse of the correlation matrix of all candidate explanatory variables.

Theorem 2

Suppose that \(({\varvec{Q}},{\varvec{U}},{\varvec{z}}) \in \mathbb {R}^{p \times p} \times \mathbb {R}^{p \times p} \times \{0,1\}^p\) satisfies constraints (22)–(24). Then, we have \(q_{\ell \ell } = [{\varvec{R}}_{S({\varvec{z}})}^{-1}]_{\ell \ell }\) for \(\ell \in S({\varvec{z}})\), and \(q_{\ell \ell } = 0\) for \(\ell \not \in S({\varvec{z}})\).

Proof

Similarly to Theorem 1, we may assume without loss of generality that \(S({\varvec{z}}) = \{1,2,\ldots ,s\}\). We partition \({\varvec{Q}},{\varvec{R}}_P,{\varvec{U}}\) and \({\varvec{I}}_p\) according to \(S({\varvec{z}})\) and rewrite constraints (22) as follows:

where \({\varvec{Q}}_1,{\varvec{R}}_1,{\varvec{U}}_1 \in \mathbb {R}^{s \times s}\), \({\varvec{Q}}_2,{\varvec{R}}_2,{\varvec{U}}_2 \in \mathbb {R}^{s \times (p-s)}\), \({\varvec{Q}}_3,{\varvec{R}}_3,{\varvec{U}}_3 \in \mathbb {R}^{(p-s) \times s}\), \({\varvec{Q}}_4,{\varvec{R}}_4,{\varvec{U}}_4 \in \mathbb {R}^{(p-s) \times (p-s)}\), and \({\varvec{O}}\) is the zero matrix of size \(s \times (p-s)\). Note here that \({\varvec{Q}}_2,{\varvec{Q}}_3,{\varvec{Q}}_4, {\varvec{U}}_1\) and \({\varvec{U}}_3\) become zero matrices due to constraints (23)–(24). As a result, the above constraints are reduced to

while other constraints are satisfied through free decision variables in \({\varvec{U}}_2\) and \({\varvec{U}}_4\). Since \({\varvec{R}}_1 = {\varvec{R}}_{S({\varvec{z}})}\), it follows that \({\varvec{Q}}_1 = {\varvec{R}}_{S({\varvec{z}})}^{-1}\), which completes the proof. \(\square \)

Using the definition (2), the subset selection problem (8)–(11) is reformulated as an MIQO problem, which we call the inverse-matrix-based formulation:

Note that the correlation matrix is symmetric, so is its inverse; thus, constraint (30) is redundant. However, we explicitly add the constraint because it improves computational speed.

3.3 Preprocessing for faster computation

In this subsection, we propose some ideas for speeding up the MIQO computation. In our preliminary experiments, however, preprocessing (iii) and (iv) did not shorten the computation time; hence, we will evaluate the efficiency of preprocessing steps (i) and (ii) in the next section.

Preprocessing (i): Deleting redundant VIF constraints

The definition (7) of VIF implies that \(\mathrm{VIF}(\ell ,S) \le \mathrm{VIF}(\ell ,P)\) for all \(S \subseteq P\). Therefore, the VIF constraints for \(\ell \in P_0\) can be deleted, with

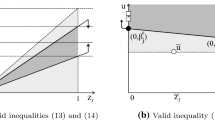

Preprocessing (ii): Adding cutting-plane-based constraints

This step is the following. First, find subsets \(S_k \subseteq P~(k \in K)\) of collinear variables such that \(\mathrm{VIF}(\ell ,S_k) > \alpha \) for some \(\ell \in S_k\). Next, cut them off by means of the following cutting-plane-based constraints [7, 36]:

Preprocessing (iii): Tightening constraints

Constraints (18) can be tightened by using the minimum RSS (5) of \(S = P\) as follows:

Preprocessing (iv): Linearization of the objective function

The objective function can be linearized by applying the transformation (15) to \(\Vert {\varvec{y}} - {\varvec{X}} {\varvec{a}}\Vert ^2_2\), which changes the proposed MIQO formulations into mixed integer linear optimization formulations.

4 Computational results

This section evaluates the computational performance of our MIQO formulations for subset selection to eliminate multicollinearity as characterized by VIF.

We downloaded six datasets for regression analysis from the UCI Machine Learning Repository [25]. Table 1 lists the instances used for computational experiments, where n and p are the numbers of samples and candidate explanatory variables, respectively. In the SolarFlareC instance, C-class flares production was employed as a response variable. Categorical variables were encoded into sets of dummy variables. Samples containing missing values were removed, and then redundant variables (i.e., those with the same value in all samples) were also removed.

We compare the computational performance of the following subset selection algorithms.

-

FwS:

Forward selection method: Starts with \(S = \emptyset \) and iteratively adds the jth variable (i.e., \(S \leftarrow S\cup \{j\}\)) that decreases RSS the most; this operation is repeated while the VIF constraints (3) are satisfied.

-

BwE:

Backward elimination method: Starts with \(S = P\) and iteratively eliminates the jth variable (i.e., \(S \leftarrow S\setminus \{j\}\)) that increases RSS the most; this operation is repeated until the VIF constraints (3) are satisfied.

-

CPA:

Cutting plane algorithm [7, 36]: Solves the MIQO problem (8)–(9) and (11), and adds a valid inequality (cf. (35)) to the problem to cut off subsets of collinear variables; this operation is repeated until the VIF constraints (3) hold.

-

CPA*:

Cutting plane algorithm in which valid inequalities are strengthened by using a backward elimination method [36].

- NEF:

-

NEF+:

Normal-equation-based MIQO formulation (16)–(21) with the preprocessing (i) and (ii).

- IMF:

-

IMF+:

Inverse-matrix-based MIQO formulation (26)–(33) with the preprocessing (i) and (ii).

These computations were performed on a Windows 7 PC with an Intel Core i7-4770 CPU (3.40 GHz) and 8 GB memory. The algorithms FwS and BwE were implemented with R 3.1.1 [33]; the algorithms CPA and CPA* were implemented in Python 2.7 with Gurobi Optimizer 7.5.2 [13], where the logical implications (9) were incorporated in the form of SOS 1 constraint as in Tamura et al. [36]. The MIQO problems (i.e., NEF, NEF+, IMF, and IMF+) were solved using IBM ILOG CPLEX 12.6.3.0 [19] with eight threads. Here the indicator function implemented in CPLEX was used to impose the logical implications (17), (19), (20), (27), (31), and (32).

A subset of explanatory variables often has collinearity problems when its VIF value is greater than 5 or 10 (see [20], p. 101). Thus, we tested two values (\(\alpha = 5\) and 10) of the upper bound on VIF to evaluate the effect on computational results.

The algorithms NEF+ and IMF+ involved preprocessing steps (i) and (ii), as explained in Sect. 3.3. Specifically, the VIF constraints for the set (34) were deleted in advance, and the cutting-plane-based constraints (35) were included. Here, the subsets \(S_k~(k \in K)\) of collinear variables were found by applying the algorithm FwS to the regression model (4) for each \(\ell \in P\).

Results of the preprocessing are summarized in Table 2 (\(\alpha = 5\)) and Table 3 (\(\alpha = 10\)), where \(|P_0|\) and |K| are the numbers of redundant VIF constraints and cutting-plane-based constraints, respectively. The column labeled “Time (s)” shows the computation time in seconds. We can see that preprocessing (i) required only a few seconds, but the computation time of preprocessing (ii) increased greatly with the number of candidate explanatory variables.

We evaluate the results of the subset selection algorithms on the basis of the following evaluation criteria.

-

VIF: must be smaller than the specified upper bound. Note that if VIF is minimized as the objective function, then the optimal solution becomes a trivial and meaningless one, that is, a subset consisting of only one explanatory variable. Therefore, VIF should not be treated as the objective function but should be kept small by means of the upper-bound constraints.

-

Computation time: lower is better. Several days of computation are rarely acceptable for subset selection because it needs to be performed repeatedly (e.g., for cross-validation) in many practical situations. In addition, we are faced with a growing number of samples and explanatory variables because of recent advances in information technology. As a result, a faster algorithm is required for subset selection.

-

\(R^2\) value: higher is better. The goodness-of-fit of regression model is quantified by the coefficient of determination, \(R^2\). Accordingly, an algorithm that produces a larger \(R^2\) value is desirable.

-

Implementation: simpler is better. If two algorithms offer a similar performance, an easily implementable one is highly preferred.

Tables 4 and 5 show the computational results of the subset selection algorithms for \(\alpha =5\) and 10, respectively. The column labeled “\(R^2\)” shows the value of the coefficient of determination of a subset regression model; the largest \(R^2\) values for each instance are indicated in bold. The column labeled “\(\mathrm VIF_{max}\)” shows the value of \(\max \{\mathrm{VIF}(\ell ,S) \mid \ell \in S\}\), and the column labeled “|S|” shows the number of selected explanatory variables. The computation of each subset selection algorithm was terminated if it did not finish by itself within 10,000 s. In these cases, the best feasible solution obtained within 10,000 s was taken as the result.

First, we can confirm that all solutions satisfy the constraint \(\mathrm{VIF_{max}} \le {\alpha } \) unless no feasible solution was found within 10,000 s. Note that if a solution satisfies the VIF constraint, the value itself of \(\mathrm VIF_{max}\) does not matter.

Next, we discuss the computation time and \(R^2\) values of each algorithm. Note that FwS and BwE are local search algorithms, and thus they complete the search process quickly without certificates of optimality of the obtained solutions. Meanwhile, other MIO approaches require a sufficient amount of computation time to verify optimality of the obtained solutions. Nevertheless, most of the MIQO problems for the Servo, AutoMPG, and SolarFlareC instances were solved to optimality within a few tens of seconds. In this case, \(R^2\) values of our MIQO formulations were always the largest because the obtained solutions were verified to be optimal. We can also see that our preprocessing significantly reduced the time used in solving the MIQO problems. For instance, in Table 4, IMF required a relatively long computation time for the AutoMPG and SolarFlareC instances, but the computation of IMF+ finished much earlier for the same instances. In the case of the BreastCancer instance, the computation was completed about 20 times faster by IMF+ than by IMF for both \(\alpha =5\) and 10.

The MIQO computations for the Automobile and Crime instances were terminated due to the time limit of 10,000 s; nevertheless, they successfully found solutions of good quality within 10,000 s. On the other hand, IMF failed to deliver a solution of good quality to the Crime instance; specifically, it found only the feasible solution \(S = \emptyset \) within 10,000 s for \(\alpha = 5\) and 10. This issue was observed due to numerical instability in IMF, whereas it did not happen in IMF+.

The algorithm CPA took a long time to solve even small instances (e.g., AutoMPG and SolarFlareC), and it failed to find a feasible solution to the BreastCancer, Automobile, and Crime instances within the time limit of 10,000 s. The algorithm CPA* is much faster than CPA, but it seems slower than IMF+, which is the fastest of four MIQO formulations (NEF, NEF+, IMF, and IMF+).

Table 6 shows the number of times the best solution was generated by each algorithm. We can see that CPA* was competitive with our MIQO formulations in terms of solution quality. Here, we also take into account the simplicity of implementation of these algorithms. The implementation of CPA* is relatively complicated; MIQO problems combined with the backward elimination method must be solved repeatedly. On the other hand, our MIQO formulations enable us to pose the subset selection problem as a single MIQO problem, which can be handled using standard MIO software. From the point of view of implementation, our MIQO formulations have a crucial advantage over the cutting plane algorithm.

We conclude this section by comparing our MIQO formulations with the MISDO formulation devised by Tamura et al. [36] for subset selection with the condition number constraint. The MISDO formulation spent 5563.34 s (\(\kappa = 100\)) and 336.14 s (\(\kappa = 225\)) for the AutoMPG instance, where \(\kappa \) is the upper bound on the condition number. These results suggest that our VIF-based MIQO formulations had a clear computational advantage over the condition-number-based MISDO formulation. In addition, Tamura et al. [36] could not solve many of the MISDO problems for larger instances because of numerical instability.

5 Conclusions

This paper dealt with the problem of selecting the best subset of explanatory variables under upper-bound constraints on VIFs of selected variables. For this problem, we presented two MIQO formulations (a normal-equation-based formulation and an inverse-matrix-based formulation), based on two equivalent definitions of VIF.

The research contribution in this paper is computationally tractable MIQO formulations for eliminating multicollinearity by using VIF. Previously, no tractable formulation of the VIF constraint was known, and we have successfully written it as a set of linear constraints. As a result, we reduced the subset selection to a single MIO problem, which can be handled using standard MIO software.

Our MIQO formulations must spend a certain amount of time to verify optimality of the selected subset of variables. Nevertheless, it was demonstrated that when the number of candidate explanatory variables was less than 30, most of the MIQO problems were solved to optimality within a few tens of seconds. Our MIQO formulations also have the advantage of being able to find solutions of good quality in the early stage of the computation. Indeed, even when the number of candidate explanatory variables was more than 30, our MIQO formulations with preprocessing provided solutions of better quality within the time limit than those obtained using local search algorithms. These results reveal that our method is particularly effective for small-to-medium-sized problems (e.g., \(p \le 100\)). We believe that our method has a potential value in reality because the number of candidate variables for regression analysis is less than one hundred in many cases.

A conventional way of avoiding multicollinearity is to remove explanatory variables one at a time according to the VIF value of each variable. Our MIQO formulations, however, have a clear advantage in terms of solution quality over such a heuristic algorithm. A solution of better quality leads to more reliable results for description purposes; hence, our MIQO formulations will be helpful in enhancing reliability of the regression analysis.

A future direction of study will be to extend our formulations to other regression/classification models. Multicollinearity generates a harmful effect on most statistical models, so our formulations are expected to be useful in various regression/classification models.

References

Arthanari, T.S., Dodge, Y.: Mathematical Programming in Statistics. Wiley, New York (1981)

Beale, E.M.L.: Two transportation problems. In: Kreweras, G., Morlat, G. (eds.) Proceedings of the Third International Conference on Operational Research, pp. 780–788 (1963)

Beale, E.M.L., Tomlin, J.A.: Special facilities in a general mathematical programming system for non-convex problems using ordered sets of variables. In: Lawrence, J. (ed.) Proceedings of the Fifth International Conference on Operational Research, pp. 447–454 (1970)

Belsley, D.A., Kuh, E., Welsch, R.E.: Regression Diagnostics: Identifying Influential Data and Sources of Collinearity. Wiley, Hoboken (2005)

Benati, S., García, S.: A mixed integer linear model for clustering with variable selection. Comput. Oper. Res. 43, 280–285 (2014)

Bertsimas, D., Dunn, J.: Optimal classification trees. Mach. Learn. 136, 1039–1082 (2017)

Bertsimas, D., King, A.: OR forum: an algorithmic approach to linear regression. Oper. Res. 64, 2–16 (2016)

Bertsimas, D., King, A.: Logistic regression: from art to science. Stat. Sci. 32, 367–384 (2017)

Bertsimas, D., King, A., Mazumder, R.: Best subset selection via a modern optimization lens. Ann. Stat. 44, 813–852 (2016)

Blum, A.L., Langley, P.: Selection of relevant features and examples in machine learning. Artif. Intell. 97, 245–271 (1997)

Chatterjee, S., Hadi, A.S.: Regression Analysis by Example. Wiley, Hoboken (2012)

Dormann, C.F., Elith, J., Bacher, S., Buchmann, C., Carl, G., Carré, G., García Marquéz, J.R., Gruber, B., Lafoourcade, B., Leitão, P.J., Münkemüller, T., McClean, C., Osborne, P.E., Reineking, B., Schröder, B., Skidmore, A.K., Zurell, D., Lautenbach, S.: Collinearity: a review of methods to deal with it and a simulation study evaluating their performance. Ecography 36, 27–46 (2013)

Gurobi Optimization, Inc.: Gurobi Optimizer Reference Manual. http://www.gurobi.com (2016). Accessed 6 Oct 2017

Guyon, I., Elisseeff, A.: An introduction to variable and feature selection. J. Mach. Learn. Res. 3, 1157–1182 (2003)

Hastie, T., Tibshirani, R., Tibshirani, R.J.: Extended comparisons of best subset selection, forward stepwise selection, and the lasso. arXiv preprint arXiv:1707.08692 (2017)

Hocking, R.R.: The analysis and selection of variables in linear regression. Biometrics 32, 1–49 (1976)

Hoerl, A.E., Kennard, R.W.: Ridge regression: biased estimation for nonorthogonal problems. Technometrics 12, 55–67 (1970)

Huberty, C.J.: Issues in the use and interpretation of discriminant analysis. Psychol. Bull. 95, 156–171 (1984)

IBM: IBM ILOG CPLEX Optimization Studio. https://www-01.ibm.com/software/commerce/optimization/cplex-optimizer/ (2015). Accessed 6 Oct 2017

James, G., Witten, D., Hastie, T., Tibshirani, R.: An Introduction to Statistical Learning. Springer, New York (2013)

Jolliffe, I.T.: A note on the use of principal components in regression. Appl. Stat. 31, 300–303 (1982)

Kimura, K., Waki, H.: Minimization of Akaike’s information criterion in linear regression analysis via mixed integer nonlinear program. Optim. Methods Softw. 33, 633–649 (2018)

Kohavi, R., John, G.H.: Wrappers for feature subset selection. Artif. Intell. 97, 273–324 (1997)

Konno, H., Yamamoto, R.: Choosing the best set of variables in regression analysis using integer programming. J. Glob. Optim. 44, 273–282 (2009)

Lichman, M.: UCI Machine Learning Repository. School of Information and Computer Science, University of California, Irvine. http://archive.ics.uci.edu/ml (2013)

Liu, H., Motoda, H.: Computational Methods of Feature Selection. CRC Press, Boca Raton (2007)

Maldonado, S., Pérez, J., Weber, R., Labbé, M.: Feature selection for support vector machines via mixed integer linear programming. Inf. Sci. 279, 163–175 (2014)

Massy, W.F.: Principal components regression in exploratory statistical research. J. Am. Stat. Assoc. 60, 234–256 (1965)

Mazumder, R., Radchenko, P.: The discrete Dantzig selector: estimating sparse linear models via mixed integer linear optimization. IEEE Trans. Inf. Theory 63, 3053–3075 (2017)

Miller, A.: Subset Selection in Regression. CRC Press, Boca Raton (2002)

Miyashiro, R., Takano, Y.: Subset selection by Mallows’ \(C_p\): a mixed integer programming approach. Expert. Syst. Appl. 42, 325–331 (2015)

Miyashiro, R., Takano, Y.: Mixed integer second-order cone programming formulations for variable selection in linear regression. Eur. J. Oper. Res. 247, 721–731 (2015)

R Core Team: R: a language and environment for statistical computing. R Foundation for Statistical Computing. https://www.R-project.org (2014). Accessed 6 Oct 2017

Sato, T., Takano, Y., Miyashiro, R., Yoshise, A.: Feature subset selection for logistic regression via mixed integer optimization. Comput. Optim. Appl. 64, 865–880 (2016)

Sato, T., Takano, Y., Miyashiro, R.: Piecewise-linear approximation for feature subset selection in a sequential logit model. J. Oper. Res. Soc. Jpn. 60, 1–14 (2017)

Tamura, R., Kobayashi, K., Takano, Y., Miyashiro, R., Nakata, K., Matsui, T.: Best subset selection for eliminating multicollinearity. J. Oper. Res. Soc. Jpn. 60, 321–336 (2017)

Ustun, B., Rudin, C.: Supersparse linear integer models for optimized medical scoring systems. Mach. Learn. 102, 349–391 (2016)

Wilson, Z.T., Sahinidis, N.V.: The ALAMO approach to machine learning. Comput. Chem. Eng. 106, 785–795 (2017)

Wold, S., Ruhe, A., Wold, H., Dunn III, W.J.: The collinearity problem in linear regression. The partial least squares (PLS) approach to generalized inverses. SIAM J. Sci. Stat. Comput. 5, 735–743 (1984)

Wold, S., Sjöström, M., Eriksson, L.: PLS-regression: a basic tool of chemometrics. Chemom. Intell. Lab. Syst. 58, 109–130 (2001)

Acknowledgements

This work was partially supported by JSPS KAKENHI Grant Nos. JP17K01246 and JP17K12983.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Tamura, R., Kobayashi, K., Takano, Y. et al. Mixed integer quadratic optimization formulations for eliminating multicollinearity based on variance inflation factor. J Glob Optim 73, 431–446 (2019). https://doi.org/10.1007/s10898-018-0713-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10898-018-0713-3