Abstract

The convergence rate of a rectangular partition based algorithm is considered. A hyper-rectangle for the subdivision is selected at each step according to a criterion rooted in the statistical models based theory of global optimization; only the objective function values are used to compute the criterion of selection. The convergence rate is analyzed assuming that the objective functions are twice- continuously differentiable and defined on the unit cube in d-dimensional Euclidean space. An asymptotic bound on the convergence rate is established. The results of numerical experiments are included.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Problems of global optimization of continuous multimodal functions are attacked using deterministic [1,2,3,4,5,6,7], stochastic [8,9,10,11,12], and heuristic approaches [13,14,15]. A promising research direction is the hybridization of the advantages of some of the successful methods [16, 17]. Although the proposed algorithms are thoughtfully investigated there remains an important issue that has received relatively little attention, namely the convergence rate problem. In the present paper we focus on this problem continuing the research in [18,19,20,21] where the convergence rate was established for algorithms rooted in the approach based on statistical models of global optimization. The convergence rate of global optimization algorithms in a stochastic setting has been considered for certain Gaussian probabilities; see for example [19]. The general question of average-case tractability of multivariate global optimization is mentioned (Open Problem 87) in [22].

We consider the problem of approximating the global minimum \(f^*\) of a twice-continuously differentiable function f defined on the d-dimensional unit cube \(A=[0,1]^d\), for \(d\ge 2\). We assume that the global minimizer, denoted by \(x^*\), is unique and lies in the interior of the cube. While we assume differentiability, we are interested in algorithms that only evaluate the function and not derivatives. Our interest centers on how the number of function values required to obtain a given quality approximation grows with the dimension of the domain.

The algorithm presented here is similar to a one-dimensional algorithm presented in [20] and a two-dimensional algorithm based on Delaunay triangulations presented in [21]. Those papers presented algorithms with the property that for large enough N (depending on f), the residual error (difference between the smallest function value observed and the global minimum) after N function evaluations is at most

for \(c_1,c_2\) depending on dimension (1 or 2) and f. While the algorithm presented in [21] was based on a Delaunay triangulation of the domain, the algorithm considered in this paper is based on a rectangular decomposition. Rectangular decompositions have been employed in previous studies of global optimization, for example in [4, 23,24,25]. The rectangular partition of the feasible region is inherent also for the interval arithmetic-based global optimization algorithms, see e.g. [26]. One of the advantages of the algorithms using rectangular partition of the feasible region is their suitability for parallelization; however the investigation of parallel versions of the algorithm proposed here is postponed for further research. For a discussion of the alternative simplicial partition of the feasible region in the development of global optimization algorithms we refer to [27].

The algorithm in [21] works for arbitrary domains, but the error bound, which required a certain quality triangulation, could only be proved for bivariate functions. In the present paper we are able to prove an asymptotic error bound for arbitrary dimension. Our main result is that eventually the error is at most

where \(c_2(f,d)\) decreases exponentially in dimension d. Recall that we assume that f has continuous partial derivatives up to order 2, and that the global minimizer is unique and is in the interior of the domain.

In the worst-case setting any nonadaptive optimization algorithm has an error of order at least \(N^{-\,2/d}\). It is known that for a convex and symmetric function class such as \(C^2([0,1]^d)\) adaptive algorithms are essentially no more powerful than nonadaptive algorithms for linear problems such as function approximation with error measured using the \(L_\infty \) norm ([28]). Global optimization is as difficult as \(L_\infty \) approximation (see Theorem 18.24 in [22]). Therefore, \(N^{-\,2/d}\) is an optimal worst-case error bound for optimization on \(C^2([0,1]^d)\). To obtain an error of at most \(\epsilon \), we then require on the order of \((1/\epsilon )^{d/2}\) function values. For the algorithm proposed in this paper, we consider the asymptotic decay of the error, showing that for small enough \(\epsilon \) on the order of \(d^{d+3}\log (1/\epsilon )^2\) function values suffice. Because of the asymptotic setting, this does not contradict the worst-case results mentioned above.

With the algorithm defined in this paper, the error converges to zero for a much broader class of functions than the one we consider. For example, the error converges to zero for any continuous function defined on \([0,1]^d\), though it could be difficult to characterize the rate of convergence for such a broad class.

At the present stage of investigation we have not focused on the details of efficient software development, and have tested a rather simple implementation (without sophisticated data structures) of the algorithm with well known test functions. Our initial guess was that Delaunay triangulation-based algorithm would be considerably more efficient than the algorithm proposed in this paper. This guess was based on the observation that hyper-rectangles have more volume far from the vertices than simplexes. Also, in our Delaunay-based algorithm, each step placed a single function evaluation in a simplex, while subdividing a hyper-rectangle takes a number of evaluations that grows exponentially with dimension. However, at least for the low dimensional problems in our experiments, this guess was not confirmed. The results are presented in the last section of the paper where the testing results of the proposed algorithm and the algorithm based on Delaunay triangulation [21] are presented for comparison.

2 The algorithm

The algorithm operates by decomposing \([0,1]^d\) into hyper-rectangles as follows. Given a current decomposition, choose one of the hyper-rectangles (according to the maximal value of a criterion to be defined below) and bisect it along the longest axis by evaluating the function at up to \(2^{d-1}\) midpoints of the longest hyper-rectangle edges (some of the midpoints may have been evaluated in previous decompositions).

The criterion of choice of a rectangle is rooted in definition of the P-algorithm described in detail in [29]. The P-algorithm chooses the point for the current computing the an objective function value according to the criterion of maximum of improvement probability

where \(\xi (x)\) is a Gaussian random field accepted as a statistical model of objective functions, and \(y_i\) values of the objective function computed at previous iterations, and \(y_{0N}=\min _{i=1,\ldots ,N}y_i\), \(\varepsilon >0\). The maximization of the improvement probability can be simplified because of the equality

where \(\mu (x|(x_i,y_i),\,i=1,\ldots ,N)\) and \(\sigma ^2(x|(x_i,y_i),\,i=1,\ldots ,N)\) denote conditional mean and variance correspondingly. The criterion for the selection of a rectangle by the algorithm considered in the present paper is a modification of (1) where conditional mean and variance are replaced by the relevant parameters of the rectangle, and \(\varepsilon \) is replaced by a parameter \(g(\cdot )\) which is defined below; its form of the dependence on the number of iteration is determined aiming at fast asymptotic convergence of the algorithm,

Suppose that the algorithm has evaluated f at N points. Let s(N) denote the number of hyper-rectangles in our partition. Each hyper-rectangle subdivision (iteration) requires up to \(2^{d-1}\) function evaluations; let us note that at the current iteration some function values can be known since computed at previous subdivisions. Therefore, the following inequality is valid

We next define some constants and functions that are used in the definition of the algorithm. Define

and set

for \(0<x\le 1/2\) and \(g(1)=q d^2/4\). Note that g is increasing and \(g(x)\downarrow 0\) as \(x\downarrow 0\).

It will be convenient to index quantities to be defined by the iteration number of the algorithm, which we denote by n, instead of the number of function evaluations, N, which is generally larger. Later we will also describe the error bound in terms of the number of function evaluations N.

After n iterations of the algorithm, let \(M_n = \min _{1\le i\le n}f(x_i)\) and denote the error by \(\varDelta _n = M_n-f(x^*)\). Define the function \(L_n:[0,1]^d\rightarrow \mathbb {R}\) as follows. In the interior of hyper-rectangle R, \(L_n\) coincides with the multilinear interpolant of the function values at the vertices of R. On the boundaries of hyper-rectangles set \(L_n\equiv M_n\), so that \(M_n\) is equal to the global minimum of \(L_n\). Let \(v_n\) denote the smallest volume of a hyper-rectangle after n iterations.

Our algorithm will assign a numerical value to each hyper-rectangle in the subdivision. After n iterations of the algorithm, for each hyper-rectangle \(R_i\), \(1\le i\le {n}\), set

and

The criteria (3) and (4) are analogues of reciprocal (1) with opposite sign where \(g(v_n)\) is a generalisation of \(\varepsilon \). Since the hyper-rectangle boundaries have measure zero, the way we defined \(L_n\) on the boundaries is immaterial for the values of the \(\rho ^n_i\). Note that the \(\inf \) in the denominator of (4) is equal to the minimum of f at the vertices of the hyper-rectangle.

If we are about to subdivide the smallest hyper-rectangle, then

with the last inequality following from the fact that the smallest hyper-rectangle in the subdivision has volume \(v_n\le 1/n\).

A more formal description of the algorithm follows. In the description, N is the total number of function evaluations to make, i is the current number of hyper-rectangles, and j is the number of function evaluations that have been made. Let v, the volume of the smallest hyper-rectangle, take initial value 1 and let M, the minimum of the observed function values, take initial value the minimum of the function values at the vertices of \([0,1]^d\).

The algorithm comprises the following steps.

-

1.

We start with the hyper-rectangle \([0,1]^d\) and with the function values at all \(2^d\) vertices. Set i, the number of hyper-rectangles, to 1 and set j, the number of function evaluations, to \(2^d\).

-

2.

For each hyper-rectangle \(R_k\) in the current collection \(\{R_1,R_2,\ldots ,R_i\}\), compute \({\rho }^{{i}}_k\), keeping track of the rectangle with the lowest index \(\gamma \) that has the maximal value of \({\rho }^i_\gamma \).

-

3.

From the hyper-rectangle \(R_\gamma \) with maximal value \(\rho ^n_\gamma \), form two new hyper-rectangles as follows. Suppose that

$$\begin{aligned} R_\gamma = [a_1,b_1]\times [a_2,b_2]\times \cdots \times [a_d,b_d], \end{aligned}$$and that j is the smallest index with \(b_j-a_j\ge b_i-a_i\) for \(1\le i\le d\). The two new hyper-rectangles are

$$\begin{aligned} R^{\prime }_{\gamma } \equiv [a_1,b_1]\times \cdots \times [a_j,(a_j+b_j)/2]\times \cdots \times [a_d,b_d] \end{aligned}$$and

$$\begin{aligned} R^{\prime \prime }_{\gamma } \equiv [a_1,b_1]\times \cdots \times [(a_j+b_j)/2, b_j]\times \cdots \times [a_d,b_d]. \end{aligned}$$Evaluate f at the (at most) \(2^{d-1}\) points

$$\begin{aligned} \left( c_1,\ldots ,\frac{a_j+b_j}{2},\ldots ,c_d\right) ,\ \ \ c_i\in \{a_i,b_i\},\ 1\le i\le d, \ i\ne j, \end{aligned}$$which have not been evaluated previously.

If \(\left| R_\gamma \right| =v\), then set \(v\leftarrow v/2\), and if the smallest of the new function values is less than the previously observed minimum M, then set M to that new smallest value. Update the number of hyper-rectangles \(i\leftarrow i+1\) and increment j by the number of new function evaluations.

-

4.

If \(j<{N}\), return to step 2.

Typical subdivisions for the two-dimensional case are depicted in Fig. 1 in Sect. 5. In the graphs, each vertex of the mesh corresponds to a point where the function has been evaluated. Each hyper-rectangle has been evaluated at its four vertices, as well as possibly other points on the perimeter. The function \(L_n\) agrees on the interior of a hyper-rectangle with the multilinear interpolation of the four vertices, disregarding additional vertices that may lie on the perimeter. The aspect ratio of each hyper-rectangle is either 1 or 2.

Let \(\hat{F}\subset C^2\left( [0,1]^d\right) \) denote the set of functions that have a unique global minimizer \(x^*\in ]0,1[^d\). Our error bound will hold for functions in \(\hat{F}\). It is essential for our result that the function has only a finite number of isolated global minimizers; the uniqueness assumption is to simplify the presentation.

Denote the matrix of second-order partial derivatives at the minimizer by \(D^2f(x^*)\), assumed positive definite.

The error bound will depend on the regularity of the function as measured by a seminorm that we now define. For a compact set \(K{\subset [0,1]^d}\) and \(f\in C^2(K)\), define the seminorm

where \(D_yf\) is the directional derivative of f in the direction y. This is a measure of the maximum size of the second derivative of f over K.

Our main result on the convergence rate of the error for this algorithm follows. Let note, that the convergence of the original version of the P-algorithm for continuous objective functions we refer to ([29]) where the improvement threshold \(\varepsilon \), analogous to \(g(\cdot )\) in (3), (4), can be any positive constant. With such a weaker assumption, however, the convergence rate remains not known.

Theorem 1

Suppose that \(f\in \hat{F}\). There is a number \(n_0(f)\) such that for \(n\ge n_0(f)\), the residual error after n iterations of the algorithm satisfies

where

The number \(n_0(f)\) after which the bound applies will be the maximum of several other \(n_i(f)\)’s that will be defined in the proof of the theorem. We note here that \(n_0(f)\) depends (exponentially) on the value of the seminorm \(\left\| D^2f\right\| _{\infty , [0,1]^d}\).

Note that the limiting error decreases when the determinant of the second derivative at the minimizer increases; a larger second derivative allows the search effort to concentrate more around the minimizer. The convergence rate in terms of the number of function evaluations n is quite fast, but the term \(\beta (f,d)\) decreases exponentially fast as d increases: as \(d\rightarrow \infty \), \(\beta (f,d) = O\left( d^{-(d+3)/2}\right) \). Therefore, this algorithm does not escape the curse of dimension.

We focus on the information complexity; that is, the cost is the number of function evaluations. The worst-case combinatorial cost of the algorithm grows quadratically with the number of iterations.

3 Interpolation error bounds

We will use results about interpolation from [30].

Lemma 1

Consider a hyper-rectangle \(R=\{x\in [0,1]^d: a_i\le x_i\le b_i, 1\le i\le d\}\), and let L denote the multilinear function that interpolates f at the vertices of the hyper-rectangle. We have the error bound

which implies the bound

Proof

See [30]. \(\square \)

The quantity

is a measure of how “bad” the hyper-rectangle is from the point of view of interpolation error; that is, how far it is from a cube. Since by construction of the algorithm the widths are within a factor of 2 of each other, there is some number \(1\le k\le d\) and a positive number w such that k of the dimensions have width w and \(d-k\) have width 2w, and

For \(k=d\) we get the value d and for \(k=1\) we get the value \((d-3/4)2^{2/d}\). Maximizing

over \(x\in ]0,1[\) gives a maximum value of

Therefore, the quality metric ranges from d up to about 1.27d, and the second bound in Lemma 1 implies that

Also note that

In addition to \(\left\| D^2f\right\| _{\infty , [0,1]^d}\), we introduce a functional that is in a sense a measure of how difficult it is to obtain an \(\epsilon \) approximation of f. For \(\epsilon >0\), define

The following lemma, proved in “Appendix A”, generalizes Lemma 3.2 of [21] to arbitrary dimension.

Lemma 2

4 Proof of Theorem 1

We begin with a rough outline the steps of the proof of Theorem 1, which will be based on a series of lemmas. The proofs of the lemmas appear in “Appendix B”.

Lemma 4 establishes that the hyper-rectangle that contains the global minimizer cannot be much larger than the smallest rectangle at that iteration; this fact allows us to bound the error in terms of \(v_n\), the volume of the smallest rectangle. Recalling that \(\rho ^n_i\) is the metric used to choose the next rectangle to split, Lemmas 5 and 6 establish that

while Lemma 7 shows that

With this, Lemma 2 allows us to bound \(g(v_n)\), and thereby the error. All of the analysis relies on Lemma 3, which gives an upper bound for \(\rho ^n\).

We consider a fixed function \(f\in \hat{F}\). The subscript n will indicate the number of iterations of the algorithm (not the number of function evaluations). We assume that \(n\ge n_2(f)\), where \(n_2(f)\) is the first time after \(n_1(f)\) that the smallest rectangle is about to be split and

(Later we will further restrict n.)

Lemma 3

For all \(n\ge n_2(f)\),

Lemma 4

Let \(v_n^*\) denote the volume of the hyper-rectangle containing the minimizer \(x^*\). For \(n\ge n_2\),

and

The following lemma gives a lower bound for \(\rho ^n\) that complements the upper bound provided by Lemma 3.

Lemma 5

For \(n\ge n_2(f)\),

Lemma 6

For \(n\ge n_3(f)\equiv 2n_2(f)\), we have the bounds

and

The following lemma allows us to approximate the average of the \(\{\rho ^n_i\}\) in terms of \({\mathcal I}_f(\cdot )\), for which we have the approximation given by Lemma 2.

Lemma 7

For \(n\ge n_2(f)\),

We are now ready to prove Theorem 1. From Lemma 6 we have the bounds

and

Therefore

using the first inequality in (13), (14), (15), and the second inequality in (13), respectively. We focus on the inequalities

There is a number \(n_4(f)\) such that, by Lemma 2, \(n\ge n_4(f)\) implies that

Replace \({\mathcal I_f}(g(v_n))\) by \(\log (1/g(v_n))\) (times appropriate factor) in the extreme terms of (??) to get

Now substitute the value

to obtain the bounds

This implies that eventually, say for \(n\ge n_5(f)\),

which entails that

Then

For \(n\ge n_0(f) \equiv \max \{ n_2(f), n_3(f), n_4(f), n_5(f)\}\),

Substituting the value of \(\alpha \) from Lemma 2, and using the bound (from Stirling’s approximation)

we obtain

Combined with (16) this yields the bound

where

Finally, note that this is the expression for the error after n iterations of the algorithm, which corresponds to as many as \(2^{d-1}n\) function evaluations. After a total of N function evaluations, an upper bound on the error is given by

This completes the proof of Theorem 1.

5 Numerical examples

The main goal of the research presented in the paper was the evaluation of convergence rate of the algorithm which generalizes the previously investigated algorithms for the case of arbitrary d. However, we do not expect the upper bound to be tight for typical test functions since the worst case objective function is considered. Therefore, including of some results of preliminary numerical experiments seemed reasonable to illustrate relation of the asymptotic convergence rate to the practical performance. The theoretical overestimate of the actual error is commented after the presentation of the experimental results.

A simple C++ implementation of the algorithm, applicable to the problems of low dimensionality, was used. Further, we plan to implement parallel version of the algorithm and perform the so called competitive software testing according to the widely accepted methodology [13, 31, 32].

The algorithm consists of the following main parts: computation of the selection criterion (3), selection and subdivision of the hyper rectangle, and storing of the new hyper rectangles produced. The computational burden needed for the implementation of the current iteration of algorithm depends on the dimensionality d and on the number of iteration. The dimensionality influences computing time of the criterion (3). The amount of stored information grows with the number of iteration, and correspondingly grows computer time needed for its management. Therefore, for the problems of higher dimensionality, the parallel implementation of the algorithm is planned. Meanwhile, the numerical results below are obtained using the straightforward C++ implementation.

The computation of criterion (3) reduces to the numerical integration over the hyper rectangle. In the two-dimensional case the integral can be computed using a combination of analytic and numerical techniques. Let \(\mathbf p ^i=(p^i_0, p^i_1)\), \(i=0, \dots , 3,\) be the rectangle vertices, numbered such that \(p^0_0 = p^1_0 < p^2_0 = p^3_0\) and \(p^0_1=p^2_1 < p^1_1=p^3_1\). The corresponding objective function values are then \(f_i = f(\mathbf p ^i), i=0, \dots , 3\). The bi-linear interpolation of the objective value at an arbitrary point \(\mathbf x =(x_0, x_1)\), \(x_j \in [a_j, b_j], a_j=p^0_j, b_j=p^3_j, j =0, 1, \) can then be expressed as

Then

where

Note that the function \(u(x_1)\) is continuous at the point \(\hat{x}_1=\frac{f_0-f_2}{f_0-f_1-f_2+f_3}\), \(\hat{x}_1 \in [a_1, b_1],\) that gives \(A(\hat{x}_1)=0\).

In the experiments for the two dimensional case we compute \(\rho ^n_i\) as a single dimensional integral using Eqs. 17–19. The single dimensional integral is computed numerically using the GNU Scientific Library [33] routine gsl_integration_cquad requiring a relative error smaller than \(10^{-7}\). We call the resulting implementation Rect-A. For comparison we also include the integration over the rectangle \(R_i\) using the classical Monte Carlo method (GNU Scientific Library routine gsl_monte_plain_integrate) with \(M=10{,}000\) evaluations of the two dimensional integrand (referred to as Rect-MC). Finally, we present a simplified version of computing \(\rho ^n_i\) for dimension \(d=2\) as

We call this algorithm version Rect-1. In all implementations the function evaluation database is employed, so that the objective is never evaluated twice at the same location.

For comparison, two following algorithms were used. A recent algorithm [21], based on the Delaunay triangulation of the optimization region and referred to as Simpl in this section, is also implemented in the C++ programming language and uses the 2D and 3D Delaunay triangulation code from the CGAL library [34]. The comparison with a non-adaptive algorithm presents interest especially because of mentioned in the introduction different convergence rates. The non-adaptive algorithm is implemented using the so called \(LP_{\tau }\) sequences [35], and is worst case quasi optimal since it generates points for computing objective function values nearly uniformly in the feasible region. The results of that algorithm are referred to as Sobol.

We start by illustrating the partition of the feasible regions by the algorithms Rect-A during minimization of the following objective functions:

-

The six-hump camel back function [36]:

$$\begin{aligned} f(\mathbf x )&= \left( 4-2.1x_1^2 + x_1^4/3\right) x_1^2 + x_1x_2+ (-\,4+4x_2^2)x_2^2,\nonumber \\&\quad \mathbf x \in [-3, 3]\times [-\,2, 2]. \end{aligned}$$(21) -

The Branin function [37]:

$$\begin{aligned} f(\mathbf x )&= \left( x_2-\frac{5.1}{4\pi ^2}x_1^2+5\frac{x_1}{\pi }-6\right) ^2+ 10\left( 1- \frac{1}{8\pi }\right) \cos (x_1)+10,\nonumber \\&\quad \mathbf x \in [-\,5, 10]\times [0, 15]. \end{aligned}$$(22)

Figure 1 shows the partition after \(n=3000\) objective function evaluations. It can be seen that the space is roughly explored everywhere, however, the trial points are positioned more densely in the regions of potential global minima.

Next, we present the numerical experiments aimed at the comparison of the proposed algorithm with the algorithm of similar destination which implements the partition based on the Delaunay triangulation of the feasible region [21]. The latter was tested using a set of 2-dimensional objective functions from [38], that were used as well as in numerous other papers investigating Lipschitz optimization algorithms.

Table 1 presents results of minimization of test functions from [38] using the same numeration. The optimization error is presented (on a logarithmic scale) as \(\delta _n=-\log _{10}(M_n-f_{min})\), after \(n=1000, 3000\) function evaluations for each of the algorithms Rect-A, Rect-1, Rect-MC, Simpl [21] and Sobol. Symbol \(\infty \) means that zero error has been achieved within machine precision. The numbers in the left column have been copied from the function definitions in [38] on p. 468. The values for the algorithm [21] have been copied from the Table 1 of the paper [21].

It can be seen that the various versions of the proposed algorithm perform comparably with the algorithm [21]. Moreover, there appears to be no significant difference between implementations Rect-A and Rect-MC, suggesting that the Monte Carlo technique could be used to approximate the integral in the higher dimensions. As could be expected, the results of the non-adaptive Sobol algorithm are the worst. The minimization of the function No. 13 was similarly problematic to all algorithms, as the global minimum of this function is hidden in a small basin behind a sharp spike.

The minimization results for objective functions defined over 3-dimensional regions are shown in Table 2. The functions are also taken from [38]. Since the analytic expression of the integral is not available in the 3-dimensional case, we included only versions Rect-1 and Rect-MC (\(M=100000\) evaluations of the integrand per hyper-rectangle) of the proposed algorithm. The table shows that the proposed algorithm performs better than other algorithms.

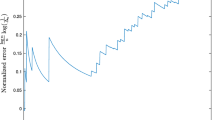

The number of iteration needed to find an appropriate approximation of minimum can depend on a coincidental match of properties of an algorithm and objective function. To reduce that factor, the performance profiles below are evaluated using a sample of randomly generated problems where the same objective function is minimized over a feasible region which is randomly (with uniform distribution) scrolled for 10% of its original extend. The average and maximum errors of 100 random problems were recorded and plotted against number of function evaluations n. The logarithmic scale for the presentation of graphs was used: \(\delta _n=-\,\log _{10}(M_n-f_{min})\), where \(M_n\) denotes the best function value found up to the \(n\mathrm{th}\) function evaluation and \(f_{min}\) is the actual global minimum value. In Fig. 2 are presented graphs for (2-dimensional) six-hump camel back and Branin functions; the version of algorithm Rect-A was used, partition of the feasible region is shown in Fig. 1.

The maximum and average error after n function evaluations of algorithms Rect-1 and Sobol with 100 perturbed cases of the Rastrigin test problem (Eq. 23) for \(d=2, 3,4,5\)

To illustrate the influence of the dimension similar experiments were performed with test problems up to dimension 5. Let us note, that contemporary publications on algorithms of similar destination, i.e supposed for expensive black box type problems, typically present experiments with objective functions up to dimensionality five, six [16, 39]. The test problems were constructed extending the two dimensional Rastrigin function [15] for dimension d:

Such an extension keeps fixed number of local minima equal to 5. The global minimum point is the d dimensional vector with zero components. The results for \(d=2,3,4,5\) are presented in Fig. 3, and are also quantified in the Table 3.

Now we can compare the upper bound given by Theorem 1 with the actual error estimated experimentally. Our analysis uses the inequality \(v_n\le 1/n\) (\(v_n\) is the volume of the smallest rectangle after n iterations), for example in the last inequalities in the proof of Lemma 4. If f is near its minimum over much of the domain, then \(v_n\approx 1/n\) (think of a nearly constant function with a tiny, locally quadratic valley in the center of the domain). For our test functions, \(v_n\) is much smaller than 1 / n. We computed the characteristics of the test function at (23), and arrived at a value of \(\beta = 2.05 \times 10^{-4}\) for dimension \(d=5\). For \(n=60{,}000\), this gives an upper bound of \(-\,0.02\), while the corresponding number is less than \(-\,5\) in the last graph of Fig. 3 (the difference is even larger if we take into account the factor in front of the exponential term).

References

Floudas, Ch.: Deterministic Global Optimization: Theory, Algorithms and Applications. Kluwer, Dordrecht (2000)

Horst, R., Pardalos, A.P., Thoai, N.: Introduction to Global Optimization. Kluwer, Dordrecht (1995)

Horst, R., Tuy, H.: Global Optimization: Deterministic Approaches. Springer, Berlin (1996)

Pinter, J.: Global Optimization in Action. Kluwer, Dordrecht (1996)

Sergeyev, Y.D.: Multidimensional global optimization using the first derivatives. Comput. Maths. Math. Phys. 39(5), 743–752 (1999)

Sergeyev, Y.D., Kvasov, D.E.: A deterministic global optimization using smooth diagonal auxiliary functions. Commun. Nonlinear Sci. Numer. Simul. 21, 99–111 (2015)

Sergeyev, Y.D., Kvasov, D.E.: Deterministic Global Optimization, An Introduction to the Diagonal Approach. Springer, New York (2017)

Mockus, J.: Bayesian Approach to Global Optimization. Kluwer, Dordrecht (1988)

Strongin, R.G., Sergeyev, Y.D.: Global Optimization with Non-convex Constraints: Sequential and Parallel Algorithms. Kluwer, Dordrecht (2000)

Zhigljavsky, A.: Theory of Global Random Search. Kluwer, Dordrecht (1991)

Žilinskas, A.: A statistical model-based algorithm for black-box multi-objective optimisation. Int. J. Syst. Sci. 45(1), 82–92 (2014)

Žilinskas, A., Zhigljavsy, A.: Stochastic global optimization: a review on the occasion of 25 years of Informatica. Informatica 27, 229–256 (2016)

Hooker, J.: Testing heuristics: we have it all wrong. J. Heuristics 11, 33–42 (1995)

Pardalos, P., Romeijn, H.: Handbook of Global Optimization, vol. 2. Springer, Berlin (2002)

Rastrigin, L.: Statistical Models of Search. Nauka (2013) (in Russian)

Paulavičius, R., Sergeyev, Y., Kvasov, D., Z̆ilinskas, J.: Globally-biased DISIMPL algorithm for expensive global optimization. J. Glob. Optim. 59, 545567 (2014)

Žilinskas, A., Z̆ilinskas, J.: A hybrid global optimization algorithm for non-linear leist squares regression. J. Glob. Optim. 56, 265–277 (2013)

Calvin, J., Žilinskas, A.: A one-dimensional P-algorithm with convergence rate \(o(n^{-3+\delta })\) for smooth functions. J. Optim. Theory Appl. 106, 297–307 (2000)

Calvin, J.M.: An adaptive univariate global optimization algorithm and its convergence rate under the Wiener measure. Informatica 22, 471–488 (2010)

Calvin, J.M., Chen, Y., Z̆ilinskas, A.: An adaptive univariate global optimization algorithm and its convergence rate for twice continuously differentiable functions. J. Optim. Theory Appl. 155, 628–636 (2011)

Calvin, J.M., Z̆ilinskas, A.: On a global optimization of bivariate smooth functions. J. Optim. Theory Appl. 163, 528–547 (2014)

Novak, E., Woźniakowski, H.: Tractability of Multivariate Problems, II. Tracts in Mathematics, vol. 12. European Mathematical Society, Zürich (2010)

Huyer, W., Neumaier, A.: Global optimization by multi-level coordinate search. J. Glob. Optim. 14, 331–355 (1999)

Jones, D.R., Perttunen, C.D., Stuckman, C.D.: Lipschitzian optimization without the lipschitz constant. J. Optim. Theory Appl. 79, 157–181 (1993)

Sergeyev, Y., Kvasov, D.: Global search based on efficicient diagonal partitions and set of Lipschitz constants. SIAM J. Optim. 16(3), 910–937 (2006)

Scholz, D.: Deterministic Global Optimization: Geometric Branch-and-Bound Methods and their Applications. Springer, Berlin (2012)

Paulavičius, R., Z̆ilinskas, J.: Simplicial Global Optimization. Springer Briefs in Optimization. Springer, Berlin (2014)

Novak, E.: Deterministic and Stochastic Error Bounds in Numerical Analysis, Lecture Notes in Mathematics, vol. 1349. Springer, Berlin (1988)

Zhigljavsy, A., Žilinskas, A.: Stochastic Global Optimization. Springer, Berlin (2008)

Waldron, S.: Sharp error estimates for multivariate positive linear operators which reproduce the linear polynomials. In: Chui, C.K., Schumaker, L.L. (eds.) Approximation Theory IX, vol. 1, pp. 339–346. Vanderbilt University Press, Nashville (1998)

McGeoch, C.: Experimental analysis of algorithms. In: Pardalos, P., Romeijn, E. (eds.) Handbook of Global Optimization, vol. 2, pp. 489–514. Kluwer, Dodrecht (2002)

More, J., Wild, S.: Benchmarking derivative-free optimization algorithms. SIAM J. Optim. 20, 172–191 (2009)

GSL, GNU Scientific Library. https://www.gnu.org/software/gsl/. Accessed on 1 Aug 2016

Cgal, Computational Geometry Algorithms Library. http://www.cgal.org. Accessed on 15 Oct 2014

Sobol, I.: On the systematic search in a hypercube. SIAM J. Numer. Anal. 16, 790793 (1979)

Dixon, L.C.W., Szegö, G.P. (eds.): Towards Global Optimization II. North Holland, New York (1978)

Branin, F.: Widely convergent method for finding multiple solutions of simultaneous nonlinear equations. IBM J. Res. Dev. 16(5), 504–522 (1972)

Hansen, P., Jaumard, B.: Lipshitz optimization. In: Horst, R., Pardalos, P. (eds.) Handbook of Global Optimization, pp. 407–493. Kluwer, Dodrecht (1995)

Liu, H., Xu, S., Ma, Y., Wang, X.: Global optimization of expensive black box functions using potential lipschitz constants and response surfaces. J. Glob. Optim. 63, 229251 (2015)

Acknowledgements

We thank the reviewers for valuable hints and remarks which facilitated the improvement of presentation of our results. The work of J. Calvin was supported by the National Science Foundation under Grant No. CMMI-0926949 and the work of G. Gimbutienė and A. Žilinskas was supported by the Research Council of Lithuania under Grant No. P-MIP-17-61.

Author information

Authors and Affiliations

Corresponding author

Appendices

Appendix A

This appendix contains the proof of Lemma 2.

Denote the eigenvalues of \(D^2f(x^*)\) by

Then by Taylor’s theorem (x a column vector),

Since \(D^2f(x^*)\) is symmetric positive definite, we can express it as

for an orthogonal matrix V and diagonal matrix \(\varTheta =\mathrm{diag}(\theta _1, \theta _2,\ldots ,\theta _d)\). Then

and

where \(Tx \equiv \varTheta ^{1/2}V^Tx\).

Let \(B_c^d(0)\) denote the ball of radius c, centered at 0, in \(\mathbb {R}^d\). For \(c>0\), let

which is positive since the minimizer is assumed unique and f is continuous. Then

and

by (24). For any \(\eta \in ]0,1/2]\), we can choose \(c>0\) small enough so that

Therefore,

as \(\epsilon \downarrow 0\), and similarly

Using the orthogonality of V, for any \(b>0\),

where \(E(c,b,\epsilon )\) is the image of the ball of radius c under the map \(x_i \mapsto x_i(\theta _ib/\epsilon )^{1/2}\), and we used the substitution \(y_i \leftarrow x_i\left( \theta _i b/\epsilon \right) ^{1/2}\) in the last equation. Therefore,

and (27) gives the bounds

Let \(\mathcal {V}(x)=\pi ^{d/2}\varGamma (d/2 +1)^{-1}x^d\) denote the volume of the d-dimensional ball of radius x. For \(z>0\),

For \(z>1\),

and for \(A<z\),

Combining this inequality with (28) we conclude that

Set

and

By (29), \(G_d(c)/\log (c)\rightarrow 1\) as \(c\uparrow \infty \). For any \(b>0\), we have the bounds

and

Since \(\eta >0\) is arbitrary, the fact that \(G_d(c)/\log (c)\rightarrow 1\) as \(c\uparrow \infty \) completes the proof.

Appendix B

This appendix contains the proofs of the lemmas upon which the proof of Theorem 1 are based.

Proof of Lemma 3

Let \(m\le n\) be the last time before n that the smallest hyper-rectangle was about to be split, so \(v_m = 2v_n\) and by (5),

Note that \(m\ge n_0\) by definition of \(n_2\).

We will show by induction that

for \(k\in \{1,2,\ldots ,n-m\}\), if the hyper-rectangle that the algorithm is subdividing at iteration \(m+k\) has not been previously subdivided since time m. At other times the bound is twice as large.

Let us first consider \(\{\rho ^{m+1}_i: i\le m+1\}\). Suppose that i is the split hyper-rectangle at time m, and let j denote a non-split hyper-rectangle, so \(\rho ^m_j \le \rho _i^m\). Then there exists a point \(c_j\in R_j\) such that

Next consider a child of the split subrectangle i. Since we are splitting the smallest subrectangle, \(v_m = 2v_n\), and there exists a point \(c_i\in R_i\) such that (supposing that one child has index i at time \(m+1\))

We have established the base case for the induction. Now consider iteration \(m+k+1\), \(1\le k<n-m\). For the induction hypothesis, assume that

if hyper-rectangle i has not been split since time m and

if hyper-rectangle i has been split since time m.

Suppose that the most promising hyper-rectangle at iteration \(m+k+1\) is subrectangle \(R_j=\prod _{i=1}^d[a_i,b_i]\),

Assume that this hyper-rectangle has not been split since time m. Let us suppose that during this interval (between m and the next time that the smallest hyper-rectangle is split) R is split r times, and denote by \(L^\prime \) the piecewise multilinear function defined over R after the splitting evaluations. Then

where

Set

Then, denoting by \(\rho _1\) the sum of the \(\rho \) values for the resulting subrectangles of R at time \(m+k+q\), we have

Let

where \(a=\min _{s\in R} L_{m+k+1}(s)-M_n+g(v_n) >0\). Then

The latter ratio is maximized by \(Q\equiv 0\), which corresponds to \(\rho _0 = \left| R\right| /a^{d/2}\), and so

We used the inequalities

which follows from the induction hypothesis.

We have shown that whenever the algorithm is about to subdivide a hyper-rectangle for the first time since m, \(\rho ^{m+k}\le 2/\lambda \log (m+k)\). After a hyper-rectangle is subdivided, subsequent subdivisions can never result in \(\rho \) more than twice as large.

The proof by induction of (30) is complete. \(\square \)

Proof of Lemma 4

Suppose (to get a contradiction) that \(\left| R_i\right| =v_n^*\), \(\left| R_j\right| =v_n\), and \(v_n^*=4 v_n\), but yet we are about to split hyper-rectangle \(R_j\), resulting in \(v_n^*>4v_n\); that is, \(\rho ^n_j \ge \rho ^n_i\):

for some \(c_i\in R_i, c_j\in R_j\). This implies that

But \(L_n(c_j) \ge M_n\) and

Thus

This means that

since \(d\ge 2\). But this contradicts (31), and establishes (9).

The proof of (10) follows from

\(\square \)

Proof of Lemma 5

Recall that \(R^*_n\) denotes the hyper-rectangle containing \(x^*\) at time n, and that \(\left| R^*_n\right| = v^*_n \le 4 v_n\) by Lemma 4. If h is the minimal edge length of \(R^*_n\), then \(v^*_n = 2^j h^d\) for some \(0 \le j <d\), and the diameter of \(R^*_n\) is less than \(2h\sqrt{d}\). Also \(h = 2^{-j/d}(v^*_n)^{1/d}\). Therefore, for \(s\in R^*_n\),

Therefore, by the previous inequality

\(\square \)

Proof of Lemma 6

Equation (11) follows from Lemma 3.

For \(n\ge n_2(f)\), the \(\rho \) values for the children of a split hyper-rectangle will not be much smaller than the parent. The largest possible decrease occurs if the values on the boundary of \(R_i\) are constant, say with value \(M_{m+k}+A\), and the new function values are the largest possible, namely \(M_{n}+A+a\), where

Considering a child of the split hyper-rectangle (say with index i),

using the inequality \((1+x/k)^k \le \exp (x)\) and the fact that \(d\ge 2\) implies that \(4^{2/d}/(4d)\le 1/2\).

Consider the average of the \(\{\rho ^n_i\}\) at time n that were the product of splits after time n / 2. Over this time interval we have

by Lemma 5, since \(n\ge 2n_2(f)\). Since the children \(\rho \) values will not be much smaller than the parent’s,

since \(v_i\ge v_n\). \(\square \)

Proof of Lemma 7

Fix a particular hyper-rectangle \(R_i\) with

We first show that the integrals

are close. As in the proof of Lemma 3, let

where \(a=\min _{s\in {R_i}} L_n(s)-f^*+g(v_n) >0\). Then, from (6),

and

The smallest value of the ratio occurs when \(Q(s)\equiv 0\).

The case \(Q\equiv 0\) corresponds to \(\rho = \left| {R_i}\right| /a^{d/2}\), and so

Now

Therefore,

since the last expression is increasing in \(d\ge 2\). Turning to an upper bound for

observe that

We have shown that

Recall that \(v_n^*\) denotes the volume of the hyper-rectangle containing the minimizer \(x^*\). Since \(n\ge n_2\), by Lemma 4 \(v_n^* \le 4 v_n\) and

Therefore,

Combining these inequalities with (32) gives

Since

this completes the proof. \(\square \)

Rights and permissions

About this article

Cite this article

Calvin, J., Gimbutienė, G., Phillips, W.O. et al. On convergence rate of a rectangular partition based global optimization algorithm. J Glob Optim 71, 165–191 (2018). https://doi.org/10.1007/s10898-018-0636-z

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10898-018-0636-z