Abstract

A new class of extragradient-type methods is introduced for solving an equilibrium problem in a real Hilbert space without any monotonicity assumption on the equilibrium function. The strategy is to replace the second projection step in the classical extragradient method by a projection onto shrinking convex subsets of the feasible set. Furthermore, to ensure a sufficient decrease on the equilibrium function, a general Armijo-type condition is imposed. This condition is shown to be satisfied for four different linesearches used in the literature. Then, the weak and strong convergence of the resulting algorithms is obtained under non-monotonicity assumptions. Finally, some numerical experiments are reported.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Let C be a nonempty closed convex subset of a real Hilbert space \({\mathscr {H}}\) and let f be a function from \({\mathscr {H}} \times {\mathscr {H}}\) to \(\mathbb {R}\). The equilibrium problem associated with f and C in the sense of Blum and Oettli [6] consists in finding a point \(x^* \in C\) such that

This problem is denoted by EP(f, C) and its solution set by \(S_E\). The equilibrium problem, also known under the name of Ky Fan inequality [11], is very general in the sense that it includes, as special cases, many well-known problems: the optimization problem, the variational inequality problem, the saddle point problem, the (generalized) Nash equilibrium problem in game theory, the fixed point problem, and others; see, for instance, [24, 26, 31], and the references quoted therein. Associated with the classical equilibrium problem, the dual equilibrium problem, denoted DEP(f, C), can be expressed as finding \(x^* \in C\) such that

This problem was initially introduced in 1964 by Debrunnen and Flor [9] for variational inequalities where a first existence result was obtained. In 1967 it was reconsidered by Minty [21] and its relevance to applications was pointed out in [12].

Let us denote by \(S_D\) the solution set of DEP(f, C). The inclusion \(S_E \subset S_D\) holds under the pseudo-monotonicity assumption of f on C, namely: for every \(x,y \in C\)

In that situation, the inclusion \(S_E \subset S_D\) can also be expressed as

As mentioned in [13], the converse inclusion \(S_D \subset S_E\) is true when \(f(\cdot ,y)\) is upper semicontinuous for every \(y \in C\) and \(f(x,\cdot )\) is convex for every \(x \in C\) (see also [5]).

Many methods have been proposed for solving equilibrium problems such as the projection methods [19, 24, 32], the proximal point methods [14, 23], the subgradient methods [29, 36], the extragradient methods with or without linesearches [25, 26, 33, 38], the gap function method [20, 27] and the bundle methods [34]. Each solution method is adapted to a class of equilibrium problems so that the convergence of the algorithm can be guaranteed. The reader is referred to [5] and the references quoted therein for an excellent survey on the existing methods.

Among the solution methods for solving \(\textit{EP}(f,C)\), the extragradient method is well-known because of its efficiency in numerical tests. To the best of our knowledge, most of the extragradient methods require at least the pseudo-monotonicity assumption on the function f. However, this assumption may not be satisfied in a lot of practical problems as, for example, in the Nash–Cournot equilibrium problem [25]. So our aim in this paper is to study a new class of extragradient methods for solving \(\textit{EP}(f,C)\) in the framework of Hilbert spaces and without assuming any pseudo-monotonicity assumption on f. To do this, the projection algorithms proposed in [2, 10, 26] for solving finite-dimensional equilibrium problems are modified and embedded in a class of extragradient methods. The prediction step is unchanged but the correction step is new and obtained by projecting the prediction point onto shrinking convex subsets of C that contain the solution set \(S_D\) of problem \(\textit{DEP}(f,C)\). Since these subsets become smaller and smaller after each iteration, the proposed method should have a good numerical behavior. Furthermore, this strategy allows us to prove the convergence of the method without assuming any pseudo-monotonicity property on f but rather the condition

When \(S_D \subset S_E\), this condition is equivalent to the nonemptyness of the set \(S_D\) and allows us to obtain the convergence.

Recently, Ye and He presented in [37] a double projection method for solving finite dimensional variational inequalities without monotonicity assumptions on the function defining the variational inequality. Their method is a modification of a method due to Solodov and Svaiter [30] and coincides with one of the methods studied in this paper when the equilibrium problem is a finite-dimensional variational inequality problem. Finally, let us mention that other methods also exist for solving non-monotone equilibrium problems. One of them consists in solving at each iteration a perturbed equilibrium problem depending on a parameter \(\varepsilon \) that goes to zero. The reader is referred to [17] for more details.

The remainder of the paper is organized as follows: In Sect. 2, some preliminary results are recalled. A very general algorithm is presented in Sect. 3 for finding a point in the intersection of a sequence \(\{C_k\}\) of closed convex subsets of \(C \subset {\mathscr {H}}\). The weak and strong convergence of this algorithm is discussed in detail. In the next section, the sets \(C_k\) are defined via an extragradient method using a general Armijo-type condition. In Sect. 5, four implementable linesearches satisfying the general Armijo-type condition are introduced and the weak and strong convergence of the resulting algorithms is proven under the very mild assumption that the set \(S_D\) is nonempty. Finally, some numerical results are reported in Sect. 6 to show the efficiency of the methods.

2 Preliminaries

Let C be a nonempty closed convex subset of a real Hilbert space \({\mathscr {H}}\). For each \(x\in {\mathscr {H}}\), there exists a unique point in C, denoted \(P_Cx\), such that

The mapping \(P_C\) is known as the metric projection from \({\mathscr {H}}\) onto C. Below are listed some well-known properties of the projection \(P_C\) (see, for example, [16]).

Theorem 2.1

-

(a)

\(P_C(\cdot )\) is a nonexpansive mapping, i.e., for all \(x,y\in {\mathscr {H}}\)

$$\begin{aligned} \Vert P_Cx-P_Cy\Vert \le \Vert x-y\Vert . \end{aligned}$$ -

(b)

For any \(x\in {\mathscr {H}}\) and \(y\in C\), it holds that \(\left<x-P_Cx, y-P_Cx\right>\le 0\).

Conversely, if \(u\in C\) and \(\left<x-u, y-u\right>\le 0\) for all \(y\in C\), then \(u=P_Cx\).

Let \(f:{\mathscr {H}} \times {\mathscr {H}} \rightarrow \mathbb {R}\) be a function such that \(f(x,\cdot )\) is convex for every \(x \in {\mathscr {H}}\). Let also \(x,y \in {\mathscr {H}}\) and \(\varepsilon \ge 0\). The \(\varepsilon \)-subdifferential of \(f(x,\cdot )\) at y is denoted \(\partial _2^{\varepsilon }\,f(x,y)\) and defined by

When \(\varepsilon =0\), the set \(\partial _2^{\varepsilon } f(x,y)\) is written \(\partial _2 f(x,y)\) and called the subdifferential of \(f(x, \cdot )\) at y.

One often considers \(\textit{EP}(f,C)\) with some additional properties imposed on the function f such as monotonicity and pseudo-monotonicity. Let us recall these well-known definitions.

Definition 2.1

A function \(f: {\mathscr {H}} \times {\mathscr {H}} \rightarrow \mathbb {R}\) is called

-

(i)

pseudo-monotone on C if for all \(x,y \in C\),

$$\begin{aligned} f(x,y) \ge 0 \quad \Rightarrow \quad f(y,x) \le 0 \end{aligned}$$ -

(ii)

monotone on C if for all \(x,y \in C\),

$$\begin{aligned} f(x,y) + f(y,x) \le 0. \end{aligned}$$

It is easy to see that \((\mathrm ii) \Rightarrow (\mathrm i)\).

Throughout this paper, for finding a solution of problem \(\textit{EP}( f,C)\), we assume that the solution set \(S_D\) of the dual equilibrium problem \(\textit{DEP}(f,C)\) is nonempty and the function \(f:\,{\mathscr {H}} \times {\mathscr {H}} \rightarrow \mathbb {R}\) satisfies the following conditions:

- \((U_1)\) :

-

\(f(x,x)=0\) for all \(x\in {\mathscr {H}}\);

- \((U_2)\) :

-

\(f(x,\cdot )\) is a continuous and convex function on \({\mathscr {H}}\) for all \(x\in {\mathscr {H}}\);

- \((U_3)\) :

-

f is jointly weakly continuous on \({\mathscr {H}} \times {\mathscr {H}}\). In other words, if \(x,y \in {\mathscr {H}}\) and \(\{x_n\}\) and \(\{y_n\}\) are two sequences in \({\mathscr {H}}\) converging weakly to x and y, respectively, then \(f(x_n,y_n) \rightarrow f(x,y)\).

From Condition \((U_2)\), we have immediately that the function \(f(x,\cdot )\) is subdifferentiable for any \(x \in {\mathscr {H}}\) (see for example [15], Theorem 3.26).

In this paper we do not assume that the function f is pseudo-monotone on C as in the classical extragradient methods [26] and their variants [2, 3, 10], where the pseudo-monotonicity of f is used to get that \(S_E \subset S_D\). The following example shows that property \(S_E \subset S_D\) may not be satisfied when f is not pseudo-monotone.

Example 1

Let \(C=[-1,1]\times [-1,1] \subset \mathbb {R}^2\) and consider the equilibrium function defined, for every \(x=(x_1,x_2), y=(y_1,y_2) \in \mathbb {R}^2\) by

It is easy to see that

and thus that \(S_D \subset S_E\) with \(S_E \not = S_D\). Moreover, for \(x=(0,0)\) and for all \(y \in [-1,0)\times [-1,0)\), we have

This means that f is neither monotone nor pseudo-monotone on C.

The next two propositions will be useful for proving the convergence of our algorithms.

Proposition 2.1

Assume that the function f satisfies conditions \((U_1)\)–\((U_3)\). Let \(\{v^k\}\) and \(\{w^k\}\) be two bounded sequences in C and let \(\{\mu ^k\}\) be a sequence such that

Then

-

(i)

There exists a subsequence of \(\{\mu ^k\}\) which is bounded;

-

(ii)

If, in addition, \(\varepsilon _k=0\) for all k and the sequences \(\{v^k\}\) and \(\{w^k\}\) are weakly convergent, then the whole sequence \(\{\mu ^k\}\) is bounded.

Proof

(i) The function \(f(x,\cdot )\) being continuous and convex for all \(x \in {\mathscr {H}}\) (see Condition \((U_2)\)), and \(\mu ^k\) belonging to \(\partial _2^{\varepsilon _k} f(v^k,w^k)\) for all k, it follows from the Brønsted-Rockafellar property (see [7] Lemma, p. 608) that for all k, there exists \(t^k \in B(w^k,1)\) such that

where B(x, r) denotes the ball with center x and radius \(r>0\). The sequence \(\{w^k\}\) being bounded, it is easy to see that the sequence \(\{t^k\}\) is also bounded and thus that one of its subsequences denoted by \(\{t^{k_j}\}\) converges weakly to some \(\bar{t}\). Similarly, the sequence \(\{v^k\}\) being bounded, there exists a subsequence of \(\{v^{k_j}\}\) denoted again by \(\{v^{k_j}\}\) that converges weakly to some \(\bar{v}\). Then it follows from Proposition 4.3 in [35] that there exist \(\eta >0\) and an integer \(j_0\) such that for all \(j \ge j_0\)

Consequently, for all \(j \ge j_0\),

Since \(\partial _2 f(\bar{v},\bar{t})\) and B(0, 1) are bounded, the sequence \(\{\mu ^{k_j}\}\) is bounded.

(ii) When \(\varepsilon _k =0\) for all k and the sequences \(\{v^k\}\) and \(\{w^k\}\) are weakly convergent to \(\bar{v}\) and \(\bar{w}\), respectively, it follows from Proposition 4.3 in [35] that there exist \(\eta >0\) and an integer \(k_0\) such that for all \(k \ge k_0\)

Since \(\partial _2 f(\bar{v},\bar{w})\) and B(0, 1) are bounded, the whole sequence \(\{\mu ^{k}\}\) is bounded. \(\square \)

Proposition 2.2

([18], Lemma 1.5) Let \(\varOmega \) be a nonempty, closed and convex subset of \({\mathscr {H}}\). Let \(u \in {\mathscr {H}}\) and let \(\{x^k\}\) be a sequence in \({\mathscr {H}}\) such that any weak limit point of \(\{x^k\}\) belongs to \(\varOmega \) and

Then \(x^k \rightarrow P_\varOmega (u)\) (strongly).

3 A general algorithm

Let C be a closed convex subset of a real Hilbert space \({\mathscr {H}}\) and let \(\{C_k\}\) be a sequence of closed convex subsets of C. Let also \(C_{\infty }\) be a nonempty closed convex subset of \({\mathscr {H}}\) such that \(C_{\infty } \subset C_k\) for all k. In the first part of this section, we consider the sequence \(\{x^k\}\) defined by

In the next proposition we give some properties of the sequence generated by (3.1).

Proposition 3.1

Let \(x^* \in C_{\infty }\) and let \(\{x^k\}\) be any sequence generated by (3.1). Then

Furthermore, the sequence \(\{x^k\}\) is bounded and

Proof

Let \(x^* \in C_{\infty }\) and let k be fixed. Then \(x^* \in C_k\) and since \(x^{k+1}=P_{C_k}x^k\), we have \( \langle x^k-x^{k+1}, x^* - x^{k+1}\rangle \le 0\). So we can successively write

The rest of the proof is straightforward. \(\square \)

Proposition 3.2

If any weak limit point of the sequence \(\{x^k\}\) belongs to \(C_{\infty }\), then the whole sequence \(\{x^k\}\) converges weakly to some \(\bar{x} \in C_{\infty }\).

Proof

The sequence \(\{x^k\}\) being Féjer monotone with respect to \(C_\infty \) (see Proposition 3.1), the result directly follows from Theorem 5.5 of [4]. \(\square \)

Now, before proving that any weak limit point of the sequence \(\{x^k\}\) belongs to \(C_{\infty }\), we recall the following definition:

Definition 3.1

([22], p. 519) A sequence \(\{S_k\}\) of subsets of \({\mathscr {H}}\) converges to \(S\subset {\mathscr {H}}\) (in short, \(S_k \rightarrow S\)) if

where

and

Remark 3.1

Since \(C_\infty \subset C_k\) for all k, we have (see [22], Sect. 3) that

More information about the convergence of a sequence of subsets in a real Hilbert space can be found in Mosco’s paper [22].

Proposition 3.3

If the sequence \(\{C_k\}\) converges to \(C_\infty \), then any weak limit point of the sequence \(\{x^k\}\) defined by (3.1) belongs to \(C_\infty \).

Proof

Let x be a weak limit point of \(\{x^k\}\). Then there exists a subsequence \(\{x^{j}\}\) of \(\{x^k\}\) that converges weakly to x and that satisfies for all j the property: \(x^{j} \in C_{k_j}\) where \(k_j=j-1\). Consequently, x belongs to \(w-\lim \sup \,C_k\), and thus also to \(C_\infty \) thanks to Remark 3.1. \(\square \)

Now to obtain that the sequence \(\{C_k\}\) converges to \(C_\infty \), we assume that the sets \(\{C_k\}\) satisfy the following conditions:

- \((T_1)\) :

-

\(C_{k+1} \subset C_k \subset C\) for all k;

- \((T_2)\) :

-

\(C_\infty = \cap _{k=0}^\infty C_k \not = \emptyset \).

Proposition 3.4

Under conditions \((T_1)\), \((T_2)\), the sequence \(\{C_k\}\) converges to \(C_\infty \) and any weak limit point of the sequence \(\{x^k\}\) belongs to \(C_\infty \).

Proof

Thanks to Remark 3.1 and Proposition 3.3, we have only to prove that

In that purpose, let \(x \in w-\lim \sup \,C_k\). Then there exists a sequence \(\{x^j\}\) converging weakly to x with \(x^j \in C_{k_j}\) for all j where \(\{C_{k_j}\}\) is a subsequence of \(\{C_k\}\). Next, let \(N \in \mathbb {N}\). Since \(k_j \rightarrow \infty \) as \(j \rightarrow \infty \), there exists \(j_0\) such that \(k_j \ge N\) for all \(j \ge j_0\). Then, using assumption \((T_1)\), we can write that

On the other hand, the set \(C_N\) being closed and convex is also weakly closed. Consequently, since \(x^{j} \rightharpoonup x\) as \(j \rightarrow \infty \), we obtain that \(x \in C_N\). Finally, recalling that \(C_\infty = \cap _{N=0}^\infty C_N\), we can easily deduce that \(x \in C_\infty \). \(\square \)

From Propositions 3.1–3.4 we can derive the following theorem:

Theorem 3.1

Under conditions \((T_1)\), \((T_2)\), the sequence \(\{x^k\}\) defined by (3.1) is bounded and converges weakly to some \(x \in C_\infty \).

In the second part of this section, our aim is to generate a sequence \(\{x^k\}\) that converges strongly to some \(x^* \in C_\infty \) under the same conditions \((T_1)\) and \((T_2)\). Furthermore, we impose that \(x^*=P_{C_\infty }(x^g)\) where \(x^g \in C\) is given. This can be done by adding a supplementary step in (3.1). More precisely, let \(x^0=x^g \in C\) and assume that \(x^k \in C\) is known. Then \(x^{k+1}\) is computed through an iterate \(u^k\) as follows:

where the sequence \(\{C_k\}\) satisfies conditions \((T_1)\) and \((T_2)\) and the sets \(B_k\) and \(D_k\) are defined for all k by

Using Proposition 3.1 with \(x^{k+1}\) replaced by \(u^k\), we obtain, for all k, the following inequality

where \(x^* \in C_\infty \). In the next proposition, we give the relationship between the set \(C_\infty \) and the sets \(B_k\) and \(D_k\).

Proposition 3.5

Let \(B_k\) and \(D_k\) be the subsets of \({\mathscr {H}}\) defined by (3.3) and (3.4). Then

Proof

Let \(x^* \in C_\infty \). Using (3.5) and the definition of \(C_\infty \) and \(B_k\), we easily obtain that \(x^* \in B_k \cap C\). Let us prove, by induction, that \(x^* \in D_k\) for all k. Since \(x^0=x^g\), we have immediately that \(x^* \in D_0\). Next, suppose that \(x^* \in D_k\) for some k. By definition of \(x^{k+1}\), we have that

Since \(x^* \in B_k \cap D_k \cap C\), we immediately deduce that

i.e., \(x^* \in D_{k+1}\). \(\square \)

In order to prove the strong convergence of the sequence \(\{x^k\}\) generated by means of (3.2), we need the following proposition.

Proposition 3.6

The sequences \(\{x^k\}\) and \(\{u^k\}\) generated by (3.2) satisfy the properties:

-

1.

\(\Vert x^k-x^g\Vert \le \Vert x^g-P_{C_\infty }(x^{g})\Vert \) for all k.

-

2.

\(\lim _{k \rightarrow \infty }\Vert x^k-x^g\Vert \) exists and the sequence \(\{x^k\}\) is bounded.

-

3.

\(\Vert x^{k+1}-x^k\Vert \rightarrow 0\) and \(\Vert x^k-u^k\Vert \rightarrow 0\) as \(k \rightarrow \infty \).

Proof

By definition of \(D_k\), we have that \(x^k=P_{D_k}(x^g)\) and thus that \(\Vert x^k-x^g\Vert \le \Vert x-x^g\Vert \) for all \(x \in D_k\). Since \(x^{k+1} \in D_k\) and \(C_\infty \subset D_k\), the sequence \(\{\Vert x^k-x^g\Vert \}\) is non-decreasing and \(\Vert x^k-x^g\Vert \le \Vert x^g-P_{C_\infty }(x^{g})\Vert \). Hence \(\lim _{k \rightarrow \infty }\Vert x^k-x^g\Vert \) exists and the sequence \(\{x^k\}\) is bounded. The proof of the third part is similar to the beginning of the proof of Theorem 4.1 in [10]. \(\square \)

Remark 3.2

From the first part of Proposition 3.6, and thanks to Proposition 2.2 with \(\varOmega =C_\infty \), it suffices to prove that any weak limit point of the sequence \(\{x^k\}\) belongs to \(C_\infty \) to obtain the strong convergence of \(\{x^k\}\) to \(P_{C_\infty }(x^g)\).

Proposition 3.7

If the sequence \(\{C_k\}\) converges to \(C_\infty \), then any weak limit point of the sequence \(\{x^k\}\) defined for all k by (3.2) belongs to \(C_\infty \).

Proof

Let x be a weak limit point of \(\{x^k\}\). Then there exists a subsequence \(\{x^{j}\}\) of \(\{x^k\}\) that converges weakly to x. By definition, \(u^j=P_{C_j}(x^j) \in C_j\) for all j. Since \(x^j \rightharpoonup x\), and by Proposition 3.6, \(\Vert u^j-x^j\Vert \rightarrow 0\), it follows that \(u^j \rightharpoonup x\) as \(j \rightarrow \infty \). Consequently, x belongs to \(w-\lim \sup \,C_k\), and thus also to \(C_\infty \) thanks to Remark 3.1. \(\square \)

Under conditions \((T_1)\), \((T_2)\), it is easy to prove that the statement of Proposition 3.4 is still valid when (3.2) is used instead of (3.1). So, taking account of Remark 3.2, we can deduce the next theorem.

Theorem 3.2

Under conditions \((T_1)\), \((T_2)\), the sequence \(\{C_k\}\) converges to \(C_\infty \). Furthermore, the sequence \(\{x^k\}\) defined for all k by (3.2) converges strongly to the projection of \(x^g\) onto \(C_\infty \).

To conclude this section, we can say that under conditions \((T_1)\) and \((T_2)\), the sequence \(\{x^k\}\) defined by (3.1) converges weakly to an element of \(C_\infty \) and that the sequence \(\{x^k\}\) defined for all k by (3.2) converges strongly to \(P_{C_\infty }(x^g) \in C_\infty \).

4 Two convergent algorithms

Our aim in this section is to construct a sequence \(\{C_k\}\) of subsets of C satisfying not only conditions \((T_1)\) and \((T_2)\) but also one of the next properties

-

1.

The sequence \(\{x^k\}\) defined by (3.1), being weakly converging to a point of \(C_\infty \), is also weakly converging to a solution of problem \(\textit{EP}(f,C)\).

-

2.

The sequence \(\{x^k\}\) defined by (3.2), being strongly converging to a point of \(C_\infty \), is also weakly (and thus strongly) converging to a solution of problem \(\textit{EP}(f,C)\).

So to consider both cases, we assume that the sequence \(\{x^k\}\) is weakly convergent and we prove that any weak limit point of this sequence is a solution of problem \(\textit{EP}(f,C)\). From now on, we assume that conditions \((U_1)\) – \((U_3)\) hold.

The strategy developed here is based on the extragradient method and consists in using separating closed half-spaces to define the sequence \(\{C_k\}\). More precisely, our general algorithm will take the following forms depending on the type of convergence (weak or strong) we want to obtain.

Algorithm 1

- Step 0:

-

Let \(x^0 \in C\) and let \(c >0\). Set \(k=0\).

- Step 1:

-

Compute \(y^k = \arg \min _{y \in C} \{ f(x^k,y) + \frac{1}{2}\Vert y-x^k\Vert ^2 \}\). If \(y^k=x^k\), then Stop: \(x^k\) is a solution of problem \(\textit{EP}(f,C)\).

- Step 2:

-

Otherwise find \(\alpha _k \in (0,1)\) and compute \(z^k=(1-\alpha _k) x^k+\alpha _k y^k \) such that

$$\begin{aligned} \varepsilon _k + \alpha _k c\,\Vert x^k-y^k\Vert ^2 \le \langle g^k, x^k-z^k \rangle \end{aligned}$$(4.1)where \( \varepsilon _k \ge 0\) and \(g^k \in \partial _2^{\varepsilon _k} f(z^k,z^k)\). Denote by \(H_k\) the half-space

$$\begin{aligned} H_k = \{x \in {\mathscr {H}}\,|\,\langle g^k,x-z^k \rangle \le \varepsilon _k \}. \end{aligned}$$ - Step 3:

-

Compute \(u^{k} = P_{C_{k}}(x^k)\) where \(C_k\) denotes the closed convex set

$$\begin{aligned} C_k = \cap _{i=0}^{k}\,[\,C \cap H_i\,]. \end{aligned}$$ - Step 4:

-

Set \(x^{k+1}=u^k\).

- Step 5:

-

Set \(k:=k+1\) and go back to Step 1.

To obtain the strong convergence of the sequence \(\{x^k\}\), we replace Step 4 in Algorithm 1 by the following step

Step 4a Compute \(x^{k+1}=P_{B_k \cap D_k \cap C}(x^g)\) where \(x^g=x^0\) and \(B_k\) and \(D_k\) are defined as in (3.3) and (3.4), respectively.

The corresponding algorithm is called Algorithm 2.

In this section we make the following assumption: for all k such that \(x^k \not = y^k\), it is possible to find \(\alpha _k \in (0,1)\) such that the point \(z^k = (1-\alpha _k)x^k + \alpha _ky^k\) satisfies inequality (4.1) where

In particular, note that this assumption implies that \(g^k \not = 0\) when \(x^k \not = y^k\). In next section, we will give four examples of linesearches where inequality (4.1) is satisfied when \(x^k \not = y^k\).

Remark 4.1

When \(S_D\) is nonempty, the subsets \(C_k=\cap _{i=0}^{k}\,[\,C \cap H_i\,]\), \(k \in \mathbb {N}\) satisfy conditions \((T_1)\) and \((T_2)\). Indeed, condition \((T_1)\) is immediately verified. To obtain condition \((T_2)\), we prove that \(S_D \subset \cap _{k=0}^{\infty }C_{k}\equiv C_{\infty }\). Let \(x^* \in S_D\). Then \(x^* \in C\) and

So, for all \(i \in \mathbb {N}\), by definition of \(g^i \in \partial _2^{\varepsilon _i} f(z^i,z^i)\), we can write

and thus \(x^* \in C \cap H_i\). Consequently, \(S_D \subset C_\infty \) and condition \((T_2)\) is satisfied. Furthermore, the sets \(C_k\) being nonempty closed and convex, the projection of \(x^k\) onto \(C_k\) is well-defined at Step 3 of Algorithms 1 and 2. From now on, we assume that \(S_D\) is nonempty.

Remark 4.2

In the particular case when f is pseudo-monotone, we know that \(S_D = S_E \subset C_\infty \). So when Algorithm 1 or Algorithm 2 is used, we can replace \(C_\infty \) by \(S_E\) in the statements of Propositions 3.1, 3.2 and 3.6 and take \(\varOmega = S_E\) in Proposition 2.2. Hence we obtain that the sequence \(\{x^k\}\) generated by Algorithm 1 (Algorithm 2) converges weakly (strongly) to some \(\bar{x} \in S_E\) when each weak limit point of \(\{x^k\}\) belongs to \(S_E\). As we will see it later (see Remark 5.5 and Theorem 5.2), this last property can be proven without using Proposition 3.4 and Theorem 3.2. So, conditions \((T_1)\) and \((T_2)\) are not requested when f is pseudo-monotone.

Remark 4.3

When \({\mathscr {H}}=\mathbb {R}^n\) and \(\varepsilon _k=0\) for all k, the choice \(C_k = \cap _{i=0}^{k}\,[\,C \cap H_i\,]\) has already been considered by Ye and He in [37] in the framework of variational inequalities.

In next proposition, we start by recalling some properties satisfied by the sequence \(\{y^k\}\) defined at Step 1.

Proposition 4.1

[1], Lemma 3.1 (8)] For every \(y \in C\), the two sequences \(\{x^k\}\) and \(\{y^k\}\) generated by Algorithm 1 or Algorithm 2 verify, for all k, the property

In particular, the inequality \(\Vert x^k-y^k\Vert ^2+f(x^k,y^k) \le 0\) is satisfied for all k. Furthermore, if \(y^k=x^k\) for some k, then \(x^k\) is a solution of problem \(\textit{EP}(f,C)\).

From now on, we assume that \(x^k \not = y^k\) for all k and thus that the sequence \(\{x^k\}\) generated by Algorithm 1 or Algorithm 2 is infinite.

First we prove that any weak limit point of the sequence \(\{x^k\}\) generated by Algorithm 1 or Algorithm 2 is a solution of problem \(\textit{EP}(f,C)\).

Theorem 4.1

Let \(\bar{x}\) be a weak limit point of the sequence \(\{x^k\}\) and let \(\{x^{k_j}\}\) be the corresponding subsequence converging weakly to \(\bar{x}\). If the subsequence \(\{\Vert x^{k_j}-y^{k_j}\Vert \}\) converges to 0, then \(\bar{x}\) is a solution of problem \(\textit{EP}(f,C)\).

Proof

Let \(y \in C\). Then, for all j, we have, using Proposition 4.1, that

Taking the limit as \(j \rightarrow \infty \), we have that \(y^{k_j} \rightharpoonup \bar{x} \) and remembering assumption \((U_3)\) and \(\Vert x^{k_j}-y^{k_j}\Vert \rightarrow 0\), we obtain that \(f(\bar{x},y) \ge 0\). So \(\bar{x}\) is a solution of \(\textit{EP}(f,C)\). \(\square \)

Now it remains to prove that \(\{\Vert x^{k_j}-y^{k_j}\Vert \} \rightarrow 0\) when \(x^{k_j} \rightharpoonup \bar{x}\). In that purpose, we need the following result.

Proposition 4.2

Assume that \(C_k \subset C \cap H_k\) for all k. Then the following inequality holds

for all k. Furthermore,

Proof

By construction, for all k, the iterate \(u^{k}\) belongs to \(C_k\) and thus, by assumption, to \(H_k\). So \(\langle g^k, u^{k}-z^k\rangle \le \varepsilon _k\). Consequently, using the linesearch (Step 2), we obtain successively

But this means that

Finally, since \(\Vert u^{k}-x^k\Vert \rightarrow 0\) (see Propositions 3.1 and 3.6), we obtain that

\(\square \)

The next step is to prove that a subsequence of the sequence \(\{g^{k_j}\}\) is bounded when \(x^{k_j} \rightharpoonup \bar{x}\). In that purpose we first prove that the sequences \(\{y^{k_j}\}\) and \(\{z^{k_j}\}\) are bounded.

Proposition 4.3

Assume that \(x^{k_j} \rightharpoonup \bar{x}\). Then the two sequences \(\{y^{k_j}\}\) and \(\{z^{k_j}\}\) are bounded.

Proof

Let j be fixed and let \(s^{k_j} \in \partial _2 f(x^{k_j},x^{k_j})\). First we prove that \(\Vert x^{k_j}-y^{k_j}\Vert \le \Vert s^{k_j}\Vert \). Since \(f(x^{k_j},x^{k_j})=0\), we have, by definition of the subdifferential, that \(f(x^{k_j},y^{k_j})\ge \langle s^{k_j},y^{k_j}-x^{k_j}\rangle \). Hence, using successively Proposition 4.1 and the Cauchy–Schwarz inequality, we obtain

So \(\Vert x^{k_j}-y^{k_j}\Vert \le \Vert s^{k_j}\Vert \). Using Proposition 2.1 (ii) with \(\{v^{k}\}=\{x^{k_j}\}\), \(\{w^{k}\}=\{x^{k_j}\}\) and \(\{\mu ^{k}\}=\{s^{k_j}\}\), and recalling that \(x^{k_j} \rightharpoonup \bar{x}\), we can deduce that the sequence \(\{s^{k_j}\}\) is bounded. Hence, the sequence \(\{x^{k_j}\}\) being bounded, we have immediately that the sequence \(\{y^{k_j}\}\) is also bounded. Finally, the sequence \(\{z^{k_j}\}\) is also bounded because the sequence \(\{x^{k_j}\}\) is bounded and \(z^{k_j}\) belongs to the segment \([x^{k_j};y^{k_j}]\) for all j. \(\square \)

Proposition 4.4

Assume that \(x^{k_j} \rightharpoonup \bar{x}\). If the sequence \(\{g^{k_j}\}\) satisfies one of the following conditions

-

(Cond1) \(\forall j \quad g^{k_j}\in \partial _2^{\varepsilon _{k_j}}\,f(z^{k_j},z^{k_j})\) with \(0 \le \varepsilon _{k_j}\le 1\)

-

(Cond2) \(\forall j \quad g^{k_j}\in \partial _2 f(z^{k_j},x^{k_j})\),

then there is a subsequence of \(\{g^{k_j}\}\) that is bounded.

Proof

The sequences \(\{z^{k_j}\}\) and \(\{x^{k_j}\}\) being bounded (see Proposition 4.3), it follows from Proposition 2.1 (i), that there is a subsequence of \(\{g^{k_j}\}\) that is bounded under conditions (Cond1) or (Cond2). \(\square \)

As a consequence, under conditions (Cond1) or (Cond2), it follows from Propositions 4.2 and 4.4 that a subsequence of \(\{\alpha _{k_j}\Vert x^{k_j} - y^{k_j}\Vert \}\) tends to 0. Without loss of generality, we will assume that

To obtain that any weak limit point of the sequence \(\{x^k\}\) generated by Algorithm 1 or Algorithm 2 is a solution of problem \(\textit{EP}(f,C)\), it remains to prove that \(\Vert x^{k_j}-y^{k_j}\Vert \rightarrow 0\) when the sequence \(\{x^{k_j}\}\) converges weakly to some \(\bar{x}\) (see Theorem 4.1). In that purpose, we have to precise the way the linesearch is performed in Step 2 of Algorithm 1.

5 A class of extragradient methods

Let \(x^k\) and \(y^k\) be the iterates obtained in Step 1 of Algorithm 1 or Algorithm 2. In this section we assume that \(x^k \not = y^k\) and we give several procedures for finding the steplength \(\alpha _k \in (0,1]\) and the vector \(z^k=(1-\alpha _k) x^k+\alpha _k y^k\) satisfying the inequality

where \(\varepsilon _k \ge 0\) and \(g^k \in \partial _2^{\varepsilon _k} f(z^k,z^k)\). This will be done thanks to a linesearch between \(x^k\) and \(y^k\). In the first linesearch, we suppose that \(\varepsilon _k=0\).

Linesearch 1: Given \(\alpha \in (0,1)\) and \(\rho \in (0,1)\), find m the smallest nonnegative integer such that

and set \(\alpha _k=\alpha ^m\), \(z^k=z^{k,m}\), and \(g^k = g^{k,m}\).

This linesearch was used in [10] for solving pseudo-monotone equilibrium problems. It was proven therein that this linesearch is well-defined. Furthermore, as \(x^k-z^k = \alpha _k (x^k-y^k)\), the following proposition holds immediately.

Proposition 5.1

Let \(z^k\) and \(g^k\) be generated by Linesearch 1. Then

with \(c =\rho \).

A second linesearch between \(x^k\) and \(y^k\) can be expressed as follows:

Linesearch 2: Given \(\alpha \in (0,1)\), \(\beta \in [0,\alpha ]\) and \(\rho \in (0,1)\), find m the smallest nonnegative integer such that

and set \(\alpha _k=\alpha ^m\), \(\beta _k=\beta ^m\) and \(z^k=z^{k,m}\). When \(\beta = 0\), this linesearch coincides with the one introduced by Quoc et al. in [26] and by Anh et al. in [2].

Remark 5.1

Linesearch 2 is well-defined when \(x^k \not = y^k\). Indeed suppose, to get a contradiction, that this linesearch is not finite, meaning that for all \(m \in \mathbb {N}\), we have the inequality

Taking the limit as \(m \rightarrow \infty \) and noting that \(f(\cdot ,y^k)\) is weakly continuous, we deduce that

On the other hand, from Proposition 4.1, we have

Since \(\rho \in (0,1)\), we obtain that \(y^k=x^k\), which contradicts the assumption that \(y^k \not = x^k\). Therefore, Linesearch 2 is well-defined.

Linesearch 2 is also a particular achievement of the linesearch considered in Algorithms 1 and 2.

Proposition 5.2

Let \(z^k\) be generated by Linesearch 2. Then for every \(\varepsilon _k \le \beta _k^2\) and \(g^k \in \partial _2^{\varepsilon _k}f(z^k,z^k)\), we have

with \(c = \rho \).

Proof

From the definition of \(z^k\) and \(g^k\), we deduce successively that

Since \(z^k=(1-\alpha _k)x^k+\alpha _k y^k\), we have \(z^k-y^k=(1-\alpha _k)(x^k- y^k)\). Hence it follows from the last inequality that

Multiplying both sides of this inequality by the positive number \(\alpha _k\) gives

Since \(z^k=(1-\alpha _k)x^k+\alpha _k y^k\), the last inequality implies

Hence \(\varepsilon _k + \alpha _k \rho \Vert x^k-y^k\Vert ^2 \le \langle g^k,x^k-z^k\rangle .\) \(\square \)

The next linesearch, denoted Linesearch 3, was used in [26], Algorithm 2a, for solving pseudo-monotone equilibrium problems (see also Linesearch 1 in [31]). This linesearch is well-defined and can be expressed as follows:

Linesearch 3: Given \(\alpha \in (0,1)\) and \(\rho \in (0,1)\), find m the smallest nonnegative integer such that

and set \(\alpha _k=\alpha ^m\) and \(z^k=z^{k,m}\).

For this linesearch, we have the following result.

Proposition 5.3

Let \(z^k\) be generated by Linesearch 3 and let \(g^k \in \partial _2 f(z^k,x^k)\). Then \(g^k \in \partial _2^{\varepsilon _k}f(z^k,z^k)\) with \(\varepsilon _k = \langle g^k,x^k-z^k\rangle - f(z^k,x^k) \ge 0\). Furthermore, we have

with \(c=\rho \).

Proof

From the definition of \(g^k\), we deduce that

for every \(y \in C\). Equivalently,

for every \(y \in C\). Setting

we have \(\varepsilon _k \ge 0\) because, by (5.2),

Then, for every \(y \in C\), we obtain that

Hence \(g^k \in \partial _2^{\varepsilon _k}f(z^k, z^k)\). On the other hand, since \(z^k=(1-\alpha _k)x^k + \alpha _k y^k\) and \(f(z^k,\cdot )\) is convex, we have

which implies, by definition of Linesearch 3, that

Adding \(\varepsilon _k=\langle g^k,x^k-z^k\rangle - f(z^k,x^k) \ge 0\) to both sides of (5.3), we obtain the requested inequality

\(\square \)

Remark 5.2

Let us observe that when Linesearch 3 is incorporated in Algorithm 1, the half-space

with \(\varepsilon _k = \langle g^k,x^k-z^k\rangle -f(z^k,x^k)\) coincides with the half-space

used in [26]. So our Algorithm 1 with Linesearch 3 incorporated in Step 2 and with \(C_k=C \bigcap H_k\) is very close to the extragradient Algorithm 2a proposed in [26] where \(x^{k+1}=P_C\,(P_{H_k}(x^k))\).

The last linesearch, denoted Linesearch 4, was used in [31] for solving pseudo-monotone quasi-equilibrium problems. This linesearch is well-defined and can be expressed as follows:

Linesearch 4: Given \(\alpha \in (0,1)\) and \(\rho \in (0,1)\), find m the smallest nonnegative integer such that

and set \(\alpha _k=\alpha ^m\) and \(z^k=z^{k,m}\).

Let \(z^k\) be generated by Linesearch 4. Since \(f(x^k,y^k) \le - \Vert x^k-y^k\Vert ^2\) holds by Proposition 4.1, we have that

So the next result follows from Proposition 5.3.

Proposition 5.4

Let \(z^k\) be generated by Linesearch 4 and let \(g^k \in \partial _2 f(z^k,x^k)\). Then \(g^k \in \partial _2^{\varepsilon _k}f(z^k,z^k)\) with \(\varepsilon _k = \langle g^k,x^k-z^k\rangle - f(z^k,x^k) \ge 0\). Furthermore, we have

with \(c=1-\rho \).

Remark 5.3

In the particular case of variational inequality problems, Linesearch 4 coincides with the linesearch used in [37]. Consequently, with Linesearch 4, our Algorithm 1 is a generalization to equilibrium problems of Algorithm 2.1 studied in [37] for solving non-monotone variational inequality problems.

To summarize, we can say that inequality (4.1) is satisfied when each of the linesearches considered above is incorporated in Step 2 of Algorithm 1 or Algorithm 2. Now to obtain the convergence of the corresponding algorithms, it remains to prove that in each situation the sequence \(\{\Vert x^{k_j}-y^{k_j}\Vert \}\) tends to zero when the sequence \(\{x^{k_j}\}\) converges weakly to \(\bar{x}\) (see Theorem 4.1). It is the aim of the next proposition.

Proposition 5.5

Let \(\bar{x}\) be a weak limit point of the sequence \(\{x^k\}\) and let \(\{x^{k_j}\}\) be the corresponding subsequence converging weakly to \(\bar{x}\). Then \(\Vert x^{k_j}-y^{k_j}\Vert \rightarrow 0\) as \(j \rightarrow \infty \).

Proof

Since each linesearch satisfies either condition (Cond1) or condition (Cond2), it follows from (4.3) that \(\alpha _{k_j} \Vert x^{k_j}-y^{k_j}\Vert \rightarrow 0\) as \(j \rightarrow \infty \). So to get that \(\Vert x^{k_j}-y^{k_j}\Vert \rightarrow 0\) as \(j \rightarrow \infty \), we consider two cases.

Case 1 \(\inf _j\,\alpha _{k_j} >0\). In that case, it follows directly from (4.3) that \(\Vert x^{k_j}-y^{k_j}\Vert \rightarrow 0\) as \(j \rightarrow \infty \).

Case 2 \(\inf _j\alpha _{k_j} =0\). Then \(\alpha _{k_j}\rightarrow 0\) (for a subsequence). But this implies that \(\alpha _{k_j}<1\) for j large enough and that the linesearch condition in Step 2 of Algorithms 1 and 2 is not satisfied for \(\frac{\alpha _{k_j}}{\alpha }\). Let us consider the following vector

It is immediate that \(\bar{z}^{k_j} \rightharpoonup \bar{x}\).

Now we examine separately the four linesearches.

(a) When Linesearch 1 is used, we have for all j that

where \( \bar{g}^{k_j} \in \partial _2 f(\bar{z}^{k_j}, \bar{z}^{k_j})\). On the other hand, by definition of \(\bar{g}^{k_j}\) and \(\bar{z}^{k_j}\) and by Proposition 4.1, we can write, for all j, the two following inequalities

Using successively (5.4), (5.5) and (5.6), we obtain that for all j

Let \(\bar{y}\) be a weak limit point of the bounded sequence \(\{y^{k_j}\}\). Since the sequence \(\{\Vert x^{k_j}-y^{k_j}\Vert \}\) is bounded, there is one of its subsequences (again denoted \(\{\Vert x^{k_j}-y^{k_j}\Vert \}\)) which is convergent to some \(a \ge 0\). Taking the limit in (5.7), and observing that \(\bar{z}^{k_j} \rightharpoonup \bar{x}\), \(x^{k_j} \rightharpoonup \bar{x}\) and \(\alpha _{k_j} \rightarrow 0\), we obtain, thanks to condition \((U_3)\), that

Hence \( f(\bar{x},\bar{y}) \ge 0\) and \(a=0\). But this means that \(\Vert x^{k_j}-y^{k_j}\Vert \rightarrow 0\) as \(j \rightarrow \infty \). \(\square \)

(b) When Linesearch 2 is used, we have for all j that

From Proposition 4.1 we also have

Adding these two inequalities, we obtain for all j that

Let \(\bar{y}\) be a weak limit point of the bounded sequence \(\{y^{k_j}\}\). Taking the limit in (5.8), and observing that \(\bar{z}^{k_j} \rightharpoonup \bar{x}\), \(x^{k_j} \rightharpoonup \bar{x}\) and \(\beta _{k_j}\rightarrow 0\), we obtain, thanks to condition \((U_3)\), that the left-hand side of the previous inequality tends to

Consequently, we have that \(\Vert x^{k_j}-y^{k_j}\Vert \rightarrow 0\).

(c) When Linesearch 3 is used, we have for all j that

From Proposition 4.1, we also have

Let \(\bar{y}\) be a weak limit point of the bounded sequence \(\{y^{k_j}\}\). Then combining the last two inequalities and taking the limit as \(j \rightarrow \infty \), we obtain

which implies that \(f(\bar{x},\bar{y}) \ge 0\). So

and \(\Vert x^{k_j}-y^{k_j}\Vert ^2 \rightarrow 0 \) when \(j \rightarrow \infty \).

(d) When Linesearch 4 is used, we have for all j that

Let \(\bar{y}\) be a limit point of the bounded sequence \(\{y^{k_j}\}\). Taking the limit as \(j \rightarrow \infty \) in the previous inequality, we obtain directly that the left-hand side tends to 0, and thus that \(\Vert x^{k_j}-y^{k_j}\Vert ^2 \rightarrow 0 \) when \(j \rightarrow \infty \). \(\square \)

Finally, using successively Propositions 5.1–5.4, Theorem 4.1 and Proposition 5.5, we obtain the following convergence results without any pseudo-monotonicity assumption.

Theorem 5.1

Assume that \(S_D\) is nonempty and conditions \((U_1)\) – \((U_3)\) are satisfied. Let \(\{x^k\}\) be a sequence generated by Algorithm 1 with one of the Linesearches 1 to 4 incorporated in Step 2. Then the sequence \(\{x^k\}\) converges weakly to a solution of problem \(\textit{EP}(f,C)\). The convergence of \(\{x^k\}\) is strong when Algorithm 2 is used instead of Algorithm 1.

Remark 5.4

Algorithm 2 allows us to construct a sequence of iterates converging strongly to a solution of the equilibrium problem in the non-pseudo-monotone case. However, this result is only interesting in the framework of infinite dimensional Hilbert spaces. Indeed, contrary to the pseudo-monotone situation, we cannot prove in the non-pseudo-monotone case that the sequence of iterates (strongly) converges to the projection of the starting point onto the solution set of the equilibrium problem.

Remark 5.5

When f is pseudo-monotone, the solution set \(S_E\) of the equilibrium problem is contained in \(C_\infty \). In that case, we have seen in Remark 4.2 that the sequence \(\{x^k\}\) generated by Algorithm 1 (Algorithm 2) converges weakly (strongly) to some \(\bar{x} \in S_E\) when any weak limit point of \(\{x^k\}\) belongs to \(S_E\). Now it is easy to see that this last property is a direct consequence of Theorem 4.1 and Proposition 5.5. So, in that situation, we do not need to use Proposition 3.4 or Theorem 3.2 to obtain the convergence. However, as we must take account of Proposition 4.2, we have to choose \(C_k \subset C \cap H_k\) in Step 3 of Algorithm 1.

Theorem 5.2

Assume that f is pseudo-monotone, \(S_E\) is nonempty and conditions \((U_1)\) – \((U_3)\) are satisfied. Let \(\{x^k\}\) be a sequence generated by Algorithm 1 with one of the Linesearches 1 to 4 incorporated in Step 2. If \(C_k= C \cap H_k\) for all k, then the sequence \(\{x^k\}\) converges weakly to a solution of problem \(\textit{EP}(f,C)\). The convergence of \(\{x^k\}\) is strong when Algorithm 2 is used instead of Algorithm 1.

In \(\mathbb {R}^n\), when f is pseudo-monotone and Linesearch 1 is used, we find again the convergence theorem of an algorithm proposed in [10]. When it is Linesearch 2 that is used, we obtain the convergence of an algorithm proposed in [2].

6 Numerical results

In this section, we consider some numerical examples to compare the efficiency of the methods obtained in Sect. 5. For each of these examples the corresponding equilibrium function is non-monotone. The algorithms are coded in MATLAB 7.11.0 and their behavior has been studied on two test problems with one of large size. Different values of the parameters and different starting points have been used. For each of these test problems, the number of iterations and the CPU time needed to get a solution are reported in a table where for each \(i=1,\dots ,4\), Algorithm Ai corresponds to Algorithm 1 with Linesearch i incorporated in Step 2. Furthermore, for each test, we have chosen \(\alpha =0.5\) and \(\rho = 0.01\) for Linesearches 1–3 and \(\rho = 0.99\) for Linesearch 4. The value of each \(\epsilon _k\) and \(\beta _k\) has been taken equal to zero. Finally, we have also used the stopping criterion \(\Vert x^{k}- y^k\Vert \le \epsilon \) with \(\epsilon =10^{-6}\) for all test problems.

To illustrate the behavior of our algorithms and to show their effectiveness, first we consider an equilibrium problem of dimension \(n=1000\) and afterwards an equilibrium problem arising from Nash–Cournot oligopolistic equilibrium models of electricity markets.

Problem 1

Let \(n=10^3\) and \(C=[-1,1]^n \subset \mathbb {R}^n\). The equilibrium function is defined, for every \(x=(x_1,\dots ,x_n), y=(y_1,\dots ,y_n) \in C\) by

The solution sets of this problem are

Since \(S_D \not = S_E\) and \(S_D \not = \emptyset \), our algorithms can be used for solving this problem.

Let us mention here that the subproblems \(\min _{y \in C} \{ f(x^k,y) + \frac{1}{2}\Vert y-x^k\Vert ^2 \}\) are in fact quadratic convex minimization problems

and that for all k, \(g^k = \nabla _2 f(z^k,z^k)=\Vert z^k\Vert ^2 (1,\dots ,1)^T\).

The four algorithms A1 to A4 have been applied for solving this problem with the values of parameters chosen above. The obtained solution is \((-1,\dots ,-1)\). Furthermore, the number of iterations and the CPU-time (s) are reported in Table 1. From these results, one can say that on this example, the numerical behavior of each algorithm is quite similar.

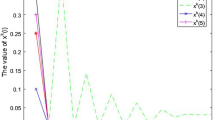

Problem 2

Consider the equilibrium problem recently investigated by Quoc, Anh and Muu in [25] and based on Nash–Cournot oligopolistic equilibrium models of electricity markets. Since, contrary to [8], the cost function f is nonsmooth and convex, the resulting equilibrium problem cannot be transformed into a variational inequality problem. More precisely, the equilibrium function \(f: \mathbb {R}^6 \times \mathbb {R}^6 \rightarrow \mathbb {R}\) is defined for each \(x,y \in \mathbb {R}^6\) by

where A is a nonpositive semidefinite matrix, B is a symmetric positive semidefinite matrix, \(a \in \mathbb {R}^6\) and d is a nonsmooth convex function. The values of A, B, and d(x) can be found in the statement (59) of [25]. Let us observe that for these values the equilibrium function is not monotone because the matrix A is not positive semidefinite. Finally the constraint set of this problem is defined by \(C = \{x \,|\,lb \le x \le ub\}\) where \(lb=(0,\dots ,0)\) and \(ub = (80,80,50,55,30,40)\).

The four algorithms A1 to A4 have been applied for solving this Problem and their numerical behavior has been compared with the one of the extragradient Algorithm (Algorithm 1 in [26]) applied on a monotone reformulation of the equilibrium problem (see Lemma 7 in [25]). The three starting points are

The number of iterations and the obtained CPU-time (s) are reported in Table 2. On this example, Algorithm A2 seems to have the best numerical behavior.

7 Conclusion

In this paper, we have presented and studied a very general class of extragradient methods for solving non-monotone equilibrium problems in a real Hilbert space. The difficulty with these methods is that projections have to be done onto intersections of half-spaces and that the number of these half-spaces increases at each iteration. So to avoid a huge number of constraints in the quadratic subproblems, a strategy would be to aggregate the constraints with the possibility of limiting their number to two. Such a technique, but for solving a similar problem, has been proposed in Sect. 7.4.4 of [28]. The use of such a method adapted to our situation could be the subject of a future research.

References

Anh, P.N.: A hybrid extragradient method extended to fixed point problems and equilibrium problems. Optimization 62, 271–283 (2013)

Anh, P.N., Kim, J.K., Hien, N.D.: A cutting hyperplane method for solving pseudo-monotone non-Lipschitzian equilibrium problems. J. Inequal. Appl. 2012, 288 (2012)

Ahn, P.N., Le Thi, H.A.: An Armijo-type method for pseudo-monotone equilibrium problems and its applications. J. Glob. Optim. 57, 803–820 (2013)

Bauschke, H., Combettes, P.: Convex Analysis Operator Theory in Hilbert Spaces. Springer, New York (2010)

Bigi, G., Castellani, M., Pappalardo, M., Passacantando, M.: Existence and solution methods for equilibria. Eur. J. Oper. Res. 227, 1–11 (2013)

Blum, E., Oettli, W.: From optimization and variational inequalities to equilibrium problems. Math. Stud. 63, 123–145 (1994)

Brønsted, A., Rockafellar, R.T.: On the subdifferentiability of convex functions. Proc. Am. Math. Soc. 16, 605–611 (1965)

Contreras, J., Klusch, M., Krawczyk, J.B.: Numerical solutions to Nash–Cournot equilibria in coupled constraint electricity markets. IEEE Trans. Power Syst. 19(1), 195–206 (2004)

Debrunner, H., Flor, P.: Ein Erweiterungssatz fur monotone Mengen. Arch. Math. 15, 445–447 (1964)

Dinh, B.V., Muu, L.D.: A projection algorithm for solving pseudo-monotone equilibrium problems and its application to a class of bilevel equilibria. Optimization 64, 559–575 (2015)

Fan, K.: A minimax inequality and applications. In: Shisha, O. (ed.) Inequality III, pp. 103–113. Academic Press, New York (1972)

Giannessi, F.: On Minty variational principle. In: Giannessi, F., Komlosi, S., Rapcsak, T. (eds.) New Trends in Mathematical Programming, pp. 93–99. Kluwer Academic Publishers, Dordrecht (1998)

Iusem, A.N., Sosa, W.: New existence results for equilibrium problems. Nonlinear Anal. 52, 621–635 (2003)

Iusem, A.N., Sosa, W.: On the proximal point method for equilibrium problem in Hilbert spaces. Optimization 59, 1259–1274 (2010)

Jahn, J.: Introduction to the Theory of Nonlinear Optimization. Springer, Berlin (2007)

Kinderlehrer, D., Stampacchia, G.: An Introduction to Variational Inequalities and Their Applications. Academic Press, New York (1980)

Konnov, I.V., Dyabilkin, D.A.: Non-monotone equilibrium problems: coercivity conditions and weak regularization. J. Glob. Optim. 49, 575–587 (2011)

Martinez-Yanes, C., Xu, H.K.: Strong convergence of the CQ method for fixed point iteration processes. Nonlinear Anal. 64, 2400–2411 (2006)

Mastroeni, G.: On auxiliary principle for equilibrium problems. In: Daniele, P., Giannessi, F., Maugeri, A. (eds.) Equilibrium Problems and Variational Models, pp. 289–298. Kluwer Academic Publishers, Dordrecht (2003)

Mastroeni, G.: Gap functions for equilibrium problems. J. Glob. Optim. 27, 411–426 (2003)

Minty, G.J.: On the generalization of a direct method of the calculus of variations. Bul. Am. Math. Soc. 73, 315–321 (1967)

Mosco, U.: Convergence of convex sets and of solutions of variational inequalities. Adv. Math. 3, 510–585 (1969)

Moudafi, A.: On the convergence of splitting proximal methods for equilibrium problems in Hilbert spaces. J. Math. Anal. Appl. 359, 508–513 (2009)

Muu, L.D., Quoc, T.D.: Regularization algorithms for solving monotone Ky Fan inequalities with application to a Nash-Cournot equilibrium model. J. Optim. Theory Appl. 142, 185–204 (2009)

Quoc, T.D., Anh, P.N., Muu, L.D.: Dual extragradient algorithms extended to equilibrium problems. J. Glob. Optim. 52, 139–159 (2012)

Quoc, T.D., Muu, L.D., Nguyen, V.H.: Extragradient algorithms extended to equilibrium problems. Optimization 57, 749–776 (2008)

Quoc, T.D., Muu, L.D.: Iterative methods for solving monotone equilibrium problems via dual gap functions. Comput. Optim. Appl. 51, 709–728 (2012)

Ruszczynski, A.: Nonlinear Optimization. Princeton University Press, Princeton (2006)

Santos, P., Scheimberg, S.: An inexact subgradient algorithm for equilibrium problems. Comput. Appl. Math. 30, 91–107 (2011)

Solodov, M.V., Svaiter, B.F.: A new projection method for variational inequality. SIAM J. Control Optim. 37, 765–776 (1999)

Strodiot, J.J., Nguyen, T.T.V., Nguyen, V.H.: A new class of hybrid extragradient algorithms for solving quasi-equilibrium problems. J. Glob. Optim. 56, 373–397 (2013)

Scheimberg, S., Santos, P.S.M.: A relaxed projection method for finite dimensional equilibrium problems. Optimization 60, 1193–1208 (2011)

Van, N.T.T., Strodiot, J.J., Nguyen, V.H.: The interior proximal extragradient method for solving equilibrium problems. J. Glob. Optim. 44, 175–192 (2009)

Van, N.T.T., Strodiot, J.J., Nguyen, V.H.: A bundle method for solving equilibrium problems. Math. Program. 116, 529–552 (2008)

Vuong, P.T., Strodiot, J.J., Nguyen, V.H.: Extragradient methods and linesearch algorithms for solving Ky Fan inequalities and fixed point problems. J. Optim. Theory Appl. 155, 605–627 (2012)

Vuong, P.T., Strodiot, J.J., Nguyen, V.H.: Projected viscosity subgradient methods for variational inequalities with equilibrium problem constraints in Hilbert spaces. J. Glob. Optim. 59, 173–190 (2014)

Ye, M., He, Y.: A double projection method for solving variational inequalities without monotonicity. Comput. Optim. Appl. 60, 141–150 (2015)

Zeng, L.C., Yao, J.Y.: Modified combined relaxation method for general monotone equilibrium problems in Hilbert spaces. J. Optim. Theory Appl. 131, 469–483 (2006)

Acknowledgments

The authors would like to thank the two anonymous referees and the Associate Editor for their valuable comments that allowed to improve substantially the original version of this paper. This research is funded by the Department of Science and Technology at Ho Chi Minh City, Vietnam. Computing resources and support provided by the Institute for Computational Science and Technology at Ho Chi Minh City (ICST) are gratefully acknowledged.

Author information

Authors and Affiliations

Corresponding author

Additional information

Dedicated to Professor Van Hien Nguyen

Rights and permissions

About this article

Cite this article

Strodiot, J.J., Vuong, P.T. & Nguyen, T.T.V. A class of shrinking projection extragradient methods for solving non-monotone equilibrium problems in Hilbert spaces. J Glob Optim 64, 159–178 (2016). https://doi.org/10.1007/s10898-015-0365-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10898-015-0365-5