Abstract

Constraint propagation techniques have heavily utilized interval arithmetic while the application of convex and concave relaxations has been mostly restricted to the domain of global optimization. Here, reverse McCormick propagation, a method to construct and improve McCormick relaxations using a directed acyclic graph representation of the constraints, is proposed. In particular, this allows the interpretation of constraints as implicitly defining set-valued mappings between variables, and allows the construction and improvement of relaxations of these mappings. Reverse McCormick propagation yields potentially tighter enclosures of the solutions of constraint satisfaction problems than reverse interval propagation. Ultimately, the relaxations of the objective of a non-convex program can be improved by incorporating information about the constraints.



1 Introduction

A constraint satisfaction problem (CSP) consists of a finite set of variables, domains and constraints. A solution of a CSP is an assignment of elements of the domains to the variables so that all constraints are satisfied. In general, these problems are NP-hard, and hence it is desirable to compute an enclosure of the solution set. Constraint propagation routines, or, more generally, contractors, are numerical methods that assist in this task. Using the information about the relationship between variables that is contained in a single constraint or in a set of constraints, they attempt to shrink an initial enclosure of the domains. Typically, intervals are used to enclose the solution sets. In this contribution, by contrast, a constraint propagation technique for McCormick relaxations [39, 52] that is applicable to factorable functions is proposed.

1.1 Review of constraint propagation methods

Constraint propagation was first developed for logic constraints on discrete domains [35]. Different notions of consistency, which describe the degree to which the remaining elements of the domain satisfy the constraints, have been introduced for this case [2, 9]. Constraint propagation has also been applied to connected sets that appear in so-called numerical CSPs [8, 13], and a large number of techniques have been proposed in the literature.

Many constraint propagation methods use ideas from interval analysis: they consider interval domains and use interval arithmetic. Cleary [12] and Davis [13] presented the first algorithms for constraint propagation with interval domains. Hyvönen [26] considered cases where exact numbers are insufficient and studied how interval arithmetic can be utilized in CSPs. Lhomme [33] proposed an extension of arc-consistency to numeric CSPs. Benhamou et al. [6] introduced the notion of box-consistency. Sam-Haroud and Faltings [49] approximated feasible regions by \(2^n\)-trees and presented algorithms to label leaves consistently. Benhamou and Older [5] proposed the notion of hull-consistency. Van Hentenryck et al. [59] showed how interval extensions can be used to calculate box-consistent labels (see also [60]). Benhamou et al. [7] proposed an algorithm for hull-consistency that does not require decomposing constraints into primitives. Vu et al. [61] proposed a method to construct inner and outer approximations of the feasible set using unions of intervals. Lebbah et al. [32] discussed how the reformulation-linearization technique can be used to relax nonlinear constraints and to aid in pruning the search space. Granvilliers and Benhamou [19] proposed an algorithm that prunes boxes using both constraint propagation techniques and the interval Newton method. Recently, Domes and Neumaier [15] proposed a constraint propagation method for linear and quadratic constraints, and Jaulin [27] studied set-valued CSPs.

Jaulin et al. [28] discussed contractors based on interval analysis, many of which were also the subject of Neumaier’s book, though that book focused on solving systems of equations in the presence of data uncertainty [43]. Schichl and Neumaier [50] studied directed acyclic graphs (DAGs) to represent functions for interval evaluation. Vu et al. [62] used this representation and extended the contractor proposed in [7], which propagates interval information forward and backward along the DAG. Recently, Stuber et al. [55] extended contractors based on interval analysis to compute convex and concave relaxations of implicit functions. However, their methods require existence and uniqueness of the implicit function on the full domain.

1.2 Connection to global optimization

Continuous optimization problems are often solved to guaranteed global optimality using continuous branch-and-bound algorithms [17, 25]. It is well-known that the efficiency of these algorithms can be improved by discarding parts of the search space that are infeasible or that are known not to contain optimal solutions [56]. These tasks are often referred to as domain reduction. Global optimization is an important application of CSPs [44], and ideas originally developed for CSPs are routinely utilized in global optimization: logic-based methods can enhance and expedite optimization routines [24], and constraint propagation is often used to discard parts of the domain where the solution is known not to exist (e.g., [21, 22, 47]). For example, constraint propagation routines are part of BARON’s pre-processing step [48]. It is also not coincidental that many constraint satisfaction tools use branch-and-prune frameworks inspired by global optimization algorithms to identify a set of boxes that contains all solutions (e.g., [19, 32, 59]). See also the recent discussion of feasibility-based bounds-tightening procedures in [3, 4]. Thus, borrowing ideas from each other has been very beneficial for both fields.

As indicated by the “bound” keyword, branch-and-bound algorithms also require computable bounds on the range of the objective function and non-convex constraints. Interval methods have been used to provide such bounds [42, 43, 45, 56]. However, their slow convergence results in long computational times when the number of variables is large, which led to the development of several nonlinear convex relaxation techniques [1, 39, 41, 52, 56, 57] that are more accurate and have higher convergence order [10]. Methods for domain reduction, and CSPs in general, however, still rely almost exclusively on interval arithmetic.

1.3 Replacing intervals with relaxations in constraint propagation

Schichl and Neumaier [50] demonstrated that factorable functions can be represented alternatively as a DAG and discussed how this representation can be used for calculations in interval analysis. Vu et al. [62] detailed how to propagate interval information on DAGs to improve interval bounds. Their method can utilize the information from equality and inequality constraints. We will refer to this idea as reverse interval propagation. In this paper, the idea is extended to convex and concave relaxations.

The remainder of the paper is organized as follows. In Sect. 2, the proposed method will be described briefly. In Sect. 3, the notion of a factorable function is defined and concepts from interval and McCormick analysis are reviewed. Section 4 recapitulates the important results for reverse interval propagation from [62], which are extended to McCormick objects in Sect. 5. Section 6 discusses how the theoretical results from the previous section can be applied to construct, improve and utilize relaxations in the context of CSPs and global optimization. Section 7 describes how the method can be implemented and some small illustrative examples are given in Sect. 8. The results of applying the method to a set of more complicated global optimization test problems are shown in Sect. 9. Section 10 summarizes the contributions and concludes the paper.

2 Method description

In this section, we summarize the proposed method and describe how it can be applied in the context of solving constraint satisfaction problems and optimization problems globally.

The class of factorable functions encompasses most functions that can be implemented as computer programs without conditional statements. It is well-known that relaxations of factorable functions can be computed using McCormick’s composition rule [39, 52]; the obtained relaxations are often referred to as McCormick relaxations. Here, it is proposed to use the DAG representation of the constraints to also propagate McCormick relaxations backward. For the benefit of the reader, we provide an interpretation of relaxations in the context of constraint propagation. Suppose we partition the variables into \(p\) and \(x\). Whereas reverse interval propagation yields a constant interval bound that all feasible \((x,p)\) must satisfy, reverse McCormick propagation yields bounds that are (convex and concave) functions of \(p\): for a given \(p\) in the domain, every \(x\) such that \((x,p)\) is feasible lies between the values of these relaxations at \(p\). Figure 1 illustrates this interpretation. It shows that a domain (dash-dotted box) can be shrunk by interval constraint propagation to find an outer approximation of the feasible region (dotted box). However, the relaxations (solid and dashed lines) can provide a tighter approximation that is a function of \(p\). For example, consider \(p_1\), for which a thick solid line shows all feasible \(x\). Given \(p_1\), the relaxations restrict \(x\) to the interval marked by the curly brace in Fig. 1, whereas the interval bounds only constrain \(x\) to the larger interval marked by the square bracket. Furthermore, since the bounds are convex and concave functions of \(p\), it is tractable to calculate a reduced interval domain, for example, by using affine relaxations based on the subgradients of the relaxations [41] or by minimizing and maximizing the relaxations of each \(x_i\) on the \(p\) domain.

Fig. 1 Illustration of domain reduction by reverse interval and McCormick propagation. The gray area is the set of all feasible points, the dash-dotted line is the original domain, and the dotted line is the reduced domain obtained by reverse interval propagation. The solid and dashed lines are relaxations of the feasible region that are functions of \(p\).
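To make the interpretation of Fig. 1 concrete, the following sketch uses a hypothetical constraint \(x-p^2=0\) with \(p\in[-1,2]\); this example is not taken from the paper. The tightest constant bound on \(x\) is the range of \(p^2\), whereas the convex relaxation \(p^2\) and the concave secant of \(p^2\) bound \(x\) pointwise in \(p\). The relaxations are written down by hand here under that assumption; reverse McCormick propagation is meant to construct such functions automatically.

```python
from typing import Tuple

# Hypothetical feasible set {(p, x) : x = p**2, p in [pL, pU]}, chosen only to
# illustrate Fig. 1; it is not an example from the paper.

def constant_bound_x(pL: float, pU: float) -> Tuple[float, float]:
    """Tightest constant interval for x: the range of p**2 over [pL, pU]."""
    lo = 0.0 if pL <= 0.0 <= pU else min(pL * pL, pU * pU)
    hi = max(pL * pL, pU * pU)
    return lo, hi

def relaxation_bound_x(p: float, pL: float, pU: float) -> Tuple[float, float]:
    """Bounds on x that depend on p: convex and concave relaxations of p**2."""
    cv = p * p                           # p**2 is convex, hence its own convex envelope
    cc = pL * pL + (pL + pU) * (p - pL)  # secant through the endpoints (concave envelope)
    return cv, cc

p1 = 0.5
print(constant_bound_x(-1.0, 2.0))        # (0.0, 4.0)
print(relaxation_bound_x(p1, -1.0, 2.0))  # (0.25, 2.5)
```

At \(p_1=0.5\) the relaxations confine \(x\) to \([0.25,2.5]\), a strict subset of the constant bound \([0,4]\), which mirrors the curly brace versus the square bracket in Fig. 1.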

When solving global optimization problems, the improved domains for \(x\) and \(p\) can be used as input to the calculation of the relaxations of the objective function. By taking advantage of the information about the feasible region, it is possible to improve the tightness of the objective function relaxations, a very desirable feature in global optimization.

The method can be described as follows. First, a particular partitioning of the variables is selected and initial interval bounds \(({{{\varvec{p}}}},{{{\varvec{x}}}})\) on the variables are specified. For each variable, suitable initial values are then derived from these bounds. Next, bounds and McCormick relaxations are computed for each factor of the function \({F}\). After this forward pass, bounds as well as convex and concave relaxations of the reachable set \(\{{F}(x,p):(x,p)\in {{{\varvec{x}}}}\times {{{\varvec{p}}}}\}\) have been constructed. Now, known restrictions of this reachable set, i.e., equality or inequality constraints, are used to tighten these bounds and relaxations. Lastly, this information is propagated back to the variables \(x\) and \(p\) by “inverting”, in some sense, the operation related to each factor of the function. This yields the relaxations of the feasible space described above.
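The interval part of this forward-backward scheme, in the spirit of the reverse interval propagation reviewed in Sect. 4, can be sketched in a few lines. The sketch assumes a hypothetical constraint \(x\,p+x\in[2,3]\) with initial bounds \(x,p\in[1,4]\) and factors \(v_3=x\,p\), \(v_4=v_3+x\). It omits the McCormick relaxations that the proposed method propagates alongside the bounds, and it performs no outward rounding, so it is illustrative rather than rigorous.

```python
from typing import Optional, Tuple

Interval = Optional[Tuple[float, float]]  # None encodes the empty interval

def meet(a: Interval, b: Interval) -> Interval:
    """Intersection of two intervals (empty if they do not overlap)."""
    if a is None or b is None:
        return None
    lo, hi = max(a[0], b[0]), min(a[1], b[1])
    return (lo, hi) if lo <= hi else None

def add(a: Interval, b: Interval) -> Interval:
    return None if a is None or b is None else (a[0] + b[0], a[1] + b[1])

def sub(a: Interval, b: Interval) -> Interval:
    return None if a is None or b is None else (a[0] - b[1], a[1] - b[0])

def mul(a: Interval, b: Interval) -> Interval:
    if a is None or b is None:
        return None
    c = (a[0] * b[0], a[0] * b[1], a[1] * b[0], a[1] * b[1])
    return (min(c), max(c))

def div(a: Interval, b: Interval) -> Interval:
    """Interval division; this sketch only supports divisors that exclude 0."""
    if a is None or b is None:
        return None
    if b[0] <= 0.0 <= b[1]:
        raise ValueError("divisor contains 0; not handled in this sketch")
    return mul(a, (1.0 / b[1], 1.0 / b[0]))

def propagate(x: Interval, p: Interval, c: Interval) -> Tuple[Interval, Interval]:
    # Forward pass: bounds on each factor.
    v3 = mul(x, p)   # v3 = x * p
    v4 = add(v3, x)  # v4 = v3 + x
    # Constraint: intersect the last factor with its admissible set c.
    v4 = meet(v4, c)
    # Backward pass in reverse factor order, "inverting" each operation.
    v3 = meet(v3, sub(v4, x))  # from v4 = v3 + x
    x = meet(x, sub(v4, v3))   # from v4 = v3 + x
    x = meet(x, div(v3, p))    # from v3 = x * p
    p = meet(p, div(v3, x))    # from v3 = x * p
    return x, p

print(propagate((1.0, 4.0), (1.0, 4.0), (2.0, 3.0)))    # ((1.0, 2.0), (1.0, 2.0))
print(propagate((1.0, 4.0), (1.0, 4.0), (30.0, 40.0)))  # (None, None)
```

Both \(x\) and \(p\) shrink from \([1,4]\) to \([1,2]\), a valid but not necessarily tight outer enclosure; an unattainable right-hand side such as \([30,40]\) empties every interval, which proves that the box contains no feasible point.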

3 Preliminary definitions and results

In this section, factorable functions will be formally defined with the following development in mind. The notation closely follows [51, Chapter 2]. Also, some concepts from interval and McCormick analysis are reviewed. In particular, Sect. 3.3 utilizes many definitions introduced in [51, Chapter 2].

3.1 Factorable functions

Loosely speaking, a function is factorable if it can be represented as a finite sequence of simple binary operations and univariate functions.

Herein, a function will be denoted as a triple \((o,B,R)\) where \(B\) is the domain, \(R\) is the range, and \(o\) is a mapping from \(B\) into \(R\), \(o:B\rightarrow R\). Permissible functions shall include binary addition \((+,{\mathbb {R}}^2,{\mathbb {R}})\) and multiplication \((\times ,{\mathbb {R}}^2,{\mathbb {R}})\) as well as a collection of univariate functions, cf. Definition 1.

Definition 1

Let \({{\fancyscript{L}}}\) denote a set of functions \((u,B,{\mathbb {R}})\) where \(B\subset {\mathbb {R}}\). \({{\fancyscript{L}}}\) will be referred to as a library of univariate functions.

It will be required that, for each \((u,B,{\mathbb {R}})\in {{\fancyscript{L}}}\), \(u(x)\) can be evaluated on a computer for any \(x\in B\). Additional assumptions will be introduced when necessary.

Factorable functions will be defined in terms of computational sequences, which are ordered sequences of the permissible basic operations defined above. Every such sequence of computations defines a sequence of intermediate quantities called factors. In the following definition, the factors are denoted by \(v_k\), and the functions \(\pi _k\) are used to select one or two previous factors to be the operand(s) of the next operation. Note that a computational sequence is a specialization of a DAG because it allows binary and unary operations only.

Definition 2

Let \(n_i,n_o\ge 1\). A \({{\fancyscript{L}}}\)-computational sequence with \(n_i\) inputs and \(n_o\) outputs is a pair \(({{\fancyscript{S}}},\pi _o)\), where:

1. \({{\fancyscript{S}}}\) is a finite sequence of pairs \(\{((o_k,B_k,{\mathbb {R}}),(\pi _k,{\mathbb {R}}^{k-1},{\mathbb {R}}^{d_k}))\}_{k={n_i+1}}^{n_f}\) with every element defined by one of the following options:

   (a) \((o_k,B_k,{\mathbb {R}})\) is either \((+,{\mathbb {R}}^2,{\mathbb {R}})\) or \((\times ,{\mathbb {R}}^2,{\mathbb {R}})\) and \(\pi _k:{\mathbb {R}}^{k-1}\rightarrow {\mathbb {R}}^2\) is defined by \(\pi _k(v)=(v_i,v_j)\) for some integers \(i,j\in \{1,\ldots ,k-1\}\).

   (b) \((o_k,B_k,{\mathbb {R}})\in {{\fancyscript{L}}}\) and \(\pi _k:{\mathbb {R}}^{k-1}\rightarrow {\mathbb {R}}\) is defined by \(\pi _k(v)=v_i\) for some integer \(i\in \{1,\ldots ,k-1\}\).

2. \(\pi _o:{\mathbb {R}}^{n_f}\rightarrow {\mathbb {R}}^{n_o}\) is defined by \(\pi _o(v)=(v_{i(1)},\ldots ,v_{i(n_o)})\) for some integers \(i(1),\ldots ,i(n_o)\in \{1,\ldots ,n_f\}\).

A computational sequence defines a function \({F}_{{\fancyscript{S}}}:D_{{\fancyscript{S}}}\subset {\mathbb {R}}^{n_i} \rightarrow {\mathbb {R}}^{n_o}\) by the following construction.

Definition 3

Let \(({{\fancyscript{S}}},\pi _o)\) be a \({{\fancyscript{L}}}\)-computational sequence with \(n_i\) inputs and \(n_o\) outputs. Define the sequence of factors \(\{(v_k,D_k,{\mathbb {R}})\}_{k=1}^{n_f}\) with \(D_k\subset {\mathbb {R}}^{n_i}\), where

1. for \(k=1,\ldots ,n_i\), \(D_k={\mathbb {R}}^{n_i}\) and \(v_k(x)=x_k\), \(\forall x\in D_k\),

2. for \(k=n_i+1,\ldots ,n_f\), \(D_k=\{x\in D_{k-1}:\pi _k(v_1(x),\ldots ,v_{k-1}(x))\in B_k\}\) and \(v_k(x)=o_k(\pi _k(v_1(x),\ldots ,v_{k-1}(x)))\), \(\forall x\in D_k\).

The set \(D_{{\fancyscript{S}}}\equiv D_{n_f}\) is the natural domain of \(({{\fancyscript{S}}},\pi _o)\), and the natural function \(({F}_{{\fancyscript{S}}},D_{{\fancyscript{S}}},{\mathbb {R}}^{n_o})\) is defined by \({F}_{{\fancyscript{S}}}(x)=\pi _o(v_1(x),\ldots ,v_{n_f}(x))\), \(\forall x\in D_{{\fancyscript{S}}}\).
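Definitions 2 and 3 translate almost directly into code. The sketch below uses a hypothetical encoding in which each element of \({{\fancyscript{S}}}\) is a pair consisting of an operation (`"+"`, `"*"`, or a Python function standing in for a library element of \({{\fancyscript{L}}}\)) and the tuple of 1-based factor indices selected by \(\pi _k\); the output map \(\pi _o\) is a tuple of indices. Membership in the natural domain is delegated to the library functions themselves, which is an assumption of the sketch.

```python
import math
from typing import Callable, Sequence, Tuple, Union

# Hypothetical encoding of a computational sequence: element k is
# (o_k, operand indices selected by pi_k), with 1-based factor indices.
Op = Union[str, Callable[[float], float]]
Element = Tuple[Op, Tuple[int, ...]]

def natural_function(n_i: int, seq: Sequence[Element],
                     out_idx: Sequence[int], x: Sequence[float]) -> Tuple[float, ...]:
    """Evaluate the factors v_1, ..., v_{n_f} at x and apply pi_o."""
    if len(x) != n_i:
        raise ValueError("expected n_i inputs")
    v = list(x)  # v_k(x) = x_k for k = 1, ..., n_i
    for op, idx in seq:
        binary = op in ("+", "*")
        # pi_k must select two (binary op) or one (univariate op) previous factors.
        if len(idx) != (2 if binary else 1) or not all(1 <= i <= len(v) for i in idx):
            raise ValueError("pi_k must select previous factors only")
        args = [v[i - 1] for i in idx]
        if op == "+":
            v.append(args[0] + args[1])
        elif op == "*":
            v.append(args[0] * args[1])
        else:
            # Univariate library function; e.g. math.log raises ValueError
            # outside its domain B_k, i.e., when x is not in D_S.
            v.append(op(args[0]))
    return tuple(v[i - 1] for i in out_idx)  # pi_o

# F(x1, x2) = x1 * x2 + exp(x1): v3 = x1 * x2, v4 = exp(x1), v5 = v3 + v4
seq = [("*", (1, 2)), (math.exp, (1,)), ("+", (3, 4))]
print(natural_function(2, seq, (5,), (1.0, 2.0)))  # a 1-tuple holding 2 + e
```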

Definition 4

A function \({F}:D\subset {\mathbb {R}}^n\rightarrow {\mathbb {R}}^m\) is \({{\fancyscript{L}}}\)-factorable if there exists a \({{\fancyscript{L}}}\)-computational sequence \(({{\fancyscript{S}}},\pi _o)\) with \(n\) inputs and \(m\) outputs such that the natural function \(({F}_{{\fancyscript{S}}},D_{{\fancyscript{S}}},{\mathbb {R}}^m)\) satisfies \(D\subset D_{{\fancyscript{S}}}\) and \({F}={F}_{{\fancyscript{S}}}|_D\).

3.2 Interval analysis

Definition 5

We conform to the standardized interval notation outlined in [30]. For \(a,b\in {\mathbb {R}}\), \(a\le b\) define the interval \([a,b]\) as the compact, connected set \(\{x\in {\mathbb {R}}:a\le x\le b\}\). The set of all nonempty intervals is denoted as \({\mathbb {I\! R}}\), and intervals are denoted by bold face letters, \({{{\varvec{x}}}}\in {\mathbb {I\! R}}\). The set of \(n\)-dimensional boxes (Cartesian products of \(n\) intervals) is denoted by \({\mathbb {I\! R}}^n\). The “interval vector” notation \(({{{\varvec{x}}}}_1, {{{\varvec{x}}}}_2, \ldots , {{{\varvec{x}}}}_n)\) will often be used for \({{{\varvec{x}}}}_1 \times {{{\varvec{x}}}}_2 \times \cdots \times {{{\varvec{x}}}}_n\). Suppose \({{{\varvec{x}}}}\in {\mathbb {I\! R}}^n\). Then, the lower and upper bounds of \({{{\varvec{x}}}}\) are denoted as \(\underline{x}\) and \(\overline{x}\), respectively. Suppose \(Z\subset {\mathbb {R}}^n\). The set of all interval subsets of \(Z\) is denoted by \({\mathbb {I}}\! Z\subset {\mathbb {I\! R}}^n\). Lastly, if \(Z\) is nonempty and bounded, then \(\Box Z\) with \((\Box {Z})_i=[\inf _{z\in Z} z_i,\sup _{z\in Z} z_i]\), \(i=1,\ldots ,n\) denotes the interval hull of \(Z\), the tightest box enclosing \(Z\). Note that \((\cdot )^L\) and \((\cdot )^U\) will be used for more complex expressions to denote the respective lower and upper bound vectors of a box.

We will encounter functions that either return a vector of reals or the symbol \(\mathrm {NaN}\), or “not a number”, which can be thought of as undefined or unspecified. It is convenient to define \({\mathbb {R}}_\emptyset ={\mathbb {R}}\cup \{\mathrm {NaN}\}\). We also define \({}^*\mathbb {R}\! = {\mathbb {R}}\cup \{-\infty ,+\infty \}\) to denote the extended reals. For the purposes of this paper, it is also necessary to extend the definition of an interval to include unbounded intervals and empty intervals, which are commonly excluded in the definition of \({\mathbb {I\! R}}\) (e.g., [30]). Here, \(\emptyset \) is used to denote the empty interval.

Definition 6

Let \({\mathbb {I}}_\emptyset {\mathbb {R}}\equiv {\mathbb {I\! R}}\cup \{\emptyset \}\), and let the set of all interval subsets of \(Z\subset {\mathbb {R}}^n\) including the empty interval be denoted by \({\mathbb {I}}_\emptyset Z\subset {\mathbb {I}}_\emptyset {\mathbb {R}}^n\). Similarly to Definition 5, define the set of all extended intervals as \({}^*\mathbb {I}\! {\mathbb {R}}=\{[a,b]:a \in {\mathbb {R}}\cup \{-\infty \},b \in {\mathbb {R}}\cup \{+\infty \}, a\le b\}\cup \{\emptyset \}\), which includes all unbounded intervals and also the empty interval. Lastly, the set of all extended interval subsets of \(Z\subset {\mathbb {R}}^n\) is denoted by \({}^*\mathbb {I}\! Z\subset {}^*\mathbb {I}\! {\mathbb {R}}^n\).

We will follow the conventions that real-valued operations involving \(\mathrm {NaN}\) result in \(\mathrm {NaN}\), that \([\mathrm {NaN},\mathrm {NaN}]=\emptyset \), that \(\mathrm {NaN}\) is an element of any interval, that every interval \({{{\varvec{x}}}}\in {\mathbb {I}}_\emptyset {\mathbb {R}}\) or \({{{\varvec{x}}}}\in {}^*\mathbb {I}\! {\mathbb {R}}\) contains the empty interval and that any interval operation involving the empty interval will again result in the empty interval with the exception of the construction of the interval hull where \(\Box \{{{{\varvec{x}}}},\emptyset \}={{{\varvec{x}}}}\) for any \({{{\varvec{x}}}}\in {}^*\mathbb {I}\! {\mathbb {R}}^n\). Note that \({{{\varvec{x}}}}=\emptyset \) for \({{{\varvec{x}}}}\in {}^*\mathbb {I}\! {\mathbb {R}}^n\) if \({{{\varvec{x}}}}_i=\emptyset \) for some \(i=1,\ldots ,n\). Otherwise, the operations of interval arithmetic extend naturally. For any \(x\in {\mathbb {R}}\) and \(\circ \in \{+,-,\cdot ,/\}\), define \(x\circ \pm \infty =\lim _{a\rightarrow \pm \infty }x\circ a\).
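A minimal sketch of these conventions follows, assuming that an extended interval is stored as a pair of Python floats (which can hold \(\pm\infty\) and NaN) and that `None` stands for \(\emptyset\); the helper names are hypothetical. Python floats follow IEEE 754, which evaluates \(0\cdot\infty\) to NaN, whereas the limit convention above gives 0, so that case is handled explicitly.

```python
import math
from typing import Optional, Sequence, Tuple

Interval = Optional[Tuple[float, float]]  # (lo, hi) with lo <= hi; None is the empty interval

def make(lo: float, hi: float) -> Interval:
    """Construct [lo, hi]; [NaN, NaN] is the empty interval.
    (Assumption: this sketch treats any NaN endpoint the same way.)"""
    if math.isnan(lo) or math.isnan(hi):
        return None
    if lo > hi:
        raise ValueError("lo must not exceed hi")
    return (lo, hi)

def contains(x: Interval, z: float) -> bool:
    """Membership; by convention NaN is an element of any interval."""
    if math.isnan(z):
        return True
    return x is not None and x[0] <= z <= x[1]

def box_is_empty(box: Sequence[Interval]) -> bool:
    """A box is empty as soon as one of its components is empty."""
    return any(xi is None for xi in box)

def hull(a: Interval, b: Interval) -> Interval:
    """Interval hull; the one exception to 'empty in, empty out'."""
    if a is None:
        return b
    if b is None:
        return a
    return (min(a[0], b[0]), max(a[1], b[1]))

def times_ext(x: float, a: float) -> float:
    """x * a for real x and extended real a, with x * (+/-inf) := lim x * a."""
    if math.isinf(a) and x == 0.0:
        return 0.0  # the limit of 0 * a is 0, whereas IEEE 754 gives NaN
    return x * a

print(hull((1.0, 2.0), None), times_ext(0.0, math.inf))  # (1.0, 2.0) 0.0
```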

As described in detail in Sect. 6.1, one benefit of this definition is the ease with which potential domain violations occurring during an evaluation of the natural function can be handled. If points outside the natural domain are allowed to evaluate to \(\mathrm {NaN}\), which by our convention is an element of any interval, then the all-important inclusion property of interval functions, defined below, can be maintained.

Definition 7

Let \({F}:D\subset {\mathbb {R}}^n\rightarrow {\mathbb {R}}_\emptyset ^m\), and for any \({{{\varvec{x}}}}\subset D\), let \(\mathrm{range}({F},{{{\varvec{x}}}})\) denote the image of the box \({{{\varvec{x}}}}\) under \({F}\). A mapping \({{{\varvec{F}}}}:{{\mathfrak {D}}}\subset {}^*\mathbb {I}\! D\rightarrow {}^*\mathbb {I}\! {\mathbb {R}}^m\) is an inclusion function for \({F}\) on \({{\mathfrak {D}}}\) if \(\mathrm{range}({F},{{{\varvec{x}}}})\subset {{{\varvec{F}}}}({{{\varvec{x}}}})\), \(\forall {{{\varvec{x}}}}\in {{\mathfrak {D}}}\).

Definition 8

Let \(D\subset {\mathbb {R}}^n\). A set \({{\mathfrak {D}}}\subset {}^*\mathbb {I}\! {\mathbb {R}}^n\) is an interval extension of \(D\) if \({{\mathfrak {D}}}\subset {}^*\mathbb {I}\! D\) and every \(x\in D\) satisfies \([x,x]\in {{\mathfrak {D}}}\). Let \({F}:D\rightarrow {\mathbb {R}}_\emptyset ^m\). A function \({{{\varvec{F}}}}:{{\mathfrak {D}}}\subset {}^*\mathbb {I}\! D\rightarrow {}^*\mathbb {I}\! {\mathbb {R}}^m\) is an interval extension of \({F}\) on \(D\) if \({{\mathfrak {D}}}\) is an interval extension of \(D\) and \({{{\varvec{F}}}}([x,x])=[{F}(x),{F}(x)]\) for every \(x\in D\).

Definition 9

Let \({{{\varvec{F}}}}:{{\mathfrak {D}}}\subset {}^*\mathbb {I}\! {\mathbb {R}}^n\rightarrow {}^*\mathbb {I}\! {\mathbb {R}}^m\). \({{{\varvec{F}}}}\) is inclusion monotonic on \({{\mathfrak {D}}}\) if \({{{\varvec{x}}}}_1\subset {{{\varvec{x}}}}_2\) implies that \({{{\varvec{F}}}}({{{\varvec{x}}}}_1)\subset {{{\varvec{F}}}}({{{\varvec{x}}}}_2)\), \(\forall {{{\varvec{x}}}}_1,{{{\varvec{x}}}}_2\in {{\mathfrak {D}}}\).

Theorem 1

Let \({F}:D\subset {\mathbb {R}}^n\rightarrow {\mathbb {R}}_\emptyset ^m\) and let \({{{\varvec{F}}}}:{{\mathfrak {D}}}\rightarrow {}^*\mathbb {I}\! {\mathbb {R}}^m\) be an interval extension of \({F}\). If \({{{\varvec{F}}}}\) is inclusion monotonic on \({{\mathfrak {D}}}\), then \({{{\varvec{F}}}}\) is an inclusion function for \({F}\) on \({{\mathfrak {D}}}\).

Proof

Choose any \({{{\varvec{x}}}}\in {{\mathfrak {D}}}\) and any \(x\in {{{\varvec{x}}}}\). Since \(x\in D\), it follows that \([x,x]\in {{\mathfrak {D}}}\). Since \(\emptyset \in {{{\varvec{F}}}}({{{\varvec{x}}}})\) is always true, if \(f_i(x)=\mathrm {NaN}\) for some \(i\in \{1,\ldots ,m\}\), then \({F}(x)\in {{{\varvec{F}}}}({{{\varvec{x}}}})\). Otherwise, the result follows from [51, Theorem 2.3.4]. \(\square \)

Define the typical inclusion functions for addition and multiplication: let the functions \((+,{\mathbb {I}}_\emptyset {\mathbb {R}}^2,{\mathbb {I}}_\emptyset {\mathbb {R}})\) and \((\times ,{\mathbb {I}}_\emptyset {\mathbb {R}}^2,{\mathbb {I}}_\emptyset {\mathbb {R}})\) be defined by \(+({{{\varvec{x}}}},{{{\varvec{y}}}})\equiv [\underline{x}+\underline{y},\overline{x}+\overline{y}]\) and \(\times ({{{\varvec{x}}}},{{{\varvec{y}}}})\equiv [\min (\underline{x}\underline{y}, \underline{x}\overline{y},\overline{x}\underline{y},\overline{x}\,\overline{y}), \max (\underline{x}\underline{y},\underline{x}\overline{y},\overline{x}\underline{y},\overline{x}\,\overline{y})]\). Recall our convention that any operation involving the empty interval results in an empty interval, i.e., \(+({{{\varvec{x}}}},\emptyset )=+(\emptyset ,{{{\varvec{x}}}})=\emptyset \) and \(\times ({{{\varvec{x}}}},\emptyset )=\times (\emptyset ,{{{\varvec{x}}}})=\emptyset \) for any \({{{\varvec{x}}}}\in {\mathbb {I}}_\emptyset {\mathbb {R}}\).
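The two inclusion functions, together with the empty-interval convention, can be sketched as follows. `None` is an assumed encoding of \(\emptyset\), and no outward rounding is performed; a rigorous implementation would additionally round lower bounds down and upper bounds up.

```python
from typing import Optional, Tuple

Interval = Optional[Tuple[float, float]]  # None encodes the empty interval

def i_add(x: Interval, y: Interval) -> Interval:
    """+(x, y) = [x_lo + y_lo, x_hi + y_hi]; empty if either operand is empty."""
    if x is None or y is None:
        return None
    return (x[0] + y[0], x[1] + y[1])

def i_mul(x: Interval, y: Interval) -> Interval:
    """times(x, y) = [min, max] of the four endpoint products; empty if either operand is empty."""
    if x is None or y is None:
        return None
    prods = (x[0] * y[0], x[0] * y[1], x[1] * y[0], x[1] * y[1])
    return (min(prods), max(prods))

print(i_add((-1.0, 2.0), (3.0, 4.0)))  # (2.0, 6.0)
print(i_mul((-1.0, 2.0), (3.0, 4.0)))  # (-4.0, 8.0)
print(i_mul((-1.0, 2.0), None))        # None
```

For a single operation on independent intervals the endpoints are attained, so every product \(ab\) with \(a\in[-1,2]\) and \(b\in[3,4]\) lies in \([-4,8]\) and the enclosure is exact.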

Assumption 1

Assume that for every \((u,B,{\mathbb {R}})\in {{\fancyscript{L}}}\), an interval extension \((u,{\mathbb {I}}_\emptyset B,{\mathbb {I}}_\emptyset {\mathbb {R}})\) is known. Furthermore, this interval extension is inclusion monotonic on \({\mathbb {I}}_\emptyset B\).

Suppose that Assumption 1 holds and that \(({{\fancyscript{S}}},\pi _o)\) is a \({{\fancyscript{L}}}\)-computational sequence. Then, to any element \((o_k,\pi _k)\) of \({{\fancyscript{S}}}\) a corresponding \((o_k,{\mathbb {I}}_\emptyset B_k,{\mathbb {I}}_\emptyset {\mathbb {R}})\) exists. Also, the functions \((\pi _k,{\mathbb {I}}_\emptyset {\mathbb {R}}^{k-1},{\mathbb {I}}_\emptyset {\mathbb {R}})\) or \((\pi _k,{\mathbb {I}}_\emptyset {\mathbb {R}}^{k-1},{\mathbb {I}}_\emptyset {\mathbb {R}}^2)\) with \(\pi _k({{{\varvec{v}}}})=({{{\varvec{v}}}}_i)\) or \(\pi _k({{{\varvec{v}}}})=({{{\varvec{v}}}}_i,{{{\varvec{v}}}}_j)\) extend \((\pi _k,{\mathbb {R}}^{k-1},{\mathbb {R}})\) or \((\pi _k,{\mathbb {R}}^{k-1},{\mathbb {R}}^2)\) naturally. Note that we do not distinguish notationally between the real and interval versions of the elementary operations and functions \(u, \pi _k,\) and \(o_k\). Rather, they are assumed to always act on the class of the object in their argument(s).

Definition 10

For every \({{\fancyscript{L}}}\)-computational sequence \(({{\fancyscript{S}}},\pi _o)\) with \(n_i\) inputs and \(n_o\) outputs, define the sequence of inclusion factors \(\{({{{\varvec{v}}}}_k,{{\mathfrak {D}}}_k,{\mathbb {I}}_\emptyset {\mathbb {R}})\}_{k=1}^{n_f}\) where

1. for all \(k=1,\ldots ,n_i\), \({{\mathfrak {D}}}_k={\mathbb {I}}_\emptyset {\mathbb {R}}^{n_i}\) and \({{{\varvec{v}}}}_k({{{\varvec{x}}}})={{{\varvec{x}}}}_k\), \(\forall {{{\varvec{x}}}}\in {{\mathfrak {D}}}_k\),

2. for all \(k=n_i+1,\ldots ,n_f\), \({{\mathfrak {D}}}_k=\{{{{\varvec{x}}}}\in {{\mathfrak {D}}}_{k-1}:\pi _k({{{\varvec{v}}}}_1({{{\varvec{x}}}}), \ldots ,{{{\varvec{v}}}}_{k-1}({{{\varvec{x}}}}))\in {\mathbb {I}}_\emptyset B_k\}\) and \({{{\varvec{v}}}}_k({{{\varvec{x}}}})=o_k(\pi _k({{{\varvec{v}}}}_1({{{\varvec{x}}}}),\ldots ,{{{\varvec{v}}}}_{k-1}({{{\varvec{x}}}})))\), \(\forall {{{\varvec{x}}}}\in {\mathfrak {D}}_k\).

The natural interval extension of \(({{\fancyscript{S}}},\pi _o)\) is the function \(({{{\varvec{F}}}}_{{\fancyscript{S}}},{{\mathfrak {D}}}_{{\fancyscript{S}}},{\mathbb {I}}_\emptyset {\mathbb {R}}^{n_o})\) defined by \({{\mathfrak {D}}}_{{\fancyscript{S}}}\equiv {{\mathfrak {D}}}_{n_f}\) and \({{{\varvec{F}}}}_{{\fancyscript{S}}}({{{\varvec{x}}}})=\pi _o({{{\varvec{v}}}}_1({{{\varvec{x}}}}),\ldots ,{{{\varvec{v}}}}_{n_f}({{{\varvec{x}}}}))\), \(\forall {{{\varvec{x}}}}\in {{\mathfrak {D}}}_{{\fancyscript{S}}}\).
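The inclusion factors of Definition 10 are obtained by running a computational sequence on intervals instead of reals. The sketch below encodes a sequence as a list of (operation, operand indices) pairs, restates interval addition and multiplication so that it stays self-contained, and assumes a small hypothetical library `LIBRARY` in which each univariate function comes with an inclusion monotonic interval extension and a test of whether an interval lies in its domain \(B_k\). A box outside \({{\mathfrak {D}}}_{{\fancyscript{S}}}\) is reported by raising an exception, which is a design choice of the sketch.

```python
import math
from typing import Callable, Dict, Optional, Sequence, Tuple

Interval = Optional[Tuple[float, float]]  # None encodes the empty interval
Bounds = Tuple[float, float]

# Hypothetical univariate library: name -> (monotone interval extension,
# test whether a nonempty interval lies in the natural domain B of the function).
LIBRARY: Dict[str, Tuple[Callable[[float, float], Bounds], Callable[[float, float], bool]]] = {
    "exp": (lambda lo, hi: (math.exp(lo), math.exp(hi)), lambda lo, hi: True),
    "log": (lambda lo, hi: (math.log(lo), math.log(hi)), lambda lo, hi: lo > 0.0),
}

def natural_interval_extension(seq: Sequence[Tuple[str, Tuple[int, ...]]],
                               out_idx: Sequence[int],
                               box: Sequence[Interval]) -> Tuple[Interval, ...]:
    """Evaluate the inclusion factors v_1, ..., v_{n_f} on a box and apply pi_o."""
    v = list(box)  # v_k(x) = x_k for the n_i inputs
    for op, idx in seq:
        args = [v[i - 1] for i in idx]
        if any(a is None for a in args):
            v.append(None)  # any operation on the empty interval is empty
        elif op == "+":
            (a, b), (c, d) = args
            v.append((a + c, b + d))
        elif op == "*":
            (a, b), (c, d) = args
            prods = (a * c, a * d, b * c, b * d)
            v.append((min(prods), max(prods)))
        else:
            ext, in_domain = LIBRARY[op]
            lo, hi = args[0]
            if not in_domain(lo, hi):
                raise ValueError(f"box is outside the domain of the extension ({op})")
            v.append(ext(lo, hi))
    return tuple(v[i - 1] for i in out_idx)  # pi_o

# F(x1, x2) = x1 * x2 + exp(x1) on the box [0, 1] x [-1, 2]
seq = [("*", (1, 2)), ("exp", (1,)), ("+", (3, 4))]
print(natural_interval_extension(seq, (5,), [(0.0, 1.0), (-1.0, 2.0)]))  # upper bound is 2 + e
```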

Theorem 2

Let \(({{\fancyscript{S}}},\pi _o)\) be a \({{\fancyscript{L}}}\)-computational sequence with associated natural function \(({F}_{{\fancyscript{S}}},D_{{\fancyscript{S}}},{\mathbb {R}}^{n_o})\). The natural interval extension \(({{{\varvec{F}}}}_{{\fancyscript{S}}},{{\mathfrak {D}}}_{{\fancyscript{S}}},{\mathbb {I}}_\emptyset {\mathbb {R}}^{n_o})\) is an inclusion monotonic interval extension of \(({F}_{{\fancyscript{S}}},D_{{\fancyscript{S}}},{\mathbb {R}}^{n_o})\) on \({{\mathfrak {D}}}_{{\fancyscript{S}}}\) and an inclusion function for \({F}_{{\fancyscript{S}}}\) on \({{\mathfrak {D}}}_{{\fancyscript{S}}}\). In particular, each inclusion factor \({{{\varvec{v}}}}_k\) of \(({{\fancyscript{S}}},\pi _o)\) is an inclusion monotonic interval extension of \(v_k\) on \({{\mathfrak {D}}}_{{\fancyscript{S}}}\) for all \(k=1,\ldots ,n_f\).

Proof

See [51, Theorem 2.3.11] together with Theorem 1. \(\square \)

3.3 McCormick analysis

Let \(D\subset {\mathbb {R}}^n\) be convex. A vector-valued function \({G}:D\rightarrow {\mathbb {R}}^m\) is convex on \(D\) if each component is convex on \(D\). Similarly, it is concave on \(D\) if each component is concave on \(D\). For any set \(A\), let \({\mathbb {P}}(A)\) denote the power set, or set of all subsets, including the empty set, of \(A\).

Definition 11

Let \(D\subset {\mathbb {R}}^n\) be a convex set and \({F}:D \rightarrow {\mathbb {P}}({\mathbb {R}}^m)\). A function \(\check{{F}}:D\rightarrow {\mathbb {R}}^m\) is a convex relaxation, or convex underestimator, of \({F}\) on \(D\) if \(\check{{F}}\) is convex on \(D\) and \(\check{f}_i(x)\le \inf \{f_i(x)\}\), \(\forall x\in D\) and \(i=1,\ldots ,m\). A convex relaxation \({G}:D\rightarrow {\mathbb {R}}^m\) is called the convex envelope of \({F}\) on \(D\) if \(g_i(x)\ge \check{f}_i(x)\) for all convex relaxations \(\check{{F}}\) of \({F}\), \(\forall x\in D\) and \(i=1,\ldots ,m\). A function \(\hat{{F}}:D\rightarrow {\mathbb {R}}^m\) is a concave relaxation, or concave overestimator, of \({F}\) on \(D\) if \(\hat{{F}}\) is concave on \(D\) and \(\hat{f}_i(x)\ge \sup \{f_i(x)\}\), \(\forall x\in D\) and \(i=1,\ldots ,m\). A concave relaxation \({G}:D\rightarrow {\mathbb {R}}^m\) is called the concave envelope of \({F}\) on \(D\) if \(g_i(x)\le \hat{f}_i(x)\) for all concave relaxations \(\hat{{F}}\) of \({F}\), \(\forall x\in D\) and \(i=1,\ldots ,m\).
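For a scalar, single-valued function, the conditions of Definition 11 can be checked numerically, though not proven, on samples. The hypothetical helper below tests the bounding inequalities and midpoint convexity of the underestimator and midpoint concavity of the overestimator. For \(f=\exp\) on \([0,1]\), \(\exp\) itself and the secant \(1+(e-1)x\) are the convex and concave envelopes, respectively.

```python
import math
from typing import Callable, List

Fn = Callable[[float], float]

def grid(lo: float, hi: float, n: int = 101) -> List[float]:
    return [lo + (hi - lo) * i / (n - 1) for i in range(n)]

def looks_like_relaxation(f: Fn, cv: Fn, cc: Fn, lo: float, hi: float,
                          tol: float = 1e-12) -> bool:
    """Sampled, non-rigorous check of Definition 11 for scalar f on [lo, hi]."""
    xs = grid(lo, hi)
    # Bounding property: cv(x) <= f(x) <= cc(x) at every sample point.
    bounds_ok = all(cv(x) - tol <= f(x) <= cc(x) + tol for x in xs)
    # Midpoint convexity of cv and midpoint concavity of cc over all sample pairs.
    shape_ok = all(
        cv(0.5 * (a + b)) <= 0.5 * (cv(a) + cv(b)) + tol
        and cc(0.5 * (a + b)) >= 0.5 * (cc(a) + cc(b)) - tol
        for a in xs for b in xs
    )
    return bounds_ok and shape_ok

def secant(t: float) -> float:
    """Concave envelope of exp on [0, 1]."""
    return 1.0 + (math.e - 1.0) * t

print(looks_like_relaxation(math.exp, math.exp, secant, 0.0, 1.0))                   # True
print(looks_like_relaxation(math.exp, math.exp, lambda t: 1.0 + 1.5 * t, 0.0, 1.0))  # False
```

The second call fails because \(1+1.5x\) drops below \(e^x\) near \(x=1\); a constant \(1\) would also pass as a (non-envelope) convex relaxation, since it is convex and below \(\exp\) on \([0,1]\).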

Remark 1

Definition 11 allows that \(f_i(x)=\mathrm {NaN}\) for some \(i\in \{1,\ldots ,m\}\) and \(x\in D\). In this case, the inequality defining a relaxation will hold for any function. However, the convexity and concavity requirements must still be met by \(\check{{F}}\) and \(\hat{{F}}\), respectively, and these requirements constrain the set of functions that satisfy the definition, as exemplified in Fig. 7.

The following notation was introduced in [51]. While it differs from the previously used notation for McCormick relaxations, it is more compact and very useful for the proposed operations on computational sequences, and it also makes the relationship with interval arithmetic more apparent. In the latter, information is passed from one operation in the sequence of factors to the next in the form of intervals. McCormick’s procedure to construct relaxations [39], on the other hand, requires a box \({{{\varvec{x}}}}\) and a point \(x\in {{{\varvec{x}}}}\) as input and returns three values: a box \({{{\varvec{v}}}}_k({{{\varvec{x}}}})\), which encloses the image of \({{{\varvec{x}}}}\) under \(v_k\), and two additional values \(\check{v}_k({{{\varvec{x}}}},x)\) and \(\hat{v}_k({{{\varvec{x}}}},x)\), which represent the values of the convex and concave relaxations of \(v_k\) on \({{{\varvec{x}}}}\) evaluated at \(x\). After a recent generalization, one can also consider mappings that take a box and two relaxation values as input and return a box and two relaxation values; these are called generalized McCormick relaxations [52]. One advantage of this generalization is that it yields mappings with conformable inputs and outputs, which are hence composable.
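As a concrete instance of a procedure that maps a box and a point to a box and two relaxation values, the sketch below evaluates McCormick's classical envelopes of a bilinear factor \(v=x_1x_2\) whose operands are the input variables themselves. This is a simplifying assumption: when the operands are themselves factors with nontrivial relaxations, the (generalized) McCormick multiplication rule [39, 52] is more involved and is not reproduced here.

```python
from typing import Tuple

Box = Tuple[float, float]

def mccormick_product(xb: Box, yb: Box, x: float, y: float) -> Tuple[Box, float, float]:
    """McCormick envelopes of w = x * y on the box xb x yb, evaluated at (x, y).

    Returns the interval bound of w on the box and the values of the convex
    and concave relaxations at the point (x, y).
    """
    (xL, xU), (yL, yU) = xb, yb
    if not (xL <= x <= xU and yL <= y <= yU):
        raise ValueError("the point must lie in the box")
    prods = (xL * yL, xL * yU, xU * yL, xU * yU)
    box = (min(prods), max(prods))
    # Convex relaxation: pointwise maximum of the two affine underestimators.
    cv = max(xL * y + x * yL - xL * yL, xU * y + x * yU - xU * yU)
    # Concave relaxation: pointwise minimum of the two affine overestimators.
    cc = min(xU * y + x * yL - xU * yL, xL * y + x * yU - xL * yU)
    return box, cv, cc

print(mccormick_product((0.0, 2.0), (0.0, 2.0), 1.0, 1.0))  # ((0.0, 4.0), 0.0, 2.0)
```

At the centre of \([0,2]^2\) the relaxations bracket the true value \(1\) by \([0,2]\); at a vertex such as \((2,2)\) both relaxations coincide with the product.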

Definition 12

Let \({\mathbb {M\! R}}^n\equiv \{({{{\varvec{z}}}}^B,{{{\varvec{z}}}}^C)\in {\mathbb {I\! R}}^n\times {\mathbb {I\! R}}^n:{{{\varvec{z}}}}^B\cap {{{\varvec{z}}}}^C\ne \emptyset \}\). Elements of \({\mathbb {M\! R}}^n\) are denoted by script capitals, \({{\fancyscript{Z}}}\in {\mathbb {M\! R}}^n\). For any \({{\fancyscript{Z}}}\in {\mathbb {M\! R}}^n\), the notations \({{{\varvec{z}}}}^{B},{{{\varvec{z}}}}^{C}\in {\mathbb {I\! R}}^n\) and \(z^{cv},z^{cc}\in {\mathbb {R}}^n\) will be commonly used to denote the boxes and vectors satisfying \({{\fancyscript{Z}}}=({{{\varvec{z}}}}^B,{{{\varvec{z}}}}^C)=({{{\varvec{z}}}}^B,[z^{cv},z^{cc}])\). For any \(D\subset {\mathbb {R}}^n\), let \({\mathbb {M}}\! D\) denote the set \(\{{{\fancyscript{Z}}}\in {\mathbb {M\! R}}^n:{{{\varvec{z}}}}^B\subset D\}\). Note that for more complex expressions, \((\cdot )^{C}\) will be used to denote the relaxation box, and \((\cdot )^{cv}\) and \((\cdot )^{cc}\) will be used to denote the convex and concave relaxation vectors, respectively, of a McCormick object.

In this paper, it is also necessary to consider unbounded and empty McCormick objects. Analogous to Definition 6, define the sets \({\mathbb {M}}_\emptyset {\mathbb {R}}^n\equiv \{({{{\varvec{z}}}}^B,{{{\varvec{z}}}}^C)\in {\mathbb {I}}_\emptyset {\mathbb {R}}^n\times {\mathbb {I}}_\emptyset {\mathbb {R}}^n:{{{\varvec{z}}}}^B\cap {{{\varvec{z}}}}^C\ne \emptyset \vee {{{\varvec{z}}}}^C=\emptyset \}\) and \({}^*{\mathbb {M}}\! {\mathbb {R}}^n\equiv \{({{{\varvec{z}}}}^B,{{{\varvec{z}}}}^C)\in {}^*\mathbb {I}\! {\mathbb {R}}^n\times {}^*\mathbb {I}\! {\mathbb {R}}^n:{{{\varvec{z}}}}^B\cap {{{\varvec{z}}}}^C\ne \emptyset \vee {{{\varvec{z}}}}^C=\emptyset \}\), which are extensions of \({\mathbb {M\! R}}^n\). Also, define \({\mathbb {M}}_\emptyset D\) and \({}^*{\mathbb {M}}\! D\) for any \(D\subset {\mathbb {R}}^n\) analogously to \({\mathbb {I}}_\emptyset D\) and \({}^*\mathbb {I}\! D\). Introduce \({{\mathrm{Enc}}}:{}^*{\mathbb {M}}\! {\mathbb {R}}^n\rightarrow {}^*\mathbb {I}\! {\mathbb {R}}^n\) defined by \({{\mathrm{Enc}}}({{\fancyscript{Z}}})={{{\varvec{z}}}}^B\cap {{{\varvec{z}}}}^C\) for all \({{\fancyscript{Z}}}\in {}^*{\mathbb {M}}\! {\mathbb {R}}^n\). This function is needed because, for \(z\in {\mathbb {R}}_\emptyset ^n\), the statement \(z\in {{\fancyscript{Z}}}\) is not well-defined, whereas \(z\in {{\mathrm{Enc}}}({{\fancyscript{Z}}})\) is.
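To make Definition 12 and the map \({{\mathrm{Enc}}}\) concrete, the following minimal Python sketch represents a scalar (\(n=1\)) McCormick object as a pair of intervals. It assumes that intervals are stored as `(lo, hi)` tuples and that `None` encodes the empty interval; the class `MC` and the helpers `intersect`, `is_valid` and `enc` are hypothetical names chosen for illustration, not an API from [51].

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical encoding: an interval is (lo, hi); None is the empty interval.
Interval = Optional[Tuple[float, float]]


def intersect(a: Interval, b: Interval) -> Interval:
    """Intersection of two closed intervals, or None if it is empty."""
    if a is None or b is None:
        return None
    lo, hi = max(a[0], b[0]), min(a[1], b[1])
    return (lo, hi) if lo <= hi else None


@dataclass(frozen=True)
class MC:
    """Scalar McCormick object Z = (z^B, z^C) with z^C = [z^cv, z^cc]."""

    box: Interval    # z^B
    relax: Interval  # z^C

    @property
    def cv(self) -> float:
        """Convex relaxation value z^cv (requires a nonempty z^C)."""
        return self.relax[0]

    @property
    def cc(self) -> float:
        """Concave relaxation value z^cc (requires a nonempty z^C)."""
        return self.relax[1]

    def is_valid(self) -> bool:
        """Membership test for M_empty R: z^B meets z^C, or z^C is empty."""
        return self.relax is None or intersect(self.box, self.relax) is not None

    def enc(self) -> Interval:
        """Enc(Z) = z^B intersected with z^C."""
        return intersect(self.box, self.relax)
```

For example, `MC((0.0, 4.0), (1.0, 5.0)).enc()` returns `(1.0, 4.0)`: the relaxation values may extend beyond the box, and \({{\mathrm{Enc}}}\) keeps only the part consistent with both.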

Next, we formalize McCormick’s technique by defining operations on \({\mathbb {M}}_\emptyset {\mathbb {R}}^n\). We introduce the concept of a relaxation function, which is analogous to the notion of an inclusion function in interval analysis, and is the fundamental object that we want to compute for a given real-valued function. Then, we show how relaxation functions can be obtained through a simpler construction, just as inclusion functions can be constructed from inclusion monotonic interval extensions. First, however, some preliminary concepts are necessary.

Definition 13

Let \({{\fancyscript{X}}},{{\fancyscript{Y}}}\in {}^*{\mathbb {M}}\! {\mathbb {R}}^n\). \({{\fancyscript{X}}}\) and \({{\fancyscript{Y}}}\) are coherent if \({{{\varvec{x}}}}^B={{{\varvec{y}}}}^B\). A set \({{\fancyscript{D}}}\subset {}^*{\mathbb {M}}\! {\mathbb {R}}^n\) is coherent if every pair of elements is coherent. A set \({{\fancyscript{D}}}\subset {}^*{\mathbb {M}}\! {\mathbb {R}}^n\) is closed under coherence if, for any coherent \({{\fancyscript{X}}},{{\fancyscript{Y}}}\in {}^*{\mathbb {M}}\! {\mathbb {R}}^n\), \({{\fancyscript{X}}}\in {\fancyscript{D}}\) implies \({{\fancyscript{Y}}}\in {{\fancyscript{D}}}\).

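For any coherent \({{\fancyscript{X}}}_1,{{\fancyscript{X}}}_2\in {}^*{\mathbb {M}}\! {\mathbb {R}}^n\) with a common box part \({{{\varvec{q}}}}\) and for all \(\lambda \in [0,1]\), define

\[\text {Conv}(\lambda ,{{\fancyscript{X}}}_1,{{\fancyscript{X}}}_2)\equiv ({{{\varvec{q}}}},\lambda {{{\varvec{x}}}}^C_1+(1-\lambda ){{{\varvec{x}}}}^C_2),\]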

where the rules of interval arithmetic are used to evaluate \(\lambda {{{\varvec{x}}}}^C_1+(1-\lambda ){{{\varvec{x}}}}^C_2\). For any \({{\fancyscript{X}}}_1,{{\fancyscript{X}}}_2\in {}^*{\mathbb {M}}\! {\mathbb {R}}^n\), the inclusion \({{\fancyscript{X}}}_1\subset {{\fancyscript{X}}}_2\) holds iff \({{{\varvec{x}}}}_1^B\subset {{{\varvec{x}}}}_2^B\) and \({{{\varvec{x}}}}_1^C\subset {{{\varvec{x}}}}_2^C\). Likewise, \({{\fancyscript{X}}}_1\supset {{\fancyscript{X}}}_2\) iff \({{\fancyscript{X}}}_2\subset {{\fancyscript{X}}}_1\). Also, define \({{\fancyscript{X}}}_1\cap {{\fancyscript{X}}}_2\equiv ({{{\varvec{x}}}}_1^B\cap {{{\varvec{x}}}}_2^B,{{{\varvec{x}}}}_1^C\cap {{{\varvec{x}}}}_2^C)\).
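The following sketch illustrates \(\text {Conv}\) and the inclusion order for scalar McCormick objects. It assumes, as stated above, that \(\text {Conv}(\lambda ,{{\fancyscript{X}}}_1,{{\fancyscript{X}}}_2)\) keeps the common box part \({{{\varvec{q}}}}\) and combines the relaxation parts by interval arithmetic; the tuple encoding and the function names `conv`, `subset` and `mc_subset` are hypothetical.

```python
from typing import Optional, Tuple

Interval = Optional[Tuple[float, float]]  # None encodes the empty interval
MCObj = Tuple[Interval, Interval]         # (box part x^B, relaxation part x^C)


def conv(lam: float, X1: MCObj, X2: MCObj) -> MCObj:
    """Conv(lam, X1, X2) = (q, lam*x1^C + (1 - lam)*x2^C) for coherent X1, X2."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    q, c1 = X1
    q2, c2 = X2
    if q != q2:
        raise ValueError("X1 and X2 must be coherent (equal box parts)")
    if c1 is None or c2 is None:
        return (q, None)  # interval arithmetic with an empty operand is empty
    # lam >= 0 and 1 - lam >= 0, so scaling preserves the endpoint order.
    return (q, (lam * c1[0] + (1 - lam) * c2[0], lam * c1[1] + (1 - lam) * c2[1]))


def subset(a: Interval, b: Interval) -> bool:
    """Interval inclusion a ⊂ b; the empty interval is contained in every interval."""
    if a is None:
        return True
    if b is None:
        return False
    return b[0] <= a[0] and a[1] <= b[1]


def mc_subset(X1: MCObj, X2: MCObj) -> bool:
    """X1 ⊂ X2 iff x1^B ⊂ x2^B and x1^C ⊂ x2^C."""
    return subset(X1[0], X2[0]) and subset(X1[1], X2[1])
```

With these helpers, coherent concavity of a candidate \({{\fancyscript{F}}}\) could be spot-checked numerically by testing `mc_subset(conv(lam, F(X1), F(X2)), F(conv(lam, X1, X2)))` for sampled `lam`; such a check is evidence only, not a proof.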

Definition 14

Suppose \({{\fancyscript{D}}}\subset {}^*{\mathbb {M}}\! {\mathbb {R}}^n\) is closed under coherence. A function \({{\fancyscript{F}}}:{{\fancyscript{D}}}\rightarrow {}^*{\mathbb {M}}\! {\mathbb {R}}^m\) is coherently concave on \({{\fancyscript{D}}}\) if for every coherent \({{\fancyscript{X}}}_1,{{\fancyscript{X}}}_2\in {{\fancyscript{D}}}\), i.e., \({{{\varvec{q}}}}={{{\varvec{x}}}}_1^B={{{\varvec{x}}}}_2^B\), \({{\fancyscript{F}}}({{\fancyscript{X}}}_1)\) and \({{\fancyscript{F}}}({{\fancyscript{X}}}_2)\) are coherent, and \({{\fancyscript{F}}}(\text {Conv}(\lambda ,{{\fancyscript{X}}}_1, {{\fancyscript{X}}}_2))\supset \text {Conv}(\lambda , {{\fancyscript{F}}}({{\fancyscript{X}}}_1),{{\fancyscript{F}}}({{\fancyscript{X}}}_2))\) for every \(\lambda \in [0,1]\).

Definition 15

Let \({F}:D\subset {\mathbb {R}}^n\rightarrow {\mathbb {R}}_\emptyset ^m\). A mapping \({{\fancyscript{F}}}:{{\fancyscript{D}}}\subset {}^*{\mathbb {M}}\! D\rightarrow {}^*{\mathbb {M}}\! {\mathbb {R}}^m\) is a relaxation function for \({F}\) on \({{\fancyscript{D}}}\) if \({{\fancyscript{D}}}\) is closed under coherence, \({{\fancyscript{F}}}\) is coherently concave on \({{\fancyscript{D}}}\), and \({F}(x)\in {{\mathrm{Enc}}}({{\fancyscript{F}}}({{\fancyscript{X}}}))\) is satisfied for every \({{\fancyscript{X}}}\in {{\fancyscript{D}}}\) and \(x\in {{\mathrm{Enc}}}({{\fancyscript{X}}})\).

Remark 2

Definition 14 is a generalization of convexity and concavity, and Definition 15 is a generalization of the notion of convex and concave relaxations. Convex and concave relaxations of \({F}\) can be recovered from a relaxation function of \({F}\) as follows. Let \({{{\varvec{x}}}}\in {\mathbb {I}}\! D\) be such that there exists \({{\fancyscript{Y}}}\in {{\fancyscript{D}}}\) with \({{{\varvec{x}}}}={{{\varvec{y}}}}^B\); since \({{\fancyscript{D}}}\) is closed under coherence, \(({{{\varvec{x}}}},[x,x])\in {{\fancyscript{D}}}\) for every \(x\in {{{\varvec{x}}}}\). Define the functions \({{\fancyscript{U}}},{{\fancyscript{O}}}:{{{\varvec{x}}}}\rightarrow {\mathbb {R}}_\emptyset ^m\) for all \(x\in {{{\varvec{x}}}}\) by \({{\fancyscript{U}}}(x)\equiv {{\fancyscript{F}}}(({{{\varvec{x}}}},[x,x]))^{cv}\) and \({{\fancyscript{O}}}(x)\equiv {{\fancyscript{F}}}(({{{\varvec{x}}}},[x,x]))^{cc}\). Then, \({{\fancyscript{U}}}\) and \({{\fancyscript{O}}}\) are convex and concave relaxations of \({F}\) on \({{{\varvec{x}}}}\), respectively, as shown in [51, Lemma 2.4.11].

Definition 16

Let \(D\subset {\mathbb {R}}^n\). A set \({{\fancyscript{D}}}\subset {}^*{\mathbb {M}}\! {\mathbb {R}}^n\) is a McCormick extension of \(D\) if \({{\fancyscript{D}}}\subset {}^*{\mathbb {M}}\! D\) and every \(x\in D\) satisfies \(([x,x],[x,x])\in {{\fancyscript{D}}}\). Let \({F}:D\rightarrow {\mathbb {R}}_\emptyset ^m\). A function \({{\fancyscript{F}}}:{{\fancyscript{D}}}\rightarrow {}^*{\mathbb {M}}\! {\mathbb {R}}^m\) is a McCormick extension of \({F}\) if \({{\fancyscript{D}}}\) is a McCormick extension of \(D\) and \({{\fancyscript{F}}}([x,x],[x,x])=([{F}(x),{F}(x)],[{F}(x), {F}(x)])\), \(\forall x\in D\).

Definition 17

Let \({{\fancyscript{F}}}:{{\fancyscript{D}}}\subset {}^*{\mathbb {M}}\! {\mathbb {R}}^n\rightarrow {}^*{\mathbb {M}}\! {\mathbb {R}}^m\). \({{\fancyscript{F}}}\) is inclusion monotonic on \({\fancyscript{D}}\) if \({{\fancyscript{X}}}_1\subset {{\fancyscript{X}}}_2\) implies that \(\fancyscript{F}({{\fancyscript{X}}}_1)\subset \fancyscript{F}({{\fancyscript{X}}}_2)\) for all \({{\fancyscript{X}}}_1,{{\fancyscript{X}}}_2\in {{\fancyscript{D}}}\).

Theorem 3

Let \({F}:D\subset {\mathbb {R}}^n\rightarrow {\mathbb {R}}_\emptyset ^m\) and let \({{\fancyscript{F}}}:{{\fancyscript{D}}}\rightarrow {}^*{\mathbb {M}}\! {\mathbb {R}}^m\) be a McCormick extension of \({F}\). If \({{\fancyscript{F}}}\) is inclusion monotonic on \({{\fancyscript{D}}}\), then every \({{\fancyscript{X}}}\in {{\fancyscript{D}}}\) satisfies \({F}(x)\in {{\mathrm{Enc}}}({{\fancyscript{F}}}({{\fancyscript{X}}}))\) for all \(x\in {{\mathrm{Enc}}}({{\fancyscript{X}}})\).

Proof

See [51, Theorem 2.4.14]. \(\square \)

We conclude that an inclusion monotonic McCormick extension that is also coherently concave is a relaxation function. Hence, it suffices to derive inclusion monotonic, coherently concave McCormick extensions. As shown in [51, Lemmas 2.4.15, 2.4.17], the composition of inclusion monotonic, coherently concave McCormick extensions is again an inclusion monotonic, coherently concave McCormick extension. This motivates the derivation of inclusion monotonic, coherently concave McCormick extensions of the basic operations below.

Define the following relaxation functions for addition and multiplication: let the functions \((+,{\mathbb {M}}_\emptyset {\mathbb {R}}^2,{\mathbb {M}}_\emptyset {\mathbb {R}})\) and \((\times ,{\mathbb {M}}_\emptyset {\mathbb {R}}^2,{\mathbb {M}}_\emptyset {\mathbb {R}})\) be given by \(+({{\fancyscript{X}}},{{\fancyscript{Y}}})=({{{\varvec{x}}}}^B+{{{\varvec{y}}}}^B,{{{\varvec{x}}}}^C+{{{\varvec{y}}}}^C)\) and \(\times ({{\fancyscript{X}}},{{\fancyscript{Y}}})=({{{\varvec{x}}}}^B{{{\varvec{y}}}}^B,[\check{z},\hat{z}])\), where

Note that this definition of multiplication ensures that  [51, Theorems 2.4.22, 2.4.23]. Furthermore, the standard rules for addition and multiplication of McCormick relaxations are implied by these definitions (see [51, p. 69f]), with an additional intersection with the bounds from interval arithmetic in the case of the multiplication rule. These functions are indeed relaxation functions and are also inclusion monotonic, as shown in [51, Section 2.4.2].
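Rather than the general rule for \(\check{z}\) and \(\hat{z}\), the sketch below implements the addition rule as stated and, for multiplication, only the special case of degenerate relaxation parts \(({{{\varvec{x}}}}^B,[x,x])\) and \(({{{\varvec{y}}}}^B,[y,y])\), which is the setting of Remark 2. In that case it uses McCormick's classical bilinear envelope of \(xy\) on \({{{\varvec{x}}}}^B\times {{{\varvec{y}}}}^B\), intersected with the interval product \({{{\varvec{x}}}}^B{{{\varvec{y}}}}^B\). This is a swapped-in textbook formula, not the general multiplication rule of [51], and all function names are hypothetical; no outward rounding is performed.

```python
from typing import Tuple

Interval = Tuple[float, float]


def iadd(a: Interval, b: Interval) -> Interval:
    """Interval addition."""
    return (a[0] + b[0], a[1] + b[1])


def imul(a: Interval, b: Interval) -> Interval:
    """Interval multiplication via the four endpoint products."""
    p = (a[0] * b[0], a[0] * b[1], a[1] * b[0], a[1] * b[1])
    return (min(p), max(p))


def mc_add(X, Y):
    """+(X, Y) = (x^B + y^B, x^C + y^C), applied componentwise."""
    return (iadd(X[0], Y[0]), iadd(X[1], Y[1]))


def mc_mul_point(xB: Interval, yB: Interval, x: float, y: float):
    """Product of (xB, [x, x]) and (yB, [y, y]) via the bilinear envelope.

    Assumes x in xB and y in yB. Covers degenerate relaxation parts only.
    """
    (xL, xU), (yL, yU) = xB, yB
    # Underestimators from (x - xL)(y - yL) >= 0 and (xU - x)(yU - y) >= 0.
    cv = max(yL * x + xL * y - xL * yL, yU * x + xU * y - xU * yU)
    # Overestimators from (x - xL)(yU - y) >= 0 and (xU - x)(y - yL) >= 0.
    cc = min(yU * x + xL * y - xL * yU, yL * x + xU * y - xU * yL)
    zB = imul(xB, yB)
    # Intersect the relaxation values with the interval bounds, as in the text.
    return (zB, (max(cv, zB[0]), min(cc, zB[1])))
```

Reading off the relaxation part of `mc_mul_point(xB, yB, x, y)` as a function of `(x, y)` yields the convex and concave relaxations \({{\fancyscript{U}}}\) and \({{\fancyscript{O}}}\) of \(xy\) in the sense of Remark 2; at a corner of the box both coincide with \(xy\).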

The following assumption is needed to construct relaxation functions for the elements of \(\fancyscript{L}\). For many univariate functions, objects satisfying these assumptions are known and readily available [51, Section 2.8].

Assumption 2

Assume that for every \((u,B,{\mathbb {R}})\in {{\fancyscript{L}}}\), functions \(\check{u},\hat{u}:\tilde{B}\rightarrow {\mathbb {R}}\), where \(\tilde{B}\equiv \{({{{\varvec{x}}}},x)\in {\mathbb {I}}\! B\times B:x\in {{{\varvec{x}}}}\}\), and \(x^{\min },x^{\max }:{\mathbb {I}}\! B\rightarrow {\mathbb {R}}\) are known such that \(\check{u}({{{\varvec{x}}}},\cdot )\) and \(\hat{u}({{{\varvec{x}}}},\cdot )\) are convex and concave relaxations of \(u\) on \({{{\varvec{x}}}}\in {\mathbb {I}}\! B\), respectively, and \(x^{\min }({{{\varvec{x}}}})\) and \(x^{\max }({{{\varvec{x}}}})\) are a minimizer of \(\check{u}({{{\varvec{x}}}},\cdot )\) and a maximizer of \(\hat{u}({{{\varvec{x}}}},\cdot )\) on \({{{\varvec{x}}}}\), respectively. Furthermore, assume that for any \({{{\varvec{x}}}}_1,{{{\varvec{x}}}}_2\in {\mathbb {I}}\! B\) with \({{{\varvec{x}}}}_1\subset {{{\varvec{x}}}}_2\), \(\check{u}({{{\varvec{x}}}}_1,x)\ge \check{u}({{{\varvec{x}}}}_2,x)\) and \(\hat{u}({{{\varvec{x}}}}_1,x)\le \hat{u}({{{\varvec{x}}}}_2,x)\) for all \(x\in {{{\varvec{x}}}}_1\), and that \(\check{u}([x,x],x)=\hat{u}([x,x],x)=u(x)\) for all \(x\in B\).

Let \({{\mathrm{mid}}}:{\mathbb {R}}\times {\mathbb {R}}\times {\mathbb {R}}\rightarrow {\mathbb {R}}\) return the middle value of its arguments. It can be shown (cf. [51, p. 76f]) that a relaxation function of \((u,B,R)\in {{\fancyscript{L}}}\) is given by \((u,{\mathbb {M}}\! B,{\mathbb {M\! R}})\) with

Note that if the convex and concave envelopes of \(u\) are known and used, then the intersection with \(u({{{\varvec{x}}}}^B)\) in (1) is redundant.
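Assuming that rule (1) takes the familiar mid-based form, in which \(\check{u}\) is evaluated at \({{\mathrm{mid}}}(x^{cv},x^{cc},x^{\min }({{{\varvec{x}}}}^B))\) and \(\hat{u}\) at \({{\mathrm{mid}}}(x^{cv},x^{cc},x^{\max }({{{\varvec{x}}}}^B))\), the sketch below applies it to \(u=\exp \). Because \(\exp \) is convex, it is taken as its own convex relaxation with \(x^{\min }\) the lower endpoint, and the secant over the box serves as the concave envelope with \(x^{\max }\) the upper endpoint. The sketch also assumes \({{{\varvec{x}}}}^C\subset {{{\varvec{x}}}}^B\) so that every mid value lies in the box; names are hypothetical.

```python
import math
from typing import Tuple

Interval = Tuple[float, float]


def mid(a: float, b: float, c: float) -> float:
    """Middle value of three reals."""
    return sorted((a, b, c))[1]


def mc_exp(X: Tuple[Interval, Interval]) -> Tuple[Interval, Interval]:
    """Relaxation of exp for X = (x^B, [x^cv, x^cc]), assuming x^C lies in x^B."""
    (xL, xU), (xcv, xcc) = X
    box = (math.exp(xL), math.exp(xU))  # exp(x^B); exp is increasing

    def u_cv(x: float) -> float:
        return math.exp(x)  # exp is convex, hence its own convex envelope

    def u_cc(x: float) -> float:
        if xU == xL:
            return math.exp(xL)
        # Secant through the box endpoints: concave envelope of a convex function.
        return math.exp(xL) + (math.exp(xU) - math.exp(xL)) * (x - xL) / (xU - xL)

    x_min, x_max = xL, xU  # minimizer of u_cv and maximizer of u_cc on x^B
    cv = u_cv(mid(xcv, xcc, x_min))
    cc = u_cc(mid(xcv, xcc, x_max))
    # Intersection with exp(x^B); redundant here because envelopes are used.
    return box, (max(cv, box[0]), min(cc, box[1]))
```

For \(X=([0,1],[0.5,0.5])\) the sketch returns the box \([1,e]\) and the relaxation values \(e^{0.5}\) and \(1+0.5(e-1)\), which bracket \(\exp (0.5)\).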

Suppose that Assumption 2 holds and that \(({{\fancyscript{S}}},\pi _o)\) is an \({{\fancyscript{L}}}\)-computational sequence. Then, for any element \((o_k,\pi _k)\) of \({\fancyscript{S}}\), the preceding developments provide an inclusion monotonic McCormick extension \((o_k,{\mathbb {M}}_\emptyset B_k,{\mathbb {M}}_\emptyset {\mathbb {R}})\). Also, the functions \((\pi _k,{\mathbb {M}}_\emptyset {\mathbb {R}}^{k-1},{\mathbb {M}}_\emptyset {\mathbb {R}})\) or \((\pi _k,{\mathbb {M}}_\emptyset {\mathbb {R}}^{k-1},{\mathbb {M}}_\emptyset {\mathbb {R}}^2)\) with \(\pi _k({{\fancyscript{V}}})=({{\fancyscript{V}}}_i)\) or \(\pi _k({{\fancyscript{V}}})=({{\fancyscript{V}}}_i,{{\fancyscript{V}}}_j)\) extend \((\pi _k,{\mathbb {R}}^{k-1},{\mathbb {R}})\) or \((\pi _k,{\mathbb {R}}^{k-1},{\mathbb {R}}^2)\) naturally.

Definition 18

For every \({{\fancyscript{L}}}\)-computational sequence \(({{\fancyscript{S}}},\pi _o)\) with \(n_i\) inputs and \(n_o\) outputs, define the sequence of relaxation factors \(\{({{\fancyscript{V}}}_k,{{\fancyscript{D}}}_k,{\mathbb {M}}_\emptyset {\mathbb {R}})\}_{k=1}^{n_f}\) where

1. for all \(k=1,\ldots ,n_i\), \({{\fancyscript{D}}}_k={\mathbb {M}}_\emptyset {\mathbb {R}}^{n_i}\) and \({{\fancyscript{V}}}_k({{\fancyscript{X}}})={{\fancyscript{X}}}_k\), \(\forall {{\fancyscript{X}}}\in {{\fancyscript{D}}}_k\),
2. for all \(k=n_i+1,\ldots ,n_f\), \({{\fancyscript{D}}}_k=\{{{\fancyscript{X}}}\in {{\fancyscript{D}}}_{k-1}:\pi _k({{\fancyscript{V}}}_1 ({{\fancyscript{X}}}),\ldots ,{{\fancyscript{V}}}_{k-1}({{\fancyscript{X}}}))\in {\mathbb {M}}_\emptyset B_k\}\) and \({{\fancyscript{V}}}_k({{\fancyscript{X}}})=o_k(\pi _k({{\fancyscript{V}}}_1({{\fancyscript{X}}}), \ldots ,{{\fancyscript{V}}}_{k-1}({{\fancyscript{X}}})))\), \(\forall {{\fancyscript{X}}}\in {{\fancyscript{D}}}_k\).

The natural McCormick extension of \((\fancyscript{S},\pi _o)\) is the function \(({{\fancyscript{F}}}_{\fancyscript{S}},{{\fancyscript{D}}}_{{\fancyscript{S}}},{\mathbb {M\! R}}^{n_o})\) defined by \({{\fancyscript{D}}}_{{\fancyscript{S}}}\equiv {{\fancyscript{D}}}_{n_f}\) and \({{\fancyscript{F}}}_{{\fancyscript{S}}}({{\fancyscript{X}}})=\pi _o({{\fancyscript{V}}}_1({{\fancyscript{X}}}), \ldots ,{{\fancyscript{V}}}_{n_f}({{\fancyscript{X}}}))\), \(\forall {{\fancyscript{X}}}\in {{\fancyscript{D}}}_{{\fancyscript{S}}}\).
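A minimal sketch of Definition 18 for a hypothetical two-input sequence with factors \(v_3=\exp (v_1)\) and \(v_4=v_3+v_2\), so that \({F}_{\fancyscript{S}}(x)=\exp (x_1)+x_2\) when \(\pi _o\) selects \(v_4\). The encoding of the sequence as (operation, argument indices) pairs, the names `SEQUENCE`, `OPS` and `forward`, and the simplified exp rule (which assumes the relaxation part lies inside the box part) are all assumptions made for illustration. The domain checks \(\pi _k(\cdot )\in {\mathbb {M}}_\emptyset B_k\) are omitted because both operations are defined on all of \({\mathbb {R}}\).

```python
import math
from typing import List, Tuple

Interval = Tuple[float, float]
MCObj = Tuple[Interval, Interval]  # (box part, relaxation part)


def mc_add(X: MCObj, Y: MCObj) -> MCObj:
    """+(X, Y) = (x^B + y^B, x^C + y^C)."""
    return ((X[0][0] + Y[0][0], X[0][1] + Y[0][1]),
            (X[1][0] + Y[1][0], X[1][1] + Y[1][1]))


def mc_exp(X: MCObj) -> MCObj:
    """exp rule, assuming the relaxation part lies inside the box part."""
    (xL, xU), (xcv, xcc) = X
    cv = math.exp(xcv)  # exp is increasing and convex: minimum over [xcv, xcc]
    slope = 0.0 if xU == xL else (math.exp(xU) - math.exp(xL)) / (xU - xL)
    cc = math.exp(xL) + slope * (xcc - xL)  # increasing secant: maximum at xcc
    return ((math.exp(xL), math.exp(xU)), (cv, cc))


# Hypothetical encoding of factors n_i+1, ..., n_f as (operation, argument indices);
# indices are 0-based positions in the list of factors.
SEQUENCE = [("exp", (0,)), ("add", (2, 1))]  # v3 = exp(v1), v4 = v3 + v2
OPS = {"exp": mc_exp, "add": mc_add}


def forward(inputs: List[MCObj]) -> List[MCObj]:
    """Compute V_1(X), ..., V_{n_f}(X) as in Definition 18."""
    factors = list(inputs)  # V_k(X) = X_k for k <= n_i
    for op, args in SEQUENCE:
        factors.append(OPS[op](*(factors[i] for i in args)))
    return factors
```

The last factor is the value of the natural McCormick extension; per Theorem 4, its box and relaxation parts both enclose \({F}_{\fancyscript{S}}(x)\) for every \(x\in {{\mathrm{Enc}}}({{\fancyscript{X}}})\).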

Theorem 4

Let \(({{\fancyscript{S}}},\pi _o)\) be an \({{\fancyscript{L}}}\)-computational sequence with associated natural function \(({F}_{\fancyscript{S}},D_{{\fancyscript{S}}},{\mathbb {R}}^{n_o})\). The natural McCormick extension \(({{\fancyscript{F}}}_{{\fancyscript{S}}},{{\fancyscript{D}}}_{{\fancyscript{S}}},{\mathbb {M}}_\emptyset {\mathbb {R}}^{n_o})\) is a McCormick extension of \(({F}_{\fancyscript{S}},D_{{\fancyscript{S}}},{\mathbb {R}}^{n_o})\) and is coherently concave and inclusion monotonic on \({{\fancyscript{D}}}_{{\fancyscript{S}}}\). Thus, it is a relaxation function for \({F}_{{\fancyscript{S}}}\) on \({{\fancyscript{D}}}_{{\fancyscript{S}}}\). In particular, for all \(k=1,\ldots ,n_f\), each relaxation factor \(\fancyscript{V}_k\) of \((\fancyscript{S},\pi _o)\) is an inclusion monotonic, coherently concave McCormick extension of \(v_k\) on \({{\fancyscript{D}}}_{\fancyscript{S}}\).

Proof

See [51, Theorem 2.4.32] together with Theorem 3. \(\square \)

So far, we have described forward propagation of intervals and McCormick objects, as is commonly done to compute natural interval extensions and standard McCormick relaxations. Next, we consider reverse propagation of intervals and describe its use in CSPs. Developing forward McCormick propagation formally in this section, as a process analogous to forward interval propagation, allows us to extend the ideas of reverse interval propagation to McCormick objects in Sect. 5.

4 Reverse interval propagation

In this section, we focus on propagating interval bounds backwards through the computational sequence, which is a particular form of a DAG. Since reverse McCormick propagation is similar in spirit, it is instructive to first revisit the interval case. The results stated below are adapted from [62], although the notation is introduced here.

Definition 19

Consider \({F}:D\subset {\mathbb {R}}^n\rightarrow {\mathbb {R}}^m\). Let \({{{\varvec{F}}}}^{{{\mathrm{rev}}}}:{\mathbb {I}}_\emptyset D\times {}^*\mathbb {I}\! {\mathbb {R}}^m\rightarrow {\mathbb {I}}_\emptyset {\mathbb {R}}^n\). If for all \({{{\varvec{x}}}}\in {\mathbb {I}}_\emptyset D\) and \({{{\varvec{r}}}}\in {}^*\mathbb {I}\! {\mathbb {R}}^m\) it holds that

\[\{x\in {{{\varvec{x}}}}:{F}(x)\in {{{\varvec{r}}}}\}\subset {{{\varvec{F}}}}^{{{\mathrm{rev}}}}({{{\varvec{x}}}},{{{\varvec{r}}}})\subset {{{\varvec{x}}}},\qquad (2)\]

then \({{{\varvec{F}}}}^{{{\mathrm{rev}}}}\) is called a reverse interval update of \({F}\).

Definition 20

Let \((\fancyscript{S},\pi _o)\) be an \({{\fancyscript{L}}}\)-computational sequence with \(n_i\) inputs, \(n_o\) outputs and natural interval extension \(({{{\varvec{F}}}}_\fancyscript{S},\mathfrak {D}_\fancyscript{S},{\mathbb {R}}^{n_o})\). Let \({{{\varvec{x}}}}\in \mathfrak {D}_\fancyscript{S}\). Suppose that \({{{\varvec{v}}}}_1({{{\varvec{x}}}}),\ldots ,{{{\varvec{v}}}}_{n_f}({{{\varvec{x}}}})\) have been calculated according to Definition 10. Let \(o_k^{{{\mathrm{rev}}}}:{\mathbb {I}}_\emptyset B_k\times {}^*\mathbb {I}\! {\mathbb {R}}\rightarrow {\mathbb {I}}_\emptyset B_k\) be a reverse interval update of \(o_k\) for each \(k=n_i+1,\ldots ,n_f\). Suppose \(\tilde{{{{\varvec{v}}}}}_1,\ldots ,\tilde{{{{\varvec{v}}}}}_{n_f}\in {\mathbb {I}}_\emptyset {\mathbb {R}}\) are calculated for any \({{{\varvec{x}}}}\in \mathfrak {D}_\fancyscript{S}\) and \({{{\varvec{r}}}}\in {}^*\mathbb {I}\! {\mathbb {R}}^{n_o}\) by the following procedure:

The reverse interval propagation of \((\fancyscript{S},\pi _o)\) is the function \(({{{\varvec{F}}}}_\fancyscript{S}^{{{\mathrm{rev}}}},\mathfrak {D}_\fancyscript{S}\times {}^*\mathbb {I}\! {\mathbb {R}}^{n_o}, {\mathbb {I}}\! D_\fancyscript{S})\) defined by \({{{\varvec{F}}}}_{\fancyscript{S}}^{{{\mathrm{rev}}}}({{{\varvec{x}}}},{{{\varvec{r}}}})\equiv \tilde{{{{\varvec{v}}}}}_1 \times \cdots \times \tilde{{{{\varvec{v}}}}}_{n_i}\).
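A sketch of reverse interval propagation, assuming the loop structure used in the proof of Theorem 5: after the forward pass, the output factor is intersected with \({{{\varvec{r}}}}\), and then, for \(k=n_f,\ldots ,n_i+1\), the arguments of \(o_k\) are narrowed in place by \(o_k^{{{\mathrm{rev}}}}\) using \(\tilde{{{{\varvec{v}}}}}_k\). The hypothetical sequence computes \(2x_1+x_2\) with two additions, and the reverse update of addition used here, \({{{\varvec{x}}}}\cap ({{{\varvec{r}}}}-{{{\varvec{y}}}})\) and \({{{\varvec{y}}}}\cap ({{{\varvec{r}}}}-{{{\varvec{x}}}})\), is an assumed common choice. No outward rounding is performed.

```python
from typing import List, Optional, Tuple

Interval = Optional[Tuple[float, float]]  # None encodes the empty interval


def meet(a: Interval, b: Interval) -> Interval:
    if a is None or b is None:
        return None
    lo, hi = max(a[0], b[0]), min(a[1], b[1])
    return (lo, hi) if lo <= hi else None


def add(a: Interval, b: Interval) -> Interval:
    return None if a is None or b is None else (a[0] + b[0], a[1] + b[1])


def sub(a: Interval, b: Interval) -> Interval:
    return None if a is None or b is None else (a[0] - b[1], a[1] - b[0])


def add_rev(x: Interval, y: Interval, r: Interval) -> Tuple[Interval, Interval]:
    """Assumed reverse interval update of (+, R^2, R)."""
    r = meet(r, add(x, y))
    return meet(x, sub(r, y)), meet(y, sub(r, x))


# Hypothetical sequence for 2*x1 + x2 (0-based indices): v3 = v1 + v2, v4 = v3 + v1.
SEQUENCE = [(0, 1), (2, 0)]
N_INPUTS = 2


def reverse_propagate(x: List[Interval], r: Interval) -> List[Interval]:
    # Forward pass: natural interval extension of every factor.
    v = list(x)
    for i, j in SEQUENCE:
        v.append(add(v[i], v[j]))
    # pi_o selects the last factor; intersect it with r.
    v[-1] = meet(v[-1], r)
    # Reverse pass over k = n_f, ..., n_i + 1, narrowing the arguments of o_k.
    for k in range(len(v) - 1, N_INPUTS - 1, -1):
        i, j = SEQUENCE[k - N_INPUTS]
        v[i], v[j] = add_rev(v[i], v[j], v[k])
    return v[:N_INPUTS]
```

For \(x_1,x_2\in [0,4]\) and \(2x_1+x_2\in [0,1]\), the sketch returns \([0,1]\times [0,1]\), whereas the exact projection of the solution set is \([0,0.5]\times [0,1]\): the result is a valid enclosure that need not be tight. An infeasible \({{{\varvec{r}}}}\) yields empty intervals.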

Theorem 5

The reverse interval propagation of \((\fancyscript{S},\pi _o)\) as given by Definition 20 is a reverse interval update of \(({F}_\fancyscript{S},D_\fancyscript{S},{\mathbb {R}}^{n_o})\). If the reverse update of \(o_k\) is inclusion monotonic for each \(k=n_i+1,\ldots ,n_f\), then the reverse interval propagation of \((\fancyscript{S},\pi _o)\) is inclusion monotonic.

Proof

Finite induction yields immediately that the second inclusion in (2) holds.

Let \({{{\varvec{r}}}}\in {}^*\mathbb {I}\! {\mathbb {R}}^{n_o}\) and \({{{\varvec{x}}}}\in \mathfrak {D}_{\fancyscript{S}}\). If there does not exist an \(x\in {{{\varvec{x}}}}\) such that \({F}_{\fancyscript{S}}(x)\in {{{\varvec{r}}}}\), then the first inclusion in (2) holds trivially.

Let \(x\in {{{\varvec{x}}}}\) such that \({F}_{\fancyscript{S}}(x)\in {{{\varvec{r}}}}\). Then, there exists a sequence of factor values \(\{v_k(x)\}_{k=1}^{n_f}\) with \(v_1(x)=x_1\), \(\ldots \), \(v_{n_i}(x)=x_{n_i}\) and \(\pi _o(v_1(x),\ldots ,v_{n_f}(x))\in {{{\varvec{r}}}}\). Also, since \({{{\varvec{v}}}}_1,\ldots ,{{{\varvec{v}}}}_{n_f}\) are inclusion functions (see Theorem 2), \((v_1(x),\ldots ,v_{n_f}(x))\in ({{{\varvec{v}}}}_1({{{\varvec{x}}}}),\ldots ,{{{\varvec{v}}}}_{n_f}({{{\varvec{x}}}}))\) so that \((v_1(x),\ldots ,v_{n_f}(x))\in (\tilde{{{{\varvec{v}}}}}_1,\ldots ,\tilde{{{{\varvec{v}}}}}_{n_f})\) prior to entering the loop. In the following, let \(\tilde{{{{\varvec{v}}}}}_k^l\) denote the value of \(\tilde{{{{\varvec{v}}}}}_k\) for the given \({{{\varvec{x}}}}\) and \({{{\varvec{r}}}}\) after the \(l\)th reverse update, \(l=1,\ldots ,n_f-n_i\). Since \(o_{n_f}^{{{\mathrm{rev}}}}\) is a reverse interval update, it follows that \((v_1(x),\ldots ,v_{n_f-1}(x))\in (\tilde{{{{\varvec{v}}}}}_1^1,\ldots ,\tilde{{{{\varvec{v}}}}}_{n_f-1}^1)\). Finite induction yields that \((v_1(x),\ldots ,v_{n_i}(x))\in (\tilde{{{{\varvec{v}}}}}_1^{n_f-n_i},\ldots ,\tilde{{{{\varvec{v}}}}}_{n_i}^{n_f-n_i})\equiv {{{\varvec{F}}}}_{\fancyscript{S}}^{{{\mathrm{rev}}}}({{{\varvec{x}}}},{{{\varvec{r}}}})\). Thus, \(x\in {{{\varvec{F}}}}_{\fancyscript{S}}^{{{\mathrm{rev}}}}({{{\varvec{x}}}},{{{\varvec{r}}}})\) and the first inclusion in (2) holds.

Assume now that \(o_k^{{{\mathrm{rev}}}}\) is inclusion monotonic for each \(k=n_i+1,\ldots ,n_f\). Let \({{{\varvec{x}}}}^1,{{{\varvec{x}}}}^2\in \mathfrak {D}_\fancyscript{S}\) with \({{{\varvec{x}}}}^1\subset {{{\varvec{x}}}}^2\) and \({{{\varvec{r}}}}^1,{{{\varvec{r}}}}^2\in {}^*\mathbb {I}\! {\mathbb {R}}^{n_o}\) with \({{{\varvec{r}}}}^1\subset {{{\varvec{r}}}}^2\). Then, \((\tilde{{{{\varvec{v}}}}}_1({{{\varvec{x}}}}^1,{{{\varvec{r}}}}^1),\ldots ,\tilde{{{{\varvec{v}}}}}_{n_f}({{{\varvec{x}}}}^1,{{{\varvec{r}}}}^1))\subset (\tilde{{{{\varvec{v}}}}}_1({{{\varvec{x}}}}^2,{{{\varvec{r}}}}^2),\ldots ,\tilde{{{{\varvec{v}}}}}_{n_f}({{{\varvec{x}}}}^2,{{{\varvec{r}}}}^2))\) prior to entering the loop. Since \(o_{n_f}^{{{\mathrm{rev}}}}\) is inclusion monotonic, \((\tilde{{{{\varvec{v}}}}}_1^1({{{\varvec{x}}}}^1,{{{\varvec{r}}}}^1),\ldots ,\tilde{{{{\varvec{v}}}}}_{n_f}^1({{{\varvec{x}}}}^1,{{{\varvec{r}}}}^1)) \subset (\tilde{{{{\varvec{v}}}}}_1^1({{{\varvec{x}}}}^2,{{{\varvec{r}}}}^2),\ldots ,\tilde{{{{\varvec{v}}}}}_{n_f}^1({{{\varvec{x}}}}^2,{{{\varvec{r}}}}^2))\). Using finite induction over \(l\) yields that \((\tilde{{{{\varvec{v}}}}}_1^{n_f-n_i}({{{\varvec{x}}}}^1,{{{\varvec{r}}}}^1),\ldots ,\tilde{{{{\varvec{v}}}}}_{n_i}^{n_f-n_i} ({{{\varvec{x}}}}^1,{{{\varvec{r}}}}^1))\subset (\tilde{{{{\varvec{v}}}}}_1^{n_f-n_i}({{{\varvec{x}}}}^2,{{{\varvec{r}}}}^2),\ldots ,\tilde{{{{\varvec{v}}}}}_{n_i}^{n_f-n_i} ({{{\varvec{x}}}}^2,{{{\varvec{r}}}}^2))\). Thus, it follows that \({{{\varvec{F}}}}_{\fancyscript{S}}^{{{\mathrm{rev}}}}({{{\varvec{x}}}}^1,{{{\varvec{r}}}}^1)\subset {{{\varvec{F}}}}_{\fancyscript{S}}^{{{\mathrm{rev}}}}({{{\varvec{x}}}}^2,{{{\varvec{r}}}}^2)\). \(\square \)

Next, we present a result closely related to Theorem 5 that relies more heavily on standard concepts from interval analysis.

Theorem 6

Consider \((\fancyscript{S},\pi _o)\) and assume that for each \(k=n_i+1,\ldots ,n_f\), the reverse interval update of \(o_k\) is inclusion monotonic. Define \({F}_{\fancyscript{S}}^{{{\mathrm{rev}}}}:D\times {\mathbb {R}}^{n_o}\rightarrow {\mathbb {R}}_\emptyset ^{n_i}\) for each \(x\in D\) and \(r\in {\mathbb {R}}^{n_o}\) by

\[{F}_{\fancyscript{S}}^{{{\mathrm{rev}}}}(x,r)\equiv \begin{cases} x, & \text{if } {F}_{\fancyscript{S}}(x)=r,\\ \mathrm {NaN}, & \text{otherwise.}\end{cases}\]

Then, \({{{\varvec{F}}}}_{\fancyscript{S}}^{{{\mathrm{rev}}}}\) is an inclusion function of \({F}_{\fancyscript{S}}^{{{\mathrm{rev}}}}\) on \(\mathfrak {D}_{\fancyscript{S}}\times {}^*\mathbb {I}\! {\mathbb {R}}^{n_o}\).

Proof

Let \(r\in {}^*\mathbb {R}\! ^{n_o}\). First, consider \(x\in D\) such that \({F}_{\fancyscript{S}}(x)=r\). Since \({{{\varvec{F}}}}_\fancyscript{S}\) is an interval extension of \({F}_{\fancyscript{S}}\), each factor is a degenerate interval after the forward evaluation, with \({{{\varvec{v}}}}_k([x,x])=[v_k(x),v_k(x)]\). Since \({F}_{\fancyscript{S}}(x)=r\), the intersections during the reverse interval propagation return degenerate intervals, so \({{{\varvec{F}}}}^{{{\mathrm{rev}}}}_\fancyscript{S}\) is an interval extension of \({F}_{\fancyscript{S}}^{{{\mathrm{rev}}}}\) at such \([x,x]\). If \(x\in D\) is such that \({F}_{\fancyscript{S}}(x)\ne r\), then \(\pi _o(\tilde{{{{\varvec{v}}}}}_1,\ldots ,\tilde{{{{\varvec{v}}}}}_{n_f}):=\pi _o(\tilde{{{{\varvec{v}}}}}_1, \ldots ,\tilde{{{{\varvec{v}}}}}_{n_f}) \cap {{{\varvec{r}}}}\) results in \(\tilde{{{{\varvec{v}}}}}_k = \emptyset \) for at least one \(k \in \{1, \ldots , n_f\}\). For each \(k \in \{1, \ldots , n_f\}\), \(\tilde{{{{\varvec{v}}}}}_k\) influences at least one \(\tilde{{{{\varvec{v}}}}}_j\) with \(j \in \{1, \ldots , n_i\}\) through a sequence of reverse interval updates. Any reverse interval update involving empty intervals yields empty intervals because it is an interval operation. Hence, once the loop is executed, \(\tilde{{{{\varvec{v}}}}}_1 \times \cdots \times \tilde{{{{\varvec{v}}}}}_{n_i} = \emptyset \), so that \({{{\varvec{F}}}}^{{{\mathrm{rev}}}}_\fancyscript{S}([x,x],[r,r])=\emptyset =[\mathrm {NaN},\mathrm {NaN}]=[{F}_ {\fancyscript{S}}^{{{\mathrm{rev}}}}(x,r),{F}_{\fancyscript{S}}^{{{\mathrm{rev}}}}(x,r)]\). Thus, \({{{\varvec{F}}}}^{{{\mathrm{rev}}}}_\fancyscript{S}\) is an interval extension of \({F}_{\fancyscript{S}}^{{{\mathrm{rev}}}}\). Inclusion monotonicity of \({{{\varvec{F}}}}^{{{\mathrm{rev}}}}_\fancyscript{S}\) has been established in Theorem 5. The assertion then follows from Theorem 1. \(\square \)

Here, we demonstrate how to obtain an inclusion monotonic reverse interval update for addition. Similar constructions are possible for multiplication and univariate operations [62].

Lemma 1

Consider \((+,{\mathbb {R}}^2,{\mathbb {R}})\). The function \((+^{{{\mathrm{rev}}}},{\mathbb {I}}_\emptyset {\mathbb {R}}^2\times {}^*\mathbb {I}\! {\mathbb {R}},{\mathbb {I}}_\emptyset {\mathbb {R}}^2)\) defined for all \({{{\varvec{x}}}},{{{\varvec{y}}}}\in {\mathbb {I}}_\emptyset {\mathbb {R}}\) and \({{{\varvec{r}}}}\in {}^*\mathbb {I}\! {\mathbb {R}}\) by

is an inclusion monotonic reverse interval update of \((+,{\mathbb {R}}^2,{\mathbb {R}})\).
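The sketch below assumes a common form of the reverse update for addition: with \({{{\varvec{r}}}}'={{{\varvec{r}}}}\cap ({{{\varvec{x}}}}+{{{\varvec{y}}}})\), return \({{{\varvec{x}}}}\cap ({{{\varvec{r}}}}'-{{{\varvec{y}}}})\) and \({{{\varvec{y}}}}\cap ({{{\varvec{r}}}}'-{{{\varvec{x}}}})\). It then spot-checks the first inclusion in (2) on a grid of points. The grid test is evidence rather than a proof, floating-point rounding is not controlled, and all names are hypothetical.

```python
import itertools
from typing import Optional, Tuple

Interval = Optional[Tuple[float, float]]  # None encodes the empty interval


def meet(a: Interval, b: Interval) -> Interval:
    if a is None or b is None:
        return None
    lo, hi = max(a[0], b[0]), min(a[1], b[1])
    return (lo, hi) if lo <= hi else None


def add_rev(x: Interval, y: Interval, r: Interval) -> Tuple[Interval, Interval]:
    """Assumed reverse update of +: keep only values compatible with x + y in r."""
    if x is None or y is None or r is None:
        return None, None
    r = meet(r, (x[0] + y[0], x[1] + y[1]))
    if r is None:
        return None, None
    x_new = meet(x, (r[0] - y[1], r[1] - y[0]))
    y_new = meet(y, (r[0] - x[1], r[1] - x[0]))
    return x_new, y_new


def contains(iv: Interval, p: float) -> bool:
    return iv is not None and iv[0] <= p <= iv[1]


def grid(iv: Tuple[float, float], n: int):
    """n equally spaced points covering the interval iv."""
    return [iv[0] + (iv[1] - iv[0]) * t / (n - 1) for t in range(n)]


def check_enclosure(x, y, r, n: int = 9) -> bool:
    """Grid test of {(a, b) in x * y : a + b in r} ⊂ add_rev(x, y, r)."""
    xn, yn = add_rev(x, y, r)
    for a, b in itertools.product(grid(x, n), grid(y, n)):
        if contains(r, a + b) and not (contains(xn, a) and contains(yn, b)):
            return False
    return True
```

For instance, `add_rev((0.0, 4.0), (-1.0, 3.0), (2.0, 2.5))` narrows the inputs to `((0.0, 3.5), (-1.0, 2.5))`, and a right-hand side disjoint from \({{{\varvec{x}}}}+{{{\varvec{y}}}}\) yields `(None, None)`.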

5 Reverse McCormick propagation

In this section, the ideas of reverse interval propagation are extended to McCormick objects. As before, the enclosure property is established; in addition, coherent concavity and inclusion monotonicity of the resulting relaxations are proved.

Definition 21

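Suppose \({F}:D\subset {\mathbb {R}}^n\rightarrow {\mathbb {R}}^m\). Consider \({{\fancyscript{F}}}^{{{\mathrm{rev}}}}:{\mathbb {M}}_\emptyset D\times {}^*{\mathbb {M}}\! {\mathbb {R}}^m\rightarrow {\mathbb {M}}_\emptyset {\mathbb {R}}^n\). If for all \({{\fancyscript{X}}}\in {\mathbb {M}}_\emptyset D\) and \(\fancyscript{R}\in {}^*{\mathbb {M}}\! {\mathbb {R}}^m\) it holds that

\[\{x\in {{\mathrm{Enc}}}({{\fancyscript{X}}}):{F}(x)\in {{\mathrm{Enc}}}(\fancyscript{R})\}\subset {{\mathrm{Enc}}}({{\fancyscript{F}}}^{{{\mathrm{rev}}}}({{\fancyscript{X}}},\fancyscript{R})),\qquad (4)\]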

and \({{\fancyscript{F}}}^{{{\mathrm{rev}}}}({{\fancyscript{X}}},\fancyscript{R})\subset {{\fancyscript{X}}}\), then \({{\fancyscript{F}}}^{{{\mathrm{rev}}}}\) is called a reverse McCormick update of \({F}\).

Definition 22

Let \((\fancyscript{S},\pi _o)\) be an \({{\fancyscript{L}}}\)-computational sequence with \(n_i\) inputs, \(n_o\) outputs and natural McCormick extension \(({{\fancyscript{F}}}_\fancyscript{S},{{\fancyscript{D}}}_\fancyscript{S},{\mathbb {M}}_\emptyset {\mathbb {R}}^{n_o})\). Let \({{\fancyscript{X}}}\in {{\fancyscript{D}}}_\fancyscript{S}\). Suppose \(\fancyscript{V}_1({{\fancyscript{X}}}),\ldots ,\fancyscript{V}_{n_f}({{\fancyscript{X}}})\) have been calculated according to Definition 18. Let \(o_k^{{{\mathrm{rev}}}}:{\mathbb {M}}_\emptyset B_k\times {}^*{\mathbb {M}}\! {\mathbb {R}}\rightarrow {\mathbb {M}}_\emptyset B_k\) be a reverse McCormick update of \(o_k\) for each \(k=n_i+1,\ldots ,n_f\). Suppose \(\tilde{\fancyscript{V}}_1,\ldots ,\tilde{\fancyscript{V}}_{n_f}\in {\mathbb {M}}_\emptyset {\mathbb {R}}\) are calculated for any \({{\fancyscript{X}}}\in {{\fancyscript{D}}}_\fancyscript{S}\) and \(\fancyscript{R}\in {}^*{\mathbb {M}}\! {\mathbb {R}}^{n_o}\) by the following procedure:

The reverse McCormick propagation of \((\fancyscript{S},\pi _o)\) is the function \(({{\fancyscript{F}}}_\fancyscript{S}^{{{\mathrm{rev}}}},{{\fancyscript{D}}}_\fancyscript{S}\times {}^*{\mathbb {M}}\! {\mathbb {R}}^ {n_o},{\mathbb {M}}_\emptyset D_\fancyscript{S})\) defined for any \({{\fancyscript{X}}}\in {{\fancyscript{D}}}_\fancyscript{S}\) and \(\fancyscript{R}\in {}^*{\mathbb {M}}\! {\mathbb {R}}^{n_o}\) by \({{\fancyscript{F}}}_{\fancyscript{S}}^{{{\mathrm{rev}}}}({{\fancyscript{X}}},\fancyscript{R})\equiv \tilde{\fancyscript{V}}_1 \times \cdots \times \tilde{\fancyscript{V}}_{n_i}\).

Theorem 7

The reverse McCormick propagation of \((\fancyscript{S},\pi _o)\) as given by Definition 22 is a reverse McCormick update of \(({F}_\fancyscript{S},D_\fancyscript{S},{\mathbb {R}}^{n_o})\).

Proof

Let \(\fancyscript{R}\in {}^*{\mathbb {M}}\! {\mathbb {R}}^{n_o}\) and \({{\fancyscript{X}}}\in {{\fancyscript{D}}}_{\fancyscript{S}}\). Finite induction immediately yields \({{\fancyscript{F}}}_{\fancyscript{S}}^{{{\mathrm{rev}}}}({{\fancyscript{X}}},\fancyscript{R})\subset {{\fancyscript{X}}}\). If there does not exist \(x\in {{\mathrm{Enc}}}({{\fancyscript{X}}})\) such that \({F}_{\fancyscript{S}}(x)\in {{\mathrm{Enc}}}(\fancyscript{R})\), then (4) holds trivially.

Let \(x\in {{\mathrm{Enc}}}({{\fancyscript{X}}})\) satisfy \({F}_{\fancyscript{S}}(x)\in {{\mathrm{Enc}}}(\fancyscript{R})\). Then, there exists a sequence of factor values \(\{v_k(x)\}_{k=1}^{n_f}\) with \(v_1(x)=x_1\), \(\ldots \), \(v_{n_i}(x)=x_{n_i}\) and \(\pi _o(v_1(x),\ldots ,v_{n_f}(x))\in {{\mathrm{Enc}}}(\fancyscript{R})\). Also, since \(\fancyscript{V}_1,\ldots ,\fancyscript{V}_{n_f}\) are relaxation functions, \((v_1(x),\ldots ,v_{n_f}(x))\in {{\mathrm{Enc}}}((\fancyscript{V}_1({{\fancyscript{X}}}),\ldots ,\fancyscript{V}_{n_f}({{\fancyscript{X}}})))\) so that \((v_1(x),\ldots ,v_{n_f}(x))\in {{\mathrm{Enc}}}((\tilde{\fancyscript{V}}_1,\ldots ,\tilde{\fancyscript{V}}_{n_f}))\) prior to entering the loop.

In the following, let \(\tilde{\fancyscript{V}}_k^l\) denote the value of \(\tilde{\fancyscript{V}}_k\) for the given \({{\fancyscript{X}}}\) and \(\fancyscript{R}\) after the \(l\)th reverse update, \(l=1,\ldots ,n_f-n_i\). Since \(o_{n_f}^{{{\mathrm{rev}}}}\) is a reverse McCormick update, it follows that \((v_1(x),\ldots ,v_{n_f-1}(x))\in {{\mathrm{Enc}}}(\tilde{\fancyscript{V}}_1^1,\ldots ,\tilde{\fancyscript{V}}_{n_f-1}^1)\). Finite induction yields that \((v_1(x),\ldots ,v_{n_i}(x))\in {{\mathrm{Enc}}}((\tilde{\fancyscript{V}}_1^{n_f-n_i},\ldots ,\tilde{\fancyscript{V}}_{n_i} ^{n_f-n_i}))\equiv {{\mathrm{Enc}}}({{\fancyscript{F}}}_{\fancyscript{S}}^{{{\mathrm{rev}}}}({{\fancyscript{X}}},\fancyscript{R}))\). Thus, \(x\in {{\mathrm{Enc}}}({{\fancyscript{F}}}_{\fancyscript{S}}^{{{\mathrm{rev}}}}({{\fancyscript{X}}},\fancyscript{R}))\) and (4) holds. \(\square \)

Lemma 2

Consider \((\fancyscript{S},\pi _o)\) and assume that for each \(k=n_i+1,\ldots ,n_f\), the reverse McCormick update of \(o_k\) is coherently concave and inclusion monotonic on \({\mathbb {M}}_\emptyset B_k\times {}^*{\mathbb {M}}\! {\mathbb {R}}\). Then, \({{\fancyscript{F}}}_{\fancyscript{S}}^{{{\mathrm{rev}}}}\) is coherently concave and inclusion monotonic on \({{\fancyscript{D}}}_{\fancyscript{S}}\times {}^*{\mathbb {M}}\! {\mathbb {R}}^{n_o}\).

Proof

Compositions of coherently concave and inclusion monotonic functions are coherently concave and inclusion monotonic [51, Lemma 2.4.15]. The result thus follows by finite induction, analogously to the proof of Theorem 7. \(\square \)

Theorem 8

Consider \((\fancyscript{S},\pi _o)\) and assume that for each \(k=n_i+1,\ldots ,n_f\), the reverse McCormick update of \(o_k\) is coherently concave and inclusion monotonic. Then, \({{\fancyscript{F}}}_{\fancyscript{S}}^{{{\mathrm{rev}}}}\) is a relaxation function of \({F}_{\fancyscript{S}}^{{{\mathrm{rev}}}}\) on \({{\fancyscript{D}}}_{\fancyscript{S}}\times {}^*{\mathbb {M}}\! {\mathbb {R}}^{n_o}\).

Proof

Let \(r\in {}^*\mathbb {R}\! ^{n_o}\). First, consider \(x\in D\) such that \({F}_{\fancyscript{S}}(x)=r\). Then \({{\fancyscript{F}}}^{{{\mathrm{rev}}}}_\fancyscript{S}\) is a McCormick extension of \({F}_{\fancyscript{S}}^{{{\mathrm{rev}}}}\) at such \(([x,x],[x,x])\), since \({{\fancyscript{F}}}_\fancyscript{S}\) is a McCormick extension of \({F}_{\fancyscript{S}}\) and, by definition, \(o_k^{{{\mathrm{rev}}}}(\fancyscript{B},\fancyscript{R})\subset \fancyscript{B}\) for all \((\fancyscript{B},\fancyscript{R})\in {\mathbb {M}}_\emptyset B_k\times {}^*{\mathbb {M}}\! {\mathbb {R}}\). If \(x\in D\) is such that \({F}_{\fancyscript{S}}(x)\ne r\), then \(\pi _o(\tilde{\fancyscript{V}}_1,\ldots ,\tilde{\fancyscript{V}}_{n_f}):= \pi _o(\tilde{\fancyscript{V}}_1,\ldots ,\tilde{\fancyscript{V}}_{n_f}) \cap ([r,r],[r,r])\) results in \(\tilde{\fancyscript{V}}_k = \emptyset \) for at least one \(k \in \{1, \ldots , n_f\}\). For each \(k \in \{1, \ldots , n_f\}\), \(\tilde{\fancyscript{V}}_k\) influences at least one \(\tilde{\fancyscript{V}}_j\) with \(j \in \{1, \ldots , n_i\}\) through a sequence of reverse McCormick updates. Any reverse McCormick update involving empty McCormick objects yields empty McCormick objects because it is a McCormick operation. Hence, once the loop is executed, \(\tilde{\fancyscript{V}}_1 \times \cdots \times \tilde{\fancyscript{V}}_{n_i} = \emptyset \), so that \({{\fancyscript{F}}}^{{{\mathrm{rev}}}}_\fancyscript{S}(([x,x],[x,x]),([r,r],[r,r]))=\emptyset \). Thus, \({{\fancyscript{F}}}^{{{\mathrm{rev}}}}_\fancyscript{S}\) is a McCormick extension of \({F}_{\fancyscript{S}}^{{{\mathrm{rev}}}}\). The assertion follows from Lemma 2 in conjunction with Theorem 3. \(\square \)

5.1 Reverse McCormick updates of binary operations

Lemma 3

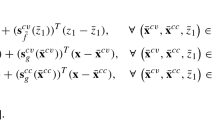

Consider \((+,{\mathbb {R}}^2,{\mathbb {R}})\) and its relaxation function \((+,{\mathbb {M\! R}}^2,{\mathbb {M\! R}})\). The function \((+^{{{\mathrm{rev}}}},{\mathbb {M}}_\emptyset {\mathbb {R}}^2\times {}^*{\mathbb {M}}\! {\mathbb {R}},{\mathbb {M}}_\emptyset {\mathbb {R}}^2)\) defined for all \({{\fancyscript{X}}},{{\fancyscript{Y}}}\in {\mathbb {M}}_\emptyset {\mathbb {R}}\) and \(\fancyscript{R}\in {}^*{\mathbb {M}}\! {\mathbb {R}}\) by

is a reverse McCormick update of \((+,{\mathbb {R}}^2,{\mathbb {R}})\).

Proof

Let \({{\fancyscript{X}}},{{\fancyscript{Y}}}\in {\mathbb {M}}_\emptyset {\mathbb {R}}\) and \(\fancyscript{R}\in {}^*{\mathbb {M}}\! {\mathbb {R}}\). If \({{\mathrm{Enc}}}(+({{\fancyscript{X}}},{{\fancyscript{Y}}})\cap \fancyscript{R})=\emptyset \), then \(\not \exists (x,y,r)\in {{\mathrm{Enc}}}(({{\fancyscript{X}}},{{\fancyscript{Y}}},\fancyscript{R})):r-y=x\). Thus, \(r-y\not \in {{\mathrm{Enc}}}({{\fancyscript{X}}})\) for all \((y,r)\in {{\mathrm{Enc}}}(({{\fancyscript{Y}}},\fancyscript{R}))\) so that \({{\mathrm{Enc}}}(\fancyscript{R}-{{\fancyscript{Y}}})\cap {{\mathrm{Enc}}}({{\fancyscript{X}}})=\emptyset \). Similarly, \({{\mathrm{Enc}}}(\fancyscript{R}-{{\fancyscript{X}}})\cap {{\mathrm{Enc}}}({{\fancyscript{Y}}})=\emptyset \) so that (4) holds trivially.

Otherwise, pick \((x,y)\in {{\mathrm{Enc}}}({{\fancyscript{X}}})\times {{\mathrm{Enc}}}({{\fancyscript{Y}}})\) so that \(x+y\in {{\mathrm{Enc}}}(\fancyscript{R})\). Since … and …, it follows that … and … and that … and …, so that \((x,y)\in {{\mathrm{Enc}}}(+^{{{\mathrm{rev}}}}(({{\fancyscript{X}}},{{\fancyscript{Y}}}),\fancyscript{R}))\). Thus, (4) holds. \(\square \)

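To make the addition update concrete, the following Python sketch encodes a McCormick object as a hypothetical pair of intervals `(bounds, relax)`: `bounds` holds the interval bounds and `relax` the convex and concave relaxation values at one fixed point, with \({{\mathrm{Enc}}}\) taken as their intersection. Because the display defining \(+^{{{\mathrm{rev}}}}\) is not reproduced above, the sketch assumes the classical reverse form \(({{\fancyscript{X}}}\cap (\fancyscript{R}-{{\fancyscript{Y}}}), {{\fancyscript{Y}}}\cap (\fancyscript{R}-{{\fancyscript{X}}}))\) suggested by the first part of the proof. This is an illustration under that assumption, not the definition in Lemma 3. The helper names (`meet`, `enc`, `mc_meet`, `mc_sub`, `rev_add`) are illustrative, and endpoints are not rounded outward.

```python
from typing import Optional, Tuple

Interval = Tuple[float, float]
OptInterval = Optional[Interval]  # None encodes the empty interval
# Hypothetical encoding of a McCormick object: (bounds, relax), where bounds is
# [lower, upper] and relax is [convex value, concave value] at a fixed point.
# Enc(X) = bounds ∩ relax; None encodes the empty McCormick object.
MC = Optional[Tuple[Interval, Interval]]


def meet(a: OptInterval, b: OptInterval) -> OptInterval:
    """Intersection of two closed intervals."""
    if a is None or b is None:
        return None
    lo, hi = max(a[0], b[0]), min(a[1], b[1])
    return (lo, hi) if lo <= hi else None


def enc(X: MC) -> OptInterval:
    """Enclosure Enc(X) = bounds ∩ relax."""
    return None if X is None else meet(X[0], X[1])


def mc_meet(X: MC, Y: MC) -> MC:
    """Componentwise intersection; empty if the resulting enclosure is empty."""
    if X is None or Y is None:
        return None
    b, c = meet(X[0], Y[0]), meet(X[1], Y[1])
    if b is None or c is None or meet(b, c) is None:
        return None
    return (b, c)


def mc_sub(R: MC, Y: MC) -> MC:
    """R - Y: interval subtraction of bounds; cv(R) - cc(Y) and cc(R) - cv(Y)."""
    if R is None or Y is None:
        return None
    (rb, rc), (yb, yc) = R, Y
    return ((rb[0] - yb[1], rb[1] - yb[0]), (rc[0] - yc[1], rc[1] - yc[0]))


def rev_add(X: MC, Y: MC, R: MC) -> Tuple[MC, MC]:
    """Assumed reverse update for x + y = r: (X ∩ (R - Y), Y ∩ (R - X))."""
    return mc_meet(X, mc_sub(R, Y)), mc_meet(Y, mc_sub(R, X))
```

For \({{\mathrm{Enc}}}({{\fancyscript{X}}})=[0.5,3]\), \({{\mathrm{Enc}}}({{\fancyscript{Y}}})=[1,3.5]\) and \({{\mathrm{Enc}}}(\fancyscript{R})=[2,3]\), encoded as `X = ((0, 4), (0.5, 3))`, `Y = ((0, 4), (1, 3.5))` and `R = ((0, 3), (2, 3))`, the sketch returns enclosures \([0.5,2]\) for \(x\) and \([1,2.5]\) for \(y\). A point such as \((x,y)=(1,2)\) with \(x+y\in {{\mathrm{Enc}}}(\fancyscript{R})\) is retained, in line with what the proof of Lemma 3 verifies.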

Let \((\varGamma ,{\mathbb {I}}_\emptyset {\mathbb {R}}\times {}^*\mathbb {I}\! {\mathbb {R}}\times {\mathbb {I}}_\emptyset {\mathbb {R}},{\mathbb {I}}_\emptyset {\mathbb {R}})\) denote the interval Gauss–Seidel operator, defined for all \({{{\varvec{x}}}},{{{\varvec{y}}}}\in {\mathbb {I}}_\emptyset {\mathbb {R}}\) and \({{{\varvec{r}}}}\in {}^*\mathbb {I}\! {\mathbb {R}}\); see [43, Proposition 4.2.1] for its description.

Definition 23

Define an extension of the Gauss–Seidel operator to \({\mathbb {M\! R}}\), denoted as \(\fancyscript{G}:{\mathbb {M}}_\emptyset {\mathbb {R}}\times {}^*{\mathbb {M}}\! {\mathbb {R}}\times {\mathbb {M}}_\emptyset {\mathbb {R}}\rightarrow {\mathbb {M}}_\emptyset {\mathbb {R}}\), for all \({{\fancyscript{X}}},{{\fancyscript{Y}}}\in {\mathbb {M}}_\emptyset {\mathbb {R}}\) and \(\fancyscript{R}\in {}^*{\mathbb {M}}\! {\mathbb {R}}\) by \((\fancyscript{G}({{\fancyscript{X}}},\fancyscript{R},{{\fancyscript{Y}}}))^B= \varGamma ({{{\varvec{x}}}}^B,{{{\varvec{r}}}}^B,{{{\varvec{y}}}}^B)\) and

where \({{\fancyscript{X}}}'=({{{\varvec{x}}}}^B,{{{\varvec{x}}}}^B\cap {{{\varvec{x}}}}^C)\), \({{\fancyscript{Y}}}'=({{{\varvec{y}}}}^B,{{{\varvec{y}}}}^B\cap {{{\varvec{y}}}}^C)\) and \(\fancyscript{R}'=({{{\varvec{r}}}}^B,{{{\varvec{r}}}}^B\cap {{{\varvec{r}}}}^C)\).

Lemma 4

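Suppose \({{\fancyscript{X}}},{{\fancyscript{Y}}}\in {\mathbb {M}}_\emptyset {\mathbb {R}}\) and \(\fancyscript{R}\in {}^*{\mathbb {M}}\! {\mathbb {R}}\). Then, \(\fancyscript{G}({{\fancyscript{X}}},\fancyscript{R},{{\fancyscript{Y}}})\subset {{\fancyscript{Y}}}\) and

\[ {{\mathrm{Enc}}}(\fancyscript{G}({{\fancyscript{X}}},\fancyscript{R},{{\fancyscript{Y}}}))\supset \{y\in {{\mathrm{Enc}}}({{\fancyscript{Y}}}):\exists x\in {{\mathrm{Enc}}}({{\fancyscript{X}}}), r\in {{\mathrm{Enc}}}(\fancyscript{R}): xy=r\}. \tag{5} \]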
Proof

\(\varGamma ({{{\varvec{x}}}}^B,{{{\varvec{r}}}}^B,{{{\varvec{y}}}}^B)\subset {{{\varvec{y}}}}^B\) follows from [43, 4.3.2], and it is also clear that \(\left( \fancyscript{G}({{\fancyscript{X}}},\fancyscript{R},{{\fancyscript{Y}}})\right) ^C\subset ({{{\varvec{y}}}}')^C\); hence, \(\fancyscript{G}({{\fancyscript{X}}},\fancyscript{R},{{\fancyscript{Y}}})\subset {{\fancyscript{Y}}}' \subset {{\fancyscript{Y}}}\). It has already been established [43, Proposition 4.2.1] that

\[ \varGamma ({{{\varvec{x}}}}^B,{{{\varvec{r}}}}^B,{{{\varvec{y}}}}^B)\supset \Box \{y\in {{{\varvec{y}}}}^B:\exists x\in {{{\varvec{x}}}}^B, r\in {{{\varvec{r}}}}^B: xy=r\}. \]
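Next, note that

\[ \{y\in {{\mathrm{Enc}}}({{\fancyscript{Y}}}):\exists x\in {{\mathrm{Enc}}}({{\fancyscript{X}}}), r\in {{\mathrm{Enc}}}(\fancyscript{R}): xy=r\}\subset \{y\in {{{\varvec{y}}}}^B:\exists x\in {{{\varvec{x}}}}^B, r\in {{{\varvec{r}}}}^B: xy=r\} \]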
since \({{\mathrm{Enc}}}({{\fancyscript{X}}})\subset {{{\varvec{x}}}}^B\), \({{\mathrm{Enc}}}({{\fancyscript{Y}}})\subset {{{\varvec{y}}}}^B\), and \({{\mathrm{Enc}}}(\fancyscript{R})\subset {{{\varvec{r}}}}^B\). Therefore, (5) holds in the second and third cases. To show that (5) holds in the first case, it is sufficient to establish \(\left( \fancyscript{G}({{\fancyscript{X}}},\fancyscript{R},{{\fancyscript{Y}}})\right) ^C\supset \Box \{y\in {{\mathrm{Enc}}}({{\fancyscript{Y}}}):\exists x\in {{\mathrm{Enc}}}({{\fancyscript{X}}}), r\in {{\mathrm{Enc}}}(\fancyscript{R}): xy=r\}\).

Suppose that \(0\notin {{{\varvec{x}}}}^B\). Consider \(y\in {{{\varvec{y}}}}^C\) such that \(\exists x\in ({{{\varvec{x}}}}')^C,r\in ({{{\varvec{r}}}}')^C\) with \(xy=r\), noting that \(x\ne 0\) since \(0\notin {{{\varvec{x}}}}^B\). If no such \(y\) exists, then \(\{y\in {{\mathrm{Enc}}}({{\fancyscript{Y}}}):\exists x\in {{\mathrm{Enc}}}({{\fancyscript{X}}}), r\in {{\mathrm{Enc}}}(\fancyscript{R}): xy=r\}=\emptyset \) and (5) holds trivially. If such \(y\) exists, then \(y=r\times \frac{1}{x}\). Moreover, \(\frac{1}{{{\fancyscript{X}}}'}\) exists and \(\frac{1}{x}\in {{\mathrm{Enc}}}(\frac{1}{{{\fancyscript{X}}}'})\). Since also \(r\in {{\mathrm{Enc}}}(\fancyscript{R}')\), it follows that \(r\times \frac{1}{x}\in (\fancyscript{R}'\times \frac{1}{{{\fancyscript{X}}}'})^C\), and hence \(\left( \fancyscript{G}({{\fancyscript{X}}},\fancyscript{R},{{\fancyscript{Y}}})\right) ^C\supset \{y\in {{\mathrm{Enc}}}({{\fancyscript{Y}}}):\exists x\in {{\mathrm{Enc}}}({{\fancyscript{X}}}), r\in {{\mathrm{Enc}}}(\fancyscript{R}): xy=r\}\). \(\square \)

Lemma 5

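Consider \((\times ,{\mathbb {R}}^2,{\mathbb {R}})\) and its relaxation function \((\times ,{\mathbb {M\! R}}^2,{\mathbb {M\! R}})\). The function \((\times ^{{{\mathrm{rev}}}},{\mathbb {M}}_\emptyset {\mathbb {R}}^2\times {}^*{\mathbb {M}}\! {\mathbb {R}},{\mathbb {M}}_\emptyset {\mathbb {R}}^2)\) defined for all \({{\fancyscript{X}}},{{\fancyscript{Y}}}\in {\mathbb {M}}_\emptyset {\mathbb {R}}\) and \(\fancyscript{R}\in {}^*{\mathbb {M}}\! {\mathbb {R}}\) by

\[ \times ^{{{\mathrm{rev}}}}(({{\fancyscript{X}}},{{\fancyscript{Y}}}),\fancyscript{R}) = \left( \fancyscript{G}({{\fancyscript{Y}}},\fancyscript{R},{{\fancyscript{X}}}),\fancyscript{G}(\fancyscript{G}({{\fancyscript{Y}}},\fancyscript{R},{{\fancyscript{X}}}), \fancyscript{R},{{\fancyscript{Y}}})\right) \]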
is a reverse McCormick update of \((\times ,{\mathbb {R}}^2,{\mathbb {R}})\).

Proof

Let \({{\fancyscript{X}}},{{\fancyscript{Y}}}\in {\mathbb {M}}_\emptyset {\mathbb {R}}\), \(\fancyscript{R}\in {}^*{\mathbb {M}}\! {\mathbb {R}}\). If \(\times ({{\fancyscript{X}}},{{\fancyscript{Y}}})\cap \fancyscript{R}=\emptyset \), there does not exist \(x\in {{\mathrm{Enc}}}({{\fancyscript{X}}})\), \(y\in {{\mathrm{Enc}}}({{\fancyscript{Y}}})\) so that \(xy\in {{\mathrm{Enc}}}(\fancyscript{R})\). Thus, (4) holds trivially. Otherwise, pick \((x,y)\in {{\mathrm{Enc}}}({{\fancyscript{X}}})\times {{\mathrm{Enc}}}({{\fancyscript{Y}}})\) so that \(xy\in {{\mathrm{Enc}}}(\fancyscript{R})\). By Lemma 4, \({{\mathrm{Enc}}}(\fancyscript{G}({{\fancyscript{Y}}},\fancyscript{R},{{\fancyscript{X}}}))\supset \{\tilde{x}\in {{\mathrm{Enc}}}({{\fancyscript{X}}}):\exists \tilde{y}\in {{\mathrm{Enc}}}({{\fancyscript{Y}}}),z\in {{\mathrm{Enc}}}(\fancyscript{R}):\tilde{x}\tilde{y}=z\}\), and hence \(x\in {{\mathrm{Enc}}}(\fancyscript{G}({{\fancyscript{Y}}},\fancyscript{R},{{\fancyscript{X}}}))\). Likewise, \(\{\tilde{y}\in {{\mathrm{Enc}}}({{\fancyscript{Y}}}):\exists \tilde{x}\in {{\mathrm{Enc}}}(\fancyscript{G}({{\fancyscript{Y}}},\fancyscript{R},{{\fancyscript{X}}})),z\in {{\mathrm{Enc}}}(\fancyscript{R}):\tilde{x}\tilde{y}=z\}\subset {{\mathrm{Enc}}}(\fancyscript{G}(\fancyscript{G}({{\fancyscript{Y}}},\fancyscript{R},{{\fancyscript{X}}}), \fancyscript{R},{{\fancyscript{Y}}}))\), hence \(y\in {{\mathrm{Enc}}}(\fancyscript{G}(\fancyscript{G}({{\fancyscript{Y}}},\fancyscript{R},{{\fancyscript{X}}}), \fancyscript{R},{{\fancyscript{Y}}}))\). Thus, \((x,y)\in {{\mathrm{Enc}}}(\times ^{{{\mathrm{rev}}}}(({{\fancyscript{X}}},{{\fancyscript{Y}}}),\fancyscript{R}))\) and (4) holds. \(\square \)

Note that \(\times ^{{{\mathrm{rev}}}}(({{\fancyscript{X}}},{{\fancyscript{Y}}}),\fancyscript{R}) = \left( \fancyscript{G}(\fancyscript{G}({{\fancyscript{X}}},\fancyscript{R},{{\fancyscript{Y}}}), \fancyscript{R},{{\fancyscript{X}}}),\fancyscript{G}({{\fancyscript{X}}},\fancyscript{R}, {{\fancyscript{Y}}})\right) \) is an alternative reverse McCormick update of \((\times ,{\mathbb {R}}^2,{\mathbb {R}})\).
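Lemma 5 composes two applications of \(\fancyscript{G}\): the first contracts the first factor using the second, and the second contracts the second factor using the already contracted first one. The Python sketch below mirrors that composition on plain intervals, that is, for the bound component \(\varGamma\) only; the convex component of \(\fancyscript{G}\) is omitted. The function `gauss_seidel` computes, in one standard way, the hull of \(\{y\in {{{\varvec{y}}}}: xy=r \text{ for some } x\in {{{\varvec{x}}}}, r\in {{{\varvec{r}}}}\}\), including extended division when \(0\in {{{\varvec{x}}}}\). Its case split is an assumption of this sketch rather than a transcription of [43], and the names are illustrative. Endpoints are not rounded outward, so the result is not a rigorous enclosure.

```python
import math
from typing import Optional, Tuple

Interval = Tuple[float, float]
OptInterval = Optional[Interval]  # None encodes the empty interval


def meet(a: OptInterval, b: OptInterval) -> OptInterval:
    """Intersection of two closed intervals."""
    if a is None or b is None:
        return None
    lo, hi = max(a[0], b[0]), min(a[1], b[1])
    return (lo, hi) if lo <= hi else None


def hull(a: OptInterval, b: OptInterval) -> OptInterval:
    """Smallest interval containing both arguments."""
    if a is None:
        return b
    if b is None:
        return a
    return (min(a[0], b[0]), max(a[1], b[1]))


def mul(a: Interval, b: Interval) -> Interval:
    """Interval product (finite endpoints assumed)."""
    p = (a[0] * b[0], a[0] * b[1], a[1] * b[0], a[1] * b[1])
    return (min(p), max(p))


def gauss_seidel(x: OptInterval, r: OptInterval, y: OptInterval) -> OptInterval:
    """Hull of {v in y : u * v = w for some u in x, w in r}."""
    if x is None or r is None or y is None:
        return None
    xl, xh = x
    rl, rh = r
    if xl > 0 or xh < 0:            # 0 not in x: ordinary division r / x
        return meet(y, mul(r, (1.0 / xh, 1.0 / xl)))
    if rl <= 0 <= rh:               # u = w = 0 is feasible: no contraction
        return y
    pieces = []                     # 0 in x, 0 not in r: extended division
    if rl > 0:
        if xh > 0:
            pieces.append((rl / xh, math.inf))
        if xl < 0:
            pieces.append((-math.inf, rl / xl))
    else:                           # rh < 0
        if xh > 0:
            pieces.append((-math.inf, rh / xh))
        if xl < 0:
            pieces.append((rh / xl, math.inf))
    result = None
    for piece in pieces:
        result = hull(result, meet(y, piece))
    return result


def rev_mul(x: OptInterval, y: OptInterval,
            r: OptInterval) -> Tuple[OptInterval, OptInterval]:
    """Interval analogue of the composition in Lemma 5: contract x, then y."""
    x_new = gauss_seidel(y, r, x)
    y_new = gauss_seidel(x_new, r, y)
    return x_new, y_new
```

For \({{{\varvec{x}}}}=[-1,3]\), \({{{\varvec{y}}}}=[2,4]\) and \({{{\varvec{r}}}}=[4,8]\), `rev_mul` contracts \({{{\varvec{x}}}}\) to \([1,3]\) and leaves \({{{\varvec{y}}}}\) unchanged. Swapping the roles of the two factors corresponds to the alternative update noted above and may contract differently.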

5.2 Reverse McCormick updates of univariate functions

Lemma 6

Let \(B\subset {\mathbb {R}}\) and consider an injective continuous function \((u,B,{\mathbb {R}})\in {{\fancyscript{L}}}\). Furthermore, assume that \((u^{-1},\mathrm{range}(u,B),{\mathbb {R}})\in {{\fancyscript{L}}}\) where \(\mathrm{range}(u,B)\) refers to the image of \(B\) under the real-valued function \(u\). The function \((u^{{{\mathrm{rev}}}},{\mathbb {M}}_\emptyset B\times {}^*{\mathbb {M}}\! {\mathbb {R}},{\mathbb {M}}_\emptyset {\mathbb {R}})\) defined for all \({{\fancyscript{X}}}\in {\mathbb {M}}_\emptyset B\) and \(\fancyscript{R}\in {}^*{\mathbb {M}}\! {\mathbb {R}}\) by

where \(\fancyscript{T}=({{{\varvec{r}}}}^B\cap u({{{\varvec{x}}}}^B),{{\mathrm{Enc}}}(\fancyscript{R}\cap u({{\fancyscript{X}}})))\) is a reverse McCormick update of \((u,B,{\mathbb {R}})\).

Proof

Let \({{\fancyscript{X}}}\in {\mathbb {M}}_\emptyset B\) and \(\fancyscript{R}\in {}^*{\mathbb {M}}\! {\mathbb {R}}\). Suppose that \({{\mathrm{Enc}}}(\fancyscript{T})=\emptyset \). Since \((u,{\mathbb {M}}_\emptyset B,{\mathbb {M\! R}})\) is a relaxation function, there does not exist an \(x\in {{\mathrm{Enc}}}({{\fancyscript{X}}})\) such that \(u(x)\in {{\mathrm{Enc}}}(\fancyscript{R})\). Otherwise, since \((u,B,{\mathbb {R}})\) is continuous and injective, it is invertible on \(\mathrm{range}(u,B)\) and \(u^{-1}\) is continuous [46, Thm. 4.17]. Let \(x\in {{\mathrm{Enc}}}({{\fancyscript{X}}})\) with \(u(x)\in {{\mathrm{Enc}}}(\fancyscript{R})\). Because \(u\) has a relaxation function, \(u(x)\in {{\mathrm{Enc}}}(u({{\fancyscript{X}}}))\), and hence, by the definition of \(\fancyscript{T}\), \(u(x)\in {{\mathrm{Enc}}}(\fancyscript{T})\). Since \((u^{-1},\mathrm{range}(u,B),{\mathbb {R}})\in {{\fancyscript{L}}}\), this implies \(x=u^{-1}(u(x))\in {{\mathrm{Enc}}}(u^{-1}(\fancyscript{T}))\). \(\square \)

Remark 3

Lemma 6 can be used to define the reverse McCormick updates of \(-(\cdot )\), \((\cdot )^n\) for odd \(n\in \mathbb {N}\), \(\exp \), \(\log \), \(\sqrt{\cdot }\), etc. It is also applicable to \(\frac{1}{(\cdot )}\) if \(B\) is restricted to either the negative or the positive reals.
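For intuition, the following Python sketch applies the idea behind Lemma 6 to the bound component only. For a continuous, strictly monotone \(u\) with known inverse, it forms \({{{\varvec{t}}}}={{{\varvec{r}}}}\cap u({{{\varvec{x}}}})\) and returns \({{{\varvec{x}}}}\cap u^{-1}({{{\varvec{t}}}})\). This interval form is an assumption made for illustration, since the displayed definition of \(u^{{{\mathrm{rev}}}}\) is not reproduced above; `image` and `rev_injective` are hypothetical helper names, and no outward rounding is performed. The usage covers \(\exp\)/\(\log\) and \(-(\cdot )\), two of the cases listed in Remark 3.

```python
import math
from typing import Callable, Optional, Tuple

Interval = Tuple[float, float]
OptInterval = Optional[Interval]  # None encodes the empty interval


def meet(a: OptInterval, b: OptInterval) -> OptInterval:
    """Intersection of two closed intervals."""
    if a is None or b is None:
        return None
    lo, hi = max(a[0], b[0]), min(a[1], b[1])
    return (lo, hi) if lo <= hi else None


def image(f: Callable[[float], float], a: Interval) -> Interval:
    """Image of [a0, a1] under a continuous, strictly monotone f."""
    p, q = f(a[0]), f(a[1])
    return (min(p, q), max(p, q))  # works for increasing and decreasing f


def rev_injective(u: Callable[[float], float], u_inv: Callable[[float], float],
                  x: OptInterval, r: OptInterval) -> OptInterval:
    """Assumed interval update: x ∩ u^{-1}(t) with t = r ∩ u(x)."""
    if x is None:
        return None
    t = meet(r, image(u, x))
    if t is None:
        return None  # no v in x with u(v) in r
    return meet(x, image(u_inv, t))


# exp on [-10, 10] with exp(v) in [1, e] contracts v to [0, 1]
print(rev_injective(math.exp, math.log, (-10.0, 10.0), (1.0, math.e)))
```

Because a continuous injective function on an interval is strictly monotone, taking the minimum and maximum of the endpoint images suffices in `image`; for a decreasing \(u\) such as \(-(\cdot )\) the endpoints simply swap.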

Lemma 7

Let \(n\in \mathbb {N}\) be even. Consider \((u,{\mathbb {R}},{\mathbb {R}})\in {{\fancyscript{L}}}\) where \(u(x)=x^n\) and assume that \((\root n \of {\cdot },[0,+\infty ),{\mathbb {R}})\in {{\fancyscript{L}}}\). The function \((u^{{{\mathrm{rev}}}},{\mathbb {M}}_\emptyset {\mathbb {R}}\times {}^*{\mathbb {M}}\! {\mathbb {R}},{\mathbb {M}}_\emptyset {\mathbb {R}})\) defined for all \({{\fancyscript{X}}}\in {\mathbb {M}}_\emptyset {\mathbb {R}}\) and \(\fancyscript{R}\in {}^*{\mathbb {M}}\! {\mathbb {R}}\) by

where \(\fancyscript{T}=({{{\varvec{t}}}}^B, {{{\varvec{t}}}}^C) = ({{{\varvec{r}}}}^B\cap u({{{\varvec{x}}}}^B)\cap [0,+\infty ),{{\mathrm{Enc}}}(\fancyscript{R})\cap {{\mathrm{Enc}}}(u({{\fancyscript{X}}}))\cap [0,+\infty ))\) and \(u^{{{\mathrm{rev}}}}({{{\varvec{x}}}}^B,{{{\varvec{t}}}}^B)\) denotes the reverse interval update of the operation, is a reverse McCormick update of \((u,{\mathbb {R}},{\mathbb {R}})\).

Proof

Let \({{\fancyscript{X}}}\in {\mathbb {M}}_\emptyset {\mathbb {R}}\) and \(\fancyscript{R}\in {}^*{\mathbb {M}}\! {\mathbb {R}}\). Suppose that \({{{\varvec{r}}}}^B\cap u({{{\varvec{x}}}}^B)=\emptyset \). Since \((u,{\mathbb {I}}_\emptyset {\mathbb {R}},{\mathbb {I\! R}})\) is an inclusion function, there does not exist an \(x\in {{{\varvec{x}}}}^B\) such that \(u(x)\in {{{\varvec{r}}}}^B\).

In the following, assume that \({{{\varvec{r}}}}^B\cap u({{{\varvec{x}}}}^B)\ne \emptyset \). Note that intersecting \(\fancyscript{R}\) with the non-negative half-line only serves to ensure that no domain violation occurs. Let \(\tilde{x}\in {{\mathrm{Enc}}}({{\fancyscript{X}}})\) be such that \(u(\tilde{x})\in {{\mathrm{Enc}}}(\fancyscript{R})\). If \(\underline{x}\ge 0\), then \(\tilde{x}\ge 0\). By definition of the relaxation function of \(\root n \of {\cdot }\), it follows that \(\{x\in {\mathbb {R}}:x\ge 0 \wedge u(x)\in {{\mathrm{Enc}}}(\fancyscript{R})\} \subset {{\mathrm{Enc}}}(\root n \of {\fancyscript{T}})\). Similarly, if \(\overline{x}\le 0\), then \(\tilde{x}\le 0\). Since \(u(-\tilde{x})=u(\tilde{x})\ge 0\) and \(u(-\tilde{x})\in {{\mathrm{Enc}}}(\fancyscript{R})\), it holds that \(u(-\tilde{x})\in {{\mathrm{Enc}}}(\fancyscript{T})\), so that \(\tilde{x}=-(-\tilde{x})=-\root n \of {u(-\tilde{x})}\in {{\mathrm{Enc}}}(-\root n \of {\fancyscript{T}})\). Hence, \(\{x\in {\mathbb {R}}:x\le 0 \wedge u(x)\in {{\mathrm{Enc}}}(\fancyscript{R})\} \subset {{\mathrm{Enc}}}(-\root n \of {\fancyscript{T}})\). Otherwise, that is, if \(\underline{x}<0<\overline{x}\), it is easy to see that

Intersecting with the reverse interval update does not discard any \(\tilde{x}\) for which \(u(\tilde{x})\in {{\mathrm{Enc}}}(\fancyscript{R})\) holds. \(\square \)
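The reverse interval update \(u^{{{\mathrm{rev}}}}({{{\varvec{x}}}}^B,{{{\varvec{t}}}}^B)\) used in Lemma 7 is not spelled out in this section. The Python sketch below shows one common form of it for even \(n\), under that assumption. It intersects \({{{\varvec{r}}}}\) with \(u({{{\varvec{x}}}})\) and \([0,+\infty )\) (mirroring \({{{\varvec{t}}}}^B\)), takes the \(n\)-th root, and returns the hull of the parts of \({{{\varvec{x}}}}\) lying in the non-negative and non-positive preimages. It acts on plain intervals only, does not round outward, and the names `even_pow` and `rev_even_pow` are illustrative.

```python
import math
from typing import Optional, Tuple

Interval = Tuple[float, float]
OptInterval = Optional[Interval]  # None encodes the empty interval


def meet(a: OptInterval, b: OptInterval) -> OptInterval:
    """Intersection of two closed intervals."""
    if a is None or b is None:
        return None
    lo, hi = max(a[0], b[0]), min(a[1], b[1])
    return (lo, hi) if lo <= hi else None


def hull(a: OptInterval, b: OptInterval) -> OptInterval:
    """Smallest interval containing both arguments."""
    if a is None:
        return b
    if b is None:
        return a
    return (min(a[0], b[0]), max(a[1], b[1]))


def even_pow(x: Interval, n: int) -> Interval:
    """Interval extension of v -> v**n for even n."""
    p, q = abs(x[0]) ** n, abs(x[1]) ** n
    if x[0] <= 0 <= x[1]:
        return (0.0, max(p, q))
    return (min(p, q), max(p, q))


def rev_even_pow(x: OptInterval, r: OptInterval, n: int) -> OptInterval:
    """Contract x subject to x**n in r for a positive even n."""
    if n <= 0 or n % 2 != 0:
        raise ValueError("n must be a positive even integer")
    if x is None or r is None:
        return None
    # analogue of t^B: r ∩ u(x) ∩ [0, +inf), which avoids domain violations
    t = meet(meet(r, even_pow(x, n)), (0.0, math.inf))
    if t is None:
        return None
    root = (t[0] ** (1.0 / n), t[1] ** (1.0 / n))  # n-th root is increasing
    positive = meet(x, root)                       # solutions with v >= 0
    negative = meet(x, (-root[1], -root[0]))       # solutions with v <= 0
    return hull(positive, negative)
```

With \({{{\varvec{x}}}}=[-1,3]\) and \({{{\varvec{r}}}}=[4,9]\) for \(n=2\), the negative branch is empty and the sketch returns \([2,3]\); with \({{{\varvec{x}}}}=[-3,3]\) both branches survive and the hull gives back \([-3,3]\), which illustrates why the three-case construction of Lemma 7 can be tighter than a single interval hull.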