Abstract

In this paper, we propose a smoothing augmented Lagrangian method for finding a stationary point of a nonsmooth and nonconvex optimization problem. We show that any accumulation point of the iteration sequence generated by the algorithm is a stationary point provided that the penalty parameters are bounded. Furthermore, we show that a weak version of the generalized Mangasarian Fromovitz constraint qualification (GMFCQ) at the accumulation point is a sufficient condition for the boundedness of the penalty parameters. Since the weak GMFCQ may be strictly weaker than the GMFCQ, our algorithm is applicable for an optimization problem for which the GMFCQ does not hold. Numerical experiments show that the algorithm is efficient for finding stationary points of general nonsmooth and nonconvex optimization problems, including the bilevel program which will never satisfy the GMFCQ.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In this paper, we consider a constrained optimization problem with inequality and equality constraints:

Unless otherwise specified, we assume that the objective function and constraint functions \(f, g_i( i=1,\ldots ,p), h_j( j=p+1,\ldots ,q):\mathbb {R}^n\rightarrow \mathbb {R}\) are Lipschitz around the point of interest.

The quadratic penalty method for problem (P) when all functions are smooth is an inexact penalty method which locates stationary points of a sequence of smooth penalized problems:

and takes \(c\uparrow \infty \) to find the stationary point of the original constrained problem. However, when \(c\) is large, the penalized problems may become ill-conditioned and very difficult to solve. The augmented Lagrangian method (see e.g. [21]), which is also known as the method of multipliers, reduces the possibility of ill-conditioning by adding the estimates of Lagrange multipliers into the penalty function. This method is also the basis for some high quality software such as ALGENCAN [1] and LANCELOT [35]. The augmented Lagrangian method was first proposed by Hestenes [29] and Powell [41] for equality constrained problems. Bertsekas [6] and Rockafellar [43, 44] extended the method to inequality constrained convex optimization problems and to nonconvex optimization problems respectively. The Powell–Hestenes–Rockafellar (PHR) augmented Lagrangian function [29, 41, 44] (see [8] for a comparison with other augmented Lagrangian functions) takes the form:

which is a sum of the standard Lagrangian function and the quadratic penalty function. Even when the functions \(f,g\) and \(h\) are twice continuously differentiable, the PHR augmented Lagrangian function is not twice continuously differentiable. To guarantee twice continuous differentiability, an exponential-type augmented Lagrangian function was proposed in [34, 40, 48]. The augmented Lagrangian functions were also used as merit functions for the sequential quadratic programming (SQP) methods [10, 11, 13, 26]. Other related researches for augmented Lagrangian methods may be found in [21, 27, 28, 30].

The boundedness of the penalty parameters is a basic requirement for the convergence result in most exact penalty methods for constrained optimization, including the augmented Lagrangian method. Rockafellar [43, 44] showed that the augmented Lagrangian method is an exact penalty method. However, to ensure the boundedness of the penalty parameters, it has been common to make assumptions that the Linear Independence Constraint Qualification (LICQ) holds at all feasible and infeasible accumulation points of the iteration sequence (see e.g. [22]). In fact, the MFCQ holding at all feasible and infeasible accumulation points of the iteration sequence is sufficient to ensure the boundedness of the penalty parameters (see e.g. [50]). The MFCQ is a strong condition since many problems such as the mathematical program with equilibrium constraints (MPEC), will never satisfy the MFCQ. Recently some papers [2, 3, 9] studied the boundedness of the penalty parameters for the augmented Lagrangian algorithm under the Constant Positive Linear Dependence (CPLD) constraint qualification, the MFCQ and the LICQ. The CPLD condition was proposed by Qi and Wei [42] and proved to be a constraint qualification by Andreani et al. [4]. Although the CPLD condition is weaker than the MFCQ, it is still not reasonable to expect the CPLD condition to hold for general MPECs [31].

In the case where all functions except one constraint function are smooth, Xu and Ye [50] used a family of smoothing functions with gradient consistency properties to approximate the nonsmooth function, applied the augmented Lagrangian method to the smoothing problems and updated the smoothing parameter. The boundedness of the penalty parameters and hence the global convergence of the algorithm was shown under the assumptions that the nonsmooth version of the MFCQ called the generalized MFCQ (GMFCQ) holds at all feasible and infeasible accumulation points. Unfortunately GMFCQ never holds for bilevel programs (see [53]) which was our main motivation to study an augmented Lagrange algorithm for nonsmooth and nonconvex problems. It was observed that under the calmness condition [50], the sequence of multipliers is very likely to be bounded and hence the smoothing augmented Lagrangian algorithm is efficient for searching a stationary point of bilevel program. However up to now, there is no proof that the calmness condition would guarantee the boundedness of the penalty parameters. To cope with this difficulty, recently Xu et al. [51] proposed a weaker version of the GMFCQ called the weak GMFCQ (WGMFCQ). It was shown in [51] that although the GMFCQ will never hold for the bilevel program, the weaker version of the GMFCQ may hold for bilevel programs.

In this paper, we extend the smoothing augmented Lagrange algorithm to the general nonsmooth and nonconvex problem (P). We show that if either the exact penalty sequence is bounded or if all feasible or infeasible accumulation points of the iteration sequence generated by the algorithm satisfy the WGMFCQ, then any accumulation point is a stationary point of the original problem (P). We apply the smoothing augmented Lagrangian method to the bilevel program and verify that either the exact penalty sequence is bounded or the WGMFCQ holds for all bilevel programs in this paper.

The rest of the paper is organized as follows. In Sect. 2, we present a summary of constraint qualifications that will guarantee the global convergence of the algorithm. In Sect. 3, we propose a smoothing augmented Lagrangian algorithm for locating a stationary point of a general nonsmooth and nonconvex optimization problem (P) and establish a convergence result for the algorithm. In Sect. 4, we report our numerical experiments for some general nonsmooth and nonconvex constrained optimization problems as well as some bilevel programs.

We adopt the following standard notation in this paper. For any two vectors \(a\) and \(b \) in \(\mathbb {R}^n\), we denote by \(a^T b\) their inner product. Given a function \(G: \mathbb {R}^n\rightarrow \mathbb {R}^m\), we denote its Jacobian by \(\nabla G(z)\in \mathbb {R}^{m\times n}\) and, if \(m=1\), the gradient \(\nabla G(z)\in \mathbb {R}^n\) is considered as a column vector. For a matrix \(A\in \mathbb {R}^{n\times m}, A^T\) denotes its transpose. In addition, we let \(\mathbf {N}\) be the set of nonnegative integers and \(\exp [z]\) be the exponential function.

2 Constraint qualifications

The focus of this section is on constraint qualifications. Let \(\varphi : \mathbb {R}^n\rightarrow \mathbb {R}\) be Lipschitz continuous near \(\bar{x}\). We denote the Clarke generalized gradient of \(\varphi \) at \(\bar{x}\) by \(\partial \varphi (\bar{x})\). Definition of the Clarke generalized gradient and its properties can be found in [19, 20].

From now on for \(\bar{x}\), a feasible solution of problem \((P)\), we denote by \(I(\bar{x}):=\{i=1,\ldots ,p:g_i(\bar{x})=0\}\) the active set at \(\bar{x}\).

Definition 2.1

(Stationary point) We call a feasible point \(\bar{x}\) of problem \(\mathrm{(P)}\) a stationary point if there exists a (normal) multiplier \(\lambda \in \mathbb {R}^q\) such that

The above stationary condition is a Karush–Kuhn–Tucker (KKT) type necessary optimality condition. It holds at a locally optimal solution only under certain constraint qualifications. The Fritz John type necessary optimality condition, however, holds without constraint qualification but has a multiplier attached to the objective function. When the multiplier corresponding to the objective function in the Fritz John type necessary optimality condition vanishes, a multiplier is called an abnormal multiplier (see Clarke [19, p. 235]). The Fritz John type necessary optimality condition is equivalent to saying that at an optimal solution, either there exists a normal multiplier or there is a nonzero abnormal multiplier.Hence the nonexistence of a nonzero abnormal multiplier would imply the existence of a normal multiplier. Therefore the following commonly used constraint qualification, under which any locally optimal solution of problem (P) is a stationary point, follows naturally from the Fritz John type necessary optimality condition [19, Theorem 6.1.1].

Definition 2.2

(NNAMCQ) We say that the no nonzero abnormal multiplier constraint qualification \((\mathrm{NNAMCQ})\) holds at a feasible point \(\bar{x}\) of problem \(( P)\) if

When condition (2.1) holds, we say that the vectors

where \(v_i\in \partial g_i(\bar{x}) (i \in I(\bar{x})), v_j\in \partial h_j(\bar{x}) (j=p+1,\ldots , q)\) are positively linearly independent. Jourani [32] showed that the NNAMCQ is equivalent to the GMFCQ to be defined as follows.

Definition 2.3

(GMFCQ) A feasible point \(\bar{x}\) is said to satisfy the generalized Mangasarian-Fromovitz constraint qualification \(\mathrm{(GMFCQ)}\) for problem \(( P)\) if

-

(i)

\(v_{p+1},\ldots ,v_q\) are linearly independent, where \(v_j\in \partial h_j(\bar{x}), j=p+1,\ldots ,q\);

-

(ii)

there exists a direction \(d\in \mathbb {R}^n\) such that

$$\begin{aligned}&v_i^T d<0, \quad \forall v_i\in \partial g_i(\bar{x}), \quad i \in I(\bar{x}),\\&v_j^T d=0, \quad \forall v_j\in \partial h_j(\bar{x}) , \quad j=p+1,\ldots ,q. \end{aligned}$$

Although the NNAMCQ and the GMFCQ are equivalent, it is some times easier to verify the NNAMCQ than the GMFCQ since verifying the NNAMCQ amounts to verifying the positive linear independence of some vectors. In particular when there is no inequality constraints or there are only two constraints, the positive linear independence is reduced to the linear independence. We will explain this point using the examples in Sect. 4.

In order to accommodate infeasible accumulation points in the numerical algorithm, we now extend the NNAMCQ and the GMFCQ to infeasible points. Note that when \(\bar{x}\) is feasible, ENNAMCQ and EGMFCQ reduce to NNAMCQ and GMFCQ respectively.

Definition 2.4

(ENNAMCQ) We say that the extended no nonzero abnormal multiplier constraint qualification \((\mathrm{ENNAMCQ})\) holds at \( \bar{x} \in \mathbb {R}^n\) if

imply that \( \lambda _i=0,\lambda _j=0\).

Definition 2.5

(EGMFCQ) A point \(\bar{x}\in \mathbb {R}^n\) is said to satisfy the extended generalized Mangasarian Fromovitz constraint qualification (EGMFCQ) for problem (P) if

-

(i)

\(v_{p+1},\ldots ,v_q\) are linearly independent, where \(v_j\in \partial h_j(\bar{x}), j=p+1,\ldots ,q\),

-

(ii)

there exists a direction \(d\) such that

$$\begin{aligned}&g_i(\bar{x})+v_i^T d<0,\ \quad \forall v_i\in \partial g_i(\bar{x}), \quad i=1,\ldots ,p,\\&h_j(\bar{x})+v_j^T d=0,\ \quad \forall v_j\in \partial h_j(\bar{x}), \,\, j=p+1,\ldots ,q. \end{aligned}$$

Since the ENNAMCQ and the EGMFCQ may be too strong for some problems to hold, in [51] we have proposed two weaker constraint qualifications. These two new conditions are defined for the nonsmooth problem (P) relatively with smoothing functions as to be defined next.

Definition 2.6

Let \(g: \mathbb {R}^n\rightarrow R\) be a locally Lipschitz function. Assume that, for a given \(\rho >0, g_{\rho }: \mathbb {R}^n\rightarrow R\) is a continuously differentiable function. We say that \(\{g_{\rho }: \rho >0\}\) is a family of smoothing functions of \(g\) if \(\lim _{z\rightarrow x,\ \rho \uparrow \infty }g_{\rho }(z)=g(x)\) for any fixed \(x\in \mathbb {R}^n\).

In order to guarantee the convergence to a stationary point, the smoothing function is required to have the following property.

Definition 2.7

[18] We say that a family of smoothing functions \(\{g_\rho :\rho >0\}\) satisfies the gradient consistency property if \( \limsup _{z\rightarrow x,\, \rho \uparrow \infty } \nabla g_{\rho }(z)\) is nonempty and \( \limsup _{z\rightarrow x,\, \rho \uparrow \infty } \nabla g_{\rho }(z)\subseteq \partial g(x)\) for any \(x\in \mathbb {R}^n\), where \( \limsup _{z\rightarrow x,\, \rho \uparrow \infty } \nabla g_{\rho }(z)\) denotes the set of all limiting points

Rockafellar and Wets [45, Example 7.19 and Theorem 9.67] showed that for any locally Lipschitz function \(g\), one can always find a family of smoothing functions of \(g\) with the gradient consistency property by the integral convolution:

where \(\phi _{\rho }:\mathbb {R}^n\rightarrow \mathbb {R}_+\) is a sequence of bounded, measurable functions with \(\int _{\mathbb {R}^n} \phi _{\rho }(x)dx=1\) such that the sets \(B_{\rho }=\{x:\phi _{\rho }(x)>0\}\) form a bounded sequence converging to \(\{0\}\) as \(\rho \uparrow \infty \). What is more, there are many other smoothing functions with the gradient consistency property which are not generated by the integral-convolution with bounded supports. The reader is referred to [12, 14, 16, 17] for more details.

Using the smoothing technique, one approximates the locally Lipschitz functions \(f(x), g_i(x), i=1,\ldots ,p\) and \(h_j(x), j=p+1,\ldots ,q\) by families of smoothing functions \(\{f_{\rho }(x):\rho >0\}, \{g^i_{\rho }(x):\rho >0\}, i=1,\ldots ,p\) and \(\{h^j_{\rho }(x):\rho >0\}, j=p+1,\ldots ,q\) which satisfy the gradient consistency property. Based on the sequence of iteration points generated by the smoothing SQP algorithm, in [51] we defined the new conditions as follows:

Definition 2.8

(WNNAMCQ) Let \(\{x_k \}\) be a sequence of iteration points for problem \(( P)\) and \(\rho _k\uparrow \infty \) as \(k\rightarrow \infty \). Suppose that \(\bar{x}\) is a feasible accumulation point of the sequence \(\{x_k \}\). We say that the weakly no nonzero abnormal multiplier constraint qualification \((\mathrm{WNNAMCQ})\) based on the smoothing functions \(\{g^i_{\rho }(x):\rho >0\}, i=1,\ldots ,p, \{h^j_{\rho }(x):\rho >0\}, j=p+1,\ldots ,q\) holds at \(\bar{x}\) provided that

for any \(K_0\subseteq K \subseteq \mathbf N\) such that \( \lim _{k\rightarrow \infty , k\in K} x_k=\bar{x}\) and

Definition 2.9

(WGMFCQ) Let \(\{x_k \}\) be a sequence of iteration points for problem \(( P)\) and \(\rho _k\uparrow \infty \) as \(k\rightarrow \infty \). Let \(\bar{x}\) be a feasible accumulation point of the sequence \(\{x_k \}\). We say that the weakly generalized Mangasarian Fromovitz constraint qualification (WGMFCQ) based on the smoothing functions \(\{g^i_{\rho }(x):\rho >0\}, i=1,\ldots ,p, \{h^j_{\rho }(x):\rho >0\}, j=p+1,\ldots ,q\) holds at \(\bar{x}\) provided the following conditions hold. For any \(K_0\subseteq K \subseteq \mathbf N\) such that \( \lim _{k\rightarrow \infty , k\in K} x_k=\bar{x}\) and any

-

(i)

\(v_{p+1},\ldots ,v_q\) are linearly independent;

-

(ii)

there exists a direction \(d\) such that

$$\begin{aligned}&v_i^T d<0,\quad \mathrm{for}\ \mathrm{all } \ i \in I(\bar{x}),\\&v_j^T d=0,\quad \mathrm{for}\ \mathrm{all } \ j=p+1,\ldots ,q. \end{aligned}$$

The WNNAMCQ and the WGMFCQ can be extended to infeasible points [51].

Definition 2.10

(EWNNAMCQ) Let \(\{x_k \}\) be a sequence of iteration points for problem \(( P)\) and \(\rho _k\uparrow \infty \) as \(k\rightarrow \infty \). Let \(\bar{x}\) be a accumulation point of the sequence \(\{x_k \}\). We say that the extended weakly no nonzero abnormal multiplier constraint qualification \((\mathrm{EWNNAMCQ})\) based on the smoothing functions \(\{g^i_{\rho }(x):\rho >0\}, i=1,\ldots ,p, \{h^j_{\rho }(x):\rho >0\}, j=p+1,\ldots ,q\) holds at \(\bar{x}\) provided that

implies that \(\lambda _i=0,\lambda _j=0\) for any \(K_0\subseteq K \subseteq \mathbf N\) such that \( \lim _{k\rightarrow \infty , k\in K} x_k=\bar{x}\) and

Definition 2.11

(EWGMFCQ) Let \(\{x_k \}\) be a sequence of iteration points for problem \(( P)\) and \(\rho _k\uparrow \infty \) as \(k\rightarrow \infty \). Let \(\bar{x}\) be a accumulation point of the sequence \(\{x_k \}\). We say that the extended weakly generalized Mangasarian Fromovitz constraint qualification (EWGMFCQ) based on the smoothing functions \(\{g^i_{\rho }(x):\rho >0\}, i=1,\ldots ,p, \{h^j_{\rho }(x):\rho >0\}, j=p+1,\ldots ,q\) holds at \(\bar{x}\) provided that the following conditions hold. For any \(K_0\subseteq K \subseteq \mathbf N\) such that \( \lim _{k\rightarrow \infty , k\in K} x_k=\bar{x}\) and any

-

(i)

\(v_{p+1},\ldots ,v_q\) are linearly independent;

-

(ii)

there exists a nonzero direction \(d\in \mathbb {R}^n\) such that

$$\begin{aligned}&g_i(\bar{x})+v_i^T d<0,\quad \! \mathrm{for}\ \mathrm{all } \quad i=1,\ldots ,p,\end{aligned}$$(2.4)$$\begin{aligned}&h_j(\bar{x})+v_j^T d=0,\quad \! \mathrm{for}\ \mathrm{all } \quad j=p+1,\ldots ,q. \end{aligned}$$(2.5)

It was showed in [51] that the EWGMFCQ is equivalent to the EWNNAMCQ.

3 Smoothing augmented Lagrangian method

In this section, we propose a smoothing augmented Lagrangian algorithm and show its convergence.

For each smoothing parameter \(\rho >0\), we use the PHR augmented Lagrangian function to define the smoothing augmented Lagrangian function as follows:

For each \(\rho >0, c>0, \lambda \in \mathbb {R}^{q}\), we consider the following penalized problem:

In the algorithm, we denote the residual function measuring the infeasibility and the complementarity by

Since \((\mathrm{P}_\rho ^{\lambda ,c})\) is a smooth unconstrained optimization problem for each fixed \(\rho >0, c>0, \lambda \in \mathbb {R}^{q}\), we suggest to use a gradient descent method to find a stationary point of the problem. Then we increase the smoothing parameter \(\rho \), update the multiplier \(\lambda \) and increase the penalty parameter \(c\) provided that the residual \(\sigma _{\rho }^{\lambda }(x)\) has sufficiently decreased.

We will show that any sequence of the iteration points generated by the algorithm converges to some stationary point of problem (P) when \(\rho \) goes to infinity and the penalty parameter \(c\) is bounded. Furthermore, the EWNNAMCQ guarantees the boundedness of the sequence of the penalty parameters.

We propose the following smoothing augmented Lagrangian algorithm.

Algorithm 3.1

Let \( \{\beta ,\sigma _{1}\}\) be constants in \((0,1)\) and \(\{\hat{\eta }, \sigma \}\) be constants in \((1,\infty )\). Let \(\{\varepsilon _k\}\) be a positive sequence converging to 0 and \(\sigma _k\) be a sequence approaching \(+\infty \) with \(\sigma _k\varepsilon _k\rightarrow \infty \) as \(k\rightarrow \infty \). Choose an initial point \(x^{0}=x^1\in \mathbb {R}^n\), an initial smoothing parameter \(\rho _0>0\), an initial penalty parameter \(c_0>0\) and an initial multiplier \({\lambda }^{0}\in \mathbb {R}^q\). Let constants \(\lambda _{min}<0\) and \(\lambda _{max}>0\) and take \(\bar{\lambda }^0\) as the Euclidean projection of \({\lambda }^{0} \) onto \(\bigotimes _{i=1}^p [0,\lambda _{max}]\times \bigotimes _{i=p+1}^q [\lambda _{min},\lambda _{max}]\). Set \(k:=0\).

Let \(\lambda _i^{1}=\max \{0,\bar{\lambda }_i^0+c_{0} g_{\rho _0}^i(x^{1})\},\ i=1,\ldots ,p; \lambda _{j}^{1}=\bar{\lambda }_j^0+c_{0} h_{\rho _0}^j(x^{1}),\ j=p+1,\ldots ,q\) and take \( \bar{\lambda }^{1}\) as the Euclidean projection of \( \lambda ^{1}\) onto \(\bigotimes _{i=1}^p [0,\lambda _{max}]\times \bigotimes _{i=p+1}^q [\lambda _{min},\lambda _{max}]\).

-

1.

If \(\nabla G_{\rho _{k}}^{\bar{\lambda }^{k},c_k}(x^{k+1})=0\) and \(\sigma _{\rho _k}^{{\lambda }^{k+1}}(x^{k+1})=0\), terminate. Otherwise, set \(k=k+1\), and go to Step 2.

-

2.

Compute \(d_k:=-\nabla G_{\rho _{k}}^{\bar{\lambda }^{k},c_k}(x^k)\). Let \(x^{k+1}:=x^k+\alpha _k d_k\), where \(\alpha _k:=\beta ^{l_k}\), \(l_k\in \{0,1,2\ldots \}\) is the smallest number satisfying

$$\begin{aligned} G_{\rho _{k}}^{\bar{\lambda }^{k},c_k}(x^{k+1})- G_{\rho _{k}}^{\bar{\lambda }^{k},c_k}(x^k) \le -\alpha _k\sigma _{1} \Vert \nabla G_{\rho _{k}}^{\bar{\lambda }^{k},c_k}(x^{k})\Vert ^2. \end{aligned}$$(3.1)If

$$\begin{aligned} \Vert \nabla G_{\rho _{k}}^{\bar{\lambda }^{k},c_k}(x^{k+1})\Vert < \hat{\eta } \rho _{k}^{-1}, \end{aligned}$$(3.2)set \(\rho _{k+1}:=\sigma \rho _{k}\) and go to Step 3. Otherwise, set \(k=k+1\) and repeat Step 2.

-

3.

Set

$$\begin{aligned}&\lambda _i^{k+1}=\max \{0,\bar{\lambda }_i^k+c_{k} g_{\rho _k}^i(x^{k+1})\},\quad i=1,\ldots ,p;\end{aligned}$$(3.3)$$\begin{aligned}&\lambda _{j}^{k+1}=\bar{\lambda }_j^k+c_{k} h_{\rho _k}^j(x^{k+1}),\quad j=p+1,\ldots ,q. \end{aligned}$$(3.4)Take \(\bar{\lambda }^{k+1}\) as the Euclidean projection of \(\lambda ^{k+1}\) onto \(\bigotimes _{i=1}^p [0,\lambda _{max}]\times \bigotimes _{i=p+1}^q [\lambda _{min},\lambda _{max}]\), and go to Step 4.

-

4.

If

$$\begin{aligned} \sigma _{\rho _k}^{{\lambda }^{k+1}}(x^{k+1})<\varepsilon _k, \end{aligned}$$(3.5)go to Step 1. Otherwise, set \(c_{k+1}:= \sigma _{k+1}+c_k, k=k+1\) and go to Step 2.

To prove the convergence theorems we need the following lemma.

Lemma 3.1

Suppose that Algorithm 3.1 does not terminate within finite iterations. Let \(\{x^{k}\}\) be a sequence generated by Algorithm 3.1 which has an accumulation point. Then there exists an infinite subset \(K\subseteq \mathbf {N}\) such that condition (3.2) holds for each \(k\in K\) and hence \(\lim _{k\rightarrow \infty }\rho _k=+\infty .\)

Proof

Assume for a contradiction that for any large \(k\), the condition (3.2) fails and thus there exist \(\bar{k}, \bar{\rho }, \bar{\lambda }\) and \(\bar{c}\) such that when \(k\ge \bar{k}, \rho _k=\bar{\rho }, \bar{\lambda }_k=\bar{\lambda }\) and \(c_k=\bar{c}\).

We first show that there exists an infinite subset \(K_1\subseteq \mathbf {N}\) such that

To the contrary, suppose that there exists \(\varepsilon >0\) such that for sufficiently large \(k> \bar{k}\),

From (3.1), we have that for all \(k> \bar{k}\)

Since the Armijo line search in Step 2 only requires a small number of iterations, \(\alpha _k\) will never approach to 0. It follows from (3.7) that \(G_{\bar{\rho }}^{\bar{\lambda },\bar{c}}(x^{k})\rightarrow -\infty \) as \(k\rightarrow \infty \). But this is impossible since \(G_{\bar{\rho }}^{\bar{\lambda },\bar{c}}(x)\) is continuous and \(\{x^k\}\) has an accumulation point \(x^*\). Therefore, there exists an infinite subset \(K:=\{k-1:k\in K_1\}\) such that the condition (3.2) holds for each \(k\in K\). By the updating rule in Algorithm 3.1 we must have \(\lim _{k\rightarrow \infty }\rho _k=+\infty \). \(\square \)

Theorem 3.1

Suppose Algorithm 3.1 does not terminate within finite iterations. Let \(x^*\) be an accumulation point of the sequence \(\{x^{k}\}\) generated by Algorithm 3.1. If \(\{c_k\}\) is bounded, then \(x^*\) is a stationary point of problem \((\mathrm{P})\).

Proof

Without loss of generality, assume that \(\lim _{k\rightarrow \infty }x^{k}=x^*\). From the updating rule of \(c_k\) in the algorithm, the boundedness of \(\{c_k\}\) is equivalent to saying that condition (3.5) holds for sufficiently large \(k\) and thus \( \lim _{k\rightarrow \infty } \sigma _{\rho _{k}}^{{\lambda }^{k+1}}(x^{k+1})= 0\). It follows from the definition of \(\sigma _{\rho }^{\lambda }(\cdot )\) that \(|h_{\rho _k}^j(x^{k+1})|<\varepsilon _k, j=p+1,\ldots ,q\) and \(g_{\rho _k}^i(x^{k+1})<\varepsilon _k, i=1,\ldots ,p\) for sufficiently large \(k\). Thus \(\{\lambda ^k\}\) is bounded from the updating rule (3.3) and (3.4).

Since \(\{ g_\rho ^i:\rho >0\}, i=1,\ldots , p, \{ h_\rho ^j:\rho >0\}, j=p+1,\ldots , q\) are families of smoothing functions of \(g_i, i=1,\ldots , p, h_j, j=p+1,\ldots , q\), taking limits as \(k\rightarrow \infty \) we have \(h_j(x^*)=0,\ j=p+1,\ldots ,q, g_i(x^*)\le 0,\ i=1,\ldots ,p\). Let

By calculation, we have

From the definition of \(\mu _{\rho }^{\lambda ,c}(\cdot )\) and the updating rule (3.3)–(3.4), we have \(\mu _{\rho _{k},i}^{\bar{\lambda }^{k},c_{k}}(x^{k+1})=\lambda _i^{k+1},\ i=1,\ldots ,p\) and \(\mu _{\rho _{k},j}^{\bar{\lambda }^{k},c_{k}}(x^{k+1})=\lambda _j^{k+1},\ j=p+1,\ldots ,1\). Note that, by the boundedness of \(\{\lambda ^k\}\), there is a subsequence \(K_0\subseteq \mathbf {N}\) such that \(\{\lambda ^k\}_{K_0}\) is convergent. Let \({\lambda ^*}:= \lim _{k\rightarrow \infty ,\,k\in {K_{0}}}\lambda ^{k}\).

By the gradient consistency property of \(f_{\rho }(\cdot ), g^i_\rho (\cdot ), i=1,\ldots ,p\) and \(h^j_\rho (\cdot ), j=p+1,\ldots ,q\), there exists a subsequence \(\bar{K_{0}}\subseteq K_0\) such that

From Lemma 3.1 and condition (3.2), we know that \(\lim _{k\rightarrow \infty }\nabla G_{\rho _{k}}^{\bar{\lambda }^{k},c_k}(x^{k+1})= 0\). Taking limits in (3.8) as \(k\rightarrow \infty , k\in \bar{K_{0}}\), by the gradient consistency properties, we have

The feasibility of \(x^*\) follows from taking the limits in \( \lim _{k\rightarrow \infty } \sigma _{\rho _{k}}^{{\lambda }^{k+1}}(x^{k+1})= 0\). It follows from (3.3) that \({\lambda }_i^*\ge 0, i=1,\ldots ,p\). We now show that the complementary slackness condition holds. If \(g_i(x^*)< 0\) for certain \(i\in \{1,\ldots , p\}\), we have \(g_{\rho _k}^i(x^{k+1})< 0\) for sufficiently large \(k\) since \(\{ g_\rho ^i:\rho >0\}\) are families of smoothing functions of \(g_i, i=1,\ldots ,p\). Then \(\lambda _i^*= \lim _{k\rightarrow \infty ,\,k\in \bar{K_{0}}}{\lambda }_i^{k+1}= 0\) since \( \lim _{k\rightarrow \infty } \sigma _{\rho _{k}}^{{\lambda }^{k+1}}(x^{k+1})= 0\). Therefore \(x^*\) is a stationary point of problem \((\mathrm{P})\) and the proof of the theorem is complete. \(\square \)

Theorem 3.2

Suppose Algorithm 3.1 does not terminate within finite iterations and \(\{x_k,\rho _k, \lambda _k,c_k\}\) is a sequence generated by Algorithm 3.1. If for every \(K\subseteq \mathbf {N}\) and \(x^*\) such that \(\lim _{k\rightarrow \infty , k\in K} x_k=x^*\), the \(\mathrm{EWNNAMCQ}\) holds at \(x^*\), then \(\{c_k\}\) is bounded.

Proof

Assume for a contradiction that \(\{c_k\}\) is unbounded. Since \(x^*\) is an arbitrary accumulation point, we assume there exists an infinite index set \(\tilde{K}\subseteq K\) such that condition (3.5) fails for every \(k\in \tilde{K}\) sufficiently large. Then for sufficiently large \(k\in \tilde{K}\), at least one of the following conditions hold:

-

(a)

there exists an index \(i_1\in \{1,\ldots ,p\}\) such that \(g_{\rho _k}^{i_1}(x^{k+1})\ge \varepsilon _k,\)

-

(b)

there exists an index \(j_1\in \{p+1,\ldots ,q\}\) such that \(|h_{\rho _k}^{j_1}(x^{k+1})|\ge \varepsilon _k\).

By the gradient consistency property of \(f_{\rho }(\cdot ), g^i_\rho (\cdot ), i=1,\ldots ,p\) and \(h^j_\rho (\cdot ), j=p+1,\ldots ,q\), there exists a subsequence \(\bar{K}\subseteq \tilde{K}\) such that

From the updating rule of \(c_k\), we have \(c_k \varepsilon _k\rightarrow +\infty \) as \(k\rightarrow \infty \). Thus by the definition of \(\mu _{\rho }^{\lambda ,c}(\cdot )\), under either case (a) or case (b), we have \(\Vert \mu _{\rho _{k}}^{\bar{\lambda }^{k},c_{k}}(x^{k+1})\Vert \rightarrow \infty \). There exists a subsequence \(\bar{K}_{0}\subseteq \bar{K}\) and \(\mu \in \mathbb {R}^{q}\) nonzero such that

It follows from the definition of \(\mu _{\rho }^{\lambda ,c}(\cdot )\) that \({\mu }_i \ge 0,\ i=1,\ldots , p\).

Similarly as in Theorem 3.1, \(\lim _{k\rightarrow \infty }\nabla G_{\rho _{k}}^{\bar{\lambda }^{k},c_k}(x^{k+1})= 0\). Dividing by \(\Vert \mu _{\rho _{k}}^{\bar{\lambda }^{k},c_{k}}(x^{k+1})\Vert \) in both sides of (3.8) and letting \(k\rightarrow \infty \) in \(\bar{K}_{0}\), we have

We now show that

and consequently conditions (3.10) and (3.11) contradict with the assumption that the EWNNAMCQ holds. We first show that \(\mu _ig_i(x^*)\ge 0\). If \(g_i(x^*)< 0\) for certain \(i\in \{1,\ldots , p\}, g_{\rho _k}^i(x^{k+1})< 0\) for sufficiently large \(k\) since \(\{ g_\rho ^i:\rho >0\}\) are families of smoothing functions of \(g_i, i=1,\ldots ,p\). Thus \(\mu _i= \lim _{k\rightarrow \infty ,\,k\in \bar{K_{0}}}\mu _{\rho _{k},i}^{\bar{\lambda }^{k},c_{k}}(x^{k+1})= 0\) by the definitions \(\mu _{\rho }^{\lambda ,c}(\cdot )\) and the unboundedness of \(\{c_k\}\). Consequently we have \(\mu _i=0\) if \(g_i(x^*)< 0\), for \(i\in \{1,\ldots ,p\}\). Next we show that \(\mu _j h_j(x^*) \ge 0\). Since \(c_k\rightarrow \infty \) as \(k\rightarrow \infty \), we have for sufficiently large \(k\in \bar{K}_{0}\),

Thus

and hence (3.11) holds. The contradiction shows that \(\{c_k\}\) is bounded. \(\square \)

The following corollary follows immediately from Theorems 3.1 and 3.2.

Corollary 3.1

Suppose that Algorithm 3.1 does not terminate within finite iterations. If the \(\mathrm{EWNNAMCQ}\) holds at any accumulation point of the sequence \(\{x^k\}\) generated by Algorithm 3.1, then any accumulation point is a stationary point of problem \((\mathrm{P})\).

4 Applications and numerical examples

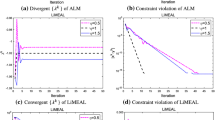

In this section, we first test our algorithm on two general nonsmooth and nonconvex constrained optimization problems. Then we apply the algorithm to the bilevel programs.

Before presenting numerical examples, we first give some remarks on choosing the parameters in the algorithm. In the Armijo line search, a small \(\sigma _1\) gives the step-size too much flexibility while increasing \(\sigma _1\) makes the search for step-size costly. \(\sigma _1=0.8\) is a good selection from our experience.

Since we would like to increase \(\rho _k\) fast in the beginning of the algorithm and then let \(\rho _k\) grow slowly to infinity, \(\hat{\eta }\) should be a large constant. In practice taking different initial parameter \(\rho _0\) usually leads to similar results. According to our experience, if a relatively large initial parameter \(\rho _0\) is chosen, then a smaller \(\sigma \) should be taken to let \(\rho _k\) goes to infinity slowly. We suggest to choose a relatively small \(\rho _0\) to guarantee a faster convergence rate.

\(\lambda _{max}\) and \(\lambda _{min}\) are upper and lower bounds for the projected multiplier \(\bar{\lambda }\) and can be chosen arbitrarily. In the algorithm, we select \(\lambda _{max}=10^4\) and \(\lambda _{min}=-10^{4}\). There are different ways for choosing \(\{\varepsilon _k\}\) and \(\{\sigma _k\}\). In our experiments, unless otherwise specified, we select the sequences as \(\varepsilon _k:=\frac{\sigma '}{\sqrt{k}},\ \sigma _k:=k^2\) for each \(k\),where \(\sigma '>0\) is a small constant.

In numerical practise, it is impossible to obtain an exact ‘0’, thus we select some small enough \(\epsilon >0, \epsilon _1>0\) and change the update rule of \(c_k\) to the case when

and terminate the algorithm when

4.1 Illustrative examples for general problems

In this subsection, we illustrate Algorithm 3.1 by two general nonsmooth and nonconvex constrained optimization problems.

The first task in designing a smoothing method is to find a family of smoothing functions with the gradient consistency property for nonsmooth functions involved. Many nonsmooth functions can be considered as a composition of a smooth function with a plus function \((t)_+:=\max \{0,t\}\). Chen and Mangasarian [16] constructed smooth approximations to the plus function by using the integral convolution with density functions as follows. Let \(\phi : \mathbb {R}\rightarrow \mathbb {R}_+\) be a piecewise continuous density function with \(\phi (s)=\phi (-s)\) and \( \int _{-\infty }^{+\infty } |s| \phi (s)ds <\infty \). Then \( \psi _\mu (t):= \int _{-\infty }^{+\infty } (t-\mu s)_+ \phi (s)ds, \) with \(\mu \downarrow 0\) is a family of smoothing functions of the plus function with the gradient consistency property. Choosing \(\phi (s):=\frac{2}{(s^2+4)^{\frac{3}{2}}}\) results in the so-called the Chen–Harker–Kanzow–Smale (CHKS) smoothing function of \((t)_+\) ([15, 33, 47]):

Choosing \( \phi (s):={\left\{ \begin{array}{l@{\quad }l} 0,&{} \mathrm{if}\ |s|>\frac{1}{2}\\ 1,&{} \mathrm{if}\ |s|\le \frac{1}{2}, \end{array}\right. } \) results in the so-called uniform smoothing function of \((t)_+\):

Since \(|t|=(t)_++(-t)_+\), approximating \((t)_+\) by \(\psi ^2_\mu (t)\) and \((-t)_+\) by \(\psi ^2_\mu (-t)\) respectively results in the following smoothing function of \(|t|\) which is used frequently:

Example 4.1

[23, Example 5.1] Consider the nonsmooth constrained optimization program of minimizing a nonsmooth Rosenbrock function subject to an inequality constraint on a weighted maximum value of the variables:

The unique optimal solution of the problem is \((\bar{x},\bar{y})=(\frac{\sqrt{2}}{2},\frac{1}{2})\).

Since the Clarke generalized gradient of the constraint function is

where \(co\) denotes the convex hull, we have \((0,0)\notin \partial g(x,y), \forall (x,y)\) which implies that the ENNAMCQ is satisfied at every point in \(\mathbb {R}^2\). Our convergent theorem guarantees that any accumulation point of the iteration sequence must be a stationary point.

Rewrite the objective function and the constraint function as

Since \((x^2-y)_+\) is a composition of the smooth function \(x^2-y\) with the plus function and \(\nabla (x^2-y)\) has full rank, by [12, Theorem 4.6], \(\psi ^1_\mu (x^2-y)\) is a smoothing function of \((x^2-y)_+\) with the gradient consistency property. Similarly \(\psi ^1_\mu (-x^2+y)\) and \(\psi ^1_\mu (2y-\sqrt{2} x)\) are smoothing functions of \((-x^2+y)_+\) and \((2y-\sqrt{2}x)_+\) with the gradient consistency property respectively. Taking \(\rho =\frac{1}{4\mu ^2}\), it follows that

form the family of smoothing functions for \(f(x,y)\) and \(g(x,y)\) with the gradient consistency property.

In our test, we choose the initial point \((x^0,y^0)=(0.5,0.3)\) and the parameters \(\beta =0.8,\ \sigma _1=0.7,\ \rho _0=100,\ c_0=100,\ \hat{\eta }=10^3,\ \sigma =10,\sigma '=10^{-3}, \lambda ^0=100\) and \(\epsilon =10^{-5}, \epsilon _1=10^{-6}\). The stopping criteria

hold with \((x^{k+1},y^{k+1})=(0.7071,0.500)\), which is a good approximation of the true optimal solution.

Example 4.2

[7, Example 5.1] Consider the nonsmooth constrained optimization program of minimizing a nonsmooth Rosenbrock function subject to one nonsmooth inequality constraint and one linear equality constraint:

The unique optimal solution of the problem is \((\bar{x},\bar{y})=(\frac{\sqrt{2}}{2},\frac{1}{2})\).

Note that \(\nabla h(x,y)=(1,-\sqrt{2})\) and

For all \((x,y)\) in a sufficiently small neighbourhood of \((\bar{x}, \bar{y}), \partial g( x, y)=\{(2 x, 1)\}\). For each \((x,y)\) in a sufficiently small neighbourhood of \((\bar{x}, \bar{y})\), the vectors \(\{(2x,1), (1,-\sqrt{2})\}\) are linearly independent, thus the ENNAMCQ holds. Our convergent theorem guarantees that any accumulation point of the iteration sequence which is in a sufficiently small neighbourhood of \((\bar{x},\bar{y})\) must be a stationary point.

Since \(|x^2-y|\) is a composition of the smooth function \(x^2-y\) with the absolute value function and \(\nabla (x^2-y)\) has full rank, by [12, Theorem 4.6], \(\psi ^3_\mu (x^2-y)\) is a smoothing function of \(|x^2-y|\) with the gradient consistency property. Similarly \(\psi ^3_\mu (y)\) is a smoothing function of \(|y|\) with the gradient consistency property respectively. Taking \(\mu =\frac{1}{\rho }\), it follows that

form the family of smoothing functions for \(f(x,y)\) and \(g(x,y)\) with the gradient consistency property respectively.

In our test, we choose the initial point \((x^0,y^0)=(0.3,0.2)\) and the parameters \(\beta =0.8,\ \sigma _1=0.7,\ \rho _0=100,\ c_0=100,\ \hat{\eta }=5\times 10^3,\ \sigma =10,\sigma '=10^{-3}, \lambda ^0=(100, 100)\) and \(\epsilon =5\times 10^{-4}, \epsilon _1=10^{-5}\). The stopping criteria

hold with \((x^{k+1},y^{k+1})=(0.70711,0.50001)\), which is a good approximation of the true optimal solution.

4.2 Applications to the bilevel program

In this subsection, we consider the simple bilevel program

where \(S(x)\) denotes the set of solutions of the lower level program

where \(Y\) is a compact subset of \(\mathbb {R}^m\) respectively, \(f, F,g_i, i=1,\ldots ,l:\mathbb {R}^n\times \mathbb {R}^m \rightarrow \mathbb {R}\) are continuously differentiable functions and \(f\) is twice continuously differentiable in variable \(y\).

The principal-agent problem [37] is one of the most important applications of the simple bilevel program. Applications and recent developments of general bilevel programs where the constraint set \(Y\) may depend on \(x\) can be found in [5, 24, 25, 46, 49].

When the lower level program is convex in variable \(y\), it is common to replace the lower level program by its first order conditions. For a general SBP where the lower level problem may not be convex, Mirrlees [37] pointed out that the first order approach is not valid to solve (SBP) since the true optimal solution may not even be a stationary point of the problem reformulated by the first order approach.

By using the value function of the lower level program defined as \( V(x) :=\inf _{y\in Y} \ f(x,y)\), Ye and Zhu [53, 54] studied the first order condition for the following equivalent formulation under the partial calmness condition:

However, Ye and Zhu [55] illustrated that the partial calmness condition is still too strong to hold for many bilevel problems (for example, the Mirrlees’ problem) and proposed a new first order necessary optimality condition by considering the combined program with both the first order condition of the lower level problem and the value function constraint. For a general bilevel program, the lower level problem may have equality and/or inequality constraints and the first order condition is the KKT condition. If the lower level has inequality constraints, then the resulting combined program is a nonsmooth mathematical program with complementarity constraints and necessary conditions of Clarke, Mordukhovich and Strong (C, M, S) type have been studied in Ye and Zhu [55] and Ye [52]. For the simple bilevel program we consider in this paper the constraint of the lower level problem is a fixed set \(Y\) independent of \(x\). If \(Y\) can be represented by some equality and/or inequality constraints, then one can use the KKT condition as the first order condition and the resulting combined program can be studied using the result of previous section. However to concentrate the main idea and simplify the exposition we assume that for any accumulation point \((\bar{x},\bar{y}), \bar{y}\) lies in the interior of the set \(Y\). Moreover this assumption is not too strong since in practice usually one has some idea on where the optimal solution lies and can enlarge the set \(Y\) so that the optimal solution lies in the interior of \(Y\). Taking this into consideration we should try to find the stationary point of the following combined program:

Since the value function \(V(x)\) is usually nonsmooth, the problems (VP) and (CP) are both nonsmooth and nonconvex optimization problems. Note that for the stationary point whose \(y\) component lies in the interior of set \(Y\), the constraint \(y\in Y\) can be ignored and hence it is a problem of the type we study in this paper. To develop a numerical algorithm for problem (VP), Lin et al. [36] proposed to approximate the value function by its integral entropy function:

and proved that \(\gamma _{\rho }(x)\) satisfies the gradient consistency property. The advantage of using the integral entropy function to approximate the value function is that we do not need to evaluate \(V(x)\) or its Clarke generalized gradient. This means that we do not need to solve the lower level program during the iteration process of our smoothing algorithms.

Xu and Ye [50] proposed a projected smoothing augmented Lagrangian method to solve the combined program (CP). They showed that if the sequence of penalty parameters is bounded, then any accumulation point is a stationary point of the combined program. Although it was observed in [50] that the smoothing augmented Lagrangian method is efficient for the problem (CP), no sufficient conditions were given under which the sequence of penalty parameters is bounded. From the results in Sect. 3, we know that EWNNAMCQ is a sufficient condition under which the sequence of penalty parameters for problem (CP) is bounded. In the rest of this subsection, we apply Algorithm 3.1 to the combined program (CP), and verify that the WNNAMCQ holds for all bilevel programs presented here except one.

Example 4.3

(Mirrlees’ problem) [37] Consider Mirrlees’ problem

where \(S(x)\) is the solution set of the lower level program

It was shown in [37] that the unique optimal solution is \((\bar{x},\bar{y})\) with \(\bar{x}=1,\bar{y}\approx 0.9575\) .

In our test, we choose the initial point \((x^0,y^0)=(0.5,0.6)\) and the parameters \(\beta =0.8,\ \sigma _1=0.7,\ \rho _0=100,\ c_0=100,\ \hat{\eta }=10^3,\ \sigma =10,\sigma '=10^{-3}, \lambda ^0=(100,100)\) and \(\epsilon =10^{-5}, \epsilon _1=5\times 10^{-4}\). The stopping criteria

hold with \((x^{k+1},y^{k+1})=(1.0004,0.95749)\). It seems that the sequence converges to \((\bar{x},\bar{y})\).

Since

it is easy to see that the vectors \(\nabla f(\bar{x},\bar{y})-(\lim _{k\rightarrow \infty } \nabla \gamma _{\rho _k}(x^{k+1}),0)\) and \(\nabla (\nabla _y f)(\bar{x},\bar{y})\) are linearly independent. Thus the WNNAMCQ holds at \((\bar{x},\bar{y})\) and our algorithm guarantees that \((\bar{x},\bar{y})\) is a stationary point of (CP).

Example 4.4

[39, Example 3.3]

Mitsos et al. [39] found an approximate optimal solution for the problem to be \((\bar{x},\bar{y})=(0.2106,1.799)\).

In our test, we choose the initial point \((x^0,y^0)=(0.3,1.5)\) and the parameters \(\beta =0.8,\ \sigma _1=0.7,\ \rho _0=100,\ c_0=100,\ \hat{\eta }=200,\ \sigma =5,\sigma '=10^{-2}, \lambda ^0=(100,100)\) and \(\epsilon =5\times 10^{-5}, \epsilon _1=5\times 10^{-6}\). We select \(\sigma _k:=k\) for each \(k\). The stopping criteria

hold with \((x^{k+1},y^{k+1})=(0.2145255,1.800723)\).

Table 1 reports results of some iterations. From the table, it seems that \((\bar{x},\bar{y}) \approx (0.2145255,1.800723)\) is a limit point of the iteration sequence \((x^{k},y^{k})\). Since the sequence \(\nabla f(x^{k+1},y^{k+1})-(\nabla \gamma _{\rho _k}(x^{k+1}),0)\) tends to 0 as \(k\rightarrow \infty \), the WNNAMCQ may not hold at \((\bar{x},\bar{y})\). However we observe that \(\sigma _{\rho _k}^{{\lambda }^{k+1}}(x^{k+1},y^{k+1})\) also approaches 0. Therefore the feasibility and the complementarity follow the gradient consistency property and the continuity of functions. Moreover from the update rule (4.1), we do not update \(c_k\) when \(\sigma _{\rho _k}^{{\lambda }^{k+1}}(x^{k+1},y^{k+1})\) is sufficiently small and hence \(c_k\) is likely to be bounded. Therefore, the limit point should be a stationary point of the bilevel program from Theorem 3.1.

Example 4.5

[38, Example 3.14] The bilevel program

has the optimal solution point \((\bar{x},\bar{y})=(\frac{1}{4},\frac{1}{2})\) with an objective value of \(\frac{1}{4}\).

In our test, we choose the initial point \((x^0,y^0)=(0.3,0.7)\) and the parameters \(\beta =0.8,\ \sigma _1=0.8,\ \rho _0=100,\ c_0=100,\ \hat{\eta }=10^3,\ \sigma =10,\sigma '=10^{-3}, \lambda ^0=(100,100)\) and \(\epsilon = 10^{-4}, \epsilon _1=10^{-5}\). The stopping criteria

hold with \((x^{k+1},y^{k+1})=(0.2500,0.49999)\). It seems that the sequence converges to \((\bar{x},\bar{y})\).

Since

it is easy to see that the vectors \(\nabla f(\bar{x},\bar{y})-(\lim _{k\rightarrow \infty } \nabla \gamma _{\rho _k}(x^{k+1}),0)\) and \(\nabla (\nabla _y f)(\bar{x},\bar{y})\) are linearly independent. Thus the WNNAMCQ holds at \((\bar{x},\bar{y})\) and our algorithm guarantees that \((\bar{x},\bar{y})\) is a stationary point of (CP).

Example 4.6

[38, Example 3.20] The bilevel program

has the optimal solution point \((\bar{x},\bar{y})=(\frac{1}{2},\frac{1}{2})\) with an objective value of \(\frac{5}{16}\).

In our test, we choose the initial point \((x^0,y^0)=(0.3,0.2)\) and the parameters \(\beta =0.8,\ \sigma _1=0.8,\ \rho _0=100,\ c_0=100,\ \hat{\eta }=10^3,\ \sigma =10,\sigma '=10^{-3}, \lambda ^0=(100,100)\) and \(\epsilon =10^{-5}, \epsilon _1=5\times 10^{-5}\). The stopping criteria

hold with \((x^{k+1},y^{k+1})=(0.5000,0.49997)\). It seems that the sequence converges to \((\bar{x},\bar{y})\).

Since

by continuity of the functions and the gradient consistency properties, it seems that the vectors \(\nabla f(\bar{x},\bar{y})-(\lim _{k\rightarrow \infty } \nabla \gamma _{\rho _k}(x^{k+1}),0)\) and \(\nabla (\nabla _y f)(\bar{x},\bar{y})\) are linearly independent. Thus the WNNAMCQ holds at \((\bar{x},\bar{y})\). Our algorithm guarantees that \((\bar{x},\bar{y})\) is a stationary point of (CP).

References

ALGENCAN. http://www.ime.usp.br/~egbirgin/tango/

Andreani, R., Birgin, E.G., Martínez, J.M., Schuverdt, M.L.: On augmented Lagrangian methods with general lower-level constraints. SIAM J. Optim. 18, 1286–1309 (2007)

Andreani, R., Birgin, E.G., Martínez, J.M., Schuverdt, M.L.: Augmented Lagrangian methods under the constant positive linear dependence constraint qualification. Math. Program. Ser. B 111, 5–32 (2008)

Andreani, R., Martínez, J.M., Schuverdt, M.L.: On the relation between the constant positive linear dependence condition and quasinormality constraint qualification. J. Optim. Theory Appl. 125, 473–483 (2005)

Bard, J.F.: Practical Bilevel Optimization: Algorithms and Applications. Kluwer, Boston (1998)

Bertsekas, D.P.: Constrained Optimization and Lagrangian Multiplier Methods. Academic Press, New York (1982)

Bian, W., Chen, X.: Neural network for nonsmooth, nonconvex constrained minimization via smooth approximation. IEEE Trans. Neural Netw. Learn. Syst. 25, 545–556 (2014)

Birgin, E.G., Castillo, R., Martínez, J.M.: Numerical comparison of augmented Lagrangian algorithms for nonconvex problems. Comput. Optim. Appl. 23, 101–125 (2002)

Birgin, E.G., Fernández, D., Martínez, J.M.: The boundedness of penalty parameters in an augmented Lagrangian method with lower level constraints. Optim. Methods Softw. 27, 1001–1024 (2012)

Boggs, P.T., Kearsley, A.J., Tolle, J.W.: A global convergence analysis of an algorithm for large-scale nonlinear optimization problems. SIAM J. Optim. 9, 833–862 (1999)

Boggs, P.T., Tolle, J.W.: Sequential Quadratic Programming, pp. 1–51. Cambridge University Press, Cambridge (1995)

Burke, J.V., Hoheisel, T., Kanzow, C.: Gradient consistency for integral-convolution smoothing functions. Set-Valued Var. Anal. 21, 359–376 (2013)

Byrd, R.H., Tapia, R.A., Zhang, Y.: An SQP augmented Lagrangian BFGS algorithm for constrained optimization. SIAM J. Optim. 2, 210–241 (1992)

Chen, B., Chen, X.: A global and local superlinear continuation-smoothing method for \(P_0\) and \(R_0\) NCP or monotone NCP. SIAM J. Optim. 9, 624–645 (1999)

Chen, B., Harker, P.T.: A non-interior-point continuation method for linear complementarity problems. SIAM J. Matrix Anal. Appl. 14, 1168–1190 (1993)

Chen, C., Mangasarian, O.L.: A class of smoothing functions for nonlinear and mixed complementarity problems. Math. Program. 71, 51–70 (1995)

Chen, X.: Smoothing methods for nonsmooth, nonconvex minimization. Math. Program. 134, 71–99 (2012)

Chen, X., Womersley, R.S., Ye, J.J.: Minimizing the condition number of a Gram matrix. SIAM J. Optim. 21, 127–148 (2011)

Clarke, F.H.: Optimization and Nonsmooth Analysis. Wiley-Interscience, New York (1983)

Clarke, F.H., Ledyaev, YuS, Stern, R.J., Wolenski, P.R.: Nonsmooth Analysis and Control Theory. Springer, New York (1998)

Conn, A.R., Gould, N.I.M., Toint, PhL: A globally convergent augmented Lagrangian algorithm for optimization with general constraints and simple bound. SIAM J. Numer. Anal. 28, 545–572 (1991)

Conn, A.R., Gould, N.I.M., Toint, PhL: Trust Region Methods, MPS/SIAM Series on Optimization. SIAM, Philadelphia (2000)

Curtis, F.E., Overton, M.L.: A sequential quadratic programming algorithm for nonconvex, nonsmooth constrained optimization. SIAM J. Optim. 22, 474–500 (2012)

Dempe, S.: Foundations of Bilevel Programming. Kluwer, Dordrecht (2002)

Dempe, S.: Annotated bibliography on bilevel programming and mathematical programs with equilibrium constraints. Optimization 52, 333–359 (2003)

Gill, P.E., Murray, W., Saunders, M.A.: SNOPT: an SQP algorithm for large-scale constrained optimization. SIAM J. Optim. 47, 99–131 (2005)

Goldfarb, D., Polyak, R., Scheinberg, K., Yuzefovich, I.: A modified barrier-augmented Lagrangian method for constrained minimization. Comput. Optim. Appl. 14, 55–74 (1999)

Griva, I., Polyak, R.: Primal-dual nonliner rescaling method with dynamic scaling parameter update. Math. Program. Ser. A 106, 237–259 (2006)

Hestenes, M.R.: Multiplier and gradient methods. J. Optim. Theory Appl. 4, 303–320 (1969)

Huang, X.X., Yang, X.Q.: Further study on augmented Lagrangian duality theory. J. Glob. Optim. 31, 193–210 (2005)

Izmailov, A.F., Solodov, M.V., Uskov, E.I.: Global convergence of augmented Lagrangian methods applied to optimization problems with degenerate constraints, including problems with complementarity constraints. SIAM J. Optim. 22, 1579–1606 (2012)

Jourani, A.: Constraint qualifications and Lagrange multipliers in nondifferentiable programming problems. J. Optim. Theory Appl. 81, 533–548 (1994)

Kanzow, C.: Some noninterior continuation methods for linear complementarity problems. SIAM J. Matrix Anal. Appl. 17, 851–868 (1996)

Kort, B.W., Bertsekas, D.P.: A new penalty method for constrained minimization. In: Proceddings of the 1972 IEEE Conference on Decision and Control, New Orleans, pp. 162–166 (1972)

LANCELOT. http://www.cse.scitech.ac.uk/nag/lancelot/lancelot.shtml

Lin, G.H., Xu, M., Ye, J.J.: On solving simple bilevel programs with a nonconvex lower level program. Math. Program. Ser. A 144, 277–305 (2014)

Mirrlees, J.: The theory of moral hazard and unobservable behaviour: part I. Rev. Econ. Stud. 66, 3–22 (1999)

Mitsos, A., Barton, P.: A test set for bilevel programs. Technical Report, Massachusetts Institute of Technology (2006)

Mitsos, A., Lemonidis, P., Barton, P.: Global solution of bilevel programs with a nonconvex inner program. J. Glob. Optim. 42, 475–513 (2008)

Nguyen, V.H., Strodiot, J.J.: On the convergence rate of a penalty function method of exponential type. J. Optim. Theory Appl. 27, 495–508 (1979)

Powell, M.J.D.: A method for nonlinear constraints in minimization problems. In: Fletcher, R. (ed.) Optimization, pp. 283–298. Academic Press, London and New York (1969)

Qi, L., Wei, Z.: On the constant positive linear dependence condition and its application to SQP methods. SIAM J. Optim. 10, 963–981 (2000)

Rockafellar, R.T.: A dual approach to solving nonlinear programming problems by unconstrained optimization. Math. Program. 5, 354–373 (1973)

Rockafellar, R.T.: Augmented Lagrange multiplier functions and duality in nonconvex programming. SIAM J. Control 12, 268–285 (1974)

Rockafellar, R.T., Wets, R.J.-B.: Variational Analysis. Springer, Berlin (1998)

Shimizu, K., Ishizuka, Y., Bard, J.F.: Nondifferentiable and Two-Level Mathematical Programming. Kluwer, Boston (1997)

Smale, S.: Algorithms for solving equations. In: Proceedings of the International Congress of Mathematicians, Berkeley, CA, pp. 172–195 (1986)

Tseng, P., Bertsekas, D.P.: On the convergence of the exponential multiplier method for convex programming. Math. Program. 60, 1–19 (1993)

Vicente, L.N., Calamai, P.H.: Bilevel and multilevel programming: a bibliography review. J. Glob. Optim. 5, 291–306 (1994)

Xu, M., Ye, J.J.: A smoothing augmented Lagrangian method for solving simple bilevel programs. Comput. Optim. Appl. 59, 353–377 (2013)

Xu, M., Ye, J.J., Zhang, L.: Smoothing SQP method for solving degenerate nonsmooth constrained optimization problems with applications to bilevel programs. Preprint, arXiv:1403.1636v1 [Math.OC] (2014)

Ye, J.J.: Necessary optimality conditions for multiobjective bilevel programs. Math. Oper. Res. 36, 165–184 (2011)

Ye, J.J., Zhu, D.L.: Optimality conditions for bilevel programming problems. Optimization 33, 9–27 (1995)

Ye, J.J., Zhu, D.L.: A note on optimality conditions for bilevel programming problems. Optimization 39, 361–366 (1997)

Ye, J.J., Zhu, D.L.: New necessary optimality conditions for bilevel programs by combining MPEC and the value function approach. SIAM J. Optim. 20, 1885–1905 (2010)

Acknowledgments

The authors would like to thank Xijun Liang for helping with the numerical experiments, Lei Guo for giving suggestions in an earlier version and the anonymous referees for their suggestions.

Author information

Authors and Affiliations

Corresponding author

Additional information

The research of Jane J. Ye was partially supported by NSERC and the research of Liwei Zhang was supported by the National Natural Science Foundation of China under Projects Nos. 11071029, 91330206 and 91130007.

Rights and permissions

About this article

Cite this article

Xu, M., Ye, J.J. & Zhang, L. Smoothing augmented Lagrangian method for nonsmooth constrained optimization problems. J Glob Optim 62, 675–694 (2015). https://doi.org/10.1007/s10898-014-0242-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10898-014-0242-7

Keywords

- Nonsmooth optimization

- Constrained optimization

- Smoothing function

- Augmented Lagrangian method

- Constraint qualification

- Bilevel program