Abstract

We present a control problem for an electrical vehicle. Its motor can be operated in two discrete modes, leading either to acceleration and energy consumption, or to a recharging of the battery. Mathematically, this leads to a mixed-integer optimal control problem (MIOCP) with a discrete feasible set for the controls taking into account the electrical and mechanical dynamic equations. The combination of nonlinear dynamics and discrete decisions poses a challenge to established optimization and control methods, especially if global optimality is an issue. Probably for the first time, we present a complete analysis of the optimal solution of such a MIOCP: solution of the integer-relaxed problem both with a direct and an indirect approach, determination of integer controls by means of the sum up rounding strategy, and calculation of global lower bounds by means of the method of moments. As we decrease the control discretization grid and increase the relaxation order, the obtained series of upper and lower bounds converge for the electrical car problem, proving the asymptotic global optimality of the calculated chattering behavior. We stress that these bounds hold for the optimal control problem in function space, and not on an a priori given (typically coarse) control discretization grid, as in other approaches from the literature. This approach is generic and is an alternative to global optimal control based on probabilistic or branch-and-bound based techniques. The main advantage is a drastic reduction of computational time. The disadvantage is that only local solutions and certified lower bounds are provided with no possibility to reduce these gaps. For the instances of the electrical car problem, though, these gaps are very small. The main contribution of the paper is a survey and new combination of state-of-the-art methods for global mixed-integer optimal control and the in-depth analysis of an important, prototypical control problem. Despite the comparatively low dimension of the problem, the optimal solution structure of the relaxed problem exhibits a series of bang-bang, path-constrained, and sensitivity-seeking arcs.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Analysis of optimal driving behavior and optimization-driven driver assistant systems (DAS) become more relevant, as we see the dawn of the era of automatic driving. They have been attracting a lot of interest in the last three decades, accelerated by recent technological advances and first successfully operating autonomous cars, developed by Google, Nissan, Volkswagen and others.

In this paper, we are interested in the optimal control of the motor of an electrical car. The control task is to drive a given distance in an energy-optimal way. The motor can be operated in two discrete modes, leading either to acceleration and energy consumption, or to a recharging of the battery. The induced current is bounded. The electrical car with a hybrid motor with acceleration-consumption and braking-recharging modes can be seen as a specific type of hybrid electrical vehicle (HEV). Recent work on controling HEVs and further references can be found in [13, 23, 41, 46]. Different approaches for DAS have been proposed. Autonomous predictive control of vehicles is studied, e.g., in [10]. A hybrid model-predictive control (MPC) approach for vehicle traction control is presented in [2] and [9] considers autonomous control of a robotized gearbox. Off-line optimal control with explicit consideration of the optimal gear choice, which again brings a discrete aspect to the optimization problems, is discussed in [15, 16, 25]. It is evident, however, that automatic cruise controllers operating solely on the knowledge of the truck’s current system state inevitably will make control decisions inferior to those of an experienced driver, cf. [18, 45]. Thus, also nonlinear model-predictive control (NMPC) has been applied to car control. Recent theoretical and algorithmic advances allow for mixed-integer nonlinear model predictive control in real time for heavy duty trucks [24], taking into account prediction horizons based on GPS data.

Still, the combination of nonlinear dynamics and discrete decisions in the context of hybrid vehicles poses a challenge to established optimization and control methods, especially if global optimality is an issue. One particular challenge when dealing with electrical motors is the different time scale of the variations of the electrical current with respect to the global control task. Whereas traditional approaches try to decompose the problem, we follow [32] in which the electrical car problem based on the electrical and mechanical dynamic equations, has first been described. As explained in [32], the control needs to switch in the range of milliseconds. Longer time intervals without switching lead to a violation on the bounds of the current inside the motor, and thus hardware destruction. For a control task of 10 s an algorithm must thus optimize over thousands to hundreds of thousands of possible switches. Note that in practice the electrical switches are designed to reliably handle time-scales above 10 kHz, i.e., below \(10^{-4}\) s.

Mathematically, the optimal control of an electrical car as considered in this paper, leads to a mixed-integer optimal control problem (MIOCP) with a discrete feasible set for the controls and state constraints. This problem class touches various disciplines: hybrid systems, direct and indirect methods for optimal control, MIOC, NMPC, global optimization and optimal control. We are interested in global solutions for MIOCP. Unfortunately, current state-of-the-art methods for global optimal control that are based on convex underestimators are not able to solve the electrical car problem in reasonable time, even for very coarse time discretizations. See [12, 42] for references to global optimization and control.

Our approach is based on a combination of recent state-of-the-art methods to analyze MIOCPs. A global optimality certificate is calculated using the method of moments. This approach consists of reformulating a given optimization problem as a generalized moment problem (GMP), i.e., a linear program defined on a measure space. For polynomial data, this GMP can be relaxed in the form of linear matrix inequality (LMI) problems of increasing order. Under mild conditions, the objective function values of the LMI relaxations converge as lower bounds to the one of the original problem [26]. The approach can naturally be extended to optimal control problems (OCP) [27], and a large class of mixed-integer optimal control problems (MIOCP) [19].

Unfortunately, the great strength of this approach—excellent global lower bounds—comes at the price of non-availability of the corresponding trajectory and control strategy. Therefore, we propose to combine it with a local approach to calculate locally optimal controls, where local optimality is meant with respect to the solution of an integer-relaxed control problem; it can be approximated arbitrarily close by a (possibly chattering) integer control. To better understand the structural properties of a problem, also first optimize, then discretize may be applied to obtain locally optimal solutions. First, we reformulate and decompose the MIOCP. Second, we solve the integer-relaxed control problem. Third, we construct an integer solution by solving a mixed-integer linear program (MILP) that minimizes a certain norm between integer controls and the relaxed continuous control from the second step. If necessary, we may adaptively refine the control discretization. For this procedure asymptotic bounds and very efficient algorithms are known [35, 37, 39].

The paper is organized as follows. In Sect. 2, we describe the optimal control problem on the energy consumption of an electrical car performing a displacement and we discuss the partial outer convexification. In Sect. 3, we discuss direct first discretize, then optimize approaches and present a very basic NLP reformulation. In Sect. 4, we review the sum up rounding strategy to derive integer controls and state some theoretical properties of the corresponding trajectory. An alternative strategy, the optimization of switching times, is discussed in Sect. 5. In Sect. 6, we state the necessary conditions of optimality in function space by deriving a boundary value problem. The optimal structure contains two bang-bang arcs, two path-constrained arcs for different state constraints and a singular arc of order 1. In Sect. 7, the method of moments is explained. It is used to compute lower bounds, using different reformulations of the considered MIOCP. In Sect. 8, numerical results for all presented algorithms and formulations are presented, analyzed, and discussed on several instances of the problem. Section 9 concludes the paper.

2 Model

We are interested in the optimal control of the motor of an electrical car. The dynamics are described with four differential states: the electrical current \({x_{0}}\), the angular velocity \({x_{1}}\), the position of the car \({x_{2}}\), and the consumed energy \({x_{3}}\). The control task is to drive a given distance in an energy-optimal way. The motor can be operated in two discrete modes, \({u}(t) \in \{1, -1\}\), leading either to acceleration with energy consumption, or to a braking-induced recharging of the battery. The induced current is bounded. In [32], this MIOCP has been first formulated as

Note that the problem is written in Mayer-form, where the state \({x_{3}}\) contains the Lagrange-type objective function. Mathematically equivalent reformulations may result in different algorithmic behavior, though, as discussed in Sect. 7.

The used model parameters correspond to an electrical solar car, see [32]. They are the coefficient of reduction \(K_r\), the air density \(\rho \), the aerodynamic coefficient \(C_x\), the area in the front of the vehicle \(S\), the radius of the wheel \(r\), the constant representing the friction of the wheels on the road \(K_f\), the coefficient of the motor torque \(K_m\), the inductor resistance \(R_m\), the inductance of the rotor \(L_m\), the mass \(M\), the gravity constant \(g\), the battery voltage \(V_{\mathrm{alim}}\), and the resistance of the battery \(R_{\mathrm{bat}}\).

The formulation of problem (1) is nonlinear in the integer control \({u}(\cdot )\). A partial outer convexification as discussed in [35, 39] for the general case, is here equivalent to simply leaving the expression \({u}^2=1\) away,

We write \(f(x,u) = (f_0(x,u), f_1(x,u), f_2(x,u), f_3(x,u))^T\) for the right hand side of the ordinary differential equation in (2) and \(J\) or \(J^*\) for the optimal objective function value, if it exists. Whenever, we refer to “relaxed problems” in the following, this indicates that we replace \({u}(t) \in \{-1,1\}\) by \({u}(t) \in [-1,1]\) for all \(t \in [0, t_\mathrm {f}]\). The resulting continuous optimal control problem is denoted by an additional \(R\), e.g., (2)\(_R\) is the relaxation of (2).

The feasible sets and optimal solutions of problems (1) and (2) are obviously identical. However, this is not true for their relaxations to \({u}(t) \in [-1,1]\), namely (1)\(_R\) and (2)\(_R\), as discussed at length in [36]. To be able to obtain the best integer-valued solution we will work with (2).

3 Direct approach: first discretize, then optimize

Direct first discretize, then optimize approaches are straightforward ways to solve the relaxed problem (2)\(_R\). The control function \({u}: [t_0,t_\mathrm {f}] \mapsto \mathbb {R}^{n_{u}}\) is discretized via basis functions with finitely many parameters that become optimization variables of a finite dimensional nonlinear optimization problem (NLP). In the context of control problems with integer control functions it makes sense to use a piecewise constant representation, \({u}(t) = q_i \in \mathbb {R}^{n_{u}} \; \forall \; t \in [t_i, t_{i+1}]\).

There are different ways to parameterize the state trajectories: single shooting [40], Bock’s direct multiple shooting method [8, 30] and direct collocation, [4], all derived from similar ideas for boundary value problems. Further links and references can be found, e.g., in [3, 5].

In the interest of clarity and reproducibility, we transform the relaxed MIOCP (2)\(_R\) into an NLP by using collocation of order 1, i.e., an implicit Euler scheme with piecewise constant controls. The relevant formulation is shown in Listing 1.

Numerical results for different values of \(N=\) nt are given in column 2 of Table 1 in Sect. 8.

4 Sum up rounding for integer controls

Problem (2) is control-affine. Thus, we can apply the integer gap lemma proposed in [37] and the constructive sum up rounding strategy. We consider a given measurable function \({u}: [0,t_\mathrm {f}] \mapsto [-1,1]\) and a time grid \(0=t_0 < t_1 < \dots < t_N = t_\mathrm {f}\) on which we approximate the control \({u}(\cdot )\). We write \(\Delta t_j := t_{j+1} - t_j\) and \(\Delta t\) for the maximum distance

Let then a function \(\omega (\cdot ) : [0, t_\mathrm {f}] \mapsto \{-1,1\}\) be defined by

where for \(j=0 \dots N-1\) the \({p}_{j}\) are values in \(\{-1,1\}\) given by

We can now formulate the following corollary.

Corollary 1

(Integer Gap) Let \((x, {u})(\cdot )\) be a feasible trajectory of the relaxed problem (2)\(_R\).

Consider the trajectory \((y,\omega )(\cdot )\) which consists of a control \(\omega (\cdot )\) determined via sum up rounding (4–5) on a given time grid from \({u}(\cdot )\) and differential states \(y(\cdot )\) obtained by solving the initial value problem in (2) for the fixed control \(\omega (\cdot )\). Then there exists a constant \(C\) such that

and

for all \(t \in [0, t_\mathrm {f}]\).

Proof

Follows from Corollary 8 in [37] and the fact that all assumptions on the right hand side function in (2) are fulfilled, as it is sufficiently smooth. \(\square \)

Corollary 1 implies that the exact lower bound of the control problem (2) can be obtained by solving the relaxed problem (2)\(_R\) in which \({u}(t) \in \text {conv } \{-1,1\}\) instead of \({u}(t) \in \{-1,1\}\). In other words, anything that can be done with a fractional control can also be done with a (practicably feasible) bang-bang control. However, the price might be a so-called chattering behavior, i.e., frequent switching between on and off. Note that the famous bang-bang principle and the references [14, 31] state similar results, however without the linear grid dependence of the Hausdorff distance that can be exploited numerically by means of an adaptive error control and the constructive derivation of the controls.

An AMPL implementation is given in Listing 2 that allows to calculate an integer control in linear time for a given control \({u}(\cdot )\).

Note that (4–5) yields a minimizer to \( \max _t \big \Vert \int _0^t {u}(\tau ) - \omega (\tau ) \mathrm{d} \tau \big \Vert \) over all feasible piecewise constant \(\omega \). In the case of linear control constraints, e.g., a maximum number of switches, these constraints can be incorporated into a MILP. For a proof, problem formulations, and a tailored branch and bound code see [22, 38].

Numerical results for different values of \(N=\) nt are given in column 3 of Table 1.

5 Switching time optimization for integer controls

One possibility to solve problem (2) is motivated by the idea to optimize the switching times directly, and to fix the values of the integer controls on given intervals. In practice one will optimize a scaled vector of model stage lengths \(h_j := {t}_{j+1} - {t}_{j}\). This concept is old and well known from (a) indirect approaches, where switching functions (derivatives of the Hamiltonian with respect to the controls) are used to determine switching times, from (b) hybrid systems, where switching functions determine phase transitions, and from (c) multi-stage processes, such as batch processes in chemical engineering, consisting of several phases with open duration, e.g. [29]. This idea is also being promoted as “control parameterization enhancing technique” for the special case of integer-valued controls, [28], and has found use as a proof technique for necessary conditions of optimality [16, 17].

For the problem at hand the time transformation boils down to an exchange of the roles of u (now a fixed parameter, alternating between 1 and \(-\)1) and dt (now an optimization variable) compared to Listing 1.

For a discussion of advantages (easy to implement and to include an upper bound on the number of switches) and of the disadvantages (nonconvex problem formulation that needs to be well initialized, may violate the practical constraint of a fixed control grid) we refer to [35, 39]. We recommend to optimize switching times only after an initialization has been found via Listing 2. Numerical results for different values of \(N=\) nt without such an initialization are given in column 4 of Table 1 in Sect. 8.

6 Indirect approach: first optimize, then discretize

We study a prototypical instance of problem (2)\(_R\) with \(\mathcal {T}=\mathbb {R}\times \mathbb {R}\times \{100\} \times \mathbb {R}\), as in subsection 8.1. In [32] the necessary conditions of optimality have been derived for the relaxed MIOCP (1)\(_R\). With a quadratically entering control \({u}\) in the Hamiltonian the optimal control for sensitivity-seeking arcs can be readily calculated from \(\mathcal {H}_{u}= 0\). Here we look at the control problem (2)\(_R\) that is linear in \({u}\).

In the interest of clarity, we omit the arguments of functions and write, e.g., \({x_{0}}\) for \({x_{0}}(t)\). We start by looking at the path constraints. On path-constrained arcs we have for components of

equality in one of the two inequalities. Hence either

Thus \({u}_{\mathrm{path}}(x) = \frac{R_m {x_{0}} + K_m {x_{1}}}{V_{\mathrm{alim}}}\) whenever \(c^\mathrm{u}(x)\) or \(c^\mathrm{l}(x)\) is active.

The Hamiltonian function is given by

Applying Pontryagin’s maximum principle, we obtain adjoint differential equations

The corresponding transversality conditions for the Mayer term \(E(x(t_\mathrm {f})) = {x_{3}}(t_\mathrm {f})\) and the end time constraint \(r(x(t_\mathrm {f})) = {x_{2}}(t_\mathrm {f}) - 100 = 0\) with corresponding Lagrange multiplier \(\alpha \in \mathbb {R}\), are given by

As \(\alpha \) does not enter anywhere else in the boundary value problem, it indicates that the terminal value \(\lambda _2(t_\mathrm {f})\) is an additional degree of freedom. As shown above, a path-constrained control \({u}_{\mathrm{path}}(x)\) can be directly calculated from active constraints \(c^\mathrm{u}\) and \(c^\mathrm{l}\), thus the transversality conditions do not need to incorporate higher order time derivatives of the path constraints.

To analyze a sensitivity-seeking arc, we define the switching function as

We try to calculate \({u}_{\mathrm{sing}}\) utilizing \(S^{(i)}(x, \lambda ) = \frac{\partial ^i{S(x, \lambda )}}{\partial {t^i}} = 0\), until this expression depends explicitly on \({u}\). The first derivative of (9) with respect to time is

The second derivative is

Hence, we have a singular arc of order 1 (because only even time derivatives of control-affine systems can depend explicitly on \({u}\)) and

It would be nice to have feedback controls \({u}_{\mathrm{path}}\) and \({u}_{\mathrm{sing}}\) as functions of \(x\) only. The problem, however, is that we have four dual states and only two conditions \(S = S^{(1)} = 0\) that we may use to eliminate them. As \({\lambda _{2}}\) and \({\lambda _{3}}\) are constant in time according to (8c–8d), we replace \({\lambda _{0}}\) and \({\lambda _{1}}\) to obtain controls that depend on \(x\) and \({\lambda _{2}}, {\lambda _{3}}\). We derive from (9)

and from \(S^{(1)}(x, \lambda ) = 0\) that

Thus, substituting \({\lambda _{0}}\) and \({\lambda _{1}}\) we get the singular control \({u}_{\mathrm{feed}}(x, {\lambda _{2}}, {\lambda _{3}})\) which depends only on \(x, {\lambda _{2}}, {\lambda _{3}}\),

Summing up, we obtain the following boundary value problem (BVP)

For the structure of the optimal solution we look at the solution of the direct approach, see Fig. 2 in Sect. 8, and make an “educated guess” for the behavior of \(S(x^*, \lambda ^*)\). We want to find \(\tau _j\) for \(j=1\dots 4\) such that

To solve the boundary value problem (10), we formulate it again in AMPL. Note that we multiply the right hand sides with stage lengths \(\tau _{j+1} - \tau _j\) that we include as degrees of freedom. To have the same order of accuracy, the overall number of time points is identical to the discretization of the direct approach. However, equidistancy holds only per stage \([\tau _j, \tau _{j+1}], j=0 \ldots 4\).

Numerical results for different values of \(N=\) nt are given in column 1 of Table 1 in Sect. 8. Note that Listing 4 is a simple, but not very efficient approach to solve a boundary value problem. A better choice would be, e.g., a multiple shooting approach, based on the original work of Osborne and Bulirsch [11, 33]. To look into more sophisticated algorithms, the book of Uri Ascher [1] is a good starting point.

The optimal control for the relaxed problem (2)\(_R\) that can be calculated with Listing 4 can be used as an input for the sum up rounding strategy of Sect. 4. As an alternative, one can directly formulate the maximum principle for hybrid systems, e.g., [44]. This basic idea of the Competing Hamiltonian approach to mixed-integer optimal control has been described already in [6, 7]. It builds on the fact that a global maximum principle does not require the set \(\mathcal {U}\) of feasible controls to be connected. Hence, it is possible to choose the optimal control \(u^*(t)\) as the point wise maximizer of a finite number of Hamiltonians. This allows to formulate the mixed-integer optimal control problem as a boundary value problem with state-dependent switches. Bock and Longman applied this to the energy-optimal control of subway trains, [6, 7]. However, the relaxed solution turns out to be of bang-bang type. In our setting, we also have path-constrained and singular arcs, for which an application is not straightforward anymore.

7 Computing lower bounds by the moment approach

In this section, we develop a moment based optimization technique to obtain lower bounds on the cost of problem (2). In Sect. 8, these bounds are compared to the costs of the candidate solutions found by the direct and indirect methods.

The moment approach in optimization consists of reformulating a problem as a generalized moment problem (GMP), which is a linear program defined on a measure space. When the problem data is polynomial, this GMP can be relaxed in the form of Linear Matrix Inequality (LMI) problems of increasing order. Under mild conditions, the costs of the LMI relaxations converge to that of the original problem. These relaxations provide lower bounds on the globally optimal value of the problem. See [26] for an extensive treatment of the approach.

In this section, after setting up the notations, we make a general presentation of the GMP. After this, we develop several instances of the approach applicable to problem (2), following [19, 27].

Before presenting the GMP, we set up the notations and terminology used in this section. Let \(\mathbf {Z} \in \mathbb {R}^n\) be a compact set of an Euclidean space. We note by \(\mathcal {M}^+(\mathbf {Z})\) the cone of finite, non-negative, Borel measures supported on \(\mathbf {Z}\), equipped with the weak-\(*\) topology. For the purpose of this paper, it is enough to consider \(\mathcal {M}^+(\mathbf {Z})\) as (isomorphic to) the space of non-negative continuous linear functionals on \(C(\mathbf {Z})\), via the operation of measure integration, see e.g. [34, §21.5] and the whole part III of that reference for a thorough introduction on the subject. For a continuous function \(f(z) \in C(\mathbf {Z})\), denote by \(\int _{\mathbf {Z}} \! f(z) \, \mu (dz)\) the integral of \(f(z)\) by the measure \(\mu \in \mathcal {M}^+(\mathbf {Z})\). When no confusion may arise, we note \(\langle f, \mu \rangle \) for the integral to simplify exposition and to insist on the duality relationship between \( C(\mathbf {Z})\) and \(\mathcal {M}(\mathbf {Z})\). The Dirac measure supported at \(z^*\), denoted by \(\delta _{z^*}\), is the measure for which \(\langle f, \delta _{z^*} \rangle = f(z^*)\), \(\forall f \in C(\mathbf {Z})\).

For multi-index \(\alpha \in \mathbb {N}^n\) and vector \(z\in \mathbb {R}^n\), we use the notation \(z^\alpha := \prod _{i=1}^n z_i^{\alpha _i}\). The moment of multi-index \(\alpha \in \mathbb {N}^n\) of measure \(\mu \in \mathcal {M}^+(\mathbf {Z} \subset \mathbb {R}^n)\) is then defined as the real \(y_\alpha = \langle z^\alpha , \mu \rangle \). A multi-indexed sequence of reals \(\lbrace y_\alpha \rbrace _{\alpha \in \mathbb {N}^n}\) is said to have a representing measure on \(\mathbf {Z}\) if there exists \(\mu \in \mathcal {M}^+(\mathbf {Z})\) such that \(y_\alpha = \langle z^\alpha , \mu \rangle \) for all \(\alpha \in \mathbb {N}^n\).

Denote by \(\mathbb {R}[z]\) the ring of polynomials in the variables \(z\). A set \(\mathbf {Z} \in \mathbb {R}^n\) is basic semi-algebraic if it is defined as the intersection of finitely many polynomial inequalities: \(\mathbf {Z} := \lbrace z \in \mathbb {R}^n : \; g_i(z) \ge 0, \, g_i(z) \in \mathbb {R}[z], \, i = 1 \ldots n_{\mathbf {Z}}\rbrace \).

Finally, we use the notation \(\underline{x}\) to denote parameters of the state space where trajectories \(x(t)\) live. We use the same convention \(\underline{u}\) for the controls \(u(t)\). This notation makes the passage from temporal integration to integration with respect to a measure transparent.

7.1 Solving the generalized moment problem

In this paper, we consider the following generalized problem of moments:

where the decision variable of the problem is a vector \(\mu \) of \(m\) measures, each posed on its own set \(\mathbf {Z}_j\) parameterized by variables \(z_j\). The cost is given by a vector \(c\) of polynomial functions, i.e., \(c_j \in \mathbb {R}[z_j]\). The constraints are materialized by at most countably many equality constraints indexed by given set \(\mathbf {I}\), with \(b_i \in \mathbb {R}\) and \(a_{ij} \in \mathbb {R}[z_j]\) for \(i \in \mathbf {I}\).

This problem, its method of resolution and several of its applications are extensively discussed in [26]. We summarize here the main results. A problem with polynomial data such as (11) (that is, a problem where each function is polynomial and each set is defined as a basic semi-algebraic set) can be relaxed as a problem on the moment sequences \(\lbrace y_\alpha \rbrace \) of the measures. This new problem has now countably many decision variables, one for each moment of each measure. It is a semi-definite program, as constraints of this form are necessary for sequences of reals to have a representing measure. When only a finite set of the moments is considered, that is when the moment sequences are truncated to their first few elements of degree less than \(2r\), one obtains a proper relaxation of (11) in the form of Linear Matrix Inequalities of finite size, with associated cost \(J_{\mathrm{GMP}}^{r}\). These can be solved by off-the-shelf software to obtain a lower bound on the cost of (11), i.e.,

See [26, Chap. 4] for an in-depth treatment of the LMI relaxations.

7.2 GMP formulations for problem (2)

A successful application of the moment approach requires measures that are supported on Euclidean spaces of small dimension. The sizes of the LMIs grow polynomially with respect to the relaxation order, when the number of variables remains constant. This limits the dimension of the underlying spaces to values below 6 on current computers with standard semi-definite solvers. However, in many cases such relaxations are sufficient to obtain sharp enough lower bounds.

In this section, we show several methods to relax problem (2) as an instance of (11), and compare their benefits from a computational point of view.

We start with the procedure proposed in [27]. For an admissible pair \((x,u)\), define the following occupation measures. The time-state occupation measure \(\mu \in \mathcal {M}^+([0,t_f] \times \mathbf {U} \times \mathbf {X})\) is defined by:

where \(\mathbf {A}\), \(\mathbf {B}\) and \(\mathbf {C}\) are Borel subsets of respectively \([0,t_f]\), \(\mathbf {U}\) and \(\mathbf {X}\). That is, for a continuous test function \(w(t,\underline{u},\underline{x})\), the property

holds by definition. Similarly, define the final state occupation measure \(\phi \in \mathcal {M}^+(\mathbf {X}_f)\) for the same admissible pair as:

where \(\mathbf {C}\) is a Borel subset of \(\mathbf {X}_f\). By definition, for a continuous test function \(w(\underline{x})\), the following relation holds

Evaluating a polynomial test function \(v \in \mathbb {R}[t,\underline{x}]\) yields by the chain rule

Making use of properties (14) and (16) in (17) leads to the following relaxation of (2) as a GMP:

where the different sets are defined by

In (18), the decision variables are arbitrary pairs of measures \((\mu ,\phi )\), and not specifically the occupation measures defined above. Hence,

Also remark that (18) is indeed an instance of (11): all functions are polynomial in their arguments and the sets are all basic semi-algebraic, if one uses the obvious characterization \([0,t_f] = \lbrace t \in \mathbb {R}: \, t \, (t_f-t) \ge 0\rbrace \). In addition, the problem has countably many equality constraints: one for each polynomial test function. We can therefore use the moment relaxations presented in the previous subsection. Note that the problem is not formulated over compact sets; it is therefore not guaranteed that the costs of the LMI relaxations do converge to \(J_M\).

In (18), the measure supported on the Euclidean space of highest dimension is \(\mu \). The dimension of the underlying space is six: time, four states and one control. As an alternative formulation, we reduce this dimension by writing problem (2) in Lagrange form, i.e., we remove the dependence on \(x_3(t)\),

where, by a slight abuse of notation, \(\mathbf {X}\) now refers to a subset of \(\mathbb {R}^3\). By the same treatment as for Mayer problem (2), problem (23) can be relaxed as an instance of GMP (11) on a space of dimension five.

We reduce the dimension further by observing that the state \(x_2\) is a simple end-constrained integrator. We reformulate (23) as

with obviously redefined \(\mathbf {X} \subseteq \mathbb {R}^2\) and \(\mathbf {X}_f \subseteq \mathbb {R}^2 \). This reformulation leads to the following instance of GMP (11):

The maximal dimension of (25) is now four. We now look at an alternative relaxation as a GMP that has been proposed in [19], reducing the size of the underlying space furthermore. It applies to switched systems of the form

with an integer-valued signal \(\sigma : [0,t_f]\rightarrow \mathbb \{1,2,\ldots ,m\}\) choosing between several available modes driven by their associated dynamics \(f^{\sigma (t)}\). Clearly, problem (2) can be reformulated as a switched system with two modes. The first mode, selected by signal \(\sigma _1(t)\), consists of driving the system with \(u(t)=1\), hence with dynamics \(\dot{x} = f^{1}(x) := f(x,1)\). The second mode drives the system with \(u(t)=-1\), hence with dynamics \(\dot{x} = f^{2}(x) := f(x,-1)\). Following [19], this yields the following GMP relaxation:

Notice that this alternative formulation involves one extra measure, but both mode measures are supported on time and state only, and the control space disappears altogether. Therefore, computation gains are expected with respect to (25) for high relaxation orders. In Sect. 8, we confirm this finding on a practical implementation of (18) and (27), and compare the sharpness of the lower bounds at given relaxation orders.

To sum up, we present in this section four different ways of relaxing problem (2) as an instance of GMP (11): Mayer form (18), Lagrange form (not explicited), integrated form (25) and switched form (27). Although mathematically equivalent, the behavior of the problems when truncated as LMI relaxations are expected to differ greatly in terms of computational load. The exact numerical results are given in Table 2 of Sect. 8.

8 Numerical results

In this section, we look at particular instances of problem (1) and apply the different reformulations and algorithms to them, by stressing that our methodology is very generic and can be easily adapted to similar control tasks. The parameter values are real world data and model an ENSEEIHT electrical solar car. They are \(R_{\mathrm{bat}}=0.05\,\Omega , V_{\mathrm{alim}}=150\,\mathrm{V}, R_m=0.03\,\Omega , K_m=0.27, L_m=0.05,r=0.33\,\mathrm{m},K_r=10,M=250\,\mathrm{kg},g=9.81,K_f=0.03,\rho =1.293\,\mathrm{kg/m}^3,S=2m^2,C_x=0.4\) and \(i_{\max }=150\,\mathrm{A}\). The electrical and mechanical parts of the model are explained and detailed in [32].

We present numerical results for the different approaches discussed so far. The results have been obtained on different computers and with different solvers, hence the computational times are only indicative and should by no means be compared among one another.

8.1 A detailed case study

We look at the particular instance of problem (2) with \(t_\mathrm {f}=10\) s and target set \(\mathcal {T}=\mathbb {R}\times \mathbb {R}\times \{100\} \times \mathbb {R}\), in which the car needs to cover 100 m in 10 s.

Table 1 summarizes the results for the different approaches from Sects. 3, 4, and 6. One observes the convergence of all three approaches to the optimal value \(J^*\) as \(N\) goes to infinity. The computational costs increase as well. One notes the low additional costs of the sum up rounding strategy to determine an integer control. The interior point solver IPOPT was used to solve both the NLPs from the direct approach (“first discretize, then optimize”) and the discretized boundary value problems from the indirect approach (“first optimize, then discretize”). It is further evident from the computational times that large-scale discretized boundary value problems are not well suited for generic black-box optimization codes. The numerical linear algebra becomes expensive due the additional adjoint variables. Instead, shooting methods should be used, as already mentioned in Sect. 6.

Also the switching time optimization approach suffers from high computational times when \(N\) increases, due to bad convergence properties which are well known from the solution of boundary value problems. Note that, for reasons of comparison, the BVP variables have not been initialized with the solution of the direct method, and the switching time interval lengths have not been initialized with the solution of the sum up rounding strategy. Doing so, a reduction of iterations and computing time can be expected.

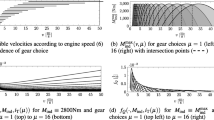

Figure 1 shows a plot of the differential states of the optimal trajectory of (2)\(_R\) for \(N=\hbox {1,000}\). One observes that the current \(x_0\) increases to its maximal value of 150A, stays there for a certain time, decreases on its minimal value of \(-\)150A, stays on this value and eventually increases slightly. This behavior corresponds to the different arcs bang, path-constrained, singular, path-constrained, bang that have been discussed in Sect. 6 and can be observed also in Fig. 2. It shows the corresponding switching function and the optimal control.

Primal states of an optimal trajectory for (2)\(_R\) on a control discretization grid with \(N=\hbox {1,000}\). The car moves from its origin (\(x_2=0\)) to its destination (\(x_2=100\)) in 10 s with minimum (non-monotonic due to recharging) energy \(x_3\)

Continuation of Fig. 1, showing the optimal control and switching function. The dotted vertical lines show the switching times \(\tau _i\) where transitions between bang, path-constrained, singular, path-constrained, and bang arc occur

Note that the plots show data from the solution with the indirect approach, but that for the chosen discretization of \(N=\hbox {1,000}\) differences to the solution using the direct approach are negligible (at least to the human eye).

Applying the sum up rounding strategy results in an integer-feasible chattering solution. The resulting primal states are shown in Fig. 3. One observes the high-frequency zig-zagging of the current \({x_{0}}(t)\) that results from the fast switches in the control. The infeasibility of the trajectory (\(x_1(t) > 150A, x_1(t) < -150A, x_2(t_\mathrm {f}) \not =100\)) is visible. As shown in Table 1, this infeasibility converges to zero as \(N\) increases.

The direct and indirect approaches from Sects. 3 and 6 are local optimization techniques and only provide upper bounds for problem (2)\(_R\) and hence for (2).

We compare them to the lower bounds from the moment approach presented in Sect. 7. For the practical implementation of the method, we used the GloptyPoly toolbox [20], which allows to build instances of (11) with high level commands. From this definition, the LMI relaxations at a given order are constructed automatically and passed on to a semi-definite solver for resolution. For this paper, we used SeDuMi [43], the default solver for GloptyPoly. We also used the verified semi-definite solver VSDP [21] that uses interval analysis to rigorously certify SeDuMi’s solutions. This can be done by noticing that all moments are bounded by \(1\) when the problem is rescaled such that each measure is supported on a unit box. This uniform bound is essential for VSDP to compute efficiently numeric lower bounds on each moment relaxation cost.

Table 2 summarizes the results for the different GMP formulations. As expected, for a given relaxation order \(r\), the switched system formulation (27) on the integrated problem statement (26) yields faster computations, and the generic GMP formulation on Mayer statement (2) is the slowest. Notice however that for the former, lower bounds \(J_M^{\, r}\) are slightly sharper at a given relaxation order. This is due to the much higher number of decision variables (moments) that are involved.

We would like to draw the reader’s attention to a beautiful reverse view of the outer convexification results. While we solved the (integer-)relaxation (2)\(_R\) and used sum up rounding to obtain an asymptotically feasible and optimal solution for (2), in the method of moments approach we reverse the procedure. We solve the (LMI-)relaxation of MIOCP (2)—which is more efficient to solve than the (LMI-)relaxation of (2)\(_R\)!—and know that the bound is also valid for (2)\(_R\). This is due to the fact that relaxing the dynamics weakly with occupation measures is equivalent to convexifying the vector field \(f(t,x,\cdot \,)\). Hence, given the affine control dependence of (2), this amounts to convexifying the control set.

Combining the upper bound from Table 1 and the lower bound from Table 2 we state for the relaxed problem (2)\(_R\)

with an optimality gap of approximately \(0.04\,\%\). Thus, the solution that is structurally equivalent to the one from Figs. 1 and 2 is certified to be \(0.04\,\%\)–globally optimal. This \(\epsilon \)–optimality carries over to the integer case of (2), as we do know that asymptotically the sum up rounding strategy will provide the same upper bound as the solution to the relaxed problem (2)\(_R\). The price for such a control would be a very fast switching behavior. We stopped at an upper bound of 22,921 with an optimality gap of \(0.7\,\%\).

8.2 Additional scenarios

To illustrate the general applicability of our proposed approach (to combine direct solution of a relaxed and partially convexified MIOCP to obtain an upper bound and a candidate solution, and the method of moments to obtain a lower bound) we provide objective function values and optimality gaps for the following scenarios.

-

1.

As in Sect. 8.1

$$\begin{aligned} \mathcal {T}=\mathbb {R}\times \mathbb {R}\times \{100\} \times \mathbb {R}, t_f=10\,\mathrm{s}. \end{aligned}$$ -

2.

Fixed final velocity

$$\begin{aligned} \mathcal {T}=\mathbb {R}\times \{0\frac{K_r}{3.6r}\}\times \{100\} \times \mathbb {R}, t_f=10\,\mathrm{s}. \end{aligned}$$ -

3.

Fixed final velocity

$$\begin{aligned} \mathcal {T}=\mathbb {R}\times \{50\frac{K_r}{3.6r}\}\times \{100\} \times \mathbb {R}, t_f=10\,\mathrm{s}. \end{aligned}$$ -

4.

Bounded velocity

$$\begin{aligned} {x_{2}}(t)\le 45\frac{K_r}{3.6r} \; \forall \; t, \mathcal {T}=\mathbb {R}\times \mathbb {R}\times \{100\} \times \mathbb {R}, t_f=10\,\mathrm{s}. \end{aligned}$$ -

5.

Bounded velocity, longer time horizon

$$\begin{aligned} {x_{2}}(t)\le 30\frac{K_r}{3.6r} \; \forall \; t, \mathcal {T}=\mathbb {R}\times \mathbb {R}\times \{100\} \times \mathbb {R}, t_f=15\,\mathrm{s}. \end{aligned}$$ -

6.

Fixed final velocity, bounded velocity, longer time horizon

$$\begin{aligned} {x_{2}}(t)\le 30\frac{K_r}{3.6r} \; \forall \; t, \mathcal {T}=\mathbb {R}\times \{30\frac{K_r}{3.6r}\}\times \{100\} \times \mathbb {R}, t_f=15\,\mathrm{s}. \end{aligned}$$

Table 3 shows lower bounds that have been calculated with relaxation (27) and \(r=6\) and upper bounds that have been calculated with the direct method of Sect. 3 and the SUR strategy of Sect. 4 for \(N=100,000\). Again, these bounds correspond to the global optima of different instances of (2) and (2)\(_R\). The computational times are omitted as they are in the same order of magnitude as in Sect. 8.1.

The alternative approach to solve the control problem globally, e.g., by applying a global NLP solver to the discretization, is outperformed by orders of magnitude. As an (unfair) comparison, we ran the solver couenne on the first scenario with \(N=10\). We stopped after 50 min of computational time. Couenne had visited 1,706,800 nodes, with 646,833 open nodes on the tree and a gap of 26,282 for the best solution minus a lower bound of 14,526.

However, as the method of moments does not provide a candidate solution, we cannot guarantee that upper and lower bound coincide. For many practical purposes our approach that provides a candidate integer solution and an \(\epsilon \) certificate should be well suited and be applied before turning to global solvers. For other instances further research in global optimal control is encouraged.

9 Conclusions

In this paper, we combine several optimal control techniques to obtain \(\epsilon \)-optimal global solutions for minimizing the energy consumption of an electric car performing a displacement.

To obtain an upper bound, we apply an outer convexification of the MIOCP (1). This allows us to solve relaxed, continuous control problems (1)\(_R\). In this paper we present two ways to solve them, a direct and an indirect approach. Based on the calculated solution, a sum up rounding strategy is applied that yields a suboptimal integer solution for (1). This solution provides an upper bound and is known to converge to the optimal objective function of (1) as the control discretization grid is refined.

To obtain a lower bound to the MIOCP (1), we apply the method of moments, making use of a particular reformulation for switched systems that was proposed in [19]. This approach yields the best results in terms of the trade-off between sharpness of the bounds and computational load. For all instances, we provided numerically certified bounds, based on interval arithmetic. Hence, the method is able to offer rigorous numeric bounds for all admissible controls in their natural functional space.

For the considered control task of an electrical car, the gaps between the upper and lower bounds are very sharp (about \(0.1\,\%\)) for all considered instances of the problem. This suggests a general way to propose numeric frameworks for globally solving this kind of mixed-integer optimal control problems (MIOCP); the direct and indirect methods provide quality candidate solutions, whereas the moment method certifies their (epsilon-)global optimality. Up to our knowledge, this is the first time that such a global framework is used successfully on a MIOCP.

The main advantage is the extremely fast computational time. This put us in the comfortable position to present all methods with prototypical implementations that facilitate the presentation from a didactical point of view. In particular, the solution of local control problems can be done more efficiently by applying more involved implementations of direct multiple shooting or direct collocation.

References

Ascher, U., Mattheij, R., Russell, R.: Numerical Solution of Boundary Value Problems for Ordinary Differential Equations. SIAM, Philadelphia (1995)

Bemporad, A., Borodani, P., Mannelli, M.: Hybrid control of an automotive robotized gearbox for reduction of consumptions and emissions. In: Hybrid Systems: Computation and Control, Springer Lecture Notes in Computer Science, vol. 2623/2003, pp. 81–96. Springer, Heidelberg (2003). doi:10.1007/3-540-36580-X_9

Betts, J.: Practical Methods for Optimal Control Using Nonlinear Programming. SIAM, Philadelphia (2001)

Biegler, L.: Solution of dynamic optimization problems by successive quadratic programming and orthogonal collocation. Comput. Chem. Eng. 8, 243–248 (1984)

Biegler, L.: Nonlinear Programming: Concepts, Algorithms, and Applications to Chemical Processes. Series on Optimization. SIAM, Philadelphia (2010)

Bock, H., Longman, R.: Optimal control of velocity profiles for minimization of energy consumption in the New York subway system. In: Proceedings of the Second IFAC Workshop on Control Applications of Nonlinear Programming and Optimization, pp. 34–43. International Federation of Automatic, Control (1980)

Bock, H., Longman, R.: Computation of optimal controls on disjoint control sets for minimum energy subway operation. In: Proceedings of the American Astronomical Society. Symposium on Engineering Science and Mechanics. Taiwan (1982)

Bock, H., Plitt, K.: A multiple shooting algorithm for direct solution of optimal control problems. In: Proceedings of the 9th IFAC World Congress, pp. 242–247. Pergamon Press, Budapest (1984)

Borrelli, F., Bemporad, A., Fodor, M., Hrovat, D.: An MPC/hybrid system approach to traction control. IEEE Trans. Control Syst. Technol. 14(3), 541–552 (2006). doi:10.1109/TCST.2005.860527

Borrelli, F., Falcone, P., Keviczky, T., Asgari, J., Hrovat, D.: MPC-based approach to active steering for autonomous vehicle systems. Int. J. Veh. Auton. Syst. 3(2–4), 265–291 (2005)

Bulirsch, R.: Die Mehrzielmethode zur numerischen Lösung von nichtlinearen Randwertproblemen und Aufgaben der optimalen Steuerung. Tech. rep, Carl-Cranz-Gesellschaft, Oberpfaffenhofen (1971)

Chachuat, B., Singer, A., Barton, P.: Global methods for dynamic optimization and mixed-integer dynamic optimization. Ind. Eng. Chem. Res. 45(25), 8373–8392 (2006)

De Jager, B., Van Keulen, T., Kessels, J.: Optimal Control of Hybrid Vehicles. Advances in Industrial Control. Springer, London (2013)

Gamkrelidze, R.: Principles of Optimal Control Theory. Plenum Press, Berlin (1978)

Gerdts, M.: Solving mixed-integer optimal control problems by branch&bound: a case study from automobile test-driving with gear shift. Optim. Control Appl. Methods 26, 1–18 (2005)

Gerdts, M.: A variable time transformation method for mixed-integer optimal control problems. Optim. Control Appl. Methods 27(3), 169–182 (2006)

Gerdts, M., Sager, S.: Mixed-integer DAE Optimal control problems: necessary conditions and bounds. In: Biegler, L., Campbell, S., Mehrmann, V. (eds.) Control and Optimization with Differential-Algebraic Constraints, pp. 189–212. SIAM, Philadelphia (2012)

Hellström, E., Ivarsson, M., Aslund, J., Nielsen, L.: Look-ahead control for heavy trucks to minimize trip time and fuel consumption. Control Eng. Pract. 17, 245–254 (2009)

Henrion, D., Daafouz, J., Claeys, M.: Optimal switching control design for polynomial systems: an LMI approach. In: 2013 Conference on Decision and Control. Florence, Italy (2013). Accepted for publication

Henrion, D., Lasserre, J.B., Löfberg, J.: Gloptipoly 3: moments, optimization and semidefinite programming. Optim. Methods Softw. 24(4–5), 761–779 (2009)

Jansson, C.: VSDP: a Matlab software package for verified semidefinite programming. Nonlinear Theory and its Applications pp. 327–330 (2006)

Jung, M., Reinelt, G., Sager, S.: The Lagrangian Relaxation for the Combinatorial Integral Approximation Problem. Optimization Methods and Software (2014). (Accepted)

Kim, T.S., Manzie, C., Sharma, R.: Model predictive control of velocity and torque split in a parallel hybrid vehicle. In: Proceedings of the 2009 IEEE International Conference on Systems. Man and Cybernetics, SMC’09, pp. 2014–2019. IEEE Press, Piscataway, NJ (2009)

Kirches, C., Bock, H., Schlöder, J., Sager, S.: Mixed-integer NMPC for predictive cruise control of heavy-duty trucks. In: European Control Conference, pp. 4118–4123. Zurich, Switzerland (2013)

Kirches, C., Sager, S., Bock, H., Schlöder, J.: Time-optimal control of automobile test drives with gear shifts. Optim. Control Appl. Methods 31(2), 137–153 (2010)

Lasserre, J.B.: Positive Polynomials and Their Applications. Imperial College Press, London (2009)

Lasserre, J.B., Henrion, D., Prieur, C., Trélat, E.: Nonlinear optimal control via occupation measures and LMI-relaxations. SIAM J. Control Optim. 47(4), 1643–1666 (2008)

Lee, H., Teo, K., Jennings, L., Rehbock, V.: Control parametrization enhancing technique for optimal discrete-valued control problems. Automatica 35(8), 1401–1407 (1999)

Leineweber, D.: Efficient reduced SQP methods for the optimization of chemical processes described by large sparse DAE models, Fortschritt-Berichte VDI Reihe 3, Verfahrenstechnik, vol. 613. VDI Verlag, Düsseldorf (1999)

Leineweber, D., Bauer, I., Bock, H., Schlöder, J.: An efficient multiple shooting based reduced SQP strategy for large-scale dynamic process optimization. Part I: theoretical aspects. Comput. Chem. Eng. 27, 157–166 (2003)

McShane, E.: The calculus of variations from the beginning to optimal control theory. SIAM J. Control Optim. 27(5), 916–939 (1989)

Merakeb, K., Messine, F., Aïdène, M.: A branch and bound algorithm for minimizing the energy consumption of an electrical vehicle. 4OR (2013, available online)

Osborne, M.: On shooting methods for boundary value problems. J. Math. Anal. Appl. 27, 417–433 (1969)

Royden, H., Fitzpatrick, P.: Real Analysis. Prentice Hall, Upper Saddle River (2010)

Sager, S.: Numerical methods for mixed-integer optimal control problems. Der andere Verlag, Tönning, Lübeck, Marburg (2005). ISBN 3-89959-416-9

Sager, S.: Reformulations and algorithms for the optimization of switching decisions in nonlinear optimal control. J. Process Control 19(8), 1238–1247 (2009)

Sager, S., Bock, H., Diehl, M.: The integer approximation error in mixed-integer optimal control. Math. Program. A 133(1–2), 1–23 (2012)

Sager, S., Jung, M., Kirches, C.: Combinatorial integral approximation. Math. Methods Oper. Res. 73(3), 363–380 (2011). doi:10.1007/s00186-011-0355-4

Sager, S., Reinelt, G., Bock, H.: Direct methods with maximal lower bound for mixed-integer optimal control problems. Math. Program. 118(1), 109–149 (2009)

Sargent, R., Sullivan, G.: The development of an efficient optimal control package. In: Stoer, J. (ed.) Proceedings of the 8th IFIP Conference on Optimization Techniques (1977), Part 2. Springer, Heidelberg (1978)

Sciarretta, A., Guzzella, L.: Control of hybrid electric vehicles. IEEE Control Syst. 27(2), 60–70 (2007)

Scott, J.K., Chachuat, B., Barton, P.I.: Nonlinear convex and concave relaxations for the solutions of parametric ODEs. Optim. Control Appl. Methods 34, 145–163 (2013). doi:10.1002/oca.2014

Sturm, J.F.: Using SeDuMi 1.02, a Matlab toolbox for optimization over symmetric cones. Optim. Methods Softw. 11–12, 625–653 (1999)

Tauchnitz, N.: Das Pontrjaginsche Maximumprinzip für eine Klasse hybrider Steuerungsprobleme mit Zustandsbeschränkungen und seine Anwendung. Ph.D. thesis, BTU Cottbus (2010)

Terwen, S., Back, M., Krebs, V.: Predictive powertrain control for heavy duty trucks. In: Proceedings of IFAC Symposium in Advances in Automotive Control, pp. 451–457. Salerno, Italy (2004)

van Keulen, T., van Mullem, D., de Jager, B., Kessels, J.T.B.A., Steinbuch, M.: Design, implementation, and experimental validation of optimal power split control for hybrid electric trucks. Control Eng. Pract. 20(5), 547–558 (2012)

Acknowledgments

The authors gratefully acknowledge financial support by the BMBF Grant “GOSSIP” (S. Sager), by the Engineering and Physical Sciences Research Council under Grant EP/G066477/1 (M. Claeys), and by the Grant ANR 12-JS02-009-01 “ATOMIC” (F. Messine).

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Sager, S., Claeys, M. & Messine, F. Efficient upper and lower bounds for global mixed-integer optimal control. J Glob Optim 61, 721–743 (2015). https://doi.org/10.1007/s10898-014-0156-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10898-014-0156-4